Abstract

Mixture distributions are naturally more attractive than simple probability models for modeling the heterogeneous environment of processes in reliability analysis. The focus of this study is to develop Bayesian inference for the 3-component mixture of power distributions. Under symmetric and asymmetric loss functions, the Bayes estimators and posterior risks are derived using non-informative and informative priors. The performance of the Bayes estimators for various sample sizes and test termination times (the fixed point of time after which the test is terminated) is examined in this article. To assess the performance of the Bayes estimators in terms of posterior risks, a Monte Carlo simulation along with a real data study is presented.

Introduction

The power distribution is frequently proposed for studying the reliability of electrical components (Saleem et al.1), and in many practical situations it provides a better fit to data than other distributions, e.g., the Rayleigh or gamma distribution. We consider this particular distribution because of its skewed nature and its applications in different fields such as electrical engineering (Amanulla et al.2), reliability analysis (Shahzad et al.3), city population sizes, stock price fluctuations, magnitudes of earthquakes (Parsa and Murty4) and the average wealth of a country's citizens. However, a simple probability distribution may not fit well because of the heterogeneous environment of reliability data. Therefore, mixtures of suitable distributions are of interest for modeling the heterogeneous environment of processes in reliability studies. For instance, if the values randomly drawn from a population are assumed to come from three different probability distributions, a 3-component mixture of those distributions is recommended. Use of a mixture distribution becomes unavoidable when values are not available for each component distribution but only for the overall mixture distribution, the so-called direct use of mixture distributions. Li5 and Li and Sedransk6 discussed the type-I mixture distribution (a mixture of probability distributions from the same family) and the type-II mixture distribution (a mixture of probability distributions from different families).

Many researchers have analyzed 2-component mixture models of different probability distributions and applied them to various real-life problems under the classical and Bayesian frameworks. Similarly, some researchers have studied situations where data are taken from a 3-component mixture distribution. For illustration, in order to determine the proportion of failures due to a specific cause and to improve the industrial process, Acheson and McElwee7 separated electrical tube failures into three types of flaws, namely gaseous flaws, mechanical flaws, and normal deterioration of the cathode. Davis8 also described mixture data on lifetimes of different parts collected from aircraft failures. Also, Tahir et al.9 used real-life mixture data on three parts, namely Combination of Transformers, Transmitter Tube and Combination of Relays. Haq and Al-Omari10 studied the mixture of three Rayleigh distributions using type-I censored data under different scenarios. The application of such methodologies can further be seen in Luo et al.11, Wang et al.12 and Zhou et al.13. Thus, the practical significance of 3-component mixtures of distributions is evident from the cited literature.

Because of time and cost restrictions, it is difficult to continue testing until the last value is observed. Consequently, the observations larger than the fixed test termination time are treated as censored observations. It is worth noting that censoring is a property of the data and that it is commonly used in real lifetime tests. The practical reasons for censoring are discussed in Romeu14, Gijbels15 and Kalbfleisch and Prentice16.

Inspired by the wide application of mixture distributions, here we define a mixture of power distributions for effective modeling of practical data under the Bayesian paradigm. Different types of loss functions and priors are assumed to derive the Bayes estimators along with their posterior risks.

The 3-component mixture of power distributions (3-CMPD) has the following pdf and survival function:

where \(\lambda_{1} , \, \lambda_{2}\) and \(\, \lambda_{3}\) are component parameters, \(p_{1}\) and \(p_{2}\) are mixing proportions and

The pdf \(f_{m} \left( y \right)\) and the survival function \(S_{m} \left( y \right)\) of the mth component, \(m = 1, \, 2, \, 3\), are written as:
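For reference, a sketch of these expressions under the assumption that each component is the standard power function distribution on \((0, 1)\) with parameter \(\lambda_{m} > 0\). This assumption is consistent with the terms \(\ln(1/y)\) and \(\ln(1/t)\) that appear in the posterior quantities of the following sections and with the transformation \(y = \exp(-x)\) used in the application; the symbol \(p_{3}\) is introduced here only as shorthand.

```latex
\begin{aligned}
f(y) &= p_{1} f_{1}(y) + p_{2} f_{2}(y) + p_{3} f_{3}(y), \qquad
S(y) = p_{1} S_{1}(y) + p_{2} S_{2}(y) + p_{3} S_{3}(y), \qquad p_{3} = 1 - p_{1} - p_{2}, \\
f_{m}(y) &= \lambda_{m}\, y^{\lambda_{m} - 1}, \qquad
S_{m}(y) = 1 - y^{\lambda_{m}}, \qquad 0 < y < 1, \; \lambda_{m} > 0, \; m = 1, 2, 3.
\end{aligned}
```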

Sampling structure for likelihood function

Suppose a dataset consisting of \(n\) values from the 3-CMPD is obtained in a real-life test with fixed test termination time \(t\). Let \(y_{1} , \, y_{2} , \, ...,y_{u}\) be the values that can be observed, while the remaining \(n - u\) largest values are treated as censored, that is, their failure times cannot be recorded. So, \({\varvec{y}}_{1} = y_{11} , \, ..., \, y_{{1u_{1} }}\), \({\varvec{y}}_{2} = y_{21} , \, ..., \, y_{{2u_{2} }}\) and \({\varvec{y}}_{3} = y_{31} , \, ..., \, y_{{3u_{3} }}\) are the failed data belonging to the 1st, 2nd and 3rd subpopulations. The remaining data, which are greater than \(y_{u}\), are taken to be censored from each subpopulation, while \(u_{1}\), \(u_{2}\) and \(u_{3}\) are the numbers of failed values from the 1st, 2nd and 3rd subpopulations. The remaining \(n_{1} - u_{1}\), \(n_{2} - u_{2}\) and \(n_{3} - u_{3}\) values are the censored data from the three subpopulations, where \(u = u_{1} + u_{2} + u_{3}\). Using the type-I right censored data, \({\mathbf{y}} = \left\{ {\left( {{\varvec{y}}_{1} = y_{11} , \, ..., \, y_{{1u_{1} }} } \right)} \right.\), \(\left( {{\varvec{y}}_{2} = y_{21} , \, ..., \, y_{{2u_{2} }} } \right)\), \(\left. {\left( {{\varvec{y}}_{3} = y_{31} , \, ..., \, y_{{3u_{3} }} } \right)} \right\}\), the likelihood function is:

On simplification, the likelihood function is:
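A sketch of how these two forms can be written, assuming power-function components \(f_{m}(y) = \lambda_{m} y^{\lambda_{m} - 1}\) and \(S_{m}(y) = 1 - y^{\lambda_{m}}\) on \((0, 1)\) and writing \(p_{3} = 1 - p_{1} - p_{2}\) for brevity. Both are assumptions of this sketch; the symbols \(B_{1}, B_{2}, B_{3}\) are shorthand chosen to match the prior-free parts of the \(B\) terms defined in the next section.

```latex
\begin{aligned}
L(\Theta \mid \mathbf{y}) &\propto
\prod_{w=1}^{u_{1}} p_{1} f_{1}(y_{1w})
\prod_{w=1}^{u_{2}} p_{2} f_{2}(y_{2w})
\prod_{w=1}^{u_{3}} p_{3} f_{3}(y_{3w})
\,\bigl[S(t)\bigr]^{n-u}, \\
\bigl[S(t)\bigr]^{n-u} &=
\bigl(1 - p_{1} t^{\lambda_{1}} - p_{2} t^{\lambda_{2}} - p_{3} t^{\lambda_{3}}\bigr)^{n-u} \\
&= \sum_{i=0}^{n-u}\sum_{j=0}^{i}\sum_{k=0}^{j} (-1)^{i}
\binom{n-u}{i}\binom{i}{j}\binom{j}{k}
\bigl(p_{1} t^{\lambda_{1}}\bigr)^{i-j}
\bigl(p_{2} t^{\lambda_{2}}\bigr)^{j-k}
\bigl(p_{3} t^{\lambda_{3}}\bigr)^{k}, \\
L(\Theta \mid \mathbf{y}) &\propto
\sum_{i=0}^{n-u}\sum_{j=0}^{i}\sum_{k=0}^{j} (-1)^{i}
\binom{n-u}{i}\binom{i}{j}\binom{j}{k}
\Bigl[\prod_{m=1}^{3} \lambda_{m}^{u_{m}} e^{-\lambda_{m} B_{m}}\Bigr]
p_{1}^{\,i-j+u_{1}}\, p_{2}^{\,j-k+u_{2}}\, p_{3}^{\,k+u_{3}}, \\
B_{1} &= (i-j)\ln\tfrac{1}{t} + \sum_{w=1}^{u_{1}} \ln\tfrac{1}{y_{1w}}, \quad
B_{2} = (j-k)\ln\tfrac{1}{t} + \sum_{w=1}^{u_{2}} \ln\tfrac{1}{y_{2w}}, \quad
B_{3} = k\ln\tfrac{1}{t} + \sum_{w=1}^{u_{3}} \ln\tfrac{1}{y_{3w}}.
\end{aligned}
```

The last line uses \(y^{\lambda - 1} = y^{-1} e^{-\lambda \ln(1/y)}\) and \(t^{\lambda r} = e^{-\lambda r \ln(1/t)}\); the factor \(\prod y^{-1}\) does not depend on the parameters and is absorbed into the proportionality constant.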

Posterior distributions assuming different priors

In this section, using the non-informative priors (NIPs) and informative prior (IP), the posterior distributions of parameters are derived.

Posterior distribution assuming uniform prior (UP)

If no prior or additional prior knowledge is given, the use of the UP and the JP (Jeffreys’ prior) as NIPs is recommended in Bayesian estimation. We assume the \({\text{uniform}}(0, \, \infty)\) distribution for the component parameters \(\lambda_{m} \, (m = 1, \, 2, \, 3)\) and the \({\text{uniform}}(0, \, 1)\) distribution for the proportion parameters \(p_{s} \, (s = 1, \, 2)\). The joint prior distribution is \(\pi_{1} \left( {{\varvec{\Theta}}} \right) \propto 1\). Thus, the joint posterior distribution given censored data \({\mathbf{y}}\) is:

where \(A_{11} = 1 + u_{1}\), \(A_{21} = 1 + u_{2}\), \(A_{31} = 1 + u_{3}\), \(B_{11} = \left( {i - j} \right)\ln \left( \frac{1}{t} \right) + \sum\limits_{w = 1}^{{u_{1} }} {\ln \left( {\frac{1}{{y_{1w} }}} \right)}\), \(B_{21} = \left( {j - k} \right)\ln \left( \frac{1}{t} \right) + \sum\limits_{w = 1}^{{u_{2} }} {\ln \left( {\frac{1}{{y_{2w} }}} \right)}\), \(B_{31} = \left( k \right)\ln \left( \frac{1}{t} \right) + \sum\limits_{w = 1}^{{u_{3} }} {\ln \left( {\frac{1}{{y_{3w} }}} \right)}\), \(A_{01} = i + u_{1} + 1 - j\), \(B_{01} = j + u_{2} + 1 - k\), \(C_{01} = k + u_{3} + 1\),\(\Omega_{1} = \sum\limits_{i = 0}^{n - u} {\sum\limits_{j = 0}^{i} {\sum\limits_{k = 0}^{j} {\left( { - 1} \right)^{i} } \left( \begin{gathered} n - u \hfill \\ \, i \hfill \\ \end{gathered} \right)\left( \begin{gathered} i \hfill \\ j \hfill \\ \end{gathered} \right)\left( \begin{gathered} j \hfill \\ k \hfill \\ \end{gathered} \right)\Gamma \left( {A_{11} } \right)\Gamma \left( {A_{21} } \right)\Gamma \left( {A_{31} } \right)B_{11}^{{ - A_{11} }} B_{21}^{{ - A_{21} }} B_{31}^{{ - A_{31} }} } } B\left( {A_{01} ,B_{01} ,C_{01} } \right)\).

After simplification, the marginal posterior distributions are derived as:

Posterior distribution assuming Jeffreys’ prior (JP)

Jeffreys17,18 suggested a formula for finding the JP as: \(p\left( {\lambda_{m} } \right) \propto \left[ {\left| { - E\left\{ {\frac{{\partial^{2} \ln L\left( {\lambda_{m} \left| {{\varvec{y}}_{m} } \right.} \right)}}{{\partial \lambda_{m}^{2} }}} \right\}} \right|} \right]^{1/2}\), where \(- E\left\{ {\frac{{\partial^{2} \ln L\left( {\lambda_{m} \left| {{\varvec{y}}_{m} } \right.} \right)}}{{\partial \lambda_{m}^{2} }}} \right\}\) is Fisher’s information. Here, we take the prior distributions of \(p_{s} \, (s = 1, \, 2)\) to be \({\text{uniform}}(0, \, 1)\). So, the joint posterior distribution given censored data \({\mathbf{y}}\) using \(\pi_{2} \left( {{\varvec{\Theta}}} \right) \propto \frac{1}{{\lambda_{1} \lambda_{2} \lambda_{3} }}\) as joint prior distribution is:

where \(A_{12} = u_{1}\), \(A_{22} = u_{2}\), \(A_{32} = u_{3}\), \(B_{12} = \left( {i - j} \right)\ln \left( \frac{1}{t} \right) + \sum\limits_{w = 1}^{{u_{1} }} {\ln \left( {\frac{1}{{y_{1w} }}} \right)}\), \(B_{22} = \left( {j - k} \right)\ln \left( \frac{1}{t} \right) + \sum\limits_{w = 1}^{{u_{2} }} {\ln \left( {\frac{1}{{y_{2w} }}} \right)}\), \(B_{32} = \left( k \right)\ln \left( \frac{1}{t} \right) + \sum\limits_{w = 1}^{{u_{3} }} {\ln \left( {\frac{1}{{y_{3w} }}} \right)}\), \(A_{02} = i - j + u_{1} + 1\), \(B_{02} = j - k + u_{2} + 1\), \(C_{02} = k + u_{3} + 1\), \(\Omega_{2} = \sum\limits_{i = 0}^{n - u} {\sum\limits_{j = 0}^{i} {\sum\limits_{k = 0}^{j} {\left( { - 1} \right)^{i} } \left( \begin{gathered} n - u \hfill \\ \, i \hfill \\ \end{gathered} \right)\left( \begin{gathered} i \hfill \\ j \hfill \\ \end{gathered} \right)\left( \begin{gathered} j \hfill \\ k \hfill \\ \end{gathered} \right)B_{12}^{{ - A_{12} }} B_{22}^{{ - A_{22} }} B_{32}^{{ - A_{32} }} \Gamma \left( {A_{12} } \right)\Gamma \left( {A_{22} } \right)\Gamma \left( {A_{32} } \right)B\left( {A_{02} ,B_{02} ,C_{02} } \right)} }\).
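The form of \(\pi_{2}\) can be checked with a short calculation. The sketch below assumes a single power-function component with pdf \(f(y) = \lambda y^{\lambda - 1}\) on \((0, 1)\) and \(u\) uncensored observations, which is an illustrative simplification rather than the full censored mixture likelihood.

```latex
\begin{aligned}
\ln L(\lambda \mid \mathbf{y}) &= u \ln \lambda + (\lambda - 1) \sum_{w=1}^{u} \ln y_{w}, \\
\frac{\partial^{2} \ln L(\lambda \mid \mathbf{y})}{\partial \lambda^{2}} &= -\frac{u}{\lambda^{2}}
\quad\Longrightarrow\quad
-E\left\{ \frac{\partial^{2} \ln L(\lambda \mid \mathbf{y})}{\partial \lambda^{2}} \right\} = \frac{u}{\lambda^{2}}, \\
p(\lambda) &\propto \left( \frac{u}{\lambda^{2}} \right)^{1/2} \propto \frac{1}{\lambda}.
\end{aligned}
```

Applying this to each of the three component parameters and multiplying by the uniform priors on the proportions gives a joint prior proportional to \(1/(\lambda_{1} \lambda_{2} \lambda_{3})\).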

The marginal posterior distributions are derived as:

Posterior distribution assuming informative prior (IP)

As an IP, we assume \(Gamma\left( {a_{m} , \, b_{m} } \right)\) for parameter \(\lambda_{m}\) and \(Bivariate \, Beta\left( {a, \, b, \, c} \right)\) for the proportion parameter \(p_{s}\). The joint prior distribution is:

So, the joint posterior distribution given censored data \({\mathbf{y}}\) is:

where \(A_{13} = a_{1} + u_{1}\), \(B_{13} = \sum\limits_{w = 1}^{{u_{1} }} {\ln \left( {\frac{1}{{y_{1w} }}} \right)} + \left( {i - j} \right)\ln \left( \frac{1}{t} \right) + b_{1}\), \(A_{23} = a_{2} + u_{2}\), \(B_{23} = \sum\limits_{w = 1}^{{u_{2} }} {\ln \left( {\frac{1}{{y_{2w} }}} \right)} + \left( {j - k} \right)\ln \left( \frac{1}{t} \right) + b_{2}\), \(A_{33} = a_{3} + u_{3}\), \(B_{33} = \sum\limits_{w = 1}^{{u_{3} }} {\ln \left( {\frac{1}{{y_{3w} }}} \right)} + \left( k \right)\ln \left( \frac{1}{t} \right) + b_{3}\), \(A_{03} = i - j + u_{1} + a\), \(B_{03} = j - k + u_{2} + b\), \(C_{03} = k + u_{3} + c\),\(\Omega_{3} = \sum\limits_{i = 0}^{n - u} {\sum\limits_{j = 0}^{i} {\sum\limits_{k = 0}^{j} {\left( { - 1} \right)^{i} } \left( \begin{gathered} n - u \hfill \\ \, i \hfill \\ \end{gathered} \right)\left( \begin{gathered} i \hfill \\ j \hfill \\ \end{gathered} \right)\left( \begin{gathered} j \hfill \\ k \hfill \\ \end{gathered} \right)B\left( {A_{03} ,B_{03} ,C_{03} } \right)\Gamma \left( {A_{13} } \right)\Gamma \left( {A_{23} } \right)\Gamma \left( {A_{33} } \right)B_{13}^{{ - A_{13} }} B_{23}^{{ - A_{23} }} B_{33}^{{ - A_{33} }} } }\).

The marginal posterior distributions are derived as:

Bayesian estimation using loss functions

Here, we derive the Bayes estimators (BEs) and their respective posterior risks (PRs) using the squared error loss function (SELF) and quadratic loss function (QLF) as symmetric loss functions, and the DeGroot loss function (DLF) and precautionary loss function (PLF) as asymmetric loss functions. The SELF, PLF and DLF were introduced by Legendre19, Norstrom20 and DeGroot21, respectively. For a given posterior, the general expressions of the BEs and PRs are presented in Table 1.
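As an illustration of how the general expressions can be evaluated, the following minimal sketch approximates the BEs and PRs from a set of posterior draws. It assumes the forms of the loss functions commonly used in the literature, namely SELF \((\theta - d)^{2}\), QLF \(((\theta - d)/\theta)^{2}\), PLF \((\theta - d)^{2}/d\) and DLF \(((\theta - d)/d)^{2}\); these definitions, the function name `bayes_estimates` and the use of posterior draws are assumptions of this sketch rather than details taken from Table 1.

```python
import math

def bayes_estimates(draws):
    """Approximate (BE, PR) pairs under four loss functions.

    `draws` is a list of posterior draws of a strictly positive parameter.
    The loss-function forms are assumed (see the accompanying text).
    """
    n = len(draws)
    m1 = sum(draws) / n                          # E[theta | y]
    m2 = sum(d * d for d in draws) / n           # E[theta^2 | y]
    im1 = sum(1.0 / d for d in draws) / n        # E[theta^-1 | y]
    im2 = sum(1.0 / (d * d) for d in draws) / n  # E[theta^-2 | y]
    return {
        # SELF: posterior mean, posterior variance
        "SELF": (m1, m2 - m1 ** 2),
        # QLF: E[theta^-1] / E[theta^-2], 1 - E[theta^-1]^2 / E[theta^-2]
        "QLF": (im1 / im2, 1.0 - im1 ** 2 / im2),
        # PLF: sqrt(E[theta^2]), 2 * (sqrt(E[theta^2]) - E[theta])
        "PLF": (math.sqrt(m2), 2.0 * (math.sqrt(m2) - m1)),
        # DLF: E[theta^2] / E[theta], 1 - E[theta]^2 / E[theta^2]
        "DLF": (m2 / m1, 1.0 - m1 ** 2 / m2),
    }
```

Because the four estimators only need the posterior moments of order \(-2, -1, 1\) and \(2\), the same formulas apply unchanged when those moments are available in closed form instead of from draws.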

Expressions for BEs and PRs using SELF

After simplification, the closed form expressions of BEs and PRs are given below:

where \(v = 1\), \(v = 2\) and \(v = 3\) for the UP, JP and IP, respectively.
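The triple sums \(\Omega_{v}\) can be evaluated numerically. The sketch below is one possible way to compute the posterior mean and variance of \(\lambda_{1}\), i.e. its BE and PR under SELF. It assumes that the \(r\)-th posterior moment of \(\lambda_{1}\) is obtained by replacing \(\Gamma(A_{1v}) B_{1v}^{-A_{1v}}\) with \(\Gamma(A_{1v} + r) B_{1v}^{-(A_{1v} + r)}\) inside the sum and dividing by \(\Omega_{v}\), which follows from the gamma kernels in the definitions of \(\Omega_{v}\). The function names and the encoding of the three priors as gamma pairs `(a_m, b_m)` plus bivariate beta parameters are hypothetical: UP corresponds to `((1, 0),) * 3` with `(1, 1, 1)`, JP to `((0, 0),) * 3` with `(1, 1, 1)` (which needs at least one failure per component), and IP to the elicited hyperparameters.

```python
import math

def _log_beta3(a, b, c):
    """Log of the bivariate beta function B(a, b, c)."""
    return math.lgamma(a) + math.lgamma(b) + math.lgamma(c) - math.lgamma(a + b + c)

def _series(ys, n, t, gamma_hp, dirichlet_hp, r):
    """Signed triple sum over (i, j, k); with r = 0 it plays the role of Omega_v.

    Returns (scale, value) such that the sum equals exp(scale) * value.
    """
    u = [len(y) for y in ys]                              # failures per component
    s = [sum(math.log(1.0 / v) for v in y) for y in ys]   # sums of ln(1/y)
    lt = math.log(1.0 / t)
    c = n - sum(u)                                        # number of censored values
    terms = []
    for i in range(c + 1):
        for j in range(i + 1):
            for k in range(j + 1):
                cnt = (i - j, j - k, k)
                lg = (math.log(math.comb(c, i)) + math.log(math.comb(i, j))
                      + math.log(math.comb(j, k)))
                for m in range(3):
                    a_m, b_m = gamma_hp[m]
                    shape = a_m + u[m] + (r if m == 0 else 0)
                    rate = b_m + s[m] + cnt[m] * lt
                    lg += math.lgamma(shape) - shape * math.log(rate)
                lg += _log_beta3(*(dirichlet_hp[m] + u[m] + cnt[m] for m in range(3)))
                terms.append(((-1) ** i, lg))
    scale = max(lg for _, lg in terms)   # factor out the largest term for stability
    return scale, sum(sign * math.exp(lg - scale) for sign, lg in terms)

def self_estimate_lambda1(ys, n, t, gamma_hp=((1, 0),) * 3, dirichlet_hp=(1, 1, 1)):
    """Posterior mean and variance of lambda_1 (BE and PR under SELF)."""
    s0, v0 = _series(ys, n, t, gamma_hp, dirichlet_hp, 0)
    s1, v1 = _series(ys, n, t, gamma_hp, dirichlet_hp, 1)
    s2, v2 = _series(ys, n, t, gamma_hp, dirichlet_hp, 2)
    mean = math.exp(s1 - s0) * v1 / v0
    second = math.exp(s2 - s0) * v2 / v0
    return mean, second - mean ** 2
```

The sum alternates in sign, so for a large number of censored values the plain floating-point summation used here can lose accuracy; higher-precision arithmetic may then be preferable.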

The BEs and PRs under the other three loss functions can be derived similarly. For the sake of brevity, these expressions are not given here but are available upon request from the corresponding author.

Elicitation of hyperparameters

Elicitation is a process used to quantify a person’s prior expert knowledge about some unknown quantity of interest, which can be used to supplement any data that we may have. In Bayesian analysis, the specification and elicitation of the hyperparameters of the prior density is a common difficulty. For different statistical models, different procedures for specifying opinions to elicit the hyperparameters of the prior distribution have been established.

Aslam22 suggested different methods which depend upon the prior predictive distribution (PPD). In his study, one method based on prior predictive probabilities (PPPs) for elicitation of hyperparameters is used. The rule of assessment is to compare the PPD with the expert’s assessment of this distribution and to select the hyperparameters that make the assessment agree closely with a member of the family. So, following the rules of probability, the expert would be consistent in eliciting the probabilities. A few inconsistencies may arise, which are not serious. A function \(\Phi \left( {\xi_{1} ,\xi_{2} } \right) = \mathop {\min }\limits_{{\xi_{1} , \, \xi_{2} }} \sum\limits_{z} {\left\{ {\frac{{p\left( z \right) - p_{0} \left( z \right)}}{p\left( z \right)}} \right\}^{2} }\) can be applied to elicit the hyperparameters \(\xi_{1}\) and \(\xi_{2}\), where \(p_{0} \left( z \right)\) represents the elicited PPPs and \(p\left( z \right)\) denotes the PPPs characterized by the hyperparameters \(\xi_{1}\) and \(\xi_{2}\). For elicitation, the resulting equations are solved numerically in Mathematica. A method based on PPPs is used to elicit the hyperparameters of the IP in this study.

Elicitation of hyperparameters

Using the IP \(\pi_{3} \left( {{\varvec{\Theta}}} \right)\), the PPD is defined as:

After substitution and simplification, the PPD is obtained as:

Using the above PPD (22), nine integrals based on limits of \(Y\), i.e., \(0.05 \le y \le 0.15\), \(0.15 \le y \le 0.25\), \(0.25 \le y \le 0.35\), \(0.35 \le y \le 0.45\), \(0.45 \le y \le 0.55\), \(0.55 \le y \le 0.65\), \(0.65 \le y \le 0.75\), \(0.75 \le y \le 0.85\) and \(0.85 \le y \le 0.95\), are considered with associated predictive probabilities 0.08, 0.07, 0.06, 0.06, 0.065, 0.07, 0.08, 0.09 and 0.10, respectively. It is worth noting that the predictive probabilities may be obtained from expert(s) as their belief about the likelihood of the given intervals. Now, to elicit the hyperparameters, the above equations are solved numerically using Mathematica software. From the above methodology, the values of the hyperparameters are \(a_{1} = 0.9379\), \(b_{1} = 0.8332\), \(a_{2} = 0.7530\), \(b_{2} = 0.6344\), \(a_{3} = 0.5335\), \(b_{3} = 0.4339\), \(a = 2.4950\), \(b = 2.5060\) and \(c = 2.0200\).
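The elicitation criterion can be sketched in code as follows. The sketch assumes power-function components \(f_{m}(y) = \lambda_{m} y^{\lambda_{m} - 1}\), independent \(Gamma(a_{m}, b_{m})\) priors with \(b_{m}\) as a rate parameter, and a bivariate beta prior whose means are \(a/(a+b+c)\), \(b/(a+b+c)\) and \(c/(a+b+c)\). Under these assumptions the prior predictive CDF of each component works out to \(\left( b_{m} / (b_{m} - \ln y) \right)^{a_{m}}\). This closed form and the function names are assumptions of the sketch, not expressions quoted from the PPD above.

```python
import math

def ppd_cdf(y, gamma_hp, dirichlet_hp):
    """Prior predictive CDF at y in (0, 1] under the stated assumptions."""
    total = sum(dirichlet_hp)
    cdf = 0.0
    for (a_m, b_m), weight in zip(gamma_hp, dirichlet_hp):
        # Component CDF after integrating lambda_m against Gamma(a_m, b_m)
        cdf += (weight / total) * (b_m / (b_m - math.log(y))) ** a_m
    return cdf

def ppp(lo, hi, gamma_hp, dirichlet_hp):
    """Prior predictive probability of the interval [lo, hi]."""
    return ppd_cdf(hi, gamma_hp, dirichlet_hp) - ppd_cdf(lo, gamma_hp, dirichlet_hp)

def phi(p, p0):
    """Sum of squared relative discrepancies between model and elicited PPPs."""
    return sum(((pz - p0z) / pz) ** 2 for pz, p0z in zip(p, p0))

# Intervals and elicited probabilities as listed in the text.
intervals = [(0.05 + 0.1 * z, 0.15 + 0.1 * z) for z in range(9)]
elicited = [0.08, 0.07, 0.06, 0.06, 0.065, 0.07, 0.08, 0.09, 0.10]

# Hyperparameter values reported in the text, used here as a trial point.
gamma_hp = ((0.9379, 0.8332), (0.7530, 0.6344), (0.5335, 0.4339))
dirichlet_hp = (2.4950, 2.5060, 2.0200)

model = [ppp(lo, hi, gamma_hp, dirichlet_hp) for lo, hi in intervals]
criterion = phi(model, elicited)   # value to be minimized over the hyperparameters
```

Minimizing `criterion` over the nine hyperparameters with a numerical optimizer would mimic the elicitation step; the sketch only evaluates the objective at one trial point.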

Monte Carlo simulation study

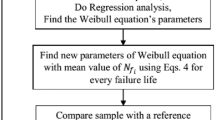

From the expressions of the Bayes estimators, it is clear that analytical comparisons between the BEs (under different priors and loss functions) are not feasible. Therefore, a Monte Carlo simulation study is used to assess the performance of the BEs under various loss functions and priors. Moreover, the performance of the BEs has been examined under different sample sizes and test termination times. We calculated the BEs and PRs of a 3-CMPD through a Monte Carlo simulation as follows:

1. From the given 3-component mixture distribution, a sample consisting of \(n \, p_{1}\), \(n \, p_{2}\) and \(n\left( {1 - p_{1} - p_{2} } \right)\) values out of n values is drawn randomly from \(f_{1} \left( y \right)\), \(f_{2} \left( y \right)\) and \(f_{3} \left( y \right)\), respectively.

2. Treat the values which are larger than \(t\) as censored values. The choice of \(t\) has been made in such a way that there is approximately a 10% to 30% censoring rate in the resulting data.

3. Find the simulated Bayes estimates and posterior risks as \(\hat{\omega } = \frac{1}{500}\sum\limits_{i = 1}^{500} {\left( {\hat{\omega }_{i} } \right)}\) and \(\rho \left( {\hat{\omega }} \right) = \frac{1}{500}\sum\limits_{i = 1}^{500} {\rho \left( {\hat{\omega }_{i} } \right)}\), where the Bayes estimates \(\hat{\omega }_{i}\) and posterior risks \(\rho \left( {\hat{\omega }_{i} } \right)\) of a parameter, say \(\omega\), are determined from the censored data by solving (21)-(30).

4. Repeat steps 1–3 for \(n = 30, \, 50, \, 100\), \(\left( {\lambda_{1} , \, \lambda_{2} , \, \lambda_{3} , \, p_{1} , \, p_{2} } \right) = \left( {0.4, \, 0.3, \, 0.2, \, 0.5, \, 0.3} \right)\) and \(t = 0.9, \, 0.6.\)
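Steps 1 and 2 can be sketched as follows. The sketch assumes each component has CDF \(F_{m}(y) = y^{\lambda_{m}}\) on \((0, 1)\), so that a draw is obtained by inverse-CDF sampling as \(U^{1/\lambda_{m}}\) with \(U\) uniform; the function names and the rounding of the component sample sizes are hypothetical choices. Step 3 is not implemented here because it relies on the closed-form BEs and PRs.

```python
import random

def simulate_censored_sample(n, lambdas, p1, p2, t, rng):
    """Draw n values from the three components and censor those above t.

    Returns (observed, n_censored), where `observed` holds one list of
    uncensored values per component.
    """
    sizes = [round(n * p1), round(n * p2)]
    sizes.append(n - sum(sizes))            # remaining values go to component 3
    observed, n_censored = [], 0
    for lam, size in zip(lambdas, sizes):
        # Inverse-CDF sampling: if U ~ uniform(0, 1], then U**(1/lam) has CDF y**lam
        draws = [(1.0 - rng.random()) ** (1.0 / lam) for _ in range(size)]
        kept = [y for y in draws if y <= t]
        observed.append(kept)
        n_censored += size - len(kept)
    return observed, n_censored

def censoring_probability(lambdas, p1, p2, t):
    """Theoretical probability that a mixture value exceeds t."""
    weights = (p1, p2, 1.0 - p1 - p2)
    return 1.0 - sum(w * t ** lam for w, lam in zip(weights, lambdas))

# One replicate for one of the settings listed in step 4.
rng = random.Random(2024)                   # fixed seed, chosen arbitrarily
sample, censored = simulate_censored_sample(30, (0.4, 0.3, 0.2), 0.5, 0.3, 0.6, rng)
```

Repeating the call 500 times and averaging the resulting estimates and risks would complete steps 3 and 4; `censoring_probability` gives a quick way to check the expected censoring rate implied by a chosen \(t\).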

The simulated results are arranged in Tables 2–9. From these tables, it is revealed that the extent of under-estimation of all five parameters under each prior and under SELF, QLF, PLF and DLF is larger for smaller n than for larger n at fixed t. For fixed n, a similar trend is observed for smaller t as compared to larger t. It is also observed that the PRs have an inverse relationship with n, i.e., the PRs decrease as \(n\) increases (cf. Tables 2–9). Also, it is noticed that the PRs have an inverse relationship with t, i.e., the PRs increase as \(t\) decreases (cf. Tables 2–9).

Regarding the choice of an appropriate prior, it is observed that the IP emerges as an efficient prior because of its smaller PR as compared to the NIPs for estimating all five parameters under both symmetric and asymmetric loss functions (cf. Tables 2–9). Also, it is noticed that the JP (UP) emerges as the more efficient prior because of its smaller PR as compared to the UP (JP) for estimating the component (proportion) parameters using both SELF and PLF (cf. Tables 2 and 6 vs Tables 4 and 8). Moreover, the UP is a more efficient prior than the JP under QLF and DLF due to its smaller PR. On the other hand, the performance of SELF is better than that of the remaining three loss functions for estimating all parameters (cf. Tables 2 and 6).

It is also noticed that the selection of an appropriate prior and loss function does not depend on t. It is worth mentioning that our criterion for selecting a prior or loss function is the posterior risk, i.e., we consider a loss function or prior the best if it yields the minimum posterior risk as compared to the others.

A real-life application

Here, the analysis of lifetime data is presented to explain the procedure for practical situations. Gómez et al.23 reported lifetime data on the fatigue fracture of Kevlar 373/epoxy subjected to constant pressure at the 90% stress level until all had failed. Gómez et al.23 showed that the data \({\mathbf{x}}\) can be modeled with an exponential mixture model. However, the transformation \(y = \exp \left( { - x} \right)\) of exponentially distributed data \(\left( {\mathbf{x}} \right)\) yields a power random variable, and we can use the resulting data to illustrate the proposed Bayesian analysis. The lifetime data are divided into three groups, with the first 26 values from the 1st subpopulation, the next 25 values from the 2nd subpopulation, and the last 25 values from the 3rd subpopulation. To apply type-I censored sampling, we used 3.4 as the censoring time and recorded the \({\varvec{x}}_{1} = x_{11} , \, ..., \, x_{{1u_{1} }}\), \({\varvec{x}}_{2} = x_{21} , \, ..., \, x_{{2u_{2} }}\) and \({\varvec{x}}_{3} = x_{31} , \, ..., \, x_{{3u_{3} }}\) failed values from subpopulations I, II and III, respectively. The remaining values, which were greater than 3.4, were taken as censored values from each subpopulation. At the end of the test, we have the following numbers of failed values: \(u_{1} = 22\), \(u_{2} = 22\) and \(u_{3} = 21\). The remaining \(n - u = 11\) values were treated as censored, whereas \(u = 65\) values were uncensored, such that \(u = u_{1} + u_{2} + u_{3}\). The data are summarized below:

Here \(n - u = 11\), so approximately 14.5% of the data are censored. The BEs and PRs are given in Table 10.
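The transformation \(y = \exp(-x)\) and the censoring rate quoted in this section can be verified directly. As a sketch, assume \(X\) is exponential with rate \(\lambda\) (a single component, for illustration only):

```latex
\begin{aligned}
P(Y \le y) &= P\bigl(e^{-X} \le y\bigr) = P(X \ge -\ln y) = e^{\lambda \ln y} = y^{\lambda}, \qquad 0 < y < 1, \\
f_{Y}(y) &= \frac{d}{dy}\, y^{\lambda} = \lambda\, y^{\lambda - 1}, \\
\frac{n - u}{n} &= \frac{11}{26 + 25 + 25} = \frac{11}{76} \approx 0.145 .
\end{aligned}
```

So \(Y\) follows the power function distribution with the same parameter \(\lambda\), and 11 censored values out of 76 correspond to roughly 14.5% censoring.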

It is noticed that the results given in Table 10 are consistent with the results of the simulation study. The performance of the BEs using the IP is better than that under the NIPs, as a result of smaller associated PRs for estimating all parameters under the different symmetric and asymmetric loss functions. Also, the JP (UP) is observed to be a more suitable prior than the UP (JP) based on smaller PRs for estimating the component (proportion) parameters under SELF and PLF (SELF, QLF, PLF and DLF). In addition, it is revealed that SELF is preferable to PLF, QLF and DLF due to minimum PRs for estimating all parameters.

Further, to see how well the 3-CMPD performs as compared to other existing 3-component mixture distributions, we consider the 3-component mixture of exponential distributions (3-CMED), the 3-component mixture of Burr type-XII distributions (3-CMBD), the 3-component mixture of Rayleigh distributions (3-CMRD), and the 3-CMPD. The Akaike information criterion (AIC) and Bayesian information criterion (BIC) are used to check their relative performance using the life span of fatigue fracture data. The AIC and BIC measure the relative loss of information, so smaller values of AIC and BIC indicate the better distribution. The AIC and BIC are computed as \({\text{AIC}} = 2k - 2\ln (L)\) and \({\text{BIC}} = k\ln (n) - 2\ln (L)\), where L = likelihood value of the given data, k = number of parameters in the distribution and n = number of observations in the data.
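The two criteria are straightforward to compute once the maximized log-likelihood is available. The following minimal sketch implements the formulas above; the function names are hypothetical and the log-likelihood values in the usage lines are made-up placeholders, not results from Table 11.

```python
import math

def aic(log_likelihood, k):
    """Akaike information criterion: 2k - 2 ln(L)."""
    return 2 * k - 2 * log_likelihood

def bic(log_likelihood, k, n):
    """Bayesian information criterion: k ln(n) - 2 ln(L)."""
    return k * math.log(n) - 2 * log_likelihood

# Hypothetical log-likelihoods for two candidate models fitted to n = 76 values,
# each with k = 5 parameters (three component parameters, two proportions).
candidates = {"model_A": -100.0, "model_B": -104.5}
scores = {name: (aic(ll, 5), bic(ll, 5, 76)) for name, ll in candidates.items()}
best = min(scores, key=lambda name: scores[name][0])   # smallest AIC
```

With equal k and n across the candidates, both criteria rank the models purely by their log-likelihoods; the penalty terms matter only when the models differ in the number of parameters.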

It is observed from the results given in Table 11 that the proposed mixture distribution provides the smallest values of AIC and BIC as compared to the other mixture distributions and provides the best fit to the life span of fatigue fracture data. The p-value of the Kolmogorov-Smirnov (KS) test also indicates that the proposed model fits better than the other models.

Conclusion and recommendation

In this article, a 3-CMPD using a type-I right censored sample was developed to model lifetime mixture data using the Bayesian approach. Assuming the availability of an IP and NIPs under symmetric and asymmetric loss functions, the algebraic expressions of the BEs and PRs have also been presented. To assess the relative performance of the BEs across different n with a fixed t, a comprehensive Monte Carlo simulation study has been performed. In addition, a real-life application has been discussed to show the utility of the proposed methodology. From the results presented in the previous sections, we observed that as n increased, the BEs approached their true values. To be more precise, a smaller (larger) n results in a larger (smaller) extent of under- and/or over-estimation at a fixed value of t. We also noticed that the posterior risk decreased as n increased. Finally, it is revealed that for a Bayesian analysis of the 3-CMPD, the IP can be used to estimate the component and proportion parameters under SELF. In future, the performance of the predictive distribution and predictive interval can be assessed. Also, other censoring schemes, such as progressive and interval censoring, can be used to develop mixture models in the Bayesian framework.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Saleem, M., Aslam, M. & Economou, P. On the Bayesian analysis of the mixture of power function distribution using the complete and the censored sample. J. Appl. Stat. 37(1), 25–40 (2010).

Amanulla, B., Chakrabarti, S. & Singh, S. N. Reconfiguration of power distribution systems considering reliability and power loss. IEEE Trans. Power Deliv. 27(2), 918–926 (2012).

Shahzad, M. N., Asghar, Z., Shehzad, F. & Shahzadi, M. Parameter estimation of power function distribution with TL-moments. Revista Colombiana de Estadística 38(2), 321–334 (2015).

Parsa, A. R. & Murty, G. S. Power-laws and applications to earthquake populations. Pure Appl. Geophys. 147, 455–466 (1996).

Li, L. A. Decomposition Theorems, Conditional Probability, and Finite Mixtures Distributions (State University, 1983).

Li, L. A. & Sedransk, N. Mixtures of distributions: A topological approach. Ann. Stat. 16(4), 1623–1634 (1988).

Acheson, M. A. & McElwee, E. M. Concerning the reliability of electron tubes. Sylvania Technol. 4, 1204–1206 (1951).

Davis, D. J. An analysis of some failure data. J. Am. Stat. Assoc. 47, 113–150 (1952).

Tahir, M., Aslam, M. & Hussain, Z. On the Bayesian analysis of 3-component mixture of exponential distribution under different loss functions. Hacettepe J. Math. Stat. 45, 609–628 (2016).

Haq, A. & Al-Omari, A. I. Bayes estimation and prediction of a three component mixture of Rayleigh distribution under type-I censoring. Revista Investigacion Operacional 37, 22–37 (2016).

Luo, C., Shen, L. & Xu, A. Modelling and estimation of system reliability under dynamic operating environments and lifetime ordering constraints. Reliab. Eng. Syst. Saf. 218, 1–9 (2022).

Wang, W., Cui, Z., Chen, R., Wang, Y. & Zhao, X. Regression analysis of clustered panel count data with additive mean models. Stat. Pap. 1–22 (2023).

Zhou, S., Xu, A., Tang, Y. & Shen, L. Fast Bayesian inference of reparameterized gamma process with random effects. IEEE Trans. Reliab. 78, 1–14 (2023).

Romeu, L. J. Censored data. Strateg. Arms Reduct. Treaty 11(3), 1–8 (2004).

Gijbels, I. Censored data. Wiley Interdiscip. Rev. Comput. Stat. 2(2), 178–188 (2010).

Kalbfleisch, J. D. & Prentice, R. L. The Statistical Analysis of Failure Time Data Vol. 360 (Wiley, 2011).

Jeffreys, H. An invariant form for the prior probability in estimation problems. Proc. R. Soc. Lond. Math. Phys. Sci. 186(1007), 453–461 (1946).

Jeffreys, H. Theory of probability (Clarendon Press, 1961).

Legendre, A. M. Nouvelles méthodes pour la détermination des orbites des comètes: appendice sur la méthode des moindres carrés (Courcier, 1806).

Norstrom, J. G. The use of precautionary loss function in risk analysis. IEEE Trans. Reliab. 45, 400–403 (1996).

DeGroot, M. H. Optimal Statistical Decision (Wiley, 2005).

Aslam, M. An application of prior predictive distribution to elicit the prior density. J. Stat. Theory Appl. 2, 70–83 (2014).

Gómez, Y. M., Bolfarine, H. & Gómez, H. W. A new extension of the exponential distribution. Revista Colombiana de Estadística 37, 25–34 (2014).

Author information

Contributions

T.A., M.T. and M.A. wrote the main manuscript text and S.M. and S.A. prepared Tables 1-5. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Abbas, T., Tahir, M., Abid, M. et al. The 3-component mixture of power distributions under Bayesian paradigm with application of life span of fatigue fracture. Sci Rep 14, 8074 (2024). https://doi.org/10.1038/s41598-024-58245-x

DOI: https://doi.org/10.1038/s41598-024-58245-x
