Abstract

In this study, a versatile model for the lifetime of a component under stress, called the \(\alpha\)-monotone inverse Weibull (\(\alpha\)IW) distribution, is introduced by using the \(\alpha\)-monotone concept. The \(\alpha\)IW distribution can also be expressed as a scale-mixture between the inverse Weibull distribution and the uniform distribution on (0, 1). It includes the \(\alpha\)-monotone inverse exponential and \(\alpha\)-monotone inverse Rayleigh distributions as submodels, and it converges to the inverse Weibull, inverse exponential, and inverse Rayleigh distributions as limiting cases. Moreover, the slash Weibull, slash Rayleigh, and slash exponential distributions can be obtained under a suitable variable transformation and parameter settings. The \(\alpha\)IW distribution is characterized by its hazard rate function, and the characterizing conditions are provided. Maximum likelihood, maximum product of spacing, and least squares methods are used to estimate the distribution parameters, and a Monte-Carlo simulation study is conducted to compare the efficiencies of these estimation methods. In the application part, two practical data sets, Kevlar 49/epoxy and Kevlar 373/epoxy, are modeled via the \(\alpha\)IW distribution. Its modeling performance is compared with that of its rivals by means of well-known goodness-of-fit statistics, and the results show that the \(\alpha\)IW distribution fits the data better than its rivals. The comparison also indicates that obtaining the \(\alpha\)IW distribution through the \(\alpha\)-monotone concept is cost effective, since the new shape parameter added by this concept significantly increases the modeling capability of the IW distribution. As a result, the \(\alpha\)IW distribution can be an alternative to well-known and recently introduced distributions for modeling purposes.


Introduction

The inverse Weibull (IW) distribution, also known as the Fréchet or type-II extreme value distribution, was introduced by Keller and Kanath1 to model the degeneration phenomena of mechanical components, such as the dynamic components (pistons, crankshafts, etc.) of diesel engines; see also Nelson2 for its use in modeling the breakdown of insulating fluid. Since then, it has been used to analyse data from different areas of science, e.g. actuarial science, agriculture, energy, hydrology, and medicine.

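The probability density function (pdf) of the IW distribution is

$$\begin{aligned} f_X(x;\beta ,\sigma )=\beta \sigma x^{-\beta -1}\exp \left( -\sigma x^{-\beta }\right) ;\quad x>0,\quad \beta ,\sigma >0, \end{aligned}\tag{1}$$

and its cumulative distribution function (cdf) is

$$\begin{aligned} F_X(x;\beta ,\sigma )=\exp \left( -\sigma x^{-\beta }\right) ;\quad x>0, \end{aligned}\tag{2}$$

where \(\beta\) and \(\sigma\) are the shape and scale parameters, respectively. Hereinafter, a random variable with pdf (1) is denoted by \(X \sim IW(\beta , \sigma )\). As stated in Helu3, the IW distribution has a longer right tail than other well-known distributions, and its hazard function behaves like those of the log-normal and inverse Gaussian distributions.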
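The IW parameterization used here has cdf \(\exp(-\sigma x^{-\beta})\), which is the function inverted in Step 1 of the data generation subsection. The Python sketch below (ours, using NumPy and SciPy rather than the authors' MATLAB code) relates it to SciPy's `scipy.stats.invweibull`, whose standardized cdf is \(\exp(-x^{-c})\). The mapping `c = beta`, `scale = sigma**(1/beta)` is our own derivation; it shows that \(\sigma\) scales \(x^{-\beta}\) rather than x itself.

```python
import numpy as np
from scipy import stats


def iw_pdf(x, beta, sigma):
    """IW pdf: beta * sigma * x**(-beta - 1) * exp(-sigma * x**(-beta)), x > 0."""
    x = np.asarray(x, dtype=float)
    return beta * sigma * x ** (-beta - 1.0) * np.exp(-sigma * x ** (-beta))


def iw_cdf(x, beta, sigma):
    """IW cdf: exp(-sigma * x**(-beta)), x > 0."""
    x = np.asarray(x, dtype=float)
    return np.exp(-sigma * x ** (-beta))


def iw_as_scipy(beta, sigma):
    """Frozen SciPy distribution with the same law as IW(beta, sigma).

    SciPy's invweibull has cdf exp(-(x / scale)**(-c)) = exp(-scale**c * x**(-c)),
    so c = beta and scale**beta = sigma.
    """
    return stats.invweibull(c=beta, scale=sigma ** (1.0 / beta))


x = np.array([0.5, 1.0, 2.0, 4.0])
ref = iw_as_scipy(beta=2.0, sigma=1.5)
print(np.allclose(iw_pdf(x, 2.0, 1.5), ref.pdf(x)))  # True
print(np.allclose(iw_cdf(x, 2.0, 1.5), ref.cdf(x)))  # True
```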

The IW distribution and its sub-models, the inverse Rayleigh and inverse exponential distributions, are widely used in reliability engineering. However, in some cases, the IW distribution and its sub-models cannot model reliability data adequately. Therefore, generalized/extended versions of the IW distribution have been proposed to improve its modeling capability, i.e. to model reliability data more accurately; see, for example, Chakrabarty and Chowdhury4, Fayomi5, Hanagal and Bhalerao6, Jahanshahi et al.7, Saboori et al.8, Afify et al.9, Hussein et al.10 and references therein. Since the IW distribution is also called the Fréchet distribution, we also recommend Afify et al.11, Hussein et al.10 and references therein on extensions/generalizations of the Fréchet distribution.

There is a wide variety of methods for extending/generating distributions; see Lee et al.12 for an overview. In this context, the slash distribution introduced by Andrews et al.13 has been popular, and various slash distributions have been introduced since; see, e.g., Olivares-Pacheco et al.14, Iriarte et al.15, Korkmaz16, Gómez et al.17, and references therein for univariate slash distributions.

Recently, Jones18 considered the distribution of \(X \times Y^{\frac{1}{\alpha }}\), which is formally a different form of the slash distribution, and called it an \(\alpha\)-monotone density. Here, X and Y are independent random variables, where X follows a distribution on \(\mathbb {R^{+}}\) and Y follows the uniform distribution on (0, 1), i.e., U(0,1). See Jones18 for the theoretical aspects of the \(\alpha\)-monotone distribution and Arslan19,20,21 for its applications. Arslan20,21 showed that the \(\alpha\)-monotone concept is easily applied to a baseline distribution and has a significant effect on its modeling capability.

The IW distribution is a well-known candidate for modeling lifetime data. However, since it has only one shape parameter, its modeling performance may be inadequate in some cases, and it then needs to be improved. For example, when the lifetime of a component under stress is to be modeled and the effect of the stress level is to be estimated, a new parameter, called the stress parameter or shape parameter, may be included in the density of the IW distribution. The lifetime of a component under stress is expected to be shorter than in the routine case. Division is usually used to reduce the value of a random variable arbitrarily; however, multiplying it by a random variable taking values between 0 and 1 can be used as well. Multiplication is preferred here over division because it allows the use of the convenient U(0,1) variable. Therefore, the random variable T defined as \(T=X \times Y^{\frac{1}{\alpha }}\) is important in lifetime analysis: T can represent the lifetime X under stress.

By construction, a random variable having an \(\alpha\)-monotone distribution can represent the lifetime of a component under stress. In this regard, an \(\alpha\)-monotone distribution can be an alternative to the IW distribution. However, to the best of the authors' knowledge, only a limited number of studies in the literature concern \(\alpha\)-monotone distributions, specifically for modeling lifetime data. The motivation of this study is to fill this gap. Therefore, in this study, a practical model for the lifetime of a component under stress is proposed. The pdf of the proposed model satisfies the condition for an \(\alpha\)-monotone density; therefore, it is called the \(\alpha\)-monotone IW (\(\alpha\)IW) distribution. The cdf and hazard rate function (hrf) of the \(\alpha\)IW distribution are obtained, and the \(\alpha\)IW distribution is characterized by its hrf, with the characterizing conditions provided as well. The r-th moment of the \(\alpha\)IW distribution is also formulated. Furthermore, it is shown that the \(\alpha\)IW distribution can be expressed as a scale-mixture between the IW and U(0, 1) distributions. A data generation process is developed by using the stochastic representation of a random variable having the \(\alpha\)IW distribution. Maximum likelihood, maximum product of spacing, and least squares estimation methods are used to estimate the parameters of the \(\alpha\)IW distribution. The \(\alpha\)IW distribution includes the \(\alpha\)-monotone inverse Rayleigh, \(\alpha\)-monotone inverse exponential, IW, inverse Rayleigh, and inverse exponential distributions as special or limiting cases for different parameter settings.
Thus, it can be considered a generalization of the IW distribution, obtained by adding a new shape parameter that makes the distribution more flexible than the IW distribution. In light of this, the \(\alpha\)IW distribution can be an alternative to the IW distribution and its rivals in lifetime data analysis.

The rest of the paper is organized as follows. The \(\alpha\)IW distribution, its characterization, and its properties are given; then, the submodels of the \(\alpha\)IW distribution are obtained and a data generation process for it is provided. In the following sections, parameter estimation for the \(\alpha\)IW distribution is addressed, and real-life data sets are used to show its modeling capability and to compare it with its rivals. The paper ends with some concluding remarks.

The \(\alpha\)IW distribution

Proposition 1

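A random variable T defined by \(T=X \times Y^{1/\alpha }\), where X \(\sim\) IW(\(\beta , \sigma\)) and Y \(\sim\) U(0, 1) are independent random variables, follows the \(\alpha\)IW distribution with pdf

$$\begin{aligned} f_T(t;\alpha ,\beta ,\sigma )=\dfrac{\alpha ^2}{\beta \sigma ^{\alpha /\beta }} \Gamma \left( \dfrac{\alpha }{\beta }\right) t^{\alpha -1} G\left( t^{-\beta }; \dfrac{\alpha }{\beta }+1, \sigma \right) ;\quad t>0,\quad \alpha ,\beta ,\sigma >0. \end{aligned}\tag{3}$$

Here, \(\Gamma (a)=\displaystyle \int _{0}^{\infty }u^{a-1}e^{-u}du\) and \(G(z;a,b)=\frac{b^a}{\Gamma (a)}\displaystyle \int _{0}^{z}u^{a-1} \exp (-b u) du\) are the gamma function and the cdf of the gamma distribution with shape a and rate b, respectively.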

Proof

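The proof uses the Jacobian transformation method. Let \(W=Y\), so that \(X=T W^{-1/\alpha }\). The Jacobian of the transformation \((t,w)\mapsto (x,y)=(t w^{-1/\alpha },w)\) is

$$\begin{aligned} J=\left| \begin{array}{cc} w^{-1/\alpha } & -\frac{1}{\alpha }t w^{-1/\alpha -1}\\ 0 & 1 \end{array}\right| =w^{-1/\alpha }. \end{aligned}$$

Then, with \(f_X\) denoting the IW pdf (1) of X, the joint pdf of T and W is

$$\begin{aligned} f_{T,W}(t,w)=f_X\left( t w^{-1/\alpha }\right) |J| =\beta \sigma t^{-\beta -1} w^{\beta /\alpha }\exp \left( -\sigma t^{-\beta } w^{\beta /\alpha }\right) ;\quad t>0,\quad 0<w<1. \end{aligned}$$

The marginal pdf of the random variable \(T\) is obtained immediately by integrating with respect to \(w\) over (0, 1) using the transformation \(t^{-\beta } w^{\frac{\beta }{\alpha }}=u\). Hereinafter, a random variable with pdf (3) is denoted by \(T \sim \alpha \text {IW}(\alpha , \beta , \sigma )\). \(\square\)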
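As a numerical sanity check of Proposition 1, the sketch below (our own Python, assuming NumPy/SciPy) evaluates the closed-form pdf (3) with `scipy.special.gammainc`, the regularized lower incomplete gamma function. It uses \(G(z;a,\sigma)=\) `gammainc(a, sigma * z)` for a gamma cdf with shape a and rate \(\sigma\). The result is compared with the direct \(\alpha\)-monotone integral \(f_T(t)=\alpha t^{\alpha-1}\int_t^{\infty}x^{-\alpha}f_X(x)\,dx\), which follows from \(T=XY^{1/\alpha}\) because \(Y^{1/\alpha}\) has pdf \(\alpha w^{\alpha-1}\) on (0,1). The sketch also checks that (3) integrates to one. Function names and parameter values are illustrative.

```python
import numpy as np
from scipy.integrate import quad
from scipy.special import gamma, gammainc


def iw_pdf(x, beta, sigma):
    """Baseline IW(beta, sigma) pdf."""
    return beta * sigma * x ** (-beta - 1.0) * np.exp(-sigma * x ** (-beta))


def aiw_pdf(t, alpha, beta, sigma):
    """Closed-form alpha-IW pdf (3).

    G(z; a, sigma), the gamma cdf with shape a and rate sigma, equals the
    regularized lower incomplete gamma function gammainc(a, sigma * z).
    """
    t = np.asarray(t, dtype=float)
    a = alpha / beta + 1.0
    G = gammainc(a, sigma * t ** (-beta))
    const = alpha ** 2 / (beta * sigma ** (alpha / beta)) * gamma(alpha / beta)
    return const * t ** (alpha - 1.0) * G


def aiw_pdf_by_integration(t, alpha, beta, sigma):
    """f_T(t) = alpha * t**(alpha - 1) * int_t^inf x**(-alpha) f_X(x) dx for T = X * Y**(1/alpha)."""
    integral, _ = quad(lambda x: x ** (-alpha) * iw_pdf(x, beta, sigma), t, np.inf)
    return alpha * t ** (alpha - 1.0) * integral


alpha, beta, sigma = 2.0, 2.0, 1.0
for t in (0.5, 1.3, 3.0):
    print(t, float(aiw_pdf(t, alpha, beta, sigma)), aiw_pdf_by_integration(t, alpha, beta, sigma))

# The closed form should integrate to one over (0, inf).
total, _ = quad(lambda u: float(aiw_pdf(u, alpha, beta, sigma)), 0.0, np.inf)
print(total)
```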

Proposition 2

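The cdf and hrf of the \(\alpha\)IW distribution are

$$\begin{aligned} F_T(t;\alpha ,\beta ,\sigma )=\exp \left( -\sigma t^{-\beta }\right) +\dfrac{t}{\alpha }f_T(t;\alpha ,\beta ,\sigma )=\exp \left( -\sigma t^{-\beta }\right) +\dfrac{\alpha }{\beta \sigma ^{\alpha /\beta }}\Gamma \left( \dfrac{\alpha }{\beta }\right) t^{\alpha } G\left( t^{-\beta }; \dfrac{\alpha }{\beta }+1, \sigma \right) \end{aligned}$$

and

$$\begin{aligned} h_T(t;\alpha ,\beta ,\sigma )=\dfrac{f_T(t;\alpha ,\beta ,\sigma )}{1-F_T(t;\alpha ,\beta ,\sigma )}, \end{aligned}$$

respectively, where \(f_T\) is the pdf in (3).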

Proof

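The results follow from the definition of the \(\alpha\)-monotone distribution, which gives \(F_T(t)=F_X(t)+t f_T(t)/\alpha\) for \(T=X\times Y^{1/\alpha }\) (see Jones18), and from the definition of the hrf, \(h_T=f_T/(1-F_T)\). \(\square\)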
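A sketch of Proposition 2 in code (Python with SciPy; function names are ours): the cdf is the IW cdf plus the correction term \(t f_T(t)/\alpha\), and the hrf is \(f_T/(1-F_T)\). The checks below compare the cdf with numerical integration of the pdf. They also test the identity \(F_T(t)=1-\exp\{-\int_0^t h_T(u)\,du\}\), which holds for any continuous lifetime distribution. The parameter values are arbitrary.

```python
import numpy as np
from scipy.integrate import quad
from scipy.special import gamma, gammainc


def aiw_pdf(t, alpha, beta, sigma):
    """alpha-IW pdf (3); gammainc(a, sigma * z) is the gamma cdf G(z; a, sigma)."""
    t = np.asarray(t, dtype=float)
    a = alpha / beta + 1.0
    const = alpha ** 2 / (beta * sigma ** (alpha / beta)) * gamma(alpha / beta)
    return const * t ** (alpha - 1.0) * gammainc(a, sigma * t ** (-beta))


def aiw_cdf(t, alpha, beta, sigma):
    """F_T(t) = exp(-sigma t^-beta) + (t / alpha) f_T(t): the IW cdf plus a correction term."""
    t = np.asarray(t, dtype=float)
    return np.exp(-sigma * t ** (-beta)) + t * aiw_pdf(t, alpha, beta, sigma) / alpha


def aiw_hrf(t, alpha, beta, sigma):
    """h_T(t) = f_T(t) / (1 - F_T(t))."""
    return aiw_pdf(t, alpha, beta, sigma) / (1.0 - aiw_cdf(t, alpha, beta, sigma))


params = (1.5, 2.5, 1.0)  # (alpha, beta, sigma), illustrative values
grid = np.array([0.4, 1.0, 2.5])
print(aiw_cdf(grid, *params))
print(aiw_hrf(grid, *params))
```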

Plots of the pdf and hrf of the \(\alpha\)IW distribution for selected parameter values are shown in Fig. 1a,b and Fig. 1c, respectively.

It should be noted that the pdf of the \(\alpha\)IW distribution can be right- or left-skewed and takes various shapes for different parameter settings; see Fig. 1a and b, respectively. Moreover, the hrf of the \(\alpha\)IW distribution, given in Fig. 1c, can be monotonically decreasing, decreasing-increasing (bathtub-shaped), or increasing-decreasing (upside-down bathtub-shaped). These properties make the \(\alpha\)IW distribution desirable for modeling reliability data.

Characterization

In this section, the proposed model is characterized by its hrf and characterizing conditions are provided as well.

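The pdf of the \(\alpha\)IW distribution can be expressed as

$$\begin{aligned} f(t)=\alpha \sigma ^{-\alpha /\beta } t^{\alpha -1}\left[ \Gamma \left( \dfrac{\alpha }{\beta }+1\right) -\Gamma \left( \dfrac{\alpha }{\beta }+1, \sigma t^{-\beta }\right) \right] ;\quad t>0, \end{aligned}\tag{4}$$

where \(\Gamma (a, z)=\displaystyle \int _{z}^{\infty }u^{a-1} \exp (-u) du\) is the upper incomplete gamma function. Therefore, the cdf, survival function, and hrf of the \(\alpha\)IW distribution can also be expressed as

$$\begin{aligned} F(t)=\exp \left( -\sigma t^{-\beta }\right) +\sigma ^{-\alpha /\beta } t^{\alpha }\left[ \Gamma \left( \dfrac{\alpha }{\beta }+1\right) -\Gamma \left( \dfrac{\alpha }{\beta }+1, \sigma t^{-\beta }\right) \right] ,\qquad S(t)=1-F(t) \end{aligned}$$

and

$$\begin{aligned} h(t)=\dfrac{f(t)}{S(t)}=\dfrac{\alpha \sigma ^{-\alpha /\beta } t^{\alpha -1}\left[ \Gamma \left( \frac{\alpha }{\beta }+1\right) -\Gamma \left( \frac{\alpha }{\beta }+1, \sigma t^{-\beta }\right) \right] }{1-\exp \left( -\sigma t^{-\beta }\right) -\sigma ^{-\alpha /\beta } t^{\alpha }\left[ \Gamma \left( \frac{\alpha }{\beta }+1\right) -\Gamma \left( \frac{\alpha }{\beta }+1, \sigma t^{-\beta }\right) \right] }, \end{aligned}\tag{5}$$

respectively. Note that the conditions \(F(0)=0\) and \(F(\infty )=1\) are satisfied, as required for a cdf.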

Proposition 3

The random variable \(T:\Omega \longrightarrow \left( 0,+\infty \right)\) has continuous pdf f(t) if and only if the hrf h(t) satisfies the following equation:

Proof

According to the definition of the hrf given in (5), it follows that

Thus, the statement of proposition immediately follows. \(\square\)

Proposition 4

The random variable \(T:\Omega \longrightarrow \left( 0,+\infty \right)\) has \(\alpha\)IW\((\alpha , \beta , \sigma )\) if and only if the hrf h(t), defined by (5), satisfies the following equation:

Proof

Necessity: Assume that \(T\sim \alpha\)IW(\(\alpha , \beta , \sigma\)) with the pdf f(t) defined by (4). Then, the natural logarithm of this pdf can be expressed as

Differentiating both sides of this equality with respect to t, we get:

Thus, according to (5), (6), and (8), it follows:

which after certain simplification yields (7).

Sufficiency: Suppose that (7) holds. After integration, it can be rewritten as follows:

From the above differential equation, we have

Integrating (9) from 0 to t, we obtain:

which after simplification yields

where the constant of integration is determined by the conditions \(F(0)=0\) and \(F(\infty )=1\). Thus, the function F(t) is indeed the cdf of \(\alpha \text {IW}(\alpha ,\beta ,\sigma )\), which completes the proof. \(\square\)

Proposition 5

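The r-th moment of the \(\alpha\)IW distribution is given by

$$\begin{aligned} E\left( T^{r}\right) =\dfrac{\alpha }{\alpha +r}\,\sigma ^{r/\beta }\,\Gamma \left( 1-\dfrac{r}{\beta }\right) ;\quad -\alpha <r<\beta . \end{aligned}$$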

Proof

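The random variable \(T\sim \alpha \text {IW}(\alpha , \beta , \sigma )\) has the stochastic representation \(T=X \times Y^{\frac{1}{\alpha }}\), where X \(\sim\) IW(\(\beta , \sigma\)) and Y \(\sim\) U(0, 1) are independent. Then,

$$\begin{aligned} E\left( T^{r}\right) =E\left( X^{r}\right) E\left( Y^{r/\alpha }\right) =\sigma ^{r/\beta }\Gamma \left( 1-\dfrac{r}{\beta }\right) \dfrac{\alpha }{\alpha +r}, \end{aligned}$$

since \(X^{-\beta }\) is exponentially distributed with rate \(\sigma\) and \(E(Y^{r/\alpha })=\int _0^1 y^{r/\alpha }dy=\alpha /(\alpha +r)\), which completes the proof. \(\square\)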
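The moment formula can be checked numerically. In this sketch (ours, Python/SciPy), \(E(X^r)=\sigma^{r/\beta}\Gamma(1-r/\beta)\) uses the fact that \(X^{-\beta}\) is exponential with rate \(\sigma\) under the IW cdf \(\exp(-\sigma x^{-\beta})\), and \(E(Y^{r/\alpha})=\alpha/(\alpha+r)\). The first factor is finite only for \(r<\beta\) and the second only for \(r>-\alpha\); the function enforces both conditions.

```python
import numpy as np
from scipy.integrate import quad
from scipy.special import gamma, gammainc


def aiw_pdf(t, alpha, beta, sigma):
    """alpha-IW pdf (3), with gammainc(a, sigma * z) = G(z; a, sigma)."""
    t = np.asarray(t, dtype=float)
    const = alpha ** 2 / (beta * sigma ** (alpha / beta)) * gamma(alpha / beta)
    return const * t ** (alpha - 1.0) * gammainc(alpha / beta + 1.0, sigma * t ** (-beta))


def aiw_moment(r, alpha, beta, sigma):
    """E(T^r) = E(X^r) * E(Y^(r/alpha)) = sigma^(r/beta) * Gamma(1 - r/beta) * alpha / (alpha + r)."""
    if not (-alpha < r < beta):
        raise ValueError("E(T^r) is finite only for -alpha < r < beta")
    return sigma ** (r / beta) * gamma(1.0 - r / beta) * alpha / (alpha + r)


alpha, beta, sigma = 2.0, 3.0, 1.5
for r in (-0.5, 1.0, 2.0):
    numeric, _ = quad(lambda t: t ** r * float(aiw_pdf(t, alpha, beta, sigma)), 0.0, np.inf)
    print(r, aiw_moment(r, alpha, beta, sigma), numeric)
```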

Proposition 6

The random variable T, having pdf given in (3), has an \(\alpha\)-monotone density since its pdf satisfies the condition

Proof

From Proposition 1,

By using the variable transformation \(tw^{-1/\alpha }=u\), \(f_{T}(t)\) is expressed as

It is seen that \(f_{T}(t)\) can be expressed as

Then,

The proof is then completed as follows.

\(\square\)
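Proposition 6 can be illustrated numerically. The sketch below (ours) takes the \(\alpha\)-monotone condition to mean that \(t^{1-\alpha}f(t)\) is nonincreasing on \((0,\infty)\). This is our assumption about the form of the condition, and it is what the representation \(f_T(t)=\alpha t^{\alpha-1}\int_t^\infty x^{-\alpha}f_X(x)\,dx\) delivers. For the \(\alpha\)IW pdf, \(t^{1-\alpha}f_T(t)\) is a constant times \(G(t^{-\beta};\alpha/\beta+1,\sigma)\), which decreases in t. A grid check only illustrates the property and does not prove it; the gamma-shaped density in the last line is a hypothetical counterexample.

```python
import numpy as np
from scipy.special import gamma, gammainc


def aiw_pdf(t, alpha, beta, sigma):
    """alpha-IW pdf (3), with gammainc(a, sigma * z) = G(z; a, sigma)."""
    t = np.asarray(t, dtype=float)
    const = alpha ** 2 / (beta * sigma ** (alpha / beta)) * gamma(alpha / beta)
    return const * t ** (alpha - 1.0) * gammainc(alpha / beta + 1.0, sigma * t ** (-beta))


def is_alpha_monotone_on_grid(pdf, alpha, grid, tol=1e-12):
    """Check that t**(1 - alpha) * pdf(t) is nonincreasing along an increasing grid."""
    g = grid ** (1.0 - alpha) * pdf(grid)
    return bool(np.all(np.diff(g) <= tol * np.max(np.abs(g))))


grid = np.logspace(-2, 2, 400)
for alpha, beta, sigma in [(0.5, 1.0, 1.0), (2.0, 2.0, 1.0), (4.0, 1.5, 0.5)]:
    ok = is_alpha_monotone_on_grid(lambda t: aiw_pdf(t, alpha, beta, sigma), alpha, grid)
    print(alpha, beta, sigma, ok)

# A gamma(5)-shaped density rises before it falls, so it is not 1-monotone.
print(is_alpha_monotone_on_grid(lambda t: t ** 4 * np.exp(-t), 1.0, grid))
```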

The \(\alpha\)IW distribution is obtained as a scale-mixture between the IW and U(0,1) distributions as shown in Proposition 7.

Proposition 7

Let \(T|Y=y\sim \text {IW}(\beta , \sigma y^{\frac{\beta }{\alpha }})\) and \(Y\sim \text {U}(0, 1)\), then \(T\sim \alpha \text {IW}(\alpha ,\beta ,\sigma )\). Therefore, the \(\alpha \text {IW}(\alpha ,\beta , \sigma )\) distribution is a scale-mixture between the \(\text {IW}(\beta , \sigma y^{\frac{\beta }{\alpha }})\) and U(0, 1) distributions.

Proof

The proof is completed by integrating the conditional pdf of \(T\) given \(Y=y\) over \(y\in (0,1)\) with the transformation \(y^{\beta /\alpha }t^{-\beta }=u\). \(\square\)
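The scale-mixture form of Proposition 7 can be checked directly. Averaging the conditional \(\text{IW}(\beta,\sigma y^{\beta/\alpha})\) pdf over \(y\in(0,1)\) should reproduce the \(\alpha\)IW pdf. A minimal Python sketch (ours; function names are illustrative):

```python
import numpy as np
from scipy.integrate import quad
from scipy.special import gamma, gammainc


def iw_pdf(x, beta, sigma):
    """IW(beta, sigma) pdf."""
    return beta * sigma * x ** (-beta - 1.0) * np.exp(-sigma * x ** (-beta))


def aiw_pdf(t, alpha, beta, sigma):
    """Closed-form alpha-IW pdf (3)."""
    const = alpha ** 2 / (beta * sigma ** (alpha / beta)) * gamma(alpha / beta)
    return const * t ** (alpha - 1.0) * gammainc(alpha / beta + 1.0, sigma * t ** (-beta))


def mixture_pdf(t, alpha, beta, sigma):
    """int_0^1 f_IW(t; beta, sigma * y**(beta/alpha)) dy: average the conditional pdf over Y ~ U(0, 1)."""
    value, _ = quad(lambda y: iw_pdf(t, beta, sigma * y ** (beta / alpha)), 0.0, 1.0)
    return value


for t in (0.3, 1.0, 2.5):
    print(t, mixture_pdf(t, 2.0, 3.0, 1.0), aiw_pdf(t, 2.0, 3.0, 1.0))
```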

It can be seen from the propositions given above that the \(\alpha\)-monotone concept is easy to apply and adds useful properties to the baseline distribution, i.e., the IW distribution. For example, the cdf of the \(\alpha\)IW distribution is expressed in terms of the cdf of the IW distribution and the pdf of the \(\alpha\)IW distribution. In addition, the moments of the \(\alpha\)IW distribution can easily be obtained from the moments of the IW and uniform distributions. Furthermore, the \(\alpha\)IW distribution can be written as a scale-mixture between the IW and uniform distributions, which may make it attractive in applications.

Data generation

The following steps generate random variates from the \(\alpha \text {IW}(\alpha , \beta , \sigma )\) distribution:

-

Step 1

Generate a random variate x from the IW(\(\beta , \sigma\)) distribution via the equation

$$\begin{aligned} x=\left[ -\sigma ^{-1}\ln (p)\right] ^{-1\big /\beta }, \quad \text{where} \quad p\sim \text {U}(0,1). \end{aligned}$$

-

Step 2

Generate random variate y from the U(0,1) distribution.

-

Step 3

Obtain the random variate from the \(\alpha \text {IW}(\alpha , \beta , \sigma )\) distribution via the equation

$$\begin{aligned} t=x \times y^{1/\alpha }. \end{aligned}$$

Note that the steps given above follow from the definition of a random variable that follows an \(\alpha\)IW distribution; see Proposition 1.
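Steps 1 to 3 translate directly into vectorized code. The Python sketch below (ours) uses NumPy's `Generator` API rather than the authors' MATLAB implementation. It draws a sample and compares the empirical cdf with the closed-form cdf \(\exp(-\sigma t^{-\beta})+\sigma^{-\alpha/\beta}t^{\alpha}\gamma(\alpha/\beta+1,\sigma t^{-\beta})\), where \(\gamma\) is the lower incomplete gamma function. The seed and sample size are arbitrary.

```python
import numpy as np
from scipy.special import gamma, gammainc


def rvs_aiw(n, alpha, beta, sigma, rng=None):
    """Draw n variates from alpha-IW(alpha, beta, sigma) following Steps 1-3."""
    rng = np.random.default_rng(rng)
    p = rng.uniform(size=n)                    # Step 1: p ~ U(0, 1) ...
    x = (-np.log(p) / sigma) ** (-1.0 / beta)  # ... and x = [-sigma^{-1} ln p]^{-1/beta} ~ IW(beta, sigma)
    y = rng.uniform(size=n)                    # Step 2: y ~ U(0, 1), independent of x
    return x * y ** (1.0 / alpha)              # Step 3: t = x * y^{1/alpha}


def aiw_cdf(t, alpha, beta, sigma):
    """Closed-form cdf, used here only to check the sampler."""
    t = np.asarray(t, dtype=float)
    a = alpha / beta + 1.0
    z = sigma * t ** (-beta)
    return np.exp(-z) + t ** alpha * sigma ** (-alpha / beta) * gamma(a) * gammainc(a, z)


sample = rvs_aiw(200_000, alpha=2.0, beta=3.0, sigma=1.0, rng=12345)
for q in (0.5, 1.0, 2.0):
    print(q, np.mean(sample <= q), float(aiw_cdf(q, 2.0, 3.0, 1.0)))
```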

Related distributions

The \(\alpha\)IW distribution includes some distributions as sub-models, converges to some other well-known distributions as limiting cases, and yields the slash Weibull distribution under a variable transformation. These distributions are given briefly in this section.

Sub-models

Let \(T \sim \alpha \text {IW}(\alpha , \beta , \sigma )\); then, the following submodels are obtained.

-

i.

If \(\beta =1\), then T has an \(\alpha\)-monotone inverse exponential density given below

$$\begin{aligned} g(t; \alpha , \sigma )=\dfrac{\alpha ^2}{\sigma ^{\alpha }} \Gamma \left( \alpha \right) t^{\alpha -1} G\left( t^{-1}; \alpha +1, \sigma \right) ;\quad t>0,\quad \alpha , \sigma >0. \end{aligned}$$

-

ii.

If \(\beta =2\), then T has an \(\alpha\)-monotone inverse Rayleigh density as follows:

$$\begin{aligned} g(t; \alpha , \sigma )=\dfrac{\alpha ^2}{2 \sigma ^{\alpha /2}} \Gamma \left( \dfrac{\alpha }{2}\right) t^{\alpha -1} G\left( t^{-2}; \dfrac{\alpha }{2}+1, \sigma \right) ;\quad t>0,\quad \alpha , \sigma >0. \end{aligned}$$
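The two submodels are the pdf (3) with \(\beta\) fixed at 1 and 2, which is easy to confirm in code. The sketch below (ours) uses `scipy.stats.gamma.cdf` with `scale = 1/rate` for \(G(z;a,\sigma)\), so it also independently checks that the gamma cdf in Proposition 1 is parameterized by shape and rate.

```python
import numpy as np
from scipy.special import gamma as gamma_fn
from scipy.stats import gamma as gamma_dist


def G(z, shape, rate):
    """Gamma cdf G(z; shape, rate); SciPy parameterizes by scale = 1 / rate."""
    return gamma_dist.cdf(z, shape, scale=1.0 / rate)


def aiw_pdf(t, alpha, beta, sigma):
    """General alpha-IW pdf (3)."""
    return (alpha ** 2 / (beta * sigma ** (alpha / beta)) * gamma_fn(alpha / beta)
            * t ** (alpha - 1.0) * G(t ** (-beta), alpha / beta + 1.0, sigma))


def aie_pdf(t, alpha, sigma):
    """alpha-monotone inverse exponential density (beta = 1)."""
    return alpha ** 2 / sigma ** alpha * gamma_fn(alpha) * t ** (alpha - 1.0) * G(1.0 / t, alpha + 1.0, sigma)


def air_pdf(t, alpha, sigma):
    """alpha-monotone inverse Rayleigh density (beta = 2)."""
    return (alpha ** 2 / (2.0 * sigma ** (alpha / 2.0)) * gamma_fn(alpha / 2.0)
            * t ** (alpha - 1.0) * G(t ** (-2.0), alpha / 2.0 + 1.0, sigma))


t = np.linspace(0.1, 5.0, 50)
print(np.allclose(aie_pdf(t, 1.7, 0.8), aiw_pdf(t, 1.7, 1.0, 0.8)))  # True
print(np.allclose(air_pdf(t, 1.7, 0.8), aiw_pdf(t, 1.7, 2.0, 0.8)))  # True
```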

Limiting distributions

Let \(T \sim \alpha \text {IW}(\alpha , \beta , \sigma )\).

-

i.

The stochastic representation of the random variable T having the \(\alpha\)-monotone distribution is \(T=X \times Y^{\frac{1}{\alpha }}\). Since \(Y^{1/\alpha }\rightarrow 1\) as \(\alpha \rightarrow \infty\) for any \(0<Y<1\), the random variable T converges to X. As a result, if \(\alpha \rightarrow \infty\), then \(\alpha \text {IW}(\alpha , \beta , \sigma )\) converges to IW(\(\beta , \sigma\)).

-

ii.

If \(\alpha \rightarrow \infty\) and \(\beta =1\), then \(\alpha \text {IW}(\alpha , \beta , \sigma )\) converges to the inverse exponential distribution

$$\begin{aligned} g(t; \sigma )=\sigma t^{-2}\exp \big ({-\sigma t^{-1}}\big ); \quad t>0, \quad \sigma >0. \end{aligned}$$

-

iii.

If \(\alpha \rightarrow \infty\) and \(\beta =2\), then \(\alpha \text {IW}(\alpha , \beta , \sigma )\) converges to the inverse Rayleigh distribution

$$\begin{aligned} g(t; \sigma )=2 \sigma t^{-3}\exp \big ({-\sigma t^{-2}}\big ); \quad t>0, \quad \sigma >0. \end{aligned}$$
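The limits in i–iii can also be seen directly from the density. As a worked derivation (ours), write (3) with the lower incomplete gamma function \(\gamma (a,z)=\Gamma (a)-\Gamma (a,z)\), i.e., \(f_T(t)=\alpha \sigma ^{-\alpha /\beta } t^{\alpha -1}\gamma (\alpha /\beta +1,\sigma t^{-\beta })\). Then apply the standard series \(\gamma (a,z)=z^{a}e^{-z}\sum _{k\ge 0}z^{k}/[a(a+1)\cdots (a+k)]\):

$$\begin{aligned} f_T(t)&=\alpha \sigma \, t^{-\beta -1}\exp \left( -\sigma t^{-\beta }\right) \sum _{k=0}^{\infty }\frac{\left( \sigma t^{-\beta }\right) ^{k}}{\prod _{j=0}^{k}\left( \frac{\alpha }{\beta }+1+j\right) }\\ &=f_X(t)\left[ \frac{\alpha /\beta }{\alpha /\beta +1}+\frac{\alpha }{\beta }\sum _{k=1}^{\infty }\frac{\left( \sigma t^{-\beta }\right) ^{k}}{\prod _{j=0}^{k}\left( \frac{\alpha }{\beta }+1+j\right) }\right] \longrightarrow f_X(t)=\beta \sigma t^{-\beta -1}\exp \left( -\sigma t^{-\beta }\right) \quad (\alpha \rightarrow \infty ). \end{aligned}$$

Let \(a=\alpha /\beta +1\) and \(z=\sigma t^{-\beta }\). The sum over \(k\ge 1\) inside the brackets is at most \(\frac{\alpha /\beta }{a}\sum _{k\ge 1}(z/a)^{k}\), which vanishes as \(\alpha \rightarrow \infty\) for fixed t, while the first term tends to 1. Hence \(f_T\rightarrow f_X\) pointwise, and by Scheffé's lemma the distributions converge. Setting \(\beta =1\) and \(\beta =2\) gives ii and iii.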

Under variable transformation

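Let \(T \sim \alpha \text {IW}(\alpha , \beta , \sigma )\). Then, the random variable Z defined by \(Z=T^{-1}\) has the pdf

$$\begin{aligned} f_Z(z;\alpha ,\beta ,\sigma )=\dfrac{\alpha ^2}{\beta \sigma ^{\alpha /\beta }} \Gamma \left( \dfrac{\alpha }{\beta }\right) z^{-\alpha -1} G\left( z^{\beta }; \dfrac{\alpha }{\beta }+1, \sigma \right) ;\quad z>0, \end{aligned}$$

and follows the slash Weibull distribution under a certain reparametrization. Note that the slash exponential and slash Rayleigh distributions are sub-models of the slash Weibull distribution.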
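The link to the slash Weibull distribution follows from the stochastic representation. A short derivation (ours, under the IW parameterization with cdf \(\exp(-\sigma x^{-\beta})\)):

$$\begin{aligned} Z=T^{-1}=\frac{X^{-1}}{Y^{1/\alpha }}=\frac{W}{Y^{1/\alpha }},\qquad P(W\le w)=P\left( X\ge w^{-1}\right) =1-\exp \left( -\sigma w^{\beta }\right) ,\quad w>0. \end{aligned}$$

Thus \(W=X^{-1}\) is Weibull with shape \(\beta\), independent of \(Y\sim \text {U}(0,1)\), and Z is a Weibull variable divided by \(Y^{1/\alpha }\); this is the slash construction with tail parameter \(\alpha\). The pdf of Z then follows from \(f_Z(z)=z^{-2}f_T(z^{-1})\).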

Estimation

Let \(\underset{\sim }{t}=(t_1, t_2, \cdots , t_n)\) be the observed values of a random sample from the \(\alpha \text {IW}(\alpha , \beta , \sigma )\) distribution. Then, estimation methods can be used to obtain the estimators of the parameters \(\alpha\), \(\beta\), and \(\sigma\), say \({\hat{\alpha }}, {\hat{\beta }}\), and \({\hat{\sigma }}\), of the \(\alpha\)IW distribution. In this study, the well-known maximum likelihood (ML), maximum product of spacing (MPS), and least squares (LS) estimation methods are considered. The efficiencies of the ML, MPS, and LS estimation methods are also compared in a Monte-Carlo simulation study. Note that the optimization tools “fminsearch” and “fminunc” available in MATLAB2015b can be used to find the ML, MPS, and LS estimates of the parameters \(\alpha , \beta\), and \(\sigma\).

ML estimation

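The ML method is based on maximizing the log-likelihood (\(\ln L\)) function

$$\begin{aligned} \ln L=n\ln \left( \dfrac{\alpha ^2}{\beta \sigma ^{\alpha /\beta }}\right) +n\ln \Gamma \left( \dfrac{\alpha }{\beta }\right) +(\alpha -1)\sum _{i=1}^{n}\ln t_i+\sum _{i=1}^{n}\ln G\left( t_i^{-\beta }; \dfrac{\alpha }{\beta }+1, \sigma \right) . \end{aligned}\tag{10}$$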

After taking partial derivative of the \(\ln L\) function given in (10) with respect to the parameters of interest and setting them equal to 0, the following likelihood equations

and

are obtained. Here, \(\Psi {(\cdot )}\) represents the digamma function. Since the likelihood Eqs. (11–13) include nonlinear functions of the parameters \(\alpha\), \(\beta\), and \(\sigma\), they cannot be solved explicitly. Therefore, the ML estimates of the parameters \(\alpha\), \(\beta\), and \(\sigma\), say \({\hat{\alpha }}_{ML}\), \({\hat{\beta }}_{ML}\), and \({\hat{\sigma }}_{ML}\), are obtained by solving the likelihood equations (11–13) simultaneously by numerical methods. Note that the ML estimators have approximately a \(N_{3}(\Theta , \varvec{I^{-1}(\Theta )})\) distribution, where \(\Theta =(\alpha , \beta , \sigma )^{\top }\) and \(\varvec{I(\Theta )}\) is the expected information matrix. If \(\varvec{I(\Theta )}\) cannot be obtained explicitly, the observed information matrix \(\varvec{J(\Theta )}=-\varvec{H}(\Theta )\), where \(\varvec{H}\) denotes the Hessian matrix of \(\ln L\), evaluated at \({\hat{\Theta }}\) can be used instead. Therefore, asymptotic confidence intervals for the parameters \(\alpha , \beta\), and \(\sigma\) are constructed by using the matrix \(\varvec{J}({\hat{\Theta }})\). The entries of \(\varvec{H}\) are given in an appendix in the Supplementary Material of the paper. ML estimation of the parameters \(\alpha\), \(\beta\), and \(\sigma\) under a progressively Type-II censored sample is also provided there.
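A minimal Python sketch of the ML step follows (ours, not the authors' MATLAB code). Instead of solving (11–13) directly, it minimizes \(-\ln L\) from (10) with SciPy's Nelder-Mead simplex method, which is derivative-free in the spirit of “fminsearch”. The optimization runs on log-parameters so that \(\alpha\), \(\beta\), \(\sigma\) stay positive, and \(\mathbf{J}(\hat{\Theta})\) is approximated by a central-difference Hessian of \(-\ln L\). The log-parameterization, the starting values (1, 1, 1), and the step size are assumptions. The log-density uses \(\ln f_T(t)=\ln\alpha+(\alpha-1)\ln t-(\alpha/\beta)\ln\sigma+\ln\Gamma(\alpha/\beta+1)+\ln G(t^{-\beta};\alpha/\beta+1,\sigma)\), i.e. (3) rewritten with \(\Gamma(\alpha/\beta+1)=(\alpha/\beta)\Gamma(\alpha/\beta)\).

```python
import numpy as np
from scipy.optimize import minimize
from scipy.special import gammainc, gammaln


def aiw_logpdf(t, alpha, beta, sigma):
    """ln f_T(t) with a = alpha/beta + 1 and ln G(t^-beta; a, sigma) = ln gammainc(a, sigma t^-beta)."""
    a = alpha / beta + 1.0
    with np.errstate(divide="ignore", over="ignore", invalid="ignore"):
        log_g = np.log(gammainc(a, sigma * t ** (-beta)))  # -inf if G underflows to 0
        return (np.log(alpha) + (alpha - 1.0) * np.log(t) - (alpha / beta) * np.log(sigma)
                + gammaln(a) + log_g)


def neg_loglik(theta, t):
    """-ln L at theta = (alpha, beta, sigma); inf outside the parameter space or on underflow."""
    alpha, beta, sigma = theta
    if min(alpha, beta, sigma) <= 0:
        return np.inf
    value = -np.sum(aiw_logpdf(t, alpha, beta, sigma))
    return float(value) if np.isfinite(value) else np.inf


def fit_ml(t, start=(1.0, 1.0, 1.0)):
    """ML estimates via Nelder-Mead on log-parameters, so that alpha, beta, sigma stay positive."""
    objective = lambda log_theta: neg_loglik(np.exp(log_theta), t)
    res = minimize(objective, np.log(start), method="Nelder-Mead",
                   options={"xatol": 1e-8, "fatol": 1e-10, "maxiter": 10_000, "maxfev": 10_000})
    return np.exp(res.x), res


def observed_information(theta, t, rel_step=1e-4):
    """Central-difference Hessian of -ln L at theta, approximating J(theta) = -H(theta)."""
    theta = np.asarray(theta, dtype=float)
    h = rel_step * theta
    k = theta.size
    J = np.empty((k, k))
    for i in range(k):
        for j in range(i, k):
            def f(si, sj):
                th = theta.copy()
                th[i] += si * h[i]
                th[j] += sj * h[j]
                return neg_loglik(th, t)
            J[i, j] = J[j, i] = (f(1, 1) - f(1, -1) - f(-1, 1) + f(-1, -1)) / (4.0 * h[i] * h[j])
    return J


# Illustration on simulated data with (alpha, beta, sigma) = (2, 3, 1), generated by Steps 1-3.
rng = np.random.default_rng(7)
n = 300
x = (-np.log(rng.uniform(size=n))) ** (-1.0 / 3.0)  # IW(beta = 3, sigma = 1)
t = x * rng.uniform(size=n) ** (1.0 / 2.0)           # alpha = 2
theta_hat, res = fit_ml(t)
J = observed_information(theta_hat, t)
se = np.sqrt(np.diag(np.linalg.inv(J)))
for name, est, s in zip(("alpha", "beta", "sigma"), theta_hat, se):
    print(f"{name}: {est:.3f}  approx. 95% CI ({est - 1.96 * s:.3f}, {est + 1.96 * s:.3f})")
```

The printed intervals are Wald-type, \(\hat{\theta}_j\pm 1.96\sqrt{[\mathbf{J}^{-1}]_{jj}}\). If the numerical Hessian is not positive definite (e.g. because the optimizer stopped early), the standard errors are not meaningful.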

MPS estimation

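The MPS estimates of the parameters \(\alpha\), \(\beta\), and \(\sigma\), say \({\hat{\alpha }}_{MPS}\), \({\hat{\beta }}_{MPS}\), and \({\hat{\sigma }}_{MPS}\), of the \(\alpha\)IW distribution are the points at which the objective function

$$\begin{aligned} S(\alpha ,\beta ,\sigma )=\dfrac{1}{n+1}\sum _{i=1}^{n+1}\ln \left[ F_T(t_{(i)};\alpha ,\beta ,\sigma )-F_T(t_{(i-1)};\alpha ,\beta ,\sigma )\right] \end{aligned}$$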

attains its maximum. Here, \(t_{(\cdot )}\) denotes an ordered observation, i.e., \(t_{(1)} \le t_{(2)} \le \cdots \le t_{(n-1)} \le t_{(n)}\). Note that \(t_{(0)}\) and \(t_{(n+1)}\) are the values at which \(F_{T}(t_{(0)}; \alpha , \beta , \sigma )\equiv 0\) and \(F_{T}(t_{(n+1)}; \alpha , \beta , \sigma )\equiv 1\); see Bagci et al.22 and references therein.
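The MPS criterion can be coded in the same way. In this sketch (ours), the spacings are \(D_i=F_T(t_{(i)})-F_T(t_{(i-1)})\), i = 1, ..., n+1, with \(F_T(t_{(0)})=0\) and \(F_T(t_{(n+1)})=1\). The cdf is evaluated on the log scale for numerical stability. The floor of 1e-300 on the spacings guards against ties or rounding; it is our assumption, not part of the method. As in the ML sketch, the Nelder-Mead search on log-parameters and the starting values are illustrative choices.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.special import gammainc, gammaln


def rvs_aiw(n, alpha, beta, sigma, rng):
    """Steps 1-3 of the data generation subsection."""
    x = (-np.log(rng.uniform(size=n)) / sigma) ** (-1.0 / beta)
    return x * rng.uniform(size=n) ** (1.0 / alpha)


def aiw_cdf(t, alpha, beta, sigma):
    """F_T(t) = exp(-z) + t f_T(t) / alpha, with z = sigma t^-beta and the second term on the log scale."""
    a = alpha / beta + 1.0
    z = sigma * t ** (-beta)
    with np.errstate(divide="ignore", over="ignore", invalid="ignore"):
        log_term = (alpha * np.log(t) - (alpha / beta) * np.log(sigma)
                    + gammaln(a) + np.log(gammainc(a, z)))
        return np.exp(-z) + np.exp(log_term)


def neg_mps(log_theta, t_sorted):
    """Negative MPS criterion -(1/(n+1)) sum_{i=1}^{n+1} ln D_i on log-parameters."""
    alpha, beta, sigma = np.exp(log_theta)
    F = np.clip(aiw_cdf(t_sorted, alpha, beta, sigma), 0.0, 1.0)
    spacings = np.diff(np.concatenate(([0.0], F, [1.0])))  # F(t_(0)) = 0, F(t_(n+1)) = 1
    spacings = np.maximum(spacings, 1e-300)  # guard against ties/rounding (our assumption)
    with np.errstate(invalid="ignore"):
        value = -np.mean(np.log(spacings))
    return float(value) if np.isfinite(value) else np.inf


def fit_mps(t, start=(1.0, 1.0, 1.0)):
    """Minimize the negative MPS criterion with Nelder-Mead on log-parameters."""
    t_sorted = np.sort(np.asarray(t, dtype=float))
    res = minimize(neg_mps, np.log(start), args=(t_sorted,), method="Nelder-Mead",
                   options={"maxiter": 10_000, "maxfev": 10_000})
    return np.exp(res.x), res


rng = np.random.default_rng(42)
t = rvs_aiw(300, 2.0, 3.0, 1.0, rng)
theta_mps, res = fit_mps(t)
print("MPS estimates (alpha, beta, sigma):", np.round(theta_mps, 3))
```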

LS estimation

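In the LS method, for the case of the \(\alpha\)IW distribution, the aim is to minimize the objective function

$$\begin{aligned} LS(\alpha ,\beta ,\sigma )=\sum _{i=1}^{n}\left[ F_T(t_{(i)};\alpha ,\beta ,\sigma )-\dfrac{i}{n+1}\right] ^2 \end{aligned}$$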

with respect to the parameters of interest (\(\alpha\), \(\beta\), and \(\sigma\)); the resulting values are called the LS estimates of the parameters \(\alpha\), \(\beta\), and \(\sigma\), i.e., \({\hat{\alpha }}_{LS}\), \({\hat{\beta }}_{LS}\), and \({\hat{\sigma }}_{LS}\); see Acitas and Arslan23 and references therein for further information.
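A matching LS sketch (ours). It assumes the common plotting positions i/(n+1) for the ordered sample, i.e. it minimizes \(\sum_{i=1}^{n}[F_T(t_{(i)})-i/(n+1)]^2\), and reuses the Nelder-Mead search on log-parameters. The target values used by the authors may differ.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.special import gammainc, gammaln


def rvs_aiw(n, alpha, beta, sigma, rng):
    """Steps 1-3 of the data generation subsection."""
    x = (-np.log(rng.uniform(size=n)) / sigma) ** (-1.0 / beta)
    return x * rng.uniform(size=n) ** (1.0 / alpha)


def aiw_cdf(t, alpha, beta, sigma):
    """F_T(t) = exp(-z) + t f_T(t) / alpha, with z = sigma t^-beta and the second term on the log scale."""
    a = alpha / beta + 1.0
    z = sigma * t ** (-beta)
    with np.errstate(divide="ignore", over="ignore", invalid="ignore"):
        log_term = (alpha * np.log(t) - (alpha / beta) * np.log(sigma)
                    + gammaln(a) + np.log(gammainc(a, z)))
        return np.exp(-z) + np.exp(log_term)


def ls_objective(log_theta, t_sorted):
    """sum_i [F_T(t_(i)) - i/(n+1)]^2 on log-parameters (plotting positions i/(n+1) assumed)."""
    alpha, beta, sigma = np.exp(log_theta)
    n = t_sorted.size
    targets = np.arange(1, n + 1) / (n + 1)
    with np.errstate(invalid="ignore"):
        value = np.sum((aiw_cdf(t_sorted, alpha, beta, sigma) - targets) ** 2)
    return float(value) if np.isfinite(value) else np.inf


def fit_ls(t, start=(1.0, 1.0, 1.0)):
    """Minimize the LS objective with Nelder-Mead on log-parameters."""
    t_sorted = np.sort(np.asarray(t, dtype=float))
    res = minimize(ls_objective, np.log(start), args=(t_sorted,), method="Nelder-Mead",
                   options={"maxiter": 10_000, "maxfev": 10_000})
    return np.exp(res.x), res


rng = np.random.default_rng(42)
t = rvs_aiw(300, 2.0, 3.0, 1.0, rng)
theta_ls, res = fit_ls(t)
print("LS estimates (alpha, beta, sigma):", np.round(theta_ls, 3))
```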

Monte-Carlo simulation

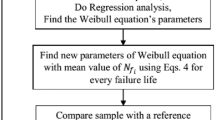

In this subsection, a Monte-Carlo simulation study is conducted to compare the efficiencies of the ML, MPS, and LS estimation methods. Random samples are generated from the \(\alpha\)IW distribution, as presented in the data generation subsection, for the sample sizes 100, 200, and 300 and different parameter settings; see Table 1.

Note that the scale parameter \(\sigma\) is set to 1 throughout all simulation scenarios without loss of generality. All simulations are conducted with \(\lfloor 100,000/n \rfloor\) Monte-Carlo runs in MATLAB2015b, where \(\lfloor \cdot \rfloor\) denotes the floor (integer part) function. The ML, MPS, and LS estimates of the parameters are obtained by using the optimization tool “fminunc” available in MATLAB2015b. The efficiencies of the ML, MPS, and LS methods are compared using the bias, variance, and mean squared error (MSE) criteria. The simulation results are given in Table 2 and summarized as follows.

-

Scenario 1: The ML method gives the smallest bias values for \(\alpha\) for all sample sizes. However, in terms of the MSE criterion, the MPS method yields the smallest values for \(\alpha\) for all sample sizes. For \(\beta\) and \(\sigma\), the ML, MPS, and LS methods have negligible biases and small variances for all sample sizes n.

-

Scenario 2: The ML, MPS, and LS methods have negligible biases and small variances for \(\alpha\) and \(\beta\) for all sample sizes; however, the LS method gives the largest MSE values for \(\sigma\). When \(n=300\), the ML, MPS, and LS methods show more or less the same performance.

-

Scenario 3: The MPS method has the largest bias values and the LS method gives the largest MSE values for \(\alpha\) for all sample sizes. However, the ML and MPS methods give more or less the same bias and MSE values for \(\beta\) and \(\sigma\) for all sample sizes.

-

Scenario 4: The ML, MPS, and LS methods yield negligible bias and small MSE values for \(\alpha\), \(\beta\), and \(\sigma\) when \(n=200\) and \(n=300\). However, none of the ML, MPS, and LS methods performs well for \(\sigma\) when \(n=100\).

-

Scenario 5: The LS method gives the largest MSE values for \(\alpha\), \(\beta\), and \(\sigma\) for all sample sizes. The ML, MPS, and LS methods have small biases and small variances for \(\alpha\), \(\beta\), and \(\sigma\) when \(n=200\) and \(n=300\).

-

Scenario 6: The MPS method produces the smallest bias and MSE values for \(\alpha\), \(\beta\), and \(\sigma\) when \(n=100\). The ML, MPS, and LS methods show more or less the same performance for \(\alpha\), \(\beta\), and \(\sigma\) when \(n=300\).

-

Scenario 7: The ML, MPS, and LS methods have negligible bias values for \(\alpha\) and \(\beta\); however, the LS method gives the largest MSE values for \(\alpha\) and \(\sigma\), except when \(n=300\). The MPS and ML methods have similar efficiencies for \(\alpha\), \(\beta\), and \(\sigma\) for all sample sizes n.

-

Scenario 8: The LS method shows the worst performance for \(\alpha\) when \(n=100\). For \(\beta\) and \(\sigma\), the ML, MPS, and LS methods produce more or less the same results when \(n=200\) and \(n=300\).

To sum up, the ML and MPS methods perform very similarly and are one step ahead of the LS method. It should also be noted that, as expected, the simulated MSE values of the ML, MPS, and LS estimates of each parameter decrease as the sample size increases.
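The simulation design can be sketched in a few lines of Python (ours). Only the ML method is shown; MPS or LS objectives can be substituted in the same way. The number of runs defaults to \(\lfloor 100,000/n \rfloor\) as in the study. Starting each optimization at the true parameter values is a simplifying assumption of this sketch. Bias, variance, and MSE are computed per parameter, and with the population variance (ddof = 0) they satisfy MSE = variance + bias² exactly.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.special import gammainc, gammaln


def rvs_aiw(n, alpha, beta, sigma, rng):
    """Data generation Steps 1-3: t = x * y^(1/alpha), x ~ IW(beta, sigma), y ~ U(0, 1)."""
    x = (-np.log(rng.uniform(size=n)) / sigma) ** (-1.0 / beta)
    return x * rng.uniform(size=n) ** (1.0 / alpha)


def neg_loglik(log_theta, t):
    """-ln L on log-parameters; inf where the log-density cannot be evaluated."""
    alpha, beta, sigma = np.exp(log_theta)
    a = alpha / beta + 1.0
    with np.errstate(divide="ignore", over="ignore", invalid="ignore"):
        ll = (np.log(alpha) + (alpha - 1.0) * np.log(t) - (alpha / beta) * np.log(sigma)
              + gammaln(a) + np.log(gammainc(a, sigma * t ** (-beta))))
        value = -np.sum(ll)
    return float(value) if np.isfinite(value) else np.inf


def simulate(theta, n, runs=None, seed=0):
    """Bias, variance and MSE of the ML estimates of (alpha, beta, sigma)."""
    theta = np.asarray(theta, dtype=float)
    runs = 100_000 // n if runs is None else runs  # floor(100000 / n) runs by default
    rng = np.random.default_rng(seed)
    estimates = np.empty((runs, theta.size))
    for r in range(runs):
        t = rvs_aiw(n, *theta, rng)
        # Start at the true values (a simplifying assumption of this sketch).
        res = minimize(neg_loglik, np.log(theta), args=(t,), method="Nelder-Mead")
        estimates[r] = np.exp(res.x)
    bias = estimates.mean(axis=0) - theta
    variance = estimates.var(axis=0)  # ddof = 0, so MSE = variance + bias**2
    mse = np.mean((estimates - theta) ** 2, axis=0)
    return bias, variance, mse


bias, variance, mse = simulate((2.0, 3.0, 1.0), n=100, runs=20, seed=2024)
for name, b, v, m in zip(("alpha", "beta", "sigma"), bias, variance, mse):
    print(f"{name}: bias={b:.4f}  var={v:.4f}  MSE={m:.4f}")
```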

Applications

In this section, the \(\alpha\)IW distribution is used to model two popular data sets from the related literature, called Kevlar 49/epoxy and Kevlar 373/epoxy.

There exist various extended/generalized versions of the IW distribution in the literature. To the best of the authors' knowledge, the Kumaraswamy inverse Weibull (KIW)24, alpha power inverse Weibull (APIW)25, Marshall-Olkin extended inverse Weibull (MOEIW)26, and exponentiated exponential inverse Weibull (EEIW)27 distributions, which can be strong alternatives to the \(\alpha\)IW distribution, have not been used to model the Kevlar 49/epoxy and Kevlar 373/epoxy data sets. Therefore, the modeling performance of the \(\alpha\)IW distribution is compared with that of the KIW, APIW, MOEIW, and EEIW distributions. The comparisons use two well-known information criteria, the corrected Akaike Information Criterion (AICc) and the Bayesian Information Criterion (BIC), and the following goodness-of-fit statistics: Kolmogorov-Smirnov (KS), Anderson-Darling (AD), and Cramér-von Mises (CvM). The ML, MPS, and LS methods are used to estimate the parameters of the \(\alpha\)IW distribution, whereas the ML method is used for the KIW, APIW, MOEIW, and EEIW distributions. The optimization tools “fminunc” and “fminsearch” available in MATLAB2015b are utilised to obtain the corresponding estimates of the parameters of the \(\alpha\)IW, IW, KIW, APIW, MOEIW, and EEIW distributions.
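For reference, the criteria can be computed from the maximized log-likelihood and from the fitted cdf values \(u_{(i)}=\hat F(t_{(i)})\) of the ordered sample. The sketch below (ours) uses the standard textbook formulas; k is the number of estimated parameters, e.g. k = 3 for the \(\alpha\)IW distribution. Some software uses variants of AD and CvM, so values computed this way may differ slightly from those reported in Tables 4 and 6. The usage lines feed in a plain U(0,1) sample as a hypothetical stand-in for fitted cdf values, which allows a cross-check against SciPy.

```python
import numpy as np
from scipy import stats


def aicc(loglik, k, n):
    """Corrected AIC: -2 ln L + 2k + 2k(k + 1) / (n - k - 1)."""
    return -2.0 * loglik + 2.0 * k + 2.0 * k * (k + 1) / (n - k - 1)


def bic(loglik, k, n):
    """Bayesian information criterion: -2 ln L + k ln n."""
    return -2.0 * loglik + k * np.log(n)


def gof_statistics(u):
    """KS, AD and CvM statistics from u_i = F_hat(t_i), the fitted cdf evaluated at the data."""
    u = np.sort(np.asarray(u, dtype=float))
    n = u.size
    i = np.arange(1, n + 1)
    ks = max(np.max(i / n - u), np.max(u - (i - 1) / n))
    ad = -n - np.sum((2 * i - 1) * (np.log(u) + np.log1p(-u[::-1]))) / n
    cvm = 1.0 / (12 * n) + np.sum((u - (2 * i - 1) / (2 * n)) ** 2)
    return {"KS": ks, "AD": ad, "CvM": cvm}


rng = np.random.default_rng(3)
u = rng.uniform(size=40)  # hypothetical stand-in for F_hat(t_i)
print(gof_statistics(u))
print(stats.kstest(u, "uniform").statistic, stats.cramervonmises(u, "uniform").statistic)
print(aicc(-10.0, 3, 20), bic(-10.0, 3, 20))
```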

Application-I

The Kevlar 49/epoxy data set, given in Table 3, consists of the stress-rupture lifetimes of Kevlar 49/epoxy strands subjected to constant sustained pressure at the 90% stress level until all had failed; see Andrews and Herzberg28.

Many authors have proposed different distributions to model the Kevlar 49/epoxy data. For example, Paranaiba et al.29 derived the Kumaraswamy Burr XII distribution, Olmos et al.30 derived the slash generalized half-normal distribution, and Sen et al.31 introduced the quasi Xgamma-Poisson (QXGP) distribution to model the Kevlar 49/epoxy data. The QXGP distribution modeled the Kevlar 49/epoxy data better than both the distributions used earlier and those recently proposed by others. Therefore, the QXGP distribution is also considered in modeling the Kevlar 49/epoxy data, in addition to the KIW, APIW, MOEIW, and EEIW distributions, for the sake of completeness. The estimates of the parameters of the \(\alpha\)IW, IW, QXGP, KIW, APIW, MOEIW, and EEIW distributions and the corresponding fitting results are given in Table 4.

The results given in Table 4 show that the \(\alpha\)IW distribution provides a better fit than the QXGP, IW, KIW, APIW, MOEIW, and EEIW distributions according to the AICc, BIC, KS, AD, and CvM criteria. The fitting performance of the \(\alpha\)IW distribution is also illustrated graphically in Fig. 2.

It is seen from Fig. 2 that the pdf and cdf of the \(\alpha\)IW distribution closely fit the histogram and the empirical cdf of the Kevlar 49/epoxy data, respectively. This conclusion is also supported by the values of the information criteria and goodness-of-fit statistics given in Table 4. Moreover, the \(\alpha\)IW distribution is preferred over the other competing distributions by a considerable margin in terms of BIC; according to Raftery32, a difference of more than 2 in the BIC values of two models is regarded as significant. Also, note that the ML, MPS, and LS estimation methods give more or less the same fitting performance; see the corresponding goodness-of-fit statistics in Table 4.

Application-II

The Kevlar 373/epoxy data set consists of the fatigue fracture lifetimes of Kevlar 373/epoxy subjected to constant pressure at the 90% stress level until all had failed; therefore, it includes the exact failure times. This data set was provided in Glaser33; see also Table 5.

In the literature, various distributions have been used to model the Kevlar 373/epoxy data. For example, Merovci et al.34 proposed a generalized transmuted exponential distribution, Alizadeh et al.35 proposed another generalized transmuted exponential distribution, Dey et al.36 introduced the alpha power transformed Weibull distribution, and Jamal and Chesneau37 proposed a transformation of the Weibull distribution using sine and cosine functions (TSCW) to model the Kevlar 373/epoxy data. Note that the TSCW distribution introduced by Jamal and Chesneau37 is preferable to the others, since it has smaller values of the goodness-of-fit statistics. Therefore, in addition to the KIW, APIW, MOEIW, and EEIW distributions, the TSCW distribution is also taken into account to make the comparisons complete. The ML estimates of the parameters of the \(\alpha\)IW, IW, TSCW, KIW, APIW, MOEIW, and EEIW distributions and the corresponding fitting results are given in Table 6, together with the MPS and LS estimates of the parameters of the \(\alpha\)IW distribution and the corresponding goodness-of-fit statistics.

Based on the KS, AD, and CvM values, Table 6 shows that the \(\alpha\)IW distribution is preferable to the IW, KIW, APIW, MOEIW, and EEIW distributions. The \(\alpha\)IW and TSCW distributions show more or less the same modeling performance based on the information criteria; however, the \(\alpha\)IW distribution is one step ahead of the TSCW distribution when the goodness-of-fit statistics are taken into account. The fitting performance of the \(\alpha\)IW distribution is also illustrated in Fig. 3.

Note that the results given in Table 6 are also supported by Fig. 3, i.e., the pdf and cdf of the \(\alpha\)IW distribution closely fit the histogram and the empirical cdf of the Kevlar 373/epoxy data, respectively.

Conclusion

In this study, the \(\alpha\)IW distribution is derived as a scale-mixture between the IW and U(0, 1) distributions, and some of its statistical properties are provided. The \(\alpha\)-monotone inverse Rayleigh and \(\alpha\)-monotone inverse exponential distributions are sub-models of the \(\alpha\)IW distribution, and the IW, inverse Rayleigh, and inverse exponential distributions are its limiting distributions for different parameter settings.

The \(\alpha\)IW distribution is used to model two popular data sets from the reliability area, i.e., the Kevlar 49/epoxy and Kevlar 373/epoxy data sets. The literature review shows that the QXGP distribution proposed by Sen et al.31 and the TSCW distribution introduced by Jamal and Chesneau37 model the Kevlar 49/epoxy and Kevlar 373/epoxy data, respectively, better than the distributions used earlier. Therefore, the modeling capability of the \(\alpha\)IW distribution is compared with that of the QXGP and TSCW distributions for the corresponding data sets. The IW, KIW, APIW, MOEIW, and EEIW distributions are also included in the comparisons for completeness. The AICc, BIC, KS, AD, and CvM criteria are used in the comparisons. The results show that the \(\alpha\)IW distribution provides a better fit than the QXGP, TSCW, KIW, APIW, MOEIW, and EEIW distributions, as well as the other distributions previously used to model these data sets.

The results in Tables 4 and 6 show that the \(\alpha\)-monotone concept substantially improves the modeling performance of the baseline IW distribution. Thus, obtaining the \(\alpha\)IW distribution by using the \(\alpha\)-monotone concept is cost effective: the single shape parameter added through the \(\alpha\)-monotone concept significantly increases the modeling capability of the IW distribution. This study therefore shows that the \(\alpha\)IW distribution can be an alternative to well-known and recently introduced distributions for modeling purposes.

Censored samples may occur in lifetime analysis, and there are many other estimation methods, such as the method of moments, probability weighted moments, and L-moments. The expectation-maximization algorithm can also be used to maximize the likelihood function, since the \(\alpha\)IW distribution has a scale-mixture representation. In this study, however, the parameters of the \(\alpha\)IW distribution are estimated by the ML, MPS, and LS methods for the complete sample case, and only the ML method is considered for the progressively type-II censored sample case; see the appendix in the Supplementary Material. Estimating the parameters of the \(\alpha\)IW distribution with other methods for complete and censored samples is therefore left for future work.
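The MPS and LS criteria depend on the data only through the fitted cdf at the ordered observations, so they can be written once for any candidate cdf. The sketch below uses the commonly cited forms (the mean log-spacing for MPS and the squared distance to the plotting positions \(i/(n+1)\) for LS); whether the paper uses exactly these variants is an assumption, and the function names are hypothetical. To keep the example self-contained, a toy U(0, \(\theta\)) model and a coarse grid search stand in for the \(\alpha\)IW cdf and a proper numerical optimizer.

```python
import math
from typing import Callable, Sequence


def mps_objective(sample: Sequence[float], cdf: Callable[[float], float]) -> float:
    """Mean log-spacing, to be maximized over the parameters inside cdf.

    Uses F(x_(0)) = 0 and F(x_(n+1)) = 1, so there are n + 1 spacings.
    """
    u = [0.0] + [cdf(v) for v in sorted(sample)] + [1.0]
    spacings = [b - a for a, b in zip(u[:-1], u[1:])]
    if min(spacings) <= 0.0:
        return -math.inf  # a zero spacing (e.g., tied data) makes the criterion degenerate
    return sum(math.log(d) for d in spacings) / len(spacings)


def ls_objective(sample: Sequence[float], cdf: Callable[[float], float]) -> float:
    """Least-squares criterion, to be minimized: sum of (F(x_(i)) - i/(n+1))**2."""
    x = sorted(sample)
    n = len(x)
    return sum((cdf(v) - (i + 1) / (n + 1)) ** 2 for i, v in enumerate(x))


# Toy check with a U(0, 1) cdf: equally spaced data give five equal spacings of 0.2.
sample = [0.2, 0.4, 0.6, 0.8]
print(mps_objective(sample, lambda v: v), ls_objective(sample, lambda v: v))

# Illustrative MPS estimation of theta in a toy U(0, theta) model by grid search.
grid = [0.5 + 0.01 * k for k in range(101)]
theta_mps = max(grid, key=lambda t: mps_objective(sample, lambda v: min(v / t, 1.0)))
print(round(theta_mps, 2))
```

For the toy uniform model the MPS estimate is \((n+1)/n\) times the sample maximum, here \(1.0\), whereas the ML estimate would be the maximum itself; this small-sample difference is one reason the estimators are compared in the simulation study.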

Data availability

The data presented in this study are available in Section "Estimation"; see Tables 3 and 5.

References

Keller, A. Z. & Kanath, A. R. R. Alternative reliability models for mechanical systems. In Proceedings of the 3rd International Conference on Reliability and Maintainability, 411–415 (Toulouse, France, 1982).

Nelson, W. Applied Life Data Analysis (Wiley, 1982).

Helu, A. On the maximum likelihood and least squares estimation for the inverse Weibull parameters with progressively first-failure censoring. Open J. Stat. 5, 75–89 (2015).

Chakrabarty, J. B. & Chowdhury, S. Compounded inverse Weibull distributions: Properties, inference and applications. Commun. Stat. Simul. Comput. 48, 2012–2033 (2019).

Fayomi, A. The odd Fréchet inverse Weibull distribution with application. J. Nonlinear Sci. Appl. 12, 165–172 (2019).

Hanagal, D. D. & Bhalerao, N. N. Modeling on generalized extended inverse Weibull software reliability growth model. J. Data Sci. 17, 575–591 (2019).

Jahanshahi, S., Yousof, H. & Sharma, V. K. The Burr X Fréchet model for extreme values: Mathematical properties, classical inference and Bayesian analysis. Pak. J. Stat. Oper. Res. 15, 797–818 (2019).

Saboori, H., Barmalzan, G. & Ayat, S. Generalized modified inverse Weibull distribution: Its properties and applications. Sankhya B 8, 247–269 (2020).

Afify, A. Z., Shawky, A. I. & Nassar, M. A new inverse Weibull distribution: Properties, classical and Bayesian estimation with applications. Kuwait J. Sci. 48, 1–10 (2021).

Hussein, E. A., Aljohani, H. M. & Afify, A. Z. The extended Weibull-Fréchet distribution: Properties, inference, and applications in medicine and engineering. AIMS Math. 7, 225–246 (2022).

Afify, A. Z., Yousof, H. M., Cordeiro, G. M., Ortega, E. M. & Nofal, Z. M. The Weibull Fréchet distribution and its applications. J. Appl. Stat. 43, 2608–2626 (2016).

Lee, C., Famoye, F. & Alzaatreh, A. Y. Methods for generating families of univariate continuous distributions in the recent decade. Wiley Interdiscip. Rev. Comput. Stat. 5, 219–238 (2013).

Andrews, D. F. et al. Robust Estimates of Location: Survey and Advances (Princeton University Press, 1972).

Olivares-Pacheco, J. F., Cornide-Reyes, H. C. & Monasterio, M. An extension of the two-parameter Weibull distribution. Revista Colombiana de Estadística 33, 219–231 (2010).

Iriarte, Y. A., Vilca, F., Varela, H. & Gomez, H. W. Slashed generalized Rayleigh distribution. Commun. Stat. Theory Methods 46, 4686–4699 (2017).

Korkmaz, M. C. A generalized skew slash distribution via gamma-normal distribution. Commun. Stat. Simul. Comput. 46, 1647–1660 (2017).

Gomez, Y. M., Bolfarine, H. & Gomez, H. W. Gumbel distribution with heavy tails and applications to environmental data. Math. Comput. Simul. 157, 115–129 (2019).

Jones, M. C. On univariate slash distributions, continuous and discrete. Ann. Inst. Stat. Math. 72, 645–657 (2020).

Arslan, T. Scale-mixture extension of inverse Weibull distribution. In Olomoucian Days of Applied Mathematics (ODAM), 12 (Olomouc, Czech Republic, 2019).

Arslan, T. An \(\alpha\)-monotone generalized log-Moyal distribution with applications to environmental data. Mathematics 9, 1400 (2021).

Arslan, T. An extension of the inverse Gaussian distribution. In Modeling and Advanced Techniques in Modern Economics (eds Aladag, C. H. & Potas, N.) 211–219 (World Scientific, 2022).

Bagci, K., Erdogan, N., Arslan, T. & Celik, H. E. Alpha power inverted Kumaraswamy distribution: Definition, different estimation methods and application. Pak. J. Stat. Oper. Res. 18, 13–25 (2022).

Acitas, S. & Arslan, T. A comparison of different estimation methods for the three parameters of the Weibull Lindley distribution. Eskisehir Techn. Univ. J. Sci. Technol. B-Theor. Sci. 8, 19–33 (2020).

Shahbaz, M. Q., Shahbaz, S. & Butt, N. S. The Kumaraswamy-inverse Weibull distribution. Pak. J. Stat. Oper. Res. 8, 479–489 (2012).

Basheer, A. M. Alpha power inverse Weibull distribution with reliability application. J. Taibah Univ. Sci. 13, 423–432 (2019).

Okasha, H. M., El-Baz, A. H., Tarabia, A. M. K. & Basheer, A. M. Extended inverse Weibull distribution with reliability application. J. Egyptian Math. Soc. 25, 343–349 (2017).

Badr, M. M. & Sobahi, G. The exponentiated exponential inverse Weibull model: Theory and application to COVID-19 data in Saudi Arabia. J. Math. 2022, 8521026 (2022).

Andrews, D. F. & Herzberg, A. M. Data: A Collection of Problems from many Fields for the Student and Research Worker (Springer Series in Statistics, 1985).

Paranaiba, P., Ortega, E., Cordeiro, G. & Pascoa, M. The Kumaraswamy Burr XII distribution: Theory and practice. J. Stat. Comput. Simul. 83, 2117–2143 (2013).

Olmos, N. M., Varela, H., Bolfarine, H. & Gomez, H. W. An extension of the generalized half-normal distribution. Stat. Pap. 55, 967–981 (2014).

Sen, S., Korkmaz, M. & Yousof, H. The quasi xgamma-Poisson distribution: Properties and application. Istatistik: J. Turkish Stat. Assoc. 11, 65–76 (2018).

Raftery, A. E. Bayesian model selection in social research. Sociol. Methodol. 25, 111–163 (1995).

Glaser, R. Statistical analysis of Kevlar 49/epoxy composite stress-rupture data. Technical Report UCID-19849, Lawrence Livermore National Lab., CA, USA (1983).

Merovci, F., Alizadeh, M. & Hamedani, G. G. Another generalized transmuted family of distributions: Properties and applications. Austrian J. Stat. 45, 71–93 (2016).

Alizadeh, M., Merovci, F. & Hamedani, G. G. Generalized transmuted family of distributions: Properties and applications. Hacettepe J. Math. Stat. 46, 645–667 (2017).

Dey, S., Sharma, V. K. & Mesfioui, M. A new extension of Weibull distribution with application to lifetime data. Annals Data Sci. 4, 31–61 (2017).

Jamal, F. & Chesneau, C. A new family of polyno-expo-trigonometric distributions with applications. Infin. Dimens. Anal. Quantum Probab. Relat. Top. 22, 1950027 (2019).

Acknowledgements

This work was supported by the Deanship of Scientific Research, Vice Presidency for Graduate Studies and Scientific Research, King Faisal University, Saudi Arabia [Grant No. 2504].

Author information

Contributions

Conceptualization, T.A. and H.S.B.; Methodology, T.A. and H.S.B.; Formal analysis, F.E.A. and T.A.; Investigation, F.E.A., T.A., H.S.B., and A.M.A.; Writing-original draft, T.A.; Writing-review & editing, F.E.A., T.A., H.S.B., and A.M.A.; Software, funding acquisition, F.E.A. and A.M.A. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Almuhayfith, F.E., Arslan, T., Bakouch, H.S. et al. A versatile model for lifetime of a component under stress. Sci Rep 13, 20202 (2023). https://doi.org/10.1038/s41598-023-47313-3

DOI: https://doi.org/10.1038/s41598-023-47313-3