Abstract

Alzheimer’s disease (AD) is a neurodegenerative disease that damages the brain, and it is the most common cause of dementia. AD is characterized by the formation of amyloid-beta (Aβ) deposits, which exhibit diverse morphologies ranging from diffuse to dense-core plaques. Most histological images cannot be described precisely by traditional geometry or methods. Therefore, this study employs multifractal geometry to assess and classify amyloid plaque morphologies. The most descriptive features of the Aβ deposits are extracted, and the plaques are then classified with a Naive Bayes classifier. The Random Forest algorithm is used to eliminate the less important features. The proposed methodology achieved an accuracy of 99%, a sensitivity of 100%, and a specificity of 98.5%. This study employed a dataset that had not been widely used before.


Introduction

Alzheimer's disease (AD) is one of the most devastating forms of dementia and the most common one; it causes a progressive loss of memory and cognitive function, leading to poor quality of life. It accounts for an estimated 60–80% of dementia cases and is ranked globally as the fifth leading cause of death.

Pathologically, the primary characteristic of neuropathological lesions in AD is the extracellular deposition of amyloid plaques. Amyloid plaque aggregates are composed of amyloid-beta (Aβ), a fragment of the amyloid precursor protein (APP), which is a single-pass transmembrane protein1. As shown in Fig. 1, APP is processed by two alternative pathways: nonamyloidogenic and amyloidogenic2. In the nonamyloidogenic pathway, APP is cleaved by α-secretase and γ-secretase, generating extracellular soluble APP-α (sAPP-α), the APP intracellular domain (AICD) fragment, and a short fragment, p3 (an N-truncated Aβ fragment)3. In the amyloidogenic pathway, in which Aβ fragments are produced, APP undergoes sequential cleavage by β- and γ-secretase. APP is first cleaved by β-secretase, producing soluble APP-β (sAPP-β), and the membrane-retained fragment is then cleaved by γ-secretase, generating another AICD fragment and Aβ fragments of 40 (Aβ40) or 42 (Aβ42) amino acids. The AICD fragment translocates to the nucleus, where it affects the transcriptional regulation of several proteins and drives neuroprotective pathways, whereas the Aβ fragments initially interact with apolipoprotein E, resulting in the aggregation of beta oligomers that generate beta-amyloid plaques. Eventually, Aβ fragments are involved in several downstream pathways related to AD4.

Recently, researchers have introduced novel therapeutic approaches for AD that aim to reduce amyloid oligomer levels, including (1) small-molecule inhibitors to prevent oligomerization, (2) immunotherapy to neutralize oligomeric species, (3) the use of Aβ-degrading enzymes to control Aβ oligomer levels in the brain, (4) stimulation of the immune system to produce Aβ antibodies that attack aggregates, and (5) Aβ blockers to block amyloid channels. All these approaches are currently in preclinical development5. Moreover, biological studies can reveal the initiation of Aβ pathways before the onset of AD symptoms, which supports research on early-stage treatment and on slowing disease progression. Early diagnosis of AD is therefore needed to provide adequate treatment and avoid later stages of deterioration5.

The main challenge is not only to clear Aβ plaques but also to prevent their formation, which requires accurate measures of plaque morphology to understand disease progression and pathophysiology. There are numerous forms of plaque, but the most prevalent are diffuse plaques, cerebral amyloid angiopathy (CAA), and dense-core plaques (see Fig. 2). Diffuse plaques are loosely organized amorphous clouds. Dense-core plaques are related to synaptic loss; they are surrounded by dystrophic neurites, activated microglial cells, and reactive astrocytes. Dystrophic neurites are used in the pathological diagnosis of AD because they are associated with cognitive impairment. In CAA, Aβ is deposited in the tunica media of leptomeningeal arteries and in cortical capillaries, small arterioles, and medium-sized arteries, particularly in posterior areas of the brain. Some degree of CAA, usually mild, is present in about 80% of AD patients. When severe, CAA can weaken the vessel wall and cause life-threatening lobar hemorrhages6.

Related works

The application of fractal geometry to the study of biological systems has grown in recent years7,8,9,10,11,12,13,14, including studies of AD15,16,17,18,19,20. A fractal is a geometric object with infinitely nested self-similar structure at different scales, which provides a general framework for studying irregular sets. The fractal dimension (FD) is a measure of fractal properties that describes the space-filling behavior of networks, including biological systems. FD has been applied in histopathological studies to determine the complexity of certain tissue components21,22,23,24. Biscetti et al.25 measured FD and other parameters of the superficial capillary plexus (SCP), intermediate capillary plexus (ICP), deep capillary plexus (DCP), and choriocapillaris in subjects with mild cognitive impairment (MCI) due to AD and in cognitively healthy controls (CN). They found that FD reflects early vessel recruitment as a compensatory mechanism at disease onset. In26, FD was calculated from optical coherence tomography angiography (OCT-A) scans to assess retinal vascular changes in subjects with AD, MCI, and CN; the authors found that FD decreases with age and is lower in males.

Fractal analysis is limited when a single exponent, the FD, must describe more complex structures such as Aβ plaques; multifractal analysis overcomes this limitation. Multifractal analysis generalizes fractal geometry to cases where a single FD is not sufficient by providing a spectrum of fractal dimensions27,28,29. Multifractal measures have been observed in diverse physical settings, such as neural networks, fluid turbulence, rainfall distribution, the distribution of mass across the universe, viscous fingering, and many other phenomena.

Machine learning (ML) is a branch of artificial intelligence that learns from a dataset ("training data") to make accurate predictions or decisions without being explicitly programmed. Many studies in recent years have applied ML techniques to diagnose and classify the various stages of AD from different types of tests30,31,32,33,34,35,36,37,38,39,40 and, more recently, from immunohistochemistry (IHC) images41,42. In43, a convolutional neural network (CNN) model was applied to IHC images to classify Aβ morphologies as dense-core plaques, diffuse plaques, or CAA. In44, deep learning (DL) was used to differentiate tauopathies, including AD, progressive supranuclear palsy (PSP), corticobasal degeneration (CBD), and Pick's disease (PID), based on IHC images. Using MRI scans, Majumder et al.45 applied an artificial neural network (ANN) to distinguish between AD and cognitively normal (CN) subjects. In46, the transition from mild cognitive impairment (MCI) to AD was predicted with an ANN applied to MRI images. Additionally, Richhariya et al.47 classified several pairs of stages (CN vs. AD, MCI vs. AD, and CN vs. MCI) using recursive feature elimination and a support vector machine (SVM).

Therefore, the principal objective of the current research is to study the morphologies of amyloid plaques in AD using multifractal analysis. Such analysis may offer a valuable pathway for addressing the growing number of neurodegenerative diseases, including Alzheimer's, and for structure-based drug discovery, which may contribute to new treatment strategies for various degenerative diseases. This research examines the variety of tissue structures in whole-slide images (WSIs) of the temporal gyri of AD patients' brains. To automate the classification process, a Naive Bayes classifier is used.

The research contributions

The contributions of the current study can be summarized as follows:

1. A new strategy for assessing amyloid plaque morphologies using multifractal geometry analysis.

2. Accurate measures of plaque morphologies for understanding disease pathology.

3. The use of a Naive Bayes classifier, which saves time and effort compared with algorithms that require lengthy iterative training procedures.

4. High performance compared with other recent classification techniques.

Materials

The data used by Tang et al. are available at48. The sample comprises 63 subjects, each with a single whole-slide image (WSI) of the temporal gyri. The subjects were chosen to represent a broad spectrum of pathological burden for each of the three AD pathologies of interest: cerebral amyloid angiopathy (CAA), dense-core plaques, and diffuse plaques. All WSIs were made from glass slides with 5 μm sections of formalin-fixed, paraffin-embedded superior and middle temporal gyrus. Immunohistochemistry staining with an amyloid-beta (Aβ) antibody was performed on the tissue. Every slide was digitized with an Aperio AT2 scanner at up to 40× magnification. The open-source library PyVips was used to apply color normalization and then tile each WSI into small images in a structured format (256 × 256 pixels). The dataset used here contains 1200 images: 400 diffuse, 400 CAA, and 400 dense-core images. The analysis was performed with a custom program written in MATLAB v9.4 (R2018a; MathWorks, MA, USA) on a system with a Core i7 CPU, 8 GB RAM, and a 1 TB hard disk.
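The tiling step can be illustrated in a few lines. The sketch below is a minimal NumPy stand-in, not the PyVips pipeline used for the dataset: it assumes the slide region is already loaded as an array, skips color normalization, and discards partial tiles at the right and bottom borders (how the original pipeline handled borders is not stated). The function name `tile_image` is hypothetical.

```python
import numpy as np

def tile_image(img: np.ndarray, tile: int = 256) -> list[np.ndarray]:
    """Split an H x W (x C) array into non-overlapping tile x tile patches.

    Assumption: border strips narrower than a full tile are dropped.
    """
    h, w = img.shape[:2]
    tiles = []
    for top in range(0, h - tile + 1, tile):        # full-tile rows only
        for left in range(0, w - tile + 1, tile):   # full-tile columns only
            tiles.append(img[top:top + tile, left:left + tile])
    return tiles

# Usage on a synthetic 600 x 800 RGB array standing in for a WSI region
demo = np.zeros((600, 800, 3), dtype=np.uint8)
patches = tile_image(demo)
print(len(patches), patches[0].shape)   # 2 rows x 3 columns of 256 x 256 tiles
```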

In this study, the first step of the proposed classification system is image processing. First, the images were processed to enhance contrast and resolution. They were then passed through two conversion stages: the first converts each image from RGB to grayscale, and the second converts it to binary form, as illustrated in Fig. 3. Binarization maps each pixel level to one of two values, 1 or 0, so the resulting image has only two colors (black and white). The pixel conversion is achieved in two steps: first, the image histogram, which describes the distribution of gray levels over the pixels, is obtained; second, the threshold value is computed according to the chosen thresholding technique. In this study, Otsu's method49 was used. This technique selects the threshold that maximizes the between-class (inter-cluster) variance, which is equivalent to minimizing the within-class (intra-cluster) variance; hence, it divides all pixels into two clusters (foreground and background) based on their grayscale intensity values.
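The grayscale conversion and Otsu thresholding described above can be sketched as follows. This is an illustration under stated assumptions, not the authors' MATLAB code: the grayscale weights are the ones MATLAB's `rgb2gray` uses, the contrast and resolution enhancement step is omitted, and pixels above the threshold are labelled 1 (the foreground polarity used in the study is not specified). The function names are hypothetical.

```python
import numpy as np

def rgb_to_gray(rgb: np.ndarray) -> np.ndarray:
    """Weighted-sum luminance with the same weights MATLAB's rgb2gray uses."""
    weights = np.array([0.2989, 0.5870, 0.1140])
    gray = rgb[..., :3].astype(np.float64) @ weights
    return np.clip(np.round(gray), 0, 255).astype(np.uint8)

def otsu_threshold(gray: np.ndarray) -> int:
    """Return the 8-bit level t that maximizes Otsu's between-class variance."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    p = hist / hist.sum()                  # normalized histogram
    omega = np.cumsum(p)                   # probability of class 0 (levels <= t)
    mu = np.cumsum(p * np.arange(256))     # first moment up to level t
    mu_total = mu[-1]
    denom = omega * (1.0 - omega)
    with np.errstate(divide="ignore", invalid="ignore"):
        between = np.where(denom > 0, (mu_total * omega - mu) ** 2 / denom, 0.0)
    return int(np.argmax(between))

def binarize(rgb: np.ndarray) -> np.ndarray:
    """RGB -> grayscale -> {0, 1} mask (1 = pixels brighter than the threshold)."""
    gray = rgb_to_gray(rgb)
    return (gray > otsu_threshold(gray)).astype(np.uint8)

# Usage: a synthetic two-level image (left half dark, right half bright)
img = np.zeros((64, 64, 3), dtype=np.uint8)
img[:, :32] = 50
img[:, 32:] = 200
mask = binarize(img)
print(mask[:, 32:].all(), mask[:, :32].any())   # True False
```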

Methods

Multifractal analysis

Over recent decades, a broad range of complex structures of interest to scientists, engineers, and physicians have been characterized quantitatively using the idea of a fractal dimension: a dimension that corresponds uniquely to the geometrical shape under study and is often not an integer50. The key to this trend is the recognition that many random structures obey a symmetry as remarkable as that obeyed by regular structures. This "scale symmetry" implies that objects look the same at many different scales of observation. To describe a fractal set, suppose that S is a subset of a d-dimensional space covered with boxes of side length L; then the local density Pi(L) of the object is the mass function of the i-th counting box:

\[ P_i(L) = \frac{M_i(L)}{M_T} \tag{1} \]

where MT denotes the object's total mass and Mi(L) is the number of pixels that make up the mass in the i-th box. In heterogeneous objects, Pi(L) varies as

\[ P_i(L) \propto L^{\alpha_i} \tag{2} \]

where αi is the Hölder exponent that characterizes the scaling of the i-th region or spatial location; αi thus describes the local behavior of Pi(L) around the center of a counting box of length L. The number of boxes N(α) whose mass function has exponents between α and α + dα scales as

\[ N(\alpha) \propto L^{-f(\alpha)} \tag{3} \]

where f(α) is the fractal dimension of the set of boxes that share the exponent α. The scaling of the q-th moments of the density function Pi(L) yields the multifractal measures:

\[ \sum_{i=1}^{N(L)} P_i(L)^{q} \propto L^{\tau(q)} \tag{4} \]

The exponent τ(q) in Eq. (4) is called the mass exponent of the q-th moment; it is related to α and f(α) through the Legendre transform:

\[ \tau(q) = q\,\alpha(q) - f\big(\alpha(q)\big), \qquad \alpha(q) = \frac{d\tau(q)}{dq} \tag{5} \]

It is also well known that

\[ \tau(q) = (q - 1)\, D_q \tag{6} \]

where Dq denotes the generalized dimensions, defined as

\[ D_q = \frac{1}{q - 1} \lim_{L \to 0} \frac{\ln \sum_{i=1}^{N(L)} P_i(L)^{q}}{\ln L} \tag{7} \]

The multifractal spectrum illustrated in Fig. 4 is a concave (cap-shaped) function whose maximum, reached at q = 0, equals D0, the box-counting dimension51. For q = 1, f(α) = α = D1, the information dimension. D1 describes the scaling of information generation, that is, the rate of information gain by successive measurements or the rate of information loss over time52.

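In practice, the limit L → 0 cannot be reached, so the multifractal spectrum is estimated from a finite set of box sizes expressed as powers of a base length; this also limits the range of moments q that can be used53. Therefore, the multifractal spectrum can be computed from the normalized q-weighted measure

\[ \mu_i(q, L) = \frac{P_i(L)^{q}}{\sum_{j=1}^{N(L)} P_j(L)^{q}} \tag{8} \]

Thus, f(q) and α(q) are computed as

\[ f(q) = \lim_{L \to 0} \frac{\sum_{i=1}^{N(L)} \mu_i(q, L) \ln \mu_i(q, L)}{\ln L} \tag{9} \]

and

\[ \alpha(q) = \lim_{L \to 0} \frac{\sum_{i=1}^{N(L)} \mu_i(q, L) \ln P_i(L)}{\ln L} \tag{10} \]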
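The spectrum estimate can be computed from a binary image by replacing each limit with the slope of a least-squares line against ln L over a finite set of box sizes. The sketch below uses the normalized measure μi(q, L) = Pi(L)^q / Σj Pj(L)^q, takes α(q) as the slope of Σ μi ln Pi and f(q) as the slope of Σ μi ln μi (the standard direct estimate of Chhabra and Jensen, which is our reading of this step). It assumes non-overlapping square boxes, one unit of mass per foreground pixel, and illustrative box sizes and q range that are not the study's settings; helper names are hypothetical.

```python
import numpy as np

def box_probabilities(mask: np.ndarray, size: int) -> np.ndarray:
    """P_i(L) = M_i(L) / M_T over the non-empty boxes of side `size`."""
    h, w = mask.shape
    h2, w2 = h - h % size, w - w % size                 # crop to whole boxes
    blocks = mask[:h2, :w2].reshape(h2 // size, size, w2 // size, size)
    counts = blocks.sum(axis=(1, 3)).astype(np.float64).ravel()
    counts = counts[counts > 0]                          # empty boxes carry no mass
    return counts / counts.sum()

def alpha_f_spectrum(mask, qs, sizes):
    """Estimate alpha(q) and f(q) as slopes of the weighted sums versus ln L."""
    log_L = np.log(np.asarray(sizes, dtype=np.float64))
    alpha, f = [], []
    for q in qs:
        a_terms, f_terms = [], []
        for s in sizes:
            P = box_probabilities(mask, s)
            weights = P ** q
            mu = weights / weights.sum()                 # normalized q-weighted measure
            a_terms.append(np.sum(mu * np.log(P)))       # sum mu_i ln P_i
            f_terms.append(np.sum(mu * np.log(mu)))      # sum mu_i ln mu_i
        alpha.append(np.polyfit(log_L, a_terms, 1)[0])   # slope stands in for the limit
        f.append(np.polyfit(log_L, f_terms, 1)[0])
    return np.array(alpha), np.array(f)

# Usage on a fully filled 256 x 256 mask (a homogeneous pattern)
sizes = [2, 4, 8, 16, 32, 64]
qs = np.arange(-5, 6)
full = np.ones((256, 256), dtype=np.uint8)
alpha, f = alpha_f_spectrum(full, qs, sizes)
print(np.round(alpha, 6), np.round(f, 6))   # all entries equal 2.0, the plane's dimension
```

For a real plaque mask the α values spread out, and the minimum, maximum, and peak of the resulting (α, f) curve supply the spectrum features used later.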

The second commonly used graph is the generalized dimension curve (Dq vs. q), which is analogous to applying warping filters to an image to exaggerate features that might otherwise go unnoticed. Here, the "warping filters" are a set of arbitrary exponents denoted by q. Hence, a generalized dimension Dq can be constructed for each q, as shown in Fig. 5.

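The generalized dimension Dq can be defined as

\[ D_q = \frac{1}{q - 1} \lim_{r \to 0} \frac{\ln I(q, r)}{\ln r} \tag{11} \]

where I(q, r) is the partition function, given by

\[ I(q, r) = \sum_{i=1}^{N(r)} P_i(r)^{q} \tag{12} \]

Substituting Eq. (12), Eq. (11) becomes

\[ D_q = \frac{1}{q - 1} \lim_{r \to 0} \frac{\ln \sum_{i=1}^{N(r)} P_i(r)^{q}}{\ln r} \tag{13} \]

where r denotes the scale of measurement (the box size, written L elsewhere), q is the order of the moment, N(r) is the number of fractal copies (non-empty boxes) at scale r, and Pi(r) is the growth probability function of the i-th fractal unit. From the definition of the generalized dimension, D0 (q = 0) is the box-counting dimension (DB), also known as the capacity dimension. Setting q = 0 in Eq. (13), with a grid of boxes covering the given space, the box-counting dimension D0 is given by

\[ D_0 = \lim_{L \to 0} \frac{\ln N(L)}{\ln (1/L)} \tag{14} \]

where N(L) is the number of non-empty boxes of length L that cover the space and contain at least some part of the attractor (not necessarily the total number of points). At q = 1, D1 is the information dimension (DI), which characterizes the rate of information loss over time or the rate of information gain by sequential measurements; DI is analogous to the Shannon entropy. Because Eq. (13) is indeterminate at q = 1, D1 is given by the limit

\[ D_1 = \lim_{q \to 1} D_q \tag{15} \]

Applying a Taylor expansion to Eq. (12) around q = 1, and using \( \sum_i P_i = 1 \), we have

\[ I(q, r) = \sum_{i} P_i\, e^{(q-1)\ln P_i} \approx 1 + (q - 1) \sum_{i} P_i \ln P_i, \qquad \ln I(q, r) \approx (q - 1) \sum_{i} P_i \ln P_i \tag{16} \]

So Eq. (13) becomes

\[ D_1 = \lim_{r \to 0} \frac{\sum_{i=1}^{N(r)} P_i(r) \ln P_i(r)}{\ln r} \tag{17} \]

At q = 2, D2 is the correlation dimension54, which characterizes the correlation between pairs of points on a reconstructed attractor. From Eq. (13), the correlation dimension (DC) is given by

\[ D_2 = \lim_{r \to 0} \frac{\ln \sum_{i=1}^{N(r)} P_i(r)^{2}}{\ln r} \tag{18} \]

If D0 = D1 = D2, the structure is termed monofractal (unifractal); if D0 > D1 > D2, it is termed multifractal.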
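The generalized dimensions can be estimated the same way. The sketch below, a minimal illustration under our own assumptions (one unit of mass per foreground pixel, non-overlapping boxes, and a least-squares slope over a finite set of box sizes standing in for the limit), computes Dq as the slope of ln Σ Pi^q / (q − 1) against ln r, switches to the entropy form Σ Pi ln Pi at q = 1, and then applies the D0, D1, D2 comparison above with a small tolerance. The helper names and the tolerance are hypothetical.

```python
import numpy as np

def box_probabilities(mask, size):
    """P_i(r) for the non-empty boxes of side `size` (unit mass per foreground pixel)."""
    h, w = mask.shape
    h2, w2 = h - h % size, w - w % size
    counts = mask[:h2, :w2].reshape(h2 // size, size, w2 // size, size).sum(axis=(1, 3))
    counts = counts.astype(np.float64).ravel()
    counts = counts[counts > 0]
    return counts / counts.sum()

def generalized_dimension(mask, q, sizes):
    """D_q as a slope versus ln r; the entropy (information) form is used at q = 1."""
    log_r = np.log(np.asarray(sizes, dtype=np.float64))
    y = []
    for s in sizes:
        P = box_probabilities(mask, s)
        if np.isclose(q, 1.0):
            y.append(np.sum(P * np.log(P)))                # sum P_i ln P_i
        else:
            y.append(np.log(np.sum(P ** q)) / (q - 1.0))   # ln sum P_i^q / (q - 1)
    return float(np.polyfit(log_r, y, 1)[0])

def classify_fractality(d0, d1, d2, tol=1e-3):
    """Monofractal if D0 = D1 = D2 (within tol); multifractal if D0 > D1 > D2."""
    if abs(d0 - d1) <= tol and abs(d1 - d2) <= tol:
        return "monofractal"
    if d0 > d1 > d2:
        return "multifractal"
    return "inconclusive"

# Usage: a straight line of pixels, for which every D_q equals 1
sizes = [2, 4, 8, 16, 32, 64]
line = np.zeros((256, 256), dtype=np.uint8)
line[128, :] = 1
dims = [generalized_dimension(line, q, sizes) for q in (0, 1, 2)]
print(np.round(dims, 6), classify_fractality(*dims))   # [1. 1. 1.] monofractal
```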

Lacunarity measurement

Lacunarity is a measure of how gaps are distributed throughout an image55; it provides an assessment of structural heterogeneity. The mean lacunarity Λ can be written as

\[ \Lambda = \left( \frac{\sigma}{\mu} \right)^{2} \tag{19} \]

where µ is the mean number of pixels per box and σ is the corresponding standard deviation.
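Lacunarity can be evaluated directly from a binary image. The sketch below assumes the common coefficient-of-variation form Λ = (σ/µ)² of the per-box pixel counts, a fixed non-overlapping box grid (rather than a gliding box), and an average over several box sizes to form the mean lacunarity; these choices and the box sizes are illustrative assumptions, not the study's documented settings. The function name is hypothetical.

```python
import numpy as np

def mean_lacunarity(mask: np.ndarray, sizes) -> float:
    """Average over box sizes of (sigma / mu)^2 of the pixel counts per box."""
    values = []
    for s in sizes:
        h2 = mask.shape[0] - mask.shape[0] % s
        w2 = mask.shape[1] - mask.shape[1] % s
        counts = mask[:h2, :w2].reshape(h2 // s, s, w2 // s, s).sum(axis=(1, 3))
        counts = counts.astype(np.float64)
        mu = counts.mean()
        if mu > 0:                                   # skip sizes with no foreground
            values.append((counts.std() / mu) ** 2)  # squared coefficient of variation
    return float(np.mean(values)) if values else float("nan")

sizes = [2, 4, 8, 16, 32, 64]
uniform = np.ones((256, 256), dtype=np.uint8)
half = np.zeros((256, 256), dtype=np.uint8)
half[:, :128] = 1                                    # foreground only in the left half
print(mean_lacunarity(uniform, sizes), mean_lacunarity(half, sizes))   # 0.0 1.0
```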

Naïve Bayes

Naïve Bayes is a supervised learning algorithm based on Bayes' theorem. It is a probabilistic classifier that assumes independence among predictors. It has several advantages: (1) it is fast, easy, and simple to implement; (2) it does not need large training datasets; and (3) it can be used for both discrete and continuous data. The main idea of the Naive Bayes classifier is that the presence of a particular feature is assumed to be unrelated to the presence of any other feature, given the class. Consequently, it cannot learn relationships between features56,57,58.

Bayes' theorem gives the probability of a hypothesis (class) from prior knowledge of the class and the observed evidence:

\[ P(C \mid x) = \frac{P(x \mid C)\, P(C)}{P(x)} \tag{20} \]

where \(P\left(C|x\right)\), the "posterior probability", is the probability of hypothesis/class "C" given the observed event/features "x"; \(P(C)\), the "prior probability", is the probability of the hypothesis before the evidence is observed; \(P\left(x|C\right)\), the "likelihood", is the probability of the evidence given that the hypothesis is true; and \(P(x)\), the "marginal probability", is the probability of the evidence (the prior probability of the predictor).

Let X denote the vector of extracted features:

\[ X = (x_1, x_2, \ldots, x_n) \tag{21} \]

Under the naive independence assumption, the probability of a hypothesis/class c given the n selected features X can be written as

\[ P(c \mid x_1, \ldots, x_n) = \frac{P(x_1 \mid c)\, P(x_2 \mid c) \cdots P(x_n \mid c)\, P(c)}{P(x_1, \ldots, x_n)} \tag{22} \]

Because the denominator is the same for every class, Eq. (22) can be written in the simpler form

\[ P(c \mid X) \propto P(c) \prod_{i=1}^{n} P(x_i \mid c) \tag{23} \]

Depending on the dataset, the classifier may have m classes:

\[ C = \{c_1, c_2, \ldots, c_m\} \tag{24} \]

The classifier then selects the class with the highest probability:

\[ \hat{c} = \underset{k \in \{1, \ldots, m\}}{\arg\max}\; P(c_k) \prod_{i=1}^{n} P(x_i \mid c_k) \tag{25} \]

In this study, there are three classes (m = 3) of Aβ plaques, \(\left({c}_{1},{c}_{2},{c}_{3}\right)\): diffuse, CAA, and dense-core. The optimized RF hyperparameters59 are listed in Table 1.
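The decision rule above (multiply the prior by the per-feature likelihoods and pick the class with the highest posterior) translates directly into a short classifier. The paper does not state which likelihood model P(xi | ck) was used, so the sketch below assumes Gaussian likelihoods (Gaussian Naive Bayes) and works with log-probabilities to avoid numerical underflow; the class name and the small variance floor are our own choices. The synthetic two-feature data stand in for the extracted multifractal features and are not from the study.

```python
import numpy as np

class GaussianNaiveBayes:
    """Naive Bayes with per-class, per-feature Gaussian likelihoods (assumed variant)."""

    def fit(self, X, y):
        X, y = np.asarray(X, dtype=np.float64), np.asarray(y)
        self.classes_ = np.unique(y)
        # log prior P(c_k) estimated from class frequencies
        self.log_prior_ = np.log([np.mean(y == c) for c in self.classes_])
        self.mean_ = np.array([X[y == c].mean(axis=0) for c in self.classes_])
        # a small floor keeps every variance strictly positive
        self.var_ = np.array([X[y == c].var(axis=0) for c in self.classes_]) + 1e-9
        return self

    def predict(self, X):
        X = np.asarray(X, dtype=np.float64)
        diff = X[:, None, :] - self.mean_[None, :, :]       # (samples, classes, features)
        # log P(x_i | c_k) under a Gaussian, one term per feature
        log_lik = -0.5 * (np.log(2 * np.pi * self.var_)[None] + diff ** 2 / self.var_[None])
        # naive independence: sum per-feature log-likelihoods, then add the log prior
        scores = self.log_prior_[None, :] + log_lik.sum(axis=2)
        return self.classes_[np.argmax(scores, axis=1)]     # highest posterior wins

# Usage on synthetic, well-separated clusters (not study data)
rng = np.random.default_rng(0)
centers = {"diffuse": (0.0, 0.0), "CAA": (10.0, 10.0), "dense-core": (20.0, 0.0)}
X = np.vstack([rng.normal(c, 0.5, size=(50, 2)) for c in centers.values()])
y = np.repeat(list(centers), 50)
clf = GaussianNaiveBayes().fit(X, y)
print((clf.predict(X) == y).mean())
print(clf.predict([[19.5, 0.3]]))   # ['dense-core']
```

Training here is a single pass that estimates one prior, mean, and variance per class, which is why Naive Bayes is cheap to fit compared with iteratively trained models.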

The methodology is based on extracting the features that change most with AD pathology; the system extracts 11 features (n = 11), which are illustrated in Fig. 6 and listed as follows:

1. The lacunarity (Λ),

2. The maximum value of α (αmax) in the singularity spectrum,

3. The singularity spectrum value at αmax, f(αmax),

4. The minimum value of α (αmin) in the singularity spectrum,

5. The singularity spectrum value at αmin, f(αmin),

6. The α value at the maximum of the singularity spectrum curve (α0),

7. The width of the singularity spectrum curve (width),

8. The symmetrical shift of the singularity spectrum curve,

9. The box-counting dimension (D0),

10. The information dimension (D1),

11. The correlation dimension (D2).
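Most of the spectrum-shape features in this list can be read directly off an estimated singularity spectrum given as paired arrays α(q) and f(q). The paper does not define the symmetrical shift numerically, so the sketch below uses a hypothetical definition (the signed offset of α0 from the midpoint of [αmin, αmax], negative meaning a shift to the left); lacunarity and D0, D1, D2 come from their own formulas and are omitted here. The function and key names are hypothetical.

```python
import numpy as np

def spectrum_features(alpha, f) -> dict:
    """Shape features of a singularity spectrum given paired alpha(q), f(q) samples."""
    alpha, f = np.asarray(alpha, dtype=np.float64), np.asarray(f, dtype=np.float64)
    i_min, i_max, i_peak = np.argmin(alpha), np.argmax(alpha), np.argmax(f)
    a_min, a_max, a_0 = alpha[i_min], alpha[i_max], alpha[i_peak]
    return {
        "alpha_min": a_min, "f_alpha_min": f[i_min],
        "alpha_max": a_max, "f_alpha_max": f[i_max],
        "alpha_0": a_0,                        # alpha where f(alpha) peaks (q = 0)
        "width": a_max - a_min,
        # HYPOTHETICAL definition (not given in the paper): signed offset of the
        # peak from the midpoint of [alpha_min, alpha_max]; negative = left shift
        "symmetric_shift": a_0 - 0.5 * (a_min + a_max),
    }

# Usage on a synthetic, right-shifted parabolic spectrum (not study data)
alpha = np.linspace(1.6, 2.4, 9)
f = 2.0 - (alpha - 2.1) ** 2
feats = spectrum_features(alpha, f)
print({k: round(float(v), 3) for k, v in feats.items()})
```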


Reducing the number of input variables (extracted features) can often improve the performance of a model and lower the computational cost of modelling. Therefore, when creating a predictive model, it is desirable to perform feature selection to reduce the number of extracted features. This can be done with a feature selection algorithm such as the Random Forest (RF) algorithm60,61.

Random forest algorithm

Random forest is a supervised machine learning algorithm built as an ensemble of decision trees and usually trained with the "bagging" (bootstrap aggregating) method; combining many trees improves the overall result. Before an RF classifier is trained, three parameters must be set: (1) the number of trees, (2) the number of nodes, and (3) the number of features sampled, as shown in Fig. 7.

Using the RF algorithm offers several advantages: (1) a reduced risk of overfitting, (2) the ability to perform both classification and regression, (3) interpretable results, (4) easy identification of important features, and (5) easy handling of large datasets. However, RF also has disadvantages: (1) it is time-consuming, (2) it requires more computational resources, and (3) prediction is more complex than with a single decision tree.

Many classification systems use hundreds or thousands of features to obtain accurate results. However, not all extracted features are important or strongly influence the classification. It is therefore desirable to build the classification model on only the most important features, a process called "feature selection". This makes the model simpler and reduces both computational time and model variance.

Feature selection can be performed with a recursive feature elimination (RFE) procedure62,63. In this study, after the classification model is created, the features are ranked by their importance to the model, and the least relevant feature is removed in each loop. The procedure is repeated until only the highest-ranked features remain.
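One way to implement this procedure is scikit-learn's `RFE` wrapped around a `RandomForestClassifier`: RFE refits the forest, ranks features by its impurity-based `feature_importances_`, and drops the weakest feature each round. Mapping the three RF parameters above to `n_estimators`, `max_leaf_nodes`, and `max_features` is our assumption, as are their values. The data are synthetic (11 features, only two informative), and five features are retained only to mirror the number kept in this study.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import RFE

# Synthetic stand-in for the 11 extracted features: 3 classes x 100 samples,
# where only columns 0 and 3 depend on the class label.
rng = np.random.default_rng(0)
y = np.repeat([0, 1, 2], 100)
X = rng.normal(size=(300, 11))
X[:, 0] += 3.0 * y
X[:, 3] -= 2.0 * y

forest = RandomForestClassifier(
    n_estimators=200,      # (1) number of trees
    max_leaf_nodes=None,   # (2) node budget per tree (None = grow fully)
    max_features="sqrt",   # (3) number of features sampled at each split
    random_state=0,
)
# Refit the forest and drop the least important feature until 5 remain.
selector = RFE(estimator=forest, n_features_to_select=5, step=1).fit(X, y)
print("kept feature indices:", np.flatnonzero(selector.support_))
print("elimination ranking:", selector.ranking_)   # 1 = kept; higher = dropped earlier
```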

The workflow of the proposed methodology is summarized in Fig. 8.

Results and discussions

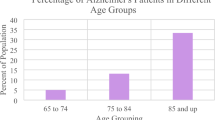

The dataset demographic characteristics

The proposed methodology is based on archived images from the University of California, Davis Alzheimer’s Disease Center Brain Bank64. These samples had the following characteristics:

1) The samples were pretreated with formic acid to get rid of endogenous protein.

2) An amyloid-β antibody was used to stain the tissue.

3) The samples were 5 μm formalin-fixed sections.

4) The sections were taken from the superior and middle temporal gyrus of the human brain and embedded in paraffin.

5) Aperio digital pathology slide scanners were used to digitize the slides at magnifications of up to 40×.

The demographic characteristics of the dataset are summarized in Table 2.

The image singularity spectra

The results of the multifractal image analysis are shown in Figs. 9–11: Fig. 9 shows the singularity spectrum for the diffuse cases, Fig. 10 for the CAA cases, and Fig. 11 for the dense-core cases. As the amyloid plaque burden increases, the heterogeneity of the brain tissue increases; therefore, the spectrum becomes wider, with different asymmetrical shapes, as shown in Fig. 12. As the amyloid plaques increase, the curves also shift to the right as image heterogeneity grows, with differing start and end values of the singularity spectrum (αmin and αmax, respectively). Table 3 summarizes the extracted feature values for 15 sample AD images.

Following the proposed methodology, eleven features were extracted to describe the AD-related changes in brain tissue. To reduce the number of features, the RF algorithm was employed to remove the less relevant ones, as described in Fig. 13. According to Fig. 13, the most important features are lacunarity, αmax, αmin, the symmetrical shift, and D0, each with an importance weight of at least 0.5.

Figure 14 shows the ranking of feature importance provided by RF59 for the diffuse, CAA, and dense-core cases at different thresholds. The blue bars (features) fall below the threshold value and are discarded. After evaluating the model at multiple thresholds, the optimum threshold was chosen as 0.5, because the discarded features, f(αmax), α0, f(αmin), the width, D1, and D2, contribute little importance.

To explain the importance of the selected features, Fig. 15 presents a statistical summary of the most important features.

As shown in Fig. 15a,b, the diffuse stage has the lowest values of αmax and αmin, while the dense-core stage has the largest values, owing to increased amyloid plaque accumulation. In Fig. 15c,d, the diffuse stage has the highest D0 and lacunarity values, owing to fewer Aβ plaques, which make the diffuse images more homogeneous than those of the other stages. As illustrated in Figs. 13 and 15e, the singularity spectrum of the diffuse stage is shifted to the left of the symmetry axis, whereas the spectra of the CAA and dense-core stages are shifted to the right.

Performance measures

To verify the effectiveness of the proposed NB classifier using the most important features, a K-Nearest Neighbor (KNN) classifier was used as a benchmark. Several performance measures were calculated, as shown in Tables 4 and 5 and Fig. 16.

The results in these tables show that the proposed Naïve Bayes classifier achieved the best performance, with an accuracy of 99%, a sensitivity of 100%, a specificity of 98.5%, a precision of 97.1%, and an F-score of 98.5%.
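The reported measures follow from one-vs-rest confusion counts. The sketch below shows the standard formulas; how the study aggregated them across the three classes (per class or averaged) is not stated, so the 3 × 3 confusion matrix in the usage is hypothetical and not the study's data. As a consistency check on the reported values, a precision of 0.971 and a sensitivity of 1.0 give F = 2 · 0.971 · 1.0 / 1.971 ≈ 0.985, which matches the reported F-score.

```python
import numpy as np

def one_vs_rest_counts(cm: np.ndarray, k: int) -> tuple[int, int, int, int]:
    """TP, FP, TN, FN for class k; rows of `cm` are true classes, columns predictions."""
    cm = np.asarray(cm)
    tp = cm[k, k]
    fn = cm[k, :].sum() - tp          # class-k samples predicted as something else
    fp = cm[:, k].sum() - tp          # other samples predicted as class k
    tn = cm.sum() - tp - fn - fp
    return int(tp), int(fp), int(tn), int(fn)

def performance(tp: int, fp: int, tn: int, fn: int) -> dict[str, float]:
    sensitivity = tp / (tp + fn)      # recall / true-positive rate
    specificity = tn / (tn + fp)      # true-negative rate
    precision = tp / (tp + fp)
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    f_score = 2 * precision * sensitivity / (precision + sensitivity)
    return {"accuracy": accuracy, "sensitivity": sensitivity,
            "specificity": specificity, "precision": precision, "f_score": f_score}

# Hypothetical confusion matrix (order: diffuse, CAA, dense-core)
cm = np.array([[40, 0, 0],
               [1, 38, 1],
               [0, 2, 38]])
for k, name in enumerate(["diffuse", "CAA", "dense-core"]):
    m = performance(*one_vs_rest_counts(cm, k))
    print(name, {key: round(v, 3) for key, v in m.items()})
```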

Comparative analysis

To confirm its efficacy, the proposed classification system is compared in Table 6 with other classification approaches across several performance parameters. Only one previous study43 used the same dataset; comparisons with studies that used other datasets may not be fair to all algorithms.

As shown in Table 6, the proposed methodology achieved high accuracy with fewer dataset images.

Conclusion

Alzheimer's disease (AD) is one of the most devastating forms of dementia and the most common one; it causes a progressive loss of memory and cognitive function, leading to poor quality of life. The deposition of amyloid plaques is a primary pathological hallmark of AD. Amyloid plaque aggregates are composed of amyloid-beta (Aβ), which is implicated in the progression of AD. The current study proposed assessing Aβ deposits using multifractal geometry. To automate the classification of Aβ plaque morphologies, a Naïve Bayes classifier was used, with Random Forest for feature selection. The proposed methodology achieved an accuracy of 99% and a sensitivity of 100%. The main limitation of the proposed methodology is the quality of the dataset images, which should be no less than 35% to obtain well-extracted features.

Future work

- Designing a new graphical user interface (GUI) application that extracts the most important features related to amyloid plaque morphologies, as a diagnostic aid.

- Using multifractal geometry as an analysis tool for detecting or classifying brain tumors.

Data availability

The datasets were collected from https://www.keiserlab.org/resources/.

References

Pirici, D. et al. Fractal analysis in neurodegenerative diseases. Fractal Geom. Brain 23, 233–249 (2016).

Morris, G. P., Clark, I. A. & Vissel, B. Inconsistencies and controversies surrounding the amyloid hypothesis of Alzheimer’s disease. Acta Neuropathol. Commun. 2, 1–21 (2014).

Kojro, E. & Falk, F. The non-amyloidogenic pathway: structure and function of α-secretases. Alzheimer’s Dis. Cell Mol. Aspects. Amyloid. 12, 105–127 (2005).

Chen, G.-F. et al. Amyloid beta: structure, biology and structure-based therapeutic development. Acta Pharmacol. Sin. 38(9), 1205–1235 (2017).

Hampel, H. et al. The amyloid-β pathway in Alzheimer’s disease. Mol. Psychiat. 26(10), 5481–5503 (2021).

Serrano-Pozo, A. et al. Cold Spring Harb. Perspect. Med. 1(1), a006189 (2011).

Abdelsalam, M. M. & Zahran, M. A. A novel approach of diabetic retinopathy early detection based on multifractal geometry analysis for OCTA macular images using support vector machine. IEEE Access 9, 22844–22858 (2021).

Yoshioka, H. et al. Fractal analysis method for the complexity of cell cluster staining on breast FNAB. Acta Cytol. 65(1), 4–12 (2021).

Damrawi, G. E., Zahran, M. A., Amin, E. & Abdelsalam, M. M. Numerical detection of diabetic retinopathy stages by multifractal analysis for OCTA macular images using multistage artificial neural network. J. Ambient Intell. Hum. Comput. 25, 1–13 (2021).

Nawn, D. et al. Multifractal alterations in oral sub-epithelial connective tissue during progression of pre-cancer and cancer. IEEE J. Biomed. Health Inf. 25(1), 152–162 (2020).

Bayat, S. et al. Fractal analysis reveals functional unit of ventilation in the lung. J. Physiol. 599(22), 5121–5132 (2021).

El Damrawi, G., Zahran, M. A., El Shaimaa, A. & Abdelsalam, M. M. Enforcing artificial neural network in the early detection of diabetic retinopathy OCTA images analysed by multifractal geometry. J. Taibah Univ. Sci. 14(1), 1067–1076. https://doi.org/10.1080/16583655.2020.1796244 (2020).

Essey, M. & Maina, J. N. Fractal analysis of concurrently prepared latex rubber casts of the bronchial and vascular systems of the human lung. Open Biol. 10(7), 190249 (2020).

da Silva, L. G. et al. Fractal dimension analysis as an easy computational approach to improve breast cancer histopathological diagnosis. Appl. Microsc. 51(1), 1–9 (2021).

Villamizar, J., et al. Fractal analysis of neuroimaging: comparison between control patients and patients with the presence of Alzheimer’s disease. J. Phys. Conf. Ser. vol 2159(1). (IOP Publishing, 2022).

Elgammal, Y. M., Zahran, M. A. & Abdelsalam, M. M. A new strategy for the early detection of alzheimer disease stages using multifractal geometry analysis based on K-Nearest Neighbor algorithm. Sci. Rep. 12(1), 22381 (2022).

Prada, D., et al. Fractal analysis in diagnostic printing in cases of neurodegenerative disorder: Alzheimer type. J. Phys. Conf. Ser., vol. 1329(1). (IOP Publishing, 2019).

Lemmens, S. et al. Systematic review on fractal dimension of the retinal vasculature in neurodegeneration and stroke: assessment of a potential biomarker. Front. Neurosci. 14, 16 (2020).

Armstrong, G. W. et al. Retinal imaging findings in carriers with PSEN1-associated early-onset familial alzheimer disease before onset of cognitive symptoms. JAMA Ophthalmol. 139(1), 49–56 (2021).

Bordescu, D. et al. Fractal analysis of neuroimagistic. Lacunarity degree, a precious indicator in the detection of Alzheimer’s disease. Univ. Politeh. Buchar. Sci. Bull. Ser. A. Appl. Math. Phys. 80, 309–320 (2018).

Bianciardi, G., et al. Fractal approaches to image analysis in oncopathology.

da Silva, L. G., da Silva Monteiro, W. R. S., De Souza, G. T., Rabelo, M. E. & de Assis, E. A. C. P. Analysis of fractal dimension allows identification of malignancies in breast tissue histopathological images. Eur. J. Cancer 138, S105 (2020).

Panigrahi, S., et al. Fractal Geometry for Early Detection and Histopathological Analysis of Oral Cancer. Mining Intelligence and Knowledge Exploration: 7th International Conf., MIKE 2019, Goa, India, December 19–22, 2019, Proc 7. Springer International Publishing, 2020.

Maeda, Y. et al. Fractal analysis of 11C-methionine PET in patients with newly diagnosed glioma. EJNMMI Phys. 8(1), 1–9 (2021).

Biscetti, L. et al. Novel noninvasive biomarkers of prodromal Alzheimer disease: The role of optical coherence tomography and optical coherence tomography–angiography. Eur. J. Neurol. 28(7), 2185–2191 (2021).

Wang, X. et al. Decreased retinal vascular density in Alzheimer’s disease (AD) and mild cognitive impairment (MCI): An optical coherence tomography angiography (OCTA) study. Front. Aging Neurosci. 12, 572484 (2021).

Danila, E., Valentin, H., Georgescu, P. L. & Moraru, L. Survey of forest cover changes by means of multifractal analysis. Carpathian J. Earth Environ. Sci. 14(1), 51–60. https://doi.org/10.26471/cjees/2019/014/057 (2019).

Danila, E. et al. Multifractal analysis of ceramic pottery SEM images in Cucuteni-Tripolye culture. Optik 164, 538–546. https://doi.org/10.1016/j.ijleo.2018.03.052 (2018).

Moldovanu, S. et al. Skin lesion classification based on surface fractal dimensions and statistical color cluster features using an ensemble of machine learning techniques. Cancers 13(21), 5256. https://doi.org/10.3390/cancers13215256 (2021).

Murugan, S. et al. DEMNET: A deep learning model for early diagnosis of Alzheimer diseases and dementia from MR images. IEEE Access 9, 90319–90329 (2021).

Yang, B.-H. et al. Classification of Alzheimer’s disease from 18F-FDG and 11C-PiB PET imaging biomarkers using support vector machine. J. Med. Biol. Eng. 40, 545–554 (2020).

Scholar, M. & Jitendra, S. C. A review on support vector machine based classification of alzheimer’s disease from brain MRI. Int. J. Sci. Res. Eng. Trends 6, 45 (2020).

Vichianin, Y. et al. Accuracy of support-vector machines for diagnosis of Alzheimer’s disease, using volume of brain obtained by structural MRI at Siriraj hospital. Front. Neurol. 12, 640696 (2021).

Shahparian, N., Yazdi, M. & Khosravi, M. R. Alzheimer disease diagnosis from fMRI images based on latent low rank features and support vector machine (SVM). Curr. Signal Trans. Therap. 16(2), 171–177 (2021).

Taie, S. A. & Ghonaim, W. A new model for early diagnosis of Alzheimer’s disease based on BAT-SVM classifier. Bull. Electr. Eng. Inf. 10(2), 759–766 (2021).

Kamath, D. et al. Survey on early detection of Alzheimer’s disease using different types of neural network architecture. Int. J. Artif. Intell. 8(1), 25–32 (2021).

Bhagwat, N. et al. An artificial neural network model for clinical score prediction in Alzheimer disease using structural neuroimaging measures. J. Psychiat. Neurosci. 44(4), 246–260 (2019).

Popuri, K. et al. Using machine learning to quantify structural MRI neurodegeneration patterns of Alzheimer’s disease into dementia score: Independent validation on 8,834 images from ADNI, AIBL, OASIS, and MIRIAD databases. Hum. Brain Mapp. 41(14), 4127–4147 (2020).

Tanveer, M. et al. Machine learning techniques for the diagnosis of Alzheimer’s disease: A review. ACM Trans. Multim. Comput. Commun. Appl. TOMM 16(1), 1–35 (2020).

Fisher, C. K., Smith, A. M. & Walsh, J. R. Machine learning for comprehensive forecasting of Alzheimer’s disease progression. Sci. Rep. 9(1), 1–14 (2019).

Shakir, M. N. & Dugger, B. N. Advances in deep neuropathological phenotyping of Alzheimer disease: Past, present, and future. J. Neuropathol. Exp. Neurol. 81(1), 2–15 (2022).

Muñoz-Castro, C. et al. Characterization of glial responses in Alzheimer’s disease with cyclic multiplex fluorescent immunohistochemistry and machine learning. Alzheimer’s Dem. 17, e050902 (2021).

Tang, Z. et al. Interpretable classification of Alzheimer’s disease pathologies with a convolutional neural network pipeline. Nat. Commun. 10(1), 2173 (2019).

Koga, S., Ikeda, A. & Dickson, D. W. Deep learning-based model for diagnosing Alzheimer’s disease and tauopathies. Neuropathol. Appl. Neurobiol. 48(1), e12759 (2022).

Kar, S. & Dutta Majumder, D. A novel approach of diffusion tensor visualization based neuro fuzzy classification system for early detection of Alzheimer’s disease. J. Alzheimer Dis. Rep. 3(1), 1–18 (2019).

Kuang, J. et al. Prediction of transition from mild cognitive impairment to Alzheimer’s disease based on a logistic regression–artificial neural network–decision tree model. Geriatr. Gerontol. Int. 21(1), 43–47 (2021).

Richhariya, B. et al. Diagnosis of Alzheimer’s disease using universum support vector machine based recursive feature elimination (USVM-RFE). Biomed. Signal Process. Control 59, 101903 (2020).

https://www.keiserlab.org/resources/ accessed in December 2022.

Otsu, N. A threshold selection method from gray level histograms. IEEE Trans. Syst. Man Cybern. 9, 62–66 (1979).

Mandelbrot, B. B. & Wheeler, J. A. The fractal geometry of nature. Am. J. Phys. 51(3), 287 (1983).

Bouda, M., Caplan, J. S. & Saiers, J. E. Box-counting dimension revisited: presenting an efficient method of minimizing quantization error and an assessment of the self-similarity of structural root systems. Front. Plant Sci. 7, 149. https://doi.org/10.3389/fpls.2016.00149 (2016).

Turiel, A., Pérez-Vicente, C. J. & Grazzini, J. Numerical methods for the estimation of multifractal singularity spectra on sampled data: A comparative study. J. Comput. Phys. 216, 362–390. https://doi.org/10.1016/j.jcp.2005.12.004 (2006).

Xiong, G., Zhang, S. & Liu, Q. The time-singularity multifractal spectrum distribution. Phys. A Stat. Mech. Appl. 391, 4727–4739. https://doi.org/10.1016/j.physa.2012.05.026 (2012).

Lacasa, L. & Gómez-Gardeñes, J. Analytical estimation of the correlation dimension of integer lattices. Chaos Interdiscip. J. Nonlinear Sci. 24, 043101. https://doi.org/10.1063/1.4896332 (2014).

Ţălu, Ş. et al. Fractal and lacunarity analysis of human retinal vessel arborisation in normal and amblyopic eyes. Hum. Vet. Med. 45(5), 51 (2013).

Wibawa, A. P. et al. Naïve Bayes classifier for journal quartile classification. Int. J. Rec. Contrib. Eng. Sci. II 7(2), 91–99 (2019).

Chen, H. et al. Improved naive Bayes classification algorithm for traffic risk management. EURASIP J. Adv. Signal Process. 1, 1–12 (2021).

Yang, F.-J. An implementation of naive Bayes classifier. In 2018 International Conf. on Computational Science and Computational Intelligence (CSCI) (IEEE, 2018).

Georgescu, P.-L. et al. Assessing and forecasting water quality in the Danube River by using neural network approaches. Sci. Total Environ. 879, 162998. https://doi.org/10.1016/j.scitotenv.2023.162998 (2023).

Breiman, L. Random forests. Mach. Learn. 45, 5–32 (2001).

Ali, J. et al. Random forests and decision trees. Int. J. Comput. Sci. Issues IJCSI 9(5), 272 (2012).

Deviaene, M. et al. Feature selection algorithm based on random forest applied to sleep apnea detection. In 2019 41st Annual International Conf. of the IEEE Engineering in Medicine and Biology Society (EMBC) (IEEE, 2019).

Pudjihartono, N. et al. A review of feature selection methods for machine learning-based disease risk prediction. Front. Bioinf. 2, 927312 (2022).

https://www.ucdmc.ucdavis.edu/alzheimers/ accessed in January 2023.

Funding

Open access funding provided by The Science, Technology & Innovation Funding Authority (STDF) in cooperation with The Egyptian Knowledge Bank (EKB).

Author information

Contributions

E.A., M.A. and M.M. conceived and designed the study. Y.M. and E.A. prepared the manuscript. M.A. and E.A. reviewed and edited the manuscript. M.M. developed the software. M.A., E.A. and M.M. developed the algorithm. E.A. and Y.M. collected the images used in the manuscript. E.A., Y.M., M.A. and M.M. analysed the data.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Amin, E., Elgammal, Y.M., Zahran, M.A. et al. Alzheimer’s disease: new insight in assessing of amyloid plaques morphologies using multifractal geometry based on Naive Bayes optimized by random forest algorithm. Sci Rep 13, 18568 (2023). https://doi.org/10.1038/s41598-023-45972-w

DOI: https://doi.org/10.1038/s41598-023-45972-w
