Abstract

Alzheimer's disease is a form of dementia marked by amyloid plaques, neurofibrillary tangles, and neuronal degeneration. The disease has no cure, and early detection is critical for improving patient outcomes. Magnetic resonance imaging (MRI) plays an important role in measuring neurodegeneration as the disease progresses. Computer-aided image processing tools have been used to help medical professionals diagnose Alzheimer's in its early stages. Because the characteristics of the non-demented and very mild dementia stages overlap, tracking disease progression is challenging. We developed an adaptive multi-thresholding algorithm, based on the morphology of the smoothed histogram, to define features that identify neurodegeneration and track its progression through four stages: non-demented, very mild, mild, and moderate dementia. Gray and white matter volume, statistical moments, multi-thresholds, shrinkage, the gray-to-white matter ratio, and three distance and angle values are derived mathematically. Decision tree, discriminant analysis, Naïve Bayes, SVM, KNN, ensemble, and neural network classifiers are designed to evaluate the proposed methodology using accuracy, recall, specificity, precision, F1 score, Matthews correlation coefficient, and Kappa values. Experimental results show that the proposed features successfully label the neurodegeneration stages.

Introduction

Alzheimer's disease (AD) is a specific form of dementia characterized by widespread deterioration of neural tissue. This degenerative disease primarily affects elderly individuals, and its prevalence is high among people over 60 years of age1. The risk of developing the disease increases exponentially with age: between the ages of 60 and 85, disease prevalence increases 15-fold, as seen in Fig. 1. Once the disease has begun, treatment focuses only on ameliorating symptoms, and no cure is currently available2. Acceptable patient outcomes therefore depend on early detection. Dementia is a broad term describing a decline in cognitive skills and memory over a long period, pronounced enough to affect day-to-day living. Of the many forms of dementia, AD is the most common; it is identified as the primary cause in 70% of cases3. As of 2020, 50 million cases of Alzheimer's had been identified. The burden on healthcare systems is significant: over $1 trillion is spent globally on mitigating the disease4, largely because Alzheimer's patients require substantial post-diagnosis care. AD has its highest reported prevalence in the United States, which may be linked to increased surveillance and more advanced detection methods in that country5.

This graph shows the increasing incidence of Alzheimer's with age1.

Several pathological hallmarks characterize AD, among them amyloid plaques, neurofibrillary tangles, and neuronal degeneration. As the disease progresses, neurons die and the white and grey matter of the brain deteriorate6. Neuronal degeneration is especially important clinically because it can be detected with magnetic resonance imaging (MRI).

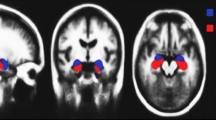

One of the key signs of the disease is the change in brain tissue volume over time. As AD progresses, neural tissue atrophy becomes increasingly pronounced7, as seen in Fig. 2. A clinician can observe these changes in a patient over time and make a positive or negative diagnosis of Alzheimer's based on the atrophy8. MRI is the preferred modality for this assessment because it is non-invasive.

Building on previous algorithmic models for AD detection, we hand-crafted features based on an adaptive multi-thresholding algorithm to track AD progression from no dementia to moderate dementia.

Methodology

The model consists of the following steps: contrast enhancement, the adaptive multi-thresholding algorithm, white and gray matter segmentation, feature modeling and extraction, and machine learning models for tracking AD progression. Figure 3 depicts these stages.

Contrast enhancement and mask preparation

The contrast between the white and gray matter in brain MRI images is often low, which makes segmenting the tissues difficult. The contrast between the two tissues must be increased to analyze dementia stages accurately. We used linear stretching so that the gray-to-white matter ratio would not change. Linear stretching maps the minimum intensity value to 0 and the maximum intensity value to the highest possible intensity value, which is 255 in our image dataset, and scales the values in between linearly.

Consider an image \(I\left(m,n\right)\) of size \(M\times N\) pixels, and let \({I}_{C}\left(m,n\right)\) be the contrast-enhanced image obtained by linear stretching:

\({I}_{C}\left(m,n\right)=\frac{I\left(m,n\right)-{I}_{min}}{{I}_{max}-{I}_{min}}\left({2}^{k}-1\right)\)

where \({I}_{min}\) and \({I}_{max}\) are the minimum and maximum intensity values in the image and \(k\) is the number of bits per pixel, which is 8 in the employed dataset.

Figure 4 shows the results for non-dementia and moderate dementia cases. This step also standardizes the intensity range across images.
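The linear stretching step can be sketched in Python with NumPy as shown below. This is a minimal illustration that assumes 8-bit grayscale input stored in a NumPy array. The function name `linear_stretch`, the handling of constant images, and the rounding to the nearest integer gray level are our own assumptions, not details given in the paper.

```python
import numpy as np


def linear_stretch(image: np.ndarray, k: int = 8) -> np.ndarray:
    """Linearly map [I_min, I_max] of `image` onto [0, 2**k - 1]."""
    out_dtype = np.uint8 if k <= 8 else np.uint16
    img = image.astype(np.float64)
    i_min, i_max = img.min(), img.max()
    if i_max == i_min:
        # A constant image has no range to stretch; return all zeros.
        return np.zeros(image.shape, dtype=out_dtype)
    stretched = (img - i_min) / (i_max - i_min) * (2**k - 1)
    # Round to the nearest integer gray level (an assumption; not stated in the paper).
    return np.rint(stretched).astype(out_dtype)


# Example: a tiny 2 x 2 "image" with intensities between 50 and 200.
example = np.array([[50, 100], [150, 200]], dtype=np.uint8)
print(linear_stretch(example))
```

The mapping is monotonic and linear, so the ordering of intensities is preserved. Only the range is rescaled.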

Adaptive multi-thresholding algorithm

Measuring the grey-to-white matter ratio (GWR) requires segmenting the grey and white matter. We developed an adaptive multi-thresholding algorithm that calculates two threshold values from the histogram of the contrast-enhanced image. Figure 5a depicts the histogram of one of the non-dementia cases. A lowpass filter with a normalized passband frequency of 0.005π rad/sample was applied to the histogram to smooth its envelope (Fig. 5b). The filter is a minimum-order design with 60 dB of stopband attenuation, and the delay it introduces is compensated. The two scalar threshold values are then determined from the smoothed envelope. Because the lowpass filter is applied only to the histogram, it does not affect the high-intensity information in the MRI images.
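The histogram smoothing can be sketched with NumPy alone. This sketch is not the minimum-order filter described above. In its place it uses a fixed-length, Blackman-windowed sinc FIR kernel, with the cutoff given in units of π rad/sample. Centered convolution keeps the smoothed envelope from shifting, which plays the role of delay compensation. The names `lowpass_kernel` and `smooth_histogram` and the default `cutoff` and `numtaps` values are illustrative assumptions sized for a 256-bin histogram. They are not the paper's settings.

```python
import numpy as np


def lowpass_kernel(cutoff: float, numtaps: int) -> np.ndarray:
    """Blackman-windowed sinc lowpass FIR.

    `cutoff` is in units of pi rad/sample (1.0 = Nyquist); `numtaps` must be odd.
    """
    if numtaps % 2 == 0:
        raise ValueError("numtaps must be odd so the kernel has a center tap")
    n = np.arange(numtaps) - (numtaps - 1) / 2
    h = cutoff * np.sinc(cutoff * n) * np.blackman(numtaps)
    return h / h.sum()  # normalize to unit DC gain


def smooth_histogram(hist, cutoff: float = 0.05, numtaps: int = 101) -> np.ndarray:
    """Smooth a 1-D histogram envelope without shifting its peaks or valleys."""
    hist = np.asarray(hist, dtype=np.float64)
    if numtaps > hist.size:
        raise ValueError("kernel must not be longer than the histogram")
    h = lowpass_kernel(cutoff, numtaps)
    # mode="same" with an odd, symmetric kernel keeps the output centered,
    # i.e. the filter's group delay of (numtaps - 1) / 2 bins is compensated.
    return np.convolve(hist, h, mode="same")


# Example: a noisy single-peak "histogram" over 256 gray levels.
levels = np.arange(256)
rng = np.random.default_rng(0)
noisy = 1000 * np.exp(-(((levels - 128) / 20.0) ** 2) / 2) + rng.normal(0, 30, 256)
smoothed = smooth_histogram(noisy)
```

A symmetric (linear-phase) kernel was chosen because the thresholds are read from valley positions, and a phase shift would move those valleys.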

Figure 6a and b depict the adaptive multi-thresholding algorithm and the features measured with it.

Two slope (angle) parameters, \({\alpha }_{1}\) and \({\alpha }_{2}\), and three distance parameters, \({d}_{1}\), \({d}_{2}\), and \({d}_{3}\), are defined.

\({P}_{{I}_{C}}\left(l\right)\) is the histogram of \({I}_{C}\left(m,n\right)\), calculated as

\({P}_{{I}_{C}}\left(l\right)=\sum_{m=0}^{M-1}\sum_{n=0}^{N-1}{\delta }_{d}\left[{I}_{C}\left(m,n\right)-l\right]\)

where \(l=0, 1, 2, \dots , L-1\), \(L\) is the number of intensity levels (\(L={2}^{k}=256\) here), and the delta function is \({\delta }_{d}[k]=\left\{\begin{array}{ll}1, & k=0\\ 0, & k\ne 0\end{array}\right.\). The sum of all the entries in a histogram equals the total number of pixels in the image9, \(\sum_{l=0}^{L-1}{P}_{{I}_{C}}(l)=MN\). The smoothed histogram \({P}_{s}\) is obtained with Eq. (2), where \({H}_{L}\) is the lowpass filter and \(*\) denotes convolution:

\({P}_{s}\left(l\right)={H}_{L}\left(l\right)*{P}_{{I}_{C}}\left(l\right)\)   (2)
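The histogram definition with the delta function translates almost literally into code. The sketch below assumes an 8-bit NumPy image. It counts the pixels equal to each level \(l\) and checks that the bins sum to \(MN\). `histogram_from_delta` is a hypothetical helper name. NumPy's `np.bincount` produces the same histogram more efficiently.

```python
import numpy as np


def histogram_from_delta(image: np.ndarray, levels: int = 256) -> np.ndarray:
    """P(l) = sum_m sum_n delta[I_C(m, n) - l] for l = 0 .. levels - 1."""
    # (image == l) is 1 exactly where I_C(m, n) - l == 0, i.e. the delta function.
    return np.array([np.count_nonzero(image == l) for l in range(levels)])


rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(128, 128)).astype(np.uint8)
hist = histogram_from_delta(img)
# The bins sum to the number of pixels, M * N.
assert hist.sum() == img.shape[0] * img.shape[1]
# np.bincount computes the same histogram in a single pass.
assert np.array_equal(hist, np.bincount(img.ravel(), minlength=256))
```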

The threshold points \(\left({x}_{1}, {y}_{1}\right)\) and \(\left({x}_{2}, {y}_{2}\right)\) are local minima of \({P}_{s}\): \(\left({x}_{1}, {y}_{1}\right)\) is the second local minimum and \(\left({x}_{2}, {y}_{2}\right)\) is the last local minimum along the x-axis. The thresholds are defined as

\(T{h}_{1}={x}_{1}\)   (3)

\(T{h}_{2}={x}_{2}\)   (4)
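Locating the threshold points can be sketched as a scan for interior local minima of the smoothed histogram. The sketch uses a strict definition of a local minimum, a bin lower than both of its neighbours, so flat plateaus and the two edge bins are ignored. The paper does not say how such cases are handled. Each threshold is assumed to be the x-coordinate (gray level) of its threshold point. The helper names are hypothetical.

```python
import numpy as np


def local_minima(p_s) -> np.ndarray:
    """Indices of interior bins strictly lower than both neighbours."""
    p = np.asarray(p_s, dtype=np.float64)
    return np.flatnonzero((p[1:-1] < p[:-2]) & (p[1:-1] < p[2:])) + 1


def threshold_points(p_s):
    """Return (x1, y1), the second local minimum, and (x2, y2), the last one."""
    minima = local_minima(p_s)
    if minima.size < 2:
        raise ValueError("need at least two local minima in the smoothed histogram")
    x1, x2 = int(minima[1]), int(minima[-1])
    return (x1, float(p_s[x1])), (x2, float(p_s[x2]))


# Example on a short toy envelope with minima at bins 1, 3, and 5.
toy = np.array([5, 3, 4, 2, 6, 1, 7])
(th1, _), (th2, _) = threshold_points(toy)
print(th1, th2)
```

On a raw, unsmoothed histogram this scan would find many spurious minima. This is why the thresholds are read from the smoothed envelope.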

\(\left({x}_{3}, {y}_{3}\right)\) is the absolute maximum of \({P}_{s}\). This point is used to calculate the slopes and distances between the threshold points. We defined the parameters in Eqs. (5) through (13) to investigate their use in analyzing the different dementia stages.
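Equations (5) through (13) are not reproduced in this text. The sketch below therefore uses hypothetical definitions, only to show the kind of geometry involved: Euclidean distances between the two threshold points and the absolute maximum \(\left({x}_{3}, {y}_{3}\right)\), and the angles of the lines joining them. These formulas are assumptions. They should not be read as the paper's \({d}_{1}\), \({d}_{2}\), \({d}_{3}\), \({\alpha }_{1}\), and \({\alpha }_{2}\).

```python
import math

import numpy as np


def absolute_maximum(p_s):
    """(x3, y3): the bin and value of the global peak of the smoothed histogram."""
    x3 = int(np.argmax(p_s))
    return x3, float(p_s[x3])


def geometric_features(p1, p2, p3) -> dict:
    """HYPOTHETICAL distance/angle definitions, not the paper's Eqs. (5)-(13).

    p1 = (x1, y1) and p2 = (x2, y2) are the threshold points; p3 = (x3, y3) is the peak.
    """
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    return {
        "d1": math.hypot(x3 - x1, y3 - y1),  # first threshold point -> peak
        "d2": math.hypot(x2 - x3, y2 - y3),  # peak -> last threshold point
        "d3": math.hypot(x2 - x1, y2 - y1),  # between the two threshold points
        "alpha1": math.degrees(math.atan2(y3 - y1, x3 - x1)),  # rising edge angle
        "alpha2": math.degrees(math.atan2(y2 - y3, x2 - x3)),  # falling edge angle
    }


print(absolute_maximum(np.array([1, 4, 9, 4, 1])))
print(geometric_features((0, 0), (6, 0), (3, 4)))
```

On a real histogram, x is a gray level and y is a pixel count, so these raw distances mix units. An actual implementation would probably need to normalize both axes first. The paper's choice on this point is not reproduced here.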

The grey and white matter are segmented using the threshold values. Here, \({I}_{gm}\left(m,n\right)\) and \({I}_{wm}\left(m,n\right)\) denote the grey and white matter, respectively. The volume, mean, and standard deviation of the grey and white matter are calculated and used as potential features. GWR is the grey-to-white matter ratio, calculated with Eqs. (10) and (11). Figure 7 shows the white and gray matter segmented using the \(T{h}_{1}\) and \(T{h}_{2}\) values.
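The segmentation and per-tissue statistics can be sketched as follows. Several assumptions are not stated in the text. Grey matter is taken as pixels with \(T{h}_{1}\le I<T{h}_{2}\) and white matter as \(I\ge T{h}_{2}\), on the premise that white matter appears brighter. "Volume" is the pixel count in the 2D slice. GWR is the ratio of these counts, which may not match the exact form of Eqs. (10) and (11). `tissue_features` is a hypothetical helper name.

```python
import numpy as np


def tissue_features(img: np.ndarray, th1: float, th2: float) -> dict:
    # ASSUMPTION: grey matter = [th1, th2), white matter = [th2, max]; darker
    # pixels below th1 (background, CSF) are excluded from both tissues.
    gm_mask = (img >= th1) & (img < th2)
    wm_mask = img >= th2
    gm = img[gm_mask].astype(np.float64)
    wm = img[wm_mask].astype(np.float64)
    gm_volume, wm_volume = gm.size, wm.size  # pixel counts in this 2-D slice
    return {
        "gm_volume": gm_volume,
        "wm_volume": wm_volume,
        "gm_mean": float(gm.mean()) if gm_volume else 0.0,
        "gm_std": float(gm.std()) if gm_volume else 0.0,
        "wm_mean": float(wm.mean()) if wm_volume else 0.0,
        "wm_std": float(wm.std()) if wm_volume else 0.0,
        # ASSUMPTION: GWR as a ratio of grey to white pixel counts.
        "gwr": gm_volume / wm_volume if wm_volume else float("inf"),
    }


slice_ = np.array([[10, 60, 80], [200, 220, 240]], dtype=np.uint8)
print(tissue_features(slice_, th1=50, th2=150))
```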

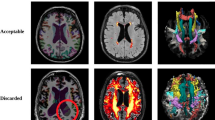

Database

Alzheimer's disease MRI images were obtained from a publicly available Kaggle dataset10. For 4-class classification studies, the dataset contains MRI images of four disease stages: non-demented (3200 images), very mild demented (2240 images), mild demented (897 images), and moderate demented (64 images). The set is highly imbalanced. The image resolution is 128 \(\times\) 128. For binary classification studies, the very mild, mild, and moderate classes are merged, which gives approximately 3200 images in each class. Some of these images are shown in Fig. 8. This dataset, referred to as the Kaggle dataset, is widely used in AD research to evaluate models.
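The degree of imbalance follows directly from the class counts listed above. The short calculation below computes the class proportions, the ratio between the largest and smallest classes, and the size of the merged class used for binary classification. It uses only the reported counts.

```python
# Class counts as reported for the 4-class Kaggle set.
counts = {"non-demented": 3200, "very mild": 2240, "mild": 897, "moderate": 64}

total = sum(counts.values())
proportions = {name: n / total for name, n in counts.items()}
imbalance_ratio = max(counts.values()) / min(counts.values())
# Binary setting: every demented stage is merged into one class.
binary = {"non-demented": counts["non-demented"], "demented": total - counts["non-demented"]}

for name, share in proportions.items():
    print(f"{name:>13}: {share:6.2%}")
print(f"imbalance ratio: {imbalance_ratio:.0f}:1")
print(binary)
```

With the counts as reported, the merged demented class contains 2240 + 897 + 64 = 3201 images, and the largest class is 50 times the size of the smallest.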

Experimental results and analysis

The reduction in pixel intensity as Alzheimer's progresses can be explained by tissue atrophy in the brain. As grey matter atrophies, it is replaced by void space, which appears as black pixels in an MRI11. Alzheimer's deterioration occurs in the grey matter first because it is the outer tissue of the brain; therefore, grey matter volume decreases before white matter volume does. The calculated features are analyzed in terms of how effectively they label the dementia stages. Figures 9 through 11 show 2D and 3D plots of the measured features for the four dementia stages.

\(T{h}_{1}\) and \({d}_{1}\) are effective in distinguishing the moderate and mild dementia stages; however, non-dementia and very mild dementia overlap significantly (Fig. 9). Shrinkage, GWR, and especially \({\alpha }_{1}\) and \({d}_{2}\) help distinguish non-dementia from very mild dementia (Figs. 10 and 11). We ranked the features using the minimum redundancy maximum relevance (MRMR) algorithm and identified the five most significant features as \(T{h}_{1}\), the mean intensity of the white matter, \({d}_{1}\), shrinkage, and the volume of the white matter. The chi-square test univariate feature ranking algorithm supported the MRMR results. Figure 12 depicts the feature ranking produced by the MRMR algorithm. A large drop in importance score between consecutive predictors indicates confidence in the ranking. There are significant drops between the first, second, third, and fourth predictors (Fig. 12), while the features after the fourth show only slight decreases in importance score, indicating non-significant features.
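The sketch below illustrates the idea behind MRMR ranking with a simplified greedy variant. Relevance and redundancy are both measured as mutual information on quantile-binned features. At each step, the feature with the largest relevance minus mean redundancy with the already selected features is chosen. The bin count, the plug-in estimator, and the scoring rule are our assumptions. This is not necessarily the implementation that produced Fig. 12.

```python
import numpy as np


def discretize(x: np.ndarray, n_bins: int = 10) -> np.ndarray:
    """Equal-frequency binning of a continuous feature into integer codes."""
    edges = np.quantile(x, np.linspace(0, 1, n_bins + 1)[1:-1])
    return np.digitize(x, edges)


def mutual_information(a: np.ndarray, b: np.ndarray) -> float:
    """Plug-in mutual information (nats) between two discrete 1-D arrays."""
    _, a_idx = np.unique(a, return_inverse=True)
    _, b_idx = np.unique(b, return_inverse=True)
    joint = np.zeros((a_idx.max() + 1, b_idx.max() + 1))
    np.add.at(joint, (a_idx, b_idx), 1)
    joint /= joint.sum()
    outer = joint.sum(axis=1, keepdims=True) @ joint.sum(axis=0, keepdims=True)
    nz = joint > 0
    return float(np.sum(joint[nz] * np.log(joint[nz] / outer[nz])))


def mrmr_rank(X: np.ndarray, y: np.ndarray, n_bins: int = 10) -> list:
    """Greedy MRMR: maximize relevance minus mean redundancy (MID criterion)."""
    Xd = np.column_stack([discretize(X[:, j], n_bins) for j in range(X.shape[1])])
    relevance = np.array([mutual_information(Xd[:, j], y) for j in range(Xd.shape[1])])
    selected = [int(np.argmax(relevance))]
    remaining = [j for j in range(Xd.shape[1]) if j not in selected]
    while remaining:
        scores = [
            relevance[j] - np.mean([mutual_information(Xd[:, j], Xd[:, s]) for s in selected])
            for j in remaining
        ]
        best = remaining[int(np.argmax(scores))]
        selected.append(best)
        remaining.remove(best)
    return selected


# Synthetic example: feature 0 tracks the class, feature 1 is noise,
# feature 2 is an exact copy of feature 0 (fully redundant).
rng = np.random.default_rng(0)
y = np.repeat([0, 1, 2, 3], 100)
f0 = y + 0.1 * rng.standard_normal(y.size)
X = np.column_stack([f0, rng.standard_normal(y.size), f0.copy()])
print(mrmr_rank(X, y))
```

In this example, feature 2 duplicates feature 0, so it is ranked last despite its high relevance. That redundancy penalty is what distinguishes MRMR from purely univariate rankings such as the chi-square test.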

The statistical distributions of the most significant features are given in Fig. 13. Non-dementia and very mild dementia have overlapping characteristics and more outliers than mild and moderate dementia. We observed that white matter characteristics are more effective in distinguishing the different stages.

Labeling dementia stages

We evaluated the performance of the defined AD features in MRI images using decision tree, discriminant analysis, Naïve Bayes, support vector machine (SVM), KNN, ensemble, and neural network (NN) classifiers. Appendix A presents the specifications of the designed classifiers, and Tables 1 and 2 report their performance. Ten-fold cross-validation was employed, with 60% of each class used for training, 20% for validation, and the remaining 20% for testing. Cross-validation was used to reduce the risk of overfitting. Table 1 uses all the features to label the AD stages, while Table 2 uses the five most significant features identified by the feature ranking algorithm in Sect. "Experimental results and analysis". The performance metrics are accuracy, recall, specificity, precision, F1 score, Matthews correlation coefficient, and Kappa values.
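A per-class (stratified) 60/20/20 split like the one described above can be sketched as shown below. The random seed, the rounding of split sizes, and the helper name `stratified_split` are assumptions. The text does not explain how the ten cross-validation folds were combined with this split, so the sketch covers only the split itself.

```python
import numpy as np


def stratified_split(labels, fractions=(0.6, 0.2, 0.2), seed=0):
    """Split indices so each class is divided train/validation/test by `fractions`."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    train, val, test = [], [], []
    for c in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == c))
        n_train = int(round(fractions[0] * idx.size))
        n_val = int(round(fractions[1] * idx.size))
        train.extend(idx[:n_train])
        val.extend(idx[n_train:n_train + n_val])
        test.extend(idx[n_train + n_val:])  # the remainder goes to the test set
    return (np.array(train, dtype=int), np.array(val, dtype=int), np.array(test, dtype=int))


# Class sizes as reported for the 4-class Kaggle set (0 = non, ..., 3 = moderate).
labels = np.repeat([0, 1, 2, 3], [3200, 2240, 897, 64])
train_idx, val_idx, test_idx = stratified_split(labels)
for name, idx in [("train", train_idx), ("val", val_idx), ("test", test_idx)]:
    print(name, np.bincount(labels[idx], minlength=4))
```

Splitting within each class keeps the 64 moderate cases represented in every subset. A single global random split of such an imbalanced set would not guarantee this.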

Using all features yielded higher performance than using only the five most significant features. Discriminant analysis, SVM, ensemble, and neural network classifiers achieved almost perfect classification. Tables 3 and 4 show the confusion matrices for narrow neural networks using 17 (the entire set) and 5 (the most significant) features. The classes are 0-non, 1-very mild, 2-mild, and 3-moderate dementia. Although the MRMR algorithm did not rank the remaining features as significant, adding them raised classifier performance to near-perfect levels, as seen in Table 1. As Figs. 10 and 11 show, these features separate mild and moderate dementia and minimize the overlap between non-dementia and very mild dementia.

Although the five features achieved high performance compared with published works, they failed to separate the non-dementia and very mild dementia classes, whose features overlap, as the class statistics in Fig. 13 show.

Table 5 presents the runtime and total cost metrics of the classifiers, measured on a computer with an 11th Gen Intel(R) Core(TM) i7-11390H processor @ 3.40 GHz (2.92 GHz), 16.0 GB of RAM (15.7 GB usable), and a 64-bit operating system on an x64-based processor. For the narrow neural network (NNN) classifier, the iteration limit was set to 1000.

Automatic feature extraction with convolutional neural networks (CNNs) or other deep neural networks (DNNs) has become increasingly common in recent years. Table 6 compares the performance of the hand-crafted features extracted in this work with that of other works in the literature.

Liang and Gu proposed a weakly supervised learning (WSL)-based deep learning (DL) framework called ADGNET, consisting of a backbone network, a task network, and image reconstruction. The work reported high performance, outperforming the state-of-the-art ResNeXt WSL and SimCLR models12. Murugan et al. designed the deep learning model DEMNET and evaluated it on the Kaggle dataset. DEMNET uses a CNN to extract features from the normalized data, and it achieved an overall accuracy of 95.23%, 95% recall, and 96% precision for 4-class classification13.

Kaplan et al. proposed a feed-forward local phase quantization network (LPQNet) consisting of multilevel feature generation, feature selection, and classification phases. The LPQNet was designed to have high accuracy and low computational complexity. The model was tested on a private AD dataset and on the Kaggle dataset, achieving 99.62% accuracy on the Kaggle dataset for four classes14. In another study, Kaplan et al. used vision transformers and generated 16 exemplars, applying several histogram-based feature extraction methods. Their work achieved 100% accuracy for binary classification using cubic support vector machine (CSVM) and fine KNN classifiers17. A limitation of that work is that the regions highlighting healthy and AD slices were selected manually in the MRI/CT images.

Ref. 16 designed a 4-class AD detection model based on a CNN with Leaky ReLU activation. The data were oversampled with the SMOTE technique, and the authors reported an overall accuracy of 96.35% on the Kaggle dataset. Sharma et al. used a transfer learning-based modified inception model, with normalization in the preprocessing stage, for 4-class AD detection. Vertical and horizontal flipping, rotation, and brightness adjustment were used in the augmentation step to balance the class sizes in the Kaggle dataset. Their work obtained 94.93% precision, 94.94% recall, 98.3% specificity, and 94.92% accuracy. The authors stated, without further detail, that the reproducibility of the work could not be guaranteed15.

In another work, a machine-learning model used longitudinal brain scans and patient attributes such as age to make informed decisions about the presence of AD; it predicted cases of the disease with 97.58% accuracy11. In Ref. 18, convolutional neural networks were used to distinguish AD patients from stable controls with an accuracy of 88%. Research has shown that the grey-to-white matter ratio (GWR) decreases as the disease progresses19. The work in Ref. 20 studied changes in brain volume, focusing on the occipital lobe and hippocampal region. In a recent study, Agarwal et al. labeled cognitively normal, AD, and mild cognitive impairment cases using CNN-based DL models and achieved promising results21. Another work22 used hybrid clustering and a game theory-based approach to monitor the progress of AD in MRI images. Their pipeline consisted of registration, skull stripping, histogram normalization, feature selection, segmentation, and classification stages, and the calculated features were based on the co-occurrence matrix. Evaluation of that work was limited to three performance metrics (accuracy, sensitivity, and specificity) and a comparison with three previous studies. They reported accuracies between 83.45 and 88.83% with balanced sensitivity and specificity on the OASIS dataset.

CNN-based feature extraction is performed automatically by feeding the images to the model, either raw or after a preprocessing stage, so the origin of the learned features is not fully known. Because the brain has a very complicated structure and the etiology of AD is unknown, there is a need for mathematical descriptors that define the structure of the brain and its changes. State-of-the-art works in the literature use CNNs or similar deep-learning techniques to extract features for detecting AD stages, and the performance of those models depends heavily on the size of the dataset. The advantage of our work is its hand-crafted feature modeling.
In our work, we mathematically modeled a set of features, based on the histogram of contrast-enhanced MRI images, that can be used to track Alzheimer's disease progression. Beyond the supervised classifiers employed here, unsupervised or rule-based classifiers can also be designed using the proposed features.

Conclusion

This work developed a model for tracking Alzheimer's disease progression. An adaptive multi-thresholding algorithm was proposed, and a set of novel features was mathematically defined. The algorithm is based on the geometric shape of the envelope of the smoothed histogram, and the grey and white matter were segmented using the resulting threshold values. The system was evaluated on the Kaggle dataset of non-demented, very mild, mild, and moderate dementia MRI images. The features included the grey-to-white matter ratio, shrinkage, slope values, white and grey matter volume, statistical moments, and distance parameters. The MRMR algorithm, used for feature ranking and selection, ranked five features with high confidence: \(T{h}_{1}\), the mean intensity and volume of the white matter, \({d}_{1}\), and shrinkage.

The proposed model was evaluated with various classification algorithms: decision tree, discriminant analysis, Naïve Bayes, SVM, KNN, ensemble, and neural network classifiers. The performance metrics were accuracy, recall, specificity, precision, F1 score, Matthews correlation coefficient, and Kappa values. Discriminant analysis, SVM, ensemble, and neural network classifiers achieved perfect accuracy using all features.

Data availability

This work used a publicly available dataset: https://www.kaggle.com/tourist55/alzheimers-dataset-4-class-of-images.

References

Alzheimer’s Association Report. Alzheimer’s disease facts and figures. Alzheimers Dement. 19(4), 1598–1695. https://doi.org/10.1002/alz.13016 (2023).

Yiannopoulou, K. G. & Papageorgiou, S. G. Current and future treatments in Alzheimer disease: An update. J. Central Nerv. Syst. Dis. 12, 1179573520907397. https://doi.org/10.1177/1179573520907397 (2020).

Emmady, P. D., Schoo, C. & Tadi, P. Major neurocognitive disorder (dementia). In StatPearls [Internet] (StatPearls Publishing, Treasure Island (FL), 2023). https://www.ncbi.nlm.nih.gov/books/NBK557444/.

Breijyeh, Z. & Karaman, R. Comprehensive review on Alzheimer’s disease: Causes and treatment. Molecules 25(24), 5789. https://doi.org/10.3390/molecules25245789 (2020).

Mayeux, R. & Stern, Y. Epidemiology of Alzheimer disease. Cold Spring Harb. Persp. Med. 2(8), a006239. https://doi.org/10.1101/cshperspect.a006239 (2012).

DeTure, M. A. & Dickson, D. W. The neuropathological diagnosis of Alzheimer’s disease. Mol. Neurodegen. 14, 32. https://doi.org/10.1186/s13024-019-0333-5 (2019).

Johnson, K. A., Fox, N. C., Sperling, R. A. & Klunk, W. E. Brain imaging in Alzheimer disease. Cold Spring Harb. Persp. Med. 2(4), a006213. https://doi.org/10.1101/cshperspect.a006213 (2012).

Barnes, J. et al. A meta-analysis of hippocampal atrophy rates in Alzheimer’s disease. Neurobiol. Aging 30(11), 1711–1723. https://doi.org/10.1016/j.neurobiolaging.2008.01.010 (2009).

Rangayyan, R. M. Biomedical Image Analysis. The Biomedical Engineering Series, p. 78 (CRC Press).

Dubey, S. Alzheimer's dataset. Kaggle. https://www.kaggle.com/tourist55/alzheimers-dataset-4-class-of-images (accessed 21 February 2023).

Wu, Z. et al. Gray matter deterioration pattern during Alzheimer’s disease progression: A regions-of-interest based surface morphometry study. Front. Aging Neurosci. https://doi.org/10.3389/fnagi.2021.593898 (2021).

Liang, S. & Gu, Y. Computer-aided diagnosis of Alzheimer’s disease through weak supervision deep learning framework with attention mechanism. Sensors (Basel) 21(1), 220. https://doi.org/10.3390/s21010220 (2020).

Murugan, S. et al. DEMNET: A deep learning model for early diagnosis of Alzheimer diseases and dementia from MR images. IEEE Access 9, 90319–90329. https://doi.org/10.1109/ACCESS.2021.3090474 (2021).

Kaplan, E., Dogan, S., Tuncer, T., Baygin, M. & Altunisik, E. Feed-forward LPQNet based automatic Alzheimer’s disease detection model. Comput. Biol. Med. 137, 104828. https://doi.org/10.1016/j.compbiomed.2021.104828 (2021).

Sharma, S. et al. Transfer learning-based modified inception model for the diagnosis of Alzheimer’s disease. Front. Computat. Neurosci. https://doi.org/10.3389/fncom.2022.1000435 (2022).

Avşar, M. & Polat, K. Classifying Alzheimer’s disease based on a convolutional neural network with MRI images. J. Artif. Intell. Syst. 5, 46–57. https://doi.org/10.33969/AIS.2023050104 (2023).

Kaplan, E. et al. ExHiF: Alzheimer’s disease detection using exemplar histogram-based features with CT and MR images. Med. Eng. Phys. 115, 103971. https://doi.org/10.1016/j.medengphy.2023.103971 (2023).

Yee, E. et al. Construction of MRI-based Alzheimer’s disease score based on efficient 3D convolutional neural network: Comprehensive validation on 7902 images from a MultiCenter dataset. J. Alzheimer’s Dis. 79(1), 47–58. https://doi.org/10.3233/JAD-200830 (2021).

Jefferson, A. L. et al. (Alzheimer’s Disease Neuroimaging Initiative). Gray & white matter tissue contrast differentiates mild cognitive impairment converters from non-converters. Brain Imaging Behav. 9(2), 141–148. https://doi.org/10.1007/s11682-014-9291-2 (2015).

Alattas, R. & Barkana, B. D. A comparative study of brain volume changes in Alzheimer's disease using MRI scans. In Proc. Long Island Systems, Applications and Technology Conference (LISAT), Farmingdale, NY, USA, 1–6. https://doi.org/10.1109/LISAT.2015.7160197 (2015).

Agarwal, D. et al. Automated medical diagnosis of Alzheimer’s disease using an efficient net convolutional neural network. J. Med. Syst. 47, 57. https://doi.org/10.1007/s10916-023-01941-4 (2023).

Rajesh Kumar, P., Arunprasath, T., Pallikonda Rajasekaran, M. & Vishnunvarthanan, G. Computer-aided automated discrimination of Alzheimer’s disease and its clinical progression in magnetic resonance images using hybrid clustering and game theory-based classification strategies. Comput. Electr. Eng. 72, 283–295. https://doi.org/10.1016/j.compeleceng.2018.09.019 (2018).

Author information

Contributions

N.P.: Conceptualization, Methodology, Software, Data curation, Formal Analysis, Investigation, Validation, Writing-Original draft preparation. T.F.-G.: Software, Data Curation, Visualization, Investigation. B.D.B.: Supervision, Project administration, Resources, Conceptualization, Methodology, Formal Analysis, Software, Investigation, Validation, Writing-Reviewing and Editing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Pasnoori, N., Flores-Garcia, T. & Barkana, B.D. Histogram-based features track Alzheimer's progression in brain MRI. Sci Rep 14, 257 (2024). https://doi.org/10.1038/s41598-023-50631-1

DOI: https://doi.org/10.1038/s41598-023-50631-1
