Abstract

Making accurate predictions of chaotic dynamical systems is an essential but challenging task with many practical applications in various disciplines. However, the current dynamical methods can only provide short-term precise predictions, while prevailing deep learning techniques with better performances always suffer from model complexity and interpretability. Here, we propose a new dynamic-based deep learning method, namely the dynamical system deep learning (DSDL), to achieve interpretable long-term precise predictions by the combination of nonlinear dynamics theory and deep learning methods. As validated by four chaotic dynamical systems with different complexities, the DSDL framework significantly outperforms other dynamical and deep learning methods. Furthermore, the DSDL also reduces the model complexity and realizes the model transparency to make it more interpretable. We firmly believe that the DSDL framework is a promising and effective method for comprehending and predicting chaotic dynamical systems.

Similar content being viewed by others

Introduction

Complex, nonlinear dynamical systems are almost ubiquitous in the natural and human world, such as climate systems, ecosystems and financial systems1,2,3. Centuries-old efforts to comprehend and predict such systems have spurred developments in various fields, but have been hindered by their chaotic behaviors4, which makes it exceptionally difficult to achieve long-term precise predictions5. Studies revealed that the chaotic time series generated by any variable contains abundant dynamical information of the whole system5,6. How to exploit the information hidden in the time series data and establish an effective prediction model to accurately predict the future as long as possible, is of great importance in many disciplines7,8,9.

After decades of research, various methods have been proposed to reconstruct dynamics and make predictions of chaotic dynamical systems, and the phase-space reconstruction is undoubtedly one of the most representative dynamical methods. The Takens embedding theorem10,11,12 shows how delayed-coordinates of a single time series can be used as proxy variables to reconstruct dynamics for the underlying deterministic process. Sauer et al.13 and Deyle et al.14 further generalized the delayed embedding theorem and demonstrated that multivariate time series can also be used in reconstructing dynamics. Ma et al.15 proposed an inverse delayed embedding (IDE) method, which is the inverse implementation of the delayed embedding reconstruction. Furthermore, studies16,17 combined the delayed embedding theorem with the generalized embedding theorem, but only consider the linear relationships of various factors while ignore the nonlinear interactions among them.

In recent years, with rapid developments in computing power and algorithmic innovations of deep learning techniques, more and more studies have applied various deep learning methods to predictions of chaotic time series, such as long short-term memory network18,19,20, reservoir computing20,21,22, residual network23,24, anticipated learning machine25, etc. Despite good performances due to the ability of considering nonlinear interactions among variables, deep learning techniques have always been called the “black-box”, which leads to deep learning models being untrusted in some key areas for the lack of model interpretability26,27. In addition, the era of big data has witnessed a rapid accumulation of various data, and samples with massive size and high dimensionality pose unique computational and statistical challenges for deep learning methods28,29.

Here, we propose a new dynamics-based deep learning method, namely the dynamical system deep learning (DSDL), combining dynamical methods with deep learning methods. Several experiments on four chaotic dynamical systems with different complexities have significantly demonstrated the superior performances of the DSDL over other dynamical and deep learning methods used for comparison in this work. Despite the trade-off between model interpretability and performance30, the DSDL not only greatly improves the model performance, but also realizes the model transparency to make it more interpretable.

DSDL framework

As particularly shown in Fig. 1A, with \(n\) dimensional time series data \(x_{i} \left( t \right), i = 1,{ }2,{ } \ldots ,{ }n\), of one chaotic dynamical system (we name it as the target system), every time series can be inputted as one primitive system variable into the DSDL framework, and all primitive system variables constitute the primitive variable set \({\varvec{X}}\), \({\varvec{X}} = \{ x_{1} ,x_{2} , \ldots ,x_{n} \} .\) In a chaotic dynamical system, due to dissipation, the steady dynamics after a transient phase is generally constrained into a subspace31, which is the attractor (\({\mathbf{A}}\)) of the target system. The phase-space technique10,11,12,13,14 makes it possible to have two different dynamical methods of reconstructing an attractor of the target system, which are respectively named as the univariate and multivariate way.

Architecture of the DSDL framework. (A) The input data is constructed by n-dimensional time series data \(x_{i} \left( t \right), i = 1, 2, \ldots , n\) of one chaotic dynamical system, which has an original attractor \(\mathbf {A}\). (B) After selecting one time series as the target variable \(x_{k} \left( t \right)\), \(k \in \left[ {1,n} \right]\), we can reconstruct a delayed attractor \(\mathbf {D}\) based on the time-lagged coordinates of \(x_{k} \left( t \right)\) with suitable embedding dimension and time delay. (C) To take full advantage of the nonlinear interactions among variables, we construct a multi-layer nonlinear network and we can get the candidate variable set \({\varvec{X}}_{{\varvec{N}}}\). From \({\varvec{X}}_{{\varvec{N}}}\), we can select the key variable set \({\varvec{X}}_{{{\varvec{K}},\user2{ x}_{{\varvec{k}}} }}\) of the target variable \(x_{k} \left( t \right)\) though the CVSR method to reconstruct a non-delayed attractor \(\mathbf {N}\). (D) Based on the embedding theorems, both reconstructed attracts (\(\mathbf {D}, \mathbf {N}\)) are topologically conjugated to the original one (\(\mathbf {A}\)), so there is a diffeomorphism map \({ }{{\varvec{\Phi}}}:\mathbf {N} \to \mathbf {D}\). Then we can obtain the DSDL prediction model for the target variable \(x_{k} \left( t \right)\). using the corresponding training set to fit \({{\varvec{\Phi}}}\).

The univariate way (Fig. 1B), according to the delayed embedding theory10,11,12, is reconstructed by the time-lagged coordinates of a single variable \(x_{k} \left( t \right)\), \(k \in \left[ {1, n} \right]\) (we name it as the target variable), thus we can get a “delayed attractor” in the form of \(\mathbf {D}\)(\(x_{k} \left( t \right),{ }x_{k} \left( {t + \tau } \right),x_{k} \left( {t + 2\tau } \right), \ldots\)) with suitable embedding dimension \(L\) and time delay interval \(\tau\). This delayed attractor \(\mathbf {D}\) is aimed to obtain the temporal information of the target variable16. For the DSDL framework, in order to establish a complete prediction model for the target system, every primitive system variable needs to be used as the target variable, that is to say, we must separately establish the DSDL model for each primitive system variable.

The multivariate way (Fig. 1C), based on the generalized embedding theorem13,14,15, is reconstructed by multiple variables and we can get a “non-delayed attractor” in the form of \(\bf {N}\)(\(x_{i} \left( t \right),{ }x_{j} \left( t \right),x_{s} \left( t \right), \ldots\)), \(i,{ }j, s, \ldots \in \left[ {1, n} \right]\). This non-delayed attractor \(\mathbf {N}\) is aimed to get the spatial information among system variables14. Ma et al.16 randomly chose index tuple \(l = \left( {i,{ }j, s, \ldots } \right)\) from any combinations of primitive system variables, which only consider the linear relationships among them. To take full advantage of the nonlinear interactions among system variables, we construct a multi-layer nonlinear network in the DSDL framework and get the candidate variable set \({\varvec{X}}_{{\varvec{N}}}\), \({\varvec{X}}_{{{\varvec{N}}_{{L_{i} }} }}^{{L_{i} }} \subset {\varvec{X}}_{{\varvec{N}}} { }\left( {i \in \left[ {1, m} \right]} \right)\), where \(m\) represents the number of layers in the network, \(L_{i}\) denotes the ith layer, \({\varvec{N}}_{{L_{i} }}\) is the sample size of this layer, and \({\varvec{X}}_{{{\varvec{N}}_{{L_{i} }} }}^{{L_{i} }}\) is the candidate variable subset of the ith layer constructed by all monomials of ith-order based on \({\varvec{X}},\) when \(i \ge 2\) the monomials are nonlinear. However, we stress that not all variables in the set \({\varvec{X}}_{{\varvec{N}}}\) have a positive impact on predicting the target variable \(x_{k} \left( t \right)\), thus we need to select those variables that truly control the evolution of the target variable from \({\varvec{X}}_{{\varvec{N}}}\) to construct the key variables set \({\varvec{X}}_{{{\varvec{K}},\user2{ x}_{{\varvec{k}}} }}\) of \(x_{k} \left( t \right)\) by using the cross-validation-based stepwise regression32 (CVSR) method. In this way, we can efficiently explore the crucial information and remove redundant information for the DSDL model to reduce model complexity. Thus the non-delayed attractor \(\mathbf {N}\) in the DSDL framework is constructed by those key variables we selected.

How to determine the embedding dimension \(L\) and time delay \(\tau\) is an important topic in the state space reconstruction process, and several criteria have been proposed to the time series33. From the embedding theorems10,11,12,13,14, we must ensure that the dimension \(L\) for reconstructing the above attractors (\(\mathbf {D}\), \(\mathbf {N}\)) is large enough, i.e. \(L > 2d_{A}\) where \(d_{A}\) is the box counting dimension of the attractor, and let \(\tau\) be a positive time interval. Here, we use the False Nearest Neighbors34 (FNNs) method to determine the minimal embedding dimension and simply set \(\tau\) as one lag in the time series. However, we find that the dimension \(L\) of our DSDL model (also the number of key variables selected, as shown in Table S1) is usually much larger than the minimal embedding dimension, which meets the requirement of reconstruction.

Ultimately, since both reconstructed attractors are topologically conjugated to the original attractor, there is a diffeomorphism map between them, that is, \({{\varvec{\Phi}}}:\mathbf {N} \to \mathbf {D}\)13 (Fig. S1). On this basis, we can obtain the DSDL prediction model for the target variable \(x_{k} \left( t \right)\), with several parameters to be determined, in the form of

Then, we can use the corresponding training data set to fit \(\varphi\) and train the model parameters (Fig. 1D). Unlike the statistical model, the DSDL model is more similar to the dynamical model, which is aimed to exploit the dynamical equations/operators to achieve successive predictions using one model, instead of getting discrete predictions using several statistical models.

Results

Model performances of DSDL in four chaotic dynamical systems with different complexities

Firstly, our proposed method is tested on three chaotic dynamical systems with different complexities to demonstrate its effectiveness and robustness, including the 3-variable Lorenz system35, 4-variable hyperchaotic Lorenz system36, 5-variable conceptual ocean–atmosphere coupled Lorenz system37 (SI Appendix). Among them, the first two systems are autonomous systems, and the 5-dimensional coupled Lorenz system belongs to a nonautonomous system. With multiple time series data outputted by these systems, sources of predictability are believed to come from the temporal and spatial information hidden in those time series15. Here, prediction results of linear models (A linear model refers to a prediction model established solely using the primitive system variables themselves and without nonlinear monomials) and DSDL models are compared in all three systems (Figs. S2, 2).

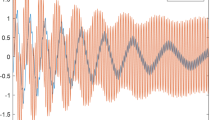

Prediction results of DSDL models in three different chaotic dynamical systems. (A) The prediction series of the Lorenz system. The light grey line shows the numerical solutions (true state), the blue line shows the training set, the red line represents the effective predictions and the dark grey line represents the invalid predictions in the corresponding test set. The vertical black dashed line marks the effective prediction time (EPT). Using a training set of 104 time points, only the last 103 time points are shown in this figure. (B) The prediction trajectory of the Lorenz attractor. (C) Same as (A), but for the hyperchaotic Lorenz system. (D) Same as (A), but for the conceptual ocean–atmosphere coupled Lorenz system.

As a paradigmatic chaotic dynamical system, the Lorenz system outputs three primitive system variables with obvious chaotic oscillations under certain parameter requirements. However, prediction series of the linear model only exhibit nonlinear characteristics for a very short period of time and converge quickly to a fixed point with basically no effective predictions (Fig. S2A). Besides, the prediction trajectory is always off the Lorenz attractor (Fig. S2B), which indicates that we cannot effectively reconstruct the dynamics by only considering the linear relationships among system variables. Using the same training/test sets as the linear model, the prediction series of the DSDL model always maintains the chaotic characteristics of the Lorenz system, accompanied by a significant improvement of effective prediction time (EPT, Fig. 2A). More importantly, the prediction trajectory is consistently on the Lorenz attractor (Fig. 2B), which demonstrates that the DSDL model is able to successfully reconstruct the nonlinear dynamics of the target system. Similar results are also found in the hyperchaotic Lorenz system and the conceptual ocean–atmosphere coupled Lorenz system (Figs. 2C, D, S2C, D).

Furthermore, we note that the EPTs of different variables in one system are not always the same (Fig. 2C, D). For example, the EPT of variable \(\eta\) in the conceptual coupled Lorenz system, representing the ocean pycnocline to simulate features of the slow-changing deep ocean, is much longer than other variables. This further indicates that prediction results of DSDL models are highly consistent with the actual physical properties and predictabilities of various variables in different dynamical processes.

Using the mean EPT (normalized by the Lyapunov time) of 100 different training/test sets to quantify the model predictive capability, we compare the DSDL with nine existing dynamical and machine learning methods used for predicting chaotic time series (SI Appendix). In order to make the results more rigorous, we also incorporate the Mackey–Glass equation38 for comparison, which is a nonlinear time delay differential equation and has a completely different construction from the three systems mentioned above. Clearly, the DSDL method shows the best predictive performances in all four chaotic dynamical systems, and is much ahead of other popular deep learning methods (Fig. 3). Gauthier et al.39 proposed the next generation reservoir computing (NG-RC) method, which has certain similarities with DSDL. However, the NG-RC method can accurately predict approximately ~ 6 Lyapunov time for the \(x\) variable of the Lorenz system, while the DSDL method can predict about ~ 14 Lyapunov time. Moreover, the modeling time of the DSDL (about 2 min for the Lorenz system) is significantly shorter than most of the methods, which further demonstrates the superiority of the DSDL method.

Comparisons between the DSDL and other existing dynamical and machine learning methods in four different chaotic dynamical systems. Using the mean EPT (Lyapunov time), we compare the model predictive capabilities of different methods in four chaotic dynamical systems. The mean EPT is obtained by 100 different training/test sets, and the higher the mean EPT, the better the method performs.

Interpretability of DSDL models

Traditional deep learning models have achieved remarkable performances in many important domains40. However, it is often difficult to explain the prediction results due to their over-parameterized “black-box” nature and lack of interpretability41,42,43. The opposite of “black-box” is transparency, and transparent models convey some degree of ante-hoc interpretability by themselves44. As a dynamics-based deep learning method, all processes of the DSDL framework are clearly visible during the establishment. And we can further explore and clarify the roles of various components in the DSDL model when we break down a complete DSDL model for one target variable into layers.

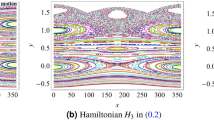

Chaotic characteristics

The prediction series of adding layer 1 is consistent with the linear model, which also converges quickly to a fixed point with basically no effective predictions (Fig. 4A). While the prediction series is immediately able to maintain the nonlinear characteristics and greatly improve the predictive ability after adding layer 2 (Fig. 4B). This indicates that layer 2, that is, key variables of second-order, plays a very important role in reconstructing dynamics of the Lorenz system and provides the necessary chaotic characteristics for the DSDL model.

The roles of different layers in the DSDL model for the Lorenz system. (A–E) The prediction series of variable \(x\) in the Lorenz system resulted from adding layer 1–5 into the DSDL model, respectively. (F–I) The prediction series of variable \(x\) after removing one key variable from the second-order to fifth-order prediction model, respectively. The light grey line shows the numerical solution (true state), the blue line shows the training data set, the red line represents the effective predictions while the dark grey line represents the invalid predictions. The vertical black dashed line marks the EPT. Using a training set of 104 time points, only the last 103 time points are shown in this figure.

Informativeness

Although adding layers 3– 5 cannot affect the chaotic characteristics of the DSDL model as much as layer 2, they will still change the evolution trajectories, improving prediction performance layer by layer (Fig. 4C– E). Therefore, the role of high-order key variables is likely to provide more information on nonlinear interactions among variables and add more constraints into the modulation of the DSDL model, so as to make model predictions follow the underlying evolution rules of the target variable as long as possible.

Robustness

Due to the difficulty in collecting all needful information of the target system in real-world modeling, we assume that a critical factor is unexpectedly missing from the prediction model. For example, after removing \(xz\) from layer 2, the second-order model only exhibits a quasi-periodic evolution (Fig. 4F). However, the DSDL model can still provide a certain degree of accurate predictions after adding higher-order layers, demonstrating the robustness of the DSDL model (Fig. 4G – I).

Discussion

In summary, we have proposed a new framework to make relative long-term accurate and transparent predictions of chaotic dynamical systems, and this DSDL method has been shown to be a successful scheme for dynamics-based deep learning. According to the embedding theorems, we can establish a prediction model based on the map between two kinds of reconstructed attractors. One is the delayed attractor reconstructed by the time-lagged coordinates of the target variable, and the other is the non-delayed attractor reconstructed by multiple key variables selected through CVSR method. The novelty of DSDL models, on the one hand, roots in a full exploitation of the nonlinear interactions among the multivariate time series data by constructing the multi-layer nonlinear network. On the other hand, using the CVSR method to select key variables that truly determine the evolution of the target variable, DSDL not only improves the model predictive capability, but also realizes the reduction of model complexity and improvement of model interpretability, that is to open the “black-box”. Notably, our DSDL model outperforms other existing dynamical and machine learning methods in four chaotic dynamical systems with different complexities.

However, we have to admit that the DSDL method still has certain limitations at present. In this study, we only focus on the prediction of ordinary differential equation systems, and we will continue to test the predictive performance of the DSDL model in partial differential equation systems. And we only focus on those chaotic dynamical systems whose equations are known. On this basis, we will further investigate those complex systems with uncertain structures and unknown equations. In addition, our study only considers data generated by noise-free numerical simulations, but noise is also inevitable in practical applications, thus the impact of noise on the DSDL model is also one of our focuses in the future. In this work, we simply set \(\tau\) as one lag in the time series, and we still need to discuss the impact of time delay \(\tau\) on the DSDL model in future work. Last but not least, Li and Chou45 proved the existence of the atmospheric attractor and the global analysis theory of climate system46 indicates that there exists a global attractor in the dynamical equations of climate, and any state of climate system will evolve into the global attractor as time increases. Therefore, this means that we may be able to apply the DSDL method to predict real-world systems in future work, but this requires more effort and validation.

Methods

Construction of multi-layer nonlinear network

Before describing the specific construction of the multi-layer nonlinear network in detail, we need to clarify some useful definitions. Suppose that there is a power function \(x^{a} \left( {a = 0,1,2,3, \ldots } \right)\), its order is denoted as \(O\left( {x^{a} } \right) = a\). On this basis, we can define a nonlinear monomial \(F\) constructed by the product of power functions of primitive system variables, denoted as

where \(\mathop \sum \limits_{i = 1}^{n} a_{i} = k\), and the order of this monomial is

Therefore, we can eventually give the definition of the ith layer of the multi-layer nonlinear network \({\varvec{X}}_{{N_{{L_{i} }} }}^{{L_{i} }} , i \in \left[ {1,m} \right]\), that is, containing all ith-order monomials \(F^{i} \left( {\varvec{X}} \right)\), which can be expressed as

What’s more, we need to further clarify some properties of the multi-layer network:

-

1.

The number of nonlinear layers (\(m\)) varies with different chaotic dynamical systems, which will be determined by corresponding training/test data sets.

-

2.

There is no intersection between two different nonlinear layers, which can be expressed as

$${\varvec{X}}_{{N_{{L_{i} }} }}^{{L_{i} }} \cap {\varvec{X}}_{{N_{{L_{j} }} }}^{{L_{j} }} = \emptyset \left( {i,j \in \left[ {1,m} \right],i \ne j} \right).$$(4) -

3.

Suppose that the target variable is \(x_{k} \left( t \right)\), the key variables set of \(x_{k} \left( t \right)\) selected from the ith layer is named as \({\varvec{X}}_{{K_{{L_{i} }} , x_{k} }}^{{L_{i} }} ,i \in \left[ {1,m} \right]\). Key variables selected in the previous layer will be input to the next layer and continue to join the selection procedure, denoted as,

$${\varvec{X}}_{{K_{{L_{1} }} , x_{k} }}^{{L_{1} }} \subseteq {\varvec{X}}_{{N_{{L_{1} }} }}^{{L_{1} }} ,\user2{ }$$(5.1)$${\varvec{X}}_{{K_{{L_{2} }} , x_{k} }}^{{L_{2} }} \subseteq {\varvec{X}}_{{N_{{L_{2} }} }}^{{L_{2} }} \cup {\varvec{X}}_{{K_{{L_{1} }} , x_{k} }}^{{L_{1} }} ,$$(5.2)$${\varvec{X}}_{{K_{{L_{m} }} , x_{k} }}^{{L_{m} }} \subseteq {\varvec{X}}_{{N_{{L_{m} }} }}^{{L_{m} }} \cup {\varvec{X}}_{{K_{{L_{m - 1} }} , x_{k} }}^{{L_{m - 1} }} .$$(5.3)

After all those procedures, we can achieve the final result of key variable set \(\user2{ X}_{{K, x_{k} }} = {\varvec{X}}_{{K_{{L_{m} }} , x_{k} }}^{{L_{m} }}\).

Selection method of key variables

After the construction of multi-layer nonlinear network, we need to select the key variables that play a decisive role in the time evolution of the target variable. Guo et al.32 proposed the cross-validation-based stepwise regression (CVSR) approach, which is a “forward” stepwise screening procedure to select the optimal predictors from the potential predictor set. The criteria for selecting key variables no longer rely on the fitting ability of the regression equation to be evaluated, but rather on the hindcast ability of the prediction model in cross validation. It employs k-fold cross validation to improve the robustness of selecting and avoid over-fitting effectively, and k in this work is equal to 10. The root-mean-square error between real data and cross-validation estimates is taken as the criterion to evaluate the performance of potential predictors.

Assessments of model predictive capability

In order to quantify the performances of different models, we use the effective prediction time (EPT) to represent the model predictive capability, defined as the elapsed time before the corresponding prediction error \(E\left( t \right)\) first exceeds an error threshold \(\varepsilon\), and we have

where \(\tilde{X}\left( t \right)\) represents the prediction series obtained by the model and \(\varepsilon\) is equal to one standard deviation of the predicted time series \(X\left( t \right)\) in this paper. The higher the EPT, the stronger the model predictive capability. Here, we denote the EPT in terms of model time units (MTUs), where 1 MTU \(=\) 100 \(\Delta t\). In order to make the results more robust, we use the mean EPT in assessing the model predictive capability, which is the average EPT of models in 100 different training/test sets. And we normalize the mean EPT by each attractor’s Lyapunov time.

Data availability

All data generated or analyzed during this study are included in this published article and its supplementary information files.

References

Palmer, T. N. Extended-range atmospheric prediction and the Lorenz model. Bull. Am. Meteorol. Soc. 74, 49–65 (1993).

Stein, R. R. et al. Ecological modeling from time-series inference: Insight into dynamics and stability of intestinal microbiota. PLoS Comput. Biol. 9, e1003388 (2013).

Soramaki, K. et al. The topology of interbank payment flows. Phys. A 379, 317–333 (2007).

Vlachas, P. R. et al. Data-driven forecasting of high-dimensional chaotic systems with long short-term memory networks. Proc. Math. Phys. Eng. Sci. 474, 20170844 (2018).

Xiong, Y. C. & Zhao, H. Chaotic time series prediction based on long short-term memory neural networks (in Chinese). Sci. Sin.-Phys. Mech. Astron. 49, 120501 (2019).

Huang, W. J., Li, Y. T. & Huang, Y. Prediction of chaotic time series using hybrid neural network and attention mechanism (in Chinese). Acta Phys. Sin. 70, 010501 (2021).

Lockhart, D. J. & Winzeler, E. A. Genomics, gene expression and DNA arrays. Nature 405, 827–836 (2000).

Jenouvier, S. et al. Evidence of a shift in the cyclicity of Antarctic seabird dynamics linked to climate. Proc. R. Soc. B 272, 887–895 (2005).

May, R. M., Levin, S. A. & Sugihara, G. Complex systems: Ecology for bankers. Nature 451, 893–895 (2008).

Packard, N. H. et al. Geometry from a time series. Phys. Rev. Lett. 45, 712–716 (1980).

Takens, F. Detecting strange attractors in turbulence. In Dynamical Systems and Turbulence, Warwick 1980, vol. 898 (eds. Rand, D. A. & Young, L.-S.) 366–381 (Springer, 1981).

Farmer, J. D. & Sidorowich, J. J. Predicting chaotic time series. Phys. Rev. Lett. 59, 845 (1987).

Sauer, T., Yorke, J. A. & Casdagli, M. Embedology. J. Stat. Phys. 65, 579–616 (1991).

Deyle, E. R. & Sugihara, G. Generalized theorems for nonlinear state space reconstruction. PloS One 6, e18295 (2011).

Ma, H. F. et al. Predicting time series from short-term high-dimensional data. Int. J. Bifurcat. Chaos 24, 143003 (2014).

Ma, H. F. et al. Randomly distributed embedding making short-term high-dimensional data predictable. Proc. Natl. A Sci. U. S. A. 115, 9994–10002 (2018).

Ye, H. & Sugihara, G. Information leverage in interconnected ecosystems: Overcoming the curse of dimensionality. Science 353, 922–925 (2016).

Hochreiter, S. & Schmidhuber, J. Long short-term memory. Neural Comput. 9, 1735–1780 (1997).

Li, H. & Chen, X. P. Wind power prediction based on phase space reconstruction and long short-term memory networks (in Chinese). J. New Industr. 10, 1–6 (2020).

Chattopadhyay, A., Hassanzadeh, P. & Subramanian, D. Data-driven predictions of a multiscale Lorenz 96 chaotic system using machine-learning methods: Reservoir computing, artificial neural network, and long short-term memory network. Nonlinear Process Geophys. 27, 373–389 (2020).

Maass, W., Natschläger, T. & Markram, H. Real-time computing without stable states: A new framework for neural computation based on perturbations. Neural Comput. 14, 2531–2560 (2002).

Pathak, J. et al. Model-free prediction of large spatiotemporally chaotic systems from data: A reservoir computing approach. Phys. Rev. Lett. 120, 024102 (2018).

He, K. M., Zhang, X. Y., Ren, S. Q. & Sun, J. Deep residual learning for image recognition. In IEEE Conference on Computer Vision and Pattern Recognition 770–778 (Springer, 2016).

Choi, H., Jung, C., Kang, T., Kim, H. J. & Kwak, I.-Y. Explainable time-series prediction using a residual network and gradient-based methods. IEEE Access 10, 108469–108482 (2022).

Chen, C. et al. Predicting future dynamics from short-term time series using an anticipated learning machine. Natl. Sci. Rev. 7, 1079–1091 (2020).

Reichstein, M. et al. Deep learning and process understanding for data-driven earth system science. Nature 566, 195–204 (2019).

Sun, Z. H. et al. A review of earth artificial intelligence. Comput. Geosci. 159, 105034 (2020).

Fan, J. Q., Han, F. & Liu, H. Challenges of big data analysis. Natl. Sci. Rev. 1, 293–314 (2014).

Hagras, H. Toward human-understandable, explainable AI. Computer 51, 28–36 (2018).

Arrieta, A. B. et al. Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 58, 82–115 (2020).

Bradley, E. & Kantz, H. Nonlinear time-series analysis revisited. Chaos 25, 097610 (2015).

Guo, Y., Li, J. P. & Li, Y. A time-scale decomposition approach to statistically downscale summer rainfall over North China. J. Clim. 25, 572–591 (2012).

Kantz, H. & Schreiber, T. Nonlinear Time Series Analysis, 7 (Cambridge University Press, 2004).

Kennel, M. B., Brown, R. & Abarbanel, H. D. I. Determining embedding dimension for phase-space reconstruction using a geometrical construction. Phys. Rev. A 45, 3403–3411 (1992).

Lorenz, E. N. Deterministic nonperiodic flow. J. Atmos. Sci. 20, 130–141 (1963).

Li, Y. X., Wallace, K. S. & Chen, G. R. Hyperchaos evolved from the generalized Lorenz equation. Int. J. Circuit Theory Appl. 33, 235–251 (2005).

Zhang, S. Q. A Study of impacts of coupled model initial shocks and state–parameter optimization on climate predictions using a simple pycnocline prediction model. J. Clim. 23, 6210–6226 (2011).

Mackey, M. & Glass, L. Oscillation and chaos in physiological control systems. Science 197, 287 (1977).

Gauthier, D. J. et al. Next generation reservoir computing. Nat. Commun. 12, 5564 (2021).

Li, X. H. et al. Interpretable deep learning: Interpretation, interpretability, trustworthiness, and beyond. Knowl. Inf. Syst. 64, 3194–3234 (2022).

Fan, F. L. et al. On interpretability of artificial neural networks: A survey. IEEE Trans. Radiat. Plasma Med. Sci. 5, 741–760 (2021).

Montavon, G., Samek, W. & Müller, K. R. Methods for interpreting and understanding deep neural networks. Digit. Signal Process. 73, 1–15 (2017).

Schwalbe, G. & Finzel, B. A comprehensive taxonomy for explainable artificial intelligence: A systematic survey of surveys on methods and concepts. Data Min. Knowl. Disc. (2023).

Cheng, K. Y. et al. Research advances in the interpretability of deep learning (in chinese). J. Comput. Res. Develop. 57, 1208–1217 (2020).

Li, J. P. & Chou, J. F. Existence of the atmosphere attractor. Sci. China (Ser. D) 40, 215–224 (1997).

Li, J. P. & Chou, J. F. Global analysis theory of climate system and its applications. Chin. Sci. Bull. 48, 1034–1039 (2003).

Acknowledgements

The authors are deeply indebted to Ruipeng Sun, Hao Li and Zixiang Wu for their help. This work was jointly supported by National Natural Science Foundation of China (42130607, 42288101), and Laoshan Laboratory (No.LSKJ202202600). Thanks for the Center for High Performance Computing and System Simulation, Laoshan Laboratory (Qingdao) for providing computing resource. And we sincerely appreciate the comments and suggestions provided by the two reviewers during the revision process.

Author information

Authors and Affiliations

Contributions

J.P.L. and M.Y.W. contributed equally to this work. J.P.L. conceived the idea. M.Y.W. performed all calculations and wrote the initial manuscript with the help of J.P.L. Both authors contributed to analyses, interpretation and writing of results.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, M., Li, J. Interpretable predictions of chaotic dynamical systems using dynamical system deep learning. Sci Rep 14, 3143 (2024). https://doi.org/10.1038/s41598-024-53169-y

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-024-53169-y

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.