Abstract

Three pollination methods are commonly used in the greenhouse cultivation of tomato: pollination using insects, artificial pollination (by manually vibrating flowers), and plant growth regulators. Insect pollination is the preferred natural technique. We propose a new pollination method in which drones or robots use Artificial Intelligence (AI) flower classification technology. To pollinate tomato flowers, drones or robots must recognize and classify flowers that are ready to be pollinated. Therefore, we created an AI image classification system using a machine learning convolutional neural network (CNN). A challenge is to classify flowers successfully while the drone or robot is constantly moving, for example, when the plant is shaking due to wind or to vibration caused by the drone or robot. The AI classifier was based on an image analysis algorithm for the shape of flowers ready for pollination. The experiment was performed in a tomato greenhouse and aimed for an accuracy rate of at least 70% for sufficient pollination. The most suitable flower shape was confirmed by the fruiting rate, and tomato fruit with the best shape were formed by this method. Although we targeted tomatoes, the AI image classification technology is adaptable to the cultivation of other species for a smart agricultural future.

Introduction

Smart agriculture actively incorporates engineering and chemical technologies into agriculture, especially crop cultivation, with the aims of saving labor and achieving high-quality production1,2. In particular, digitized data (such as temperature, humidity, sunshine hours, and soil components) collected through information and communications technology (ICT) and the Internet of Things (IoT) assist cultivation management3,4,5,6,7. For example, mechanistic and physiological plant growth models can suggest optimal cultivation methods8. However, these models involve parameters that interact with complex control variables. The parameters vary widely, including nutrients, flooding, photosynthetic light intensity, and carbon dioxide. Moreover, changes in plant species and environmental conditions cause these parameters to interact, limiting the usefulness of any single parameter on its own.

Therefore, to improve smart agriculture, we propose using artificial intelligence (AI) with machine learning9,10. Practical AI systems are already in operation in plant factories, such as hydroponic facilities11. For example, in artificially controlled environments, AI is used to optimize water and air temperature and light intensity. AI is also used to improve soil management and analyze nutrient levels12,13,14. Furthermore, robots with AI are being developed to support cultivation and reduce labor costs, for example, for pesticide application15 and automated harvesting16,17.

Tomato is a popular, high-demand crop. It ranks first in global vegetable production: approximately 180 million tons of tomato are produced annually, followed by onions (104 million tons) and cucumbers (91 million tons)18. Although tomatoes are among the most popular crops, their cultivation faces many problems, including pollination19. In pollination, pollen produced in the stamens is transferred to the pistil, which then bears seeds and fruit. Cross-pollinated plants must be pollinated by pollen from a different strain. In contrast, tomatoes can easily be pollinated within a single flower once pollen is produced; hence, tomato flowers self-pollinate when they are shaken. In tomato greenhouse cultivation, three pollination methods are commonly used: pollination using insects, artificial pollination (by manually vibrating flowers), and hormonal pollination using plant growth regulators.

Pollination using insects follows nature: tomato flowers are pollinated by the shaking that occurs when honeybees and bumblebees collect pollen. However, insect management and rearing are difficult, and bees are inactive at high temperatures, reducing pollination efficiency. Therefore, artificial pollination, in which flowers are vibrated manually, is often used. In artificial pollination, farm workers visually classify the shapes of flowers that are ready for pollination and shake them using a vibrating instrument. However, classifying flowers requires experience and skill, so many skilled farm workers are needed, increasing expenses. Hormonal pollination uses plant growth regulators to force fruiting, growth, and pollination. It is an easy and useful technique that does not require experience or skill in classifying flowers20. However, if strict guidelines are not followed, phytotoxicity can occur, resulting in quality problems such as fruit deformation and poor taste. To solve these problems, we propose a new pollination scheme and have developed a system for it (see Fig. 1). The proposed pollination system uses small drones or robots instead of bees and humans. However, this is a complicated process.

For example, drones and robots must be able to find flowers as bees and humans do. Drones and robots require the ability to discriminate ripe flowers. Communication technology for remotely controlling drones and robots is required, as is a mechanism for pollinating flowers. We focus on the technologies that allow drones and robots to distinguish flowers autonomously. Beyond distinguishing flowers, the robots must also identify the detailed shapes of flowers that are ready for pollination.

Drones and robots equipped with cameras capture image data and classify the flowers. We developed an AI image classification system using a convolutional neural network (CNN) based on machine learning. Drones and robots must classify the flowers while moving; for example, flowers shake because of the wind generated by a drone or the vibration caused by a robot. Such movement degrades the performance of the AI image classifier. Therefore, flower shapes must be classified under the conditions and environments in which drones and robots operate. We aim to develop technology that enables drones and robots to support cultivation using state-of-the-art techniques. Above all, the technology must be effective and meet the requirements of both plant characteristics and actual cultivation conditions. We therefore developed the technology and confirmed the usefulness of this AI image classification system in a greenhouse experiment that resulted in fruiting tomatoes.

Methodology

This section mainly describes the basic AI development techniques that allow robots to classify flowers. To classify pollinable flowers, we used a machine learning CNN algorithm21, which is widely used for image analysis. We created an AI image identification device as the basic technology for a pollination system that requires no human involvement, such as visual inspection or insect management. The CNN techniques used in this study are detailed below. Building on the flower classification criteria and CNN algorithms described in this section, the Results section describes an implementation suitable for mounting on pollination robots.

Flower shape machine learning

The process of tomato flower budding, flowering, and fruiting is shown in Fig. 2. The process runs from (a) to (f): (a) is a bud, and fruit grows after (f). The shape of the flower changes during flower bud differentiation. As a general empirical rule, a flower ready for pollination has curled petals, as in (d). The stamens protrude from the center of the flower, pollen is attached to their inner side, and they surround the stigma of the pistil.

Shape (d) produces the most pollen when the stigma is long, increasing the probability of pollination. Because tomatoes are self-pollinating, pollen adheres to the stigma when the stamens vibrate, and pollination begins. Therefore, the AI image classifier identifies shape (d) in the captured image. Images taken by drones and robots must also account for the blurring caused by the shaking of the drone or robot. To simulate this blurring, images were smoothed using a Gaussian filter; an example image after Gaussian filtering is shown in Fig. 3. We trained the CNN of the AI image classifier on both normal and smoothed images.
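The blurring simulation can be sketched as follows. This is a minimal pure-Python version that assumes a 5 × 5 kernel with σ = 1.0 and replicated image borders; the paper does not report the kernel size or σ it used, so both are placeholders. In practice a library routine such as OpenCV's `cv2.GaussianBlur` does the same job on full images.

```python
import math

def gaussian_kernel(size=5, sigma=1.0):
    """Return a normalized size x size Gaussian kernel (size must be odd)."""
    if size % 2 == 0:
        raise ValueError("kernel size must be odd")
    half = size // 2
    kernel = [[math.exp(-(x * x + y * y) / (2 * sigma * sigma))
               for x in range(-half, half + 1)]
              for y in range(-half, half + 1)]
    total = sum(sum(row) for row in kernel)
    # Normalize so the weights sum to 1 and overall brightness is preserved.
    return [[v / total for v in row] for row in kernel]

def gaussian_blur(image, size=5, sigma=1.0):
    """Blur a 2D grayscale image (list of rows) to mimic motion/shake blur."""
    kernel = gaussian_kernel(size, sigma)
    half = size // 2
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in range(-half, half + 1):
                for dj in range(-half, half + 1):
                    # Clamp coordinates so edge pixels are reused at the border.
                    y = min(max(i + di, 0), h - 1)
                    x = min(max(j + dj, 0), w - 1)
                    acc += kernel[di + half][dj + half] * image[y][x]
            out[i][j] = acc
    return out
```

Training on both the original and the blurred copy of each image, as the text describes, exposes the CNN to the kind of softened edges a moving camera produces.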

Ingenuity of CNN in image analysis

The CNN used in this study is a neural network that extracts features using convolution (Conv) layers and pooling layers. A configuration example of the CNN is shown in Fig. 4, in which the numbers represent the pixel size of the image. The Conv layer performs convolution on the image data, converted into a three-dimensional array of red/green/blue (RGB) values, and extracts a feature map. To preserve features near the image border (such as edges), the 32 × 32-pixel image was zero-padded by adding a border of zeros around it. A feature map was then obtained by sliding a 3 × 3 kernel filter over the image one pixel at a time. Max pooling and average pooling were performed in the pooling layers to counteract image shifts and differences in appearance. Next, the extracted features were flattened into a one-dimensional vector and weighted by a fully connected (Dense) layer. Finally, the output layer computed the score of each class using an activation function such as softmax. CNNs are widely used in image analysis because of these properties9.
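To make the Conv, pooling, Dense, and softmax steps concrete, here is a minimal pure-Python sketch for a single-channel image (a real Conv layer applies the same operation to each of the three RGB channels and sums the results). The kernel values, the ReLU activation, and the Dense weights are illustrative assumptions; the paper does not report its layer sizes or activations beyond softmax.

```python
import math

def zero_pad(image, pad=1):
    """Surround a 2D image with `pad` rows and columns of zeros."""
    width = len(image[0]) + 2 * pad
    top = [[0.0] * width for _ in range(pad)]
    body = [[0.0] * pad + list(row) + [0.0] * pad for row in image]
    bottom = [[0.0] * width for _ in range(pad)]
    return top + body + bottom

def conv3x3(image, kernel):
    """'Same' 3x3 convolution with stride 1: zero-pad by one pixel, then slide
    the kernel one pixel at a time. As in CNN layers, the kernel is not flipped."""
    padded = zero_pad(image, 1)
    h, w = len(image), len(image[0])
    return [[sum(kernel[a][b] * padded[i + a][j + b]
                 for a in range(3) for b in range(3))
             for j in range(w)]
            for i in range(h)]

def pool2x2(fmap, mode="max"):
    """2x2 pooling with stride 2; mode is 'max' or 'avg'."""
    out = []
    for i in range(0, len(fmap) - 1, 2):
        row = []
        for j in range(0, len(fmap[0]) - 1, 2):
            window = [fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1]]
            row.append(max(window) if mode == "max" else sum(window) / 4.0)
        out.append(row)
    return out

def softmax(logits):
    """Turn raw class scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def forward(image, kernel, dense_weights, dense_bias):
    """Conv -> ReLU -> max pool -> flatten -> Dense -> softmax.
    ReLU and all weights here are illustrative assumptions."""
    fmap = [[max(0.0, v) for v in row] for row in conv3x3(image, kernel)]
    flat = [v for row in pool2x2(fmap, "max") for v in row]
    logits = [sum(w * x for w, x in zip(ws, flat)) + b
              for ws, b in zip(dense_weights, dense_bias)]
    return softmax(logits)
```

With zero padding, a 3 × 3 all-ones kernel applied to a 3 × 3 all-ones image gives 4 at a corner and 9 at the center, which shows how the padding lets the window visit border pixels while keeping the output the same size as the input.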

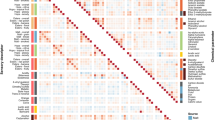

CNN algorithms require training on a large amount of image data. Because collecting a large amount of image data is difficult, we augmented the data by rotating and reversing (mirroring) the images. The AI image classification used two classes of flower image data: flowers ready for pollination and unripe flowers. After training the two-class classifier, the accuracy rate of the classification results was assessed (see Fig. 5). The training data were derived from original images of 4608 × 2592 pixels. From these images, 100 images were prepared by cropping out the flower parts and sorting them into six stages, from bud emergence to the initiation of fruiting.
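A minimal sketch of rotation and reversal augmentation, assuming 90-degree rotations plus horizontal mirroring (the paper does not state the rotation angles). Each image then yields 8 variants, so this step alone would not reach the approximately 85,000 training images reported; the paper also used grayscale, binarized, and Gaussian-filtered versions. The train/test split is done before augmentation, so no rotated copy of a test image can leak into training. `test_fraction` and `seed` are hypothetical parameters.

```python
import random

def rotate90(img):
    """Rotate a 2D image (list of rows) 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]

def mirror(img):
    """Reverse each row (horizontal flip)."""
    return [list(row[::-1]) for row in img]

def dihedral_variants(img):
    """Return the 8 rotations/reflections of an image: 4 rotations x optional mirror."""
    variants = []
    current = [list(row) for row in img]
    for _ in range(4):
        variants.append(current)
        variants.append(mirror(current))
        current = rotate90(current)
    return variants

def split_then_augment(images, labels, test_fraction=0.2, seed=0):
    """Split into train/test BEFORE augmenting; only the training set is augmented."""
    idx = list(range(len(images)))
    random.Random(seed).shuffle(idx)
    n_test = int(len(idx) * test_fraction)
    test_idx, train_idx = idx[:n_test], idx[n_test:]
    train = [(v, labels[i]) for i in train_idx for v in dihedral_variants(images[i])]
    test = [(images[i], labels[i]) for i in test_idx]
    return train, test
```

Keeping the test images unaugmented and unseen means the reported validation accuracy measures generalization to new flowers rather than recognition of rotated copies of training flowers.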

The images were reduced to 32 × 32 pixels, with an average size of 100 KB. In addition, the training image data were augmented and preprocessed by rotation, grayscale conversion, binarization, and Gaussian filtering (see Fig. 6). Yellow regions were also extracted from the images so that the network could learn the flower shape. The images were divided into training and test images before preprocessing, and the number of training images was augmented to approximately 85,000. Images of flowers with a high likelihood of pollination were extracted to determine which flower shape (see Fig. 2) the AI image classifier identified most accurately. We also confirmed the accuracy rate on the smoothed images, which represent blurring caused by movement. Performance was evaluated by the accuracy rate (validation accuracy), defined as the percentage of test images, not included in the training image database, that were classified correctly.
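The grayscale, binarization, and yellow-extraction preprocessing can be sketched with the standard library's `colorsys` module. The grayscale weights are the common ITU-R BT.601 luma coefficients; the binarization threshold (128) and the yellow hue window (40 to 70 degrees, with minimum saturation and brightness of 0.4) are hypothetical values chosen for illustration, not parameters reported in the paper.

```python
import colorsys

def to_grayscale(rgb_image):
    """RGB (0-255) -> luma using the BT.601 weights 0.299, 0.587, 0.114."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
            for row in rgb_image]

def binarize(gray_image, threshold=128):
    """1 where the gray value is at or above the (hypothetical) threshold, else 0."""
    return [[1 if v >= threshold else 0 for v in row] for row in gray_image]

def yellow_mask(rgb_image, hue_range=(40.0, 70.0), min_sat=0.4, min_val=0.4):
    """1 where a pixel is yellow: hue (degrees) in hue_range and it is
    saturated and bright enough; all thresholds are assumptions."""
    mask = []
    for row in rgb_image:
        out = []
        for (r, g, b) in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
            hue_deg = h * 360.0
            is_yellow = hue_range[0] <= hue_deg <= hue_range[1] and s >= min_sat and v >= min_val
            out.append(1 if is_yellow else 0)
        mask.append(out)
    return mask
```

Thresholding in HSV rather than RGB keeps the yellow test fairly stable under brightness changes, which matters in a greenhouse where sunlight varies.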

Ethics declarations

The use of plants in this study complied with relevant institutional, national, and international guidelines and legislation.

Results

Evaluation of CNN machine learning

The training history of the AI classification algorithm is shown in Fig. 7. The vertical axis shows the accuracy rate, and the horizontal axis shows the number of epochs. The red line indicates validation accuracy (val_accuracy), and the blue line indicates training accuracy (accuracy). Training stopped at eight epochs because of early stopping, and the validation accuracy was 87.3%. One reason the accuracy rate remained below 90% is the insufficient number of original training images.
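Early stopping halts training once validation accuracy stops improving. The paper does not report its patience setting or framework, so the sketch below uses a hypothetical `patience` and `min_delta`; if the model was built with Keras (an assumption), `tf.keras.callbacks.EarlyStopping(monitor="val_accuracy", patience=...)` implements the same rule. The history in the usage check is made up for illustration.

```python
def early_stopping_epoch(val_history, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a per-epoch validation-accuracy list.

    Training stops once accuracy has failed to improve by more than
    `min_delta` for `patience` consecutive epochs. Epochs are 1-indexed;
    if the rule never fires, training runs to the last epoch."""
    best = float("-inf")
    best_epoch = 0
    wait = 0
    for epoch, acc in enumerate(val_history, start=1):
        if acc > best + min_delta:
            best, best_epoch, wait = acc, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return len(val_history), best_epoch
```

For the made-up history `[0.60, 0.75, 0.82, 0.87, 0.86, 0.87, 0.85, 0.84]` with `patience=3`, training stops at epoch 7 and the best model is from epoch 4.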

This result reflects a trade-off: we prioritized accuracy under operating conditions over extreme accuracy on the pollination images. The accuracy rate may also have been lowered by images near the boundary between ripe and unripe flowers, which are difficult to classify. In Fig. 7, the yellow dashed line ("nothing") shows the validation accuracy when training without data augmentation; it reached only approximately 70%, compared with 87.3% for the red val_accuracy line. This confirms the effectiveness of data augmentation. Table 1 shows, for each flower shape, the probability that the AI classification algorithm identifies the flower as ready for pollination. Flower shape (d) was identified as ripe with approximately 97% accuracy. Because this was the most accurate result, the image classification accuracy is considered sufficient. In addition, on images smoothed with the Gaussian filter, the accuracy rate for flower shape (d) was approximately 96%. This result suggests that the AI remains effective even with the shaking caused by drones and robots.

AI image classifier suitable for robot mounted technology by experimental results

We verified the accuracy of the image classification algorithm using real flowers in a tomato greenhouse. The image classification algorithm was run on a small computer serving as an AI image classification machine. The small computer was a Raspberry Pi (hereafter RasPi)22, a single-board computer with an ARM processor. The configuration and operation of the AI image classification machine are shown in Fig. 8. The RasPi was connected to a camera module, an ultrasonic sensor module, and an external LED display that outputs the results. The ultrasonic sensor measured the distance between the flower and the RasPi and triggered the camera, so an image was taken automatically only when the tomato stem was within 0.3 m of the camera. Because this function avoids taking unnecessary images and yields only image data with a fixed view angle, the flower parts can be extracted easily. The AI image classification machine displays an accuracy rate (%) on the external LED display. It also outputs 1 when it determines that pollination is possible and 0 when it determines that pollination is not possible.
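The distance-triggered capture can be sketched as below. An ultrasonic ranger reports a round-trip echo time, so the one-way distance is time × speed of sound / 2; the speed of sound (343 m/s, dry air at about 20 °C) is an assumption, while the 0.3 m limit comes from the text. `capture` and `classify` are hypothetical stand-ins for the camera module and the CNN, not functions of any real RasPi library.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumption: dry air at about 20 degrees C
TRIGGER_DISTANCE_M = 0.3    # from the text: capture only within 0.3 m

def echo_to_distance_m(echo_seconds):
    """Convert the ultrasonic round-trip echo time to a one-way distance."""
    return echo_seconds * SPEED_OF_SOUND_M_S / 2.0

def maybe_capture(echo_seconds, capture, classify, max_distance_m=TRIGGER_DISTANCE_M):
    """Capture and classify only when the target is close enough.

    `capture` and `classify` are hypothetical callables standing in for the
    camera module and the CNN; returns None when no image is taken."""
    if echo_to_distance_m(echo_seconds) > max_distance_m:
        return None
    return classify(capture())
```

For example, a 1.5 ms echo corresponds to about 0.257 m and triggers a capture, whereas a 2.0 ms echo (about 0.343 m) does not.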

In this experiment, we aimed to confirm the performance of the AI image classification machine, so only simple information was shown on the external display. When the pollination system in Fig. 1 is actually used, an output of 1 notifies the robot or drone to perform pollination, whereas an output of 0 makes it resume searching for ripe flowers. A photograph of the AI image classification machine at the experimental site is shown in Fig. 9. The displayed result (1 or 0) and the accuracy rate on the external LED display allow the experimenter to confirm the data visually in real time during the experiment. An output of 1 corresponds to an accuracy rate of \(\ge\) 70%, and an output of 0 to an accuracy rate of \(<\) 70%. This threshold was intentionally set relatively low to reflect actual field operating conditions. We randomly selected 200 flower clusters and assessed them using the AI image classification machine. If the display output was 1, the flower was pollinated by physically vibrating it. The captured images, accuracy rates, and results (1/0) were saved separately in internal memory and analyzed after the experiment. In addition, we marked the selected and pollinated flowers and observed them continuously to verify successful fruiting. The accuracy of the AI image classification machine is given in Table 2, and the fruiting rate is shown in Fig. 10, which gives the ratio of the number of flowers that set fruit to the number of pollinated flowers. Figure 11 shows the fruiting rate against the accuracy rate of the AI image classification machine.
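The 1/0 decision rule and the resulting robot behaviour can be sketched as follows. The 70% threshold comes from the text; the function names, the action strings, and the record layout are hypothetical illustrations of what the experiment logged, not the authors' code.

```python
THRESHOLD_PERCENT = 70.0  # threshold from the text

def classifier_output(accuracy_rate_percent, threshold=THRESHOLD_PERCENT):
    """Map the displayed accuracy rate to the 1/0 output: 1 if >= threshold, else 0."""
    return 1 if accuracy_rate_percent >= threshold else 0

def next_action(output):
    """Hypothetical robot behaviour: pollinate on 1, keep searching on 0."""
    return "pollinate" if output == 1 else "resume_search"

def log_record(image_id, accuracy_rate_percent):
    """Bundle what the experiment saved: image, accuracy rate, and the 1/0 result."""
    output = classifier_output(accuracy_rate_percent)
    return {"image": image_id, "accuracy_rate": accuracy_rate_percent, "output": output}
```

Using `>=` for output 1 and `<` for output 0 keeps the two cases disjoint, so a rate of exactly 70% always maps to pollination.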

Discussion

The accuracy of the AI image classification machine is given in Table 2. Six of approximately 200 flowers were misclassified, a misclassification rate of approximately 3% (below 5%). These misclassifications occurred when camera shake during photography made the flower shape ambiguous, or when sunlight in the greenhouse altered the flower's appearance. Overall, the image analysis performed well; however, lighting conditions require attention when performing image analysis. An example of flowers with uncertain shapes that degrade accuracy is shown in Fig. 12. Even when confirmed visually, such ambiguously shaped flowers do not pollinate consistently. The fruiting rates in Fig. 10 were compared with the offline (desktop) predictions in Table 1, confirming that flower shape (d) provides the highest accuracy rate. The fruit setting rate was \(\ge\) 70%, confirming that flower shape (d) is the most suitable for pollination.

The results confirmed successful pollination using the AI image classification machine. We therefore expect the machine to perform sufficiently well when installed in a robot in an agricultural field. Figure 11 shows the fruiting (success) rate against the accuracy rate. When the accuracy rate of the AI image classification machine was below 70%, the success rate was approximately 40%. Conversely, when the accuracy rate was 70% or above, the fruiting rate was 60% or higher. Therefore, when the output accuracy rate is 70% or above, the robot is likely to identify flowers ripe for pollination correctly. The threshold of the AI image classification machine was set at \(\ge\) 70%, which should provide sufficient pollination in real agricultural situations.
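The comparison behind Fig. 11 amounts to grouping pollinated flowers by the classifier's accuracy rate and computing the fraction that set fruit in each group. A minimal sketch, assuming each flower is recorded as an (accuracy rate, set fruit) pair; the records in the check below are made up and do not reproduce the paper's data.

```python
def fruiting_rate_by_band(records, threshold=70.0):
    """records: (accuracy_rate_percent, set_fruit) pairs for pollinated flowers.

    Returns the fraction of flowers that set fruit below and at/above the
    threshold; a band with no flowers maps to None."""
    bands = {"below": [], "at_or_above": []}
    for accuracy, set_fruit in records:
        key = "at_or_above" if accuracy >= threshold else "below"
        bands[key].append(1 if set_fruit else 0)
    return {k: (sum(v) / len(v) if v else None) for k, v in bands.items()}
```

With the made-up records in the check, 1 of 4 flowers below the threshold and 4 of 5 at or above it set fruit, giving rates of 0.25 and 0.8.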

The relationship between the shape of the pollinated flower and the shape of the fruit set is shown in Fig. 13. The flower shapes were classified into types #1 to #6 according to the fruiting results. Artificially pollinated flowers of shapes (c), (d), and (e) produced relatively high fruiting rates. The highest-quality fruit shape was set by #6-shaped flowers. For #6, the fruit setting rate was 40% for (c), 50% for (d), and 19% for (e). From these results, we identified flower shape (d) as providing the highest fruit setting rate and forming the highest-quality fruit.

In medical and engineering applications, image classifiers based on machine learning are generally required to achieve accuracy close to 100%. However, we considered an accuracy rate of 70% or more sufficient for identifying the shapes of flowers that can be pollinated, as shown in Fig. 11. This is because flower shape (d) in Fig. 2 is not the only shape that can be pollinated: shape (d) has the highest fruiting success ratio, but shapes (c) and (e) can also set fruit. A tolerant (fuzzy) accuracy threshold therefore allows the AI classifier system to improve tomato yield without near-perfect classification.

Regarding classification accuracy, for this technology to be installed in various robots in the future, it is desirable to extend the machine learning with transfer learning (TL) and fine-tuning23,24. TL and fine-tuning reuse a model trained by the original deep learning as the starting point for a new task. Currently, when the growing season or the tomato variety differs, the flower shape changes slightly, so new training is required. In the future, we hope to make the technology more versatile by adding techniques such as TL and fine-tuning. We position TL and fine-tuning as extensions of this research and leave them to future work.

Possibility of implementation on small drones with low-resolution cameras

To further examine the performance of the AI classification machine developed in this paper, we performed a reference verification with a small camera. CNN machine learning was performed assuming images from a camera mounted on an ultra-small drone. The camera used in the experiment in Fig. 9 has a resolution of 1920 × 1080 pixels, whereas the camera mounted on the ultra-small drone has 276 × 196 pixels, roughly one-sixth the resolution along each axis. Figure 14 shows flower shape (d) at this resolution. The AI image classification machine achieved a classification result of 88.7% on these images. The classification result for the camera images used in the experiment was 95% or more, so this result was about 7 percentage points lower. However, because the accuracy rate still exceeds 70%, sufficient accuracy should be obtained even with the low-resolution camera of an ultra-small drone. We attribute this result to training on Gaussian-filtered images, which yielded a high accuracy rate even for blurred images.
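The resolution comparison can be checked with a little arithmetic: 276/1920 ≈ 0.144 and 196/1080 ≈ 0.181 per axis, while the pixel count drops by a factor of about 38. The sketch below computes these ratios and, as one assumed way to emulate the drone camera from existing images, downsamples with nearest-neighbour sampling; the paper does not say how its low-resolution images were produced, and real low-resolution optics also differ in noise and sharpness.

```python
def resolution_ratios(high, low):
    """Per-axis and total-pixel ratios between two (width, height) resolutions."""
    (w_hi, h_hi), (w_lo, h_lo) = high, low
    return w_lo / w_hi, h_lo / h_hi, (w_lo * h_lo) / (w_hi * h_hi)

def resize_nearest(image, new_w, new_h):
    """Nearest-neighbour downscaling of a 2D image (list of rows)."""
    h, w = len(image), len(image[0])
    return [[image[i * h // new_h][j * w // new_w] for j in range(new_w)]
            for i in range(new_h)]
```

Calling `resolution_ratios((1920, 1080), (276, 196))` returns approximately `(0.144, 0.181, 0.026)`.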

Conclusion

We developed an AI image classification machine using CNN machine learning that allows a robot to determine the shape of tomato flowers ready for pollination without the need for humans or bees. The image analysis algorithm was implemented as an AI image classification machine, and its effective operation was confirmed in a tomato greenhouse. Pollination was performed only on ripe flowers, and we compared the fruiting rates of the artificially pollinated tomatoes. With the accuracy-rate threshold of the AI image classification machine set at 70%, the results indicate that practical use of pollination robots is achievable. Moreover, we identified the flower shape that yielded the highest fruiting rate and the highest-quality fruit shape.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Mitra, A. et al. Everything you wanted to know about smart agriculture. arXiv preprint arXiv:2201.04754 (2022).

Goel, R. K., Yadav, C. S., Vishnoi, S. & Rastogi, R. Smart agriculture-urgent need of the day in developing countries. Sustain. Comput. Inform. Syst. 30, 100512 (2021).

Patil, K. & Kale, N. A model for smart agriculture using IoT. In 2016 International Conference on Global Trends in Signal Processing, Information Computing and Communication (ICGTSPICC), 543–545 (IEEE, 2016).

Gondchawar, N. et al. IoT based smart agriculture. Int. J. Adv. Res. Comput. Commun. Eng. 5, 838–842 (2016).

Prathibha, S., Hongal, A. & Jyothi, M. IoT based monitoring system in smart agriculture. In 2017 International Conference on Recent Advances in Electronics and Communication Technology (ICRAECT), 81–84 (IEEE, 2017).

Ray, P. P. Internet of things for smart agriculture: Technologies, practices and future direction. J. Ambient Intell. Smart Environ. 9, 395–420 (2017).

Sushanth, G. & Sujatha, S. IoT based smart agriculture system. In 2018 International Conference on Wireless Communications, Signal Processing and Networking (WiSPNET), 1–4 (IEEE, 2018).

Cohen, A. R. et al. Dynamically controlled environment agriculture: Integrating machine learning and mechanistic and physiological models for sustainable food cultivation. ACS ES&T Eng. 2, 3–19 (2021).

Liakos, K. G., Busato, P., Moshou, D., Pearson, S. & Bochtis, D. Machine learning in agriculture: A review. Sensors 18, 2674 (2018).

Zhu, N. et al. Deep learning for smart agriculture: Concepts, tools, applications, and opportunities. Int. J. Agric. Biol. Eng. 11, 32–44 (2018).

Resh, H. M. Hydroponic Food Production: A Definitive Guidebook for the Advanced Home Gardener and the Commercial Hydroponic Grower (CRC Press, 2022).

Song, H. & He, Y. Crop nutrition diagnosis expert system based on artificial neural networks. In Third International Conference on Information Technology and Applications (ICITA’05), vol. 1, 357–362 (IEEE, 2005).

Eli-Chukwu, N. C. Applications of artificial intelligence in agriculture: A review. Eng. Technol. Appl. Sci. Res. 9, 4377–4383 (2019).

Vincent, D. R. et al. Sensors driven AI-based agriculture recommendation model for assessing land suitability. Sensors 19, 3667 (2019).

Adedoja, A., Owolawi, P. A. & Mapayi, T. Deep learning based on nasnet for plant disease recognition using leave images. In 2019 International Conference on Advances in Big Data, Computing and Data Communication Systems, 1–5 (IEEE, 2019).

Hemming, S., Zwart, F. D., Elings, A., Petropoulou, A. & Righini, I. Cherry tomato production in intelligent greenhouses-sensors and AI for control of climate, irrigation, crop yield, and quality. Sensors 20, 6430 (2020).

Park, S. & Kim, J. Design and implementation of a hydroponic strawberry monitoring and harvesting timing information supporting system based on nano ai-cloud and iot-edge. Electronics 10, 1400 (2021).

Statista. https://www.statista.com/statistics/264065/global-production-of-vegetables-by-type/ (2020).

Dingley, A. et al. Precision pollination strategies for advancing horticultural tomato crop production. Agronomy 12, 518 (2022).

Sekine, T. et al. Potential of substrate-borne vibration to control greenhouse whitefly Trialeurodes vaporariorum and increase pollination efficiencies in tomato Solanum lycopersicum. J. Pest Sci. 1–12 (2022).

Krizhevsky, A., Sutskever, I. & Hinton, G. E. Imagenet classification with deep convolutional neural networks. Commun. ACM 60, 84–90 (2017).

Raspberry Pi. Teach, learn, and make with Raspberry Pi. https://www.raspberrypi.org/ (2021).

Pan, S. J. & Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 22, 1345–1359 (2010).

Zhuang, F. et al. A comprehensive survey on transfer learning. Proc. IEEE 109, 43–76 (2020).

Acknowledgements

This research was supported by the research program on development of innovative technology grants (JPJ007097) and the development and improvement program of strategic smart agricultural technology grants (JPJ011397) from the Project of the Bio-oriented Technology Research Advancement Institution (BRAIN).

Author information

Contributions

T.H. conceived and designed this research. T.K. analyzed the data and contributed to the literature search. T.H. and T.K. wrote the manuscript. K.E. conducted the experiments. T.O. and T.T. considered the experimental procedure and supported the experiments. H.S. supervised this research. All authors reviewed the manuscript and approved the submission.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hiraguri, T., Kimura, T., Endo, K. et al. Shape classification technology of pollinated tomato flowers for robotic implementation. Sci Rep 13, 2159 (2023). https://doi.org/10.1038/s41598-023-27971-z

DOI: https://doi.org/10.1038/s41598-023-27971-z
