Abstract

A manual assessment of sperm motility requires microscopy observation, which is challenging due to the fast-moving spermatozoa in the field of view. To obtain correct results, manual evaluation requires extensive training. Therefore, computer-aided sperm analysis (CASA) has become increasingly used in clinics. Despite this, more data is needed to train supervised machine learning approaches in order to improve accuracy and reliability in the assessment of sperm motility and kinematics. In this regard, we provide a dataset called VISEM-Tracking with 20 video recordings of 30 seconds (comprising 29,196 frames) of wet semen preparations with manually annotated bounding-box coordinates and a set of sperm characteristics analyzed by experts in the domain. In addition to the annotated data, we provide unlabeled video clips for easy-to-use access and analysis of the data via methods such as self- or unsupervised learning. As part of this paper, we present baseline sperm detection performances using the YOLOv5 deep learning (DL) model trained on the VISEM-Tracking dataset. As a result, we show that the dataset can be used to train complex DL models to analyze spermatozoa.

Background & Summary

Machine learning (ML) is increasingly being used to analyze videos of spermatozoa under a microscope for developing computer-aided sperm analysis (CASA) systems1,2. In the last few years, several studies have investigated the use of deep neural networks (DNNs) to automatically determine specific attributes of a semen sample, such as the proportion of progressive, non-progressive, and immotile spermatozoa3,4,5,6,7. However, a major challenge with using ML for semen analysis is the general lack of data for training and validation. Only a few open labeled datasets exist (Table 1), and most of them focus on still frames of fixed and stained spermatozoa or on very short sequences of sperm for analyzing the morphology of the spermatozoa.

In this paper, we present a multi-modal dataset containing videos of spermatozoa with the corresponding manually annotated bounding boxes (localization) and additional clinical information about the sperm providers from the original study8. This dataset is an extension of our previously published dataset VISEM8, which included videos of spermatozoa labeled with quality metrics following the World Health Organization (WHO) recommendations9.

Several datasets related to spermatozoa have been released. For example, Ghasemian et al.10 published an open sperm dataset called HSMA-DS (Human Sperm Morphology Analysis DataSet) with normal and abnormal sperm. Experts annotated different features, namely vacuole, tail, midpiece, and head abnormality. The presence of an abnormality in each feature was marked in binary notation, with 1 for abnormal and 0 for normal. In total, there are 1,457 sperm for morphology analysis. These sperm images were captured at ×400 and ×600 magnification. The Modified Human Sperm Morphology Analysis Dataset (MHSMA)11 consists of 1,540 cropped images from the HSMA-DS dataset10. This dataset was collected for analyzing different parts of sperm (morphology). The maximum image size in the dataset is 128 × 128 pixels.

The HuSHEM12 and SCIAN-MorphoSpermGS13 datasets consist of images of sperm heads captured from fixed and stained semen smears. The main purpose of these datasets is sperm morphology classification into five categories, namely normal, tapered, pyriform, small, and amorphous. SMIDS14 is another dataset consisting of 3000 images cropped from 200 stained ocular images from 17 subjects aged 19–39 years. Of the 3000 images, 2027 patches were manually annotated as normal or abnormal. The other 973 samples were classified as non-sperm using spatial-based automated features. McCallum et al.15 published a similar dataset of bright-field sperm images from six healthy participants, comprising 1064 cropped images. The main purpose of this dataset is to find correlations between sperm images obtained by bright-field microscopy and sperm DNA quality. However, none of these datasets provide motility or kinematic features of the spermatozoa.

Chen et al.16 introduced a sperm dataset called SVIA (Sperm Videos and Images Analysis dataset), which contains 101 short video clips of 1 to 3 seconds each and the corresponding manually annotated objects. The dataset is divided into three subsets, namely subset-A, B, and C. Subset-A contains 101 video clips (30 FPS) with 125,000 object locations and corresponding categories. Subset-B contains 10 videos with 451 ground truth segmentation masks, and subset-C consists of cropped sperm images for classification into 2 categories (impurity images and sperm images). The provided video clips are very short compared to those in VISEM-Tracking. Our dataset17 contains 7× more annotated video frames. In addition, VISEM-Tracking contains 2.3× more annotated objects than SVIA.

VISEM-Tracking offers annotated bounding boxes and sperm tracking information, making it more valuable for training supervised ML models than the original VISEM dataset8, which lacks these annotations. This additional data enables a variety of research possibilities in both biology (e.g., comparing with CASA tracking) and computer science (e.g., object tracking, integrating clinical and tracking data). Unlike other datasets, VISEM-Tracking’s motility features facilitate sperm identification within video sequences, resulting in a richer and more detailed dataset that supports novel research directions. Potential applications include sperm tracking, classifying spermatozoa based on motility, and analyzing movement patterns. To the best of our knowledge, this is the first open dataset of its kind.

Methods

The videos for this dataset were originally obtained to study overweight and obesity in the context of male reproductive function18,19. In the study, male participants aged 18 years or older were recruited between 2008 and 2013. Further details on the recruitment can be found in8. The study was approved by the Regional Committee for Medical and Health Research Ethics, South East, Norway (REK number: 2008/3957). All participants provided written informed consent and agreed to the publication of the data. The original project was finished in December 2017, and all data was fully anonymized.

The samples to be recorded were placed on a heated microscope stage (37 °C) and examined at 400× magnification using an Olympus CX31 microscope. The videos were recorded by a microscope-mounted UEye UI-2210C camera made by IDS Imaging Development Systems in Germany. According to the WHO recommendations9, a light microscope equipped with phase-contrast optics is necessary for all examinations of unstained preparations of fresh semen. The videos are saved as AVI files. Motility assessment was performed based on the videos following the WHO recommendations9.

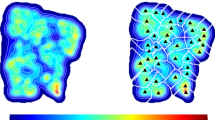

The bounding box annotation was performed by data scientists in close collaboration with researchers in the field of male reproduction. The data scientists labeled each video using the tool LabelBox (https://labelbox.com), and the annotations were then verified by three biologists to ensure that they were correct. In addition to the per-sperm tracking annotation, we provide a label for each spermatozoon, which is one of ‘normal sperm’, ‘pinhead’, and ‘cluster’. The pinhead category consists of spermatozoa with abnormally small black heads within the view of the microscope. The cluster category consists of several spermatozoa grouped together. Sample annotations are presented in Fig. 1. The red boxes represent normal spermatozoa, which constitute the majority of this dataset and are also biologically most relevant. The green boxes represent sperm clusters, where a few spermatozoa are clustered together, making it hard to annotate each sperm separately. The blue boxes represent small or pinhead spermatozoa, which are smaller than normal spermatozoa and have very small heads compared to a normal sperm head.

Fig. 1: Video frames of wet semen preparations with corresponding bounding boxes. Top: large images showing the different classes of bounding boxes (red: sperm, green: sperm cluster, blue: small or pinhead sperm). Bottom: different sperm concentration levels, from high to low (left to right).

Data Records

VISEM-Tracking is available at Zenodo (https://zenodo.org/record/7293726)17 and the license for the data is Creative Commons Attribution 4.0 International (CC BY 4.0). This dataset contains 20 videos (collected from 20 different patients), each with a fixed duration of 30 seconds and the corresponding annotated bounding boxes. The 20 videos were chosen based on how different they are from the other videos in the dataset, in order to obtain as many diverse tracking samples as possible. Since each video in the original dataset lasts longer than 30 seconds, we also provide, in addition to the annotated video clips, the remaining footage as 166 30-second clips from the 20 annotated videos and 336 30-second clips from the videos of the VISEM dataset8 that were not used to provide tracking information. This was done to make the data easy to use in future studies that aim to explore more advanced methods such as semi- or self-supervised learning20.

A length of 30 seconds was chosen to make it easier to annotate and process the video files. These videos can also be used for a possible extension of the tracking data in the future. The splitting process of the long videos is presented in Fig. 2. More details about the dataset itself are summarized in Table 2.

Fig. 2: Splitting videos into 30-second clips. Green represents the clip used to manually annotate spermatozoa with bounding boxes. Orange represents the remaining 30-second clips, which are included in the unlabeled dataset. Purple represents the last part of a video, which is shorter than 30 seconds. We do not include these endings in our dataset, so that all clips are consistently 30 seconds long.
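The splitting rule in the caption can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `split_into_clips` and the example recording length are hypothetical, and the authors' actual splitting script may differ (see the code repository for the real data preparation scripts).

```python
def split_into_clips(total_frames: int, fps: float, clip_seconds: float = 30.0):
    """Return (start_frame, end_frame) pairs for full-length clips only.

    end_frame is exclusive. A trailing segment shorter than clip_seconds
    is dropped, mirroring the purple section described in the caption.
    """
    frames_per_clip = int(round(fps * clip_seconds))
    if frames_per_clip <= 0:
        raise ValueError("fps and clip_seconds must be positive")
    n_clips = total_frames // frames_per_clip
    return [(i * frames_per_clip, (i + 1) * frames_per_clip) for i in range(n_clips)]


# Hypothetical example: a 100 s recording at 50 FPS (5,000 frames).
clips = split_into_clips(total_frames=5000, fps=50)
for start, end in clips:
    print(f"clip covers frames [{start}, {end})")
```

With these example numbers, three full clips are kept and the final 500 frames are discarded because they do not fill a complete 30-second clip.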

The folder containing annotated videos has 20 sub-folders, one per video. Each of these sub-folders has a folder containing the extracted frames of the video, a folder containing the bounding box labels of each frame, and a folder containing the bounding box labels together with the corresponding tracking identifiers. In addition to these, a complete video file (.mp4) is provided in the same folder. All bounding box coordinates are given in the YOLO21 format. The folder containing bounding box details with tracking identifiers has ‘.txt’ files with unique tracking IDs that identify individual spermatozoa throughout the video. It is worth noting that the area of the bounding box of the same sperm changes over time depending on its position and movement in the video, as depicted in Fig. 3. Moreover, the text files contain class labels, 0: normal sperm, 1: sperm clusters, and 2: small or pinhead sperm. Additionally, the sperm_counts_per_frame.csv file provides the per-frame sperm count, cluster count, and small_or_pinhead count.
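As a minimal sketch of how such a label can be read, the following Python snippet converts one line in the standard YOLO layout (`class x_center y_center width height`, all normalized to the image size) into pixel corner coordinates. The helper name `parse_yolo_line`, the example line, and the 640 × 480 frame size are assumptions made for illustration. The files with tracking identifiers carry an extra column whose position is not described here, so they are not handled by this sketch.

```python
from typing import NamedTuple

# Class ids as described in the text.
CLASS_NAMES = {0: "normal sperm", 1: "sperm cluster", 2: "small or pinhead sperm"}


class Box(NamedTuple):
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def parse_yolo_line(line: str, img_w: int, img_h: int) -> Box:
    """Convert one 'class xc yc w h' line (normalized) to pixel corners."""
    fields = line.split()
    class_id = int(fields[0])
    xc, yc, w, h = (float(v) for v in fields[1:5])
    return Box(
        CLASS_NAMES[class_id],
        (xc - w / 2) * img_w,
        (yc - h / 2) * img_h,
        (xc + w / 2) * img_w,
        (yc + h / 2) * img_h,
    )


# Hypothetical label line and frame size, for illustration only.
box = parse_yolo_line("0 0.5 0.5 0.1 0.2", img_w=640, img_h=480)
print(box)
```

Keeping the frame size as a parameter avoids hard-coding a resolution; it should be read from the extracted frames when working with the real data.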

In most of the labeled videos, each frame contains bounding box information (1,470 frames on average per video). The video titled video_23 has 174 frames without spermatozoa. Furthermore, some videos are recorded at different frame rates (videos video_35 and video_52 have 1,440 total frames, and video video_82 has 1,500 total frames). The distribution of the bounding boxes is reflected in Fig. 4a, and the 2D histogram of the height and width of the bounding boxes is shown in Fig. 4b. Fig. 4a shows that the bounding boxes tend to be evenly distributed across the video frames, with a higher concentration of bounding boxes in the upper left of the video frames. According to Fig. 4b, the variation in bounding box size is quite small.

Fig. 4: Statistics about bounding box coordinates and area. (a) 2D histogram of the center coordinates of the bounding boxes of the sperm class. (b) 2D histogram of the center coordinates of the bounding boxes of the cluster class. (c) 2D histogram of the center coordinates of the bounding boxes of the small or pinhead class. (d) 2D histogram of the height and width (normalized values) of the bounding boxes of the sperm class. (e) 2D histogram of the height and width (normalized values) of the bounding boxes of the cluster class. (f) 2D histogram of the height and width (normalized values) of the bounding boxes of the small or pinhead class.

In addition to the bounding box details, several .csv files taken from the VISEM dataset8 are provided with additional information. These files include general information about participants (participant_related_data_Train.csv), the standard semen analysis results (semen_analysis_data_Train.csv), serum levels of sex hormones (sex_hormones_Train.csv: measured from blood samples), serum levels of the fatty acids in the phospholipids (fatty_acids_serum_Train.csv: measured from blood samples), and fatty acid levels of spermatozoa (fatty_acids_spermatoza_Train.csv). The content of these files is summarized in Table 3.

Technical Validation

We divided the 20 videos into a training dataset of 16 videos and a validation dataset of 4 videos (video IDs of the validation dataset are provided in the GitHub repository). The training set was used to train baseline deep learning (DL) models, and the validation dataset was used to evaluate them. YOLOv521 was selected as the baseline sperm detection DNN model. This version of YOLO consists of five different models, namely, YOLOv5n (nano), YOLOv5s (small), YOLOv5m (medium), YOLOv5l (large), and YOLOv5x (XLarge). All models were trained on the training dataset with the number of classes set to 3, corresponding to the normal sperm, cluster, and small or pinhead categories.

In the training process, we provided extracted frames and the corresponding bounding box details to the YOLOv5 models. We set the image size parameter to 640, the batch size to 16, and the number of epochs to 300. All other hyperparameters, such as the learning rate and optimizer, were kept at the default values of YOLOv5 (https://github.com/ultralytics/yolov5). Furthermore, all experiments were performed on two NVIDIA GeForce RTX 3080 graphics processing units (GPUs) with a total of 20 GB memory (10 GB per GPU) and an AMD Ryzen 9 3950X 16-core processor. The best model was selected based on its performance on the validation dataset.
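For readers who want to reproduce a comparable setup, the stated settings map onto the command-line options of the `train.py` script in the YOLOv5 repository. The sketch below only assembles such a command. The dataset file name `visem_tracking.yaml`, the helper `build_train_command`, and the choice to start from the released `yolov5*.pt` checkpoints are assumptions for illustration; the exact invocation used for the baselines is in the code repository.

```python
# Assumption: the five variants are started from the checkpoints that the
# YOLOv5 project publishes under these names.
VARIANTS = ["yolov5n", "yolov5s", "yolov5m", "yolov5l", "yolov5x"]


def build_train_command(variant: str, data_yaml: str) -> list[str]:
    """Assemble a YOLOv5 train.py call with the settings stated in the text."""
    if variant not in VARIANTS:
        raise ValueError(f"unknown YOLOv5 variant: {variant}")
    return [
        "python", "train.py",
        "--imgsz", "640",        # image size parameter
        "--batch-size", "16",    # batch size
        "--epochs", "300",       # number of epochs
        "--data", data_yaml,     # dataset description (3 classes)
        "--weights", f"{variant}.pt",
    ]


# Hypothetical dataset file name; it would list the train/val frame folders
# and the three class names.
commands = [build_train_command(v, "visem_tracking.yaml") for v in VARIANTS]
for cmd in commands:
    print(" ".join(cmd))
```

The commands are printed rather than executed, so the snippet runs without a YOLOv5 checkout; each printed line would be run from within a clone of the YOLOv5 repository.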

Precision, recall, mAP_0.5, mAP_0.5:0.95, and the fitness value, as calculated by Jocher et al.21, were used to measure the performance of the different YOLOv5 models. The results are listed in Table 4, showing that YOLOv5l performs best with a fitness value of 0.0920. The fitness value presented in the table is calculated using the following equation, which is used in the YOLOv5 implementation to compare model performance:

fitness = 0.1 × mAP_0.5 + 0.9 × mAP_0.5:0.95
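The YOLOv5 code base computes fitness as a weighted sum of the metrics in which precision and recall have weight 0 and mAP_0.5 and mAP_0.5:0.95 have weights 0.1 and 0.9. A minimal Python sketch of that calculation and of picking the best model follows. The model names and metric values in it are made up for illustration and are not taken from Table 4.

```python
def fitness(map_50: float, map_50_95: float) -> float:
    """Weighted combination of mAP_0.5 and mAP_0.5:0.95 (weights 0.1 and 0.9)."""
    return 0.1 * map_50 + 0.9 * map_50_95


# Made-up (mAP_0.5, mAP_0.5:0.95) pairs, for illustration only.
candidates = {
    "model_a": (0.20, 0.06),
    "model_b": (0.22, 0.07),
}

# The model with the highest fitness is considered the best one.
best = max(candidates, key=lambda name: fitness(*candidates[name]))
for name, metrics in candidates.items():
    print(f"{name}: fitness = {fitness(*metrics):.4f}")
print("best:", best)
```

Because mAP_0.5:0.95 carries 90% of the weight, a model that localizes boxes more tightly is favored over one that only does well at the loose 0.5 overlap threshold.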

Samples for visual comparisons of predictions from the five models are shown in Fig. 5. These predictions are from the first frame of the selected four validation videos.

Usage Notes

To the best of our knowledge, this is the first dataset containing long video clips of human semen samples (30 seconds with 45–50 FPS) that are manually annotated with bounding boxes for each spermatozoon. The performance of our DL experiments for detecting spermatozoa shows that the training data provided in this dataset is diverse and can be used to train advanced DL models.

The data enables different future research directions. For example, it can be used to prepare more labeled data using strategies like semi-supervised learning. Researchers can use the labeled data to train a DL model (such as YOLOv5) and predict bounding boxes for the unlabeled data. Then, those pseudo-labeled data can be passed to the experts in the domain to verify them. This method can make the data annotation process easier and produce accurate labeled datasets faster than manual annotations.

Sperm tracking is necessary to determine sperm dynamics and motility levels. We provide tracking IDs to identify the same spermatozoon throughout the video. Using this data, one can train sperm tracking algorithms, and the results of the tracking algorithms can help to identify different biomedically relevant parameters such as velocity and kinematics. Additionally, it is difficult to determine which spermatozoa in a semen sample have the highest motility, which is of clinical importance. The dataset can be used to train algorithms for finding the spermatozoa with the highest motility.
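As an illustration of how tracking IDs lead to kinematic parameters, the sketch below computes two quantities commonly reported by CASA systems from the box centers of a single track: curvilinear velocity (path length over time) and straight-line velocity (net displacement over time). It assumes that the centers come from consecutive frames without gaps and that results are in pixels per second, since no pixel-to-micrometer calibration is given here. The function names and the example track are hypothetical.

```python
import math


def curvilinear_velocity(centers, fps):
    """Total path length of a track divided by elapsed time (pixels/s)."""
    if len(centers) < 2:
        return 0.0
    path = sum(math.dist(a, b) for a, b in zip(centers, centers[1:]))
    return path / ((len(centers) - 1) / fps)


def straight_line_velocity(centers, fps):
    """Distance between first and last position divided by elapsed time (pixels/s)."""
    if len(centers) < 2:
        return 0.0
    return math.dist(centers[0], centers[-1]) / ((len(centers) - 1) / fps)


# Hypothetical track: box centers (in pixels) of one tracking ID in three
# consecutive frames of a 50 FPS video.
track = [(0.0, 0.0), (3.0, 4.0), (6.0, 0.0)]
vcl = curvilinear_velocity(track, fps=50)
vsl = straight_line_velocity(track, fps=50)
print(f"VCL = {vcl:.1f} px/s, VSL = {vsl:.1f} px/s")
```

The ratio of the two values indicates how straight the path is, which is one way to separate progressive from non-progressive movement.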

In addition to the sperm tracking annotations, we also provide additional metadata for the sperm samples. Using this data, researchers can train models that combine the metadata with the tracking information to obtain more accurate predictions of, for example, motility levels.

There is also a growing interest in exploring synthetic data to address data deficiencies and the time-consuming and costly process of data annotation in the medical domain22. Researchers can use the dataset to train deep generative models23,24 to generate synthetic data, which can then be used to train other ML models and achieve better generalization. Furthermore, one can use our dataset to train conditional deep generative models25,26 that generate synthetic sperm data with the corresponding ground truth (bounding boxes), reducing the cost of obtaining annotated data.

Another hot topic in AI and medicine is simulating biological organs or creating digital twins. The dataset can, for example, be used to extract features of sperm motility to simulate spermatozoa and their behaviors. Simulations of spermatozoa can potentially lead to more accurate models than current solutions in the field.

Code availability

The code repository with the scripts of data preparations and technical validations (models and pre-trained checkpoints) is available at https://github.com/simulamet-host/visem-tracking. The original YOLOv5 code is available at https://github.com/ultralytics/yolov5.

References

1. Gill, M. E. & Quaas, A. M. Looking with new eyes: advanced microscopy and artificial intelligence in reproductive medicine. Journal of Assisted Reproduction and Genetics 1–5, https://doi.org/10.1007/s10815-022-02693-9 (2022).

2. Riegler, M. A. et al. Artificial intelligence in the fertility clinic: status, pitfalls and possibilities. Human Reproduction 36, 2429–2442, https://doi.org/10.1093/humrep/deab168 (2021).

3. Thambawita, V., Halvorsen, P., Hammer, H., Riegler, M. & Haugen, T. B. Stacked dense optical flows and dropout layers to predict sperm motility and morphology. In Proceedings of the CEUR Multimedia Benchmark Workshop (MediaEval) (2019).

4. Hicks, S. A. et al. Machine learning-based analysis of sperm videos and participant data for male fertility prediction. Scientific Reports 9, 1–10, https://doi.org/10.1038/s41598-019-53217-y (2019).

5. Thambawita, V., Halvorsen, P., Hammer, H., Riegler, M. & Haugen, T. B. Extracting temporal features into a spatial domain using autoencoders for sperm video analysis. In Proceedings of the CEUR Multimedia Benchmark Workshop (MediaEval) (2019).

6. Javadi, S. & Mirroshandel, S. A. A novel deep learning method for automatic assessment of human sperm images. Computers in Biology and Medicine 109, 182–194, https://doi.org/10.1016/j.compbiomed.2019.04.030 (2019).

7. You, J. B. et al. Machine learning for sperm selection. Nature Reviews Urology 18, 387–403, https://doi.org/10.1038/s41585-021-00465-1 (2021).

8. Haugen, T. B. et al. VISEM: A multimodal video dataset of human spermatozoa. In Proceedings of the ACM Multimedia Systems Conference (MMSys), 261–266, https://doi.org/10.1145/3304109.3325814 (2019).

9. World Health Organization. WHO laboratory manual for the examination and processing of human semen https://www.who.int/publications/i/item/9789240030787 (2021).

10. Ghasemian, F., Mirroshandel, S. A., Monji-Azad, S., Azarnia, M. & Zahiri, Z. An efficient method for automatic morphological abnormality detection from human sperm images. Computer Methods and Programs in Biomedicine 122, 409–420, https://doi.org/10.1016/j.cmpb.2015.08.013 (2015).

11. Soroush, J. & Seyed, A. M. A novel deep learning method for automatic assessment of human sperm images. Computers in Biology and Medicine 109, 182–194, https://doi.org/10.1016/j.compbiomed.2019.04.030 (2019).

12. Shaker, F., Monadjemi, S. A., Alirezaie, J. & Naghsh-Nilchi, A. R. A dictionary learning approach for human sperm heads classification. Computers in Biology and Medicine 91, 181–190, https://doi.org/10.1016/j.compbiomed.2017.10.009 (2017).

13. Chang, V., Garcia, A., Hitschfeld, N. & Härtel, S. Gold-standard for computer-assisted morphological sperm analysis. Computers in Biology and Medicine 83, 143–150, https://doi.org/10.1016/j.compbiomed.2017.03.004 (2017).

14. Ilhan, H. O., Sigirci, I. O., Serbes, G. & Aydin, N. A fully automated hybrid human sperm detection and classification system based on mobile-net and the performance comparison with conventional methods. Medical & Biological Engineering & Computing 58, 1047–1068, https://doi.org/10.1007/s11517-019-02101-y (2020).

15. McCallum, C. et al. Deep learning-based selection of human sperm with high DNA integrity. Communications Biology 2, 250, https://doi.org/10.1038/s42003-019-0491-6 (2019).

16. Chen, A. et al. SVIA dataset: A new dataset of microscopic videos and images for computer-aided sperm analysis. Biocybernetics and Biomedical Engineering 42, 204–214, https://doi.org/10.1016/j.bbe.2021.12.010 (2022).

17. Thambawita, V. et al. VISEM-Tracking. Zenodo https://doi.org/10.5281/zenodo.7293726 (2022).

18. Andersen, J. M. et al. Fatty acid composition of spermatozoa is associated with BMI and with semen quality. Andrology 4, 857–865, https://doi.org/10.1111/andr.12227 (2016).

19. Boivin, J., Bunting, L., Collins, J. A. & Nygren, K. G. International estimates of infertility prevalence and treatment-seeking: potential need and demand for infertility medical care. Human Reproduction 22, 1506–1512, https://doi.org/10.1093/humrep/dem046 (2007).

20. Van Engelen, J. E. & Hoos, H. H. A survey on semi-supervised learning. Machine Learning 109, 373–440, https://doi.org/10.1007/s10994-019-05855-6 (2020).

21. Jocher, G. et al. ultralytics/yolov5: v6.2 - YOLOv5 Classification Models, Apple M1, Reproducibility, ClearML and Deci.ai integrations https://doi.org/10.5281/zenodo.7002879 (2022).

22. Thambawita, V. et al. DeepSynthBody: the beginning of the end for data deficiency in medicine. In Proceedings of the IEEE International Conference on Applied Artificial Intelligence (ICAPAI), 1–8, https://doi.org/10.1109/ICAPAI49758.2021.9462062 (2021).

23. Ho, J., Jain, A. & Abbeel, P. Denoising diffusion probabilistic models. In Proceedings of Advances in Neural Information Processing Systems (NeurIPS) 33, 6840–6851 (2020).

24. Goodfellow, I. et al. Generative adversarial networks. Communications of the ACM 63, 139–144, https://doi.org/10.1145/3422622 (2020).

25. Mirza, M. & Osindero, S. Conditional generative adversarial nets. Preprint at arXiv:1411.1784 https://doi.org/10.48550/arXiv.1411.1784 (2014).

26. Sinha, A., Song, J., Meng, C. & Ermon, S. D2C: Diffusion-decoding models for few-shot conditional generation. In Proceedings of Advances in Neural Information Processing Systems (NeurIPS) 34, 12533–12548 (2021).

Acknowledgements

The research presented in this paper has benefited from the Experimental Infrastructure for Exploration of Exascale Computing (eX3), which is financially supported by the Research Council of Norway under contract 270053.

Author information

Contributions

V.T., S.A.H. and M.A.R. conceived and designed the work. V.T., S.A.H., A.S., H.L.H. and M.A.R. prepared and annotated data. J.A., O.W. and T.B.H. reviewed and verified data annotations. V.T. conceived and conducted the deep learning experiment(s). V.T., S.A.H., A.S., T.N. and M.A.R. analysed the data and results. V.T., A.S., S.A.H., T.H., P.H. and M.A.R. prepared the draft of the work. All authors reviewed and revised the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Thambawita, V., Hicks, S.A., Storås, A.M. et al. VISEM-Tracking, a human spermatozoa tracking dataset. Sci Data 10, 260 (2023). https://doi.org/10.1038/s41597-023-02173-4

DOI: https://doi.org/10.1038/s41597-023-02173-4