Abstract

Simultaneous monitoring of the process mean and dispersion, particularly for normal processes, has received considerable attention. In this article, we present a new Bayesian Max-EWMA control chart for tracking the mean and dispersion of a non-normal process simultaneously. This is accomplished through the inverse response function, specifically for cases where the process follows a Weibull distribution. We use the average run length (ARL) and the standard deviation of run length (SDRL) to assess the performance of the proposed control chart, and we compare it with an existing Max-EWMA control chart. The results show that the proposed chart is more sensitive than the existing chart. Finally, we present a case study on the hard-bake process in semiconductor manufacturing to demonstrate the performance of the Bayesian Max-EWMA control chart under different loss functions (LFs) for a Weibull process. The case study illustrates the flexibility of the chart across situations and its ability to quickly identify out-of-control signals during the hard-bake process, which in turn supports better process monitoring and quality control.


Introduction

In statistical quality control, distributions such as the Weibull are commonly used to analyze reliability data, which frequently involve failure times or life-test experiments. Processes that follow these distributions exhibit two primary categories of variation: common cause and assignable cause. Common cause variation occurs naturally and randomly within the process, and a process subject only to it is still considered in control. Assignable cause variation, on the other hand, denotes an out-of-control departure from the typical process state. Control charts (CCs), pioneered by Walter A. Shewhart1 and initially based only on current sample data, serve as tools to detect and address such deviations. Memory-type control charts such as the cumulative sum (CUSUM) and the exponentially weighted moving average (EWMA), introduced by Page2 and Roberts3, respectively, improve sensitivity by incorporating both current and historical data, which is crucial for identifying small to moderate shifts in process parameters. Industries that rely on precision, such as chemicals and manufacturing, use CUSUM and EWMA control charts extensively to detect variations promptly and maintain high product quality. Gan4 evaluated control-charting methods for monitoring the process mean and variance concurrently, revealed limitations in some individual schemes, and proposed a combined scheme using two-sided EWMA charts for the mean and variance that effectively identifies out-of-control situations. That study offers methods to estimate the average run length (ARL) and the percentage points of the run-length distribution for the combined EWMA scheme and also provides a design procedure. Chen et al.5 introduced an EWMA control chart that integrates monitoring of the process mean and variability into a single chart, enabling the detection of both increases and decreases in the mean and/or variability. 
Khoo et al.6 introduced the Max-DEWMA chart, an extension of the Max-EWMA chart that combines two DEWMA statistics, one for the mean and one for the variance, and showed superior performance in detecting small to moderate shifts in the mean and/or variance. Sheu et al.7 developed the Max-GWMA CC for detecting changes in the mean and/or variability simultaneously; their simulations and diagnostic assessments demonstrate heightened sensitivity compared with the Max-EWMA chart. Sanusi et al.8 presented four EWMA schemes for joint monitoring of the mean and variance of a Gaussian process, comparing 'max' and 'distance' combining functions, and found that distance-type schemes identify shifts faster, as illustrated with industrial datasets. Arif et al.9 introduced a CC combining EWMA and the generalized likelihood ratio test for simultaneous mean and dispersion monitoring under double ranked set sampling (RSS). Comparative simulations show superior performance in simultaneous shift detection compared with RSS and PRSS charts, and real data applications demonstrate its practical utility. Noor-ul-Amin et al.10 introduced a Max-EWMA CC for joint mean and dispersion monitoring in Weibull-distributed processes using the inverse response function. Their evaluation, using the ARL and SDRL, demonstrated higher sensitivity compared with an existing Max-EWMA CC, illustrated through practical examples. Yang11 introduced an enhanced Qpm MQCAC method aimed at efficient product quality optimization by addressing excessive or inadequate quality. This approach monitors shifts in the process mean and standard deviation, identifies influential factors, and minimizes resource consumption according to green manufacturing (GM) objectives. 
A practical demonstration using a steering knuckle pin example is provided, along with insights into potential future research directions. Chatterjee et al.12 extended the Max-EWMA CC to develop a single Max-GWMA CC for concurrent process mean and variability monitoring. Comparative analysis with Max-EWMA and Max-DEWMA charts revealed the efficiency of the Max-GWMA chart in detecting small shifts in both parameters, demonstrated through practical implementations using real and simulated datasets. Saemian et al.13 addressed concurrent process mean and variability monitoring in SPM with the Max-HEWMAMS CC, mitigating measurement imprecision due to gauge inaccuracies. Employing multiple measurements to reduce measurement errors' impact on chart detection, they highlighted benefits through various out-of-control scenarios, showcasing the negative effect of gauge imprecision using real data. Abbas et al.14 presented Bayesian CUSUM CCs for statistical process control profile monitoring, proving their superiority over rival techniques in an extensive comparative analysis. This highlighted the benefits of Bayesian methods and demonstrated their superiority with case studies and simulations that needed in-depth process parameter data. Erto et al.15 carried out a simulation study assessing semi-empirical Bayesian CCs for tracking Weibull distribution data. They utilized Weibull contour plots and reference data to provide real-world examples to illustrate the effects of changing Weibull parameters through Monte Carlo analysis. Erto et al.16 reported a new Bayesian CC technique that compares two processes by monitoring the ratio of the independent Weibull-distributed quality characteristics' percentiles. This graph demonstrated its performance using extensive simulations and real-world applications in the wood industry, taking into account variables such as shift magnitude, training data quality, and prior information quality. 
A Bayesian modified EWMA CC with four LFs and a conjugate prior was introduced by Aslam and Anwar17, who found it very effective at detecting small to moderate process shifts. Validation included monitoring the reaming process in the mechanical industry and assessing golf ball performance in the sports industry. Lin et al.18 introduced a Bayesian procedure for constructing an EWMA CC that monitors the variance of a distribution-free service process and can handle non-normal and time-varying distributions. Demonstrations with bank service times showed its efficacy in quickly detecting variance shifts. Noor-ul-Amin and Noor19 proposed an adaptive EWMA (AEWMA) CC integrating the Shewhart and EWMA approaches to detect various shifts in the process mean. Bayesian theory with LFs and informative priors was employed and validated through Monte Carlo simulations and real-data examples. Yazdi et al.20 developed Bayesian CCs for monitoring multivariate linear profiles using regression models, which outperformed non-Bayesian counterparts on ARL criteria. They introduced a historical data-driven informative prior method and demonstrated its applicability through extensive simulations. Khan et al.21 introduced a Bayesian AEWMA CC incorporating RSS designs, the squared error loss function (SELF), the Linex loss function (LLF), and an informative prior. Monte Carlo simulations validated its sensitivity in detecting mean shifts, exemplified in a semiconductor fabrication process. Furthermore, Khan et al.22 introduced a Bayesian HEWMA CC using RSS schemes with informative priors and various LFs, showing enhanced sensitivity in detecting out-of-control signals. The present article focuses on Bayesian methods for joint monitoring of the mean and variance, specifically for lifetime data. It introduces a Bayesian Max-EWMA CC for simultaneous monitoring of the mean and variance in Weibull processes, evaluated through Monte Carlo simulations. 
The remainder of the article is organized as follows. Bayesian theory and the LFs are described in “Bayesian approach”, and the proposed method is developed in “Proposed Bayesian Max-EWMA CC for joint monitoring of the Weibull distribution”. Simulation results are discussed in “Results and discussion”, and the key findings are summarized in “Main findings”. An application to real-life data is given in “Real data application”, followed by concluding remarks in “Conclusion”.

Bayesian approach

The Bayesian approach uses probability theory to model and analyze uncertainty, treating model parameters as random variables with associated probability distributions. It incorporates prior beliefs and updates them with observed data, resulting in posterior probability distributions. The key steps are defining prior distributions, specifying the likelihood function for the data-generating process, combining the prior and likelihood to obtain the posterior, and performing Bayesian inference. This iterative method provides a coherent framework, is robust to outliers and small samples, is flexible, integrates prior knowledge, allows for continuous improvement with new data, and quantifies uncertainty. Although it can be computationally demanding for complex models and requires prior assumptions, it is widely used in areas such as scientific research, data science, and machine learning. Consider a random variable V representing lifetimes, and assume that it follows a Weibull distribution with shape parameter \(\lambda\) and scale parameter \(\alpha\), both greater than zero (\(\lambda > 0\), \(\alpha > 0\)). The probability density function (pdf) and cumulative distribution function (cdf) are as follows:

\[ f\left( {v|\alpha ,\lambda } \right) = \frac{\lambda }{\alpha }\left( {\frac{v}{\alpha }} \right)^{\lambda - 1} \exp \left[ { - \left( {\frac{v}{\alpha }} \right)^{\lambda } } \right],\quad v > 0 \]

\[ F\left( {v|\alpha ,\lambda } \right) = 1 - \exp \left[ { - \left( {\frac{v}{\alpha }} \right)^{\lambda } } \right],\quad v > 0 \]

Squared error loss function

The squared error loss function (SELF), which is closely related to the mean squared error (MSE), measures the discrepancy between estimated and actual values and is central to both Bayesian inference and decision theory. In Bayesian modeling, predictions and parameter estimates are represented as probability distributions. The squared error loss squares the difference between the estimate and the true value, which penalizes larger discrepancies more severely. Minimizing the expected squared error loss under the posterior distribution yields point estimates or predictive summaries. This loss function is widely used in Bayesian applications, especially for continuous variables, and it helps assess the quality of predictions and parameter estimates by considering both the uncertainty of an estimate and its proximity to the actual value. The SELF was endorsed by Gauss 23. For a variable of interest X and an estimator \(\hat{\theta }\) of an unknown population parameter \(\theta\), it is expressed as follows:

\[ L\left( {\theta ,\hat{\theta }} \right) = \left( {\theta - \hat{\theta }} \right)^{2} \]

The Bayes estimator under SELF is the posterior mean:

\[ \hat{\theta }_{(SELF)} = E_{\theta |x} \left( \theta \right) \]

Linex loss function

The Linex (linear-exponential) loss function (LLF) used in Bayesian analysis measures the cost of estimation errors by combining a linear and an exponential component. Because it penalizes overestimation and underestimation differently, it is suited to applications in which the two kinds of error have different consequences. Varian 24 proposed the LLF to mitigate risks in Bayes estimation. With \(C^{\prime} \ne 0\) denoting the constant that controls the direction and degree of asymmetry, the LLF is described as

\[ L\left( {\theta ,\hat{\theta }} \right) = e^{{C^{\prime}\left( {\hat{\theta } - \theta } \right)}} - C^{\prime}\left( {\hat{\theta } - \theta } \right) - 1 \]

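Under LLF, the Bayesian estimator \(\hat{\theta }\) is given by

\[ \hat{\theta }_{(LLF)} = - \frac{1}{{C^{\prime}}}\ln E_{\theta |x} \left( {e^{{ - C^{\prime}\theta }} } \right) \]

where \(C^{\prime}\) is the LLF constant.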

Proposed Bayesian Max-EWMA CC for joint monitoring of the Weibull distribution

Suppose that random samples \(V_{i1} ,V_{i2} , \ldots ,V_{in}\) are drawn from a Weibull distribution \(W(\alpha ,\lambda )\) at successive time points i = 1, 2, 3, …. Typically, the parameters \(\alpha\) and \(\lambda\) are not known in advance and must be estimated from historical data collected while the process is in control. Let us denote the estimated scale and shape parameters as \(\alpha_{0}\) and \(\lambda_{0}\), respectively. These estimates enter a relationship between the Weibull and standard normal distributions, as given by Faraz et al. 25 in Eq. (7) as follows:

where the mean and variance are given by

And

Equations (8) and (9) describe how shifts in the parameters of the Weibull distribution change the mean and variance of the transformed variable, which follows a standard normal distribution when the process is in control.

Consider the transformed samples \(z_{i1} = W_{N} \left( {V_{i1} ,\alpha_{0} ,\lambda_{0} } \right)\), \(z_{i2} = W_{N} \left( {V_{i2} ,\alpha_{0} ,\lambda_{0} } \right)\), …, \(z_{in} = W_{N} \left( {V_{in} ,\alpha_{0} ,\lambda_{0} } \right)\). Each sample has size n and is transformed from the Weibull to the normal scale. After this transformation, we introduce the Max-EWMA control chart, which uses Bayesian theory to monitor the mean and variance of a normally distributed process concurrently.
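The Weibull-to-normal step can be illustrated with a short sketch. It assumes that \(W_{N}\) is the probability integral transform, that is, the in-control Weibull cdf followed by the inverse standard normal cdf; this is one common way to realize such a relationship and may differ in detail from Eq. (7). The function names are hypothetical, and the in-control values W(1, 1.5) are taken from the simulation settings reported later.

```python
import math
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal, used for its inverse cdf


def weibull_cdf(v, scale, shape):
    """Weibull cdf F(v) = 1 - exp(-(v / scale) ** shape) for v > 0."""
    return 1.0 - math.exp(-((v / scale) ** shape))


def weibull_to_normal(v, scale0, shape0):
    """Map a Weibull observation to the normal scale (assumed form of W_N)."""
    u = weibull_cdf(v, scale0, shape0)
    # Keep u strictly inside (0, 1) so that inv_cdf is defined.
    u = min(max(u, 1e-12), 1.0 - 1e-12)
    return STD_NORMAL.inv_cdf(u)


# Illustration with simulated in-control data: scale 1, shape 1.5.
rng = random.Random(0)
sample = [rng.weibullvariate(1.0, 1.5) for _ in range(5)]  # (scale, shape)
z = [weibull_to_normal(v, 1.0, 1.5) for v in sample]
```

When the process is in control, the transformed values behave like draws from N(0, 1); a shift in the scale or shape parameter shows up as a change in their mean and variance.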

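In a Bayesian framework, when both the likelihood function and the prior distribution are normal, the posterior distribution is also normal, with mean \(\theta_{n}\) and variance \(\delta_{n}^{2}\). The pdf is as follows:

\[ p\left( {\theta |z} \right) = \frac{1}{{\sqrt {2\pi \delta_{n}^{2} } }}\exp \left[ { - \frac{{\left( {\theta - \theta_{n} } \right)^{2} }}{{2\delta_{n}^{2} }}} \right] \]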

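where \(\theta_{n} = \frac{{n\overline{z}\delta_{0}^{2} + \delta^{2} \theta_{0} }}{{\delta^{2} + n\delta_{0}^{2} }}\) and \(\delta_{n}^{2} = \frac{{\delta^{2} \delta_{0}^{2} }}{{\delta^{2} + n\delta_{0}^{2} }}\) are the posterior mean and variance, respectively. Here \(\overline{z}\) is the sample mean, \(\delta^{2}\) is the process variance, and \(\theta_{0}\) and \(\delta_{0}^{2}\) are the prior mean and variance.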
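As a small numerical illustration, the sketch below computes the posterior mean and variance and the corresponding Bayes estimates of the mean under the two LFs. It assumes a normal likelihood with known variance and a normal prior; the function names and the example numbers are hypothetical. The LLF estimate is also recomputed from its general definition, \(- \frac{1}{{C^{\prime}}}\ln E\left( {e^{{ - C^{\prime}\theta }} } \right)\), to show that it reduces to \(\theta_{n} - \frac{{C^{\prime}}}{2}\delta_{n}^{2}\) for a normal posterior.

```python
import math


def normal_posterior(z_bar, n, delta2, theta0, delta0_2):
    """Posterior mean and variance for a normal likelihood with variance
    delta2 and a normal prior N(theta0, delta0_2)."""
    denom = delta2 + n * delta0_2
    theta_n = (n * z_bar * delta0_2 + delta2 * theta0) / denom
    delta_n2 = (delta2 * delta0_2) / denom
    return theta_n, delta_n2


def bayes_estimate_self(theta_n, delta_n2):
    """Under squared error loss the Bayes estimate is the posterior mean."""
    return theta_n


def bayes_estimate_llf(theta_n, delta_n2, c):
    """Under Linex loss with constant c and a normal posterior."""
    return theta_n - 0.5 * c * delta_n2


def llf_from_mgf(theta_n, delta_n2, c):
    """Same estimate from -(1/c) * ln E[exp(-c * theta)], using the normal
    moment generating function E[exp(-c * theta)]."""
    expectation = math.exp(-c * theta_n + 0.5 * c * c * delta_n2)
    return -math.log(expectation) / c


# Hypothetical numbers: sample mean 0.5 from n = 5, prior N(0, 1), variance 1.
theta_n, delta_n2 = normal_posterior(0.5, 5, 1.0, 0.0, 1.0)
estimate_self = bayes_estimate_self(theta_n, delta_n2)
estimate_llf = bayes_estimate_llf(theta_n, delta_n2, c=1.0)
```

A positive constant pulls the LLF estimate below the posterior mean and a negative one pushes it above, which is how the asymmetry of the loss enters the chart.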

To create a Max-EWMA chart using Bayesian methodology, we begin by selecting a sample of n values for a quality characteristic Z from the production process. Subsequently, we compute transformed statistics under SELF for both the mean and variance as follows

and

where \(\hat{\theta }_{(SELF)} = \frac{{n\overline{z}\delta_{0}^{2} + \delta^{2} \theta_{0} }}{{\delta^{2} + n\delta_{0}^{2} }}\) and \(\hat{\delta }_{(SELF)}^{2} = \frac{{\delta^{2} \delta_{0}^{2} }}{{\delta^{2} + n\delta_{0}^{2} }}\) are the Bayesian estimators under SELF for the population mean and variance, respectively. Under LLF, the Bayesian estimators for the population mean and variance are \(\hat{\theta }_{(LLF)} = \frac{{n\overline{z}\delta_{0}^{2} + \delta^{2} \theta_{0} }}{{\delta^{2} + n\delta_{0}^{2} }} - \frac{{C^{\prime}}}{2}\delta_{n}^{2}\) and \(\hat{\delta }_{(LLF)}^{2} = \frac{{\delta^{2} \delta_{0}^{2} }}{{\delta^{2} + n\delta_{0}^{2} }}\). The transformed statistics under LLF for the process mean and variance are:

and

where \(H\left( {n,\nu } \right)\) is the chi-square distribution function with \(\nu\) degrees of freedom, and \(\phi^{ - 1}\) denotes the inverse of the standard normal distribution function. The EWMA statistics for the process mean and variance are computed as follows:

In this context, \(P_{0}\) and \(Q_{0}\) are the initial values of the EWMA sequences Pt and Qt, respectively, and \(\lambda\) (a constant in the range (0, 1]) is the smoothing constant, which here is distinct from the Weibull shape parameter. Pt and Qt are mutually independent because the transformed mean and variance statistics on which they are built are independent. For an in-control process, both Pt and Qt follow normal distributions with mean zero and variances \(\delta_{{P_{t} }}^{2}\) and \(\delta_{{Q_{t} }}^{2}\), respectively, defined as follows

The plotting statistic of the Bayesian Max-EWMA chart for joint monitoring, based on \(P_{t(LF)}\) and \(Q_{t(LF)}\), is defined as

for \(t = 1,2, \ldots\)

Because the Bayesian Max-EWMA statistic is non-negative, only an upper control limit (UCL) needs to be plotted for jointly monitoring the process mean and variance. If the plotting statistic \(A_{t}\) falls within the UCL, the process is in control; if \(A_{t}\) exceeds the UCL, the process is out of control.
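The recursion and the decision rule can be sketched in a few lines. This is a minimal illustration that assumes the standard Max-EWMA form: each EWMA is updated as (1 − λ) times its previous value plus λ times the new transformed statistic, both share the exact time-varying variance λ/(2 − λ)·[1 − (1 − λ)^(2t)], and the plotted value is the larger of the two standardized absolute EWMAs. The function names are hypothetical, and `u_seq` and `v_seq` stand for the transformed mean and variance statistics under whichever LF is used.

```python
import math


def max_ewma(u_seq, v_seq, smooth, p0=0.0, q0=0.0):
    """Return the Max-EWMA plotting statistics A_1, A_2, ... (assumed form)."""
    if not 0.0 < smooth <= 1.0:
        raise ValueError("smoothing constant must lie in (0, 1]")
    p, q = p0, q0
    stats = []
    for t, (u, v) in enumerate(zip(u_seq, v_seq), start=1):
        p = (1.0 - smooth) * p + smooth * u  # EWMA for the mean
        q = (1.0 - smooth) * q + smooth * v  # EWMA for the variance
        # Exact in-control standard deviation of both EWMAs at time t.
        sd_t = math.sqrt(smooth / (2.0 - smooth)
                         * (1.0 - (1.0 - smooth) ** (2 * t)))
        stats.append(max(abs(p), abs(q)) / sd_t)
    return stats


def first_signal(stats, ucl):
    """Index (starting at 1) of the first point above the UCL, or None."""
    for t, a_t in enumerate(stats, start=1):
        if a_t > ucl:
            return t
    return None


# Hypothetical inputs: a stable mean statistic and a drop in the variance one.
a = max_ewma([1.0, 1.0], [0.0, -3.0], smooth=0.10)
signal_at = first_signal(a, ucl=2.0)
```

Because the statistic takes a maximum of absolute values, a signal alone does not say whether the mean or the variance has moved; comparing the two standardized EWMAs at the signalling point is the usual diagnostic.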

Results and discussion

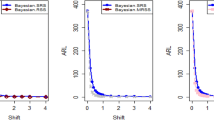

Tables 1, 2, 3 and 4 present the results obtained by applying the Bayesian Max-EWMA CC to the Weibull process. The study examines the influence of two LFs applied to the posterior distribution, under informative priors that bring prior knowledge into the analysis. To obtain reliable estimates, 50,000 replicates are used to calculate both the ARL and SDRL. The smoothing constant λ is set to 0.10 and 0.25 so that the performance of the chart can be evaluated under different conditions. The study covers an extensive range of combinations, with variance shift values (b) from 0.25 to 3.00 and mean shift values (a) from 0.00 to 3.00, which allows the joint monitoring of the process mean and variance to be evaluated comprehensively. The results show how sensitively the method detects deviations from the in-control state, which demonstrates its potential as a useful and trustworthy tool for continuous quality control and monitoring in a variety of industrial environments. In each simulation run, the process is regarded as in control while the plotting statistic Si remains below the UCLi. Each run ends when the plotting statistic exceeds the UCLi, indicating a change in the process mean and/or standard deviation. These changes correspond to changes in the parameters of the Weibull distribution.

In particular, we analyze shifts in the scale parameter ranging from 0.0 to 5.00 and shifts in the shape parameter ranging from 0.25 to 4.00. The in-control process follows the Weibull distribution W(1, 1.5), which corresponds to the normal distribution N(0, 1) after transformation. The in-control ARL (ARL0) for both values of the smoothing constant, λ = 0.10 and 0.25, is 370. The results in Tables 1 and 2 provide strong evidence for the effectiveness of the Bayesian Max-EWMA CC under the SELF. Maintaining process stability and product quality depends on the ability of the chart to detect shifts in both the process mean and variance simultaneously. The ARLs decrease steadily as the magnitude of the mean shift increases, and they likewise decrease as the variance shift grows. These trends indicate that the Bayesian Max-EWMA CC detects process changes in a timely manner, which supports process control and early intervention and makes the chart a valuable tool for monitoring production processes. For example, consider the ARL results in the tables. The ARL values for the shifts considered are as follows: 369.15, 80.19, 24.05, 13.71, 10.14, 8.16, 7.12, 4.88, and 4.13. The ARL values decrease noticeably with increasing shift magnitude. This result shows the extent to which the proposed Bayesian Max-EWMA CC can be used to quickly identify changes in the shape parameter.

The ability of the CC to detect even small deviations in the process mean quickly suggests that it is very sensitive, so such changes can be responded to promptly, which is critical to maintaining the consistency and quality of the process. The effects of changing the shape parameter from a = 1.50 to 4.00 while holding the scale parameter fixed are similar. The resulting ARL values are as follows: 369.15, 24.91, 16.92, 7.06, 5.80, 3.60, 2.60, 2.11, and 1.50. These values show a clear trend: the ARL declines sharply as the shape parameter moves away from its baseline value. This pattern highlights how well the proposed Bayesian Max-EWMA CC performs in quickly identifying changes in process variance. Moreover, Table 2 shows that the CC becomes less effective as the smoothing constant increases, which suggests that a lower smoothing constant may be preferable in some scenarios. Similarly, Tables 3 and 4 present the ARL outcomes of the Bayesian Max-EWMA CC using the LLF with λ = 0.25 and n = 5. For shifts in the shape parameter ranging from 1.50 to 5.00 with the scale parameter fixed at 1.0, the resulting ARL values were 370.09, 20.34, 6.34, 2.81, 1.99, 1.57 and 1.17. As the magnitude of the process shift increases, the ARL values decrease rapidly, underscoring the ability of the proposed Max-EWMA CC to detect shifts in both the process mean and variance swiftly.

Finally, the efficiency of the proposed CC for the simultaneous monitoring of the process mean and variance is influenced by the sample size. Across all the tables, a consistent pattern emerges: as the sample size increases, the corresponding ARL values decrease, indicating that the proposed CC identifies deviations from the expected process parameters more promptly. The ARLs and SDRLs were calculated using the following simulation steps.

Step 1: Establishing the control limits

i. Set the initial control limits by choosing values for the UCL and λ.

ii. Generate a random sample of size n from the in-control process, using normal distributions.

iii. Calculate the plotting statistic of the proposed control chart.

iv. Verify whether the plotting statistic lies within the UCL; if so, repeat steps (ii–iii).

Step 2: Assessing the out-of-control average run length (ARL)

i. Generate a random sample reflecting a shifted process.

ii. Calculate the plotting statistic of the proposed control chart.

iii. If the plotting statistic falls within the UCL, repeat steps (i–ii). Otherwise, record the number of points generated, which is a single out-of-control run length.

iv. Repeat steps (i–iii) 50,000 times to obtain the out-of-control ARL1 and SDRL1.
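The steps above can be sketched as a small Monte Carlo routine. This is a simplified stand-in, not the simulation code behind the tables: it works directly on the normal scale, uses the classical (non-Bayesian) transformed mean and variance statistics in place of the Bayes estimators, fixes n = 5 so that the chi-square distribution function with 4 degrees of freedom has a closed form, and treats the UCL as given. The names `run_length` and `arl_sdrl`, and the interpretation of the variance shift as a multiplier on the standard deviation, are assumptions.

```python
import math
import random
from statistics import NormalDist, fmean, pstdev

STD_NORMAL = NormalDist()
N = 5  # sample size; the closed-form cdf below is specific to N - 1 = 4


def chi2_cdf_df4(x):
    """Chi-square cdf with 4 degrees of freedom: 1 - exp(-x/2) * (1 + x/2)."""
    if x <= 0.0:
        return 0.0
    half = x / 2.0
    return 1.0 - math.exp(-half) * (1.0 + half)


def run_length(ucl, smooth, mean_shift, sd_scale, rng, max_steps=100_000):
    """Number of samples drawn until the plotting statistic exceeds the UCL."""
    p = q = 0.0
    for t in range(1, max_steps + 1):
        sample = [rng.gauss(mean_shift, sd_scale) for _ in range(N)]
        mean = sum(sample) / N
        s2 = sum((x - mean) ** 2 for x in sample) / (N - 1)
        u = math.sqrt(N) * mean  # transformed mean statistic
        prob = min(max(chi2_cdf_df4((N - 1) * s2), 1e-12), 1.0 - 1e-12)
        v = STD_NORMAL.inv_cdf(prob)  # transformed variance statistic
        p = (1.0 - smooth) * p + smooth * u
        q = (1.0 - smooth) * q + smooth * v
        sd_t = math.sqrt(smooth / (2.0 - smooth)
                         * (1.0 - (1.0 - smooth) ** (2 * t)))
        if max(abs(p), abs(q)) / sd_t > ucl:
            return t
    return max_steps  # censored run; not expected for reasonable UCL values


def arl_sdrl(ucl, smooth, mean_shift=0.0, sd_scale=1.0, reps=1000, seed=1):
    """Estimate the ARL and SDRL from `reps` simulated run lengths."""
    rng = random.Random(seed)
    lengths = [run_length(ucl, smooth, mean_shift, sd_scale, rng)
               for _ in range(reps)]
    return fmean(lengths), pstdev(lengths)


# Illustrative call only; this UCL is not tuned to give ARL0 = 370.
arl0, sdrl0 = arl_sdrl(ucl=2.5, smooth=0.10, reps=200)
arl1, sdrl1 = arl_sdrl(ucl=2.5, smooth=0.10, mean_shift=1.0, reps=200)
```

In practice Step 1 amounts to a search: the UCL is adjusted and the in-control run repeated until the estimated ARL0 is close to the target, after which the same UCL is used for every shifted scenario in Step 2.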

Main findings

The main findings of the current study are given below:

- The run length profiles in all four tables show that the proposed Max-EWMA CC for the Weibull process is effective for simultaneously monitoring the process mean and variance, especially for small to moderate shifts. The consistent trend across the tables indicates that the CC promptly detects deviations in both the process mean and variance, making it a valuable tool for maintaining process quality and consistency.

- The simulation results show that the performance of the proposed Bayesian CC improves as the smoothing constant decreases: with a smaller smoothing constant, the CC is more sensitive to shifts in the process mean and variance. Choosing a lower smoothing constant may therefore, in some applications, improve monitoring and allow faster identification of deviations from the expected process parameters.

- Sample size is another important factor. The efficiency of the proposed Bayesian Max-EWMA CC improves notably as the sample size increases. In practical terms, the CC detects changes in the process mean and variance more quickly when larger samples are used, which leads to more reliable process monitoring and better overall process quality and consistency.

Real data application

Proposed CCs are commonly demonstrated on real datasets as well as simulated scenarios. In this context, we analyze a real-life dataset to showcase the capabilities of the proposed CC. Monitoring the tensile strength of fibrous composites is crucial in industries that must ensure material safety, such as aerospace and bridge construction. We therefore examine the real-life dataset referenced in 26, which gives the breaking strengths of carbon fibers used in manufacturing these composite materials, as detailed in Table 5. The data come from research conducted at the U.S. Army Materials Technology Laboratory in Watertown, Massachusetts. The dataset consists of 20 samples, each of size n = 5, and follows the Weibull distribution with scale parameter η = 2.9437 and shape parameter θ = 2.7929. Initially, 15 random samples of size n = 5 are drawn without replacement and treated as in-control samples. The dataset is then altered by adding 1 to each observation, and 10 random samples of size n = 5 are drawn without replacement from the altered data and treated as out-of-control samples. Both charts are employed to monitor variations in the process mean, and the resulting computations are presented in Table 6.
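The construction of the monitoring sequence can be sketched as follows. The values below are simulated placeholders drawn from a Weibull distribution with the reported scale and shape, not the Table 5 observations, and the helper name `draw_samples` and the random seed are hypothetical.

```python
import random


def draw_samples(values, n_samples, n, rng):
    """Draw n_samples groups of size n from values without replacement."""
    picked = rng.sample(values, n_samples * n)
    return [picked[i * n:(i + 1) * n] for i in range(n_samples)]


rng = random.Random(2024)

# Placeholder data standing in for the 20 x 5 breaking-strength values.
strengths = [rng.weibullvariate(2.9437, 2.7929) for _ in range(100)]

# 15 in-control samples of size 5 from the original values.
in_control = draw_samples(strengths, 15, 5, rng)

# Add 1 to every observation, then draw 10 out-of-control samples of size 5.
shifted = [x + 1.0 for x in strengths]
out_of_control = draw_samples(shifted, 10, 5, rng)

# The chart is applied to the 25 samples in this order.
monitoring_sequence = in_control + out_of_control
```

With this layout the first 15 points on the chart are in control by construction, so any signal is expected among samples 16 to 25.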

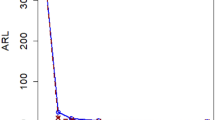

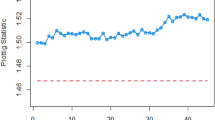

Figures 1 and 2 provide a visual representation of the implementation of the provided Bayesian Max-EWMA CC, designed for the simultaneous monitoring of both process mean and dispersion. This monitoring employs both the SELF and LLF approaches. A thorough examination of these charts reveals clear signals indicating that the process has gone out of control in the 23rd and 21st samples, especially when considering a smoothing constant value of 0.10. The identified departure from the normal process state, resulting in an out-of-control scenario, can be ascribed to two main factors: alterations in either the process mean or variance. These alterations stem from modifications in the shape and scale parameters of the Weibull distribution, thereby influencing the distribution's properties and giving rise to the observed deviations.

Conclusion

In this study, we introduce a Bayesian Max-EWMA CC for the Weibull process, designed for the simultaneous monitoring of the process mean and variance. The CC incorporates informative prior distributions and two distinct LFs applied to the posterior distribution. Its performance was evaluated through an extensive simulation study using the ARL and SDRL, with results presented in Tables 1, 2, 3 and 4. We also conducted a practical case study focused on the hard-bake process in semiconductor manufacturing, in which the proposed Bayesian Max-EWMA CC performed well in identifying out-of-control signals. The knowledge gained from this research could be applied to the construction of other memory-type CCs, improving their efficacy in a variety of industrial applications. Extending the method to other types of CC and to other non-normal distributions could lead to a more thorough understanding of the underlying data patterns. Such broader application would support the early detection of potential quality issues in different domains and allow swift corrective action, thereby reducing the risk of costly errors and defects. In healthcare, for example, the method could help spot irregularities in patient data quickly and enable prompt intervention. In manufacturing, it could help identify variations in the production process, ultimately improving product quality and reducing waste.

Data availability

The datasets used or analyzed in this study are not publicly available but can be obtained from the corresponding author upon reasonable request.

References

Shewhart, W. A. The application of statistics as an aid in maintaining quality of a manufactured product. J. Am. Stat. Assoc. 20(152), 546–548 (1925).

Page, E. S. Continuous inspection schemes. Biometrika 41(1/2), 100–115 (1954).

Roberts, S. Control chart tests based on geometric moving averages. Technometrics 42(1), 97–101 (1959).

Gan, F. Joint monitoring of process mean and variance using exponentially weighted moving average control charts. Technometrics 37(4), 446–453 (1995).

Chen, G., Cheng, S. W. & Xie, H. Monitoring process mean and variability with one EWMA chart. J. Qual. Technol. 33(2), 223–233 (2001).

Khoo, M. B., Teh, S. & Wu, Z. Monitoring process mean and variability with one double EWMA chart. Commun. Stat. Theory Methods 39(20), 3678–3694 (2010).

Sheu, S.-H., Huang, C.-J. & Hsu, T.-S. Extended maximum generally weighted moving average control chart for monitoring process mean and variability. Comput. Ind. Eng. 62(1), 216–225 (2012).

Sanusi, R. A., Mukherjee, A. & Xie, M. A comparative study of some EWMA schemes for simultaneous monitoring of mean and variance of a Gaussian process. Comput. Ind. Eng. 135, 426–439 (2019).

Arif, F., Noor-ul-Amin, M. & Hanif, M. Joint monitoring of mean and variance under double ranked set sampling using likelihood ratio test statistic. Commun. Stat.-Theory Methods 51(17), 6032–6048 (2022).

Noor-ul-Amin, M., Aslam, I. & Feroze, N. Joint monitoring of mean and variance using Max-EWMA for Weibull process. Commun. Stat.-Simul. Comput. 52(7), 3257–3272 (2023).

Yang, C.-M. An improved multiple quality characteristic analysis chart for simultaneous monitoring of process mean and variance of steering knuckle pin for green manufacturing. Qual. Eng. 33(3), 383–394 (2021).

Chatterjee, K., Koukouvinos, C., Lappa, A. & Roupa, P. A joint monitoring of the process mean and variance with a generally weighted moving average maximum control chart. Commun. Stat.-Simul. Comput. 4, 1–21 (2023).

Saemian, M., Maleki, M. R. & Salmasnia, A. Performance of Max-HEWMAMS control chart for simultaneous monitoring of process mean and variability in the presence of measurement errors. Int. J. Appl. Decis. Sci. 16(2), 165–188 (2023).

Abbas, T., Ahmad, S., Riaz, M. & Qian, Z. A Bayesian way of monitoring the linear profiles using CUSUM control charts. Commun. Stat.-Simul. Comput. 48(1), 126–149 (2019).

Erto, P., Pallotta, G., Palumbo, B. & Mastrangelo, C. M. The performance of semi-empirical Bayesian control charts for monitoring Weibull data. Qual. Technol. Quant. Manag. 15(1), 69–86 (2018).

Erto, P., Lepore, A., Palumbo, B. & Vanacore, A. A Bayesian control chart for monitoring the ratio of Weibull percentiles. Qual. Reliab. Eng. Int. 35(5), 1460–1475 (2019).

Aslam, M. & Anwar, S. M. An improved Bayesian modified-EWMA location chart and its applications in mechanical and sport industry. PLoS ONE 15(2), e0229422 (2020).

Lin, C.-H., Lu, M.-C., Yang, S.-F. & Lee, M.-Y. A Bayesian control chart for monitoring process variance. Appl. Sci. 11(6), 2729 (2021).

Noor-ul-Amin, M. & Noor, S. An adaptive EWMA control chart for monitoring the process mean in Bayesian theory under different loss functions. Qual. Reliab. Eng. Int. 37(2), 804–819 (2021).

Ahmadi Yazdi, A., Shafiee Kamalabad, M., Oberski, D. L. & Grzegorczyk, M. Bayesian multivariate control charts for multivariate profiles monitoring. Qual. Technol. Quant. Manag. 7, 1–36 (2023).

Khan, I., Noor-ul-Amin, M., Khan, D. M., AlQahtani, S. A. & Sumelka, W. Adaptive EWMA control chart using Bayesian approach under ranked set sampling schemes with application to Hard Bake process. Sci. Rep. 13(1), 9463 (2023).

Khan, I. et al. Hybrid EWMA control chart under Bayesian approach using ranked set sampling schemes with applications to hard-bake process. Appl. Sci. 13(5), 2837 (2023).

Gauss, C. Methods Moindres Carres Memoire sur la Combination des Observations. Vol. 1810. Translated by J. Bertrand (1955).

Varian, H. R. A Bayesian approach to real estate assessment. In Studies in Bayesian Econometrics and Statistics in Honor of Leonard J. Savage. 195–208 (1975).

Faraz, A., Saniga, E. M. & Heuchenne, C. Shewhart control charts for monitoring reliability with Weibull lifetimes. Qual. Reliab. Eng. Int. 31(8), 1565–1574 (2015).

Pascual, F. & Zhang, H. Monitoring the Weibull shape parameter by control charts for the sample range. Qual. Reliab. Eng. Int. 27(1), 15–25 (2011).

Acknowledgements

This work was supported by Researchers Supporting Project number (RSPD2024R1060), King Saud University, Riyadh, Saudi Arabia.

Author information

Contributions

I.K. and M.N.A. carried out the mathematical analyses and numerical simulations. J.I. and E.A.A.I. conceived the primary concept, analyzed the data, and helped to restructure the manuscript. B.A. and Z.A. validated the findings, revised the manuscript, and secured funding. Z.A. and E.A.A.I. also improved the language of the manuscript and conducted additional numerical simulations. All authors agreed on the final version of the manuscript prepared for submission.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Noor-ul-Amin, M., Khan, I., Iqbal, J. et al. Memory type Max-EWMA control chart for the Weibull process under the Bayesian theory. Sci Rep 14, 3111 (2024). https://doi.org/10.1038/s41598-024-52109-0

DOI: https://doi.org/10.1038/s41598-024-52109-0
