Abstract

In this article, we introduce a novel Bayesian Max-EWMA control chart under various loss functions to concurrently monitor the mean and variance of a normally distributed process. The Bayesian Max-EWMA control chart exhibit strong overall performance in detecting shifts in both mean and dispersion across various magnitudes. To evaluate the performance of the proposed control chart, we employ Monte Carlo simulation methods to compute their run length characteristics. We conduct an extensive comparative analysis, contrasting the run length performance of our proposed charts with that of existing ones. Our findings highlight the heightened sensitivity of Bayesian Max-EWMA control chart to shifts of diverse magnitudes. Finally, to illustrate the efficacy of our Bayesian Max-EWMA control chart using various loss functions, we present a practical case study involving the hard-bake process in semiconductor manufacturing. Our results underscore the superior performance of the Bayesian Max-EWMA control chart in detecting out-of-control signals.

Similar content being viewed by others

Introduction

Embedded at the core of industries committed to unparalleled process excellence and quality assurance, Statistical Process Control (SPC) serves as a foundational strategy for achieving process excellence. It is built on meticulous statistical analysis, enabling robust monitoring, analysis, and optimization in alignment with benchmarks. By leveraging data insights, SPC adeptly discerns process variations, facilitating informed decisions and swift interventions. Framed within statistical methodologies, it deftly navigates process intricacies, promoting efficiency, defect reduction, and exceeding customer expectations. Applicable across sectors, SPC principles guide the intricate path of process refinement and continuous enhancement. At its core, the control chart (CC) is a key SPC component, facilitating ongoing monitoring and insightful process data analysis. Plotting data against control limits reveals trends and anomalies, enabling agile interventions for stability and quality assurance. The graphical representation offered by control charts serves as a guiding light in the realm of decision-making, all while nurturing a culture deeply rooted in perpetual process enhancement. Shewhart1 introduced CCs that exclusively employ current sample data to identify substantial variations within production processes. In contrast, memory-type CCs such as cumulative sum (CUSUM) and exponentially weighted moving average (EWMA) CCs were pioneered by Page2 and Roberts3, encompassing both current and historical sample data. It's noteworthy that CUSUM and EWMA CCs demonstrate heightened sensitivity in detecting slight to moderate shifts in process parameters compared to the traditional Shewhart CCs. These memory-type CCs, particularly CUSUM and EWMA, find extensive utilization across diverse domains, prominently in chemical and industrial production processes. Herdiani et al.4 noted that in SPC, the assumption of independent observations frequently becomes invalid, necessitating specialized CCs like Shewhart, CUSUM, and EWMA for correlated data. The inclusion of time series models becomes pivotal due to this correlation. Shewhart's modification for autoregressive processes incorporated the mean-to-target distance relative to the standard deviation of the autocorrelation process. The study assesses the EWMA mean for autocorrelation processes, with a focus on evaluating performance through the ARL utilizing the Markov Chain Method. Gan5 evaluated control-charting schemes for joint monitoring of process mean and variance, exposing their limitations and the risk of individual application. A combined two-sided EWMA CC jointly monitoring of mean and variance demonstrated efficacy across various out-of-control scenarios, with the study presenting a method for approximating average run length (ARL) and run-length distribution percentage points, along with a suggested design procedure for the combined EWMA scheme. Sanusi et al.6 compare four EWMA scheme combinations for jointly identifying Gaussian process mean and variance, addressing parameter estimation via maximum likelihood estimators. Distance-type schemes outperform certain existing methods in identifying slight-to-moderate shifts, supported by computational studies and real industrial data. Haq and Razzaq7 examined MaxWACCUSUM CC using three unbiased estimators for joint monitoring of mean and variance shifts, showing strong performance in detecting various shifts and outperforming existing charts like maximum EWMA and CUSUM, supported by Monte Carlo simulations and real datasets. Arif et al.8 assessed the effects of measurement errors (ME) on a joint monitoring CC using three techniques. They explored the chart's application with EWMA statistics and generalized likelihood ratio tests in ranked set sampling. The study evaluated the chart's performance through simulations involving various shifts, and it was further supported by a real-world data example. Javiad et al.9 investigated the effects of ME on monitoring mean and variance shifts in production processes. They analyzed Max-EMWA CC, employing a covariate model and multiple measurements to counter ME effects. Monte Carlo simulations were utilized to compute ARLs and SDRLs, and a real-world data example was used to validate findings and compare with other chart methods. Yang10 introduced an enhanced quality optimization approach, the Qpm MQCAC, which monitors shifts in process mean and standard deviation, ensuring quality adherence, efficient resource usage, and alignment with green manufacturing objectives. Noor-ul-Amin et al.11 introduced a Max-EWMA CC utilizing the inverse response function for simultaneous monitoring of process mean and dispersion in Weibull-distributed processes, demonstrating increased sensitivity compared to existing Max-EWMA CC, validated through ARL and SDRL metrics, and supported by practical examples. Earlier studies suggest a widespread reliance on conventional techniques that exclusively utilize sample data, often overlooking prior information. On the contrary, the Bayesian method combines sample data with previous knowledge to revise and establish a posterior distribution (P), thereby improving the estimation procedure. Noor-ul-Amin and Noor12 developed a new AEWMA CC for Bayesian process mean monitoring, investigating it under different LFs and informative priors. They conducted a comparative study with existing Bayesian EWMA CCs, utilizing run length as performance metrics, and substantiated their discoveries using Mnote Carlo simulation alongside a real-world data illustrations. Raiz et al.13 studied the Bayesian EWMA CC under three LFs (SELF, LLF, PLF) and diverse informative and non-informative priors. Performance metrics like ARL and SDRL, computed via P distributions, assess the chart's effectiveness. Monte Carlo simulations explore performance across smoothing constants, alongside an illustrative example highlighting practical use cases. Bayesian EWMA CCs for non-normal lifetime distributions, i.e., Exponential, Inverse Rayleigh, and Weibull distributions, are suggested by Noor et al.14. They utilized uniform priors with LFs, evaluating charts using ARL and SDRL. Through simulations, the Weibull-based chart demonstrated superior performance, corroborated by a real data illustration. Noor et al.15 used Bayesian methods to develop a hybrid EWMA CC, considering informative and non-informative priors along with two LFs. They evaluated performance using ARL and SDRL through posterior and predictive posterior distributions. Extensive simulations and a real-data example validated their approach. Ranked set sampling (RSS) is a statistical approach that improves estimating population parameters by ranking items within a population based on specific characteristics and selecting sets or groups of items according to their ranks, rather than through individual random selections. This method helps in scenarios with high measurement costs or significant population variation by reducing estimation variability through the use of entire subsets of items. Utilizing ranked sets enhances the precision of population parameter estimations, potentially providing cost-effective sampling strategies in diverse research or data collection contexts. Khan et al.16 introduce a Bayesian hybrid EWMA CC via RSS with informative priors and different loss functions (LFs). Their simulation-based evaluation using ARL and SDRL highlights its superiority in identifying out-of-control signals in semiconductor manufacturing compared to other Bayesian CCs. Aslam and Anwar17 developed a Bayesian Modified-EWMA CC for process location monitoring, integrating four LFs and a conjugate prior. The chart's effectiveness in detecting small to moderate shifts is demonstrated through performance assessment and real-life instances, including monitoring mechanical reaming and sports industry golf ball performance. Khan et al.18 proposed a novel Bayesian AEWMA CC using RSS and informative prior for mean shift monitoring. Extensive Monte Carlo simulations revealed its improved sensitivity in detecting mean shifts compared to existing Bayesian AEWMA charts based on SRS. Illustrated with a semiconductor fabrication example, it outperformed EWMA and AEWMA CCs using Bayesian approach under SRS for detecting out-of-control signals.

In manufacturing, Bayesian statistics plays a pivotal role, leveraging prior knowledge for informed parameter inferences and maintaining process control by dynamically updating parameters amidst changing conditions. This adaptability is crucial given the intricate nature of manufacturing processes. Bayesian methods excel in handling uncertainty, representing it through probability distributions for effective risk management and decision-making. Their strength lies in continuous monitoring via sequential analysis, enabling early fault detection and corrective actions. Their flexibility accommodates various manufacturing scenarios, integrating seamlessly into control systems for optimization, waste reduction, and quality maintenance. Offering a clear decision-making framework amid uncertainty, Bayesian modeling aids root cause analysis, contributing significantly to improved product quality, efficiency, and overall process control. Additionally, this article introduces a novel Bayesian Max-EWMA CC for simultaneous monitoring of both process mean and variance. The method's performance evaluation involves ARL and SDRL calculations, executed via Monte Carlo simulation techniques. The structure of the article is as follows: In “Bayesian approach” section, we introduce Bayesian theory and various LFs. In “Proposed Bayesian Max-EWMA CC for joint monitoring” section, we discuss the proposed Bayesian Max-EWMA CC method. Following that, "Performance evaluation" section comprises comprehensive discussions and emphasizes key findings, while “Real life data application” section provides practical illustrations of real-life data applications. Finally, “Conclusion” section serves as the conclusion of this article.

Bayesian approach

Bayesian theory, a foundational concept in statistics and probability, provides a unique and powerful framework for making inferences and drawing conclusions from data. Unlike traditional frequentist statistics, where parameters are considered fixed and unknown, Bayesian theory treats these parameters as probability distributions, allowing us to incorporate prior knowledge and update our beliefs as new evidence emerges. These prior distributions can be broadly categorized into two groups: non-informative and informative. Non-informative priors, such as Jeffreys and uniform priors, are commonly used, while informative priors frequently rely on conjugate priors, which are a widely recognized family of distributions. This approach not only offers a flexible and intuitive way to analyze data but also provides valuable insights into uncertainty, making it a fundamental tool in various fields, including science, engineering, and machine learning. Let's examine the study variable X within the confines of a controlled process, delineated by parameters \(\theta\) (mean) and \(\delta^{2}\) (variance). In this scenario, we employ a normal prior, with \(\theta_{0}\) and \(\delta_{0}^{2}\) serving as its associated parameters, defined as follows:

Generate the P distribution, it involves combining the likelihood function from the sample distribution with the prior distribution, forming a proportional relationship via multiplication. Consequently, the resulting P distribution, delineating the unknown parameter \(\theta\) based on the observed data X, can be expressed as follows:

The posterior predictive (PP) distribution is employed to predict future observations by considering the P distribution as prior distribution. It is frequently employed as a prior distribution for new data Y, facilitating predictions for upcoming observations while taking uncertainty into account. An integral component of Bayesian theory, the PP distribution enables the updating of prior distributions with new data. Its mathematical illustration is given below:

Squared error loss functions

In Bayesian estimation, the squared error LF (SELF) is a crucial tool for assessing the accuracy of parameter estimates. It measures the discrepancy between estimated and true values by squaring the difference between them. Bayesian estimation combines prior beliefs and observed data to infer unknown parameters. The SELF penalizes larger estimation errors more severely than smaller ones. The goal is to find the Bayesian posterior mean, minimizing the expected squared error under the posterior distribution. This approach leads to robust estimates, particularly when the posterior is approximately Gaussian. In this study, we employed Gauss's recommended LF19. The SELF, which considers both the variable of interest, denoted as X, and the estimator \(\hat{\theta }\) for the unknown population parameter \(\theta\), is expressed mathematically as follows:

and Bayes estimator utilizing SELF is mathematized as:

Linex loss functions

An asymmetric LF in Bayesian analysis quantifies the penalties for incorrect predictions, unlike a symmetric one that treats errors equally. It assigns different weights to overestimations and underestimations based on their relative costs, incorporating prior beliefs about data distribution and outcomes' costs to improve Bayesian inference precision and efficiency. Varian20 proposed the LLF to mitigate risks in Bayes estimation. The LLF is mathematically described as:

Under LLF, the Bayesian estimator \(\hat{\theta }\) is mathematizied as

Proposed Bayesian Max-EWMA CC for joint monitoring

In this section, we introduce the Max-EWMA Control Chart, which leverages Bayesian theory to concurrently monitor the mean and variance of a normally distributed process. Let X1, X2, … Xn represent independent and identically normally distributed random variables with a mean of θ and a variance of \(\sigma^{2}\). The corresponding probability function is mathematically expressed as:

In a Bayesian framework, if the likelihood function and prior distribution are both normally distributed, the resulting posterior distribution also conforms to a normal distribution, with a mean (θ) and variance (σ). The pdf is as follows:

where \(\theta_{n} = \frac{{n\overline{x} \sigma_{0}^{2} + \sigma^{2} \theta_{0} }}{{\sigma^{2} + n\sigma_{0}^{2} }}\) and \(\sigma_{n}^{2} = \frac{{\sigma^{2} \sigma_{0}^{2} }}{{\sigma^{2} + n\sigma_{0}^{2} }}\) respectively.

To create a Max-EWMA chart using Bayesian methodology, we begin by selecting a sample of n values for a quality characteristic X from the production process. Subsequently, we compute transformed statistics under SELF for both the mean and variance as follows

and

where \(\hat{\theta }_{(SELF)} = \frac{{n\overline{x} \sigma_{0}^{2} + \sigma^{2} \theta_{0} }}{{\sigma^{2} + n\sigma_{0}^{2} }}\) and \(\hat{\sigma }_{(SELF)}^{2} = \frac{{\sigma^{2} \sigma_{0}^{2} }}{{\sigma^{2} + n\sigma_{0}^{2} }}\) are the Bayes estimators using SELF for the population mean and variance respectively, while using LLF the Bayesian estimators for population mean and variance is given as \(\hat{\theta }_{{\left( {_{LLF} } \right)}} = \frac{{n\overline{x}_{{(RSS_{i} )}} \sigma_{0}^{2} + \sigma^{2} \theta_{0} }}{{\sigma^{2} + n\sigma_{0}^{2} }} - \frac{{C^{\prime} }}{2}\sigma_{n}^{2}\) and \(\hat{\sigma }_{(LLF)}^{2} = \frac{{\sigma^{2} \sigma_{0}^{2} }}{{\sigma^{2} + n\sigma_{0}^{2} }}\), the transform statistic under LLF for both the process mean and variance is mathematically described as:

and

where \(H\left( {n,\nu } \right)\) is a chi-square distribution characterized by \(\nu\) degrees of freedom, and \(\phi^{ - 1}\) denotes the inverse of the standard normal distribution function, the computations for EWMA statistics regarding both the process mean and variance are outlined as follows:

In this context,\(P_{0}\) and \(Q_{0}\) represent the initial values for the EWMA sequences Pt and Qt, respectively, with \(\lambda\) (a constant within the range [0, 1]) denoting the smoothing constant. Pt and Qt are also mutually independent due to the independence of Pt and Qt. When considering an in-control process, both Pt and Qt follow normal distributions, each with a mean of zero and variances of \(\delta_{{P_{t} }}^{2}\) and \(\delta_{{Q_{t} }}^{2}\), respectively. This is defined as follows

The plotting statistics, Bayesian Max-EWMA for jointly monitoring using \(P_{t(LF)}\) and \(Q_{t(LF)}\) is mathematically defined as:

For \(t = 1,2, \ldots\)

As the Bayesian Max-EWMA statistic is positive value, so we required to plot only the upper control limit for jointly monitoring of the process mean and variance, if the plotting statistic \(Z_{t}\) within the UCL, then the process is in-control and if the \(Z_{t}\) cross the UCL the process is out-of-control.

Performance evaluation

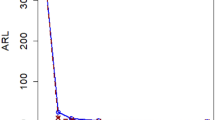

The performance of the proposed control charts has been assessed using Average Run Length (ARL) and Standard Deviation of Run Length (SDRL) as the key metrics. These metrics serve as the benchmark for evaluating the effectiveness of the control charts. The baseline ARL (ARL0) and SDRL (SDRL0) values represent the performance under normal, in-control conditions. On the other hand, ARL1 and SDRL1 denote the ARL and SDRL values, respectively, when the process deviates from the normal, indicating an out-of-control situation. The evaluation encompasses various mean shifts to provide a comprehensive understanding of the proposed charts' performance characteristics in different scenarios. We employed 50,000 replicates to calculate both the ARL and SDRL. The smoothing constants were set at λ = 0.10 and 0.25. Additionally, we explored various combinations of mean shift values, denoted as a = 0.00, 0.25, 0.50, 0.75, 1.00, 1.25, 1.50, 1.75, 2.00, 2.25, 2.50, 2.75, 3.00, as well as variance shift values, denoted as b = 0.25, 0.50, 0.75, 0.90, 1, 1.10, 1.25, 1.50, 2.00, 2.50, 3.00. These combinations were used in our study to assess the performance of the Bayesian Max-EWMA CC method in simultaneously monitoring both process mean and variance. The following simulation steps have been considered for the calculations of ARLs and SDRLs.

Step 1 Establishing the control limits

-

1.

Start by setting up the initial control limits, determining the values for UCL and λ.

-

2.

Create a random sample of size n, representing the in-control process by using normal distributions.

-

3.

Compute the statistic for the proposed control chart.

-

4.

Check if the plotting statistic falls within the UCL If it does then proceed to steps (iii–iv) again.

Step 2 Assessing the out-of-control Average Run Length (ARL).

-

1.

Create a random sample for the process with a shift.

-

2.

Compute the statistic of the proposed control chart.

-

3.

If the plotting statistic falls within the UCL, repeat steps (i–ii). Otherwise, record the number of generated points, representing a single out-of-control run length.

-

4.

Repeat the above process (i–iii) 50,000 times to determine the out-of-control ARL1 and SDRL1.

Tables 1, 2, 3 and 4 provide a framework for showcasing the outcomes derived from implementing the Bayesian Max-EWMA CC technique. This analysis meticulously evaluates the influence of distinct LFs tailored to emphasize the P and distributions, all assessed within the framework of informative priors. Based on the findings, the suggested Bayesian Max-EWMA CC designed for the simultaneous monitoring of production processes demonstrates an elevated degree of sensitivity when it comes to identifying signs of being out of control. Tables 1 and 2 provide compelling evidence that the Bayesian Max-EWMA CC, particularly under the SELF for P and PP distributions, efficiently detects shifts in both the process mean and variance in tandem. It is noteworthy that with each increment in the magnitude of the mean shift, there is a corresponding reduction in the values of ARLs. Similarly, each variance shift leads to a decrease in ARLs. These observations strongly suggest that this CC possesses the capability to promptly identify process shifts, making it a valuable tool for the early and comprehensive monitoring of production processes. For example, consider the ARL outcomes of the suggested Bayesian Max-EWMA CC when applying the SELF with a smoothing parameter \(\lambda = 0.10\), n = 5. i.e., a = 0.00, 0.25, 0.50, 0.75, 1.50, 3.00, while considered the corresponding shift in the process variance i.e., b = 1. The resulting ARL values for these shifts were 369.41, 28.27, 9.42, 5.59, 2.67, and 1.51, respectively. It is evident that as the magnitude of the mean shift increase, the ARL values significantly decrease. This observation underscores the higher efficiency of the suggested Bayesian Max-EWMA CC in detecting shifts in the process mean. Similarly, when we examine the impact of varying the process variance i.e., b = 0.25, 0.50, 0.75,1, 1.50, 3.00, with process mean a = 0.00. The ARL values are 3.39, 6.28, 20.59, 370.34, 7.87, and 1.76., the corresponding ARL values were 3.39, 6.28, 20.59, 370.34, 7.87, and 1.76. These results indicate that when the process variance changes from 1, the ARL outcomes decrease, demonstrating the significant performance of the proposed CC in detecting changes in process variance. Additionally, we observed from Table 2 that the performance of the suggested Bayesian Max-EWMA CC decreases as the smoothing constant values increase. This suggests that a lower smoothing constant may be more effective in certain scenarios.

Likewise, Tables 3 and 4 display the ARL results of the offered Bayesian Max-EWMA CC using the LLF with a fixed smoothing constant value of 0.25 and a sample size of 5. We considered various shifts in both the process i.e., a = 0.00, 0.25, 0.50, 0.75, 1.50, 3.00, along with the corresponding shift in the process variance i.e., b = 1 and obtained corresponding ARL values of 370.61, 44.74, 9.85, 4.96, 2.10, and 1.02. These results illustrate that as the process shifts increase, the ARL values decrease rapidly, indicating the accurate performance of the suggested Max-EWMA CC in detecting shifts in both the process mean and variance. Furthermore, it is important to note that the efficiency of the proposed CC for jointly monitoring the process mean and variance depends on the sample size. In Table 5, we have compared the suggested Bayesian Max-EWMA CC with the existing Bayesian EWMA CC using different values of smoothing constants i.e., \(\lambda\) = 0.10, 0.15 and 0.25 and with sample size n = 5. The ARL outcomes clearly shows that the proposed Bayesian Max-EWMA CC is more significantly identify signals indicating an out-of-control state more effectively than the existing Bayesian EWMA CC. From all the Tables 1, 2, 3, 4 and 5, it is evident that as the sample size increases, the ARL outcomes decrease, indicating the greater efficiency of the suggested CC in detecting deviations from the expected process parameters. The key findings of the study are given as:

-

The efficiency of the suggested Max-EWMA CC for simultaneously monitoring the process mean and variance, and for detecting minor to moderate shifts, is evident from the run length profiles presented in all four tables associated with the suggested CC.

-

Based on the simulation results, it is observed that the performance of the suggested Bayesian CC for joint monitoring improves as the smoothing constant value decreases.

-

In the current study, one of the most crucial factors under consideration is the variability in sample size. The results obtained from our analysis provide a clear and compelling insight. It is evident that as the sample size increases, the effectiveness and performance of the suggested Bayesian Max-EWMA CC experience a substantial and notable improvement.

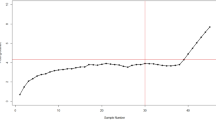

Real life data application

This article presents the practical implementation of the proposed Bayesian Max-EWMA CC. The data used for this demonstration is drawn from Montgomery21 and pertains to the hard-bake process in semiconductor manufacturing. The dataset consists of 45 samples, each containing 5 wafers, resulting in a total of 225 data points. These measurements, in microns, represent the flow width, and the time interval between each sample is consistently set at 1 h. Of these samples, the initial 30, comprising 150 observations, are considered indicative of a controlled process and are labeled as the phase-I dataset. Conversely, the remaining 15 samples, totaling 75 observations, are designated as representative of an out-of-control process and are referred to as the phase-II and the complete dataset is available in the appendix A. Both charts are employed to monitor variations in the process mean, and the computed results are showcased in Table 6.

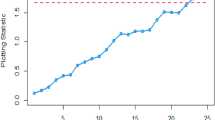

Figure 1 shows the Bayesian EWMA CC under SELF, in which all the points are within the control. Figures 2 and 3 provide a visual representation of the implementation of the recommended Bayesian Max-EWMA CC, designed to jointly monitor both the process mean and dispersion using the SELF approach. Upon closer examination of these charts, it becomes apparent that the process exhibits signals indicating it is out of control in the 39th and 43rd samples, for the smoothing constant values 0.10 and 0.25 respectively. Similarly, Figs. 4 and 5 depict the performance of the proposed CC using the LLF approach. These figures clearly show that the process displays out-of-control signals in the 40th and 42nd samples within the same context. This observation not only underscores the effectiveness of the Bayesian Max-EWMA CC but also indicates that the performance of the suggested CC deteriorates as the smoothing constant value increases.

Conclusion

This study introduces an innovative Bayesian Max-EWMA CC designed for concurrent monitoring of both process mean and variance. It utilizes informative prior distributions and incorporates two distinct LFs within the context of P distributions. The results, presented in Tables 1, 2, 3, and 4, evaluate the performance of the proposed CC using metrics such as ARL and SDRL. ARL plots (Figs. 1, 2, 3, 4) provide compelling evidence of the superior performance of the Bayesian CC. To further assess the CC under varying LFs, a practical example is applied to the semiconductor manufacturing hard bake process. Notably, the proposed Bayesian Max-EWMA CC, for both P distributions, excels in detecting out-of-control signals. Importantly, the principles of this study can be extended to other memory-type CCs.

Moreover, this approach is not confined to normal distributions; it can be tailored for data conforming binomial or Poisson distributions, albeit requiring adjustments to the likelihood function. Expanding this innovative technique to non-normal distributions and various CC types can yield a more comprehensive understanding of underlying data. This, in turn, facilitates early detection of potential quality issues, enables swift corrective actions, and reduces the risk of costly errors and defects. In practical applications, such as healthcare, this approach aids in promptly identifying anomalies in patient data, allowing for timely interventions. Within finance, it has the ability to reveal fraudulent activities and potential errors in financial transactions. In manufacturing, broadening the scope of this approach to encompass to non-normal distributions and various CC types helps in detecting variations in the production process, elevating product quality and reducing waste.

Data availability

The corresponding author holds the datasets utilized or analyzed in the ongoing study and can grant access to interested parties upon a reasonable request. This process ensures that those seeking access to the data for further examination or validation purposes can communicate with the corresponding author to obtain the necessary information.

References

Shewhart, W. A. The application of statistics as an aid in maintaining quality of a manufactured product. J. Am. Stat. Assoc. 20(152), 546–548 (1925).

Page, E. S. Continuous inspection schemes. Biometrika 41(1/2), 100–115 (1954).

Roberts, S. Control chart tests based on geometric moving averages. Technometrics 42(1), 97–101 (1959).

Herdiani, E. T., Fandrilla, G. & Sunusi, N. (2018). Modified Exponential Weighted Moving Average (EWMA) Control Chart on Autocorrelation Data. Paper presented at the Journal of Physics: Conference Series.

Gan, F. Joint monitoring of process mean and variance using exponentially weighted moving average control charts. Technometrics 37(4), 446–453 (1995).

Sanusi, R. A., Mukherjee, A. & Xie, M. A comparative study of some EWMA schemes for simultaneous monitoring of mean and variance of a Gaussian process. Comput. Ind. Eng. 135, 426–439 (2019).

Haq, A. & Razzaq, F. Maximum weighted adaptive CUSUM charts for simultaneous monitoring of process mean and variance. J. Stat. Comput. Simul. 90(16), 2949–2974 (2020).

Arif, F., Noor-Ul-Amin, M. & Hanif, M. Joint monitoring of mean and variance using likelihood ratio test statistic with measurement error. Qual. Technol. Quant. Manag. 18(2), 202–224 (2021).

Javaid, A., Noor-ul-Amin, M. & Hanif, M. Performance of Max-EWMA control chart for joint monitoring of mean and variance with measurement error. Commun. Stat.-Simul. Comput. 52(1), 1–26 (2023).

Yang, C.-M. An improved multiple quality characteristic analysis chart for simultaneous monitoring of process mean and variance of steering knuckle pin for green manufacturing. Qual. Eng. 33(3), 383–394 (2021).

Noor-ul-Amin, M., Aslam, I. & Feroze, N. Joint monitoring of mean and variance using Max-EWMA for Weibull process. Commun. Stat.-Simul. Comput. 52(7), 3257–3272 (2023).

Noor-ul-Amin, M. & Noor, S. An adaptive EWMA control chart for monitoring the process mean in Bayesian theory under different loss functions. Qual. Reliab. Eng. Int. 37(2), 804–819 (2021).

Riaz, S., Riaz, M., Nazeer, A. & Hussain, Z. On Bayesian EWMA control charts under different loss functions. Qual. Reliab. Eng. Int. 33(8), 2653–2665 (2017).

Noor, S., Noor-ul-Amin, M. & Abbasi, S. A. Bayesian EWMA control charts based on exponential and transformed exponential distributions. Qual. Reliab. Eng. Int. 37(4), 1678–1698 (2021).

Noor, S., Noor-ul-Amin, M., Mohsin, M. & Ahmed, A. Hybrid exponentially weighted moving average control chart using Bayesian approach. Commun. Stat.-Theory Methods 51(12), 3960–3984 (2022).

Khan, I. et al. Hybrid EWMA control chart under bayesian approach using ranked set sampling schemes with applications to hard-bake process. Appl. Sci. 13(5), 2837 (2023).

Aslam, M. & Anwar, S. M. An improved Bayesian Modified-EWMA location chart and its applications in mechanical and sport industry. PLoS ONE 15(2), e0229422 (2020).

Khan, I., Noor-ul-Amin, M., Khan, D. M., AlQahtani, S. A. & Sumelka, W. Adaptive EWMA control chart using Bayesian approach under ranked set sampling schemes with application to Hard Bake process. Sci. Rep. 13(1), 9463 (2023).

Gauss, C. (1955). Methods Moindres Carres Memoire sur la Combination des Observations, 1810 Translated by J. In: Bertrand.

Varian, H. R. (1975). A Bayesian approach to real estate assessment. Studies in Bayesian econometric and statistics in Honor of Leonard J. Savage, 195–208.

Montgomery, D. C. Introduction to Statistical Quality Control (Wiley, Hoboken, 2009).

Acknowledgements

This work was supported by Research Supporting Project Number (RSPD2024R585), King Saud University, Riyadh, Saudi Arabia.

Author information

Authors and Affiliations

Contributions

J.I. played an essential role in conceptualizing and designing the research, while M.N. supervised the study and had a pivotal role in the simulation. I.K. made significant contributions to statistical analysis and methodology, and S.A.A. contributed expertise in quantitative analysis and the simulation. U.Y. contributed to manuscript writing and provided valuable insights into CC methodology. Moreover, M.N. contributed to and applied the CC methodology in the manuscript. All authors participated in revising, interpreting results, and critically evaluating the CC. Their collaborative effort in diligently reviewing and approving the final manuscript showcases their commitment to both research and presentation.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Iqbal, J., Noor-ul-Amin, M., Khan, I. et al. A novel Bayesian Max-EWMA control chart for jointly monitoring the process mean and variance: an application to hard bake process. Sci Rep 13, 21224 (2023). https://doi.org/10.1038/s41598-023-48532-4

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-48532-4

This article is cited by

-

Memory type Bayesian adaptive max-EWMA control chart for weibull processes

Scientific Reports (2024)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.