Abstract

Coeliac disease (CeD) is an immunological disease triggered by the consumption of gluten contained in food in individuals with a genetic predisposition. Diagnosis is based on the presence of small bowel mucosal atrophy and circulating autoantibodies (anti-type 2 transglutaminase antibodies). After diagnosis, patients follow a strict, life-long gluten-free diet. Although the criteria for diagnosis of this disease are well defined, the monitoring phase has been studied less and there is a lack of specific guidelines for this phase. To develop a set of clinical guidelines for CeD monitoring, we followed the Grading of Recommendations Assessment, Development and Evaluation methodology. Statements and recommendations with the level of evidence were developed and approved by the working group, which comprised gastroenterologists, pathologists, dieticians and biostatisticians. The proposed guidelines, endorsed by the North American and European coeliac disease scientific societies, make recommendations for best practices in monitoring patients with CeD based on the available evidence. The evidence level is low for many topics, suggesting that further research in specific aspects of CeD would be valuable. In conclusion, the present guidelines support clinicians in improving CeD treatment and follow-up and highlight novel issues that should be considered in future studies.

Introduction

Coeliac disease

Coeliac disease (CeD) is an immunological disorder induced by gluten ingestion in individuals with genetic susceptibility, characterized by villous atrophy, intra-epithelial lymphocytosis and crypt hyperplasia of the small bowel. It is a chronic inflammatory state that improves when gluten-containing foods are excluded from the diet (gluten-free diet, GFD)1,2. The disease primarily affects the small bowel; however, the clinical manifestations are broad, comprising both intestinal and extra-intestinal symptoms3. CeD is more frequently diagnosed in women than in men (ratio 2:1), and it is one of the most common causes of chronic malabsorption, with a worldwide prevalence of around 1%4.

Gluten is a storage protein of the cereal grains wheat, rye and barley5. It is enriched in glutamines and prolines and, as a result, is incompletely digested by gastric, pancreatic and brush border peptidases, leaving large peptides6. In the small intestine, these peptides trigger an autoimmune reaction involving type 2 transglutaminase (TG2), the predominant autoantigen of CeD7. Deamidation increases the immunogenicity of gliadin fractions (the alcohol-soluble part of gluten with immunodominant properties), facilitating binding to HLA-DQ2 or HLA-DQ8 molecules. Gliadin peptides are then presented to T cells, and this leads to the formation of anti-type 2 transglutaminase antibodies (TG2Ab), pro-inflammatory cytokines, lymphocyte infiltration and subsequent tissue injury, leading to crypt hyperplasia and villous atrophy8.

Diagnosis of CeD in adulthood is based on serology (TG2Ab) and duodenal biopsy while the patient is on a gluten-containing diet. Analysis of TG2Ab serum levels should be done as a first-line screening test and has high sensitivity (93%) and specificity (98%)9; serum analysis of anti-endomysial antibodies (EMAs) is a second-line test with high specificity, whereas HLA typing is usually performed in uncertain cases1,10. CeD is highly correlated with the presence of HLA-DQ2 and/or HLA-DQ8 molecules: nearly 100% of individuals diagnosed with CeD exhibit this specific genetic profile. Consequently, in cases for which the diagnosis of CeD is uncertain, HLA typing has a crucial role in ruling out the condition when the results are negative1. Currently, to make a definitive diagnosis of CeD in adults, evidence of compatible small bowel damage is required, and, therefore, an endoscopy with multiple bulb and distal duodenal biopsies must be performed. Histologically, evaluating the villous to crypt cell ratio in well-oriented biopsy specimens is crucial because it reduces misdiagnosis. The Marsh and Marsh–Oberhuber scoring systems are currently applied to describe the CeD-related small bowel lesion and are endorsed by most CeD guidelines11,12. Originally, the Marsh score described the small bowel damage as type 0 (normal mucosa), type 1 (increased number of intra-epithelial lymphocytes, IELs), type 2 (increased IELs and crypt hyperplasia), type 3 (villous atrophy) and type 4 (completely flattened and hypoplastic mucosa)12. Subsequently, Oberhuber further classified the Marsh 3 lesion into distinct subtypes based on the severity of villous atrophy: 3a for mild, 3b for moderate and 3c for severe11. Thus, the presence of TG2Ab in the serum and duodenal histological alterations usually make the diagnosis straightforward, and this diagnostic algorithm is supported by guidelines from various scientific societies, thereby supporting clinicians in CeD diagnosis1,4,10,13,14.

Currently, the only treatment for CeD is adherence to a life-long GFD; however, it is still unclear how to monitor patients with established CeD in the most appropriate way. Moreover, the therapeutic objectives of the GFD should be systematically addressed according to a temporal schedule, commencing with the resolution of symptoms, followed by serological and mucosal normalization and, ultimately, the prevention of comorbidities. Questions that require informed answers include the following. What is the primary end point of the GFD? How should CeD activity be assessed? Is serology adequate for follow-up? Is the evaluation of small bowel mucosa necessary during the follow-up period, and at which time intervals? How should GFD adherence be measured? Currently, answers to these and other related questions are not always clear. Despite the paucity of evidence, it is common to use the same biomarkers as used for diagnosis (TG2Ab serology and duodenal histology) to monitor CeD activity and response to a GFD; furthermore, blood tests to evaluate intestinal function and the presence of common CeD comorbidities are routinely used in monitoring, often on a yearly basis. Using the Grading of Recommendations Assessment, Development and Evaluation (GRADE) methodology, we attempted to address these gaps and provide guidance to clinicians regarding a standardized and consensual approach to monitoring CeD.

Scope and context

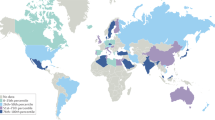

CeD is one of the most frequent immunological diseases. It is suggested that 1% of the global population could be affected by CeD6,15,16. Improvement in diagnostic tools and health-care systems in low-income countries, the possibility of making a diagnosis without duodenal biopsies in children17 and diet westernization in Asia are all factors that contribute to increases in the global CeD population.

The rapidly growing CeD population and the clear gaps in the current care of patients with CeD suggest the need to develop guidelines for CeD monitoring. Studies have revealed substantial variability in monitoring practices across different countries, highlighting a lack of consistent follow-up for patients with CeD18,19.

The present guidelines aim to analyse the best practices in monitoring CeD and patient response to a GFD and, therefore, provide a set of practical guidelines for clinicians using the GRADE methodology.

Methods

In October 2021, an independent guideline development group (GDG) was formed to compile best practices for the monitoring of CeD. The core group comprised dieticians, pathologists, gastroenterologists, endoscopists and methodologists, all experts in CeD and the GFD. Conflict of interest statements were collected from all panel members before and after the development of the present guidelines, and authors with specific conflicts of interest were excluded from voting. The guideline methodologist had no conflicts of interest. Notably, none of the GDG members received fees or funding for developing the present guidelines. Owing to the worldwide dispersion of the GDG and to maintain a sustainable profile, regular web calls were organized and a dedicated virtual space containing all the guideline-related material was made available to all panel members20,21. The panel members addressed the specific tasks and clinical questions during the first call in November 2021 (Supplementary File 1). Subgroups of two or three experts were assigned the various tasks and questions. Key questions were developed following a format that outlines the specific patient population, intervention, comparator and outcomes (PICO format), with subsequent voting to reach an agreement on the precise formulation22. An extensive literature search of databases including PubMed and Embase was conducted for English-language articles using appropriate medical subject heading (MeSH) terms (Supplementary File 1). Particular attention was given to the presence of meta-analyses on dedicated web pages (Cochrane). The levels of certainty of the evidence and strength of the recommendations were defined for every part of the statement, according to the GRADE system using GRADEpro23. All summaries of evidence for each PICO question, including research strategy and MeSH terms, answers to the questions and tables showing the studies analysed, are reported in Supplementary File 1.

To define the strength of each recommendation, a 12-item decisional framework was administered to all GDG members, who voted independently24. During the last call in January 2023, the panel agreed on the final recommendations (consensus was defined as 70% agreement with <15% disagreement), and a representative of patients provided comments on the manuscript (Supplementary File 2). The Society for the Study of Coeliac Disease and the European Society for the Study of Celiac Disease executive committees reviewed and endorsed this guideline.
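The consensus rule stated above can be written as a small check. This is a minimal sketch rather than the GDG's actual voting tool: it assumes that "70% agreement" means at least 70% of votes cast, and the `neutral` category and the function name `consensus_reached` are hypothetical additions for illustration.

```python
def consensus_reached(agree: int, disagree: int, neutral: int = 0) -> bool:
    """Check a vote against the stated rule: 70% agreement with <15% disagreement.

    Assumptions (not from the guideline text): the threshold is read as
    "at least 70%", and votes that are neither agree nor disagree are
    counted in the denominator as `neutral`.
    """
    total = agree + disagree + neutral
    if total <= 0:
        raise ValueError("at least one vote is required")
    # Integer arithmetic avoids floating-point edge cases at the thresholds.
    enough_agreement = agree * 100 >= 70 * total
    little_disagreement = disagree * 100 < 15 * total
    return enough_agreement and little_disagreement


if __name__ == "__main__":
    # Hypothetical panel of 20 voters.
    print(consensus_reached(agree=14, disagree=2, neutral=4))
    print(consensus_reached(agree=14, disagree=3, neutral=3))
```

Under this reading, a recommendation fails if either condition is missed, so strong agreement alone is not enough when 15% or more of the panel disagrees.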

Biomarkers and investigations used to monitor coeliac disease

Despite the absence of guidelines, clinical evaluations and varied investigations are routinely performed for CeD monitoring. GFD is expected to reduce symptoms and normalize biomarkers that were abnormal at diagnosis. Several scores are used in the diagnosis and monitoring of symptoms of gastrointestinal diseases, such as the Crohn’s disease activity index25, the gastro-oesophageal reflux disease impact scale26 and the gastrointestinal symptom rating scale (GSRS)27. These scores enable clinicians to compare patients’ progress and objectively assess outcomes. GSRS28 has often been used in CeD and contains five domains: abdominal discomfort, diarrhoea, indigestion, constipation and reflux. However, owing to the limitations of existing symptom scores (nonspecific and limited number of scored symptoms), a CeD-specific symptom index has been developed. The Celiac Symptom Index is a specific tool to measure and monitor CeD-related symptoms29. Together with clinical evaluation, various serological, endoscopic and histological markers are routinely used to monitor CeD and compliance with the GFD1. These biomarkers are based on common changes seen at diagnosis (increase in TG2Ab serum levels; endoscopic markers, such as scalloping or mosaic appearance; villous atrophy on biopsy samples; and vitamin deficiencies as a consequence of malabsorption) — consequently, normalization of these biomarkers is expected when CeD is in remission. However, clinical studies suggest that these tests might not be as accurate as commonly presumed in the evaluation of CeD activity30. Furthermore, the interval between each follow-up visit is largely unknown and not systematically investigated. Currently, it is suggested that patients be monitored on a yearly basis, but intervals can be shortened in the case of non-responsive CeD1.

In the next paragraphs and in the present guideline, we evaluate the clinical utility of the various available tests and biomarkers and provide related recommendations.

Blood tests

Blood tests are widely used to monitor chronic diseases owing to their non-invasive nature. In CeD, TG2Abs are usually used to evaluate disease activity and response to the GFD13. However, evidence differs regarding the correlation with CeD mucosal activity31 and the quantity of gluten required to be ingested before titres become positive32. Additional blood tests can be performed yearly, together with TG2Ab assessment, to evaluate the nutritional state of patients and the presence of comorbidities10,14.

Role of serological antibodies in prediction of adherence to the gluten-free diet

Recommendation 1. We recommend routine serological assessment with anti-TG2 IgA serum levels in patients with CeD on a GFD; a positive value suggests poor dietary adherence or gluten contamination, whereas a negative value cannot confirm strict adherence or lack of gluten exposure.

Level of evidence very low; strong recommendation.

CeD-specific antibodies start to decline within months of the introduction of the GFD, dropping rapidly during the first year and eventually falling to normal levels in most patients33,34,35,36. Persistently positive, or not decreasing, anti-TG2 IgA levels are strongly predictive of some degree of gluten intake (poor adherence or contamination)33,34,37,38. On the other hand, the sensitivity of anti-TG2 IgA for the detection of diet transgressions evaluated by patient self-reports or dietician-led assessments was as low as 52–57% in two cohort studies (53 and 95 patients, respectively)34,37. This makes coeliac serology a poor negative predictor of dietary adherence, illustrating that negative anti-TG2 IgA levels should not be considered a marker of strict dietary compliance.

EMA IgA and anti-deamidated gluten peptide (DGP) IgA perform similarly to anti-TG2 IgA detection in this setting33,37, with DGP IgA showing a tendency towards lower sensitivity and EMA towards higher specificity33.

With regard to patients with CeD with IgA deficiency, anti-TG2 IgG (often detected at CeD diagnosis in these patients) levels also decline over time on a GFD but fail to reach normalization in up to 80% of cases despite long-term strict diet adherence39,40. Serial measurements to assess the dynamics of anti-TG2 IgG levels might help to evaluate dietary compliance in CeD with IgA deficiency; however, data on this topic are scarce.

Overall, serology alone is not an accurate diagnostic tool for the assessment of dietary adherence in CeD. We still recommend routine serology assessments with anti-TG2 IgA in patients with CeD on a GFD. Positive anti-TG2 IgA detection levels are suggestive of poor diet adherence or gluten contamination, whereas negative anti-TG2 IgA detection cannot confirm strict adherence. In patients with IgA deficiency, anti-TG2 IgG levels tend to decline but often remain positive despite a strict GFD and should, therefore, not be used to assess adherence.

Role of serological antibodies to predict mucosal atrophy

Recommendation 2. We do not recommend normalization of serology (anti-TG2 IgA) as a marker of mucosal recovery during a long-term GFD owing to poor sensitivity for the identification of persistent villous atrophy.

Level of evidence very low; strong recommendation.

A meta-analysis of mostly prospective cohort studies showed that anti-TG2 IgA serology has relatively high specificity (0.83, 95% confidence interval (CI) 0.79–0.87) but low sensitivity (0.50, 95% CI 0.41–0.60) for the identification of patients with persistent villous atrophy41. EMA IgA serology has a similar performance, with specificity 0.91 (95% CI 0.87–0.94) and sensitivity 0.45 (95% CI 0.34–0.57)41. A prospective study of 368 patients that aimed to assess the performance of bulbar histology for the detection of persistent villous atrophy confirmed the poor discriminatory ability of serological testing, with 39.7% sensitivity and 94.2% specificity for anti-TG2 IgA and 38.1% sensitivity and 96.4% specificity for EMA IgA42. Similar results were found in another study of 97 patients that evaluated the performance of faecal gluten immunogenic peptides and serum antibodies: anti-TG2 IgA serum analysis had 11.8–30% sensitivity and 100–72.7% specificity (depending on which of the two evaluated assays was considered) for the detection of residual mucosal damage, defined as Marsh 1 or higher43. In view of these data, coeliac serology can be considered a poor predictor of persistent mucosal damage because it misses most cases of villous flattening. Some studies have proposed modifying the cut-offs normally used for the diagnosis of CeD in an effort to improve the performance of serological assays in CeD follow-up; however, this has resulted in little or no improvement in sensitivity44,45.
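To illustrate why low sensitivity limits the value of a negative result, the pooled estimates can be converted into predictive values with Bayes' rule. The sketch below uses the meta-analytic sensitivity (0.50) and specificity (0.83) for anti-TG2 IgA quoted above; the prevalence of persistent villous atrophy (0.30) is a purely hypothetical input chosen for illustration and is not a figure from the guideline.

```python
def predictive_values(sensitivity: float, specificity: float, prevalence: float):
    """Return (PPV, NPV) for a test applied at a given prevalence.

    All inputs are proportions strictly between 0 and 1.
    """
    for name, value in (("sensitivity", sensitivity),
                        ("specificity", specificity),
                        ("prevalence", prevalence)):
        if not 0.0 < value < 1.0:
            raise ValueError(f"{name} must be strictly between 0 and 1")
    true_pos = sensitivity * prevalence
    false_neg = (1.0 - sensitivity) * prevalence
    true_neg = specificity * (1.0 - prevalence)
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


if __name__ == "__main__":
    # Sensitivity and specificity from the meta-analysis cited in the text;
    # the prevalence of 0.30 is an ASSUMED, illustrative value.
    ppv, npv = predictive_values(0.50, 0.83, prevalence=0.30)
    print(f"PPV = {ppv:.2f}, NPV = {npv:.2f}")
```

Whatever prevalence is assumed, a sensitivity of 0.50 means that half of the patients with persistent villous atrophy test negative, which is the basis for not treating normalized serology as evidence of mucosal recovery.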

Although endoscopic biopsy remains the reference standard for the assessment of persistent villous atrophy at follow-up, there is a need for better non-invasive markers of intestinal damage46. With regard to serology, DGP IgG antibodies showed good discriminatory ability for the detection of persistent villous atrophy (87% sensitivity and 89% specificity) in a single study with 60 participants, even displaying a correlation between antibody titres and the severity of mucosal damage47. Another study, which involved 217 patients and aimed to assess the performance of a DGP IgA–IgG-based point-of-care test (POCT), demonstrated a higher sensitivity of the DGP-POCT compared with anti-TG2 IgA and EMA IgA assays for the detection of villous atrophy at follow-up (67.1% versus 44.7% and 37.7%)48, corroborating the possible role of DGP-based assessments as surrogate markers of mucosal damage.

Anti-TG2 IgA (and EMA) antibodies should not be routinely used as a marker of mucosal recovery during a long-term GFD owing to their poor sensitivity for the identification of persistent villous atrophy. DGP-based serological assays might prove to be a better predictor of persistent villous atrophy; however, further studies are needed to explore this possibility.

Blood biochemistry

Recommendation 3. We recommend the use of clinical chemistry analysis of blood count, iron, folate and other micronutrients for the evaluation of malabsorption and nutritional status in patients with CeD on a GFD.

Level of evidence very low; strong recommendation.

A strict GFD can restore intestinal villi and absorption function in patients with CeD2. However, studies demonstrate that mucosal recovery is not achieved in all patients, even in the absence of symptoms49,50 and normalization of coeliac-related antibody titres41. Therefore, laboratory tests should be used to detect nutritional deficiencies, as the nutritional parameters of patients with CeD on an optimized GFD should be comparable to those of the general population51,52.

Full blood count and iron status

Studies of the effect of the GFD on recovery from iron deficiency anaemia (IDA) are scarce. In most cases, recovery from anaemia occurs within 1 year after commencement of a strict GFD, even without additional iron supplementation53,54. However, some patients with CeD continue to show IDA despite careful adherence to a GFD. A Finnish study showed that 6% of the 163 patients with CeD studied still had IDA after 1 year on a GFD, particularly women53. A study from Saudi Arabia showed that nutrient intake in 51 women with CeD was below the recommended dietary intake. However, blood parameter values in women with CeD who followed a GFD for longer than 1 year were comparable to those in healthy controls52. Another study demonstrated that in a population of 163 adults with CeD, those with IDA at diagnosis had more gastrointestinal symptoms, worse indicators of well-being and increased levels of serum TG2Ab. After 1 year on a GFD, the mucosal villous to crypt ratio was significantly lower in the anaemia group (P = 0.008), indicating a slower response53,55,56. Scricciolo and colleagues57 performed a clinical trial in 22 women with CeD and iron deficiency on a GFD, who were given either an iron-rich diet or iron supplementation. The investigators showed that the women on iron supplementation had an increase in ferritin serum levels compared with those on an iron-rich diet.

These results suggest that haemoglobin and ferritin serum levels should be monitored during follow-up, and iron supplementation should be considered when the GFD alone does not improve iron deficiency. Thus, careful monitoring of iron status is recommended in CeD follow-up, particularly in women who are in premenopause.

Lipids

Ballestero-Fernández and colleagues58 described no difference in blood levels of cholesterol and triglycerides in 64 patients with CeD compared with 74 healthy volunteers. Conversely, Remes-Troche and colleagues59 described lower levels of those lipids in patients with CeD compared with healthy controls and individuals without coeliac disease but with non-coeliac gluten sensitivity. However, other studies described an increased risk of abnormal serum lipid levels in patients with CeD on a GFD60,61,62, particularly for triglycerides63,64. Independently of serum lipid levels, several reports have described an increased risk of fatty liver (also known as steatotic liver65) and metabolic dysfunction-associated fatty liver disease, which could predispose to a higher cardiovascular risk in patients with CeD on a GFD59,60,63,64. Reilly and colleagues66 showed that 29,096 individuals with CeD were at increased risk of nonalcoholic fatty liver disease (also known as metabolic dysfunction-associated steatotic liver disease65) compared with the general population (144,522 reference individuals), particularly during the first year after CeD diagnosis, but persisting through 15 years beyond diagnosis. Liver damage should be detected through evaluation of serum liver transaminases during follow-up. Interestingly, a UK study found that among 469,095 adults, the 2,083 individuals with CeD (aged 40–69 years) had a lower prevalence of traditional cardiovascular risk factors but a higher risk of developing cardiovascular disease than those without CeD67. Moreover, a study from the Swedish register has shown that in a cohort of 49,829 individuals with CeD, there was an increased risk of death from cardiovascular disease compared with the general population (3.5 versus 3.4 per 1,000 person-years; hazard ratio (HR), 1.08 (95% CI 1.02–1.13))68.

In conclusion, patients with CeD should be assessed over time for metabolic features, such as glucose, lipid status serum biomarkers and serum liver enzymes, according to age and risk factors, as per the general population.

Vitamins

With the exception of vitamin D, few studies have investigated serum vitamin levels, and the scant data available show discordant results; therefore, no clear recommendations can be derived58,61,69,70,71. It is reasonable to adapt follow-up testing to the vitamin levels found at diagnosis, and supplementation is suggested when needed.

Conversely, studies on vitamin D are numerous, and the data show that, despite deficiency at diagnosis and follow-up, there is no relationship with bone density58,70,72,73. The studies, however, have several limitations, including seasonal variation in vitamin D (sun exposure), inconsistent testing for the active form of vitamin D (also known as 1,25-dihydroxycholecalciferol or calcitriol), history of previous vitamin D supplementation and limited data on men. Therefore, monitoring for vitamin D should be considered based on serum levels found at diagnosis and the lifestyle of patients.

Hallert and colleagues74 reported that in a group of 30 adult patients with CeD on a GFD for several years, half of them showed low serum levels of folate, vitamin B6 and vitamin B12, associated with higher homocysteine levels. The researchers also reported lower folate and vitamin B12 intake than the control group.

Fasting glucose

Despite the small size of the published studies, a strict GFD in children and/or adults with type 1 diabetes has positive effects on glycaemic control, with a trend towards fewer hypoglycaemic episodes62,75. Ludvigsson and colleagues76 reported that in 9,243 individuals with CeD diagnosed before 20 years of age, CeD was associated with a 2.4-fold increased risk of later type 1 diabetes (95% CI 1.9–3.0). Conversely, the association between CeD, GFD and the development of type 2 diabetes is inconsistent. Ballestero-Fernández and colleagues58 described no variation of glucose on a GFD. Some studies reported that patients with CeD are less likely to develop type 2 diabetes, whereas others correlate the use of a highly processed GFD with an increased risk of elevated glucose serum levels61,63,64. Zanini and colleagues61 showed no change in insulin resistance in either men or women with CeD; the mean (± s.d.) value for the whole cohort was 1.3 ± 0.7 at baseline and 1.3 ± 0.8 during GFD (n = 100).

In the context of the risk of metabolic syndrome reported earlier, patients should routinely be assessed for serum glucose levels.

Electrolytes

Ballestero-Fernández and colleagues58 reported no deficiency in zinc, copper, selenium or magnesium. Few other studies have addressed serum levels of micronutrients, such as zinc and copper, in adult patients with CeD on a GFD. One study analysed the nutritional indices collected in persons with CeD in the cross-sectional National Health and Nutrition Examination Survey, 2009–2014. The study, which involved 16,966 participants, of whom 28 had CeD, reported that in the US population over 6 years, the zinc and copper levels of people diagnosed with CeD were similar to those of individuals without CeD despite reduced total calories and macronutrient intake77. Rawal and colleagues78 described that zinc plasma levels rose in 134 patients on a GFD regardless of supplementation, and Ciacci and colleagues72 found no differences in serum levels of phosphorus among 50 untreated and 55 treated patients with CeD. Therefore, no recommendation is possible regarding the testing of electrolytes in CeD monitoring.

Other blood biomarkers

Novel and non-routine blood tests have been used in CeD to monitor its activity and GFD adherence. Patients with the most abnormal pathology have a loss of duodenal villi cytochrome P450 3A4 (CYP3A4), a drug-metabolizing enzyme that inactivates many drugs79,80. Chretien and colleagues81 conducted a clinical trial with 115 participants (47 patients with CeD, 68 healthy individuals), that showed an increase in serum concentrations of the antihypertensive drug felodipine in patients with CeD, probably secondary to decreased small intestinal CYP3A4 expression. Similar results were found for evaluation of the metabolism of the cholesterol-lowering medication simvastatin in a clinical trial with 54 participants (11 healthy volunteers, 43 patients with CeD)82. Furthermore, in recent years, clinical studies83,84,85 have been performed to evaluate the potential of tetramer-based assays to monitor patients with CeD on a GFD, particularly testing their expression after a gluten challenge. Other studies showed an increased level of reactive oxygen species in the serum of non-responsive patients with CeD86. Furthermore, in vivo experimental findings have indicated that citrulline, zonulin and intestinal-fatty acid binding protein (all markers of enterocyte damage) might be useful in monitoring CeD87. However, none of them is used in current clinical practice. In the future, those novel markers that measure direct mucosal damage might complement the traditional tests. The evaluation of T cells in blood has been considered a tool for CeD detection after a short-term gluten challenge in patients on a GFD without a clear diagnosis. Evaluation of T cell features in blood could be considered during follow-up to evaluate dietary compliance88,89. However, the role of these approaches outside of serology has not been fully explored.

Evaluation of gluten-free diet adherence and quality

Nutritional interviews and alimentary diaries to evaluate GFD adherence

Recommendation 4. We recommend the use of dietetic evaluation to assess patient adherence to the GFD.

Level of evidence low; strong recommendation.

International guidelines for the diagnosis of CeD highlight the importance of early referral to dieticians or nutritionists who have clinical experience in CeD14,90,91. Their role at diagnosis includes nutritional, anthropometric (BMI, waist circumference) and psychosocial assessments, as well as delivering comprehensive dietary education to facilitate a transition to a strict life-long GFD. Regular follow-ups with dieticians or nutritionists are recommended to review the above parameters. Additionally, a central component of this follow-up is the evaluation of patient adherence to the GFD92.

However, there is a limited range of published data assessing the efficacy of nutritional evaluations in relation to GFD adherence in adult patients with CeD. Based on the included studies, dietetic assessments might fail to identify ongoing villous atrophy in many patients93,94,95. Only two studies were identified that compared dietetic assessments with duodenal biopsy samples, the reference standard for assessment of disease activity. One study (in 94 patients with ongoing villous atrophy) indicated that dieticians were able to identify sources of gluten inclusion in 50% of cases, resulting in the restoration of villous height95. The other study, which used a dichotomous outcome of ‘good or poor’ GFD adherence, indicated that dietician evaluation had a sensitivity and specificity of 64% and 80%, respectively, when predicting persisting villous atrophy in a subset of 25 patients96. There is great variation in how nutritional assessments are undertaken, and standardized nutritional assessments (SNAs) seem to be important to maximize the accuracy of evaluations97. SNAs performed better than an adherence questionnaire (Coeliac Dietary Adherence Test (CDAT)) in one prospective cohort study conducted on 92 patients with CeD98. Equally, another study that compared five measures of GFD adherence indicated that SNAs could not be replaced with serological markers or patient self-assessments of adherence99. However, the published studies on SNAs have been carried out only in Western countries. Although many aspects of SNAs might be broadly generalizable across cultures, further research is required to understand the nuances of nutritional assessment of GFD adherence in different countries100.
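One way to read the reported 64% sensitivity and 80% specificity of dietician evaluation is through likelihood ratios, which express how much a 'poor' or 'good' adherence rating shifts the odds of persisting villous atrophy. The sketch below applies the standard definitions to those two figures; the function name `likelihood_ratios` is a hypothetical helper, and because the estimates come from a subset of only 25 patients, the results should be treated as illustrative.

```python
def likelihood_ratios(sensitivity: float, specificity: float):
    """Return (LR+, LR-) from sensitivity and specificity given as proportions.

    LR+ = sensitivity / (1 - specificity)
    LR- = (1 - sensitivity) / specificity
    """
    if not 0.0 < sensitivity < 1.0 or not 0.0 < specificity < 1.0:
        raise ValueError("sensitivity and specificity must be strictly between 0 and 1")
    lr_positive = sensitivity / (1.0 - specificity)
    lr_negative = (1.0 - sensitivity) / specificity
    return lr_positive, lr_negative


if __name__ == "__main__":
    # Figures quoted in the text for dietician evaluation versus biopsy.
    lr_pos, lr_neg = likelihood_ratios(0.64, 0.80)
    print(f"LR+ = {lr_pos:.2f}, LR- = {lr_neg:.2f}")
```

Likelihood ratios are independent of how common persisting villous atrophy is in a given clinic, which makes them easier to carry across settings than predictive values.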

Overall, the current published literature suggests that dietetic and nutritional evaluations of GFD adherence should be standardized and, ideally, used in combination with other non-invasive methods of assessing GFD adherence. However, the gold standard for detecting persisting villous atrophy remains repeat duodenal biopsy.

Nutritional interviews and alimentary diaries to evaluate GFD nutritional quality

Recommendation 5. We recommend a dietetic evaluation to monitor the nutritional balance of the GFD during follow-up.

Level of evidence very low; weak recommendation.

Several studies in adult and paediatric populations have focused on the nutritional quality of a GFD, showing that patients with CeD have a reduced intake of fibre, iron, calcium, magnesium and B vitamins but a higher consumption of fatty foods, sugary foods and drinks. In addition, there was higher consumption of processed foods that appear to have a higher glycaemic index than conventional products101,102,103,104,105,106,107,108.

Although there were conflicting findings about changes in anthropometric measurements in patients with CeD compared with healthy individuals, the approach to a GFD involves changes in eating habits, such as increasing the frequency of snack and sweets consumption or eating from the pot or in inappropriate places such as the bedroom or living room109. These changes, in particular, turn out to be less healthy and seem to be acquired by the patient’s entire family, potentially contributing to the so-called obesogenic environment109,110.

Thus, it is essential to educate care-givers on a healthy diet and lifestyle, with the final goal of ensuring correct diet instructions111.

Meal patterns for patients with CeD should ideally be individualized, reflecting their relevant medical history, food preferences, socioeconomic status and, when applicable, an individual’s risk of food insecurity (which can reduce the patient’s adherence to the GFD). Individualized nutritional approaches can also promote dietary patterns that support optimal health outcomes112.

The inclusion of alternative gluten-free grains such as buckwheat, amaranth, quinoa, millet and sorghum improved the nutritional profile of the GFD in patients with CeD who would otherwise restrict their carbohydrate sources to rice, potatoes and corn113. Gluten-free grains can be consumed as whole grains and/or can be used to cook home-made gluten-free bread, cake, biscuits and pizza. They are important alternative pseudo-cereals that are rich in proteins, fibre, unsaturated fat, B-complex vitamins and minerals. Their intake can improve nutritional status and well-being102.

Moreover, foods that should be consumed every day are ideally home-made, natural, gluten-free preparations, which might include extra-virgin olive oil, milk, yoghurt (rich in natural probiotics), plant foods (vegetables, fresh fruits, legumes, nuts, herbs and spices, limiting salt) and fish (rich in omega 3), such as codfish, seabass, sardines and anchovies. Potatoes and animal foods should be consumed weekly; red meat and processed meats should be consumed less frequently, whereas white meats have fewer restrictions. Dairy products (preferably mozzarella, robiola, ricotta, goat cheese, feta) should be consumed moderately114,115.

Patients following plant-based diets might require additional support and should be encouraged to include legumes, nuts, meat alternatives, gluten-free fortified non-dairy milk (preferably soya) and appropriate micronutrient supplementation, as required116.

Role of questionnaires to quantify GFD adherence in routine clinical assessment

-

Recommendation 6. We recommend the use of a standardized patient-reported adherence questionnaire as a reasonable method of adherence assessment when an expert dietician is not readily available.

-

Level of evidence very low; strong recommendation.

There is no single approach to the assessment of adherence to a GFD that has been shown to be highly accurate. Assessment of adherence to a GFD is distinct and should be differentiated from unintentional exposure to gluten on a GFD, as gluten exposure can be observed even when there is a high level of adherence. The traditional reference standard for the assessment of adherence to a GFD is an assessment by a dietician with expertise in CeD14. However, access to these clinicians is limited, and even among those with expertise, there is no standardized method of dietetic assessment, which might result in variations in reported adherence117,118,119,120,121,122,123,124.

Standardized patient-reported adherence questionnaires represent a potential alternative to expert dietician assessments, particularly in situations in which access to an expert dietician is limited or when a comparison of adherence between patients is required. The available data suggest that the use of a standardized patient-reported adherence survey is superior to a patient self-report for assessing dietetic adherence as assessed by expert dieticians and, in some studies, has been predictive of ongoing enteropathy99.

Several patient-reported adherence questionnaires have been published in the peer-reviewed literature, with the most commonly cited being the Biagi score125 and the CDAT119; however, several newer scales, such as the GF-EAT, Diet-GFD and Standardized Diet Evaluation (SDE) have also been described124. In clinical settings, the SDE has been reported to perform better than self-report assessments and serologies97. However, the availability and feasibility of implementing an SDE by a specialist dietician is limited.

There are limited data comparing the scales; however, one study42 has suggested higher specificity for the Biagi score and higher sensitivity for the CDAT, with overall similar operating characteristics.

Overall, the data support the use of a standardized patient-reported adherence questionnaire as a reasonable method of adherence assessment when an expert dietician is not readily available or when a comparison of adherence between populations is required.

The development of new questionnaires and studies comparing the usefulness of various questionnaires might enable the identification of a single preferred instrument. At this time, however, we recommend clinicians choose the questionnaire that seems most relevant and appropriate for their patient population.

GFD adherence questionnaires for clinical assessment of patients with suspected ongoing CeD

-

Recommendation 7. We recommend the use of adherence questionnaires as part of a holistic clinical assessment.

-

Level of evidence very low; weak recommendation.

Although there are many potential causes of ongoing symptoms in individuals with CeD, gluten exposure has been reported to be common. In a study by Silvester and colleagues124 in 222 patients with CeD, 91% of adults on a GFD reported gluten exposure less than once per month, whereas 66% reported intermittent symptoms from suspected gluten exposure. In comparison with studies of patient-reported adherence questionnaires in general coeliac populations, studies to assess the ability of questionnaires to detect gluten exposure in patients with ongoing symptoms or non-responsive disease (ongoing signs or symptoms potentially consistent with active CeD despite an attempted GFD) are limited. On the basis of the available studies, it is likely that standardized adherence questionnaires are superior to subject self-reports in assessing the likelihood of gluten exposure as the cause of ongoing symptoms in individuals with CeD99. However, as evaluations of non-responsive CeD can be clinically challenging and can include several diet-based and non-diet-based aetiologies, adherence questionnaires should be used in this setting only as part of a holistic clinical assessment and when expert dietician support is not available.

Urinary and stool gluten immunogenic peptide detection

-

Recommendation 8. We recommend the determination of GIPs in urine or stool in cases of non-responsive CeD when gluten intake is suspected.

-

Level of evidence very low; strong recommendation.

-

Recommendation 9. We recommend the use of GIPs to prove estimated gluten intake.

-

Level of evidence very low; weak recommendation.

Determination of urine and/or faecal gluten immunogenic peptides (GIPs) is the only available direct approach for evaluation of voluntary or involuntary gluten consumption126,127. Alternative indirect methods are based on the histopathological and immunological consequences of gluten consumption or subjective estimations by questions about symptoms or dietary habits14.

Most of the adult patients with CeD on follow-up with mucosal histological damage are adherent according to dietetic questionnaires, asymptomatic and seronegative. However, the stool and urine samples of this group of patients with persistent mucosal damage are mostly GIP positive (59–100%), and the relationship might increase with the amount of GIP and the frequency of positive GIP tests128.

A substantial proportion of patients with CeD on follow-up consume detectable amounts of gluten (67–89% for weekly to monthly multiple testing). The levels of urinary and faecal GIPs of most of the patients who tested positive for one or both of these tests while following a GFD are substantially lower than those obtained at diagnosis129. This reduction in gluten consumption in GIP-positive patients with CeD on follow-up is sufficient to produce negative serological tests and an absence of clinical symptoms, and could go undetected in dietetic questionnaires. However, the continuous gluten consumption at the low–moderate level revealed by urinary GIP positivity in multiple tests or by substantial levels of faecal GIP seemed to have a predictive value for persistent duodenal mucosal damage in CeD129. Thus, GIP determinations (presumably multiple) can be used to verify the correctness of the GFD; gluten exposure detected in this way is frequently associated with the persistence of duodenal mucosal damage42,97,124,126,127,128,129,130.

Gluten peptides in the stool and urine are produced from previous gluten ingestion: the concentrations of faecal and/or urinary GIP showed significant (P < 0.001) correlation with the amount of gluten ingested, but with a high window of variability likely owing to individual rates of gluten digestion and passage through the gastrointestinal tract, as well as the time of sample collection after gluten intake130,131. Furthermore, the determination of urinary and/or faecal GIPs could be used to estimate the amount of ingested gluten, although the results on this issue seem conflicting, probably owing to the absence of a standardized protocol43,94,126,132,133,134,135,136,137,138,139,140,141,142.

Methods to detect urine or stool GIPs could be limited by the amount of detectable ingested gluten, individual variability in gluten metabolism and the maximum time of detectable GIPs after ingestion of gluten (12–24 h for urine, 1–7 days for stool)131. Single faecal or urine GIP determinations could detect daily ingestion of 50 mg of gluten in 15–50% of patients and 97–100% for unrestricted gluten intake (>5 g), as highlighted in a study by Burger and colleagues130 on 15 patients.

The use of several faecal and/or urine samples at different days and times of the day and the development of a standardized and widely accepted protocol would substantially improve the sensitivity and accuracy of the assessment of diet compliance in patients with CeD.
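The expected benefit of repeated sampling can be illustrated with a simple probability calculation. The sketch below assumes, purely for illustration, that each sample detects gluten exposure independently and with the same probability p; this simplifying assumption is not established by the cited studies, and the function name is hypothetical. Under that assumption, the probability of at least one positive result in n samples is 1 − (1 − p)^n. The single-sample values used (15% and 50%) are the bounds of the range reported above for daily ingestion of 50 mg of gluten.

```python
def prob_at_least_one_positive(p_single, n_samples):
    """Probability that at least one of n samples is GIP positive.

    Assumes independent samples with identical detection probability
    (an illustrative simplification, not a validated model).
    """
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be between 0 and 1")
    if n_samples < 1:
        raise ValueError("n_samples must be at least 1")
    return 1.0 - (1.0 - p_single) ** n_samples


# Single-sample detection rates taken from the 15-50% range in the text
for p in (0.15, 0.50):
    for n in (1, 3, 5):
        print(f"p={p:.2f}, n={n}: {prob_at_least_one_positive(p, n):.3f}")
```

In practice, samples from the same patient are unlikely to be fully independent, because detection depends on the timing of intake and on individual gluten metabolism, so the real gain from repeated testing might be smaller than this calculation suggests.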

Monitoring the effect of gluten avoidance on quality of life

-

Recommendation 10. We recommend the promotion of GFD adherence to improve QoL beyond other clinical benefits. However, clinicians should be aware that GFD hypervigilance might diminish QoL and patients should be monitored for this as well.

-

Level of evidence very low; weak recommendation.

Taking into account 18 studies (14 cross-sectional, three cohort, one case–control) that met the inclusion criteria, more than three-quarters found a positive association between the level of GFD adherence and quality of life (QoL) in adults with CeD43,143,144,145,146,147,148,149,150,151,152,153,154,155,156,157,158,159. More specifically, in individuals with CeD, 14 (77.8%) studies suggested that higher GFD adherence was substantially associated with higher general or CeD-specific QoL143,144,145,146,147,148,150,151,152,153,154,155,156,157. However, three studies156,158,159 found no correlation between GFD adherence and higher QoL, and one study156 found extreme GFD vigilance associated with lower QoL. Most studies were conducted in populations of high socioeconomic status with relatively high GFD adherence and ‘good’ QoL at the onset. Studies ranged in size (50–7,044 participants), geographical location (USA: three151,156,157; Europe: nine43,143,145,146,147,148,155,158,159; Australia and New Zealand: four149,152,153,154; Middle-East: two144,150) and used validated instruments to assess GFD adherence and CeD-specific or general QoL. Findings were limited in that not all studies relied on biopsy-confirmed CeD, GFD adherence and QoL were based on self-reports and the cross-sectional nature of most of the studies did not allow causation to be inferred. In addition to increased GFD adherence, other factors were associated with improved QoL (for example, increased food security, GFD knowledge, CeD-specific self-efficacy, self-compassion, autonomous motivation and fewer psychological and gastrointestinal symptoms) and warrant further study to better understand their role in monitoring and potential areas for intervention. It is important to point out that we focused only on general or CeD-specific QoL and did not consider other aspects of QoL, such as anxiety, depression and/or disordered eating patterns.

Overall, the findings suggest that, in adults with CeD, monitoring to ensure GFD adherence might be important to promote improvements in QoL. However, there remains a concern that extreme GFD vigilance might diminish QoL and should also be monitored.

Endoscopy and histology

Routine use of follow-up duodenal and bulb biopsy to evaluate CeD activity

-

Recommendation 11. We recommend against the use of a routine re-biopsy strategy in patients with CeD on a GFD.

-

Level of evidence very low; strong recommendation.

The need for duodenal biopsies in CeD follow-up has been debated. In the case of seronegative CeD, the initial duodenal biopsy samples are essential for a correct diagnosis1; however, additional data on follow-up biopsy samples in both seropositive and seronegative CeD revealed variability of the histological abnormalities throughout the length of the duodenum and lack of uniform response to gluten elimination, raising questions about the value of performing follow-up biopsies in CeD160,161,162. Studies on mucosal healing after treatment with GFD have revealed that mucosal healing might be slow or incomplete in a substantial number of patients, with healing achieved in only 30% of patients in some studies and with higher recovery rates in children compared with adults41,94,161,162,163,164,165,166,167,168,169. Challenges in comparing data between biopsy follow-up studies relate to the use of retrospective versus prospective methodology, the interobserver variation that occurs in evaluating histology using a categorical classification scheme (for example, Marsh–Oberhuber algorithm) and the lack of use of quantitative histology in some studies170,171.

According to the criteria of the European Society for Paediatric Gastroenterology Hepatology and Nutrition, serology has primary importance in the diagnosis of CeD, regardless of symptoms17. A ‘no biopsy’ approach to CeD diagnosis based on high titres of TG2Ab has been widely debated as a possible diagnostic approach in a subgroup of adult populations; one study suggested that the avoidance of upper endoscopy procedures and biopsies could be achieved in about one-third of adult patients172. Although this approach is only for research purposes, establishing guidelines for obtaining a follow-up biopsy is challenging when considering these shifts in practice.

Only a few studies exist that link duodenal mucosal status on follow-up biopsy samples with prognosis, malabsorption, lymphoma risk and mortality. In these, persistent flat mucosa increases the risk of malignancy173. Mortality risk was not increased in a large cohort of 7,648 patients174 and showed a borderline increase (not statistically associated with persistent mucosal injury) in a smaller study with 381 patients175. Conversely, mortality risk was slightly increased in a large Swedish study with 49,829 patients68. Similarly, the rates of other complications, such as anaemia, malignancy and other malabsorption features, do not seem to increase in patients with persistent mucosal abnormalities compared with patients with normalized mucosa31,44,164,176,177,178,179, apart from a slightly increased risk of hip fracture exclusively in the case of subtotal or total atrophy180.

Current evidence suggests that the resolution of clinical symptom status is the most reliable prognostic factor in CeD, even though these clinical parameters do not reliably correlate with complete mucosal healing181.

In summary, there is insufficient evidence to suggest that clinical outcomes are substantially altered because of routine re-biopsy in adult patients with CeD.

Recommended timing and sampling strategy of duodenal biopsy in patients with CeD on a GFD

-

Recommendation 12. We recommend 12–24 months from the beginning of the GFD as a reasonable time frame to repeat duodenal biopsy in treated patients with CeD, barring a severe clinical course for which repeat biopsy would be indicated in a shorter interval.

-

Level of evidence very low; weak recommendation.

-

Recommendation 13. We recommend four oriented biopsies in the second part of the duodenum, plus two oriented biopsies in the bulb, as a reasonable strategy to assess mucosal healing in patients with CeD on a GFD.

-

Level of evidence very low; strong recommendation.

Currently, there are no studies that indicate the absolute necessity of follow-up biopsy as routine practice for adult patients with CeD in the absence of specific clinical indications. Specifically, follow-up biopsies are not mandatory if the patient with CeD is asymptomatic, on a GFD and has no other features that suggest increased risk of complications13. If a routine biopsy is performed in asymptomatic patients with CeD, most studies recommend a time interval of at least 24 months from the time of the initial biopsy to enable slow recovery, especially in adult populations161,182,183. As stated in British and European guidelines1,14, we propose the following recommendation for performing a biopsy during the follow-up.

Follow-up biopsies should be performed in patients with persistent symptoms despite adherence to a strict GFD, in patients who develop additional red flag symptoms (anaemia, diarrhoea, malabsorption, weight loss) during gluten elimination and in patients with persistently positive CeD serology despite adherence to a strict GFD, as assessed by a dietician (Box 1).

To establish the initial diagnosis of CeD, clinical guidelines recommend obtaining four biopsy samples from the second duodenum and two biopsy samples from the duodenal bulb to account for the possibility of a patchy distribution of injury4. Placing duodenal bulb and distal duodenal biopsy samples in separate specimen containers at diagnosis is not required but does provide information on the distribution of mucosal injury should that information be of interest. To the best of our knowledge, only one study addresses the location and number of biopsies needed to characterize the mucosal status at the time of follow-up42. In this study, repeat duodenal biopsies were performed in 368 patients: the inclusion of biopsy samples from the first portion of the duodenum increased the detection of persistent villous blunting by 10%. In light of this finding, and in the absence of evidence to suggest otherwise, adherence to current biopsy guidelines for diagnosis is recommended. Further, to detect patchy mucosal injury, near-focus narrow-band imaging is encouraged, and it is suggested that endoscopists obtain one biopsy specimen per pass of the forceps as a single-biopsy technique improves the yield of well-oriented duodenal biopsy specimens184,185.

At microscopy, biopsy samples should be evaluated according to published best practice to ensure that only well-oriented villous–crypt units are assessed186. There are data to suggest that morphometric measurements of villous height to crypt depth ratios result in better interobserver agreement on mucosal abnormalities than non-morphometric approaches, and it is hoped that digital approaches to mucosal assessments will become more widely available in the future187.

Molecular analysis of duodenal biopsy samples in refractory CeD

Refractory coeliac disease (RCeD) is defined by the persistence of symptoms and villous atrophy in the absence of other causes despite a strict GFD for at least 6 months188. RCeD can be further classified as type I and type II on the basis of the number of aberrant IELs and clonal rearrangement of the T cell receptor (TCR)189. These aberrant IELs (surface CD3− and intracellular CD3+, surface CD8−) can be identified by immunohistochemistry (IHC) and, more accurately, by flow cytometry. In RCeD type II, aberrant IELs make up 20% or more of total IELs on flow cytometry and more than 50% on IHC190. RCeD type II is considered a low-grade intra-epithelial lymphoma188,189. Typically, RCeD should be managed in tertiary or national referral centres with specific experience in this rare form of CeD.
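The percentage thresholds quoted above differ by laboratory method, which can be made explicit in a short sketch. The Python function below encodes only the aberrant IEL percentage criterion as stated in the text (20% or more on flow cytometry, more than 50% on IHC). The function and constant names are hypothetical, and the sketch deliberately ignores TCR clonality and the clinical context, so it is an illustration of the thresholds rather than a diagnostic tool.

```python
# Thresholds for aberrant IELs as a percentage of total IELs (from the text)
FLOW_CYTOMETRY_THRESHOLD = 20.0  # type II if 20% or more on flow cytometry
IHC_THRESHOLD = 50.0             # type II if more than 50% on IHC


def meets_type_ii_threshold(aberrant_iel_percent, method):
    """Return True if the aberrant IEL percentage meets the RCeD type II cut-off.

    method: "flow_cytometry" or "ihc" (hypothetical labels for this sketch).
    """
    if not 0.0 <= aberrant_iel_percent <= 100.0:
        raise ValueError("percentage must be between 0 and 100")
    if method == "flow_cytometry":
        return aberrant_iel_percent >= FLOW_CYTOMETRY_THRESHOLD
    if method == "ihc":
        return aberrant_iel_percent > IHC_THRESHOLD
    raise ValueError(f"unknown method: {method!r}")


print(meets_type_ii_threshold(25.0, "flow_cytometry"))  # above flow cut-off
print(meets_type_ii_threshold(25.0, "ihc"))             # below IHC cut-off
```

The same percentage can therefore fall on different sides of the cut-off depending on the method used, which is why the method should always be reported alongside the result.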

TCR clonality analysis and immunohistochemistry

-

Recommendation 14. We recommend the use of TCR clonality analysis to subtype refractory CeD. If immunophenotyping by flow cytometry of isolated small intestinal IELs is not available, a combinatory approach of TCR clonality analysis and IHC of duodenal mucosa is recommended.

-

Level of evidence very low; weak recommendation.

Eight studies were identified that included data on diagnostic accuracy for RCeD subtype I or II191,192,193,194,195,196,197,198. However, none of these was designed as a prospective diagnostic study, recruiting patients affected by so-far unresolved enteropathies, including those with a prior diagnosis of CeD and following a strict, prospectively designed diagnostic trait. Furthermore, comparability of the included studies is somewhat hampered by lack of a gold standard that defines RCeD subtypes and marked differences in the purpose of these non-prospective studies that might affect the selection of patients to include a homogeneous patient population to address the respective study aims.

In particular, the sensitivity in diagnosis of RCeD type II with IHC was found to be 62–100%, whereas specificity was 70–100%199. The sensitivity of TCR clonality analysis by PCR ranged from 53% to 100%, whereas the specificity range was 0–100%199,200. Although these ranges document a substantial scatter of data, the somewhat more stable sensitivities in analysing the T cell clonality and the more stable specificities in the IHC analysis would favour a sequential diagnostic process. Moreover, early studies have shown that patients with evident TCR clonality (RCeD type II), who present with small intestinal IELs that are CD8− or TCRαβ−, are more prone to develop an enteropathy-associated T cell lymphoma, which might implicate a prognostic function of this marker197.

One issue in the interpretation of TCR clonality analyses is how to deal with an oligoclonal band pattern and prominent clonal peaks. Generally, most oligoclonal results should be interpreted as normal (that is, not-disease-related) findings. However, prominent clonal peaks within an oligo- or polyclonal background can be substantial, specifically when they are reproduced in independent mucosal samples of the same patient201.

One IHC analysis that should be further observed is immunostaining for NKp46+ IELs of the small intestinal mucosa, as this seems to have a very good discriminatory property191.

Both techniques, immunophenotyping by IHC and analysis of TCR clonality, have limitations with regard to diagnostic accuracy. Specifically, the analysis of TCR clonality by PCR of the variable domain of the TCR lacks specificity201.

If other techniques (for example, multi-parameter testing by flow cytometry) are unavailable, performing a combination of IHC and TCR clonality testing is recommended.

Diagnostic accuracy of these techniques increases when identical prominent clonal PCR sequences are confirmed in a second independent mucosa sample that originates from an independent gastroscopy and by interpreting the two diagnostic modalities sequentially by identifying clonal T cell populations first, and second, by judging the risk of progression to lymphoma by IHC immunophenotyping of small intestinal IELs197.

Role of flow cytometric analysis of isolated small intestinal intra-epithelial lymphocytes

-

Recommendation 15. We recommend the use of flow cytometry to immunophenotype small intestinal IELs as the reference standard for subtyping RCeD.

-

Level of evidence very low; strong recommendation.

Five studies were identified that included data on diagnostic accuracy for determination of RCeD subtype by flow cytometry after immunostaining188,190,192,199,202. Most studies identified the so-called aberrant lymphocyte population by immunostaining for cytoplasmic CD3+CD7+CD103+ lineage lymphocytes in a population of isolated, mostly IELs from small intestinal mucosa190. Again, these studies were not prospectively designed. Furthermore, the comparability of the included studies was similarly hampered by the factors listed earlier188,192,202.

The sensitivity for diagnosis of RCeD type II by flow cytometry was, in most studies, as high as 100%. However, these results included some studies that had not initially been designed to evaluate the diagnostic accuracy of flow cytometry188,202. Nevertheless, one study found a significantly (P = 0.0009) reduced sensitivity for flow cytometry, which was attributed to a small number of patients (four among 30 patients with RCeD) with an unusual course of RCeD, for example, the development of γδ T cell lymphoma199. This study suggested that combining TCR clonality analysis and flow cytometry results in an increased diagnostic yield. This observation199 needs to be critically appraised, as some of the studies introduced in the previous paragraph described markedly decreased sensitivities for the TCR clonality analysis.

If available, immunophenotyping of isolated small intestinal IELs by flow cytometry after immunostaining should be performed for RCeD subtyping, as it has high diagnostic accuracy. The panel should comprise at least the following antibody markers: surface CD3, intracytoplasmic CD3, CD7, CD103 and CD45, optionally supplemented by other markers, such as CD4, CD8 and TCR antibodies.

As one study identified a circumscribed lack of sensitivity of this technique192, a combinatory approach analysing small intestinal TCR clonality and immunophenotyping of small intestinal IELs by flow cytometry can be considered.

Monitoring of CeD with small bowel endoscopy

-

Recommendation 16. We recommend the use of capsule endoscopy and/or device-assisted enteroscopy in CeD monitoring in cases of suspected complications and RCeD.

-

Level of evidence very low; strong recommendation.

Features of CeD in the small bowel, such as villous atrophy, scalloping of folds, mosaicism, nodularity of the mucosa, fissuring of folds and ulcers, can be delineated on small bowel capsule endoscopy (SBCE)203,204,205. The sensitivity of SBCE to delineate features of CeD varies between 70% and 93% and the specificity varies between 90% and 100%203,204,206,207,208,209,210. However, duodenal histology remains the gold standard in making the diagnosis.

SBCE has a role in the diagnosis of CeD in which there is inadequate evidence for the diagnosis of CeD (normal or mildly positive CeD serology or evidence of Marsh 1 and 2 disease but with absence of villous flattening) or in which patients refuse or cannot undergo a gastroduodenoscopy for duodenal biopsy samples211,212,213.

Patients with established CeD occasionally present with persistent or recurrent signs and symptoms of malabsorption. These individuals require further investigation to rule out other causes for their symptoms, including inflammatory bowel disease. Although duodenal histology helps to determine whether there is persistent disease, SBCE can have a role in assessing disease extent in the small bowel and exclude complications214,215,216,217,218,219,220,221,222,223,224,225,226.

The length of the affected small bowel has a poor correlation with clinical symptoms and coeliac serology203,204,207,209,227. These should not determine whether further investigations, including SBCE, are carried out in patients with persistent symptoms. There are only limited data that correlate symptoms and coeliac serology with a diagnostic yield of SBCE for CeD features and extent of disease203,221,227.

Although SBCE has high sensitivity for the detection of macroscopic changes in CeD, there is poor correlation between the severity of duodenal histology and CeD-related findings on SBCE203,204,227. Only a few studies describe a positive correlation between findings on SBCE and the severity of histology213,222.

RCeD is a recognized complication of CeD. RCeD type II carries a low survival rate193. SBCE can identify complications of RCeD that most commonly occur in the distal small bowel, such as adenocarcinoma and enteropathy-associated T cell lymphoma217,221,228. Dedicated small bowel radiology has a complementary role to SBCE in the diagnosis of malignancies, in staging or in cases in which there is a high suspicion of malignancy but a negative SBCE194,229,230. However, superficial ulceration, mainly occurring in the pre-malignant phase (ulcerative jejunoileitis), can be missed by radiology and is more accurately assessed on SBCE220,222,223,225,229.

A histological diagnosis of malignancy can be confirmed during device-assisted enteroscopy in patients with suspected complications delineated on SBCE228,231,232,233,234,235,236,237.

Patients with RCeD require close monitoring and regular screening with repeated duodenal histology to assess RCeD type I and type II, and repeated SBCE can be helpful in measuring the extent of the disease and ruling out complications. The interval of surveillance remains unclear234,235. Patients with a complicated disease tend to have a large portion of the small bowel involved and a corresponding worse prognosis (RCeD type II versus type I versus uncomplicated CeD)236. The extent of disease (that is, the number of small bowel segments involved) can be assessed on SBCE as a measurement of response to treatment in RCeD236. Mild or no improvement in the extent of the disease can be considered an indication to alter or step up therapy237.

Other investigations

Evaluation of intestinal absorption in CeD: d-xylose breath test or urinary mannitol secretion

-

Recommendation 17. We do not recommend the use of the d-xylose breath test or urinary secretion test for absorption evaluation in patients with CeD.

-

Level of evidence very low; strong recommendation.

Permeability testing (d-xylose testing, lactulose to mannitol ratio) might detect changes in intestinal permeability associated with CeD; however, evidence of its use is limited and mostly dated, with a sensitivity and specificity subject to substantial variability. Therefore, these tests are not recommended during follow-up in patients with CeD238,239.

Bone mineral density

One of the most common and well-documented extra-intestinal manifestations of CeD is bone disease (osteopenia and osteoporosis), which increases fracture risk240. Bone tissue undergoes life-long remodelling involving synthesis and resorption processes241. Although bone health has been clinically recognized in CeD for several decades, the introduction of bone mineral density (BMD) measurement via dual-energy X-ray absorptiometry (DXA) in the late 1980s allowed the objective measurement of BMD at different body sites242. Bone loss is a common finding in untreated patients with CeD, despite many of them not meeting the criteria for osteoporosis243. Although malabsorption has been causally linked to adverse bone health in CeD, current studies have shown that genetics, local and systemic immunological factors, lack of physical activity and potential microbiota abnormalities might contribute to bone deterioration244,245,246,247. Indeed, osteoporosis prevalence as measured by DXA scan is affected by, among other factors, biological sex, geography, area of skeleton measured, age at disease onset and at diagnosis, clinical phenotype, menopause or andropause, and the degree of adherence to the GFD240,241. Because of the multifactorial nature of osteoporosis, its worldwide prevalence in CeD ranges from 1.7% to 42%241. According to two meta-analyses that investigated the most common clinical consequences of osteoporosis, there is a 60–100% excess of bone fractures before CeD diagnosis compared with the general population248,249. Notably, after the first year of the GFD, fracture excess is comparable to that of the general population despite the fact that BMD is not normalized in most patients with osteoporosis250. A decrease in BMD, as measured by DXA in a patient on a GFD, might be due to poor diet adherence, the onset of menopause or andropause, or other factors related to osteoporosis, but not necessarily to CeD pathogenesis.

Although the Fracture Risk Assessment tool has been developed to estimate the 10-year probability of major osteoporotic fractures and mortality risk in the general population, there is no concrete evidence of the value of incorporating this tool in the assessment of patients with CeD. Future work will determine whether approaches such as this help in the evaluation of bone health in individuals who are undergoing a targeted GFD251.

Impaired BMD is frequent in untreated CeD, leading to an increased risk of bone fractures241. Delays in diagnosis are associated with severe bone deterioration240. Despite microstructural evidence of bone loss in most patients, including those with normal BMD252, studies have shown that the deterioration of bone health is greater in patients with a classical clinical presentation than in patients with subclinical or asymptomatic CeD253. DXA can provide information about baseline BMD in patients newly diagnosed with CeD. Other surrogate markers of bone metabolism, such as serum levels of ionized calcium, serum vitamin D, bone-specific alkaline phosphatase, parathyroid hormone or surrogate markers of bone resorption (such as cross-linked C-telopeptide, C-terminal telopeptide of type I collagen, cross-linked N-telopeptide), can provide incremental information on bone metabolism240.

The evidence discussed above suggests that DXA and bone metabolism markers could be useful to assess baseline bone health at diagnosis; however, experts have not reached consensus on the best time to perform these tests. An accurate diagnosis of bone health deterioration can enable interventions to delay or correct complications. On the other hand, bone health evaluation using DXA in patients with subclinical or asymptomatic CeD has not been sufficiently investigated, and no appropriate indications have been developed to date. These gaps should be addressed to improve bone health management and the prevention of complications following the initiation of a GFD.

Following the start of a GFD, BMD has been shown to improve253. This beneficial effect is associated with a reduction in bone fractures, even though this clinical event is not solely dependent on bone strength250. Patients with the most severe baseline BMD impairment rarely normalize bone mineral content. Despite persistent BMD impairment, as measured by DXA, 1 year after starting the GFD, bone fracture risk normalizes253.

Similarly, although BMD in patients with subclinical or asymptomatic CeD is usually impaired at baseline, the low prevalence of fractures, which was comparable between 148 patients with CeD and 296 control individuals, casts doubt on the need for annual DXA measurement. However, the demonstration of BMD deterioration after initiation of the GFD in such patients, which is, in most cases, secondary to dietetic transgressions254, can be a strong argument for reinforcing advice on dietetic compliance, adequate calcium and vitamin D intake, and physical exercise, although the last seems to have a less relevant role in improving BMD in premenopausal women255. In some cases, and despite strict GFD adherence, bone deterioration might be related to secondary intrinsic bone disorders that are unrelated to CeD241. Finally, menopause and andropause can affect bone mineralization and quality256. Thus, it seems reasonable to investigate BMD by DXA in any patient with CeD who has reached this stage of life. In the absence of scientific evidence, we suggest adherence to the accepted international guidelines on osteoporosis257,258.

Conclusions

CeD is an increasingly important public health concern, given the high prevalence of the disease and the growing appreciation of the potential long-term effects of this disease on patients and the health-care system. As CeD is a life-long condition with increased risks of systemic complications and limitations in current dietetic treatments, ongoing monitoring has been frequently recommended. However, evidence-based guidelines for monitoring practices are not available. In this article, we attempt to provide recommendations regarding the use of key monitoring assessments frequently used in current clinical practice (summarized in Fig. 1 and Table 1). In Box 2, the main concepts are briefly described.

a, Parameters related to gluten-free diet (GFD) monitoring, coeliac disease (CeD) activity and dual-energy X-ray absorptiometry (DXA), the last of which reflects changes usually considered a consequence of prolonged malabsorption. Alterations of anti-type 2 transglutaminase antibody (TG2Ab), symptoms and blood tests during the monitoring phase could reflect low GFD adherence, residual CeD activity, or both. T cell receptor (TCR) clonality and duodenal flow cytometry (flow cyt) identify and classify a refractory state (type I or II). b, Symptoms and biomarkers (such as antibodies and histology) usually recover in different time frames, with symptoms resolving more quickly than histological changes. BMD, bone mineral density; GIP, gluten immunogenic peptide; RCeD, refractory coeliac disease.

The strengths of this work include the rigorous approach using modern GRADE methodology and the inclusion of experts in CeD from a wide range of disciplines. However, we acknowledge that the level of evidence is low. In addition, these guidelines are focused on the follow-up of adults with CeD and might not apply to paediatric populations.

Unfortunately, the systematic evaluation of the currently available research on the monitoring of patients with CeD underlines the lack of evidence and the need for further research in this field. The lack of research on relevant issues that form the basis of CeD monitoring is surprising. The frequency of follow-up visits is not established and is usually driven by the regional organization of care rather than scientific evidence. Multiple professionals are involved in the monitoring phase, but their involvement depends on local resources and organization. The dynamics of TG2Ab IgG levels for detecting poor dietetic compliance in patients with CeD and IgA deficiency are completely unknown. Furthermore, the optimal method for tissue sampling during upper endoscopy in the case of a follow-up biopsy is also unknown, as is the value of adopting different grading systems at diagnosis and during follow-up259. In practice, the Marsh score indicates the most severe histological lesion identified, without taking into account the percentage of mucosal involvement; however, the extent of mucosal involvement might still be important in the context of ongoing monitoring259. The role of bulb biopsies in follow-up is far from clear260. The use of GIP detection in urine and stool, included here for the first time, is becoming increasingly relevant in the evaluation of GFD adherence141, and represents the only way to directly test for the presence of gluten in patients’ diets. Telemedicine emerged as a crucial tool during the coronavirus disease 2019 (COVID-19) pandemic for the continuous monitoring of various gastrointestinal diseases. Although its application for this purpose was highly desirable, its potential future integration, in conjunction with point-of-care tests, to monitor CeD and assess adherence to a GFD remains uncertain261. From this point of view, the present article represents an important instrument to drive clinical research in the coming years.

In conclusion, we anticipate that this guideline will provide pragmatic information to clinicians and enable the improvement and consistency of care of patients with CeD, as well as highlight important areas for future research.

References

Al-Toma, A. et al. European Society for the Study of Coeliac Disease (ESsCD) guideline for coeliac disease and other gluten-related disorders. United European Gastroenterol. J. 7, 583–613 (2019).

Elli, L. et al. Management of celiac disease in daily clinical practice. Eur. J. Intern. Med. 61, 15–24 (2019).

Green, P. H. R., Krishnareddy, S. & Lebwohl, B. Clinical manifestations of celiac disease. Dig. Dis. 33, 137–140 (2015).

Rubio-Tapia, A., Hill, I. D., Kelly, C. P., Calderwood, A. H. & Murray, J. A. ACG clinical guidelines: diagnosis and management of celiac disease. Am. J. Gastroenterol. 108, 656–676 (2013).

Wieser, H. Chemistry of gluten proteins. Food Microbiol. 24, 115–119 (2007).

Schuppan, D., Dennis, M. D. & Kelly, C. P. Celiac disease: epidemiology, pathogenesis, diagnosis, and nutritional management. Nutr. Clin. Care 8, 54–69 (2005).

Elli, L., Bergamini, C. M., Bardella, M. T. & Schuppan, D. Transglutaminases in inflammation and fibrosis of the gastrointestinal tract and the liver. Dig. Liver Dis. 41, 541–550 (2009).

Iversen, R. & Sollid, L. M. The immunobiology and pathogenesis of celiac disease. Annu. Rev. Pathol. 18, 47–70 (2022).

Catassi, G. N., Pulvirenti, A., Monachesi, C., Catassi, C. & Lionetti, E. Diagnostic accuracy of IgA anti-transglutaminase and IgG anti-deamidated gliadin for diagnosis of celiac disease in children under two years of age: a systematic review and meta-analysis. Nutrients 14, 7 (2021).

Husby, S., Murray, J. A. & Katzka, D. A. AGA clinical practice update on diagnosis and monitoring of celiac disease-changing utility of serology and histologic measures: expert review. Gastroenterology 156, 885–889 (2019).

Oberhuber, G., Granditsch, G. & Vogelsang, H. The histopathology of coeliac disease: time for a standardized report scheme for pathologists. Eur. J. Gastroenterol. Hepatol. 11, 1185–1194 (1999).

Marsh, M. N. Gluten, major histocompatibility complex, and the small intestine. A molecular and immunobiologic approach to the spectrum of gluten sensitivity (‘celiac sprue’). Gastroenterology 102, 330–354 (1992).

Zingone, F. et al. Guidelines of the Italian societies of gastroenterology on the diagnosis and management of coeliac disease and dermatitis herpetiformis. Dig. Liver Dis. 54, 1304–1319 (2022).

Ludvigsson, J. F. et al. Diagnosis and management of adult coeliac disease: guidelines from the British Society of Gastroenterology. Gut 63, 1210–1228 (2014).

Makharia, G. K. et al. The global burden of coeliac disease: opportunities and challenges. Nat. Rev. Gastroenterol. Hepatol. 19, 313–327 (2022).

Singh, P. et al. Global prevalence of celiac disease: systematic review and meta-analysis. Clin. Gastroenterol. Hepatol. 16, 823–836.e2 (2018).

Husby, S. et al. European Society Paediatric Gastroenterology, Hepatology and Nutrition guidelines for diagnosing coeliac disease 2020. J. Pediatr. Gastroenterol. Nutr. 70, 141–156 (2020).

Lundin, K. E. et al. Understanding celiac disease monitoring patterns and outcomes after diagnosis: a multinational, retrospective chart review study. World J. Gastroenterol. 27, 2603–2614 (2021).

Herman, M. L. et al. Patients with celiac disease are not followed up adequately. Clin. Gastroenterol. Hepatol. 10, 893–899.e1 (2012).

Bortoluzzi, F. et al. Sustainability in gastroenterology and digestive endoscopy: position paper from the Italian Association of Hospital Gastroenterologists and Digestive Endoscopists (AIGO). Dig. Liver Dis. 54, 1623–1629 (2022).

Rodríguez De Santiago, E. et al. Reducing the environmental footprint of gastrointestinal endoscopy: European Society of Gastrointestinal Endoscopy (ESGE) and European Society of Gastroenterology and Endoscopy Nurses and Associates (ESGENA) Position Statement. Endoscopy 54, 797–826 (2022).

Brown, D. A review of the PubMed PICO tool: using evidence-based practice in health education. Health Promot. Pract. 21, 496–498 (2020).

Kavanagh, B. P. The GRADE system for rating clinical guidelines. PLoS Med. 6, e1000094 (2009).

GRADE Handbook. https://gdt.gradepro.org/app/handbook/handbook.html#h.tc16aijafo2x (2013).

Best, W. R., Becktel, J. M. & Singleton, J. W. Rederived values of the eight coefficients of the Crohn’s disease activity index (CDAI). Gastroenterology 77, 843–846 (1979).

Jones, R., Coyne, K. & Wiklund, I. The gastro-oesophageal reflux disease impact scale: a patient management tool for primary care. Aliment. Pharmacol. Ther. 25, 1451–1459 (2007).

Revicki, D. A., Wood, M., Wiklund, I. & Crawley, J. Reliability and validity of the gastrointestinal symptom rating scale in patients with gastroesophageal reflux disease. Qual. Life Res. 7, 75–83 (1998).

Lohiniemi, S., Mäki, M., Kaukinen, K., Laippala, P. & Collin, P. Gastrointestinal symptoms rating scale in coeliac disease patients on wheat starch-based gluten-free diets. Scand. J. Gastroenterol. 35, 947–949 (2000).

Leffler, D. A. et al. A validated disease-specific symptom index for adults with celiac disease. Clin. Gastroenterol. Hepatol. 7, 1328–1334 (2009).

Itzlinger, A., Branchi, F., Elli, L. & Schumann, M. Gluten-free diet in celiac disease—forever and for all? Nutrients 10, 1796 (2018).

Farina, E. et al. Clinical value of tissue transglutaminase antibodies in celiac patients over a long term follow-up. Nutrients 13, 3057 (2021).

Leffler, D. et al. Kinetics of the histological, serological and symptomatic responses to gluten challenge in adults with coeliac disease. Gut 62, 996–1004 (2013).

Sugai, E. et al. Dynamics of celiac disease-specific serology after initiation of a gluten-free diet and use in the assessment of compliance with treatment. Dig. Liver Dis. 42, 352–358 (2010).

Nachman, F. et al. Serological tests for celiac disease as indicators of long-term compliance with the gluten-free diet. Eur. J. Gastroenterol. Hepatol. 23, 473–480 (2011).

Zanini, B. et al. Five year time course of celiac disease serology during gluten free diet: results of a community based ‘CD-Watch’ program. Dig. Liver Dis. 42, 865–870 (2010).

Bürgin-Wolff, A., Dahlbom, I., Hadziselimovic, F. & Petersson, C. J. Antibodies against human tissue transglutaminase and endomysium in diagnosing and monitoring coeliac disease. Scand. J. Gastroenterol. 37, 685–691 (2002).

Vahedi, K. et al. Reliability of antitransglutaminase antibodies as predictors of gluten-free diet compliance in adult celiac disease. Am. J. Gastroenterol. 98, 1079–1087 (2003).

Dipper, C. R. et al. Anti-tissue transglutaminase antibodies in the follow-up of adult coeliac disease. Aliment. Pharmacol. Ther. 30, 236–244 (2009).