Abstract

Transfer entropy is a recently introduced information-theoretic measure quantifying directed statistical coherence between spatiotemporal processes and is widely used in diverse fields ranging from finance to neuroscience. However, its relationships to fundamental limits of computation, such as Landauer's limit, remain unknown. Here we show that in order to increase transfer entropy (predictability) by one bit, heat flow must match or exceed Landauer's limit. Importantly, we generalise Landauer's limit to bi-directional information dynamics for non-equilibrium processes, revealing that the limit applies to prediction, in addition to retrodiction (information erasure). Furthermore, the results are related to negentropy and to Bremermann's limit and the Bekenstein bound, producing, perhaps surprisingly, lower bounds on the computational deceleration and information loss incurred during an increase in predictability about the process. The identified relationships set new computational limits in terms of fundamental physical quantities and establish transfer entropy as a central measure connecting information theory, thermodynamics and theory of computation.


Introduction

Transfer entropy1 was designed to determine the direction of information transfer between two, possibly coupled, processes, by detecting asymmetry in their interactions. It is a Shannon information-theoretic quantity2,3,4 which measures a directed relationship between two time-series processes Y and X. Specifically, the transfer entropy TY→X measures the average amount of information that states yn at time n of the source time-series process Y provide about the next states xn+1 of the destination time-series process X, in the context of the previous state xn of the destination process (see more details in Methods):

$$T_{Y \to X} = \sum_{x_{n+1},\, x_n,\, y_n} p(x_{n+1}, x_n, y_n) \log_2 \frac{p(x_{n+1} \mid x_n, y_n)}{p(x_{n+1} \mid x_n)} \tag{1}$$

The definition is asymmetric in Y and X, hence the labelling of an information source and destination. Intuitively, it helps to answer the question “if I know the state of the source, how much does that help to predict the state transition of the destination?”.
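As an illustration of the definition, the following minimal Python sketch evaluates the average transfer entropy for a small discrete joint distribution p(xn+1, xn, yn) with a history length of one. The function name `transfer_entropy` and the two toy distributions are assumptions introduced here for illustration only; they do not come from the original analysis.

```python
import math
from collections import defaultdict


def transfer_entropy(joint):
    """Average transfer entropy T_{Y->X} in bits.

    `joint` maps (x_next, x_prev, y_prev) -> probability and must sum to 1.
    """
    p_xx = defaultdict(float)  # p(x_next, x_prev)
    p_xy = defaultdict(float)  # p(x_prev, y_prev)
    p_x = defaultdict(float)   # p(x_prev)
    for (x_next, x_prev, y_prev), p in joint.items():
        p_xx[(x_next, x_prev)] += p
        p_xy[(x_prev, y_prev)] += p
        p_x[x_prev] += p

    te = 0.0
    for (x_next, x_prev, y_prev), p in joint.items():
        if p == 0.0:
            continue  # zero-probability events contribute nothing
        cond_with_source = p / p_xy[(x_prev, y_prev)]               # p(x_next | x_prev, y_prev)
        cond_without_source = p_xx[(x_next, x_prev)] / p_x[x_prev]  # p(x_next | x_prev)
        te += p * math.log2(cond_with_source / cond_without_source)
    return te


# Toy case 1 (assumed): X copies the previous state of a fair-coin source Y.
copy_dist = {(y, x, y): 0.25 for x in (0, 1) for y in (0, 1)}
# Toy case 2 (assumed): all three variables are independent fair coins.
independent_dist = {(xn, x, y): 0.125 for xn in (0, 1) for x in (0, 1) for y in (0, 1)}

print(transfer_entropy(copy_dist), transfer_entropy(independent_dist))
```

In the first case knowing the source removes all uncertainty about the next destination state, so the transfer entropy is one bit; in the second case the source is uninformative and the transfer entropy vanishes.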

Following the seminal work of Schreiber1 numerous applications of transfer entropy have been successfully developed, by capturing information transfer within various domains, such as finance5, ecology6, neuroscience7,8, biochemistry9, distributed computation10,11,12, statistical inference13, complex systems14, complex networks15,16, robotics17, etc. Interestingly, maxima of transfer entropy were observed to be related to critical behaviour, e.g., average transfer entropy was observed to maximize on the chaotic side of the critical regime within random Boolean networks18 and was analytically shown to peak on the disordered side of the phase transition in a ferromagnetic 2D lattice Ising model with Glauber dynamics19. Transfer entropy was also found to be high while a system of coupled oscillators was beginning to synchronize, followed by a decline from the global maximum as the system was approaching a synchronized state16. Similarly, transfer entropy was observed to be maximized in coherent propagating spatiotemporal structures within cellular automata (i.e., gliders)10 and self-organizing swarms (cascading waves of motions)14.

There is growing awareness that information is a physical quantity, with several studies relating various information-theoretic concepts to thermodynamics20,21,22,23,24, primarily through Landauer's principle25. In this paper we report on a physical interpretation of transfer entropy and its connections to fundamental limits of computation, such as Landauer's limit.

Landauer's principle, dating back to 1961 (ref. 25), is a physical principle specifying the lower theoretical limit of energy consumption needed for a computation. It associates the logical irreversibility of functions involved in the computation with physical irreversibility, requiring a minimal heat generation per machine cycle for each irreversible function. According to Bennett20, “any logically irreversible manipulation of information, such as the erasure of a bit …, must be accompanied by a corresponding entropy increase in non-information bearing degrees of freedom of the information processing apparatus or its environment”. Specifically, the principle states that irreversible destruction of one bit of information results in dissipation of at least kT log 2 joules of energy into the environment, i.e., an entropy increase of at least k log 2 in the environment; the energy bound kT log 2 is the Landauer limit. Here T is the temperature of the computing circuit in kelvins and k is Boltzmann's constant.
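To give a sense of scale, the short Python sketch below evaluates the Landauer limit kT log 2 numerically. The function name `landauer_limit` and the choice of 300 K as an example temperature are assumptions made here for illustration; the logarithm is taken as the natural logarithm, as is standard for this bound.

```python
import math

K_BOLTZMANN = 1.380649e-23  # Boltzmann's constant in J/K (exact SI value)


def landauer_limit(temperature_kelvin):
    """Minimum heat (in joules) dissipated when one bit is erased at temperature T."""
    if temperature_kelvin <= 0:
        raise ValueError("temperature must be positive")
    return K_BOLTZMANN * temperature_kelvin * math.log(2)


# Example temperature (assumed): roughly room temperature.
print(f"{landauer_limit(300.0):.3e} J per bit at 300 K")
```

At 300 K the bound is approximately 2.87 × 10⁻²¹ J per erased bit, many orders of magnitude below the switching energies of conventional circuitry.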

We shall consider the non-equilibrium thermodynamics of a physical system X close to equilibrium. At any given moment in time, n, the thermodynamic state Xn of the physical system is given by a vector xn ∈ Rd, comprising d variables, for instance the (local) pressure, temperature, chemical concentrations and so on. A state vector completely describes the physical macrostate as far as predictions of the outcomes of all possible measurements performed on the system are concerned26. The state space of the system is the set of all possible states of the system.

The thermodynamic state is generally considered as a fluctuating entity, so that the conditional probability of a transition from xn to xn+1, that is, p(xn+1|xn), is a well-defined property of the system and can be accurately estimated by a proper sampling procedure. Each macrostate can be realised by a number of different microstates consistent with the given thermodynamic variables. Importantly, in the theory of non-equilibrium thermodynamics close to equilibrium, the microstates belonging to one macrostate x are equally probable.

A state vector, y, describes a state of some exterior system Y, possibly coupled to the system represented by X. Due to the presence or lack of such coupling, the time-series processes corresponding to X and Y may or may not be dependent. For a state transition from xn to xn+1, we shall say that σ(x)y is the internal entropy production of X in the context of some source Y, while ΔS(x)ext is the entropy production attributed to Y, so that (see Methods, (22)):

$$\Delta S^{(x)} = \sigma^{(x)}_y + \Delta S^{(x)}_{ext} \tag{2}$$

where ΔS(x) is the total variation of the entropy of system X.

Henceforth, we shall consider two simple examples illustrating entropy dynamics. In both these examples the physical system X is surrounded by the physical system Y (note that X is not a component of Y). The first example is the classical Joule expansion and the second is compression in the Szilárd engine-like device. These examples are fully described in Methods, illustrating cases with and without external entropy production. A comparison between these two examples shows that, although the resultant entropy change is the same in magnitude |ΔS(x)| = k log 2 in both cases, the change is brought about differently: (i) for the Joule expansion (of a one molecule gas), σ(x)y = k log 2 and ΔS(x)ext = 0, while (ii) for the Szilárd engine's compression (resetting one bit) σ(x)y = 0 and ΔS(x)ext = −k log 2.

At this stage, we would like to point out two important lessons from this comparison. Firstly, as argued by Bennett20, “a logically irreversible operation, such as the erasure of a bit or the merging of two paths, may be thermodynamically reversible or not depending on the data to which it is applied”. The (computation) paths referred to here are trajectories through the state-space or phase portrait of a system: if at time step n + 1 we reach a global system state xn+1 with multiple precursor states xn, then we have irreversibly destroyed a non-zero amount of information. This information is quantified by the entropy of the previous state conditioned on the next state, i.e., the conditional entropy h(xn | xn+1) at time step n + 1 (see Methods). Bennett elaborates that, if the data being erased is random, its erasure would represent a reversible entropy transfer to the environment, compensating an earlier entropy transfer from the environment during, e.g., a previous isothermal expansion. This, as expected, would make the total work yield of the cycle zero, in obedience to the Second Law. For a bit reset, as for any usual deterministic digital computation, the data is not random, being determined by the device's initial state; this is a crucial difference, as pointed out by Landauer and Bennett.

It is worth pointing out that the laws “of a closed physical system are one-to-one”22, p.78, meaning that in closed physical systems (or the universe as a whole) computational paths do not merge and information cannot be destroyed. We can only measure information destruction in open systems and what we measure is a departure of information from this system into the external, unobserved environment, where the destroyed information is offloaded along with energy dissipation. Note that a typical connection between an observed computational system and external environment is the physical representation of the computational system (e.g. bit registers): after all, “information is physical”27.

Secondly, we note that for the Szilárd engine's compression (resetting the bit), there is a decrease in the thermodynamical entropy of the one molecule gas by k log 2 and so one may argue that there is an increase in predictability about this system transition. It is precisely this intuition that was recently formalized via transfer entropy capturing the external entropy production28.

Arguably, transfer entropy takes an opposite perspective to information destruction, focusing on the ability to predict in coupled systems, rather than on uncertainty in retrodiction. In our recent work28, transfer entropy was given a precise thermodynamic interpretation. The proposed thermodynamic interpretation of transfer entropy near equilibrium used the specialised Boltzmann's principle and related conditional probabilities to the probabilities of the corresponding state transitions. This in turn characterised transfer entropy as a difference of two entropy rates: the rate for a resultant transition and another rate for a possibly irreversible transition within the system affected by an additional source. Then it was shown that this difference, the local transfer entropy, is proportional to the external entropy production, possibly due to irreversibility. In the following sections we revisit the main elements of this approach, leading to new fundamental connections with Landauer's principle, Bremermann's limit, the Bekenstein bound, as well as the Massieu-Planck thermodynamic potential (free entropy).

Results

Preliminary results: transfer entropy as external entropy production

Supported by the background described in Methods, specifically the assumptions (23) – (24) and the expressions (18) – (20), transfer entropy can be interpreted via transitions between states28:

$$t_{Y \to X}(n+1) = \log_2 \frac{Z_1}{Z_2} + \frac{1}{k \log 2}\left(\sigma^{(x)}_y - \Delta S^{(x)}\right) \tag{3}$$

When one considers a small fluctuation near an equilibrium, Z1 ≈ Z2, as the number of microstates in the macrostates does not change much. This removes the additive constant. Using the expression for entropy production (2), one obtains

$$t_{Y \to X}(n+1) = -\frac{1}{k \log 2}\,\Delta S^{(x)}_{ext} \tag{4}$$

If Z1 ≠ Z2, the relationship includes the additive constant $\log_2 (Z_1/Z_2)$.

That is, the transfer entropy is proportional to the external entropy production, brought about by the source of irreversibility Y. The opposite sign reflects the different direction of entropy production attributed to the source Y: when ΔS(x)ext > 0, i.e. the entropy due to interactions with the surroundings increased during the transition in X, then the local transfer entropy is negative and the source misinforms about the macroscopic state transition. When, on the other hand, ΔS(x)ext < 0, i.e. some entropy produced during the transition in X dissipated to the exterior, then the local transfer entropy is positive and better predictions can be made about the macroscopic state transitions in X if source Y is measured.

Turning to our examples (see Methods), we note that (i) for the Joule expansion ΔS(x)ext = 0 and so, according to equation (4), tY→X = 0 as well, as the transition is adiabatic and X and Y are independent processes, while (ii) for the Szilárd engine's compression ΔS(x)ext = −k log 2 and so equation (4) yields tY→X = 1. That is, in the latter case of resetting one bit, the decrease in the thermodynamical entropy of the one molecule gas by k log 2 is accompanied by an increase in predictability about this state transition, by one bit precisely: this increase is captured by the transfer entropy from the exterior heat bath Y to the container X.
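The two examples can be checked numerically with a small Python sketch. It assumes the near-equilibrium proportionality used in the text for these examples, namely that the local transfer entropy in bits equals the external entropy production divided by −k log 2 (with Z1 ≈ Z2, so no additive constant). The function name `local_te_bits` is hypothetical.

```python
import math

K_BOLTZMANN = 1.380649e-23  # Boltzmann's constant, J/K


def local_te_bits(delta_s_ext):
    """Local transfer entropy (bits) from external entropy production (J/K).

    Assumes the near-equilibrium case Z1 ≈ Z2, so no additive constant appears.
    """
    return -delta_s_ext / (K_BOLTZMANN * math.log(2))


# Joule expansion: thermally isolated, so there is no external entropy production.
joule_expansion = local_te_bits(0.0)
# Szilard engine compression: entropy k log 2 is exported to the exterior.
szilard_reset = local_te_bits(-K_BOLTZMANN * math.log(2))
# A system that gains entropy k log 2 from the exterior (source misinforms).
heated_system = local_te_bits(K_BOLTZMANN * math.log(2))

print(abs(joule_expansion), szilard_reset, heated_system)
```

The sign convention is visible in the third case: entropy received from the surroundings yields a negative local transfer entropy, matching the statement that the source then misinforms about the transition.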

It is important to realize that local transfer entropy may increase, indicating an increase of predictability about a transition, not only when there is an irreversible operation, such as a bit reset. In other words, predictability about a transition may be increased for a wider range of processes. The transfer entropy then quantifies the extent to which the predictability is increased. Furthermore, in general, there is a distinction between retrodiction (e.g., bit reset), when multiple computational paths converge, and a more complicated prediction along potentially diverging forward-looking computational paths. This distinction is addressed in the following section.

Connection to Landauer's limit

Turning our attention to quasistatic and not necessarily reversible processes, we note that in these processes Z1 ≈ Z2 and under this approximation, equation (4) still holds without the additive constant $\log_2 (Z_1/Z_2)$. Furthermore, under constant temperature, the external entropy production is $\Delta S^{(x)}_{ext} = \int dq_{ext}/T = \Delta q_{ext}/T$, where qext represents the heat flow to the system from the exterior in the context of the source Y. Hence,

$$t_{Y \to X}(n+1) = -\frac{1}{k \log 2}\,\frac{\Delta q_{ext}}{T} \tag{5}$$

In other words, for irreversible but quasistatic processes, local transfer entropy is proportional to the heat received or dissipated by the system from/to the exterior.

Thus, we observe that Landauer's limit kT log 2, which associates a minimum heat dissipation with a single bit of information, is applicable here as well. In particular, for quasistatic processes, using (5), we obtain an equality that includes the classical Landauer's limit:

$$\Delta q_{ext} = -(kT \log 2)\; t_{Y \to X}(n+1) \tag{6}$$

Both of the considered examples, the Joule expansion of a one molecule gas and the Szilárd engine's compression (resetting one bit), can be interpreted using this equation. For the Joule expansion, Δqext = 0 due to thermal isolation and there is a zero transfer entropy tY→X = 0 due to independence of X and Y, so either side of the equation is trivially zero. During the bit reset by the Szilárd engine compression, heat is dissipated, yielding Δqext = −kT log 2, while the transfer entropy tY→X = 1, again in agreement with equation (6).

Landauer inequalities for non-equilibrium dynamics

Depending on the processes, heat transfer can occur at different temperatures, and, in general, $\int dq_{ext}/T \neq \Delta q_{ext}/T$. Nevertheless, under some stronger assumptions outlined in ref. 28, the conditional entropies can be related to the heat transferred in the transition, per temperature, even when temperatures are varying. In a general non-equilibrium case, we may consider two cases: (i) when the system dissipates heat, transfer entropy is positive and Z1 ≤ Z2:

$$t_{Y \to X}(n+1) \leq -\frac{1}{k \log 2} \int \frac{dq_{ext}}{T} \tag{7}$$

and (ii) when the system absorbs heat, transfer entropy is negative and Z1 ≥ Z2:

$$t_{Y \to X}(n+1) \geq -\frac{1}{k \log 2} \int \frac{dq_{ext}}{T} \tag{8}$$

In the first case (a cooling system with positive transfer entropy), the negative $\int dq_{ext}/T$ is bounded from above by negative −k log 2 tY→X(n + 1), while in the second case (a heating system with negative transfer entropy), the positive $\int dq_{ext}/T$ is bounded from below by positive −k log 2 tY→X(n + 1). Generally, for absolute values,

$$\left| \int \frac{dq_{ext}}{T} \right| \geq k \log 2 \;\left| t_{Y \to X}(n+1) \right| \tag{9}$$

For isothermal processes, this reduces to

$$\left| \Delta q_{ext} \right| \geq kT \log 2 \;\left| t_{Y \to X}(n+1) \right| \tag{10}$$

For non-isothermal processes, a linear relationship between the transferred heat and temperature breaks down. For example, transfer entropy of a cycling system interacting with one hot (Th) and one cold (Tc) thermal reservoirs and exchanging with the surroundings the net heat 〈qext〉, can be bounded either as

when Z1 ≤ Z2, or

when Z1 ≥ Z2.

Hence, we obtain an inequality involving a modified Landauer's limit:

The expressions (6) and (13) essentially set the “conversion rate” between transfer entropy and the dissipated/received heat. Specifically, when transfer entropy is increased within the system by one bit, tY→X(n + 1) = 1, the dissipated heat must be equal to (or larger than the modified) Landauer's limit. The obtained inequality is in agreement with the generalised Clausius statement considered in ref. 29 in the context of information reservoirs and memory writing. The modified version of Landauer's principle offered in ref. 29 appears to have a slight error in the concluding equation [50], where the corresponding (βcold − βhot) term is in the numerator rather than the denominator.

Intuitively, when the system is cooled by losing heat equivalent to Landauer's limit, the predictability about the system cannot be increased by more than one bit. This interpretation is non-trivial because Landauer's limit specifies the amount of heat needed to reset one bit of information (limiting retrodiction), i.e., information is destroyed because multiple computational paths converge, while when dealing with local transfer entropy one considers prediction along forward-looking computational paths which may diverge. Thus, the suggested interpretation generalises Landauer's limit to bi-directional information dynamics for quasistatic processes and generalises the modified Landauer's limit to bi-directional information dynamics for non-equilibrium processes (subject to the aforementioned additional assumptions specified in ref. 28).
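The "conversion rate" reading can be sketched in a few lines of Python for the isothermal case, assuming the bound |Δqext| ≥ kT log 2 |tY→X|. The function name `max_predictability_gain_bits` and the example heat values are assumptions chosen for illustration only.

```python
import math

K_BOLTZMANN = 1.380649e-23  # Boltzmann's constant, J/K


def max_predictability_gain_bits(heat_joules, temperature_kelvin):
    """Upper bound on |t_{Y->X}| (bits) for a given exchanged heat, isothermal case."""
    if temperature_kelvin <= 0:
        raise ValueError("temperature must be positive")
    return abs(heat_joules) / (K_BOLTZMANN * temperature_kelvin * math.log(2))


# Heat equal to one Landauer limit at 300 K permits at most one bit.
one_bit_heat = K_BOLTZMANN * 300.0 * math.log(2)
print(max_predictability_gain_bits(one_bit_heat, 300.0))

# An assumed dissipation of 1e-18 J at 300 K permits a few hundred bits at most.
print(max_predictability_gain_bits(1e-18, 300.0))
```

For the second call the bound is roughly 348 bits, which illustrates how quickly the admissible predictability gain grows once the exchanged heat exceeds the thermal scale kT.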

Connection to Bremermann's limit

In this section we analyze how fast a physical computing device can perform a logical operation, by connecting this problem to the dynamics of predictability captured by local transfer entropy. Margolus and Levitin30 specified this question through computational speed: the maximum number of distinct states that the system can pass through, per unit of time, pointing out that, for a classical computer, this would correspond to the maximum number of operations per second. It is well-known that this quantity is limited (Bremermann's limit31) and is immediately connected to how much energy is available for information processing, e.g., for switching between distinct states. As pointed out by Margolus and Levitin30, the rate at which a system can oscillate between two distinct states is $\nu_\perp \leq 4E/h$, where h is the Planck constant and E is fixed average energy, assuming zero of energy at the ground state. For a quantum system, where distinct states are orthogonal, “the average energy of a macroscopic system is equal to the maximum number of orthogonal states that the system can pass through per unit of time”30. The limit is smaller when a sequence of oscillations is considered: $\nu_\perp \leq 2E/h$ for a long evolution through orthogonal states.

The work by Margolus and Levitin30 strengthened a series of previous results which related the rate of information processing to the standard deviation of the energy: $\nu_\perp \leq 4\,\delta E/h$ (cf. ref. 32). While these previous results specified that a quantum state with spread in energy δE takes time at least $\Delta t = h/(4\,\delta E)$ to evolve to an orthogonal state, the result of Margolus and Levitin bounds the minimum time via the average energy E: $\Delta t \geq h/(4E)$, rather than via the spread in energy δE which can be arbitrarily large for fixed E.

Importantly, Margolus and Levitin30 argued that these bounds are achievable for an ordinary macroscopic system (exemplified, for instance, with a lattice gas): “adding energy increases the maximum rate at which such a system can pass through a sequence of mutually orthogonal states by a proportionate amount”30.

Following Lloyd33 we interpret Bremermann's limit as the maximum number of logical operations that can be performed per second, i.e. $\nu = 2E/(\pi\hbar)$, where $\hbar$ is the reduced Planck constant and E is the energy of the system.

We now consider a transition during which the system dissipates energy, ΔE < 0, noting that the notation ΔE for the energy change should not be confused with the spread in energy δE. Then we define the change in the maximum number of logical operations per second, i.e. the computational deceleration, as $\Delta\nu = 2\Delta E/(\pi\hbar)$. This quantity accounts for how much the frequency of computation ν is reduced when the system loses some energy. This expression can also be written as h Δν = 4ΔE, relating the energy change to the change in frequency.

Then, generalizing (10) to |ΔE| ≥ kT log 2 |tY→X|, where ΔE is the heat energy which left the system X and entered the exterior Y during an isothermal process, we can specify a lower bound on the computational deceleration needed to increase predictability about the transition by tY→X, at a given temperature:

$$|\Delta\nu| \geq \frac{2kT \log 2}{\pi\hbar}\,|t_{Y \to X}| \tag{14}$$

That is, the expression (14) sets the minimum computational deceleration needed to increase predictability. For example, considering the Szilárd engine's compression resetting one bit, we note that the computational deceleration within such a device, needed to increase predictability about the transition by one bit, is at least $2kT \log 2/(\pi\hbar)$, or $4kT \log 2/h$. In other words, the product h |Δν| is bounded from below by the energy equal to four times Landauer's limit, that is, h |Δν| ≥ 4kT log 2.
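A numerical sketch of this bound follows, assuming the form h |Δν| ≥ 4kT log 2 |tY→X| stated in the text for the isothermal case. The function name `min_computational_deceleration` and the 300 K example temperature are assumptions introduced for illustration.

```python
import math

K_BOLTZMANN = 1.380649e-23  # Boltzmann's constant, J/K
H_PLANCK = 6.62607015e-34   # Planck constant, J*s


def min_computational_deceleration(te_bits, temperature_kelvin):
    """Lower bound on |Δν| in operations per second for a predictability gain of te_bits."""
    landauer = K_BOLTZMANN * temperature_kelvin * math.log(2)
    return 4.0 * landauer * abs(te_bits) / H_PLANCK


# One bit of predictability gained at an assumed temperature of 300 K.
print(f"{min_computational_deceleration(1.0, 300.0):.3e} operations per second")
```

At 300 K the bound is of the order of 10¹³ operations per second per bit of predictability gained, and it scales linearly with both temperature and transfer entropy.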

Connection to the Bekenstein bound

Another important limit is the Bekenstein bound: an upper limit I on the information that can be contained within a given finite region of space which has a finite amount of energy E (ref. 34):

$$I \leq \frac{2\pi R E}{\hbar c \log 2} \tag{15}$$

where R is the radius of a sphere that can enclose the given system and c is the speed of light.

While Bremermann's limit constrains the rate of computation, the Bekenstein bound restricts an information-storing capacity35. It applies to physical systems of finite size with limited total energy and limited entropy, specifying the maximal extent of memory with which a computing device of finite size can operate.

Again considering a transition during which the system dissipates energy, ΔE < 0, we define the change in the maximum information I required to describe the system, i.e., the information loss, as $\Delta I = 2\pi R\,\Delta E/(\hbar c \log 2)$.

Then the predictability during an isothermal state transition within this region, reflected in the transfer entropy tY→X from a source Y, is limited by the information loss ΔI associated with the energy change ΔE during the transition. Specifically, using |ΔE| ≥ kT log 2 |tY→X| for any source Y, we obtain:

$$|\Delta I| \geq \frac{2\pi R kT}{\hbar c}\,|t_{Y \to X}| \tag{16}$$

While predictability about the system is increased by one bit, at a given temperature, i.e., tY→X(n + 1) = 1, there is a loss of at least $2\pi R kT/(\hbar c)$ from the maximum information contained within (or describing) the system.

Let us revisit the Szilárd engine compression during a bit reset operation, this time within a spherical container of fixed radius R which dissipates heat energy to the exterior. Such a compressed system needs less information to describe it than an uncompressed system and the corresponding information loss about the compressed system ΔI is bounded from below by Landauer's limit scaled with $2\pi R/(\hbar c \log 2)$.
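The scaling of Landauer's limit by 2πR/(ħc log 2) can be evaluated with the Python sketch below. It assumes the Bekenstein bound in its standard form, I ≤ 2πRE/(ħc log 2), together with |ΔE| ≥ kT log 2 |tY→X|; the function name `min_information_loss_bits`, the 1 m radius and the 300 K temperature are illustrative assumptions.

```python
import math

K_BOLTZMANN = 1.380649e-23  # Boltzmann's constant, J/K
HBAR = 1.054571817e-34      # reduced Planck constant, J*s
C_LIGHT = 299792458.0       # speed of light, m/s


def min_information_loss_bits(te_bits, temperature_kelvin, radius_m):
    """Lower bound on |ΔI| (bits) for a predictability gain of te_bits."""
    landauer = K_BOLTZMANN * temperature_kelvin * math.log(2)  # J per bit
    scale = 2.0 * math.pi * radius_m / (HBAR * C_LIGHT * math.log(2))  # bits per joule
    return landauer * scale * abs(te_bits)


# One bit of predictability gained inside an assumed 1 m sphere at 300 K.
print(f"{min_information_loss_bits(1.0, 300.0, 1.0):.3e} bits")
```

The log 2 factors cancel, so the bound equals 2πRkT/(ħc) per bit of transfer entropy and grows linearly with the radius of the enclosing sphere.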

It is important to realise that while the system dissipates energy and loses information/entropy, the increased predictability is about the transition. Therefore, this increased predictability reflects the change of information rather than the amount of information in the final configuration. Hence, the expressions (14) and (16) set transient limits of computation, for computational deceleration and information loss, respectively.

Discussion

The obtained relationships (6), (9), (13), (14) and (16) specify different transient computational limits bounding increases of predictability about a system during a transition, represented via transfer entropy. These relations explicitly identify constraints on transfer entropy in small scale physical systems, operating on the scale of the thermal energy kT and being essentially ‘quantized’ by Landauer's limit. These constraints express increases of predictability via dissipated heat/energy; set the minimum computational deceleration needed to increase predictability; and offset the loss in the maximum information contained within a physical system by the predictability gained during a transition. Unlike the classical Bremermann's limit and the Bekenstein bound, which set the maximum computational speed and information, these inequalities specify lower bounds: in order to achieve a gain in predictability, the transient quantities, namely the computational deceleration and the information loss, must match or exceed these limits. Understanding these limits and their implications is becoming critical as computing circuitry is rapidly approaching these regimes36.

Finally, we point out an important relationship between an increase of predictability about the system (higher local transfer entropy) and negentropy: the entropy that the system exports (dissipates) to keep its own entropy low37. That is, the expression (4) may indicate the general applicability to guided self-organisation in various artificial life scenarios, where one would expect that maximising transfer entropy corresponds to maximising negentropy. It is known that negentropy ΔSext = −Φ, where Φ is the Massieu-Planck thermodynamic potential (free entropy). It was shown that maximising Φ is related to stability in several molecular biology contexts (e.g., protein stability38) and so the suggested thermodynamic interpretation associates such increases in stability with increases in transfer entropy to the system. One may also argue that the increase of stability in biological systems due to a free entropy change (measured in bits) is also scaled and in some cases ‘quantised’, by Landauer's limit.

Methods

Preliminaries

Formally, consider a time-series process X of the (potentially multivariate) random variables {… Xn−1, Xn, Xn+1 …} with process realizations {… xn−1, xn, xn+1 …} for countable time indices n. The underlying state of the process X is described by a time series of vectors {… $\mathbf{X}_{n-1}, \mathbf{X}_n, \mathbf{X}_{n+1}$ …} with realizations {… $\mathbf{x}_{n-1}, \mathbf{x}_n, \mathbf{x}_{n+1}$ …}, where the multivariate realization $\mathbf{x}_n$ fully describes the state of the process at n, perhaps using vectors $\mathbf{x}_n = \{x_{n-k+1}, \ldots, x_{n-1}, x_n\}$ for a length k Markovian process, or for a thermodynamic process by including all relevant thermodynamic variables. If vectors {xn−k+1, …, xn−1, xn} are interpreted as embedding vectors39, as proxies of hidden states, one should in general be cautious to avoid false coupling detections40.

The probability distribution function for observing a realization xn is denoted by p(xn), while $p(x_{n+1} \mid x_n) = p(x_{n+1}, x_n)/p(x_n)$ denotes the conditional probability of observing realization xn+1 having observed xn at the previous time step, where p(xn+1, xn) is the joint probability of the two realizations. These quantities are similarly defined for process Y, for corresponding time indices n.

Transfer entropy

The transfer entropy TY→X, defined by (1), is a conditional mutual information3 between Yn and Xn+1 given Xn. Following Fano41 we can quantify “the amount of information provided by the occurrence of the event represented by” yn “about the occurrence of the event represented by” xn+1, conditioned on the occurrence of the event represented by xn. That is, we can quantify local or point-wise transfer entropy10 in the same way Fano derived local or point-wise conditional mutual information41. This is a method applicable in general for different information-theoretic measures42: for example, local entropy or Shannon information content for an outcome xn of process X is defined as h(xn) = −log2p(xn). The quantity h(xn) is simply the information content attributed to the specific symbol xn, or the information required to predict or uniquely specify that specific value. Other local information-theoretic quantities may be computed as sums and differences of local entropies, e.g., h(xn+1 | xn) = h(xn+1, xn) − h(xn), where h(xn+1, xn) is the local joint entropy. In computing these quantities, the key step is to estimate the relevant probability distribution functions. This could be done using multiple realisations of the process, or accumulating observations in time while assuming stationarity of the process in time (and/or space for spatiotemporal processes).
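The estimation procedure described above, accumulating observations in time under a stationarity assumption, can be sketched as follows. This is a minimal plug-in (frequency-count) estimator with history length one, written in Python; the function name `local_transfer_entropy` and the toy time series are assumptions for illustration, and practical estimators typically need longer histories and bias correction.

```python
import math
from collections import Counter


def local_transfer_entropy(x, y):
    """Local transfer entropy values t_{Y->X}(n+1) in bits, one per transition.

    Each value is h(x_{n+1} | x_n) - h(x_{n+1} | x_n, y_n), with probabilities
    estimated from observed frequencies (stationarity assumed).
    """
    triples = [(x[n + 1], x[n], y[n]) for n in range(len(x) - 1)]
    c_xxy = Counter(triples)
    c_xx = Counter((x_next, x_prev) for x_next, x_prev, _ in triples)
    c_xy = Counter((x_prev, y_prev) for _, x_prev, y_prev in triples)
    c_x = Counter(x_prev for _, x_prev, _ in triples)

    values = []
    for x_next, x_prev, y_prev in triples:
        h_without = -math.log2(c_xx[(x_next, x_prev)] / c_x[x_prev])
        h_with = -math.log2(c_xxy[(x_next, x_prev, y_prev)] / c_xy[(x_prev, y_prev)])
        values.append(h_without - h_with)
    return values


# Toy source (assumed): a periodic pattern in which every pair of successive
# symbols occurs about equally often.
y_source = [0, 0, 1, 1] * 100
# Destination copies the source with a one-step delay: x[n+1] = y[n].
x_dest = [1] + y_source[:-1]
# A constant source carries no information about the destination.
y_constant = [0] * len(x_dest)

local_copy = local_transfer_entropy(x_dest, y_source)
local_const = local_transfer_entropy(x_dest, y_constant)
print(sum(local_copy) / len(local_copy), max(abs(v) for v in local_const))
```

Averaging the local values recovers the average transfer entropy, close to one bit for the delayed copy and zero for the constant source. In less contrived data individual local values can be negative, which corresponds to the source misinforming about a particular transition.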

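As such, the local transfer entropy may be expressed as a difference between local conditional entropies:

$$t_{Y \to X}(n+1) = h(x_{n+1} \mid x_n) - h(x_{n+1} \mid x_n, y_n) \tag{18}$$

where local conditional entropies are defined as follows3:

$$h(x_{n+1} \mid x_n) = -\log_2 p(x_{n+1} \mid x_n) \tag{19}$$

$$h(x_{n+1} \mid x_n, y_n) = -\log_2 p(x_{n+1} \mid x_n, y_n) \tag{20}$$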

Entropy definitions

The thermodynamic entropy was originally defined by Clausius as a state function S satisfying

$$S_B - S_A = \int_A^B \frac{dq_{rev}}{T} \tag{21}$$

where qrev is the heat transferred to an equilibrium thermodynamic system during a reversible process from state A to state B. It can be interpreted, from the perspective of statistical mechanics, via the famous Boltzmann's equation S = k log W, where W is the number of microstates corresponding to a given macrostate. Sometimes W is termed “thermodynamic probability” which is quite distinct from a mathematical probability bounded between zero and one. In general, W can be normalized to a probability p = W/N, where N is the number of possible microstates for all macrostates. This is not immediately needed as we shall consider (and later normalize) relative “thermodynamic probabilities”.

At this stage, we recall a specialization of Boltzmann's principle by Einstein43, for two states with entropies S and S0 and “relative probability” Wr (the ratio of numbers W and W0 that account for the numbers of microstates in the macrostates with S and S0 respectively), given by: S − S0 = k log Wr. Here again the “relative probability” Wr is not bounded between zero and one. For instance, if the number of microstates in B (i.e., after the transition) is twice as many as those in A (as is the case, for instance, after a free adiabatic gas expansion; see the Joule expansion example described in the next section), then Wr = 2 and the resultant entropy change is k log 2. Thus, the “relative probability” Wr depends on the states involved in the transition.
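The relation S − S0 = k log Wr is easy to evaluate directly. The Python sketch below does so for the doubling of microstates mentioned above and also expresses the result in bits; the function names `entropy_change` and `entropy_change_bits` are assumptions introduced for illustration.

```python
import math

K_BOLTZMANN = 1.380649e-23  # Boltzmann's constant, J/K


def entropy_change(relative_probability):
    """Entropy difference S - S0 in J/K for a given relative probability W_r."""
    if relative_probability <= 0:
        raise ValueError("relative probability must be positive")
    return K_BOLTZMANN * math.log(relative_probability)


def entropy_change_bits(relative_probability):
    """The same entropy difference expressed in bits, i.e. divided by k log 2."""
    return entropy_change(relative_probability) / (K_BOLTZMANN * math.log(2))


# Doubling the number of microstates (W_r = 2), as in a free adiabatic expansion.
print(f"{entropy_change(2.0):.3e} J/K, {entropy_change_bits(2.0):.1f} bit")
# Halving the number of microstates (W_r = 1/2) gives the opposite sign.
print(f"{entropy_change(0.5):.3e} J/K, {entropy_change_bits(0.5):.1f} bit")
```

Because Wr is a ratio rather than a normalised probability, values above one yield an entropy increase and values below one yield a decrease of the same magnitude for the inverse ratio.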

In general, the variation of entropy of a system $\Delta S = S - S_0$ is equal to the sum of the internal entropy production $\sigma$ inside the system and the entropy change due to the interactions with the surroundings $\Delta S_{ext}$:

$$\Delta S = \sigma + \Delta S_{ext},$$

so that when the transition from the initial state $S_0$ to the final state $S$ is irreversible, the entropy production $\sigma > 0$, while for reversible processes $\sigma = 0$.

Examples of coupled systems

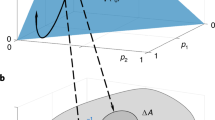

Joule expansion

The first example is the classical Joule expansion. A container X is thermally isolated from the heat bath Y and there are no heat exchanges between X and Y. A partition separates two chambers of X, so that a volume of gas is kept in the left chamber of X and the right chamber of X is evacuated. Then the partition between the two chambers is opened and the gas fills the whole container, adiabatically. It is well known that such (irreversible) doubling of the volume at constant temperature T increases the entropy of X, i.e. $\Delta S^{(x)} = nk \log 2$, where n is the number of particles (cf. the Sackur-Tetrode equation44). The same increase of entropy results from a quasistatic irreversible variant of the Joule expansion. If there were just one particle in the container (a one molecule gas, as shown in Fig. 1), the entropy would still increase after the gas expansion, by $\Delta S^{(x)} = k \log 2$, reflecting the uncertainty about the particle's position with respect to the container's chambers (left or right).

In this example, there are no interactions between the systems X (the container) and Y (the exterior). In other words, $\Delta S^{(x)}_{ext} = 0$ and the entropy increase is due to the internal entropy production. Formally, $\Delta S^{(x)} = \sigma^{(x)}_y$, that is, the internal entropy produced by X is not affected at all by the system Y, because of the total thermal isolation between X and Y.

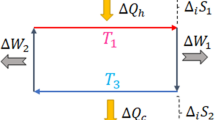

Szilárd engine compression

Now let us consider the second example with the same container X, surrounded by the exterior system Y, but now without thermal isolation between the two, so that heat can be exchanged between X and Y. Two movable partitions can still divide the container into two separate chambers, as well as frictionlessly slide along the walls of the container to either side, left or right. For clarity, we consider only one particle contained in the system X and consider the dynamics of the partitions as part of the exterior Y. This setup is (a part of) the Szilárd heat engine45,46.

When a partition is in the middle of the container, the particle is located either in the left or the right chamber with equal probability, but eventually collisions between the particle and the partition force the latter to one side. It is easy to calculate that, as one partition isothermally moves to the side of the container (at temperature T), the maximum work extracted from the heat bath is kT log 2. In order for the work to be extracted, one obviously needs to know on which side the particle was located initially. The most important aspects, in the context of our example, are how this coupled system modelled on the Szilárd engine realizes the logically irreversible operation of resetting a bit — the operation used to illustrate Landauer's principle — and what are the resultant entropy dynamics.

A similar physical implementation of this operation is, for instance, considered by Maroney47, as shown in Fig. 2. If the particle is on the left side, then the physical state represents logical state zero and if the particle is on the right side, it represents logical state one. The partition is removed from the middle of the container, allowing the particle to freely travel within the container. Then the partition is inserted into the far right-hand side of the container and is slowly (quasistatically) moved to its original position in the middle. This compression process maintains thermal contact between the container X and heat bath Y. Collisions with the particle exert a pressure on the partition, requiring work to be performed and the energy from the work is transferred to heat in the heat bath Y, amounting to at least kT log 2. Such resetting of a bit to zero, which converts at least kT log 2 of work into heat, is a typical example of Landauer's principle: any logically irreversible transformation of classical information is necessarily accompanied by the dissipation of at least kT log 2 of heat per reset bit. The entropy dynamics is the same for the Szilárd engine compression, where one partition is fixed at the edge and only the second one is moving.
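To give a sense of scale, the bound $kT \log 2$ can be evaluated numerically. The sketch below assumes the natural logarithm and the exact SI value of the Boltzmann constant; the function name `landauer_limit` and the temperature of 300 K are illustrative choices, not values taken from the examples above.

```python
from math import log

BOLTZMANN_K = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def landauer_limit(temperature_kelvin, bits=1.0):
    """Minimum heat, in joules, dissipated when resetting `bits` bits at temperature T."""
    return bits * BOLTZMANN_K * temperature_kelvin * log(2)


# Assumed room temperature of 300 K, one reset bit.
heat_at_300K = landauer_limit(300.0)
```

At 300 K the bound is of the order of $10^{-21}$ J per reset bit and scales linearly with both temperature and the number of bits.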

It is easy to see that the physical result of such isothermal compression is a decrease in the thermodynamic entropy of the one molecule gas by $k \log 2$, accompanied by an increase in the entropy of the environment by (at least) the same amount. That is, $\Delta S^{(x)} = -k \log 2$. At the same time, as the example shows, the heat is dissipated to the exterior system Y, i.e., the decrease is compensated by the entropy change due to the interactions with the surroundings, $\Delta S^{(x)}_{ext} = -k \log 2$, and hence there is no internal entropy production, $\sigma^{(x)}_y = 0$.
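The entropy bookkeeping of the two examples can be checked side by side. The sketch below assumes the balance $\Delta S = \sigma + \Delta S_{ext}$ and simply solves it for the internal entropy production; the function and variable names are hypothetical, and the numerical values use the natural logarithm.

```python
from math import log

BOLTZMANN_K = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def internal_entropy_production(delta_s, delta_s_ext):
    """Solve Delta S = sigma + Delta S_ext for sigma (all quantities in J/K)."""
    return delta_s - delta_s_ext


k_log2 = BOLTZMANN_K * log(2)

# Joule expansion: entropy rises by k log 2 with no exchange with the exterior.
joule_sigma = internal_entropy_production(delta_s=k_log2, delta_s_ext=0.0)

# Szilard compression: entropy falls by k log 2, all of it exported to the exterior.
szilard_sigma = internal_entropy_production(delta_s=-k_log2, delta_s_ext=-k_log2)
```

The first case yields a strictly positive production (an irreversible transition), the second yields zero (a reversible one).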

Assumptions and their illustration in the examples

In an attempt to provide a thermodynamic interpretation of transfer entropy, two important assumptions are made28, defining the range of applicability for such an interpretation. The first one relates the transition probability $W_{r_1}$ of the system's reversible state change to the conditional probability $p(x_{n+1} \mid x_n)$:

$$p(x_{n+1} \mid x_n) = \frac{1}{Z_1} W_{r_1},$$

where $Z_1$ is a normalization factor and $W_{r_1}$ is such that $S(x_{n+1}) - S(x_n) = k \log W_{r_1}$. The normalization factor is equal to the ratio between the total number of microstates in all possible macrostates at n + 1 and the total number of microstates in all possible macrostates at n.

We note that the normalization is needed because the “relative probability” $W_{r_1}$ is not bounded between zero and one, while the conditional probability is a properly defined mathematical probability. For example, in the Joule expansion, the number of microstates in $x_{n+1}$ is twice as many as those in the old state $x_n$, making $W_{r_1} = 2$. In this simple example the state $x_{n+1}$ is the only possible macrostate and the normalization factor $Z_1 = 2$ as well, since the number of all microstates after the transition is twice the number of the microstates in the old state before the transition. Hence, the right-hand side of the assumption is unity, $\frac{1}{Z_1} W_{r_1} = 1$, concurring with the conditional probability $p(x_{n+1} \mid x_n) = 1$, as this transition is the only one possible. The entropy change is $S(x_{n+1}) - S(x_n) = k \log 2$, as expected.

In contrast, for the Szilárd engine's compression example, the number of microstates in $x_n$ before the transition is twice as many as those in the new state $x_{n+1}$ after the transition (e.g., partition moves from the far right-hand side to the middle) and $W_{r_1} = 1/2$. Following Szilárd's original design, we allow for two possibly moving partitions: either from the right to the middle, or from the left to the middle, with both motions resulting in the same entropy dynamics. Hence, the total number of possible microstates in all the resultant macrostates is still 2: the particle is on the left, or on the right, respectively. Therefore, there is no overall reduction in the number of all possible microstates after the transition from $x_n$ to $x_{n+1}$, so that $Z_1 = 1$. Thus, $\frac{1}{Z_1} W_{r_1} = 1/2$, setting the conditional probability $p(x_{n+1} \mid x_n) = 1/2$ for the partition moving from the right. The entropy change is given by $S(x_{n+1}) - S(x_n) = k \log (1/2) = -k \log 2$.
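The arithmetic of the first assumption in the two examples can be verified with exact fractions. This is a minimal sketch in which the relative probability is labelled $W_{r_1}$ and the normalization factor $Z_1$ (the label $W_{r_1}$ and the helper name `conditional_probability` are assumptions made for illustration); the helper also checks that the normalized value is a valid probability.

```python
from fractions import Fraction


def conditional_probability(relative_probability, normalization):
    """Return W_r / Z as an exact fraction, checking that it lies in [0, 1]."""
    p = Fraction(relative_probability) / Fraction(normalization)
    if not 0 <= p <= 1:
        raise ValueError("W_r / Z must be a probability between zero and one")
    return p


# Joule expansion: W_r1 = 2 (microstates double), Z1 = 2.
joule_p = conditional_probability(2, 2)

# Szilard compression: W_r1 = 1/2 (microstates halve), Z1 = 1.
szilard_p = conditional_probability(Fraction(1, 2), 1)
```

Without the normalization, the Joule expansion value $W_{r_1} = 2$ would fail the range check, which is the reason the factor $Z_1$ is introduced.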

The second assumption relates the transition probability $W_{r_2}$ of the system's internal state change, in the context of the interactions with the external world represented in the state vector $y$, to the conditional probability $p(x_{n+1} \mid x_n, y_n)$. Specifically, the second assumption is set as follows:

$$p(x_{n+1} \mid x_n, y_n) = \frac{1}{Z_2} W_{r_2},$$

for some number $W_{r_2}$, such that $\sigma^{(x)}_y = k \log W_{r_2}$, where $\sigma^{(x)}_y$ is the system's internal entropy production in the context of $y$. The normalization factor is again the ratio between the total numbers of possible microstates after and before the transition. In general, $Z_1 \neq Z_2$ because $y_n$ may either increase or constrain the number of microstates in $x_{n+1}$.

For the Joule expansion example, $W_{r_2} = W_{r_1} = 2$ and $Z_2 = Z_1 = 2$, with identical resultant probabilities. The lack of difference is due to thermal isolation, and hence independence, between X and Y, yielding $\Delta S^{(x)} = \sigma^{(x)}_y = k \log 2$.

For the Szilárd engine's compression example, $Z_2 = Z_1 = 1$. There is no internal entropy production, $\sigma^{(x)}_y = 0$, entailing $W_{r_2} = 1$. Thus, our second assumption requires that the resultant conditional probability is set as $p(x_{n+1} \mid x_n, y_n) = 1$. That is, once the direction of the partition's motion is selected (e.g., from the right to the middle), the transition is certain to reach the compressed outcome, in the context of the external contribution from Y.
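Combining the two assumptions with the definition of local transfer entropy as the log-ratio of $p(x_{n+1} \mid x_n, y_n)$ to $p(x_{n+1} \mid x_n)$ gives a quick consistency check on the two examples. The sketch below is illustrative only: the helper name `local_te_bits` is hypothetical, and the probabilities are the ones obtained above for each example.

```python
from fractions import Fraction
from math import log2


def local_te_bits(p_given_x, p_given_xy):
    """Local transfer entropy log2[p(x'|x, y) / p(x'|x)] in bits."""
    return log2(float(Fraction(p_given_xy) / Fraction(p_given_x)))


# Joule expansion: both conditional probabilities equal 1 (X is isolated from Y).
joule_te = local_te_bits(p_given_x=1, p_given_xy=1)

# Szilard compression: p(x'|x) = 1/2 without the context of Y, p(x'|x, y) = 1 with it.
szilard_te = local_te_bits(p_given_x=Fraction(1, 2), p_given_xy=1)
```

Under these assumptions the isolated Joule expansion transfers no information from Y to X, whereas the compression, in which Y resolves which outcome is reached, corresponds to one bit.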

References

Schreiber, T. Measuring information transfer. Phys. Rev. Lett. 85, 461–464 (2000).

Shannon, C. E. A mathematical theory of communication. Bell Syst. Tech. J. 27, 379–423 and 623–656 (1948).

MacKay, D. J. Information Theory, Inference and Learning Algorithms (Cambridge University Press, Cambridge, 2003).

Cover, T. M. & Thomas, J. A. Elements of Information Theory (John Wiley & Sons, 1991).

Baek, S. K., Jung, W.-S., Kwon, O. & Moon, H.-T. Transfer entropy analysis of the stock market. (2005). ArXiv:physics/0509014v2.

Moniz, L. J., Cooch, E. G., Ellner, S. P., Nichols, J. D. & Nichols, J. M. Application of information theory methods to food web reconstruction. Ecol. Model. 208, 145–158 (2007).

Chávez, M., Martinerie, J. & Le Van Quyen, M. Statistical assessment of nonlinear causality: application to epileptic EEG signals. J. Neurosci. Methods 124, 113–128 (2003).

Wibral, M. et al. Transfer entropy in magnetoencephalographic data: quantifying information flow in cortical and cerebellar networks. Prog. Biophys. Mol. Bio. 105, 80–97 (2011).

Pahle, J., Green, A. K., Dixon, C. J. & Kummer, U. Information transfer in signaling pathways: a study using coupled simulated and experimental data. BMC Bioinformatics 9, 139 (2008).

Lizier, J. T., Prokopenko, M. & Zomaya, A. Y. Local information transfer as a spatiotemporal filter for complex systems. Phys. Rev. E 77, 026110 (2008).

Lizier, J. T., Prokopenko, M. & Zomaya, A. Y. Information modification and particle collisions in distributed computation. Chaos 20, 037109 (2010).

Lizier, J. T., Prokopenko, M. & Zomaya, A. Y. Local measures of information storage in complex distributed computation. Inform. Sciences 208, 39–54 (2012).

Barnett, L. & Bossomaier, T. Transfer entropy as a log-likelihood ratio. Phys. Rev. Lett. 109, 138105 (2012).

Wang, X. R., Miller, J. M., Lizier, J. T., Prokopenko, M. & Rossi, L. F. Quantifying and tracing information cascades in swarms. PLoS ONE 7, e40084 (2012).

Lizier, J. T., Pritam, S. & Prokopenko, M. Information dynamics in small-world Boolean networks. Artif. Life 17, 293–314 (2011).

Ceguerra, R. V., Lizier, J. T. & Zomaya, A. Y. Information storage and transfer in the synchronization process in locally-connected networks. In: 2011 IEEE Symposium on Artificial Life (ALIFE), April 13–15, 2011, Paris, France 54–61 (IEEE, New York, 2011).

Lungarella, M. & Sporns, O. Mapping information flow in sensorimotor networks. PLoS Comput. Biol. 2, e144 (2006).

Lizier, J. T., Prokopenko, M. & Zomaya, A. Y. The information dynamics of phase transitions in random Boolean networks. In: Bullock, S., Noble, J., Watson, R. & Bedau, M. A. (eds.) Proceedings of the Eleventh International Conference on the Simulation and Synthesis of Living Systems (ALife XI), Winchester, UK 374–381 (MIT Press, Cambridge, MA, 2008).

Barnett, L., Lizier, J. T., Harré, M., Seth, A. K. & Bossomaier, T. Information flow in a kinetic Ising model peaks in the disordered phase. Phys. Rev. Lett. 111, 177203 (2013).

Bennett, C. H. Notes on Landauer's principle, reversible computation and Maxwell's Demon. Stud. Hist. Phil. Sci. B 34, 501–510 (2003).

Piechocinska, B. Information erasure. Phys. Rev. A 61, 062314 (2000).

Lloyd, S. Programming the Universe (Vintage Books, New York, 2006).

Parrondo, J. M. R., den Broeck, C. V. & Kawai, R. Entropy production and the arrow of time. New J. Phys. 11, 073008 (2009).

Prokopenko, M., Lizier, J. T., Obst, O. & Wang, X. R. Relating Fisher information to order parameters. Phys. Rev. E 84, 041116 (2011).

Landauer, R. Irreversibility and heat generation in the computing process. IBM J. Res. Dev. 5, 183–191 (1961).

Goyal, P. Information physics – towards a new conception of physical reality. Information 3, 567–594 (2012).

Landauer, R. Information is physical. Phys. Today 44, 23–29 (1991).

Prokopenko, M., Lizier, J. T. & Price, D. C. On thermodynamic interpretation of transfer entropy. Entropy 15, 524–543 (2013).

Deffner, S. & Jarzynski, C. Information processing and the second law of thermodynamics: an inclusive, Hamiltonian approach. (2013). ArXiv:1308.5001v1.

Margolus, N. & Levitin, L. B. The maximum speed of dynamical evolution. Phys. D 120, 188–195 (1998).

Bremermann, H. J. Minimum energy requirements of information transfer and computing. Int. J. Theor. Phys. 21, 203–217 (1982).

Anandan, J. & Aharonov, Y. Geometry of quantum evolution. Phys. Rev. Lett. 65, 1697–1700 (1990).

Lloyd, S. Ultimate physical limits to computation. Nature 406, 1047–1054 (2000).

Bekenstein, J. D. Universal upper bound on the entropy-to-energy ratio for bounded systems. Phys. Rev. D 23, 287–298 (1981).

Bekenstein, J. D. Entropy content and information flow in systems with limited energy. Phys. Rev. D 30, 1669–1679 (1984).

Bérut, A. et al. Experimental verification of Landauer's principle linking information and thermodynamics. Nature 483, 187–189 (2012).

Schrödinger, E. What is life? The Physical Aspect of the Living Cell. (Cambridge University Press, 1944).

Schellman, J. A. Temperature, stability and the hydrophobic interaction. Biophys. J. 73, 2960–2964 (1997).

Takens, F. Detecting strange attractors in turbulence. In: Rand, D. & Young, L.-S. (eds.) Dynamical Systems and Turbulence, Warwick 1980 Lecture Notes in Mathematics, 366–381 (Springer, Berlin/Heidelberg, 1981).

Smirnov, D. A. Spurious causalities with transfer entropy. Phys. Rev. E 87, 042917 (2013).

Fano, R. M. The Transmission of Information: A Statistical Theory of Communication (MIT Press, Cambridge, Massachusetts, 1961).

Lizier, J. T. Measuring the dynamics of information processing on a local scale in time and space. In: Wibral, M., Vicente, R. & Lizier, J. T. (eds.) Directed Information Measures in Neuroscience, Understanding Complex Systems, 161–193 (Springer Berlin Heidelberg, 2014).

Einstein, A. Über einen die Erzeugung und Verwandlung des Lichtes betreffenden heuristischen Gesichtspunkt. Ann. Phys. 322, 132–148 (1905).

Huang, K. Introduction to Statistical Physics. A Chapman & Hall book (Taylor & Francis Group, 2009).

Szilárd, L. Über die entropieverminderung in einem thermodynamischen system bei eingriffen intelligenter wesen (On the reduction of entropy in a thermodynamic system by the intervention of intelligent beings). Zeitschrift für Physik 53, 840–856 (1929).

Magnasco, M. O. Szilard's heat engine. Europhys. Lett. 33, 583–588 (1996).

Maroney, O. Information processing and thermodynamic entropy. In: Zalta, E. N. (ed.) The Stanford Encyclopedia of Philosophy (2009), Fall 2009 edn, Date of access: 08/10/2013, URL = http://plato.stanford.edu/archives/fall2009/entries/information-entropy/.

Acknowledgements

We express our thanks to Ralf Der (Max Planck Institute for Mathematics in the Sciences, Leipzig) for many fruitful discussions. We are also indebted to our late friend and colleague Don C. Price (CSIRO Materials Science and Engineering).

Author information

Contributions

M.P. conceived the study. M.P. and J.L. carried out mathematical analysis and wrote the paper.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Rights and permissions

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License. The images or other third party material in this article are included in the article's Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder in order to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-nd/4.0/

About this article

Cite this article

Prokopenko, M., Lizier, J. Transfer Entropy and Transient Limits of Computation. Sci Rep 4, 5394 (2014). https://doi.org/10.1038/srep05394

DOI: https://doi.org/10.1038/srep05394
