Abstract

Medical image fusion aims to combine multiple images acquired by one or more imaging modalities to support clinical applications such as diagnosing and evaluating medical conditions, and it has attracted increasing attention. However, most recent medical image fusion methods require prior knowledge, which makes it difficult to select suitable image features. In this paper, we propose a novel deep medical image fusion method based on a deep convolutional neural network (DCNN) that learns image features directly from the original images. Specifically, the source images are first decomposed by low rank representation into principal and salient components. Next, deep features are extracted from the principal components via the DCNN and fused by a weighted-average rule. Then, considering the complementarity of the salient components obtained by the low rank representation, a simple yet effective sum rule is designed to fuse them. Finally, the fused result is obtained by reconstructing the fused principal and salient components. Experimental results demonstrate that the proposed method outperforms several state-of-the-art medical image fusion approaches in terms of both objective indices and visual quality.

Introduction

Image fusion is an effective technique for integrating complementary information from multiple sensors or from different aspects of a scene. It has been widely applied in many fields, such as land cover mapping1,2, object detection3,4,5 and medical diagnosis6,7,8. Medical image fusion synthesizes multiple medical images acquired by a single imaging device or by different devices. Its main purpose is to improve image quality while preserving the specific features needed for accurate diagnosis and treatment, and it plays an important role in surgical navigation, routine staging, and radiotherapy planning for malignant diseases9,10,11,12,13,14.

Currently, medical image fusion mainly focuses on computerized tomography (CT), magnetic resonance imaging (MRI), positron emission tomography (PET), and single-photon emission computed tomography (SPECT). CT images clearly depict dense structures such as bones and implants. MRI images record high-resolution anatomical details of soft tissues; however, compared with CT, MRI is less sensitive for diagnosing fractures. PET images have high sensitivity because PET is a molecular imaging technique, but their resolution is low. SPECT images are used to study the blood flow of tissues and organs through nuclear imaging. Each modality therefore has its own merits and drawbacks. To fully integrate the advantages of different modalities, an effective scheme is to fuse the complementary information from multi-sensor images15,16,17,18,19,20.

To date, various medical image fusion approaches have been proposed, and they can be divided into two types: spatial domain fusion and transform domain fusion. Spatial domain methods usually adopt a pixel- or block-based fusion strategy21, in which the source images are fused according to a designed activity level measurement such as spatial frequency or the sum-modified-Laplacian. For instance, Li et al. proposed a matting-based image fusion approach22, in which image matting is used to determine the focused regions of multi-focus images. In Li's paper22, a block-wise medical image fusion method was proposed: the source images were divided into small blocks using a local window, and the average value of the principal components of all blocks was used as the weights.
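A block-based spatial-domain rule driven by spatial frequency can be sketched as follows. This is a generic illustration, not the method of any cited paper: the 8 × 8 block size and the "copy the block with the higher spatial frequency" rule are assumptions. Spatial frequency is computed as \(\sqrt{RF^{2} + CF^{2}}\), where RF and CF are the root-mean-square horizontal and vertical first differences normalized by the block size.

```python
import numpy as np


def spatial_frequency(block):
    """SF = sqrt(RF^2 + CF^2), using first differences normalised by block size."""
    b = np.asarray(block, dtype=float)
    rf2 = np.sum(np.diff(b, axis=1) ** 2) / b.size  # squared row frequency
    cf2 = np.sum(np.diff(b, axis=0) ** 2) / b.size  # squared column frequency
    return float(np.sqrt(rf2 + cf2))


def block_fuse(img1, img2, block=8):
    """Copy each block from whichever source has the higher spatial frequency."""
    h, w = img1.shape
    fused = np.empty((h, w))
    for y in range(0, h, block):
        for x in range(0, w, block):
            b1 = img1[y:y + block, x:x + block]
            b2 = img2[y:y + block, x:x + block]
            chosen = b1 if spatial_frequency(b1) >= spatial_frequency(b2) else b2
            fused[y:y + block, x:x + block] = chosen
    return fused
```

A hard per-block choice like this is simple and fast, but it can produce blocking artifacts at block borders, which is one motivation for the smoother weighting schemes discussed later.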

In contrast to spatial domain approaches, transform domain approaches mainly involve three key steps. First, the source images are decomposed into a transform domain, e.g., via a wavelet transform. Then, a designed fusion rule is used to fuse the transformed coefficients. Finally, an inverse transform is applied to the fused coefficients to obtain the fusion result. Multi-scale transform (MST) approaches, such as the curvelet transform (CVT)23, non-subsampled contourlet transform (NSCT)24, non-subsampled shearlet transform (NSST)25, and shift-invariant dual-tree complex shearlet transform (SIDCST)26, have become a very popular research topic. For example, Bhatnagar et al. developed a general fusion framework for multimodal medical images based on NSCT24. Yin et al. proposed a decision fusion rule for medical image fusion in the NSST domain27 and verified its effectiveness on four different types of medical image fusion problems. In Yin's paper26, the SIDCST was constructed by cascading the dual-tree complex wavelet transform (DTCWT) and shearlet filters, making full use of the limited redundancy of the DTCWT and the directional selectivity of the shearlet filters.

Besides, sparse representation (SR) methods have been widely studied in the image fusion field28. For example, Yang et al. first applied SR to image fusion and obtained satisfactory performance28. Liu et al. proposed a general fusion framework combining SR and MST to improve the details of the fusion result29. Liu et al. also introduced convolutional sparse representation (CSR) into image fusion to overcome the limited detail-preservation ability of SR-based methods30. These studies demonstrate that SR is effective for image fusion.
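The three-step transform-domain procedure (decompose, fuse coefficients, invert) can be illustrated with a one-level orthonormal 2-D Haar wavelet transform. The Haar transform stands in for the more elaborate transforms cited above, and the two rules used here (averaging the approximation band, keeping the detail coefficient with the larger magnitude) are common generic choices, not the rules of any cited method. Image height and width are assumed to be even.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)


def haar2(x):
    """One-level orthonormal 2-D Haar transform (height and width must be even)."""
    lo = (x[0::2, :] + x[1::2, :]) / SQRT2   # vertical pair sums
    hi = (x[0::2, :] - x[1::2, :]) / SQRT2   # vertical pair differences
    ll = (lo[:, 0::2] + lo[:, 1::2]) / SQRT2
    lh = (lo[:, 0::2] - lo[:, 1::2]) / SQRT2
    hl = (hi[:, 0::2] + hi[:, 1::2]) / SQRT2
    hh = (hi[:, 0::2] - hi[:, 1::2]) / SQRT2
    return ll, (lh, hl, hh)


def ihaar2(ll, details):
    """Inverse of haar2."""
    lh, hl, hh = details
    h, w = ll.shape
    lo, hi = np.empty((h, 2 * w)), np.empty((h, 2 * w))
    lo[:, 0::2], lo[:, 1::2] = (ll + lh) / SQRT2, (ll - lh) / SQRT2
    hi[:, 0::2], hi[:, 1::2] = (hl + hh) / SQRT2, (hl - hh) / SQRT2
    x = np.empty((2 * h, 2 * w))
    x[0::2, :], x[1::2, :] = (lo + hi) / SQRT2, (lo - hi) / SQRT2
    return x


def transform_fuse(img1, img2):
    """Step 1: decompose; step 2: fuse coefficients; step 3: invert."""
    ll1, d1 = haar2(np.asarray(img1, dtype=float))
    ll2, d2 = haar2(np.asarray(img2, dtype=float))
    ll = (ll1 + ll2) / 2  # average the approximation band
    # Max-absolute selection keeps the stronger detail coefficient per position.
    det = tuple(np.where(np.abs(a) >= np.abs(b), a, b) for a, b in zip(d1, d2))
    return ihaar2(ll, det)
```

Because the transform is orthonormal, `ihaar2(*haar2(x))` reproduces `x` exactly up to rounding, so any change in the fused image comes only from the coefficient fusion rule.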

In recent years, deep learning has become a hot topic in the image processing field, and several deep models have been applied to image fusion31,32,33,34. For instance, in Liu's paper31, multi-focus image fusion is regarded as a binary image classification problem, and a convolutional neural network (CNN) is used to learn the weight map from a large number of labeled training samples. Li et al. proposed a novel deep learning architecture for infrared and visible image fusion that combines convolutional layers, a fusion layer and dense blocks32. These methods demonstrate that deep learning models are effective tools for image fusion.

However, although traditional methods can achieve medical image fusion, how to utilize CNNs effectively for medical image fusion is still an open question. In this paper, a novel medical image fusion method based on a pre-trained CNN model, called deep medical image fusion (DMIF), is proposed. First, the source images are decomposed into principal and salient components via low rank representation. Second, the pre-trained CNN model is utilized to extract deep features from the principal components, and a weighted-average strategy is adopted to fuse them. Third, the salient components are fused with a sum strategy. Finally, the fused principal and salient components are combined to reconstruct the fusion result. Experimental results demonstrate that the proposed method achieves outstanding performance compared with several state-of-the-art approaches.

The main contributions of this work are as follows:

(1) A novel medical image fusion approach based on CNN is proposed, which opens new opportunities for medical image fusion.

(2) Low rank representation is adopted, for the first time, to decompose medical images into two components, i.e., principal and salient layers, which effectively separates structural information from detail information.

(3) Experiments on 44 pairs of medical images verify that the proposed method outperforms the compared approaches in fusion performance.

The remainder of this paper is organized as follows. Section “Related work” briefly reviews the VGG-16 network. Section “Proposed method” introduces the proposed method in detail. Section “Experiments” presents the experimental results and analyses. Finally, Section “Conclusions” concludes this work.

Related work

VGG-1635 is a convolutional neural network (CNN) architecture designed for image classification. It was proposed by the Visual Geometry Group at the University of Oxford and was one of the top-performing models in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) 2014. As its name indicates, the network has 16 weight layers: 13 convolutional layers with 3×3 kernels and a stride of 1, followed by 3 fully connected layers. The convolutional layers are organized into five blocks, each followed by a 2×2 max-pooling layer with a stride of 2, so every pooling layer halves the height and width of the feature maps. For a 224×224 input, the last feature map before the fully connected layers is 7×7 with 512 channels. Despite its simple and uniform architecture, VGG-16 performs strongly on a variety of computer vision tasks and has served as a foundation for more advanced architectures. Owing to its strong feature representation ability, VGG-16 is adopted as the feature extractor in this work.
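The layer count and feature-map sizes described above can be checked with a few lines of Python. The block layout (2, 2, 3, 3, 3 convolutions with 64 to 512 channels) is the standard VGG-16 configuration; the 224×224 input is the ImageNet setting, and 256×256 (the size of the test images used later) is shown only as a usage example.

```python
# Standard VGG-16 convolutional stack: (number of 3x3 conv layers, output channels).
VGG16_BLOCKS = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]
NUM_FC_LAYERS = 3


def vgg16_feature_shapes(size=224):
    """Return (height, width, channels) after each block's 2x2/stride-2 max-pool."""
    shapes = []
    h = w = size
    for _, channels in VGG16_BLOCKS:
        # 3x3 convs with stride 1 and padding 1 keep h and w unchanged;
        # the following 2x2 max-pool with stride 2 halves both.
        h, w = h // 2, w // 2
        shapes.append((h, w, channels))
    return shapes


def vgg16_weight_layers():
    """Return (conv layers, fully connected layers, total weight layers)."""
    n_conv = sum(n for n, _ in VGG16_BLOCKS)
    return n_conv, NUM_FC_LAYERS, n_conv + NUM_FC_LAYERS


if __name__ == "__main__":
    for i, shape in enumerate(vgg16_feature_shapes(), start=1):
        print(f"after block {i}: {shape}")
    print(vgg16_weight_layers())  # (13, 3, 16)
```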

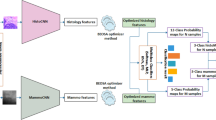

Proposed method

Figure 1 shows the flow chart of the proposed fusion framework, which consists of three steps. First, the source images are decomposed into principal and salient components. Second, two different fusion rules are designed to fuse the two types of components separately. Finally, the fused principal and salient components are combined to reconstruct the fused image. The details of each step are given below.
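The three steps can be summarized as a short Python/NumPy skeleton. Here `decompose` and `fuse_low_rank` are hypothetical callables standing in for the low rank decomposition and the deep-feature fusion rule described in the following subsections. The sum rule for the salient parts follows the text, and the additive reconstruction is an assumption based on the low-rank plus saliency decomposition.

```python
from typing import Callable, Tuple

import numpy as np

# Hypothetical interfaces for the two pluggable steps.
Decomposer = Callable[[np.ndarray], Tuple[np.ndarray, np.ndarray]]
LowRankFuser = Callable[[np.ndarray, np.ndarray], np.ndarray]


def fuse_pair(img1: np.ndarray, img2: np.ndarray,
              decompose: Decomposer, fuse_low_rank: LowRankFuser) -> np.ndarray:
    """Decompose, fuse each component with its own rule, then reconstruct."""
    # Step 1: split each registered source image into two components.
    low1, sal1 = decompose(img1)
    low2, sal2 = decompose(img2)
    # Step 2: fuse low-rank parts with the supplied rule, salient parts by summation.
    g = fuse_low_rank(low1, low2)
    h = sal1 + sal2
    # Step 3: reconstruct the fused image from the two fused components.
    return g + h


if __name__ == "__main__":
    # Hypothetical stand-ins, used only to exercise the control flow.
    split_half = lambda x: (0.5 * x, 0.5 * x)
    average = lambda a, b: (a + b) / 2
    out = fuse_pair(np.ones((2, 2)), np.zeros((2, 2)), split_half, average)
    print(out)  # every entry is 0.75
```

Keeping the decomposition and the low-rank fusion rule as parameters makes it easy to swap in a real LatLRR solver or a different feature extractor without touching the overall flow.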

Low rank representation-based decomposition

Low rank representation (LRR) seeks the lowest-rank representation among all candidates that represent the data vectors as linear combinations of the bases in a dictionary. Unlike the well-known sparse representation, which computes the sparsest representation of each data vector individually, LRR finds the lowest-rank representation of a collection of vectors jointly. Liu et al.36 first proposed LRR for subspace segmentation. However, LRR fails to preserve local structure information. Thus, latent low-rank representation (LatLRR) was proposed, which can extract both global structures and local details. LatLRR is formulated as follows:

\[ \min_{Z,L,E} \left\| Z \right\|_{*} + \left\| L \right\|_{*} + \lambda \left\| E \right\|_{1} \quad {\text{s.t.}} \quad X = XZ + LX + E \]

where \(\lambda > 0\) is a balance parameter, \(\left\| {\, \cdot \,} \right\|_{*}\) is the nuclear norm (the sum of the singular values of a matrix), and \(\left\| {\, \cdot \,} \right\|_{1}\) denotes the \(l_{1}\)-norm. \(X\) is the original image, \(Z\) denotes the low-rank coefficients, \(L\) denotes the saliency coefficients, and \(E\) is the sparse noise term. \(XZ\) denotes the low-rank part, and \(LX\) denotes the saliency part.

Since medical images contain both global structures and high-frequency details, LatLRR is adopted in this work to separate them. The two source images, denoted as \(I_{1}\) and \(I_{2}\), are decomposed by LatLRR to obtain the low-rank parts \(I_{i}^{r}\) and the saliency parts \(I_{i}^{s}\), \(i \in \{ 1,2\}\). The main aim of this step is to separate the main structural information from the spatial details, which helps preserve the spatial information of the source images.
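The paper does not state which solver is used for the LatLRR problem or which value of \(\lambda\) is chosen. As one possibility, the sketch below implements the inexact augmented Lagrange multiplier (ALM) scheme commonly used for LatLRR, treating the image itself as the data matrix \(X\). The value \(\lambda = 0.8\) and the ALM parameters (`rho`, initial `mu`, `max_mu`, `tol`) are assumptions. Auxiliary variables \(J \approx Z\) and \(K \approx L\) carry the nuclear norms so that every subproblem has a closed form.

```python
import numpy as np


def svt(m, tau):
    """Singular value thresholding: proximal operator of tau * nuclear norm."""
    u, s, vt = np.linalg.svd(m, full_matrices=False)
    return (u * np.maximum(s - tau, 0.0)) @ vt


def soft_threshold(m, tau):
    """Entry-wise shrinkage: proximal operator of tau * l1-norm."""
    return np.sign(m) * np.maximum(np.abs(m) - tau, 0.0)


def latlrr(x, lam=0.8, rho=1.1, mu=1e-6, max_mu=1e10, tol=1e-6, max_iter=1000):
    """Inexact ALM for  min ||Z||_* + ||L||_* + lam*||E||_1  s.t.  X = XZ + LX + E."""
    d, n = x.shape
    z = np.zeros((n, n))    # Z (its auxiliary copy J is formed in the loop)
    sal = np.zeros((d, d))  # L (its auxiliary copy K is formed in the loop)
    e = np.zeros((d, n))
    y1, y2, y3 = np.zeros((d, n)), np.zeros((n, n)), np.zeros((d, d))
    inv_z = np.linalg.inv(np.eye(n) + x.T @ x)  # fixed across iterations
    inv_l = np.linalg.inv(np.eye(d) + x @ x.T)
    for _ in range(max_iter):
        j = svt(z + y2 / mu, 1.0 / mu)
        k = svt(sal + y3 / mu, 1.0 / mu)
        # Closed-form least-squares updates for Z and L.
        z = inv_z @ (x.T @ (x - sal @ x - e) + j + (x.T @ y1 - y2) / mu)
        sal = ((x - x @ z - e) @ x.T + k + (y1 @ x.T - y3) / mu) @ inv_l
        e = soft_threshold(x - x @ z - sal @ x + y1 / mu, lam / mu)
        # Constraint residuals drive the multiplier updates.
        r1, r2, r3 = x - x @ z - sal @ x - e, z - j, sal - k
        y1 += mu * r1
        y2 += mu * r2
        y3 += mu * r3
        mu = min(rho * mu, max_mu)
        if max(np.abs(r1).max(), np.abs(r2).max(), np.abs(r3).max()) < tol:
            break
    return z, sal, e


def decompose(image, lam=0.8):
    """Return the low-rank part XZ and the saliency part LX of an image."""
    x = np.asarray(image, dtype=float)
    z, sal, _ = latlrr(x, lam=lam)
    return x @ z, sal @ x
```

For a 256 × 256 image, each iteration needs two 256 × 256 SVDs, and the two matrix inverses are computed once, so the decomposition stays affordable. The sparse term \(E\) is discarded after decomposition, so the low-rank and saliency parts together reproduce the image only up to \(E\).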

Fusion of low-rank parts

The low-rank part mainly reflects the global structure and brightness information of the source images. To fuse the low-rank parts effectively, a CNN model is adopted to extract their deep features; specifically, the deep features of the low-rank parts are computed with the pre-trained VGG-16. A CNN can be regarded as a composition of functions:

\[ F(X_{1}) = f_{L}\left( \cdots f_{2}\left( f_{1}(X_{1};\omega_{1});\omega_{2} \right) \cdots ;\omega_{L} \right) \]

where each function \(f_{l}\) takes the data \(X_{l}\) and a filter bank \(\omega_{l}\) as inputs and outputs \(X_{l + 1}\), \(l \in \{ 1,2,...,L\}\), and \(L\) is the number of layers.

For a pre-trained CNN model, the filter banks \(\omega_{l}\) have been learned from a large dataset such as ImageNet. Given the input low-rank part \(I_{i}^{r}\), its multi-layer features are extracted as follows:

In our work, three convolutional layers, i.e., ‘conv1’, ‘conv2’, and ‘conv3’, are adopted. Then, a block-based average strategy is used to evaluate the weight of each feature.

Next, the initial weights are calculated by a soft-max operator.

The pooling operator in VGG-16 is a subsampling operation: it reduces the spatial size of the feature maps to \(1/s\) of its input, where \(s = 2\) is the stride of the pooling layer. Consequently, the feature maps of the \(i\)-th selected layer are \(1/2^{i - 1}\) the size of the input image in each spatial dimension. After the initial weight maps are obtained, they are upsampled to the spatial size of the source images.
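The weighting steps described above (block-based averaging, normalization across the two sources, upsampling) can be sketched as below. Several details are not specified in the text and are assumptions here: the activity of a feature map is taken as the \(l_{1}\)-norm over channels, the block is a 3 × 3 window (`r=1`), the "soft-max" is implemented as the normalized ratio \(C_{1}/(C_{1}+C_{2})\) (an exponential soft-max would be a drop-in alternative), and upsampling is nearest-neighbour. The feature tensors are assumed to be already extracted, with shape (channels, height, width).

```python
import numpy as np


def activity_map(features):
    """Collapse a (C, h, w) feature tensor to an (h, w) map via the l1-norm over channels."""
    return np.abs(features).sum(axis=0)


def block_average(a, r=1):
    """Average over a (2r+1) x (2r+1) window centred on each pixel (edge padding)."""
    padded = np.pad(a, r, mode="edge")
    h, w = a.shape
    out = np.zeros((h, w))
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (2 * r + 1) ** 2


def layer_weights(feats1, feats2, layer_index, r=1, eps=1e-12):
    """Initial weight maps of two sources at one layer, upsampled to image size."""
    c1 = block_average(activity_map(feats1), r)
    c2 = block_average(activity_map(feats2), r)
    w1 = (c1 + eps) / (c1 + c2 + 2 * eps)  # normalised ratio, so w1 + w2 = 1
    factor = 2 ** (layer_index - 1)         # layer i is 1/2^(i-1) of the input size
    w1 = np.kron(w1, np.ones((factor, factor)))  # nearest-neighbour upsampling
    return w1, 1.0 - w1
```

For example, with 256 × 256 source images the ‘conv3’ features are 64 × 64, so `layer_index=3` gives an upsampling factor of 4 and weight maps of size 256 × 256.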

Finally, the multi-layer features are merged together to obtain the fused low-rank part.
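The text does not state how the per-layer results are merged. The sketch below assumes one simple rule: for each selected layer, form the weighted average of the two low-rank parts with that layer's (upsampled) weight maps, then keep the pixel-wise maximum across layers. A mean across layers would be an equally simple alternative.

```python
import numpy as np


def fuse_low_rank(low1, low2, weight_maps):
    """Fuse two low-rank parts from per-layer weight maps.

    weight_maps: list of (w1, w2) pairs, one per selected layer, each already
    upsampled to the size of the low-rank parts.
    """
    # One weighted-average candidate per layer.
    candidates = [w1 * low1 + w2 * low2 for w1, w2 in weight_maps]
    # Assumed merging rule: keep the pixel-wise maximum across layers.
    return np.max(np.stack(candidates), axis=0)
```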

Fusion of salient components

The saliency parts preserve local detail information. Since the saliency parts of different source images carry strongly complementary information, a sum rule is adopted to fuse them so as to preserve more details:

\[ H = I_{1}^{s} + I_{2}^{s} \]
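A toy example shows why summation suits complementary details. The two saliency arrays below are hypothetical, with each detail present in only one source. The sum keeps both details at full amplitude, whereas a plain average would halve them. Where both sources have strong details at the same pixel, the sum adds them, which is the trade-off of this rule.

```python
import numpy as np


def fuse_saliency(sal1, sal2):
    """Sum rule for the saliency parts: H = I_1^s + I_2^s."""
    return sal1 + sal2


# Hypothetical saliency parts whose details sit at different pixels.
sal1 = np.zeros((3, 3))
sal1[0, 0] = 0.5    # detail present only in source 1
sal2 = np.zeros((3, 3))
sal2[2, 2] = -0.25  # detail present only in source 2

summed = fuse_saliency(sal1, sal2)
averaged = (sal1 + sal2) / 2
print(summed[0, 0], summed[2, 2])      # 0.5 -0.25
print(averaged[0, 0], averaged[2, 2])  # 0.25 -0.125
```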

Image reconstruction

Once the fused low-rank part \(G\) and saliency part \(H\) are obtained, the fused image is reconstructed as follows:

\[ R = G + H \]

where \(R\) is the resulting image.

Experiments

To verify the superiority of the proposed method, several state-of-the-art medical image fusion methods are adopted for comparison, including convolutional neural networks and non-subsampled contourlet transform (CNN-NSCT)37, the nonsubsampled shearlet transform fusion method (NSST)38, the nonsubsampled shearlet transform based structure tensor method (NSST-ST)39, sparse representation (SR)28, the multi-scale transform and sparse representation based fusion method (MST-SR)29, the guided filtering based fusion method (GFF)22, and discrete stationary wavelet transform with an enhanced radial basis function neural network (DSWT-RBFN)40. For the MST-SR method, the Laplacian pyramid is used as the multi-scale transform. In addition, all compared methods use the default parameter settings given in the corresponding publications.

Test images

In our experiment, 44 pairs of multimodal medical images are used as the experimental dataset, i.e., 11 pairs each of CT and MRI, MRI and PET, MRI and SPECT, and MR-T1 and MR-T2 images. Figure 2 shows the source images, which were accurately registered before fusion. Each source image is 256 × 256 pixels.

Objective indexes

To quantitatively assess the fusion performance of different approaches, four widely used objective quality indexes are adopted: standard deviation (SD)41, entropy (EN)41, normalized mutual information (QMI)42, and phase congruency (QPC)41. SD measures the overall contrast of the fused image. EN measures the amount of information in the fused image. QMI measures how much information is transferred from the source images to the fused image. QPC measures how well image details of the source images are preserved in the fused result. For all four indexes, larger values indicate better fusion performance.
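Three of the four indexes can be computed with a few histogram operations, as sketched below for 8-bit grayscale images (color PET and SPECT results would first need a conversion, which is an assumption left out here). QMI is implemented in the normalized form \(Q_{MI} = 2\left[ \frac{MI(A,F)}{H(A)+H(F)} + \frac{MI(B,F)}{H(B)+H(F)} \right]\), which we assume matches the definition of ref. 42. QPC requires a phase congruency model and is omitted.

```python
import numpy as np


def sd(img):
    """Standard deviation of the fused image (overall contrast)."""
    return float(np.std(np.asarray(img, dtype=float)))


def entropy(img):
    """Shannon entropy in bits of the 256-bin grey-level histogram."""
    hist, _ = np.histogram(img, bins=256, range=(0, 256))
    p = hist / hist.sum()
    p = p[p > 0]
    return float(-np.sum(p * np.log2(p)))


def mutual_information(a, b):
    """Mutual information in bits from the 256 x 256 joint grey-level histogram."""
    joint, _, _ = np.histogram2d(np.ravel(a), np.ravel(b), bins=256,
                                 range=[[0, 256], [0, 256]])
    p = joint / joint.sum()
    pa, pb = p.sum(axis=1), p.sum(axis=0)
    nz = p > 0
    return float(np.sum(p[nz] * np.log2(p[nz] / np.outer(pa, pb)[nz])))


def q_mi(a, b, f):
    """Normalized MI: 2 * [MI(A,F) / (H(A)+H(F)) + MI(B,F) / (H(B)+H(F))]."""
    hf = entropy(f)
    return 2.0 * (mutual_information(a, f) / (entropy(a) + hf)
                  + mutual_information(b, f) / (entropy(b) + hf))
```

As a sanity check, an image that is half 0 and half 255 has EN = 1 bit and SD = 127.5, and fusing an image with itself gives QMI = 2, the maximum of this normalized form. Constant images have zero entropy and would make the QMI denominators vanish, so they need special handling.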

Experimental results

CT and MRI image fusion

The first experiment fuses CT and MRI images. Figure 3 presents the fused images obtained by different methods. As shown in Fig. 3, the CNN-NSCT method loses some local details. The NSST method yields a low-contrast fusion result. The NSST-ST method fails to fuse the information of the source CT image well. The fusion result of the SR method exhibits distortion. The MST-SR method loses details of the source MRI image. The GFF method reduces the brightness of the source MRI image. Although the DSWT-RBFN method retains the information of the source images well, the boundaries in its fused image are blurry. In comparison, the proposed method retains the detail information of the source images, and the boundaries in its fused result are clearly visible.

To evaluate the fusion quality of the different approaches objectively, Table 1 reports the average objective metrics on 11 pairs of CT and MRI images. As Table 1 shows, the proposed approach achieves the highest SD, EN, QMI, and QPC values, which further confirms that it fuses CT and MRI images more effectively than the other methods. As for computational time, the running time of the proposed method is acceptable among all studied techniques, and the proposed method runs faster than CNN-NSCT.

MRI and PET image fusion

The second experiment is performed on MRI and PET images. The fused images produced by different methods are shown in Fig. 4. In this example, the CNN-NSCT and NSST methods perform unsatisfactorily because of energy loss. The NSST-ST method fails to preserve the color information of the source PET image well. The SR and GFF methods lead to severe color distortion. The fused image of the DSWT-RBFN method does not retain the color information of the original PET image well. In contrast, the proposed method achieves better visual quality than the other studied methods in terms of both color preservation and detail extraction.

Table 2 presents the average objective indexes of different approaches on 11 pairs of MRI and PET images. The proposed method again achieves the best performance among the compared methods, which further verifies its advantage. Regarding running time, the GFF method is efficient because it only requires image decomposition and fusion without computing any deep features, while the computing time of the proposed method is moderate among all approaches.

MRI and SPECT image fusion

The third experiment is conducted on MRI and SPECT images. Figure 5 presents the fused images of different approaches. The proposed method still retains the energy and details of the source images, whereas the compared methods cannot preserve color fidelity. In addition, Table 3 gives the average objective indexes of different methods on 11 pairs of MRI and SPECT images. Our method outperforms the other studied methods on all metrics, which is consistent with the visual comparison.

MR-T1 and MR-T2 image fusion

The fourth experiment is conducted on MR-T1 and MR-T2 images. The fused results of different approaches are presented in Fig. 6. The NSST-PCNN, SR, and MST-SR methods suffer from detail loss in the fused results. The NSST and DSWT-RBFN methods fail to transfer the MR-T2 information into the fused images well. The GFF method cannot effectively integrate the details of the MR-T1 image. By contrast, the proposed method effectively merges the MR-T1 and MR-T2 images. Furthermore, Table 4 lists the objective metrics of all approaches on 11 pairs of MR-T1 and MR-T2 images. The proposed method again achieves the highest values on all four indexes, which further illustrates its effectiveness.

Comparative study

In this subsection, several CNN-based medical image fusion methods are adopted for comparison, including the transfer learning technique-guided VGG19 model (TL-VGG19)43, CNN-based fusion in the NSST domain (NSST-CNN)44, the asymmetric dual deep network with sharing mechanism (ADDNS)45, the perceptual high frequency CNN (PHF-CNN)37, and the multiscale double-branch residual attention network (MSDRA)46. The experiment is performed on 11 pairs of CT and MRI images, and Table 5 presents the objective results of all compared methods. The proposed method still yields the highest fusion performance among all techniques, which further verifies its effectiveness.

Conclusions

In this work, a novel deep medical image fusion method based on a deep convolutional neural network (DCNN) is proposed that learns image features directly from the original images. Specifically, the source images are first decomposed by low rank representation into principal and salient components. Next, deep features are extracted from the principal components via the DCNN and fused by a weighted-average rule. Then, considering the complementarity of the salient components obtained by the low rank representation, a simple yet effective sum rule is designed to fuse them. Finally, the fused result is obtained by reconstructing the fused principal and salient components. Experimental results verify that the proposed fusion method outperforms several state-of-the-art approaches. In future work, the proposed method will be extended to multi-sensor image fusion.

Data availability

All images used in this work were collected from the Whole Brain Atlas database created by Harvard Medical School (available at http://www.med.harvard.edu/AANLIB/).

References

Nikolaev, A. V. et al. Quantitative evaluation of an automated cone-based breast ultrasound scanner for MRI–3D US image fusion. IEEE Trans. Med. Imaging 40, 1229–1239 (2021).

Duan, P. et al. Fusion of dual spatial information for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 59(9), 7726–7738 (2020).

Li, S., Zhang, K., Duan, P. & Kang, X. Hyperspectral anomaly detection with kernel isolation forest. IEEE Trans. Geosci. Remote Sens. 58, 319–329 (2019).

Zhu, Z. et al. Brain tumor segmentation based on the fusion of deep semantics and edge information in multimodal MRI. Inf. Fusion 91, 376–387 (2023).

Greensmith, J., Aickelin, U. & Tedesco, G. Information fusion for anomaly detection with the dendritic cell algorithm. Inf. Fusion 11, 21–34 (2010).

Algarni, A. D. Automated medical diagnosis system based on multi-modality image fusion and deep learning. Wirel. Personal Commun. 111, 1033–1058 (2020).

Liu, J., Kang, N. & Man, Y. Evidence fusion theory in healthcare. J. Manag. Anal. 5, 276–286 (2018).

Zhu, Z., Zheng, M., Qi, G., Wang, D. & Xiang, Y. A phase congruency and local Laplacian energy based multi-modality medical image fusion method in NSCT domain. IEEE Access 7, 20811–20824 (2019).

Kong, W. et al. Multimodal medical image fusion using gradient domain guided filter random walk and side window filtering in framelet domain. Inf. Sci. 585, 418–440 (2022).

James, A. P. & Dasarathy, B. V. Medical image fusion: A survey of the state of the art. Inf. Fusion 19, 4–19 (2014).

Wang, Z. & Ma, Y. Medical image fusion using M-PCNN. Inf. Fusion 9, 176–185 (2008).

Xu, Z. Medical image fusion using multi-level local extrema. Inf. Fusion 19, 38–48 (2014).

Xu, L., Si, Y., Jiang, S., Sun, Y. & Ebrahimian, H. Medical image fusion using a modified shark smell optimization algorithm and hybrid wavelet-homomorphic filter. Biomed. Signal Process. Control 59, 101885 (2020).

Hu, Q., Hu, S. & Zhang, F. Multi-modality medical image fusion based on separable dictionary learning and Gabor filtering. Signal Process. Image Commun. 83, 115758 (2020).

Du, J., Li, W., Lu, K. & Xiao, B. An overview of multi-modal medical image fusion. Neurocomputing 215, 3–20 (2016).

Li, S., Yin, H. & Fang, L. Group-sparse representation with dictionary learning for medical image denoising and fusion. IEEE Trans. Biomed. Eng. 59, 3450–3459 (2012).

Zhou, J. et al. A fusion algorithm based on composite decomposition for PET and MRI medical images. Biomed. Signal Process. Control 76, 103717 (2022).

Seal, A. et al. PET-CT image fusion using random forest and à-trous wavelet transform. Int. J. Numer. Methods Biomed. Eng. 34, e2933 (2018).

Panigrahy, C., Seal, A. & Mahato, N. K. MRI and SPECT image fusion using a weighted parameter adaptive dual channel PCNN. IEEE Signal Process. Lett. 27, 690–694 (2020).

Sengupta, A., Seal, A., Panigrahy, C., Krejcar, O. & Yazidi, A. Edge information based image fusion metrics using fractional order differentiation and sigmoidal functions. IEEE Access 8, 88385–88398 (2020).

Ji, Z., Kang, X., Zhang, K., Duan, P. & Hao, Q. A two-stage multi-focus image fusion framework robust to image mis-registration. IEEE Access 7, 123231–123243 (2019).

Li, S., Kang, X., Hu, J. & Yang, B. Image matting for fusion of multi-focus images in dynamic scenes. Inf. Fusion 14, 147–162 (2013).

Srivastava, R., Prakash, O. & Khare, A. Local energy-based multimodal medical image fusion in curvelet domain. IET Comput. Vis. 10, 513–527 (2016).

Bhatnagar, G., Wu, Q. J. & Liu, Z. Directive contrast based multimodal medical image fusion in NSCT domain. IEEE Trans. Multim. 15, 1014–1024 (2013).

Jin, X. et al. Multimodal sensor medical image fusion based on nonsubsampled shearlet transform and S-PCNNs in HSV space. Signal Process. 153, 379–395 (2018).

Yin, M., Duan, P., Liu, W. & Liang, X. A novel infrared and visible image fusion algorithm based on shift-invariant dual-tree complex shearlet transform and sparse representation. Neurocomputing 226, 182–191 (2017).

Yin, M., Liu, X., Liu, Y. & Chen, X. Medical image fusion with parameter-adaptive pulse coupled neural network in nonsubsampled shearlet transform domain. IEEE Trans. Instrum. Meas. 68, 49–64 (2018).

Yang, B. & Li, S. Multifocus image fusion and restoration with sparse representation. IEEE Trans. Instrum. Meas. 59, 884–892 (2009).

Liu, Y., Liu, S. & Wang, Z. A general framework for image fusion based on multi-scale transform and sparse representation. Inf. Fusion 24, 147–164 (2015).

Liu, Y., Chen, X., Ward, R. K. & Wang, Z. J. Image fusion with convolutional sparse representation. IEEE Signal Process. Lett. 23, 1882–1886 (2016).

Liu, Y., Chen, X., Peng, H. & Wang, Z. Multi-focus image fusion with a deep convolutional neural network. Inf. Fusion 36, 191–207 (2017).

Li, H. & Wu, X. J. DenseFuse: A fusion approach to infrared and visible images. IEEE Trans. Image Process. 28, 2614–2623 (2018).

Liu, Y. et al. Deep learning for pixel-level image fusion: Recent advances and future prospects. Inf. Fusion 42, 158–173 (2018).

Zhang, Y. et al. IFCNN: A general image fusion framework based on convolutional neural network. Inf. Fusion 54, 99–118 (2020).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. Preprint at https://arxiv.org/abs/1409.1556 (2014).

Liu, G., Lin, Z. & Yu, Y. Robust subspace segmentation by low-rank representation. In Proc. of the 27th International Conference on Machine Learning (ICML-10), 663–670 (2010).

Wang, Z. et al. Medical image fusion based on convolutional neural networks and non-subsampled contourlet transform. Expert Syst. Appl. 171, 114574 (2021).

Diwakar, M., Singh, P. & Shankar, A. Multi-modal medical image fusion framework using co-occurrence filter and local extrema in NSST domain. Biomed. Signal Process. Control 68, 102788 (2021).

Liu, X., Mei, W. & Du, H. Structure tensor and nonsubsampled shearlet transform based algorithm for CT and MRI image fusion. Neurocomputing 235, 131–139 (2017).

Chao, Z. et al. Medical image fusion via discrete stationary wavelet transform and an enhanced radial basis function neural network. Appl. Soft Comput. 118, 108542 (2022).

Liu, Z. et al. Objective assessment of multiresolution image fusion algorithms for context enhancement in night vision: A comparative study. IEEE Trans. Pattern Anal. Mach. Intell. 34, 94–109 (2011).

Hossny, M., Nahavandi, S. & Creighton, D. Comments on “Information measure for performance of image fusion”. Electron. Lett. https://doi.org/10.1049/el:20081754 (2008).

Do, O. C., Luong, C. M., Dinh, P. H. & Tran, G. S. An efficient approach to medical image fusion based on optimization and transfer learning with VGG19. Biomed. Signal Process. Control 87, 105370 (2024).

Sebastian, J. & King, G. G. A novel MRI and PET image fusion in the NSST domain using YUV color space based on convolutional neural networks. Wirel. Personal Commun. https://doi.org/10.1007/s11277-023-10542-w (2023).

Huang, W. et al. ADDNS: An asymmetric dual deep network with sharing mechanism for medical image fusion of CT and MR-T2. Comput. Biol. Med. 166, 107531 (2023).

Li, W. et al. A multiscale double-branch residual attention network for anatomical–functional medical image fusion. Comput. Biol. Med. 141, 105005 (2022).

Acknowledgements

This work was financially supported by the Key Projects of Natural Science Research in Universities of Anhui Province (Grant no. 2022AH051372), the Overseas Visit Training Projects of Young Backbone Teachers (Grant no. JWFX2023040), the Horizontal Projects of Suzhou University (Grant no. 2023xhx186), the Quality Engineering Balance Fund Projects of Suzhou University (Grant no. szxy2023jyjf78).

Author information

Contributions

All work in this manuscript was done independently by corresponding author N.L.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liang, N. Medical image fusion with deep neural networks. Sci Rep 14, 7972 (2024). https://doi.org/10.1038/s41598-024-58665-9

DOI: https://doi.org/10.1038/s41598-024-58665-9
