Abstract

In this paper, we propose a method for predicting epileptic seizures that uses a model pre-trained with supervised contrastive learning and a hybrid model combining residual networks (ResNet) and long short-term memory (LSTM). The proposed training approach encompasses three key phases: pre-processing, pre-training as a pretext task, and training as a downstream task. In the pre-processing phase, the data are transformed into a spectrogram image using the short-time Fourier transform (STFT), which extracts both time and frequency information. This step compensates for the inherent complexity and irregularity of electroencephalography (EEG) data, which often hampers effective data analysis. During the pre-training phase, augmented data are generated from the original dataset using techniques such as band-stop filtering and temporal cutout. Subsequently, a ResNet model is pre-trained with a supervised contrastive loss to learn the representation of the spectrogram image. In the training phase, a hybrid model is constructed by combining ResNet, initialized with weight values from the pre-trained model, and LSTM. This hybrid model extracts image features and time information to enhance prediction accuracy. The proposed method’s effectiveness is validated using datasets from CHB-MIT and Seoul National University Hospital (SNUH). The method’s generalization ability is confirmed through leave-one-out cross-validation. In the experiments, the accuracy, sensitivity, and false positive rate (FPR) were 91.90%, 89.64%, and 0.058 for CHB-MIT and 83.37%, 79.89%, and 0.131 for SNUH. The experimental results demonstrate that the proposed method outperforms conventional methods.

Introduction

Epilepsy is a chronic neurological disorder that affects about 50 million people, which is approximately 1% of the world’s population. Seizures are typical clinical manifestations of epilepsy, characterized by sudden and temporary neurobehavioral symptoms caused by abnormally hypersynchronous electrical discharges from overexcited neurons in the brain1,2. Except for a few special cases, seizures occur irregularly, and patients’ premonitory symptoms are uncertain. Moreover, the exact onset time cannot be estimated because it differs among individuals. Because of this unpredictability, people with epilepsy are limited in their social activities and exposed to trauma and danger, which substantially impacts their quality of life3. Furthermore, patients with severe epilepsy are hospitalized and managed throughout the day by medical personnel. However, there are not enough medical personnel to manage all patients, and correct judgments cannot be made based solely on monitoring patient behavior. As a result, various studies related to epilepsy are being conducted to ensure the stability of daily life for epilepsy patients4 and to enable precise prevention and treatment with limited medical resources.

Because EEG detects the electrical signals generated by the brain during seizures, absence seizures and focal seizures without awareness can also be identified5. Therefore, from the 1970s to the present, EEG data have been widely utilized in seizure prediction studies. Research on epilepsy is primarily divided into seizure detection6,7,8 and seizure prediction. Both are essential; research began with seizure detection, and current work focuses mostly on seizure prediction. Early studies of seizure prediction relied on manual feature extraction techniques, which are unsuitable for deriving distinct patterns from massive datasets. Consequently, recent research on seizure prediction employs deep learning algorithms suitable for recognizing complicated patterns in large datasets.

Our main contributions can be summarized as follows: (1) We propose a pre-processing method that compensates for the scarcity of EEG training data in deep learning and makes the data well suited to feature extraction with ResNet. (2) We pre-train the image representation to achieve the best possible performance with small amounts of data. (3) To extract various features from time-sequence image data, we propose a hybrid model combining ResNet and LSTM.

Related work

The presence of differences between pre-ictal and inter-ictal brain waves is the fundamental assumption of seizure prediction9,10. Philippa et al. and Shufang Li et al. linearly assessed the spike rate in the segment using an EEG raw signal to predict seizures11. Ali Shahidi Zandi et al. collected features using the positive zero-crossing approach and made predictions by classifying inter-ictal and pre-ictal segments using a Bayesian Gaussian mixture model12. Dongrae Cho et al. decomposed spectral components using various filtering techniques, including bandpass filtering, empirical mode decomposition, and multivariate empirical mode decomposition, and made predictions by comparing the phase synchronization of the gamma frequency band to that of other frequency bands13. The majority of past research has focused on signal analysis techniques, which are unsuitable for irregular and complex EEG data. To extract the frequency components of EEG data, numerous researchers use empirical mode decomposition14, continuous wavelet transform (CWT)15, discrete wavelet transform (DWT)16, and STFT17. Furthermore, many efforts have been made to extract meaningful information from EEG data, such as principal component analysis (PCA)16, approximate entropy18, and the Hjorth parameter19. Various classifiers then classify the extracted features as pre-ictal or inter-ictal. Machine learning techniques such as the Bayesian Gaussian mixture model12, Support Vector Machine (SVM)20,21, and K-Nearest Neighbor (KNN)22 have shown impressive outcomes. In addition, recent research employing deep learning models, which is closely related to this study, has shown advanced results. In contrast to prior studies, Haidar Khan et al. transformed signals using the Wavelet Transform (WT) and made predictions using Convolutional Neural Networks (CNN) together with changes in the probability distribution measured by the Kullback-Leibler divergence (KL divergence), a measure of the difference between probability distributions. Kostas et al. made predictions using an LSTM model capable of reflecting the information of time-sequence signals23. Liu et al. proposed a novel patient-independent approach in epilepsy research by applying an advanced form of LSTM known as the Bidirectional Long Short-Term Memory (Bi-LSTM) network24. More recently, CNN-based deep learning models such as 3D-CNN25,26 and ResNet27 have been used as classification methods.

As the previous studies show, feature extraction and classification methods are equally crucial for all classification algorithms. When determining the method for feature extraction and classification, it is necessary to consider the characteristics and limitations of the data. Variable patient characteristics make it difficult to use EEG data for patient-independent seizure prediction28. Therefore, we performed patient-specific seizure prediction. Data scarcity is a disadvantage of patient-specific methods. Additionally, the inherent disadvantages of EEG data are their complexity and irregularity29. To achieve superior performance with limited data, we propose a specific pre-trained model consisting of ResNet and supervised contrastive loss. We also augment the data with a band-stop filter and temporal cutout. Moreover, we propose a hybrid model that combines ResNet and LSTM to reflect both time and frequency information during training. We use STFT to transform the irregular and complex EEG signal into data with time-frequency information.

Database

The dataset used in this research can be classified according to the reference electrode selection method, using two methods: ’Unipolar reference’ and ’Bipolar reference’. The SNUH dataset was measured using the ’Unipolar reference’ method, while the CHB-MIT dataset used the ’Bipolar reference’ method. In the ’Unipolar reference’ method, the GND value is determined by averaging all the electrodes and converting them into digital signals, with all electrodes sharing the same GND. The difference between the individual signal and the signal measured at the common ground is recorded. However, this method is susceptible to fine noise or common-mode signals, which can also be amplified and output. On the other hand, in the ’Bipolar reference’ method, each adjacent electrode is used as a GND to convert it into a digital signal. This method is resistant to noise from the common signal between electrode attachment points, as the measurement procedure eliminates it. However, it makes it difficult to observe brain waves at a specific location. A description of the two datasets is included below.

CHB-MIT scalp EEG dataset

The CHB-MIT dataset serves as a validated dataset primarily utilized in research related to seizure detection and prediction. It comprises data gathered from Children’s Hospital Boston, encompassing a total of 844 hours of data and 245 recorded seizures. The scalp EEG information was recorded using 22 electrodes with a sampling rate of 256 Hz and extracted using the bipolar method. Among the 22 electrode channels, 18 common channels (’FP1-F7’, ’F7-T7’, ’T7-P7’, ’P7-O1’, ’FP1-F3’, ’F3-C3’, ’C3-P3’, ’P3-O1’, ’FP2-F4’, ’F4-C4’, ’C4-P4’, ’P4-O2’, ’FP2-F8’, ’F8-T8’, ’T8-P8’, ’P8-O2’, ’FZ-CZ’, ’CZ-PZ’) were used for training purposes.

SNUH scalp EEG dataset

This study was approved by the Institutional Review Board of the Seoul National University Hospital (IRB No. H-1710-030-891). Written informed consent from the patients was waived by the Institutional Review Board of Seoul National University Hospital. All methods were carried out in accordance with relevant guidelines and regulations. The SNUH dataset was collected from Seoul National University Hospital and included 845 h of data and 78 seizures. Scalp EEG information was recorded using 21 electrodes with a sampling rate of 200 Hz and was extracted with a unipolar reference. All 21 electrode channels (’Fp1-AVG’, ’F3-AVG’, ’C3-AVG’, ’P3-AVG’, ’Fp2-AVG’, ’F4-AVG’, ’C4-AVG’, ’P4-AVG’, ’F7-AVG’, ’T1-AVG’, ’T3-AVG’, ’T5-AVG’, ’O1-AVG’, ’F8-AVG’, ’T2-AVG’, ’T4-AVG’, ’T6-AVG’, ’O2-AVG’, ’Fz-AVG’, ’Cz-AVG’, ’Pz-AVG’) were used for training.

Pre-processing

The amount of data, the model, and the characteristics of the data all significantly influence the performance of models in data-based supervised learning. EEG data has three disadvantages: a class imbalance between pre-ictal and inter-ictal, an insufficient data quantity, and the complexity and irregularity of the data, making analysis difficult. These disadvantages directly affect the model’s performance. We addressed these issues during the pre-processing phase.

Data sampling

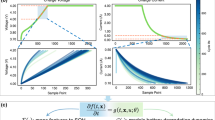

As illustrated in Fig. 1, we defined the period before ictal onset as “pre-ictal” and set its duration to 10, 15, and 30 min. “Inter-ictal” is defined as the period more than 3 hours away from a seizure, when the seizure waveform is absent from the EEG30. The validation datasets, CHB-MIT and SNUH, exhibit a class imbalance between pre-ictal and inter-ictal because the number of ictal events is small relative to the total recording length. When the classes in a dataset differ greatly in size, the majority class is given more weight during training. In a seizure dataset with a substantial proportion of inter-ictal data, overall accuracy may increase while sensitivity decreases. As sensitivity is directly related to the patient’s life, resolving the difference in distribution between the two classes can lead to improved performance. To resolve the imbalance, we employed undersampling to extract inter-ictal data of the same length as the pre-ictal data, as depicted in Fig. 1. Additionally, oversampling was conducted to supplement the existing limited data and compensate for information loss during undersampling. As shown in Fig. 2, the window size was set to 10 s, and the sliding window was advanced in 1 s steps to generate overlapping data. Through data sampling, the data imbalance was resolved, and insufficient data were supplemented.
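The sampling step can be illustrated with a short sketch. It assumes that undersampling simply truncates the inter-ictal segment to the pre-ictal length before windowing; the function name and the 1 h inter-ictal length are hypothetical and serve only as an example.

```python
def sliding_windows(n_samples, fs, win_s=10, step_s=1):
    """Return (start, end) sample indices of overlapping windows."""
    win, step = int(win_s * fs), int(step_s * fs)
    return [(s, s + win) for s in range(0, n_samples - win + 1, step)]


fs = 256                       # CHB-MIT sampling rate in Hz
pre_len = 10 * 60 * fs         # 10 min pre-ictal segment
inter_len = 60 * 60 * fs       # hypothetical 1 h inter-ictal segment

# Undersampling (assumed form): keep only as much inter-ictal data
# as there is pre-ictal data, so both classes have the same length.
inter_len = min(inter_len, pre_len)

# Oversampling: 10 s windows advanced in 1 s steps (90% overlap).
pre = sliding_windows(pre_len, fs)
inter = sliding_windows(inter_len, fs)
print(len(pre), len(inter))
```

Under these assumptions, a 10 min segment yields 591 overlapping 10 s windows per class, compared with 60 windows if the segment were cut without overlap.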

STFT

The irregular and complex raw EEG data presented in Fig. 3a was transformed into a spectrogram image with the x-axis indicating time and the y-axis representing frequency using STFT, as shown in Fig. 3b. As a spectrogram, the power value of the frequency band at a particular time can be easily observed, and it can be analyzed using both the time information on the x-axis and the image characteristics.

Equation (1) gives the STFT of a discrete digital signal. Here, x[n] represents the raw signal in the time domain, m and n denote the time axes, and \(\omega\) signifies the frequency axis. w[] refers to the window function. For continuous data analysis, the Hanning window function with a window length of 1 s and 50% overlap was applied to enhance the time resolution31. As depicted in Fig. 3c, the data were constructed using only the information-rich 0–60 Hz band. In this way, the difficult-to-analyze EEG signals were transformed into spectrograms containing time-frequency information, which facilitates feature extraction from the data.
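For reference, the discrete STFT is conventionally written as follows with the symbols defined above. This is the textbook form, given here as an assumption about Equation (1) rather than a transcription of it.

$$ X(m, \omega) = \sum_{n=-\infty}^{\infty} x[n]\, w[n-m]\, e^{-j\omega n} \tag{1} $$

The window \(w[n-m]\) selects the samples around time index m, so \(|X(m, \omega)|^2\) gives the power at frequency \(\omega\) near time m, which is the value displayed in the spectrogram.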

This is a pre-processing procedure for a single channel. The data shown in (a) is in its original form, referred to as raw EEG data. The spectrogram image depicted in (b) has undergone the application of STFT. The image (c) represents data that has been truncated to a frequency range of 0–60 Hz. For the pre-training phase, data augmentation techniques were applied to images (d, e). Specifically, temporal cut-out and band-stop filters were utilized.

Pretext task: pre-training

We conducted pre-training to achieve high performance with limited data. The original data were augmented using a band-stop filter and temporal cutout, and then used to train a model consisting of ResNet and supervised contrastive loss. Training with augmented data can prevent overfitting, and the image representation is acquired in advance. Even with a small dataset, the training model could determine optimal parameters through the use of the pre-trained ResNet.

Data augmentation

The augmentation method has primarily been employed in image processing within the field of vision32, and it has also found applications in signal processing33 and other domains. For EEG data, which contains both signal information and STFT-converted image data, a band-stop filter and temporal cutout were employed to satisfy both requirements. The STFT-applied image takes the form of a horizontally and vertically cropped representation when specific frequency band and time zone information is removed. The images shown in Fig. 3d, e were generated through augmentation. The temporal cutout involved vertical cropping, removing 6 out of 10 seconds at random. Experiments were performed to determine the length of time and the width of the frequency band to remove. Augmented images were used as input for the pre-trained model.
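The two augmentations can be sketched as masking operations on a spectrogram stored as a list of time frames. This is an illustration only: it assumes the removed region is set to zero, and the 10-bin stop band and the 12-frame cutout (roughly 6 s at a 0.5 s hop) are hypothetical values, not parameters reported in this paper.

```python
import random


def temporal_cutout(spec, cut_frames, rng):
    """Zero a random contiguous run of time frames (a vertical strip)."""
    start = rng.randrange(len(spec) - cut_frames + 1)
    return [[0.0] * len(row) if start <= t < start + cut_frames else list(row)
            for t, row in enumerate(spec)]


def band_stop(spec, band_bins, rng):
    """Zero a random contiguous run of frequency bins (a horizontal strip)."""
    start = rng.randrange(len(spec[0]) - band_bins + 1)
    return [[0.0 if start <= f < start + band_bins else v
             for f, v in enumerate(row)]
            for row in spec]


rng = random.Random(0)                       # fixed seed for repeatability
spec = [[1.0] * 60 for _ in range(21)]       # dummy 21 frames x 60 bins
cut = temporal_cutout(spec, cut_frames=12, rng=rng)
band = band_stop(spec, band_bins=10, rng=rng)
```

Both functions return new lists and leave the input untouched, so the same original spectrogram can be used to produce the two augmented views required by contrastive pre-training.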

Residual learning

CNN34, which is effective in analyzing patterns in images, has been widely utilized in the field of computer vision. Deeper layers within CNN models are recognized as crucial for determining the model’s performance. However, contrary to initial expectations, increasing the depth of layers in CNN-based models often leads to degradation issues35. ResNet was introduced as a solution to address this degradation problem. It employs the model structure of VGGNet (Visual Geometry Group Net)36 and incorporates shortcut connections to add input values to output values35.

In this study, we employ ResNet-18, the shallowest model in the ResNet architecture. This decision is influenced by the experimental dataset, consisting of small images with dimensions of (21 × 60). Smaller images inherently carry less information, making it more challenging to effectively capture essential features and patterns within deeper networks. ResNet-18 consists of five blocks, as illustrated in Fig. 4. Each block includes batch normalization, Rectified Linear Unit (ReLU), and max pooling. The input dimensions for the CHB-MIT and SNUH datasets are (18 × 21 × 60) and (21 × 21 × 60), respectively. These dimensions represent the number of electrodes, the temporal information derived from a window size of 1 second and 50% overlap, and the frequency components. For CHB-MIT, the resulting feature maps from each block are as follows: (64 × 21 × 60), (64 × 21 × 60), (128 × 11 × 30), (256 × 6 × 15), and (512 × 3 × 8). The final feature map obtained from ResNet is transformed into a 512-dimensional vector through adaptive average pooling and a flatten layer. Throughout the pre-training and training processes, the output from ResNet is utilized as input values for the supervised contrastive loss and the LSTM layer, respectively.
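The dimensions quoted above can be checked with simple arithmetic. The sketch below assumes 3 × 3 convolutions with padding 1, strides of 1, 1, 2, 2, 2 across the five blocks, and an STFT that pads half a window at each edge of the 10 s segment. These settings are assumptions chosen because they reproduce the reported sizes; they are not stated in this paper.

```python
def conv_out(size, kernel=3, stride=1, padding=1):
    """Output length of a convolution along one axis."""
    return (size + 2 * padding - kernel) // stride + 1


def stft_frames(n_samples, nperseg, noverlap, pad_edges=True):
    """Number of STFT frames; pad_edges adds nperseg // 2 samples per side."""
    if pad_edges:
        n_samples += 2 * (nperseg // 2)
    return (n_samples - nperseg) // (nperseg - noverlap) + 1


fs = 256                                                     # CHB-MIT, Hz
frames = stft_frames(10 * fs, nperseg=fs, noverlap=fs // 2)  # 1 s, 50%
shape = (frames, 60)                                         # 60 bins kept

channels = [64, 64, 128, 256, 512]
strides = [1, 1, 2, 2, 2]                # assumed stride of each block
maps = []
for c, s in zip(channels, strides):
    shape = (conv_out(shape[0], stride=s), conv_out(shape[1], stride=s))
    maps.append((c, shape[0], shape[1]))
print(frames, maps)
```

With these assumptions the 10 s window yields 21 time frames, and the feature-map sizes match the sequence from (64 × 21 × 60) down to (512 × 3 × 8).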

Supervised contrastive learning

Contrastive learning has its origins in metric learning37 and is currently studied primarily as a learning technique for pre-trained models. Among the notable approaches are self-supervised contrastive learning38 and supervised contrastive learning39. Self-supervised contrastive learning is an unsupervised learning algorithm that is appropriate for large quantities of unlabeled data, but it cannot outperform supervised learning. Supervised contrastive learning was proposed to address this deficiency. In contrast to self-supervised contrastive learning, loss values are allocated based on class. In other words, it is a method of supervised learning using labeled data. Equation (2) represents the self-supervised contrastive loss, while Equation (3) denotes the supervised contrastive loss.
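For reference, the two losses are commonly written as below in the standard formulation of supervised contrastive learning (Khosla et al.). They are given here as an assumption about Equations (2) and (3), with \(A(i) \equiv I \setminus \{i\}\) denoting all samples in the batch other than the anchor i.

$$ \mathcal{L}^{self} = \sum_{i \in I} -\log \frac{\exp (z_i \cdot z_{j(i)} / \tau )}{\sum_{a \in A(i)} \exp (z_i \cdot z_a / \tau )} \tag{2} $$

$$ \mathcal{L}^{sup} = \sum_{i \in I} \frac{-1}{|P(i)|} \sum_{p \in P(i)} \log \frac{\exp (z_i \cdot z_p / \tau )}{\sum_{a \in A(i)} \exp (z_i \cdot z_a / \tau )} \tag{3} $$

In this form the denominator contains the \(2(N-1)\) negative terms together with the positive term or terms, and the only difference between the two losses is that Equation (3) averages over every same-class sample in \(P(i)\) instead of using the single augmented partner \(z_{j(i)}\).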

The symbol \(\cdot\) represents the dot product, and \(\tau\) is the temperature hyperparameter. When the batch size is N, \(I \equiv \{1\ldots 2N\}\) is the index set of the augmented samples, so 2N indexes are included. \(z_{j(i)}\) represents the single positive sample, which is the other augmented image from the same source, while the remaining \(2(N-1)\) indexes represent negative samples, denoted by \(z_a\). In the denominator, the term \(z_i \cdot z_a\) represents the similarity comparisons for negative samples and is repeated \(2(N-1)\) times. In the numerator, \(z_i \cdot z_{j(i)}\) compares similarity only with the one image augmented from the same image. With the exception of that one augmented image, all images are considered negative.

In Equation (3), P(i) represents the set of samples from the same class as the anchor, which are considered positive. Positive and negative samples are separated by class, and the loss is calculated as the mean similarity value for all positive samples39. In supervised contrastive loss during training, the loss is determined by comparing data within the same batch. Therefore, the larger the positive sample size and batch size, the better the performance. We conducted pre-training using supervised contrastive learning, which clearly demonstrates distinctions between objects (Fig. 5).
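A minimal sketch of the supervised contrastive loss in plain Python is given below to make the computation concrete. It assumes L2-normalised embeddings, averages over anchors instead of summing, and uses a hypothetical temperature of 0.1; a real training loop would use a tensor library.

```python
import math


def supcon_loss(z, labels, tau=0.1):
    """Supervised contrastive loss for a batch of normalised embeddings."""
    n = len(z)
    total, anchors = 0.0, 0
    for i in range(n):
        # Scaled dot-product similarity between anchor i and every sample.
        sims = [sum(a * b for a, b in zip(z[i], z[k])) / tau for k in range(n)]
        others = [k for k in range(n) if k != i]
        # Log of the denominator over all samples except the anchor,
        # computed with the log-sum-exp trick for numerical stability.
        m = max(sims[k] for k in others)
        log_denom = m + math.log(sum(math.exp(sims[k] - m) for k in others))
        positives = [k for k in others if labels[k] == labels[i]]
        if not positives:
            continue  # an anchor without positives contributes nothing
        total -= sum(sims[p] - log_denom for p in positives) / len(positives)
        anchors += 1
    return total / anchors if anchors else 0.0


z = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
aligned = supcon_loss(z, [0, 0, 1, 1])    # same-class embeddings coincide
shuffled = supcon_loss(z, [0, 1, 0, 1])   # same-class embeddings orthogonal
print(aligned < shuffled)
```

The loss is small when samples of the same class lie close together and large when they do not, which is the behaviour pre-training relies on.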

Our proposed method comprises two key modules: the Pretext task and the Downstream task. In the Pretext task, data augmentation with a band-stop filter and temporal cutout is applied, followed by the training of a ResNet model with a supervised contrastive loss. This results in the generation of a pre-trained representation for the augmented data. In the Downstream task, the pre-trained ResNet is combined with the LSTM and fine-tuned, and training is conducted on the preprocessed original data.

Downstream task: training

LSTM

As a deep learning model derived from the Recurrent Neural Network (RNN), the LSTM model has proven effective in multiple fields with time-dependent or sequence-based data, including speech recognition, language modeling, and translation. Additionally, to address the vanishing gradient problem that RNNs exhibit on data with long-term dependencies, the LSTM transmits information over long distances through the cell state without losing it. Figure 6 illustrates the internal structure of the LSTM cell, encompassing the forget gate, input gate, and output gate.

The LSTM’s calculation procedure is as follows: \(C_t\) represents the cell state value, \(h_t\) denotes the hidden state value, \(x_t\) is the input value, \(\sigma\) signifies the sigmoid function, tanh is the hyperbolic tangent function, and \(f_t\), \(i_t\), \({\tilde{C}}_t\) and \(o_t\) represent the output values of each gate.

(a) Equation (4) represents the forget gate. The sigmoid function produces a value ranging between 0 and 1, indicating the extent to which past information should be discarded. A value closer to 0 implies less retention of information.

$$ f_t = \sigma (W_f \cdot [h_{t-1}, x_t] + b_f) \tag{4} $$

(b) Equations (5) and (6) correspond to the input gate, responsible for selecting crucial information from incoming data. Equation (5) defines the value to be updated using the sigmoid function, while Equation (6) calculates a new candidate value vector \({\tilde{C}}_t\), which will contribute to updating the cell state.

$$ i_t = \sigma (W_i \cdot [h_{t-1}, x_t] + b_i) \tag{5} $$

$$ {\tilde{C}}_t = \tanh (W_c \cdot [h_{t-1}, x_t] + b_c) \tag{6} $$

(c) Equation (7) updates \(C_{t-1}\) to \(C_t\). The previous cell state \(C_{t-1}\) is multiplied by the output \(f_t\) of the forget gate, and the product of the input gate value \(i_t\) and the candidate \({\tilde{C}}_t\) is then added to it.

$$ C_t = f_t * C_{t-1} + i_t * {\tilde{C}}_t \tag{7} $$

(d) Equations (8) and (9) represent the output gate, responsible for generating the final output. In Equation (8), the sigmoid function determines which parts of the cell state are output. In Equation (9), the output \(h_t\) is obtained by multiplying the result of Equation (8) by \(\tanh (C_t)\).

$$ o_t = \sigma (W_o \cdot [h_{t-1}, x_t] + b_o) \tag{8} $$

$$ h_t = o_t * \tanh (C_t) \tag{9} $$

As demonstrated in the previous equation, the cell state selectively discards irrelevant past information, incorporates pertinent current information, and iteratively updates itself using the gates. This enables the LSTM model to exhibit outstanding performance, even when dealing with data that exhibits long-term dependencies40.
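The gate computations can be traced with a single-step sketch in plain Python using the standard LSTM gate equations. The parameter dictionary and helper names are hypothetical, and the zero weights in the usage example are chosen only so that the result can be checked by hand.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def affine(W, b, v):
    """Return W v + b for a list-of-lists matrix W."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]


def lstm_step(x_t, h_prev, c_prev, p):
    """One LSTM time step: forget, input, cell update, output."""
    hx = h_prev + x_t                                   # [h_{t-1}, x_t]
    f = [sigmoid(v) for v in affine(p["W_f"], p["b_f"], hx)]
    i = [sigmoid(v) for v in affine(p["W_i"], p["b_i"], hx)]
    c_tilde = [math.tanh(v) for v in affine(p["W_c"], p["b_c"], hx)]
    c = [ft * cp + it * ct
         for ft, cp, it, ct in zip(f, c_prev, i, c_tilde)]
    o = [sigmoid(v) for v in affine(p["W_o"], p["b_o"], hx)]
    h = [ot * math.tanh(ct) for ot, ct in zip(o, c)]
    return h, c


# Hidden size 2, input size 3, all weights and biases zero.
zeros = [[0.0] * 5 for _ in range(2)]
params = {k: zeros for k in ("W_f", "W_i", "W_c", "W_o")}
params.update({k: [0.0, 0.0] for k in ("b_f", "b_i", "b_c", "b_o")})
h, c = lstm_step([1.0, 2.0, 3.0], [0.0, 0.0], [1.0, 1.0], params)
print(h, c)
```

With zero weights every gate outputs 0.5 and the candidate is 0, so the cell state halves from 1.0 to 0.5 and the hidden state becomes 0.5 · tanh(0.5), which illustrates how the forget gate scales the stored information.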

ResNet-LSTM hybrid model

The STFT pre-processing step produces image data with time information along the x-axis and frequency information along the y-axis. In this study, time and frequency information was used to extract data characteristics using a hybrid model combining ResNet and LSTM. ResNet was used to extract the image features, which were extracted as 512-dimensional vector values. It was delivered to the LSTM as an input. Time-series analysis was performed on the extracted features using LSTM with one hidden layer. It was classified using a linear classifier with the dropout and ReLU layers in the output layer.

Result and discussion

EEG data has three disadvantages in seizure prediction: complexity and irregularity, a small number of datasets, and imbalance. Patient-specific seizure prediction is more restricted by the separation of patient-specific data. Therefore, we developed a pre-trained model that can be applied to the prediction of seizures. To address the potential issue of overfitting due to limited training data, we employed the model described in the Pretext task process, as depicted in Fig. 5. This approach helped us reduce the risk of overfitting and improve the generalization of our model. Moreover, it provided initial weight values to determine the optimal training model parameters. The proposed method’s pseudocode is shown in Algorithm 1 and 2.

We defined a single data sample as 10 s and predicted seizures by classifying pre-ictal and inter-ictal data. Leave-one-out cross-validation was employed to aggregate the results effectively. In this approach, a pair of pre-ictal and inter-ictal data instances was treated as a single unit, with N-1 units used for training while the remaining unit served as the testing set. This process was iterated N times. Evaluation metrics such as sensitivity, specificity, accuracy, and False Positive Rate (FPR) were employed and are detailed in Table 1. Furthermore, we conducted statistical testing on the means of each patient’s performance using a paired t-test. The training process utilized the window-based PyTorch framework and the Stochastic Gradient Descent (SGD) optimizer, which demonstrated superior performance in terms of generalization compared to adaptive optimization methods41. This offered an advantage in addressing overfitting concerns when dealing with limited data. For the pre-training phase, a batch size of 512, 300 epochs, and a learning rate of 0.05 were employed. During the subsequent training phase, 100 epochs, a learning rate of 0.01, and the same batch size were utilized. Each hyperparameter was determined through a series of experiments.
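The evaluation protocol can be sketched as follows. Table 1 is not reproduced in this text, so the metric definitions below are the usual confusion-matrix forms with pre-ictal as the positive class; in particular, FPR = FP / (FP + TN) is an assumption, since seizure prediction studies sometimes report false alarms per hour instead. The unit names and labels are dummy values.

```python
def leave_one_out(units):
    """Yield (train, test) splits, holding out one unit per fold."""
    for k in range(len(units)):
        yield units[:k] + units[k + 1:], units[k]


def metrics(y_true, y_pred):
    """Window-level metrics with pre-ictal = 1 and inter-ictal = 0."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / len(pairs),
        "fpr": fp / (fp + tn),
    }


# Each unit stands for one pre-ictal/inter-ictal pair (hypothetical names).
units = ["unit_1", "unit_2", "unit_3"]
folds = list(leave_one_out(units))

y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 1]
scores = metrics(y_true, y_pred)
print(folds, scores)
```

Holding out a whole pre-ictal/inter-ictal pair, rather than individual windows, keeps overlapping windows from the same recording out of both the training and the test set of a fold.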

In this paper, the SNUH and CHB-MIT datasets were utilized for validation. Both datasets share the same number of patients, as detailed in the “Database” section. However, the SNUH dataset contains far fewer ictal events (78, compared with 245 in CHB-MIT) and is measured using the noisier unipolar reference method. In our experimental setup, we defined the pre-ictal period as 10, 15, and 30 min and computed the evaluation metrics for each period. Tables 2, 3, 4 and 5 present the patient-specific results. Tables 2 and 4 are the results of ResNet-LSTM without applying the pre-trained model, and Tables 3 and 5 are the results of applying the pre-trained model. A summary of the results is provided in Tables 6 and 7. According to Table 6, all results using pre-training were enhanced, with sensitivity showing improvement relative to specificity in the 10 and 15 min data. In the case of the 30 min data, a higher rate of increase in specificity and FPR led to an improvement in accuracy. Similar to the results obtained from CHB-MIT, all SNUH results in Table 7 also improved, and the sensitivity of the 10 and 15 min results improved even further. In addition, the specificity was enhanced in the 30 min data. Experiments conducted on both datasets yielded comparable outcomes. The 30 min pre-ictal period presented fewer extractable data compared to the 10 and 15 min periods, and seizure signs tended to weaken as the time from the ictal event increased. Consequently, when comparing the 30 min data to other time intervals, further enhancements in specificity were observed. In the context of seizure prediction, defining the pre-ictal period is a significant consideration. Extending the pre-ictal period offers the advantage of advanced patient preparation, but it comes with the trade-off of reduced accuracy and increased patient anxiety. As demonstrated in Fig. 7, using the two datasets, CHB-MIT had the highest value at 15 min, while SNUH had the highest value at 10 min, and both datasets had similar values at 10 and 15 min. Even with a small amount of data, accuracy for 10 and 15 min was ensured in SNUH, and in the paired t-test results of Tables 6 and 7, the values obtained with and without pre-training showed a significant difference in the overall result (p < 0.05), indicating that pre-training plays a significant role in improving performance.

STFT conversion transforms the EEG data into a spectrogram image with time represented on the x-axis and frequency on the y-axis. For training, we used a hybrid model that combines ResNet and LSTM to reflect both types of information. The experimental outcomes for pre-train + ResNet and pre-train + ResNet-LSTM are outlined in Table 8. The hybrid model produced improved results for both datasets, confirming its benefits.

Table 9 summarizes previous studies on patient-specific seizure prediction using the CHB-MIT dataset. Contemporary research trends involve extracting data in the frequency domain as features and utilizing machine learning and deep learning methodologies as classifiers. Ongoing investigations aim to enhance sensitivity and reduce FPR by addressing challenges such as data imbalance and insufficient samples, both inherent in EEG. Jemal et al.46 obtained a high sensitivity of 96.1% from 23 patients but with low specificity, and they employed 5-fold cross-validation instead of leave-one-out cross-validation as the performance validation method. Table 9 includes two approaches42,44 that employ STFT, the same method applied in this study. Among these, the experimental results of Yang et al.42 showed low sensitivity of 59.9%, 66%, and 56% for patients 2, 9, and 14, respectively. For patients 2 and 9, limited pre-ictal data relative to the total duration was a factor, while patient 14 had a shorter recording duration, indicating less effective training. The majority of studies on seizure prediction using CHB-MIT reported poor outcomes for some patients due to the aforementioned issues. As demonstrated in Table 3, the experimental results of our study revealed that the 10 min sensitivity for all three patients exceeded 80%, and patient 9’s sensitivity improved by nearly 40%. The inter-ictal weight concentration phenomenon was resolved by addressing the class imbalance. By generating a pre-trained model, the representation was acquired in advance, enabling the model to determine the optimal weight values during the actual training process. Through these interventions, we succeeded in enhancing outcomes for patients with previously low sensitivity. Table 9 does not present results based on all 24 patients, as certain experimental patient data was lacking and there was no common channel. The proposed method’s experimental results were presented for all patients, including those used in the previous method42. We obtained higher sensitivity and lower FPR compared to conventional methods.

Conclusion

In this paper, we propose a method for predicting epileptic seizures based on a pre-trained model that employs supervised contrastive learning and a hybrid model that combines ResNet and LSTM. In the pre-processing phase, the data were transformed using STFT to ensure that the training model could efficiently perform feature analysis, and the class imbalance between pre-ictal and inter-ictal as well as the insufficient data were addressed by undersampling and oversampling. During pre-training, data were augmented and used to pre-train a ResNet with a supervised contrastive loss so that the training model could find the optimal parameters with fewer data. During the training phase, image features and time-series information were extracted using a hybrid model comprising a pre-trained ResNet and LSTM. The experimental results reveal that CHB-MIT demonstrates optimal performance for the 15 min pre-ictal period, while SNUH performs best for the 10 min pre-ictal period. We demonstrated greater sensitivity and a lower FPR than conventional methods.

Data availability

The CHB-MIT data used in this study are from a public database, which can be accessed and downloaded from https://archive.physionet.org/physiobank/database/chbmit/. The SNUH data used in this study are not publicly available. The data may be made available from the corresponding authors upon reasonable request, subject to permission and approval from the corresponding organizations and institutional review boards.

References

Jung, K.-Y. Epidemiology of epilepsy in Korea. Epilia Epilepsy Community 2, 17–20 (2020).

Lee, S.-Y. et al. Estimating the prevalence of treated epilepsy using administrative health data and its validity: Essence study. J. Clin. Neurol. 12, 434–440 (2016).

Yang, J. & Sawan, M. From seizure detection to smart and fully embedded seizure prediction engine: A review. IEEE Trans. Biomed. Circuits Syst. 14, 1008–1023 (2020).

Shaikh, A. & Dhopeshwarkar, M. Development of early prediction model for epileptic seizures. In Data Science and Big Data Analytics: ACM-WIR 2018, 125–138 (Springer, 2019).

Elger, C. E. & Hoppe, C. Diagnostic challenges in epilepsy: Seizure under-reporting and seizure detection. Lancet Neurol. 17, 279–288 (2018).

Siddiqui, M. K., Morales-Menendez, R., Huang, X. & Hussain, N. A review of epileptic seizure detection using machine learning classifiers. Brain Inform. 7, 1–18 (2020).

Boonyakitanont, P., Lek-Uthai, A., Chomtho, K. & Songsiri, J. A review of feature extraction and performance evaluation in epileptic seizure detection using eeg. Biomed. Signal Process. Control 57, 101702 (2020).

Liu, G., Zhou, W. & Geng, M. Automatic seizure detection based on s-transform and deep convolutional neural network. Int. J. Neural Syst. 30, 1950024 (2020).

Quyen, M. L. V., Navarro, V., Martinerie, J., Baulac, M. & Varela, F. J. Toward a neurodynamical understanding of ictogenesis. Epilepsia 44, 30–43 (2003).

Mormann, F., Lehnertz, K., David, P. & Elger, C. E. Mean phase coherence as a measure for phase synchronization and its application to the eeg of epilepsy patients. Physica D 144, 358–369 (2000).

Karoly, P. J. et al. Interictal spikes and epileptic seizures: Their relationship and underlying rhythmicity. Brain 139, 1066–1078 (2016).

Zandi, A. S., Tafreshi, R., Javidan, M. & Dumont, G. A. Predicting epileptic seizures in scalp eeg based on a variational Bayesian Gaussian mixture model of zero-crossing intervals. IEEE Trans. Biomed. Eng. 60, 1401–1413 (2013).

Cho, D., Min, B., Kim, J. & Lee, B. Eeg-based prediction of epileptic seizures using phase synchronization elicited from noise-assisted multivariate empirical mode decomposition. IEEE Trans. Neural Syst. Rehabil. Eng. 25, 1309–1318 (2016).

Yan, J. et al. Eeg seizure prediction based on empirical mode decomposition and convolutional neural network. In Brain Informatics: 14th International Conference, BI 2021, Virtual Event, September 17–19, 2021, Proceedings 14, 463–473 (Springer, 2021).

Hussein, R., Lee, S. & Ward, R. Multi-channel vision transformer for epileptic seizure prediction. Biomedicines 10, 1551 (2022).

Alickovic, E., Kevric, J. & Subasi, A. Performance evaluation of empirical mode decomposition, discrete wavelet transform, and wavelet packed decomposition for automated epileptic seizure detection and prediction. Biomed. Signal Process. Control 39, 94–102 (2018).

Peng, P., Song, Y. & Yang, L. Seizure prediction in eeg signals using stft and domain adaptation. Front. Neurosci. 1880 (2021).

Rout, S. K., Sahani, M., Dash, P. & Biswal, P. K. Multifuse multilayer multikernel rvfln+ of process modes decomposition and approximate entropy data from ieeg/seeg signals for epileptic seizure recognition. Comput. Biol. Med. 132, 104299 (2021).

Tanveer, M., Pachori, R. B. & Angami, N. Classification of seizure and seizure-free eeg signals using hjorth parameters. In 2018 IEEE symposium series on computational intelligence (SSCI), 2180–2185 (IEEE, 2018).

Savadkoohi, M., Oladunni, T. & Thompson, L. A machine learning approach to epileptic seizure prediction using electroencephalogram (eeg) signal. Biocybern. Biomed. Eng. 40, 1328–1341 (2020).

Banupriya, C. & Aruna, D. D. Robust optimization of electroencephalograph (eeg) signals for epilepsy seizure prediction by utilizing vspo genetic algorithms with svm and machine learning methods. Indian J. Sci. Technol. 14, 1250–1260 (2021).

Ghaderyan, P., Abbasi, A. & Sedaaghi, M. H. An efficient seizure prediction method using knn-based undersampling and linear frequency measures. J. Neurosci. Methods 232, 134–142 (2014).

Tsiouris, K. M. et al. A long short-term memory deep learning network for the prediction of epileptic seizures using eeg signals. Comput. Biol. Med. 99, 24–37 (2018).

Liu, G., Tian, L. & Zhou, W. Patient-independent seizure detection based on channel-perturbation convolutional neural network and bidirectional long short-term memory. Int. J. Neural Syst. 32, 2150051 (2022).

Ozcan, A. R. & Erturk, S. Seizure prediction in scalp eeg using 3d convolutional neural networks with an image-based approach. IEEE Trans. Neural Syst. Rehabil. Eng. 27, 2284–2293 (2019).

Yu, Z. et al. Epileptic seizure prediction based on local mean decomposition and deep convolutional neural network. J. Supercomput. 76, 3462–3476 (2020).

Jiang, Y., Yang, L. & Lu, Y. An epileptic seizure prediction model based on a simulation block and a pretrained resnet. In 2020 13th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), 709–714 (IEEE, 2020).

Karoly, P. J. et al. The circadian profile of epilepsy improves seizure forecasting. Brain 140, 2169–2182 (2017).

Acharya, U. R. et al. Characterization of focal eeg signals: A review. Future Gener. Comput. Syst. 91, 290–299 (2019).

Ito, M., Hatta, K. & Arai, H. Postictal confusion was associated with improvement after electroconvulsive therapy in depression. Juntendo Med. J. 60, 245–250 (2014).

Griffin, D. & Lim, J. Signal estimation from modified short-time Fourier transform. IEEE Trans. Acoust. Speech Signal Process. 32, 236–243 (1984).

Bloice, M. D., Stocker, C. & Holzinger, A. Augmentor: an image augmentation library for machine learning. arXiv preprint arXiv:1708.04680 (2017).

Cheng, J. Y., Goh, H., Dogrusoz, K., Tuzel, O. & Azemi, E. Subject-aware contrastive learning for biosignals. arXiv preprint arXiv:2007.04871 (2020).

Behnke, S. Hierarchical Neural Networks for Image Interpretation Vol. 2766 (Springer, 2003).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. arXiv preprint arXiv:1512.03385 (2015).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 (2014).

Hadsell, R., Chopra, S. & LeCun, Y. Dimensionality reduction by learning an invariant mapping. In 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), vol. 2, 1735–1742 (IEEE, 2006).

Misra, I. & Maaten, L. v. d. Self-supervised learning of pretext-invariant representations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 6707–6717 (2020).

Khosla, P. et al. Supervised contrastive learning. Adv. Neural Inf. Process. Syst. 33, 18661–18673 (2020).

Hochreiter, S. & Schmidhuber, J. Long short-term memory. Neural Comput. 9, 1735–1780 (1997).

Wilson, A. C., Roelofs, R., Stern, M., Srebro, N. & Recht, B. The marginal value of adaptive gradient methods in machine learning (2018). arXiv:1705.08292.

Yang, X., Zhao, J., Sun, Q., Lu, J. & Ma, X. An effective dual self-attention residual network for seizure prediction. IEEE Trans. Neural Syst. Rehabil. Eng. 29, 1604–1613 (2021).

Khan, H., Marcuse, L., Fields, M., Swann, K. & Yener, B. Focal onset seizure prediction using convolutional networks. IEEE Trans. Biomed. Eng. 65, 2109–2118 (2017).

Truong, N. D. et al. Convolutional neural networks for seizure prediction using intracranial and scalp electroencephalogram. Neural Netw. 105, 104–111 (2018).

Romney, A. & Manian, V. Comparison of frontal-temporal channels in epilepsy seizure prediction based on eemd-relieff and dnn. Computers 9, 78 (2020).

Jemal, I., Mezghani, N., Abou-Abbas, L. & Mitiche, A. An interpretable deep learning classifier for epileptic seizure prediction using eeg data. IEEE Access 10, 60141–60150 (2022).

Acknowledgements

This work was supported by the Korea Health Industry Development Institute (KHIDI) grant funded by the Korea government (MOHW) (No. RS-2023-00266765, Development of a Real-time Seizure Prediction System using Smartphone-based Embedded Deep Learning).

Author information

Contributions

D.L. performed the experiments and analyzed the data. D.L. and B.K. developed code. D.L., C.J., I.J. and K.M. contributed to the discussion. K.J. and T.K. checked the dataset’s labeling. M.K. acquired the fund and supervised the project. All authors wrote the manuscript and approved the final form.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lee, D., Kim, B., Kim, T. et al. A ResNet-LSTM hybrid model for predicting epileptic seizures using a pretrained model with supervised contrastive learning. Sci Rep 14, 1319 (2024). https://doi.org/10.1038/s41598-023-43328-y

DOI: https://doi.org/10.1038/s41598-023-43328-y
