Abstract

Deep learning methods, particularly Convolutional Neural Networks (CNNs), have been widely used in hyperspectral image (HSI) classification. CNNs achieve strong performance in HSI classification because they are effective at extracting local contextual features. However, CNNs are not good at learning long-distance dependencies or at handling the sequential properties of HSI. As a result, it is difficult to keep improving CNN-based models, because they cannot take full advantage of the rich and continuous spectral information of HSI. This paper proposes a new Double-Branch Feature Fusion Transformer model for HSI classification. We introduce the Transformer into HSI processing because HSI data have sequence characteristics. The two branches of the model extract the global spectral features and global spatial features of HSI respectively, and a feature fusion layer fuses the spectral and spatial features. Furthermore, we design two attention modules to adaptively adjust the importance of spectral bands and pixels for classification. Experiments and comparisons are carried out on four public datasets, and the results demonstrate that our model outperforms all compared CNN-based models in terms of accuracy.

Introduction

Owing to advances in imaging spectrometry, hyperspectral images (HSI) contain rich spectral and spatial information at high spectral and spatial resolution1, so pixel-level classification can be achieved2,3. HSI are widely used in many fields, such as atmospheric environment research4, precision agriculture5,6,7, and ocean research8. However, the spectral bands of HSI contain a lot of redundant information, and labeled HSI samples are difficult to obtain9, both of which make HSI classification difficult. In early studies, machine learning-based approaches such as SVM10, k-NN11, and multilayer perceptron (MLP)12 were used for HSI classification. However, most of them focus on the spectral information of HSI without taking full advantage of its spatial information. Although some methods based on morphological profiles13 and Gabor features14 have been presented to extract spatial features, their classification accuracy is still unsatisfactory. This is because these methods can only extract low-level features and because the training samples of HSI are limited.

The rapid development of deep learning techniques has brought more diverse and effective approaches to HSI classification. Deep learning follows an “end-to-end” design philosophy and can automatically extract linear and nonlinear features. Compared with traditional methods, which require a large amount of domain expert knowledge, deep learning methods avoid hand-designed features and improve the generalization ability of the model. Some deep learning-based models, such as Stacked Autoencoder (SAE)15, Recurrent Neural Network (RNN)16,17, and deep belief network (DBN)18, have emerged and been successfully applied to HSI classification. Hang et al.17 proposed a model consisting of two RNN layers that can extract complementary information from non-adjacent spectral bands of HSI. RNN-based models can extract spectral features by treating the spectral dimension of HSI as a sequence, but they are prone to vanishing gradients and have difficulty learning long-distance dependency relations19.

Convolutional Neural Networks (CNNs) can effectively extract the spatial features of HSI owing to their powerful ability to extract local contextual information, and many CNN-based models have appeared in recent years. Hu et al.20 first used CNN for HSI classification and proposed a 1DCNN-based model, which includes multiple 1DCNNs and only considers the spectral features of HSI. Although the performance of the 1DCNN-based model is poor, it promoted the development of CNN-based models in HSI classification. Subsequently, a series of CNN-based models that take account of both spectral and spatial features of HSI have been developed. Zhong et al.21 presented a 3DCNN-based model that uses 3D convolution kernels to extract spectral-spatial features of HSI. Paoletti et al.22 designed a 2DCNN-based model based on the deep pyramid network23, which improves classification performance by stacking a large number of convolution kernels. Li et al.24 proposed a 3DCNN-based Double-Branch model, in which the two branches extract spectral and spatial features of HSI respectively. Gao et al.25 proposed a small convolution and feature reuse (SC-FR) module that combines cascaded 1 \(\times\) 1 convolutional layers and cross-layer connections; the model contains only one 3 \(\times\) 3 convolution to extract spatial features of hyperspectral images. Dang et al.26 proposed a dual-path and small-convolution-based module (DPSC) for extracting spatial and spectral features from hyperspectral images. Both of these models use small convolutions to build lightweight models. Chang et al.27 proposed a method based on a consolidated convolutional neural network (C-CNN), composed of 2DCNN and 3DCNN, to learn the spatial-spectral features and abstract spatial features of hyperspectral images. Shi et al.28 proposed a model based on multi-scale feature fusion and a double attention mechanism to extract features from hyperspectral images.

Although CNN-based models have made progress in HSI classification, their performance is still insufficient. First, HSI usually contains hundreds of bands, and the spectral characteristics of some ground objects are extremely similar. CNN is not good at learning long-distance dependency relations among spectral bands29 and therefore cannot accurately classify such objects. Second, the convolution kernels in CNN-based models are usually small, so they tend to extract local features rather than the global features of the entire neighborhood pixel block. These problems create a bottleneck for CNN-based models in HSI classification. Improving on the performance of CNN-based models in HSI classification is therefore important and meaningful.

The Transformer30, which was originally used in the field of Natural Language Processing (NLP), brings a new idea to HSI classification. The Transformer is very effective at processing sequence data30: it extracts global features of the input through a self-attention mechanism and can better learn long-distance dependency relations31,32. Dosovitskiy et al.32 proposed the first Transformer-based model for computer vision, Vision Transformer (ViT), and achieved good results. This model extracts global features by segmenting the image into patches. We can apply the Transformer to extract features of HSI by regarding HSI as a sequence, which can be done in two ways. One is that the spectral bands of HSI are rich and continuous, so the entire set of spectral bands can be treated as a sequence. The other is that the spectral vector of each pixel can be considered as a word vector in the NLP sense31, because each pixel represents a ground object. However, simply applying a Transformer model such as ViT32 to HSI classification still has many problems. First, segmenting the neighborhood pixel blocks into fixed-size patches as ViT does makes it difficult to extract the low-level features of the input data33. Second, segmenting neighborhood patches only in the spatial dimension still fails to learn long-range dependency relations among the spectral features of HSI.

In view of this, this paper proposes a Double-Branch Feature Fusion Transformer (denoted as DBFFT) model for HSI classification. The proposed model adopts two branches to extract spectral and spatial features of HSI respectively. The spectral branch consists of a spectral attention module and a Transformer encoder block. The spatial branch is made up of a spatial attention module and a Transformer encoder block. In addition, a feature fusion layer is designed between these two branches to fuse spectral and spatial features. The outputs of the two branches are fused by an addition operation and finally used for classification. The main contributions of this paper can be described as follows:

- The proposed model extracts the spectral features and spatial features of HSI separately through a Double-Branch structure. In the two branches, according to the sequence characteristics of hyperspectral images, Pixel-wise embedding and Band-wise embedding are adopted to effectively extract the long-distance dependency relations of the spectral dimension and the global spatial features of HSI.

- We design a CNN-based spectral attention module and a spatial attention module, which adaptively adjust the importance of spectral and spatial features of the input data and extract rich spectral and spatial features.

- Our proposed model adopts the label smoothing technique to alleviate overfitting when the number of samples is small. In addition, we design a feature fusion layer to fuse the features extracted by the two branches and improve the performance of the model.

The remainder of this paper is organized as follows. In Sect. “Methodology”, we describe the details of our proposed model. In Sect. “Experiments results and analysis”, we present and analyze the experimental results, in addition to analyzing the factors that affect the performance of the model. In Sect. “Conclusion”, we give conclusions and present directions for future work.

Methodology

Overview of the proposed model

We treat the HSI as a data cube with length S, width M, and C spectral bands. Taking each labeled pixel as the center, we segment a 3D cube of size \(H\times H\times C\), called the neighborhood pixel block, where H is the length and width of the block and C is the number of spectral bands of the HSI. We take neighborhood pixel blocks as input to the model to fully utilize the spectral and spatial information of HSI.
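The block extraction described above can be sketched in a few lines of NumPy. This is only an illustration: the function name `extract_block` is hypothetical, and the reflect padding used for pixels near the image border is an assumption, since the border handling is not stated here.

```python
import numpy as np

def extract_block(cube, row, col, h):
    """Return the h x h x C neighborhood block centered on (row, col).

    `cube` has shape (S, M, C) and `h` is assumed to be odd. Border pixels
    are handled with reflect padding, which is an assumption of this sketch.
    """
    r = h // 2
    padded = np.pad(cube, ((r, r), (r, r), (0, 0)), mode="reflect")
    # After padding, the original pixel (row, col) sits at (row + r, col + r),
    # so the block starts at (row, col) in padded coordinates.
    return padded[row:row + h, col:col + h, :]

cube = np.arange(6 * 5 * 4, dtype=float).reshape(6, 5, 4)  # toy cube: S=6, M=5, C=4
block = extract_block(cube, row=0, col=2, h=3)
print(block.shape)  # (3, 3, 4)
```

The center of the returned block is always the labeled pixel itself, so the block can be paired directly with that pixel's label.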

Figure 1 shows the overall structure of our proposed model. The model contains two branches to extract spectral features and spatial features of HSI respectively. We take the upper branch as the spectral branch and the lower branch as the spatial branch. The spectral branch consists of the spectral attention module and the Transformer encoder block. The spatial branch is made up of a spatial attention module and a Transformer encoder block. Inspired by CrossViT34, we add a feature fusion layer between the two branches to fuse the spatial features and the spectral features.

The structure of the DBFFT. This model consists of two branches. The upper branch consists of a spectral attention module and Transformer encoder block to extract spectral features of HSI. The lower branch consists of spatial attention module and Transformer encoder block to extract spatial features of HSI.

The spectral branch first uses the spectral attention module to extract rich spectral features from the neighborhood pixel block of size \(H\times H\times C\). The spectral dimension is then reduced from \(C\) to \(k\) to remove redundant information, giving a new feature map of size \(H\times H\times k\). We set \(k\) = 32. After that, the feature map is segmented along the spectral dimension to obtain \(k\) patches of size \(H\times H\), which are flattened and processed by linear projection to generate a sequence of shape (batch size, \(k+1\), \(M\)), where \(M\) is the length of each vector in the sequence and the additional vector is the learnable embedding (class token). This sequence is used as input to the Transformer encoder block of the spectral branch. The spectral branch can thus use self-attention to extract global features, capturing the long-distance dependency relations of the spectral dimension.

The spatial branch first uses the spatial attention module to extract rich spatial features from the neighborhood pixel block of size \(H\times H\times C\), producing a new feature map of the same size. The feature map is segmented by pixel into \(H\times H\) vectors of length \(C\), which are processed by linear projection to generate a sequence of shape (batch size, \(H\times H\), \(M\)). This sequence is used as the input to the Transformer encoder block of the spatial branch, which extracts the global spatial features of HSI.

Finally, the outputs of the two branches are combined to fuse the spectral and spatial features. We describe each of these parts in detail in the following sections.

Depth-wise separable convolution

As shown in Fig. 2, the depth-wise separable convolution consists of a depth-wise convolution layer and a 1 × 1 convolution layer. It is placed at the beginning of each attention module to extract rich low-level features from HSI. Each convolution kernel in the depth-wise convolution extracts spatial features from only one spectral band. The 1 × 1 convolution then fuses the features of different spectral bands to obtain a feature map. Since the spectral information of HSI is rich and redundant, using depth-wise separable convolution reduces the redundant information extracted along the spectral dimension and the interference of redundant bands with feature extraction.
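To make the two-step structure concrete, the short calculation below counts the weights of a depth-wise separable convolution and of a standard convolution with the same input and output channels. The 3 × 3 kernel size and the choice of 103 channels are assumptions made for illustration only, and biases are ignored.

```python
def standard_conv_params(c_in, c_out, k):
    """Weights of a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out

def separable_conv_params(c_in, c_out, k):
    """Weights of a depth-wise k x k convolution followed by a 1 x 1 convolution."""
    depthwise = k * k * c_in      # one k x k kernel per input band
    pointwise = c_in * c_out      # 1 x 1 convolution that mixes the bands
    return depthwise + pointwise

c = 103  # illustrative channel count (the number of bands of the PU dataset)
print(standard_conv_params(c, c, 3))   # 95481
print(separable_conv_params(c, c, 3))  # 11536
```

Because the depth-wise step never mixes bands, all cross-band interaction is concentrated in the 1 × 1 step, which is where the band fusion described above takes place.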

Spectral attention module

The redundant spectral information of raw HSI data interferes with the recognition ability of the model. Processing the HSI with the spectral attention module therefore reduces both the influence of noise and the redundant information of HSI. The framework of the module is shown in Fig. 3. We extract the pixel-centered neighborhood pixel block of shape \(H\times H\times C\) as input, where \(H\) represents the size of the neighborhood pixel block and \(C\) represents the spectral dimension of the HSI. First, the low-level features of the neighborhood pixel block are extracted through two depth-wise separable convolution layers. Second, the spectral attention \(\mathbf{se}\in {\mathbb{R}}^{1\times 1\times C}\) is generated to adjust the importance of each spectral band, and the reweighted feature map is then fused with the original data to retain the original spectral and spatial features. Finally, the features along the spectral dimension are fused through two 1 × 1 convolution layers with GeLU. This process does not change the spatial size of the neighborhood pixel block, but it reduces the spectral dimension and the redundant spectral features.

The spectral attention mechanism automatically adjusts the importance of different spectral bands for classification and reduces the interference of useless bands with the model. Figure 4 shows the whole process of generating spectral attention. Inspired by SE-block35, our computational process for generating spectral attention \(\mathbf{se}\) is defined as follows:
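Assuming the standard SE-block form (global average pooling followed by two fully connected layers), which matches the symbols defined below, the computation can be written as:

\[
{h}_{\left(k\right)}^{avg}=\frac{1}{H\times H}\sum_{i=1}^{H}\sum_{j=1}^{H}E\left(k,i,j\right) \qquad (1)
\]

\[
\mathbf{se}={\sigma }_{2}\left({FC}_{2}\left({\sigma }_{1}\left({FC}_{1}\left({h}^{avg}\right)\right)\right)\right) \qquad (2)
\]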

where \(E\) represents the obtained feature map after the neighborhood pixel block is processed by two depth-wise separable convolution layers, \(E\left(k,i,j\right)\) represents the value of the position (i, j) of the k-th channel of the feature map E, \({h}^{avg}\) represents the result of global average pooling, \({h}_{\left(k\right)}^{avg}\) represents the value of the kth channel of \({h}^{avg}\), and \({\sigma }_{1}\) and \({\sigma }_{2}\) represent ReLU and sigmoid activation functions, respectively. \({FC}_{1}\) and \({FC}_{2}\) are two fully connected layers. The first layer reduces the dimension from M to M/r, and the second layer increases the dimension from M/r to M. We set r to be 16.

After the spectral attention \(\mathbf{se}\) and the feature map \({F}_{1}\) are multiplied band by band, the importance of different bands is automatically adjusted.
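The following NumPy sketch illustrates an SE-style spectral attention of this kind: global average pooling per band, a fully connected layer with ReLU, a second fully connected layer with sigmoid, and a band-wise multiplication. The weight matrices `W1` and `W2` are random stand-ins for trained parameters, biases are omitted, and the same array is used for both the pooled feature map and the reweighted one; all of these are simplifying assumptions.

```python
import numpy as np

def spectral_attention(E, W1, W2):
    """SE-style band weights for a feature map E of shape (C, H, H)."""
    h_avg = E.mean(axis=(1, 2))                 # global average pooling -> (C,)
    hidden = np.maximum(W1 @ h_avg, 0.0)        # FC_1 followed by ReLU -> (C // r,)
    se = 1.0 / (1.0 + np.exp(-(W2 @ hidden)))   # FC_2 followed by sigmoid -> (C,)
    return se

rng = np.random.default_rng(0)
C, H, r = 32, 11, 16
E = rng.normal(size=(C, H, H))
W1 = rng.normal(size=(C // r, C))   # hypothetical FC_1 weights (bias omitted)
W2 = rng.normal(size=(C, C // r))   # hypothetical FC_2 weights (bias omitted)

se = spectral_attention(E, W1, W2)
reweighted = E * se[:, None, None]  # multiply each band by its weight
print(se.shape, reweighted.shape)   # (32,) (32, 11, 11)
```

Each entry of `se` lies between 0 and 1, so the multiplication can only attenuate a band, never amplify it beyond its original values.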

Spatial attention module

Since we use the neighborhood pixel block as the input of the model, the label of the center pixel is usually taken as the label of the whole block. As a result, pixels in the block whose labels differ from that of the center pixel interfere with the model36. Therefore, we use a spatial attention module to enhance the information of pixels that are helpful for classification and weaken the information of pixels that interfere with it. The framework of the spatial attention module is the same as that in Fig. 3; the difference lies in the attention-generating part, which produces a spatial attention instead. In addition, this module does not change the spectral dimension of the input data.

Figure 5 shows the whole process of generating spatial attention. Inspired by CBAM37, we first perform global average pooling and global max pooling in the spectral dimension to generate \({s}^{avg}\) and \({s}^{max}\) of shape \(H\times H\times 1\). The calculation process of this part is described in Eqs. (3) and (4).
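Written with the symbols defined below, and assuming the usual channel-wise pooling of CBAM, Eqs. (3) and (4) take the form:

\[
{s}_{\left(i,j\right)}^{avg}=\frac{1}{C}\sum_{k=1}^{C}F\left(k,i,j\right) \qquad (3)
\]

\[
{s}^{max}=Max\left(F\right) \qquad (4)
\]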

where \(F\) represents the feature map obtained after the neighborhood pixel block is processed by two depth-wise separable convolution layers in the spatial branch, \(F\left(k,i,j\right)\) represents the value at position (i, j) of the k-th channel of the feature map F, \({s}^{avg}\) represents the result of global average pooling, \({s}_{\left(i,j\right)}^{avg}\) represents the value at position (i, j) of \({s}^{avg}\), and \(Max\left(\mathrm{F}\right)\) represents the maximum value over all channels at each pixel of the feature map F.

Then, we concatenate \({s}^{avg}\) and \({s}^{max}\). After processing through a convolutional layer and a sigmoid activation function, the spatial attention \(\mathbf{sa}\in {\mathbb{R}}^{H\times H\times 1}\) is obtained.

After the spatial attention \(\mathbf{sa}\) and the feature map \({F}_{2}\) are multiplied pixel by pixel, the importance of different pixels for classification is automatically adjusted.
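A CBAM-style spatial attention of this kind can be sketched in NumPy as follows: average and max pooling over the channels, concatenation, one convolution, and a sigmoid. The 3 × 3 kernel size, the zero padding, and the random kernel weights are assumptions of this sketch, and the same array stands in for both the pooled and the reweighted feature map.

```python
import numpy as np

def spatial_attention(F, kernel):
    """CBAM-style pixel weights for a feature map F of shape (C, H, H).

    `kernel` has shape (2, k, k) with k odd; zero padding keeps the H x H size.
    """
    s_avg = F.mean(axis=0)                      # average over channels -> (H, H)
    s_max = F.max(axis=0)                       # maximum over channels -> (H, H)
    stacked = np.stack([s_avg, s_max])          # concatenation -> (2, H, H)
    k = kernel.shape[-1]
    p = k // 2
    padded = np.pad(stacked, ((0, 0), (p, p), (p, p)))
    H = F.shape[1]
    out = np.empty((H, H))
    for i in range(H):
        for j in range(H):
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return 1.0 / (1.0 + np.exp(-out))           # sigmoid -> (H, H)

rng = np.random.default_rng(0)
F = rng.normal(size=(32, 9, 9))
kernel = rng.normal(size=(2, 3, 3)) * 0.1       # hypothetical 3 x 3 weights
sa = spatial_attention(F, kernel)
reweighted = F * sa[None, :, :]                 # multiply each pixel by its weight
print(sa.shape, reweighted.shape)               # (9, 9) (32, 9, 9)
```

The attention map has one weight per pixel and is shared by all channels, so the spectral dimension of the input is left unchanged.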

Pixel-wise embedding and Band-wise embedding

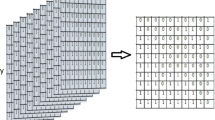

The classic ViT structure segments the image into patches of a fixed size. Applying this segmentation directly is not suitable for HSI, because each pixel of an HSI represents a ground object. Moreover, such a segmentation method cannot learn the long-distance dependency relations among the spectral bands of HSI. To better match the characteristics of HSI, we adopt Pixel-wise embedding and Band-wise embedding in the two branches to better learn the global features of HSI. In the spatial branch, we perform Pixel-wise embedding on the feature maps of the spatial attention module. We segment the feature map of shape \(H\times H\times C\) by pixel to generate \(H\times H\) vectors of length \(C\). The length of each vector is then adjusted to M by a fully connected layer, and M is set to 64. We do not add position embedding to these vectors because the CNN can encode the absolute position of the image38.

Considering that the spectral information of the feature map is rich and continuous, we use Band-wise embedding in the spectral branch to segment the feature map along the spectral dimension, and then flatten the two-dimensional patch of each band. Each flattened patch is then mapped to a vector of length M by a fully connected layer. Lastly, the positional embedding and the learnable embedding are added, and the resulting sequence is used as the input of the Transformer. This allows the model to learn long-distance dependency relations in the spectral dimension of HSI. Figure 6 illustrates how Pixel-wise embedding and Band-wise embedding process feature maps into sequences. Although the linear projections of the two branches differ to suit the characteristics of HSI, the vectors have the same length after projection, which facilitates the fusion of features at the feature fusion layer.
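The shapes produced by the two embeddings can be traced with a small NumPy sketch. The projection matrices are random stand-ins for the fully connected layers, the class token and positional embedding are zero placeholders for trained parameters, and \(C\) = 103 is an illustrative band count; none of these values are taken from the trained model.

```python
import numpy as np

rng = np.random.default_rng(0)
H, C, k, M = 11, 103, 32, 64   # block size, bands, reduced bands, vector length

# Pixel-wise embedding (spatial branch): one vector per pixel.
spatial_map = rng.normal(size=(H, H, C))
W_pixel = rng.normal(size=(C, M))                 # hypothetical projection weights
pixel_tokens = spatial_map.reshape(H * H, C) @ W_pixel            # (H*H, M)

# Band-wise embedding (spectral branch): one vector per band.
spectral_map = rng.normal(size=(H, H, k))
W_band = rng.normal(size=(H * H, M))              # hypothetical projection weights
band_patches = spectral_map.transpose(2, 0, 1).reshape(k, H * H)  # flatten each band
band_tokens = band_patches @ W_band                                # (k, M)

# Prepend the learnable embedding and add the positional embedding.
cls_token = np.zeros((1, M))                      # placeholder for the learnable embedding
pos_embed = np.zeros((k + 1, M))                  # placeholder for the positional embedding
spectral_seq = np.concatenate([cls_token, band_tokens]) + pos_embed

print(pixel_tokens.shape, spectral_seq.shape)     # (121, 64) (33, 64)
```

Both sequences end up with vectors of the same length M, which is what allows single vectors to be passed between the branches later on.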

Transformer encoder block

Each branch of our proposed model contains two Transformer encoder blocks to extract global features of HSI. As shown in Fig. 7a, each Transformer encoder block consists of a multi-head self-attention sublayer and a feedforward network sublayer, and each sublayer has layer normalization and a residual connection. Figure 7b shows the processing of the self-attention mechanism in the Transformer. The self-attention mechanism can extract the global features of the input sequence, and its calculation process is described in Eq. (7).
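Assuming the standard scaled dot-product attention of the Transformer, which agrees with the symbols explained below, Eq. (7) reads:

\[
Attention\left(Q,K,V\right)=softmax\left(\frac{Q{K}^{T}}{\sqrt{{d}_{k}}}\right)V \qquad (7)
\]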

where \(Q\), \(K\), \(V\) are obtained by multiplying the input sequence with \({w}^{Q}\), \({w}^{K}\) and \({w}^{V}\) respectively. \({d}_{k}\) represents the dimension of the vectors in K, and scaling by it yields a stable gradient19. The multi-head self-attention mechanism concatenates the outputs obtained by multiple self-attentions. The heads are computed independently, and each head focuses on a different aspect of the sequence. The formula is defined as follows:
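In the usual Transformer notation, where each \({head}_{i}\) is the output of one self-attention computed as in Eq. (7), this can be written as the following sketch:

\[
MultiHead\left(Q,K,V\right)=Concat\left({head}_{1},\dots ,{head}_{h}\right){W}^{o} \qquad (8)
\]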

where \({W}^{o}\) is a matrix and \(h\) represents the number of heads.

The Feedforward network consists of two fully connected layers and a GeLU activation function, which can further transform the features learned in self-attention mechanism. Equation (9) gives its calculation process.
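Assuming the GeLU is applied between the two fully connected layers, as in the standard Transformer feedforward network, and writing the two layers as \({FC}_{1}\) and \({FC}_{2}\) (names chosen here for illustration), Eq. (9) can be written as:

\[
FFN\left(x\right)={FC}_{2}\left(\sigma \left({FC}_{1}\left(x\right)\right)\right) \qquad (9)
\]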

where \(\sigma\) denotes GeLU activation function.
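As an illustration of the multi-head self-attention sublayer, the NumPy sketch below projects a sequence to queries, keys and values, splits them into heads, applies scaled dot-product attention per head, and concatenates the results. The weight matrices are random stand-ins, the split into heads of size M // h follows the common Transformer convention, and layer normalization, residual connections and the feedforward sublayer are left out; these are assumptions of the sketch rather than details of the proposed model.

```python
import numpy as np

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)   # subtract the row maximum for stability
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def multi_head_self_attention(X, Wq, Wk, Wv, Wo, h):
    """X: (n, M) sequence; Wq, Wk, Wv, Wo: (M, M); h heads of size M // h."""
    n, M = X.shape
    d_k = M // h
    # Project, then split the last dimension into h heads: (h, n, d_k).
    Q, K, V = (np.transpose((X @ W).reshape(n, h, d_k), (1, 0, 2)) for W in (Wq, Wk, Wv))
    scores = Q @ np.transpose(K, (0, 2, 1)) / np.sqrt(d_k)   # (h, n, n)
    weights = softmax(scores)                                 # each row sums to 1
    heads = weights @ V                                       # (h, n, d_k)
    concat = np.transpose(heads, (1, 0, 2)).reshape(n, M)     # concatenate the heads
    return concat @ Wo, weights

rng = np.random.default_rng(0)
n, M, h = 33, 64, 4
X = rng.normal(size=(n, M))
Wq, Wk, Wv, Wo = (rng.normal(size=(M, M)) / np.sqrt(M) for _ in range(4))
out, weights = multi_head_self_attention(X, Wq, Wk, Wv, Wo, h)
print(out.shape, weights.shape)   # (33, 64) (4, 33, 33)
```

Every output vector is a weighted combination of all vectors in the sequence, which is why the mechanism captures global rather than local relations.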

Feature fusion layer

Our proposed model extracts the spatial and spectral features of HSI on two separate branches. Inspired by CrossViT34, we add a feature fusion layer between the two branches to fuse the features they extract. Specifically, we exchange the class token (i.e. the Learnable Embedding illustrated in Fig. 6) of the output sequence of the Transformer encoder block of the spectral branch with the first vector of the output sequence of the Transformer encoder block of the spatial branch. This is because a Transformer-based model uses the first vector of the output sequence for classification, so this vector can be regarded as a summary of the entire sequence34. Therefore, the class token of the output sequence of the spectral branch contains rich spectral features, and the first vector of the output sequence of the spatial branch contains rich spatial features. Exchanging these two vectors facilitates the fusion of spectral and spatial features.
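The exchange itself is a simple swap of the first vectors of the two output sequences, as the following NumPy sketch shows. The function name `exchange_first_tokens` and the random sequences are hypothetical; the sequence lengths follow the shapes given earlier (\(k+1\) = 33 and \(H\times H\) = 121 vectors of length M = 64).

```python
import numpy as np

def exchange_first_tokens(spectral_seq, spatial_seq):
    """Swap the first vector of each sequence; both hold vectors of length M."""
    spectral_out = spectral_seq.copy()
    spatial_out = spatial_seq.copy()
    spectral_out[0] = spatial_seq[0]
    spatial_out[0] = spectral_seq[0]
    return spectral_out, spatial_out

rng = np.random.default_rng(0)
spectral_seq = rng.normal(size=(33, 64))    # k + 1 vectors of length M
spatial_seq = rng.normal(size=(121, 64))    # H x H vectors of length M
new_spectral, new_spatial = exchange_first_tokens(spectral_seq, spatial_seq)
print(new_spectral.shape, new_spatial.shape)  # (33, 64) (121, 64)
```

The swap only works because both branches project to the same vector length M; the remaining vectors of each sequence are left untouched.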

Label smoothing

When the training samples are insufficient, the generalization ability of the model is reduced, which leads to overfitting. In practical applications, a shortage of HSI samples is very common. To reduce the influence of overfitting on the model, we introduce the regularization technique of label smoothing.

First, we convert each label to a one-hot representation. The vector \({y}_{n}\) is the one-hot representation of a label y; it has S dimensions, where S is the number of classes, and its value is 1 when n = y and 0 otherwise. Then, we add noise \(\varepsilon\) to the label as follows:
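Assuming the common uniform form of label smoothing, in which the noise is spread evenly over the S classes, the smoothed label can be written as:

\[
{y}_{n}^{\prime}=\left(1-\varepsilon \right){y}_{n}+\frac{\varepsilon }{S}
\]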

where \({y}_{n}^{\prime}\) is the new label obtained after label smoothing and \(\varepsilon\) is the noise.

The model tends to become more "confident" during training, but the lack of training samples and mislabeling in the dataset cause it to make more misclassifications on the test set. By adding noise to each label, the model becomes less "confident", its generalization ability is improved, and overfitting is alleviated.
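A minimal sketch of label smoothing is given below, assuming the common uniform variant in which the noise is spread evenly over all classes. The function name `smooth_label` and the value 0.1 for the noise are illustrative choices, not settings reported for the model.

```python
def smooth_label(y, num_classes, eps):
    """Return the smoothed target for class index y (uniform smoothing)."""
    return [
        (1.0 - eps) * (1.0 if n == y else 0.0) + eps / num_classes
        for n in range(num_classes)
    ]

target = smooth_label(y=2, num_classes=4, eps=0.1)
print(target)  # approximately [0.025, 0.025, 0.925, 0.025]
```

The smoothed target still sums to 1, but the true class no longer receives the full probability mass, so the loss stops rewarding ever larger logits for it.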

Experiments results and analysis

Data sets description

We adopt four public datasets, Kennedy Space Center (KSC), Salinas (SA), University of Pavia (PU), and Houston 2013 (HU), to evaluate the performance of the proposed model.

Kennedy Space Center (KSC): This dataset was collected by AVIRIS sensors over the Kennedy Space Center in Florida, USA. It contains 512 × 614 pixels, and after removing the noise-affected bands, a total of 176 bands are available for experiments. It has a spatial resolution of 18 m and a wavelength range of 400 to 2500 nm. It contains 13 land cover categories with a total of 5211 labeled pixels. The training, validation and test samples for each category are shown in Table 1.

Salinas (SA): This dataset was collected by AVIRIS sensors over the Salinas Valley in California. It contains 512 × 217 pixels, and after removing the noise-affected bands, a total of 204 bands are available for experiments. It has a spatial resolution of 3.7 m and a wavelength range of 400 to 2500 nm. It contains 16 land cover categories with a total of 54,129 labeled pixels. The training, validation and test samples for each category are shown in Table 2.

University of Pavia (PU): This dataset was collected by ROSIS sensors over the University of Pavia in northern Italy. It contains 610 × 340 pixels, and after removing the noise-affected bands, a total of 103 bands are available for experiments. It has a spatial resolution of 1.3 m and a wavelength range of 430 to 860 nm. It contains 9 land cover categories with a total of 42,776 labeled pixels. The training, validation and test samples for each category are shown in Table 3.

Houston 2013 (HU): This dataset was collected by the ITRES CASI-1500 sensor over the University of Houston campus and was provided by the 2013 IEEE GRSS Data Fusion Competition39. It contains 349 × 1905 pixels and has 144 spectral bands for experiments. It contains 15 land cover categories with a total of 15,029 labeled pixels. The training, validation and test samples for each category are shown in Table 4.

For deep learning methods, the more samples are used for training, the better the model performs. However, this makes training more time-consuming and requires more labeled pixels. Our proposed model can still maintain strong performance in the case of small samples. Therefore, for KSC, we use 5% of the samples for training, 5% for validation, and the rest for testing. For PU, SA, and HU, we use 1% of the samples for training, 1% for validation, and the rest for testing.
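A split of this kind can be sketched with the Python standard library as follows. The sketch assumes that the percentages are applied per class and that every class keeps at least one training and one validation sample; both points, like the function name `split_indices`, are assumptions made for illustration.

```python
import random

def split_indices(labels, train_frac, val_frac, seed=0):
    """Split sample indices per class into train / validation / test lists."""
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)
    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = max(1, round(train_frac * len(indices)))  # at least one sample
        n_val = max(1, round(val_frac * len(indices)))
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]
    return train, val, test

labels = [0] * 200 + [1] * 100 + [2] * 40     # toy labels for three classes
train, val, test = split_indices(labels, train_frac=0.05, val_frac=0.05)
print(len(train), len(val), len(test))        # 17 17 306
```

Changing the `seed` argument gives a different random selection of training samples, which is how repeated runs with different seeds can be produced.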

Experimental setup

The software environment for our experiments is Python 3.7.0 with the PyTorch 1.2.0 deep learning framework. The hardware environment is an RTX2060 GPU with 6 GB of memory and an AMD R7-4800 CPU at 2.9 GHz with 16 GB of RAM. We use the SGD optimizer40 to optimize the training parameters of the model, with cross-entropy as the loss function. The learning rate is set to 0.001, 0.001, 0.01, and 0.001 on KSC, SA, PU, and HU respectively. The number of epochs is set to 200 on all four datasets.

In order to quantitatively evaluate the classification performance of the model, we choose OA (overall accuracy), AA (average accuracy), and kappa coefficient (κ) as the evaluation indicators of the model.
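The three indicators can be computed from a confusion matrix as in the NumPy sketch below, which uses their standard definitions: OA is the fraction of correctly classified pixels, AA is the mean of the per-class accuracies, and κ corrects OA for chance agreement. The function name and the toy matrix are illustrative.

```python
import numpy as np

def classification_metrics(conf):
    """OA, AA and kappa from a confusion matrix (rows: true class, columns: predicted)."""
    conf = np.asarray(conf, dtype=float)
    total = conf.sum()
    oa = np.trace(conf) / total                         # fraction of correct pixels
    aa = np.mean(np.diag(conf) / conf.sum(axis=1))      # mean of per-class accuracies
    expected = np.sum(conf.sum(axis=0) * conf.sum(axis=1)) / total**2
    kappa = (oa - expected) / (1.0 - expected)
    return oa, aa, kappa

conf = [[50, 0], [10, 40]]          # toy two-class confusion matrix
oa, aa, kappa = classification_metrics(conf)
print(oa, aa, kappa)                # approximately 0.9 0.9 0.8
```

AA weights every class equally regardless of its size, so it is more sensitive than OA to errors on small classes.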

Parameters setting

We analyze some factors that affect the training and performance of the model: batch size, learning rate, number of heads, and input size. To be fair, each of the subsequent experiments was repeated ten times with ten different random seeds, to exclude variability due to random factors, and the reported metrics are the averages over the ten runs.

(1) Batch size: The batch size is important for model training because it affects the convergence of the model. We consider the set {16, 32, 64} for experiments. As the results in Fig. 8 show, choosing an appropriate batch size is very important for the final performance of the model, so we use 16 on KSC, 64 on SA, 64 on PU, and 32 on HU.

(2) Learning rate: The learning rate affects the convergence speed of the model during training, and it plays an important role in the performance of the model. We consider the set of learning rates {0.01, 0.001, 0.0001} for experiments. As shown in Fig. 9, the learning rate used to train the model has a great impact on its final performance. Based on these results, we use 0.001 on KSC, 0.001 on SA, 0.01 on PU, and 0.001 on HU.

(3) Number of heads: The Transformer's multi-head self-attention extracts the global relationships between vectors in the sequence, and different heads can extract different relationships. We select the set of head numbers {4, 6, 8} to evaluate the effect of the head count on the model. As shown in Fig. 10, different head counts affect the performance of the model. According to the experimental results, we use 4 on KSC, 4 on SA, 6 on PU, and 4 on HU.

(4) Input size: The input size determines the spatial information that the model can use for classification. To evaluate the effect of the input size on the model, we consider the set of sizes {3, 5, 7, 9, 11}. As shown in Fig. 11, the OA of the model generally increases with the input size. On the HU dataset, the OA of size 11×11 is lower than that of size 9×9, but it is still higher than that of sizes 3×3, 5×5, and 7×7. This indicates that more spatial information gives the model more information to mine. We choose \(11\times 11\) as the input size of the model on the PU, KSC, and SA datasets, and \(9\times 9\) on the HU dataset.
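For reference, the settings selected in this parameter study can be collected in a single configuration table. The dictionary layout and key names below are only one possible way to record them; the values are the ones stated in the text.

```python
# Settings chosen in the parameter study, keyed by dataset.
SETTINGS = {
    "KSC": {"batch_size": 16, "learning_rate": 0.001, "num_heads": 4, "input_size": 11},
    "SA":  {"batch_size": 64, "learning_rate": 0.001, "num_heads": 4, "input_size": 11},
    "PU":  {"batch_size": 64, "learning_rate": 0.01,  "num_heads": 6, "input_size": 11},
    "HU":  {"batch_size": 32, "learning_rate": 0.001, "num_heads": 4, "input_size": 9},
}

for name, cfg in SETTINGS.items():
    print(name, cfg)
```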

Comparison results of different methods

In this section, our proposed model is compared with the traditional method MLP as well as seven deep learning models: 1D-CNN20, M3D-DCNN41, pResNet22, SSRN21, DBDA24, SCFR25 and DPSCN26. All of these methods except MLP and 1D-CNN use the neighborhood pixel block as the input of the model. The hyperparameters (such as input size and learning rate) and training techniques (such as early stopping and dynamic learning rate adjustment) of all the models are set according to their original papers to ensure fairness. We repeat each group of experiments on the four datasets 10 times with randomly selected training samples, and we report the mean and standard deviation of all the metrics. We briefly introduce the compared methods below.

(1) MLP: A multilayer perceptron that consists of two fully connected layers and a ReLU.

(2) 1D-CNN: It consists of 1D convolutional layers and fully connected layers.

(3) M3D-DCNN: This model extracts multi-scale information by combining multiple 3D convolution kernels of different sizes. The size of the neighborhood pixel block is 7 × 7.

(4) pResNet: This model is based on 2DCNN. By introducing a deep pyramid network23, the depth of the model is increased to extract rich spectral and spatial information. The size of the neighborhood pixel block is 11 × 11.

(5) SSRN: This model consists of multiple spectral residual blocks and spatial residual blocks. The two kinds of residual blocks are based on ResNet and 3DCNN. The size of the neighborhood pixel block is 7 × 7.

(6) DBDA: A 3DCNN-based Double-Branch model in which each branch consists of DenseNet and an attention mechanism. The size of the neighborhood pixel block is 9 × 9.

(7) SCFR: This model is composed entirely of 1 × 1 convolutions, except for the first layer, which is a 3 × 3 convolution. The size of the neighborhood pixel block is 7 × 7.

(8) DPSCN: This model is built from the dual-path small convolution (DPSC) module, which consists of 1 × 1 convolutions with a residual path and a density path. The size of the neighborhood pixel block is 9 × 9.

The classification results of the different models on the four datasets are shown in Tables 5, 6, 7 and 8, with the best results in bold. Our proposed model performs best on all four datasets. MLP and 1D-CNN, which use only the spectral information of HSI, have the lowest performance on all four datasets. The models that use spatial information are more accurate than MLP and 1D-CNN, which shows the importance of spatial information for HSI classification. It is worth noting that M3D-DCNN performs much worse than pResNet, SSRN, DBDA, and DBFFT on the four datasets. The reason is that M3D-DCNN is shallow, which makes it difficult to extract deep features of HSI. Furthermore, when training samples are few, M3D-DCNN overfits the training data. The pResNet model performs poorly on PU, KSC, and HU, where its OA is 4.23%, 2.76%, and 8.81% lower than that of DBFFT, respectively. The reason is that pResNet stacks a large number of convolution kernels, which gives the model too many trainable parameters and causes overfitting when training samples are few. In addition, the over-reliance of the 2DCNN-based model on the spatial features of HSI also hurts its performance. SCFR and DPSCN are mainly composed of 1 × 1 convolutions and use only a small number of 3 × 3 convolutions to extract spatial information. SCFR performs poorly on all four datasets, suggesting that it does not extract enough spatial features. The performance of DPSCN on PU is close to that of DBFFT, with an OA only 0.08% lower, but on KSC, SA, and HU its OA is 2.28%, 4.9%, and 2.2% lower than that of DBFFT, respectively. This indicates the poor generalization ability of DPSCN. Both SSRN and DBDA are 3D-CNN-based models, but their performance on all four datasets is lower than that of our proposed model. DBDA, like our proposed model, has a Double-Branch structure, but its OA on KSC, SA, PU, and HU is 1.35%, 1.01%, 0.16%, and 0.9% lower than that of DBFFT, respectively. This illustrates the importance of global features for HSI classification. Our model is the best not only on OA but also on AA and κ, which indicates that our model has better stability.
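For readers who want to reproduce the three reported metrics, the following minimal sketch computes OA (fraction of correctly classified pixels), AA (mean of the per-class accuracies) and κ (Cohen's kappa) from true and predicted labels. It uses the standard definitions of these metrics; the function name `classification_metrics` is hypothetical, and the evaluation code actually used for Tables 5, 6, 7 and 8 is not shown in this paper.

```python
from collections import Counter


def classification_metrics(y_true, y_pred):
    """Return (OA, AA, kappa) for two equal-length label sequences.

    Hypothetical helper written for illustration; not the authors' code.
    """
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("label sequences must be non-empty and of equal length")

    n = len(y_true)
    true_counts = Counter(y_true)   # number of test pixels per ground-truth class
    pred_counts = Counter(y_pred)   # number of pixels assigned to each class
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)

    # Overall accuracy: correctly classified pixels over all pixels.
    oa = sum(correct.values()) / n

    # Average accuracy: mean per-class accuracy over the ground-truth classes.
    aa = sum(correct[c] / true_counts[c] for c in true_counts) / len(true_counts)

    # Cohen's kappa: agreement corrected for the agreement expected by chance.
    pe = sum(true_counts[c] * pred_counts[c] for c in true_counts) / (n * n)
    kappa = (oa - pe) / (1.0 - pe) if pe < 1.0 else 1.0

    return oa, aa, kappa


if __name__ == "__main__":
    # Toy labels, invented for this example only.
    print(classification_metrics([0, 0, 0, 0, 1, 1], [0, 0, 0, 1, 1, 1]))
```

Because AA weights every class equally, it is more sensitive than OA to errors on small classes, which is why the two metrics can rank models differently on imbalanced scenes.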

Figures 12, 13, 14 and 15 show the false-color image of each HSI, the ground truth map, and the classification maps of DBFFT and all the compared methods. The classification maps of MLP and 1D-CNN, which use only spectral information, contain a lot of salt-and-pepper noise. The classification maps of the CNN-based models that use both spectral and spatial information, and that of our proposed model, are smoother. However, M3D-DCNN has worse classification results than pResNet, SSRN, DBDA, SCFR, DPSCN, and DBFFT due to its severe overfitting. Our proposed model extracts global spectral features and global spatial features by introducing a self-attention mechanism, and fuses the spectral and spatial features through a feature fusion layer to obtain a very smooth classification map. Compared with all other models, our classification maps contain the least noise on the four datasets and are the most accurate and smooth.

Figure 16 shows a part of the SA classification map. With a small training set, class 8 and class 15 are extremely prone to misclassification by both our proposed model and the compared models. MLP, 1D-CNN and M3D-DCNN misclassify many pixels of these two classes. Our proposed model has the fewest misclassifications on class 8 and class 15, which reflects its robustness against overfitting.

Table 9 reports the training time and test time of the proposed model and five models with similar performance. Our model trains faster than both DBDA and SSRN. On the SA dataset, the training time of SSRN is 3 times that of ours, and the training time of DBDA is twice that of ours. Compared with DPSCN and SCFR, our model requires more training time and test time, but DPSCN and SCFR achieve performance similar to that of our proposed model only on some datasets and perform poorly on others. For example, on the SA dataset, the OA of DPSCN and SCFR is 4.76% and 6.98% lower than that of our proposed model, respectively. We consider the extra time worthwhile for the better performance.

Investigation of training sample

The excellent performance of deep learning methods relies on a large amount of labeled data, but it is usually difficult to obtain enough labeled data for HSI. Therefore, we test the performance of our proposed model and all compared models with different numbers of training samples. For KSC, we take 1%, 3%, 5%, 10%, and 20% of labeled pixels as training samples. For PU, we choose 0.8%, 1%, 5%, 10%, and 20% of labeled pixels as training samples. For SA, we consider 0.5%, 1%, 3%, 5%, and 10% of labeled pixels as training samples. For HU, we consider 0.5%, 1%, 5%, 15%, and 20% of labeled pixels as training samples. As shown in Fig. 17, the OA of all models increases as the number of training samples increases. With large training sets, the performance of SSRN, DBDA, pResNet and our proposed model is close to perfect. When the training samples are reduced, however, our proposed model consistently outperforms the other models. It should be mentioned that our proposed model has the highest accuracy at all sample proportions on SA, and is suboptimal only at the 20% sample proportion on the PU and KSC datasets. Considering the difficulty of acquiring HSI samples, our proposed model is better suited to practical situations.
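As an illustration of how a given proportion of labeled pixels could be drawn as training samples, the sketch below selects a fraction of the indices of each class at random and leaves the remainder for testing. It assumes per-class (stratified) sampling with at least one training sample per class, which the text above does not state explicitly; the function name `stratified_split` and the parameters `seed` and `min_per_class` are hypothetical.

```python
import math
import random
from collections import defaultdict


def stratified_split(labels, train_fraction, seed=0, min_per_class=1):
    """Split sample indices into train/test sets, class by class.

    `labels` holds one class id per labeled pixel (unlabeled background
    pixels are assumed to have been removed beforehand).
    """
    if not 0.0 < train_fraction < 1.0:
        raise ValueError("train_fraction must lie strictly between 0 and 1")

    rng = random.Random(seed)          # a fixed seed makes a run repeatable
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    train, test = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        # Round up, and keep at least `min_per_class` samples for tiny classes.
        k = max(min_per_class, math.ceil(train_fraction * len(indices)))
        k = min(k, len(indices))
        train.extend(indices[:k])
        test.extend(indices[k:])
    return train, test


if __name__ == "__main__":
    # Toy label vector, invented for this example only.
    toy_labels = [1] * 100 + [2] * 50 + [3] * 3
    train_idx, test_idx = stratified_split(toy_labels, train_fraction=0.1, seed=42)
    print(len(train_idx), len(test_idx))
```

Repeating the split with different seeds gives the randomly selected training sets over which a mean and standard deviation can be reported.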

Effect of label smoothing

To verify the effect of label smoothing on model training, we retrain the models without label smoothing and compare their performance. The results are shown in Fig. 18. On all four datasets, adding label smoothing during training improves the performance of the model, which indicates that the model trained with label smoothing has stronger generalization ability.
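To make the technique concrete, here is a minimal sketch of cross-entropy with smoothed targets. It assumes the common uniform variant, in which the one-hot target is mixed with a uniform distribution over the K classes using a smoothing factor ε; the value ε = 0.1 and the function names are assumptions made for illustration, and the exact formulation used in our experiments may differ.

```python
import math


def smooth_labels(target, num_classes, epsilon=0.1):
    """Return the smoothed target: (1 - eps) * one_hot + eps / K for every class."""
    off_value = epsilon / num_classes
    distribution = [off_value] * num_classes
    distribution[target] += 1.0 - epsilon
    return distribution


def log_softmax(logits):
    """Numerically stable log-softmax over a list of raw class scores."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def smoothed_cross_entropy(logits, target, epsilon=0.1):
    """Cross-entropy between the smoothed target and softmax(logits)."""
    q = smooth_labels(target, len(logits), epsilon)
    log_p = log_softmax(logits)
    return -sum(q_i * lp_i for q_i, lp_i in zip(q, log_p))


if __name__ == "__main__":
    # Toy logits for a 4-class problem, invented for this example only.
    logits = [3.0, 0.5, -1.0, 0.2]
    print(smoothed_cross_entropy(logits, target=0, epsilon=0.0))  # plain cross-entropy
    print(smoothed_cross_entropy(logits, target=0, epsilon=0.1))  # smoothed
```

With ε = 0 the loss reduces to ordinary cross-entropy. With ε > 0 the model is penalized for driving the predicted probability of the labeled class all the way to 1, which discourages over-confident fits when training samples are few.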

Effect of feature fusion layer

In this section, we compare the performance of the proposed model with that of the same model without the feature fusion layer. The results are shown in Fig. 19. Feature fusion significantly improves the performance of the model on all four datasets, which shows that the feature fusion layer improves performance by fusing the spectral and spatial features of HSI.

Effect of attention mechanism

We verify the effectiveness of the attention mechanism by removing the spectral attention module, the spatial attention module, and both attention modules from the model in turn. The experimental results are shown in Fig. 20. When both modules are removed, the performance of the model decreases significantly on all four datasets, by 0.91%, 1.04%, 1.26%, and 3.61% on KSC, PU, SA, and HU, respectively. When only the spatial attention module is removed, the performance of the model is reduced by 0.88%, 0.95%, 1.2%, and 3.44% on KSC, PU, SA, and HU, respectively. This reveals that the spatial attention module plays the major role in improving the performance of the model. Removing only the spectral attention module has a small, non-significant impact on the performance of the model. Therefore, we can conclude that adding the attention mechanism improves the performance of the model.

Conclusion

In this paper, we propose a Double-Branch Feature Fusion Transformer (DBFFT) model for HSI classification. The proposed model overcomes the shortcomings of CNN-based models, which are not good at learning the long-distance dependencies among spectral bands or at extracting global spatial features of HSI. We first present two attention mechanism modules to extract spectral and spatial features separately. According to the characteristics of HSI, we adopt Pixel-wise embedding and Band-wise embedding on the spectral branch and spatial branch to process the feature maps, so that the self-attention mechanism can better extract the global spatial and global spectral features of HSI. Then, we design a feature fusion layer to fuse the spectral and spatial features of the two branches. Given the limited number of training samples available for HSI, it is important that our model outperforms the compared CNN-based models when training samples are few. In addition, we employ the label smoothing technique to improve the generalization ability of the model in small-sample scenarios.

In the future, we will continue to improve the effectiveness and performance of the proposed model. First, we will refine the structure of the model to enhance its ability to extract global features and to generalize. Second, we will improve the fusion of spectral and spatial features with a more effective feature fusion layer. Finally, more hyperspectral image datasets could be considered, beyond the few public datasets used here.

Data availability

The data that support the findings of this study are available from the Grupo de Inteligencia Computacional (GIC) website (http://www.ehu.eus/ccwintco/index.php/Hyperspectral_Remote_Sensing_Scenes).

References

Landgrebe, D. Hyperspectral image data analysis. IEEE Signal Process. Mag. 19(1), 17–28. https://doi.org/10.1109/79.974718 (2002).

Fauvel, M., Tarabalka, Y., Benediktsson, J. A., Chanussot, J. & Tilton, J. C. Advances in spectral-spatial classification of hyperspectral images. Proc. IEEE 101(3), 652–675. https://doi.org/10.1109/JPROC.2012.2197589 (2013).

Li, J., Marpu, P. R., Plaza, A., Bioucas-Dias, J. M. & Benediktsson, J. A. Generalized composite kernel framework for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 51(9), 4816–4829. https://doi.org/10.1109/TGRS.2012.2230268 (2013).

Ibrahim, A. et al. Atmospheric correction for hyperspectral ocean color retrieval with application to the Hyperspectral Imager for the Coastal Ocean (HICO). Remote Sens. Environ. 204, 60–75 (2018).

Mahesh, S., Jayas, D., Paliwal, J. & White, N. Hyperspectral imaging to classify and monitor quality of agricultural materials. J. Stored Prod. Res. 61, 17–26 (2015).

Haboudane, D., Miller, J. R., Pattey, E., Zarco-Tejada, P. J. & Strachan, I. B. Hyperspectral vegetation indices and novel algorithms for predicting green LAI of crop canopies: Modeling and validation in the context of precision agriculture. Remote Sens. Environ. 90(3), 337–352 (2004).

Manjunath, K., Ray, S. & Vyas, D. Identification of indices for accurate estimation of anthocyanin and carotenoids in different species of flowers using hyperspectral data. Remote Sens. Lett. 7(10), 1004–1013 (2016).

Han, Y., Li, J., Zhang, Y., Hong, Z. & Wang, J. Sea ice detection based on an improved similarity measurement method using hyperspectral data. Sensors 17(5), 1124 (2017).

Paoletti, M. E., Haut, J. M., Plaza, J. & Plaza, A. Deep learning classifiers for hyperspectral imaging: A review. ISPRS J. Photogramm. Remote Sens. 158, 279–317 (2019).

Fauvel, M., Benediktsson, J. A., Chanussot, J. & Sveinsson, J. R. Spectral and spatial classification of hyperspectral data using SVMs and morphological profiles. IEEE Trans. Geosci. Remote Sens. 46(11), 3804–3814. https://doi.org/10.1109/TGRS.2008.922034 (2008).

Hongwei, Z. & Basir, O. An adaptive fuzzy evidential nearest neighbor formulation for classifying remote sensing images. IEEE Trans. Geosci. Remote Sens. 43(8), 1874–1889. https://doi.org/10.1109/TGRS.2005.848706 (2005).

Collobert, R. & Bengio, S. Links between perceptrons, MLPs and SVMs. Proc. ICML https://doi.org/10.1145/1015330.1015415 (2004).

Benediktsson, J. A., Palmason, J. A. & Sveinsson, J. R. Classification of hyperspectral data from urban areas based on extended morphological profiles. IEEE Trans. Geosci. Remote Sens. 43(3), 480–491. https://doi.org/10.1109/TGRS.2004.842478 (2005).

Li, W. & Du, Q. Gabor-filtering-based nearest regularized subspace for hyperspectral image classification. IEEE J. Select Topics Appl. Earth Observ. Remote Sens. 7(4), 1012–1022 (2014).

Okan, A., Özdemir, B., Gedik, B. E., Yasemin, C. & Çetin, Y. Hyperspectral classification using stacked autoencoders with deep learning. In Proc. WHISPERS. 1–4 (2014).

Zhou, F., Hang, R., Liu, Q. & Yuan, X. HSI classification using spectral-spatial LSTMs. Neurocomputing 328, 39–47. https://doi.org/10.1016/j.neucom.2018.02.105 (2019).

Hang, R., Liu, Q., Hong, D. & Ghamisi, P. Cascaded recurrent neural networks for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 57(8), 5384–5394. https://doi.org/10.1109/TGRS.2019.2899129 (2019).

Larochelle, H. & Bengio, Y. Classification using discriminative restricted boltzmann machines. In Proc. ICML. 536–543 (2008).

Hong, D. et al. SpectralFormer: Rethinking hyperspectral image classification with transformers. IEEE Trans. Geosci. Remote Sens. 60, 1–15. https://doi.org/10.1109/TGRS.2021.3130716 (2022).

Hu, W., Huang, Y., Wei, L., Zhang, F. & Li, H. Deep convolutional neural networks for hyperspectral image classification. J. Sens. https://doi.org/10.1155/2015/258619 (2015).

Zhong, Z., Li, J., Luo, Z. & Chapman, M. Spectral-spatial residual network for hyperspectral image classification: A 3-D deep learning framework. IEEE Trans. Geosci. Remote Sens. 56(2), 847–858. https://doi.org/10.1109/TGRS.2017.2755542 (2018).

Paoletti, M. E. et al. Deep pyramidal residual networks for spectral-spatial hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 57(2), 740–754. https://doi.org/10.1109/TGRS.2018.2860125 (2019).

Han, D., Kim, J. & Kim, J. Deep pyramidal residual networks. In Proc. CVPR. 5927–5935 (2017).

Li, R., Zheng, S., Duan, C., Yang, Y. & Wang, X. Classification of hyperspectral image based on double-branch dual-attention mechanism network. Remote Sens. 12(3), 582. https://doi.org/10.3390/rs12030582 (2020).

Gao, H. et al. Convolutional neural network for spectral-spatial classification of hyperspectral images. Neural Comput. Appl. 31, 8997–9012. https://doi.org/10.1007/s00521-019-04371-x (2019).

Dang, L., Pang, P., Zuo, X., Liu, Y. & Lee, J. A dual-path small convolution network for hyperspectral image classification. Remote Sens. 13(17), 3411. https://doi.org/10.3390/rs13173411 (2021).

Chang, Y.-L. et al. Consolidated convolutional neural network for hyperspectral image classification. Remote Sens. 14(7), 1571. https://doi.org/10.3390/rs14071571 (2022).

Shi, H. et al. H2A2Net: A hybrid convolution and hybrid resolution network with double attention for hyperspectral image classification. Remote Sens. 14(17), 4235. https://doi.org/10.3390/rs14174235 (2022).

He, X., Chen, Y. & Lin, Z. Spatial-spectral transformer for hyperspectral image classification. Remote Sens. 13(3), 498 (2021).

Vaswani, A. et al. Attention is all you need. arXiv preprint arXiv:1706.03762 (2017).

He, J., Zhao, L., Yang, H., Zhang, M. & Li, W. HSI-BERT: Hyperspectral image classification using the bidirectional encoder representation from transformers. IEEE Trans. Geosci. Remote Sens. 58(1), 165–178. https://doi.org/10.1109/TGRS.2019.2934760 (2020).

Dosovitskiy, A. et al. An image is worth 16×16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929 (2020).

Yuan, K., Guo, S., Liu, Z., Zhou, A., Yu, F. & Wu, W. Incorporating convolution designs into visual transformers. In Proc. ICCV. 579–588 (2021).

Chen, C. F. R., Fan, Q. & Panda, R. CrossViT: Cross-attention multi-scale vision transformer for image classification. In Proc. ICCV. 357–366 (2021).

Hu, J., Shen, L. & Sun, G. Squeeze-and-excitation networks. In Proc. CVPR. 7132–7141 (2018).

Zhu, M., Jiao, L., Liu, F., Yang, S. & Wang, J. Residual spectral-spatial attention network for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 59(1), 449–462. https://doi.org/10.1109/TGRS.2020.2994057 (2021).

Woo, S., Park, J., Lee, J.-Y. & Kweon, I. S. CBAM: Convolutional block attention module. In Proc. ECCV. 3–19 (2018).

Kayhan, O. S. & Gemert, J. C. V. On translation invariance in CNNs: Convolutional layers can exploit absolute spatial location. In Proc. CVPR. 14274–14285 (2020).

Acito, N., Matteoli, S., Rossi, A., Diani, M. & Corsini, G. Hyperspectral airborne “Viareggio 2013 Trial” data collection for detection algorithm assessment. IEEE J. Select. Topics Appl. Earth Observ. Remote Sens. 9(6), 2365–2376 (2016).

Donoho, D. L. High-dimensional data analysis: The curses and blessings of dimensionality. AMS Math Chall. Lect. 1, 32 (2000).

He, M., Li, B. & Chen, H. Multi-scale 3D deep convolutional neural network for hyperspectral image classification. Proc. ICIP https://doi.org/10.1109/ICIP.2017.8297014 (2017).

Funding

This research was funded by National Natural Science Foundation of China, grant number 62176087; Shenzhen Science and Technology Innovation Commission (SZSTI)-Shenzhen Virtual University Park (SZVUP) Special Fund Project (2021Szvup032); National Key Research and Development Program of China, grant number 2019YFE0126600; Major Project of Science and Technology of Henan Province, grant number 201400210300.

Author information

Contributions

Conceptualization, L.D. and L.W.; methodology, L.D., L.W. and Y.H.; software, L.W.; validation, L.W.; formal analysis, Y.L.; investigation, L.W.; writing—original draft preparation, L.W.; writing—review and editing, X. Z., Y.L. and L.D; visualization, L.W.; funding acquisition, X. Z. and Y.L. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dang, L., Weng, L., Hou, Y. et al. Double-branch feature fusion transformer for hyperspectral image classification. Sci Rep 13, 272 (2023). https://doi.org/10.1038/s41598-023-27472-z

DOI: https://doi.org/10.1038/s41598-023-27472-z
