Abstract

Advanced photodetectors with intelligent functions are expected to play an important role in future technology. However, completing complex detection tasks within a limited number of pixels is still challenging. Here, we report a differential perovskite hemispherical photodetector serving as a smart locator for intelligent imaging and location tracking. The high external quantum efficiency (~1000%) and low noise (10^−13 A Hz^−0.5) of the perovskite hemispherical photodetector enable stable and large variations in signal response. Analysing the differential light response of only 8 pixels with a computer algorithm enables color imaging and a computational spectral resolution of 4.7 nm in a low-cost and lensless device geometry. By using machine learning to model the differential current signal under different applied biases, an additional dimension of detection information can be recorded, allowing dynamic tracking of an object’s trajectory in three-dimensional space or in a two-dimensional plane with a color classification function.


Introduction

Intelligent and low-cost photodetectors with advanced functions are an inevitable trend in the rapid development of modern technology1,2,3. Advances in photon detection involve diverse information such as photon efficiency4, wide-angle vision1,5,6,7,8, image effectiveness1,9,10, color classification1,11,12,13,14,15,16,17, object location18,19, transmission of digital information20, and so on. However, conventional optical imaging often captures redundant, duplicate, and unrelated information, and the matrix sensors in a camera impose additional cost and complexity on imaging systems. To realize multiple functions under different imaging scenes such as wide-angle and night vision, the conventional practice in a smartphone is to integrate several cameras and use a different one for each circumstance or requirement. The complex optical components and repeated pixel-matrix components waste space and cost. Fourier transform-based single-pixel imaging partially solves this issue9,10. A 2D image is converted into the frequency domain by the Fourier transform, and a single-pixel photodetector records the image information by monitoring the photocurrent variations caused by the light reflected from an object. The object image can then be reconstructed from the Fourier spectrum coefficients through the inverse Fourier transform.

Realizing a 2D image with a single-pixel device in a limited space is a good starting point for intelligent photodetectors, which require more and more functions to meet the requirements of modern technology. This also frees space to integrate pixel arrays with different functions, although coordinating the algorithms and data processing between device pixel arrays is challenging. Machine learning has laid the foundation for intelligent technologies, which are gradually renewing knowledge and modern products1,19. The inherent ability of machine learning to accurately process large amounts of data enables reliable and fast development of artificial intelligence, and also makes it possible to realize lensless color imaging with a wavelength resolution superior even to that of human eyes. The advent of computational spectrometers based on computer algorithms has greatly reduced the size and cost of the spectrometer12,17,21,22,23,24. The basic principle for reconstructing a fine spectrum lies in an accurate relationship between the detector’s response variation and the light wavelength12,17,25. Therefore, machine learning of the responsivity variations at different wavelengths with external bias, gate voltage, and electrode positions is expected to be able to process the data pool for high-performance computational spectrometers17. However, the development of intelligent photodetectors through machine learning is limited by the tiny variations among the pixel arrays under different circumstances.

A hemispherical surface possesses varying curvature and detection distance, which results in larger responsivity variations with the distribution of incident light intensity, wavelength, object distance, and so on5,18,26. In addition, hemispherical photodetectors have the innate advantage of wide-angle detection in lensless systems, mimicking the compound eye structure of small arthropods5,18,19,26. Experiments have shown that spray-coated perovskite films on a hemispherical substrate show the charge collection narrow-band (CCN) effect and a wide-angle response when the film composition, thickness, and charge carrier dynamics are controlled14,26,27,28,29,30. However, the photon detection mode remains rigid and yields monotonous information, far from the ideal of intelligent imaging that delivers rich information.

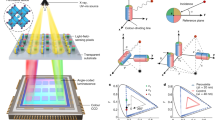

In this article, we report a differential perovskite hemispherical photodetector with 8 differential pixels to construct an intelligent detection system. The differential pixel size, position, and responsivity under different applied biases, together with the Fourier transform algorithm and neural network fitting (NNF)-assisted machine learning, enable the compatible integration of functions of interest for a wide range of applications such as computational spectrometers, wide-angle imaging, color classification, location tracking, and so on.

Results

Nanoparticles assisted film formation and device gain

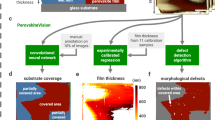

To deposit perovskite layers on a non-flat substrate, we previously established a facile and compatible pneumatic spraying process, a technique that is well established in the manufacturing industry. However, the spray-coated hemispherical perovskite device exhibits a photovoltaic response with a limited external quantum efficiency (EQE) of ~10%, although its narrow-band response in color imaging is an essential feature for intelligent imaging. To improve the device performance, we synthesized an amphiphilic molecule, naphthoguanidinium iodide (NGAI), composed of hydrophilic guanidinium and hydrophobic naphthalene, to form supramolecular aggregates. Supramolecular aggregates are a class of materials with good dynamics, homogeneity, and accurate molecular structure31,32,33,34. The molecules are recrystallized in a hydroiodic acid solution to precipitate needle-like white crystals. The stacked structure obtained by single-crystal X-ray diffraction is shown in Fig. 1a. NGAI is a monoclinic crystal (P21/c) with lattice parameters a = 1.33 nm, b = 0.59 nm, c = 14.63 nm, α = γ = 90°, β = 92.27°. The naphthalene groups form a layered structure driven by π-π stacking, while several guanidinium cations are combined with iodide ions in the form of chelates (Supplementary Fig. 2d). The transmission electron microscopy (TEM) study in Fig. 1b shows that NGAI exists in the form of a nanosheet structure. The stacking model of the nanosheet structure is similar to the single-crystal structure of NGAI. When lead iodide (PbI2) is present in the solution, the morphology of the supramolecular aggregates changes into nanoparticle structures (Fig. 1c), indicating an interaction between NGAI and PbI2. The average diameter of the nanoparticles is ~4.7 nm (Fig. 1c)35. The formation scheme is shown in Fig. 1d: the non-polar naphthalene groups of [NGA]+ aggregate in a polar solvent to form a dimer and then stack laterally to form nanosheet structures, which can further evolve into nanoparticles in the presence of PbI2 by forming covered structures with [NGA]+ ions.

a The crystal structure of the NGAI where NGAI is 1-(naphthalen-2-yl)guanidinium iodide. b The TEM image of the nanosheet structure aggregated by NGAI without adding PbI2, scale bar: 200 nm. c The TEM image of the nanoparticles aggregated by NGAI-PbI2 complex, scale bar: 20 nm. d Schematic diagram of the self-assembly of [NGA]+ in the polar solvent (w/o and w PbI2) where [NGA]+ is the 1-(naphthalen-2-yl)guanidinium cation. The guanidinium of [NGA]+ was exposed to the outside. e Schematic diagram of the process of crystallization of perovskite (FAPbI3 w NGAI) during spray-coating and annealing. f The crystalline processes of the FAPbI3 films were monitored by the absorbance at 700 nm with different amounts of NGAI under 80 °C. g The EQE of FAPbI3 (w 0%mol ~ 40%mol NGAI) photodetectors at −1.0 V bias condition. h The XRD spectra of FAPbI3 (w 0%mol ~ 30%mol NGAI) films fabricated by spray-coating.

The introduction of NGAI supramolecular aggregates is of vital importance to the performance of differential perovskite detectors. On the one hand, NGAI serves as an additive that improves the quality and controls the crystallization rate of the spray-coated perovskite film36,37,38. We monitored the crystallization of formamidinium lead iodide (FAPbI3) perovskite precursor solutions with different equivalents of NGAI (0–30%mol relative to Pb2+) on the substrate by time-dependent absorbance spectroscopy (700 nm, 80 °C). The addition of NGAI effectively slows the film crystallization by wrapping the [PbI6]4− in solution (Fig. 1e, f)36,39, which results in larger grains than in the film without NGAI (Supplementary Fig. 4e). On the other hand, the nanoparticles can induce charge injection from the external circuit to produce a device gain40,41. The EQE spectra of the photodetectors were measured to confirm the existence of device gain (Fig. 1g): the gain appears with the addition of 5%mol NGAI, and the maximum EQE value of ~1000% is observed once 10%mol ~ 20%mol NGAI is employed. The performance of differential detectors depends on their responsivity variations. The large EQE value provides a wide range of correlations between device responsivity and light signals, allowing differential detectors to acquire more detection information. It should also be noted that the addition of NGAI promotes crystalline orientation toward the stable (111) phase, as evidenced by the XRD spectra (Fig. 1h) and the morphology image (Supplementary Fig. 4e)42.

Differential external quantum efficiency for computational spectrometer

The large differential EQE/responsivity of the device allows us to resolve tiny differences in light wavelength through a computer algorithm for spectrometer applications. Figure 2a shows the EQE spectra for incident light of different wavelengths as a function of the applied reverse bias. As the applied reverse bias increases, the EQE value is greatly improved, and the short-wavelength range makes a larger contribution to the photocurrent, resulting in a good differential response to light wavelength. The maximum EQE value of ~1000% in Fig. 2b also yields a wide range of variations for signal simulation and machine learning to resolve two beams of light with similar wavelengths. Characteristic curves of current density versus reverse bias under the same irradiance (50 μW cm^−2) are shown in Fig. 2c, and the signal difference can be clearly distinguished and learned under different biases. To better understand and simulate the EQE variations versus applied reverse bias, we describe the mechanism of the bias-dependent charge carrier collection process in Fig. 2d. At low bias, the charge carrier drift length is smaller than the film thickness, leading to a narrow-band response in the EQE spectra due to the penetration depth difference between short- and long-wavelength light. At high bias, the charge carrier drift length extends across the film, so all the charge carriers can be collected regardless of where they are generated in the film. Therefore, the EQE is improved over the entire spectral range. We employed the device architecture of Cr/PTAA/Perovskites/C60/BCP/Cr/Au, and the photodetector performance is evaluated in Supplementary Fig. 7. The current density (J)–voltage (V) curve of the photodetector exhibits a high responsivity of 5.1 A W^−1 under a reverse bias of −1 V and an irradiance of 10 μW cm^−2, corresponding to a high EQE value of 1180% (Supplementary Fig. 7a). The gain of the photodetector is caused by nanoparticle-induced charge injection within the perovskite film, resulting in a large specific detectivity (D*) of 2 × 10^13 Jones based on the measured low noise current of ~10^−13 A Hz^−0.5 (Supplementary Fig. 7e). The response range from 350 nm to 850 nm enables spectrometer applications from the UV-visible to the near-infrared.
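As a quick consistency check on these figures of merit, the sketch below converts responsivity to EQE and estimates a noise-limited specific detectivity. It assumes the standard relations EQE = R·hc/(qλ) and D* = R·√A / i_n. The 536 nm wavelength is an illustrative assumption (the measurement wavelength is not stated in this paragraph), the 0.1 cm^2 area is the effective area quoted in Methods, and the function names are hypothetical.

```python
import math

PLANCK = 6.62607015e-34              # J s
LIGHT_SPEED = 2.99792458e8           # m / s
ELEMENTARY_CHARGE = 1.602176634e-19  # C


def eqe_from_responsivity(responsivity_a_per_w, wavelength_nm):
    """External quantum efficiency (as a fraction) from responsivity in A/W."""
    photon_energy_j = PLANCK * LIGHT_SPEED / (wavelength_nm * 1e-9)
    return responsivity_a_per_w * photon_energy_j / ELEMENTARY_CHARGE


def specific_detectivity(responsivity_a_per_w, area_cm2, noise_a_per_rt_hz):
    """Noise-limited D* in Jones (cm Hz^0.5 / W), noise given in A / Hz^0.5."""
    return responsivity_a_per_w * math.sqrt(area_cm2) / noise_a_per_rt_hz


# Responsivity and noise are the values quoted in the text;
# the wavelength is an assumption made only for this illustration.
eqe = eqe_from_responsivity(5.1, wavelength_nm=536.0)
d_star = specific_detectivity(5.1, area_cm2=0.1, noise_a_per_rt_hz=1e-13)
print(f"EQE ~ {eqe * 100:.0f} %, D* ~ {d_star:.1e} Jones")
```

Under these assumptions the EQE comes out close to the quoted 1180%, and the detectivity has the same order of magnitude as the quoted 2 × 10^13 Jones.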

a The EQE mapping of the differential photodetector at different reverse biases and wavelengths (the irradiance of the light is shown in Supplementary Fig. 8b, Condition 1). b The EQE curves of the photodetector at different reverse biases (–0.2 V, –0.6 V, –1.0 V) and wavelengths. c The current density of the photodetector at different reverse biases and wavelengths under a constant irradiance of 50 μW cm^−2. d Schematic of the principle of wavelength classification by differential photodetectors at different reverse biases. e The reconstructed spectra of four monochromatic lights by differential photodetectors match well with the reference spectra. f The reconstructed spectrum of the quasi-monochromatic light from a 520 nm laser. g The reconstructed spectrum of the polychromatic light.

To realize the bias-dependent computational spectrometer with the differential photodetector, a computational reconstruction algorithm was applied17. Figure 2e shows the reconstructed spectra of four beams of monochromatic light with the same full width at half maximum (FWHM) of ~5 nm but different wavelengths; the reconstructed spectra overlap with those of the incident light. The FWHM is calculated from the reciprocal linear dispersion of the instrument, and the step size of the center wavelength is 5 nm. The resolution of the computational spectrometer was evaluated to be better than 4.7 nm (Supplementary Fig. 8a). The quasi-monochromatic light generated by a 520 nm laser with an FWHM of 5.8 nm is also reconstructed in Fig. 2f, matching well with its emission spectrum. To simulate a more complex situation, polychromatic light with a white spectrum from a light-emitting diode (LED) was used, and the differential detector can also reconstruct its spectrum (Fig. 2g). To account for the influence of irradiance, the responsivity maps versus reverse bias and wavelength are shown in Supplementary Fig. 8c–e. The reconstruction of polychromatic light with arbitrary irradiance/wavelength is feasible by building the relationship between the irradiance, wavelength, reverse bias, and current density.
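The idea behind bias-dependent spectral reconstruction can be sketched as a linear inverse problem: the current at each bias is modeled as the responsivity map applied to the unknown spectrum, and the spectrum is recovered by regularized least squares. The code below is a minimal illustration under that assumption, using plain Tikhonov (ridge) regularization with a non-negativity clip. It is not the algorithm of ref. 17, and the responsivity map, bias grid, and test spectrum are synthetic placeholders rather than measured data.

```python
import numpy as np


def reconstruct_spectrum(response, currents, alpha=1e-3):
    """Ridge solution of response @ spectrum = currents, clipped to be non-negative.

    response: (n_bias, n_wavelength) responsivity map
    currents: (n_bias,) measured signal at each bias
    alpha:    regularization strength (hypothetical default)
    """
    response = np.asarray(response, dtype=float)
    currents = np.asarray(currents, dtype=float)
    n_wavelength = response.shape[1]
    lhs = response.T @ response + alpha * np.eye(n_wavelength)
    rhs = response.T @ currents
    spectrum = np.linalg.solve(lhs, rhs)
    return np.clip(spectrum, 0.0, None)


# Synthetic demonstration (all numbers are hypothetical, not measured data).
wavelengths = np.linspace(350.0, 850.0, 101)   # nm
biases = np.linspace(-1.0, -0.1, 40)           # V
# Toy responsivity map: stronger and broader response at larger reverse bias.
depth = 150.0 + 500.0 * np.abs(biases)[:, None]
response = np.abs(biases)[:, None] * np.exp(-(wavelengths[None, :] - 350.0) / depth)
true_spectrum = np.exp(-0.5 * ((wavelengths - 520.0) / 10.0) ** 2)
currents = response @ true_spectrum
estimate = reconstruct_spectrum(response, currents)
```

With fewer bias points than wavelength bins the problem is underdetermined, so the quality of the estimate depends strongly on how distinct the responsivity curves are and on the choice of regularization; a measured responsivity map would replace the toy model here.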

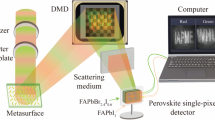

Color imaging with differential single-pixel detectors

Because the detector can recognize color, it has significant potential for full-spectrum color imaging without optical filters. This potential lies in the detector’s ability to discern subtle color differences in objects. Building upon this capability, we present a design for multi-color imaging that combines two-dimensional structured light patterns with Fourier single-pixel imaging. To enable single-pixel imaging with a simplified device architecture and limited space, the Fourier-transform phase-shift technique is adopted for high-resolution imaging9,10. Briefly, a set of patterns was projected onto the object by a projector, and we collected the current signal of the single-pixel device induced by the reflected light under each pattern (Fig. 3a). The collected current signal contains the 2D image information, which can be reconstructed through the Fourier transform algorithm. However, the reconstructed gray 2D image does not include any color information. To further exploit the advantages of differential detectors, the bias-voltage-dependent signal variations versus light wavelength are also included in the algorithm. Since the light reflected by objects of different colors differs in spectrum, the difference can be resolved through single-pixel imaging of the objects under different reverse biases, which finally realizes color imaging. In this context, we chose a smooth Rubik’s Cube as the imaging object and disregarded variations in the reflected light intensity across different positions on its surfaces. The Rubik’s Cube, as shown in Fig. 3a and Supplementary Fig. 9d, was imaged by single-pixel imaging under different bias voltages (–0.1 to –0.9 V). Supplementary Fig. 9c shows the gray images of the Rubik’s Cube under different bias voltages. The images are optimized by noise reduction and linear weighting. The gray-scale maps of the 2D images are displayed in Fig. 3b. The K-Nearest Neighbor (KNN) algorithm is used to classify the collected images (Fig. 3a and Supplementary Software 1). We select 121 pixels in the color area of each image as the training set. The gray values of a pixel at the different reverse biases form an n-dimensional feature vector; here n = 9, corresponding to the 9 reverse biases. The classification accuracy obtained from the training set reaches 100%, and the confusion matrix is shown in Supplementary Fig. 10a; the trained classifier is then used to classify the colors in the image in Fig. 3c. The accuracy of color classification depends on the large differential response between the responsivity and light wavelength under different reverse biases, and more refined recognition can be realized by optimizing the algorithm. While differences in reflected light intensity may interfere with color identification, integrating spectral recognition principles and establishing a matrix relationship between light intensity and response hold the promise of more precise color identification.
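The phase-shift acquisition described above can be illustrated with a small simulation of four-step phase-shifting Fourier single-pixel imaging. This is a sketch under simplifying assumptions: every spatial frequency is sampled, the single-pixel detector is noise-free and linear, and the pattern offset and contrast are hypothetical. For each frequency, four sinusoidal patterns with phases 0, π/2, π, and 3π/2 are "projected", the detector value is the sum of image times pattern, and the differences of the four readings give one complex Fourier coefficient.

```python
import numpy as np


def fourier_single_pixel(image, offset=0.5, contrast=0.5):
    """Simulate four-step phase-shift Fourier single-pixel imaging of `image`."""
    n_rows, n_cols = image.shape
    rows, cols = np.meshgrid(np.arange(n_rows), np.arange(n_cols), indexing="ij")
    phases = (0.0, np.pi / 2, np.pi, 3 * np.pi / 2)
    spectrum = np.zeros((n_rows, n_cols), dtype=complex)
    for k in range(n_rows):
        for l in range(n_cols):
            theta = 2 * np.pi * (k * rows / n_rows + l * cols / n_cols)
            # One single-pixel reading per projected pattern.
            d0, d90, d180, d270 = (
                np.sum(image * (offset + contrast * np.cos(theta + phi)))
                for phi in phases
            )
            # The differences cancel the offset term and isolate the coefficient.
            spectrum[k, l] = ((d0 - d180) + 1j * (d90 - d270)) / (2 * contrast)
    return np.fft.ifft2(spectrum).real


# Tiny synthetic scene (hypothetical values) recovered from simulated readings.
rng = np.random.default_rng(0)
scene = rng.random((8, 8))
recovered = fourier_single_pixel(scene)
```

In a real measurement only a subset of low frequencies is usually acquired to save time, which trades resolution for speed; the full sampling used here is chosen only so that the reconstruction is exact.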

a Schematic diagram of single-pixel imaging and the process of color classification. Patterns are projected onto the object by the projector. The reflected light is detected by the photodetector. Each pattern corresponds to a current density of the photodetector. m is the index of the pattern. The m–current density curve can be translated into a gray-scale map by the algorithm. The gray levels of pixels in the gray-scale maps obtained under different reverse biases are collected as feature vectors. b The optimized images at different Vn from single-pixel imaging. c The color image realized by a lensless differential photodetector.

Intelligent detection and tracking by differential detector arrays

The spray coating method not only provides adjustable thickness and optoelectronic properties of the photodetector but also allows fabrication on non-planar surfaces. This feature enables a position localization system based on hemispherical detectors. To further enlarge the differential signals for intelligent detection in 3D space, we developed differential electrodes on hemispherical devices, since the effective incident flux intensity differs between positions on a hemispherical surface, unlike on a planar surface26. First, we calculate the effective incident light intensity at the surface of the hemispherical detector and the planar detector for different positions of the light source (Fig. 4a). d and h represent the horizontal and vertical distances between the light source and the geometric center of the device (hemisphere or disc), respectively; r is the radius of the hemisphere and disc; and l is the distance between the light source and the geometric center of the device. The effective incident light intensity is distributed almost uniformly on the planar surface, whereas it varies significantly over the hemispherical surface. Five devices at different positions (0.2 r to 1.0 r from the center of the object) were set on the hemispherical/planar substrates, as shown in Fig. 4b, to study how their differential signals depend on the horizontal distance (d) of the light source. The curves of effective incident flux intensity at different positions on the planar surface almost coincide. In contrast, the differential signals at different positions on the hemispherical surface are different and asymmetric. Therefore, the differential photodetector can capture the location information of the light source.
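The contrast between the planar and hemispherical geometries can be checked numerically with a simple point-source model. The sketch below assumes an isotropic point source with inverse-square falloff and a cosine factor for oblique incidence, and it places the five pixels at horizontal offsets of 0.2 r to 1.0 r from the axis; this placement, the function names, and the unit source strength are assumptions made for illustration.

```python
import numpy as np


def effective_flux(point, normal, source, i0=1.0):
    """Flux per unit area at `point` (unit `normal`) from an isotropic point source."""
    point, normal, source = (np.asarray(v, dtype=float) for v in (point, normal, source))
    ray = source - point                       # from the surface element to the source
    distance = np.linalg.norm(ray)
    cos_incidence = max(0.0, float(normal @ ray) / distance)  # zero if facing away
    return i0 * cos_incidence / (4.0 * np.pi * distance ** 2)


def pixel_fluxes(d, h, r=1.0, offsets=(0.2, 0.4, 0.6, 0.8, 1.0)):
    """Return (hemisphere, plane) flux arrays for pixels at offsets * r from the axis."""
    source = np.array([d, 0.0, h])
    hemisphere, plane = [], []
    for rho in offsets:
        x = rho * r
        z = np.sqrt(max(r ** 2 - x ** 2, 0.0))
        p = np.array([x, 0.0, z])
        hemisphere.append(effective_flux(p, p / r, source))          # radial normal
        plane.append(effective_flux([x, 0.0, 0.0], [0.0, 0.0, 1.0], source))
    return np.array(hemisphere), np.array(plane)


hemi, plane = pixel_fluxes(d=0.0, h=20.0)      # h = 20 r, d = 0, as in the normalization
print("hemisphere relative spread:", np.ptp(hemi) / hemi.max())
print("plane relative spread:     ", np.ptp(plane) / plane.max())
```

Sweeping `d` while holding `h` fixed gives one curve per pixel; in this model the planar curves nearly overlap while the hemispherical ones separate, which is the property the differential pixels rely on.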

a The normalized effective incident flux on the hemispherical and planar surfaces with varying light source positions. b Curves of the normalized effective incident flux at different points on the hemispherical and planar surfaces (d = 0.2 r ~ 1.0 r) for light at different horizontal positions. The height of the light source is constant. c The device structure of the hemispherical photodetector. d The process for building the model by NNF and trace reconstruction. e The signal matrix of the differential pixels distributed at different positions (x1, x2, …, x8) of the photodetector. f The trace reconstruction of the light source w/o color classification and with color classification. g The spatial trace reconstruction in 3D space enabled by the differential hemispherical photodetector.

To further realize location tracking, we designed a differential photodetector with two kinds of differential electrodes (Fig. 4c). The electrode at the bottom of the photodetector was divided by a mask into eight parts (at intervals of 45°), which act as differential pixels collecting light signals from different directions. Two kinds of electrodes were designed to enhance the difference between the signals from different positions and improve the accuracy of the computer algorithm for location tracking. The theoretical simulation in Supplementary Fig. 12 shows the differences between the long-electrode and short-electrode designs in resolving the differential signals of different pixels. The hemispherical photodetector was integrated onto a printed circuit board (PCB) as a portable prototype of a location tracker (Supplementary Fig. 13). Figure 4d shows the processes of machine learning and data reconstruction based on the differential perovskite hemispherical photodetector. During the learning process, the signals (x1, x2, …, x8) were collected by the differential pixels for each position of the light source and used to fit the mathematical model with Bayesian regularization (Supplementary Software 1). To test the location tracking function, the signals (x’1, x’2, …, x’8) from the light source at a series of unknown positions were collected by the differential pixels to reconstruct the position/trajectory of the object. An LED was fixed on a precise X-Y motorized multi-axis displacement stage to finely control the position of the light source. The differential hemispherical photodetector was then placed under the light source to record the trajectory of the LED. The LED was moved over 20 × 20 steps in the X-Y plane with a step size of 3 mm, giving a signal matrix over an area of 6 × 6 cm^2 for the 8 differential pixels. Figure 4e shows the signal-matrix maps recorded by the 8 differential pixels of different shapes for object location within the plane, which serve as input data to build the mathematical model. The accuracy of the model generated by neural network fitting (NNF) during the learning process can be evaluated by the correlation coefficient in Supplementary Fig. 14. The correlation coefficients of the training group, test group, and all groups are 0.99976, 0.99967, and 0.99976, respectively. To verify the device performance and the feasibility of the strategy, we defined the trajectory of an object in Fig. 4f (Original trace, top panel) and reproduced the motion trajectory in Fig. 4f (Result trace, top panel) and Supplementary Movie 1. The detailed trajectory reconstruction is shown in Supplementary Fig. 15.

Since all the planar location tracking was completed under a constant bias voltage, we can add one more dimension to the differential signal by using different biases, and thereby obtain more detection information, such as motion tracking with color classification or location tracking in 3D space. To demonstrate color-distinguishable motion tracking, a series of signal matrices including object color and position in the X-Y plane were built under different biases and correlated through NNF. The trajectories (Supplementary Movie 2) of the red, green, and blue light sources are reconstructed with a group of three biases (–0.30 V, –0.35 V, –0.40 V), and finer colors can be resolved if more detailed differential signals are correlated. Similarly, controlling the external bias of the photodetector can also realize location tracking in 3D space with spatial coordinates. A simple trace in a 3D array of 20 × 20 × 3 was designed as a proof of concept. Figure 4g (top panel) shows the original motion trace of an object in 3D space. The trace reconstructed by the differential hemispherical photodetector (bottom panel and Supplementary Movie 3) is consistent with the original trace.
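Adding bias as an extra signal dimension amounts to concatenating the 8-pixel readings taken at each bias into one longer feature vector per position. The sketch below shows only that bookkeeping; the signal values are random placeholders, and the integer color encoding is a hypothetical choice.

```python
import numpy as np

n_positions, n_pixels = 400, 8
bias_group = (-0.30, -0.35, -0.40)    # V, the bias group quoted in the text

rng = np.random.default_rng(0)
# Placeholder readings: one (400, 8) matrix per bias (random numbers, not data).
signals_per_bias = [rng.random((n_positions, n_pixels)) for _ in bias_group]

# Concatenate along the feature axis: one 24-element vector per position.
features = np.hstack(signals_per_bias)

# Targets: (X, Y) coordinates on the 20 x 20 grid plus a color code
# (hypothetical encoding, e.g. 0 = red, 1 = green, 2 = blue).
steps = np.arange(20) * 3.0           # mm, 20 steps of 3 mm
xx, yy = np.meshgrid(steps, steps, indexing="ij")
color_code = np.zeros(n_positions)
targets = np.column_stack([xx.ravel(), yy.ravel(), color_code])

print(features.shape, targets.shape)
```

The same layout extends to 3D tracking by replacing the color column with a height coordinate.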

Discussion

In summary, we demonstrated a differential hemispherical photodetector with a spray-coated perovskite film that realizes the intelligent functions of color imaging, computational spectrometry, and location tracking in 3D space or a 2D plane with a color classification capability. The low noise (~10^−13 A Hz^−0.5), high EQE (~1000%), and hemispherical device architecture enable large differential signals that collect more information of interest. By combining the advantages of differential photodetectors with NNF-based machine learning, the most advanced photodetectors can be further enhanced. The facile design not only saves the space and cost of constructing complex detector arrays, but also pushes detector performance towards intelligence. However, the data acquisition and analysis processes still require substantial computing power, which may delay the results or impair their accuracy. Further model design and algorithm optimization are still needed to improve the maturity of differential detectors. By integrating differential hemispherical detector arrays, most advanced photodetectors can be made more intelligent and further miniaturized for future artificial intelligence applications.

Methods

Films and devices fabrication

Films fabrication: The FAPbI3 perovskite precursor solution was prepared as follows. Formamidine hydroiodide (FAI) 34 mg (0.2 mmol), PbI2 101 mg (0.22 mmol), methylamine hydrochloride (MACl) 4.0 mg (0.06 mmol), L-ascorbic acid (L-AA) 0.9 mg (0.025 mmol), and NGAI 0–18.7 mg (0–0.06 mmol, 0–30%mol relative to Pb2+) were dissolved in 1 mL of a mixed solvent of DMF: 2-Me: ACN = 1: 1: 3 (v/v). The mixture was stirred at room temperature until completely dissolved, giving the precursor solution for spray coating. The substrate was heated to ~100 °C, and a certain volume of solution was added to the spray gun (S-120, 0.2 mm nozzle). The spray speed was 3 μL s^−1. After spraying, the films were placed on a hot plate at 120 °C and annealed for 15 min.

Hemispherical device fabrication: After the hemispherical glass substrate was washed with water, acetone, and isopropyl alcohol (IPA), a chromium (Cr) electrode was deposited on it by vacuum evaporation, with the glass masked by patterned polyimide (PI) tape to split the electrodes or define the effective area (0.1 cm^2). The dried substrate was treated with ultraviolet ozone for 20 min. The hemispherical substrate was fixed on a stainless steel plate and heated to 100 °C. PTAA was prepared as a 0.5 mg mL^−1 toluene solution and sprayed onto the hemispherical substrate. The amount of spray solution was adjusted with reference to 25 µL cm^−2. After spraying, the Cr/PTAA substrate was thermally annealed at 100 °C for 10 min and then transferred to a plasma treatment chamber for air-plasma treatment for 2 min. The resulting film was again fixed on the stainless steel plate and heated to 100 °C. The perovskite precursor solution was sprayed onto the Cr/PTAA substrate, and after each spray deposition, nitrogen (N2) was used to assist the crystallization of the perovskite surface. After spraying, the device was thermally annealed at 130 °C for 15 min. Without any additional post-processing, 25 nm C60, 8 nm BCP, 5 nm Cr and 5 nm Au were then deposited directly on the annealed device by vacuum evaporation.

Fourier single-pixel imaging and color classification

The Fourier single-pixel imaging was realized by the method published by Zhang and Mai et al.9,10 (additional details are presented in the Supplementary Materials). The projector is used to project the pre-designed two-dimensional Fourier transform patterns onto the object. The detector is connected to a Keithley 2400 source meter to measure the light reflected by the object, as shown in Fig. 3a. The signal measured by the source meter for the first m two-dimensional patterns (m = 10000) was input to the computer, and the corresponding image was calculated by the algorithm. The images obtained by Fourier transform of the photocurrent signal of the device under different bias voltages are shown in Supplementary Fig. 9. The images were optimized by background subtraction, noise reduction, and smoothing before the linear weighting operation. The optimized images are shown in Fig. 3b with the labels V1, V2, …, Vn. Here, we define the RGB values of the different color blocks as (1, 0, 0), (0, 1, 0), (0, 0, 1), (1, 1, 0), and (0, 0, 0), and the algorithm is Fine KNN. The sample size is 121 × 5. A certain number of pixels in the image are selected as samples, and classification is carried out with the machine learning app in Matlab. Each pixel in the picture is then classified using the trained model.
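The classification step can be sketched with a small nearest-neighbor classifier operating on 9-element feature vectors (one gray value per reverse bias). The code below is an illustrative NumPy stand-in rather than the Matlab workflow used here: the use of k = 1 to mimic the "Fine KNN" preset is an assumption, and the training data are synthetic clusters with hypothetical values.

```python
import numpy as np


def knn_predict(train_x, train_y, query_x, k=1):
    """Classify each query vector by majority vote among its k nearest training vectors."""
    train_x = np.asarray(train_x, dtype=float)
    query_x = np.asarray(query_x, dtype=float)
    train_y = np.asarray(train_y, dtype=int)
    # Pairwise Euclidean distances, shape (n_query, n_train).
    dists = np.linalg.norm(query_x[:, None, :] - train_x[None, :, :], axis=2)
    nearest = np.argsort(dists, axis=1)[:, :k]
    return np.array([np.bincount(train_y[row]).argmax() for row in nearest])


# Synthetic stand-in for the 121 x 5 training pixels (hypothetical values).
rng = np.random.default_rng(0)
n_classes, n_per_class, n_biases = 5, 121, 9
centers = rng.random((n_classes, n_biases))    # mean gray level per bias and class
noise = 0.02 * rng.normal(size=(n_classes * n_per_class, n_biases))
train_x = np.repeat(centers, n_per_class, axis=0) + noise
train_y = np.repeat(np.arange(n_classes), n_per_class)

predicted = knn_predict(train_x, train_y, train_x, k=1)
print("training accuracy:", np.mean(predicted == train_y))
```

With k = 1 every training sample is its own nearest neighbor, so accuracy on the training set is 100% by construction; classifying held-out pixels, as is done for the full image, is the more informative check.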

The calculation of effective incident flux intensity

The light intensity density (I) was defined by the following function to describe the spherical diffused light (Eq. (1)):

where R is the distance between the object and the point light source, and IO is the initial light intensity. Considering the symmetry of the sphere, the effective light intensity flux distribution (dφ) can be simplified to vertical incidence, as shown in Supplementary Fig. 12. The effective incident flux intensity (dφ) can be calculated by Eq. (2) using the law of cosines.

where l is the distance between the point of the light source and the center of the sphere. r is the radius of the sphere. β1 is the angle between the incident light and the line between the point on the surface of the sphere and the center of the sphere (Supplementary Fig. 12). S is the area of the surface. Similarly, R can be converted into the coordinate parameter (θ), which is the angle between the perpendicular line of the equatorial plane of the sphere and the line between the point on the surface of the sphere and the center of the sphere. The effective incident flux intensity can be calculated with the parameter of θ (Eq. (3)).

If the light source is placed in an arbitrary position, l can be obtained by the horizontal distance (d) and perpendicular distance (h). l can be calculated by Eq. (4).

In a rectangular coordinate system, the point (x, y, z) on the surface of the sphere is limited by Eq. (5).

After the transformation of the coordinates in Supplementary Fig. 12, the distribution of effective incident flux intensity (dφ) depends only on z (Eq. (6)).

In Fig. 4a, we normalized the light intensity flux distribution (dφ) under the condition of h = 20 r, d = 0 r, which is close to the experimental conditions.

The point (x, y, z) at the surface of the plane is limited by Eqs. (7) and (8).

β2 is the angle between the line through the point (x, y, z) at the surface of the plane and the position of the light source (d, 0, h) and the line through the point (x, y, z) at the surface of the plane and the foot point of the light source (d, 0, 0) (Supplementary Fig. 12). The effective incident flux intensity (dφ) can be calculated in Eq. (9).

where R is the distance between the point (x, y, z) and the point light source, and can be calculated by Eq. (10).

$$R = \sqrt{(x - d)^{2} + y^{2} + (z - h)^{2}} \quad (10)$$
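For comparison with the sphere, the planar case can be sketched in the same way. This is a minimal illustration, assuming that the plane is z = 0, that the source follows an inverse-square law, and that the normal component of the light is given by sin β2 = h/R (β2 being measured from the plane); the function name `plane_flux` and the grid size are hypothetical.

```python
import numpy as np

def plane_flux(x, y, d, h, i0=1.0):
    """Flux per unit area on the plane z = 0 from a point source at (d, 0, h)."""
    dist = np.sqrt((x - d) ** 2 + y ** 2 + h ** 2)   # R for a point (x, y, 0)
    sin_beta2 = h / dist          # beta2 is the elevation angle of the source
    return i0 / (4 * np.pi * dist ** 2) * sin_beta2  # assumed inverse-square law

r = 1.0
xs = np.linspace(-r, r, 41)
gx, gy = np.meshgrid(xs, xs)
flux = plane_flux(gx, gy, d=0.0, h=20 * r)
uniformity = flux.min() / flux.max()
print("min/max flux over the plane:", uniformity)
```

With the source far above the detector, the flux over a flat detector varies only slightly from point to point under these assumptions, in contrast to the strong variation over a hemisphere.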

Artificial intelligence-assisted location

An LED (520 nm, 3 W) is fixed on an X-Y two-dimensional (2D) displacement platform, and the platform is moved by the computer within an area of 6 cm × 6 cm on the plane with a step length of 0.3 cm. The hemispherical array detector is connected in parallel to an NI9205 acquisition card. The operating voltage of the photodetector is –0.3 V. The signal of each pixel is recorded by the computer with the LED placed at each position. The data matrix is built from the response of each pixel to the light signal at the different positions (Fig. 4d, e). The data matrix is learned by Neural Net Fitting (NNF) in Matlab. The input is a 400 × 8 matrix (400 samples of 8 elements), where the 8 elements correspond to the signals collected by the 8 pixels. The output is a 400 × 2 matrix (400 samples of 2 elements), where the 2 elements correspond to the coordinates (X, Y) of the light source. The hidden layer contains 10 neurons, and the output layer contains 2 neurons. The fitting method is Bayesian Regularization. After training, the X-Y 2D displacement platform moves the LED along a prescribed route, and the signal of each detector is read by the acquisition card. The detector signals are fed into the NNF-fitted model for position prediction, and the corresponding coordinates are output.

To realize color classification, LEDs of 3 colors (450 nm, 520 nm, 660 nm, 3 W) are used, and the constant reverse bias is replaced by a bias group (–0.3 V, –0.35 V, –0.40 V). Thus, the data input is a 400 × (8 × 3) matrix. The output is a 400 × 3 matrix, where 2 elements correspond to the coordinates (X, Y) of the light source and 1 element corresponds to the color of the LED.

To realize spatial orientation, the LED is placed at 4 heights (9.5 cm, 9.8 cm, 10.1 cm, 10.4 cm), and the constant reverse bias is replaced by a bias group (–0.3 V, –0.35 V). Thus, the data input is a 400 × (8 × 2) matrix. The output is a 400 × 3 matrix, where the 3 elements correspond to the coordinates (X, Y, Z) of the light source.
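The 2D location step (8 pixel signals in, 10 hidden neurons, 2 coordinates out) can be sketched outside Matlab as follows. This is only an illustration under stated assumptions: the pixel signals are synthetic, generated from a hypothetical geometry (8 pixels on a 1 cm hemisphere, LED plane 10 cm above it, inverse-square law with cosine incidence), and the network is trained by plain gradient descent with L2 weight decay, which is a simple stand-in for, not an implementation of, the Bayesian Regularization fitting used in Neural Net Fitting.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical pixel layout: 8 unit vectors at 45 degrees from the axis.
az = np.arange(8) * np.pi / 4
pix = np.stack([np.sin(np.pi / 4) * np.cos(az),
                np.sin(np.pi / 4) * np.sin(az),
                np.full(8, np.cos(np.pi / 4))], axis=1)      # (8, 3)

# Assumed 20 x 20 grid of LED positions with a 0.3 cm step (400 samples).
steps = (np.arange(20) - 9.5) * 0.3
gx, gy = np.meshgrid(steps, steps)
xy = np.stack([gx.ravel(), gy.ravel()], axis=1)              # (400, 2)
led = np.column_stack([xy, np.full(len(xy), 10.0)])          # (400, 3)

# Synthetic signals: cosine of incidence divided by squared distance.
vec = led[:, None, :] - pix[None, :, :]                      # (400, 8, 3)
dist = np.linalg.norm(vec, axis=2)
cos_inc = np.clip(np.sum(vec * pix[None, :, :], axis=2) / dist, 0.0, None)
signals = cos_inc / dist**2                                  # (400, 8)

# Standardize inputs and targets before fitting.
X = (signals - signals.mean(0)) / signals.std(0)
Y = (xy - xy.mean(0)) / xy.std(0)

# 8 -> 10 (tanh) -> 2 network, as in the layer sizes given in the text.
W1 = rng.normal(0.0, 0.5, (8, 10)); b1 = np.zeros(10)
W2 = rng.normal(0.0, 0.5, (10, 2)); b2 = np.zeros(2)

def forward(inputs):
    hidden = np.tanh(inputs @ W1 + b1)
    return hidden, hidden @ W2 + b2

initial_mse = np.mean((forward(X)[1] - Y) ** 2)

lr, decay = 0.02, 1e-4            # step size and L2 weight decay (assumed)
for _ in range(3000):
    H, P = forward(X)
    err = (P - Y) / len(X)                    # gradient of 0.5 * mean sq. error
    dH = (err @ W2.T) * (1.0 - H**2)          # backpropagate through tanh
    W2 -= lr * (H.T @ err + decay * W2); b2 -= lr * err.sum(0)
    W1 -= lr * (X.T @ dH + decay * W1); b1 -= lr * dH.sum(0)

final_mse = np.mean((forward(X)[1] - Y) ** 2)
pred_cm = forward(X)[1] * xy.std(0) + xy.mean(0)   # back to centimetres
print("standardized MSE before/after:", initial_mse, final_mse)
print("RMS position error (cm):", np.sqrt(np.mean((pred_cm - xy) ** 2)))
```

For color classification or spatial orientation, the same structure would take the signals recorded at each bias side by side as a wider input (8 × 3 or 8 × 2 columns) and use 3 output neurons instead of 2.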

Data availability

The data that support the plots within this paper are available from the corresponding author upon request. The data generated in this study are provided in the Supplementary Information/Source Data file. The X-ray crystallographic coordinates for the structures reported in this study have been deposited at the Cambridge Crystallographic Data Centre (CCDC) under deposition number 2313935. These data can be obtained free of charge from the Cambridge Crystallographic Data Centre via www.ccdc.cam.ac.uk/data_request/cif. Source data are provided with this paper.

Code availability

The source code related to the findings presented in this manuscript is available on GitHub: https://github.com/fengwind1/NCOMMS-23-43328.git.

References

Long, Z. et al. A neuromorphic bionic eye with filter-free color vision using hemispherical perovskite nanowire array retina. Nat. Commun. 14, 1972 (2023).

Wang, T. et al. Image sensing with multilayer nonlinear optical neural networks. Nat. Photonics 17, 408–415 (2023).

Mennel, L. et al. Ultrafast machine vision with 2D material neural network image sensors. Nature 579, 62–66 (2020).

Guo, F. et al. A nanocomposite ultraviolet photodetector based on interfacial trap-controlled charge injection. Nat. Nanotechnol. 7, 798–802 (2012).

Gao, W., Xu, Z., Han, X. & Pan, C. Recent advances in curved image sensor arrays for bioinspired vision system. Nano Today 42, 101366 (2022).

Ji, Z., Liu, Y., Zhao, C., Wang, Z. L. & Mai, W. Perovskite wide-angle field-of-view camera. Adv. Mater. 34, 2206957 (2022).

Kim, M. et al. An aquatic-vision-inspired camera based on a monocentric lens and a silicon nanorod photodiode array. Nat. Electron. 3, 546–553 (2020).

Gu, L. et al. A biomimetic eye with a hemispherical perovskite nanowire array retina. Nature 581, 278–282 (2020).

Ji, Z. et al. Achieving 256 × 256-pixel color images by perovskite-based photodetectors coupled with algorithms. Adv. Funct. Mater. 31, 2104320 (2021).

Zhang, Z., Ma, X. & Zhong, J. Single-pixel imaging by means of Fourier spectrum acquisition. Nat. Commun. 6, 6225 (2015).

Cao, F. et al. Bionic detectors based on low-bandgap inorganic perovskite for selective NIR-I photon detection and imaging. Adv. Mater. 32, e1905362 (2020).

Guo, L. et al. A single-dot perovskite spectrometer. Adv. Mater. 34, e2200221 (2022).

Tsai, W. L. et al. Band tunable microcavity perovskite artificial human photoreceptors. Adv. Mater. 31, e1900231 (2019).

Wang, J. et al. Self-driven perovskite narrowband photodetectors with tunable spectral responses. Adv. Mater. 33, e2005557 (2021).

Fu, C. et al. A simple-structured perovskite wavelength sensor for full-color imaging application. Nano Lett. 23, 533–540 (2023).

Yang, Z., Albrow-Owen, T., Cai, W. & Hasan, T. Miniaturization of optical spectrometers. Science 371, eabe0722 (2021).

Yoon, H. H. et al. Miniaturized spectrometers with a tunable van der Waals junction. Science 378, 296–299 (2022).

Lee, E. K. et al. Fractal web design of a hemispherical photodetector array with organic-dye-sensitized graphene hybrid composites. Adv. Mater. 32, e2004456 (2020).

Hu, Z. Y. et al. Miniature optoelectronic compound eye camera. Nat. Commun. 13, 5634 (2022).

Bao, C. et al. High performance and stable all-inorganic metal halide perovskite-based photodetectors for optical communication applications. Adv. Mater. 30, 1803422 (2018).

Kong, L. et al. Single-detector spectrometer using a superconducting nanowire. Nano Lett. 21, 9625–9632 (2021).

Meng, J., Cadusch, J. J. & Crozier, K. B. Detector-only spectrometer based on structurally colored silicon nanowires and a reconstruction algorithm. Nano Lett. 20, 320–328 (2020).

Bao, J. & Bawendi, M. G. A colloidal quantum dot spectrometer. Nature 523, 67–70 (2015).

Yang, Z. et al. Single-nanowire spectrometers. Science 365, 1017–1020 (2019).

Wang, X.-L. et al. Spectrum reconstruction with filter-free photodetectors based on graded-band-gap perovskite quantum dot heterojunctions. ACS Appl. Mater. Interfaces 14, 14455–14465 (2022).

Feng, X. et al. Spray-coated perovskite hemispherical photodetector featuring narrow-band and wide-angle imaging. Nat. Commun. 13, 6106 (2022).

Lin, Z., Yang, Z., Wang, J. & Yang, S. De novo studies of working mechanisms for self-driven narrowband perovskite photodetectors. ACS Appl. Mater. Interfaces 15, 14557–14565 (2023).

Lin, Q., Armin, A., Burn, P. L. & Meredith, P. Filterless narrowband visible photodetectors. Nat. Photonics 9, 687–694 (2015).

Fang, Y., Dong, Q., Shao, Y., Yuan, Y. & Huang, J. Highly narrowband perovskite single-crystal photodetectors enabled by surface-charge recombination. Nat. Photonics 9, 679–686 (2015).

Siegmund, B. et al. Organic narrowband near-infrared photodetectors based on intermolecular charge-transfer absorption. Nat. Commun. 8, 15421 (2017).

Chen, Z. et al. Solvent-free autocatalytic supramolecular polymerization. Nat. Mater. 21, 253–261 (2022).

Seo, G., Kim, T., Shen, B., Kim, J. & Kim, Y. Transformation of supramolecular membranes to vesicles driven by spontaneous gradual deprotonation on membrane surfaces. J. Am. Chem. Soc. 144, 17341–17345 (2022).

Liao, R., Wang, F., Guo, Y., Han, Y. & Wang, F. Chirality-controlled supramolecular donor-acceptor copolymerization with distinct energy transfer efficiency. J. Am. Chem. Soc. 144, 9775–9784 (2022).

Shen, B., Park, I.-S., Kim, Y., Wang, H. & Lee, M. Induction of 2D grid structure from amphiphilic pyrene assembly by charge transfer interaction. Giant 5, 100045 (2021).

Jiang, Y. et al. Synthesis-on-substrate of quantum dot solids. Nature 612, 679–684 (2022).

Li, F. et al. Hydrogen-bond-bridged intermediate for perovskite solar cells with enhanced efficiency and stability. Nat. Photonics 17, 478–484 (2023).

Zhang, B. et al. A multifunctional polymer as an interfacial layer for efficient and stable perovskite solar cells. Angew. Chem. Int. Ed. 62, e202213478 (2023).

Tan, M. et al. Carbonized polymer dots enhanced stability and flexibility of quasi-2D perovskite photodetector. Light Sci. Appl. 11, 304 (2022).

Qu, W. et al. Low-temperature crystallized and flexible 1d/3d perovskite heterostructure with robust flexibility and high EQE-bandwidth product. Adv. Funct. Mater. 33, 2213955 (2023).

Shen, L., Fang, Y., Wei, H., Yuan, Y. & Huang, J. A highly sensitive narrowband nanocomposite photodetector with gain. Adv. Mater. 28, 2043–2048 (2016).

Fang, Y., Guo, F., Xiao, Z. & Huang, J. Large gain, low noise nanocomposite ultraviolet photodetectors with a linear dynamic range of 120 dB. Adv. Opt. Mater. 2, 348–353 (2014).

Ma, C. et al. Unveiling facet-dependent degradation and facet engineering for stable perovskite solar cells. Science 379, 173–178 (2023).

Acknowledgements

This work is financially supported by the National Natural Science Foundation of China (No. 22105083 and 52173166) and the Fundamental Research Funds for the Central Universities, JLU and JLUSTIRT (2017TD-06).

Author information

Contributions

H.W. conceived and supervised the project. X.F. fabricated the photodetectors and characterized the performance of the devices. C.L. helped with the photodetector fabrication and verified the device performance. J.S. helped with the SEM measurement. Y.H. assisted in building the read-out system of the position orientation system. W.Q. assisted with the single-pixel imaging. W.L. contributed to the X-ray single-crystal measurement. K.G. analyzed the single-crystal data. L.L. performed the TEM measurement. B.Y. commented on the results and provided constructive suggestions. All authors analyzed the data. H.W. and X.F. wrote the manuscript, and all authors commented on and reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks Kai Wang, Hoon Hahn Yoon and the other anonymous reviewer(s) for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Feng, X., Li, C., Song, J. et al. Differential perovskite hemispherical photodetector for intelligent imaging and location tracking. Nat Commun 15, 577 (2024). https://doi.org/10.1038/s41467-024-44857-4

DOI: https://doi.org/10.1038/s41467-024-44857-4
