Abstract

Computer simulations can play a central role in the understanding of phase-change materials and the development of advanced memory technologies. However, direct quantum-mechanical simulations are limited to simplified models containing a few hundred or thousand atoms. Here we report a machine-learning-based potential model that is trained using quantum-mechanical data and can be used to simulate a range of germanium–antimony–tellurium compositions—typical phase-change materials—under realistic device conditions. The speed of our model enables atomistic simulations of multiple thermal cycles and delicate operations for neuro-inspired computing, specifically cumulative SET and iterative RESET. A device-scale (40 × 20 × 20 nm3) model containing over half a million atoms shows that our machine-learning approach can directly describe technologically relevant processes in memory devices based on phase-change materials.

Similar content being viewed by others

Main

Random-access memory based on phase-change materials (PCMs) is a leading candidate for the development of non-volatile memory and neuro-inspired computing technologies1,2,3,4. PCMs can be rapidly and reversibly switched between two solid-state phases via crystallization (SET) and amorphization (RESET). Information is digitally encoded by a large physical property contrast between the amorphous and crystalline phases, such as electrical conductivity and optical reflectivity. A highly used set of PCMs are located on the quasi-binary GeTe–Sb2Te3 (GST) line of chemical compositions. For example, Ge2Sb2Te5 and Ge8Sb2Te11 are used in rewriteable optical discs1, Ge1Sb2Te4 and Ge2-Sb2Te5 are used for cross-point random-access memory products5, and Sb2Te3-based PCMs with suitable dopants or nano-confinement layers are promising candidates for universal memory6,7 and neuro-inspired computing devices8. Ge2Sb2Te5 can also be integrated with waveguides to achieve various on-chip non-volatile photonic applications, including memory, computing, colour rendering and nanopixel displays9.

Computer simulations based on density functional theory (DFT) and DFT-driven ab initio molecular dynamics (AIMD) have provided key insights into the structural features10,11,12, bonding contrast13,14 and crystallization kinetics of GST15,16,17,18. However, the computational cost of AIMD prevents the simulations of systems beyond a thousand atoms and processes taking more than a few nanoseconds18. At the same time, the structural and chemical complexity in PCMs requires quantum-mechanically accurate methods rather than empirically parameterized force-field models. These requirements have made it challenging to simulate more realistic scenarios encountered in devices: temperature gradients induced by Joule heating by the programming pulses, the expansion and shrinking of active volume during switching, the evolution of local chemical composition and model system sizes of tens of nanometres, which are required to account for real-world device geometries.

Machine learning (ML)-based interatomic potential models are an emerging approach that aim to combine the efficiency of empirical potentials and the accuracy of DFT19. Using a reference database of small-scale DFT computations, the potential energy surface becomes the regression target for an ML model for fast and accurate simulations. In 2012, an artificial neural network ML potential model for GeTe was developed20, which has since been used to study crystallization21, ageing22 and thermal transport23. An ML potential fitted using the Gaussian approximation potential (GAP) framework was subsequently applied to the ternary PCM Ge2Sb2Te5 (refs. 24,25) and used to assess the structural properties and mid-gap electronic states of the amorphous ‘zero bit’26,27. Neural network potentials for elemental antimony (monatomic memory) and Ge2Sb2Te5 have also been reported28,29,30. However, so far, these models have been designed and verified for specific compositions; although the extrapolation of the GAP framework to binary Sb2Te3 has been explored31, a comprehensive model for the entire GST quasi-binary line remains unavailable.

In this Article, we show that ML-driven modelling can enable fully atomistic device-scale simulations of phase changes along the GST compositional line under realistic device geometries and conditions. ML is used to fit interatomic potentials using the GAP framework for different GST phases and compositions, which are iteratively updated to create the final reference database. The simulations of cumulative SET and iterative RESET processes under conditions relevant for practical operation, such as non-isothermal heating, reveal the atomistic processes and mechanisms in PCMs on the ten-nanometre length scale. The speed and accuracy gain of this approach allows the simulation of a cross-point memory device in a model containing more than 500,000 atoms.

ML-driven modelling of Ge–Sb–Te PCMs

The quality of any ML model is dependent on the quality of its input data, and the performance of an ML potential largely depends on the quality of the reference database to which it is fitted32. Here we introduce a dataset of representative structural models (data locations) and DFT-computed energies and forces (data labels) as a platform for fitting ML potentials for GST PCMs. Starting with an initial set including liquid and amorphous configurations from AIMD, we iteratively explored the structural space with evolving ML potentials and progressively trained the models to represent more complex scenarios (Fig. 1a). Iterative training allows for efficient reference data generation32, because no further AIMD is required beyond the initial set of data (which we call iter-0). We started by performing GAP-driven melt–quench simulations, of the type that are common in the field (iter-1), and then followed with more specialized simulations that reflected the particular requirements of the domain of application (iter-2). The latter simulations allow the model to ‘learn’ microscopic information most relevant to GST, that is, about melting and crystallization processes in which phases with different degrees of structural order coexist. The iter-2 part is central, because it gradually explores and includes the domain knowledge that is relevant to how the PCM functions and evolves in a real-world action.

a, Reference data generation for increasingly comprehensive GAP ML models. The initial reference database (iter-0) contains crystalline structures, including Materials Project (MP) entries50, and AIMD snapshots of liquid and amorphous phases. A two-step iterative training process is then carried out, with standard iterations (iter-1) and domain-specific iterations (iter-2) gradually expanding the database. b, Two-dimensional structural map representing the reference database, generated using the SOAP similarity matrix (Methods). The representative structures are shown, and discussed in the text according to labels (1)–(5) indicated here.

We illustrate the composition of the reference database in Fig. 1b. In this two-dimensional structural map, the distance between any two points indicates the smooth overlap of atomic positions (SOAP) kernel similarity33 of the corresponding configurations. The areas labelled (1)–(3) represent crystalline structures included from the start (Fig. 1b, grey). The first two comprise ordered and disordered GST, respectively, for which the key structural motif is an (often defective) octahedral environment. These points are distinct from area (3), representing diamond-type Ge. Area (4) covers liquid and amorphous GST configurations, mostly added during early iterations (Fig. 1b, yellow). Area (5) reflects phase-transition snapshots, added during the domain-specific series (Fig. 1b, red); accordingly, the points are located between the fully ordered and fully disordered regions of the map.

Once the full database was available, a final ML model was fitted, which we refer to as GST-GAP-22. Details of the model construction and validation are given in Supplementary Notes 1–3. The numerical performance of this potential was assessed by cross-validation on the database itself and by testing on out-of-sample configurations separately generated via AIMD. The error analysis suggests that the model has ‘learned’ its quantum-mechanical reference data largely to within the regularization (the imposed uncertainty during the fit; Supplementary Note 2). We fitted GST-GAP-22 to the reference data obtained with the PBEsol exchange–correlation functional, but also show that a version based on a different functional can be generated by recomputing the DFT data labels (Supplementary Note 4).

The ML model accurately predicts the structural properties of GST materials. For crystalline phases, GAP-optimized lattice parameters are nearly identical with the DFT data (Supplementary Table 3). For disordered structures, Extended Data Figs. 1 and 2 show that the radial, angular and ring-size distributions of liquid and amorphous GST phases are faithfully described. Note that the composition Ge4Sb2Te7 (formally ‘4 GeTe + 1 Sb2Te3’) was not explicitly included during training; nonetheless, key structural features are well described. All the amorphous models contain a non-negligible fraction of homopolar bonds (absent in the respective crystals), for example, Ge–Ge, typically formed during rapid quenching. The fraction of homopolar bonds is well reproduced across the series of compounds studied (Extended Data Fig. 1c), as well as the energy dependence on the homopolar bond count in amorphous GeTe, which has been linked to ageing and resistance drift (Supplementary Fig. 5)34. The onset of a Peierls distortion is a more subtle structural effect in PCMs, which affects the size of the bandgap in both crystalline and amorphous GST34, and is therefore directly relevant to applications in electronic memories. The degree of Peierls distortion can be assessed by comparing the short-to-long bond ratio in close-to-linear environments, which we evaluate using a three-body correlation function. Extended Data Fig. 1d,e shows that our ML potential captures this effect very well: the distortion ‘fingerprints’ for all the four compositions studied are similar between GAP-MD (red) and the AIMD reference (blue).

We also performed GAP-MD simulations with different stoichiometric compositions, off the GST quasi-binary line, to account for the p-type semiconducting behaviour observed in experiments35. Specially, we considered Ge2Sb1.8Te5 (cation deficient, p type) and Ge2.2Sb2Te5 (cation rich, n type) following previous AIMD work36. Structural indicators still yield close similarity between GAP-MD and AIMD (Supplementary Fig. 4). Although the above compositions are slightly away from the training domain, their local bonding patterns were ‘learned’ through sufficient sampling over the structural and chemical space. All the above analyses demonstrate the compositionally transferable and defect-tolerant nature of the current ML model, enabling efficient MD simulations of amorphous GST with local compositional fluctuations, which are expected to spontaneously occur on repeated programming operations in memory devices.

Single and cumulative switching

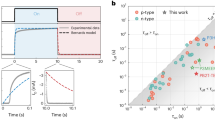

Having validated the ML modelling approach, increasingly challenging use cases leading up to device operation were modelled, beginning with the crystallization (SET) process and its atomistic modelling. Figure 2a shows a state-of-the-art AIMD crystallization simulation of Ge1Sb2Te4 over 600 ps at 600 K, with colour coding for the per-atom similarity \(\bar{k}\) (ref. 18). The model contains 1,008 atoms (3.0 × 2.9 × 4.2 nm3), with two fixed crystal-like layers representing the crystal–amorphous boundary encountered in devices (Fig. 2a). Our GAP-MD simulation successfully reproduces the crystallization process (Fig. 2b), and the resulting structure similarly contains a random distribution of vacancies and a number of antisite defects. This particular type of GAP-MD run can be completed within days on a high-performance computing system, whereas the corresponding AIMD simulation18 required half a year of real time and shows much less favourable scaling behaviour.

a, Snapshots from a 1,008-atom constant-temperature AIMD crystallization trajectory for Ge1Sb2Te4 in the [111] direction, taken from ref. 18 and visualized in the same style. Colour coding indicates the SOAP-based \(\bar{k}\) crystallinity18, illustrating the gradual transition from amorphous (red) to crystalline (yellow), and the occurrence of antisite defects (black). b, The same crystallization simulation carried out using GAP-MD. c, GAP-driven simulations of cumulative SET processes. After each RESET (amorphizing) pulse above the melting point of Ge1Sb2Te4, a series of small SET (crystallizing) pulses were applied, with the same simulation setup as that shown in b. In this proof of concept, three different kinds of pulse with different amplitudes and durations were considered: 650 K pulses of 100 ps duration, 600 K pulses of 100 ps duration and 600 K pulses of 50 ps duration. Each data point corresponds to a configuration after an individual pulse, and the lines are guides to the eye; the starting point of the plot (corresponding to the time at 0 ns) refers to the fully recrystallized configuration from the simulation shown in b. d, Crystallization of Ge1Sb2Te4 with multiple nuclei, leading to the formation of a polycrystalline structure over 2 ns.

In addition to SET and RESET, two further operations—cumulative SET and iterative RESET—are used for neuro-inspired computing4. In these cases, the programming pulse can be divided into a train of pulses with varied amplitude and duration37, and the change in electrical resistance38 or optical transmission39 as a function of the number of pulses can be used to mimic synaptic learning rules. Using the GAP ML model, we carried out cumulative SET simulations (Fig. 2c and Supplementary Video 1). Differences in the initial configuration, pulse width and maximum temperature affect the progressive structural changes, and the evolution of structural order determines the electrical and optical properties of the intermediate states. These simulations represent the situation inside one grain of rock-salt-like GST (with the grain size varying from several nanometres to several tens of nanometres)40,41. Taking into account the randomness in the nucleation of GST, cumulative SET operations can emulate integrate-and-fire dynamics for the construction of stochastic phase-change neurons42.

The simulations in Fig. 2a,b are at the system-size limit of DFT. Therefore, they describe crystal growth in a necessarily simplified way, along one selected direction only (here the [111] direction). ML potentials make it possible to surpass this limitation19: in Fig. 2d, we demonstrate this for a larger-scale crystallization simulation to obtain a polycrystalline state, which is typically formed in nanoscale devices. Three crystalline seeds (of about 100 atoms each) were embedded in this model, bypassing the stochastic nucleation process (Supplementary Fig. 7). The extracted growth speed averaged over three grains is ~0.7 m s–1 at 600 K, consistent with ultrafast differential scanning calorimetry experiments43. The large-scale GAP-MD simulations point towards a way to conceptually connect the previously shown small-scale models and the following real-device-size simulations.

Non-isothermal heating

Different types of scenario in real devices require different modelling strategies (Fig. 3 and Extended Data Fig. 3). The SET process in encapsulated electronic cells is commonly described in isothermal simulations (NVT ensemble; Fig. 2). In contrast, simulating the iterative RESET process requires the use of large-scale constant-energy simulations (NVE ensemble). The widely used mushroom-type electronic device was used to model such an operation (Fig. 3a). We constructed a 33 × 5 × 20 supercell expansion of a rock-salt-like Ge1Sb2Te4 model (23,100 atoms), and applied energy pulses to an area described by a half-cylinder shape at the bottom of the x–z plane (Fig. 3b). This setup represents the middle slab of mushroom PCM cells, with a cross-section of around 20 × 12 nm2. The top four atomic layers were fixed to prevent unwanted thermal transport to the bottom area through the periodic boundary conditions: in a real device, there would be a thermal barrier in contact with the GST material. We emphasize that in state-of-the-art mushroom-type devices41, the diameter of the heater can be scaled down to as small as ~3 nm. Therefore, the expected effective heating area is directly mirrored by our simulation setup (Fig. 3).

a, Schematic of the device layout. TEC, top electrode contact; BEC, bottom electrode contact. b, Snapshot from a non-isothermal (NVE) simulation in which an additional energy of 1,650 eV in total was added to the atoms as kinetic energy inside the focal area over the first 30 ps. A snapshot of the NVE simulation at 100 ps is shown, with the initial focal area indicated by the dashed lines. c, The corresponding two-dimensional temperature profile. Data were smoothed using a Gaussian filter with a broadness of 1.0 nm. d,e, Similar data as b (d) and c (e), but for an initial kinetic energy of 3,900 eV.

After a short NVT ensemble simulation at 300 K, the ensemble was switched to NVE and additional energies of 55 eV ps–1 were imposed on the atoms inside the focal area as kinetic energy over 30 ps. The NVE simulation was continued for another 70 ps. In total, this 30 ps energy pulse added 1,650 eV (0.264 fJ) of thermal energy to the model, resulting in a disordered area with a base length of ~7 nm (Fig. 3b). Then, we computed the local temperature profile based on the velocity of atoms collected on a two-dimensional grid. Figure 3c shows a clear radial temperature gradient from the heating centre towards the outer shell. In parallel, we performed another NVE simulation with an energy pulse of 3,900 eV (0.625 fJ) imposed on a larger focal area over 30 ps (Fig. 3d). The stronger pulse generated a larger disordered area with a base length of ~14 nm, creating a more extended gradient profile with a higher average temperature (Fig. 3e). This ML-driven MD simulation protocol closely resembles the iterative RESET operation in devices, providing real-time atomic-scale information beyond what has been revealed in experimental observations as well as finite element method simulations2. The evolution of the temperature gradient can be traced not only with sub-nanoscale spatial resolution but also with an ultrafine time resolution at the femtosecond level (Supplementary Video 2 and Supplementary Fig. 9).

Full device-scale simulations

Next, the capability for a structural model of real device size, corresponding to a cross-point product currently used in electronic memories5, is demonstrated. Figure 4a shows the schematic of the crossbar array, which consists of a PCM memory layer (composition close to Ge1Sb2Te4), an ovonic threshold switching (OTS) selector layer and several buffer layers between PCM, OTS and electrodes. The rest of the volume is filled with dielectric materials5. When programming, the electric current flows through the OTS layer after reaching a threshold voltage, and induces Joule heating to trigger the phase transition of the PCM. The size of the PCM region was reported as ~20 × 20 × 40 nm3 (ref. 5). The initial state is taken to be the layered trigonal phase due to unavoidable thermal annealing during the back-end-of-line stage for PCM integration2. We constructed a Ge1Sb2Te4 supercell of the same size, containing more than half a million atoms in a layered configuration (30 septuple-layer blocks, with each block arranged in the stacking sequence Te–Sb–Te–Ge–Te–Sb–Te), and melted the model using a short (10 ps) but very high energy pulse with a high energy gradient. Such a short time window is experimentally accessible in principle: melting of GST can be achieved in 5–10 ps, as evidenced by femtosecond laser experiments44.

a, Schematic of commercial cross-point products, consisting of electrodes, PCM, OTS layer and buffer layers in between5. b, Melting process of a Ge1Sb2Te4 structural model that matches the dimensions of the PCM cell in cross-point memory (20 × 20 × 40 nm3, containing 532,980 atoms). The melting process is triggered by a vertical heating pulse with additional energy (containing 60 fJ in total) from the bottom to the top after the cell is programmed. The colour coding is the same as that shown in Fig. 2, indicating the gradual loss of crystalline order (yellow) on formation of a disordered phase (red) in a much larger simulation cell. c, Heat dissipation during an extended MD simulation. d, Evolution of the amorphous GST (a-GST) structure from the melted state, characterized using radial distribution function (RDF) plots for the central area of the model (central 20 nm). This figure illustrates how atomic-structure analyses that are common for small cells (Extended Data Fig. 1) can now be performed for full device-scale simulations.

Figure 4b illustrates progressive disordering during melting. Layered structures remain early on (evident in the plot at 2 ps). On further heating, chemical disordering proceeded vertically from the bottom to the top, resulting in a gradient of ordering (Fig. 4b and Supplementary Video 3). At 6 ps, the bottom slab still shows some remaining chemical order, but it appears entirely disordered after 10 ps. The top region, especially the structural building blocks close to the heat barrier (near z = 40 nm), is only partially melted after 10 ps. In electronic devices, the applied heat dissipates mostly through electrodes (of very high thermal conductivity), quenching the molten state to form the amorphous phase. Here the added kinetic energy was gradually taken out from each atom over another 40 ps (Fig. 4c), leading to the gradual formation of the amorphous phase (Fig. 4d). Based on ML-driven MD, we can thus obtain the evolution of temperature gradients with sub-nanometre spatial resolution (Fig. 4c), revealing finer atomic details of the heat dissipation process compared with coarse-grained finite element method simulations.

The RESET energy is calculated as 60 fJ, which could be used as the theoretical limit of the minimum power consumption for the initial operation per bit (the most power-consuming step in devices). This is still an approximation because the entire amount of energy was directly assigned to the atoms with no energy dissipation to the surrounding layers. Although the present capability demonstration focuses on the initial ground state, similar calculations and analyses could also be carried out for more complex structures, including realistic models of the rock-salt-like phase with grain boundaries or mixed crystalline/amorphous structures. Moreover, the simulated heating pulse can be adjusted to more realistic and complex programming conditions, considering various pulse amplitudes, durations and shapes, which shall help to guide the optimization of programming schemes for better device performance.

Finally, the inclusion of electric-field effects in simulations is addressed, which is related to electromigration in the liquid state and ultimately to device failure. Since the cumulated transient electric fields on extensive cycling trigger substantial electromigration over more than millions of SET and RESET operations in GST devices, directly reproducing the entire process is not feasible. Nonetheless, as a proof of concept, we can accelerate such electromigration processes using a large electric field of 0.03 eV Å–1, applied from the bottom to the top of the cell, at 1,000 K. The resulting ML-driven MD trajectory (Extended Data Fig. 4) shows a clear migration of cation-like (anion-like) ions to the negative (positive) side. We expect that such a combination of ML potentials with electric-field effects will play a central role in the realistic modelling of PCM devices in the future.

Conclusions

We have reported an ML model that can be used to increase the speed and accuracy of simulations of the PCM GST, enabling fully atomistic simulations of memory devices under realistic device geometry and programming conditions. Since the computational requirements of the ML-driven simulations increase linearly with the model system size45, they can be readily extended to larger and more complex device geometries, and over longer timescales, using increasingly powerful computing resources. In addition to melting and crystal growth simulations, we expect that our ML model will allow for the sampling of nucleation and the atomic-scale observation of the formation of grain boundaries in large models of GST under isothermal conditions or with a temperature gradient. In combination with advanced sampling techniques17, ML-driven simulations could, therefore, allow the determination of nucleation barrier and critical nucleus size for GST.

Future work could also incorporate interface effects on the surrounding electrode and dielectric layers, an issue that is highly relevant to device engineering. For example, it has been reported46 that heat loss can be largely reduced by confining the PCM cell with aluminium oxide walls, but their direct atomic-scale impact on thermal vibrations at the interface and on the phase-transition capacity of PCMs cannot be studied by finite element method simulations alone. Atomistic ML models with extended reference databases could be used to study this effect, as well as help to predict the trend in minimum RESET energy, crystallization time for different device geometries and microscopic failure mechanisms for better architecture design. Our work illustrates the potential role of ML-driven simulations in the design of individual PCM phases, as well as entire devices based on them.

Methods

ML potential fitting

The ML interatomic potentials were fitted using the GAP framework25 and the SOAP structural descriptor33. A review of GAP and related methods is provided elsewhere32; Supplementary Notes 1–4 provide details specific to this work. Based on the iteratively constructed reference database (Fig. 1 and Supplementary Note 1), highly converged DFT data were obtained using CASTEP47 for the energies and forces of the reference structures (that is, database labelling).

Our fitted models provide accurate results for various GST compositions and phases (Supplementary Notes 2 and 3). The numerical accuracy was assessed by a fivefold cross-validation over the reference data and by testing on an external set of AIMD configurations not included in the training. We also performed a comprehensive validation based on physical properties, such as lattice parameters, computed relative energetic stability and structural features of the relevant GST structures.

MD simulations

ML-driven MD simulations were carried out using LAMMPS48 (https://www.lammps.org/) with an interface to the GAP software (which is freely available for academic research at https://github.com/libAtoms/QUIP). Different ensembles were used: canonical (constant volume and temperature; NVT), isobaric–isothermal (constant pressure and temperature; NPT) and microcanonical (constant volume and energy; NVE). The timestep was 2 fs. Supplementary Note 4 provides details of these simulations, as well as of reference AIMD simulations.

Modelling conditions to represent devices

Figure 3a and Extended Data Fig. 3 show three typical operations that need to be represented in simulations. Amorphous PCM films can be crystallized either by isothermal annealing or by Joule heating (programming pulse). In thin-film experiments, amorphous GST alloys crystallize into disordered rock-salt-like phases on substrate heating at ~150 °C, accompanied by a density increase (due to volume shrinkage; Extended Data Fig. 3a). Further annealing (250–400 °C) induces a gradual structural change via continuous vacancy ordering35,49. Such annealing is the initial step for both electronic and photonic PCM devices. The subsequent operation is to melt a fraction of the active volume near the heater by electrical or laser pulses (Fig. 3a), and the subsequent rapid cooling freezes the material to form the amorphous phase. In photonic devices that feature a more open structure, the active volume expands and shrinks frequently; in electronic devices, the PCM is fully encapsulated, and the active volume remains nearly unchanged on programming.

Describing these different scenarios leads to different use cases for ML-driven MD: namely, simulations in the NVT, NPT and NVE ensembles. Given the statistical distribution of vacancies in rock-salt-like GST and the highly disordered nature of liquid and amorphous GST, MD simulations of a few hundred atoms are naturally affected by strong finite-size effects. In parallel simulations of heating small-scale rock-salt-like Ge1Sb2Te4 models (Extended Data Fig. 3c), the SOAP-based crystallinity \(\bar{k}\) (ref. 18) occurs at different onset temperatures for five independent runs with different initial atomic configurations (NVT ensemble), and large fluctuations in mass density are seen when the volume is allowed to change (NPT). In contrast to AIMD, GAP-MD enables efficient simulations for much larger model sizes, improving statistical sampling and therefore the description of systems. In particular, \(\bar{k}\) and mass density profiles nearly overlap for 12 × 12 × 12 supercells (12,096 atoms each) for multiple independent NVT and NPT runs. The same occurs for NVE simulations, yielding more consistent liquid dynamics in larger supercells (Extended Data Fig. 3c). These results suggest finite-size effects become marginal in larger supercells, in line with previous simulations of glassy Ge2Sb2Te5 (ref. 45).

Data availability

Data supporting this work, including the parameter files required to use the potential, fitting data and structural models shown in Figs. 2–4, are available via Zenodo at https://doi.org/10.5281/zenodo.8208202. The XML identifier of the iter-2–3 potential model is GAP_2022_4_7_480_18_6_12_970.

References

Wuttig, M. & Yamada, N. Phase-change materials for rewriteable data storage. Nat. Mater. 6, 824–832 (2007).

Wong, H.-S. P. et al. Phase change memory. Proc. IEEE 98, 2201–2227 (2010).

Zhang, W., Mazzarello, R., Wuttig, M. & Ma, E. Designing crystallization in phase-change materials for universal memory and neuro-inspired computing. Nat. Rev. Mater. 4, 150–168 (2019).

Sebastian, A., Le Gallo, M., Khaddam-Aljameh, R. & Eleftheriou, E. Memory devices and applications for in-memory computing. Nat. Nanotechnol. 15, 529–544 (2020).

Yoon, K. J., Kim, Y. & Hwang, C. S. What will come after V-NAND—vertical resistive switching memory? Adv. Electron. Mater. 5, 1800914 (2019).

Rao, F. et al. Reducing the stochasticity of crystal nucleation to enable subnanosecond memory writing. Science 358, 1423–1427 (2017).

Liu, B. et al. Multi-level phase-change memory with ultralow power consumption and resistance drift. Sci. Bull. 66, 2217–2224 (2021).

Ding, K. et al. Phase-change heterostructure enables ultralow noise and drift for memory operation. Science 366, 210–215 (2019).

Shastri, B. J. et al. Photonics for artificial intelligence and neuromorphic computing. Nat. Photon. 15, 102–114 (2021).

Akola, J. & Jones, R. Structural phase transitions on the nanoscale: the crucial pattern in the phase-change materials Ge2Sb2Te5 and GeTe. Phys. Rev. B 76, 235201 (2007).

Caravati, S., Bernasconi, M., Kühne, T. D., Krack, M. & Parrinello, M. Coexistence of tetrahedral- and octahedral-like sites in amorphous phase change materials. Appl. Phys. Lett. 91, 171906 (2007).

Xu, M., Cheng, Y. Q., Sheng, H. W. & Ma, E. Nature of atomic bonding and atomic structure in the phase-change Ge2Sb2Te5 glass. Phys. Rev. Lett. 103, 195502 (2009).

Huang, B. & Robertson, J. Bonding origin of optical contrast in phase-change memory materials. Phys. Rev. B 81, 081204(R) (2010).

Raty, J.-Y. et al. A quantum-mechanical map for bonding and properties in solids. Adv. Mater. 31, 1806280 (2019).

Lee, T. H. & Elliott, S. R. Ab initio computer simulation of the early stages of crystallization: application to Ge2Sb2Te5 phase-change materials. Phys. Rev. Lett. 107, 145702 (2011).

Kalikka, J., Akola, J. & Jones, R. O. Crystallization processes in the phase change material Ge2Sb2Te5: unbiased density functional/molecular dynamics simulations. Phys. Rev. B 94, 134105 (2016).

Ronneberger, I., Zhang, W., Eshet, H. & Mazzarello, R. Crystallization properties of the Ge2Sb2Te5 phase-change compound from advanced simulations. Adv. Funct. Mater. 25, 6407–6413 (2015).

Xu, Y. et al. Unraveling crystallization mechanisms and electronic structure of phase-change materials by large-scale ab initio simulations. Adv. Mater. 34, 2109139 (2022).

Deringer, V. L., Caro, M. A. & Csányi, G. Machine learning interatomic potentials as emerging tools for materials science. Adv. Mater. 31, 1902765 (2019).

Sosso, G. C., Miceli, G., Caravati, S., Behler, J. & Bernasconi, M. Neural network interatomic potential for the phase change material GeTe. Phys. Rev. B 85, 174103 (2012).

Sosso, G. C. et al. Fast crystallization of the phase change compound GeTe by large-scale molecular dynamics simulations. J. Phys. Chem. Lett. 4, 4241–4246 (2013).

Gabardi, S., Caravati, S., Sosso, G. C., Behler, J. & Bernasconi, M. Microscopic origin of resistance drift in the amorphous state of the phase-change compound GeTe. Phys. Rev. B 92, 054201 (2015).

Sosso, G. C., Donadio, D., Caravati, S., Behler, J. & Bernasconi, M. Thermal transport in phase-change materials from atomistic simulations. Phys. Rev. B 86, 104301 (2012).

Mocanu, F. C. et al. Modeling the phase-change memory material, Ge2Sb2Te5, with a machine-learned interatomic potential. J. Phys. Chem. B 122, 8998–9006 (2018).

Bartók, A. P., Payne, M. C., Kondor, R. & Csányi, G. Gaussian approximation potentials: the accuracy of quantum mechanics, without the electrons. Phys. Rev. Lett. 104, 136403 (2010).

Konstantinou, K., Mocanu, F. C., Lee, T.-H. & Elliott, S. R. Revealing the intrinsic nature of the mid-gap defects in amorphous Ge2Sb2Te5. Nat. Commun. 10, 3065 (2019).

Konstantinou, K., Mocanu, F. C., Akola, J. & Elliott, S. R. Electric-field-induced annihilation of localized gap defect states in amorphous phase-change memory materials. Acta Mater. 223, 117465 (2022).

Dragoni, D., Behler, J. & Bernasconi, M. Mechanism of amorphous phase stabilization in ultrathin films of monoatomic phase change material. Nanoscale 13, 16146–16155 (2021).

Shi, M., Li, J., Tao, M., Zhang, X. & Liu, J. Artificial intelligence model for efficient simulation of monatomic phase change material antimony. Mater. Sci. Semicond. Process. 136, 106146 (2021).

Abou El Kheir, O., Bonati, L., Parrinello, M. & Bernasconi, M. Unraveling the crystallization kinetics of the Ge2Sb2Te5 phase change compound with a machine-learned interatomic potential. Preprint at arXiv https://doi.org/10.48550/arXiv.2304.03109 (2023).

Konstantinou, K., Mavračić, J., Mocanu, F. C. & Elliott, S. R. Simulation of phase-change-memory and thermoelectric materials using machine-learned interatomic potentials: Sb2Te3. Phys. Stat. Sol. B 258, 2000416 (2020).

Deringer, V. L. et al. Gaussian process regression for materials and molecules. Chem. Rev. 121, 10073–10141 (2021).

Bartók, A. P., Kondor, R. & Csányi, G. On representing chemical environments. Phys. Rev. B 87, 184115 (2013).

Raty, J. Y. et al. Aging mechanisms in amorphous phase-change materials. Nat. Commun. 6, 7467 (2015).

Siegrist, T. et al. Disorder-induced localization in crystalline phase-change materials. Nat. Mater. 10, 202–208 (2011).

Caravati, S., Bernasconi, M., Kühne, T. D., Krack, M. & Parrinello, M. First principles study of crystalline and amorphous Ge2Sb2Te5 and the effects of stoichiometric defects. J. Phys. Condens. Matter 21, 255501 (2009).

Skelton, J. M., Loke, D., Lee, T. & Elliott, S. R. Ab initio molecular-dynamics simulation of neuromorphic computing in phase-change memory materials. ACS Appl. Mater. Interfaces 7, 14223–14230 (2015).

Kuzum, D., Jeyasingh, R. G., Lee, B. & Wong, H. S. Nanoelectronic programmable synapses based on phase change materials for brain-inspired computing. Nano Lett. 12, 2179–2186 (2012).

Cheng, Z., Rios, C., Pernice, W. H. P., Wright, C. D. & Bhaskaran, H. On-chip photonic synapse. Sci. Adv. 3, e1700160 (2017).

Matsunaga, T. & Yamada, N. Structural investigation of GeSb2Te4: a high-speed phase-change material. Phys. Rev. B 69, 104111 (2004).

Song, Z. et al. 12-state multi-level cell storage implemented in a 128 Mb phase change memory chip. Nanoscale 13, 10455–10461 (2021).

Tuma, T., Pantazi, A., Le Gallo, M., Sebastian, A. & Eleftheriou, E. Stochastic phase-change neurons. Nat. Nanotechnol. 11, 693–699 (2016).

Orava, J., Greer, A. L., Gholipour, B., Hewak, D. W. & Smith, C. E. Characterization of supercooled liquid Ge2Sb2Te5 and its crystallization by ultrafast-heating calorimetry. Nat. Mater. 11, 279–283 (2012).

Waldecker, L. et al. Time-domain separation of optical properties from structural transitions in resonantly bonded materials. Nat. Mater. 14, 991–995 (2015).

Mocanu, F. C. Konstantinou, K. & Elliott, S. R. Quench-rate and size-dependent behaviour in glassy Ge2Sb2Te5 models simulated with a machine-learned Gaussian approximation potential. J. Phys. D 53, 244002 (2020).

Khan, A. I. et al. Ultralow-switching current density multilevel phase-change memory on a flexible substrate. Science 373, 1243–1247 (2021).

Clark, S. J. et al. First principles methods using CASTEP. Z. Kristallogr. Cryst. Mater. 220, 567–570 (2005).

Thompson, A. P. et al. LAMMPS—a flexible simulation tool for particle-based materials modeling at the atomic, meso, and continuum scales. Comput. Phys. Commun. 271, 108171 (2022).

Zhang, W. et al. Role of vacancies in metal-insulator transitions of crystalline phase-change materials. Nat. Mater. 11, 952–956 (2012).

Jain, A. et al. Commentary: the Materials Project: a materials genome approach to accelerating materials innovation. APL Mater. 1, 011002 (2013).

Lee, D., Lee, K., Yoo, D., Jeong, W. & Han, S. Crystallization of amorphous GeTe simulated by neural network potential addressing medium-range order. Comput. Mater. Sci. 181, 109725 (2020).

Cobelli, M., Galante, M., Gabardi, S., Sanvito, S. & Bernasconi, M. First-principles study of electromigration in the metallic liquid state of GeTe and Sb2Te3 phase-change compounds. J. Phys. Chem. C 124, 9599–9603 (2020).

Acknowledgements

W.Z. acknowledges useful discussions with Z. Song, X. Wang and R. Mazzarello. V.L.D. thanks S. R. Elliott for helpful comments. Y.Z. acknowledges support from a China Scholarship Council–University of Oxford scholarship. E.M. acknowledges support from the National Natural Science Foundation of China (52150710545). W.Z. acknowledges support from the 111 Project 2.0 (BP0618008) and the International Joint Laboratory for Micro/Nano Manufacturing and Measurement Technologies of Xi’an Jiaotong University. E.M. and W.Z. acknowledge XJTU for hosting their work at CAID. We would like to acknowledge the use of the University of Oxford Advanced Research Computing (ARC) facility in carrying out this work (https://doi.org/10.5281/zenodo.22558), as well as computational resources by the HPC platform of Xi’an Jiaotong University, the National Supercomputing Center in Xi’an and Hefei Advanced Computing Center. This work was performed using the resources provided by the Cambridge Service for Data Driven Discovery (CSD3) operated by the University of Cambridge Research Computing Service (https://www.csd3.cam.ac.uk/), provided by Dell EMC and Intel using Tier-2 funding from the Engineering and Physical Sciences Research Council (capital grant EP/T022159/1), and DiRAC funding from the Science and Technology Facilities Council (https://dirac.ac.uk/). We are grateful for the computational support from the UK National High-Performance Computing Service ARCHER2, for which access was obtained via the UKCP consortium and funded by EPSRC grant ref. EP/X035891/1.

Author information

Authors and Affiliations

Contributions

W.Z. and V.L.D. designed and coordinated the research. Y.Z. developed the potential model with guidance from V.L.D., performed the AIMD simulations with guidance from W.Z. and all the other computations and analysis with guidance from all the other authors. Y.Z., W.Z. and V.L.D. wrote the manuscript with input from E.M. All authors contributed to discussions.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Electronics thanks Seungwu Han and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Local structure of disordered GST from ML-driven simulations.

(a) Radial distribution function (RDF) for four different compositions along the GeTe–Sb2Te3 compositional line, from GeTe (cyan) to Sb2Te3 (yellow). The Ge4Sb2Te7 composition was not directly included in training and is highlighted by red labels. (b) Schematic sketch of octahedral and tetrahedral motifs in amorphous GST. (c) Fraction of homopolar bonds (Ge–Ge, Sb–Sb, Ge–Sb, and Te–Te) and tetrahedrally coordinated Ge atoms in simulated amorphous phases. Results from AIMD (GAP-MD) are shown in black (red), respectively. The data points present mean values and the error bars indicate standard deviations over 6,000 snapshots of AIMD simulations (collected from the last 40 ps trajectories of 3 independent melt–quench runs) and over 20,000 snapshots of GAP-MD simulations (collected from the last 40 ps trajectories of 10 independent melt–quench runs), respectively. Lines between points are guides to the eye. (d) Schematic sketch of the formation of a Peierls-like distortion, the degree of which can be quantified by the normalised angular-limited three-body correlation (ALTBC) function. (e) ALTBC functions for four amorphous GST phases as obtained from GAP-MD (red) and AIMD (blue), respectively.

Extended Data Fig. 2 Medium-range order.

This figure provides further insight into the medium-range order of GST compounds as described by GAP and AIMD models. (a) Distribution of primitive rings. The bar charts present the mean values and error bars indicate the standard deviations. (b) Schematic drawing of how the degree of planarity can be characterised using the inter-diagonal distance, d, of four-membered rings51. d values close to 0 indicate overly flat rings. (c) Calculated planarity of various amorphous GST structures from GAP-MD and AIMD, following ref. 51. The solid lines present mean values and the shaded areas indicate standard deviations. The mean values and the standard deviations in (a) and (c) were calculated over 6,000 snapshots of AIMD simulations (collected from the last 40 ps trajectories of 3 independent melt–quench runs) and over 20,000 snapshots of GAP-MD simulations (collected from the last 40 ps trajectories of 10 independent melt–quench runs), respectively.

Extended Data Fig. 3 Device conditions and modelling strategies.

Schematic sketches of (a) thin-film laboratory samples, and (b) photonic devices. Insets indicate different programming conditions in GST. In thin-film experiments, amorphous GST alloys crystallize into dis-ordered rock-salt-like phases upon substrate heating at approximately 150 °C (iso-thermal heating), accompanied by a density increase. In photonic devices, the active volume expands and shrinks frequently, and the heating condition is therefore non-iso-thermal. Hence, constant-pressure simulations (NPT ensemble) are required for these two cases. (c) Evolution of relevant quantities during GAP-MD simulations of Ge1Sb2Te4 with different system sizes, expressed as n × n × n expansions of the cubic rock-salt-type unit cell. From left to right, we show the per-cell averaged crystallinity measure, \(\bar{k}\), during NVT heating, the mass density during NPT heating, and the mean square displacement (MSD) during NVE MD at 1,300 K (with initial configurations taken from the NVT runs). For each model size, five independent simulations were performed, and the results are plotted as semi-transparent lines.

Extended Data Fig. 4 Electromigration and segregation in an electric field.

(a) Simulation combining the GST-GAP-22 ML model and an applied electric field. An elongated structural model of Ge1Sb2Te4 (6 × 6 × 16.7 nm3, containing 18,816 atoms) was isothermally annealed at 1,000 K, that is, in the liquid state corresponding to the beginning of the RESET process in devices. An electric field of 0.03 eV Å–1 was applied in z-direction, from the bottom to the top of the cell, as indicated by an arrow. The atomic charges are taken from ref. 52. One atom of each species is highlighted by large spheres to exemplify the atomic segregation under applied electric field. (b) Distribution of the respective atomic species after 60,000 simulation steps.

Supplementary information

Supplementary Information

Supplementary Notes 1–4, Tables 1–3 and Figs. 1–10.

Supplementary Video 1

GAP-MD simulation of a cumulative SET process in Ge1Sb2Te4.

Supplementary Video 2

Evolution of the temperature gradient obtained from GAP-MD simulations of a mushroom-like setup.

Supplementary Video 3

GAP-MD simulation of a non-isothermal melting process in a device-scale structural model of Ge1Sb2Te4.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhou, Y., Zhang, W., Ma, E. et al. Device-scale atomistic modelling of phase-change memory materials. Nat Electron 6, 746–754 (2023). https://doi.org/10.1038/s41928-023-01030-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1038/s41928-023-01030-x

This article is cited by

-

Unraveling the crystallization kinetics of the Ge2Sb2Te5 phase change compound with a machine-learned interatomic potential

npj Computational Materials (2024)