Abstract

Carbon credits generated through jurisdictional-scale avoided deforestation projects require accurate estimates of deforestation emission baselines, but there are serious challenges to their robustness. We assessed the variability, accuracy, and uncertainty of baselining methods by applying sensitivity and variable importance analysis on a range of typically-used methods and parameters for 2,794 jurisdictions worldwide. The median jurisdiction’s deforestation emission baseline varied by 171% (90% range: 87%-440%) of its mean, with a median forecast error of 0.778 times (90% range: 0.548-3.56) the actual deforestation rate. Moreover, variable importance analysis emphasised the strong influence of the deforestation projection approach. For the median jurisdiction, 68.0% of possible methods (90% range: 61.1%-85.6%) exceeded 15% uncertainty. Tropical and polar biomes exhibited larger uncertainties in carbon estimations. The use of sensitivity analyses, multi-model, and multi-source ensemble approaches could reduce variabilities and biases. These findings provide a roadmap for improving baseline estimations to enhance carbon market integrity and trust.

Similar content being viewed by others

Introduction

Forest loss accounts for up to one-fifth of anthropogenic carbon emissions to the atmosphere, making it the second largest anthropogenic emissions source after fossil fuel combustion1,2. Forest loss also results in the reduction of habitats, biodiversity, and ecosystem services provided by forests such as pollination and climate regulation3,4,5, while posing a threat to the livelihoods and cultures of forest-dwelling communities6,7. With forest loss projected to continue at a rate of 5.9 Mha y−1 without additional action8, avoiding deforestation is one of the most crucial actions for climate mitigation and sustainable development.

The necessity of mobilising financial support for actions to reduce deforestation is well-recognised, but global efforts at the scale needed have repeatedly stumbled. Initially, policy concerns and technical challenges prevented the inclusion of avoided deforestation projects in the 1997 Kyoto Protocol’s Clean Development Mechanism. Efforts to make progress on these challenges culminated in the United Nations Framework Convention for Climate Change’s (UNFCCC) Reducing Emissions from Deforestation and forest Degradation (REDD + ) programme, and avoided deforestation projects were officially adopted in 2013. Although the REDD+ framework supports both site-based and jurisdictional approaches which apply across a national or subnational administrative unit, site-based approaches have been predominant, operating primarily through voluntary carbon markets9. In recent years, voluntary carbon markets have expanded rapidly to reach over $2 billion by 2021, with REDD+ projects being the largest by traded volume at $863 million10. However, concerns regarding the credibility of avoided deforestation carbon credits have once again risen to the forefront11, as contrasting evidence on their effectiveness has cast doubt on the methods used to estimate deforestation emission baselines, which define the business-as-usual scenario upon which the emission reductions and subsequent carbon credits are calculated12,13. This has posed challenges for carbon market standards, which include the Verified Carbon Standard (VCS), Forest Carbon Partnership Facility (FCPF), and the Architecture for REDD+ Transactions: The REDD+ Environmental Excellence Standard (ART TREES), among others. To maintain credibility, carbon market standards are typically designed to be conservative, through mechanisms such as selecting methodologies with conservative assumptions, and requiring deductions for permanence, leakage, and uncertainty14,15. Overestimated baselines may generate credits that lack actual emission reductions, thereby resulting in inefficient resource allocation; conversely, underestimated baselines may result in insufficient financial incentives for forest protection. Thus, uncertainties in baseline projections have important implications for avoided deforestation schemes16.

Avoided deforestation projects are required to calculate deforestation emission baselines and their corresponding uncertainties following prescribed methods and guidelines (see Methods for details), such as the VCS, FCPF, and ART TREES standards in the voluntary carbon market. Typically, the deforestation emission baseline is a product of two components – a projected deforestation rate for the future period and a forest carbon estimate. Although baselining methods are broadly similar across various standards such as the VCS, FCPF, and ART TREES, even within the same standard a wide range of methods, parameters, and datasets are permitted, leading to concerns about baseline inflation and the overgeneration of credits17,18. This also leads to confusion and hesitancy among market participants regarding the reliability of standards and potential future reputational risks of being involved in a project that later becomes controversial12,19. Recognising these risks, carbon project developers are working to overhaul standards and address growing concerns about carbon credit integrity20. Project developers are also making a stronger push towards jurisdictional and nested REDD+ baselines21,22, an approach where baselines are determined at jurisdictional-level and applied to jurisdictional projects or allocated to site-based projects nested within the jurisdiction. Although jurisdictional baselines intend to ensure consistency by preventing individual project developers from deliberately making methodological choices to inflate their baselines, they still follow the same broad methodological principles as site-specific baselines. Therefore, jurisdictional baselines are potentially also able to make a wide range of methodological choices, with unknown consequences for the consistency and accuracy of baselines derived.

In order to provide support for enhancing carbon market integrity by improving baselining methods and assist carbon market participants in decision-making, here we assess the variability, accuracy, and uncertainties of baselining methods at the jurisdictional-level on a global scale. Firstly, we assess the range of commonly used methods for establishing deforestation emission baselines, deriving approximately 4 × 109 unique combinations of methods and parameters which were then applied to calculate baselines for each national or subnational jurisdiction. Secondly, we perform sensitivity analysis to quantify the relative variability of deforestation emission baselines across different methods. Thirdly, we validate the accuracy of different deforestation projection methods against historical data. We also use variable importance analysis to identify the key contributors to the observed relative variability and accuracy. Lastly, we assess uncertainties inherent to different deforestation projection methods and different models of carbon estimation, and propagate uncertainties in deforestation emission baselines.

Results

Relative variability of mean deforestation emission baselines

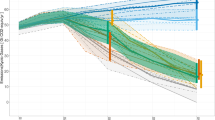

We estimated the relative variability (as measured by the coefficient of variance, CV = standard deviation/mean) of deforestation emission baselines across all methods for each jurisdiction (Fig. 1). The median variability was 171% (90% range: 87–440%), i.e. using different deforestation emission baselining methods would cause deforestation emission baselines to vary by 171% of the mean deforestation emission baseline for the median jurisdiction. Jurisdictions with high relative variabilities could be found across biomes and latitudes, including in jurisdictions with high forest area and high deforestation rates (Figs. S1–2). There was a significant negative correlation between log-transformed forest area and relative variability, with Pearson’s r = -0.34 and p < 0.01 (Fig. S3a), suggesting that although jurisdictions with larger forest area would generally have less variability in baselines, there were also other factors driving that variability.

a Global map of national or subnational jurisdictions, with colour indicating relative variability of deforestation emissions baselines for each jurisdiction. Deforestation emission baselines are the product of the projected deforestation rate and average forest carbon in CO2e per jurisdiction. b Inset of a for South and Southeast Asia. c Inset of a for Europe.

Box plots showing distribution of relative variabilities (% CV) of deforestation emission baselines (each point represents one jurisdiction, for n = 2794 jurisdictions) for different component parameters (see explanation of these datasets and methods in Method section): a Overall relative variability (across all methods), relative variability for each deforestation projection approach, as well as for each forest dataset used for deforestation projection estimates. Note that hist is a simple historical average, linear and poly2 are time functions; global, global_s, regional, and regional_s are linear regression models. b Relative variability for each of the 5–15 possible lengths of historical reference periods used for deforestation projection estimates. c Relative variability for each forest dataset used for carbon estimates, as well as each AGBC, BGBC, and SOC dataset.

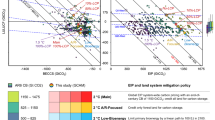

Next, we analysed variable importance for how each parameter type influences the deforestation emission baseline (Table 1). Projection approach was the most important variable, followed by the forest dataset used for estimating deforestation. Within each parameter type, we calculated and plotted the relative variabilities of each level (i.e. CV of possible combinations of methods which use only that level) for each jurisdiction (Fig. 2), and performed one-way ANOVAs and Tukey’s HSD post-hoc tests to determine which levels had lower relative variabilities and would thus generate more consistent results.

Of the deforestation projection approaches, linear regression models (global, global_s, regional, and regional_s; see Methods for explanation) generated the least variable results (Table 1, Fig. 2a); although permitted by VCS standards such as VM0015, these are seldom used compared to the simpler historical average (hist) or time function (linear and poly2) methods (Supplementary Data 1). We found that multi-model ensembles taking the means of 300 linear models which used random combinations of between 3 and 11 driver variables (global_s and regional_s) had significantly lower median relative variabilities than the corresponding linear models which used all 12 driver variables (global and regional). Moreover, the multi-model ensembles reduces the interquartile range (IQR) as well as outliers among jurisdictions. However, the regional models had significantly higher median relative variabilities (regional: 70.7%, regional_s: 65.4%) than their corresponding global models (global: 66.0%, global_s: 61.0%).

Of the forest datasets used for deforestation projection, Hansen et al.23 generated the least variable results, regardless of which tree cover threshold was used (Table 1, Fig. 2a). Hansen et al.23 is also the forest dataset with the highest spatial resolution (30 m) used in this study; however, MODIS24 (500 m) was observed to generate less variable results than ESA-CCI25 (300 m). A longer historical reference period, which ranged between 5 and 15 years in this study, also significantly reduced median relative variabilities (Table 1, Fig. 2b); this suggests that longer historical reference period can reduce the effect of stochastic variations in deforestation rates.

Although using different forest datasets used for carbon estimation (forest mask) had statistically significant differences in relative variabilities of the deforestation emission baseline, none of the post-hoc pairwise comparisons were statistically significant. For aboveground biomass carbon (AGBC), data from Soto-Navarro et al.26. and Spawn et al.27, which are both global wall-to-wall products, generate significantly less variable results than the Global Ecosystem Dynamics Investigation (GEDI) product which provides limited sampling tracks28. For belowground biomass carbon (BGBC), Soto-Navarro et al.26 generated the least variable results, and for soil organic carbon (SOC) neither dataset generated less variable results than the other (Table 1).

Historical accuracy of predicted deforestation rates

We assessed the accuracy of predicted deforestation rates by calculating the forecast error (relative difference between actual and predicted deforestation rates); this was achieved by comparing mean deforestation rates during the historical reference period with the ensuing period of the same duration, if the data was available. The median jurisdiction’s median forecast error between actual and predicted deforestation (calculated by \(\frac{\left|{predicted}-{actual}\right|}{{actual}}\)) was 0.778 (90% range: 0.548–3.56) across all methods (Fig. 3a), i.e. median predicted deforestation was different from actual deforestation by 0.778 times the actual deforestation. Each projection approach had different spatial variabilities and biases in how accurately they predicted deforestation rates (Fig. S4), without there being clear regional patterns. Forecast errors for the linear models (global, global_s, regional, and regional_s) were significantly lower than other approaches, but there were no significant differences between the different linear models. However, by taking the median of all approaches, it is possible to overcome the individual spatial variabilities and biases that are inherent to each individual projection approach. Variable importance analysis showed that the projection approach was the most important in affecting accuracy, followed by the forest dataset used, and finally the length of the historical reference period (Table 2). Linear models were more accurate in predicting deforestation than other approaches (Fig. 4a, S4e-S4f). Hansen et al.23 was the most historically accurate forest dataset. In general, increasing the length of the historical reference period improves accuracy, resulting in lower medians and IQRs for forecast errors (Fig. 4).

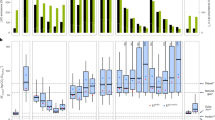

Global maps of national or subnational jurisdictions, with colour indicating median forecast error of different deforestation projection approaches a, and median relative uncertainty (90% CI) of deforestation emission baselines b, of deforestation projections c, of carbon estimates d, of AGBC estimates e, and of BGBC estimates f.

Box plots showing distribution of jurisdictional forecast error a, b of deforestation emission baselines (each point represents one jurisdiction, for n = 2,794 jurisdictions) for different component parameters (see explanation of these datasets and methods in Method section), as well as jurisdictional median relative uncertainty (90% CI) for carbon estimation, deforestation projection model, and propagated uncertainty for the deforestation emission baseline c, and the % of methods with > 15% uncertainty d.

Uncertainties of deforestation emission baselines, deforestation projections and carbon estimates

We propagated the deforestation projection model uncertainties and carbon pool uncertainties by summation of quadrature (Figs. 3b–f and 4c, d). The median jurisdiction’s propagated uncertainty was 29.1% (90% range: 20.8–42.6%) of its deforestation emission baseline. Deforestation projection model uncertainty (median 25.3%; 90% range: 10.1–40.4%) was higher than carbon uncertainty (median 10.7%; 90% range: 6.2–19.5%). There was no clear spatial pattern for overall uncertainty and deforestation projection uncertainty (Fig. 3b-c), but carbon uncertainty appears to be highest in the polar and boreal regions, as well as the tropics (Fig. 3d); in the tropics this was mainly due to AGBC uncertainties (Fig. 3e), while in the Congo basin, polar and boreal regions this was due to both AGBC and BGBC uncertainties (Fig. 3e, f), suggesting systematic issues such as under-sampling of these regions29. Voluntary carbon market standards typically require projects with more than 15% overall uncertainty to apply a discount, or may even prohibit their listing;14 we found that a median of 28.0% (90% range: 20.0–60.0%) of carbon estimation methods exceeded 15% relative uncertainty for each jurisdiction, and a median of 52.9% (90% range: 48.9–58.5%) of deforestation projection methods exceeded 15% relative uncertainty for each jurisdiction, giving a propagated overall median of 68.0% (90% range: 61.1–85.6%) for all possible methods for each jurisdiction.

Discussion

Deforestation emission baselines vary considerably depending on the methods and parameters used, within the range of commonly permissible methods and parameters. Many voluntary carbon standards also permit upward adjustments with justification, such as for High Forest Low Deforestation (HFLD) jurisdictions and in other circumstances, as flexibility for individual projects to make methodological choices14,30,31. Although such flexibility is intended to allow for necessary adjustments to the actual unique local circumstances, it can make baseline setting a matter of political judgement, and opens up carbon market participants to potential credibility and reputational risks should these baselines later be independently assessed to be flawed12,19. Performing sensitivity analyses (such as those used in our study) to assess the variability of baselining methods and parameters permitted, can help guide efforts to overhaul carbon standards and increase transparency to market participants. Sensitivity analyses could also be required for carbon project or programme developers in order to justify methodological choices, and as an additional safeguard to ensure that the calculated baselines are conservative.

In spite of efforts to advance the methods and science behind deforestation projections, it remains challenging to accurately predict deforestation rates for the future, as deforestation rates are influenced by various stochastic political, socio-economic, and biophysical environmental factors32,33. We show that the choice of deforestation projection approach has the greatest influence on baselines, with linear regression models incorporating various driving factors performing better than simpler and more commonly-used historical average and time function models, which do not include such driving factors. Our results also showed that forest datasets had the second-largest influence on baselines. Given that these different approaches, models, and data sources have their own unique spatial variabilities and biases, multi-model and multi-source ensemble approaches to overcome these variabilities and biases can be considered best practice, similar to other modelling communities such as the climate modelling, disease modelling, and remote sensing communities34,35,36. However, the feasibility and costs required for enhanced modelling efforts, as well as the need for more elaborate reporting and decision-making processes when faced with contradicting baselines, may become barriers to implementation.

We found large uncertainties in most combinations of methods and parameters, exceeding the 15% typically allowed in many voluntary carbon market standards; however, actual project developers may have access to additional observational data which could help reduce these uncertainties. Prior research has observed that uncertainties in deforestation baselines are commonly underestimated37. With such large uncertainties in the baselining methods analysed by our study, cost-effective approaches to reduce uncertainties will be relevant to the carbon market, such as improving experimental design and effective use of statistical techniques in place of extensive fieldwork38.

There are many potential areas for further fundamental scientific research to improve baselining methods. Given that forest dataset is the second most important variable affecting variabilities in baselines as well as historical accuracy, further work to improve forest datasets, such as by harmonising definitions of forests and ecosystems, and improving methods for quantifying their area and functional value, will be important39,40,41,42. With higher uncertainties in carbon estimation in the tropics, efforts to acquire more high-quality validation data and perform more forest research in the tropics, are also important. Further work on improving how permanence and leakage risks are monitored and managed may also need to consider the impact of inaccurate and uncertain baselines, as analysed in our study.

Carbon markets play a crucial role in financing nature-based climate solutions such as avoided deforestation and restoration projects, which can potentially provide up to one-third of cost-effective climate mitigation to meet the <2 °C target of the Paris Climate Agreement8. Our analyses show that baselining methods for avoided deforestation projects have high variabilities and uncertainties, and face challenges in accuracy; approaches such as sensitivity analyses and the use of multi-model and multi-source ensembles may help. These results can help guide carbon market participants in decision-making, and provide a useful roadmap for further research and practice to improve baseline estimation methods and thus enhance carbon market integrity and trust.

Methods

We first assessed the range of deforestation emission baselining methods commonly used for avoided deforestation carbon crediting by examining project documents for jurisdictional and site-based approaches. This included 30 VM0015 Verified Carbon Standard (VCS) projects, 4 Plan Vivo projects, and 55 Forest Reference Emissions Level (FREL) submissions to the United Nations Framework Convention on Climate Change (UNFCCC) by countries intending to implement activities under Reducing Emissions from Deforestation and Forest Degradation (REDD + ) (see Supplementary Data 1 and Note S1-2).

Based on this assessment, we used a high-performance computing cluster to calculate deforestation emission baselines for each jurisdiction using all unique combinations ( ~ 4 × 109) within the range of parameters and methods typically permitted and used in avoided deforestation carbon crediting (Table 3). Deforestation emission baselines are the product of the projected deforestation rate and average forest carbon in CO2e per jurisdiction. These possible deforestation emission baselines were calculated for each of 2794 level-0 national jurisdictions or level-1 subnational jurisdictions43. Only nations with at least 1000 km2 of forest (as estimated by MODIS for the year 2001) were included; nations with more than 10,000 km2 of forest were divided into level 1 subnational jurisdictions, while subnational jurisdictions with less than 10% forest cover by land area were excluded.

The types of projection approaches used typically falls into three categories: (i) historical average, which is a continuation of the average annual rate calculated for the historical reference period; (ii) time function, where historical trends are extrapolated to the future from the historical reference period using a linear or logistic regression; and (iii) modelling, which uses a model that expresses future deforestation as a function of driver variables. In this study, we used the historical average, two types of time function (linear time function and 2nd order polynomial time function), as well as four sets of linear models. In the linear models, the response variable (deforestation rate) was box-cox transformed, with outliers removed. There were up to 12 explanatory driver variables to predict deforestation which were biophysical and socioeconomic covariates (Table 4, brief explanation and rationale in Note S3), derived from commonly-used spatial products available at global-scale. Note that in this study, remotely-sensed tree cover loss or forest loss are considered to be synonymous with deforestation; for consistency among all datasets, forest gain or forest regeneration are not included. These spatial datasets were processed in Google Earth Engine using Mollweide equal-area projection. Next, statistical modelling and calculations were performed in R version 4.0.2, using the statistical packages ‘MASS’ v7.3-51.6’, ‘dplyr’ v1.1.2, and the parallelisation packages ‘foreach’ v1.5.0 and ‘doParallel’ v1.0.15. Bidirectional stepwise elimination was performed to determine variables that created the best model fit. A set of global models were run: the first linear model used all 12 explanatory driver variables (before stepwise elimination), while the second linear model was the mean of 300 repetitions of 3-11 random combinations of these explanatory driver variables. Next, these linear models were repeated for six regions of the world separately: Africa, Asia, Oceania, Latin America, North America, and Europe. These seven projection approaches in our study were then applied for all possible combinations of the different forest datasets, number of historical reference years, and range of years available, to derive the projected deforestation rate. Only non-negative projected deforestation values were retained, as methods which generate negative projected deforestation values would likely be rejected in actual baselines.

Average forest carbon in CO2e per jurisdiction was estimated from various aboveground biomass (AGB), belowground biomass (BGB), and soil organic carbon (SOC) datasets. These spatial datasets were processed in Google Earth Engine using Mollweide equal-area projection. All biomass was converted to carbon stock by a 0.47 stoichiometric factor, then to carbon dioxide equivalent emissions (CO2e) by a 3.67 molar mass conversion factor. We assumed a conservative 10-year decay estimate for the belowground carbon pool44.

Uncertainties were derived from the time function and linear models by extracting the 90% prediction intervals from the models, in line with ART TREES methodology30 and IPCC convention1. Uncertainties for carbon pools were either derived from the data provider where available, or used the IPCC default of 33%. Finally, these uncertainties were propagated by summation of quadrature.

Finally, variable importance was assessed by using random forest machine learning models in the R package ‘ranger’ v0.13.1, with deforestation emission baselines as the response variable and type of parameter as the explanatory variables. To improve computational efficiency, we bootstrapped 100 random forest models with 200 trees each, and with each random forest model taking a random sample of 900,000 rows.

Data availability

All data generated by this study is included in the manuscript and supplementary, as well as the Figshare repository (https://doi.org/10.6084/m9.figshare.24597315).

References

Shukla, P. R. et al. AR6 Mitigation of Climate Change: Summary for Policymakers. https://www.ipcc.ch/report/ar6/wg3/.

van der Werf, G. R. et al. CO2 emissions from forest loss. Nat. Geosci. 2, 737–738 (2009).

Giam, X., Bradshaw, C. J. A., Tan, H. T. W. & Sodhi, N. S. Future habitat loss and the conservation of plant biodiversity. Biol. Conserv. 143, 1594–1602 (2010).

Sarira, T. V., Zeng, Y., Neugarten, R., Chaplin-Kramer, R. & Koh, L. P. Co-benefits of forest carbon projects in Southeast Asia. Nat. Sustain. 5, 393–396 (2022).

Teo, H. C. et al. Large-scale reforestation can increase water yield and reduce drought risk for water-insecure regions in the Asia-Pacific. Glob. Change Biol. 28, 6385–6403 (2022).

Haenssgen, M. J. et al. Implementation of the COP26 declaration to halt forest loss must safeguard and include Indigenous people. Nat. Ecol. Evol. 63, 235–236 (2022). 6.

Mabasa, M. A. & Makhubele, J. C. Impact of deforestation on sustainable livelihoods in low-resourced areas of thulamela local. Municipality: Implic. Pract. J. Hum. Ecol. 55, 173–182 (2017).

Griscom, B. W. et al. Natural climate solutions. Proc. Natl Acad. Sci. USA 114, 11645–11650 (2017).

Laing, T., Taschini, L. & Palmer, C. Understanding the demand for REDD+ credits. Environ. Conserv. 43, 389–396 (2016).

Donofrio, S., Maguire, P., Daley, C., Calderon, C. & Lin, K. State of the Voluntary Carbon Markets 2022 Q3. (2022).

Balmford, A. et al. Credit credibility threatens forests. Science 380, 466–467 (2023).

West, T. A. P., Börner, J., Sills, E. O. & Kontoleon, A. Overstated carbon emission reductions from voluntary REDD+ projects in the Brazilian Amazon. Proc. Natl Acad. Sci. USA 117, 24188–24194 (2020).

Guizar-Coutiño, A. et al. A global evaluation of the effectiveness of voluntary REDD+ projects at reducing deforestation and degradation in the moist tropics. Conserv. Biol. 36, e13970 (2022).

Chagas, T., Galt, H., Lee, D., Neeff, T. & Streck, C. A close look at the quality of REDD+ carbon credits. (2020).

Grassi, G., Monni, S., Federici, S., Achard, F. & Mollicone, D. Applying the conservativeness principle to REDD to deal with the uncertainties of theestimates. Environ. Res. Lett. 3, 035005 (2008).

Friess, D. A. & Webb, E. L. Variability in mangrove change estimates and implications for the assessment of ecosystem service provision. Glob. Ecol. Biogeogr. 23, 715–725 (2014).

Mertz, O. et al. Uncertainty in establishing forest reference levels and predicting future forest-based carbon stocks for REDD+. J. Land Use Sci. 13, 1–15 (2017).

Rifai, S. W., West, T. A. P. & Putz, F. E. ‘Carbon Cowboys’ could inflate REDD+ payments through positive Measurement bias. Carbon Manag. 6, 151–158 (2015).

Greenfield, P. Revealed: more than 90% of rainforest carbon offsets by biggest certifier are worthless, analysis shows. The Guardian (2023).

Verra. Verra Launches Consultation on Proposed Changes to the VCS Program. (2023).

Ehara, M. et al. Allocating the REDD+ national baseline to local projects: a case study of Cambodia. Policy Econ. 129, 102474 (2021).

Irawan, S., Widiastomo, T., Tacconi, L., Watts, J. D. & Steni, B. Exploring the design of jurisdictional REDD+: the case of Central Kalimantan, Indonesia. Policy Econ. 108, 101853 (2019).

Hansen, M. C. et al. High-resolution global maps of 21st-century forest cover change. Science 342, 850–853 (2013).

Friedl, M. & Sulla-Menashe, D. MCD12C1 MODIS/Terra+ Aqua Land Cover Type Yearly L3 Global 0.05 Deg CMG V006 [data set]. NASA EOSDIS Land Processes DAAC (2015) https://doi.org/10.5067/MODIS/MCD12Q1.061.

Defourny, P. et al. Observed annual global land-use change from 1992 to 2020 three times more dynamic than reported by inventory-based statistics. Prep. (2023).

Soto-Navarro, C. et al. Mapping co-benefits for carbon storage and biodiversity to inform conservation policy and action. Philos. Trans. R. Soc. B 375, 20190128 (2020).

Spawn, S. A., Sullivan, C. C., Lark, T. J. & Gibbs, H. K. Harmonized global maps of above and belowground biomass carbon density in the year 2010. Sci. Data 2020 71 7, 1–22 (2020).

Dubayah, R. O. et al. GEDI L4B Gridded Aboveground Biomass Density, Version 2 [data set]. (ORNL DAAC, 2022).

Duncanson, L. et al. The Importance of Consistent Global Forest Aboveground Biomass Product Validation. Surv. Geophys. 40, 979–999 (2019).

ART Secretariat. The REDD+ Environmental Excellence Standards (TREES), Version 2.0. (2021).

Verra. VM0007 REDD+ METHODOLOGY FRAMEWORK (REDD+MF), V1.6. (2020).

Hewson, J., Crema, S. C., González-Roglich, M., Tabor, K. & Harvey, C. A. New 1 km resolution datasets of global and regional risks of tree cover loss. Land 8, 14 (2019).

Teo, H. C., Campos-Arceiz, A., Li, B. V., Wu, M. & Lechner, A. M. Building a green Belt and Road: a systematic review and comparative assessment of the Chinese and English-language literature. PLOS ONE 15, e0239009 (2020).

Zhang, J. Multi-source remote sensing data fusion: status and trends. Int. J. Image Data Fusion 1, 5–24 (2010).

Tebaldi, C. & Knutti, R. The use of the multi-model ensemble in probabilistic climate projections. Philos. Trans. R. Soc. Math. Phys. Eng. Sci. 365, 2053–2075 (2007).

Reich, N. G. et al. Accuracy of real-time multi-model ensemble forecasts for seasonal influenza in the U.S. PLOS Comput. Biol. 15, e1007486 (2019).

Yanai, R. D. et al. Improving uncertainty in forest carbon accounting for REDD+ mitigation efforts. Environ. Res. Lett. 15, 124002 (2020).

Emick, E. et al. An approach to estimating forest biomass while quantifying estimate uncertainty and correcting bias in machine learning maps. Remote Sens. Environ. 295, 113678 (2023).

Friess, D. A. & Webb, E. L. Bad data equals bad policy: how to trust estimates of ecosystem loss when there is so much uncertainty? Environ. Conserv. 38, 1–5 (2011).

Sexton, J. O. et al. Conservation policy and the measurement of forests. Nat. Clim. Change 62, 192–196 (2015).

Grainger, A. Difficulties in tracking the long-term global trend in tropical forest area. Proc. Natl Acad. Sci. USA 105, 818–823 (2008).

Sandker, M. et al. The Importance of High–Quality Data for REDD+ Monitoring and Reporting. Forests 12, 99 (2021).

Natural Earth. https://www.naturalearthdata.com/ (2019).

VCS. Agriculture, Forestry and Other Land Use (AFOLU) Requirements. VCS Version 3 (2017).

Domke, G. et al. Chapter 4: Forest Land. in 2019 Refinement to the 2006 IPCC Guidelines for National Greenhouse Gas Inventories Volume 4: Agriculture, Forestry and Other Land Use (2019).

Panagos, P. et al. European Soil Data Centre 2.0: Soil data and knowledge in support of the EU policies. Eur. J. Soil Sci. 73, e13315 (2022).

Hengl, T. & Wheeler, I. Soil organic carbon content. https://zenodo.org/record/2525553 (2018).

Jarvis, A., Reuter, H. I., Nelson, A. & Guevara, E. Hole-filled SRTM for the globe Version 4. CGIAR-CSI SRTM 90m Database https://srtm.csi.cgiar.org (2008).

Hijmans, R. J., Cameron, S. E., Parra, J. L., Jones, P. G. & Jarvis, A. Very high resolution interpolated climate surfaces for global land areas. Int. J. Climatol. 25, 1965–1978 (2005).

Kummu, M., Taka, M. & Guillaume, J. H. A. Gridded global datasets for gross domestic product and human development index over 1990–2015. Sci. Data 5, 1–15 (2018).

Li, X., Li, D., Xu, H. & Wu, C. Intercalibration between DMSP/OLS and VIIRS night-time light images to evaluate city light dynamics of Syria’s major human settlement during Syrian Civil War. Int. J. Remote Sens. 38, 5934–5951 (2017).

Teo, H. C., Lechner, A. M., Sagala, S. & Campos-Arceiz, A. Environmental impacts of planned capitals and lessons for Indonesia’s new capital. Land 9, 438 (2020).

Zhao, C., Cao, X., Chen, X. & Cui, X. A consistent and corrected nighttime light dataset (CCNL 1992–2013) from DMSP-OLS data. Sci. Data 9, 1–12 (2022).

Elvidge, C. D., Baugh, K., Zhizhin, M., Hsu, F. C. & Ghosh, T. VIIRS night-time lights. Int. J. Remote Sens. 38, 5860–5879 (2017).

Liu, X., de Sherbinin, A. & Zhan, Y. Mapping urban extent at large spatial scales using machine learning methods with VIIRS nighttime light and MODIS daytime NDVI data. Remote Sens 11, 1247 (2019).

Oliphant, A., Thenkabail, P. & Teluguntla, P. Global food-security-support-analysis data at 30-m resolution (GFSAD30) cropland-extent products. (2022) https://doi.org/10.3133/ofr20221001.

Maus, V. et al. An update on global mining land use. Sci. Data 91, 1–11 (2022).

Du, Z. et al. A global map of planting years of plantations. Sci. Data 91, 1–9 (2022).

Acknowledgements

L.P.K. is supported by the Singapore National Research Foundation (NRF-RSS2019-007), as well as the Singapore National Research Foundation and Agency for Science, Technology and Research LCER Phase 2 funding programme (U2303D4101). H.T. is supported by the Singapore Ministry of Education (MOE-T2EP50122-0006). T.V.S. is supported by MAC3 Impact Philanthropies. Q.Z. is supported by the Strategic Hiring Scheme Fund of the Hong Kong Polytechnic University (P0044791).

Author information

Authors and Affiliations

Contributions

H.C.T., L.P.K., D.A.F., H.T. conceived the study. L.P.K., D.A.F., H.T. supervised the study. H.C.T. conducted the analyses and led the writing. N.H.L.T. performed literature review and data collection. All authors (H.C.T., N.H.L.T., Q.Z., A.J.Y.L., R.S., X.C., Y.Z., T.V.S, J.D.T.D.A, H.T., D.A.F., L.P.K.) contributed to designing the methodologies, writing, and editing.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks Peter Elsasser and Xi Li for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Teo, H.C., Tan, N.H.L., Zheng, Q. et al. Uncertainties in deforestation emission baseline methodologies and implications for carbon markets. Nat Commun 14, 8277 (2023). https://doi.org/10.1038/s41467-023-44127-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41467-023-44127-9

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.