Abstract

Deep learning and quantum computing have achieved dramatic progress in recent years. The interplay between these two fast-growing fields gives rise to a new research frontier of quantum machine learning. In this work, we report an experimental demonstration of training deep quantum neural networks via the backpropagation algorithm with a six-qubit programmable superconducting processor. We experimentally perform the forward process of the backpropagation algorithm and classically simulate the backward process. In particular, we show that three-layer deep quantum neural networks can be trained efficiently to learn two-qubit quantum channels with a mean fidelity of up to 96.0% and the ground state energy of molecular hydrogen with an accuracy of up to 93.3% compared to the theoretical value. In addition, six-layer deep quantum neural networks can be trained in a similar fashion to achieve a mean fidelity of up to 94.8% for learning single-qubit quantum channels. Our experimental results indicate that the number of coherent qubits that must be maintained does not scale with the depth of the deep quantum neural network, thus providing a valuable guide for quantum machine learning applications with both near-term and future quantum devices.


Introduction

Machine learning has achieved tremendous success in both commercial applications and scientific research over the past decade. In particular, deep neural networks play a vital role in cracking some notoriously challenging problems, ranging from playing Go1 to predicting protein structures2. They contain multiple hidden layers and are believed to be more powerful in extracting high-level features from data than traditional methods3,4. They are typically trained by updating their parameters through gradient descent, where the backpropagation (BP) algorithm enables efficient calculation of gradients via the chain rule3.

By harnessing quantum-mechanical features such as superposition and entanglement, quantum machine learning approaches hold the potential to offer advantages over their classical counterparts. In recent years, exciting progress has been made along this interdisciplinary direction5,6,7,8,9,10. For example, rigorous quantum speedups with complexity-theoretic guarantees have been proved for classification models11 and generative models12. In terms of expressive power, there is also preliminary evidence that quantum neural networks have advantages over comparable feedforward neural networks13. Meanwhile, noteworthy progress has also been made on the experimental side14,15,16,17,18,19,20,21,22. For example, in ref. 14, the authors realize a quantum convolutional neural network on a superconducting quantum processor. In ref. 15, an experimental demonstration of quantum adversarial learning has been reported. Analogous to deep classical neural networks with multiple hidden layers, a deep quantum neural network (DQNN) with a layer-by-layer architecture has been proposed23,24,25, which can be trained via a quantum analog of the BP algorithm. The word “deep” in DQNN refers to multiple hidden layers rather than to a large depth of quantum circuits. In this framework, the quantum analog of a perceptron is a general unitary operator acting on qubits from adjacent layers, whose parameters are updated during training by multiplying the perceptron by its corresponding updating matrix.

In this paper, we report an experimental demonstration of training DQNNs through the BP algorithm on a programmable superconducting processor with six frequency-tunable transmon qubits. We find that a three-layer DQNN can be efficiently trained to learn a two-qubit target quantum channel with a mean fidelity of up to 96.0% and the ground state energy of molecular hydrogen with an accuracy of up to 93.3% compared to the theoretical prediction. We also demonstrate that a six-layer DQNN can efficiently learn a one-qubit target quantum channel with a mean fidelity of up to 94.8%. Our approach can carry over straightforwardly to DQNNs with larger widths and depths, thus paving the way towards large-scale quantum machine learning with potential advantages in practical applications.

Results

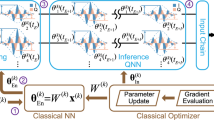

Deep quantum neural networks

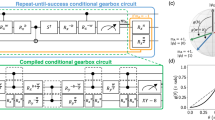

As sketched in Fig. 1a, our DQNN has a layer-by-layer structure, and maps the quantum information layerwise from the input layer state ρin, through L hidden layers, to the output layer state ρout. Quantum perceptrons are the building blocks of the DQNN, and different types of quantum perceptrons have been experimentally implemented recently26,27,28. DQNNs with the most general form of quantum perceptrons, which can apply generic unitaries on all qubits at adjacent layers, are capable of universal quantum computation29. In practice, we usually employ restricted forms of perceptrons for experimental implementations on noisy quantum devices. In this work, a single quantum perceptron is defined as a parameterized quantum circuit applied to the corresponding qubit pair at adjacent layers, which is shown in Fig. 1b. A sequential combination of the quantum perceptrons constitutes the layerwise operation between adjacent layers. One of the key characteristics of the DQNN is the layer-by-layer quantum state mapping, allowing efficient training via the quantum BP algorithm23.

a Architecture of a general DQNN. Information propagates layerwise from the input layer to the output layer. Between two adjacent layers, we apply the quantum perceptrons in the order given by the circuit in b. b A quantum perceptron is realized by applying two single-qubit rotation gates Rx(θ1) and Rx(θ2) (rotations about the x axis with variational angles θ1 and θ2, respectively) followed by a fixed two-qubit controlled-Phase gate. c Illustration of the quantum backpropagation algorithm. In the forward process, we apply forward channels \(\mathcal{E}\) to ρin to successively obtain {ρ1, ρ2, …, ρout}; in the backward process, we apply backward channels \(\mathcal{F}\) to successively obtain {σout, σL, …, σ1}. These forward and backward terms are used to evaluate the gradients. d Photograph of the quantum processor with six superconducting transmon qubits used to implement the DQNNs experimentally. The transmon qubits (Q1–Q6) and the bus resonators (B1 and B2) are marked.
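As a concrete reference for panel b, the following is a minimal NumPy sketch of one perceptron unitary. It assumes the convention Rx(θ) = exp(−iθX/2), and that θ1 acts on the qubit in layer l−1 and θ2 on the qubit in layer l; the caption does not fix this assignment. The names `rx`, `CZ`, and `perceptron` are illustrative, not the authors' code.

```python
import numpy as np

def rx(theta):
    """Single-qubit rotation about x: Rx(theta) = exp(-i * theta * X / 2)."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -1j * s], [-1j * s, c]])

# Controlled-Phase (CZ) gate on two qubits: diag(1, 1, 1, -1).
CZ = np.diag([1, 1, 1, -1]).astype(complex)

def perceptron(theta1, theta2):
    """4x4 unitary of one quantum perceptron: Rx(theta1) on the layer l-1 qubit
    and Rx(theta2) on the layer l qubit, followed by a fixed CZ.
    Assumption: the first tensor factor is the layer l-1 qubit."""
    return CZ @ np.kron(rx(theta1), rx(theta2))

U = perceptron(0.3, -1.1)
print(np.allclose(U.conj().T @ U, np.eye(4)))  # True
```

Because CZ is symmetric in its two qubits, the only ansatz-specific choice here is which rotation angle belongs to which layer.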

We sketch the general experimental training process in Fig. 1c. When performing the quantum BP algorithm, one needs only the information from two adjacent layers, rather than from the full DQNN, to evaluate the gradients with respect to all parameters in these two layers. Such a BP-equipped DQNN has the following merit: the number of coherent qubits that must be maintained does not scale with the depth of the DQNN. This makes it possible to realize DQNNs with a reduced number of physical qubit layers through qubit reuse23. Qubits can be reused as follows: after completing the operations between adjacent layers, we reset the qubits in the previous layer to the fiducial product state and then reuse them as qubits in the subsequent layer. Resetting qubits takes extra time in experiments, which imposes additional coherence requirements on the qubits in the current layer. By reusing qubits, only two layers of qubits are required to implement a DQNN regardless of its depth. However, experimental errors on qubits in the previous layer affect qubits in the current layer, which limits the achievable depth of the DQNN in real experiments with noisy devices.
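The qubit-reuse argument can be checked numerically in a small case. The sketch below assumes one qubit per layer (six layers, as in DQNN2), the perceptron of Fig. 1b, and Rx(θ) = exp(−iθX/2). It compares two simulations. The first keeps every layer's qubit coherent until the end. The second traces out (resets) the previous qubit after each layer and therefore never holds more than two qubits. All function names are hypothetical.

```python
import numpy as np

def rx(theta):
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -1j * s], [-1j * s, c]])

CZ = np.diag([1, 1, 1, -1]).astype(complex)
P0 = np.array([[1, 0], [0, 0]], dtype=complex)    # |0><0| for a freshly reset qubit

def perceptron(t1, t2):
    """Rx(t1) on the earlier-layer qubit, Rx(t2) on the later-layer qubit, then CZ."""
    return CZ @ np.kron(rx(t1), rx(t2))

def full_network(rho_in, thetas):
    """Keep all len(thetas) + 1 qubits coherent; trace out all but the last at the end."""
    n = len(thetas) + 1
    state = rho_in
    for _ in range(n - 1):
        state = np.kron(state, P0)                 # later layers start in |0>
    for k, (t1, t2) in enumerate(thetas):          # perceptron between layers k and k + 1
        U = np.kron(np.kron(np.eye(2 ** k), perceptron(t1, t2)), np.eye(2 ** (n - k - 2)))
        state = U @ state @ U.conj().T
    d = 2 ** (n - 1)
    return np.einsum('abac->bc', state.reshape(d, 2, d, 2))

def layerwise(rho_in, thetas):
    """Two-qubit simulation: after each layer the previous qubit is discarded and reused."""
    rho = rho_in
    for t1, t2 in thetas:
        U = perceptron(t1, t2)
        joint = U @ np.kron(rho, P0) @ U.conj().T
        rho = np.einsum('abac->bc', joint.reshape(2, 2, 2, 2))  # trace out previous layer
    return rho

rng = np.random.default_rng(7)
thetas = rng.uniform(0, 2 * np.pi, size=(5, 2))    # six layers, one qubit each
plus = np.full((2, 2), 0.5, dtype=complex)         # |+><+| as the input state
print(np.allclose(full_network(plus, thetas), layerwise(plus, thetas)))  # True
```

The two agree because, once a perceptron between layers k and k+1 has acted, the qubit of layer k is never touched again. Tracing it out early therefore cannot change the output state, which is what permits resetting and reusing it.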

Experimental setup

Our experiment is carried out on a superconducting quantum processor with six two-junction, frequency-tunable transmon qubits30,31,32,33,34,35,36,37. As photographed in Fig. 1d, the chip layout is optimized for a layer-by-layer structure. Each transmon qubit is coupled to its own flux control line, XY control line, and quarter-wavelength readout resonator. All readout resonators are coupled to a common transmission line, which is connected through a Josephson parametric amplifier for high-fidelity single-shot readout of the qubits38,39. To implement the two-qubit gates in the quantum perceptrons, two separate half-wavelength bus resonators mediate the interactions between qubits in adjacent layers16,40,41. The characterized fidelities of single-qubit Rx gates are above 99.5%, and the average fidelity of the two-qubit gates is 98.4%. The detailed experimental setup and device parameters can be found in Supplementary Note 4.

Learning a two-qubit quantum channel

The first application of DQNNs is learning a quantum channel. Specifically, we use DQNNs to learn a two-qubit target quantum channel. We experimentally implement a three-layer DQNN with two qubits in each layer, denoted DQNN1. As mentioned above, we employ a restricted form of perceptrons owing to realistic experimental limitations, which in general reduces the representation power of DQNNs. We therefore construct the target quantum channel using the same ansatz as DQNN1 with randomly generated parameters θt, so that the target channel lies within the representation range of DQNN1 (see Methods). We choose \(\left|00\right\rangle\), \(\left|01\right\rangle\), \(\left|++\right\rangle\), and \(\left|+i+i\right\rangle\) as our input states \(\rho_x^{\rm in}\), where the subscript x = 1, 2, 3, 4 labels the states, \(\left|0\right\rangle\) and \(\left|1\right\rangle\) are the eigenstates of the Pauli Z matrix, \(\left|+\right\rangle\) (\(\left|-\right\rangle\)) is the eigenstate of the Pauli X matrix with eigenvalue +1 (−1), and \(\left|+i\right\rangle\) is the +1 eigenstate of the Pauli Y matrix. The four pairs \((\rho_x^{\rm in},\tau_x^{\rm out})\) serve as the training dataset, where \(\tau_x^{\rm out}\) is the desired output state produced by the target quantum channel. The optimization goal is to maximize the fidelity between \(\tau_x^{\rm out}\) and the measured DQNN output \(\rho_x^{\rm out}\), averaged over all four input states. The general training procedure is as follows: (1) Initialization: we randomly choose the initial gate parameters θ for all perceptrons in DQNN1.
(2) Forward process (implemented on our quantum processor): for each training sample \((\rho_x^{\rm in},\tau_x^{\rm out})\), we prepare the input layer in \(\rho_x^{\rm in}\), apply the layerwise forward channels \(\mathcal{E}^{1}\) and \(\mathcal{E}^{\rm out}\), and successively extract \(\rho_x^{1}\) and \(\rho_x^{\rm out}\) by quantum state tomography42. (3) Backward process (implemented on a classical computer): we initialize the output layer to \(\sigma_x^{\rm out}\), which is determined by \(\rho_x^{\rm out}\) and \(\tau_x^{\rm out}\) (see Supplementary Note 1), and then apply the backward channels \(\mathcal{F}^{\rm out}\) and \(\mathcal{F}^{1}\) to \(\sigma_x^{\rm out}\) to successively obtain \(\sigma_x^{1}\) and \(\sigma_x^{0}\). (4) Based on \(\{(\rho_x^{l-1},\sigma_x^{l})\}\), we evaluate the gradient of the fidelity with respect to all variational parameters in the adjacent layers l−1 and l. We then average over the whole training dataset to obtain the final gradient, which is used to update the variational parameters θ. (5) Repeat (2), (3), (4) for s0 rounds. The pseudocode for our algorithm is provided in Supplementary Note 1.
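Steps (1) and (2), together with the training objective, can be sketched numerically for DQNN1. This is a minimal sketch under several assumptions. The perceptron is that of Fig. 1b, with θ1 on the layer l−1 qubit. The perceptrons follow the ordering of the product formula in Methods, whereas the experiment uses the order in Supplementary Table 2. Exact density matrices replace tomography. The fidelity is the Uhlmann form \(F(\rho,\tau)=(\mathrm{tr}\sqrt{\sqrt{\rho}\,\tau\sqrt{\rho}})^2\), since the text does not state which convention is used. The backward term \(\sigma_x^{\rm out}\) for the fidelity cost is defined in Supplementary Note 1 and is not reproduced here. All names are hypothetical.

```python
import numpy as np

def rx(theta):
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -1j * s], [-1j * s, c]])

def single(gate, q, n):
    """Embed a one-qubit gate on qubit q of n (qubit 0 is the leftmost tensor factor)."""
    out = np.array([[1.0 + 0j]])
    for k in range(n):
        out = np.kron(out, gate if k == q else np.eye(2))
    return out

def cz(i, j, n):
    """Controlled-Phase between qubits i and j of n: -1 on basis states where both are 1."""
    bits = (np.arange(2 ** n)[:, None] >> (n - 1 - np.array([i, j]))) & 1
    return np.diag(np.where(bits.all(axis=1), -1.0, 1.0)).astype(complex)

def layer_unitary(thetas, m_prev=2, m_next=2):
    """U^l as a product of perceptrons U_(i,j); thetas has shape (m_prev, m_next, 2)."""
    n = m_prev + m_next
    U = np.eye(2 ** n, dtype=complex)
    for j in range(m_next):
        for i in range(m_prev):
            t1, t2 = thetas[i, j]
            P = cz(i, m_prev + j, n) @ single(rx(t1), i, n) @ single(rx(t2), m_prev + j, n)
            U = P @ U                               # U_(1,1) acts first
    return U

def forward(rho, U, m_prev=2, m_next=2):
    """Forward channel: append |0...0> for layer l, apply U^l, trace out layer l-1."""
    dp, dn = 2 ** m_prev, 2 ** m_next
    fresh = np.zeros((dn, dn), dtype=complex)
    fresh[0, 0] = 1
    joint = U @ np.kron(rho, fresh) @ U.conj().T
    return np.einsum('abac->bc', joint.reshape(dp, dn, dp, dn))

def dqnn_output(rho_in, params):
    """params has shape (2, 2, 2, 2) for DQNN1: input -> hidden -> output."""
    rho = rho_in
    for theta_l in params:
        rho = forward(rho, layer_unitary(theta_l))
    return rho

def psd_sqrt(m):
    w, v = np.linalg.eigh(m)
    return (v * np.sqrt(np.clip(w, 0, None))) @ v.conj().T

def fidelity(rho, tau):
    """Uhlmann fidelity, squared convention."""
    s = psd_sqrt(rho)
    ev = np.linalg.eigvalsh(s @ tau @ s)
    return float(np.sum(np.sqrt(np.clip(ev, 0, None))) ** 2)

def dm(psi):
    psi = np.asarray(psi, dtype=complex)
    psi = psi / np.linalg.norm(psi)
    return np.outer(psi, psi.conj())

k0, k1 = np.array([1, 0]), np.array([0, 1])
kp, kpi = np.array([1, 1]), np.array([1, 1j])       # |+>, |+i> up to normalization
TRAIN_INPUTS = [dm(np.kron(a, b)) for a, b in [(k0, k0), (k0, k1), (kp, kp), (kpi, kpi)]]

def mean_fidelity(params, target_params):
    """Training objective: fidelity with the target channel's output, averaged over inputs."""
    return np.mean([fidelity(dqnn_output(r, params), dqnn_output(r, target_params))
                    for r in TRAIN_INPUTS])

rng = np.random.default_rng(1)
theta_t = rng.uniform(0, 2 * np.pi, size=(2, 2, 2, 2))   # random target parameters
theta_0 = rng.uniform(0, 2 * np.pi, size=(2, 2, 2, 2))   # step (1): random initialization
print(mean_fidelity(theta_0, theta_t))
```

Since the target is built from the same ansatz, `mean_fidelity(theta_t, theta_t)` equals 1, which is the sense in which the target lies within the representation range of DQNN1.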

In Fig. 2, we randomly choose 30 different sets of initial parameters θ and train DQNN1 to learn the same target quantum channel. DQNN1 converges quickly during training, with the highest fidelity above 96%. To benchmark the performance of DQNN1, we carry out a classical simulation of the training in Supplementary Note 3, where DQNN1 learns a target quantum channel without any experimental imperfections. The numerical results show that the average converged mean fidelity over 50 different sets of initial parameters is above 98%. We attribute the deviation of the experimentally converged fidelities from the numerical results to experimental imperfections, including qubit decoherence and residual ZZ interactions between qubits43,44,45. The upper left inset of Fig. 2 shows the distribution of the converged fidelities from these 30 repeated experiments. We expect this distribution to shift toward higher fidelities as the performance of the quantum processor improves.

We train the three-layer DQNN1 with 30 different sets of initial parameters and, for clarity, plot the mean fidelity as a function of training iterations for 10 of them. The upper left inset shows the distribution of the converged mean fidelities for all 30 initializations. We choose one of the learning curves (marked with triangles), randomly generate 100 different input quantum states, and test the fidelity between their output states given by the target quantum channel and by the trained (untrained) DQNN1. In the lower left inset, the two curves show the fidelity distributions for the trained and untrained DQNN1. The right inset is a schematic illustration of DQNN1. Between adjacent layers, we apply the quantum perceptrons in the order provided in Supplementary Table 2.

To evaluate the performance of DQNN1, we choose one training process from the 30 experiments and refer to DQNN1 with the parameters at the final (initial) iteration of the training curve as the trained (untrained) DQNN1. We generate another 100 different input quantum states, experimentally measure the corresponding output states produced by the trained (untrained) DQNN1, and compute their fidelities with the output states given by the target channel. As shown in the lower left inset of Fig. 2, for the trained DQNN1, 43% of the fidelities exceed 0.95 and 95% exceed 0.9, a distribution clearly separated from that of the untrained DQNN1. This contrast illustrates the effectiveness of training DQNN1.

Learning the ground state energy

DQNNs also provide a complementary approach to solving quantum chemistry problems. As an example, we apply DQNNs to learn the ground state energy of a given Hamiltonian H. The optimization goal is to minimize the energy estimate \(\mathrm{tr}(\rho^{\rm out}H)\) for the output state of the DQNN. We aim to learn the ground state energy of the molecular hydrogen Hamiltonian46. By exploiting the Bravyi-Kitaev transformation and symmetries of the problem, the Hamiltonian of molecular hydrogen can be reduced to an effective Hamiltonian acting on two qubits: \(\hat{H}_{\rm BK}=g_0\mathbf{I}+g_1Z_0+g_2Z_1+g_3Z_0Z_1+g_4Y_0Y_1+g_5X_0X_1\), where Xi, Yi, Zi are Pauli operators on the i-th qubit, and the coefficients gj (j = 0, …, 5) depend on the fixed bond length of molecular hydrogen. We consider a bond length of 0.075 nm in this work; the corresponding coefficients gj can be found in ref. 46.
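The effective two-qubit Hamiltonian can be assembled from Kronecker products of Pauli matrices. Its exact ground state energy, the reference value for the DQNN, then follows from diagonalization. This sketch assumes that qubit 0 is the left tensor factor. The coefficients gj for the 0.075 nm bond length are given in ref. 46 and are deliberately not reproduced here, so the example uses an obviously artificial placeholder vector. Function names are hypothetical.

```python
import numpy as np

I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)

def h2_bk_hamiltonian(g):
    """H_BK = g0 I + g1 Z0 + g2 Z1 + g3 Z0 Z1 + g4 Y0 Y1 + g5 X0 X1 as a 4x4 matrix."""
    g0, g1, g2, g3, g4, g5 = g
    return (g0 * np.kron(I2, I2) + g1 * np.kron(Z, I2) + g2 * np.kron(I2, Z)
            + g3 * np.kron(Z, Z) + g4 * np.kron(Y, Y) + g5 * np.kron(X, X))

def exact_ground_energy(g):
    """Smallest eigenvalue of H_BK: the target of the variational minimization."""
    return float(np.linalg.eigvalsh(h2_bk_hamiltonian(g))[0])

# Placeholder coefficients for illustration only (NOT the values from ref. 46).
g_demo = (0.0, 1.0, 0.0, 0.0, 0.0, 0.0)   # H = Z0, whose ground energy is -1
print(exact_ground_energy(g_demo))          # ≈ -1.0
```

Any energy estimate \(\mathrm{tr}(\rho^{\rm out}H)\) produced by the DQNN is bounded below by this eigenvalue, which is why it serves as the benchmark for the reported accuracy.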

We again use DQNN1 as the variational ansatz to learn the ground state of molecular hydrogen, following a procedure similar to that for learning a quantum channel: (1) Initialization: we prepare the input layer in the fiducial product state \(\left|00\right\rangle\) and randomly generate initial gate parameters θ for DQNN1. (2) In the forward process (implemented on the quantum processor), we apply the forward channels \(\mathcal{E}^{1}\) and \(\mathcal{E}^{\rm out}\) in succession and extract the quantum states of the hidden layer (ρ1) and the output layer (ρout) by quantum state tomography. (3) In the backward process (implemented on a classical computer), we initialize the output layer to σout and then obtain σ1 and σ0 by successively applying the backward channels \(\mathcal{F}^{\rm out}\) and \(\mathcal{F}^{1}\) to σout. (4) Based on \(\{(\rho^{l-1},\sigma^{l})\}\), we calculate the gradient of the energy estimate with respect to all variational parameters in the adjacent layers l−1 and l, and then update all gate parameters in DQNN1. (5) Repeat (2), (3), (4) for s0 rounds. The pseudocode for our algorithm is provided in Supplementary Note 1.
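A minimal simulation of steps (1) to (5) on exact density matrices is sketched below. It rests on the following assumptions:

- The perceptrons and their ordering are as in Methods, with θ1 on the layer l−1 qubit.
- The backward term is σout = H, the natural choice for the linear cost \(\mathrm{tr}(\rho^{\rm out}H)\). The paper's precise definitions are in Supplementary Note 1.
- Per-layer gradients come from the parameter-shift rule applied to \(\mathrm{tr}(\sigma^{l}\mathcal{E}^{l}(\rho^{l-1}))\). This standard technique for Rx gates is swapped in for the gradient formula G of the Supplementary Information.

Because \(\mathcal{F}^{l}\) is the adjoint of \(\mathcal{E}^{l}\), each gradient needs only ρl−1 and σl, mirroring the adjacent-layer property of the quantum BP algorithm. The Hamiltonian coefficients are hypothetical placeholders, not those of ref. 46.

```python
import numpy as np

def rx(theta):
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -1j * s], [-1j * s, c]])

def single(gate, q, n):
    out = np.array([[1.0 + 0j]])
    for k in range(n):
        out = np.kron(out, gate if k == q else np.eye(2))
    return out

def cz(i, j, n):
    bits = (np.arange(2 ** n)[:, None] >> (n - 1 - np.array([i, j]))) & 1
    return np.diag(np.where(bits.all(axis=1), -1.0, 1.0)).astype(complex)

def layer_unitary(thetas, m_prev=2, m_next=2):
    n = m_prev + m_next
    U = np.eye(2 ** n, dtype=complex)
    for j in range(m_next):
        for i in range(m_prev):
            t1, t2 = thetas[i, j]
            U = cz(i, m_prev + j, n) @ single(rx(t1), i, n) @ single(rx(t2), m_prev + j, n) @ U
    return U

def proj0(d):
    p = np.zeros((d, d), dtype=complex)
    p[0, 0] = 1
    return p

def forward(rho, U, dp=4, dn=4):
    """E^l(rho) = tr_{l-1}[U (rho ⊗ |0><0|) U^†]."""
    joint = U @ np.kron(rho, proj0(dn)) @ U.conj().T
    return np.einsum('abac->bc', joint.reshape(dp, dn, dp, dn))

def backward(sigma, U, dp=4, dn=4):
    """F^l(sigma) = tr_l[(I ⊗ |0><0|) U^† (I ⊗ sigma) U], the adjoint of E^l."""
    M = np.kron(np.eye(dp), proj0(dn)) @ U.conj().T @ np.kron(np.eye(dp), sigma) @ U
    return np.einsum('abcb->ac', M.reshape(dp, dn, dp, dn))

def energy_and_grads(params, H, rho_in):
    Us = [layer_unitary(t) for t in params]
    rhos = [rho_in]                                  # forward process: rho^0, rho^1, rho^out
    for U in Us:
        rhos.append(forward(rhos[-1], U))
    sigmas = [None] * len(Us) + [H]                  # backward process from sigma^out = H
    for l in range(len(Us), 0, -1):
        sigmas[l - 1] = backward(sigmas[l], Us[l - 1])
    grads = np.zeros_like(params)
    for l in range(len(Us)):                         # layer step l uses only rhos[l], sigmas[l + 1]
        for idx in np.ndindex(params[l].shape):
            vals = []
            for shift in (np.pi / 2, -np.pi / 2):
                t = params[l].copy()
                t[idx] += shift
                vals.append(np.trace(sigmas[l + 1] @ forward(rhos[l], layer_unitary(t))).real)
            grads[l][idx] = (vals[0] - vals[1]) / 2  # parameter-shift rule for Rx gates
    return np.trace(rhos[-1] @ H).real, grads

def train(H, steps=60, lr=0.1, seed=0):
    rng = np.random.default_rng(seed)
    params = rng.uniform(0, 2 * np.pi, size=(2, 2, 2, 2))  # step (1): random initialization
    rho_in = proj0(4)                                       # input layer in |00>
    history = []
    for _ in range(steps):                                  # steps (2) to (5)
        energy, grads = energy_and_grads(params, H, rho_in)
        history.append(energy)
        params = params - lr * grads                        # plain gradient descent
    return params, history

# Hypothetical placeholder coefficients (NOT the ref. 46 values for 0.075 nm).
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.diag([1.0, -1.0]).astype(complex)
I2 = np.eye(2)
g = (-0.5, 0.3, -0.3, 0.2, 0.1, 0.1)
H = (g[0] * np.kron(I2, I2) + g[1] * np.kron(Z, I2) + g[2] * np.kron(I2, Z)
     + g[3] * np.kron(Z, Z) + g[4] * np.kron(Y, Y) + g[5] * np.kron(X, X))
params, history = train(H)
print(history[0], history[-1], np.linalg.eigvalsh(H)[0])
```

The learning rate, step count, and plain gradient descent are illustrative choices. The energy is bounded below by the smallest eigenvalue of H, but the restricted ansatz is not guaranteed to reach it.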

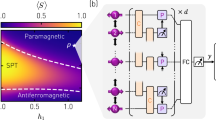

We train DQNN1 with 30 different sets of initial parameters and show the experimental results in Fig. 3a. DQNN1 converges within 20 iterations. The lowest energy estimate reached during the learning process falls below −1.727 hartree, corresponding to an accuracy of up to 93.3% relative to the theoretical ground state energy of −1.851 hartree. This demonstrates the good performance of DQNN1 and the accuracy of our experimental control. The inset of Fig. 3a shows the distribution of the converged energies from these 30 repeated experiments with different initial parameters, six of which have an accuracy above 90%.
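As a consistency check, the quoted accuracy matches the ratio of the measured to the theoretical energy, or equivalently one minus the relative error. This is our reading, since the text does not spell out the definition:

\[
\frac{E_{\rm est}}{E_{\rm th}}=\frac{-1.727}{-1.851}\approx 0.933,\qquad 1-\frac{\left|E_{\rm est}-E_{\rm th}\right|}{\left|E_{\rm th}\right|}=1-\frac{0.124}{1.851}\approx 0.933 .
\]

Under the same reading, an accuracy above 90% corresponds to an energy estimate below \(0.9\times(-1.851)\approx-1.666\) hartree.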

a Experimental energy estimate at each iteration during the learning process for different initial parameters. The inset displays the distribution of converged energy estimates of 30 different initial parameters. b Numerical results for the mean energy estimate with different coherence time T and residual ZZ interaction strength μ between qubits. Specifically, we assume the same μ between all neighboring qubits and adjust the coherence time by the same ratio (T/T0) for all qubits, where T and T0 are the coherence times in the numerical simulation and the experiment, respectively.

To numerically investigate the effects of experimental imperfections on training DQNNs, we consider two possible sources: decoherence of qubits and residual ZZ interactions between qubits (see Methods for details). For different residual ZZ interaction strengths μ and decoherence times T, we numerically train DQNN1 with 30 different sets of initial parameters to learn the ground state energy of molecular hydrogen. For four of these initializations, DQNN1 converges to local minima instead of the global minimum. Excluding these instances, we plot the average energy estimate as a function of μ for different T/T0 in Fig. 3b, where T0 is the experimentally measured qubit coherence time. The numerical results show that, without residual ZZ interaction, qubit decoherence at T/T0 = 1 degrades the accuracy of the average energy estimate by 6%, to −1.74 hartree (the leftmost point of the dotted line). At μ/2π ≈ 1 MHz (close to our experimental characterization) with T/T0 = 1, the average energy estimate is −1.53 hartree, about 17% higher than the theoretical value and comparable to the experimental values shown in Fig. 3a. Evidently, a residual ZZ interaction of this magnitude is the dominant limitation on training performance, whereas varying the coherence time has only a minor effect. This is expected, since the total running time of the DQNN (1.2 μs) is significantly shorter than the average characteristic coherence time of the qubits (7.5 μs). These experimental imperfections can be suppressed by introducing advanced technologies in the design and fabrication of superconducting quantum circuits, such as tunable couplers47,48,49 and tantalum-based qubits50,51.
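The percentages above follow from the theoretical value \(E_{\rm th}=-1.851\) hartree under the same relative-error reading (our assumption). The same calculation gives the fraction of the coherence time used by one run of the DQNN:

\[
1-\frac{-1.74}{-1.851}\approx 0.060,\qquad \frac{-1.53-(-1.851)}{\left|-1.851\right|}=\frac{0.321}{1.851}\approx 0.173,\qquad \frac{1.2\ \mu{\rm s}}{7.5\ \mu{\rm s}}=0.16 .
\]

The DQNN therefore runs for only about 16% of the average coherence time, consistent with decoherence playing a secondary role relative to a ZZ strength of μ/2π ≈ 1 MHz.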

Learning a one-qubit quantum channel

To further illustrate the efficiency of the quantum BP algorithm, we construct another DQNN with four hidden layers (denoted DQNN2) by rearranging our six-qubit quantum processor into a six-layer structure with one qubit in each layer. We focus on learning a one-qubit target quantum channel, which is constructed using the same ansatz as DQNN2 with randomly generated parameters (see Methods). We choose \(\left|0\right\rangle\), \(\left|1\right\rangle\), and \(\left|-\right\rangle\) as our input states and compare the measured output states of DQNN2 with the desired ones from the target single-qubit quantum channel. The training procedure is similar to that for DQNN1 discussed above. Our experimental results are summarized in Fig. 4, which shows the learning curves for 10 different sets of initial parameters. DQNN2 learns the target quantum channel with a mean fidelity of up to 94.8%. The variance of the converged mean fidelities for DQNN2 is smaller than that for DQNN1, which may be attributed to the smaller total circuit depth and thus less error accumulation from experimental imperfections. To study the learning performance, we choose one of these learning curves (marked with triangles) and refer to DQNN2 with the parameters at the final (initial) iteration of the learning curve as the trained (untrained) DQNN2. We then use another 100 different input quantum states to test the trained and untrained DQNN2 by measuring the fidelities between the experimental output states and the corresponding desired ones given by the target quantum channel. As shown in the upper inset of Fig. 4, the fidelity distribution for the trained DQNN2 concentrates around 0.92, in stark contrast to that of the untrained DQNN2, indicating good performance after training.

The mean fidelity of training the six-layer DQNN2 is plotted as a function of training iterations for different initial parameters. We randomly generate 100 different single-qubit states and evaluate the fidelities between their output states produced by DQNN2 and their desired output states given by the target quantum channel. The upper inset displays the fidelity distributions for a well-trained DQNN2 (squares) and an untrained DQNN2 (circles), both defined from the learning curve marked with triangles. The lower inset is a schematic illustration of DQNN2, where we apply the perceptrons in the order indicated by the arrows.

Discussion

In this work, we experimentally perform the forward process while implementing the backward process on a classical computer. For the task of learning a target quantum channel, the backward process can in principle also be implemented on a quantum device (see Supplementary Note 1 for an experimental proposal). However, achieving the required experimental accuracy is more challenging for backward channels than for forward channels. We expect that quantum processors with better performance will enable an experimental implementation of the backward channels in the future.

The efficiency of the quantum BP algorithm can be measured by the number of copies of each training sample required per training iteration (see Supplementary Note 2 for detailed discussions). For training with the quantum BP algorithm, this number scales exponentially with the number of qubits in the hidden layers and the output layer, because quantum state tomography and the experimental implementation of backward channels require an exponential number of measurements. Compared with training that does not use the quantum BP algorithm, we find that the quantum BP algorithm can enhance the training efficiency in some cases where DQNNs have narrow hidden layers. Such DQNNs can be used as quantum autoencoders to compress and denoise quantum data52.
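To make the dependence on layer width concrete, the sketch below counts measurement settings per training sample and iteration. It assumes, beyond what the text states, that each hidden or output layer of m qubits is reconstructed by standard local-Pauli tomography with 3^m measurement settings, each repeated for many shots. For comparison, it also counts a hypothetical joint tomography of all those qubits at once. Backward-channel measurements and shot counts (Supplementary Note 2) are not modeled, and the function names are hypothetical.

```python
def settings_layerwise(layer_widths):
    """Local-Pauli tomography on each hidden/output layer separately: sum of 3**m."""
    return sum(3 ** m for m in layer_widths)

def settings_joint(layer_widths):
    """Hypothetical joint tomography of all hidden and output qubits: 3**(total)."""
    return 3 ** sum(layer_widths)

dqnn1 = [2, 2]            # hidden layer and output layer of DQNN1
dqnn2 = [1, 1, 1, 1, 1]   # four hidden layers and the output layer of DQNN2
for name, widths in [("DQNN1", dqnn1), ("DQNN2", dqnn2)]:
    print(name, settings_layerwise(widths), settings_joint(widths))
# DQNN1 18 81
# DQNN2 15 243
```

Under this assumption, the layerwise count grows exponentially only in the width of each layer and linearly in the depth, which is why narrow hidden layers are the favorable regime.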

In summary, we have demonstrated the training of deep quantum neural networks on a six-qubit programmable superconducting quantum processor and experimentally shown their ability to learn quantum channels and the ground state energy of a given Hamiltonian. The quantum BP algorithm demonstrated in our experiments can be directly applied to DQNNs with larger widths and depths. With further improvements in experimental conditions, quantum perceptrons with enhanced expressive power are expected to be constructed from deeper circuits, which would allow DQNNs to tackle more challenging tasks in the future.

Methods

Framework

We consider a DQNN with L hidden layers and \(m_l\) qubits in layer l. The qubits in two adjacent layers are connected by quantum perceptrons, and each perceptron consists of two single-qubit rotation gates Rx(θ1) and Rx(θ2) about the x axis with variational angles θ1 and θ2, respectively, followed by a fixed two-qubit controlled-Phase gate. The unitary of the quantum perceptron acting on the i-th qubit in layer l−1 and the j-th qubit in layer l is written as \(U_{(i,j)}^{l}(\theta_{(i,j),1}^{l},\theta_{(i,j),2}^{l})\). The product of all quantum perceptrons acting on the qubits in layers l−1 and l is then denoted \(U^{l}=\prod_{j=m_{l}}^{1}\prod_{i=m_{l-1}}^{1}U_{(i,j)}^{l}\). The DQNN acts on the input state ρin and produces the output state ρout according to

\[\rho^{\rm out}\equiv\mathrm{tr}_{\rm in,hid}\left(\mathcal{U}\left(\rho^{\rm in}\otimes\left|0\cdots 0\right\rangle_{\rm hid,out}\left\langle 0\cdots 0\right|\right)\mathcal{U}^{\dagger}\right),\]

where \(\mathcal{U}\equiv U^{\rm out}U^{L}U^{L-1}\cdots U^{1}\) is the unitary of the DQNN, and all qubits in the hidden layers and the output layer are initialized to the fiducial product state \(\left|0\cdots 0\right\rangle\). The layer-by-layer architecture allows ρout to be expressed as a series of maps on ρin:

\[\rho^{\rm out}=\mathcal{E}^{\rm out}\left(\mathcal{E}^{L}\left(\cdots\mathcal{E}^{2}\left(\mathcal{E}^{1}\left(\rho^{\rm in}\right)\right)\cdots\right)\right),\]

where \(\mathcal{E}^{l}(\rho^{l-1})\equiv\mathrm{tr}_{l-1}\left(U^{l}\left(\rho^{l-1}\otimes\left|0\cdots 0\right\rangle_{l}\left\langle 0\cdots 0\right|\right)U^{l\dagger}\right)\) is the forward quantum channel.

In the Supplementary Information, we prove that for the two machine learning tasks in our work, the derivative of the mean fidelity or the energy estimate with respect to \(\theta_{(i,j),k}^{l}\) can be calculated using only information from layers l−1 and l, and can be written as G(θl, ρl−1, σl), where θl incorporates all parameters in layers l−1 and l. Here \(\rho^{l-1}=\mathcal{E}^{l-1}(\ldots\mathcal{E}^{2}(\mathcal{E}^{1}(\rho^{\rm in}))\ldots)\) is the quantum state in layer l−1 in the forward process, and \(\sigma^{l}=\mathcal{F}^{l+1}(\ldots\mathcal{F}^{\rm out}(\cdots)\ldots)\) is the backward term in layer l, with \(\mathcal{F}^{l}\) being the adjoint channel of \(\mathcal{E}^{l}\). The backward channel \(\mathcal{F}^{l}\) acts on the backward term σl and produces σl−1 according to \(\sigma^{l-1}=\mathcal{F}^{l}(\sigma^{l})=\mathrm{tr}_{l}\left(\left(\mathbb{I}_{l-1}\otimes\left|0\cdots 0\right\rangle_{l}\left\langle 0\cdots 0\right|\right)U^{l\dagger}\left(\mathbb{I}_{l-1}\otimes\sigma^{l}\right)U^{l}\right)\).
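The defining property of the backward channel, \(\mathrm{tr}(\sigma^{l}\mathcal{E}^{l}(\rho^{l-1}))=\mathrm{tr}(\mathcal{F}^{l}(\sigma^{l})\rho^{l-1})\), is what lets each gradient depend only on layers l−1 and l. The sketch below checks this property numerically for the forward and backward formulas above, using layers of different widths. A Haar-random unitary stands in for \(U^{l}\), so the check does not depend on the perceptron ansatz. It also confirms that \(\mathcal{E}^{l}\) is trace preserving and \(\mathcal{F}^{l}\) is unital. Function names are hypothetical.

```python
import numpy as np

def proj0(d):
    p = np.zeros((d, d), dtype=complex)
    p[0, 0] = 1
    return p

def forward(rho, U, dp, dn):
    """E^l(rho) = tr_{l-1}[U (rho ⊗ |0..0><0..0|_l) U^†]."""
    joint = U @ np.kron(rho, proj0(dn)) @ U.conj().T
    return np.einsum('abac->bc', joint.reshape(dp, dn, dp, dn))

def backward(sigma, U, dp, dn):
    """F^l(sigma) = tr_l[(I_{l-1} ⊗ |0..0><0..0|_l) U^† (I_{l-1} ⊗ sigma) U]."""
    M = np.kron(np.eye(dp), proj0(dn)) @ U.conj().T @ np.kron(np.eye(dp), sigma) @ U
    return np.einsum('abcb->ac', M.reshape(dp, dn, dp, dn))

def random_unitary(d, rng):
    """Haar-random unitary via QR decomposition with phase correction."""
    z = (rng.normal(size=(d, d)) + 1j * rng.normal(size=(d, d))) / np.sqrt(2)
    q, r = np.linalg.qr(z)
    return q * (np.diag(r) / np.abs(np.diag(r)))

rng = np.random.default_rng(0)
dp, dn = 4, 2                                   # layer l-1 has 2 qubits, layer l has 1
U = random_unitary(dp * dn, rng)
a = rng.normal(size=(dp, dp)) + 1j * rng.normal(size=(dp, dp))
rho = a @ a.conj().T
rho /= np.trace(rho)                            # random density matrix on layer l-1
b = rng.normal(size=(dn, dn)) + 1j * rng.normal(size=(dn, dn))
sigma = b + b.conj().T                          # random Hermitian backward term on layer l

lhs = np.trace(sigma @ forward(rho, U, dp, dn))
rhs = np.trace(backward(sigma, U, dp, dn) @ rho)
print(np.isclose(lhs, rhs))                     # True
```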

Generating random input quantum states

To evaluate the learning performance in the task of learning a target quantum channel, we generate many different input quantum states and compute the fidelity between their output states produced by the trained DQNN and their desired output states given by the target quantum channel.

For the task of learning a two-qubit quantum channel, we generate these input quantum states by applying single-qubit rotation gates \(R_{a_1}(\Omega_1)\otimes R_{a_2}(\Omega_2)\) separately to the two qubits initialized in \(\left|00\right\rangle\). Here each rotation gate has a random rotation axis ai in the x–y plane and a random rotation angle Ωi.

For the task of learning a one-qubit quantum channel, we generate the input quantum states by applying single-qubit rotation gates Rb(Φ) on the input qubit initialized in \(\left|0\right\rangle\) with a random rotation axis b in the x–y plane and a random rotation angle Φ.
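A sketch of this input-state generator for both tasks follows. It assumes \(R_{a}(\Omega)=\exp(-i\Omega\,a\cdot\vec{\sigma}/2)\) with axis a = (cos φ, sin φ, 0), and that φ and Ω are both drawn uniformly from [0, 2π); the text does not specify the distributions. Note that a uniform Ω does not sample the Bloch sphere uniformly. Function names and the seed are hypothetical.

```python
import numpy as np

X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
KET0 = np.array([1, 0], dtype=complex)

def rotation_xy(phi, omega):
    """R_a(omega) = exp(-i omega (a . sigma) / 2) with axis a = (cos phi, sin phi, 0)."""
    a_dot_sigma = np.cos(phi) * X + np.sin(phi) * Y
    return np.cos(omega / 2) * np.eye(2) - 1j * np.sin(omega / 2) * a_dot_sigma

def random_input_state(n_qubits, rng):
    """Product state R_{a_1}(Omega_1) ⊗ ... ⊗ R_{a_n}(Omega_n) |0...0> as a density matrix."""
    psi = np.array([1.0 + 0j])
    for _ in range(n_qubits):
        phi, omega = rng.uniform(0, 2 * np.pi, size=2)
        psi = np.kron(psi, rotation_xy(phi, omega) @ KET0)
    return np.outer(psi, psi.conj())

rng = np.random.default_rng(2023)
two_qubit_tests = [random_input_state(2, rng) for _ in range(100)]   # for DQNN1
one_qubit_tests = [random_input_state(1, rng) for _ in range(100)]   # for DQNN2
```

The closed form for the rotation uses the fact that \((a\cdot\vec{\sigma})^2=\mathbf{I}\) for a unit axis, so no matrix exponential is needed.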

The target quantum channels

The target quantum channels are constructed using the DQNN ansatz with randomly chosen parameters θt. For DQNN1, the schematic illustration is shown in the right inset of Fig. 2. The input state is encoded in qubits Q1 and Q2 (input layer). After completing all the layerwise quantum perceptrons, the output state is obtained from qubits Q5 and Q6 (output layer). Each quantum perceptron is applied with randomly chosen single-qubit rotation angles, which are provided in the third column of Supplementary Table 2. The target quantum channel for DQNN2 is constructed in a similar way, with the corresponding parameters (randomly chosen) also provided in Supplementary Table 2.

Numerical simulations

We numerically consider two sources of experimental imperfections: decoherence of qubits and residual ZZ interactions between qubits. For simplicity, our simulation includes the residual ZZ interactions only during the implementation of rotation gates. Specifically, Rx,i(θ) (the rotation gate Rx on the i-th qubit) is realized by evolution under a time-dependent Hamiltonian of the form \(H_0(t)=\theta(a_i^{\dagger}+a_i)h(t)/2\), where ai (\(a_i^{\dagger}\)) is the corresponding annihilation (creation) operator, and h(t) is the time-dependent driving strength given by the Gaussian function \(h(t)=Ae^{-(t/\delta)^2}\). We set the evolution time of the rotation gate to Δt = 40 ns and δ = 10 ns. The unwanted residual ZZ interactions between qubits are included by adding a Hamiltonian of the form \(H_1(t)=\sum_{\langle i,j\rangle}\mu_{i,j}a_i^{\dagger}a_ia_j^{\dagger}a_j\) to H0(t), where 〈i, j〉 runs over pairs of nearest-neighbor qubits and μi,j is the interaction strength between the i-th and j-th qubits. Qubit decoherence is included by adding the relaxation term \(\sqrt{1/T_{1,i}}\,a_i\) and the pure dephasing term \(\sqrt{1/T_{\phi,i}}\,a_i^{\dagger}a_i\) as collapse operators to the evolution. Here, T1,i and Tϕ,i are the energy relaxation time and the pure dephasing time of the i-th qubit, respectively.
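The coherent part of this noise model can be sketched for a single driven qubit next to one idle neighbor. The sketch makes several assumptions beyond the text:

- Each transmon is truncated to two levels, so \(a_i+a_i^{\dagger}\to X\) and \(a_i^{\dagger}a_i\to\left|1\right\rangle\left\langle 1\right|\).
- The Gaussian is centered in the 40 ns window.
- A is fixed so that the pulse area yields a rotation of exactly θ when μ = 0.
- The evolution is approximated by piecewise-constant steps.
- The collapse operators are omitted, since including decoherence needs a master-equation solver.

The overlap \(|\mathrm{tr}(V^{\dagger}U)|^2/d^2\) is a simple diagnostic, not the metric used in the paper. Function names are hypothetical.

```python
import numpy as np

X = np.array([[0, 1], [1, 0]], dtype=complex)   # a + a^† for a two-level qubit
N = np.diag([0.0, 1.0]).astype(complex)           # a^† a for a two-level qubit
I2 = np.eye(2, dtype=complex)

def expm_hermitian(H, dt):
    """exp(-i H dt) for Hermitian H via eigendecomposition."""
    w, v = np.linalg.eigh(H)
    return (v * np.exp(-1j * w * dt)) @ v.conj().T

def rx_with_zz(theta, mu, gate_time=40.0, delta=10.0, steps=400):
    """Rx(theta) on qubit 0 under H0(t) = theta X h(t) / 2 plus H1 = mu n0 n1.
    Times in ns, mu in rad/ns (mu = 2*pi*f for a ZZ strength f in GHz)."""
    dt = gate_time / steps
    t = -gate_time / 2 + dt * (np.arange(steps) + 0.5)   # midpoints of the time bins
    h = np.exp(-(t / delta) ** 2)
    h /= h.sum() * dt                                     # choose A so that sum(h) * dt = 1
    H1 = mu * np.kron(N, N)                               # residual ZZ term
    U = np.eye(4, dtype=complex)
    for hk in h:
        U = expm_hermitian(theta * hk / 2 * np.kron(X, I2) + H1, dt) @ U
    return U

def rx(theta):
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -1j * s], [-1j * s, c]])

def overlap(U, V):
    """|tr(V^† U)|^2 / d^2: equals 1 iff U = V up to a global phase."""
    return abs(np.trace(V.conj().T @ U)) ** 2 / U.shape[0] ** 2

ideal = np.kron(rx(np.pi / 2), I2)
for f_mhz in (0.0, 0.5, 1.0):
    mu = 2 * np.pi * f_mhz * 1e-3                          # MHz -> rad/ns
    print(f_mhz, overlap(rx_with_zz(np.pi / 2, mu), ideal))
```

With μ = 0 the drive terms at different times commute, so the product of steps reproduces Rx(θ) exactly. A nonzero μ breaks this commutation and leaves a state-dependent phase error.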

Data availability

The data generated in this study have been deposited in the Figshare database under accession code https://doi.org/10.6084/m9.figshare.22802501 (ref. 53).

Code availability

The code for the numerical simulations is available at https://zenodo.org/badge/latestdoi/552193072 (ref. 54).

References

Silver, D. et al. Mastering the game of Go without human knowledge. Nature 550, 354 (2017).

Jumper, J. et al. Highly accurate protein structure prediction with AlphaFold. Nature 596, 583 (2021).

Goodfellow, I., Bengio, Y. & Courville, A. Deep Learning (The MIT Press, Cambridge, 2016). https://www.deeplearningbook.org/

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436 (2015).

Biamonte, J. et al. Quantum machine learning. Nature 549, 195 (2017).

Dunjko, V. & Briegel, H. J. Machine learning & artificial intelligence in the quantum domain: a review of recent progress. Rep. Prog. Phys. 81, 074001 (2018).

Das Sarma, S., Deng, D.-L. & Duan, L.-M. Machine learning meets quantum physics. Phys. Today 72, 48 (2019).

Cerezo, M., Verdon, G., Huang, H.-Y., Cincio, L. & Coles, P. J. Challenges and opportunities in quantum machine learning. Nat. Comput. Sci. 2, 567 (2022).

Dawid, A. et al. Modern applications of machine learning in quantum sciences. arXiv http://arxiv.org/abs/2204.04198 (2022).

Huang, H.-Y., Kueng, R. & Preskill, J. Information-theoretic bounds on quantum advantage in machine learning. Phys. Rev. Lett. 126, 190505 (2021).

Liu, Y., Arunachalam, S. & Temme, K. A rigorous and robust quantum speed-up in supervised machine learning. Nat. Phys. 17, 1013 (2021).

Gao, X., Zhang, Z.-Y. & Duan, L.-M. A quantum machine learning algorithm based on generative models. Sci. Adv. 4, eaat9004 (2018).

Abbas, A. et al. The power of quantum neural networks. Nat. Comput. Sci. 1, 403 (2021).

Herrmann, J. et al. Realizing quantum convolutional neural networks on a superconducting quantum processor to recognize quantum phases. Nat. Commun. 13, 4144 (2022).

Ren, W. et al. Experimental quantum adversarial learning with programmable superconducting qubits. Nat. Comput. Sci. 2, 711 (2022).

Havlíček, V. et al. Supervised learning with quantum-enhanced feature spaces. Nature 567, 209 (2019).

Hu, L. et al. Quantum generative adversarial learning in a superconducting quantum circuit. Sci. Adv. 5, eaav2761 (2019).

Blank, C., Park, D. K., Rhee, J.-K. K. & Petruccione, F. Quantum classifier with tailored quantum kernel. npj Quantum Inf. 6, 1 (2020).

Li, Z., Liu, X., Xu, N. & Du, J. Experimental realization of a quantum support vector machine. Phys. Rev. Lett. 114, 140504 (2015).

Zhu, D. et al. Training of quantum circuits on a hybrid quantum computer. Sci. Adv. 5, eaaw9918 (2019).

Gong, M. et al. Quantum neuronal sensing of quantum many-body states on a 61-qubit programmable superconducting processor. Sci. Bull. 68, 906 (2023).

Huang, H.-Y. et al. Quantum advantage in learning from experiments. Science 376, 1182 (2022).

Beer, K. et al. Training deep quantum neural networks. Nat. Commun. 11, 808 (2020).

Liu, Z., Duan, L.-M. & Deng, D.-L. Solving quantum master equations with deep quantum neural networks. Phys. Rev. Res. 4, 013097 (2022).

Wilkinson, S. A. & Hartmann, M. J. Evaluating the performance of sigmoid quantum perceptrons in quantum neural networks. Preprint at http://arxiv.org/abs/2208.06198 (2022).

Huber, P. et al. Realization of a quantum perceptron gate with trapped ions. Preprint at http://arxiv.org/abs/2111.08977 (2021).

Moreira, M. S. et al. Realization of a quantum neural network using repeat-until-success circuits in a superconducting quantum processor. Preprint at http://arxiv.org/abs/2212.10742 (2022).

Pechal, M. et al. Direct implementation of a perceptron in superconducting circuit quantum hardware. Phys. Rev. Res. 4, 033190 (2022).

Beer, K. Quantum neural networks. Preprint at http://arxiv.org/abs/2205.08154 (2022).

Koch, J. et al. Charge-insensitive qubit design derived from the Cooper pair box. Phys. Rev. A 76, 042319 (2007).

Barends, R. et al. Coherent Josephson qubit suitable for scalable quantum integrated circuits. Phys. Rev. Lett. 111, 080502 (2013).

Li, X. et al. Perfect quantum state transfer in a superconducting qubit chain with parametrically tunable couplings. Phys. Rev. Appl. 10, 054009 (2018).

Cai, W. et al. Observation of topological magnon insulator states in a superconducting circuit. Phys. Rev. Lett. 123, 080501 (2019).

Kono, S. et al. Breaking the trade-off between fast control and long lifetime of a superconducting qubit. Nat. Commun. 11, 3683 (2020).

Carusotto, I. et al. Photonic materials in circuit quantum electrodynamics. Nat. Phys. 16, 268 (2020).

Negîrneac, V. et al. High-fidelity controlled-Z gate with maximal intermediate leakage operating at the speed limit in a superconducting quantum processor. Phys. Rev. Lett. 126, 220502 (2021).

Blais, A., Grimsmo, A. L., Girvin, S. M. & Wallraff, A. Circuit quantum electrodynamics. Rev. Mod. Phys. 93, 025005 (2021).

Roy, T. et al. Broadband parametric amplification with impedance engineering: beyond the gain-bandwidth product. Appl. Phys. Lett. 107, 262601 (2015).

Murch, K. W., Weber, S. J., Macklin, C. & Siddiqi, I. Observing single quantum trajectories of a superconducting quantum bit. Nature 502, 211 (2013).

Majer, J. et al. Coupling superconducting qubits via a cavity bus. Nature 449, 443 (2007).

Song, C. et al. Generation of multicomponent atomic Schrödinger cat states of up to 20 qubits. Science 365, 574 (2019).

Nielsen, M. A. & Chuang, I. L. Quantum Computation and Quantum Information (Cambridge University Press, Cambridge, 2010). https://doi.org/10.1017/CBO9780511976667

Zhao, P. et al. High-contrast ZZ interaction using superconducting qubits with opposite-sign anharmonicity. Phys. Rev. Lett. 125, 200503 (2020).

Ku, J. et al. Suppression of unwanted ZZ interactions in a hybrid two-qubit system. Phys. Rev. Lett. 125, 200504 (2020).

Kandala, A. et al. Demonstration of a high-fidelity CNOT gate for fixed-frequency transmons with engineered ZZ suppression. Phys. Rev. Lett. 127, 130501 (2021).

O’Malley, P. J. J. et al. Scalable quantum simulation of molecular energies. Phys. Rev. X 6, 031007 (2016).

Li, X. et al. Tunable coupler for realizing a controlled-phase gate with dynamically decoupled regime in a superconducting circuit. Phys. Rev. Appl. 14, 024070 (2020).

Collodo, M. C. et al. Implementation of conditional phase gates based on tunable ZZ interactions. Phys. Rev. Lett. 125, 240502 (2020).

Sung, Y. et al. Realization of high-fidelity CZ and ZZ-free iSWAP gates with a tunable coupler. Phys. Rev. X 11, 021058 (2021).

Place, A. P. M. et al. New material platform for superconducting transmon qubits with coherence times exceeding 0.3 milliseconds. Nat. Commun. 12, 1779 (2021).

Wang, C. et al. Towards practical quantum computers: transmon qubit with a lifetime approaching 0.5 milliseconds. npj Quantum Inf. 8, 3 (2022).

Bondarenko, D. & Feldmann, P. Quantum autoencoders to denoise quantum data. Phys. Rev. Lett. 124, 130502 (2020).

Pan, X. & Lu, Z. Deep quantum neural networks on a superconducting processor. Figshare Dataset. https://doi.org/10.6084/m9.figshare.22802501 (2023).

Pan, X. & Lu, Z. Deep quantum neural networks on a superconducting processor. Zenodo Database. https://zenodo.org/badge/latestdoi/552193072 (2023).

Acknowledgements

We thank Wenjie Jiang for helpful discussions. We acknowledge the support of the National Natural Science Foundation of China (Grants No. 92165209, No. 11925404, No. 11874235, No. 11874342, No. 11922411, No. 12061131011, No. T2225008, No. 12075128), the National Key Research and Development Program of China (Grant No. 2017YFA0304303), the Key-Area Research and Development Program of Guangdong Province (Grant No. 2020B0303030001), the Anhui Initiative in Quantum Information Technologies (AHY130200), the China Postdoctoral Science Foundation (BX2021167), and Grant No. 2019GQG1024 from the Institute for Guo Qiang, Tsinghua University. D.-L.D. also acknowledges additional support from the Shanghai Qi Zhi Institute.

Author information

Contributions

X.P. carried out the experiments and analyzed the data with the assistance of Z.H. and Y.X.; L.S. directed the experiments; Z.L. formalized the theoretical framework and performed the numerical simulations under the supervision of D.-L.D.; W.L. and C.-L.Z. provided theoretical support; W.C. fabricated the parametric amplifier; W.W. and X.P. designed the devices; X.P. fabricated the devices with the assistance of W.W., H.W., and Y.-P.S.; Z.H., W.C., and X.L. provided further experimental support; X.P., Z.L., W.L., C.-L.Z., D.-L.D., and L.S. wrote the manuscript with feedback from all authors.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks the anonymous reviewer(s) for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Pan, X., Lu, Z., Wang, W. et al. Deep quantum neural networks on a superconducting processor. Nat Commun 14, 4006 (2023). https://doi.org/10.1038/s41467-023-39785-8

DOI: https://doi.org/10.1038/s41467-023-39785-8
