Abstract

The recent theory of compressive sensing leverages upon the structure of signals to acquire them with much fewer measurements than was previously thought necessary and certainly well below the traditional Nyquist-Shannon sampling rate. However, most implementations developed to take advantage of this framework revolve around controlling the measurements with carefully engineered material or acquisition sequences. Instead, we use the natural randomness of wave propagation through multiply scattering media as an optimal and instantaneous compressive imaging mechanism. Waves reflected from an object are detected after propagation through a well-characterized complex medium. Each local measurement thus contains global information about the object, yielding a purely analog compressive sensing method. We experimentally demonstrate the effectiveness of the proposed approach for optical imaging by using a 300-micrometer thick layer of white paint as the compressive imaging device. Scattering media are thus promising candidates for designing efficient and compact compressive imagers.

Similar content being viewed by others

Introduction

Acquiring digital representations of physical objects - in other words, sampling them - was, for the last half of the 20th century, mostly governed by the Shannon-Nyquist theorem. In this framework, depicted in Fig. 1(a), a signal is acquired by N regularly-spaced samples whose sampling rate is equal to at least twice its bandwidth. However, this line of thought is thoroughly pessimistic since most signals and objects of interest are not only of limited bandwidth but also generally possess some additional structure1. For instance, images of natural scenes are composed of smooth surfaces and/or textures, separated by sharp edges.

Concept.

(a) Classical Nyquist-Shannon sampling, where the waves originating from the object, of size N, are captured by a dense array of M = N sensors. (b) The “Single Pixel Camera” concept, where the object is sampled by M successive random projections onto a single sensor using a digital multiplexer. (c) Imaging with a multiply scattering medium. The M sensors capture, in a parallel fashion, several random projections of the original object. In cases (b) and (c), sparse objects can be acquired with a low sensor density M/N < 1.

Recently, new mathematical results have emerged in the field of Compressive Sensing (or Compressed Sensing, CS in short) that introduce a paradigm shift in signal acquisition. It was indeed demonstrated by Donoho, Candès, Tao and Romberg2,3,4 that this additional structure could actually be exploited directly at the acquisition stage so as to provide a drastic reduction in the number of measurements without loss of reconstruction fidelity.

For CS to be efficient, the sampling must fulfill specific technical conditions that are hard to translate into practical design guidelines. In this respect, the most interesting argument featured very early on in2,3,4 is that a randomized sensing mechanism yields perfect reconstruction with high probability. As a matter of convenience, hardware designers have created physical systems that emulate this property. This way, each measurement gathers information from all parts of the object, in a controlled but pseudo-random fashion. Once this is achieved, CS theory provides good reconstruction guarantees.

In the past few years, several hardware implementations capable of performing such random compressive sampling were introduced5,6,7,8,9,10,11,12,13. In optics, these include the single pixel camera6, which is depicted in Fig. 1(b) and uses a digital array of micromirrors (abbreviated DMD) to sequentially reflect different random portions of the object onto a single photodetector. Other approaches include phase modulation with a spatial light modulator10, or a rotating optical diffuser13. The idea of random multiplexing for imaging has also been considered in other domains of wave propagation. CS holds much promise in areas where detectors are rather complicated and expensive such as the THz or far infrared. In this regards, there have been proposals to implement CS imaging procedures in the THz using random pre-fabricated masks5, DMD or SLM photo-generated contrast masks on semi-conductors slabs14 and efforts are also pursued on tunable metamaterial reflectors15. Recently, a carefully engineered metamaterial aperture was used to generate complex RF beams at different frequencies8.

However, these CS implementations come with some limitations. First, these devices include carefully engineered hardware designed to achieve randomization, via a DMD6, a metamaterial8 or a coded aperture11. Second, the acquisition time of most implementations can be large because they require the sequential generation of a large number of random patterns.

In this work, we replace such man-made emulated randomization by a natural multiply scattering material, as depicted in Fig. 1(c). Whereas scattering is usually seen as a time-varying nuisance, for instance when imaging through turbid media16, the recent results of wave control in stable complex material have largely demonstrated that it could also be exploited, for example so as to build focusing systems that beat their coherent counterparts in terms of resolution17,18. Such complex and stable materials are readily available in several frequency ranges -they were even coined in as one-way physical functions for hardware cryptography19. In the context of CS, such materials perform an efficient randomized multiplexing of the object into several sensors and hence appear as analog randomizers. The approach is applicable in a broad wavelength range and in many domains of wave propagation where scattering media are available. As such, this study is close in spirit to earlier approaches such as the random reference structure20, the random lens imager7, the metamaterial imager8, or the CS filters proposed in21 for microwave imaging. They all abandoned digitally controlled multiplexors as randomizers. Still, we go further in this direction and even drop the need for a designer to craft the randomizer.

Compressive sampling with multiply scattering material has several advantages. First, it has recently been shown that they have an optimal multiplexing power for coherent waves22, which consequently makes them optimal sensors within the CS paradigm. Second, these materials are often readily available and require very few engineering. In the domain of optics for example, we demonstrate one successful implementation using a 300 μm layer of Zinc Oxide (ZnO), which is essentially white paint. Third, contrarily to most aforementioned approaches, this sensing method provides the somewhat unique ability to take a scalable number of measurements in parallel, thus with a potential of strongly reducing acquisition time. In practice, if 500 samples are required to reconstruct a given image using CS principles, this imaging framework allows their acquisition at once on 500 independent sensors, whereas state-of-the-art systems such as the single pixel camera require a sequence of 500 random patterns on the DMD.

On practical grounds, the use of a multiply scattering material in CS raises several ideas that we consider in this study. First, the random multiplexing achieved through multiple scattering must be measured a posteriori, since it is no longer known a priori as in engineered random sensing. This calibration problem has been the topic of recent studies in the context of CS23 and we propose here a simple least squares calibration procedure that extends our previous work24,25. Second, the use of such a measured Transmission Matrix (TM) induces an inherent uncertainty in the sensing mechanism, that can be modeled as noise in the observations. As we show both through extensive simulations and actual experiments, this uncertainty is largely compensated by the use of adequate reconstruction techniques. In effect, the imager we propose almost matches the performance of idealized sub-Nyquist random sensing.

Theoretical background

In its simplest form, CS may be understood as a way to solve an underdetermined linear inverse problem. Let x be the object to image, understood as a N × 1 vector and let us suppose that x is only observed through its multiplication y by a known measurement matrix H, of dimension M × N, we have y = Hx. Each one of the M entries of y is thus the scalar product of the object with the corresponding row of H. When there are fewer measurements than the size of the object, i.e. M < N, it is impossible to recover x perfectly without further assumptions, since the problem has infinitely many solutions. However, if x is known to be sparse, meaning that only a few of its coefficients are nonzero (such as stars in astronomical images) and provided H is sufficiently random, x can still be recovered uniquely through sparse optimization techniques1.

In a signal processing framework, the notion of structure may also be embodied as sparsity in a known representation1. For example, most natural images are not sparse, yet often yield near-sparse representations in the wavelet domain. If the object x is known to have some sparse or near-sparse representation s in a known basis B (x = Bs), then it may again be possible to recover it from a few samples, by solving y = HBs, provided H and B obey some technical conditions such as incoherence1,2,3,4,26.

In practice, when trying to implement Compressive Sensing in a hardware device, fulfilling this incoherence requirement is nontrivial. It requires a way to deterministically scramble the information somewhere between the object and the sensors. Theory shows that an efficient way to do this is by using random measurement matrices H or HB2,3,4. Using such matrices, it can indeed be shown26 that the number of samples required to recover the object is mostly governed by its sparsity k, i.e. the number of its nonzero coefficients in the given basis. If the coefficients of the M × N measurement matrix are independent and identically distributed (i.i.d.) with respect to a Gaussian distribution, perfect reconstruction can be achieved with only  measurements27. Furthermore, many algorithms are available, for instance Orthogonal Matching Pursuit (OMP) or Lasso26,28, which can efficiently perform such reconstruction under sparsity constraints.

measurements27. Furthermore, many algorithms are available, for instance Orthogonal Matching Pursuit (OMP) or Lasso26,28, which can efficiently perform such reconstruction under sparsity constraints.

Using natural complex media as random sensing devices

Our approach is summarized in Fig. 1(c) and its implementation in an optical experiment is depicted in Fig. 2. The coherent waves originating from the object and entering the imaging system propagate through a multiply scattering medium. Within the imager, propagation produces a seemingly random and wavelength-dependent interference pattern called speckle on the sensors plane. The speckle figure is the result of the random phase variations imposed on the waves by the propagation within the multiply scattering sample29. Scattering, although the realization of a random process, is deterministic: for a given input and as long as the medium is stable, the interference speckle figure is fully determined and remains constant. In essence, the complex medium acts as a highly efficient analog multiplexer for light, with an input-output response characterized by its transmission-matrix24,25. We highlight the fact that the multiple scattering material is not understood here as a nuisance occurring between the object and the sensors, but rather as a desirable component of the imaging system itself. After propagation, sensing takes place using a limited number M < N of sensors.

Experimental setup for compressive imaging using multiply scattering medium.

Within the imaging device, waves coming from the object (i) go through a scattering material (ii) that efficiently multiplexes the information to all M sensors (iii). Provided the transmission matrix of the material has been estimated beforehand, reconstruction can be performed using only a limited number of sensors, potentially much lower than without the scattering material. In our optical scenario, the light coming from the object is displayed using a spatial light modulator.

Let x and y denote the N × 1 and M × 1 vectors gathering the value of the complex optical field at discrete positions before and after, respectively, the scattering material. It was confirmed experimentally24,25 that any particular output ym can be efficiently modeled as a linear function of the N complex values xn of the input optical field:

where the mixing factor  corresponds to the overall contribution of the input field xn into the output field ym. All these factors can be gathered into a complex matrix [H]mn = hmn called the Transmission Matrix (TM), which characterizes the action of the scattering material on the propagating waves between input and output. The medium hence produces a very complex but deterministic mixing of the input to the output, that can be understood as spatial multiplexing. This linear model, in the ideal noiseless case, can be written more concisely as:

corresponds to the overall contribution of the input field xn into the output field ym. All these factors can be gathered into a complex matrix [H]mn = hmn called the Transmission Matrix (TM), which characterizes the action of the scattering material on the propagating waves between input and output. The medium hence produces a very complex but deterministic mixing of the input to the output, that can be understood as spatial multiplexing. This linear model, in the ideal noiseless case, can be written more concisely as:

As can be seen, each of the M measurements of the output complex field may hence be considered as a scalar product between the input and the corresponding row of the TM. If multiply scattering materials have already been considered for the purpose of focusing, thus serving as perfect “opaque lenses”17,18, the main idea of the present study is to exploit them for compressive imaging. In other wavelength domains than optics, analogous configurations may be designed to achieve CS through multiple scattering. For instance, a collection of randomly packed metallic scatterers could be used as a multiply scattering media from the microwave domain up to the far infrared and the method proposed here could allow imaging at these frequencies with only a few sensors. A similar approach could be used to lower the number of sensors in 3D ultrasound imaging using CS through multiple scattering media.

In our optical experimental setup, we used a Spatial Light Modulator (an array of N = 1024 micromirrors, abbreviated as SLM) to calibrate the system and also to display various objects, using a monochromatic continuous wave laser as light source.

During a first calibration phase, which lasts a few minutes and needs to be performed only once, a series of controlled inputs x are emitted and the corresponding outputs y are measured. The TM can be estimated through a simple least-squares error procedure, which generalizes the method proposed in24,25, as detailed in the supplementary material below. In short, this calibration procedure benefits from an arbitrarily high number of measurements for calibration, which permits to better estimate the TM. It is important to note here that the need for calibration is the main disadvantage of this technique, compared to the more classical CS imagers based on pseudo-random projections, which have direct control on the TM. However, this calibration step here involves only standard least-squares estimation of the linear mapping between input and output of the scattering material24,25. In our experimental setup, the whole calibration is performed in less than 1 minute. While we here rely on optical holography to extract complex amplitude from intensity measurements, the TM measurement can be implemented in a simplified way for other types of waves (RF, acoustics, Terahertz), where direct access to the field amplitude is possible. It may not be so straightforward in practical situations when only the intensity of the output is available and where more sophisticated methods30 would be required.

After calibration, the scattering medium can be used to perform CS, using this estimated TM as a measurement matrix. Note that, in our experiment, the same SLM used for calibration is then used as a display to generate the sparse objects. This approach is not restrictive as any sparse optical field or other device capable of modulating light could equivalently be used at this stage. As demonstrated in our results section, using such an estimated TM instead of a perfectly controlled one does yield very good results all the same, while bringing important advantages such as ease of implementation and acquisition speed. Hence, even if the proposed methodology does require the introduction of a supplementary calibration step, this step comes at the cost of a few mandatory supplementary calibration measurements rather than at the cost of performance. This claim is further developed in our results and methods sections.

For a TM to be efficient in a CS setup, it has to correctly scramble the information from all of its inputs to each of its outputs. It is known that a matrix with i.i.d Gaussian entries is an excellent candidate for CS31 and the TM of optical multiple scattering materials were recently shown to be well approximated by such matrices22. The rationale for this fact is that the transmission of light through an opaque lens leads to a very large number of independent scattering events. Even if the total transmission matrix that links the whole input field to the transmitted field shows some non-trivial mesoscopic correlations32, recent studies proved that these correlations vanish when controlling/measuring only a random partition of input/output channels22. In our experimental setup, the number of sensors is very small compared to the total number of output speckle grains and we can hence safely disregard any mesoscopic correlation.

Several previous studies24,25 have shown on experimental grounds that TMs were close to i.i.d. Gaussians by considering their spectral behavior, i.e. the distribution of their eigenvalues. As a consistency check, we also verified that our experimentally-obtained TMs are close to Gaussian i.i.d., through a complementary study of their coherence, which is the maximal correlation between their columns with values between 0 and 1. Among all the features that were proposed to characterize a matrix as a good candidate for CS33,34,35, coherence plays a special role because it is easily computed and because a low coherence is sufficient for good recovery performance in CS applications36,37,38,39, even if it is not necessary40. In Fig. 3(a), we display one actual TM obtained in our experiments. In Fig. 3(b), we compare its coherence with the one of randomly generated i.i.d. Gaussian matrices. The similar behavior confirms the results and discussions given in22,24, but also suggests that TMs are good candidates in a CS setup, as will be demonstrated below.

Experimentally measured Transmission Matrix (TM).

(a) TM for a multiply scattering material as obtained in our experimental study. (b) Coherence of sensing matrices as a function of their number M of rows, for both a randomly generated Gaussian i.i.d. matrix and an actual experimental TM. Coherence gives the maximal colinearity between the columns of a matrix. The lower, the better is the matrix for CS.

Results and discussion

During our experiments, we measured the reconstruction performance of the imaging system, when the image to reconstruct is composed of N = 32 × 32 = 1024 pixels, using a varying number M of measurements. In practice, we use a CCD array, out of which we select M pixels. These are chosen at random in the array, with an exclusion distance equal to the coherence length of the speckle, in order to ensure uncorrelated measurements. Details of the experiments can be found in the methods section below. For each sparsity level k between 1 and N, a sparse object with only k nonzero coefficients was displayed under P = 3 different random phase illuminations [Since our SLM can only do phase modulation, we used a simple trick as in41 to simulate actual amplitude objects, based on two phase-modulated measurements. See the supplementary material on this point.]. These virtual measurements may, without loss of generality, be replaced by the use of an amplitude light modulator and are anyways replaced by the actual object to image in a real use-case. The corresponding outputs were then measured and fed into a Multiple Measurement Vector (MMV) sparse recovery algorithm20. For each sparsity level, 32 such independent experiments were performed.

Reconstruction of the sparse objects was then achieved numerically using the M × P measurements only. The TM used for reconstruction is the one estimated in the calibration phase. In order to demonstrate the efficiency and the simplicity of the proposed system, we used the simple Multichannel Orthogonal Matching Pursuit algorithm42 for MMV reconstruction. It should be noted that more specialized algorithms may lead to better performance and should be considered in the future.

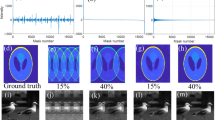

Examples of actual reconstructions performed by our analog compressive sampler are shown on Fig. 4. As can be seen, near-perfect reconstruction of complex sparse patterns occur with sensor density ratios M/N that are much smaller than in classical Shannon-Nyquist sampling (M = N). An important feature of the approach is its universality: reconstruction is also efficient for objects that are sparse in the Fourier domain.

Imaging results.

Examples of signals, which are sparse either in the Fourier or canonical domain (left), along with their actual experimental reconstruction using a varying number of measurements. (a) Fourier-sparse object (b–c) canonical sparse objects. In (b), small squares are the original object and large squares are the reconstruction. In all cases, the original object contains 1024 pixels and is thus sampled with a number M of sensors much smaller than N. A, B, C and D images are correspondingly represented in the phase transition diagram of Fig. 5.

The performance of the proposed compressive sampler for all sampling and sparsity rates of interest is summarized on Fig. 5, which is the main result of this paper. It gives the probability of successful reconstruction displayed as a function of the sensor density M/N and relative sparsity k/M. Each point of this surface is the average reconstruction performance for real measurements over approximately 50 independent trials. As can be seen, this experimental diagram exhibits a clear “phase transition” from complete failure to systematic success. This thorough experimental study largely confirms that the proposed methodology for sampling using scattering media indeed reaches very competitive sampling rates that are far below the Shannon-Nyquist traditional scheme.

Probability of success for CS recovery.

Experimental probability of successful recovery (between 0 and 1) for a k-sparse image of N pixels via M measurements. On the x-axis is displayed the sensor density ratio M/N. A ratio of 1 corresponds to the Nyquist rate, meaning that all correct reconstructions found in this figure beat traditional sampling. On the y-axis is displayed the relative sparsity ratio k/M. A clear phase transition between failure and success is observable, which is close to that obtained by simulations (dashed line), where exactly the same experimental protocol was conducted with simulated noisy observations both for calibration and imaging. Boxes A, B, C and D locate the corresponding examples of Fig. 4. Each point in this 50 × 50 grid is the average performance over approximately 50 independent measurements. This figure hence summarizes the results of more than 105 actual physical experiments.

The phase transition observed on Fig. 5 appears to be slightly different from the ones described in the literature31,43. The main reason for this fact is that this diagram concerns reconstruction under P = 3 Multiple Measurement Vectors (MMV) instead of the classical Single Measurement Vector (SMV) case. This choice, which proves important in practice, is motivated by the fact that MMV is much more robust to noise than SMV44. In order to compare our experimental performance to its numerical counterpart, we performed a numerical experiment whose 50% success-rate transition curve is represented by the dashed green line. The transmission matrix is taken as i.i.d Gaussian. The measurement matrix is estimated with the same calibration procedure as in the physical experiment. Each measurement, during calibration and imaging, is contaminated by additive Gaussian noise of variance 3%. Performance obtained in this idealized situation is close to that obtained in our practical setup, for this level of additive noise.

Conclusion

In this study, we have demonstrated that a simple natural layer of multiply scattering material can be used to successfully perform compressive sensing. The compressive imager relies on scattering theory to optimally dispatch information from the object to all measurement sensors, shifting the complexity of devising CS hardware from the design, fabrication and electronic control to a simple calibration procedure.

As in any hardware implementation of CS, experimental noise is an important issue limiting the performance, especially since it impacts the measurement matrix. Using baseline sparse reconstruction algorithms along with standard least-squares calibration techniques, we demonstrated that successful reconstruction exhibits a clear phase transition between failure and success even at very competitive sampling rates. The proposed methodology can be considered to be a truly analog compressive sampler and as such, benefits from both theoretical elegance and ease of implementation.

The imaging system we introduced has many advantageous features. First, it enables the implementation of an extremely flat imaging device with few detectors. Second, this imaging methodology can be implemented in practice with very few conventional lenses, as in45 for instance. This is a strong point for implementation in domains outside optics where it is hard to fabricate lenses. Indeed, the concept presented here can directly be used in other domains of optics such as holography, but also in other disciplines such as THz, RF or ultrasound imaging. Third, similarly to recent work on metamaterials apertures, non-resonant scattering materials work over a wide frequency range and have a strongly frequency-dependent response. Fourth, unlike most current compressive sensing hardware, this system gives access to many compressive measurements in a parallel fashion, potentially speeding up acquisition. These advantages come at the simple cost of a calibration step, which amounts to estimate the Transmission Matrix of the scattering material considered. As we demonstrated, this can be achieved by simple input/output mapping techniques such as linear least-squares and needs to be done only once.

While conventional direct imaging can be thought as an embarrassingly parallel process that does not exploit the structure of the scene, in contrast most current CS hardware (such as the single pixel camera) require a heavily sequential process that does take into account the structure of the scene. Our approach borrows from the best of both acquisition processes, in that it is both embarrassingly parallel and takes into account the structure of the scene.

References

Elad, M. Sparse and redundant representations: from theory to applications in signal and image processing. (Springer, 2010).

Candès, E. J. Compressive sampling. Proceedings of the International Congress of Mathematicians: Madrid, August 22–30, 2006: invited lectures. 2006.

Candès, E. J., Romberg, J. & Tao, T. Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information. IEEE T. Inform. Theory 52, 489 (2006).

Donoho, D. L. Compressed sensing. IEEE T. Inform. Theory 52, 1289 (2006).

Chan, W. L. et al. A single-pixel terahertz imaging system based on compressed sensing. Appl. Phys. Lett. 93, 12105 (2008).

Duarte, M. F. et al. Single-pixel imaging via compressive sampling. IEEE T. Signal Process 25, 83–91 (2008).

Fergus, R., Torralba, A. & Freeman, W. T. Random lens imaging. (Tech. Rep. Massachusetts Institute of Technology, 2006).

Hunt, J. et al. Metamaterial Apertures for Computational Imaging. Science 339, 310–313 (2013).

Jacques, L. et al. CMOS compressed imaging by random convolution. Int. Conf. Acoust. Spee. 1113–1116 (2009).

Katz, O. et al. Compressive ghost imaging. Appl. Phys. Lett. 95, 131110 (2009).

Levin, A. et al. Image and depth from a conventional camera with a coded aperture. ACM T. Graphics 26, 70 (2007).

Potuluri, P., Xu, M. & Brady, D. Imaging with random 3D reference structures. Opt. Express. 11, 2134–2141 (2003).

Zhao, C. et al. Ghost imaging lidar via sparsity constraints. Appl. Phys. Lett. 101, 141123 (2012).

Shrekenhamer, D., Watts, C. M. & Padilla, W. J. Terahertz single pixel imaging with an optically controlled dynamic spatial light modulator. Opt. Express 21, 12507–12518 (2013).

Chen, H. T. et al. Active terahertz metamaterial devices. Nature 444, 597–600 (2006).

Tyson, R. Principles of adaptive optics. (CRC Press, 2010).

Vellekoop, I. M. & Mosk, A. P. Focusing coherent light through opaque strongly scattering media. Opt. lett 32, 2309–2311 (2007).

Vellekoop, I. M., Lagendijk, A. & Mosk, A. P. Exploiting disorder for perfect focusing. Nat. Photon. 4, 320–322 (2010).

Pappu, R., Recht, R., Taylor, J. & Gershenfeld, N. Physical one-way functions. Science 297, 2026–2030 (2002).

Cotter, S. F. Sparse solutions to linear inverse problems with multiple measurement vectors. IEEE T. Signal Process 53, 2477–2488 (2005).

Li, L. & Li, F. Compressive Sensing Based Robust Signal Sampling. Appl. Phys. Research 4, 30 (2012).

Goetschy, A. & Stone, A. D. Filtering Random Matrices: The Effect of Incomplete Channel Control in Multiple Scattering. Phys. Rev. Lett. 111, 063901 (2013).

Gribonval, R., Chardon, G. & Daudet, L. Blind calibration for compressed sensing by convex optimization. Int. Conf. Acoust. Spee. 2713–2716 (2012).

Popoff, S. M. et al. Measuring the transmission matrix in optics: an approach to the study and control of light propagation in disordered media. Phys. Rev. Lett. 104, 100601 (2010).

Popoff, S. M. et al. Controlling light through optical disordered media: transmission matrix approach. New J. Phys. 13, 123021 (2011).

Eldar, Y. C. & Kutyniok, G. eds. Compressed sensing: theory and applications. (Cambridge University Press, 2012).

Candès, E. J. & Wakin, M. B. An introduction to compressive sampling. IEEE Signal Process. 25, 21–30 (2008).

Tibshirani, R. Regression shrinkage and selection via the lasso. J. Roy. Stat. Soc. B Met. 267–288 (1996).

Goodman, J. W. Introduction to Fourier optics. (Roberts and Company Publishers, 2005).

Ohlsson, H. et al. Compressive phase retrieval from squared output measurements via semidefinite programming. arXiv, 1111.6323 (2011).

Donoho, D. & Tanner, J. Precise undersampling theorems. Proc. IEEE 98, 913–924 (2010).

Akkermans, E. Mesoscopic physics of electrons and photons (Cambridge University Press, 2007).

Candès, E. J. The restricted isometry property and its implications for compressed sensing. C. R. Math. 346, 589–592 (2008).

Cohen, A., Dahmen, W. & DeVore, R. Compressed sensing and best k-term approximation. J. Am. Math. Soc. 22, 211–231 (2009).

Yin, W. & Zhang, Y. Extracting salient features from less data via l1-minimization. SIAG/OPT Views-and-News 19, 11–19 (2008).

Donoho, D. L. & Huo, X. Uncertainty principles and ideal atomic decomposition. IEEE T. Inf. Theory 47, 2845–2862 (2001).

Tropp, J. A. Greed is good: Algorithmic results for sparse approximation. IEEE T. Inf. Theory 50, 2231–2242 (2004).

Tropp, J. A. & Gilbert, A. C. Signal recovery from random measurements via orthogonal matching pursuit. IEEE T. Inf. Theory 53, 4655–4666 (2007).

Wang, J. & Shim, B. A Simple Proof of the Mutual Incoherence Condition for Orthogonal Matching Pursuit. arXiv, 1105.4408 (2011).

Candès, E. J. et al. Compressed sensing with coherent and redundant dictionaries. Appl. Comput. Harmon. A. 31, 59–73 (2011).

Popoff, S. M. et al. “Image transmission through an opaque material.”. Nat. Comms. 1, 81 (2010).

Gribonval, R. et al. Atoms of all channels, unite! Average case analysis of multi-channel sparse recovery using greedy algorithms. J. Four. Anal. Appl. 14, 655–687 (2008).

Donoho, D. & Tanner, J. Observed universality of phase transitions in high-dimensional geometry, with implications for modern data analysis and signal processing. Philos. T Roy. S. A 367, 4273–4293 (2009).

Eldar, Y. & Rauhut, H. Average case analysis of multichannel sparse recovery using convex relaxation. IEEE T. inform. Theory 56, 505–519 (2010).

Katz, O. et al. Non-invasive real-time imaging through scattering layers and around corners via speckle correlations. arXiv, 1403.3316 (2014).

Acknowledgements

This work was supported by the European Research Council (Grant N°278025), the Emergence(s) program from the City of Paris and LABEX WIFI (Laboratory of Excellence within the French Program “Investments for the Future”) under references ANR-10-LABX-24 and ANR-10-IDEX-0001-02 PSL*. G.C. is supported by the Austrian Science Fund (FWF) START-project FLAME (Y 551-N13). O.K. is supported by the Marie Curie intra-European fellowship for career development (IEF) and the Rothschild fellowship. I.C. would like to thank the Physics arXiv Blog for drawing his attention to opaque lenses and Ms. Iris Carron for her typesetting support.

Author information

Authors and Affiliations

Contributions

L.D., S.G., I.C. proposed the use of a multiply scattering material for compressive sensing. S.P., G.L. and S.G. designed the initial experimental setup. G.C. performed initial numerical analysis. D.M., O.K. and S.G. discussed the experimental implementation. D.M. and A.L. performed the experiments and A.L. performed the numerical analysis with the help of L.D. All authors contributed to discussing the results and writing the manuscript.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Electronic supplementary material

Supplementary Information

Supplementary Information

Rights and permissions

This work is licensed under a Creative Commons Attribution 4.0 International License. The images or other third party material in this article are included in the article's Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder in order to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/

About this article

Cite this article

Liutkus, A., Martina, D., Popoff, S. et al. Imaging With Nature: Compressive Imaging Using a Multiply Scattering Medium. Sci Rep 4, 5552 (2014). https://doi.org/10.1038/srep05552

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/srep05552

This article is cited by

-

Adaptive deep learning network for image reconstruction of compressed sensing

Signal, Image and Video Processing (2024)

-

Image sensing with multilayer nonlinear optical neural networks

Nature Photonics (2023)

-

Deep Memory-Augmented Proximal Unrolling Network for Compressive Sensing

International Journal of Computer Vision (2023)

-

Soft threshold iteration-based anti-noise compressed sensing image reconstruction network

Signal, Image and Video Processing (2023)

-

Intelligent metasurfaces: control, communication and computing

eLight (2022)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.