Abstract

The chaotic Boltzmann machine proposed in this paper is a chaotic pseudo-billiard system that works as a Boltzmann machine. Chaotic Boltzmann machines are shown numerically to have computing abilities comparable to conventional (stochastic) Boltzmann machines. Since no randomness is required, efficient hardware implementation is expected. Moreover, the ferromagnetic phase transition of the Ising model is shown to be characterised by the largest Lyapunov exponent of the proposed system. In general, a method to relate probabilistic models to nonlinear dynamics by derandomising Gibbs sampling is presented.

Introduction

Boltzmann machines are a type of neural network model composed of stochastic elements. Since they were proposed more than twenty years ago, it has been demonstrated that they are capable of solving various problems such as optimisation problems1, repairing degraded images2 and learning interdependency among random variables3. For optimisation problems, convergence to the global optimum is guaranteed, provided that the system is annealed at a sufficiently slow rate2. For learning problems, parameter values for a Boltzmann machine that represent data distribution can be learned by a simple algorithm, which is theoretically sound and insightful3.

Although they are theoretically important, it is difficult to apply Boltzmann machines in their original form to real-world problems. The main difficulty is their computational cost. For optimisation problems, optimality is assured theoretically only for an extremely slow annealing schedule2, which is impractical. For learning problems, the learning algorithm requires lengthy computation to obtain equilibrium statistics4,5.

However, these difficulties do not necessarily mean that Boltzmann machines are not practically important. The capability of solving optimisation problems without prior knowledge of the problem structures is highly attractive. As for learning problems, many attempts have been made to increase computation speeds at the expense of the learning capability by restricting network structures and by using approximations in the learning algorithm. Even restricted versions of Boltzmann machines, such as restricted Boltzmann machines6 and deep Boltzmann machines7, combined with approximate learning algorithms, such as mean-field approximation4,5 and contrastive divergence8, achieve state-of-the-art performance among various machine learning methods. This fact highlights the potential capabilities of Boltzmann machines.

Hardware implementation is one approach to enhance the computation speed of Boltzmann machines without degrading their capability. One of the difficulties in this approach is the generation of good random numbers, which is necessary to realise stochastic behaviour of the component units. Although thermal noise exists in electronic circuits, it is difficult to utilise this noise to precisely simulate the probabilistic behaviour of Boltzmann machines. Therefore, pseudo-random number generators9,10 or mechanisms to utilise physically controllable randomness, such as quantum effects11,12,13, are required in the circuit. Other previous studies on hardware implementation avoid randomness by using mean-field approximations.

In this paper, we propose a deterministic system that works as a Boltzmann machine and show numerically that the system has computing abilities comparable to a Boltzmann machine. The apparently stochastic behaviour of the system is realised, without any use of random numbers, by chaotic dynamics that emerges from pseudo billiard dynamics14. Although the numerical simulation of the system is not efficient when calculated sequentially on ordinary digital computers, the proposed approach allows possibly efficient hardware implementation of a Boltzmann machine as a parallel distributed system. More generally, our approach presents a novel mechanism for biologically inspired information processing and analogue computing15,16,17,18,19,20.

Results

Chaotic Boltzmann machines

A Boltzmann machine is a stochastic system composed of binary units interacting with each other. Let $s_i \in \{0, 1\}$ be the state of the $i$th unit in a Boltzmann machine composed of $N$ units. Interactions between the units are represented by a symmetric matrix $(w_{ij})$ whose diagonal elements are all zero. The states of the units are updated randomly as follows. First, a unit is selected randomly; let $i$ be the index of the selected unit. The input $z_i$ to the $i$th unit is calculated as follows:

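$$z_i = \sum_{j=1}^{N} w_{ij} s_j + b_i, \tag{1}$$

where $b_i$ represents a constant bias applied to the $i$th unit. The state of the $i$th unit is updated according to the probability

$$P[s_i = 1] = \frac{1}{1 + \exp(-z_i/T)}, \tag{2}$$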

where $T$ denotes the temperature of the system. By repeating this procedure for randomly selected units, the state $\mathbf{s} = (s_1, \ldots, s_N)$ of a Boltzmann machine is updated sequentially. The obtained states eventually follow the Gibbs distribution

$$P[\mathbf{s}] = \frac{1}{Z} \exp\left(-\frac{E(\mathbf{s})}{T}\right), \tag{3}$$

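where the global energy $E$ and the partition function $Z$ are given by

$$E(\mathbf{s}) = -\sum_{i<j} w_{ij} s_i s_j - \sum_{i} b_i s_i, \tag{4}$$

$$Z = \sum_{\mathbf{s}} \exp\left(-\frac{E(\mathbf{s})}{T}\right). \tag{5}$$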

The procedure of state updates can be understood as Gibbs sampling, or as a Markov-chain Monte Carlo method.
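The update procedure described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function names (`gibbs_step`, `energy`, `sample_on_frequency`) are hypothetical, the energy is assumed to take the standard pairwise form with each pair counted once, and only the standard library is used.

```python
import math
import random

def gibbs_step(s, w, b, T, rng):
    """Update one randomly selected unit in place (one Gibbs-sampling step)."""
    n = len(s)
    i = rng.randrange(n)                                  # select a unit at random
    z = sum(w[i][j] * s[j] for j in range(n)) + b[i]      # input z_i, equation (1)
    p_on = 1.0 / (1.0 + math.exp(-z / T))                 # P[s_i = 1], equation (2)
    s[i] = 1 if rng.random() < p_on else 0

def energy(s, w, b):
    """Global energy E(s); ASSUMPTION: each pair (i, j) is counted once."""
    n = len(s)
    pair = sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(i + 1, n))
    return -pair - sum(b[i] * s[i] for i in range(n))

def sample_on_frequency(w, b, T, sweeps, seed=0):
    """Empirical frequency of s_i = 1 per unit over sweeps * N update steps."""
    rng = random.Random(seed)
    n = len(b)
    s = [0] * n
    counts = [0] * n
    steps = sweeps * n
    for _ in range(steps):
        gibbs_step(s, w, b, T, rng)
        for i in range(n):
            counts[i] += s[i]
    return [c / steps for c in counts]
```

For two uncoupled units with biases 0 and 2 at $T = 1$, the observed frequencies should approach 0.5 and $1/(1 + e^{-2}) \approx 0.88$, which is a convenient sanity check before adding interactions.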

Here, we propose a deterministic system that can simulate Boltzmann machines without any use of random numbers. In the system, the $i$th unit is associated with a state variable $x_i \in [0, 1]$, which we call the internal state of the unit. The internal state $x_i$ evolves according to the following differential equation

$$\frac{dx_i}{dt} = (1 - 2s_i)\left(1 + \exp\left(\frac{(1 - 2s_i)\, z_i}{T}\right)\right). \tag{6}$$

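The states $\mathbf{s}$ of the units are updated by a deterministic rule, instead of the probabilistic rule described in equation (2). Specifically, the state $s_i \in \{0, 1\}$ of the $i$th unit changes when and only when $x_i$ reaches 0 or 1 as follows:

$$s_i \leftarrow 0 \ \text{when } x_i = 0, \qquad s_i \leftarrow 1 \ \text{when } x_i = 1. \tag{7}$$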

Note that regardless of the states of other units, the right-hand side of equation (6) is positive for $s_i = 0$ and negative for $s_i = 1$. Therefore, the internal state $x_i$ continues to oscillate between 0 and 1.

The differential equation (6) is designed from equation (2) so that it satisfies $|dx_i/dt| \propto P[s_i]^{-1}$. Let us assume that the states of other units are fixed. Then, according to equation (6), it takes $(1 + \exp(z_i/T))^{-1}$ unit time to go up from $x_i = 0$ to 1 and $(1 + \exp(-z_i/T))^{-1}$ unit time to go down from $x_i = 1$ to 0. Hence, $x_i$ oscillates between 0 and 1 with a period of 1 unit time and so does $s_i$. Therefore, the probability of observing $s_i = 1$ is given by $(1 + \exp(-z_i/T))^{-1}$, which is consistent with equation (2). However, since the states of other units in the system actually change, it is not theoretically assured that the probability of observing a certain state in the proposed system is exactly the same as in the original Boltzmann machine. In this paper, we present numerical evidence that the proposed system actually works as a Boltzmann machine.

As indicated by equations (6) and (7), the internal state $\mathbf{x} = (x_1, \ldots, x_N)$ goes straight in the hypercube $[0, 1]^N$ and its direction changes only at the boundary. Therefore, the dynamics can be regarded as a pseudo billiard14 in the hypercube. The billiard dynamics induces a Poincaré map on the boundary of the hypercube. Since it exhibits chaotic behaviour as shown below, we call this system a chaotic Boltzmann machine. Note that the system dynamics can be computed by simple arithmetic calculations, because the right-hand side of equation (6) is piecewise constant.
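Because the velocities are piecewise constant, the dynamics can be advanced exactly from one switching event to the next. The following is a rough event-driven sketch under stated assumptions, not the authors' code: the function name `simulate_chaotic_bm` is hypothetical, all units are assumed to start in state 0, and the velocity is taken to be $\pm(1 + \exp(\pm z_i/T))$, as implied by the travel times given in the text.

```python
import math

def simulate_chaotic_bm(w, b, T, x0, t_end):
    """Event-driven integration; returns the fraction of time with s_i = 1.

    ASSUMPTIONS: every unit starts with s_i = 0, and t_end > 0.
    """
    n = len(b)
    s = [0] * n
    x = list(x0)                       # internal states in [0, 1]
    on_time = [0.0] * n
    t = 0.0
    while True:
        # Velocities stay constant until the next switch (equation (6)).
        v = []
        for i in range(n):
            z = sum(w[i][j] * s[j] for j in range(n)) + b[i]
            sign = 1 - 2 * s[i]        # +1 when s_i = 0, -1 when s_i = 1
            v.append(sign * (1.0 + math.exp(sign * z / T)))
        # Time each unit needs to reach the boundary it is heading towards.
        hit = [max(0.0, (1.0 - x[i]) if s[i] == 0 else x[i]) / abs(v[i])
               for i in range(n)]
        k = min(range(n), key=hit.__getitem__)
        remaining = t_end - t
        dt = min(hit[k], remaining)
        for i in range(n):
            on_time[i] += s[i] * dt
            x[i] += v[i] * dt
        if hit[k] >= remaining:
            break                      # simulation horizon reached
        t += dt
        s[k] = 1 - s[k]                # switching rule (equation (7))
        x[k] = float(s[k])             # snap to the boundary just reached
    return [ot / t_end for ot in on_time]
```

For a single isolated unit with $b_1 = 1$ and $T = 1$, the returned fraction over an integer number of periods should equal $1/(1 + e^{-1}) \approx 0.731$, matching equation (2). Note that every event recomputes all $N$ velocities, which is the cost the Discussion attributes to sequential simulation.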

Application to combinatorial optimisation problems

As an example of solving combinatorial optimisation problems, we applied the chaotic Boltzmann machine to maximum cut problems. Given an undirected network of $N$ nodes whose edge weights are represented by a symmetric weight matrix $(d_{ij})$, the maximum cut problem is to find a subset $S \subset \{1, \ldots, N\}$ that maximises $\sum_{i \in S,\, j \notin S} d_{ij}$. To solve this problem, the parameter values of the Boltzmann machines are set as $b_i = \sum_j d_{ij}$ and $w_{ij} = -2d_{ij}$. When the energy is minimised, the solution of the maximum cut problem is given by the set of nodes taking on the state $s_i = 1$. We used the problem sets provided along with the Biq Mac solver21, for which exact solutions are also provided.
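The parameter mapping can be checked directly on a small graph: with these parameters the energy of a state equals the negative of the corresponding cut weight, so minimising the energy maximises the cut. The sketch below uses hypothetical helper names, assumes a zero diagonal in $(d_{ij})$, and assumes the standard pairwise energy with each pair counted once.

```python
import itertools

def maxcut_to_boltzmann(d):
    """Map a symmetric edge-weight matrix d (zero diagonal) to (w, b)."""
    n = len(d)
    b = [sum(d[i]) for i in range(n)]                          # b_i = sum_j d_ij
    w = [[-2 * d[i][j] for j in range(n)] for i in range(n)]   # w_ij = -2 d_ij
    return w, b

def energy(s, w, b):
    """Pairwise energy, each pair (i, j) counted once (assumed form)."""
    n = len(s)
    pair = sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(i + 1, n))
    return -pair - sum(b[i] * s[i] for i in range(n))

def cut_weight(d, s):
    """Total weight of edges between S = {i : s_i = 1} and its complement."""
    n = len(d)
    return sum(d[i][j] for i in range(n) for j in range(n)
               if s[i] == 1 and s[j] == 0)

def energy_matches_cut(d):
    """Brute-force check that E(s) == -cut(s) for all states (small n only)."""
    w, b = maxcut_to_boltzmann(d)
    return all(energy(list(s), w, b) == -cut_weight(d, s)
               for s in itertools.product((0, 1), repeat=len(d)))
```

The brute-force check enumerates all $2^N$ states, so it is only meant for verifying the mapping on toy instances, not for solving the benchmark problems.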

For comparison, we used conventional (stochastic) Boltzmann machines having the same parameter values. We regard N iterations of Gibbs sampling as one unit time. Note that this does not mean that one unit time of a stochastic Boltzmann machine corresponds to that of a chaotic one. Since the mechanisms are different, the comparison of time is not straightforward.

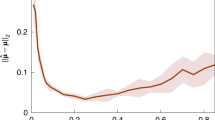

The typical behaviour of chaotic and stochastic Boltzmann machines for a maximum cut problem is shown in Fig. 1. The temperature is fixed at a large value, $T = \max_i \sum_j |w_{ij}|$. The sampled energy values appear to fluctuate similarly in both types of models (Figs. 1(a) and (b)). The histograms of the sampled energy values coincide with each other (Fig. 1(c)). Figure 2 shows the largest Lyapunov exponent per unit time calculated for the Poincaré map induced on the boundary of the hypercube $[0, 1]^N$. The Lyapunov exponent always takes positive values, thereby indicating chaos.

Behaviour of Boltzmann machines for a maximum cut problem (g05_100.0).

Typical time evolutions of (a) chaotic and (b) stochastic Boltzmann machines for T = 128. Energy values are sampled every 10 unit time. (c) Histograms of the energy values sampled 100,000 times from chaotic (thin red line) and stochastic (thick pink line) Boltzmann machines.

In order to solve the problem using Boltzmann machines, simulated annealing is applied, as shown in Fig. 3. The initial temperature is set to the same value as in Fig. 1 and it is multiplied by 0.95 every $N/4$ unit time. Annealing is terminated when the same energy value is sampled ten times consecutively. Table 1 shows statistics of the solutions obtained by chaotic and stochastic Boltzmann machines. For each of the datasets, simulated annealing is performed 100 times. For all the problems with $N \le 100$, optimal solutions are obtained. Overall, fairly good solutions (within 2–3% of the optima) are obtained without excessive tuning of the annealing schedule. The point to be noted here is that there is no significant difference between the chaotic and stochastic Boltzmann machines. A more detailed comparison of the results would not be meaningful, because the time scales of the two systems cannot be compared directly.
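The annealing schedule described above might be sketched as follows for the stochastic machine. Several details are assumptions not stated in the text: the energy is sampled once per unit time, the run starts from a random state, a `max_time` guard is added, and the helper names (`p_on`, `anneal`) are hypothetical.

```python
import math
import random

def energy(s, w, b):
    """Pairwise energy, each pair (i, j) counted once (assumed form)."""
    n = len(s)
    pair = sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(i + 1, n))
    return -pair - sum(b[i] * s[i] for i in range(n))

def p_on(z, T):
    """Numerically safe logistic function 1 / (1 + exp(-z / T))."""
    a = z / T
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)
    return e / (1.0 + e)

def anneal(w, b, seed=0, cooling=0.95, repeats=10, max_time=10000):
    """Simulated annealing with Gibbs sampling; returns (state, energy)."""
    rng = random.Random(seed)
    n = len(b)
    s = [rng.randrange(2) for _ in range(n)]          # ASSUMPTION: random start
    # Initial temperature T = max_i sum_j |w_ij|; fall back to 1 if all zero.
    T = max(sum(abs(wij) for wij in row) for row in w) or 1.0
    cool_every = max(1, n // 4)                       # cool every N/4 unit time
    last, streak, e = None, 0, energy(s, w, b)
    for t in range(1, max_time + 1):
        for _ in range(n):                            # N Gibbs updates = 1 unit time
            i = rng.randrange(n)
            z = sum(w[i][j] * s[j] for j in range(n)) + b[i]
            s[i] = 1 if rng.random() < p_on(z, T) else 0
        e = energy(s, w, b)                           # ASSUMPTION: one sample per unit time
        streak = streak + 1 if e == last else 1
        last = e
        if streak >= repeats:                         # same energy ten times in a row
            break
        if t % cool_every == 0:
            T *= cooling
    return s, e
```

The exact-equality test on the energy is reliable for integer edge weights; with floating-point weights a tolerance would be a safer choice.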

It should be noted that there have been many studies that utilise chaotic dynamics for solving combinatorial optimisation problems22,23,24,25,26. We have presented novel chaotic dynamics that can be related to stochastic approaches to combinatorial optimisation problems.

Application to the Ising model

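For application of Boltzmann machines to learning problems, a simple learning rule3 has been derived as gradient descent on the Kullback-Leibler divergence between the data distribution and the equilibrium distribution as follows:

$$\Delta w_{ij} \propto \langle s_i s_j \rangle_{\mathrm{data}} - \langle s_i s_j \rangle_{\mathrm{model}}, \tag{8}$$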

where $\langle s_i s_j \rangle_{\mathrm{data}}$ and $\langle s_i s_j \rangle_{\mathrm{model}}$ denote the expected values of $s_i s_j$ at the equilibrium state of the system in which the units are clamped to data vectors and unclamped, respectively. Therefore, for the learning process to work, it is essential to obtain faithful samples from equilibrium distributions.
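As a small illustration of this rule, the weight increment can be estimated from two sets of samples. The sketch assumes a fully visible network, so that the clamped statistics are simply averages over the data vectors; the learning rate `lr` and the function names are hypothetical.

```python
def pair_means(samples):
    """Empirical averages <s_i s_j> over a list of binary state vectors."""
    n = len(samples[0])
    m = len(samples)
    return [[sum(s[i] * s[j] for s in samples) / m for j in range(n)]
            for i in range(n)]

def boltzmann_weight_update(data_samples, model_samples, lr=0.1):
    """Return delta_w with delta_w[i][j] = lr * (<s_i s_j>_data - <s_i s_j>_model).

    The diagonal is kept at zero because w_ii = 0 in a Boltzmann machine.
    """
    c_data = pair_means(data_samples)
    c_model = pair_means(model_samples)
    n = len(c_data)
    return [[0.0 if i == j else lr * (c_data[i][j] - c_model[i][j])
             for j in range(n)]
            for i in range(n)]
```

Here `model_samples` would come from running the machine freely, whether by Gibbs sampling or by the chaotic dynamics, which is why the quality of the equilibrium samples directly limits the quality of the update.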

To evaluate the applicability of the chaotic Boltzmann machines to such problems, we start from a simple example, the Ising model on a two-dimensional lattice, which has been extensively investigated. The Ising model is a simple model of ferromagnetism and it can be regarded as a Boltzmann machine whose connections are limited to only neighbouring nodes in the lattices. Despite the simplicity of the model, it exhibits rich behaviour including phase transition with critical behaviour.

The Hamiltonian of the Ising model is given by

where summation is taken over every adjacent pair of sites on the two-dimensional lattice. Note that here we use $\sigma_i \in \{+1, -1\}$, instead of $\{0, 1\}$, following the convention in statistical physics. The differential equation of the chaotic Ising model used is given as follows:

which is designed essentially in the same way as equation (6).
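The form of these two equations can be sketched from standard conventions. The following assumes a uniform ferromagnetic coupling constant $J > 0$ and $k_B = 1$ (neither is named in the text), writes $h_i$ for the local field (a symbol introduced here), and adopts the sign convention that $\sigma_i = -1$ plays the role of $s_i = 0$; the authors' exact notation may differ.

```latex
\begin{align}
  H &= -J \sum_{\langle i,j \rangle} \sigma_i \sigma_j ,
  \qquad h_i = J \sum_{j \in \partial i} \sigma_j , \\
  P[\sigma_i \mid \sigma_{\setminus i}]
    &= \frac{1}{1 + \exp(-2 \sigma_i h_i / T)} , \\
  \frac{dx_i}{dt}
    &= -\sigma_i \left( 1 + \exp\!\left( -\frac{2 \sigma_i h_i}{T} \right) \right) .
\end{align}
```

With neighbouring spins held fixed, $x_i$ then rises for $(1 + \exp(2h_i/T))^{-1}$ unit time while $\sigma_i = -1$ and falls for $(1 + \exp(-2h_i/T))^{-1}$ unit time while $\sigma_i = +1$. The two durations sum to one unit time and equal the conditional probabilities of the respective spin values, in the same way as for equation (6).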

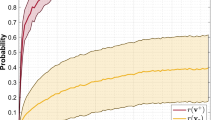

Figure 4 shows snapshots of the chaotic and stochastic Ising models. There appears to be no difference between the two models. As observed from Fig. 5, there is no difference in the following thermodynamic statistics: the average absolute magnetisation $\langle |m| \rangle = \langle |\sum_i \sigma_i| \rangle / N$ and the magnetic susceptibility $\chi = N(\langle m^2 \rangle - \langle |m| \rangle^2)$.
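Both statistics are straightforward to estimate from sampled spin configurations. The sketch below follows the definitions in the text, with $m = \sum_i \sigma_i / N$; the function name is hypothetical and the input is assumed to be a list of equally sized lists of $\pm 1$ values.

```python
def magnetisation_stats(configs):
    """Return (<|m|>, chi) with chi = N * (<m^2> - <|m|>^2)."""
    n = len(configs[0])                        # number of sites N
    ms = [sum(c) / n for c in configs]         # magnetisation per site of each sample
    mean_abs = sum(abs(m) for m in ms) / len(ms)
    mean_sq = sum(m * m for m in ms) / len(ms)
    return mean_abs, n * (mean_sq - mean_abs ** 2)
```

A set of fully ordered samples gives $\langle |m| \rangle = 1$ and $\chi = 0$, whereas samples whose magnetisation fluctuates strongly, as near the transition, give a larger $\chi$.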

Statistics of chaotic (thin red lines) and stochastic (thick pink lines) Ising models on a two-dimensional lattice of size 24 × 24 with a periodic boundary condition.

(a) The average absolute magnetisation per site 〈|m|〉 and (b) the magnetic susceptibility per site χ. The statistics are calculated during 100,000 unit time for 100 different initial values. (c) The largest Lyapunov exponent λ of the chaotic Ising model. Both the magnetic susceptibility and the largest Lyapunov exponent exhibit peaks corresponding to the ferromagnetic phase transition.

The largest Lyapunov exponent is always positive in this case also. It should be noted that the largest Lyapunov exponents exhibit peaks in both Figs. 2 and 5(c). These peaks are analogous to those observed in neuron models coupled by gap junctions27,28. In Fig. 5, the peak corresponds to the ferromagnetic phase transition. This result is consistent with a previous study that also relates the peak to the synchronisation phase transition in the Kuramoto model29.

It should be noted that some deterministic discrete-time models that can simulate the Ising model have been proposed on the basis of the ideas of microcanonical ensembles30,31,32 and coupled map lattices33,34,35,36,37. The chaotic Ising model proposed in this paper has continuous-time billiard dynamics that is totally different from these two series of models.

Discussion

It is intuitively understandable from equation (6) that a unit in a chaotic Boltzmann machine takes the states $s_i = 0$ and 1 with the same probability as Gibbs sampling (equation (2)), because $x_i$ moves at a speed inversely proportional to the conditional probability as $|dx_i/dt| \propto P[s_i \mid \mathbf{s} \setminus s_i]^{-1}$. However, considering all the interactions in the system, it is not trivial at all that the billiard ball moves following the joint probability of the Gibbs distribution (equation (3)). Although the results presented in this paper provide numerical evidence, further theoretical investigation of the dynamics from the viewpoints of both nonlinear dynamics and statistical mechanics is necessary. It is also important to characterise the differences in dynamical aspects of stochastic and chaotic Boltzmann machines. Chaotic Boltzmann machines can be a good example of a system whose macroscopic behaviour appears to be that of an equilibrium system, even though the microscopic behaviour is far from equilibrium36.

We have described a method to derandomise the Gibbs sampling of Boltzmann machines by using billiard dynamics. This approach seems applicable to a wider class of probabilistic models such as Markov random fields and graphical models. It can be extended to multi-valued random variables in the following two possible ways. One way is to extend the phase space of $x_i$ to $[0, S_i)$, where $S_i$ denotes the number of values of the $i$th unit. The internal state $x_i$ moves unidirectionally from 0 to $S_i$ and the state $s_i$ is determined by $s_i = \lfloor x_i \rfloor$. If the endpoints of the phase space are regarded as identical, $s_i$ takes values of $0, 1, \ldots, S_i - 1$ cyclically. Another way is to use a switched arrival system38 composed of $S_i$ tanks. In this case, $s_i$ switches probabilistically due to the chaotic behaviour of switched arrival systems; however, the system dynamics becomes non-invertible.

The numerical simulation of chaotic Boltzmann machines is slower than that of stochastic Boltzmann machines, because every time s is updated, the probabilities for all the units have to be calculated. As for stochastic Boltzmann machines, calculation is necessary only for the selected unit. Hence, it is impractical to use chaotic Boltzmann machines, instead of stochastic ones, on ordinary digital computers.

However, stochastic Boltzmann machines are not amenable to parallelisation. Even if all the nodes can communicate quickly with each other, state updates of stochastic Boltzmann machines must be carried out one by one, sequentially and exclusively; simultaneous updates of multiple units are not allowed except for special cases such as restricted Boltzmann machines and the Ising model, for which parallelisation depending on the specific network structures is possible. Due to the parallel distributed manner of information processing in the nervous system, neural network models appear to be easily parallelisable; however, this is not the case for stochastic Boltzmann machines. On the other hand, chaotic Boltzmann machines are defined as a dynamical system in which units evolve in parallel. Moreover, no random numbers are required. Therefore, efficient hardware implementation as a parallel distributed system can be expected. Although there may be unexpected difficulties in hardware implementation of chaotic Boltzmann machines, this paper at least presents a novel mechanism for implementing Boltzmann machines that inherits the parallelism of neural networks.

Compared with stochastic Boltzmann machines, the units in chaotic Boltzmann machines are more like real neurons. The behaviour of internal states can be regarded as oscillators that interact with each other through discretised signals. The units are analogous to neurons in the brain that also show oscillatory behaviour and interact through digital signals, namely, neuronal spikes. Actually, a chaotic Boltzmann machine is similar to a neural network model of simplified hysteresis neurons39.

The functional roles of chaos in the brain have been discussed extensively40,41,42,43,44,45,46. Chaotic neural networks41 have been proposed as a simple neural network model that exhibits chaotic behaviour and the chaotic dynamics has been shown to be effective when used for associative memory networks43,47 and for solving optimisation problems22,23. We have presented here another mechanism that induces chaotic behaviour in neural networks.

We have also shown that chaos in hybrid dynamical systems can be utilised for computing. Herding systems48 are an example that utilises the complex behaviour of piecewise isometries for machine learning; note that time discretisation of chaotic Boltzmann machines yields piecewise isometries. In general, hybrid dynamical systems with rich nonlinear dynamics are expected to form the possible components of future computers, as discussed in ref. 49. Chaotic Boltzmann machines can be a suitable candidate if they can be implemented efficiently in microelectronic circuits. Moreover, because a similar billiard dynamics can be implemented as a model of city traffic by using a switched flow system50, it may be possible to devise a physical mechanism that can realise chaotic Boltzmann machines. From the viewpoint of thermodynamics and reversible computing51,52,53, it is intriguing that the billiard dynamics of the chaotic Boltzmann machines is invertible.

In conclusion, we have proposed a chaotic dynamical system that works as a Boltzmann machine. We have shown numerically that chaotic Boltzmann machines have computing abilities comparable to the conventional ones. The proposed approach allows possibly efficient hardware implementation of a Boltzmann machine as a parallel distributed system. Moreover, chaotic Boltzmann machines are not merely an implementation of Boltzmann machines, as they have implications in various research fields including nonlinear dynamics, statistical physics, thermodynamics, computing, machine learning and neuroscience.

Methods

See ref. 50 for the derivation of the Poincaré map (spin-flip map) defined on the boundary of the hypercube.

References

Korst, J. H. M. & Aarts, E. H. L. Combinatorial optimization on a Boltzmann machine. J. Parallel Distribut. Comp. 6, 331–357 (1989).

Geman, S. & Geman, D. Stochastic relaxation, Gibbs distributions and the Bayesian restoration of images. IEEE Trans. Pattern Anal. Mach. Intell. 6, 721–741 (1984).

Ackley, D. H., Hinton, G. E. & Sejnowski, T. J. A learning algorithm for Boltzmann machines. Cognitive Sci. 9, 147–169 (1985).

Peterson, C. & Anderson, J. R. A mean field theory learning algorithm for neural networks. Complex Syst. 1, 995–1019 (1987).

Hinton, G. E. Deterministic Boltzmann learning performs steepest descent in weight-space. Neural Comput. 1, 143–150 (1989).

Smolensky, P. Information processing in dynamical systems: foundations of harmony theory. in Parallel Distributed Processing: Explorations in the Microstructure of Cognition, Vol. 1 (ed. Rumelhart D. E., et al.) Chap. 6, 194–281 (MIT Press, Cambridge, 1986).

Salakhutdinov, R. & Hinton, G. Deep Boltzmann machines. Proceedings of the 12th International Conference on Artificial Intelligence and Statistics 448–455 (2009).

Hinton, G. E. Training products of experts by minimizing contrastive divergence. Neural Comput. 14, 1771–1800 (2002).

Skubiszewski, M. An exact hardware implementation of the Boltzmann machine. Proceedings of the Fourth IEEE Symposium on Parallel and Distributed Processing 107–110 (1992).

Ly, D. L. & Chow, P. High-performance reconfigurable hardware architecture for restricted Boltzmann machines. IEEE Trans. Neural Netw. 21, 1780–1792 (2010).

Akazawa, M. & Amemiya, Y. Boltzmann machine neuron circuit using single-electron tunneling. Appl. Phys. Lett. 70, 670–672 (1997).

Wu, N.-J., Shibata, N. & Amemiya, Y. Boltzmann machine neuron device using quantum-coupled single electrons. Appl. Phys. Lett. 72, 3214–3216 (1998).

Yamada, T., Akazawa, M., Asai, T. & Amemiya, Y. Boltzmann machine neural network devices using single-electron tunnelling. Nanotech. 12, 60–67 (2001).

Blank, M. & Bunimovich, L. Switched flow systems: pseudo billiard dynamics. Dyn. Syst. 19, 359–370 (2004).

Branicky, M. S. Analog computation with continuous ODEs. Proceedings of IEEE Workshop on Physics and Computation 265–274 (1994).

Siegelmann, H. T. Computation beyond the Turing limit. Science 268, 545–548 (1995).

Moore, C. Recursion theory on the reals and continuous-time computation. Theor. Comp. Sci. 162, 23–44 (1996).

Blum, L., Cucker, F., Shub, M. & Smale, S. Complexity and real computation (Springer, New York, 1997).

Aihara, K. Chaos engineering and its application to parallel distributed processing with chaotic neural networks. Proc. IEEE 90, 919–930 (2002).

Horio, Y. & Aihara, K. Analog computation through high-dimensional physical chaotic neuro-dynamics. Physica D 237, 1215–1225 (2008).

Rendl, F., Rinaldi, G. & Wiegele, A. Solving Max-Cut to optimality by intersecting semidefinite and polyhedral relaxations. Math. Program. 121, 307–335 (2010).

Hasegawa, M., Ikeguchi, T. & Aihara, K. Combination of chaotic neuro-dynamics with the 2-opt algorithm to solve traveling salesman problems. Phys. Rev. Lett. 79, 2344–2347 (1997).

Chen, L. & Aihara, K. Global searching ability of chaotic neural networks. IEEE Trans. Circuits Syst. I 46, 974–993 (1999).

Elser, V., Rankenburg, I. & Thibault, P. Searching with iterated maps. Proc. Natl. Acad. Sci. USA 104, 418–423 (2007).

Ercsey-Ravasz, M. & Toroczkai, Z. Optimization hardness as transient chaos in an analog approach to constraint satisfaction. Nature Phys. 7, 966–970 (2011).

Ercsey-Ravasz, M. & Toroczkai, Z. The chaos within Sudoku. Sci. Rep. 2, 725 (2012).

Schweighofer, N., Doya, K., Fukai, H., Chiron, J. V., Furukawa, T. & Kawato, M. Chaos may enhance information transmission in the inferior olive. Proc. Natl. Acad. Sci. USA 101, 4655–4660 (2004).

Takemoto, T., Kohno, T. & Aihara, K. Circuit implementation and dynamics of a two-dimensional MOSFET neuron model. Int. J. Bif. Chaos 17, 459–508 (2007).

Miritello, G., Pluchino, A. & Rapisarda, A. Phase transitions and chaos in long-range models of coupled oscillators. Europhys. Lett. 85, 10007 (2009).

Creutz, M. Microcanonical Monte Carlo simulation. Phys. Rev. Lett. 50, 1411–1414 (1983).

Vichniac, G. Y. Simulating physics with cellular automata. Physica 10D, 96–116 (1984).

Creutz, M. Deterministic Ising dynamics. Ann. Phys. 167, 62–72 (1986).

Kaneko, K. Pattern dynamics in spatiotemporal chaos. Physica D 34, 1–41 (1989).

Sakaguchi, H. Phase transitions in coupled Bernoulli maps. Prog. Theor. Phys. 80, 7–12 (1988).

Miller, J. & Huse, D. A. Macroscopic equilibrium from microscopic irreversibility in a chaotic coupled-map lattice. Phys. Rev. E 48, 2528–2535 (1993).

Egolf, D. A. Equilibrium regained: From nonequilibrium chaos to statistical mechanics. Science 287, 101–104 (2000).

Just, W. Phase transitions in coupled map lattices and in associated probabilistic cellular automata. Phys. Rev. E 74, 046209 (2006).

Chase, C., Serrano, J. & Ramadge, P. J. Periodicity and chaos from switched flow systems: contrasting examples of discretely controlled continuous systems. IEEE Trans. Automat. Contr. 38, 70–83 (1993).

Jin'no, K., Nakamura, T. & Saito, T. Analysis of bifurcation phenomena in a 3-cells hysteresis neural network. IEEE Trans. Circuits Syst. I 46, 851–857 (1999).

Skarda, C. A. & Freeman, W. J. How brains make chaos in order to make sense of the world. Behav. Brain Sci. 10, 161–195 (1987).

Aihara, K., Takabe, T. & Toyoda, M. Chaotic neural networks. Phys. Lett. A 144, 333–340 (1990).

Elbert, T., Ray, W. J., Kowalik, Z. J., Skinner, J. E., Graf, K. E. & Birbaumer, N. Chaos and physiology: deterministic chaos in excitable cell assemblies. Physiol. Rev. 74, 1–47 (1994).

Tsuda, I. Toward an interpretation of dynamic neural activity in terms of chaotic dynamical systems. Behav. Brain. Sci. 24, 793–810 (2001).

Faure, P. & Korn, H. Is there chaos in the brain? I. Concepts of nonlinear dynamics and methods of investigation. C. R. Acad. Sci. III 324, 773–793 (2001).

Korn, H. & Faure, P. Is there chaos in the brain? II. Experimental evidence and related models. C. R. Biol. 326, 787–840 (2003).

Toyoizumi, T. & Abbott, L. F. Beyond the edge of chaos: Amplification and temporal integration by recurrent networks in the chaotic regime. Phys. Rev. E 84, 051908 (2011).

Adachi, M. & Aihara, K. Associative dynamics in a chaotic neural network. Neural Netw. 10, 83–98 (1997).

Welling, M. Herding dynamic weights to learn. Proceedings of the 26th Annual International Conference on Machine Learning 1121–1128 (ACM Press, New York, 2009).

Aihara, K. & Suzuki, H. Theory of hybrid dynamical systems and its applications to biological and medical systems. Phil. Trans. R. Soc. A 368, 4893–4914 (2010).

Suzuki, H., Imura, J. & Aihara, K. Chaotic Ising-like dynamics in traffic signals. Sci. Rep. 3, 1127 (2013).

Landauer, R. Irreversibility and Heat Generation in the Computing Process. IBM J. Res. Dev. 5, 183–191 (1961).

Bennett, C. H. Logical reversibility of computation. IBM J. Res. Dev. 17, 525–532 (1973).

Bennett, C. H. The thermodynamics of computation—a review. Int. J. Theor. Phys. 21, 905–940 (1982).

Acknowledgements

This research is supported by the Aihara Innovative Mathematical Modelling Project, the Japan Society for the Promotion of Science (JSPS) through the “Funding Program for World-Leading Innovative R&D on Science and Technology (FIRST Program)”, initiated by the Council for Science and Technology Policy (CSTP).

Author information

Contributions

H.S. conceived of the model and analysed it. All the authors designed the research and wrote and reviewed the manuscript, especially from the viewpoints of hybrid dynamical systems (H.S.), hybrid control systems (J.I.), circuit implementation (Y.H.) and analogue computing (K.A. and Y.H.).

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Rights and permissions

This work is licensed under a Creative Commons Attribution 3.0 Unported License. To view a copy of this license, visit http://creativecommons.org/licenses/by/3.0/

About this article

Cite this article

Suzuki, H., Imura, Ji., Horio, Y. et al. Chaotic Boltzmann machines. Sci Rep 3, 1610 (2013). https://doi.org/10.1038/srep01610

DOI: https://doi.org/10.1038/srep01610
