Abstract

The aim of this research was to evaluate the ability to switch attention and selectively attend to relevant information in children (10–15 years) with persistent listening difficulties in noisy environments. A wide battery of clinical tests indicated that children with complaints of listening difficulties had otherwise normal hearing sensitivity and auditory processing skills. Here we show that these children are markedly slower to switch their attention compared to their age-matched peers. The results suggest poor attention switching, lack of response inhibition and/or poor listening effort consistent with a predominantly top-down (central) information processing deficit. A deficit in the ability to switch attention across talkers would provide the basis for this otherwise hidden listening disability, especially in noisy environments involving multiple talkers such as classrooms.


Introduction

Listening to and understanding a single talker in the presence of other talkers or distracters requires adequate hearing sensitivity, processing of spectral (frequency and intensity) and temporal (time) cues, separation of the information into coherent streams, and selective attention to the relevant talker while ignoring the distracters1. Selective auditory attention and re-orientation in a noisy environment is a basic yet complex behavior2,3,4. Most of our everyday listening experiences are dynamic, with sources and information changing constantly in time and space. The listener therefore needs to orient attention when relevant information occurs and to rapidly re-orient attention from one stream of information to another as the situation demands2.

It has also been suggested that the deficits in auditory processing skills and speech perception in noise most often observed in children with a history of recurrent otitis media (middle ear infection) with effusion (OME)5 are associated with poor attention abilities6. We focused our research on the attentional mechanisms in this population to gain further insight into the underlying cause of their listening difficulties in background noise.

Auditory attention can involve both top-down and bottom-up processing, depending on the type and demands of a listening task7,8,9. A task that requires voluntary selection of targets amongst distracters would involve top-down (cognitive) control, whereas one requiring involuntary focus of attention due to factors such as salience would recruit bottom-up (sensory) processing resources10. From a functional perspective, listening to speech in a noisy background requires a listener to remain alert and responsive to relevant cues (intrinsic and phasic alertness), orient attentional focus to important or salient signals (orienting) and selectively focus attention on the sounds of interest while ignoring the distracters (selective attention)11,12. There may also be a need to focus attention on two or more signals simultaneously (divided attention) and/or to disengage and switch attentional focus between multiple sources of information based on relevance or salience (attention switching/re-orientation)13,14.

Selective auditory attention in the time domain is especially important where speech and noise sources overlap in space and listeners must constantly switch attentional focus in time15. A number of studies have shown that tone detection in background masking noise improves significantly when the temporal interval of target occurrence is expected or cued16,17,18,19. An extension of this notion of temporal selective attention is the time required for subjects to re-orient their attention after attending to the expected time window. This has been studied in vision, where the temporal re-orientation time ranged from 200 to 500 ms20. However, to our knowledge, it has yet to be examined in audition.

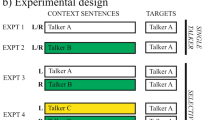

In this study, we designed a task to examine the relative roles of top-down and bottom-up control of attention and the time taken to re-orient attention in the auditory domain. Based on a combination of the multi-probe signal method21 and Posner's cueing paradigm22, the method involved priming a target signal at a specific time interval in a stimulus sequence (temporal epoch) with a cue, followed by frequent presentations of the target at the cued epoch. This ensured that attention was focused on the expected epoch. To identify attention-specific effects, targets were also presented infrequently at unexpected epochs in addition to the expected interval. Importantly, we also included catch trials to allow bias correction and sensitivity analysis. Here, we used a target identification task involving the discrimination and identification of a target syllable within a string of five syllables in the presence of two-talker speech babble (see Figure 1). The duration of the five temporal windows (epochs) was based on each subject's individual reaction time, measured via button-press responses in a control experiment. This tied the epoch duration to each subject's own response speed, while also providing a means to quantify response accuracy over time and allowing us to model the pattern of attentional re-orientation. Additionally, by placing the priming cue and the expected epoch at either the first or the last stimulus window, we were able to gain insights into the subject's auditory selective attention abilities.

Figure 1. A time-domain view of the stimulus presented in a test trial within an experimental block (“Early” condition), in which the target was presented frequently and cued at the first temporal epoch.

(A) – Cue tone (2500 Hz); (B) – Target (/da/), validly cued, occurring in 60% of trials at this epoch; (C) – Two-talker babble (female); (D) – Target (/da/), invalidly cued, occurring in 5% of trials at each of these epochs (20% in total).

Performance benchmarks were collected from two control groups (adults and children) with normal hearing sensitivity and no reported listening difficulty. We then applied this paradigm to a third (experimental) group of children who presented with persistent listening difficulties in noise. Notably, apart from parental and teacher concerns about their listening and a concomitant medical history of recurrent OME, this group of children performed similarly to the control children on a wide range of clinical tests of hearing sensitivity and auditory processing. In addition to the standard test battery recommended by the American Academy of Audiology (2010)23, they were examined on further tests (see Table 1) that ruled out deficits in peripheral hearing, auditory short-term memory, auditory sustained attention and auditory processing (see Table 2).

Previous studies, albeit using shorter observation windows than the current experiment, have demonstrated reduced sensitivity to targets outside a certain time window around an expected epoch16,18. Detecting targets that occur earlier than expected has been shown to involve involuntary shifts of attention requiring bottom-up processing resources, whereas detecting targets that occur later than expected involves voluntary disengagement and switching of attention from the expected temporal epoch, requiring top-down processing resources9,10. Furthermore, we anticipated a gradual improvement in sensitivity over time at the unexpected epochs following an epoch at which a target was expected but not presented24. We used the difference in sensitivity for identifying expected versus unexpected targets presented at the first epoch as a measure of selective attention, and the time taken to recover sensitivity for the unexpected targets, relative to the expected epoch, as a measure of attention switching. All participants were tested in two conditions: an “Early” condition, in which the target syllable occurred frequently (60%) in Epoch 1, and a “Late” condition, in which it occurred frequently in the last epoch (Epoch 5). Thus, in the “Early” condition the target occurred infrequently at the unexpected Epochs 2–5, while the converse was true for the “Late” condition, where target occurrence at Epochs 1–4 was unexpected. The “Early” condition allowed us to examine voluntary attention re-orientation mechanisms, which are distinct from the involuntary attentional processes probed by the “Late” condition25.

To our knowledge, this is the first investigation and demonstration of temporal attentional re-orientation in children. Most importantly, the results indicate a significantly longer attentional re-orientation time in children who reported persistent listening difficulties and had a history of recurrent OME than in an age-matched control group.

Results

Adults and children with no listening difficulty

Early condition

We examined subjects with normal hearing and no reported listening difficulties (12 adults and 12 children) and observed several distinct patterns in hit and false alarm rates. Overall, hit rates at the expected epoch were considerably higher than those at the unexpected epochs in children but not in adults (Figure 2, blue and green bars). For the children in the “Early” condition, hit rates dropped substantially immediately after Epoch 1 (from 0.82 ± 0.01 to 0.39 ± 0.07) and then gradually improved, consistent with a reorientation/re-preparation process24 (Figure 2A, blue and green bars), reaching 0.69 ± 0.05 in Epoch 5. This did not occur for the adult subjects, whose hit rates showed no notable drop after the expected epoch, remaining at 0.72 ± 0.06 at Epoch 2 (0.51 ± 0.01 seconds, see Methods). The considerable reduction in hit rate for the normal children after the expected epoch, coupled with a relatively slow reorientation, meant that a notable difference in hit rate remained between Epoch 1 and Epoch 5, the last temporal window. To compare reorientation times between normal adults and children, we extrapolated the hit rates using a linear line of best fit (y = 0.089x + 0.22, adjusted R² = 0.81) and projected that normal children would regain sensitivity only at 3.32 ± 0.08 seconds, with a hit rate of 0.84 ± 0.18 (see Figure 2A). At the expected epoch (Epoch 1), there was no notable difference in hit rates between normal adults and children; however, the children committed considerably more false alarms (Figure 2B, blue and green bars): 0.17 ± 0.02 for children versus 0.06 ± 0.01 for adults, suggesting reduced sensitivity (see below for the d′ analysis).

Figure 2. Pooled (A) hit and (B) false alarm rates for target identification for the three groups in the “Early” condition across the five temporal epochs.

The blue bars represent data for the adult participants with no listening difficulty, the green bars for the children with no listening difficulty and the red bars for the children with listening difficulty. The green and red dashed lines in Figure 2A are the lines of best fit used to extrapolate the attention re-orientation time for children without and with listening difficulties, respectively. Error bars represent standard errors of the mean.

Late condition

In the “Late” condition (Figure 3), hit rates were substantially higher for the adults in all epochs, with consistently lower false alarm rates. Although there was a notable difference in hit rates between adults and children in Epoch 5, both groups performed above the 75% threshold, with adults reaching 0.94 ± 0.00 and children 0.87 ± 0.01. Comparing the Early and Late conditions, hit rates at the expected epoch showed a similar pattern. Adults had a higher hit rate in the “Late” condition, but a commensurate increase in false alarm rate was also observed, suggesting a similar level of sensitivity for target identification at the expected epoch across the two conditions. This was not true for children, however: their false alarm rate increased considerably in the Late condition (from 0.13 ± 0.02 to 0.46 ± 0.03), suggesting lower sensitivity. As in the “Early” condition, there was no notable difference between the false alarm rates for the unexpected epochs within each of the two groups (see Figures 2B and 3B, blue and green bars).

Figure 3. Pooled (A) hit and (B) false alarm rates for target identification for the three groups in the “Late” condition across the five temporal epochs.

The blue bars represent the data for the adult participants with no listening difficulty, the green bars for the children with no listening difficulty and the red bars for children with listening difficulty. Error bars represent standard errors of the mean.

Selective attention

The hit and false alarm results were summarized with a sensitivity (d′) analysis (see Methods and Supplement 1 for further details). As a measure of temporal selective attention ability24, we compared target identification sensitivity at the first temporal epoch when the target was expected there (“Early” condition) with when it was unexpected (“Late” condition). Sensitivity at the first epoch was notably higher in the “Early” condition (expected) than in the “Late” condition (unexpected), suggesting a marked effect of selectively focusing attention on the expected epoch for both groups of participants. We also compared sensitivity for identifying the target at the expected epochs in the Early and Late conditions (Figure 4). In the Early condition, normal adults had a sensitivity of 2.49 (95% CI [2.20, 2.75]), while normal children were considerably lower at 1.87 (95% CI [1.62, 2.11]). In the Late condition, normal adults had a sensitivity of 2.64 (95% CI [2.35, 2.91]), whereas normal children dropped to 1.23 (95% CI [1.01, 1.44]). In summary, sensitivity for the adults did not vary between conditions and was consistently considerably higher than that of normal children. In contrast, the normal children showed a notable decrease in sensitivity between the Early and Late conditions.

Figure 4. Pooled sensitivity (d′) for target identification at the expected epochs for the three groups in the “Early” and “Late” conditions.

The blue bars represent the data for the adult participants with no listening difficulty, the green bars for the children with no listening difficulty and the red bars for children with listening difficulty. Error bars represent the 95% confidence intervals. * – Substantial Difference; NS – Non-substantial difference.

Children with listening difficulties

Early condition

This group consisted of 12 children, age-matched (p > 0.05) to the children in the control group, who presented with persistent listening difficulties, especially in noisy environments (see Methods). Consistent with the results from the control children and from previous research16,18, hit rates for target identification were considerably higher when the target syllable occurred at the expected epoch and poorer elsewhere for all participants (see Figures 2A and 3A, red bars). A comparison between the experimental and control children showed no notable difference in hit rates at Epoch 1. However, children in the experimental group committed substantially more false alarms (0.41 ± 0.03, versus 0.17 ± 0.02 for normal children and 0.06 ± 0.01 for normal adults).

As in the control group of children, there was a substantial drop in pooled hit rate immediately after the expected epoch, from 0.79 ± 0.01 (Epoch 1) to 0.16 ± 0.05 (Epoch 2). Hit rates were also considerably lower at all the unexpected epochs than in the control group and the normal adults, reaching only 0.30 ± 0.05 at Epoch 5; however, a trend of recovery can still be seen, albeit at a much slower rate (Figure 2A). Again, we extrapolated the hit rates from Epochs 2 to 5 and estimated that the experimental group would recover their sensitivity at 9.47 ± 0.25 seconds, reaching a hit rate of 0.78 ± 0.27, a notable increase in duration compared with the control group.

Late condition

In the Late condition, the hit rate at the expected epoch was notably lower for the children in the experimental group. Interestingly, their false alarm rate at the expected epoch did not differ significantly from that in the “Early” condition, remaining at 0.47 ± 0.01, even though a substantial increase was observed for children in the control group.

Selective attention

As in the previous experiment, we observed considerably higher sensitivity for target identification at the expected epoch than at the unexpected epoch for both groups of children, suggesting that, like the control children, the children in the experimental group benefited from target expectancy when identifying the target. Further comparison of target identification sensitivity between the two groups at the expected epochs showed notably higher sensitivity for the control children in the “Early” condition, but no substantial difference between the two groups in the “Late” condition (see Figure 4).

Discussion

In these experiments, we used a modified probe-signal method to analyze the rate of recovery of attention and to extrapolate the time needed for subjects to regain attentional focus. In addition to segmenting each trial into equal response windows (epochs) based on each individual's minimal response times, we recorded false alarms to verify the validity of the task and to calculate sensitivity for target identification across the epochs. The catch trials also served to reduce the listeners' attentional preparation for targets presented at the unexpected epochs24. In the context of this paradigm, catch trials create uncertainty about whether a target will occur, which may relax the participants' state of preparation for responding, especially at the unexpected temporal epochs24. Although the proportion of catch trials in the current study was relatively small (20%), such dis-preparation due to catch trials has previously been shown to be an ‘all or none’ process, so that even a small percentage of catch trials is sufficient for the effect to be observed24. By combining a rigid response time window with hit and false alarm rates, we were able to derive bias-free sensitivity measures at the expected epochs.

We also found enhanced sensitivity for target identification at the expected versus unexpected epochs, indicating an ability to attend selectively to the target at the expected time interval in all three groups of participants. From the present data, however, we are unable to compare the magnitude or strength of selective attention between these groups.

While other studies have also shown a reduction in sensitivity after the expected epoch, here we also quantified the rate of recovery. Such recovery can be attributed to “re-preparation”, whereby the listener develops a new state of preparation across the unexpected epochs because no target occurred at the expected epoch24. Despite temporary disruptions in re-preparation caused by the catch trials, the participants gradually recovered their sensitivity. This recovery would require goal-driven mental effort from the listeners26, involving predominantly top-down voluntary control of the shift of attention across time25, with possibly some involuntary bottom-up processing10,24.

Interestingly, our adult participants had an essentially flat distribution of hit rates across the five epochs in the “Early” condition. Given the similarity in hit rates, adults may have reoriented faster than could be detected with a button-press response paradigm. The duration of the epochs was tailored to each individual based on the response times derived from a set of control experiments (see Methods); for adults, this was 360 ± 15.56 ms. These results suggest a relatively rapid attention switching time in the time domain for adults. This is consistent with earlier studies of recovery from the “attentional blink”, in which the detectability of the second target in a dual-target detection task was substantially reduced if it occurred within 200–500 ms of the first and improved thereafter27. This phenomenon has been observed in audition as well as vision20,28 and indicates that the listener's attention is captured by the first target and thus cannot rapidly switch to the next. While this may provide a tentative explanation for the results from the adult population, the auditory “attentional blink” has not been examined in children.

In contrast, the hit rates for normal children dropped significantly between Epoch 1 and Epoch 2 before increasing slowly through Epoch 5. A linear extrapolation projected a reorientation time of 3.32 ± 0.08 seconds to attain parity with the expected epoch, which is significantly slower than adult performance. To our knowledge, this is the first report to quantify differences in attentional reorientation between adults and children, and it suggests that this process continues to mature into adulthood.

Most significantly, when we applied this testing methodology to a cohort of children who reported persistent listening difficulties (Experimental group), their hit rates were lower, their false alarm rates higher and their temporal re-orientation times longer than those of the normal children and adults. A comparison of sensitivity at the expected epochs (Figure 4) clearly demonstrates this trend. Interestingly, d′ differed significantly between the Control and Experimental cohorts only in the “Early” condition; in the “Late” condition, the d′ of the Control cohort fell to the level of the Experimental subjects. The difference in hit rates between the cohorts could not account for such a drop in sensitivity. Rather, there was a highly significant difference in false alarm rates between the expected epochs for the children in the Control group, who committed far fewer false alarms in the “Early” condition. This suggests that the difference in the “Early” condition reflects the Experimental cohort's reduced ability to inhibit responses at the expected epoch, rather than a decrease in sensitivity of the Control group.

The substantially higher false alarm rate of the Experimental group at the expected epoch in the “Early” condition may be due to a combination of an excessive facilitation effect, caused by a reflexive shift of attention to the expected epoch, and poor response inhibition29. Moreover, the total number of false alarms across the five epochs was significantly higher for these subjects, suggesting a generally reduced ability to withhold responses in catch trials. Previous work has shown that such intentional or voluntary inhibitory control processes are vital in regulating the allocation of attention29,30. The poor response inhibition observed here may also be related to poorer working memory capacity31. This inability to intentionally inhibit the allocation of attention to irrelevant stimuli may increase the distractibility of these children, especially in noisy listening environments, and may thus partly explain their listening difficulties.

The results for the experimental group are consistent with the idea that children with persistent listening difficulties differ from their age-matched peers in the ability to rapidly shift attention. This difference could involve a combination of top-down and bottom-up processing deficits. Listening in a noisy background or a multi-talker environment, such as a group discussion, requires not only efficient peripheral hearing, auditory processing and memory but also rapid switching of the listener's attentional focus from one talker to another as the relevance of information changes32,33. This requires listening effort to attend to expected as well as unexpected sources of information, and the ability to inhibit responses to distracting stimuli. Deficits in any of these abilities may affect an individual's ability to listen effectively in a noisy or multi-talker situation. Previous brain imaging studies indicate the involvement of predominantly frontal and parietal cortical areas in attention switching, listening effort and response inhibition control, which further suggests a more central or top-down processing deficit in the experimental group tested in this study8,34,35,36.

Previous work involving children with listening difficulties has reported variable performance on psychoacoustic tasks intended to measure their auditory processing abilities. This variability has also been attributed to poor auditory attention, which is consistent with the findings reported here37,38. Interestingly, all the participants in our experimental group reported a history of recurrent otitis media. While the data do not speak directly to any definitive link with OME, it may be that, owing to the transient disruptions in hearing associated with recurrent OME39,40, the experimental group learnt to allocate most of their cognitive resources to selectively attending to expected information, leaving insufficient resources to switch their attention to unexpected stimuli. Future studies are needed to evaluate this hypothesis. Further research should also explore whether these attention switching deficits are specific to the auditory modality or reflect modality-independent general cognitive deficits.

This work has described an auditory attention switching deficit in a group of school-aged children with persistent listening difficulties in noisy environments. As the current set of standard clinical tests was unable to distinguish this group of listeners from normal controls, the test reported here may be a good candidate test for children with listening difficulties. As attention switching requires predominantly top-down control, the data are consistent with the suggestion that this deficit represents a more central pathology rather than a peripheral auditory processing deficit. Of considerable interest will be the capacity of training or practice regimes to help overcome this deficit. In a similar study, we are currently assessing children diagnosed with an auditory processing disorder to determine whether it is part of the broader spectrum of listening difficulties and to gain insights into its underlying cause.

Method

Participants

We examined three groups of participants: 12 normal adults (mean age 21.09 years, SD 3.52), 12 normal children (control group; mean age 12.5 years, SD 1.55) and 12 children with persistent listening difficulties (experimental group; mean age 11.38 years, SD 1.48). All subjects spoke Australian English as their first language, had normal hearing sensitivity and presented with no middle ear pathology. We ruled out auditory memory, sustained attention and processing deficits for all participants based on a comprehensive clinical test battery23,41 (see Tables 1 and 2). The scores of each normal child on the standardized clinical tests were within previously published norms42,43. All children in the experimental group presented with persistent listening difficulties, based on parental, teacher and participant reports of concerns about their listening abilities, especially in noisy environments.

Children with a history or formal diagnosis of attention deficit/hyperactivity disorder (ADHD) were excluded from the study. Furthermore, all children were tested on the auditory continuous performance task44, and there was no significant difference between the control and experimental groups (see Table 2); earlier studies have reported the continuous performance task as a screening test for ADHD45. Interestingly, the medical histories of the experimental group revealed recurrent (>2 episodes, Mean = 2.91, SD = 0.51) otitis media with effusion between the ages of 2 and 5 years, which was absent in the control group. The control and experimental groups were age matched (p > 0.05). Informed consent was obtained from all participants in accordance with procedures approved by the Human Research Ethics Committee at Macquarie University. For every child participant, care was taken to avoid fatigue and loss of motivation46 through constant positive reinforcement and by dividing the tests across multiple sessions within a span of 2 weeks.

The modified Multi-probe signal method

The multi-probe signal method examines the allocation of attentional resources through a subject's target detection sensitivity in expected and unexpected time windows. It focuses the subject's attention on the expected epoch by 1) presenting an auditory priming cue and 2) repeatedly presenting the target signal at the primed epoch. Target signals are then also presented at the unexpected temporal epochs, which allowed us to examine the subject's attention reorientation time by comparing target detection sensitivity across the time windows. Here, we examined five temporal epochs with the following target presentation ratio: 60% at the expected epoch, 5% at each of the four unexpected epochs and 20% catch trials. All participants were tested in two conditions: an “Early” condition, in which Epoch 1 was the expected epoch where the target syllable occurred frequently, and a “Late” condition, in which Epoch 5 was the expected epoch. This allowed us to compare voluntary endogenous attention re-orientation mechanisms (“Early”) with involuntary exogenous processes (“Late”)9,25.
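To make the target presentation ratio concrete, the sketch below builds the trial list for one test block in Python. It assumes, which the text does not state, that the 60/5/20 percentages apply to the 100 analysed trials of a block (its two 50-trial halves together), giving 60 expected-epoch targets, 5 targets at each unexpected epoch and 20 catch trials; a plain random shuffle stands in for the unspecified pseudo-random ordering. Each half is preceded by the 10 priming trials described under Stimuli and procedure. The function names are hypothetical.

```python
import random
from collections import Counter
from typing import List, Optional

N_EPOCHS = 5
CATCH = None  # a catch trial contains no target


def analysed_trials(expected_epoch: int, n_trials: int = 100) -> List[Optional[int]]:
    """Target epoch for each analysed trial (None marks a catch trial)."""
    n_expected = round(0.60 * n_trials)
    n_per_unexpected = round(0.05 * n_trials)
    n_catch = n_trials - n_expected - n_per_unexpected * (N_EPOCHS - 1)
    trials: List[Optional[int]] = [expected_epoch] * n_expected + [CATCH] * n_catch
    for epoch in range(1, N_EPOCHS + 1):
        if epoch != expected_epoch:
            trials += [epoch] * n_per_unexpected
    return trials


def build_block(expected_epoch: int, n_priming: int = 10, n_halves: int = 2,
                seed: Optional[int] = None) -> List[List[Optional[int]]]:
    """Shuffle the analysed trials and split them into halves, each led by
    priming trials that always place the target at the expected epoch."""
    rng = random.Random(seed)
    trials = analysed_trials(expected_epoch)
    rng.shuffle(trials)
    size = len(trials) // n_halves
    return [[expected_epoch] * n_priming + trials[h * size:(h + 1) * size]
            for h in range(n_halves)]


early = build_block(expected_epoch=1, seed=0)
print([len(half) for half in early])                    # [60, 60]
print(Counter(t for half in early for t in half[10:]))  # tally of analysed trials
```

Because the shuffle runs over the whole block, the unexpected targets are not guaranteed to be balanced across the two halves; a stricter pseudo-random scheme could shuffle each half separately.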

The duration of the epochs was set based on the subject's response time (RT) derived from a series of training trials (see below), where

This ensured a reasonably high level of test difficulty within the motor constraints of the participants. The mean inter-stimulus interval for each group of participants was: Adult: 360 ± 15.56 ms, Control group: 404.16 ± 13.84 ms, Experimental group: 410.83 ± 14.3 ms.

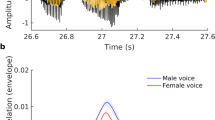

Stimuli and procedure

The experiments were performed in a darkened anechoic chamber. Stimuli consisted of five speech syllables (/da/, /pa/, /ga/, /ka/, /ba/), each 150 ms long and spoken in a male voice, presented from a loudspeaker (Audience A3) located 1 m directly in front of the subject (0° azimuth). Maskers in the form of female “babble speech” were presented from two loudspeakers (Tannoy V6) placed 1 m in front at ±45° azimuth at a constant level of 70 dB SPL. The target-to-masker ratio varied between subjects (by varying the intensity of the syllable train) to maintain a 75–85% target detection threshold. Speech syllables were used to emulate a natural listening environment within the constraints of linguistic load; an earlier study has shown that attention can be specifically allocated to a single syllable47. The stimulus duration was kept short to preserve a narrow listening window18.

Each trial began and ended with 800 ms of masker alone; in between, a pseudo-randomly ordered syllable train was presented, with /da/ as the target. A priming cue (100 ms, 2.5 kHz tone) always preceded the expected epoch by 100 ms (see Figure 1). Previous research suggests that such cues may facilitate detection and help orient attention involuntarily even if the listener is unaware of their presence22. The cue frequency was chosen on the premise that the key acoustic differences between the syllables used lie in the second and third formant transitions, so that the cue may enhance identification of the target syllable at the cued epoch48. The interval between the onsets of the cue and the syllable (stimulus onset asynchrony, SOA) was kept at 100 ms to avoid inhibition of return at longer SOAs, which is known to impair the speed and accuracy of target identification at the cued epoch49,50. Auditory stimuli were generated using Matlab (version 2009b, The MathWorks Inc., Natick, Massachusetts) on a PC connected to an external sound card (RME FireFace 400). The subject's task was to press the response button as quickly as possible when the target syllable /da/ was detected. Subjects also received instantaneous visual feedback (a red or green LED light) for correct target identifications and false alarms. A head tracker (Intersense IC3) constantly monitored the subject's position to ensure they directly faced the front speaker, and a TDT System 2 (Tucker Davis Technologies) recorded the button-press responses. Button presses that occurred 50 ms prior to target onset in a trial were excluded from the analysis, on the assumption that they were random guesses.
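The timing described above can be laid out as a small Python sketch that returns event times within one trial. It assumes, as inferred rather than stated, that successive syllable onsets are separated by the 150 ms syllable duration plus the subject's inter-stimulus interval, and that the trailing 800 ms of masker follows the offset of the last syllable. Because the cue lasts 100 ms and its onset leads the cued syllable by the 100 ms SOA, the cue ends exactly at the onset of the cued syllable. The function name and returned keys are hypothetical.

```python
LEAD_MS = 800.0      # masker-only interval at trial start
TAIL_MS = 800.0      # masker-only interval at trial end (assumed after last syllable)
SYLLABLE_MS = 150.0  # duration of each syllable
CUE_MS = 100.0       # 2.5 kHz priming tone duration
CUE_SOA_MS = 100.0   # cue onset to cued-syllable onset


def trial_timeline(isi_ms: float, expected_epoch: int, n_epochs: int = 5) -> dict:
    """Event times (ms from trial start) for one trial."""
    onsets = [LEAD_MS + k * (SYLLABLE_MS + isi_ms) for k in range(n_epochs)]
    cue_onset = onsets[expected_epoch - 1] - CUE_SOA_MS
    return {
        "syllable_onsets_ms": onsets,
        "cue_onset_ms": cue_onset,
        "cue_offset_ms": cue_onset + CUE_MS,
        "trial_end_ms": onsets[-1] + SYLLABLE_MS + TAIL_MS,
    }


# Adult mean ISI (360 ms), "Early" condition: the cue precedes Epoch 1.
early = trial_timeline(isi_ms=360.0, expected_epoch=1)
print(early["syllable_onsets_ms"])  # [800.0, 1310.0, 1820.0, 2330.0, 2840.0]
print(early["cue_onset_ms"], early["trial_end_ms"])  # 700.0 3790.0
```

For the “Late” condition, passing `expected_epoch=5` moves the cue to 100 ms before the fifth syllable.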

Each subject completed two training blocks and two test blocks. Each training block consisted of 25 trials containing both cued and uncued targets in randomized order. Targets were initially presented at a target-to-masker ratio of −10 dB, which was then varied in 1-dB steps until the hit rate reached 75–85% with a false-alarm rate below 40% on catch trials18. Each test block examined either the “Early” or the “Late” condition and was divided into two halves of 60 trials, each lasting approximately 5 minutes, which was short enough to avoid participant fatigue (see Appendix 2). The first 10 trials of each half always presented the syllable /da/ at the expected epoch (priming trials) to focus the participant's attention; these trials were excluded from subsequent analysis. The remaining 50 trials were presented in a pseudo-random order that preserved the position of the expected epoch (either “Early” or “Late”).
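The training-block level setting can be sketched as follows. The starting ratio (−10 dB), the 1-dB step, the 75–85% hit-rate window, the below-40% catch-trial false-alarm criterion and the fixed 70 dB SPL masker come from the text. The exact stepping rule is an assumption made for illustration: one step per evaluated block, lowering the ratio when hits exceed 85% and raising it when they fall below 75%. The function names are also hypothetical.

```python
from typing import Tuple

MASKER_LEVEL_DB_SPL = 70.0  # fixed babble-masker level stated in the Methods
START_TMR_DB = -10.0        # initial target-to-masker ratio (TMR)
STEP_DB = 1.0               # adjustment step size
HIT_LOW, HIT_HIGH = 0.75, 0.85  # desired hit-rate window
MAX_FA_RATE = 0.40          # catch-trial false-alarm ceiling


def target_level_db(tmr_db: float) -> float:
    """Syllable-train level implied by a TMR when the masker level is fixed."""
    return MASKER_LEVEL_DB_SPL + tmr_db


def next_tmr(tmr_db: float, hit_rate: float, fa_rate: float) -> Tuple[float, bool]:
    """Hypothetical one-step update; returns (new TMR, criterion met?)."""
    if hit_rate > HIT_HIGH:
        return tmr_db - STEP_DB, False  # too easy: lower the target level
    if hit_rate < HIT_LOW:
        return tmr_db + STEP_DB, False  # too hard: raise the target level
    # Hit rate is inside the window; accept only if false alarms are low enough.
    return tmr_db, fa_rate < MAX_FA_RATE
```

For example, a block at −10 dB with a 90% hit rate would move the next block to −11 dB, that is, a syllable-train level of 59 dB SPL against the 70 dB SPL masker.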

Analysis

The results were analyzed using the hit and false-alarm rates for target identification at each epoch, together with a d′ analysis at the first epoch. The hit rate was the proportion of correct responses to targets, while the false-alarm rate was the proportion of catch trials on which a response (button press) occurred. False alarms were assigned to epochs based on the subject's response time51. Because the number of trials differed across the five epochs, the number of catch trials allocated to each epoch was proportional to the probability of a target occurring at that epoch; i.e., 60% of false alarms were assumed to be committed at the expected epoch. A control study corroborated this assumption by showing that a uniform target presentation rate leads to a uniform false-alarm distribution (see Supplement 2). Subsequent analysis was performed on pooled hit and false-alarm rates, obtained by combining the results across participants within each group18,52,53. Sensitivity (d′) was calculated from the pooled hit and false-alarm rates at the first temporal epoch only, for both conditions, in order to assess selective attention ability. The 95% confidence intervals for the sensitivity measures were calculated using Miller's approach54 (see Figure 4). Hit or false-alarm rates of 0 or 1 were replaced by 1/(2n) or 1 − 1/(2n), respectively, where n is the number of trials at that epoch, because the z-transform used in d′ is infinite at these extreme values52.
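Here is a minimal sketch of the rate and sensitivity calculations described above. It assumes that counts have already been pooled across a group and that false alarms have already been assigned to epochs by response time. The 60% share for the expected epoch comes from the text. The even split of the remaining 40% across the four unexpected epochs in the usage lines is a hypothetical assumption, and so are the helper names. Miller's confidence-interval method is not reproduced here.

```python
from statistics import NormalDist
from typing import List, Sequence


def allocate_catch_trials(n_catch_total: float, target_probs: Sequence[float]) -> List[float]:
    """Split catch trials across epochs in proportion to target probability."""
    if abs(sum(target_probs) - 1.0) > 1e-9:
        raise ValueError("target probabilities must sum to 1")
    return [n_catch_total * p for p in target_probs]


def corrected_rate(count: float, n: float) -> float:
    """Proportion count/n, with 0 -> 1/(2n) and 1 -> 1 - 1/(2n)."""
    if n <= 0:
        raise ValueError("n must be positive")
    rate = count / n
    if rate <= 0.0:
        return 1.0 / (2.0 * n)
    if rate >= 1.0:
        return 1.0 - 1.0 / (2.0 * n)
    return rate


def d_prime(hits: float, n_targets: float, false_alarms: float, n_catch: float) -> float:
    """d' = z(H) - z(F), computed from the corrected hit and false-alarm rates."""
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(corrected_rate(hits, n_targets)) - z(corrected_rate(false_alarms, n_catch))


if __name__ == "__main__":
    # Hypothetical pooled counts for an "Early" block, where epoch 1 is expected.
    catch_per_epoch = allocate_catch_trials(20, [0.6, 0.1, 0.1, 0.1, 0.1])
    print(d_prime(hits=45, n_targets=50, false_alarms=3, n_catch=catch_per_epoch[0]))
```

The 1/(2n) replacement keeps d′ finite when a group produces no misses or no false alarms at an epoch. Without it, `inv_cdf` would be asked for the quantile at 0 or 1, which is undefined.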

To estimate the temporal reorientation time, we fitted a least-squares regression line to the hit rates at the unexpected epochs and extrapolated it to the epoch at which the predicted hit rate came within 1 standard error of the hit rate at the expected epoch (see Figure 4). An adjusted chi-square test was used to assess goodness of fit.
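The extrapolation step can be sketched in a few lines. This is a minimal sketch under stated assumptions. Epochs are numbered on an arbitrary axis, so converting the result to milliseconds would require the epoch spacing shown in Figure 1. The hit rates at the unexpected epochs are assumed to lie below the expected-epoch hit rate, so the criterion is that hit rate minus one standard error. The function names are hypothetical, and the adjusted chi-square goodness-of-fit test is not reproduced.

```python
from typing import Sequence, Tuple


def least_squares_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def reorientation_epoch(unexpected_epochs: Sequence[float],
                        unexpected_hits: Sequence[float],
                        expected_hit: float,
                        expected_se: float) -> float:
    """Epoch at which the fitted line reaches (expected hit rate - 1 SE)."""
    slope, intercept = least_squares_line(unexpected_epochs, unexpected_hits)
    if slope == 0:
        raise ValueError("a flat fit never reaches the criterion")
    criterion = expected_hit - expected_se
    return (criterion - intercept) / slope
```

For instance, hit rates of 0.2, 0.3 and 0.4 at epochs 1 to 3, with an expected-epoch hit rate of 0.8 and a standard error of 0.05, extrapolate to epoch 6.5.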

References

1. Hill, K. T. & Miller, L. M. Auditory attentional control and selection during cocktail party listening. Cereb Cortex 20, 583–590 (2010).

2. Coull, J. T. & Nobre, A. C. Where and when to pay attention: the neural systems for directing attention to spatial locations and to time intervals as revealed by both PET and fMRI. J Neurosci 18, 7426–7435 (1998).

3. Fritz, J. B., Elhilali, M., David, S. V. & Shamma, S. A. Auditory attention – focusing the searchlight on sound. Curr Opin Neurobiol 17, 437–455 (2007).

4. Shamma, S. A., Elhilali, M. & Micheyl, C. Temporal coherence and attention in auditory scene analysis. Trends Neurosci 34, 114–123 (2011).

5. Moore, D. R., Hartley, D. E. H. & Hogan, S. C. M. Effects of otitis media with effusion (OME) on central auditory function. Int J Pediatr Otorhinolaryngol 67, Suppl 1, S63–S67 (2003).

6. Asbjornsen, A. E. et al. Impaired auditory attention skills following middle-ear infections. Child Neuropsychol 11, 121–133 (2005).

7. Kim, M.-S. & Cave, K. Top-down and bottom-up attentional control: on the nature of interference from a salient distractor. Percept Psychophys 61, 1009–1023 (1999).

8. Coull, J. T., Vidal, F., Nazarian, B. & Macar, F. Functional anatomy of the attentional modulation of time estimation. Science 303, 1506–1508 (2004).

9. Coull, J. T. fMRI studies of temporal attention: allocating attention within, or towards, time. Brain Res Cogn Brain Res 21, 216–226 (2004).

10. Rohenkohl, G., Coull, J. T. & Nobre, A. C. Behavioural dissociation between exogenous and endogenous temporal orienting of attention. PLoS One 6 (2011).

11. Sturm, W., Willmes, K., Orgass, B. & Hartje, W. Do specific attention deficits need specific training? Neuropsychol Rehabil 7, 81–103 (1997).

12. Gomes, H., Molholm, S., Christodoulou, C., Ritter, W. & Cowan, N. The development of auditory attention in children. Front Biosci 5, D108–D120 (2000).

13. Chiesa, A., Calati, R. & Serretti, A. Does mindfulness training improve cognitive abilities? A systematic review of neuropsychological findings. Clin Psychol Rev 31, 449–464 (2011).

14. Mirsky, A. F., Anthony, B. J., Duncan, C. C., Ahearn, M. B. & Kellam, S. G. Analysis of the elements of attention: a neuropsychological approach. Neuropsychol Rev 2, 109–145 (1991).

15. Astheimer, L. B. & Sanders, L. D. Listeners modulate temporally selective attention during natural speech processing. Biol Psychol 80, 23–34 (2009).

16. Wright, B. A. & Fitzgerald, M. B. The time course of attention in a simple auditory detection task. Percept Psychophys 66, 508–516 (2004).

17. Bonino, A. Y. & Leibold, L. J. The effect of signal-temporal uncertainty on detection in bursts of noise or a random-frequency complex. J Acoust Soc Am 124, EL321–EL327 (2008).

18. Werner, L. A., Parrish, H. K. & Holmer, N. M. Effects of temporal uncertainty and temporal expectancy on infants' auditory sensitivity. J Acoust Soc Am 125, 1040–1049 (2009).

19. Egan, J. P., Greenberg, G. Z. & Schulman, A. I. Interval of time uncertainty in auditory detection. J Acoust Soc Am 33, 771–778 (1961).

20. Theeuwes, J., Godijn, R. & Pratt, J. A new estimation of the duration of attentional dwell time. Psychon Bull Rev 11, 60–64 (2004).

21. Dai, H. P., Scharf, B. & Buus, S. Effective attenuation of signals in noise under focused attention. J Acoust Soc Am 89, 2837–2842 (1991).

22. Posner, M. I. Orienting of attention. Q J Exp Psychol 32, 3–25 (1980).

23. American Academy of Audiology. Clinical practice guidelines: diagnosis, treatment and management of children and adults with central auditory processing disorder (2010). Available at http://www.audiology.org/resources/documentlibrary/Documents/CAPDGuidelines8-2010.pdf (accessed 29 November 2012).

24. Correa, A., Lupianez, J. & Tudela, P. The attentional mechanism of temporal orienting: determinants and attributes. Exp Brain Res 169, 58–68 (2006).

25. Coull, J. T., Frith, C. D., Buchel, C. & Nobre, A. C. Orienting attention in time: behavioural and neuroanatomical distinction between exogenous and endogenous shifts. Neuropsychologia 38, 808–819 (2000).

26. Renner, P., Grofer Klinger, L. & Klinger, M. R. Exogenous and endogenous attention orienting in autism spectrum disorders. Child Neuropsychol 12, 361–382 (2006).

27. Dux, P. E. & Marois, R. The attentional blink: a review of data and theory. Atten Percept Psychophys 71, 1683–1700 (2009).

28. Shapiro, K. L., Raymond, J. & Arnell, K. The attentional blink. Trends Cogn Sci 1, 291–296 (1997).

29. Fillmore, M. T., Milich, R. & Lorch, E. P. Inhibitory deficits in children with attention-deficit/hyperactivity disorder: intentional versus automatic mechanisms of attention. Dev Psychopathol 21, 539–554 (2009).

30. Luna, B., Garver, K. E., Urban, T. A., Lazar, N. A. & Sweeney, J. A. Maturation of cognitive processes from late childhood to adulthood. Child Dev 75, 1357–1372 (2004).

31. Redick, T. S., Calvo, A., Gay, C. E. & Engle, R. W. Working memory capacity and go/no-go task performance: selective effects of updating, maintenance and inhibition. J Exp Psychol Learn Mem Cogn 37, 308–324 (2011).

32. Shinn-Cunningham, B. G. & Best, V. Selective attention in normal and impaired hearing. Trends Amplif 12, 283–299 (2008).

33. Koch, I., Lawo, V., Fels, J. & Vorlander, M. Switching in the cocktail party: exploring intentional control of auditory selective attention. J Exp Psychol Hum Percept Perform 37, 1140–1147 (2011).

34. Moore, D. R. Listening difficulties in children: bottom-up and top-down contributions. J Commun Disord 45, 411–418 (2012).

35. Strauss, D. J. et al. Electrophysiological correlates of listening effort: neurodynamical modeling and measurement. Cogn Neurodyn 4, 119–131 (2010).

36. Li, C. S., Huang, C., Constable, R. T. & Sinha, R. Imaging response inhibition in a stop-signal task: neural correlates independent of signal monitoring and post-response processing. J Neurosci 26, 186–192 (2006).

37. Moore, D. R., Ferguson, M. A., Edmondson-Jones, A. M., Ratib, S. & Riley, A. Nature of auditory processing disorder in children. Pediatrics 126, e382–e390 (2010).

38. Moore, D. R. The diagnosis and management of auditory processing disorder. Lang Speech Hear Serv Sch 42, 303–308 (2011).

39. Cross, J., Johnson, D. L., Swank, P., Baldwin, C. D. & McCormick, D. Middle ear effusion, attention and the development of child behavior problems. Psychology 1, 220–228 (2010).

40. Roberts, J. et al. Otitis media, hearing loss and language learning: controversies and current research. J Dev Behav Pediatr 25, 110–122 (2004).

41. Reynolds, C. R. Forward and backward memory span should not be combined for clinical analysis. Arch Clin Neuropsychol 12, 29–40 (1997).

42. Kelly, A. Normative data for behavioural tests of auditory processing for New Zealand school children aged 7 to 12 years. Australian and New Zealand Journal of Audiology 29, 60 (2007).

43. Bellis, T. J. Assessment and Management of Central Auditory Processing Disorders in the Educational Setting: From Science to Practice (Singular Publishing Group, 2003).

44. Riccio, C. A., Cohen, M. J., Hynd, G. W. & Keith, R. W. Validity of the Auditory Continuous Performance Test in differentiating central processing auditory disorders with and without ADHD. J Learn Disabil 29, 561–566 (1996).

45. Riccio, C. A., Reynolds, C. R. & Lowe, P. A. Clinical Applications of Continuous Performance Tests: Measuring Attention and Impulsive Responding in Children and Adults (John Wiley & Sons, 2001).

46. Dillon, H., Cameron, S., Glyde, H., Wilson, W. & Tomlin, D. An opinion on the assessment of people who may have an auditory processing disorder. J Am Acad Audiol 23, 97–105 (2012).

47. Pitt, M. A. & Samuel, A. G. Attentional allocation during speech perception: how fine is the focus? J Mem Lang 29, 611–632 (1990).

48. Scharf, B., Dai, H. & Miller, J. L. The role of attention in speech perception. J Acoust Soc Am 84, S158 (1988).

49. Klein, R. M. Inhibition of return. Trends Cogn Sci 4, 138–147 (2000).

50. MacPherson, A. C., Klein, R. M. & Moore, C. Inhibition of return in children and adolescents. J Exp Child Psychol 85, 337–351 (2003).

51. Watson, C. S. & Nichols, T. L. Detectability of auditory signals presented without defined observation intervals. J Acoust Soc Am 59, 655–668 (1976).

52. Macmillan, N. A. & Creelman, C. D. Detection Theory: A User's Guide. 2nd edn (Lawrence Erlbaum Associates, 2005).

53. Macmillan, N. A. & Kaplan, H. L. Detection theory analysis of group data: estimating sensitivity from average hit and false-alarm rates. Psychol Bull 98, 185–199 (1985).

54. Miller, J. The sampling distribution of d′. Percept Psychophys 58, 65–72 (1996).

55. McArthur, G. M. & Bishop, D. V. M. Frequency discrimination deficits in people with specific language impairment: reliability, validity, and linguistic correlates. J Speech Lang Hear Res 47, 527–541 (2004).

56. Baker, R. J., Jayewardene, D., Sayle, C. & Saeed, S. Failure to find asymmetry in auditory gap detection. Laterality 13, 1–21 (2008).

57. Rocheron, I., Lorenzi, C., Füllgrabe, C. & Dumont, A. Temporal envelope perception in dyslexic children. Neuroreport 13, 1683–1687 (2002).

58. Sęk, A. & Moore, B. C. J. Implementation of two tests for measuring sensitivity to temporal fine structure. Int J Audiol 51, 58–63 (2012).

59. Best, V., Carlile, S., Kopco, N. & van Schaik, A. Localization in speech mixtures by listeners with hearing loss. J Acoust Soc Am 129, EL210–EL215 (2011).

60. van Noorden, L. P. A. S. Temporal coherence in the perception of tone sequences. 7–24 (Institute for Perceptual Research, 1975).

Acknowledgements

This research was financially supported by the HEARing CRC, established and supported under the Australian Government's Cooperative Research Centres Program. We wish to acknowledge Dr. Bertram Scharf, Dr. Adam Reeves and Dr. Suzanne Purdy for their helpful suggestions and comments.

Author information


Contributions

I.D. and M.S. conceived the overall concept for the project. I.D., J.L. and S.C. designed the experiments. I.D. recruited the participants and collected the data. I.D. performed the analysis with input from J.L. and S.C. The manuscript was mainly prepared by I.D. and J.L., with input from S.C. and M.S. I.D. created the figures. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Electronic supplementary material

Supplementary Information

Switch Attention to Listen

Rights and permissions

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 3.0 Unported License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-nd/3.0/

About this article

Cite this article

Dhamani, I., Leung, J., Carlile, S. et al. Switch Attention to Listen. Sci Rep 3, 1297 (2013). https://doi.org/10.1038/srep01297

