Abstract

Scanning electron microscopy (SEM) is a crucial tool for analyzing submicron-scale structures. However, because of its imaging principle, obtaining high-quality SEM images requires the sample to be highly conductive. For materials whose conductivity is weak, owing either to their intrinsic properties or to organic doping, SEM image quality is significantly degraded, which undermines the accuracy of subsequent structural analyses. Moreover, paired high- and low-quality images are unavailable in this setting, which rules out supervised image-processing methods. Here, an unsupervised method based on the Cycle-consistent Generative Adversarial Network (CycleGAN) is proposed to enhance the quality of SEM images of weakly conductive samples. The model learns end to end from unpaired blurred and clear SEM images acquired from weakly and well-conductive samples, respectively. To meet the requirements of material structure analysis, an edge loss function is further introduced to recover finer details in the generated images. Several quantitative evaluations show that the proposed method improves SEM image quality and outperforms traditional methods. Our framework broadens the application of artificial intelligence in materials analysis and is relevant to fields such as materials science and image restoration.


Introduction

Owing to its sub-nanometer resolution and large depth of field, scanning electron microscopy (SEM) has become a crucial tool for morphological characterization at the submicron scale1, and it is widely used in materials science2, biomedicine3, chemistry4, and other fields. SEM forms images by scanning a focused electron beam across the sample surface and detecting the emitted secondary electrons. Because the incident electrons must be conducted away from the surface, high sample conductivity is a prerequisite for high-resolution, high-quality SEM images5. Weakly conductive samples, such as most polymers and some semiconductor materials, accumulate excess electrons or free charges on their surface, which disturbs the secondary-electron signal and significantly reduces imaging contrast and clarity. In addition, organic contamination introduced during material synthesis or processing can reduce sample conductivity6,7: under electron-beam irradiation, the organic matter decomposes into hydrocarbon compounds that cover the sample surface, leading to charge accumulation and degraded SEM images8,9. A common way to improve sample conductivity is to coat the surface with a gold film by vacuum sputtering10. However, the gold layer masks the intrinsic material information and reduces elemental contrast. For samples at the scale of hundreds of nanometers or smaller, the gold layer also obscures structural details, leading to a misrepresentation of the surface structure. Additionally, gold-coated samples are generally not reusable, which increases experimental cost and operational complexity. There is therefore an urgent need for a method that can quickly, conveniently, and effectively improve the SEM imaging quality of weakly conductive samples without contaminating or damaging them.

With the advancement of computational imaging, image post-processing provides another avenue for enhancing SEM image quality. Traditional approaches, such as linear11 or nonlinear12,13 filters, recover sharp images from blurred ones by deconvolution. Most of them model the blurring process in simplified form and then use prior information about the image to restore it. In practice, however, blurring is more complex than these models assume, and iteratively estimating the blur kernel is time-consuming. Neural networks, by contrast, can learn the blur kernel automatically without manual design and run faster14,15,16,17,18,19,20. Deep learning-based methods have therefore been widely applied to enhance micrograph quality, for example in image deblurring21,22,23,24 and super-resolution25,26,27,28,29. For SEM images, de Haan et al.30 used a Generative Adversarial Network (GAN) to double the resolution of SEM images, and Na et al.31 proposed a multi-scale network for deblurring defocused SEM images that outperformed traditional methods. Although deep learning has significantly advanced SEM image enhancement, existing studies rely mainly on supervised learning, which requires paired blurred and clear images for training. For weakly conductive samples, however, it is difficult to obtain one-to-one corresponding blurred and clear SEM images of the same field of view. Hence, an unsupervised approach that can deblur images without paired training data is needed.

In recent years, the Cycle-consistent Generative Adversarial Network (CycleGAN)32, which can be trained on unpaired data, has made unsupervised image-to-image translation possible, with performance comparable to supervised methods. This framework has been successfully applied to enhance the quality of natural33,34, satellite35, and fluorescence microscopy images36,37,38. Here, we propose an unsupervised learning-based approach to improve the quality of SEM images of weakly conductive samples. The proposed method uses the CycleGAN architecture to learn end to end from unpaired blurry and clear SEM images. An additional edge loss function is introduced into the CycleGAN model to meet the requirements of material structure analysis; it helps eliminate artifacts and restore detailed information on material contours. Multiple image evaluation metrics show that the improved CycleGAN model effectively enhances the SEM image quality of various weakly conductive samples without any complicated physical sample treatment.

Principle and network analysis

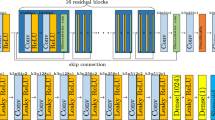

The overall framework of our method, inspired by CycleGAN, is shown in Fig. 1a. It consists of two generators (G and F) and two discriminators (\(D_{A}\) and \(D_{B}\)). A and B denote the blurred and clear image sets, respectively; no pre-aligned image pairs are required between the two collections. Generator G translates a blurred image A into a clear one, G(A), and discriminator \(D_{B}\) determines whether G(A) is a real or a generated clear image. Generator F translates a clear image B into a blurred one, F(B), and discriminator \(D_A\) determines whether F(B) is a real or a generated blurred image. The generators and discriminators are trained with an adversarial loss (\(L_{GAN}\)), which drives the generators to translate between the two image domains. To mitigate vanishing gradients and generate high-quality images, \(L_{GAN}\) uses a least-squares loss instead of the cross-entropy loss. A cycle-consistency loss (\(L_{cycle}\)) keeps the cycle-reconstructed images as close as possible to the input images. Here, the Structural Similarity Index Measure (SSIM)39 is used for \(L_{cycle}\): it measures the similarity in luminance, contrast, and structure between the input images A and B and the corresponding cycle-reconstructed images F(G(A)) and G(F(B)). Together, \(L_{GAN}\) and \(L_{cycle}\) allow the network to be trained on unpaired data. In addition, blurred images A are fed into generator F and clear images B into generator G to construct the identity loss (\(L_{id}\)) and the edge loss (\(L_{edge}\)). \(L_{id}\) ensures that information from the original input image is retained, while \(L_{edge}\) uses the Sobel operator to extract edge information and preserve the edge details of the image. This is necessary because the weak constraint imposed by cycle consistency alone tends to produce noise artifacts and structural distortions in the output images, especially since our datasets contain SEM images of various materials with different morphologies. The equations for the loss functions are given in Methods. Generators G and F are trained simultaneously to learn the mappings between the two image domains.

Figure 1. (a) Schematic of the overall architecture. The proposed method consists of two generators (G and F) and two discriminators (\(D_{A}\) and \(D_{B}\)). Generator G predicts clear images from blurred images A, and discriminator \(D_B\) attempts to distinguish real clear images from generated ones. Generator F predicts blurred images from clear images B, and discriminator \(D_{A}\) attempts to distinguish real blurred images from generated ones. The loss functions include the adversarial loss (\(L_{GAN}\)), cycle-consistency loss (\(L_{cycle}\)), identity loss (\(L_{id}\)), and edge loss (\(L_{edge}\)). (b) Generator network structure; the numbers below each layer give the number of channels. (c) Discriminator network structure.

Figure 2. Deblurring results of different models on the simulated datasets. The material in the SEM images is iron chloride. (a) Results for data with Gaussian blur only. (b) Results for data with Gaussian blur and synthetic fog. (c) Results for data with synthetic fog and hybrid blur (Gaussian blur, motion blur, and out-of-focus blur).

Figure 1b and c show the structures of the generator and discriminator, respectively. Our generator is a U-Net with multi-scale convolution, inspired by inception blocks21 and U-Net40. It has 8 convolution layers and 8 deconvolution layers, and each convolution layer is followed by instance normalization and a leaky ReLU activation. The eighth convolution layer and the first deconvolution layer use a stride of 1; all other layers use a stride of 2. In addition, the generator contains 14 Multi blocks, whose structure is shown in the inset of Fig. 1b. Each Multi block uses multi-scale convolution to enhance edge features and thus better recover image details; it consists of 1\(\times\)1 and 3\(\times\)3 convolution kernels, all with a stride of 1. Skip connections in the middle fuse information across scales. The discriminator shown in Fig. 1c is fully convolutional and consists of 5 convolution layers. Every convolution layer except the last is followed by instance normalization and a leaky ReLU activation. The first three convolution layers use a stride of 2 and the remaining two a stride of 1.
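As a quick consistency check on the stride pattern described above, the sketch below traces the spatial size of the feature maps through the generator and discriminator for a 256 \(\times\) 256 input. It assumes 'same' padding, so a stride-s convolution maps size n to ⌈n/s⌉ and a stride-s deconvolution maps n to n·s; kernel sizes and padding are not given in the text, and the helper names are hypothetical.

```python
import math

# Illustrative sketch; helper names are hypothetical and 'same' padding is assumed.
def conv_output_sizes(size, strides):
    """Spatial size after each convolution, assuming 'same' padding."""
    sizes = []
    for s in strides:
        size = math.ceil(size / s)
        sizes.append(size)
    return sizes

def deconv_output_sizes(size, strides):
    """Spatial size after each transposed convolution, assuming 'same' padding."""
    sizes = []
    for s in strides:
        size = size * s
        sizes.append(size)
    return sizes

# Generator: conv layers 1-7 use stride 2 and conv layer 8 stride 1;
# deconv layer 1 uses stride 1 and deconv layers 2-8 stride 2.
GEN_CONV_STRIDES = [2] * 7 + [1]
GEN_DECONV_STRIDES = [1] + [2] * 7
# Discriminator: the first three conv layers use stride 2, the last two stride 1.
DISC_STRIDES = [2, 2, 2, 1, 1]

encoder = conv_output_sizes(256, GEN_CONV_STRIDES)
decoder = deconv_output_sizes(encoder[-1], GEN_DECONV_STRIDES)
disc = conv_output_sizes(256, DISC_STRIDES)
print("encoder:", encoder)        # [128, 64, 32, 16, 8, 4, 2, 2]
print("decoder:", decoder)        # [2, 4, 8, 16, 32, 64, 128, 256]
print("discriminator:", disc)     # [128, 64, 32, 32, 32]
```

Under these assumptions the decoder returns to the 256 \(\times\) 256 input size, so encoder and decoder feature maps of equal size can be joined by U-Net skip connections, and the discriminator outputs a 32 \(\times\) 32 map of scores, one per image patch, rather than a single scalar.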

Verification and analysis of experimental results

Results on the simulated dataset

Without paired samples, the enhancement performance of the model cannot be quantified with full-reference metrics. To evaluate the proposed model quantitatively, a simulated dataset of paired blurred and clear images was therefore created: clear SEM images served as ground truth, and the corresponding low-quality images were synthesized by introducing blur. To mimic both the weak intrinsic conductivity of materials and organic contamination, three simulated blurry datasets were constructed from the clear SEM images: dataset A with Gaussian blur, dataset B with Gaussian blur and synthetic fog, and dataset C with hybrid blur (Gaussian blur, motion blur, and out-of-focus blur) and synthetic fog. Figure 2a–c shows results on datasets A–C, respectively. The Gaussian blur used a 7\(\times\)7 kernel with standard deviation \(\sigma\) = 1 (see Methods for details); \(\sigma\) = 1 corresponds to the level of blur typically encountered in practice. Because pixels farther than about 3\(\sigma\) from the center contribute negligibly, it suffices to compute a (6\(\sigma\)+1)\(\times\)(6\(\sigma\)+1) Gaussian kernel, which gives 7\(\times\)7 for \(\sigma\) = 1. The synthetic fog lowers image quality by fogging the image, with a random degree of fogging at different positions (see Methods for details). The blurry and clear datasets were randomly shuffled to enable unpaired training. For comparison, CycleGAN and traditional methods, namely blind deconvolution (Blind Deconv)41 with 10 iterations and the Wiener filtering algorithm (Wiener)42, were applied to the simulated blurred images. The results are shown in Fig. 2. For all types of blur, CycleGAN shows superior restoration performance, bringing the clarity and contrast of the images close to the ground truth. In contrast, blind deconvolution and Wiener filtering perform worse on images with unknown blur kernels and struggle to recover the contrast and clarity of images degraded by synthetic fog in addition to Gaussian blur.
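The (6\(\sigma\)+1) rule can be checked with a short sketch that samples the two-dimensional Gaussian \(G(x, y) = \frac{1}{2\pi\sigma^{2}} e^{-(x^{2}+y^{2})/(2\sigma^{2})}\) on an integer grid and normalizes the weights to sum to 1, which makes the \(1/(2\pi\sigma^{2})\) prefactor irrelevant. The function name is hypothetical, and rounding 3\(\sigma\) up to an integer radius is an assumption for non-integer \(\sigma\).

```python
import math

# Illustrative sketch; the function name is hypothetical.
def gaussian_kernel(sigma):
    """Normalized 2D Gaussian kernel truncated at about 3 sigma."""
    radius = math.ceil(3 * sigma)            # weights beyond ~3 sigma are negligible
    offsets = range(-radius, radius + 1)     # 6*sigma + 1 taps for integer sigma
    kernel = [[math.exp(-(x * x + y * y) / (2 * sigma * sigma)) for x in offsets]
              for y in offsets]
    total = sum(sum(row) for row in kernel)
    return [[v / total for v in row] for row in kernel]  # weights sum to 1

k = gaussian_kernel(1.0)
print(len(k), len(k[0]))   # 7 7
```

For \(\sigma\) = 1 the kernel is 7\(\times\)7, matching the setting above; a larger \(\sigma\) widens the kernel and strengthens the blur.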

To evaluate the deblurring results quantitatively, SSIM39 and the Peak Signal-to-Noise Ratio (PSNR)43 were used; their average values on the test datasets are listed in Table 1. SSIM measures the structural similarity of two images by comparing their luminance, contrast, and structure. PSNR is the ratio, in decibels, of the maximum possible signal power to the noise power (see Methods for the equations). SSIM is at most 1, with 1 indicating identical images and values near 0 indicating little similarity. PSNR is non-negative for normalized images and unbounded above (infinite for identical images); higher values indicate better image quality. Our method achieves higher SSIM and PSNR scores than the traditional methods, especially on datasets B and C, indicating that CycleGAN effectively improves the SEM imaging quality of weakly conductive samples. For a broader comparison, two further traditional methods, the Richardson–Lucy (RL) algorithm44 and the constrained least squares (CLS) filter45, were also evaluated; the results in Supplementary Fig. S1 and Table S1 show that the proposed CycleGAN substantially outperforms these methods as well.

To evaluate the deblurring effects of each model more comprehensively, three no-reference image quality metrics, average gradient (AG)46, contrast (CON)47, and spatial frequency (SF)48, were also used to evaluate the results in Fig. 2 and Supplementary Fig. S1. AG is the mean magnitude of the image gradient. CON measures image contrast from the gray-level differences between adjacent pixels and their probability distribution. SF reflects the rate of change of the image gray level and measures the overall activity of an image. AG, CON, and SF are non-negative with no upper bound; larger values indicate a sharper image. Further details are given in Methods. As shown in Table 2 and Supplementary Table S2, the CycleGAN model achieved the highest values for all three metrics, closest to those of the ground truth, indicating that it effectively improves image sharpness and highlights image details. Conversely, the traditional methods performed poorly in image restoration, especially for complex blur.

Results on the real dataset

To evaluate the deblurring capability of our model on real data, the model was trained and tested on a real dataset consisting of unmatched clear and blurry SEM images obtained experimentally. The clear images were acquired from materials with good conductivity, and the blurry images from the same materials after organic contamination was introduced. Figure 3 and Supplementary Fig. S2 show the deblurring results of the various models on different samples. The materials shown in Fig. 3a–c are tungsten trioxide (\(\mathrm{WO}_{3}\)) and copper sulfide (CuS). Qualitatively, the images recovered by our method have clearer edges, better contrast, and richer details than those from the traditional methods. Quantitatively, the recovered images were evaluated with the no-reference image quality metrics (Table 3 and Supplementary Table S3): the images recovered by the CycleGAN model achieve the highest values for all metrics, consistent with the results on the simulated dataset. These results indicate that the CycleGAN model performs stably on images of different materials and adapts to different degrees and types of blur, enhancing image detail and clarity.

Beyond weakly conductive samples obtained by adding organic contaminants, we also tested our method on SEM images of an intrinsically weakly conductive material that was not included in the training data. Figure 4a shows an SEM image of silicon dioxide (\({\text{SiO}}_{2}\)) particles. Because of the intrinsically weak conductivity of \({\text{SiO}}_{2}\), its high-magnification SEM image is of low quality, with poor clarity and blurred edges. After processing with the CycleGAN model, the image quality improves markedly and the particle edges become clearer (Fig. 4b). The increase in the values of several evaluation metrics (Table 4) supports this conclusion. Our method can therefore effectively improve the SEM imaging quality of weakly conductive materials.

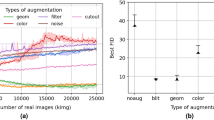

Edge loss

Because SEM images of micro- and nanoscale materials often contain rich edge details, an additional edge loss was incorporated into the network. Here, we investigate how this loss affects the recovered images. The edge-loss weight \(\gamma\) directly influences the quality of the generated images: if \(\gamma\) is too small, the generator tends to produce artifacts; if it is too large, the generator prioritizes preserving the input image, which lowers output quality. The value of \(\gamma\) was determined by quantitative evaluation on synthetic data (Fig. 5a). As \(\gamma\) increases from 0 to 20, SSIM and PSNR first increase and then decrease, both peaking at \(\gamma\) = 10. We therefore set \(\gamma\) = 10.

To further validate the edge loss, blurry SEM images were processed by CycleGAN models with and without it (Fig. 5b). Both models enhance the clarity and contrast of the original images. However, the model without edge loss produces obvious artifacts at the material edges, whereas the model with edge loss preserves the edge details, confirming the effectiveness of the edge loss. We also tested other edge operators in the edge loss: Supplementary Fig. S3 and Table S4 show that both the Kirsch and Sobel operators effectively restore image edge information. Among the operators tested, the Sobel operator performs well at low computational cost, making it particularly suitable for our task.

Conclusions

In summary, an unsupervised CycleGAN-based method was proposed to enhance the SEM imaging quality of weakly conductive samples. With unknown blur kernels and no paired datasets, the proposed method effectively improves the quality of various blurry SEM images, restoring image details and improving contrast and clarity, and it substantially outperforms traditional methods. Compared with previously reported CycleGAN architectures, we introduced an additional edge loss function tailored to material analysis, which removes artifacts and restores material contour details. To our knowledge, this is the first application of unsupervised learning to improving SEM image quality. We believe that this work extends the application of artificial intelligence in materials science and is of significant value for material analysis.

Methods

Image quality metrics

AG is defined as follows:

where f(x, y) is the pixel intensity of the image at (x, y), here its grayscale value.
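A commonly used form of AG for an M \(\times\) N image, assumed here, is \(\mathrm{AG} = \frac{1}{(M-1)(N-1)}\sum_{x=1}^{M-1}\sum_{y=1}^{N-1}\sqrt{\frac{[f(x+1,y)-f(x,y)]^{2} + [f(x,y+1)-f(x,y)]^{2}}{2}}\), which uses forward differences as the gradient. A minimal sketch on a grayscale image stored as a list of rows (the function name is hypothetical):

```python
import math

# Illustrative sketch; the function name and forward-difference form are assumptions.
def average_gradient(img):
    """Average gradient (AG): mean of sqrt((dx^2 + dy^2) / 2) over the interior grid."""
    m, n = len(img), len(img[0])
    total = 0.0
    for x in range(m - 1):
        for y in range(n - 1):
            dx = img[x + 1][y] - img[x][y]   # difference to the next row
            dy = img[x][y + 1] - img[x][y]   # difference to the next column
            total += math.sqrt((dx * dx + dy * dy) / 2)
    return total / ((m - 1) * (n - 1))

flat = [[0.5] * 4 for _ in range(4)]
ramp = [[0.1 * y for y in range(4)] for _ in range(4)]  # intensity grows along y
print(average_gradient(flat))   # 0.0: a uniform image has no gradients
print(average_gradient(ramp))   # about sqrt(0.005), from the constant 0.1 step
```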

CON is defined as follows:

where \(\delta\) is the gray-level difference between adjacent pixels and \(P_\delta\) is the probability of that difference occurring in the image.
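A common form of this gray-level-difference contrast, assumed here, is \(\mathrm{CON} = \sum_{\delta} \delta^{2} P_{\delta}\). The sketch below takes \(\delta\) as the absolute difference between horizontally and vertically adjacent pixels and estimates \(P_\delta\) from their histogram; the choice of neighbor directions is an assumption, and the function name is hypothetical.

```python
from collections import Counter

# Illustrative sketch; function name and neighbor directions are assumptions.
def contrast(img):
    """CON = sum over delta of delta^2 * P_delta (gray-level difference statistics)."""
    m, n = len(img), len(img[0])
    deltas = []
    for x in range(m):
        for y in range(n):
            if y + 1 < n:                       # horizontal neighbor
                deltas.append(abs(img[x][y + 1] - img[x][y]))
            if x + 1 < m:                       # vertical neighbor
                deltas.append(abs(img[x + 1][y] - img[x][y]))
    counts = Counter(deltas)                    # histogram of differences
    total = len(deltas)
    return sum(d * d * c / total for d, c in counts.items())

checker = [[(x + y) % 2 * 255 for y in range(4)] for x in range(4)]
print(contrast(checker))   # 65025.0: every adjacent pair differs by 255
```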

SF is defined as follows:

where RF and CF are the row frequency and column frequency, respectively:
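In the form commonly attributed to Li et al.48, assumed here for an M \(\times\) N image, \(\mathrm{SF} = \sqrt{\mathrm{RF}^{2} + \mathrm{CF}^{2}}\) with \(\mathrm{RF} = \sqrt{\frac{1}{MN}\sum_{x=1}^{M}\sum_{y=2}^{N}[f(x,y)-f(x,y-1)]^{2}}\) and \(\mathrm{CF} = \sqrt{\frac{1}{MN}\sum_{x=2}^{M}\sum_{y=1}^{N}[f(x,y)-f(x-1,y)]^{2}}\). A direct transcription (the function name is hypothetical):

```python
import math

# Illustrative sketch; the function name is hypothetical.
def spatial_frequency(img):
    """Spatial frequency SF = sqrt(RF^2 + CF^2) of a grayscale image (list of rows)."""
    m, n = len(img), len(img[0])
    # Squared row frequency: differences between horizontally adjacent pixels.
    rf2 = sum((img[x][y] - img[x][y - 1]) ** 2
              for x in range(m) for y in range(1, n)) / (m * n)
    # Squared column frequency: differences between vertically adjacent pixels.
    cf2 = sum((img[x][y] - img[x - 1][y]) ** 2
              for x in range(1, m) for y in range(n)) / (m * n)
    return math.sqrt(rf2 + cf2)

stripes = [[y % 2 for y in range(4)] for _ in range(4)]  # vertical stripes
print(spatial_frequency(stripes))   # only RF contributes: sqrt(12 / 16)
```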

SSIM can be expressed as follows:

where \(\mu\) and \(\sigma\) denote the mean and standard deviation of the images around pixel i, computed with an 11 \(\times\) 11 Gaussian filter. C1 and C2 are small positive constants that prevent the denominator from being zero; they are usually much smaller than 1, and we set them to 0.0001 and 0.0004, respectively.
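For reference, the local SSIM of Wang et al.39 between images x and y is \(\mathrm{SSIM} = \frac{(2\mu_x\mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)}\), where \(\sigma_{xy}\) is the local covariance, and the image-level score is the mean over all windows. The sketch below uses the constants stated above. The standard deviation of the 11 \(\times\) 11 Gaussian window is not specified here; 1.5, the value used by Wang et al.39, is assumed, only windows lying fully inside the image are used, and the function names are hypothetical.

```python
import math

# Illustrative sketch; function names and the window sigma of 1.5 are assumptions.
def gaussian_window(size=11, sigma=1.5):
    """Normalized size x size Gaussian weights."""
    r = size // 2
    w = [[math.exp(-(i * i + j * j) / (2 * sigma * sigma))
          for j in range(-r, r + 1)] for i in range(-r, r + 1)]
    total = sum(map(sum, w))
    return [[v / total for v in row] for row in w]

def ssim(a, b, c1=1e-4, c2=4e-4, size=11):
    """Mean SSIM over all size x size windows lying fully inside the images.

    a, b: same-shape grayscale images (lists of rows) scaled to [0, 1].
    """
    w = gaussian_window(size)
    m, n = len(a), len(a[0])
    scores = []
    for x0 in range(m - size + 1):
        for y0 in range(n - size + 1):
            cells = [(w[i][j], a[x0 + i][y0 + j], b[x0 + i][y0 + j])
                     for i in range(size) for j in range(size)]
            mu_a = sum(wt * pa for wt, pa, _ in cells)   # local weighted means
            mu_b = sum(wt * pb for wt, _, pb in cells)
            var_a = sum(wt * (pa - mu_a) * (pa - mu_a) for wt, pa, _ in cells)
            var_b = sum(wt * (pb - mu_b) * (pb - mu_b) for wt, _, pb in cells)
            cov = sum(wt * (pa - mu_a) * (pb - mu_b) for wt, pa, pb in cells)
            num = (2 * mu_a * mu_b + c1) * (2 * cov + c2)
            den = (mu_a * mu_a + mu_b * mu_b + c1) * (var_a + var_b + c2)
            scores.append(num / den)
    return sum(scores) / len(scores)

img = [[((x * 7 + y * 3) % 10) / 9 for y in range(12)] for x in range(12)]
noisy = [[min(1.0, v + 0.2 * ((x + y) % 2)) for y, v in enumerate(row)]
         for x, row in enumerate(img)]
print(ssim(img, img))     # 1.0 for identical images
print(ssim(img, noisy))   # below 1 once a checkerboard perturbation is added
```

The loops favor clarity over speed. TensorFlow's `tf.image.ssim` computes a windowed SSIM of this kind on tensors; its default `k2 = 0.03` gives C2 = 0.0009, so `k2 = 0.02` would be needed to reproduce C2 = 0.0004.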

PSNR is defined as follows:

where max(I) is the maximum possible pixel value, equal to 1 for normalized images, and MSE is the mean squared error between the two images.
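With these definitions, \(\mathrm{PSNR} = 10\log_{10}\left(\max(I)^{2}/\mathrm{MSE}\right)\) in decibels. A minimal sketch for two same-shape images stored as lists of rows (the function name is hypothetical):

```python
import math

# Illustrative sketch; the function name is hypothetical.
def psnr(a, b, max_val=1.0):
    """PSNR in dB; max_val = 1 for normalized images."""
    n = len(a) * len(a[0])
    mse = sum((pa - pb) ** 2 for ra, rb in zip(a, b) for pa, pb in zip(ra, rb)) / n
    if mse == 0:
        return math.inf          # identical images
    return 10 * math.log10(max_val ** 2 / mse)

ref = [[0.0, 1.0], [1.0, 0.0]]
est = [[0.1, 0.9], [0.9, 0.1]]
print(psnr(ref, est))   # about 20 dB, since MSE = 0.01
```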

Loss function

The adversarial loss (\(L_{GAN}\)) for generator G and the discriminator \(D_B\) is specified as follows:

where A and B are the unpaired blurred and clear image sets, with a \(\in\) A and b \(\in\) B. Similarly, \(L_{GAN}\) for generator F and discriminator \(D_{A}\) is:
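In the standard least-squares (LSGAN) form, assumed here (some implementations add a factor of 1/2), \(D_B\) minimizes \(\mathbb{E}_{b}[(D_B(b) - 1)^{2}] + \mathbb{E}_{a}[D_B(G(a))^{2}]\) while G minimizes \(\mathbb{E}_{a}[(D_B(G(a)) - 1)^{2}]\); the loss for F and \(D_A\) follows by swapping the roles of a and b. The sketch below evaluates both terms from discriminator output values. For a fully convolutional discriminator, the mean runs over all patch scores. The function names and score values are hypothetical.

```python
# Illustrative sketch; function names and score values are hypothetical.
def mean(values):
    return sum(values) / len(values)

def lsgan_discriminator_loss(d_real, d_fake):
    """Least-squares loss for D: push real scores toward 1 and fake scores toward 0."""
    return mean([(d - 1.0) ** 2 for d in d_real]) + mean([d ** 2 for d in d_fake])

def lsgan_generator_loss(d_fake):
    """Least-squares loss for G: push D's scores on generated images toward 1."""
    return mean([(d - 1.0) ** 2 for d in d_fake])

real_scores = [0.9, 0.8]   # e.g. D_B(b) on real clear images
fake_scores = [0.3, 0.1]   # e.g. D_B(G(a)) on generated clear images
print(lsgan_discriminator_loss(real_scores, fake_scores))   # about 0.075
print(lsgan_generator_loss(fake_scores))                    # about 0.65
```

Unlike the saturating cross-entropy loss, the quadratic penalty still produces gradients for generated samples that the discriminator classifies correctly but that lie far from the decision boundary, which is the usual motivation for the least-squares form.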

The cycle-consistency loss (\(L_{cycle}\)) is as follows:

The mean squared error (MSE) was used as the identity loss (\(L_{id}\)), imposed on both generators G and F:

The edge loss (\(L_{edge}\)) can be expressed as follows:

The Sobel operators in the x and y directions are:
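The standard 3 \(\times\) 3 Sobel kernels are \(S_x = \begin{bmatrix}-1&0&1\\-2&0&2\\-1&0&1\end{bmatrix}\) and \(S_y = \begin{bmatrix}-1&-2&-1\\0&0&0\\1&2&1\end{bmatrix}\). The sketch below uses them to build an edge map \(\sqrt{(S_x * I)^2 + (S_y * I)^2}\) and compares two edge maps by mean absolute difference. The L1 form of the loss, the choice of image pair (for example, a clear image b and G(b)), and the function names are assumptions.

```python
import math

# Illustrative sketch; function names and the L1 form of the loss are assumptions.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

def filter3x3(img, k):
    """Valid-mode 3x3 cross-correlation; flipping the kernel only changes the sign."""
    m, n = len(img), len(img[0])
    return [[sum(k[i][j] * img[r + i][c + j] for i in range(3) for j in range(3))
             for c in range(n - 2)]
            for r in range(m - 2)]

def sobel_magnitude(img):
    """Edge map sqrt(Gx^2 + Gy^2) from the two Sobel responses."""
    gx, gy = filter3x3(img, SOBEL_X), filter3x3(img, SOBEL_Y)
    return [[math.sqrt(p * p + q * q) for p, q in zip(rx, ry)]
            for rx, ry in zip(gx, gy)]

def edge_loss(img_a, img_b):
    """Mean absolute difference between the Sobel edge maps of two images."""
    ea, eb = sobel_magnitude(img_a), sobel_magnitude(img_b)
    diffs = [abs(p - q) for ra, rb in zip(ea, eb) for p, q in zip(ra, rb)]
    return sum(diffs) / len(diffs)

step = [[0, 0, 1, 1, 1] for _ in range(5)]   # vertical step edge
flat = [[0] * 5 for _ in range(5)]           # edge-free image
print(sobel_magnitude(step)[0])   # [4.0, 4.0, 0.0]
print(edge_loss(step, step))      # 0.0
print(edge_loss(step, flat))      # positive: the edge is missing from `flat`
```

In training, the same computation would run on tensors inside the loss so that gradients reach the generator; TensorFlow provides `tf.image.sobel_edges` for the filtering step.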

Our final loss is defined as the weighted sum of the above four losses:

where the coefficients \(\lambda _1\), \(\lambda _2\), and \(\gamma\) weight the cycle-consistency, identity, and edge losses, respectively, and thus determine the influence of each term on the total loss. They were set empirically to 10, 5, and 10, respectively.
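Assuming the usual CycleGAN composition, the total objective and its auxiliary terms can be written as below, where S(·) denotes the Sobel edge magnitude and \(\lVert\cdot\rVert_1\) a mean absolute difference, with expectations over a \(\in\) A and b \(\in\) B implied. The SSIM-based cycle term follows the description in the main text; the exact image pairings in \(L_{id}\) and \(L_{edge}\) are assumptions.

\[
\begin{aligned}
L &= L_{GAN}(G, D_B) + L_{GAN}(F, D_A) + \lambda_1 L_{cycle} + \lambda_2 L_{id} + \gamma L_{edge},\\
L_{cycle} &= \big[1 - \mathrm{SSIM}(F(G(a)), a)\big] + \big[1 - \mathrm{SSIM}(G(F(b)), b)\big],\\
L_{id} &= \mathrm{MSE}(F(a), a) + \mathrm{MSE}(G(b), b),\\
L_{edge} &= \lVert S(G(b)) - S(b) \rVert_1,
\end{aligned}
\]

with \(\lambda_1\) = 10, \(\lambda_2\) = 5, and \(\gamma\) = 10.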

Experimental settings

The proposed model was implemented in TensorFlow and trained on an NVIDIA GeForce RTX 3090. Given this hardware, each model was trained for 50 epochs with a batch size of 1, using the Adam optimizer with a learning rate of 0.0001. Input images were fixed at 256 \(\times\) 256 pixels during training. All images were acquired on a Hitachi SU8010 SEM at an accelerating voltage of 5 kV.

Synthetic dataset: Three synthetic datasets were created. The first adds only Gaussian blur, a filter that convolves the image with a kernel whose weights follow a Gaussian distribution. In two-dimensional space, the Gaussian function is defined as:

where \(\sigma\) is the standard deviation, which controls the spatial extent of the function. The second dataset adds synthetic fog of random density together with Gaussian blur. The formation of a foggy image can be formulated as:

where I(x, y) and f(x, y) are the foggy and original images, respectively, \(\beta\) is the scattering coefficient, d is the scene depth, and A is the atmospheric light intensity. \(\beta\) is randomly chosen from the range [1.5, 2.5], d = 1, and A = 3. The third dataset adds hybrid blur (Gaussian blur, motion blur, and out-of-focus blur) and synthetic fog. The motion blur can be expressed as:
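Assuming the standard atmospheric scattering model, which uses exactly these three parameters, the foggy image is \(I(x, y) = f(x, y)\,t + A\,(1 - t)\) with transmission \(t = e^{-\beta d}\). A minimal sketch with a single random \(\beta\) per image (the function name and the per-image \(\beta\) are assumptions):

```python
import math
import random

# Illustrative sketch; the function name and a single beta per image are assumptions.
def add_fog(img, beta, d=1.0, light=3.0):
    """Atmospheric scattering model: I = f * t + A * (1 - t), with t = exp(-beta * d)."""
    t = math.exp(-beta * d)          # transmission: fraction of the original signal kept
    return [[v * t + light * (1.0 - t) for v in row] for row in img]

rng = random.Random(0)               # fixed seed so the example is reproducible
beta = rng.uniform(1.5, 2.5)         # random fog density in the range stated above
clean = [[0.2, 0.4], [0.6, 0.8]]
foggy = add_fog(clean, beta)         # d = 1 and A = 3, as stated above
print(round(beta, 3), foggy)
```

Because every pixel is scaled by t and shifted by A(1 - t), the model compresses the contrast of the clean image by the factor t. The spatially varying fog described in the main text would require letting \(\beta\) or d vary per pixel; this sketch keeps them constant.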

where \(\theta\) is the motion-blur angle and d is the motion-blur length; here \(\theta\) = 0\(^{\circ}\) and d = 10 pixels. The out-of-focus blur of a system with a circular aperture can be modeled as a uniform disk of radius r:
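A linear motion-blur kernel spreads weight 1/d uniformly along a line of length d at angle \(\theta\). The sketch below rasterizes that line by sampling d points centered on the kernel origin and rounding them to the nearest pixel; this discretization and the function names are assumptions.

```python
import math

# Illustrative sketch; the rasterization scheme and function names are assumptions.
def round_half_up(v):
    """Round to the nearest integer with .5 going up (avoids banker's rounding)."""
    return math.floor(v + 0.5)

def motion_blur_kernel(length, theta_deg):
    """Linear motion-blur PSF: weight 1/length on `length` samples along angle theta."""
    size = length if length % 2 == 1 else length + 1   # odd size keeps a center pixel
    c = size // 2
    kernel = [[0.0] * size for _ in range(size)]
    t = math.radians(theta_deg)
    for k in range(length):
        s = k - (length - 1) / 2                      # signed offset along the line
        col = c + round_half_up(s * math.cos(t))
        row = c - round_half_up(s * math.sin(t))      # image rows increase downwards
        kernel[row][col] += 1.0 / length              # colliding samples add up
    return kernel

k = motion_blur_kernel(10, 0)   # theta = 0 degrees, d = 10 pixels, as stated above
print(len(k))                   # 11
print([round(v, 2) for v in k[5]])
```

For \(\theta\) = 0\(^{\circ}\) and d = 10 the kernel is a single row of ten weights of 0.1; with an even length the line cannot be centered exactly, so it is offset by half a pixel.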

where r = 5. For all datasets, the Gaussian blur used a 7\(\times\)7 kernel with standard deviation 1. After data augmentation, we obtained 2550 pairs of 256\(\times\)256 images, 10% of which were used for testing. During training, the blurry and clear datasets were randomly shuffled to enable unpaired training.
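The out-of-focus kernel above is, in continuous form, \(h(x, y) = 1/(\pi r^{2})\) for \(x^{2} + y^{2} \le r^{2}\) and 0 otherwise. A discretized sketch for r = 5 assigns equal weight to every pixel inside the disk and normalizes by the pixel count instead of \(\pi r^{2}\); the function name is hypothetical.

```python
# Illustrative sketch; the function name is hypothetical.
def disk_kernel(radius):
    """Out-of-focus PSF: uniform weights on pixels with x^2 + y^2 <= r^2, summing to 1."""
    inside = [[1.0 if x * x + y * y <= radius * radius else 0.0
               for x in range(-radius, radius + 1)]
              for y in range(-radius, radius + 1)]
    count = sum(map(sum, inside))    # discrete counterpart of the disk area pi * r^2
    return [[v / count for v in row] for row in inside]

k = disk_kernel(5)                   # r = 5, as stated above
print(len(k))                                  # 11
print(sum(v > 0 for row in k for v in row))    # 81 pixels lie inside the disk
```

For r = 5 the disk covers 81 pixels, close to \(\pi r^{2} \approx 78.5\). Since convolution is linear and commutative, applying the Gaussian, motion, and disk kernels in any order yields the same hybrid blur, apart from boundary handling and clipping.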

Real dataset: We deliberately contaminated samples to obtain blurry SEM images of weakly conductive samples and collected SEM images of uncontaminated samples as clear images. After data augmentation, we obtained 1550 pairs of 256 \(\times\) 256-pixel images, 10% of which were used for testing.

Data availability

The data used in this study are available upon request to the corresponding author.

References

Goldstein, J. Practical Scanning Electron Microscopy: Electron and Ion Microprobe Analysis (Springer, 2012).

Inkson, B. J. Scanning electron microscopy (SEM) and transmission electron microscopy (TEM) for materials characterization. In Materials Characterization Using Nondestructive Evaluation (NDE) Methods 17–43 (Elsevier, 2016).

Golding, C. G., Lamboo, L. L., Beniac, D. R. & Booth, T. F. The scanning electron microscope in microbiology and diagnosis of infectious disease. Sci. Rep. 6, 1–8 (2016).

Rout, J., Tripathy, S., Nayak, S., Misra, M. & Mohanty, A. Scanning electron microscopy study of chemically modified coir fibers. J. Appl. Polym. Sci. 79, 1169–1177 (2001).

Akhtar, K., Khan, S. A., Khan, S. B. & Asiri, A. M. Scanning Electron Microscopy: Principle and Applications in Nanomaterials Characterization (Springer, 2018).

San Gabriel, M. et al. Peltier cooling for the reduction of carbon contamination in scanning electron microscopy. Micron 172, 103499 (2023).

Sullivan, N., Mai, T., Bowdoin, S. & Vane, R. A study of the effectiveness of the removal of hydrocarbon contamination by oxidative cleaning inside the SEM. Microsc. Microanal. 8, 720–721 (2002).

Soong, C., Woo, P. & Hoyle, D. Contamination cleaning of TEM/SEM samples with the zone cleaner. Microsc. Today 20, 44–48 (2012).

Postek, M. T. An approach to the reduction of hydrocarbon contamination in the scanning electron microscope. Scanning J. Scanning Microsc. 18, 269–274 (1996).

Murtey, M. & Ramasamy, P. Life science sample preparations for scanning electron microscopy. Acta Microsc. 30, 80–91 (2021).

Lin, F. & Jin, C. An improved wiener deconvolution filter for high-resolution electron microscopy images. Micron 50, 1–6 (2013).

Carasso, A. S., Bright, D. S. & Vladár, A. E. APEX method and real-time blind deconvolution of scanning electron microscope imagery. Opt. Eng. 41, 2499–2514 (2002).

Williamson, M. & Neureuther, A. Utilizing maximum likelihood deblurring algorithm to recover high frequency components of scanning electron microscopy images. J. Vac. Sci. Technol. B Microelectron. Nanometer Struct. Process. Meas. Phenom. 22, 523–527 (2004).

Ströhl, F. & Kaminski, C. F. A joint Richardson–Lucy deconvolution algorithm for the reconstruction of multifocal structured illumination microscopy data. Methods Appl. Fluoresc. 3, 014002 (2015).

Lin, Z. et al. DBGANet: Dual-branch geometric attention network for accurate 3D tooth segmentation. IEEE Trans. Circuits Syst. Video Technol. (2023).

Liu, T. et al. An adaptive image segmentation network for surface defect detection. IEEE Trans. Neural Netw. Learn. Syst. (2022).

Shi, M. et al. LMFFNet: A well-balanced lightweight network for fast and accurate semantic segmentation. IEEE Trans. Neural Netw. Learn. Syst. (2022).

Lin, Z. et al. Deep dual attention network for precise diagnosis of COVID-19 from chest CT images. IEEE Trans. Artif. Intell. (2022).

Bai, Y., Zhang, Z., He, Z., Xie, S. & Dong, B. Dual-convolutional neural network-enhanced strain estimation method for optical coherence elastography. Opt. Lett. 49, 438–441 (2024).

Shi, M. et al. Lightweight context-aware network using partial-channel transformation for real-time semantic segmentation. IEEE Trans. Intell. Transp. Syst. (2024).

Wang, Y. et al. Deblurring microscopic image by integrated convolutional neural network. Precis. Eng. 82, 44–51 (2023).

Zhang, C. et al. Correction of out-of-focus microscopic images by deep learning. Comput. Struct. Biotechnol. J. 20, 1957–1966 (2022).

Cheng, A. et al. Improving the neural segmentation of blurry serial SEM images by blind deblurring. Comput. Intell. Neurosci. 2023 (2023).

Fanous, M. J. & Popescu, G. GANscan: continuous scanning microscopy using deep learning deblurring. Light Sci. Appl. 11, 265 (2022).

Zhang, Q. et al. Single-shot deep-learning based 3D imaging of Fresnel incoherent correlation holography. Opt. Lasers Eng. 172, 107869 (2024).

Wang, H. et al. Deep learning enables cross-modality super-resolution in fluorescence microscopy. Nat. Methods 16, 103–110 (2019).

Weigert, M. et al. Content-aware image restoration: Pushing the limits of fluorescence microscopy. Nat. Methods 15, 1090–1097 (2018).

Rivenson, Y. et al. Deep learning microscopy. Optica 4, 1437–1443 (2017).

Huang, T. et al. Single-shot Fresnel incoherent correlation holography via deep learning based phase-shifting technology. Opt. Express 31, 12349–12356 (2023).

de Haan, K., Ballard, Z. S., Rivenson, Y., Wu, Y. & Ozcan, A. Resolution enhancement in scanning electron microscopy using deep learning. Sci. Rep. 9, 12050 (2019).

Na, J., Kim, G., Kang, S.-H., Kim, S.-J. & Lee, S. Deep learning-based discriminative refocusing of scanning electron microscopy images for materials science. Acta Mater. 214, 116987 (2021).

Zhu, J.-Y., Park, T., Isola, P. & Efros, A. A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision 2223–2232 (2017).

Wen, Y. et al. Structure-aware motion deblurring using multi-adversarial optimized CycleGAN. IEEE Trans. Image Process. 30, 6142–6155 (2021).

Jaisurya, R. & Mukherjee, S. Attention-based single image dehazing using improved CycleGAN. In 2022 International Joint Conference on Neural Networks (IJCNN) 1–8 (IEEE, 2022).

Song, J. et al. Unsupervised denoising for satellite imagery using wavelet directional CycleGAN. IEEE Trans. Geosci. Remote Sens. 59, 6823–6839 (2020).

Li, X. et al. Unsupervised content-preserving transformation for optical microscopy. Light Sci. Appl. 10, 44 (2021).

Park, H. et al. Deep learning enables reference-free isotropic super-resolution for volumetric fluorescence microscopy. Nat. Commun. 13, 3297 (2022).

Ning, K. et al. Deep self-learning enables fast, high-fidelity isotropic resolution restoration for volumetric fluorescence microscopy. Light Sci. Appl. 12, 204 (2023).

Wang, Z., Bovik, A. C., Sheikh, H. R. & Simoncelli, E. P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 13, 600–612 (2004).

Ronneberger, O., Fischer, P. & Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, October 5-9, 2015, Proceedings, Part III 18, 234–241 (Springer, 2015).

Holmes, T. J. et al. Light microscopic images reconstructed by maximum likelihood deconvolution. Handbook of Biological Confocal Microscopy 389–402 (1995).

Wiener, N. Extrapolation, Interpolation, and Smoothing of Stationary Time Series: With Engineering Applications (The MIT Press, 1949).

Huynh-Thu, Q. & Ghanbari, M. Scope of validity of PSNR in image/video quality assessment. Electron. Lett. 44, 800–801 (2008).

Ingaramo, M. et al. Richardson-Lucy deconvolution as a general tool for combining images with complementary strengths. ChemPhysChem 15, 794–800 (2014).

Yeoh, W.-S. & Zhang, C. Constrained least squares filtering algorithm for ultrasound image deconvolution. IEEE Trans. Biomed. Eng. 53, 2001–2007 (2006).

Wang, R., Du, L., Yu, Z. & Wan, W. Infrared and visible images fusion using compressed sensing based on average gradient. In 2013 IEEE International Conference on Multimedia and Expo Workshops (ICMEW) 1–4 (IEEE, 2013).

Liu, W., Zhou, X., Jiang, G. & Tong, L. Texture analysis of MRI in patients with multiple sclerosis based on the gray-level difference statistics. In 2009 First International Workshop on Education Technology and Computer Science, vol. 3, 771–774 (IEEE, 2009).

Li, S., Kwok, J. T. & Wang, Y. Combination of images with diverse focuses using the spatial frequency. Inf. Fusion 2, 169–176 (2001).

Acknowledgements

This work was financially supported by the National Key R&D Program of China (2021YFB2900903); the National Natural Science Foundation of China (12004444, 62175041, 62105071); Guangdong Introducing Innovative and Entrepreneurial Teams of “The Pearl River Talent Recruitment Program” (Grant No. 2019ZT08X340); Guangdong Provincial Key Laboratory of Information Photonics Technology (Grant No. 2020B121201011); and the Guangzhou Basic and Applied Basic Research Foundation (No. 2023A04J2043).

Author information

Contributions

X.G. and T.H. performed the computation, acquired and analyzed the data, and wrote the manuscript; P.T. and J.D. provided funding and guided the experiment; L.Z. and W.Z. provided funding and prepared the final version of the manuscript. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gao, X., Huang, T., Tang, P. et al. Enhancing scanning electron microscopy imaging quality of weakly conductive samples through unsupervised learning. Sci Rep 14, 6439 (2024). https://doi.org/10.1038/s41598-024-57056-4


DOI: https://doi.org/10.1038/s41598-024-57056-4
