Abstract

Time series data collected using wireless sensors, such as temperature and humidity, can provide insight into a building’s heating, ventilation, and air conditioning (HVAC) system. Anomalies of these sensor measurements can be used to identify locations of a building that are poorly designed or maintained. Resolving the anomalies present in these locations can improve the thermal comfort of occupants, as well as improve air quality and energy efficiency levels in that space. In this study, we developed a scoring method to identify sensors that shows collective anomalies due to environmental issues. This leads to identifying problematic locations within commercial and institutional buildings. The Dynamic Time Warping (DTW) based anomaly detection method was applied to identify collective anomalies. Then, a score for each sensor was obtained by taking the weighted sum of the number of anomalies, vertical distance to an anomaly point, and dynamic time-warping distance. The weights were optimized using a well-defined simulation study and applying the grid search algorithm. Finally, using a synthetic data set and the results of a case study we could evaluate the performance of our developed scoring method. In conclusion, this newly developed scoring method successfully detects collective anomalies even with data collected over one week, compared to the machine learning models which need more data to train themselves.

Similar content being viewed by others

Introduction

Anomaly detection is one of the most popular research areas in time series data mining. A data point that does not follow the pattern of the rest of the data can be considered an anomaly or outlier. Identifying these anomaly points is important for many industries. Some applications include the detection of abnormal behavior of ECG signals in the health industry1, credit card fraud detection in the banking industry2, anomalous behavior in aircraft3, identifying spammers, online fraudsters in social media4 and many more5.

Nowadays commercial buildings also provide an opportunity to monitor indoor environment quality with the help of Internet of Things (IoT) sensors. These devices measure the environment of the building and generate temporal data which helps building owners to understand the indoor environmental quality (IEQ) and thermal comfort of the tenants. In another research work, we used these sensor data to identify locations with similar thermal environments of a building6. Identifying the issues of the indoor thermal environment is important for building owners to save energy and keep tenants comfortable. In order to identify these issues, we can inspect IoT sensor data and detect abnormal behaviors. The presence of these abnormal behaviors might be occurred due to the issues of the location of those sensors are located.

Existing anomaly detection algorithms can be mainly divided into two categories: supervised and unsupervised. Supervised anomaly detection in sensor data refers to the process of identifying abnormal events or patterns in data using labeled training data. The supervised anomaly detection methods have certain drawbacks including limited anomaly labeling, sensitivity to labeling errors, difficulty to identify novel anomalies and insufficient data. Due to these limitations, most anomaly detection methods use unsupervised or semi-supervised techniques. In 2021, Yan7 introduced a generative adversarial network-based (GAN8) chiller fault detection framework. However, the framework used a labeled training dataset, which identifies the specific fault to generate synthetic data. In our study, where we work with Internet of Things (IoT) sensor data, we do not have any labels, and the anomaly patterns are subject to change. Because of these reasons, unsupervised methods are more suitable for our study.

Unsupervised methods in anomaly detection do not rely on labeled anomaly data during training. Instead, they learn patterns and structures inherent in the data to detect anomalies based on deviations from the learned normal behavior. Some state-of-the-art approaches for unsupervised anomaly detection include Robust KDE (RKDE)9, Local Outlier Factor (LOF)10, mixture models (EGMM)11, one-class SVM (OCSVM)12, and autoencoders13, Isolation Forest (IForest)14. However, unsupervised methods also may have limitations in certain cases, such as difficulty in identifying the types of anomalies or sensitivity to the data distribution. Most of the unsupervised methods rely on the distribution and need to have proper parameter tuning. Quintana and the team have used Automated Load profile Discord Identification(ALDI)15 to identify outliers in energy load profiles. But this method identifies outliers by testing a hypothesis for significantly different time series distributions. In our method we do not rely on the distribution, but the temporal behavior of the time series.

Transitioning to the application of this framework in building science, previous studies have primarily concentrated on pinpointing abnormal data points in a time series. However, a single abnormal point may not necessarily signify a problem within a specific building location. For a more comprehensive understanding, we must look beyond single-sensor anomalies and instead concentrate on clusters of abnormal behaviors across all sensors on a building floor. When the same abnormal behavior at the same time is observed in multiple sensors, it suggests a higher likelihood of a building anomaly. This approach allows us to identify potential issues with the building’s HVAC system, control system, external envelope, or other infrastructure problems.

In 2019, Wang and his team has proposed an anomaly detection framework for thermal comfort in buildings16. It is a stochastic-based, two-step anomaly detection framework that is based on occupants’ votes. An outlier is automatically flagged when a vote is significantly different from other occupants’ votes. In the building industry, Fault diagnosis and detection (FDD) is an application of anomaly detection that monitors building HVAC systems to identify faults17. Graph-based anomaly detection methods also has been introduced by some researchers18,19 Another research work20 was done to detect anomalies in indoor office space by predicting values using long short-term memory (LSTM). They have used IoT sensors to collect temperature and humidity data.

The majority of existing research21,22,23,24 have considered identifying abnormal points of a single time series (intra-time series anomaly detection). But, these single abnormal points can be raised due to equipment failures within the data collection hardware itself, which may lead to a false positive or false negative. Lieu et al.21 have used energy data, and a data point, which has fallen outside the \(95\%\) confidence bounds, is considered as an abnormal consumption. By dividing univariate time series into subsequences, Debanjana and Harry22 could classify a data point as an anomaly or normal point using one-class support vector machines (OC-SVM) in 2020. In Wei et al.’s paper23, an unsupervised temperature anomaly detection method is proposed to detect anomalies in real-time temperature time series. It sets dynamic thresholds based on the Smoothed Z-Score Algorithm.

The importance of our study is that it identifies abnormal time series compared to every time series that we use in a study (inter-time series anomalies) and proposes a novel method to identify collective anomalies which can occur due to environmental issues. Furthermore, this method operates without the need for a labeled large training dataset and does not depend on the distribution of time series data. Ultimately the locations of these sensors can be considered as the locations that indicate a potential issue within the HVAC system or building construction. The scoring method development considered the number of anomalies, the vertical distance between the average point and an abnormal point, and the DTW distance between the average time series and the given time series. In the end, we applied the developed scoring method in a school building, located in New York, USA, and a synthetic data set, and discussed the results.

Methods

Anomaly detection

An anomaly is something that deviates from what is standard or expected25. The anomaly detection problem for time series is usually formulated in a way that can identify outlier data points relative to some usual signal. Anomaly detection within the context of buildings has real-world implications. Anomalies in building IEQ data can be caused by either environmental issues or hardware issues. A collective anomaly could identify inefficient controls or HVAC systems or could point to occupant behaviors negatively impacting the energy use within that space26. Contextual anomalies (as defined by a greater variation from the average within that same space) could identify failing or poorly calibrated hardware, or failure points within a building’s mechanical or construction environment. Figure 1 shows the difference between these contextual and collective anomalies.

There are many approaches to detecting an anomaly in time-series data27 and a few common methods are presented in Table 1. Seasonal Trend Decomposition28 is one of the classic methods, and it uses a threshold to identify anomalies. Isolation Forest29 is based on the classification models, and it does not consider any distance measures. The forecasting method30 predicts the next point and based on the identified pattern from the past data it tries to identify anomalies. All these three methods have different methods of identifying anomalies. Hence we applied these three anomaly detection methods and DTW based anomaly detection method, which is explained in the next section, to sample time series data and selected the best method. The best method should identify collective anomalies, as they represent environmental issues.

Anomaly detection method based on dynamic time warping

This method is based on the anomaly detection method, which was introduced by Diab and team31. It has a control time series and a data time series. Anomalies are identified based on the distance between points of the optimal path. The optimal path means the optimal match between the control series and the given time series. The basic steps of this algorithm are as follows:

-

1.

Identify the optimal similarity path between two series (using DTW).

-

2.

Calculate Euclidean distances between those points in the optimal path.

-

3.

Calculate median absolute deviation (MAD) of distances.

-

4.

Consider the points which are 3 times MAD away from the median as outliers.

-

5.

Three or more consecutive outliers are considered as anomalies.

The importance of this algorithm is, it considers three or more consecutive outliers as anomalies. It detects only the collective anomalies, which are caused by environmental issues. However, this algorithm identifies anomalies based on a control sequence, but in practical situations, we will not always be able to find control sequences. Also, this method detects anomalies within single time series. But in our study, we want to identify abnormal time series compared to the all time series (inter-time series anomaly detection). Then it helps to identify abnormal sensors and locations with issues. Due to these limitations, we did some alterations to the existing method in order to apply it in our research study.

Instead of the control sequence, we used the median time series, which was created by calculating the median values of all the time series at each time point. Also when calculating the MAD value, we calculated all the distance values, comparing the median time series with each time series. By calculating MAD in that way, we could consider all the time series and identify the anomalies.

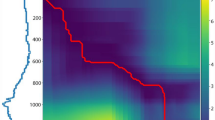

Dynamic time warping

Dynamic Time Warping (DTW)32 measures the distance between two arrays or time series. DTW is a method that calculates an optimal match between two given sequences. This method allows us to find the distance between sequences of different lengths. Let A and B be two sequences with length \(L_A\) and \(L_B\) respectively. \(a_i\) and \(b_j\) indicate the ith and jth observations of A and B respectively. Then the pairwise euclidean distances33 can be calculated for each observation of A and B. It will yield the \(L_A \times L_B\) distance matrix S. The cumulative distance matrix D is calculated as in Eq. (1). The matrix D captures the total cost of alignment between the \(a_1,b_1\) and \(a_{L_A},b_{L_B}\). A lower total cost shows a higher similarity between the two sequences.

In the above equation \(i=1,\ldots ,L_A\), \(j=1,\ldots ,L_B\) and \(S_{ij} = d(a_i,b_j)\). The final distance between A and B is the bottom corner value of the cumulative distance matrix D, which is \(D(L_{A},L_B)\)

Median absolute deviation

The Median Absolute Deviation (MAD) is a robust measure of how a set of data is spread out. The variance and standard deviation are also measures of spread, but they are more affected by extremely high or extremely low values. Also, in practical situations, it is hard to get normally distributed data. Hence, the MAD is one statistic that we can use instead. It is less affected by outliers because outliers have a smaller effect on the median than they do on the mean. MAD is defined as follows,

Here \(X_i\) represents ith observation and according to our study X means the list distances between each data point of the optimal path, created using each time series and the control time series.

Abnormal sensor detection method

In this section, we explain the method we developed to identify sensors that can be considered abnormal compared to a group of sensors positioned within a building. The locations where those abnormal sensors are located can be considered as problematic areas of the building. Algorithm 1 summarizes the process of our algorithm.

We developed a scoring method to identify abnormal sensors, and identifying the main parameters which help to detect differences between two-time series was important. Considering literature and observations we decided to use the following parameters to compare the difference between the control and the given time series:

-

1.

Number of anomalies. (based on section “Anomaly detection method based on dynamic time warping”).

-

2.

Median of vertical Euclidean distances between outlier points, and control time series.

-

3.

DTW distance between time series (check the similarity of the patterns).

We calculated the above values for all the sensors and normalized using min-max normalization and bring all values into one scale, from 0 to 1. Not all these three parameters have the same level of importance when detecting the difference between control and other time series. Hence, we considered generating a weighted score as above in algorithm 1, step 2.4, and ranking the time series based on these scores. However, since there is no historical data, on which are labeled as abnormal or not, optimizing the weights in a way that the abnormal sensors get the highest score was another challenge. Hence to overcome this, we had to develop a method to scale the weights using a simulation study.

Simulation study

Since we have no information about the actual abnormal sensors, we developed a simulation study to generate a set of ‘normal’ time series, and an ‘abnormal’ by considering the control time series. The flow chart in Fig. 2 summarizes the simulation work.

First, we had to identify the time series model of the median time series in order to generate a set of normal time series. Here we considered auto-regressive model (AR), moving-average model (MA), Auto Regressive Moving Average (ARMA), and Auto-Regressive Integrated Moving Average (ARIMA) models which are expressed using the Eqs. (3), (4), (5) and (6) respectively. In these equations, \(y_t\) means y, response variable, measured at time t, and \(\beta\), alpha values represent the coefficients of the AR model and MA model respectively. \(\varepsilon _t\) expresses the randomness, c is the constant factor, \(\theta _i\) indicates the numeric coefficient for the value associated with the ith lag, and \(\varepsilon\) represents the residual. The differenced time series of ARIMA model is indicated by \({y_t}'= y_t-y_{t-1}\).

The Augmented Dickey–Fuller test34 is used to check the stationarity of the time series. If the time series is stationary, we proceeded to AR, MA, or ARMA models and selected the best model among them using the lowest AIC (Akaike Information Criterion) and BIC (Bayesian Information Criterion) values35. Otherwise we had to take the difference to convert to stationary series and consider the ARIMA model with the rest of the models. Partial Autocorrelation (PACF) plots and Autocorrelation (ACF) plots36 were used to find the order of AR and MA respectively. The differencing order of the ARIMA model is based on the number of times we took the difference to make the series stationary. After identifying the model we simulate 10 time series from the selected model.

The next step was generating an abnormal time series which showed unusual behavior compared to the rest of the time series. For that, we applied the AR(1) plus noise state-space model37 to generate our abnormal time series and it gave a distinguishable time series as in Fig. 3. The AR(1) plus state space model can be explained as follows.

Here Eq. (7) is the observation equation, and Eq. (8) is the state equation. \(x_t\) denotes the state at time t, the transition matrix is \([\phi ]\), the observation matrix is [1], and the transition offset is c. The observation and transition noises, \(WN(0,\sigma ^2)\) are indicated by \(\varepsilon _t\) and \(w_t\) respectively. The expectation of state is \(E[X_t] = \frac{c}{1-\phi }\).

Now we have 11 time series: the first 10 time series are normal and the 11th series is abnormal. Using these 11 time series, we generated a median time series and calculated anomaly count, vertical distance, and DTW distance. Then we used the grid search optimization method38 to optimize the weights so that the abnormal sensor has the highest score. Also, the difference between the lowest score and the maximum score should be maximized. Here the score shows the abnormality of the sensor out of 100. The following algorithm 2 explains the weight-optimizing process.

Data collection and preparation

In order to test our algorithm, we used data from a school building in the USA and synthetic data which contains both collective and contextual anomalies. The school building is located in New York, USA. New York school building’s data39 were collected in 2018 from March to July (summer season), and 11 sensors were placed inside the building to collect data. Sensors were placed in different locations throughout the school building in a variety of different rooms, and collected temperature data over a period of time.

For the synthetic dataset, we used an existing time series dataset and recreated similar time series with contextual anomalies and one different time series with collective anomalies. This synthetic dataset will help us to evaluate whether our method is capable of detecting the most abnormal time series due to collective anomalies. The following two plots in Fig. 4 show the variations and trends of the temperature time series of the synthetic data and the school building data.

Based on Fig. 4, in synthetic data, we can clearly see some collective outliers in the ‘orange’ color time series. Other time series are similar to mean time series, but they show some contextual (single) outliers. Since our algorithm should identify anomalies dues to environmental issues, it should identify the ‘orange’ color time series as the most abnormal one. In New York building’s we can see lots of abnormal points and sensor data with different patterns and higher variation. However, our method should be able to identify abnormal sensors in both scenarios, where the variation is high and low.

Results

Current anomaly detection methods vs DTW based anomaly detection method

Popular anomaly detection methods mainly identify abnormal points, not an abnormal time window. In this study, we tested the anomaly detection ability of some of these popular methods: STL decomposition, Isolation Forest, Forecasting method, and DTW method. Plots in Fig. 5 show a sample temperature time series from a building and how each different method identifies anomalies of that time series. Temperature sharply decreases from 23 to \(21\,^{\circ }\)C and increases back to \(23\,^{\circ }\)C within a short period of time. Facility managers would like to identify these type of time windows, which shows indoor environmental abnormal behaviors.

Based on the results in Fig. 5 we could see that only the DTW-based method identifies collective anomalies in a perfect way that we want to detect building anomalies. Hence we proceeded with the DTW-based method described previously. This method helped to identify abnormal time windows, not only the abnormal points, providing more applicable results in order to identify abnormal time series in buildings.

Abnormal sensors detection

New York school building

In this section, we will discuss the results of the abnormal sensors detection process of the New York building. All the sensors are zone temperature thermostats, measuring temperature in a variety of room types. Room categories include gymnasiums, science wings, locker rooms, auditoriums, and more. As we explained in the methodology section, first we generated the median time series and considered it as the control time series. Figure 6 shows temperature vs time plots which contain all the sensors and the median time series of those sensor data.

Then we needed to identify the time series model which fits best for the median time series. First, we checked the stationarity of the time series using the Augmented Dickey–Fuller test. Based on the results the time series was a non-stationary time series (p = 0.0835; failed to reject the null hypothesis). Then we had to choose the ARIMA model instead of the AR, MA, and ARMA models. After the 1st order differencing, the p-value drops beyond the 0.05 threshold. Hence we could consider the order of differencing as 1. To fit the models, we had to identify the order of AR and MA models and the following PCAF and ACF plots in Fig. 7 helped us to identify those orders.

Based on the PACF, since there are strong correlations till lag = 1, we assigned 2 as the order of the AR model. In the ACF plot, there are lots of strong correlations and because of that, we used 1 as the order of the MA model, as it is better to consider a less complex model. Using the orders of AR, and MA processes and order of differencing we fitted the ARIMA model, ARIMA(1,1,1). Then we used the estimated parameters of the ARIMA model to simulate the time series. The fitted ARIMA model is:

The original median time series had an increasing trend and using the differencing method we could detrend and make it stationary to fit the time series model. Once we detrended the time series we could compare the de-trended time series with the simulated time series. Figure 8 shows the original median, de-trended, and a simulated time series.

After that, we generated an abnormal time series and using the grid search, we explained in algorithm 2, we could optimize the weights of the score function (\(\psi\)). The final score function of ith time series of this building is as follows:

The final score values and ranks of each sensor, based on the anomaly count, vertical distance and DTW distance compared to the real median time series, are shown in the following Table 2. Based on the table ‘sensor 222238’ can be considered as the most abnormal sensor while ‘sensor 222365’ is the least abnormal sensor. It can be clearly seen that ‘sensor 222365’ follows the median time series though it has one outlier point in the middle of the time series. Figure 9 shows the most abnormal and least abnormal sensors compared to the median time series.

Synthetic data set

This section explains the results of anomaly detection of synthetic data. As with the New York analysis, first, we had to take the median time series by considering the time series of all sensors. The time series of each sensor and median time series are shown in Fig. 10.

We checked the stationarity of the median time series which was found to be stationary (p-value = 0). The difference was taken in order to make the median time series stationary. Then we end up with ARMA (2,1) model as the best-fitted model. The fitted ARMA model is,

Then we generated an abnormal time series and 10-time series using the selected model. Using those time series and grid search methods we found the weights for the score function of each ith time series as follows,

After that, we calculated the actual anomaly count, mean vertical distance from an anomaly to the respective median time series points, and DTW distance for each sensor. Scores were found for each sensor using the score function which is summarized in the Table 3.

Based on the scores, the ‘sensor S5’ can be considered as the most abnormal sensor while the ‘sensor s3’ is the least abnormal sensor. Figure 11 shows the difference between these two time series plots. The most abnormal sensor clearly stands out from the median time series.

Discussion and conclusion

This study was conducted in order to identify the locations with abnormal behaviors of indoor temperature, and we selected a school building and a synthetic dataset to test our method. Based on the results of those datasets, it is clearly shown that our algorithm using the weighted scoring system is capable of identifying sensors with abnormal behaviors. Sensors with low scores show a better alignment with the median time series while the sensors with higher scores show significant changes in the pattern of time series when compared with the median series.

Identifying abnormal performances of buildings is important for many parties in different ways. It helps building occupants improve their own comfort, while owners can save money by reducing energy waste and utility costs. Hence anomaly detection in buildings is an interesting research work, which is attracted by many building owners, tenants and researchers.

Our study offers significant advantages over existing models: it can effectively identify anomalies without the need for labeled training data, making it highly versatile even with a small dataset. Furthermore, this method does not depend on the distribution of time series data, enhancing its applicability. Despite its simplicity, it effectively identifies anomalies based on domain knowledge in the field of building science.

This anomaly detection process in buildings can help identify areas of concern that are difficult to find observationally or require a significant and costly energy audit to diagnose. These anomalies can be tied back to issues with a building’s mechanical system, spaces that are not conditioned to their real occupancy use, inefficient control systems, inefficient building envelope, insulation or window issues, and beyond. Anomaly detection could also help identify thermostats or other data collection equipment that are poorly performing or miscalibrated and may be transmitting false data that has a negative impact on that space.

However, an additional benefit of this methodology is to be able to test a building on multiple occasions to verify long-term trends and any degradation or loss of indoor environmental quality over time. A pattern of increasing frequency or severity in anomaly detection can be a key indicator of failing or degrading building conditions. Preventative analytics and fault detection can help to identify the presence of these issues before they reach a failure point, which will allow facilities managers to proactively identify and resolve known issues.

Though we used the median time series as the control time series, building owners and operators can change it to a known control time series, if available within the building. For example, if the building is on a temperature setpoint schedule, instead of the median time series, they can easily change this model to identify sensors that do not follow the setpoint schedule.

Not all anomalies are ignorable, and sometimes we do not want to consider some series as abnormal series, though we have some degree of abnormality. Hence, for future work, we are planning to improve our algorithm in a way that can identify whether the anomaly is acceptable or ignorable automatically. In this study, we only considered univariate anomalies, but in the future, we would like to develop a multivariate abnormal sensor detection algorithm as it can help to identify the performance issues of the building, quicker and more accurately than a human could.

Data availability

Data used in this paper is available upon request. Please contact the corresponding author (A.W.) for data.

References

Chuah, M. & Fu, F. Ecg anomaly detection via time series analysis. In Frontiers of High Performance Computing and Networking ISPA 2007 Workshops, 123–135 (2007).

Moschini, G., Houssou, R., Bovay, J. & S, R.-N. Anomaly and fraud detection in credit card transactions using the arima model. ArXiv (2021).

Puranik, T. & Mavris, D. Anomaly detection in general aviation operations using energy metrics and flight data records. J. Aerosp. Inf. Syst. 15, 22–35 (2018).

Savage, D., Zhang, X., Yu, X., Chou, P. & Wang, Q. Anomaly detection in online social networks. Ann. Appl. Stat., 645–662 (2010).

da Silva Arantes, J., da Silva Arantes, M., Fröhlich, H., Siret, L. & Bonnard, R. A novel unsupervised method for anomaly detection in time series based on statistical features for industrial predictive maintenance. Int. J. Data Sci. Anal. 12, 383–404 (2021).

Wickramasinghe, A., Muthukumarana, S., Loewen, D. & Schaubroeck, M. Temperature clusters in commercial buildings using k-means and time series clustering. Energy Informatics (2022).

Yan, K. Chiller fault detection and diagnosis with anomaly detective generative adversarial network. Build. Environ. 201, 107982 (2021).

Goodfellow, I. J. et al. Generative adversarial networks (2014).

Kim, J. & Scott, C. D. Robust kernel density estimation. J. Mach. Learn. Res. 13, 2529–2565 (2012).

Breunig, M., Kröger, P., Ng, R. & Sander, J. LOF: Identifying Density-based Local Outliers, vol. 29, 93–104 (2000).

Fu, H., Ma, H. & Ming, A. EGMM: An enhanced gaussian mixture model for detecting moving objects with intermittent stops, 1–6 (2011).

Muller, K.-R., Mika, S., Ratsch, G., Tsuda, K. & Scholkopf, B. An introduction to kernel-based learning algorithms. In IEEE Transactions on Neural Networks, 181–201 (2001).

Chen, J., Sathe, S., Aggarwal, C. & Turaga, S. D. Outlier Detection with Autoencoder Ensembles, 90–98 (2017).

Liu, F. T., Ting, K. & Zhou, Z.-H. Isolation Forest, 413 – 422 (2009).

Quintana, M. et al. Aldi++: Automatic and parameter-less discord and outlier detection for building energy load profiles. Energy Build. 265, 112096 (2022).

Wang, Z., Parkinson, T., Li, P., Lin, B. & Hong, T. The squeaky wheel: Machine learning for anomaly detection in subjective thermal comfort votes. Build. Environ. 151, 219–227 (2019).

Bing, Y., Van Paassen, D. & Riahy, S. General modeling for model-based FDD on building HVAC system. Simul. Pract. Theory 9, 387–397 (2002).

Li, G. & Jung, J. Entropy-based dynamic graph embedding for anomaly detection on multiple climate time series. Sci. Rep. 11, 1–10 (2021).

Zheng, M., Domanskyi, S., Piermarocchi, C. & Mias, G. I. Visibility graph based temporal community detection with applications in biological time series. Sci. Rep. 11, 1–12 (2021).

YooSeok, J., TaeWook, K. & Chanjun, C. Anomaly analysis on indoor office spaces for facility management using deep learning methods. J. Build. Eng. 43, 103139 (2021).

Liu, F., Lee, Y., Jiang, H., Snowdon, J. & Bobker, M. Statistical modeling for anomaly detection, forecasting and root cause analysis of energy consumption for a portfolio of buildings. In Proceedings of Building Simulation 2011: 12th Conference of International Building Performance Simulation Association, 2530–2537 (2011).

Debanjana, N. & Harry, P. Automated real-time anomaly detection of temperature sensors through machine-learning. In International Journal of Sensor Networks, 137–152 (Inderscience Publishers, 2020).

Wei, L., Hongyi, J., Dandan, C., Lifei, C. & Qingshan, J. A real-time temperature anomaly detection method for IoT data. In 5th International Conference on Internet of Things, Big Data and Security, 112–118 (2020).

Benkő, Z., Bábel, T. & Somogyvári, Z. Model-free detection of unique events in time series. Sci. Rep. 12, 1–17 (2022).

Foorthuis, R. On the nature and types of anomalies: A review of deviations in data. Int. J. Data Sci. Anal. 12, 297–331 (2021).

Yassine, H., Khalida, G., Abdullah, A., Faycal, B. & Abbes, A. Artificial intelligence based anomaly detection of energy consumption in buildings: A review, current trends and new perspectives. Appl. Energy 287, 116601 (2021).

Schmidl, S., Wenig, P. & Papenbrock, T. Anomaly detection in time series: A comprehensive evaluation. Proc. VLDB Endow. 15, 1779–1797 (2022).

Robert, C., William, C. & Irma, T. Stl: A seasonal-trend decomposition procedure based on loess. J. Off. Stat. 6, 3–73 (1990).

Liu, F., Ting, K. & Zhou, Z. Isolation-based anomaly detection. In ACM Transactions on Knowledge Discovery from Data (TKDD) 3 (2012).

Bajaj, A. Anomaly detection in time series (2022). https://neptune.ai/blog/anomaly-detection-in-time-series. Accessed 20 April 2022.

Diab, M. et al. Anomaly detection using dynamic time warping. In 2019 IEEE International Conference on Computational Science and Engineering (CSE) and IEEE International Conference on Embedded and Ubiquitous Computing (EUC), 193–198 (2019).

Tormene, P., Giorgino, T., Quaglini, S. & Stefanelli, M. Matching incomplete time series with dynamic time warping: An algorithm and an application to post-stroke rehabilitation. Artif. Intell. Med. 45, 11–34 (2008).

Gower, J. Properties of Euclidean and non-Euclidean distance matrices. Linear Algebra Appl. 67, 81–97 (1985).

Fuller, W. A. Introduction to Statistical Time Series (Wiley, 1976).

Brockwell, P. & Davis, R. Time Series: Theory and Methods 2nd edn. (Springer, 2009).

Mills, T. C. Time Series Techniques for Economics (Cambridge University Press, 1990).

Lopes, H. F. Ar(1) plus noise model (2017). http://hedibert.org/wp-content/uploads/2017/06/AR1plusnoise-blockmove-singlemove-fixedparameters.html. Accessed 15 April 2022.

Muhammad, J. K. Grid search optimization algorithm in python (2020). https://stackabuse.com/grid-search-optimization-algorithm-in-python/

Biam, P. Rtem hackaton api and data science tutorials (2022). https://www.kaggle.com/datasets/ponybiam/onboard-api-intro?select=all_points_metadata.csv/. Accessed 03 May 2022.

Acknowledgements

The authors thank the editor and anonymous reviewers whose comments helped improve this manuscript and New York State Energy Research and Development Authority for the data contribution. The authors have been partially supported by Research Manitoba and the Natural Sciences and Engineering Research Council of Canada. The developed code is considered the intellectual property of the industrial partner. However, we are willing to share the code for research purposes upon request.

Author information

Authors and Affiliations

Contributions

A.W. performed the analysis and drafted the manuscript with the help of M.S. S.M. supervised the research. S.N.W. provided critical feedback. All authors helped shape the research, analysis and reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wickramasinghe, A., Muthukumarana, S., Schaubroeck, M. et al. An anomaly detection method for identifying locations with abnormal behavior of temperature in school buildings. Sci Rep 13, 22930 (2023). https://doi.org/10.1038/s41598-023-49903-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-49903-7

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.