Abstract

The grey wolf optimizer (GWO) is a novel type of swarm intelligence optimization algorithm. An improved grey wolf optimizer (IGWO) with evolution and elimination mechanism was proposed so as to achieve the proper compromise between exploration and exploitation, further accelerate the convergence and increase the optimization accuracy of GWO. The biological evolution and the “survival of the fittest” (SOF) principle of biological updating of nature are added to the basic wolf algorithm. The differential evolution (DE) is adopted as the evolutionary pattern of wolves. The wolf pack is updated according to the SOF principle so as to make the algorithm not fall into the local optimum. That is, after each iteration of the algorithm sort the fitness value that corresponds to each wolf by ascending order, and then eliminate R wolves with worst fitness value, meanwhile randomly generate wolves equal to the number of eliminated wolves. Finally, 12 typical benchmark functions are used to carry out simulation experiments with GWO with differential evolution (DGWO), GWO algorithm with SOF mechanism (SGWO), IGWO, DE algorithm, particle swarm algorithm (PSO), artificial bee colony (ABC) algorithm and cuckoo search (CS) algorithm. Experimental results show that IGWO obtains the better convergence velocity and optimization accuracy.

Similar content being viewed by others

Introduction

The swarm intelligence algorithms are proposed to mimic the swarm intelligence behavior of biological in nature, which has become a hot of cross-discipline and research field in recent years. The appearance of swarm intelligent optimization algorithm provides the fast and reliable methods for finding solutions on many complex problems1,2. Because the swarm intelligence algorithm have characteristics of self-organization, parallel, distributive, flexibility and robustness, now they have been very widespread used in many cases, such as electric power system, communication network, system identification and parameter estimation, robot control, transportation and other practical engineering problems3,4,5. Therefore, the research on the swarm intelligence optimization algorithms has an important academic value and practical significance.

At present, a variety of swarm intelligence optimization algorithms have been proposed by simulating the biotic population and evolution process in nature, such as particle swarm optimization (PSO) algorithm, shuffled frog leaping algorithm (SFLA), artificial bee colony (ABC) algorithm, ant colony optimization (ACO) algorithm, biogeography-based optimization (BBO) algorithm, and cuckoo search (CS) algorithm. Particle Swarm Optimization (PSO) algorithm put forward by Kennedy and Eberhart to mimic the the foraging behavior of birds and fish flock6, but the convergence velocity and searching accuracy of PSO algorithm are unsatisfactory to some extend. Shuffled Frog-leaping Algorithm (SFLA) put forward by Eusuff in 2003 is a novel swarm intelligent cooperative searching strategy based on the natural memetics7,8. On the one hand, individuals exchange information in the global searching process, and its search precision is high. On the other hand, SFLA has the disadvantage of slow convergence velocity and easy to falling into the local optimum. Artificial Bee Colony (ABC) Algorithm put forward by Karaboga in 2005 to mimics the finding food source behavior of bees9. In order to mimic the social behavior of the ant colony, Dorigo et al. Proposed the an novel Ant Colony Optimization (ACO) Algorithm in 200610. But their disadvantages are the slow convergence speed and easy to premature. Biogeography-Based Optimization (BBO) algorithm was put forward by Simon in 200811, whose idea is based on the geographical distribution principle in the biogeography. Cuckoo Search (CS) Algorithm was proposed by Yang and Deb in 2009 based on the cuckoo’s parasitic reproduction mechanism and Levy flights searching strategy12,13, whose advantage is that CS algorithm is not easy to fall into the local optimum compared with other intelligent algorithms and has less parameters, and whose disadvantage is that the adding of Levy Flight search mechanism leads to strong leap in the process of search, thus, its local search is not careful.

The common shortcoming of these algorithms is that each swarm intelligence algorithm has problem in different degrees that the convergence velocity is slow, the optimization precision is low, and easy to fall into the local optimum5. The key reason cause these shortcoming is that whether an algorithm is able to achieve the proper compromise between exploration and exploitation in its each searching phase or not14. Exploration and exploration are contradictory. Exploration reflects the ability of the algorithm to search for new space, while exploration reflects the refining ability of the algorithm. These two criteria are generally used to evaluate stochastic optimization algorithms. Exploration is refers to that a particle leave the original search path in a certain extent and search towards a new direction, which reflects the ability of exploiting unknown regions. Exploitation is refers to that a particle continue to search more carefully on the original trajectory in a certain extent, which can insure the wolf make a detailed search to the region that have been explored. Too small exploration can cause a premature convergence and falling into a local optimum, however, too small exploitation will make the algorithm converge too slowly.

The grey wolf optimizer (GWO) as a novel swarm intelligent optimization algorithm was put forward by Seyedali Mirjalili etc in 2014, which mainly mimics wolf leadership hierarchy and hunting mechanism in nature15. Seyedali and Mirjalili etc has proved that the optimization performance of standard GWO is superior to that of PSO, GSA, DE and FEP algorithm. Due to the wolves algorithm with the advantages of simple in principle, fast seeking speed, high search precision, and easy to realize, it is more easily combined with the practical engineering problems. Therefore, GWO has high theoretical research value. But GWO is as a new biological intelligence algorithm, the research about it is just at the initial phase, so research and development of the theory are still not perfect. In order to make the algorithm plays a more superior performance, further exploration and research is needed.

Many swarm intelligence algorithms are mimic the hunting and searching behaviors of some animals. However, GWO simulates internal leadership hierarchy of wolves, thus, in the searching process the position of best solution can be comprehensively assessed by three solutions. But for other swarm intelligence algorithms, the best solution is searched only leaded by a single solution. So GWO can greatly decrease the probability of premature and falling into the local optimum. So as to achieve the proper compromise between exploration and exploitation, an improved GWO with evolution and elimination mechanism is proposed. The biological evolution and the SOF principle of biological updating of nature are added to the basic wolf algorithm. In order to verify the performance of the improved GWO, 12 typical benchmark functions are adopted to carry out simulation experiments, meanwhile, experimental results are compared with PSO algorithm, ABC algorithm and CS algorithm. The experimental results show that the improved grey wolf optimizer (IGWO) obtains the better convergence velocity and optimization accuracy.

The paper is organized as follows. In section 2, the grey wolf optimizer is introduced. A grey wolf optimizer with evolution and SOF mechanism is presented in section 3. In section 4, the simulation experiments are carried out and the simulation results are analyzed in details. Finally, the conclusion illustrates the last part.

Grey Wolf Optimizer

The grey wolf optimizer is a novel heuristic swarm intelligent optimization algorithm proposed by Seyedali Mirjalili et al. in 2014. The wolf as top predators in the food chain, has a strong ability to capture prey. Wolves generally like social life and in the interior of the wolves exists a rigid social hierarchy15.

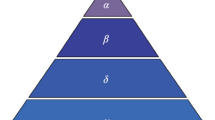

In order to mimic wolves internal leadership hierarchy, the wolves is divided into four types of wolf: alpha, beta, delta and omega, where the best individual, second best individual and third best individual are recorded as alpha, beta, and delta, and the rest of the individuals are considered as omega. In the GWO, the hunting (optimization) is guided by alpha, beta, and delta8. They guide other wolves (W) tend to the best area in searching space. In iterative searching process, the possible position of prey is assessed by three wolves alpha, beta, and delta. In optimization process, the locations of wolves are updated based on Eqs (1) and (2).

where, t represents the t-th iteration, \(\overrightarrow{A}\) and \(\overrightarrow{C}\) are coefficient vector, \(\overrightarrow{{X}_{P}}\) is the position vector of prey, \(\overrightarrow{X}\) represents the wolf position. The vector \(\overrightarrow{A}\) and \(\overrightarrow{C}\) can be expressed by:

where, the coefficient \(\overrightarrow{a}\) linearly decreases from 2 to 0 with the increasing of iteration number, \(\overrightarrow{{r}_{1}}\) and \(\overrightarrow{{r}_{2}}\) are random vector located in the scope [0, 1].

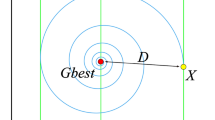

Principle of the position updating rules described in Eqs (1) and (2) are shown in Fig. 1. It can be seen from Fig. 1 the wolf at the position (X, Y) can relocate itself position around the prey according to above updating formulas. Although Fig. 1 only shows 7 positions that the wolf possible move to, by adjusting the random parameters C and A it can make the wolf to relocate itself to any position in the continuous space near prey. In the GWO, it always assumes that position of alpha, beta and delta is likely to be the prey (optimum) position. In the iteration searching process, the best individual, second best individual and third best individual obtained so far are respectively recorded as alpha, beta, and delta. However, other wolves who are regarded as omega relocate their locations according to the locations of alpha, beta, and delta. The following mathematical formulas are used to re-adjust positions of the wolf omega. The conceptual model that wolf update its position is shown in Fig. 2.

where, \(\overrightarrow{{X}_{\alpha }}\), \(\overrightarrow{{X}_{\beta }}\) and \(\overrightarrow{{X}_{\delta }}\) are the position vector of alpha, beta, and delta, respectively. \(\overrightarrow{{C}_{1}}\), \(\overrightarrow{{C}_{2}}\), \(\overrightarrow{{C}_{3}}\) are randomly generated vectors, \(\overrightarrow{X}\) represents the position vector of current individual. The Eqs (5), (6) and (7) respectively calculate the distances between the position of current individual and that of individual alpha, beta, and delta. So the final position vectors of the current individual are calculated by:

where, \(\overrightarrow{{A}_{1}}\), \(\overrightarrow{{A}_{2}}\), \(\overrightarrow{{A}_{3}}\) are randomly generated vectors, and t represents the number of iterations.

In the plane, three points is able to determine a region. Thus, the scope of position of the prey can be determined by the best three wolves. The GWO that whose target solution is comprehensively assessed by three solutions, can greatly decrease the probability of trapping into the local extreme.

It can be seen from the above formula that Eqs (5–7) respectively define the step size of the omega tend to alpha, beta, and delta. The final positions of the omega wolves are defined by Eqs (8–11).

The exploration ability and exploitation ability have important influence on the searching performance of an algorithm. For the GWO, exploration is refers to a wolf leave the original search path in a certain extent and search towards a new direction, which reflects the wolf’s ability of exploiting unknown regions. Exploitation is refers to that a wolf continue to search more carefully on the original trajectory in a certain extent, which can insure the wolf make a detailed search to the region that have been explored. So how to make the algorithm achieve a proper compromise between exploration and exploitation is a question that worth research.

It can be observed that the two random and adaptive vectors \(\overrightarrow{A}\) and \(\overrightarrow{C}\) can be used to obtain a proper compromise between exploration ability and exploitation ability of the GWO. As is shown in Fig. 3, when \(\overrightarrow{A}\) is greater than 1 and is less than −1, that is \(|\overrightarrow{A}| > 1\), the wolf shows exploration ability. When the value of vector \(\overrightarrow{C}\) is greater than 1, it can also enhance the exploration ability of the wolf. In contrast, when \(|\overrightarrow{A}| < 1\) and C < 1 the wolf’s exploitation capacity is enhanced. For increasing the exploitation ability of the wolf gradually, the vector \(\overrightarrow{A}\) decreases linearly with the iterations number increasing. However, in the course of optimization the value of \(\overrightarrow{C}\) is generated randomly, which can make exploration and exploitation of the wolf reach a equilibrium at any stage. Especially in the final stages of the iteration, it is able to avoid the algorithm from trapping into a local extreme. The pseudo codes of the GWO are described as follows.

Initialize the population Xi(i = 1, 2, … n) of GWO

Initialize GWO parameters (a, A, C)

Calculate the individual fitness value in the population

Record the best individual, second best individual and third best individual as \(\overrightarrow{{X}_{\alpha }}\), \(\overrightarrow{{X}_{\beta }}\) and \(\overrightarrow{{X}_{\delta }}\)

While (t< maximum iteration number)

For each individual

Update the position of current individual by Eqs (5–11)

End for

Update a, A, C

Calculate the fitness value of all individual in the population

Update \(\overrightarrow{{X}_{\alpha }}\), \(\overrightarrow{{X}_{\beta }}\), \(\overrightarrow{{X}_{\delta }}\)

t = t + 1

End While

Return \(\overrightarrow{{X}_{\alpha }}\)

The GWO has strong exploration ability, which can avoid the algorithm falling into the local optimum. For the GWO, the proper compromise between exploration ability and exploitation ability is very simple to be achieved, so it can effectively solve many complicated problems.

Improved Grey Wolf Optimizer (IGWO)

For increasing the search performance of the GWO, an improved grey wolf optimizer (IGWO) is proposed. In the IGWO, the biological evolution and the SOF principle of biological updating of nature are added to the standard GWO. Due to the differential evolution algorithm having the advantages of simple principle, less algorithm parameters and easy implementation, differential evolution (DE) strategy is chose as the evolutionary pattern of wolves in this paper. The wolf pack is updated according to the SOF principle so as to make the algorithm not fall into the local optimum. That is, after each iteration of the algorithm sort the fitness value that corresponds to each wolf by ascending order, and then eliminate R wolves with larger fitness value, meanwhile randomly generate wolves that equal to the number of eliminated wolves.

Grey Wolf Optimizer with evolution operation

In nature, organisms evolve from the low level to advanced level continually under the action of heredity, selection and mutation16,17. Similarly, there are also a series of changes like heredity, selection and mutation in the searching process of the wolves. The evolution law of SOF make wolves gradually strong. In the same way, for increasing the searching performance of the algorithm, the evolution operation is added to basic GWO. Based on the biological evolution of nature, many evolution methods have been developed, such as differential evolution(DE), quantum evolution and cooperative evolution, etc.18. For all the evolution methods, the differential evolution strategy has simple principle, less parameters and easy implementation and it has been extensively researched and applied19,20. Therefore, the DE strategy is chose as the evolution method of GWO. The basic principle of DE operator is to adopt the difference among individuals to recombine the population and obtain intermediate individuals, and then get the next generation population through a competition between parent individual and offspring individual21,22. The basic operations of DE include three operations: mutation, crossover and selection. After the operation of evolution is added to GWO, the wolf’s position updating is shown as Fig. 2.

Mutation operation

The most prominent feature of differential evolution is mutation operation. When an individual is selected, two differences with weight are added to the individual to accomplish its variation23. The basic variation ingredient of DE is the difference vector of the parents, and each vector contains two different individuals \(({X}_{r1}^{t},{X}_{r2}^{t})\) of parent (the t-th generation). The difference vector is defined as follows.

where, r1 and r2 express index number of two different individuals of the population. Thus the mutation operation can be described as:

where, r1, r2 and r3 are different integers in the scope (1, 2, … n) from the current target vector index i. F is the scaling factor to control the scaling of differential vector.

In order to produce an ideal variation factor, to ensure that wolves can evolve toward the direction that good for development of the wolves. So in this paper, chooses outstanding individuals of wolves as parents. After a large number of simulation experiments, beta and delta are chose as two parents, and then combined with the alpha wolf to form a variation factor. Therefore, the variation factor is designed as Eq. (14).

In order to make the algorithm has a high exploration ability in the early stage to avoid falling into local optimum, and has a high exploitation ability in the latter stage to increase the convergence speed, a dynamic scaling factor is employed. So scaling factor F change from large to small according to the iteration number in Eq. (15).

where, fmin and fmax are the minimum and maximum of the scaling factor, Max_iter is maximum iteration number. iter is the iter-th iteration number.

Crossover operation

For the target vector individual \({X}_{i}^{t}\) of the wolves, make it have a crossover operation with the variation vector \({V}_{i}^{t+1}\), and produce a test individual \({U}_{i}^{t+1}\). In order to guarantee the individual \({X}_{i}^{t}\) taking place a evolution, a random choice method is adopt to insure at least one bit of \({U}_{i}^{t+1}\) is contributed by \({U}_{i}^{t+1}\). For other bits of \({U}_{i}^{t+1}\), the crossover probability factor CR is used to decide which bit of \({U}_{i}^{t+1}\) is contributed by \({V}_{i}^{t+1}\), and which bit is contributed by \({X}_{i}^{t}\). Crossover operation is express as follows.

where, rand(j) ∈ [0, 1] obeys the random-uniform distribution, j is the j-th variable (gene), CR is crossover probability, and rand(i) ∈ [1, 2, … D].

It can be known from the Eq. (16), if the CR is larger, \({V}_{i}^{t+1}\) is able to make more contribution to \({U}_{i}^{t+1}\). When CR = 1, \({U}_{i}^{t+1}={V}_{i}^{t+1}\). If the CR is smaller, \({X}_{i}^{t}\) is able to make more contribution to \({U}_{i}^{t+1}\).

Selection operation

The “greedy choice” strategy is applied to the selection operation. After mutation operation and crossover operation generate the experiment individual \({U}_{i}^{t+1}\) and the compete it with \({X}_{i}^{t}\). It can be expressed as Eq. (17).

where, f is the fitness function, \({X}_{i}^{t+1}\) is the individual of t-th generation. from \({U}_{i}^{t+1}\) and \({X}_{i}^{t}\) choose the individual with best fitness as an individual of (t + 1)- generation, and replace the individual of the t-th generation.

In the early stages of the algorithm, difference of the population is large, so mutation operation makes the algorithm has strong exploration ability. In the later stages of the algorithm iteration, namely when the algorithm tends to converge, difference between individuals of the population is small, which makes the algorithm has a strong exploitation ability.

SOF wolves updating mechanism

SOF is a role of nature that formed in the process of the biological evolution23,24,25. In nature, some vulnerable wolves will be eliminated because of uneven distribution of the prey, hunger, disease and other reasons. Meanwhile, new wolves will join to this wolf organization to enhance fighting capacity of the organization, which can insure the wolf organization survival well in the complicated world. The wolf pack is updated according to the SOF principle so as to make the algorithm not fall into the local optimum26,27,28,29.

In the new algorithm, assume the number of wolves in the pack is fixed, and the strength of wolves is measured by fitness value. The higher the fitness, the better the solution. Therefore, after each iteration of the algorithm sort the fitness value that corresponds to each wolf in ascending order, and then eliminate R wolves with larger fitness value, meanwhile randomly generate new wolves that equal to the number of eliminated wolves. When R is large, the number of wolves that new generating is big, which will help to increase the diversity of wolves. But if the value of R is too large, the algorithm tends to be searching randomly, which will results in the convergence speed becoming slow. If the value of R is too small, it is not conducive to maintain the diversity of population, which results in the ability of exploring new solution space weakened. Therefore, in this paper, R is a random integer between n/(2 × ε) and n/ε.

where, n is the total number of wolves, ε is scale factor of wolves updating.

The flow chart of the improved grey wolf optimizer (IGWO) is illustrated in Fig. 4. The main procedure steps are described as follows.

-

(1)

Initialize the grey wolf population. Randomly generated position of wolves Xi(i = 1, 2, … n) Initialize parameters a, A and C.

-

(2)

Calculate fitness of each wolf, choose the first three best wolves and save them as alpha beta and Delta in turn.

-

(3)

Update position. According to Eqs (5–11) update position of the other wolves, that is, update position of omega wolf.

-

(4)

Evolution operation. Use alpha, beta, and delta to form variation factor according to the Eq. (14). After crossover and selection operation, select the individual with good fitness as the wolf of next generation. Choose the first three best wolves and save them as alpha beta and Delta in turn.

-

(5)

Update wolves. Sort fitness values that correspond by wolves from small to large, eliminate R wolves with larger fitness value. Meanwhile, randomly generate new R wolves.

-

(6)

Update parameter a, A and C.

-

(7)

Judge whether the termination condition is satisfied, if satisfied, output the position and fitness value of alpha as the optimal solution. If not satisfied, return to step (2).

Simulation Experiments and Results Analysis

Before carrying out the simulation experiments to compare the performances of adopted optimization algorithm, twelve benchmark functions are selected2, which are listed in Table 1. The experiment consists of two parts. For validating the performance of two improvements to the GWO, one part is that separately do experiments for the GWO with differential evolution (DGWO), GWO with SOF mechanism (SGWO) and IGWO. And meanwhile, compare results with DE algorithm. The second part is that do experiments to compare IGWO with other swarm intelligence algorithm, including PSO algorithm, ABC algorithm and CS algorithm. The parameter settings of GWO, PSO algorithm, ABC algorithm, CS algorithm and, DE algorithm are defined according with literature and can be found respectively in8,9,12,13,23, which are listed in Table 2.

Experiment and analysis for two improvement of IGWO

For validating the performance of two improvements to the GWO, first of all, simulation experiments are separately carried out for the grey wolf optimizer with differential evolution (DGWO), grey wolf optimizer with SOF mechanism (SGWO) and IGWO. And meanwhile, The simulation results compared with GWO and DE algorithm are shown in Figs 5–11. It can be seen from the simulation convergence curves for the adopted testing functions, compared with GWO, the convergence velocity and optimization precision of DGWO, SGWO and IGWO all have been improved, but IGWO is the best.

Then for further validating the searching accuracy of DGWO, SGWO and IGWO, every optimization algorithm is run independently thirty times and the best, worst and average values are recorded for the adopted twelve testing functions under the 30-dimension and 100-dimension. The maximum iterations number is Max Max_iter = 500. The statistical results of D = 30 and D = 100 are shown in Tables 3 and 4 respectively.

Simulation contrast experiments and results analysis

Three swarm intelligence algorithms (PSO algorithm, ABC algorithm and CS algorithm) are selected to carry out the simulation contrast experiments with the proposed IGWO so as to verify its superiority on the convergence velocity and searching precision. When dimension D = 30, the simulation convergence results on the adopted testing functions are shown in Figs 12–23. when D = 100, the simulation convergence results on the adopted testing functions are shown in Figs 24–27.

It can be seen from the simulation convergence curves, IGWO has better convergence velocity and searching precision than other three algorithms (ABC, CS and PSO). Especially for function F8, F9 and F12, compared with GWO, the IGWO make their convergence speed are improved obviously. It can be seen from their surface figure that the three functions are multimodal function. So there are a lot of local minimum values within the scope of search. For IGWO, the addition of differential evolution and the SOF mechanism can improving the weakness of easily falling into the local extreme and obtain smaller function value. Thus, the convergence speeds of the three functions are improved significantly. At the same time, it suggests that the IGWO has the superiority to jump out of the local optimal.

For further validating the searching accuracy, every optimization algorithm is run independently thirty times and the best, worst and average values are recorded for the adopted twelve testing functions under the 30-dimension and 100-dimension. The maximum iterations number is Max Max_iter = 500. The statistical results of D = 30 and D = 100 are shown in Tables 5 and 6 respectively. Seen from the numerical results listed in Tables 5 and 6, the IGWO proposed in this paper makes optimization accuracy of 12 typical functions have a certain improvement. And the optimization accuracy of IGWO is better than that of PSO, ABC, CS algorithm under the same dimension. For the F1 function, when its dimension D = 30, its average value is raised from e-27 to e-63, relative to GWO been increased by 36 orders of magnitude; and when D = 100, its average value is raised from e-12 to e-34, relative to GWO increased by 22 orders of magnitude. For the F2 function, when D = 30, its average value has been improved 35 orders of magnitude relative to GWO; and when D = 100, relative to GWO its average value is improved 22 orders of magnitude. For function F9 and F11, when D = 30 and D = 100, IGWO respectively search to their ideal minimum value 0. The optimization performance for other testing functions all has been improved.

Thus, the simulation experiments results, including convergence curves and statistics data, show that the proposed improved grey wolves optimizer has a better convergence rate and optimization performance. According to the improvement above, three reasons can be summarized: Firstly, the adding of evolution operation is able to increase the diversity of wolves, that is to say to increase the solution diversity so as to make the algorithm jump out the local extreme. Secondly, the selection method of parents of DE and dynamic scaling factor F can make the algorithm has a good exploration ability in the early search stage and has a good exploitation ability in the later search stage, therefore, both search precision and convergence speed are improved. In addition, the adding of SOF wolf updating mechanism can also decrease the probability of the algorithm falling into the local extreme.

Conclusions

For achieving the proper compromise between exploration and exploitation, further accelerate the convergence and increase the optimization accuracy of GWO, an improved grey wolf optimizer (IGWO) is proposed in this paper. The biological evolution and SOF principle in nature are added to the standard GWO. The simulation experiments are carried out by adopting twelve typical function optimization problems. The simulation results show the proposed IGWO has better convergence velocity and optimization performance than DE algorithm, PSO algorithm, ABC algorithm and CS algorithm. On the one hand, the adoption of evolution operation can increase the wolves diversity and make the algorithm has a good exploration ability in the early searching stage and has a good exploitation ability in the later search stage. On the other hand, the adoption of SOF wolf updating mechanism can decrease the probability of falling into the local optimum. In the future work, we will carry out similar hybridisation of other swarm intelligent optimization algorithms, such as dragonfly algorithm (DA), ant lion optimizer (ALO), multi-verse optimizer (MVO), coral reefs optimization (CRO) algorithm, etc.

Data Availability

There are no data available for this paper.

References

Derrac, J., García, S. & Molina, D. A practical tutorial on the use of non-parametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. J. Swarm and Evolutionary Computation. 1, 3–18 (2011).

Cui, Z. H. & Gao, X. Z. Theory and applications of swarm intelligence. J. Neural Computing and Applications. 21, 205–206 (2012).

Zhang, Z. et al. On swarm intelligence inspired self-organized networking: its bionic mechanisms, designing principles and optimization approaches. J. Communications Surveys & Tutorials IEEE. 16, 513–537 (2014).

Leboucher, C. et al. A swarm intelligence method combined to evolutionary game theory applied to the resources allocation problem. J. International Journal of Swarm Intelligence Research (IJSIR). 3, 20–38 (2012).

Parpinelli, R. S. & Lopes, H. S. New inspirations in swarm intelligence: a survey. J. International Journal of Bio-Inspired Computation. 3, 1–16 (2011).

Kennedy, J. & Eberhart R. Particle swarm optimization, in Neural Networks, 1995. Proceedings., IEEE International Conference on. pp. 1942–1948 (1995).

Ebrahimi, J., Hosseinian, S. H. & Gharehpetian, G. B. Unit commitment problem solution using shuffled frog leaping algorithm. J. Power Systems, IEEE Transactions on. 26, 573–581 (2011).

Fang, C. & Wang, L. An effective shuffled frog-leaping algorithm for resource-constrained project scheduling problem. J. Computers & Operations Research. 39, 890–901 (2012).

Karaboga, D. & Basturk, B. On the performance of artificial bee colony (ABC) algorithm. J. Applied soft computing. 8, 687–697 (2008).

Dorigo, M., Birattari, M. & Stutzle, T. Ant colony optimization. IEEE Computational Intelligence Magazine 1, 28–39 (2006).

Simon, D. Biogeography-based optimization. Evolutionary Computation, IEEE Transactions on 12, 702–713 (2008).

Yang, X. S. & Deb, S. Cuckoo search via Lévy flights. A. Proceedings of World Congress on Nature & Biologically Inspired Computing. C. IEEE Publications. USA, 210–214 (2009).

Mirjalili, S., Wang, G.-G., dos, L. & Coelho, S. Binary optimization using hybrid particle swarm optimization and gravitational search algorithm. J. Neural Computing and Applications. 25, 1423–1435 (2014).

Črepinšek, M., Liu, S. H. & Marjan, M. Exploration and exploitation in evolutionary algorithms: a survey. ACM Comput. Surv. 45, 1–33 (2013).

Mirjalili, S., Mirjalili, S. M. & Lewis, A. Grey wolf optimizer. J. Advances in Engineering Software. 69, 46–61 (2014).

Brambilla, M. et al. Swarm robotics: a review from the swarm engineering perspective. J. Swarm Intelligence. 7, 1–41 (2013).

Liao, J. et al. A neighbor decay cellular automata approach for simulating urban expansion based on particle swarm intelligence. J. International Journal of Geographical Information Science. 28, 720–738 (2014).

Das, S. & Suganthan, P. N. Differential evolution: a survey of the state-of-the-art. J. Evolutionary Computation, IEEE Transactions on. 15, 4–31 (2011).

Palmer, Katie et al. Differential Evolution of Cognitive Impairment in Nondemented Older Persons: Results From the Kungsholmen Project. J. Psychiatry. 159, 436–442 (2014).

Agrawal, R. et al. Parallelization of industrial process control program based on the technique of differential evolution using multi-threading[C]//Industrial Engineering and Engineering Management (IEEM), 2014 IEEE International Conference on. IEEE, 546–550 (2014).

Islam, S. M. et al. An adaptive differential evolution algorithm with novel mutation and crossover strategies for global numerical optimization. J. Systems, Man, and Cybernetics, Part B: Cybernetics, IEEE Transactions on. 42, 482–500 (2012).

Zheng, Y. J. et al. A hybrid fireworks optimization method with differential evolution operators. J. Neurocomputing. 148, 75–82 (2015).

Zhang, P., Wei, Y. X. & Xue, H. Q. Heterogeneous Multiple Colonies Ant Colony Algorithm Based on Survival of Fittest Rules. J. Computer Engineering. 38, 182–185 (2012).

Eyler, C. E. & Rich, J. N. Survival of the fittest: cancer stem cells in therapeutic resistance and angiogenesis. J. Journal of Clinical Oncology. 26, 2839–2845 (2008).

Whitley, D. An executable model of a simple genetic algorithm. J. Foundations of genetic algorithms. 2, 45–62 (2014).

Vidal, T. et al. A hybrid genetic algorithm with adaptive diversity management for a large class of vehicle routing problems with time-windows. J. Computers & Operations Research. 40, 475–489 (2013).

Kristian, B. & Frédéric, R. N. Survival of the Fittest in Cities: Urbanisation and Inequality. J. The Economic Journal. 124, 1371–1400 (2014).

Bak, P. & Sneppen, K. Punctuated equilibrium and criticality in a simple model of evolution. Physical Review Letters. 24, 4083–4086 (1993).

Boettcher, S. & Percus, A. Nature’s way of optimizing. Artificial Intelligence. 1–2, 275–286 (2000).

Acknowledgements

This work was supported by the Project by National Natural Science Foundation of China (Grant No. 21576127), the Basic Scientific Research Project of Institution of Higher Learning of Liaoning Province (Grant No. 2017FWDF10), and the Project by Liaoning Provincial Natural Science Foundation of China (Grant No. 20180550700).

Author information

Authors and Affiliations

Contributions

Jie-Sheng Wang participated in the concept, design, interpretation and commented on the manuscript. A substantial amount of Shu-Xia Li’s contribution to the data collection, analysis and algorithm simulation, draft writing, and critical revision of this paper was undertaken.

Corresponding author

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, JS., Li, SX. An Improved Grey Wolf Optimizer Based on Differential Evolution and Elimination Mechanism. Sci Rep 9, 7181 (2019). https://doi.org/10.1038/s41598-019-43546-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-019-43546-3

This article is cited by

-

Hybrid evolutionary grey wolf optimizer for constrained engineering problems and multi-unit production planning

Evolutionary Intelligence (2024)

-

Ameliorated grey wolf optimizer with the best and worst orthogonal opposition-based learning

Soft Computing (2024)

-

Investigation of delamination and surface roughness in end milling of glass fibre reinforced polymer composites using Fuzzy Model and Grey wolf Optimizer

International Journal on Interactive Design and Manufacturing (IJIDeM) (2024)

-

DE-GWO: A Multi-objective Workflow Scheduling Algorithm for Heterogeneous Fog-Cloud Environment

Arabian Journal for Science and Engineering (2024)

-

Review of the grey wolf optimization algorithm: variants and applications

Neural Computing and Applications (2024)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.