Abstract

Active-vision-based measurement plays an important role in profile inspection. In this study, binocular vision, a passive vision technique, is combined with active vision to exploit the benefits of both. The laser plane is first calibrated with two texture-free 2D targets. Then, an L target with feature points is designed to construct an orthogonality objective for two vectors. To model the binocular-active-vision system accurately, the feature points on the L target are reconstructed by the two cameras and parameterized by the laser plane. Unlike optimization methods based on a distance objective, the proposed method further refines the laser plane with a distance-angle objective. The optimization function therefore considers both the norms of the vectors and the angle between them. However, distances and angles have different scales. The optimization function is therefore enhanced by a normalization process that balances the two scales. Comparison experiments show that, relative to four other methods, the binocular active vision with the normalization object of vector orthogonality reduces the distance errors by 23%, 20%, 14% and 4%, and the angle errors by 25%, 22%, 13% and 4%. These results indicate accurate reconstruction of the object profile.

Introduction

Profile measurement is one of the most important techniques in optical inspection and vision1,2,3,4. Recently, the acquisition of 3D profile information has become popular in research fields such as the automotive industry5, robot vision6, medical diagnosis7 and reverse engineering8. However, a camera only generates 2D images without depth information, which limits our ability to acquire and perceive the profiles of objects in the real world.

Many advances in profile measurement have been reported in recent years9. Depending on how the third-dimensional information is acquired, these developments fall into two main categories. The first category obtains the third-dimensional information from a second camera, i.e., binocular or stereo vision. Faugeras O.10 provides the classical work on computational stereo for image-based reconstruction. In that work, the properties of stereo vision, including epipolar geometry, are derived from the camera projective model, and the fundamental matrix of stereo vision is estimated. Grimson W. E. L.11,12 presents an unsupervised learning method that models activities and clusters trajectories in a multi-camera system. The framework first groups the trajectories that correspond to the same activities. It then extracts the paths from the views of the cameras and finally detects abnormal activities with a probabilistic model. The activity model is suitable for recognizing scene structures, and the method requires neither calibrated cameras nor supervision for activity learning. However, the framework focuses on activity modeling and scene-structure recognition. Only positions and directions are extracted as trajectory features, and the accurate profile and size of the object are not reconstructed. Ren Z.13 proposes a 3D measurement method for small mechanical parts based on binocular vision. Complicated noise is considered in the test. The nonlinear features are precisely extracted by multiscale image decomposition and virtual chains, and the 3D curves are reconstructed from the projections in the two cameras. Ambrosch K.14 provides hardware for real-time stereo vision and proposes a novel stereo-matching algorithm based on the sum of absolute differences and the census transform15.
Wang Z.16 introduces a method for calibrating the parameters of a binocular vision system with a small target in a large field of view. Re-projection errors and Zhang’s method17 are used to optimize the parameters. Because it is simple to implement, binocular vision is widely used in 3D pose estimation18,19, visual navigation20, etc. However, feature matching remains a complex problem for objects without texture.

The second kind of profile measurement method is active vision, which generates the third-dimensional information with structured light. The light patterns used in active vision include points21, lines22 and gratings23,24. Xu G.25 outlines a model that reconstructs the 3D laser projection point on the measured object. In this model, a point laser projector is combined with a planar target, and projection invariants are derived. Rodríguez J. A. M.22 presents a 3D vision method using a mobile camera and a laser line, and constructs an approximation network to calibrate the scanning system. Gratings are the prevalent structured light in active vision. Chen X.26 constructs a camera-projector system in which four sinusoidal gratings are projected onto the measured object. Fourth-order radial and tangential lens distortion are considered in the system calibration model. Active vision facilitates profile measurement because the structured light serves as an ideal mark on the object. As a result, active vision overcomes the matching problem for objects without texture. Chen H.27 presents an improved calibration method that increases the calibration accuracy of the camera and the projector simultaneously. Previous works also cover the combination of active vision and binocular vision28. Because a single camera suffers from occlusion, Zhang S.29 develops a shape measurement method based on one grating projector and two cameras. The method describes the system model and 3D data registration with the iterative closest-point algorithm. Vilaça J. L.30 reports a laser scanning system with two cameras to profile 3D object surfaces, in which the two cameras detect the laser line to calibrate the structured light. Grating patterns are easily affected by ambient illumination, so laser-line-based measurement is better suited to accurate non-contact measurement.

Jin Z. S.31 proposes a reconstruction method for the gas metal arc welding (GMAW) pool. The vision measurement system consists of two cameras and a laser generator. Point projection is modeled with the pinhole model. The two cameras and the laser plane are calibrated separately, and the method reconstructs the 3D surface of the weld pool. However, epipolar geometry, which relates a point in one image to a line in the other image, is not used in the reconstruction. Furthermore, only closed-form solutions are modeled, and no optimization based on real linear and angular dimensions is reported.

In this paper, a binocular active vision approach is proposed for profile measurement. Traditional research15 reports two main ideas. The first is based on 2D re-projection errors, which are computed in the image and parameterized by the internal and external matrices of the active vision system. This idea does not take 3D reconstruction accuracy as its optimization objective, so it is unsuitable for 3D profile reconstruction. The second idea employs 3D reconstruction errors. The 3D distances are reconstructed from the parameters of the active vision system, and the optimization improves the measurement accuracy of the linear dimension. However, the measurement accuracy of the angular dimension is not considered in the optimization. To improve the measurement accuracy of real linear and angular dimensions simultaneously, we focus on the orthogonality of the vectors generated from an L target. Geometrically, two vectors are derived from the feature points on the L target. The norms of the vectors and the angle between them are parameterized by the laser plane coordinates and by the internal and external matrices of the cameras. Based on the known angle and norms of the vectors on the L target, the angle reconstruction errors and the distance reconstruction errors are jointly modeled in the objective function. In this way, both distance and angle are considered in the optimization. Moreover, the different scales of distance and angle are normalized to achieve accurate measurement. The rest of the paper is organized as follows. The Methods section describes the solution model of the binocular active vision with the normalization object of vector orthogonality. The Results section presents comprehensive comparison experiments that verify the method. The Summary concludes the paper.

Methods

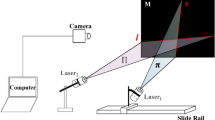

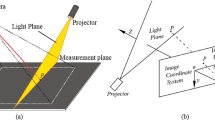

The measurement model is illustrated in Fig. 1. Two targets without texture are designed and placed in the field of view of the binocular vision system. Oα-XαYαZα and Oβ-XβYβZβ are the coordinate systems of the left and right cameras, and OW-XWYWZW is the world coordinate system. A laser plane is projected onto the two targets and intersects them in two lines. The two cameras capture these laser intersection lines, so projections of the intersection lines appear on the image planes of both cameras.

The cameras are calibrated with the well-known direct linear transformation (DLT) method32. The projection planes determined by the optical centers and the projected laser lines are33

where φα,I, φα,II, φβ,I, φβ,II are the projection planes. \({{\bf{P}}}^{{\rm{\alpha }}}={[{p}_{km}^{{\rm{\alpha }}}]}_{3\times 4}\), \({{\bf{P}}}^{{\rm{\beta }}}={[{p}_{km}^{{\rm{\beta }}}]}_{3\times 4}\) are the projection matrices of two cameras. aα, bα, aβ, bβ are the projections of the laser lines. The superscripts α, β represent the left and right cameras. The superscripts I, II indicate the left and right targets.
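For reference, the back-projection of an image line through a camera has a standard textbook form33. Assuming that aα, bα, aβ, bβ are written as homogeneous 3-vectors, the four projection planes can be sketched as follows. This is the standard relation, assumed rather than transcribed from the original equations:

\[
\begin{aligned}
{\boldsymbol{\phi}}^{\alpha,\mathrm{I}} &= ({\bf{P}}^{\alpha})^{\mathrm{T}}{\bf{a}}^{\alpha}, &\qquad {\boldsymbol{\phi}}^{\alpha,\mathrm{II}} &= ({\bf{P}}^{\alpha})^{\mathrm{T}}{\bf{b}}^{\alpha},\\
{\boldsymbol{\phi}}^{\beta,\mathrm{I}} &= ({\bf{P}}^{\beta})^{\mathrm{T}}{\bf{a}}^{\beta}, &\qquad {\boldsymbol{\phi}}^{\beta,\mathrm{II}} &= ({\bf{P}}^{\beta})^{\mathrm{T}}{\bf{b}}^{\beta}.
\end{aligned}
\]

Each plane is a homogeneous 4-vector. A 3D point \(\tilde{\bf{X}}\) lies on, for example, \({\boldsymbol{\phi}}^{\alpha,\mathrm{I}}\) exactly when \(({\bf{a}}^{\alpha})^{\mathrm{T}}{\bf{P}}^{\alpha}\tilde{\bf{X}} = 0\), that is, when its image lies on the line \({\bf{a}}^{\alpha}\).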

The Plücker matrices of the intersection lines on the targets are33

where A*, B* are the plane-based Plücker matrices of the intersection lines on the left and right targets, respectively.
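In the notation of ref. 33, the plane-based (dual) Plücker matrix of the line in which two planes meet has a standard form. Assuming the four projection planes above, the matrices can be sketched as follows. This is the textbook construction, not a transcription of the original equations:

\[
{\bf{A}}^{*} = {\boldsymbol{\phi}}^{\alpha,\mathrm{I}}({\boldsymbol{\phi}}^{\beta,\mathrm{I}})^{\mathrm{T}} - {\boldsymbol{\phi}}^{\beta,\mathrm{I}}({\boldsymbol{\phi}}^{\alpha,\mathrm{I}})^{\mathrm{T}},\qquad
{\bf{B}}^{*} = {\boldsymbol{\phi}}^{\alpha,\mathrm{II}}({\boldsymbol{\phi}}^{\beta,\mathrm{II}})^{\mathrm{T}} - {\boldsymbol{\phi}}^{\beta,\mathrm{II}}({\boldsymbol{\phi}}^{\alpha,\mathrm{II}})^{\mathrm{T}}.
\]

Both matrices are 4 × 4, antisymmetric and of rank 2. A point \(\tilde{\bf{X}}\) lies on the corresponding line exactly when \({\bf{A}}^{*}\tilde{\bf{X}} = {\bf{0}}\), because it must lie on both planes.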

The plane-based Plücker matrices A*, B* are further transformed into the dual matrices A, B by the Graßmann–Plücker relation32. A laser plane can be determined by the two laser lines A, B34. Thus, the laser plane satisfies

where φ is the laser plane of the binocular-active-vision system. Fig. 2 summarizes the procedure for obtaining the initial laser plane.
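The closed-form initialization summarized in Fig. 2 can be sketched with NumPy. The sketch assumes that the image lines are homogeneous 3-vectors. It does not apply the Graßmann–Plücker rewrite rule to A* and B*. Instead, it builds the point-based matrices A and B directly from two points spanning each 3D line, which gives the same matrices up to scale. It then uses the fact that a plane φ contains a line with point-based Plücker matrix L exactly when Lφ = 0. Stacking A and B therefore gives an 8 × 4 system whose SVD null vector is the laser plane. All function names are hypothetical and chosen for illustration.

```python
import numpy as np

def null_space(M, k):
    """Rows spanning the k-dimensional (least-squares) null space of M, via SVD."""
    _, _, vt = np.linalg.svd(M)
    return vt[-k:]

def backproject_line(P, line):
    """Plane through the camera centre and an image line: phi = P^T a."""
    return P.T @ line

def point_plucker(plane_1, plane_2):
    """Point-based Plücker matrix L = X1 X2^T - X2 X1^T of the line where two planes meet."""
    X1, X2 = null_space(np.vstack([plane_1, plane_2]), 2)  # two points on the 3D line
    return np.outer(X1, X2) - np.outer(X2, X1)

def initial_laser_plane(P_a, P_b, a_a, a_b, b_a, b_b):
    """Closed-form laser plane.

    a_a, a_b: laser line on target I in the left (alpha) and right (beta) images.
    b_a, b_b: laser line on target II in the left and right images.
    """
    A = point_plucker(backproject_line(P_a, a_a), backproject_line(P_b, a_b))
    B = point_plucker(backproject_line(P_a, b_a), backproject_line(P_b, b_b))
    # A plane phi contains a line with point-based Plücker matrix L iff L @ phi = 0.
    phi = null_space(np.vstack([A, B]), 1)[0]
    return phi / np.linalg.norm(phi[:3])  # unit normal; the overall sign is arbitrary
```

With noisy image lines, the SVD returns the least-squares plane of the stacked system. Any later refinement would start from this value.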

To refine the laser plane and reconstruct the 3D intersection points between the measured object and the laser plane, we design an L target with three feature points Qi,1, Qi,2, Qi,3 at the i-th position (i = 1, 2, …, n). In Fig. 3, the laser plane intersects the L target at these three points. The projections of the three points captured by the two cameras obey the pinhole model17. Therefore, we have

where \({{\bf{Q}}}_{i,j}^{{\rm{\alpha }}}\), \({{\bf{Q}}}_{i,j}^{{\rm{\beta }}}\) are the 3D points reconstructed from the left and right cameras that correspond to Qi,1, Qi,2, Qi,3; \({{\bf{q}}}_{i,j}^{{\rm{\alpha }}}={({x}_{i,j}^{{\rm{\alpha }}},{y}_{i,j}^{{\rm{\alpha }}})}^{{\rm{T}}}\), \({{\bf{q}}}_{i,j}^{{\rm{\beta }}}={({x}_{i,j}^{{\rm{\beta }}},{y}_{i,j}^{{\rm{\beta }}})}^{{\rm{T}}}\) are the projection points in the left and right cameras; si,j is a scale factor; and j = 1, 2, 3.

The feature points on the laser plane satisfy33
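As a hedged sketch, assume homogeneous coordinates \(\tilde{\bf{q}} = ({\bf{q}}^{\mathrm{T}}, 1)^{\mathrm{T}}\) and \(\tilde{\bf{Q}} = ({\bf{Q}}^{\mathrm{T}}, 1)^{\mathrm{T}}\), with a separate scale factor for each camera (the text above writes a single si,j). Under these assumptions, the constraints stacked in Eqs (8)–(11) would take the standard pinhole-plus-plane form:

\[
s_{i,j}^{\alpha}\tilde{\bf{q}}_{i,j}^{\alpha} = {\bf{P}}^{\alpha}\tilde{\bf{Q}}_{i,j}^{\alpha},\qquad
s_{i,j}^{\beta}\tilde{\bf{q}}_{i,j}^{\beta} = {\bf{P}}^{\beta}\tilde{\bf{Q}}_{i,j}^{\beta},\qquad
{\boldsymbol{\phi}}^{\mathrm{T}}\tilde{\bf{Q}}_{i,j}^{\alpha} = 0,\qquad
{\boldsymbol{\phi}}^{\mathrm{T}}\tilde{\bf{Q}}_{i,j}^{\beta} = 0.
\]

Eliminating the scale factor from the left-camera projection gives two linear rows. Together with the plane constraint, these rows form a 3 × 4 homogeneous system. Here \(({\bf{p}}_{k}^{\alpha})^{\mathrm{T}}\), a notation introduced only for this sketch, denotes the k-th row of Pα:

\[
\begin{bmatrix}
x_{i,j}^{\alpha}({\bf{p}}_{3}^{\alpha})^{\mathrm{T}} - ({\bf{p}}_{1}^{\alpha})^{\mathrm{T}}\\
y_{i,j}^{\alpha}({\bf{p}}_{3}^{\alpha})^{\mathrm{T}} - ({\bf{p}}_{2}^{\alpha})^{\mathrm{T}}\\
{\boldsymbol{\phi}}^{\mathrm{T}}
\end{bmatrix}\tilde{\bf{Q}}_{i,j}^{\alpha} = {\bf{0}}.
\]

The null vector of this system is \(\tilde{\bf{Q}}_{i,j}^{\alpha}\) as a function of φ, and the right camera is handled in the same way.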

By stacking Eqs (8)–(11), the 3D points \({{\bf{Q}}}_{i,j}^{{\rm{\alpha }}}\), \({{\bf{Q}}}_{i,j}^{{\rm{\beta }}}\) are solved with the singular value decomposition (SVD) method35 and parameterized by

where

Because the 3D points \({{\bf{Q}}}_{i,j}^{{\rm{\alpha }}}({\boldsymbol{\phi }})\), \({{\bf{Q}}}_{i,j}^{{\rm{\beta }}}({\boldsymbol{\phi }})\) both correspond to the feature point Qi,j, Qi,j is obtained by balancing \({{\bf{Q}}}_{i,j}^{{\rm{\alpha }}}({\boldsymbol{\phi }})\) and \({{\bf{Q}}}_{i,j}^{{\rm{\beta }}}({\boldsymbol{\phi }})\), and it is parameterized by

Because the three 3D points on the L target define two orthogonal vectors whose norms are known, the orthogonality and the norms of the vectors can serve as two objectives for optimizing the laser plane. The objective function is given by

where \(\tilde{{\boldsymbol{\phi }}}\) is the laser plane optimized by Eq. (15), d1 and d2 are the norms of the two vectors on the L target, and θ is the angle between the two vectors.
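Eq. (15) itself is not reproduced here, so the following is an illustration only. The sketch assumes that Qi,2 is the corner of the L target, so that the two vectors are v1 = Qi,1 − Qi,2 and v2 = Qi,3 − Qi,2. It also assumes that the objective sums the squared distance errors (mm) and angle errors (degrees) over all n target positions. The helper names are hypothetical. In an actual refinement, the points would be recomputed from each candidate φ with the SVD step described above before the cost is evaluated.

```python
import math

def l_target_geometry(Q1, Q2, Q3):
    """Norms of the two L-target vectors and the angle between them (degrees).

    Assumes Q2 is the corner point, so v1 = Q1 - Q2 and v2 = Q3 - Q2.
    """
    v1 = [a - b for a, b in zip(Q1, Q2)]
    v2 = [a - b for a, b in zip(Q3, Q2)]
    n1, n2 = math.hypot(*v1), math.hypot(*v2)
    cos_t = sum(a * b for a, b in zip(v1, v2)) / (n1 * n2)
    theta = math.degrees(math.acos(max(-1.0, min(1.0, cos_t))))  # clamp rounding noise
    return n1, n2, theta

def distance_angle_cost(positions, d1, d2, theta_ref=90.0):
    """Sum of squared length errors (mm) and angle errors (deg) over all positions."""
    cost = 0.0
    for Q1, Q2, Q3 in positions:
        n1, n2, theta = l_target_geometry(Q1, Q2, Q3)
        cost += (n1 - d1) ** 2 + (n2 - d2) ** 2 + (theta - theta_ref) ** 2
    return cost

# One ideal position and one with a 0.5 mm length error on the first vector.
positions = [
    ((30.0, 0.0, 1000.0), (0.0, 0.0, 1000.0), (0.0, 40.0, 1000.0)),
    ((30.5, 0.0, 1000.0), (0.0, 0.0, 1000.0), (0.0, 40.0, 1000.0)),
]
print(distance_angle_cost(positions, d1=30.0, d2=40.0))  # ≈ 0.25
```

Note that this raw cost adds millimeters to degrees. That is the scale imbalance the normalization step is meant to remove.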

Because the orthogonality is measured in degrees while the norms of the vectors are measured in millimeters, the two objectives must be balanced. Consequently, the two objectives in Eq. (15) are normalized as

where \(\hat{{\boldsymbol{\phi }}}\) is the optimized laser plane of Eq. (16).
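The normalization in Eq. (16) is likewise not reproduced here. One simple way to make the terms dimensionless is to divide each residual by its nominal value (d1, d2 and 90°). This scheme is assumed purely for illustration and may differ from the authors' weighting. The helper names are hypothetical.

```python
def normalized_residuals(n1, n2, theta, d1, d2, theta_ref=90.0):
    """Length and angle residuals divided by their nominal values (unitless)."""
    return [(n1 - d1) / d1, (n2 - d2) / d2, (theta - theta_ref) / theta_ref]

def normalized_cost(measurements, d1, d2, theta_ref=90.0):
    """Sum of squared normalized residuals over all L-target positions.

    measurements: iterable of (n1, n2, theta), i.e. reconstructed norms (mm) and angle (deg).
    """
    return sum(r * r for m in measurements
               for r in normalized_residuals(*m, d1, d2, theta_ref))

# A 0.3 mm error on a 30 mm vector and a 0.9 deg error on the right angle
# both become a 1 % residual, so neither unit dominates the cost.
print(normalized_residuals(30.3, 40.0, 90.9, d1=30.0, d2=40.0))
```

Dividing by nominal values is only one choice. Dividing by an expected error magnitude for each term would be an equally plausible alternative.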

Fig. 4 summarizes the optimization of the laser plane in the binocular active vision. The experimental results demonstrate the benefits of the normalization object of vector orthogonality.

In profile reconstruction, the laser plane intersects the measured object at 3D points Q. Epipolar geometry is used to match the 2D laser points in the left and right images36. The corresponding point is taken as the point in the candidate set of laser points that is nearest to the epipolar line. The 3D point Q lies on the optimized laser plane \(\hat{{\boldsymbol{\phi }}}\) and is projected to the cameras17,33; then

where \({{\bf{q}}}^{{\rm{\alpha }}}={({x}^{{\rm{\alpha }}},{y}^{{\rm{\alpha }}})}^{{\rm{T}}}\), \({{\bf{q}}}^{{\rm{\beta }}}={({x}^{{\rm{\beta }}},{y}^{{\rm{\beta }}})}^{{\rm{T}}}\) are the projection points in the two cameras. Eqs (17)–(19) can be rewritten as

where

The 3D point Q on the measured object is solved by the SVD method.
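The matching and reconstruction steps above can be sketched with NumPy. The fundamental matrix is built from the two calibrated projection matrices with the standard relation F = [e′]× Pβ (Pα)+ from ref. 33. A left laser point is matched to the right-image candidate nearest its epipolar line. Q is then taken as the SVD null vector of the two pinhole constraints plus the plane constraint, which is one assumed reading of how Eqs (17)–(19) are stacked. This is an illustrative sketch under these assumptions (homogeneous coordinates, no coordinate normalization, no noise handling), not the authors' MATLAB code. The function names are hypothetical.

```python
import numpy as np

def fundamental_from_projections(P_a, P_b):
    """F = [e_b]_x P_b pinv(P_a), where e_b = P_b C_a is the epipole in the right image."""
    C_a = np.linalg.svd(P_a)[2][-1]  # left camera centre: null vector of P_a
    e = P_b @ C_a
    e_cross = np.array([[0.0, -e[2], e[1]], [e[2], 0.0, -e[0]], [-e[1], e[0], 0.0]])
    return e_cross @ P_b @ np.linalg.pinv(P_a)

def match_on_epipolar_line(F, q_a, candidates_b):
    """Return the right-image laser point closest to the epipolar line F q_a."""
    l = F @ np.append(q_a, 1.0)
    dists = [abs(l @ np.append(q, 1.0)) / np.hypot(l[0], l[1]) for q in candidates_b]
    return candidates_b[int(np.argmin(dists))]

def triangulate_on_plane(P_a, P_b, q_a, q_b, phi):
    """Solve for Q from both pinhole projections plus the plane constraint phi^T Q~ = 0."""
    rows = []
    for P, (x, y) in ((P_a, q_a), (P_b, q_b)):
        rows.append(x * P[2] - P[0])  # from s*x = p1^T Q~ with s = p3^T Q~
        rows.append(y * P[2] - P[1])  # from s*y = p2^T Q~
    rows.append(phi)
    Q = np.linalg.svd(np.array(rows))[2][-1]  # least-squares null vector of the 5 x 4 system
    return Q[:3] / Q[3]
```

With real images, the candidate set would be the laser-stripe points extracted from the right image. Normalizing the image coordinates before the SVD would generally improve conditioning.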

Results

The first column of Fig. 5 shows the measured objects and the binocular-active-vision system, which consists of a laser projector and two cameras. The image resolution used in the experiments is 2048 × 1536. The test code is written in MATLAB and implements the procedures in Figs 2 and 4. Figure 2 describes the closed-form solution of the laser plane, and Fig. 4 describes the optimization of the laser plane using the binocular active vision with the normalization object of vector orthogonality. The profiles of four objects (a car model, a mechanical part, a cylinder and a teapot) are measured to demonstrate the validity of the method. The second and third columns of Fig. 5 show the point-to-epipolar-line matching results in the two images, and the last column shows the profile measurement results. The traditional benchmark uses only standard distances25. Here, both standard distances and standard angles are used as benchmarks to verify the proposed method. In the four groups of experiments, the normalization object of vector orthogonality is compared with four other reconstruction methods:

- binocular vision;
- monocular vision with a laser plane;
- binocular vision with a laser plane without optimization;
- binocular vision with a laser plane using the object of vector orthogonality.

The standard lengths are 25 mm, 30 mm, 35 mm and 40 mm, and the standard angle is 90°. Figure 6 shows the errors between the reconstructed distances and the standard distances. The distances between the object and the line connecting the two cameras are 950 mm, 1000 mm, 1050 mm and 1100 mm.

Experimental results of the profile measurement using the binocular active vision with the normalization object of vector orthogonality. (a, e, i and m) The test system and the measured objects. (b, f, j and n) The images of the right camera with the feature points and epipolar lines. (c, g, k and o) The images of the left camera with the feature points and epipolar lines. (d, h, l and p) The reconstruction results of the measured objects.

Absolute errors of the reconstructed test lengths for reconstruction methods A, B, C, D and E. Method A is binocular vision. Method B is monocular vision with a laser plane. Method C is binocular vision with a laser plane without optimization. Method D is binocular vision with a laser plane using the object of vector orthogonality. Method E is the binocular active vision with the normalization object of vector orthogonality. The error bars represent the distribution of the reconstruction errors. Each group of data has five line segments; from the bottom segment to the top segment, 10%, 25%, 50%, 75% and 90% of the data are smaller than the corresponding values. (a–d) Correspond to measurement distances of 950 mm, 1000 mm, 1050 mm and 1100 mm, respectively.

Figure 6(a) shows the errors when the distance between the object and the two cameras is 950 mm. Each of the five methods reconstructs the standard lengths of 25 mm, 30 mm, 35 mm and 40 mm.

- Binocular vision (method A): the average reconstruction errors are 0.25 mm, 0.38 mm, 0.50 mm and 0.59 mm, and the standard deviations are 0.22 mm, 0.24 mm, 0.22 mm and 0.24 mm.
- Monocular vision with the laser plane (method B): the average errors are 0.20 mm, 0.36 mm, 0.48 mm and 0.56 mm, and the standard deviations are 0.20 mm, 0.22 mm, 0.22 mm and 0.24 mm.
- Binocular vision with the laser plane without optimization (method C): the average errors are 0.17 mm, 0.26 mm, 0.42 mm and 0.53 mm, and the standard deviations are 0.20 mm, 0.20 mm, 0.22 mm and 0.24 mm.
- Binocular vision with the laser plane and the object of vector orthogonality (method D): the average errors are 0.13 mm, 0.22 mm, 0.37 mm and 0.48 mm, and the standard deviations are 0.17 mm, 0.17 mm, 0.20 mm and 0.20 mm.
- Binocular active vision with the normalization object of vector orthogonality (method E): the average errors are 0.14 mm, 0.20 mm, 0.35 mm and 0.46 mm, and the standard deviations are 0.14 mm, 0.14 mm, 0.17 mm and 0.20 mm.

Tables 1 and 2 summarize the error means and standard deviations for the standard lengths of 25 mm, 30 mm, 35 mm and 40 mm and for the right angle. Table 3 gives the corresponding uncertainties.

According to Fig. 6(a–d), the average errors and standard deviations increase as the distance between the object and the cameras increases from 950 mm to 1100 mm. At a fixed measurement distance, the average errors and standard deviations generally decrease from method A to method E. Furthermore, the average errors and standard deviations tend to increase with the standard length.
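Figs 6 and 7 and the paragraphs above report several statistics: the mean, the standard deviation, and the 10%, 25%, 50%, 75% and 90% lines of each box. These can be computed from a list of absolute errors as sketched below. The paper does not state which estimators were used, so the sample standard deviation and linear-interpolation percentiles here are assumptions. The function name is hypothetical.

```python
import statistics

def error_summary(errors):
    """Mean, sample standard deviation and the 10/25/50/75/90 % percentiles of absolute errors."""
    abs_err = [abs(e) for e in errors]
    # method="inclusive" interpolates linearly between order statistics,
    # the same rule as NumPy's default percentile.
    cuts = statistics.quantiles(abs_err, n=100, method="inclusive")
    percentiles = {p: cuts[p - 1] for p in (10, 25, 50, 75, 90)}
    return statistics.mean(abs_err), statistics.stdev(abs_err), percentiles
```

Feeding in the errors of one method at one standard length and one distance would give the five segment heights of one box, together with the mean and standard deviation quoted in the text.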

Figure 7 shows the angle errors in the test. The standard angle is a right angle, so the angle errors are obtained by comparing the reconstructed angles with 90°. The distances between the object and the two cameras are again 950 mm, 1000 mm, 1050 mm and 1100 mm.

Absolute errors of the reconstructed test angles for reconstruction methods A, B, C, D and E. Method A is binocular vision. Method B is monocular vision with a laser plane. Method C is binocular vision with a laser plane without optimization. Method D is binocular vision with a laser plane using the object of vector orthogonality. Method E is the binocular active vision with the normalization object of vector orthogonality. The error bars represent the distribution of the reconstruction errors. Each group of data has five line segments; from the bottom segment to the top segment, 10%, 25%, 50%, 75% and 90% of the data are smaller than the corresponding values. (a–d) Correspond to measurement distances of 950 mm, 1000 mm, 1050 mm and 1100 mm, respectively.

Figure 7(a) shows the angle errors when the distance between the object and the two cameras is 950 mm. The values below are given for the four standard lengths.

- Binocular vision (method A): the average errors are 0.43°, 0.57°, 0.89° and 1.01°, and the standard deviations are 0.40°, 0.40°, 0.45° and 0.45°.
- Monocular vision with the laser plane (method B): the average errors are 0.39°, 0.56°, 0.86° and 0.99°, and the standard deviations are 0.36°, 0.38°, 0.44° and 0.44°.
- Method C: the average errors are 0.30°, 0.43°, 0.76° and 0.82°, and the standard deviations are 0.30°, 0.32°, 0.37° and 0.38°.
- Binocular vision with a laser plane and the object of vector orthogonality (method D): the average errors are 0.18°, 0.36°, 0.68° and 0.75°, and the standard deviations are 0.30°, 0.32°, 0.36° and 0.36°.
- Method E: the average errors are 0.14°, 0.32°, 0.62° and 0.72°, and the standard deviations are 0.29°, 0.30°, 0.32° and 0.33°.

Tables 1–3 provide the errors at distances of 950 mm, 1000 mm, 1050 mm and 1100 mm.

According to Fig. 7(a–d), the average angle errors and standard deviations increase as the vector norm increases from 25 mm to 30 mm, 35 mm and 40 mm. They also increase as the measurement distance increases from 950 mm to 1100 mm. At a fixed distance, the angle errors decrease from method A to method E. In particular, the errors of the binocular active vision with the normalization object of vector orthogonality are smaller than those of binocular vision with a laser plane and the object of vector orthogonality. This indicates that the normalization object yields more accurate results than the other methods because it balances the length objective and the angle objective.

To further investigate the accuracy of the approach, a first-level square ruler and a vernier caliper with 0.01 mm resolution serve as quasi-ground-truths for angle and length, respectively. Figure 8 shows the experimental setups used to verify the profile measurement with the binocular active vision and the normalization object of vector orthogonality. Marks are attached to the two sides of the square ruler that form the right angle. To identify the standard length on the vernier caliper, two semicircular marks are attached to its two large outside jaws. The vernier caliper provides absolute reference lengths of 25 mm, 30 mm, 35 mm and 40 mm. Table 4 shows the verification results against the quasi-ground-truths of length and angle. For methods A, B, C, D and E, the average reconstruction errors of the absolute lengths are 0.63 mm, 0.60 mm, 0.55 mm, 0.51 mm and 0.49 mm, respectively. The corresponding average reconstruction errors of the absolute standard angle are 0.91°, 0.87°, 0.78°, 0.71° and 0.68°. Compared with the other methods, the binocular-active-vision method with the normalization object of vector orthogonality achieves higher reconstruction accuracy and is suitable for object profile measurement and motion analysis.

Experimental setups for verifying the profile measurement using the binocular active vision with the normalization object of vector orthogonality. (a) The square ruler used as the absolute angle reference. (b) The vernier caliper used as the absolute length reference. (c) The verification experiment with the square ruler. (d) The verification experiment with the vernier caliper.

Summary

This paper presents a profile measurement method that adopts binocular active vision with the normalization object of vector orthogonality. An L target is designed to construct two vectors in the field of view of the cameras, and the feature points on the target define the two orthogonal vectors. The optimization function considers both the lengths of the vectors and the angle between them, and these terms are normalized to balance the different optimization objectives. In the experiments, the proposed method is compared with four other methods. The average length reconstruction errors of the five methods are 0.64 mm, 0.62 mm, 0.56 mm, 0.51 mm and 0.49 mm, respectively. The length errors of the proposed method are therefore 23%, 20%, 14% and 4% lower than those of the other methods. The average angle reconstruction errors of the five methods are 0.92°, 0.89°, 0.79°, 0.72° and 0.69°, respectively, so the angle errors of the proposed method are 25%, 22%, 13% and 4% lower. The binocular-active-vision method with the normalization object of vector orthogonality thus balances the angle and length objectives and improves the accuracy of 3D object reconstruction.
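The percentage reductions quoted above are consistent with the relative change (eX − eE)/eX computed from the average errors. The formula is inferred from the numbers rather than stated in the paper. The short sketch below applies it to the average angle errors of methods A–D and E.

```python
def relative_reduction(baseline_error, proposed_error):
    """Percentage by which proposed_error is smaller than baseline_error."""
    return 100.0 * (baseline_error - proposed_error) / baseline_error

# Average angle errors (degrees) reported in the Summary for methods A-D, and method E.
baselines = {"A": 0.92, "B": 0.89, "C": 0.79, "D": 0.72}
method_e = 0.69
print({name: round(relative_reduction(e, method_e)) for name, e in baselines.items()})
# → {'A': 25, 'B': 22, 'C': 13, 'D': 4}
```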

Data Availability

The datasets generated during the current study are available from the corresponding author on reasonable request.

References

Kim, D. & Lee, S. Structured-light-based highly dense and robust 3D reconstruction. J. Opt. Soc. Am. A. 30, 403–417 (2013).

Farid, N., Hussein, H. & Bahrawi, M. Employing of diode lasers in speckle photography and application of FFT in measurements. Mapan-J. Metrol. Soc. I. 30, 125–129 (2015).

Głowacz, A. & Głowacz, Z. Diagnosis of the three-phase induction motor using thermal imaging. Infrared Phys. Techn. 81, 7–16 (2017).

Özgürün, B., Tayyar, D. Ö., Agiş, K. Ö. & Özcan, M. Three-dimensional image reconstruction of macroscopic objects from a single digital hologram using stereo disparity. Appl. Optics 56, 84–90 (2017).

Xu, J., Xi, N., Zhang, C., Shi, Q. & Gregory, J. Real-time 3d shape inspection system of automotive parts based on structured light pattern. Opt. Laser Technol. 43, 1–8 (2011).

Brosed, F. J., Santolaria, J., Aguilar, J. J. & Guillomía, D. Laser triangulation sensor and six axes anthropomorphic robot manipulator modelling for the measurement of complex geometry products. Robot. & Com-Int. Manuf. 28, 660–671 (2012).

Ruiz, J. et al. Breast density quantification using structured-light-based diffuse optical tomography simulations. Appl. Optics 56, 7146–7157 (2017).

Bakirman, T., Gumusay, M. U. & Reis, H. C. Comparison of low cost 3D structured light scanners for face modeling. Appl. Optics 56, 985–992 (2017).

Geng, J. Structured-light 3d surface imaging: a tutorial. Adv. Opt. Photonics 3, 128–160 (2011).

Faugeras, O., Luong, Q. T. & Papadopoulo, T. The Geometry of Multiple Images: The Laws That Govern the Formation of Multiple Images of a Scene and Some of Their Applications (MIT Press, 2001).

Wang, X., Tieu, K. & Grimson, W. E. L. Correspondence-free activity analysis and scene modeling in multiple camera views. IEEE T. Pattern Anal. 32, 56–71 (2010).

Wang, X., Tieu, K. & Grimson, W. E. L. Correspondence-free multi-camera activity analysis and scene modeling. IEEE Conference on Computer Vision & Pattern Recognition 1–8 (2008).

Ren, Z., Liao, J. & Cai, L. Three-dimensional measurement of small mechanical parts under a complicated background based on stereovision. Appl. Optics 49, 1789–1801 (2010).

Ambrosch, K. & Kubinger, W. Accurate hardware-based stereo vision. Comput. Vis. Image Und. 114, 1303–1316 (2010).

Zabih, R. & Woodfill, J. Non-parametric local transforms for computing visual correspondence. Proceedings of Computer Vision-ECCV 151–158 (1994).

Wang, Z., Wu, Z., Zhen, X., Yang, R. & Xi, J. An onsite structure parameters calibration of large FOV binocular stereovision based on small-size 2D target. Optik 124, 5164–5169 (2013).

Zhang, Z. A flexible new technique for camera calibration. IEEE T. Pattern Anal. 22, 1330–1334 (2000).

Luo, Z., Zhang, K., Wang, Z., Zheng, J. & Chen, Y. 3D pose estimation of large and complicated workpieces based on binocular stereo vision. Appl. Optics 56, 6822–6836 (2017).

Li, J. Binocular vision measurement method for relative position and attitude based on dual-quaternion. J. Mod. Optic. 39, 1–8 (2017).

Zhang, X., Song, Y., Yang, Y. & Pan, H. Stereo vision based autonomous robot calibration. Robot. Auton. Syst. 93, 43–51 (2017).

Xu, G., Yuan, J., Li, X. & Su, J. Optimization reconstruction of projective point of laser line coordinated by orthogonal reference. Sci. Rep. 7, 14719 (2017).

Rodríguez, J. Laser imaging and approximation networks for calibration of three-dimensional vision. Opt. Laser Technol. 43, 491–500 (2011).

Wang, G., Hu, Z. & Wu, F. Implementation and experimental study on fast object modeling based on multiple structured stripes. Opt. Laser. Eng. 42, 627–638 (2004).

Liu, H., Su, W. H. & Reichard, K. Calibration-based phase-shifting projected fringe profilometry for accurate absolute 3D surface profile measurement. Opt. Commun. 216, 65–80 (2003).

Xu, G., Yuan, J., Li, X. & Su, J. 3D reconstruction of laser projective point with projection invariant generated from five points on 2d target. Sci. Rep. 7, 601–613 (2017).

Chen, X., Xi, J. & Jin, Y. Accurate calibration for a camera–projector measurement system based on structured light projection. Opt. Laser. Eng. 47, 310–319 (2009).

Chen, H. et al. Accurate calibration method for camera and projector in fringe patterns measurement system. Appl. Optics 55, 4239–4300 (2016).

Lelas, M. & Pribanić, T. Accurate stereo matching using pixel normalized cross correlation in time domain. Automatika 57, 46–57 (2016).

Zhang, S. Three-dimensional shape measurement using a structured light system with dual cameras. Opt. Eng. 47, 013604 (2008).

Vilaça, J. L., Fonseca, J. C. & Pinho, A. M. Calibration procedure for 3D measurement systems using two cameras and a laser line. Opt. Laser Technol. 41, 112–119 (2009).

Jin, Z. S., Li, H. C., Li, R., Sun, Y. F. & Gao, H. M. 3D reconstruction of GMAW pool surface using composite sensor technology. Measurement 133, 508–521 (2019).

Abdel-Aziz, Y. I., Karara, H. M. & Hauck, M. Direct linear transformation from comparator coordinates into object space coordinates in close range photogrammetry. Photogramm. Eng. Rem. S. 81, 103–107 (2015).

Hartley, R. & Zisserman, A. Multiple View Geometry in Computer Vision (Cambridge University Press, 2003).

Wei, Z. Novel calibration method for a multi-sensor visual measurement system based on structured light. Opt. Eng. 49, 043602 (2010).

Horn, R. A. & Johnson, C. R. Matrix Analysis (Cambridge University Press, 2012).

Shapiro, L. G. & Stockman, G. C. Computer Vision (Prentice Hall, 2001).

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grant Nos 51875247, 51478204 and 51205164, and by the Natural Science Foundation of Jilin Province under Grant No. 20170101214JC.

Author information

Contributions

G.X. proposed the method; G.X., J.C. and X.T.L. wrote and edited the paper; J.C. and J.S. prepared the code and experiments; J.C. prepared the figures.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xu, G., Chen, J., Li, X. et al. Profile measurement adopting binocular active vision with normalization object of vector orthogonality. Sci Rep 9, 5505 (2019). https://doi.org/10.1038/s41598-019-41341-8
