Abstract

In this paper, a structured light vision measurement method using a scanning laser line and a positioning laser line is proposed. The novel method enables the scanning laser plane to slide along a slide rail while maintaining intersection with the positioning laser plane, eliminating the need to determine the scanning direction and moving step. During the measurement process, the laser plane equations need to be recalibrated for each new position, so a real-time calibration method is given. Initially, the geometric barycenter method is employed to detect the subpixel coordinates of the light stripe intersection point. Subsequently, these coordinates are projected into the camera coordinate system using the initial equations of the positioning laser plane. Finally, leveraging the normal information of the initial equation of the scanning laser plane and the three-dimensional coordinates of the light stripe intersection point, the real-time calibration of the scanning laser plane equations can be accomplished. The proposed method enables the three-dimensional reconstruction of objects, and its accuracy is verified through measurements on gauge blocks. Experimental results demonstrate that this method achieves precise and stable three-dimensional reconstruction of object surface shape.

Similar content being viewed by others

Introduction

With the swift progress in modern manufacturing and technology, three-dimensional (3D) reconstruction techniques have garnered significant attention in both research and practical applications, encompassing fields such as machine vision, biomedical science, entertainment, and contemporary industry1,2. Especially within the manufacturing sector, there has been a rising demand for measurements with high precision and efficiency. This has catalyzed the evolution and refinement of numerous non-contact 3D measurement techniques, including structured light measurement, 3D laser scanning, and Time-of-Flight (TOF) camera technologies3.

Structured light measurement technology captures precise 3D information of an object by projecting specific light patterns onto its surface and subsequently analyzing the reflected patterns or distortions. Because of its suitability for complex-shaped objects, combined with its high precision, speed, cost-effectiveness, and stability, structured light measurement emerges as a preferred method for close-range, small-scale measurement scenarios in the non-contact measurement domain4. In contrast, 3D laser scanning technology utilizes a laser beam to scan a target object, processing its reflected light to reconstruct the object’s surface topology. Although this technology is suitable for large-scale and long-distance measurement scenarios, for smaller research groups, high-precision instruments may be economically unaffordable. More cost-effective equipment might compromise on accuracy, and may not be as effective as structured light measurement methods in low-cost, small-scale measurement applications5,6,7. TOF (Time-of-Flight) cameras operate based on the time it takes for light to propagate on the surface of an object and measure distance, offering advantages in speed and measurement range. However, in close-distance, small-scale measurement scenarios, its stability and measurement accuracy may not be as good as structured light measurement methods8,9,10.

Structured light measurement techniques can be broadly divided into three categories: point-structured, surface-structured, and line-structured light. The point-structured light measurement technique projects a laser onto an object’s surface, producing a laser dot. By analyzing the positional shift of this laser dot on a photosensitive element, the depth information of the dot can be deduced11. However, this method is limited to measuring a finite number of positions at any given moment. To comprehensively capture the structural intricacies of the entire surface, scanning must be done point by point or line by line. Consequently, the data acquired for an object’s surface details is often insufficient.

On the other hand, the surface-structured light measurement technique employs a projector to cast raster images onto the object’s surface. By analyzing the modulated raster image influenced by the object’s surface, the 3D contour of the surface can be directly determined12,13,14,15,16. This approach obviates the need for scanning, a stark contrast to the point-structured light method. However, the act of raster projection requires both the encoding of the projection pattern and the decoding of the captured image, thereby amplifying the intricacy of image processing17,18,19,20.

The line-structured light measurement technique operates on the principle of laser triangulation. Initially, a linear laser is cast onto the surface of the object in question. Following this, an extraction algorithm identifies and pinpoints the two-dimensional (2D) coordinates of the light stripe’s central point within the image. By mapping these 2D coordinates into the camera’s coordinate system, and considering the pre-calibrated vision system parameters, the spatial coordinates of this central point can be calculated. This approach captures a more extensive set of data in a single pass compared to the point-structured light technique, ensuring heightened efficiency21. When set against the surface-structured light technique, the line-structured method boasts more straightforward image processing, given that each image contains just a singular light stripe pattern.

To capture the full 3D profile of the object under measurement, the laser plane must integrate with at least one mobile unit equipped with scanning capabilities. This integration facilitates the laser plane’s movement across the object, following a predefined scanning trajectory. Utilizing the mobile device, combined with the outcomes of the system calibration, data from various images can be consolidated within a singular coordinate system. This process enables the acquisition of point cloud data representing the surface of the measured object5,22,23.

Coordinate measuring machines (CMM)24,25,26 are commonly employed as mobile devices. When the 3D scanning system is mounted on the CMM, the data points are directly transformed into 3D data within the CMM coordinate system. Subsequently, a curved surface is constructed by gridding the point cloud. Since the final measurement is performed in the CMM frame, the target’s position in the CMM frame is measured using the trigger probe to derive the conversion from the world coordinate system to the global coordinate system of the CMM. Usually, using contact probes to identify the target orientation in the CMM global frame makes the calibration process inconvenient and not facilitating. Therefore, some methods are proposed without mobile control devices, such as handheld laser scanning27,28. However, although these methods mitigate the precision demands of the scanning device, they tend to accumulate measurement errors continuously, and the methods’ performance limits the measurement accuracy.

To simplify the calibration and scanning operations, in the experiments given in the references29,30,31,32,33, a linear projector was placed perpendicular to the object surface (x-axis), and the camera was aligned at an oblique angle. In this configuration, the object was fixed on a platform that moved the object along the x-axis (slide rail). However, in practice, the perpendicular relationship between the line laser projector and the object surface is difficult to guarantee and can affect the final measurement accuracy. Therefore, in reference34, the above specific vertical relationship was not required, and a relevant algorithm to determine the scanning direction was given to further improve the 3D reconstruction accuracy.

To enhance the measurement accuracy of the system, various studies have incorporated mechanical, specifically stepping motors, as their primary driving mechanisms for scanning motion. For instance, Huang35 utilized three stepping motors to drive a console, enabling movement in three dimensions with an accuracy of 0.55 mm. Zhou36 employed a stepping motor to drive a table carrying the measured object, facilitating perpendicular movement to the laser plane. Similarly, Li37 conducted measurements on a ceramic ball with a radius of 28.494 mm, achieving a measured radius of 28.499 mm with a radius error of only 0.005 mm. By utilizing stepping motors as driving methods, these studies ensured high measurement accuracy. However, it is worth noting that the scanning process of the laser plane controlled by a stepping motor follows a “step → pause → step” pattern, introducing a certain hysteresis. From a strict perspective, such a process deviates from the notion of continuous “scanning,” potentially compromising the real-time efficiency of 3D measurements.

Increasing the number of laser lines can significantly enhance the measurement accuracy and efficiency of line-structured light techniques. For instance, Wu38 designed a 3D measurement system utilizing a three-line laser to measure the diameter of a sphere with a measurement error of 0.01 mm. In reference39, a multi-line structured light measurement method was employed, achieving a detection accuracy of 99.74% for various computer keyboards. Li40 designed a multi-line structured light measurement system, with the measurement error of objects being within 0.4 mm. Reference41 presented a multi-line structured light vision technique, realizing a balance between precision and speed in measuring the flatness of steel plates. Gao42 employed two three-line lasers, together casting a total of six parallel rays onto the target object. However, it’s pertinent to note that a linear movement of the object remained a requisite to enable efficient scanning measurements.

The multi-line structured light measurement methodology boasts a slew of benefits, encompassing heightened accuracy, amplified efficiency, and a potential for cost savings. Nonetheless, this approach isn’t without its drawbacks. To begin with, prevailing multi-line structured light techniques often handle each light stripe in isolation, devoid of any meaningful interrelation among them. Additionally, despite the multi-line approach, there’s a possibility that a solitary image might fall short in capturing comprehensive data, thereby prompting the need for data collection across multiple images via scanning43. Subsequently, the surface point cloud representing the examined object is derived by meticulously aligning and amalgamating the collated scan data.

This paper presents a novel solution to address the aforementioned issues through a double-line combined structured light 3D vision measurement method. The proposed method utilizes two single-line lasers: one acts as a positioning laser plane, while the other serves as a scanning laser plane, with the motion controlled by a slide rail. Throughout the scanning process, the two laser planes consistently intersect each other, eliminating the requirement for a specific spatial relationship, such as perpendicularity. By leveraging the intersection information of the two light stripes and the initial calibration data of the system, real-time calibration of the scanning laser plane is achieved during the motion. This calibration enables the comprehensive measurement of the surface of the object being measured. Importantly, the method proposed in this paper circumvents the need for determining the scanning direction and avoids any efficiency limitations caused by the hysteresis of stepper motors commonly used in measurement systems.

System structure and measurement steps

System structure

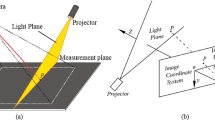

The structure of the measurement system is shown in Fig. 1, which consists of two single-line lasers, a slide rail, a camera, and a computer. Laser1 (scanning laser) is securely mounted on the slide rail slider. Laser2 (positioning laser) remains fixed and maintains a constant intersection angle with Laser1. Theoretically, the lasers can intersect at any desired angle. The computer takes charge of controlling the camera to capture continuous images throughout the scanning process, while also performing necessary image processing tasks.

Measurement steps

As shown in Fig. 2, the initial laser planes of the system are defined by the scanning laser plane, π1, and the positioning laser plane, ∏. The light strip, denoted as l, when projected onto the background plane, M, intersects s1 at the point labeled P1. As the scanning unfolds, a series of subsequent intersection points emerge, represented as P2 through Pn.

As shown in Fig. 3, the measurement process can be summarized as the following three steps:

Step 1: Initial calibration of the system In this step, the system undergoes calibration procedures, including camera calibration, positioning laser plane calibration, and initial scanning laser plane calibration. This involves obtaining the camera’s internal parameter matrix, distortion coefficients, and equations for both laser planes.

Step 2: Real-time calibration of the scanning laser plane During this step, the slider is utilized to facilitate the movement of Laser1 along the slide rail in a linear fashion while the camera continuously captures images. The image coordinates of the intersection point Pn in each image are detected, and their corresponding 3D coordinates are solved by using the calibration information of the camera and the positioning laser plane. Based on the calibration information of the initial scanning laser plane and the 3D coordinates of the intersection point Pn, the equation of the scanning laser plane is calculated at each moment of image capture.

Step 3: 3D surface shape measurement and reconstruction In this final step, the center point information of the scanning light stripe image is detected by a light stripe center detection algorithm, and those 3D coordinates are calculated. Subsequently, the 3D surface shape data of the measured object can be obtained by splicing the 3D coordinates of all images, and the 3D reconstruction can be achieved ultimately.

Calibration and calculation methods

The structure of the double-line combined structured light 3D vision measurement system integrates both a camera and two single-line lasers. Consequently, the initial calibration process encompasses camera calibration to determine the intrinsic parameters, such as the internal parameter matrix and distortion coefficients. Additionally, calibration of the laser plane is carried out to establish the mathematical equations representing the laser planes employed in the measurement system. By precisely determining the equations for the positioning laser plane and the scanning laser plane, the system can accurately identify the intersection points between the laser stripes and the object surface. These initial calibration steps ensure the system’s capacity for accurate and reliable measurements.

Camera calibration

The basic principle of camera calibration is that a non-linear relationship exists between the three-dimensional (3D) surface of an object and its two-dimensional (2D) image captured by the camera. The pinhole imaging model is commonly employed to describe the linear relationship of perspective projection while considering lens distortion effects. During camera calibration, four coordinate systems are established to precisely describe the transformation relationships between coordinates. These coordinate systems include the world coordinate system \(({X}_{w},{Y}_{w},{Z}_{w})\), the camera coordinate system \(({X}_{c},{Y}_{c},{Z}_{c})\), the image physical coordinate system \((x,y)\), and the image pixel coordinate system \((u,v)\), as shown in Fig. 4.

Zhang’s calibration method44 is widely recognized as one of the most commonly used camera calibration techniques. This method establishes the world coordinate system by selecting a reference point on the calibration plate, typically the upper-left corner, as the origin. The \({X}_{w}{O}_{w}{Y}_{w}\) plane of the world coordinate system coincides with the plane of the calibration plate, ensuring that the points on the calibration plate have a constant Z-coordinate value of zero. To calculate the camera’s internal parameter matrix \(A\), external parameter matrix \(R\), translation vector \(T\), and distortion coefficient \(K\), \(N (N \ge 3)\) calibration plate images are captured from different positions, and the corner point coordinates of each image are extracted. In this paper, tangential distortion is added based on Zhang’s calibration model, which makes the calibration model become a non-linear model more in line with high-precision measurement. By utilizing a non-linear optimization function, typically solved using the Levenberg–Marquardt (L–M) algorithm, the camera calibration process can be completed. The internal parameter matrix \(A\) and distortion coefficient \(K{({k}_{1},{k}_{2},{p}_{1},{p}_{2})}^{T}\) of the camera can be accurately determined.

Laser plane calibration

In a previous study45, a laser plane calibration algorithm utilizing a planar target with a square pattern is proposed. The calibration process involves capturing the target image from a minimum of two distinct spatial positions. By projecting the pixel coordinates of the light stripe’s center point onto the camera coordinate system, a set of 3D coordinates can be obtained. These 3D coordinates are then used to determine the laser plane equations through a fitting procedure.

The Bouguet method mentioned in reference46 is used to extract the image coordinates of four corner points in the black square pattern, as shown in Fig. 5. The plane equation of the target in the camera coordinate system can be solved by using the camera parameters, as shown in Fig. 6.

The sub-pixel coordinates of the center point of the light stripe can be accurately extracted using the Steger algorithm, as presented in the reference47. This algorithm, depicted in Fig. 7, enables precise localization of the center point with sub-pixel accuracy.

The sub-pixel coordinates of the center points of light stripes in multiple images are projected into the camera coordinate system, and their 3D coordinates are calculated. Subsequently, a laser plane equation is fitted by these calculated 3D points. The fitting process is shown in Fig. 8.

The equation describing the laser plane can be expressed as follows:

In Eq. (1), \(A\), \(B\), and \(C\) represent the coefficients of the laser plane equation. The values of these coefficients are determined through the calibration process discussed earlier. By fitting the 3D coordinates of the center points of the light stripes in multiple images and utilizing the camera and laser plane calibration information, the equations for both the initial scanning laser plane π and the positioning laser plane ∏ can be derived. The completion of this process signifies the successful initial calibration of the measurement system.

The process of calculating three-dimensional coordinates

Camera calibration can establish the relationship between the pixel coordinates and the corresponding 3D world coordinates. The relationship is as follows:

\(\left[\begin{array}{ccc}\alpha & \gamma & {u}_{0}\\ 0& \beta & {v}_{0}\\ 0& 0& 1\end{array}\right]=A\), which is the camera’s intrinsic matrix, where \(\alpha\) and \(\beta\) represent the scale factors along the u and v axes of the image pixel coordinate system, respectively. \(\gamma\) represents the non-orthogonality factor between the two coordinate axes of the pixel plane. \({(u}_{0},{v}_{0})\) denotes the coordinates of the intersection point between the camera’s optical axis and the image plane in the pixel coordinate system.

Here, \(r=\sqrt{{x}_{d}^{2}+{y}_{d}^{2}}\), where \(\left({x}_{d},{y}_{d}\right)\) represents the actual image coordinates of a point in the image physical coordinate system. \(\left({x}_{p},{y}_{p}\right)\) represents the pixel coordinates of the same point in the image pixel coordinate system. \(({k}_{1},{k}_{2},{p}_{1},{p}_{2})\) are the distortion coefficients of the camera lens. \({k}_{1}\) and \({k}_{2}\) represent the coefficients of the radial distortion function in the image physical coordinate system, while \({p}_{1}\) and \({p}_{2}\) represent the coefficients of the tangential distortion function in the image physical coordinate system.

where \(({x}_{u},{y}_{u})\) represents the ideal image coordinates of a point in the image physical coordinate system, and \(({X}_{c},{Y}_{c},{Z}_{c})\) represents the coordinates of a point in the camera coordinate system.

For each extracted two-dimensional pixel coordinate \(({x}_{p},{y}_{p})\) in the image, the mapping relationship between the calculated coordinates using Eqs. (2) and (3), and the distortion-corrected coordinates \(({x}_{u},{y}_{u})\) can be expressed as follows:

Based on Eq. (4), the three-dimensional coordinates \(({X}_{c},{Y}_{c},{Z}_{c})\) can be expressed in terms of the coordinate \({Z}_{c}\) and the function f as follows:

The three-dimensional coordinates \(({X}_{c},{Y}_{c},{Z}_{c})\) can be calculated using the known equation of the light plane. Substituting the three-dimensional coordinates into Eq. (1) yields the following formula:

Based on Eqs. (5), (6), (7), and (8), the formula for three-dimensional coordinates is derived as follows:

Real-time calibration algorithm of scanning laser plane equation

During the scanning process, the spatial equation of the scanning laser plane undergoes continuous changes, necessitating real-time calibration. In this section, we propose a real-time calibration algorithm for the scanning laser plane equation. This algorithm consists of two steps: first, the sub-pixel positioning method for light stripe intersection points, and second, solving the spatial equations of the scanning laser plane.

Sub-pixel positioning method of the light stripe intersection point

The accurate determination of light stripe intersection points is essential for precise calibration. In this paper, the center point of the intersection area between two light stripes is defined as the light stripe intersection point, as shown in Fig. 9.

As shown in Fig. 10, the intersecting area can be regarded as a closed quadrilateral formed by connecting the corner points so that the center point can be typically considered as the intersection point. However, due to the influence of lens distortion, the quadrilateral often becomes irregular, causing the geometric center to deviate from the true intersection point of its diagonals. To locate the coordinates of the intersection point, a sub-pixel positioning algorithm for the light stripe intersection points is given in this section.

Positioning of corner points

Using the Harris corner point detection algorithm, the pixel coordinates of the corner points \({\alpha }_{1}\), \({\alpha }_{2}\), \({\alpha }_{3}\), and \({\alpha }_{4}\) are determined within the laser intersection area, as shown in Fig. 11a. The initial positioning of pixel-level corner points in the area is achieved. To further improve the accuracy of coordinates, a sub-pixel detection area Ri is defined around the pixel coordinates of each corner point. Sub-pixel corner point detection is further performed in each detection area Ri to obtain the corresponding sub-pixel coordinates. The resulting sub-pixel corner points \({\beta }_{1}\), \({\beta }_{2}\), \({\beta }_{3}\), and \({\beta }_{4}\) are shown in Fig. 11b.

Positioning of the center point

An irregular quadrilateral can be divided into two triangles by one of its diagonals. It is known that the line connecting the barycenters of these two triangles passes through the geometric center of the quadrilateral. Exploiting this geometric property, we can obtain four triangles by connecting the two diagonal lines of the irregular quadrilateral determined by the connecting lines of the four points at the new position shown in Fig. 11b, as shown in Fig. 12. Consequently, the task of determining the geometric center of an irregular quadrilateral is transformed into solving the barycenters based on the properties of triangles.

The barycentric coordinates of each triangle can be calculated using the sub-pixel coordinates of the corner points. This allows us to determine the positions of the barycentric points, denoted as \({\gamma }_{1}\), \({\gamma }_{2}\), \({\gamma }_{3}\), and \({\gamma }_{4}\), for the four triangles, as shown in Fig. 13a.

The barycentric points \({\gamma }_{1}\) and \({\gamma }_{4}\) of the triangles can be connected to form one line segment, while \({\gamma }_{2}\) and \({\gamma }_{3}\) can be connected to form another line segment. The intersection point of the two line segments represents the geometric center of the irregular quadrilateral and corresponds to the center point P of the light stripe intersection area, as shown in Fig. 13b.

Solution of scanning laser plane spatial equation

In the measurement process, the scanning light stripe intersects with the positioning light stripe continuously, resulting in the intersection point lying in both planes. The sub-pixel coordinates of the light stripe intersection point can be projected into the camera coordinate system to obtain its spatial coordinates \((X,Y,Z)\) by using the calibration information of the positioning laser plane.

When the scanning laser plane moves along a fixed orientation, it remains parallel to the initial scanning laser plane. Consequently, all scanning laser planes share the same plane normal vector. Assuming that the vector \(\overrightarrow{n}=(A,B,C)\) is the initial normal vector of the scanning laser plane. The formula for calculating the new scanning laser plane equation is as follows:

The real-time spatial plane equation of the scanning laser plane can be solved by Eq. (10), and real-time calibration in the measurement process can be achieved.

Experiment and results

The experimental part of this paper includes calibration experiment, scanning measurement and 3D reconstruction, and accuracy test. The experimental equipment used in the experiment includes a camera, two single-line lasers, a checkerboard calibration board, and a planar target with a black square pattern. Detailed models and parameters of the equipment can be found in Table 1, while Fig. 14 showcases the experimental equipment and environment.

Calibration experiment

In the calibration experiment, a total of nine images of the checkerboard calibration board were captured using the camera, as shown in Fig. 15. These images were then used to determine the camera’s internal parameter matrix and distortion coefficient, following the camera calibration model described in Camera calibration section. The resulting internal parameter matrix is represented as:

Similarly, the distortion coefficient is represented as:

These parameters provide the necessary information to correct the camera’s lens distortion and accurately project the image coordinates onto the 3D world coordinates.

Then nine images of black square patterns with light strips at different positions were obtained using the camera. By applying the laser plane calibration method described in Laser plane section, the laser plane equations can be calculated. In this paper, the calibration is performed for two laser planes: the positioning laser plane and the initial scanning laser plane.

Figure 16 is a fitting process of the positioning laser plane. Figure 16a is nine images taken at different positions, and the 3D points in the center of the light stripe and the results of fitting the laser plane are shown in Fig. 16b and c. The calculated plane equation is:

As shown in Fig. 17, the plane equation of the initial scanning laser plane is calculated by the same method, and the calculated plane equation is:

Scanning measurement and 3D reconstruction

In the scanning experiment, the 3D point cloud data of the measured object is obtained by scanning the object with the controlled scanning laser plane. The camera captures continuous images of the scanning light stripe’s motion, with a shooting rate of 50 frames per second. The scanning process lasts for 4.92 s, resulting in a total of 246 images, out of which 217 images are considered efficient.

The coordinates of the center point of each image are extracted by the Steger algorithm, and the 3D coordinates in the camera coordinate system are solved by combining the scanning laser plane equation of each frame to obtain the point cloud data of the image. Furthermore, the reverse engineering software Geomagic Wrap 2017 is utilized to fit the 3D surface of the point cloud data, resulting in the generation of a 3D reconstructed image. To evaluate the effectiveness of the system, five objects with different shapes are measured in this paper, as shown in Fig. 18.

Accuracy test

To test the accuracy of the structured light vision measurement system, rectangular gauge blocks with known thicknesses are measured in this paper. The precision of the gauge block is 1 μm. During the measurement process, a scanning light stripe is projected onto the surface of the gauge block. As shown in Fig. 19, the light stripes on the surface of the gauge block and the background plane are separated by the gauge block itself. Point cloud data of gauge block surface and background plane are extracted respectively, and two parallel planes can be obtained using the scanning measurement method, as shown in Fig. 20. The distance between these two parallel planes is the measured value of the thickness of the gauge block. To assess the accuracy of the measurement system, the actual thickness of the gauge block is considered as the reference value. The difference between the measured thickness obtained from the structured light vision measurement system and the actual thickness of the gauge block is analyzed to determine the error of the measurement system.

To ensure the reliability of the experimental data, this study utilized ten gauge blocks of varying specifications. The known thicknesses of these gauge blocks were as follows: 1 mm, 3 mm, 5 mm, 10 mm, 15 mm, 20 mm, 25 mm, 30 mm, 40 mm, and 50 mm. Each gauge block was subjected to 20 individual measurements, from which the mean measurement value was determined. Subsequent to this, the Mean Absolute Error (MAE), Average Error (AE), and Root Mean Square Error (RMSE) were computed to evaluate the precision of the measurement system.

The MAE serves as an indicator of the average magnitude of systematic errors inherent to the system. AE, on the other hand, considers the directionality of errors, while the RMSE provides a nuanced reflection of the measurement errors. The measurement outcomes and their respective error values are delineated in Table 2. Upon analysis of the data presented in Table 2, it becomes evident that for the gauge block with a maximal thickness of 50 mm, the MAE is approximately 0.0793 mm, the AE is around -0.0016 mm, and the RMSE is roughly 0.0974 mm. These results affirm the exemplary accuracy of the measurement system devised in this investigation.

A comparative evaluation of measurement accuracy was conducted by employing methodologies analogous to those found in other references. Specifically, in reference22, gauge blocks with standardized thicknesses akin to those in our study, specifically 14.873 mm and 29.746 mm, exhibited AE values of − 0.039 mm and 0.139 mm, respectively. In contrast, our study yielded AE values of − 0.0057 mm and 0.0374 mm for gauge blocks with thicknesses of 15 mm and 30 mm. Furthermore, in reference23, the AE values for measurements of 1 mm and 3 mm gauge blocks stood at 0.0222 mm and 0.0116 mm, respectively. Contrarily, our study documented AE values of -0.0091 mm and − 0.0087 mm for the corresponding gauge blocks. Based on this empirical data, the methodology employed in our study demonstrates reduced measurement errors, highlighting its superiority over the methods proposed in references22,23.

In addition, this article also measured the accuracy of a single laser. The experimental setup has been adjusted to ensure that the single-line structured light is perpendicular to the surface of the test object, and the laser direction corresponds to the scanning direction of the laser plane along the sliding rail. Each gauge block was also measured 20 times. The measurement results using a single laser are shown in Table 3. At the same time, in comparison with the methods presented in this article, we selected 5 representative gauge blocks and displayed the differences by comparing MAE and RMSE. The results are shown in Fig. 21. The experimental results show that compared to using a single laser, the method of using two lasers in this paper has lower errors and improves measurement accuracy and stability to a certain extent.

Discussion

In this paper, we presented a novel approach for 3D surface shape measurement using a double-line combined structured light 3D vision measurement system. The system integration of cameras, two single-line lasers, and a slide rail enables rapid and accurate measurements, eliminating the need for pre-determining scanning directions and step sizes. The real-time calibration during the scanning process, achieved through computing the scanning laser plane equation using 3D coordinates of light stripe intersection points and the initial light plane position, is an innovative aspect of our work.

Our experimental results demonstrate the effectiveness of the proposed system in accurately reconstructing 3D surfaces while maintaining stability and data accuracy. The validation experiments included calibration, scanning measurements, and accuracy test. When comparing our method with existing techniques, our approach exhibited excellent accuracy. This highlights the potential impact of our methodology in addressing the limitations of other methods and enhancing measurement precision. Moreover, in contrast to traditional single-line structured light measurement methods, our system exhibited reduced errors and improved measurement accuracy and stability.

Given our current apparatus, we suggest the following measurement parameters: System working distance: 0.5 m-2.4 m. The camera’s scanning angular range (H × W) is 8.2° × 10.9°. The near-distance scanning range (H × W) is 71.7 mm × 95.4 mm, while the far-distance scanning range (H × W) is 344.2 mm × 457.9 mm. The capture volume size is 0.3748m3. By measuring gauge blocks of different sizes and after comprehensive analysis, the measurement error of this system is less than 1%.

In conclusion, our study introduces a novel solution to the field of structured light vision measurement. The double-line combined structured light 3D vision measurement system offers significant advantages over existing single-line methods, demonstrating its potential to overcome limitations and deliver accurate results. Future research could explore further applications and refinements to enhance the system’s performance and versatility.

Conclusion

In this paper, a novel approach for 3D surface shape measurement using a double-line combined structured light 3D vision measurement system is proposed. The system utilizes essential equipment such as lasers, cameras, and a slide rail to enable fast and accurate measurement without the necessity of predetermining the scanning direction and motion step. During the scanning process, the real-time calculation of the scanning laser plane equation is solved by using the 3D coordinates of the light strip intersection point and the initial position of the light plane. Experimental results demonstrate the system’s ability to accurately reconstruct 3D surfaces while maintaining stability and data accuracy. In a word, the method proposed in this paper provides a new solution for structured light vision measurement and has a good effect in practical application.

Data availability

All data generated or analyzed during this study are included in this manuscript.

References

Todo, C. et al. Reconstruction of conifer root systems mapped with point cloud data obtained by 3D laser scanning compared with manual measurement. Forests 12, 1117 (2021).

Sheng, W. Precise measurement for line structure light vision sensor with large range. Sci. Rep. 13, 7234 (2023).

Su, X. & Zhang, Q. Dynamic 3-D shape measurement method: A review. Opt. Lasers Eng. 48, 191–204 (2010).

Tong, O. L. H. et al. Structured-light surface scanning system to evaluate breast morphology in standing and supine positions. Sci. Rep. 10, 14087 (2020).

Cui, B., Tao, W. & Zhao, H. High-precision 3D reconstruction for small-to-medium-sized objects utilizing line-structured light scanning: A review. Remote Sens. 13, 4457 (2021).

Wang, Q., Tan, Y. & Mei, Z. Computational methods of acquisition and processing of 3D point cloud data for construction applications. Arch. Comput. Methods Eng. 27, 479–499 (2020).

Design of 3D laser radar based on laser triangulation. KSII TIIS 13, (2019).

Dashpute, A., Anand, C. & Sarkar, M. Depth resolution enhancement in time-of-flight cameras using polarization state of the reflected light. IEEE Trans. Instrum. Meas. 68, 160–168 (2019).

Frangez, V., Salido-Monzu, D. & Wieser, A. Assessment and improvement of distance measurement accuracy for time-of-flight cameras. IEEE Trans. Instrum. Meas. 71, 1–11 (2022).

Pasinetti, S. et al. Experimental procedure for the metrological characterization of time-of-flight cameras for human body 3D measurements. Sensors 23, 538 (2023).

Muralikrishnan, B., Ren, W., Everett, D., Stanfield, E. & Doiron, T. Performance evaluation experiments on a laser spot triangulation probe. Measurement 45, 333–343 (2012).

Chen, B. & Zhang, S. High-quality 3D shape measurement using saturated fringe patterns. Opt. Lasers Eng. 87, 83–89 (2016).

Wang, Z. & Yang, Y. Single-shot three-dimensional reconstruction based on structured light line pattern. Opt. Lasers Eng. 106, 10–16 (2018).

Li, Y. & Wang, Z. RGB line pattern-based stereo vision matching for single-shot 3-D measurement. IEEE Trans. Instrum. Meas. 70, 1–13 (2021).

Li, Y. & Wang, Z. 3D reconstruction with single-shot structured light RGB line pattern. Sensors 21, 4819 (2021).

Qin, Z. et al. Cereal grain 3D point cloud analysis method for shape extraction and filled/unfilled grain identification based on structured light imaging. Sci. Rep. 12, 3145 (2022).

Long, Y. et al. Decoding line structured light patterns by using Fourier analysis. Opt. Eng. 54, 073109 (2015).

Tornero-Martínez, N., Anguiano-Morales, M., Trujillo-Schiaffino, G. & Salas-Peimbert, D. P. Image stitching for fringe projection profilometry. Opt. Quant. Electron. 53, 290 (2021).

Feng, S. et al. High dynamic range 3D measurements with fringe projection profilometry: A review. Meas. Sci. Technol. 29, 122001 (2018).

Yang, S., Yang, T., Wu, Y., Wu, G. & Liu, F. Line-encoded structured light measurement method in measuring shiny and transparent objects. J. Opt. 25, 045701 (2023).

Li, W., Luo, Z., Hou, D. & Mao, X. Multi-line laser projection 3D measurement simulation system technology. Optik 231, 166390 (2021).

Li, Y., Zhao, B., Zhou, J. & Ren, Y. A universal method for the calibration of swing-scanning line structured light measurement system. Optik 241, 166930 (2021).

Wang, H., Wu, Z., He, Z., Gao, R. & Huang, H. Detection of HF-ERW process by 3D bead shape measurement with line-structured laser vision. IEEE Sens. J. 21, 7681–7690 (2021).

Mahmud, M., Joannic, D., Roy, M., Isheil, A. & Fontaine, J.-F. 3D part inspection path planning of a laser scanner with control on the uncertainty. Comput. Aided Des. 43, 345–355 (2011).

Van Gestel, N., Cuypers, S., Bleys, P. & Kruth, J.-P. A performance evaluation test for laser line scanners on CMMs. Opt. Lasers Eng. 47, 336–342 (2009).

Xie, Z., Wang, X. & Chi, S. Simultaneous calibration of the intrinsic and extrinsic parameters of structured-light sensors. Opt. Lasers Eng. 58, 9–18 (2014).

Yin, L., Wang, X. & Ni, Y. Flexible three-dimensional reconstruction via structured-light-based visual positioning and global optimization. Sensors 19, 1583 (2019).

Winkelbach, S., Molkenstruck, S. & Wahl, F. M. Low-cost laser range scanner and fast surface registration approach. in Pattern Recognition (eds Franke, K., Müller, K.-R., Nickolay, B. & Schäfer, R.) vol. 4174, 718–728 (Springer Berlin Heidelberg, 2006).

Apolinar Muñoz Rodríguez, J. Calibration modeling for mobile vision based laser imaging and approximation networks. J. Mod. Opt. 57, 1583–1597 (2010).

Apolinar Muñoz-Rodrı́guez, J., Asundi, A. & Rodríguez-Vera, R. Shape detection of moving objects based on a neural network of a light line. Opt. Commun. 221, 73–86 (2003).

Mun˜oz-Rodriguez, J. A. Direct object shape detection based on skeleton extraction of a light line. Opt. Eng. 39, 2463 (2000).

Muñoz-Rodríguez, J. A. & Rodríguez-Vera, R. Evaluation of the light line displacement location for object shape detection. J. Mod. Opt. 50, 137–154 (2003).

Rodríguez, J. A. M., Rodríguez-Vera, R., Asundi, A. & Campos, G. G. Shape detection using light line and Bezier approximation network. Imaging Sci. J. 55, 29–39 (2007).

Li, C., Xu, X., Sun, H., Miao, J. & Ren, Z. Coaxiality of stepped shaft measurement using the structured light vision. Math. Probl. Eng. 2021, e5575152 (2021).

Huang, W. & Kovacevic, R. Development of a real-time laser-based machine vision system to monitor and control welding processes. Int. J. Adv. Manuf. Technol. 63, 235–248 (2012).

Zhou, J., Pan, L., Li, Y., Liu, P. & Liu, L. Real-time stripe width computation using back propagation neural network for adaptive control of line structured light sensors. Sensors 20, 2618 (2020).

Li, Y., Zhou, J., Mao, Q., Jin, J. & Huang, F. Line structured light 3D sensing with synchronous color mapping. IEEE Sens. J. 20, 9796–9805 (2020).

Wu, F., Mao, J., Zhou, Y. & Qing, L. Three-line structured light measurement system and its application in ball diameter measurement. Optik 157, 222–229 (2018).

Miao, H., Xiao, C., Wei, M. & Li, Y. Efficient measurement of key-cap flatness for computer keyboards with a multi-line structured light imaging approach. IEEE Sens. J. 19, 10087–10098 (2019).

Li, W. 3D measurement system based on divergent multi-line structured light projection, its accuracy analysis. 15 (2021).

Xia, X., Wang, L., Wang, L., Zhang, Z. & Liu, X. High-efficiency flatness detection method for medium-thick steel plates based on multiline parallel laser vision. OE 62, 105101 (2023).

Gao, H., Zhou, F., Peng, B., Wang, Y. & Tan, H. 3D wide FOV scanning measurement system based on multiline structured-light sensors. Adv. Mech. Eng. 6, 758679 (2014).

Yang, S., Yang, T., Wu, G., Wu, Y. & Liu, F. Flexible and fast calibration method for uni-directional multi-line structured light system. Opt. Lasers Eng. 164, 107525 (2023).

Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 22, 1330–1334 (2000).

Sun, Q., Hou, Y., Tan, Q. & Li, G. A flexible calibration method using the planar target with a square pattern for line structured light vision system. PLoS ONE 9, e106911 (2014).

Bouguet, J.-Y. Pyramidal implementation of the Lucas Kanade feature tracker description of the algorithm. 9.

Steger, C. An unbiased detector of curvilinear structures. IEEE Trans. Pattern Anal. Mach.Intell. 20, 113–125 (1998).

Acknowledgements

The work described in this paper is supported by the Foundation of Science and Technology Department of Jilin Province under Grant No. YDZJ202201ZYTS531.

Author information

Authors and Affiliations

Contributions

The authors confirm contribution to the paper as follows: study conception and design: Q.S., M.W.; data collection: M.W., C.G.; analysis and interpretation of results: M.W., Z.R., W.D.; Figures drawing: M.W.; draft manuscript preparation: M.W., Q.S. All authors reviewed the results and approved the final version of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, M., Sun, Q., Gao, C. et al. A three-dimensional vision measurement method based on double-line combined structured light. Sci Rep 13, 18660 (2023). https://doi.org/10.1038/s41598-023-46176-y

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-46176-y

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.