Abstract

The detection of emotional facial expressions plays an indispensable role in social interaction. Psychological studies have shown that typically developing (TD) individuals more rapidly detect emotional expressions than neutral expressions. However, it remains unclear whether individuals with autistic phenotypes, such as autism spectrum disorder (ASD) and high levels of autistic traits (ATs), are impaired in this ability. We examined this by comparing TD and ASD individuals in Experiment 1 and individuals with low and high ATs in Experiment 2 using the visual search paradigm. Participants detected normal facial expressions of anger and happiness and their anti-expressions within crowds of neutral expressions. In Experiment 1, reaction times were shorter for normal angry expressions than for anti-expressions in both TD and ASD groups. This was also the case for normal happy expressions vs. anti-expressions in the TD group but not in the ASD group. Similarly, in Experiment 2, the detection of normal vs. anti-expressions was faster for angry expressions in both groups and for happy expressions in the low, but not high, ATs group. These results suggest that the detection of happy facial expressions is impaired in individuals with ASD and high ATs, which may contribute to their difficulty in creating and maintaining affiliative social relationships.


Introduction

The detection of emotional facial expressions is an initial stage in conscious facial expression processing. The appropriate detection of emotional facial expressions in other individuals plays an important role in effectively understanding their emotional states, regulating social behavior, and creating and maintaining social relationships.

Several psychological studies have shown that typically developing (TD) individuals detect emotional facial expressions more rapidly than neutral facial expressions when using the visual search paradigm with photographic stimuli of facial expressions1,2,3,4,5,6,7,8,9. Visual search is an ecologically valid experimental approach that mimics everyday situations in which one must find a target, and photographic facial expressions are more realistic than other types of stimuli, such as schematic drawings10. For example, Williams et al.3 conducted an experiment in which participants searched arrays of facial photographs for an expression that differed from the rest. The results showed that the reaction time (RT) for detecting a sad or happy expression target in a crowd of neutral face distractors was shorter than that for detecting a neutral face among a group of emotional faces. Several studies have shown that this rapid detection of emotional facial expressions depends on emotional significance rather than on visual features, such as the curved mouth of happy expressions5,6. For example, Sato and Yoshikawa5 presented normal emotional facial expressions (anger and happiness) and control stimuli, termed “anti-expressions”11, within crowds of neutral expressions. Anti-expressions were created by applying computer-morphing techniques to photographs of emotional facial expressions to implement visual changes equivalent to those between neutral and emotional facial expressions; however, the anti-expressions did not clearly show emotions and were most frequently labeled as neutral expressions in free responses11. This method allowed the researchers to determine whether rapid detection of emotional facial expressions was attributable to emotional significance or to visual features.
The results of the visual search experiment showed that RTs for detecting normal expressions of anger and happiness were shorter than those for detecting anti-expressions. These results indicate that emotional facial expressions are efficiently detected by TD individuals because of their emotional, rather than visual, characteristics.
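The logic of the anti-expression control can be illustrated geometrically. The sketch below is hypothetical and covers only one reading of the description above: it assumes facial feature points (landmarks) have already been placed on the neutral and emotional photographs, and that an anti-expression moves each landmark by the same displacement as the emotional expression but in the opposite direction relative to the neutral face. The function names and coordinates are illustrative, and the image-warping step performed by actual morphing software is not shown.

```python
from math import hypot
from typing import List, Tuple

Point = Tuple[float, float]


def anti_expression_landmarks(neutral: List[Point], emotional: List[Point]) -> List[Point]:
    """Return hypothetical anti-expression landmarks.

    Assumption: each landmark is displaced from its neutral position by the
    same vector as in the emotional expression, but with the sign reversed,
    i.e. anti = neutral - (emotional - neutral) = 2 * neutral - emotional.
    """
    if len(neutral) != len(emotional):
        raise ValueError("landmark lists must have the same length")
    return [(2 * nx - ex, 2 * ny - ey) for (nx, ny), (ex, ey) in zip(neutral, emotional)]


def total_displacement(a: List[Point], b: List[Point]) -> float:
    """Sum of Euclidean distances between corresponding landmarks of two faces."""
    return sum(hypot(ax - bx, ay - by) for (ax, ay), (bx, by) in zip(a, b))


# Hypothetical image coordinates (y increases downward): two inner brow points, one mouth corner.
neutral = [(30.0, 40.0), (70.0, 40.0), (40.0, 80.0)]
angry = [(32.0, 44.0), (68.0, 44.0), (41.0, 82.0)]  # brows drawn down and together

anti_angry = anti_expression_landmarks(neutral, angry)
print(anti_angry)  # [(28.0, 36.0), (72.0, 36.0), (39.0, 78.0)]
# The amount of physical change from neutral is identical; only its direction differs.
print(total_displacement(neutral, angry) == total_displacement(neutral, anti_angry))  # True
```

Under this assumption, normal and anti-expressions are matched in the magnitude of physical change from the neutral face, so any detection advantage for normal expressions cannot be explained by the amount of visual change alone.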

Autism spectrum disorder (ASD) is a neurodevelopmental disorder primarily characterized by impairments in social interaction12. One of the most obvious features of social impairment is the deficient communication involving emotional facial expressions13. Several experimental studies have reported that individuals with ASD showed atypical patterns in the recognition of emotional facial expressions14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73, although null findings were also reported74,75,76,77,78,79,80,81,82,83,84,85,86,87,88. Atypical patterns were also reported regarding several other types of facial expression processing, such as the perception of form89 and motion90 of dynamic facial expressions, facial mimicry91,92,93,94 and autonomic responses to facial expressions95,96,97,98, and attentional orienting by gaze with emotional facial expressions85,99. In addition, some studies reported that individuals with ASD are impaired in their ability to process subliminally presented facial expressions98,100,101. Taken together, even though findings are not entirely consistent, the data imply that individuals with ASD are impaired in various types of emotional expression processing, including the early stages of processing.

However, whether the rapid detection of emotional facial expressions is impaired in individuals with ASD remains unknown. Only a few studies have explored this issue using the visual search paradigm with photographs of facial expressions, as in studies of TD individuals, and they failed to show clear deficits in the performance of the ASD group102,103,104. For example, Ashwin et al.102 tested ASD and TD groups in a visual search experiment in which participants detected angry and happy facial expressions within crowds of neutral expressions. The results showed that the RTs for detecting angry expressions were shorter than those for detecting happy expressions in both the ASD and TD groups. However, because these studies only compared the detection of angry vs. happy expressions, it remains unclear whether the groups differ in their RTs for emotional vs. neutral facial expressions. Given the aforementioned evidence of impaired emotional expression processing in ASD groups, we hypothesized that the rapid detection of emotional vs. neutral facial expressions might also be impaired in individuals with ASD.

In addition, it remains to be seen whether the detection of emotional facial expressions is similarly affected in individuals from the general population who have high autistic traits (ATs). Several previous studies have shown that autistic phenotypes are continuously distributed as ATs in the general population, with clinically diagnosed ASD representing the upper extreme105,106,107,108. Several psychometric scales have been developed to measure ATs quantitatively and reliably, such as the autism-spectrum quotient (AQ)105. Previous research indicated that individuals with high ATs showed greater deficits than those with low ATs in various types of facial expression processing, including emotion recognition in facial expressions109, facial mimicry of emotional expressions110, and gaze-triggered attentional orienting by emotional expressions111. However, no study has yet investigated the effects of ATs on the detection of emotional facial expressions. Based on the above data showing similarly impaired facial expression processing in individuals with ASD and those with high ATs, we hypothesized that the rapid detection of emotional vs. neutral facial expressions would be impaired in individuals with high ATs.

In the present study, we tested these hypotheses by comparing ASD vs. TD individuals in Experiment 1 and non-clinical individuals with high vs. low ATs in Experiment 2 using the visual search paradigm (Fig. 1). We used facial expressions depicting anger and happiness as target stimuli presented within crowds of neutral expressions, as in several previous studies with TD individuals1,2,3,4,5,7,8,9. We also presented anti-expressions as targets, as in previous studies5,7,8,9. Because anti-expressions involved visual changes equivalent to those between neutral and normal emotional facial expressions but were less emotional and most frequently labeled as neutral11, they allowed an approximate comparison of emotional vs. neutral facial expressions while controlling for the effects of basic visual processing. We predicted that faster detection of normal vs. anti-expressions would be evident in the TD group but not in the ASD group in Experiment 1, and in the low ATs group but not in the high ATs group in Experiment 2. To confirm the emotional impact of normal expressions compared with anti-expressions, we additionally asked participants to rate the stimuli in terms of subjectively experienced valence and arousal112.

Results

Experiment 1

In Experiment 1, we compared individuals with high-functioning ASD and age- and sex-matched TD groups for the detection of normal vs. anti-expressions of anger and happiness.

RT

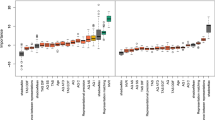

Fig. 2 shows the RT results for Experiment 1. An analysis of variance (ANOVA) with group (TD, ASD), stimulus type (normal, anti), and emotion (anger, happiness) as factors for log-transformed RTs revealed a significant three-way interaction, F(1,32) = 4.24, p = 0.048, ηp² = 0.115, indicating that the detection of normal vs. anti-expressions differed depending on the group and the emotion presented. In addition, the main effects of group and stimulus type were significant, F(1,32) = 5.81 and 69.89, p = 0.022 and p < 0.001, ηp² = 0.155 and 0.685, respectively, and the group × stimulus type and stimulus type × emotion interactions were marginally significant, F(1,32) = 3.41 and 3.27, p = 0.074 and 0.080, ηp² = 0.093 and 0.095, respectively. No other main effects or interactions were significant, F(1,32) < 0.26, p > 0.1, ηp² < 0.007.
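For readers who want to check effect sizes against the reported F statistics, partial eta squared can be recovered from an F value and its degrees of freedom with the standard conversion ηp² = (F × df_effect) / (F × df_effect + df_error). The sketch below applies it to three of the Experiment 1 RT effects; the helper name is ours. The recovered values agree with the reported ones to within about 0.002.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F statistic: (F * df_effect) / (F * df_effect + df_error)."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F values from the Experiment 1 RT ANOVA, each tested with df = (1, 32)
effects = {
    "group x stimulus type x emotion": 4.24,
    "group": 5.81,
    "stimulus type": 69.89,
}
for name, f in effects.items():
    print(f"{name}: partial eta squared = {partial_eta_squared(f, 1, 32):.3f}")
```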

Fig. 2. Mean (with SE) reaction times (RTs) in the typically developing (TD) and autism spectrum disorder (ASD) groups in Experiment 1. Asterisks indicate significant simple-simple main effects of stimulus type, indicating shorter RTs for normal expressions than for anti-expressions (***p < 0.001; n.s.: not significant).

Follow-up analyses were conducted to examine the three-way interaction. First, given our predictions, we investigated the simple-simple main effects of stimulus type. Significant effects, indicating shorter RTs for normal expressions than for anti-expressions, were found for angry expressions in both the TD and ASD groups, F(1,64) = 27.58 and 30.12, ps < 0.001, and for happy expressions in the TD group, F(1,64) = 27.62, p < 0.001, but not in the ASD group, F(1,64) = 2.56, p = 0.114. Exploratory analyses of the other effects showed that the simple-simple main effects of group were significant only for the normal angry, normal happy, and anti-angry conditions, indicating shorter RTs for the TD than for the ASD group, F(1,128) = 5.40, 9.15, and 5.40, p = 0.022, 0.003, and 0.022, respectively. The simple-simple main effects of emotion were significant only in the ASD group, for both normal and anti-expressions, indicating faster detection of normal angry vs. normal happy expressions and of anti-happy vs. anti-angry expressions, F(1,64) = 4.59 and 3.99, p = 0.036 and 0.049, respectively.

Rating

The results of the ratings in Experiment 1 are presented in Table 1. For the valence ratings, an ANOVA with group, stimulus type, and emotion as factors revealed a significant three-way interaction, F(1,32) = 11.27, p = 0.002, ηp² = 0.260. In addition, the main effects of stimulus type and emotion and the group × stimulus type, group × emotion, and stimulus type × emotion interactions were significant, F(1,32) = 18.12, 183.41, 8.49, 12.96, and 198.40, p < 0.001, p < 0.001, p = 0.007, p = 0.001, and p < 0.001, ηp² = 0.362, 0.851, 0.210, 0.288, and 0.861, respectively. The remaining main effect of group was not significant, F(1,32) = 0.62, p = 0.437, ηp² = 0.019.

Follow-up simple effect analyses were conducted for the three-way interaction. Significant simple-simple main effects of stimulus type were found for both emotions in both groups, indicating more negative ratings for normal angry expressions and more positive ratings for normal happy expressions than for the corresponding anti-expressions in both the TD and ASD groups, F(1,64) = 74.49, 176.49, 39.73, and 51.49, ps < 0.001. The simple-simple main effects of group were significant only for normal expressions of anger and happiness, indicating more negative ratings for normal angry expressions and more positive ratings for normal happy expressions in the TD than in the ASD group, F(1,128) = 4.87 and 24.88, p = 0.029 and p < 0.001, respectively. The simple-simple main effects of emotion were significant for both stimulus types in both groups, indicating more negative ratings for angry than for happy expressions among normal expressions and the reverse among anti-expressions in both the TD and ASD groups, F(1,64) = 289.18, 11.81, 104.48, and 5.53, p < 0.001, p = 0.001, p < 0.001, and p = 0.022, respectively.

To compare the valence ratings of normal and anti-expressions of anger and happiness with those of neutral expressions, multiple comparisons with the Bonferroni correction were conducted. Normal angry expressions were rated as significantly more negative, and normal happy expressions as significantly more positive, than neutral expressions in both the TD and ASD groups, t(16) = 17.25, 12.78, 5.85, and 5.57, ps < 0.001, r = 0.974, 0.976, 0.825, and 0.812, respectively. Anti-angry expressions did not differ significantly from neutral expressions in either the TD or the ASD group, t(16) = 0.00 and 0.14, p = 1.000 and 1.000, r = 0.000 and 0.035, respectively, whereas anti-happy expressions were rated as significantly more negative than neutral expressions in both the TD and ASD groups, t(16) = 5.37 and 2.93, p < 0.001 and p = 0.040, r = 0.802 and 0.591, respectively.
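The effect size r reported alongside each t test is consistent with the common conversion r = √(t² / (t² + df)). The short sketch below (helper name ours) reproduces the r values reported above for four of the Experiment 1 valence comparisons, each with df = 16.

```python
from math import sqrt


def r_from_t(t_value: float, df: int) -> float:
    """Effect size r for a t test: sqrt(t^2 / (t^2 + df))."""
    return sqrt(t_value ** 2 / (t_value ** 2 + df))


# Valence comparisons vs. neutral in Experiment 1, all with df = 16
for t in (17.25, 5.85, 5.37, 2.93):
    print(f"t(16) = {t:.2f} -> r = {r_from_t(t, 16):.3f}")
```

Because r depends only on t and df, the same helper can be applied to any of the t(16), t(17), or t(19) comparisons in this section.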

For the arousal ratings, a three-way ANOVA revealed a significant group × stimulus type interaction, F(1,32) = 5.97, p = 0.020, ηp² = 0.157. The main effects of group and stimulus type were also significant, F(1,32) = 4.7 and 72.15, p = 0.038 and p < 0.001, ηp² = 0.128 and 0.693, respectively. No other main effects or interactions were significant, F(1,32) < 2.68, p > 0.1, ηp² < 0.078.

Follow-up analyses of the two-way interaction revealed significant simple main effects of stimulus type, indicating higher arousal ratings for normal than for anti-expressions in both the TD and ASD groups, F(1,32) = 59.80 and 18.31, ps < 0.001. The simple main effect of group was also significant for normal expressions, indicating higher arousal ratings for normal expressions in the TD than in the ASD group, F(1,64) = 10.48, p = 0.002.

Bonferroni-corrected multiple comparisons contrasting normal and anti-expressions of anger and happiness with neutral expressions showed that normal expressions of anger and happiness elicited significantly higher arousal than neutral expressions in both the TD and ASD groups, t(16) = 7.26, 8.55, 4.74, and 4.53, p < 0.001, p < 0.001, p = 0.001, and p = 0.001, r = 0.974, 0.976, 0.825, and 0.812, respectively. Anti-angry expressions were rated as eliciting significantly higher arousal than neutral expressions in both the TD and ASD groups, t(16) = 5.29 and 3.20, p = 0.005 and 0.022, r = 0.798 and 0.625, respectively. Anti-happy expressions did not differ significantly from neutral expressions in either the TD or the ASD group, t(16) = 2.59 and 1.36, p = 0.152 and 0.775, r = 0.544 and 0.322, respectively.
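The Bonferroni correction multiplies each uncorrected p-value by the number of comparisons in the family and caps the result at 1, which is why several corrected values above are reported as p = 1.000. The sketch below is a minimal illustration; the raw p-values are hypothetical, and a family of four comparisons per group (each of the four target types vs. neutral) is our assumption, since the family size is not stated in this section.

```python
from typing import List, Optional


def bonferroni(p_values: List[float], n_comparisons: Optional[int] = None) -> List[float]:
    """Bonferroni adjustment: multiply each raw p by the number of comparisons, capping at 1."""
    m = len(p_values) if n_comparisons is None else n_comparisons
    return [min(1.0, p * m) for p in p_values]


# Hypothetical uncorrected p-values for four comparisons against neutral expressions
raw_p = [0.0004, 0.0015, 0.30, 0.04]
print(bonferroni(raw_p))  # approximately [0.0016, 0.006, 1.0, 0.16]
```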

Experiment 2

In Experiment 2, we compared non-clinical individuals with high vs. low ATs, divided by a median split of their AQ scores, in terms of the detection of normal vs. anti-expressions of anger and happiness.
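A median split assigns participants to the low or high group according to whether their score falls above the sample median. The sketch below is illustrative only: the participant IDs and AQ scores are hypothetical, and placing scores equal to the median in the low group is our assumption, since the tie rule is not described here. As the example shows, ties at the cutoff can make the two groups unequal in size.

```python
from statistics import median
from typing import Dict, List, Tuple


def median_split(aq_scores: Dict[str, int]) -> Tuple[List[str], List[str]]:
    """Split participants into low/high groups at the median AQ score.

    Assumption: scores equal to the median are assigned to the low group.
    """
    cutoff = median(aq_scores.values())
    low = [pid for pid, score in aq_scores.items() if score <= cutoff]
    high = [pid for pid, score in aq_scores.items() if score > cutoff]
    return low, high


# Hypothetical AQ scores
scores = {"p01": 12, "p02": 25, "p03": 19, "p04": 19, "p05": 31, "p06": 8}
low, high = median_split(scores)
print("low ATs:", low)    # low ATs: ['p01', 'p03', 'p04', 'p06']
print("high ATs:", high)  # high ATs: ['p02', 'p05']
```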

RT

Fig. 3 shows the RT results for Experiment 2. As in Experiment 1, an ANOVA with group, stimulus type, and emotion as factors for log-transformed RTs revealed a significant three-way interaction, F(1,36) = 4.83, p = 0.035, ηp² = 0.118. The main effect of stimulus type and the stimulus type × emotion interaction were also significant, F(1,36) = 47.73 and 17.76, ps < 0.001, ηp² = 0.543 and 0.330, respectively. No other main effects or interactions were significant, F(1,36) < 1.85, p > 0.1, ηp² < 0.050.
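The three-way interaction can be read as a difference of differences on log RTs. For each group and emotion, the normal-expression advantage is log RT(anti) minus log RT(normal). The emotion difference is that advantage for anger minus that for happiness, and the three-way contrast compares this emotion difference between groups. The sketch below computes these descriptive quantities from hypothetical trial RTs. It assumes that log-transformation is applied to individual trial RTs before averaging, which this section does not specify. It does not perform the ANOVA, which additionally tests such contrasts against between-participant error variance.

```python
from math import log
from statistics import mean
from typing import Dict, List, Tuple

Cell = Tuple[str, str, str]  # (group, stimulus type, emotion)


def log_mean(rts_ms: List[float]) -> float:
    """Mean of natural-log-transformed RTs for one design cell."""
    return mean([log(rt) for rt in rts_ms])


def detection_advantage(cells: Dict[Cell, float], group: str, emotion: str) -> float:
    """log RT(anti) - log RT(normal); positive means normal expressions were found faster."""
    return cells[(group, "anti", emotion)] - cells[(group, "normal", emotion)]


def emotion_difference(cells: Dict[Cell, float], group: str) -> float:
    """Normal-expression advantage for anger minus that for happiness, within one group."""
    return detection_advantage(cells, group, "anger") - detection_advantage(cells, group, "happiness")


def three_way_contrast(cells: Dict[Cell, float], group_a: str = "low", group_b: str = "high") -> float:
    """Between-group difference of the emotion difference (a difference of differences)."""
    return emotion_difference(cells, group_a) - emotion_difference(cells, group_b)


# Hypothetical trial RTs (ms); in the high group, happy targets show no normal-expression advantage.
raw_rts: Dict[Cell, List[float]] = {
    ("low", "normal", "anger"): [700, 720, 690],
    ("low", "anti", "anger"): [800, 820, 790],
    ("low", "normal", "happiness"): [740, 760, 730],
    ("low", "anti", "happiness"): [800, 810, 790],
    ("high", "normal", "anger"): [710, 700, 720],
    ("high", "anti", "anger"): [830, 820, 840],
    ("high", "normal", "happiness"): [800, 790, 810],
    ("high", "anti", "happiness"): [800, 790, 810],
}
cells = {cell: log_mean(rts) for cell, rts in raw_rts.items()}

for group in ("low", "high"):
    for emotion in ("anger", "happiness"):
        print(group, emotion, round(detection_advantage(cells, group, emotion), 3))
print("three-way contrast:", round(three_way_contrast(cells), 3))
```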

Fig. 3. Mean (with SE) reaction times (RTs) in the low and high autistic traits (ATs) groups in Experiment 2. Asterisks indicate significant simple-simple main effects of stimulus type, indicating shorter RTs for normal expressions than for anti-expressions (***p < 0.001; *p < 0.05; n.s.: not significant).

Follow-up analyses were conducted for the three-way interaction. First, the simple-simple main effects of stimulus type were significant, indicating shorter RTs for normal expressions than for anti-expressions, for angry expressions in both the low and high ATs groups, F(1,72) = 18.53 and 39.74, p = 0.001 and p < 0.001, respectively, and for happy expressions in the low ATs group, F(1,72) = 4.75, p = 0.033, but not in the high ATs group, F(1,72) = 0.20, p = 0.655. Exploratory analyses of the other effects revealed no significant simple-simple main effect of group, F(1,144) < 1.21, p > 0.1. The simple-simple main effects of emotion were significant for normal expressions in both the low and high ATs groups, indicating faster detection of angry than happy expressions, F(1,72) = 5.39 and 12.42, p = 0.024 and 0.001, respectively, and for anti-expressions in the high ATs group, indicating faster detection of anti-happy than anti-angry expressions, F(1,72) = 12.32, p = 0.001.

Rating

The results of the ratings from Experiment 2 are presented in Table 2. For the valence ratings, an ANOVA with group, stimulus type, and emotion as factors revealed a significant stimulus type × emotion interaction, F(1,36) = 89.25, p < 0.001, ηp² = 0.713. The main effects of stimulus type and emotion were also significant, F(1,36) = 10.19 and 62.32, p = 0.003 and p < 0.001, ηp² = 0.221 and 0.634, respectively. The main effect of group, indicating higher ratings in the low than in the high ATs group, was also significant, F(1,36) = 5.91, p = 0.020, ηp² = 0.141. No other interactions were significant, F(1,36) < 0.68, p > 0.1, ηp² < 0.019.

Follow-up analyses of the stimulus type × emotion interaction revealed significant simple main effects of stimulus type for both anger and happiness, indicating more negative ratings for normal than for anti-angry expressions and more positive ratings for normal than for anti-happy expressions, F(1,72) = 150.96 and 8.70, p < 0.001 and p = 0.004, respectively. The simple main effects of emotion were also significant for both normal and anti-expressions, indicating more negative ratings for angry than for happy expressions among normal expressions and the reverse among anti-expressions, F(1,72) = 32.96 and 90.44, ps < 0.001.

Bonferroni-corrected multiple comparisons contrasting the valence ratings of normal and anti-expressions of anger and happiness with those of neutral expressions revealed that normal angry expressions were rated as significantly more negative, and normal happy expressions as significantly more positive, than neutral expressions in the low ATs group, t(17) = 4.59 and 2.80, ps < 0.001, r = 0.744 and 0.562, respectively, and in the high ATs group, t(19) = 6.31 and 4.69, p < 0.001 and p = 0.001, r = 0.823 and 0.732, respectively. Anti-angry expressions did not differ significantly from neutral expressions in either the low or the high ATs group, t(17) = 0.38, p = 1.000, r = 0.092 and t(19) = 1.82, p = 0.336, r = 0.385, respectively. Anti-happy expressions were rated as significantly more negative than neutral expressions in both the low and high ATs groups, t(17) = 4.24, p = 0.002, r = 0.717 and t(19) = 4.77, p = 0.001, r = 0.738, respectively.

For the arousal ratings, a three-way ANOVA revealed a significant main effect of stimulus type, indicating higher arousal ratings for normal than for anti-expressions, F(1,36) = 57.41, p < 0.001, ηp² = 0.615, and a marginally significant main effect of group, F(1,36) = 3.47, p = 0.071, ηp² = 0.088. No other main effects or interactions were significant, F(1,36) < 0.80, p > 0.1, ηp² < 0.023.

Bonferroni-corrected multiple comparisons for the arousal ratings revealed that normal angry and happy expressions elicited significantly higher arousal than neutral expressions in the low ATs group, t(17) = 3.74 and 3.50, p = 0.007 and 0.012, r = 0.672 and 0.647, respectively, and in the high ATs group, t(19) = 4.55 and 7.48, p = 0.001 and p < 0.001, r = 0.722 and 0.864, respectively. Neither anti-angry nor anti-happy expressions differed significantly from neutral expressions in the low ATs group, t(17) = 0.81 and 1.93, p = 1.000 and 0.280, r = 0.193 and 0.424, respectively, or in the high ATs group, t(19) = 1.24 and 1.61, p = 0.920 and 0.500, r = 0.274 and 0.346, respectively.

Discussion

Our results in the TD group in Experiment 1 and the low ATs group in Experiment 2 consistently showed that normal facial expressions of anger and happiness were detected faster than the corresponding anti-expressions. The subjective emotional rating data confirmed that normal expressions of anger and happiness were more emotionally significant in terms of the valence and arousal dimensions than the corresponding anti-expressions and neutral expressions. Although anti-expressions were not completely equivalent to neutral expressions, the responses they elicited were restricted to either the valence or the arousal dimension and did not satisfy the criteria for normal emotional elicitation, which requires both dimensions113. These results are consistent with several previous studies5,7,8,9 and indicate the existence of psychological mechanisms for the efficient detection of emotional facial expressions in TD individuals. The faster detection of normal angry vs. normal happy expressions was evident in Experiment 2 but not in Experiment 1. These inconsistent results are understandable, given previous studies reporting positive5,8,9 and null7 findings using similar paradigms. Differences between emotions may be less robust, and more susceptible to other factors such as individual differences8,9, than the difference between normal and anti-expressions.

More importantly, our results showed that normal happy expressions were not detected faster than anti-happy expressions in the ASD group. In contrast, the ASD group detected normal angry expressions faster than both anti-angry expressions and normal happy expressions. The faster detection of angry than happy expressions is consistent with the results of previous studies using the visual search paradigm with angry and happy facial expressions in ASD groups102,103,104. However, these studies did not compare detection performance for emotional and neutral facial expressions. The finding of impaired processing of happy facial expressions in the ASD group supports previous findings that show abnormal patterns in emotion recognition64,67,71, motion perception90, and facial92,93,94 and autonomic97 responses during the processing of happy facial expressions in ASD individuals. However, none of these studies tested the detection of emotional facial expressions. Although impairment in happy expression processing was not consistently shown in previous studies, discrepant results may be explained by methodological differences, such as the ceiling effect for happy expressions in standard emotion recognition tasks56,69. To the best of our knowledge, this is the first study reporting impaired rapid detection of happy facial expressions in individuals with ASD.

In Experiment 2, as in the ASD group in Experiment 1, normal happy expressions were not detected faster than anti-happy expressions in the high ATs group. This impaired processing of happy facial expressions is consistent with previous findings that individuals with high ATs show weaker gaze-triggered attentional shifts111 and facial mimicry110 during the processing of happy facial expressions than those with low ATs. The similar patterns of impaired detection of happy expressions in individuals with ASD and high ATs are in line with findings that ATs are continuously distributed in the general population, extending into clinically diagnosed ASD105,106,107,108. Extending these previous studies, our results provide new evidence that the impaired rapid detection of happy facial expressions found in individuals with ASD is shared by individuals from the general population with high ATs.

The subjective emotional ratings in Experiment 1 showed less extreme valence ratings and weaker arousal ratings for normal expressions in the ASD group than in the TD group. This result is in line with previous findings of weak facial91,92,94 and autonomic57,95,96,97,98 emotional reactions to facial expressions in individuals with ASD. Interestingly, the typical and atypical RT patterns for angry and happy expressions, respectively, were not evident in the subjective emotional ratings of individuals with ASD or high ATs. This suggests that the rapid detection of, and subjective emotional responses to, emotional facial expressions may rely on partly dissociable psychological mechanisms in individuals with ASD and high ATs.

Although our results showing impaired rapid detection of happy, but not angry, facial expressions in individuals with ASD and high ATs were only partly consistent with our hypotheses based on previous findings in the ASD and ATs literature, the results fit with studies reporting the effects of oxytocin on the rapid processing of emotional facial expressions. Oxytocin, a neuropeptide that acts as a neurotransmitter in distributed brain regions, has been shown to be reduced in individuals with ASD114,115,116,117, and its administration has been shown to improve their social cognition of faces118,119. Some previous studies tested the intranasal administration of oxytocin in TD individuals using the backward masking paradigm120, dot-probe paradigm121, and rapid serial visual presentation paradigm122 and found that oxytocin facilitated the rapid processing of happy facial expressions. One study showed that this effect was more evident in individuals with high ATs122. By contrast, some studies reported that oxytocin had an adverse effect on the rapid processing of negative facial expressions123,124. These data suggest that the present findings of impaired detection of happy facial expressions in individuals with ASD and high ATs may be related to their low levels of oxytocin. It is also possible that the rapid detection of angry expressions depends on neural circuits that do not rely on oxytocin and thus remain intact in autistic phenotypes.

What are the implications of results showing impaired rapid detection of happy facial expressions in individuals with ASD and high ATs? From the perspective of social psychology, happy facial expressions signal positive emotional states125 and affiliative intentions126,127. From the perspective of cognitive psychology, the detection of facial expressions is the first stage in conscious facial expression processing, which allows subsequent processes, such as the recognition of emotions and elicitation of interactive behaviors. The appropriate detection of facial expressions is critical, because human information-processing resources are limited and conscious processing is only available for stimuli from a central visual field128. Together, the psychological mechanisms for detecting happy facial expressions enable the efficient processing of important social stimuli from peripheral visual fields and promote interactive behaviors crucial to creating and maintaining affiliative relationships. Our results suggest that individuals with ASD and high ATs are impaired in this important initial processing stage. Several case reports have shown that individuals with ASD and high ATs have impaired affiliative relationships in real life (e.g., romantic partnerships and marriage)129,130,131,132. Our results suggest that these problems may be at least partially attributable to the impaired detection of happy expressions.

Several limitations of this study should be acknowledged. First, we tested adult participants only. The developmental trajectory of this phenomenon therefore remains untested. Previous studies have suggested the abnormal development of facial expression processing in individuals with ASD56,63,104. It may be that analogous atypical age-related changes appear in the impaired detection of happy expressions in individuals with ASD. Because even newborn infants show a preference for happy facial expressions133, the impaired detection of happy facial expressions may be one of the primary problems in ASD. If so, a promising direction for further research would be to elucidate how this problem develops.

Second, our sample was small; hence, the results should be interpreted cautiously. Although we did not find impaired detection of angry facial expressions in the ASD group compared with the TD group, this null group difference may be attributable to a lack of statistical power. In fact, several studies have found impairments in processes such as facial mimicry91,93,94 and autonomic arousal95 for angry expressions in ASD. Future studies with larger ASD samples may reveal impaired detection of angry, as well as happy, facial expressions in individuals with ASD.

In summary, our experiments using the visual search paradigm with photographic stimuli for normal and anti-expressions of anger and happiness showed that RTs for normal vs. anti-expressions of happiness were shorter in the TD group but not in the ASD group. Similarly, detection of normal vs. anti-expressions of happiness was faster in the low ATs group but not in the high ATs group. These results suggest that the rapid detection of happy facial expressions is impaired in individuals with ASD and high ATs. We speculate that this impairment may contribute to their difficulties in creating and maintaining affiliative social relationships.

Methods

Experiment 1

Participants

The ASD group consisted of 17 adults with ASD (5 females and 12 males; mean ± SD age = 26.9 ± 5.5 years). The diagnosis was made using the Diagnostic and Statistical Manual-IV-Text Revision134 via a stringent procedure in which every item of the ASD diagnostic criteria was investigated in interviews with participants and their parents (and professionals who helped them, if any) by at least two psychiatrists with expertise in developmental disorders. Only participants who met at least one of the four social impairment items (i.e., impairment in nonverbal communication including lack of joint attention, sharing interest, relationship with peers, and emotional and interpersonal mutuality) without satisfying any of the autistic disorder criteria, such as language delay, were included. Comprehensive interviews were administered to obtain information on the participants’ developmental histories for diagnostic purposes. Neurological and psychiatric issues other than those associated with ASD were ruled out. Participants were taking no medication. Full-scale intelligence quotients (IQs), measured by the Wechsler Adult Intelligence Scale, third edition (WAIS-III) (Nihon Bunka Kagakusha, Tokyo, Japan), of all participants in the ASD group fell within the normal range (mean ± SD = 112.8 ± 15.3; range, 86–134).

The TD group consisted of 17 adults (5 females and 12 males; mean ± SD age = 26.5 ± 4.8 years) recruited through personal contacts. The TD group was matched with the ASD group for age, t(32) = 0.02, p = 0.718, and sex, χ²(1) = 0.00, p = 1.000.

All participants were right-handed, as assessed by the Edinburgh Handedness Inventory135, and had normal or corrected-to-normal visual acuity. After the procedures were fully explained, all participants gave their informed consent to participate. This experiment was approved by the local ethics committee of the Primate Research Institute, Kyoto University, and was conducted in accordance with institutional ethical provisions and the Declaration of Helsinki.

Experimental design

The experiment was constructed as a three-factor mixed design, with group (TD, ASD) as a between-participant factor and stimulus type (normal, anti-expression) and emotion (anger, happiness) as within-participant factors.

Apparatus

The events were controlled by Presentation 14.9 (Neurobehavioral Systems, San Francisco, CA) implemented on a Windows computer (HP Z200 SFF, Hewlett-Packard Company, Tokyo, Japan). The stimuli were presented on a 19-inch CRT monitor (HM903D-A, Iiyama, Tokyo, Japan) with a refresh rate of 150 Hz and a resolution of 1024 × 768 pixels. The refresh rate was confirmed using a high-speed camera (EXILIM FH100, Casio, Tokyo, Japan) with a temporal resolution of 1000 frames/s. The responses were obtained using a response box (RB-530, Cedrus, San Pedro, CA), which offers 2–3 ms RT resolution.
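At the reported 150 Hz refresh rate, each frame lasts 1000/150 ≈ 6.67 ms, so stimulus durations on this display are quantized in steps of that size. The sketch below is our illustration, not part of the authors' software, and the function name is hypothetical. It converts a nominal duration into a whole number of refresh cycles and shows that the 500 ms fixation period described in the Procedure corresponds to exactly 75 frames.

```python
from fractions import Fraction

REFRESH_HZ = 150  # refresh rate reported for the CRT monitor


def frames_for_duration(duration_ms):
    """Number of refresh cycles spanned by duration_ms at REFRESH_HZ.

    Raises ValueError when the duration is not a whole number of frames,
    i.e. when it cannot be shown exactly on this display.
    """
    frames = Fraction(duration_ms) * REFRESH_HZ / 1000
    if frames.denominator != 1:
        raise ValueError(f"{duration_ms} ms is not a whole number of frames at {REFRESH_HZ} Hz")
    return frames.numerator


print(f"frame period: {1000 / REFRESH_HZ:.2f} ms")            # frame period: 6.67 ms
print(f"500 ms fixation: {frames_for_duration(500)} frames")  # 500 ms fixation: 75 frames
```

Exact rational arithmetic (`Fraction`) avoids floating-point rounding when deciding whether a duration fits the frame grid.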

Stimuli

The photographs of normal and anti-expressions of anger and happiness were used as target stimuli, and the photographs of neutral expressions were used as distractor stimuli. The schematic illustrations of the stimuli are presented in Fig. 1. The stimuli were identical to those used in previous studies5,7,8,9. Each individual face subtended a visual angle of 1.8° horizontally × 2.5° vertically.
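The face sizes are reported only in degrees of visual angle. The sketch below assumes the 80 cm viewing distance given in the Procedure and a stimulus centred on the line of sight. Under those assumptions, the standard relation s = 2d·tan(θ/2) converts these angles into physical size, giving roughly 2.5 cm × 3.5 cm per face. These sizes are our derived illustration, not values reported by the study. Converting further to pixels would require the visible width of the monitor, which is not reported.

```python
import math

VIEWING_DISTANCE_CM = 80.0  # viewing distance given in the Procedure section


def size_from_visual_angle(angle_deg, distance_cm=VIEWING_DISTANCE_CM):
    """Physical extent (cm) subtending angle_deg at distance_cm: s = 2 d tan(theta / 2)."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


def visual_angle_from_size(size_cm, distance_cm=VIEWING_DISTANCE_CM):
    """Inverse relation: theta = 2 atan(s / (2 d)), returned in degrees."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


for label, angle in [("face width", 1.8), ("face height", 2.5)]:
    print(f"{label}: {angle} deg -> {size_from_visual_angle(angle):.2f} cm")
# face width: 1.8 deg -> 2.51 cm
# face height: 2.5 deg -> 3.49 cm
```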

Normal expressions were gray-scale photographs depicting angry, happy, and neutral expressions of a female (PF) and male (PE) model chosen from a facial expression database136. Neither model was familiar to any of the participants. No expression showed bared teeth.

Anti-expressions were created from these photographs using computer-morphing software (FUTON System, ATR, Soraku, Japan). First, the coordinates of 79 facial feature points were identified manually and realigned based on the coordinates of both irises. Next, the displacement of each feature point from the neutral to the emotional (angry or happy) expression was calculated. The feature points of the anti-expression were then obtained by moving each point of the neutral face by the same distance but in the opposite direction. Minor color adjustments of a few pixels were made using Photoshop 5.0 (Adobe, San Jose, CA). For a different use of “anti-expressions”, see Skinner and Benton6,137.
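The geometric core of the anti-expression procedure reflects each emotional feature point through its neutral counterpart: anti = neutral − (emotional − neutral) = 2·neutral − emotional. The sketch below illustrates only this point arithmetic on toy coordinates. It does not reproduce the pixel warping performed by the FUTON System. The helper names are hypothetical. The iris-based realignment is also an assumption: we model it as the similarity transform (rotation, uniform scale, translation) that maps the emotional face's two iris centres onto the neutral face's, because the exact realignment is not specified.

```python
def align_to_irises(points, irises, target_irises):
    """Map (x, y) points with the similarity transform that carries the two iris
    centres `irises` onto `target_irises` (assumed form of the realignment)."""
    p1, p2 = (complex(x, y) for x, y in irises)
    q1, q2 = (complex(x, y) for x, y in target_irises)
    if p1 == p2:
        raise ValueError("iris centres must be distinct")
    scale_rotation = (q2 - q1) / (p2 - p1)  # complex factor = rotation + uniform scaling
    shift = q1 - scale_rotation * p1
    mapped = (scale_rotation * complex(x, y) + shift for x, y in points)
    return [(z.real, z.imag) for z in mapped]


def anti_expression_points(neutral, emotional):
    """Reflect each emotional feature point through its neutral counterpart:
    anti = neutral - (emotional - neutral) = 2 * neutral - emotional."""
    if len(neutral) != len(emotional):
        raise ValueError("neutral and emotional faces need the same feature points")
    return [(2 * nx - ex, 2 * ny - ey) for (nx, ny), (ex, ey) in zip(neutral, emotional)]


# Toy example with three points (the study used 79): two irises, one mouth corner.
# Image coordinates, y increasing downwards; all values are hypothetical.
neutral = [(30.0, 40.0), (70.0, 40.0), (40.0, 80.0)]
happy = [(30.0, 40.0), (70.0, 40.0), (37.0, 76.0)]  # mouth corner pulled outward and up
print(anti_expression_points(neutral, happy))
# [(30.0, 40.0), (70.0, 40.0), (43.0, 84.0)]

# A photo taken 10% larger and shifted is first realigned on the neutral irises.
happy_raw = [(35.0, 46.0), (79.0, 46.0), (42.7, 85.6)]
happy_aligned = align_to_irises(happy_raw, happy_raw[:2], neutral[:2])
```

In the example, the happy mouth corner moves up and outward, so the anti-expression moves it down and inward by the same amount. The anti-expression therefore matches the emotional face in the magnitude of its visual change but not in its direction.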

Two types of adjustments were made to the stimuli using Photoshop 5.0. First, the photographs were cropped into a circle slightly inside the frame of the face to eliminate contours and hairstyles irrelevant to the expression. Second, contrast was adjusted so that the photographs did not differ significantly in contrast, thereby removing possible identifying information.
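The text does not say how contrast was equated. The sketch below assumes one common operationalisation, RMS contrast (the standard deviation of grey levels inside the face region), and uses NumPy. It illustrates the two steps described above, a circular crop and contrast matching. It is not the Photoshop procedure the authors used. The mask radius, target mean, target RMS contrast and background level are all hypothetical values.

```python
import numpy as np


def circular_mask(height, width, radius_fraction=0.95):
    """Boolean mask of a centred disc; radius_fraction < 1 keeps the circle
    slightly inside the image frame (0.95 is a hypothetical value)."""
    yy, xx = np.mgrid[0:height, 0:width]
    cy, cx = (height - 1) / 2.0, (width - 1) / 2.0
    radius = radius_fraction * min(height, width) / 2.0
    return (yy - cy) ** 2 + (xx - cx) ** 2 <= radius ** 2


def crop_and_match_contrast(image, mask, target_mean=128.0, target_rms=30.0, background=128.0):
    """Keep only pixels inside `mask`, rescale them to a common mean grey level and
    RMS contrast, and set pixels outside the mask to `background`.
    Values are not clipped, so targets should stay well inside 0-255."""
    img = np.asarray(image, dtype=float)
    face = img[mask]
    sd = face.std()
    if sd == 0:
        raise ValueError("face region has no contrast to normalise")
    out = np.full(img.shape, background, dtype=float)
    out[mask] = target_mean + target_rms * (face - face.mean()) / sd
    return out


rng = np.random.default_rng(0)
photo = rng.integers(0, 256, size=(120, 90))  # stand-in for a grey-scale face photo
mask = circular_mask(*photo.shape)
matched = crop_and_match_contrast(photo, mask)
print(round(matched[mask].mean(), 6), round(matched[mask].std(), 6))  # 128.0 30.0
```

Applying the same targets to every photograph equates mean luminance and RMS contrast exactly, which is stricter than "no significant difference". A looser criterion would also be compatible with the description.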

We prepared eight positions, separated by 45° and arranged in a circle (10.0° × 10.0°), for the presentation of stimulus faces. Stimuli occupied four of the eight positions, two on the left and two on the right side. A schematic illustration of one such stimulus display is presented in Fig. 1. Each combination of four positions was presented an equal number of times. In the target-present trials, the position of the target stimulus was chosen randomly, with the constraint that the target appeared on the left side in half of the trials and on the right side in the other half. In the target-absent trials, all four faces were identical and depicted neutral expressions.
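The layout constraints above can be enumerated directly. Choosing two of the four left positions and two of the four right positions gives C(4, 2) × C(4, 2) = 36 balanced layouts. The 432 trials would therefore allow each layout to appear 12 times, although the text does not state that this is how the balancing was done. The sketch below assumes that the positions lie at 22.5° + k·45°, so that none falls on the vertical midline; the exact angular offset is not reported. All names are hypothetical.

```python
import itertools
import math
import random

N_POSITIONS = 8
STEP_DEG = 45.0
OFFSET_DEG = 22.5  # assumption: positions at 22.5 deg + k * 45 deg, none on the midline


def is_left(position):
    """True if the position lies left of fixation (angles counter-clockwise from rightward)."""
    angle = math.radians(OFFSET_DEG + STEP_DEG * position)
    return math.cos(angle) < 0


LEFT = [p for p in range(N_POSITIONS) if is_left(p)]
RIGHT = [p for p in range(N_POSITIONS) if not is_left(p)]


def balanced_layouts():
    """Every set of four occupied positions with two faces on each side of fixation."""
    return [tuple(sorted(left + right))
            for left in itertools.combinations(LEFT, 2)
            for right in itertools.combinations(RIGHT, 2)]


def choose_target(layout, side, rng):
    """Random target position among the occupied positions on the requested side."""
    candidates = [p for p in layout if is_left(p) == (side == "left")]
    return rng.choice(candidates)


layouts = balanced_layouts()
print(len(layouts))  # 36
rng = random.Random(0)
target = choose_target(layouts[0], "right", rng)
```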

Procedure

The experiment was conducted in an electrically shielded, soundproofed room (Science Cabin, Takahashi Kensetsu, Tokyo, Japan). Participants sat with their chins fixed in a stable position 80 cm from the monitor and were asked to keep their gaze on the fixation cross (0.86° × 0.86°) at the center of the display whenever it was presented. Before the experiment, participants completed 20 practice trials to become familiar with the apparatus.

The experiment consisted of 432 trials presented in six blocks of 72 trials, with equal numbers of target-present and target-absent trials. The trial order was pseudo-randomized. In each trial, a fixation cross was presented for 500 ms, followed by the stimulus array of four faces, which remained on the screen until participants responded. Participants were asked to indicate, as quickly and as accurately as possible, whether all four faces were the same or one face was different by pressing the appropriate button on the response box with their left or right index finger. The assignment of responses to buttons was counterbalanced across participants.
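The trial counts above fit together as follows: 432 trials comprise 216 target-present and 216 target-absent trials, or 36 of each per 72-trial block. The authors' pseudo-randomization constraints are not reported. The sketch below therefore assumes a simple scheme with four further properties. Target-present trials are split equally across the four target conditions (54 each). Each condition is spread evenly over the six blocks (9 per block). The target is on the left in half of each condition's trials. Trials are shuffled within blocks. Allocation to the two stimulus models is omitted. The trial structure and all names are hypothetical.

```python
import random
from collections import Counter

N_TRIALS = 432
N_BLOCKS = 6
# Target-present conditions: stimulus type x emotion.
CONDITIONS = [("normal", "anger"), ("normal", "happiness"), ("anti", "anger"), ("anti", "happiness")]


def build_blocks(seed=None):
    """Return N_BLOCKS shuffled blocks of trial dicts under the assumptions stated above."""
    rng = random.Random(seed)
    block_size = N_TRIALS // N_BLOCKS              # 72
    n_present = N_TRIALS // 2                      # 216 target-present trials
    per_condition = n_present // len(CONDITIONS)   # 54
    per_block = per_condition // N_BLOCKS          # 9 per condition per block
    blocks = [[] for _ in range(N_BLOCKS)]
    for stimulus_type, emotion in CONDITIONS:
        sides = ["left"] * (per_condition // 2) + ["right"] * (per_condition // 2)
        rng.shuffle(sides)
        for b, block in enumerate(blocks):
            for side in sides[b * per_block:(b + 1) * per_block]:
                block.append({"target_present": True, "stimulus_type": stimulus_type,
                              "emotion": emotion, "target_side": side})
    for block in blocks:
        # Fill the rest of each block with target-absent (all-neutral) trials.
        block.extend({"target_present": False} for _ in range(block_size - len(block)))
        rng.shuffle(block)
    return blocks


blocks = build_blocks(seed=1)
print([sum(t["target_present"] for t in block) for block in blocks])  # [36, 36, 36, 36, 36, 36]
sides = Counter(t["target_side"] for block in blocks for t in block if t["target_present"])
print(sides["left"], sides["right"])  # 108 108
```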

After the visual search task, participants rated the target and distractor (neutral) facial stimuli, which were presented individually. They were asked to evaluate each stimulus in terms of emotional valence and arousal (i.e., the nature and intensity, respectively, of the emotion that participants felt when perceiving the stimulus expression)112 on a nine-point scale ranging from 1 (negative and low arousal, respectively) to 9 (positive and high arousal, respectively). The order of facial stimuli and rating items was randomized and balanced across participants. As in previous studies5,7,8,9, participants also rated the familiarity (i.e., how often they encountered expressions like the one depicted in daily life) and naturalness (i.e., how natural the depicted expression seemed) of the stimuli; these data are not reported here because they were not relevant to the aims of the present study.

Data analysis

All statistical tests were performed using SPSS 16.0 J software (SPSS Japan, Tokyo, Japan).

For the RT analysis, the mean RT of correct responses in target-present trials was calculated for each condition, excluding RTs more than 3 SD above or below the mean as artifacts. To satisfy the normality assumptions of the subsequent analyses, the data were log-transformed. The log-transformed RTs were analyzed using a three-way repeated-measures ANOVA with group (TD, ASD) as a between-participant factor and stimulus type (normal, anti) and emotion (anger, happiness) as within-participant factors. Significant interactions were followed up with simple-effect analyses138. When a higher-order interaction was significant, the main effects and lower-order interactions were not interpreted because they are qualified by the higher-order interaction139.
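The RT preprocessing can be sketched as follows, under two assumptions the text leaves open. First, the ±3 SD criterion is computed within each participant and condition. Second, the log transformation is applied to the trimmed condition means, since the means were calculated first. The natural logarithm is used here. Changing the base only rescales all values by a constant and does not affect ANOVA F statistics. The ANOVA itself, run in SPSS, is not reproduced. The function names are hypothetical.

```python
import math
import statistics
from collections import defaultdict


def trimmed_log_mean_rt(rts_ms, n_sd=3.0):
    """Mean RT after dropping values more than n_sd SDs from the mean, then ln-transformed."""
    if len(rts_ms) < 2:
        raise ValueError("need at least two RTs to estimate an SD")
    mean = statistics.mean(rts_ms)
    sd = statistics.stdev(rts_ms)
    kept = [rt for rt in rts_ms if abs(rt - mean) <= n_sd * sd]
    return math.log(statistics.mean(kept))


def condition_scores(trials):
    """trials: iterable of (participant, stimulus_type, emotion, correct, rt_ms) for
    target-present trials. Returns {(participant, stimulus_type, emotion): log mean RT}."""
    correct_rts = defaultdict(list)
    for participant, stimulus_type, emotion, correct, rt_ms in trials:
        if correct:  # only correct responses enter the RT analysis
            correct_rts[(participant, stimulus_type, emotion)].append(rt_ms)
    return {cell: trimmed_log_mean_rt(rts) for cell, rts in correct_rts.items()}


rts = [600.0] * 20 + [5000.0]  # one implausibly slow response among 21
print(round(math.exp(trimmed_log_mean_rt(rts)), 1))  # 600.0
```

With very few trials per cell, a single extreme value cannot exceed 3 SD because it inflates the SD itself. The trimming only bites when each cell contains a reasonable number of trials.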

Preliminary analyses of accuracy (mean % ± SD for the TD and ASD groups, respectively: normal anger, 96.4 ± 4.0 and 94.1 ± 5.9; normal happiness, 97.1 ± 2.4 and 95.6 ± 4.2; anti-anger, 85.6 ± 11.3 and 81.9 ± 13.0; anti-happiness, 84.6 ± 12.2 and 82.7 ± 13.3) showed no evidence of a speed–accuracy trade-off (i.e., lower accuracy for faster responses) or of a group difference. Hence, as in previous studies5,8,9, we report only the RT results.

Valence and arousal ratings were each analyzed using the same ANOVA and follow-up analyses as the RTs. In addition, to compare the ratings of the normal and anti-expressions of anger and happiness with those of the neutral expression, multiple comparisons with Bonferroni correction were conducted; the α level was divided by the number of tests performed (i.e., four per group), giving a corrected α of 0.0125.
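The Bonferroni rule described above judges each of the m tests in a family at α/m. With α = 0.05 and four comparisons per group (normal and anti-expressions of anger and happiness, each vs. neutral), the threshold is 0.0125. The minimal sketch below applies that rule with a strict inequality. The p-values in the example are made up for illustration, and the function name is hypothetical.

```python
def bonferroni(p_values, alpha=0.05):
    """Bonferroni correction: each test is judged at alpha / m, m = family size."""
    m = len(p_values)
    if m == 0:
        raise ValueError("no tests given")
    corrected_alpha = alpha / m
    return corrected_alpha, [p < corrected_alpha for p in p_values]


# Four comparisons per group; these p-values are hypothetical.
corrected_alpha, significant = bonferroni([0.001, 0.010, 0.020, 0.300])
print(corrected_alpha, significant)  # 0.0125 [True, True, False, False]
```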

The α level for all analyses was set to 0.05.

Experiment 2

Participants

A total of 51 adults (26 females and 25 males; mean ± SD age, 22.5 ± 4.5 years) recruited by advertisement were initially investigated. All of these participants completed the Japanese version of the AQ, a 50-item self-report questionnaire measuring ATs105, and the visual search experiment. Nine participants showed high error rates (>50% in at least one of the normal anger, normal happiness, anti-anger, and anti-happiness conditions); their data were excluded from the analysis. The remaining participants were divided into two groups using a median split of the AQ scores. The median AQ score (21) agreed well with the mean reported in a previous standardization study of young Japanese participants (mean ± SD, 20.7 ± 6.4)140. The 18 participants (10 females and 8 males; mean ± SD age, 23.3 ± 3.3 years) whose AQ scores were below 21 (mean ± SD = 17.2 ± 2.7) were classified as the low ATs group, and the 20 participants (9 females and 11 males; mean ± SD age, 21.7 ± 3.6 years) whose AQ scores were above 21 (mean ± SD = 27.4 ± 5.0) were classified as the high ATs group. The data of the four participants whose scores equaled the median were excluded from the analysis. All participants were right-handed, as assessed by the Edinburgh Handedness Inventory135, and had normal or corrected-to-normal visual acuity. A psychiatrist or psychologist administered a short structured diagnostic interview, the Mini-International Neuropsychiatric Interview141; no clinical-level neuropsychiatric problems were detected in any participant. Full-scale IQs were also measured with the WAIS-III (Nihon Bunka Kagakusha, Tokyo, Japan), and all participants scored within the normal range (mean ± SD = 122.2 ± 7.2 and 122.6 ± 9.6 in the low and high ATs groups, respectively).
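The group assignment described above involves three steps: exclude participants with more than 50% errors in any target condition, take the median AQ of those remaining, and assign scores below and above it to the low and high ATs groups while dropping ties. The sketch below follows that order. The data layout and the function name are hypothetical, and the toy data are made up. In the study, these steps left 42 eligible participants, a median of 21, and 18 + 20 participants after 4 ties were removed.

```python
import statistics

MAX_ERROR_RATE = 0.50  # exclusion rule from the text: >50% errors in any target condition


def split_by_median_aq(participants):
    """participants: {id: {"aq": score, "error_rates": {condition: proportion}}}.

    Drops high-error participants, computes the median AQ of those remaining,
    and splits them into low (< median) and high (> median) groups; participants
    exactly at the median are returned separately and excluded.
    """
    high_error = sorted(pid for pid, d in participants.items()
                        if max(d["error_rates"].values()) > MAX_ERROR_RATE)
    eligible = {pid: d for pid, d in participants.items() if pid not in high_error}
    median_aq = statistics.median(d["aq"] for d in eligible.values())
    return {
        "median": median_aq,
        "low": sorted(pid for pid, d in eligible.items() if d["aq"] < median_aq),
        "high": sorted(pid for pid, d in eligible.items() if d["aq"] > median_aq),
        "at_median": sorted(pid for pid, d in eligible.items() if d["aq"] == median_aq),
        "high_error": high_error,
    }


# Hypothetical toy data.
ok = {"normal_anger": 0.05, "normal_happiness": 0.10, "anti_anger": 0.20, "anti_happiness": 0.15}
demo = {f"p{i}": {"aq": aq, "error_rates": ok} for i, aq in enumerate([15, 18, 21, 25, 30], start=1)}
demo["p6"] = {"aq": 40, "error_rates": {**ok, "anti_anger": 0.60}}
print(split_by_median_aq(demo))
# {'median': 21, 'low': ['p1', 'p2'], 'high': ['p4', 'p5'], 'at_median': ['p3'], 'high_error': ['p6']}
```
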
The low and high ATs groups differed significantly in AQ scores, t(36) = 7.68, p < 0.001, but not in age, t(36) = 0.56, p = 0.576, full-scale IQ, t(36) = 0.14, p = 0.891, or sex, χ²(1) = 0.42, p = 0.516. After the procedures were fully explained, all participants provided informed consent. This experiment was part of a larger project investigating personality and mental health. It was approved by the local ethics committee of the Primate Research Institute, Kyoto University, and conducted in accordance with institutional ethical provisions and the Declaration of Helsinki.
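The sex comparisons can be reproduced from the reported counts. The sketch below computes Pearson's χ² for a 2 × 2 table without Yates' continuity correction; the reported values match this uncorrected form. For 1 degree of freedom, χ² is the square of a standard normal variable, so its upper-tail p-value is erfc(√(χ²/2)). The function name is hypothetical.

```python
import math


def pearson_chi2_2x2(table):
    """Pearson chi-square (no continuity correction) and p-value (1 df) for a 2x2 table."""
    (a, b), (c, d) = table
    n = a + b + c + d
    rows = (a + b, c + d)
    cols = (a + c, b + d)
    if 0 in rows or 0 in cols:
        raise ValueError("every row and column total must be positive")
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = rows[i] * cols[j] / n
            chi2 += (observed - expected) ** 2 / expected
    # With 1 df, chi2 = Z**2 for standard normal Z, so P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p


# Rows: low ATs, high ATs; columns: females, males (counts from the text).
chi2, p = pearson_chi2_2x2([[10, 8], [9, 11]])
print(f"chi2(1) = {chi2:.2f}, p = {p:.3f}")  # chi2(1) = 0.42, p = 0.516
```

The same function applied to the Experiment 1 counts (5 females and 12 males in each group) gives χ²(1) = 0.00, p = 1.000.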

Experimental design

The design of Experiment 2 was identical to that used in Experiment 1 with one exception: the group factor included the levels of low vs. high ATs.

Apparatus, stimuli, and procedure

The apparatus, stimuli, and procedure were identical to those used in Experiment 1.

Data analysis

The data analyses were identical to those in Experiment 1, except that the group factor had the levels low vs. high ATs. Preliminary analyses of accuracy (mean % ± SD for the low and high ATs groups, respectively: normal anger, 92.0 ± 10.4 and 93.3 ± 5.3; normal happiness, 84.2 ± 14.2 and 83.0 ± 11.1; anti-anger, 84.5 ± 12.4 and 86.7 ± 10.2; anti-happiness, 96.2 ± 8.9 and 98.1 ± 1.9) showed no evidence of a speed–accuracy trade-off or of a group difference; hence, we report only the RT results.

References

Hansen, C. H. & Hansen, R. D. Finding the face in the crowd: An anger superiority effect. J. Pers. Soc. Psychol. 54, 917–924 (1988).

Gilboa-Schechtman, E., Foa, E. B. & Amir, N. Attentional biases for facial expressions in social phobia: The face-in-the-crowd paradigm. Cogn. Emot. 13, 305–318 (1999).

Williams, M. A., Moss, S. A., Bradshaw, J. L. & Mattingley, J. B. Look at me, I’m smiling: Visual search for threatening and nonthreatening facial expressions. Vis. Cogn. 12, 29–50 (2005).

Lamy, D., Amunts, L. & Bar-Haim, Y. Emotional priming of pop-out in visual search. Emotion 8, 151–161 (2008).

Sato, W. & Yoshikawa, S. Detection of emotional facial expressions and anti-expressions. Vis. Cogn. 18, 369–388 (2010).

Skinner, A. L. & Benton, C. P. Visual search for expressions and anti-expressions. Vis. Cogn. 20, 1186–1214 (2012).

Sawada, R., Sato, W., Uono, S., Kochiyama, T. & Toichi, M. Electrophysiological correlates of detecting emotional facial expressions. Brain Res. 1560, 60–72 (2014).

Sawada, R. et al. Sex differences in the rapid detection of emotional facial expressions. PLoS One 9, e94747 (2014).

Sawada, R. et al. Neuroticism delays detection of facial expressions. PLoS One 11, e0153400 (2016).

Frischen, A., Eastwood, J. D. & Smilek, D. Visual search for faces with emotional expressions. Psychol. Bull. 134, 662–676 (2008).

Sato, W. & Yoshikawa, S. Anti-expressions: Artificial control stimuli for emotional facial expressions regarding visual properties. Soc. Behav. Pers. 37, 491–502 (2009).

American Psychiatric Association. Diagnostic and statistical manual of mental disorders (5th ed.) (American Psychiatric Publishing, 2013).

Hobson, R. P. Autism and the development of mind (Lawrence Erlbaum Associates, 1993).

Scott, D. W. Asperger’s syndrome and non-verbal communication: A pilot study. Psychol. Med. 15, 683–687 (1985).

Hobson, R. P. The autistic child’s appraisal of expressions of emotion. J. Child Psychol. Psychiatry 27, 321–342 (1986).

Hobson, R. P. The autistic child’s appraisal of expressions of emotion: A further study. J. Child Psychol. Psychiatry 27, 671–680 (1986).

Weeks, S. J. & Hobson, R. P. The salience of facial expression for autistic children. J. Child Psychol. Psychiatry 28, 137–151 (1987).

Gioia, J. V. & Brosgole, L. Visual and auditory affect recognition in singly diagnosed mentally retarded patients, mentally retarded patients with autism and normal young children. Int. J. Neurosci. 43, 149–163 (1988).

Hobson, R. P., Ouston, J. & Lee, A. Emotion recognition in autism: Coordinating faces and voices. Psychol. Med. 18, 911–923 (1988).

Braverman, M., Fein, D., Lucci, D. & Waterhouse, L. Affect comprehension in children with pervasive developmental disorders. J. Autism Dev. Disord. 19, 301–316 (1989).

Macdonald, H. et al. Recognition and expression of emotional cues by autistic and normal adults. J. Child Psychol. Psychiatry 30, 865–877 (1989).

Tantam, D., Monaghan, L., Nicholson, H. & Stirling, J. Autistic children’s ability to interpret faces: A research note. J. Child Psychol. Psychiatry 30, 623–630 (1989).

Ozonoff, S., Pennington, B. F. & Rogers, S. J. Executive function deficits in high-functioning autistic individuals: Relationship to theory of mind. J. Child Psychol. Psychiatry 32, 1081–1105 (1991).

Capps, L., Yirmiya, N. & Sigman, M. Understanding of simple and complex emotions in non-retarded children with autism. J. Child Psychol. Psychiatry 33, 1169–1182 (1992).

Baron-Cohen, S., Spitz, A. & Cross, P. Do children with autism recognise surprise? A research note. Cogn. Emot. 7, 507–516 (1993).

Davies, S., Bishop, D., Manstead, A. S. & Tantam, D. Face perception in children with autism and Asperger’s syndrome. J. Child Psychol. Psychiatry 35, 1033–1057 (1994).

Bormann-Kischkel, C., Vilsmeier, M. & Baude, B. The development of emotional concepts in autism. J. Child Psychol. Psychiatry 36, 1243–1259 (1995).

Muris, P., Meesters, C., Merckelbach, H. & Lomme, M. Knowledge of basic emotions in adolescent and adult individuals with autism. Psychol. Rep. 76, 52–54 (1995).

Gepner, B., de Gelder, B. & de Schonen, S. Face processing in autistics: Evidence for a generalised deficit? Child Neuropsychol. 2, 123–139 (1996).

Loveland, K. A. et al. Emotion recognition in autism: verbal and nonverbal information. Dev. Psychopathol. 9, 579–593 (1997).

Buitelaar, J. K., van der Wees, M., Swaab-Barneveld, H. & van der Gaag, R. J. Theory of mind and emotion-recognition functioning in autistic spectrum disorders and in psychiatric control and normal children. Dev. Psychopathol. 11, 39–58 (1999).

Celani, G., Battacchi, M. W. & Arcidiacono, L. The understanding of the emotional meaning of facial expressions in people with autism. J. Autism Dev. Disord. 29, 57–66 (1999).

Grossman, J. B., Klin, A., Carter, A. S. & Volkmar, F. R. Verbal bias in recognition of facial emotions in children with Asperger syndrome. J. Child Psychol. Psychiatry 41, 369–379 (2000).

Howard, M. A. et al. Convergent neuroanatomical and behavioural evidence of an amygdala hypothesis of autism. Neuroreport 11, 2931–2935 (2000).

Teunisse, J. P. & de Gelder, B. Impaired categorical perception of facial expressions in high-functioning adolescents with autism. Child Neuropsychol. 7, 1–14 (2001).

Pelphrey, K. A. et al. Visual scanning of faces in autism. J. Autism Dev. Disord. 32, 249–261 (2002).

Bölte, S. & Poustka, F. The recognition of facial affect in autistic and schizophrenic subjects and their first degree relatives. Psychol. Med. 33, 907–915 (2003).

Deruelle, C., Rondan, C., Gepner, B. & Tardif, C. Spatial frequency and face processing in children with autism and Asperger syndrome. J. Autism Dev. Disord. 34, 199–210 (2004).

Downs, A. & Smith, T. Emotional understanding, cooperation, and social behavior in high-functioning children with autism. J. Autism Dev. Disord. 34, 625–635 (2004).

Gross, T. F. The perception of four basic emotions in human and nonhuman faces by children with autism and other developmental disabilities. J. Abnorm. Child Psychol. 32, 469–480 (2004).

Piggot, J. et al. Emotional attribution in high-functioning individuals with autistic spectrum disorder: A functional imaging study. J. Am. Acad. Child Adolesc. Psychiatry 43, 473–480 (2004).

Ashwin, C., Chapman, E., Colle, L. & Baron-Cohen, S. Impaired recognition of negative basic emotions in autism: a test of the amygdala theory. Soc. Neurosci. 1, 349–363 (2006).

Dziobek, I., Fleck, S., Rogers, K., Wolf, O. T. & Convit, A. The ‘amygdala theory of autism’ revisited: Linking structure to behavior. Neuropsychologia 44, 1891–1899 (2006).

Golan, O., Baron-Cohen, S. & Hill, J. The Cambridge Mindreading (CAM) Face-Voice Battery: Testing complex emotion recognition in adults with and without Asperger syndrome. J. Autism Dev. Disord. 36, 169–183 (2006).

Lindner, J. L. & Rosén, L. A. Decoding of emotion through facial expression, prosody and verbal content in children and adolescents with Asperger’s syndrome. J. Autism Dev. Disord. 36, 769–777 (2006).

Boraston, Z., Blakemore, S. J., Chilvers, R. & Skuse, D. Impaired sadness recognition is linked to social interaction deficit in autism. Neuropsychologia 45, 1501–1510 (2007).

Humphreys, K., Minshew, N., Leonard, G. L. & Behrmann, M. A fine-grained analysis of facial expression processing in high-functioning adults with autism. Neuropsychologia 45, 685–695 (2007).

Tardif, C., Lainé, F., Rodriguez, M. & Gepner, B. Slowing down presentation of facial movements and vocal sounds enhances facial expression recognition and induces facial-vocal imitation in children with autism. J. Autism Dev. Disord. 37, 1469–1484 (2007).

Clark, T. F., Winkielman, P. & McIntosh, D. N. Autism and the extraction of emotion from briefly presented facial expressions: Stumbling at the first step of empathy. Emotion 8, 803–809 (2008).

Corden, B., Chilvers, R. & Skuse, D. Emotional modulation of perception in Asperger’s syndrome. J. Autism Dev. Disord. 38, 1072–1080 (2008).

Kätsyri, J., Saalasti, S., Tiippana, K., von Wendt, L. & Sams, M. Impaired recognition of facial emotions from low-spatial frequencies in Asperger syndrome. Neuropsychologia 46, 1888–1897 (2008).

Rosset, D. B. et al. Typical emotion processing for cartoon but not for real faces in children with autistic spectrum disorders. J. Autism Dev. Disord. 38, 919–925 (2008).

Wallace, S., Coleman, M. & Bailey, A. An investigation of basic facial expression recognition in autism spectrum disorders. Cogn. Emot. 22, 1353–1380 (2008).

Akechi, H. et al. Does gaze direction modulate facial expression processing in children with autism spectrum disorder? Child Dev. 80, 1134–1146 (2009).

Lacroix, A., Guidetti, M., Rogé, B. & Reilly, J. Recognition of emotional and nonemotional facial expressions: A comparison between Williams syndrome and autism. Res. Dev. Disabil. 30, 976–985 (2009).

Rump, K. M., Giovannelli, J. L., Minshew, N. J. & Strauss, M. S. The development of emotion recognition in individuals with autism. Child Dev. 80, 1434–1447 (2009).

Bal, E. et al. Emotion recognition in children with autism spectrum disorders: Relations to eye gaze and autonomic state. J. Autism Dev. Disord. 40, 358–370 (2010).

Dziobek, I., Bahnemann, M., Convit, A. & Heekeren, H. R. The role of the fusiform-amygdala system in the pathophysiology of autism. Arch. Gen. Psychiatry 67, 397–405 (2010).

García-Villamisar, D., Rojahn, J., Zaja, R. H. & Jodra, M. Facial emotion processing and social adaptation in adults with and without autism spectrum disorder. Res. Autism Spectr. Disord. 4, 755–762 (2010).

Kessels, R. P. C., Spee, P. & Hendriks, A. W. Perception of dynamic facial emotional expressions in adolescents with autism spectrum disorders (ASD). Transl. Neurosci. 1, 228–232 (2010).

Law Smith, M. J., Montagne, B., Perrett, D. I., Gill, M. & Gallagher, L. Detecting subtle facial emotion recognition deficits in high-functioning Autism using dynamic stimuli of varying intensities. Neuropsychologia 48, 2777–2781 (2010).

Philip, R. C. et al. Deficits in facial, body movement and vocal emotional processing in autism spectrum disorders. Psychol. Med. 40, 1919–1929 (2010).

Uono, S., Sato, W. & Toichi, M. The specific impairment of fearful expression recognition and its atypical development in pervasive developmental disorder. Soc. Neurosci. 6, 452–463 (2011).

Wallace, G. L. et al. Diminished sensitivity to sad facial expressions in high functioning autism spectrum disorders is associated with symptomatology and adaptive functioning. J. Autism Dev. Disord. 41, 1475–1486 (2011).

Kennedy, D. P. & Adolphs, R. Perception of emotions from facial expressions in high-functioning adults with autism. Neuropsychologia 50, 3313–3319 (2012).

Sawyer, A. C., Williamson, P. & Young, R. L. Can gaze avoidance explain why individuals with Asperger’s syndrome can’t recognise emotions from facial expressions? J. Autism Dev. Disord. 42, 606–618 (2012).

Sucksmith, E., Allison, C., Baron-Cohen, S., Chakrabarti, B. & Hoekstra, R. A. Empathy and emotion recognition in people with autism, first-degree relatives, and controls. Neuropsychologia 51, 98–105 (2013).

Uono, S., Sato, W. & Toichi, M. Common and unique impairments in facial-expression recognition in pervasive developmental disorder-not otherwise specified and Asperger’s disorder. Res. Autism Spectr. Disord. 7, 361–368 (2013).

Enticott, P. G. et al. Emotion recognition of static and dynamic faces in autism spectrum disorder. Cogn. Emot. 28, 1110–1118 (2014).

Sachse, M. et al. Facial emotion recognition in paranoid schizophrenia and autism spectrum disorder. Schizophr. Res. 159, 509–514 (2014).

Eack, S. M., Mazefsky, C. A. & Minshew, N. J. Misinterpretation of facial expressions of emotion in verbal adults with autism spectrum disorder. Autism 19, 308–315 (2015).

Golan, O., Sinai-Gavrilov, Y. & Baron-Cohen, S. The Cambridge Mindreading Face-Voice Battery for Children (CAM-C): complex emotion recognition in children with and without autism spectrum conditions. Mol. Autism 6, 22 (2015).

Taylor, L. J., Maybery, M. T., Grayndler, L. & Whitehouse, A. J. Evidence for shared deficits in identifying emotions from faces and from voices in autism spectrum disorders and specific language impairment. Int. J. Lang. Commun. Disord. 50, 452–466 (2015).

Ozonoff, S., Pennington, B. F. & Rogers, S. J. Are there emotion perception deficits in young autistic children? J. Child Psychol. Psychiatry 31, 343–361 (1990).

Prior, M., Dahlstrom, B. & Squires, T. L. Autistic children’s knowledge of thinking and feeling states in other people. J. Child Psychol. Psychiatry 31, 587–601 (1990).

Baron-Cohen, S., Jolliffe, T., Mortimore, C. & Robertson, M. Another advanced test of theory of mind: Evidence from very high functioning adults with autism or Asperger syndrome. J. Child Psychol. Psychiatry 38, 813–822 (1997).

Adolphs, R., Sears, L. & Piven, J. Abnormal processing of social information from faces in autism. J. Cogn. Neurosci. 13, 232–240 (2001).

Gepner, B., Deruelle, C. & Grynfeltt, S. Motion and emotion: A novel approach to the study of face processing by young autistic children. J. Autism Dev. Disord. 31, 37–45 (2001).

Ogai, M. et al. fMRI study of recognition of facial expressions in high-functioning autistic patients. Neuroreport 14, 559–563 (2003).

Robel, L. et al. Discrimination of face identities and expressions in children with autism: same or different? Eur. Child Adolesc. Psychiatry 13, 227–233 (2004).

Castelli, F. Understanding emotions from standardized facial expressions in autism and normal development. Autism 9, 428–449 (2005).

Neumann, D., Spezio, M. L., Piven, J. & Adolphs, R. Looking you in the mouth: abnormal gaze in autism resulting from impaired top-down modulation of visual attention. Soc. Cogn. Affect. Neurosci. 1, 194–202 (2006).

Sasson, N. et al. Orienting to social stimuli differentiates social cognitive impairment in autism and schizophrenia. Neuropsychologia 45, 2580–2588 (2007).

Spezio, M. L., Adolphs, R., Hurley, R. S. & Piven, J. Abnormal use of facial information in high-functioning autism. J. Autism Dev. Disord. 37, 929–939 (2007).

Uono, S., Sato, W. & Toichi, M. Dynamic fearful gaze does not enhance attention orienting in individuals with Asperger’s disorder. Brain Cogn. 71, 229–233 (2009).

Jones, C. R. G. et al. A multimodal approach to emotion recognition ability in autism spectrum disorders. J. Child Psychol. Psychiatry 52, 275–285 (2011).

Tracy, J. L., Robins, R. W., Schriber, R. A. & Solomon, M. Is emotion recognition impaired in individuals with autism spectrum disorders? J. Autism Dev. Disord. 41, 102–109 (2011).

Weng, S. J. et al. Neural activation to emotional faces in adolescents with autism spectrum disorders. J. Child Psychol. Psychiatry 52, 296–305 (2011).

Uono, S., Sato, W. & Toichi, M. Reduced representational momentum for subtle dynamic facial expressions in individuals with autism spectrum disorders. Res. Autism Spectr. Disord. 8, 1090–1099 (2014).

Sato, W., Uono, S. & Toichi, M. Atypical recognition of dynamic changes in facial expressions in autism spectrum disorders. Res. Autism Spectr. Disord. 7, 906–912 (2013).

McIntosh, D. N., Reichmann-Decker, A., Winkielman, P. & Wilbarger, J. L. When the social mirror breaks: Deficits in automatic, but not voluntary, mimicry of emotional facial expressions in autism. Dev. Sci. 9, 295–302 (2006).

Beall, P. M., Moody, E. J., McIntosh, D. N., Hepburn, S. L. & Reed, C. L. Rapid facial reactions to emotional facial expressions in typically developing children and children with autism spectrum disorder. J. Exp. Child Psychol. 101, 206–223 (2008).

Oberman, L. M., Winkielman, P. & Ramachandran, V. S. Slow echo: facial EMG evidence for the delay of spontaneous, but not voluntary, emotional mimicry in children with autism spectrum disorders. Dev. Sci. 12, 510–520 (2009).

Yoshimura, S., Sato, W., Uono, S. & Toichi, M. Impaired overt facial mimicry in response to dynamic facial expressions in high-functioning autism spectrum disorders. J. Autism Dev. Disord. 45, 1318–1328 (2015).

Hubert, B. E., Wicker, B., Monfardini, E. & Deruelle, C. Electrodermal reactivity to emotion processing in adults with autistic spectrum disorders. Autism 13, 9–19 (2009).

Riby, D. M., Whittle, L. & Doherty-Sneddon, G. Physiological reactivity to faces via live and video-mediated communication in typical and atypical development. J. Clin. Exp. Neuropsychol. 34, 385–395 (2012).

Sepeta, L. et al. Abnormal social reward processing in autism as indexed by pupillary responses to happy faces. J. Neurodev. Disord. 4, 17 (2012).

Nuske, H. J., Vivanti, G., Hudry, K. & Dissanayake, C. Pupillometry reveals reduced unconscious emotional reactivity in autism. Biol. Psychol. 101, 24–35 (2014).

De Jong, M. C., Van Engeland, H. & Kemner, C. Attentional effects of gaze shifts are influenced by emotion and spatial frequency, but not in autism. J. Am. Acad. Child Adolesc. Psychiatry 47, 443–454 (2008).

Kamio, Y., Wolf, J. & Fein, D. Automatic processing of emotional faces in high-functioning pervasive developmental disorders: An affective priming study. J. Autism Dev. Disord. 36, 155–167 (2006).

Hall, G. B., West, C. D. & Szatmari, P. Backward masking: Evidence of reduced subcortical amygdala engagement in autism. Brain Cogn. 65, 100–106 (2007).

Ashwin, C., Wheelwright, S. & Baron-Cohen, S. Finding a face in the crowd: Testing the anger superiority effect in Asperger Syndrome. Brain Cogn. 61, 78–95 (2006).

Krysko, K. M. & Rutherford, M. D. A threat-detection advantage in those with autism spectrum disorders. Brain Cogn. 69, 472–480 (2009).

May, T., Cornish, K. & Rinehart, N. J. Exploring factors related to the anger superiority effect in children with Autism Spectrum Disorder. Brain Cogn. 106, 65–71 (2016).

Baron-Cohen, S., Wheelwright, S., Skinner, R., Martin, J. & Clubley, E. The autism-spectrum quotient (AQ): Evidence from Asperger syndrome/high-functioning autism, males and females, scientists and mathematicians. J. Autism Dev. Disord. 31, 5–17 (2001).

Constantino, J. N. & Todd, R. D. Autistic traits in the general population: A twin study. Arch. Gen. Psychiatry 60, 524–530 (2003).

Allison, C. et al. The Q-CHAT (Quantitative CHecklist for Autism in Toddlers): A normally distributed quantitative measure of autistic traits at 18–24 months of age: preliminary report. J. Autism Dev. Disord. 38, 1414–1425 (2008).

Hoekstra, R. A., Bartels, M., Cath, D. C. & Boomsma, D. I. Factor structure, reliability and criterion validity of the Autism-Spectrum Quotient (AQ): A study in Dutch population and patient groups. J. Autism Dev. Disord. 38, 1555–1566 (2008).

Poljac, E., Poljac, E. & Wagemans, J. Reduced accuracy and sensitivity in the perception of emotional facial expressions in individuals with high autism spectrum traits. Autism 17, 668–680 (2013).

Hermans, E. J., van Wingen, G., Bos, P. A., Putman, P. & van Honk, J. Reduced spontaneous facial mimicry in women with autistic traits. Biol. Psychol. 80, 348–353 (2009).

Lassalle, A. & Itier, R. J. Autistic traits influence gaze-oriented attention to happy but not fearful faces. Soc. Neurosci. 10, 70–88 (2015).

Lang, P. J., Bradley, M. M. & Cuthbert, B. N. Emotion and motivation: Measuring affective perception. J. Clin. Neurophysiol. 15, 397–408 (1998).

Barrett, L. F. & Russell, J. A. Independence and bipolarity in the structure of current affect. J. Pers. Soc. Psychol. 74, 967–984 (1998).

Modahl, C. et al. Plasma oxytocin levels in autistic children. Biol. Psychiatry 43, 270–277 (1998).

Al-Ayadhi, L. Y. Altered oxytocin and vasopressin levels in autistic children in Central Saudi Arabia. Neurosciences (Riyadh) 10, 47–50 (2005).

Husarova, V. M. et al. Plasma oxytocin in children with autism and its correlations with behavioral parameters in children and parents. Psychiatry Investig. 13, 174–183 (2016).

Zhang, H. F. et al. Plasma oxytocin and arginine-vasopressin levels in children with autism spectrum disorder in China: Associations with symptoms. Neurosci. Bull. 32, 423–432 (2016).

Guastella, A. J. et al. Intranasal oxytocin improves emotion recognition for youth with autism spectrum disorders. Biol. Psychiatry 67, 692–694 (2010).

Anagnostou, E. et al. Intranasal oxytocin versus placebo in the treatment of adults with autism spectrum disorders: a randomized controlled trial. Mol. Autism 3, 16 (2012).

Schulze, L. et al. Oxytocin increases recognition of masked emotional faces. Psychoneuroendocrinology 36, 1378–1382 (2011).

Domes, G., Normann, C. & Heinrichs, M. The effect of oxytocin on attention to angry and happy faces in chronic depression. BMC Psychiatry 16, 92 (2016).

Xu, L. et al. Oxytocin enhances attentional bias for neutral and positive expression faces in individuals with higher autistic traits. Psychoneuroendocrinology 62, 352–358 (2015).

Ellenbogen, M. A., Linnen, A. M., Grumet, R., Cardoso, C. & Joober, R. The acute effects of intranasal oxytocin on automatic and effortful attentional shifting to emotional faces. Psychophysiology 49, 128–137 (2012).

Kim, Y. R. et al. Intranasal oxytocin lessens the attentional bias to adult negative faces: A double blind within-subject experiment. Psychiatry Investig. 11, 160–166 (2014).

Ekman, P. & Friesen, W. V. Unmasking the face: A guide to recognizing emotions from facial clues (Prentice-Hall, Englewood Cliffs, 1975).

Knutson, B. Facial expressions of emotion influence interpersonal trait inferences. J. Nonverbal Behav. 20, 165–182 (1996).

Yik, M. S. M. & Russell, J. A. Interpretation of faces: A cross-cultural study of a prediction from Fridlund’s theory. Cogn. Emot. 13, 93–104 (1999).

Simons, D. J. & Rensink, R. A. Change blindness: Past, present, and future. Trends Cogn. Sci. 9, 16–20 (2005).

Bentley, K. Alone together: Making an Asperger marriage work (Jessica Kingsley Publishers, 2007).

Aston, M. The Asperger couple’s workbook: Practical advice and activities for couples and counsellors (Jessica Kingsley Publishers, 2008).

Simone, R. 22 Things a woman must know: If she loves a man with Asperger’s syndrome (Jessica Kingsley Publishers, 2009).

Simons, H. F. & Thompson, J. R. Affective deprivation disorder: Does it constitute a relational disorder? (affectivedeprivation.blogspot.com, 2009).

Farroni, T., Menon, E., Rigato, S. & Johnson, M. H. The perception of facial expressions in newborns. Eur. J. Dev. Psychol. 4, 2–13 (2007).

American Psychiatric Association. Diagnostic and statistical manual of mental disorders. 4th ed., text revision (American Psychiatric Publishing, 2000).

Oldfield, R. C. The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia 9, 97–113 (1971).

Ekman, P. & Friesen, W. V. Pictures of Facial Affect (Consulting Psychologists Press, 1976).

Skinner, A. L. & Benton, C. P. Anti-expression aftereffects reveal prototype-referenced coding of facial expressions. Psychol. Sci. 21, 1248–1253 (2010).

Kirk, R. E. Experimental design: Procedures for the behavioral sciences. 3rd ed. (Brooks/Cole, 1995).

Tabachnick, B. G. & Fidell, L. S. Computer-assisted research design and analysis (Allyn and Bacon, 2001).

Wakabayashi, A., Baron-Cohen, S., Wheelwright, S. & Tojo, Y. The Autism-Spectrum Quotient (AQ) in Japan: A cross-cultural comparison. J. Autism Dev. Disord. 36, 263–270 (2006).

Sheehan, D. V. et al. The Mini-International Neuropsychiatric Interview (M.I.N.I.): The development and validation of a structured diagnostic psychiatric interview for DSM-IV and ICD-10. J. Clin. Psychiatry 59, 22–33 (1998).

Acknowledgements

The authors thank Kazusa Minemoto, Emi Yokoyama, and Akemi Inoue for their technical support. This study was supported by funds from the Japan Society for the Promotion of Science (JSPS) Funding Program for Next Generation World-Leading Researchers Grant Number LZ008, the Meiji Yasuda Mental Health Foundation, the JSPS KAKENHI Grant Number 25885049, and the Organization for Promoting Neurodevelopmental Disorder Research.

Author information

Contributions

W.S., R.S., S.U., S.Y., T.K., Y.K., M.S., and M.T. designed the research; W.S., R.S., S.U., S.Y., M.S., and M.T. obtained the data; W.S., R.S., S.Y., and M.T. analyzed the data; and W.S., R.S., S.U., S.Y., T.K., Y.K., M.S., and M.T. wrote the manuscript.

Ethics declarations

Competing Interests

The authors declare that they have no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sato, W., Sawada, R., Uono, S. et al. Impaired detection of happy facial expressions in autism. Sci Rep 7, 13340 (2017). https://doi.org/10.1038/s41598-017-11900-y
