Abstract

Recent studies have proposed that the diffusion of messenger molecules, such as monoamines, can mediate the plastic adaptation of synapses in supervised learning of neural networks. Based on these findings we developed a model for neural learning in which the signal for plastic adaptation is assumed to propagate through the extracellular space. We investigate the conditions that allow a neural network to learn Boolean rules. Even fully excitatory networks show very good learning performance. Moreover, an investigation of the features of plastic adaptation that optimize performance suggests that learning is very sensitive to the extent of the plastic adaptation and to the spatial range of synaptic connections.


Introduction

The brain is a large neural network in which sensory information is processed, possibly triggering a specific response. If this response produces a result that differs from the expected one, error signals cause changes in the synaptic weights that attempt to minimize further mismatches1,2,3. Models of neural networks that aim to contribute to the understanding of learning in the brain usually consider a task in which, for a specific set of inputs, the network has to adapt to generate a desired result. Error back propagation4, one of the most popular algorithms proposed, cannot, however, serve as a candidate for learning in a biological sense5: a teaching signal that transmits information antidromically, with memory of all neurons it has passed before, seems unlikely to exist in the brain.

Different studies have proposed a variety of neural network models that are able to learn without passing error gradients antidromically. It has been suggested that errors could propagate backwards through a second network6, 7, in which forward and backward connections have to be chosen symmetric. Another interesting approach is to calculate error gradients using an alternation of two activity phases, one of which is determined by a teacher8. A performance similar to that of the back propagation algorithm can also be achieved by using exact error gradients only for the last synapses, while the synapses in the hidden layers are modified only in the same direction as with back propagation9. There are also interesting models, called liquid state machines or echo state machines, in which the weights of the main network remain unchanged and the inputs are connected to a large recurrent neural network, thereby mapping the input to a higher dimensional space10, 11. These models are mainly used for spatiotemporal problems like speech recognition, but their capability for recognizing handwritten digits has also been demonstrated12.

The range of candidate models narrows if we insist on the assumption that synaptic changes have to be triggered by a specific error signal. Since there is no evidence that a neural network can transfer feedback information antidromically, such a signal should propagate through the extracellular space. A feedback signal that changes the strength of synapses in a larger region could, for instance, be delivered by monoamine-releasing neurons, which are known to release their transmitters deep into the extracellular space13. For dopamine in particular, it has been verified that its release can mediate plasticity14, 15. Several recently proposed models implement these ideas by assuming that spike-timing-dependent plasticity (STDP) and dopamine-induced plasticity are directly coupled16,17,18. These models assume that dopamine serves as a reward signal. In another model it has been proposed19 that the transmitted feedback signal changes the vesicle release probability of previously activated synapses.

In contrast to reward-based reinforcement, it has been argued that negative feedback signals, which change synapses only if mistakes occur, are more biologically plausible and preserve the adaptability of the system20,21,22. Based on such ideas, de Arcangelis et al.23, 24 proposed a model of spiking integrate-and-fire neurons that is able to learn logical binary functions in a neural network. When the network responds incorrectly, activated synapses are modified proportionally to the inverse of the number of synaptic connections on the signal path to the output neuron. This method implies that the feedback signal propagates backwards along the synaptic connections and decreases in strength depending on the number of neurons it has passed. In this article we extend these ideas with a model in which the strength of the feedback signal depends not on a network distance but on the Euclidean distance. With this model we study the importance of the localization of a teaching signal, i.e. whether a localized learning signal offers advantages over one acting widely in space. Dopamine, for instance, acts as such a localized signal, since its effect covers a finite range of tens to thousands of synapses13.

Network Model

Network topology

The network consists of 4 input neurons, 1 output neuron and N neurons in the hidden network, which are placed randomly in a square with side length L. The area is chosen to scale proportionally to N, so that the density of neurons remains constant (Fig. 1). In the following we use N to characterize the size of the network. Each neuron in the hidden network has ten outward connections. Each connection is established by choosing a distance d from an exponential distribution25 \(p(d)=\frac{1}{{d}_{0}}{e}^{-d/{d}_{0}}\) and searching for a neuron at a distance sufficiently close to d. The input neurons also have ten outward connections, to their nearest neighbours, whereas the output neuron has no outward connections, only inward connections from its ten nearest nodes. When inhibitory neurons are considered, a fixed fraction \(p_{\mathrm{inh}}\) of the neurons is inhibitory.
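The construction of the network can be sketched in Python as below. This is a minimal sketch under stated assumptions, not the authors' code: the neuron density (which fixes \(L = \sqrt{N/\text{density}}\)), the placement of the input neurons along the left edge and of the output neuron at the middle of the right edge, the restriction of targets to hidden neurons, and the rule of linking to the unused neuron whose distance is closest to the sampled d are all illustrative choices. `build_network` is a hypothetical name.

```python
import math
import random


def build_network(n_hidden, d0=2.0, k_out=10, p_inh=0.0, density=1.0, seed=None):
    """Hypothetical sketch of the spatial network.

    Returns (positions, edges, inhibitory): indices 0-3 are the input neurons,
    4 .. 3 + n_hidden the hidden neurons and 4 + n_hidden the output neuron.
    edges[i] lists the postsynaptic neurons of neuron i.
    """
    rng = random.Random(seed)
    side = math.sqrt(n_hidden / density)  # area ~ N keeps the density constant
    inputs = [(0.0, side * (k + 0.5) / 4) for k in range(4)]  # assumed: left edge
    hidden = [(rng.uniform(0, side), rng.uniform(0, side)) for _ in range(n_hidden)]
    output = (side, side / 2)  # assumed: middle of the right edge
    pos = inputs + hidden + [output]
    hidden_ids = list(range(4, 4 + n_hidden))
    out_idx = 4 + n_hidden
    edges = {i: [] for i in range(len(pos))}

    def nearest_hidden(point, k):
        return sorted(hidden_ids, key=lambda j: math.dist(point, pos[j]))[:k]

    # Input neurons connect to their ten nearest hidden neurons.
    for i in range(4):
        edges[i] = nearest_hidden(pos[i], k_out)

    # Each hidden neuron draws d from p(d) = exp(-d/d0)/d0 for every outward
    # connection and links to the unused hidden neuron whose distance is closest to d.
    for i in hidden_ids:
        while len(edges[i]) < min(k_out, n_hidden - 1):
            d = rng.expovariate(1.0 / d0)  # exponential with mean d0
            candidates = [j for j in hidden_ids if j != i and j not in edges[i]]
            edges[i].append(min(candidates, key=lambda j: abs(math.dist(pos[i], pos[j]) - d)))

    # The output neuron only receives, from its ten nearest hidden neurons
    # (in this sketch these neurons get one extra outward connection).
    for j in nearest_hidden(output, k_out):
        edges[j].append(out_idx)

    # Assumption: the inhibitory fraction is drawn among the hidden neurons.
    inhibitory = set(rng.sample(hidden_ids, round(p_inh * n_hidden)))
    return pos, edges, inhibitory


if __name__ == "__main__":
    pos, edges, inhibitory = build_network(100, d0=2.0, p_inh=0.2, seed=1)
    print(len(pos), "neurons,", len(inhibitory), "inhibitory")
```

Sampling the distance first and then searching for a matching neuron, rather than sampling a neuron directly, is what makes \(d_0\) a tunable characteristic synaptic length.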

Network architecture. Network with one hundred hidden neurons (gray). The four input neurons (green) on the left are labeled 1 to 4 (top to bottom) and are connected to the network. On the right is the output neuron (red), which receives incoming signals from its ten nearest nodes. The strength of the synapses is indicated by their thickness.

Neuronal model

For the firing dynamics we choose an integrate-and-fire model with discrete time steps. At each time step all neurons whose potential reaches the threshold, \(v_i \ge v_{\max} = 1.0\), fire. After firing, the potential of neuron i is reset to zero and the voltage of every connected neuron j becomes

\[ v_j(t+1) = v_j(t) \pm \omega_{ij}\,\eta_i(t), \tag{1} \]

where \(\omega_{ij}\) is the synaptic strength and \(\eta_i \in [0, 1]\) stands for the amount of releasable neurotransmitter, which for simplicity is the same for all synapses of neuron i. The plus or minus sign is for excitatory or inhibitory synapses, respectively. After each firing, \(\eta_i\) decreases by a fixed amount \(\Delta\eta = 0.2\), which guarantees that the network activity decays in a finite time. The initial conditions are \(v = 0\) and \(\eta = 1\) for all neurons. All neurons whose potential has reached the threshold fire at the next time step. After firing, a neuron is in a refractory state for \(t_{\mathrm{refr}}\) time steps, during which it can neither receive nor send any signal.
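The firing rule of Eq. 1, together with neurotransmitter depletion and refractoriness, can be sketched as a single propagation routine. This is an illustrative sketch, not the authors' implementation; in particular the refractory convention (a neuron that fires at step t ignores incoming signals during steps t, ..., t + t_refr) and the names `propagate`, `edges` and `weights` are assumptions. The routine also counts how often each synapse is activated, which is the quantity n_act used by the learning rule.

```python
V_MAX = 1.0      # firing threshold v_max
DELTA_ETA = 0.2  # neurotransmitter depletion per firing


def propagate(edges, weights, active_inputs, inhibitory=frozenset(), t_refr=1,
              max_steps=10_000):
    """Run the activity triggered by firing `active_inputs` at step 0.

    edges[i]: postsynaptic neurons of i (neurons are indexed 0..n-1);
    weights[(i, j)]: synaptic strength omega_ij.
    Returns (firings per neuron, activation count per synapse).
    """
    n = len(edges)
    v = [0.0] * n
    eta = [1.0] * n
    refr_until = [-1] * n
    fired = [0] * n
    n_act = {}
    for i in active_inputs:
        v[i] = V_MAX  # an input bit of 1 makes the input neuron fire at step 0
    for t in range(max_steps):
        firing = [i for i in range(n) if v[i] >= V_MAX]
        if not firing:
            break
        # Reset all firing neurons first, so simultaneous firers do not excite each other.
        for i in firing:
            v[i] = 0.0
            refr_until[i] = t + t_refr
            fired[i] += 1
        # Eq. 1: v_j <- v_j +/- omega_ij * eta_i for every non-refractory target.
        for i in firing:
            sign = -1.0 if i in inhibitory else 1.0
            for j in edges[i]:
                if t > refr_until[j]:
                    v[j] += sign * weights[(i, j)] * eta[i]
                    n_act[(i, j)] = n_act.get((i, j), 0) + 1
        for i in firing:
            # Rounding makes eta exactly 0 after five firings despite float error.
            eta[i] = round(max(0.0, eta[i] - DELTA_ETA), 12)
    return fired, n_act
```

With `t_refr=1`, two mutually connected neurons with weights 1.0 each fire once and the activity stops; with `t_refr=0` the first neuron is re-excited and fires a second time, a small illustration of how refractoriness suppresses activity loops.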

Learning

Learning binary input-output relations such as the XOR rule has been a classical benchmark problem for neural networks. In our case the XOR rule turned out to be too simple: only very small networks with unfavorable parameters occasionally failed to learn it. We therefore study a more complex problem with up to 15 different Boolean functions of 4 binary inputs (see Table 1) and examine how our learning procedure performs for different parameters and network sizes.

Each input bit is applied to one input neuron, which fires if the bit is one and does not fire if it is zero. The activity then propagates through the network; the binary output is 1 if the output neuron fires at least once and 0 if it does not fire during the whole network activity.

For a given network, whether the activity reaches the output neuron depends on the strength of the synapses. If the average synaptic strength is too low, the propagation of activity stops early and only a fraction of the network fires. Conversely, for very strong synapses almost all neurons fire several times and the output neuron fires for every input pattern. An optimal choice for the average synaptic strength is therefore a “critical value” at which, on average, each input pattern has an equal chance of making the output neuron fire or not. We approximate this “critical point” as follows: we initially choose weak synapses (\(\omega_{ij} = 1.0\) for the synapses of the input neurons and \(\omega_{ij} = 0.1\) for all other synapses) and, while applying the input patterns, strengthen all synapses by a small amount (0.001 times their current strength) as long as no input makes the output neuron fire. As soon as the first input leads to the output neuron firing, the network is considered to be close to the “critical point” and the learning procedure starts.
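The procedure for approaching the “critical point” can be sketched as follows. The network simulation is hidden behind a hypothetical callback `output_fires(weights, pattern)`, and strengthening the synapses once per full pass over the patterns is our reading of the text rather than a stated detail; `tune_to_critical_point` is likewise a hypothetical name.

```python
def tune_to_critical_point(weights, input_synapses, patterns, output_fires,
                           rate=0.001, max_rounds=100_000):
    """Strengthen all synapses until some input pattern makes the output neuron fire.

    weights: dict mapping a synapse (i, j) to omega_ij (modified in place).
    input_synapses: the synapses leaving the input neurons.
    output_fires(weights, pattern) -> bool: hypothetical hook into a network simulator.
    Returns the number of strengthening rounds that were needed.
    """
    # Weak initial synapses: 1.0 from the input neurons, 0.1 everywhere else.
    for s in weights:
        weights[s] = 1.0 if s in input_synapses else 0.1
    for rounds in range(max_rounds):
        if any(output_fires(weights, p) for p in patterns):
            return rounds  # close to the "critical point": learning can start
        for s in weights:
            weights[s] += rate * weights[s]  # +0.001 times the current strength
    raise RuntimeError("the output neuron never fired; increase max_rounds")
```

Because every synapse is scaled by the same factor, the ratios between weights set at initialization are preserved; only the overall level of activity is tuned.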

Learning proceeds as follows. All inputs of the rule are fed into the network one after the other, and the neurotransmitter levels and voltages are reset to \(\eta = 1.0\) and \(v = 0\) before each input. Whenever the result is wrong, the synaptic strengths are adapted: if the correct result is one but the output neuron did not fire, synapses are strengthened; if the correct result is zero but the output neuron fired, synapses are weakened20. Only the synapses activated during the propagation of activity are modified. When a neuron fires, all of its synapses that do not lead to neurons in the refractory state are considered activated. The adaptation signal is assumed to be released at the output neuron and to decrease exponentially in space:

\[ \Delta\omega_{ij} = \pm\,\alpha\,\omega_{ij}\,n_{\mathrm{act}}\,e^{-r/r_0}, \tag{2} \]

where \(\alpha = 0.001\) is the adaptation strength, \(r_0\) is the learning length and r is the Euclidean distance between the output neuron and the postsynaptic neuron of the synapse, neuron j. The postsynaptic neuron is used because a synapse is assumed to be located much closer to the postsynaptic than to the presynaptic neuron. We tested different values of α and found that they affect the learning speed but not the qualitative results. The plus or minus sign is for excitatory or inhibitory synapses, respectively. In addition, the adaptation is proportional to the strength of the synapse itself and to the number of times \(n_{\mathrm{act}}\) the synapse was activated; both dependencies are included because they improve the learning performance. A maximum synaptic strength \(\omega_{\max} = 2\) is imposed. If the activity is so weak that the voltage of the output neuron remains unchanged, the system cannot provide an answer, and we therefore increase all weights by a small amount, \(\Delta\omega_{ij} = \alpha\omega_{ij}\).
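The adaptation rule with its cap \(\omega_{\max}\) can be sketched as a single update routine. The treatment of inhibitory synapses (flipping the sign, so that a correction towards more output activity weakens inhibition) is our interpretation of the plus or minus sign and should be read as an assumption; `adapt` and its arguments are hypothetical names.

```python
import math

ALPHA = 0.001    # adaptation strength alpha
OMEGA_MAX = 2.0  # maximum synaptic strength omega_max


def adapt(weights, n_act, dist_to_output, inhibitory, strengthen, r0, alpha=ALPHA):
    """Apply Delta omega_ij = +/- alpha * omega_ij * n_act * exp(-r / r0).

    weights: dict (i, j) -> omega_ij, updated in place.
    n_act: dict (i, j) -> activation count in the last propagation
           (only activated synapses appear, so only they are modified).
    dist_to_output: dict j -> Euclidean distance r from postsynaptic neuron j to the output.
    inhibitory: set of inhibitory presynaptic neurons.
    strengthen: True if the output should have fired but did not,
                False if it fired but should not have.
    """
    direction = 1.0 if strengthen else -1.0
    for (i, j), count in n_act.items():
        # Assumption: inhibitory synapses change in the opposite direction.
        sign = -direction if i in inhibitory else direction
        decay = math.exp(-dist_to_output[j] / r0)
        delta = sign * alpha * weights[(i, j)] * count * decay
        weights[(i, j)] = min(OMEGA_MAX, weights[(i, j)] + delta)
```

Since the change is proportional to \(\omega_{ij}\) itself, the update is multiplicative: a synapse weakened close to zero changes only very slowly, and no explicit lower bound is needed as long as \(\alpha\, n_{\mathrm{act}} < 1\).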

XOR gate with only excitatory neurons

A biological neural network consists of excitatory (\(\omega_{ij} \ge 0\)) and inhibitory (\(\omega_{ij} \le 0\)) synapses, and it has been shown that a certain percentage of inhibitory synapses can increase the learning performance24. The importance of inhibitory signals can be seen in the XOR rule (Fig. 2): when one of the two input neurons fires, the output neuron should fire as well, but when both input neurons fire, the output neuron should not fire. With only positive synaptic weights, one might intuitively expect from the principle of superposition that a stronger input always leads to a stronger output. This, however, does not hold in general. An interesting and important ingredient for generating inhibition is the refractory time. Figure 2 shows an example of a small network that implements the XOR rule with excitatory neurons and a refractory time of one time step. Black and gray arrows indicate weights equal to and slightly less than one, respectively. When both input neurons (neurons 1 and 2 in the figure) are stimulated, neuron 4 fires one time step earlier than in the other two cases, where it fires only after a second stimulation by neuron 3. This earlier firing of neuron 4 causes neuron 7 to stimulate neuron 6 while neuron 6 is in its refractory period. Neuron 6 therefore fires only once, which is not sufficient to make the output neuron (neuron 8) fire. The self-organized learning process can exploit this mechanism to suppress certain signals. Thus, even though a purely excitatory network is not biologically realistic, the refractory time can induce inhibition of computational relevance. For this reason, we perform most simulations with purely excitatory neurons.
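The timing mechanism described above can be reproduced in a toy simulation. The eight-neuron network below is a hypothetical construction of our own, not the network of Fig. 2 (whose exact weights are not given in the text), but it relies on the same idea: with both inputs active, neuron H is driven to threshold one step earlier, so the delayed copy of its spike, relayed through D1 and D2, reaches E while E is refractory. E then fires only once, and a single spike of weight 0.6 cannot make the output fire. The sketch uses the discrete dynamics of Eq. 1 with purely excitatory weights and assumes that a neuron firing at step t ignores incoming signals during steps t, ..., t + t_refr.

```python
# Hypothetical toy network (not the network of Fig. 2); all weights are excitatory.
# Neurons: 0 = input A, 1 = input B, 2 = R, 3 = H, 4 = D1, 5 = D2, 6 = E, 7 = output.
EDGES = {
    0: {2: 1.0, 3: 0.5},
    1: {2: 1.0, 3: 0.5},
    2: {3: 0.5, 6: 1.0},  # R fires one step after the inputs, whatever their number
    3: {4: 1.0},          # H fires at step 1 (both inputs) or step 2 (one input)
    4: {5: 1.0},
    5: {6: 1.0},          # delayed copy of H's spike reaches E two steps later
    6: {7: 0.6},          # E must fire twice to drive the output over threshold
    7: {},
}


def toy_output_fires(a, b, t_refr=1, v_max=1.0, delta_eta=0.2):
    """Return True if the output neuron fires for the input bits (a, b)."""
    n = len(EDGES)
    v = [0.0] * n
    eta = [1.0] * n
    refr_until = [-1] * n
    v[0], v[1] = float(a), float(b)  # an input bit of 1 makes that input neuron fire
    for t in range(100):
        firing = [i for i in range(n) if v[i] >= v_max]
        if not firing:
            return False
        for i in firing:  # reset first, so simultaneous firers do not excite each other
            v[i], refr_until[i] = 0.0, t + t_refr
        for i in firing:
            for j, w in EDGES[i].items():
                if t > refr_until[j]:  # refractory neurons ignore incoming signals
                    v[j] += w * eta[i]
            eta[i] = max(0.0, eta[i] - delta_eta)
        if 7 in firing:
            return True
    return False


for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, toy_output_fires(a, b), toy_output_fires(a, b, t_refr=0))
```

With `t_refr=1` the output reproduces the XOR table; with `t_refr=0` the late signal to E is no longer blocked and the same network computes OR, so in this toy the refractory time alone plays the role of inhibition.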

Results

Our learning procedure runs until either all input-output relations, presented sequentially, have been learned or a maximal number of learning steps \(T_{\max}\) has been performed, where a learning step occurs whenever the network generates a wrong result for the current input. For most simulations the networks are trained to learn the first ten patterns of Table 1. The learning performance is evaluated on a statistical ensemble of 2000 random networks with the same parameters, and the success rate s is defined as the fraction of networks that learn all patterns within \(T_{\max}\).
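The success rate and its error bar can be computed as below. The text does not state how the 95% confidence intervals were obtained; the Wilson score interval used here is one common choice for a binomial proportion and is an assumption, as is the function name `success_rate`.

```python
import math


def success_rate(learned, z=1.96):
    """Return (s, lower, upper): success rate and Wilson score interval.

    learned: one bool per network of the ensemble, True if the network learned
    all patterns within T_max. z = 1.96 gives an approximately 95% interval.
    """
    n = len(learned)
    s = sum(learned) / n
    denom = 1 + z**2 / n
    centre = (s + z**2 / (2 * n)) / denom
    half = z * math.sqrt(s * (1 - s) / n + z**2 / (4 * n**2)) / denom
    return s, max(0.0, centre - half), min(1.0, centre + half)


# Example: 1500 of 2000 networks learned all patterns.
print(success_rate([True] * 1500 + [False] * 500))
```

Unlike the plain normal approximation, the Wilson interval does not collapse to zero width when s = 0 or s = 1, which matters for the curves that reach those extremes.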

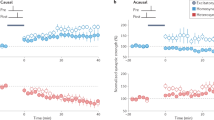

We first investigate the role of the learning length \(r_0\) on the success rate s (see Fig. 3a). For very localized learning signals (\(r_0 < 10^{-1}\)) the success rate is close to zero. This is not surprising, as only the synapses leading to the output neuron are modified by a noticeable amount. For larger \(r_0\) the adaptation penetrates deeper into the network and the success rate increases rapidly, up to s = 1.0 for \(r_0 = 10\) and \(T_{\max} = 100{,}000\). Interestingly, when \(r_0\) exceeds the system size (\(r_0/L = 1\)), so that all synapses in the network are adapted almost uniformly, the learning performance decreases strongly. Overall, we find that the learning performance can be strongly optimized through the spatial extent of the teaching signal.
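A quick evaluation of the attenuation factor \(e^{-r/r_0}\) shows why both extremes hurt. The sketch assumes unit neuron density, so that \(L = \sqrt{N}\) (about 31.6 for N = 1000), and takes r = 1 as a representative short distance from the output neuron; both choices are illustrative assumptions, and `attenuation` is a hypothetical name.

```python
import math


def attenuation(r, r0):
    """Relative size exp(-r / r0) of the plastic adaptation at distance r from the output."""
    return math.exp(-r / r0)


side = math.sqrt(1000)  # L for N = 1000, assuming unit neuron density
for r0 in (0.1, 10.0, 10 * side):
    near, far = attenuation(1.0, r0), attenuation(side, r0)
    print(f"r0 = {r0:6.1f}: factor {near:.1e} at r = 1, {far:.1e} at r = L")
```

For \(r_0 = 0.1\) the factor is already below \(10^{-4}\) one unit away from the output, whereas for \(r_0 = 10L\) it stays above 0.9 across the whole system, so the adaptation no longer distinguishes synapses by their position.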

Ranges of the learning length optimizing the learning performance. The success rate s as a function of the learning length \(r_0/L\). (a) Results for different maximal learning times \(T_{\max}\); longer learning times consistently improve the performance (N = 1000, \(d_0 = 2\), \(t_{\mathrm{refr}} = 1\), first ten patterns of Table 1). (b) Dependence of the performance on the number of patterns the system has to learn (\(T_{\max} = 10000\), N = 1000, \(t_{\mathrm{refr}} = 1\), \(d_0 = 2\)). For \(T_{\max} = 3000\) (in a) and for 12 patterns (in b) we also performed simulations with a fraction \(p_{\mathrm{inh}} = 0.2\) of inhibitory neurons, which improves the overall performance. The error bars represent a 95% confidence interval.

Figure 3b shows the learning performance for different numbers of patterns to be learned. Unsurprisingly, the performance is higher when only the first three patterns of Table 1 have to be learned than when all fifteen patterns have to be learned. Interestingly, the value of \(r_0\) at which the learning performance starts to increase shifts towards larger values the more patterns the network has to learn. This suggests that the more patterns a network has to learn, the deeper the learning signal has to penetrate into the network.

Next we investigate the role of the network size on the performance. We find that the performance increases monotonically with network size (see Fig. 4a). Interestingly, the performance peaks at a value of \(r_0\) that increases proportionally to L, and the decay of s likewise shifts towards larger \(r_0\) for larger system sizes. This result confirms that the learning capabilities decrease when \(r_0\) exceeds the system size L and the adaptation changes all activated synapses in an almost uniform way. In Fig. 4b we vary the characteristic length \(d_0\) of synaptic connections and study its effect on the learning performance. When \(r_0\) exceeds the system length, \(d_0\) strongly affects the learning performance: for longer synapses (larger \(d_0\)) the performance drops much faster as \(r_0\) increases. This is consistent with the abrupt decrease of the performance for plastic adaptation acting over a range larger than the system size L, since for very large \(d_0\) the synaptic network also undergoes fairly uniform plastic adaptation over large distances. The maximum performance appears to depend only weakly on \(d_0\), except for very localized connections.

System size dependence and influence of the synaptic length. (a) The success rate s as a function of \(r_0/L\) for different network sizes N (\(T_{\max} = 10000\), \(d_0 = 2\), \(t_{\mathrm{refr}} = 1\), first ten patterns of Table 1). Larger networks show a better performance, and the peak of the performance moves roughly proportionally to L. (b) The effect of the network structure, analyzed by plotting s for different characteristic synaptic lengths \(d_0\) (N = 1000, \(T_{\max} = 10000\), \(t_{\mathrm{refr}} = 1\), first ten patterns of Table 1). For N = 100 (in a) and for \(d_0 = 2\) (in b) we also performed simulations with a fraction \(p_{\mathrm{inh}} = 0.2\) of inhibitory neurons, which improves the overall performance. The error bars represent a 95% confidence interval.

We also ask whether the spatial profile of the adaptation influences these results. We therefore perform simulations with a plastic adaptation that decreases in space as a Gaussian. The results are very similar (data not shown) to those obtained for an adaptation decaying exponentially, except that the performance peaks are slightly narrower for the Gaussian adaptation.

Further, we tested the influence of the refractory time on the learning performance (see Fig. 5a). When the refractory time is zero, learning is possible, but the performance is worse than for \(t_{\mathrm{refr}} = 1\) and \(t_{\mathrm{refr}} = 2\). For \(t_{\mathrm{refr}} = 10\) the performance decreases almost to zero. This might be related to the fact that for larger refractory times it becomes less likely that a single neuron fires more than once, which prevents the occurrence of activity loops. This result shows that the refractory time is an important ingredient for generating inhibition. However, the activation function (a Heaviside step function) also causes dissipation, which can create inhibition: a firing neuron transmits the same signal regardless of how far its voltage exceeds the threshold, so the excess voltage is lost when the neuron is reset. We can reduce this type of dissipation by using an activation function proportional to the voltage of the firing neuron. Equation 1 then becomes

\[ v_j(t+1) = v_j(t) \pm v_i(t)\,\omega_{ij}\,\eta_i(t). \tag{3} \]
Role of the refractory time in the performance. (a) The success rate s for refractory times ranging from \(t_{\mathrm{refr}} = 0\) to \(t_{\mathrm{refr}} = 10\) (N = 1000, \(T_{\max} = 10000\), \(d_0 = 2\), first ten patterns of Table 1). (b) Performance obtained with the neuronal activation function given in Eq. 3. For \(t_{\mathrm{refr}} = 0\) (in a and b) we also performed simulations with a fraction \(p_{\mathrm{inh}} = 0.2\) of inhibitory neurons, which improves the overall performance. The error bars represent a 95% confidence interval.

To eliminate dissipation completely we would need to set the firing threshold to zero. However, this would make learning impossible, as every input would make all subsequent neurons fire and nothing could stop the output neuron from firing. Figure 5b shows the effect of the linear activation function (Eq. 3) on the learning performance, and the result confirms our hypothesis. For a refractory time equal to zero the learning performance drops to zero. For refractory times larger than zero the overall performance is higher than with the Heaviside activation function, and it does not decrease for larger refractory times. Adding inhibitory neurons makes learning possible for networks with \(t_{\mathrm{refr}} = 0\), albeit with low performance.
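The difference between the two activation functions amounts to a single factor in the transmitted signal, as the sketch below shows. It ignores the sign of the synapse and uses \(v_{\max} = 1\), so the Heaviside rule sends \(\omega_{ij}\eta_i\); the function names are hypothetical.

```python
def transmitted(v_i, omega_ij, eta_i, linear=False):
    """Signal sent from firing neuron i (v_i >= v_max = 1) to neuron j, ignoring the sign.

    Heaviside rule (Eq. 1): omega_ij * eta_i, independent of how far v_i exceeds v_max.
    Linear rule (Eq. 3):    v_i * omega_ij * eta_i, so the excess voltage is passed on.
    """
    return (v_i if linear else 1.0) * omega_ij * eta_i


def dissipated(v_i, omega_ij, eta_i):
    """Part of the signal that the Heaviside rule discards relative to the linear rule."""
    return transmitted(v_i, omega_ij, eta_i, linear=True) - transmitted(v_i, omega_ij, eta_i)


# A neuron pushed to v_i = 1.6 by simultaneous inputs, with omega_ij = 0.5 and eta_i = 1.0:
print(transmitted(1.6, 0.5, 1.0), transmitted(1.6, 0.5, 1.0, linear=True))
```

The linear rule removes the loss of supra-threshold voltage, but voltage that stays below threshold is still never transmitted, which is why dissipation would vanish completely only for a zero threshold.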

In each of Figs 3, 4 and 5 we also repeat selected simulations with a fraction \(p_{\mathrm{inh}} = 0.2\) of inhibitory neurons. Inhibitory neurons noticeably increase the maximum learning performance, and the performance decay for larger \(r_0\) is slightly less pronounced. In Fig. 5b the inhibitory neurons make learning possible when the refractory time is zero, which is not possible without them.

Conclusions

We show that a neural network trained by a distance-dependent plastic adaptation can learn Boolean rules with very good performance. The spatial extent of the learning signal has a large impact on the learning performance. A very localized plastic adaptation, which only modifies the synapses directly connected to the output neuron, results, unsurprisingly, in poor performance. As the adaptation signal penetrates deeper, the performance increases strongly, until the learning length \(r_0\) exceeds the system length L, where the performance decreases. When \({r}_{0}\gg L\) the adaptation signal becomes quite uniform in space, and the lack of variability might limit the learning capabilities. Similar behaviour is observed for increasing characteristic length of synaptic connections. In line with this, current evidence from functional magnetic resonance imaging (fMRI) experiments26, 27 and EEG data28, 29 shows that greater brain signal variability indicates a more sophisticated neural system, able to explore multiple functional states. Signal variability also reflects greater coherence between regions and a natural balance between excitation and inhibition, which gives rise to the inherently variable response in neural functions. Furthermore, the observation that older adults exhibit less variability, reflecting reduced network complexity and integration, suggests that variability can be a proxy for optimal systems.

Interestingly, we show that a network with only excitatory neurons can learn non-linearly separable problems with a success rate of up to 100%. In this case, the refractory time generates inhibition in the neuronal model. Including additional inhibitory neurons increases the performance further.

Regarding the network structure, we find that the synaptic length also has an impact on the performance: networks dominated by local connections perform worse than networks with long-range connections. We conclude that the structure of the network and the locality of the plastic adaptation play an important role in the learning performance. To assess the generality of our results it will be necessary to study more complex forms of learning, such as pattern recognition. Further investigations should also aim to improve the learning performance so that it becomes comparable to that of machine learning algorithms.

References

Schultz, W. & Dickinson, A. Neuronal coding of prediction errors. Annual Review of Neuroscience 23, 473–500 (2000).

Keller, G. B. & Hahnloser, R. H. Neural processing of auditory feedback during vocal practice in a songbird. Nature 457, 187–190 (2009).

Keller, G. B., Bonhoeffer, T. & Hübener, M. Sensorimotor mismatch signals in primary visual cortex of the behaving mouse. Neuron 74, 809–815 (2012).

Rumelhart, D. E., Hinton, G. E. & Williams, R. J. Learning representations by back-propagating errors. Nature 323, 533–536 (1986).

Crick, F. The recent excitement about neural networks. Nature 337, 129–132 (1989).

Kolen, J. F. & Pollack, J. B. Backpropagation without weight transport. In Proceedings of the 1994 IEEE International Conference on Neural Networks (IEEE World Congress on Computational Intelligence), vol. 3, 1375–1380 (IEEE, 1994).

Stork, D. G. Is backpropagation biologically plausible? In International Joint Conference on Neural Networks, vol. 2, 241–246 (IEEE, Washington, DC, 1989).

O’Reilly, R. C. Biologically plausible error-driven learning using local activation differences: The generalized recirculation algorithm. Neural Computation 8, 895–938 (1996).

Lillicrap, T. P., Cownden, D., Tweed, D. B. & Akerman, C. J. Random synaptic feedback weights support error backpropagation for deep learning. Nature Communications 7, 13276 (2016).

Maass, W., Natschläger, T. & Markram, H. Real-time computing without stable states: A new framework for neural computation based on perturbations. Neural Computation 14, 2531–2560 (2002).

Jaeger, H. The “echo state” approach to analysing and training recurrent neural networks – with an erratum note. Bonn, Germany: German National Research Center for Information Technology GMD Technical Report 148, 13 (2001).

Jalalvand, A., Van Wallendael, G. & Van De Walle, R. Real-time reservoir computing network-based systems for detection tasks on visual contents. In 2015 7th International Conference on Computational Intelligence, Communication Systems and Networks.

Fuxe, K. et al. The discovery of central monoamine neurons gave volume transmission to the wired brain. Progress in Neurobiology 90, 82–100 (2010).

Liu, F. et al. Direct protein–protein coupling enables cross-talk between dopamine D5 and γ-aminobutyric acid A receptors. Nature 403, 274–280 (2000).

Reynolds, J. N. & Wickens, J. R. Dopamine-dependent plasticity of corticostriatal synapses. Neural Networks 15, 507–521 (2002).

Izhikevich, E. M. Solving the distal reward problem through linkage of STDP and dopamine signaling. Cerebral Cortex 17, 2443–2452 (2007).

Florian, R. V. Reinforcement learning through modulation of spike-timing-dependent synaptic plasticity. Neural Computation 19, 1468–1502 (2007).

Aswolinskiy, W. & Pipa, G. RM-SORN: a reward-modulated self-organizing recurrent neural network. Frontiers in Computational Neuroscience 9, 36 (2015).

Seung, H. S. Learning in spiking neural networks by reinforcement of stochastic synaptic transmission. Neuron 40, 1063–1073 (2003).

Bak, P. & Chialvo, D. R. Adaptive learning by extremal dynamics and negative feedback. Physical Review E 63, 031912 (2001).

Chialvo, D. R. & Bak, P. Learning from mistakes. Neuroscience 90, 1137–1148 (1999).

Bosman, R., Van Leeuwen, W. & Wemmenhove, B. Combining Hebbian and reinforcement learning in a minibrain model. Neural Networks 17, 29–36 (2004).

de Arcangelis, L. & Herrmann, H. J. Learning as a phenomenon occurring in a critical state. Proceedings of the National Academy of Sciences 107, 3977–3981 (2010).

Capano, V., Herrmann, H. J. & de Arcangelis, L. Optimal percentage of inhibitory synapses in multi-task learning. Scientific Reports 5, 9895 (2015).

Roerig, B. & Chen, B. Relationships of local inhibitory and excitatory circuits to orientation preference maps in ferret visual cortex. Cerebral Cortex 12, 187–198 (2002).

Garrett, D. D., Kovacevic, N., McIntosh, A. R. & Grady, C. L. Blood oxygen level-dependent signal variability is more than just noise. Journal of Neuroscience 30, 4914–4921 (2010).

Garrett, D. D., Kovacevic, N., McIntosh, A. R. & Grady, C. L. The importance of being variable. Journal of Neuroscience 31, 4496–4503 (2011).

Ghosh, A., Rho, Y., McIntosh, A. R., Kötter, R. & Jirsa, V. K. Noise during rest enables the exploration of the brain’s dynamic repertoire. PLoS Computational Biology 4, e1000196 (2008).

McIntosh, A. R., Kovacevic, N. & Itier, R. J. Increased brain signal variability accompanies lower behavioral variability in development. PLoS Computational Biology 4, e1000106 (2008).

Acknowledgements

The authors thank L.M. van Kessenich for many interesting discussions. We acknowledge financial support from the European Research Council (ERC) Advanced Grant FP7-319968 FlowCCS.

Author information


Contributions

D.L.B. conceived the research and conducted the numerical simulations. L.d.A. and H.J.H. gave important advice. All authors contributed to the writing of the manuscript.


Ethics declarations

Competing Interests

The authors declare that they have no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Berger, D.L., de Arcangelis, L. & Herrmann, H.J. Spatial features of synaptic adaptation affecting learning performance. Sci Rep 7, 11016 (2017). https://doi.org/10.1038/s41598-017-11424-5


DOI: https://doi.org/10.1038/s41598-017-11424-5
