Abstract

Understanding the emergence of cooperation has long been a challenge across disciplines. Even if network reciprocity reflected the importance of population structure in promoting cooperation, it remains an open question how population structures can be optimized, thereby enhancing cooperation. In this paper, we attempt to apply the evolutionary algorithm (EA) to solve this highly complex problem. However, as it is hard to evaluate the fitness (cooperation level) of population structures, simply employing the canonical evolutionary algorithm (EA) may fail in optimization. Thus, we propose a new EA variant named mlEA-CPD-SFN to promote the cooperation level of scale-free networks (SFNs) in the Prisoner’s Dilemma Game (PDG). Meanwhile, to verify the preceding conclusions may not be applied to this problem, we also provide the optimization results of the comparative experiment (EAcluster), which optimizes the clustering coefficient of structures. Even if preceding research concluded that highly clustered scale-free networks enhance cooperation, we find EAcluster does not perform desirably, while mlEA-CPD-SFN performs efficiently in different optimization environments. We hope that mlEA-CPD-SFN may help promote the structure of species in nature and that more general properties that enhance cooperation can be learned from the output structures.

Similar content being viewed by others

Introduction

The Prisoner’s Dilemma Game (PDG) is a popular abstract mathematical method and has been employed in biology to explain the emergence and persistence of cooperation behavior among selfish individuals1,2,3,4,5,6,7,8. After all, survival of the fittest is a widely accepted natural selection rule, and individuals employing the selfish strategy might be expected to be more likely to persist. After carefully studying PDG, researchers found that organisms may still form a cooperative community even if they all act entirely for their own interest. Even so, researchers still found it hard to explain large-scale cooperation in reality, as defection usually dominates in their simulations. To explain this puzzle, researchers have long been exploiting the deeper mechanisms.

In the past decades, network reciprocity, proposed by Nowak et al., has had wide influence in this avenue of research. Individuals are constrained by spatial structure to play only with their immediate neighbors8. Nowak et al. concluded that topology constraints influence the evolution of cooperation (confirmed years later). After that, many extended studies have contributed to network reciprocity. In the early stages, researchers focused on the single layer networks: They found that population structure plays a determinate role in the evolution of cooperation9 and cooperators in PDG are likely to form clusters to defend against defectors10. They revealed the potential positive relationship between cooperation and some network properties, such as heterogeneity7, 11 and clustering coefficients12. And they also focused on how error and attack on the poulation structures may influence the evolution of cooperation13. Recently, researchers have analyzed the evolutionary game in interdependent networks, as populations in reality are not isolated and interaction exists between different layers14,15,16,17. These studies have reflected that interdependence may induce some new mechanisms that enhance cooperation and fixed the cooperation behavior on the system. And György et al. in ref. 18 reviewed how the population structure can modify long-term behavioral patterns in evolutionary games.

In addition to studying how network reciprocity may influence the evolution of cooperation, some researchers have focused on investigating the potential behavior whereby players may adjust their interaction with others based on the gaming results. This is a natural phenomenon since population structure in reality may dynamically change during the game process. A representative method in this subject area is the coevolutionary rule, which was designed and proposed by Zimmermann et al. in ref. 19. Even if a large number of studies have contributed to this subject, most works in this area can be divided into those that employ strategy independent rules for connection adaptation20,21,22 and those that take strategies or their performance as factors to influence the population reorganization23, 24. Perc et al. have also provided a review of this research in ref. 25.

While network reciprocity seems to have preliminarily explained large-scale cooperation in reality, some researchers have practically analyzed the real human game. Their experiment results revealed that humans do not base their strategy decisions on other’s payoffs while playing PDG. In addition, Gracialázaro et al. in their experiments have found the existence of a population structure does not seem to have an influence on the global outcome of cooperation26. Following these experiments, researchers have also found that cooperation obviously depends on the strategy updating rule. Cimini et al. in ref. 27 have analyzed cooperation frequency in a simulation where different strategy updating rules are introduced. They found cooperation frequency assessed under the imitation-based strategy updating rule depends heavily on the population structure, but network reciprocity seems to have little effect on the game dynamics when individuals do not take neighbors’ payoffs into consideration (non-imitative rule). These experiments and extended works seem to have put an end to network reciprocity. However, it remains difficult to conclude that population structure has little effect on promoting cooperation, as different strategy updating rules place different levels of emphasis on different game processes in nature. Evolutionary dynamics based on payoff comparisons are appropriate to model biological evolution, while they may not apply to social or economic contexts26. Moreover, Carlos et al. has emphasized in their research that their conclusion applies only to human cooperation, and network reciprocity may still be relevant to cooperation in other contexts.

Therefore, even if the relevance between network reciprocity and cooperation in social or economic issues remains controversial, population structure is still essential to cooperation in biological evolution. Moreover, just as group selection indicates that cooperative groups may be more likely to survive in nature than uncooperative ones, cooperation is essential to the survival and evolution of species in nature. A high cooperation level can help species to maintain high competitiveness in nature, which may partially explain why helping family members finally helps the individual itself (Kin selection). Therefore, methods to help optimize the population structure should be important. Even if quite a lot of studies have contributed to the promotion of cooperation in the population, it remains difficult problem28. One representative breakthrough that has been made on this subject is the introduction of coevolutionary rules, which has provided a way to help understand the self-reorganizing ability of the population. Even so, little has been done to investigate how a population structure can be constructed or adjusted through man-intervention. And cooperation promotion methods that do not rely on the self-adapt ability of a population may shed light on this problem.

In this paper, we design a variant of evolutionary algorithm to optimize the population structure and thereby enhancing cooperation. Even if much of the preceding research has found a correlation of cooperation and some network properties, naively applying these conclusions to our problems may be quite problematic since these conclusions are mostly obtained based on specific network models and lack generality. Therefore, we employ the cooperation level of population structures as the objective value of our algorithm.

To our knowledge, no appropriate simple approach has been proposed to exactly determine the cooperation level of different population structures. Moreover, the evaluated cooperation level of structures actually fluctuates within a range. Therefore, the evaluation of structures within EA is fuzzy and may interfere with the selection of EA over the elite solutions. In the field of evolutionary computation, researchers also refer to this interference that leads to potential failure in optimization as the “EA cheat”. Apparently, simply employing the canonical evolutionary algorithm (EA) may fail in optimization, even if EA have been applied to many engineering problems29,30,31,32,33,34,35,36. Even so, there are two widely accepted methods to reduce the evaluation error of different structures’ cooperation level: (1) Prolong the simulation time of game evolution. (2) Average over independent evaluations. However, the corresponding computation cost cannot be ignored.

To successfully apply EA to the optimization of population structure, we propose a new EA variant named mlEA-CPD-SFN to promote cooperation in the Prisoner’s Dilemma Game (PDG). Within mlEA-CPD-SFN, a modified local search operator named multilevel evolutionary operator is designed for the purpose of revising the wrong-filtering solution in EA population and exploiting solutions with potential higher cooperation levels. Therefore, we designed a memory structure (restoration list) within the operator to record some reliable solutions for the revision and some rules for the operator to control the search bias.

To test the performance of mlEA-CPD-SFN, different types of scale-free structures have been employed, and optimization has been constrained not to change the initial degree distribution. Meanwhile, to verify that the preceding conclusions may not be applied to this problem, we also provide the optimization results of the comparative experiment (EAcluster), which optimizes the clustering coefficient of structures. Even if the preceding research concluded that highly clustered scale-free networks enhance cooperation, we still find EAcluster cannot perform as satisfactorily as mlEA-CPD-SFN does. Moreover, we also find that the mlEA-CPD-SFN can perform well and simultaneously maintain a low computation cost (details in III). Finally, to verify the adaptability of mlEA-CPD-SFN subject to different optimization environments, different strategy update rules are employed in our experiments. The simulation results verify the adaptability of mlEA-CPD-SFN.

Results

Prisoner’s Dilemma Game and Evaluation of Population Structure

Understanding the emergence of cooperation in the context of Darwinian evolution has been a challenge in recent decades. Even if relevant works in this field are almost entirely theoretical, it is quite likely to have broad-reaching implications for the future.

PDG is one of the most commonly used tools to help explain how cooperation endures in nature. In PDG, the defectors receive the highest reward T (temptation to defect) when defecting to a cooperator who receives the lowest payoff S (sucker value). If both of the players choose the same strategy, they receive a payoff R as a reward for cooperation or P as punishment for defection. Moreover, T, P, S, R follow the rule T > R > P > S. As a result, in a single round of PDG, defection is the best strategy no matter what the opponents’ strategies are, even though all players would be better off if they all cooperate.

Population structures provide the basic organization of the game. In ref. 10, players interact only within a limited local neighborhood. When a site x is updated, the current occupant and all neighbors around compete to recolonize this site with their offspring. Those offspring keep the same strategy as their parents. The probability of neighbor y succeeding in reproduction is:

where d > = max{d x , d y }, D = T-S, d i marks the degree of node i and P i marks the payoff of i. Therefore, the probability that the focal individual succeeds in reproduction is \({W}_{{s}_{x}\leftarrow {s}_{x}}=\prod _{l}(1-{W}_{{s}_{x}\leftarrow {s}_{y}})\). With probability \(1-{W}_{{s}_{x}\leftarrow {s}_{x}}\), an offspring of one neighbor takes over site x. The relative probability for the success of neighbor y is W sx←sy /∑ l W sx←sy , where l marks neighbors of x. In this paper, the synchronous update method is employed.

Generally, individuals in a population are initially designated as cooperators or defectors with equal probability. And a corresponding cooperation level is obtained through averaging over generations after the equilibrium of a population is reached. Thus, the evaluated cooperation level of structures may naturally fluctuate among independent evaluations.

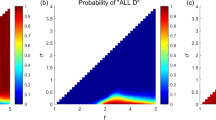

To illustrate this phenomenon, the distribution of evaluated cooperation level is given in Fig. 1. Each sub-graph in Fig. 1 contains 5000 independent evaluated results of a BA network. Meanwhile, two different evaluation modes have been designed to obtain these simulation results on the same group of population structures: Mode-A and Mode-B. In Mode-A, each equilibrium cooperation level is obtained by averaging 0.1 N generations after a transient period of N generations. In Mode-B, each equilibrium cooperation level is obtained by averaging N generations after a transient period of 10 N generations.

Evaluation distribution of tested population structure (BA network). In this experiment, population structures with different sizes have been tested. It is obvious that the distribution of cooperation frequency obtained under two different evaluation modes is similar (horizontal contrast) and the distribution of cooperation frequency gets broader when size of population gets smaller (vertical contrast).

Apparently, the independent evaluation results vary within a certain range, which may get wider with the decrease of the tested structure scale. Moreover, dramatically prolonging the simulation time cannot decrease the fluctuation range of evaluation in an obvious way. Meanwhile, the distributions of evaluated cooperation levels obtained under Mode-A and Mode-B are similar, which indicates that Mode-A and Mode-B both provide a necessary transient period for the equilibrium of population.

Therefore, the fluctuation of evaluation may interfere with the selection of EA over the elite solutions. As overlapping may exist between the evaluation distribution of different structures, there naturally exists a certain probability that a worse structure is mistaken by EA as the superior. This phenomenon is termed the “EA cheat” and is a knock down to the algorithm.

To avoid unnecessary computation cost, we employ Mode-A to evaluate the cooperation level of structures in our paper. Moreover, we introduce the multi-sampling method to average over independent evaluations, thereby approaching the ideal mean value of the evaluation distribution. To analyze the effect of this approach, the new evaluation distributions obtained under the multi-sampling method are provided in Fig. 2. On the whole, the multi-sampling method can help reduce the evaluation error of structures. However, different sampling numbers may be necessary toward different evaluation distributions of structures, which explains why the fluctuation range in Fig. 2(c) is wider than that in Fig. 2(b). Therefore, it is difficult to determine an appropriate sampling number, not to mention the fact that this method only decreases the fluctuation range without effectively dealing with the EA cheat (e.g., if the ideal mean value of two evaluation distributions is close enough).

The evaluation distribution obtained under the multi-sampling method. Different sampling numbers have been tested. (a) 5 samplings, (b) 10 samplings, and (c) 20 samplings. On the whole, the multi-sampling method can help reduce the evaluation error of structures. However, different sampling numbers may be necessary toward different evaluation distributions of different structures, which explains why the fluctuation range in Fig. 2(c) is wider than in Fig. 2(b).

Multilevel Evolutionary Operator and mlEA-CPD-SFN

Previous simulation results have revealed that the evaluation of structures may fluctuate and interfere with the selection of EA over the elite solutions. Multi-sampling may help reduce evaluation error. But determining an appropriate sampling number will be difficult. Moreover, the corresponding increase in computation cost is unbearable.

Therefore, the reserved (potential) structures should be repeatedly sampled more to ensure reliability of their evaluation results. However, the abandoned (mediocre) structures should be repeat sampled less to decrease computational cost. With this in mind, we propose a local search operator variant named multilevel evolutionary operator (see Methods) to achieve this. And for a timely revision of the wrong-filtering solution caused by the EA cheat, some reliable structures are saved as substitutes and a memory structure (see Methods) is designed. Given these, we further propose a new EA variant named mlEA-CPD-SFN (see Methods) to optimize the structure of scale-free networks for the promotion of cooperation in Prisoner’s Dilemma game.

Optimizing Clustering Coefficient through EA

Assenza et al. have revealed the enhancement of cooperation in highly clustered scale-free networks12. Therefore, optimizing the clustering coefficient may help promote the cooperation level of scale-free structures. The objective value in EAcluster is the clustering coefficient of structures, which can be obtained as follows: Suppose neighbors of nodes i construct a graph represented by Γ i and its edge number is |E(Γ i )|. Then, the clustering coefficient of node i would be Cluster i = |E(Γ i )|/(d i × (d i − 1)/2). Therefore, the clustering coefficient of a structure should be Cluster = (Σ i Cluster i )/N.

Two types of scale-free networks are employed to test the performance of EAcluster: Barabási Albert networks (BANs)37 and Holme and Kim networks (HKNs: p = 1). BANs is a type of commonly used scale-free network, while the HKNs is one of its variants and may have higher clustering coefficients in pairs with a higher p (p ∈ [0,1]) for construction12. For fair comparison with mlEA-CPD-SFN, canonical local search operator is designed within EAcluster, and the maximum generation of EAcluster is set to 120 where evolution of EAcluster has almost converged. The simulation results are given in Fig. 3.

Simulation results of EAcluster in optimizing population structure for the promotion of cooperation in PDG. Each group contains 10 independent structures whose evaluated cooperation level lies within a gray bar (black points mark the mean value). On the whole, naively optimizing the clustering coefficient of a population structure may promote cooperation in PDG, but it is not efficient enough. Moreover, sometimes this method may fail and produce a worse structure, as shown in the optimization of 500-node BANs (the mean value gets smaller).

Apparently, optimizing the clustering coefficient of scale-free structures can help promote cooperation in PDG. However, the efficiency of EAcluster is quite limited. Moreover, as EAcluster fails in the optimization of 500-node BANs, we can conclude that naively applying preceding research conclusions to the practical optimization of structures may not perform desirably and sometimes maybe problematic.

Efficiency of mlEA-CPD-SFN in Optimizing Population Structure

In this part, the same structures are employed to test the performance of mlEA-CPD-SFN (see Methods). Two types of mlEA-CPD-SFN and a hybrid mlEA-CPD-SFN are considered:

-

Only one level in each pyramid: mlEA-CPD-SFN1 (shortened to lv1).

-

Five levels in each pyramid: mlEA-CPD-SFN5 (shortened to lv5).

-

Only one level in each pyramid but multi-sampling is employed for initial evaluation: mlEA-CPD-SFN1-M (shortened to lv1-M).

To protect the best record in the restoration list, α in mlEA-CPD-SFN1 is set to 0, while α = 0.5 in mlEA-CPD-SFN5. Within mlEA-CPD-SFN1-M, initial evaluation of structures is obtained by averaging over 5 independent evaluations. The optimization results of these algorithms are given in Fig. 4.

Simulation results of lv1, lv5 and lv1-M in optimizing population structure for the promotion of cooperation in PDG. Each group contains 10 independent structures whose evaluated cooperation level lies within a gray bar (black points mark the mean value). On the whole, these algorithms all efficiently promote cooperation in PDG, and the performance of mlEA-CPD-SFN5 surpasses those of the others.

The initial evaluation of structures in mlEA-CPD-SFN1 and mlEA-CPD-SFN5 contains only one sampling. Therefore, compared with mlEA-CPD-SFN1-M, these two mlEA-CPD-SFNs in theory are more likely to overestimate or underestimate the cooperation level of structures. As the performance of mlEA-CPD-SFN1-M surpasses mlEA-CPD-SFN1, we can conclude that accurate evaluation of structures obviously influences the optimization results. This conclusion also explains why the performance of mlEA-CPD-SFN1 worsens when optimizing smaller structures. Even if the complexity of a problem synchronously descends with the structure scale, the corresponding evaluation error gets more obvious (refer to Fig. 1). Even so, in our experiments, mlEA-CPD-SFN5 performs better than mlEA-CPD-SFN1-M in general. This phenomenon reveals that the level of mlEA-CPD-SFN is positive to its performance. The restoration list provides records to maintain the evolving of the EA population subject to the attack of “EA cheat”. With the increase in level, more history records are available, and these records can be saved longer.

The evaluated cooperation level of structures provided in our paper is obtained by averaging over 5000 independent evaluations. Therefore, these simulation results should be reliable. However, further investigation regarding whether prolonging simulation time will influence our results should be undertaken, as those results are obtained under Mode-A with the purpose of saving computation cost. Thus, we prolong the simulation time and track the trend in cooperation during the game process (Fig. 5). Each data point within the simulation results is obtained through averaging over 500 independent runs (50 runs/structure). It is apparent that prolonging simulation time has little influence upon the evaluation results as cooperation frequency has almost converged around 1.1 N generation (Mode-A).Therefore, the simulation results we obtain should be credible. In addition, we can conclude that mlEA-CPD-SFN performs effectively in optimizing population structure and promoting cooperation in PDG (mlEA-CPD-SFN5 performs best, and mlEA-CPD-SFN1-M follows).

Mean cooperation frequency of structures during the game process. (a) 1000-node BANs. (b) 500-node BANs. (c) 1000-node HKNs. (d) 500-node HKNs. Apparently, prolonging simulation time has little influence upon the evaluation results, as cooperation frequency has already converged around 1.1 N generation (Mode-A). Moreover, mlEA-CPD-SFN5 performs best in optimizing population structure and mlEA-CPD-SFN1-M follows. The optimization effect of these three algorithms is obvious.

We further analyze the optimization characteristic of mlEA-CPD-SFN and explore its advantages in optimization. Therefore, we provide the running time and evaluation number of the above three algorithms in Table 1.

Apparently, the computation cost of mlEA-CPD-SFN1-M surpasses those of the others (almost triple). Thus, the multi-sampling method is paired with a dramatic increase in computation cost. However, mlEA-CPD-SFN n performs well and simultaneously maintains a low computation cost. This is due to the basic principle of mlEA-CPD-SFN: The reserved (potential) structures should be repeatedly sampled more to ensure reliability of their evaluation results. However, the abandoned (mediocre) structures should be repeat sampled less to decrease computational cost. In addition, the structure of the restoration list is irrelevant to the evaluation of structures.

Adaptability of mlEA-CPD-SFN to Different Update Rules

Previous research has concluded that update rules influence the cooperation level of structures27. Therefore, investigation of mlEA-CPD-SFN’s adaptability to different strategy update rules is necessary. Two common strategy update rules are additionally employed in our experiments:

-

Fermi rule: A neighbor (Supposed as y) of x is chosen randomly. The imitation probability for y to learn from x is \({W}_{{s}_{x}\leftarrow {s}_{y}}=1/(1+\exp (({P}_{y}-{P}_{x})/k))\), where P i is the payoff of individual i and k denotes the amplitude of noise and is set to 0.1.

-

Unconditional imitation rule: Each individual x imitates the neighbor y with the largest payoff, provided P y > P x .

The configuration of algorithms remains unchanged. As cooperation almost dominates upon HKNs(p = 1) under the Fermi rule when the cost to benefit ratio r = 0.95, we partially employ HKNs(p = 0.5) to test the performance of algorithms. Moreover, performance of mlEA-CPD-SFN10 (10 levels in each pyramid: lv10) is also provided in Fig. 6. Notably, the simulation time to evaluate the initial and optimized structures in this part is set to 22000 generations, and the equilibrium cooperation level of structures is obtained by averaging the last 2000 generations (like the results in Fig. 5). Therefore, we do not further provide the corresponding mean cooperation frequency of structures during the game process.

Simulation results of lv1, lv1-M, lv5 and lv10 in optimizing population structure for the promotion of cooperation in PDG. Each group contains 10 independent structures whose evaluated cooperation level lies within a gray bar (black points mark the mean value). The results in the upper (bottom) row are obtained under the Fermi rule (unconditional imitation rule). Overall, these algorithms all effectively promote cooperation in PDG. mlEA-CPD-SFN10 performs best under the Fermi rule, while mlEA-CPD-SFN1-M performs best under the unconditional imitation rule.

Overall, these algorithms all successfully promote cooperation in PDG (Fig. 6). Therefore, we can conclude that mlEA-CPD-SFN is adaptable to different strategy updating rules. Moreover, the level of mlEA-CPD-SFN apparently influence its efficiency in performance. Finally, we can see mlEA-CPD-SFN1 apparently fails in some optimization, while mlEA-CPD-SFN1-M performs well. This phenomenon verifies that the evaluation error may cheat EA and thereby cause the failure of optimization.

Note that unlike other strategy updating rules, the unconditional imitation rule leads to a deterministic dynamic. Therefore, the initialized distribution of strategy plays an important role in the final cooperation frequency, and thereby, the evaluation of structures should be more unstable. This may explain why mlEA-CPD-SFN1-M performs best under the unconditional imitation rule even if these algorithms all perform effectively.

Discussion

Cooperation is essential in many aspects of life. In biology, the prisoner’s dilemma game (PDG) has long been used to help explain how cooperation endures in nature. As cooperation is highly relevant to the competitiveness of groups in nature, understanding cooperation in PDG and proposing methods of promotion should have significant implications. To our knowledge, although many well-known mechanisms have provided ways to understand the self-reorganizing ability of a population toward an optimal situation for cooperation, little has been done to investigate how to construct or adjust the population structure with man-intervention, even if the preceding research recognizes its importance. Therefore, cooperation optimization methods that do not rely on the self-regulation mechanism of a population may shed light on this problem.

The contributions of this paper are summarized as follows: (1) We propose a new EA variant named mlEA-CPD-SFN to optimizes the structure of scale-free networks for the promotion of cooperation in the Prisoner’s Dilemma game without changing the initial structures’ degree distribution. (2) We reveal that evaluation error of population structures may cause the “EA cheat” and canonical evolutionary algorithm (EA) may fail in optimization. (3) Different types of scale-free structures and updating rules have been applied to verify the performance of mlEA-CPD-SFN. (4) We provide the optimization results of the comparative experiment (EAcluster) and reveal that naively applying preceding research conclusions to the practical optimization of structures may not perform desirably and sometimes maybe problematic. (5) The experimental results show that mlEA-CPD-SFN can perform well in various situations and simultaneously maintain a low computation cost.

We hope mlEA-CPD-SFN may help promote the structure of species in nature and that more general properties that enhance cooperation can be learned from the output structures.

Methods

Restoration List

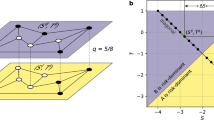

Even if the “EA cheat” may lead to some wrong-filtering solution in the EA population, timely revision still may save EA from failure in optimization. Therefore, a hierarchical memory structure (given in Fig. 7) is designed to backup some reliable solutions in case of need. There are 2Ω pyramid-like sub-lists in the restoration list, and each contains some vacant spaces for records. Notably, records in the upper pyramid are prior to those in the bottom.

L n,m marks the mth record of the nth pyramid, and L n marks the nth pyramid. L n is responsible for nth solution in the EA population. The reason to separate the memory structure into numbers of parts is: (1) Maintain the diversity of the EA population and to avoid premature convergence of algorithm. (2) Maintain the parallelism of the EA. (3) Enable communication among pyramids with the help of computing characteristic of EAs.

Basic Strategies for Multilevel Evolutionary Operator

The basic rules for multilevel evolutionary operator are: (1) Some supplementary rules for the restoration list. (2) Rules to compare structures with different sampling numbers. Note that sum marks the sum of evaluation results and num marks the number of evaluations (sampling number); e.g.: if a structure has been evaluated twice, num = 2 and sum is the total of the evaluation results. Therefore I i = {G i , sum Gi , num Gi } marks the ith solution in the EA population. G = (V, E) is used to represent a population structure (graph), where V = {v 1, v 2, …, v N } is the set of nodes and E = {e ij | i, j ∈ V and i ≠ j} is the set of edges in the structure.

(1) Four rules are designed for the restoration list: insertion rule, mutation rule, sorting rule, and information update rule.

-

The insertion rule: Suppose we attempt to backup the ith solution to the restoration list. If there are vacant spaces in L i , this solution is inserted directly at the bottom of L i . Otherwise, this solution should clear out some old records with smaller avg = sum/num and num first. If no vacant space is ready, this solution will not be backed up.

-

The mutation rule: Suppose ith solution has a larger avg than L i,m . Even if the insertion rule is unsatisfied, it may still take the place of L i,m with probability α max-m (max is the number of the vacant space in L i ).

-

The sort rule: Solutions in pyramids are prioritized in terms of their avg, and sort_list(i) marks the sorting operation on the ith pyramid.

-

The information update rule: The backup should be updated synchronously if the sum or num of its ontology is updated and record_synchronize(L i,m , sum, num) is used to denote the update operation of L i,m .

(2) Two comparison strategies are designed to compare structures with different sampling numbers. Strategy 1 is used to compare solutions in the EA population and strategy 2 is used to compare the solutions in the EA population and restoration list.

Strategy 1 (I i and I j ): if avg Gi > avg Gj , I i is better than I j .

Strategy 2 (I i and I j (record)): We suppose G i ’ is a variant from G i . (i) Only when avg Gi < avg Gi’ < avg Gj , I j is considered more optimal than I i . (ii) Only when avg Gi’ > avg Gi > avg Gj , I i is considered more optimal than I j . Details of how G i ’ is produced are given below.

Multilevel Evolution Operator: A Variant from the Local Search Operator

Local search operator is a widely employed method to improve the exploitation of EA through continuous fine-tuning of solutions. In our paper, we employed an edge switching process (in Fig. 8) to complete the fine-tune of the current solution G i and obtain G i ’: (a) A node u (d u ≥ 2) with its two neighbors i and j (d i , d j ≥ 2) are selected. (b) Edges e jk and e im (u ≠ i ≠ j ≠ k ≠ m) are selected. (c) Remove e jk , e im and add e ji , e km (e ji , e km ∉ G i ). edge_switch(G i , v u , v j , v i , v k , v m ) marks this edge switching operation (step c), and node_select(v u , v j ) marks the node selecting process (step a-b).

Canonical local search operator only compares and selects between the initial and adjusted solutions in terms of their evaluation results. However, the records in the restoration list are also considered by the multilevel evolutionary operator (Suppose I i , I i ’ mark the initial solution and adjusted solution, while L i,0 is the top level record in the ith pyramid):

-

If avg Gi > avg Gi’: (a) I i ’ is abandoned, and I i is reserved. (b) Evaluate the structure of I i once more, and update sum and num. (c) Synchronize information if its backup exists.

-

If avg Gi’ > avg Gi > avg Li : (a) Backup I i . (b) I i is replaced by I i ’. (c) Sort records in L i .

-

If avg Li > avg Gi’ > avg Gi : Replace I i with L i,0 and restoration(i) denotes this operation.

-

For other situations, the multilevel evolution operator replaces I i with I i ’.

Algorithm 1 Provide details of the multi-level evolutionary operator and β marks the probability scale.

Algorithm 1: Multilevel Evolutionary Operator |

Input: I k : kth solution in EA population, avg Gk : Evaluation of G k ; β: probability scale. |

Output: Ĩ k ’: modified solution from I k . avg Ĩk’: Evaluation of Ĩ k ’; |

Step 1: Initialization, see algorithm 2 for more information. |

Step 2: Local search based on the hill-climbing, iterates over every edge of nodes. |

(a) Edge switching (see algorithm 3 for more information). |

(b) If the adjusted solution is unreasonable, conduct algorithm 4. |

(c) If the adjusted solution is accepted, operates according to the specific situations. See algorithm 5 for more information. |

(d) If the loop is over go to step 3, else go to the next round. |

Step 3: Output current I k and avg GK as Ĩ k ’ and avg Ĩk’; |

Algorithm 2: Initialization |

1: Flag List ←false;//Flag List marks whether the current structure has already been backed up; |

2: sum Gk ← avg Gk , |

3: num Gk ←1; |

Algorithm 3: Edge switching adjustment |

1: if(U(0,1) < β×d i /∑ i d i ) then |

2: [Is_Nodes_Found, v i , v a , v b , v c , v d ]←node_select(v i , v a ); |

3: if(Is_Nodes_Found = false) then |

4: continue; |

5: else |

6: G k ‘ ← edge_switch(G k , v i , v a , v b , v c , v d ); |

7: else |

8: continue; |

9: end if ; |

10: avg Gk ‘ ← C(G k ‘);//C(G k ‘) evaluate cooperation level of G k ‘ once; |

Algorithm 4: Operation when edge switching adjustment is rejected |

1: if(G k ‘ is not connected or avg Gk‘ < avg Gk ) then |

2: num Gk ←num Gk + 1; |

3: sum Gk ←sum Gk + C(G k ); |

4: avg Gk ←sum Gk /num Gk ; |

5: if(Flag List ) then |

6: record_synchronize (i, sum, num) |

7: end if; |

8: end if; |

Algorithm 5: Operation when edge switching adjustment is accepted |

//avg Gk’ > avg Gk : adjustment accepted; |

1: if(avg Gk > avg Li ) then//avg Gi’ > avg Gi > avg Li ; |

2: Attempt to back-up G k in L k ; |

3: sort_list(k); |

4: avg Gk ← avg Gk’ ; |

5: num Gk ←1; |

6: sum Gk ← avg Gk’ ; |

7: Flag List ←false; |

8: G k ←G k ‘; |

9: elseif(avg Li > avg Gk’ ) then //avg Li > avg Gi’ > avg Gi |

10: restoration(k); |

11: is_list_taken←true; |

12: else |

13: avg Gk ← avg Gk’ ; |

14: num Gk ←1; |

15: sum Gk ← avg Gk’ ; |

16: Flag List ←false; |

17: G k ←G k ‘; |

18: end if; |

Implementation of mlEA-CPD-SFN

Given a targeted population structure for optimization, we first generate an EA population {G 1, G 2, …, G Ω} through a simple edge swapping operation (Fig. 9). Crossover operators of mlEA-CPD-SFN is similar to the crossover operation in33 Algorithm 6 provides the details of mlEA-CPD-SFN.

Algorithm 6: mlEA-C PD -SFN |

Input: G 0: Initial scale-free structure. GS: Population size. P c : Crossover rate. max: Levels in pyramids. gen_max: Iteration number. β: probability scale. |

Output: G*: The optimized population structure. |

Step 1: Initialize population through simple_edge_swapping(G0) and evaluate their cooperation level; |

Step 2: EA population reproduce (Crossover operator). Select parents through roulette selection. |

Step 3: Mutation (simple_edge_swapping) to the offspring and evaluate their cooperation level. |

Step 4: Multilevel evolutionary operator performs upon both the parent and the offspring. |

Step 5: If the iteration generation satisfies, output the best solution in EA population. Or else, select the best GS solutions in the EA population for the next generation and go to step 2. |

Some parameters in experiments are fixed as: P = 0, T = 1 + r, S = 0, R = 1, β = 20, r = 0.95 (details of cost to benefit ratio r in ref. 10), GS = 6 and gen_max = 30. Notably, the cooperation level of structures provided in Results is obtained by averaging over 5000 independent evaluations.

References

Chiong, R. & Kirley, M. Effects of iterated interactions in multiplayer spatial evolutionary games. IEEE Trans. on Evolutionary Computation 16, 537–555 (2012).

Li, J. & Kendall, G. The effect of memory size on the evolutionary stability of strategies in iterated prisoner’s dilemma. IEEE Trans. on Evolutionary Computation 18, 819–826 (2014).

Wedekind, C. & Milinski, M. Cooperation through image scoring in humans. Science 288, 850–852 (2000).

Fehr, E. & Fischbacher, U. The nature of human altruism. Nature 425, 785–791 (2003).

Nowak, M. A. & Sigmund, K. Evolution of indirect reciprocity. Nature 437, 1291–1298 (2005).

Nowak, M. A. Five rules for the evolution of cooperation. Science 314, 1560–1563 (2006).

Chen, X., Fu, F. & Wang, L. Prisoner’s dilemma on community networks. Physica A: Statistical Mechanics and Its Applications 378, 512–518 (2007).

Nowak, M. A. & May, R. M. Evolutionary games and spatial chaos. Nature 359, 826–829 (1992).

Santos, F. C., Rodrigues, J. F. & Pacheco, J. M. Graph topology plays a determinant role in the evolution of cooperation. Proceedings of Royal Society of London B: Biological Sciences 273, 51–55 (2006).

Hauert, C. & Doebeli, M. Spatial structure often inhibits the evolution of cooperation in the snowdrift game. Nature 428, 643–646 (2004).

Santos, F. C. & Pacheco, J. M. Scale-free networks provide a unifying framework for the emergence of cooperation. Physical Review Letters 95, 098104 (2005).

Assenza, S., Gómez-Gardeñes, J. & Latora, V. Enhancement of cooperation in highly clustered scale-free networks. Physics Review E 78, 017101 (2008).

Perc, M. Evolution of cooperation on scale-free networks subject to error and attack. New Journal of Physics 11, 033027 (2009).

Wang, Z., Szolnoki, A. & Perc, M. Evolution of public cooperation on interdependent networks: The impact of biased utility functions. Epl. 97, 48001 (2012).

Wang, Z., Wang, L., Szolnoki, A. & Perc, M. Evolutionary games on multilayer networks: a colloquium. The European Physical Journal B 88, 124–138 (2015).

Kenett, D. Y., Perc, M. & Boccaletti, S. Networks of networks – An introduction. Chaos, Solitons & Fractals 80, 1–6 (2015).

Wang, Z., Szolnoki, A. & Perc, M. Optimal interdependence between networks for the evolution of cooperation. Scientific Reports 3, 2470 (2013).

György, S. & Gábor, F. Evolutionary games on graphs. Physics Reports 446, 97–216 (2007).

Zimmermann, M. G., Eguíluz,V. M. & Miguel, M. S. Cooperation, adaptation and the emergence of leadership. Economics with heterogeneous interacting agents, Lecture Notes in Economics and Mathematical Systems (2001).

Szolnoki, A. & Perc, M. Resolving social dilemmas on evolving random networks. Epl 86, 30007 (2009).

Szolnoki, A. & Perc, M. Emergence of multilevel selection in the prisoner’s dilemma game on coevolving random networks. New Journal of Physics 11, 093033 (2009).

Szolnoki, A., Perc, M. & Danku, Z. Making new connections towards cooperation in the prisoner’s dilemma game. Europhysics Letters 21, 88–121 (2008).

Pacheco, J. M., Traulsen, A. & Nowak, M. A. Active linking in evolutionary games. Journal of Theoretical Biology 243, 437–443 (2006).

Pacheco, J. M., Traulsen, A. & Nowak, M. A. Coevolution of strategy and structure in complex networks with dynamical linking. Physical Review Letters 97, 258103 (2006).

Perc, M. & Szolnoki, A. Coevolutionary games–a mini review. Bio Systems 99, 109–125 (2010).

Gracialázaro, C. et al. Heterogeneous networks do not promote cooperation when humans play a Prisoner’s Dilemma. Proceedings of the National Academy of Sciences of the United States of America 109, 12922–12926 (2012).

Cimini, G. & Sanchez, A. How Evolutionary Dynamics Affects Network Reciprocity in Prisoner’s Dilemma. Journal of Artificial Societies and Social Simulation 18, 22–35 (2015).

Shivshankar, S. & Jamalipour, A. An Evolutionary Game Theory-Based Approach to Cooperation in VANETs Under Different Network Conditions. IEEE Transactions on Vehicular Technology 64, 2015–2022 (2015).

Zhou, M. & Liu, J. A two-phase multi-objective evolutionary algorithm for enhancing the robustness of scale-free networks against multiple malicious attacks. IEEE Trans. on Cybernetics 47, 539–552 (2017).

Chi, Y. & Liu, J. Learning of fuzzy cognitive maps with varying densities using a multi-objective evolutionary algorithm. IEEE Trans. on Fuzzy Systems 24, 71–81 (2016).

Zhong, W., Liu, J., Xue, M. & Jiao, L. A multi-agent genetic algorithm for global numerical optimization. IEEE Trans. on Systems, Man and Cybernetics, Part B 34, 1128–1141 (2004).

Liu, C., Liu, J. & Jiang, Z. A multiobjective evolutionary algorithm based on similarity for community detection from signed social networks. IEEE Trans. on Cybernetics 44, 2274–2287 (2014).

Zhou, M. & Liu, J. A memetic algorithm for enhancing the robustness of scale-free networks against malicious attacks. Physica A: Statistical Mechanics and its Applications 410, 131–143 (2014).

Yuan, Y., Xu, H., Wang, B., Zhang, B. & Yao, X. Balancing convergence and diversity in decomposition-based many-objective optimizers. IEEE Trans. on Evolutionary Computation 20, 180–198 (2016).

Qiu, X., Xu, J. X., Tan, K. C. & Abbass, H. A. Adaptive cross-generation differential evolution operators for multiobjective optimization. IEEE Trans. on Evolutionary Computation 20, 232–244 (2016).

He, J. & Lin, G. Average convergence rate of evolutionary algorithms. IEEE Trans. on Evolutionary Computation 20, 316–321 (2015).

Barabàsi, A. L. & Albert, R. Emergence of scaling in random networks. Science 286, 509–512 (1999).

Acknowledgements

This work is partially supported by the Outstanding Young Scholar Program of National Natural Science Foundation of China (NSFC) under Grant 61522311 and the Overseas, Hong Kong & Macao Scholars Collaborated Research Program of NSFC under Grant 61528205.

Author information

Authors and Affiliations

Contributions

Penghui Liu and Jing Liu devised the research project; Penghui Liu performed numerical simulations; Penghui Liu analyzed the results; Penghui Liu and Jing Liu wrote the paper.

Corresponding author

Ethics declarations

Competing Interests

The authors declare that they have no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liu, P., Liu, J. Multilevel Evolutionary Algorithm that Optimizes the Structure of Scale-Free Networks for the Promotion of Cooperation in the Prisoner’s Dilemma game. Sci Rep 7, 4320 (2017). https://doi.org/10.1038/s41598-017-04010-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-017-04010-2

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.