Abstract

Anticoagulants are potent therapeutics widely used in medical and surgical settings, and the amount spent on anticoagulation is rising. Although warfarin remains a widely prescribed oral anticoagulant, prescriptions of direct oral anticoagulants (DOACs) have increased rapidly. Heparin-based parenteral anticoagulants include both unfractionated and low molecular weight heparins (LMWHs). In clinical practice, anticoagulants are generally well tolerated, although interindividual variability in response is apparent. This variability in anticoagulant response can lead to serious incident thrombosis, haemorrhage and off-target adverse reactions such as heparin-induced thrombocytopaenia (HIT). This review seeks to highlight the genetic, environmental and clinical factors associated with variability in anticoagulant response, and review the current evidence base for tailoring the drug, dose, and/or monitoring decisions to identified patient subgroups to improve anticoagulant safety. Areas that would benefit from further research are also identified. Validated variants in VKORC1, CYP2C9 and CYP4F2 constitute biomarkers for differential warfarin response and genotype-informed warfarin dosing has been shown to reduce adverse clinical events. Polymorphisms in CES1 appear relevant to dabigatran exposure but the genetic studies focusing on clinical outcomes such as bleeding are sparse. The influence of body weight on LMWH response merits further attention, as does the relationship between anti-Xa levels and clinical outcomes. Ultimately, safe and effective anticoagulation requires both a deeper parsing of factors contributing to variable response, and further prospective studies to determine optimal therapeutic strategies in identified higher risk subgroups.

Similar content being viewed by others

Introduction

Anticoagulants are a widely prescribed class of drugs used in both medical and surgical settings. In 2015 alone, 14.6 million prescriptions were dispensed in the community in England, the associated cost was over £222 million which represented an increase of £84 million on the previous year [1, 2].

Oral anticoagulants include indirectly acting coumarin-derived oral anticoagulants, notably warfarin, and direct oral anticoagulants (DOACs)— dabigatran, apixaban, edoxaban and rivaroxaban. In the UK, ~1.25 million patients receive long term oral anticoagulation, and worldwide warfarin remains the most commonly prescribed oral anticoagulant [3]; however warfarin has now been superseded by apixaban as the most widely prescribed oral anticoagulant in primary care in England [4]. Parenteral anticoagulants include indirect acting unfractionated heparin (UFH), low molecular weight heparins (LMWHs), fondaparinux, and the less frequently prescribed direct acting drugs such as bivalirudin and argatroban.

Anticoagulants are primarily indicated in the prophylaxis and treatment of venous thromboembolism (VTE), the prophylaxis of systemic (arterial) thromboembolism in predisposing conditions including atrial fibrillation (AF), following a mechanical heart valve implantation, and in constitutive thrombophilic conditions such as antiphospholipid syndrome. Heuristically, parenteral anticoagulants are preferred for short term inpatient anticoagulation, whilst oral anticoagulants are indicated in longer term outpatient anticoagulation.

Clinically, it is evident that patients can respond differently to the same drug [5]. Precision medicine is a therapeutic paradigm that aspires to tailor drug and/or dose selection to specific patient subgroups to enhance drug efficacy and/or minimise harm. There are three main facets to the precise use of therapeutics. First, patients warranting drug intervention need to be identified. For symptomatic conditions patients usually self-present, but asymptomatic conditions require screening. Opportunistic screening for AF for example increases overall AF detection [6], and the use of novel technologies may further facilitate and improve (paroxysmal) AF screening [7]. Second, optimisation of physician conformity to existing therapeutic guidelines is required. For example, ~50% of patients with AF do not receive anticoagulation and of these patients, 25% have no documented reason, suggesting notable anticoagulant underuse [8]. Further initiatives to understand the barriers limiting guideline adherence are necessary.

Third, optimisation of drug benefit-risk profiles is required through a deeper understanding of the factors shaping interindividual drug response variability. The human body consists of a complex network of interactions within and between hierarchically organised different biological levels (e.g. genomic, epigenomic, transcriptomic, proteomic, metabolomic, cellular, tissue, organ, and organ system levels), and this dynamic network is shaped by myriad genetic and environment influences. Health, disease and drug response all represent emergent non-intuitive properties arising from this complex network system, and so vary between patients [9]. It is hoped that systematic pan-omics and environmental factor interrogation will identify new drug response biomarkers, and their incorporation into algorithms and multiscale models will parse interindividual drug response variability sufficiently for clinical utility (Fig. 1).

Interindividual variability in response to a given drug results in efficacy, non-efficacy and adverse drug reactions. The primary sources for this variation are external environmental influences and an individual’s genome and heritable epigenetic traits. The human body consists of a complex dynamic network of interactions within and between its hierarchically organised discrete biological levels (genomic, epigenomic, transcriptomic, proteomic, metabolomic, cellular, tissue, organ, and organ system levels). Consequently, the myriad environmental and heritable traits feed into and shape the human dynamic network; these processes lead to the emergence of non-intuitive system properties including health and disease status and individual drug response. To adequately parse interindividual drug response variability, systematic pan-omics investigations and environmental factor mapping are needed to identify new response biomarkers. Subsequent mechanistic studies will further pathophysiological understanding. Furthermore, it is envisaged that integration of identified factors into algorithms such as polygenic risk scores help define sufficiently distinct patient subgroups, with adequate predictive capacity, which would benefit from distinct treatment strategies. These strategies will include both altered dose and/or drug recommendations with existing therapeutics, and the potential development of novel subgroup-specific therapeutics.

The clotting system balances the opposing needs of free blood flow for tissue viability, with rapid haemostasis following external injury; these competing requirements position the clotting system atop a physiological ‘tightrope’. It is thus highly pertinent to understand interindividual anticoagulant response variability because anticoagulants target and perturb this finely balanced clotting system (Fig. 2) and so their therapeutic window is appreciably narrower than for several other routinely prescribed medications (e.g. lipid lowering therapies, proton pump inhibitors). Individual patient under- and over-anticoagulation risks thrombotic and haemorrhagic complications, respectively, and notably warfarin is third on the list of drugs/drug groups resulting in adverse drug reactions (ADRs) associated with hospitalisation [10]. Furthermore, anticoagulants can also provoke unpredictable ADRs, such as heparin-induced thrombocytopaenia (HIT).

Vitamin K antagonists (VKAs), notably warfarin, inhibit hepatic vitamin K 2,3 epoxide reductase complex 1 (VKORC1) within the vitamin K cycle. This inhibition decreases active reduced vitamin K, which restricts the post-translational gamma-carboxylation activity of vitamin K-dependent γ-glutamyl carboxylase required by clotting factors II, VII, IX and X to become fully functional. Apixaban, edoxaban and rivaroxaban are direct factor Xa inhibitors, whilst dabigatran inhibits factor IIa (thrombin). A unique pentasaccharide sequence within unfractionated heparin, low molecular weight heparins (LMWHs) and fondaparinux enables high-affinity binding to antithrombin (AT). Heparin chains containing this pentasaccharide sequence and at least 18 saccharide units in length are needed to inactivate factor IIa because bridging to form the ternary heparin/AT/thrombin complex is necessary. Shorter chains containing the pentasaccharide chain can still inactivate Xa and so LMWHs have reduced anti-IIa compared to anti-Xa activity; fondaparinux inhibits Xa but not IIa. Bivalirudin and argatroban are two less frequently used parenterally administered anticoagulants; both are direct thrombin inhibitors. TF tissue factor. Drugs within ovals are orally administered; drugs within rectangles are parenterally administered.

Therefore, improving the detection of patients that require anticoagulation, increasing observance to existing clinical guidelines, and deepening our understanding of the interindividual variability observed in response to a given anticoagulant are all essential to the long-term realisation of precision anticoagulation. The aims of this review are to discuss the factors associated with interindividual variability in response to warfarin, DOACs, UFH and LMWHs as summarised in Table 1, to consider the current challenges and opportunities for advancing precision anticoagulation, and to highlight areas of unmet research need. Figure 3 highlights the main pharmacogenomic variants associated with differential response to anticoagulants.

ABCB1 ATP-binding cassette sub-family B member 1, CES1 carboxylesterase 1, CYP2C9 cytochrome P450 2C9, CYP2C18 cytochrome P450 2C18, FCGR2A immunoglobulin G (IgG) receptor IIa gene, FPGS folylpolyglutamate synthase, HLA-DRA human leucocyte antigen class II, DR alpha, INR international normalised ratio, PTPRJ protein tyrosine phosphatase receptor type J (CD148), TDAG8 T-cell death-associated gene 8, VKORC1 vitamin K 2,3 epoxide reductase complex 1, WSD warfarin stable dose.

Oral anticoagulants

Warfarin

The coumarin-derived racemic mixture, warfarin, at peak usage was estimated to be taken by at least 1% of the whole UK population, and by 8% of those aged over 80 years [11]. However, warfarin usage has decreased in many European countries and in the US, with a concomitant increase in the use of DOACs. Nevertheless, warfarin is still widely used especially in some of the lower-middle income countries, where affordability is a major issue.

Warfarin inhibits hepatic vitamin K 2,3 epoxide reductase complex 1 (VKORC1). VKORC1 is the rate-limiting enzyme in the warfarin sensitive vitamin K-dependent gamma carboxylation system, and inhibition of VKORC1 reduces the production of functional clotting factors II, VII, IX and X, proteins C, S and Z, and leads to anticoagulation (Fig. 2). Warfarin has a narrow therapeutic window and large inter-individual variability with up to 20-fold difference in stable dose requirements between individuals. Therefore, warfarin treatment is closely monitored via the international normalised ratio (INR); for most indications, the recommended therapeutic INR range is 2.0–3.0. An overview of warfarin pharmacokinetics is provided in Table 2.

Patients with AF on warfarin are unsettlingly outside the therapeutic INR range 30–50% of the time [12, 13]. Importantly, bleeding is the most common warfarin ADR occurring in up to 41% of treated patients, with major bleeding frequencies as high as 10–16% [14, 15]. The risk of adverse events is highest during the initial dose-titration period within the first few weeks to months of warfarin therapy, and so strategies to individualise the initial warfarin doses have been sought.

Clinical and environmental factors affecting warfarin response

Numerous clinical and environmental factors influence warfarin dose requirements and response, including age, ethnicity, weight, height, medications, diet, illness, smoking and crucially adherence.

Increasing patient age has consistently been associated with higher warfarin sensitivity, which may be caused by the significant negative correlation between age and warfarin clearance, and by the fall in total hepatic VKORC1content due to age-related decreases in hepatic mass requirements [16].

Concomitant medications can affect warfarin pharmacokinetics by reducing its intestinal absorption, altering its clearance, or by competing for protein binding. Drugs can also influence the pharmacodynamics of warfarin by mechanisms such as inhibition of the synthesis of vitamin K-dependent coagulation factors or increasing the clearance of these factors. A list of major medications that interact with warfarin has been reviewed [17]. Importantly, patients on amiodarone require 20–30% lower doses of warfarin for stable anticoagulation [18].

Dietary factors can affect warfarin dose requirements, such as alcohol consumption or vitamin K intake. Alcohol may perturb warfarin metabolism and high dietary intake of vitamin K (found in green vegetables) may conceivably offset warfarin activity. However, there is conflicting evidence on the association between warfarin maintenance doses and vitamin K intake [19, 20].

Several illnesses such as liver disease, malnutrition, decompensated heart failure, hypermetabolic states (e.g. febrile illnesses, hyperthyroidism) are recognised to affect warfarin dose requirements [18, 21].

Cigarette smoking can induce CYP1A2 activity, the major enzyme responsible for R-warfarin metabolism. With increased smoking, R-warfarin metabolism increases, increasing dose requirements. Therefore, a change in smoking habit may affect warfarin coagulation response and consequently patients should be carefully monitored and warfarin doses reduced accordingly following cessation [22].

Genetic factors affecting warfarin dose requirements

CYP2C9

CYP2C9 metabolises the S-warfarin enantiomer, which is 3-5x more potent than R-warfarin. Over 30 CYP2C9 variants are recognised, although CYP2C9*2, *3, *5, *6, *8 and *11 represent the main CYP2C9 non-synonymous reduction-of-function (ROF) single nucleotide polymorphisms (SNPs). These SNPs all attenuate S-warfarin metabolism, although CYP2C9*6 is an exonic single nucleotide deletion, which shifts the reading frame and leads to complete loss of function [23]. CYP2C9*2 and *3 are the most common Caucasian variants with minor allele frequencies of 0.13 and 0.07, respectively. In Asian populations, CYP2C9*2 is very rare and CYP2C9*3 has a low frequency (~0.04); in African populations CYP2C9*2 and *3 are both rare or absent. CYP2C9*2 and *3 reduce S-warfarin metabolism by ~30–40% and ~80–90% respectively [24], and are associated with both decreased WSD requirements [25] and an increased risk of bleeding [26, 27]. The largest bleeding risk is apparent in patients homozygous for CYP2C9*3, with a hazard ratio for bleeding relative to CYP2C9*1/*1 patients of 4.87 (95% confidence interval 1.38, 17.14) [26]. Using multiple linear regression models, several observational studies have shown that CYP2C9 polymorphisms account for ~10–15% of the variance in warfarin maintenance dosage [16, 28,29,30,31].

The variants, CYP2C9*5, *6, *8 and *11, are present mainly in African populations. With the exception of CYP2C9*6 for which there is presently insufficient evidence, CYP2C9*5, *8 and *11 are all associated with reduced warfarin dose requirements [32, 33]. Interestingly, a genome-wide association study (GWAS) identified an intergenic SNP, rs12777823, located near the 5’ end of CYP2C18 within the CYP2C gene cluster, that was associated with lower warfarin dose requirements in African-American patients [34]. Incorporation of rs12777823 improved the proportion of warfarin dose variability in these patients by an absolute of 5% [34].

VKORC1

The common VKORC1 SNP, rs9923231(c.-1639G>A), has consistently been associated with reduced warfarin dose requirements [34,35,36]. -1639A perturbs a transcription factor binding site in the VKORC1 promoter region and reduces gene expression [37]. In African-American, Asian and Caucasian populations, the allele frequency of -1639A is ~0.13, ~0.92 and ~0.40 respectively, indicating reversal of the minor allele within Asian populations. rs9923231 accounts for 20–25% of WSD variation in Asian and Caucasian populations, but only ~6% in African-Americans [38]. This is potentially attributable to both its lower frequency and/or the influence of additional factors in Africa-American patients. rs9923231 has been associated with an increased risk of bleeding in some studies [31, 39, 40], but not others [41, 42]. Interestingly, several rare VKORC1 mutations (e.g. rs61742245, D36Y) have been identified in patients resistant to warfarin that require high warfarin doses to achieve therapeutic anticoagulation [43].

CYP4F2

A non-synonymous variant (rs2108622) in the vitamin K oxidase gene, CYP4F2, associated with increased warfarin dose requirements has been confirmed in genome-wide studies [35, 36]. CYP4F2 metabolises reduced (active) vitamin K, removing it from the vitamin K cycle. rs2108622 accounts for 1–7% of dose variance [35, 44].

Other genetic factors

Interestingly, a population-specific regulatory variant (rs7856096) located in the folate homoeostasis gene folylpolyglutamate synthase (FPGS) was identified through exome-sequencing of African-American patients with extreme warfarin dose requirements, and was associated with lower warfarin dose requirements [45].

There are other genes that might potentially influence warfarin response but these have not been consistently identified in different studies, and have not been identified in genome-wide association studies. That does not mean that they are not important, but it is possible that their effect size is much lower than the 3 main genes so far identified to affect warfarin response. Much larger studies would be needed to consistently detect their effect.

Warfarin genetic testing

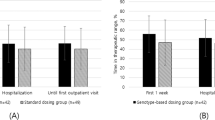

Together, CYP2C9 and VKORC1 SNPs and clinical variables account for nearly 60% of warfarin dose variance [31, 46]. Despite results from many multiple regression analyses demonstrating that genetic information from CYP2C9 and VKORC1 provides good predictive power with regards to warfarin dosage, there is currently no recommendation for genetic screening of patients starting warfarin therapy in guidelines from the cardiology and thoracic societies, although CPIC has provided detailed advice on dose changes in people with different CYP2C9 and VKORC1 variants [47]. A handful of randomised controlled trials have attempted to evaluate whether applying pharmacogenomic dosing algorithms to clinical practice translates into better clinical outcomes, such as more rapid attainment of therapeutic INR or a reduction in percentage of out-of-range INR. ENGAGE-AF-TIMI 48 [48] trial demonstrated patients with AF receiving clinical based warfarin dosing who were deemed sensitive and highly sensitive responders to warfarin on genetic testing (incorporating CYP2C9 (*2 and *3 alleles; rs1799853 and rs1057910) and VKORC1 (-1639G->A; rs9923231)) were more likely to bleed and have raised INRs. This result was consistent even after adjustment for clinical co-variates [49]. Sub analysis of Hokusai VTE trial echoed these findings with pooled sensitive responders spending more time with higher INRs and increased bleeding events [50]. The EU-PACT [51] trial showed that pharmacogenomic-guided dosing was superior to fixed dosing regimen but the COAG [52] trial did not. Reasons for this divergence in outcome were largely due to ethnicity of patients (27% African-American in COAG versus almost 100% Caucasians in EU-PACT), and the availability of genotype data prior to warfarin initiation. The results of the EU-PACT trial were confirmed by an implementation study which also utilised point of care warfarin genetic testing and showed an improvement in the time in therapeutic range compared to standard of care [53]. Furthermore, the GIFT [54] trial supported the findings of EU-PACT again in a predominantly Caucasian based more elderly cohort demonstrating reduction in bleeding endpoints, less time with INR > 4 and non-inferior protection against VTE.

The potential utility of warfarin genotype-guided dosing has also been shown in two real world evaluations. In Finland, an evaluation of warfarin treated patients from a biobank demonstrated that sensitive and highly sensitive responders spent a longer time with supratherapeutic INRs but there was no significant increase in bleeding risk, although there were few bleeding events in the study [55]. A retrospective cohort study in the US showed that pharmacist-guided warfarin service which utilised pharmacogenetic-guided dosing was able to reduce warfarin-related hospitalisations [56].

Most of the studies on warfarin pharmacogenetics have been conducted in European ancestry patients. However, our systematic review showed that there has been significant activity in developing dosing algorithms for individuals of Asian ancestry, in addition to European ancestry patients [57]. Indeed, up till May 2020, 433 dosing algorithms have been described in the literature, but the majority have not been evaluated for clinical utility. The covariates included in these algorithms have been age (included in 401 algorithms), concomitant medications (270 algorithms), weight (229 algorithms), CYP2C9 variants (329 algorithms), VKORC1 variants (319 algorithms) and CYP4F2 variants (92 algorithms).

There has been much less work on developing algorithms in individuals of African ancestry than in other populations [57]. A systematic review has shown that variants which are more prevalent in Black Africans have functional effects which are equivalent to those seen in White individuals [58], yet these have not been routinely utilised in dosing algorithms, nor tested prospectively in randomised trials. In the COAG trial [52], Black patients were shown to have worse anticoagulation control when randomised to the genotyping arm compared to the use of the clinical algorithm—this is likely to have been due to the lack of African-specific variants in the dosing algorithm. Indeed a recent study has shown that pharmacogenetic dosing algorithms that did not incorporate CYP2C9*5 overestimated the warfarin dose by 30% [59]. Given the widespread usage of warfarin in Black patients, particularly in Sub-Saharan Africa where DOACs are still unaffordable, it is important further studies are undertaken to improve the quality of anticoagulation with warfarin in this population.

Direct oral anticoagulants

Over the past decade, DOACs have emerged as oral anticoagulant alternatives to warfarin. DOACs reversibly target the active sites of circulating and clot-bound thrombin (dabigatran) or clotting factor Xa (rivaroxaban, apixaban and edoxaban) (Fig. 2). Compared to warfarin, DOACs have a rapid onset of action, a wider therapeutic window, and fewer food and drug interactions. Currently, DOACs are prescribed at fixed doses without laboratory monitoring. However, clinical and genetic factors have been shown to affect DOAC efficacy and safety and dose adjustments may be required in high-risk patients. An overview of DOAC pharmacokinetics is provided in Table 2.

Efficacy and safety of DOACs compared with standard treatment

In non-valvular AF, a meta-analysis of the four main trials investigating dabigatran, rivaroxaban, apixaban, and edoxaban revealed that the rate of stroke and systemic embolism, all-cause mortality and intracranial haemorrhage were all significantly reduced by 19%, 10%, and 52%, respectively, compared to patients on warfarin [60]. However, with the exception of apixaban; rivaroxaban, higher doses of dabigatran and edoxaban were associated with 25% increased risk of gastrointestinal bleeding [60, 61].

Meta-analysis of trials that investigated the efficacy and safety of dabigatran, rivaroxaban, apixaban, and edoxaban in patients with acute VTE, demonstrated DOACs were non-inferior to conventional therapy and associated with a reduced risk of bleeding [62]. Pooled analysis of trials conducted with dabigatran, rivaroxaban, apixaban and edoxaban revealed that DOACs are effective for post-operative thromboprophylaxis in patients after a total hip or knee replacement, but their clinical benefits over LMWHs are marginal and DOACs are generally associated with higher bleeding tendency [63, 64].

Finally, in a phase II dose validation study (RE-ALIGN), the efficacy and safety of dabigatran was compared to warfarin in patients with mechanical heart valves [65]. The study was however terminated prematurely due to increased incidence of thromboembolic and bleeding events in the dabigatran-treated patients and the thromboembolic effects were seen in patients with both high and low trough levels [66]. Therefore, whilst DOACs are indicated in the management of non-valvular AF and VTE, warfarin currently remains the drug of choice for patients with mechanical heart valves. Three small scale proof of concept trials with rivaroxaban in patients with mechanical heart valves demonstrate future investigation of DOACs in mechanical heart valves may warrant investigation [67,68,69].

Factors affecting efficacy and safety of DOACs

Food and drug interactions

Unlike warfarin, DOACs are not known to be affected by food and have fewer drug-drug interactions. A comprehensive list of drug interactions with DOACs has been reviewed by Heidbuchel et al. [70].

P-gp inhibitors, inducers and substrates

Net absorption of DOACs is dependent on the intestinal permeability glycoprotein (P-gp) efflux transporter. Strong P-gp inducers, such as rifampin, older antiepileptics (carbamazepine, phenytoin, and phenobarbital), and St John’s wort, decrease exposure to DOACs and concurrent use should be avoided due to increased risk of thrombosis. Strong P-gp inhibitors such as amiodarone, verapamil, clarithromycin, dronedarone and antifungals (e.g. itraconazole, ketoconazole) increase the absorption, exposure and bioavailability of DOACs, potentially leading to increased bleeding complications. P-gp inhibitors increase dabigatran bioavailability by ~10% to 20% [71]. There are also case reports of major bleeding in elderly patients taking concomitant dabigatran with P-gp inhibitors that might have been due in part to the inhibition of P-gp, in addition to other factors such as age and decreased renal function [72, 73] (Table 1). More recently, in a retrospective cohort study of AF patients on dabigatran, concomitant use of digoxin, which is a substrate of P-gp, was associated with 33% increased risk of gastrointestinal bleeding (Table 1) [74].

CYP450 inducers and inhibitors

Dabigatran is not a substrate, inhibitor, or inducer of hepatic CYPs [75]. As less than 4% of the active metabolite of edoxaban is metabolised by CYP3A4 [76], drug interactions with CYP inducers or inhibitors are not expected. However, rivaroxaban and apixaban are CYP3A4 substrates; co-administration with drugs that inhibit or induce this metabolic enzyme as well as P-gp (e.g. ketoconazole or rifampicin) could significantly affect drug response, leading to increased risk of bleeding or reduced efficacy, respectively [77, 78].

Anticoagulants, antiplatelet agents and nonsteroidal anti-inflammatory agents

The DOAC product monographs contraindicate the concomitant use of other anticoagulants due to an increased bleeding risk [79,80,81,82]. Caution is also warranted if co-prescribing DOACs and other drugs that elevate bleeding risk: these include antiplatelets, nonsteroidal anti-inflammatory drugs (NSAIDs), cyclooxygenase-2 inhibitors, and systemic corticosteroids (Table 1). A post hoc analysis of the RE-LY trial showed that the rate of major bleeding was higher in patients on concomitant antiplatelet drugs compared to those not on antiplatelet therapy [83] (Table 1). Furthermore, in patients on a DOAC and antiplatelet therapy, dual antiplatelet treatment was associated with a higher risk of major bleeding than single antiplatelet therapy [83] (Table 1). Subgroup analysis of the EINSTEIN-DVT and EINSTEIN-PE trials demonstrated that rivaroxaban-treated patients co-administered a NSAID had a 2.4-fold higher risk of a major bleed and those who concomitantly took aspirin had a 1.5-fold higher risk [84] (Table 1). Aspirin and NSAID use increased the risk of major bleeding in apixaban-treated patients by approximately 30% [85]. Patients with AF receiving antiplatelet therapy in addition to edoxaban had higher rates of bleeding and cardiovascular death than those not on antiplatelet therapy [86]. It is therefore important to consider the patient–specific risk-benefit profile when DOACs are prescribed with permissible agents that may increase bleeding risk, and concurrent therapy should be administered for the shortest appropriate duration.

Weight

Although population pharmacokinetic-pharmacodynamic studies have shown that extremes of bodyweight (<50 or >110 kg) do not significantly impact dabigatran pharmacology [75], a post hoc analysis of the RE-LY trial [87] showed a 20% decrease in dabigatran trough levels in patients >100 kg compared to patients 50–100 kg; no drug label dose adjustments have been recommended though [88]. Increased BMI is strongly associated with increased glomerular filtration rates (GFRs) [89] and increased drug clearance [90]. Hence the inverse correlation between weight and dabigatran levels could impact efficacy in very obese patients [91]. Two case studies of incident ischaemic stroke [92, 93] have been reported in obese patients (BMI > 39 kg/m2) on long-term anticoagulation with dabigatran for AF. The two patients had sub-therapeutic trough dabigatran levels and supra-physiologic creatinine clearance [92, 93], suggesting fixed-dose dabigatran may be insufficiently effective in severe obesity.

A pharmacokinetic and pharmacodynamic assessment indicated that extremes of bodyweight have limited effect on the pharmacokinetic profile of rivaroxaban. This is most likely due to rivaroxaban’s low volume of distribution [94] (Table 2). Pre-specified subgroup analyses stratified by weight and/or BMI within large phase III rivaroxaban trials have shown that efficacy and safety outcomes are consistent between different-weighted rivaroxaban users [95,96,97,98,99,100,101,102], suggesting that fixed-dose rivaroxaban regimens can be used safely in patients of all weight ranges [103]. Case studies suggest that the bioavailability of rivaroxaban is not affected in patients who are obese or morbidly obese [93, 104] and dose adjustments seem unnecessary. Interestingly, in a clinical case report of an obese patient who presented with an ischaemic stroke whilst on dabigatran, substitution to rivaroxaban lead to peak and trough rivaroxaban levels consistent with effective anticoagulation [93], suggesting that rivaroxaban is more efficacious than dabigatran in obese patients with AF.

Extremes of body weight lead to modest changes in apixaban exposure [105], and so weight ≤60 kg is recommended as one of the two criteria for reduced apixaban dosing in the current apixaban label [80, 106]. Similarly, in phase II edoxaban studies of patients with AF, weight ≤60 kg was associated with increased edoxaban exposure [107] and possible increased bleeding incidence [108], leading to a dose reduction recommendation (30 mg once daily if ≤60 kg) [81, 109].

Hepatic impairment

Patients can be classified into three distinct groups of liver diseases: Child-Pugh A (mild), B (moderate) and C (severe) based on the presence of encephalopathy or ascites, along with the levels of serum albumin, serum bilirubin, and prothrombin time. Patients with severe liver disease were excluded from the DOAC clinical trials as hepatic impairment is often associated with intrinsic coagulation abnormalities, leading to an increased bleeding risk.

Given that rivaroxaban, apixaban and edoxaban are metabolised by liver enzymes, hepatic impairment can considerably affect the disposition of these anticoagulants [110]. Moderately impaired liver function is associated with 2.27-fold increase in rivaroxaban exposure, which is paralleled by an increase in factor Xa inhibition [111]. Conversely, apixaban pharmacokinetics are not significantly altered in patients with mild to moderate hepatic impairment or in patients with alanine aminotransferase and aspartate aminotransferase levels >2× upper limit of normal (ULN) [112]. Peak serum edoxaban concentrations decreased by 10% and 32% in patients with mild and moderate hepatic impairment, respectively [113]. Product labelling for the three factor Xa inhibitors does not recommend their use in patients with moderate or severe hepatic impairment.

The pharmacokinetic profile of dabigatran is not affected in individuals with moderate hepatic impairment [114] but as subjects with severe liver disease were excluded from clinical trials of dabigatran, dabigatran is not recommended in patients with elevated liver enzymes (>2× ULN).

Renal impairment

Approximately 77% of dabigatran, 36% of rivaroxaban, 27% of apixaban, and 50% of edoxaban are excreted by the kidneys as active drug [76, 115,116,117]. Expectedly, DOAC pharmacokinetic studies have demonstrated that renal impairment is associated with elevated systemic exposure. In patients with severe renal impairment, as defined by creatinine clearance (CrCl) < 30 mL/min, the plasma concentration area under the curve (AUC) of dabigatran, rivaroxaban, apixaban, and edoxaban were increased by 6-fold, 65%, 44% and 72%, respectively [118,119,120,121]. Product labelling of DOACs recommends dose reduction for patients with CrCl 15–50 mL/min and avoidance of use in patients with advanced renal dysfunction (CrCl <15 mL/min) and in those on haemodialysis [79,80,81,82]. A sub-group analysis of the ENGAGE AF-TIMI 48 clinical study suggested that edoxaban-treated patients with CrCL >95 mL/min are potentially at a slightly higher risk of stroke/systemic embolism compared to those treated with warfarin, but with lower bleeding risk [122]. As such, the edoxaban drug label recommends edoxaban is used only after a careful individualised assessment of thromboembolic and bleeding risks in patients with high CrCl.

Elderly

Age and renal function are intricately related. GFR gradually declines with ageing at a rate of ~1 ml/min/year after the age of 30 [123], with an accelerated decrease in GFR after 65 years of age [124]. To ensure the efficacy and safety of DOACs in elderly patients, renal function should be monitored annually in those with CrCl >50 mL/min and 2 to 3 times per year in those with CrCl 30–49 mL/min [125].

Non-adherence is also of concern as DOACs have short half-lives and missed doses could decrease efficacy, increasing the risk of thromboembolic events. Other factors such as poly-pharmacy, cognitive impairment, hospitalisation, history of bleeding and/or falls are common in the elderly, which could lead to over- or under-dosing of DOACs.

Genetic factors

Given that there are strong genetic effects associated with warfarin dosing requirements, there has also been interest in whether genetic factors may determine outcomes with the DOACs, A GWAS conducted in a subset of patients from the RE-LY trial reported genome-wide SNP associations for both peak and trough dabigatran concentrations [126]. The minor allele of rs8192935, an intronic SNP located in the carboxylesterase 1 gene (CES1), was associated with a 12% reduction in peak dabigatran concentrations. By contrast, the minor allele of rs4148738, an intronic SNP located in ABCB1, which encodes P-gp, was associated with a 12% increase in peak dabigatran concentrations (Table 1). However, neither rs8192935 nor rs4148738 were associated with clinical outcomes. Importantly, CES1 rs2244613 was associated with both decreased dabigatran trough levels and a 33% lower risk of bleeding events per minor allele [126] (Table 1). These findings need replication but at present do not seem to of clinical value.

Genetic studies focusing on clinical outcomes with dabigatran and the anti-Xa inhibitors have usually been small scale with inconsistent findings [127, 128]. More recently, a larger study of 2364 patients treated with either apixaban and/or rivaroxaban, of whom 412 had clinically relevant non-major bleeding or major bleeding, evaluated eight functional variants in five genes (ABCB1, ABCG2, CYP2J2, CYP3A4, CYP3A5), and found that none of the genetic variants were associated with bleeding [129]. Older patients, those who switched from one DOAC to another, and those on P450 or Pgp inhibitors were at increased risk of bleeding.

From the limited number of studies conducted on genetic factors associated with DOAC-related clinical end-points, it can be concluded that no common variants with a large effect size have been identified. This contrasts with the findings with warfarin. It is possible that rare variants may be important and/or multiple common variants with a small effect size may determine outcomes, but these hypotheses will need to be tested in large well designed studies which focus on patients with major bleeding episodes, who will need to be sequenced (for rare variants) and assessed for polygenic scores.

Unpredictable, or type B, ADRs have been reported with DOACs, but these have been sporadic and no genetic studies have been undertaken. An older direct thrombin inhibitor ximelagatran was withdrawn because of liver injury. A genetic study involving 74 cases and 130 treated controls showed a strong association with the HLA alleles DRB1*07:01 and DQA1*02, suggesting an immune pathogenesis [130]. Liver injury was not found to be associated with the newer DOACs in a systematic review of 29 randomised trials evaluating over 150,000 patients [131]. However, a systematic review of 15 studies of patients who developed liver injury while taking DOACs suggested that hepatotoxicity can occur rarely, but the outcome is usually favourable [132].

Other factors

Little is known about the risk factors associated with the DOAC efficacy and safety in real-world practice. Other than co-medications, genetics, weight, age, renal and hepatic function, factors such as gender, concomitant diseases, infections, and lifestyle variables (e.g. smoking, alcohol intake) may also play a role in the efficacy and safety of DOACs. Recent real-world data from a retrospective study investigating AF patients initiated on dabigatran found an increased risk of gastrointestinal bleeding in patients who were female, had congestive heart failure, had previous H. pylori infection, and were diagnosed with alcohol abuse [74]. In addition, a 67% increased risk of gastrointestinal bleeding was observed among patients with chronic kidney disease [74]

To date, the effect of ethnicity on the efficacy and safety of DOACs remains uncertain due to poor enrolment of black and Hispanic patients and inconsistent race/ethnicity reports in major DOACs clinical trials [133].

Monitoring and antidotes

Although anticoagulation monitoring for DOACs is not mandated, assessment of drug exposure and anticoagulant effect may be beneficial in specific clinical situations such as those with renal or hepatic insufficiency, identifying potential drug-drug interactions, in cases of suspected overdosing, and in the presence of serious bleeding or thrombotic events. Given the unique mechanisms of action of DOACs, routine INR testing is unsuitable [134,135,136,137]. Suitable dose monitoring tests have been outlined in Table 2. Briefly, the diluted thrombin time (dTT) and ecarin clotting time (ECT) assays are sensitive to the magnitude of dabigatran’s anticoagulant effect and have a linear response to plasma dabigatran within its therapeutic range [138]. The activated partial thromboplastin time (aPTT) only gives an approximate assessment of dabigatran’s effect on coagulation [134,135,136, 139]. The chromogenic anti-Factor-Xa assay, calibrated to rivaroxaban, apixaban or edoxaban may be used to quantitatively assess for clinically relevant drug levels, but these assays are not as yet available worldwide [140,141,142].

Antidotes are now available for the reversal of DOACs in case of emergencies (Table 2). However, challenges in their usage in clinical practice are anticipated. Clear guidelines on timing of usage, indications, and bleeding types will be required for practitioners [143].

Parenteral anticoagulants

Unfractionated heparin

Heparin is an endogenously produced highly sulphated linear glycosaminoglycan (mucopolysaccharide). Clinically used UFH is derived from porcine or bovine mucosa, and is heterogeneous with respect to molecular size, pharmacokinetics and anticoagulant activity [144]. The mean molecular weight of UFH molecules is 15,000 Da (Table 3), corresponding to approximately 45 saccharide units, although UFH molecules range from 3000 to 30,000 Da [144]. A unique pentasaccharide sequence enables high-affinity binding of antithrombin (AT) to heparin molecules, converting AT from a slow into a rapid serine protease inhibitor [145]. However, this pentasaccharide sequence is only present in a third of UFH molecules, and heparin molecules lacking this sequence have reduced anticoagulant activity at therapeutic levels [144, 146]. The heparin/AT complex inactivates the main recognised physiological targets of AT: thrombin (factor IIa) and factor Xa [147] (Fig. 2). Inhibition of thrombin requires formation of a ternary heparin/AT/thrombin complex; heparin chains less than approximately 18 saccharide units are not long enough to bridge AT to thrombin, and so have little anti-IIa activity [148]. However, anti-Xa activity only requires heparin to bind to AT, and so shorter heparin molecules that contain the pentasaccharide sequence still catalyse factor Xa inhibition [144]. UFH also has additional mechanisms of action, detected in in vitro studies, including AT-dependent inhibition of factors IXa, XIa and XIIa, and at high concentrations pentasaccharide sequence-independent heparin cofactor II (HCII)-dependent inhibition of factor IIa [144].

At therapeutic doses, UFH clearance is nonlinear with dose-dependent pharmacokinetics involving both saturable and non-saturable elimination mechanisms [149]. The rapid saturable component is the main clearance route of UFH and involves cellular uptake and metabolism by liver sinusoidal endothelial cells, which constitute part of the hepatic reticuloendothelial system (RES), and/or by vascular endothelial cells; the slower non-saturable component is largely renal [149]. UFH also binds to endothelial cells, macrophages, platelets and multiple plasma proteins besides AT including von Willebrand factor, lipoproteins and fibrinogen, which limits the anticoagulant potency of UFH and increases the variability in response to UFH [144, 150]. Although use of UFH has declined, it is still used in patients with acute coronary syndrome undergoing percutaneous coronary intervention (PCI) [151], and in clinical settings where anticoagulation fine tuning is sought (e.g. in perioperative anticoagulant bridging or in patients at high bleeding risk) because of its rapid onset of action and clearance, and the availability of protamine sulphate for rapid UFH inactivation [152].

Factors affecting heparin efficacy

As anticoagulant response to UFH is unpredictable, UFH therapy is monitored mainly using the aPTT, although the activated clotting time (ACT) is used to monitor the higher UFH doses administered in PCI and cardiopulmonary bypass surgery [144]. The evidence behind the aPTT therapeutic range used is relatively weak (1.5–2.5× the control level or upper limit of normal [32–39 s] [152]), as it has not been verified in randomised trials [144], and patients with a prolonged baseline aPTT cannot have UFH therapy reliably monitored using aPTT [152]. Clinically, failure for rapid attainment of a therapeutic aPTT after starting UFH has been associated with VTE recurrence in some [153], but not all studies [154]. Interestingly, the risk of 180-day VTE recurrence following an incident VTE was reduced in patients who rapidly attained an aPTT ≥ 58 s on UFH, but not in patients with rapid attainment of aPTT ≥40 s [155]. Conversely, during the median six day duration of UFH therapy, the proportion of time with an aPTT ≥40 s, but not ≥58 s, was associated with a reduced hazard of VTE recurrence [155]. Markedly low aPTTs (<1.25x control) taken 4–6 h after starting UFH therapy have also been associated with recurrent myocardial infarction [156].

A barrier to the rapid attainment of a therapeutic aPTT is under-dosing of both UFH loading and infusion maintenance doses [157]. Thus UFH nomograms have been developed, which significantly increase the proportion of patients reaching a therapeutic aPTT within 24 h compared to clinical judgement [158]. UFH nomograms standardise the loading and initial heparin infusion rate and provide an algorithm for rate adjustments based on aPTT measurements; both weight and non-weight based nomograms are available [152]. Nevertheless, in a RCT sub-analysis including 6,055 patients with a ST-elevation myocardial infarction who received UFH according to a weight-based nomogram, only 33.8% of initial aPTTs fell within the therapeutic range; 13.2% and 16.3% were markedly low and high, respectively [156]. Factors associated with markedly low initial aPTT values on UFH included increased weight and younger age [156]. Even when the initial aPTT on UFH using a nomogram is within the therapeutic range, it is maintained over the next two measurements in only 29% of patients [159].

Heparin resistance

Heparin resistance refers to the requirement for unusually high heparin doses to achieve a therapeutic aPTT, and studies have suggested that it occurs in 21–26% of patients [160, 161]. Several factors have been associated with heparin resistance including nonspecific binding secondary to strong negative charge, AT deficiency, platelet count >300,000/microL, recent heparin therapy, increased levels of heparin-binding proteins, increased heparin clearance, high levels of factor VIII and fibrinogen and concomitant use of the serine protease inhibitor, aprotinin [144, 160, 162,163,164]. Given the importance of ascertaining an early therapeutic aPTT, further research is required to incorporate factors associated with a decreased response into UFH nomograms.

Factors associated with heparin safety

Bleeding

Major bleeding occurs in up to 7% of patients exposed to therapeutic UFH [165]. Risk factors for heparin-associated bleeding include older age, female gender, recent surgery or trauma, hepatic dysfunction, haemostatic problems, peptic ulcer disease, malignancy, reduced admission haemoglobin and concomitant use of other anti-clotting agents (e.g. antiplatelet drugs and thrombolytics) [152, 165,166,167]. Independent risk factors associated with markedly elevated initial aPTT values on UFH (≥2.75 times control) are older age, female sex, lower weight and renal dysfunction [156]. However, aPTT values correlate inconsistently with UFH-associated bleeding [156, 165, 167] and patients can suffer serious bleeding when the aPTT is in the therapeutic range, indicating that underlying clinical predictors appear stronger bleeding risk factors than aPTT [165]. No significant differences in bleeding rates have been observed in patients administered UFH according to nomograms, compared to non-nomogram dosing [158].

Heparin-induced thrombocytopaenia

HIT is an antibody-mediated ADR. Antibody formation, thrombocytopaenia and thrombosis occur in up to 8%, 1–5% and 0.2–1.3% of heparin-exposed patients, respectively [168]. Thrombosis in HIT is associated with a 20–30% risk of mortality [169]. The pathophysiology of HIT involves heparin binding to platelet factor 4, subsequent autoantibody production, and then the binding of IgG autoantibodies to the platelet surface stimulating platelet activation [170].

The risk of developing HIT is greater with UFH than LMWH for surgical patients [171], although this has not been confirmed in medical patients [172]. Therapeutic dose UFH poses an elevated risk of HIT compared to prophylactic dose UFH [173], and female patients are at higher risk of HIT [174]. The 4Ts pre-test clinical scoring system has been developed that incorporates thrombocytopaenia, the timing of platelet count fall, thrombosis, and other possible causes for observed thrombocytopaenia. Whilst the 4Ts score has an excellent negative predictive value, its positive predictive value remains suboptimal [175].

Genetic factors may be important in predisposing to HIT. Early candidate gene studies suggested some associations [176], which were not replicated, including an association of the homozygous 131RR genotype in the IgG receptor IIa gene, FCGR2A, with thrombosis in HIT patients [177]. More recently, genome-wide approaches have also been utilised. Karnes et al. in a study comparing 67 HIT cases with 884 heparin-exposed controls, reported that SNPs near the T-cell death-associated gene 8 (TDAG8) are associated with HIT in a recessive model, with the strongest association for the imputed SNP, rs10782473, with an OR 18.52 (95% CI 6.33–54.23) [178]. The most strongly associated genotyped SNP, rs1887289, leads to decreased TDAG8 transcription in cis-expression quantitative trait loci (eQTL) studies of healthy individuals, and is in moderate linkage disequilibrium with a TDAG8 missense SNP (rs3742704) [178].

More recently, a larger GWAS comparing anti-PF4 antibody positive patients who were also positive in the functional assay (n = 1269) with antibody positive functional assay-negative controls (n = 1131) and antibody negative controls (n = 1766) showed an association with the ABO blood group locus, with the O blood group being identified as a risk factor (OR, 1.42; 95% CI, 1.26–1.61; P = 3.09 × 10−8) for thrombosis in HIT [179]. Since the blood group is already known in most patients, there should perhaps be extra caution in blood group O patients who develop thrombocytopenia on heparin treatment. A subsequent GWAS that investigated the association with anti-PF4/heparin antibodies returned no genome-wide significant hits [180].

Hyperkalaemia

Heparin can lead to reversible hypoaldosteronism, resulting in a decrease in blood sodium and increase in potassium levels [181], which can predispose to hyperkalaemia. The most important mechanism appears to be a decrease in both the number and affinity of angiotensin II receptors in the zona glomerulosa [182]. Serum potassium levels above the upper limit of normal occur in ~7% of patients on heparin [182] and usually occur within 14 days of initiating heparin therapy [182]. The risk of hyperkalaemia appears higher with UFH than LMWHs [183]. Heparin-associated hyperkalaemia usually requires the presence of additional risk factors that perturb potassium homoeostasis including diabetes mellitus, metabolic acidosis, renal dysfunction and concomitant medications including spironolactone, angiotensin-converting enzyme inhibitors, trimethoprim and nonsteroidal anti-inflammatory drugs [182,183,184,185].

Low molecular weight heparins

LMWHs are derived from UFH through chemical or enzymatic depolymerisation, have approximately one third the molecular weight of UFH (Table 3), and have largely superseded UFH. LMWHs have reduced anti-IIa activity relative to anti-Xa activity because of their shorter molecular length (mean weight corresponds to ~15 saccharide units [144]), a greater bioavailability and a longer duration of anticoagulant effect than UFH, permitting once/twice daily dosing. Although molecular and thus pharmacological heterogeneity still exists within and between LWMHs, it is less pronounced than for UFH, meaning LMWHs are routinely prescribed without monitoring. In VTE treatment, LMWH is used for both rapid anticoagulation during warfarinisation (LMWH therapy continuing until a stable therapeutic INR has been achieved), and when heparin-based anticoagulation is indicated for the duration of VTE treatment (e.g. in pregnancy). The dosing of LMWHs is mostly fixed for VTE thromboprophylaxis, but is weight-based for VTE treatment.

LMWH anti-Xa monitoring

Although LMWH therapy is generally unmonitored, monitoring has been suggested in specific clinical settings including adult patients receiving LMWH with concomitant renal dysfunction [186, 187], morbid obesity, during pregnancy, and to check compliance [188, 189]. Consensus-based paediatric guidelines also recommend monitoring therapeutic LMWH in paediatric patients [190]. The recommended monitoring test is the chromogenic anti-Xa assay, which indirectly determines drug concentration (in anti-Xa International Units/mL) by measuring ex vivo the extent to which exogenous factor Xa is inhibited by LMWH-antithrombin complexes present in the patient’s blood sample. Clinical factors associated with anti-Xa activity on LMWH include dose, body weight [191, 192], renal function [193] (see later) and levels of tissue factor pathway inhibitor (TFPI) [194] and TFPI-Xa complexes [194]; the latter two being consistent with heparin-induced TFPI mobilisation [194].

The anti-Xa assay has limitations. Anti-Xa prophylactic and therapeutic index reference ranges are based on expert opinion rather than large prospective trial evidence [186, 187] and are different for different LWMHs, dosing schedules (once vs twice daily dosing) and indications (thromboprophylaxis vs treatment) [187]. Measured anti-Xa activity is affected by the timing of blood collection and interassay variation [195]. Thus, different assays can lead to different clinical decisions regarding optimal dosing in patients on the same LMWH [195]. Greater assay standardisation or assay-specific anti-Xa reference ranges are required.

Importantly, although anti-Xa levels are a marker of LMWH blood concentration, the correlation with clinical endpoints (bleeding, VTE) is inconsistent. For instance, elevated anti-Xa levels have been inconsistently correlated with bleeding [196,197,198], while a negative correlation was found between anti-Xa levels and VTE [198], but other studies found no association [197, 199]. Similarly, anti-Xa activity while on enoxaparin has been associated with increased risk of death or recurrent myocardial infarction, but not bleeding, in one study (n = 803) of acute coronary syndrome patients [200], whilst in a RCT sub-analysis of patients undergoing elective PCI (n = 2298), anti-Xa activity was associated with bleeding, but not death, myocardial infarction or revascularisation [201]. Besides variable definitions of supra- and subtherapeutic anti-Xa activity and the small sample sizes of many studies, the overall lack of reliable associations between anti-Xa activity and clinical events may plausibly be because the global anticoagulant effect of LMWHs involves additional factors besides anti-Xa activity, including anti-IIa activity, platelet levels, and interindividual variations in heparin-binding proteins [202,203,204].

LMWH VTE thromoboprophylaxis in critically ill trauma and surgical patients

Although anti-Xa monitoring has limitations, VTE thromboprophylaxis in patients at higher risk of VTE, principally critically ill trauma and surgical patients, may benefit from anti-Xa level monitoring, and in particular 12-h post dose/trough monitoring. These patients frequently have suboptimal anti-Xa trough levels [205, 206], and peripheral oedema is associated with reduced anti-Xa exposure [205]. Low body weight and multiple organ dysfunction have also been associated with high and low peak anti-Xa levels in intensive care patients, respectively [206]. A study of critically ill trauma and surgical patients reported that patients with low 12 h anti-Xa levels (≤0.1 IU/mL) on a VTE thromboprophylaxis regimen of enoxaparin 30 mg twice daily had an increased risk of DVT [207]. Interestingly in this study, peak anti-Xa levels were not different in those who did and did not develop a DVT [207]. A recent study of dalteparin VTE thromboprophylaxis in high risk trauma patients demonstrated that following transition to anti-Xa monitoring, VTE incidence decreased, and that in patients with 12-h anti-Xa levels available, those with levels <0.1 IU/mL had an increased risk of developing DVT [208]. However, this study also found that increased body weight partially correlated with low anti-Xa activity. Nevertheless, 12-h/trough anti-Xa monitoring of LMWH for VTE thromboprophylaxis in high risk critically ill patients merits further investigation.

Body weight

In general, patients at the extremes of body weight have been under-represented in LMWH RCTs. Although anti-Xa activity is inversely correlated to body weight [191, 192], weight accounts for only 16% of interindividual anti-Xa activity [196]. Nevertheless, 85% of patients receiving prophylactic enoxaparin who are under ≤45 kg of weight have anti-Xa activity ≥0.5 IU/mL [191], which is above the LMWH anti-Xa thromboprophylaxis accepted range for prophylaxis (0.2–0.5 IU/mL [209]). Nevertheless, the mean anti-Xa level was 0.64 IU/mL, which is still at the low end of the therapeutic anti-Xa range (0.5–1.2 IU/mL [209]) [191]. 54% of patients <50 kg have been reported to receive treatment LMWH therapy in excess of 200 IU/kg/day, compared to only 21% of patients weighing 50–100 kg [210]. Furthermore, weighing <50 kg was significantly associated with a higher rate of bleeding complications, although the extent to which this is attributable to low body weight per se remains unclear [211]. Larger studies are required to further investigate the interaction between treatment dose LMWH and low body weight on bleeding risk.

Excessive body weight is itself associated with an increased risk of primary and recurrent VTE [212]. Fixed doses in obese patients correlate with lower anti-Xa activity [192]. A weight based regimen for prophylactic enoxaparin dosing in medically hospitalised severely obese patients (BMI ≥ 40 kg/m2) in a small study (n = 31) significantly improved the proportion of patients with peak anti-Xa levels in the prophylactic therapeutic range [213]. A retrospective analysis of patients undergoing orthopaedic surgery (n = 817) on 40 mg daily fixed dose prophylactic enoxaparin reported that venographically detected VTE occurrence was significantly higher in obese compared to non-obese patients [214]. In sub-analyses of an RCT (n = 3706) comparing fixed dose prophylactic dalteparin to placebo in medical patients, a trend for benefit with dalteparin was present for all BMI categories except for patients with a BMI ≥ 40 kg/m2, suggesting that fixed dose LMWH thromboprophylaxis may be insufficient in severely obese patients [215]. However, within this study, the overall frequency of thrombotic and haemorrhagic events did not differ between obese (defined as BMI ≥ 30 kg/m2 for men and ≥28.6 kg/m2 for women) and non-obese patients [215]. For patients on treatment dose of either enoxaparin or UFH for VTE (n = 2217) in another RCT, patients weighing >100 kg (compared to patients ≤100 kg), and patients whose BMI was ≥30 kg/m2 (compared to those with BMI < 30 kg/m2) did not have a significantly increased risk of VTE recurrence or major bleeding [216].

The product monographs recommend capping maximum daily doses to 18,000 IU (dalteparin) [217], 28,000 IU (tinzaparin) [218], 19,000 IU (nadroparin) [219] and to 18,000 IU and 20,000 IU for once and twice daily dosing of enoxaparin, respectively [220]. There is still limited clinical data available to determine whether dose capping in clinical practice reduces therapeutic LMWH efficacy. Alternatively, there has been concern that increasing LMWH doses based on total body weight in obese patients may predispose to higher-than-predicted anti-Xa levels, with a potential bleeding risk. This is because LMWHs accumulate predominantly in the blood and vascular tissue, and intravascular volume is not linearly related with total body weight [221]. Nevertheless, a recent systematic review summarised the results of four pharmacokinetic studies of LMWH in obesity and concluded that dosing by total body weight does not lead to elevated anti-Xa levels in obese patients; the maximum body weight of a participant was 192kg [222]. Overall, studies involving larger numbers of severely obese patients are required to improving LMWH dosing in this group who are at high risk of thrombotic events.

Renal dysfunction

Renal elimination is preferentially more important to LMWHs than UFH, although the extent of its role in LMWH clearance, compared to cellular metabolism, varies between LMWHs. LMWHs of lower molecular weight (e.g. nadroparin, enoxaparin) preferentially rely on renal elimination whereas higher molecular weight LMWHs (e.g. tinzaparin) concomitantly utilise the cellular route of elimination. Interestingly, the affinity of LMWH fragments for antithrombin also influences elimination pathway propensity, with higher affinity fragments being preferentially eliminated by the cellular saturable route [149].

The major LMWH RCTs generally excluded patients with renal dysfunction. However, clinical studies have reported that enoxaparin anti-Xa exposure in non-haemodialysis patients with CrCl ≤ 30 mL/min is increased at both prophylactic and therapeutic doses [193]. Therapeutic nadroparin accumulates with decreasing renal function [223], but no accumulation was observed with prophylactic nadroparin in patients with a GFR of 30–50 mL/min [224]. No anti-Xa activity accumulation has been determined at prophylactic [193, 225] or therapeutic doses [226, 227] for dalteparin or tinzaparin in renal dysfunction. Interestingly in patients on haemodialysis, no anti-Xa accumulation with prophylactic enoxaparin or prophylactic dalteparin [228] was observed, suggesting that renal replacement therapy removes enoxaparin/dalteparin [193].

Although prophylactic enoxaparin is weakly associated with higher anti-Xa levels in patients with renal dysfunction [229], no excess bleeding has been confirmed, and anti-Xa levels have not differentiated between those with and without serious bleeding events [229]. Importantly, a meta-analysis of 12 studies (n = 4971) found that therapeutic enoxaparin is associated with an increased risk of major bleeding in patients with CrCl ≤ 30 mL/min compared to those with CrCl > 30 mL/min [230]. However, empirical dose reduction of therapeutic enoxaparin in patients with CrCl ≤ 30 mL/min may negate this elevated bleeding risk [230]. Therefore, in patients with renal dysfunction requiring therapeutic LMWH, a reduced enoxaparin dose [187], dalteparin, tinzaparin or UFH appear reasonable selections.

Conclusions

The goal of anticoagulation therapy, whether oral or parenteral, is to safely shift the coagulation system equilibrium further from thrombogenesis in patients with either a regional hypercoagulable (e.g. AF, mechanical heart valve) or systemic hypercoagulable (e.g. antiphospholipid syndrome) predisposition. Although effective, anticoagulation therapy is associated with both thrombotic and haemorrhagic ADRs, as well as unpredictable ADRs. Currently, 50–60% of observed INR variability can be explained in patients on warfarin, with the majority attributable to genetic variation in VKORC1 and CYP2C9. However, whilst the relationship between INR and clinical events is well characterised with respect to the use of warfarin, associations between aPTT or anti-Xa levels and clinical outcomes in patients on UFH or LMWHs, respectively, appear less clear and need further investigation. Furthermore, although factors have been associated with interindividual variation in response to DOACs (e.g. weight, and renal function), UFH (e.g. weight) and LWMHs (e.g. weight, and renal function for enoxaparin), the majority of observed variation in monitoring assays and clinical outcomes remains unexplained. Therefore, further research and large-scale anticoagulation therapy studies are required, especially considering their widespread and increasing use and the potential severity of adverse effects (bleeding or thrombosis). Priority research areas include: determining if extreme DOAC systemic exposures are associated with adverse clinical outcomes, conducting larger studies involving patients typically excluded from anticoagulation RCTs (e.g. at the extremes of weight, renal dysfunction), identifying novel biomarkers associated with differential anticoagulant response via systematic utilisation of omics- technologies (e.g. genomics, proteomics, metabolomics), and development of better methods to improve warfarin anticoagulation in under-served populations where usage is high. Large scale studies powered for clinical endpoints would be ideal and would help resolve the uncertainties arising from conflicting smaller studies. However, well designed studies using established anticoagulation biomarkers such as INR and anti-Xa would also be acceptable, and are likely to be cheaper and smaller than clinical end-point studies. Larger trials will most likely need international collaboration which inevitably will increase cost and complexity. Ultimately, clinicians strive for primum non nocere (‘first, do not harm’). This is highly relevant with anticoagulation where therapy is aiming to strike a fine balance between bleeding and thrombotic risks. Precision anticoagulant prescribing through better choice of either dose and/or drug may help in achieving this balance, but unfortunately, we are ‘not there yet’.

Data availability

This is a review article and all the relevant papers used for the review have been cited. No additional original data was used in the writing of the review, and therefore no specific data needs to be made available.

References

Health & Social Care Information Centre (HSCIC) (2015). Prescription Cost Analysis, England - 2015

Health & Social Care Information Centre (HSCIC) (2014). Prescription Cost Analysis England 2014.

Anticoagulation Europe (UK) Commissioning effective anticoagulation services for the future. 2012.

Afzal S, Zaidi STR, Merchant HA, Babar ZU, Hasan SS. Prescribing trends of oral anticoagulants in England over the last decade: a focus on new and old drugs and adverse events reporting. J Thromb Thrombolysis. 2021;52:646–53.

Spear BB, Heath-Chiozzi M, Huff J. Clinical application of pharmacogenetics. Trends Mol Med. 2001;7:201–4.

Hobbs FD, Fitzmaurice DA, Mant J, Murray E, Jowett S, Bryan S, et al. A randomised controlled trial and cost-effectiveness study of systematic screening (targeted and total population screening) versus routine practice for the detection of atrial fibrillation in people aged 65 and over. The SAFE study. Health Technol Assess. 2005;9:iii–iv, ix-x, 1-74.

Tieleman RG, Plantinga Y, Rinkes D, Bartels GL, Posma JL, Cator R, et al. Validation and clinical use of a novel diagnostic device for screening of atrial fibrillation. Europace. 2014;16:1291–5.

Johansson C, Hägg L, Johansson L, Jansson J-H. Characterization of patients with atrial fibrillation not treated with oral anticoagulants. Scand J Prim Health Care. 2014;32:226–31.

Turner RM, Park BK, Pirmohamed M. Parsing interindividual drug variability: an emerging role for systems pharmacology. Wiley Interdiscip Rev Syst Biol Med. 2015;7:221–41.

Pirmohamed M, James S, Meakin S, Green C, Scott AK, Walley TJ, et al. Adverse drug reactions as cause of admission to hospital: prospective analysis of 18 820 patients. BMJ (Clin Res ed). 2004;329:15–9.

Pirmohamed M. Warfarin: almost 60 years old and still causing problems. Br J Clin Pharm. 2006;62:509–11.

Jones M, McEwan P, Morgan CL, Peters JR, Goodfellow J, Currie CJ. Evaluation of the pattern of treatment, level of anticoagulation control, and outcome of treatment with warfarin in patients with non-valvar atrial fibrillation: a record linkage study in a large British population. Heart. 2005;91:472–7.

Boulanger L, Kim J, Friedman M, Hauch O, Foster T, Menzin J. Patterns of use of antithrombotic therapy and quality of anticoagulation among patients with non-valvular atrial fibrillation in clinical practice. Int J Clin Pract. 2006;60:258–64.

Wysowski DK, Nourjah P, Swartz L. Bleeding complications with warfarin use: a prevalent adverse effect resulting in regulatory action. Arch Intern Med. 2007;167:1414–9.

Gulløv AL, Koefoed BG, Petersen P. Bleeding during warfarin and aspirin therapy in patients with atrial fibrillation: the AFASAK 2 Study. Arch Intern Med. 1999;159:1322–8.

Gage BF, Eby C, Milligan PE, Banet GA, Duncan JR, McLeod HL. Use of pharmacogenetics and clinical factors to predict the maintenance dose of warfarin. Thromb Haemost. 2004;91:87–94.

Wells PS, Holbrook AM, Crowther NR, Hirsh J. Interactions of warfarin with drugs and food. Ann Intern Med. 1994;121:676–83.

Gage BF, Eby C, Johnson JA, Deych E, Rieder MJ, Ridker PM, et al. Use of pharmacogenetic and clinical factors to predict the therapeutic dose of warfarin. Clin Pharm Ther. 2008;84:326–31.

Absher RK, Moore ME, Parker MH, Rivera-Miranda G, Perreault MM. Patient-specific factors predictive of warfarin dosage requirements. Ann Pharmacother. 2002;36:1512–7.

Sconce E, Avery P, Wynne H, Kamali F. Vitamin K supplementation can improve stability of anticoagulation for patients with unexplained variability in response to warfarin. Blood. 2007;109:2419–23.

Ansell J, Hirsh J, Dalen J, Bussey H, Anderson D, Poller L, et al. Managing oral anticoagulant therapy. Chest. 2001;119:22S–38S.

Faber MS, Fuhr U. Time response of cytochrome P450 1A2 activity on cessation of heavy smoking. Clin Pharm Ther. 2004;76:178–84.

Johnson JA, Cavallari LH. Warfarin pharmacogenetics. Trends Cardiovasc Med. 2015;25:33–41.

Lee CR, Goldstein JA, Pieper JA. Cytochrome P450 2C9 polymorphisms: a comprehensive review of the in-vitro and human data. Pharmacogenetics. 2002;12:251–63.

Johnson JA, Gong L, Whirl-Carrillo M, Gage BF, Scott SA, Stein CM, et al. Clinical Pharmacogenetics Implementation Consortium guidelines for CYP2C9 and VKORC1 genotypes and warfarin dosing. Clin Pharm Ther. 2011;90:625–9.

Yang J, Chen Y, Li X, Wei X, Chen X, Zhang L, et al. Influence of CYP2C9 and VKORC1 genotypes on the risk of hemorrhagic complications in warfarin-treated patients: a systematic review and meta-analysis. Int J Cardiol. 2013;168:4234–43.

Higashi MK, Veenstra DL, Kondo LM, Wittkowsky AK, Srinouanprachanh SL, Farin FM. Association between CYP2C9 genetic variants and anticoagulation-related outcomes during warfarin therapy. JAMA. 2002;287:1690–8.

Aquilante CL, Langaee TY, Lopez LM, Yarandi HN, Tromberg JS, Mohuczy D, et al. Influence of coagulation factor, vitamin K epoxide reductase complex subunit 1, and cytochrome P450 2C9 gene polymorphisms on warfarin dose requirements. Clin Pharmacol Ther. 2006;79:291–302.

Carlquist JF, Horne BD, Muhlestein JB, Lappé DL, Whiting BM, Kolek MJ, et al. Genotypes of the cytochrome p450 isoform, CYP2C9, and the vitamin K epoxide reductase complex subunit 1 conjointly determine stable warfarin dose: a prospective study. J Thromb Thrombolysis. 2006;22:191–7.

Sconce EA, Khan TI, Wynne HA, Avery P, Monkhouse L, King BP, et al. The impact of CYP2C9 and VKORC1 genetic polymorphism and patient characteristics upon warfarin dose requirements: proposal for a new dosing regimen. Blood. 2005;106:2329–33.

Wadelius M, Chen LY, Lindh JD, Eriksson N, Ghori MJR, Bumpstead S, et al. The largest prospective warfarin-treated cohort supports genetic forecasting. Blood. 2009;113:784–92.

Schwarz UI. Clinical relevance of genetic polymorphisms in the human CYP2C9 gene. Eur J Clin Investig. 2003;33:23–30.

Tai G, Farin F, Rieder MJ, Dreisbach AW, Veenstra DL, Verlinde CLMJ, et al. In-vitro and in-vivo effects of the CYP2C9*11 polymorphism on warfarin metabolism and dose. Pharmacogenet Genom. 2005;15:475–81.

Perera MA, Cavallari LH, Limdi NA, Gamazon ER, Konkashbaev A, Daneshjou R, et al. Genetic variants associated with warfarin dose in African-American individuals: a genome-wide association study. Lancet. 2013;382:790–6.

Takeuchi F, McGinnis R, Bourgeois S, Barnes C, Eriksson N, Soranzo N, et al. A genome-wide association study confirms VKORC1, CYP2C9, and CYP4F2 as principal genetic determinants of warfarin dose. PLoS Genet. 2009;5:e1000433.

Cha PC, Mushiroda T, Takahashi A, Kubo M, Minami S, Kamatani N, et al. Genome-wide association study identifies genetic determinants of warfarin responsiveness for Japanese. Hum Mol Genet. 2010;19:4735–44.

Yuan HY, Chen JJ, Lee MT, Wung JC, Chen YF, Charng MJ, et al. A novel functional VKORC1 promoter polymorphism is associated with inter-individual and inter-ethnic differences in warfarin sensitivity. Hum Mol Genet. 2005;14:1745–51.

Johnson JA, Cavallari LH. Pharmacogenetics and cardiovascular disease–implications for personalized medicine. Pharmacol Rev. 2013;65:987–1009.

Reitsma PH, van der Heijden JF, Groot AP, Rosendaal FR, Buller HR. A C1173T dimorphism in the VKORC1 gene determines coumarin sensitivity and bleeding risk. PLoS Med. 2005;2:e312.

Schwarz UI, Ritchie MD, Bradford Y, Li C, Dudek SM, Frye-Anderson A, et al. Genetic determinants of response to warfarin during initial anticoagulation. N Engl J Med. 2008;358:999–1008.

Crawford DC, Ritchie MD, Rieder MJ. Identifying the genotype behind the phenotype: a role model found in VKORC1 and its association with warfarin dosing. Pharmacogenomics. 2007;8:487–96.

Limdi NA, McGwin G, Goldstein JA, Beasley TM, Arnett DK, Adler BK, et al. Influence of CYP2C9 and VKORC1 1173C/T genotype on the risk of hemorrhagic complications in African-American and European-American patients on warfarin. Clin Pharmacol Ther. 2008;83:312–21.

Loebstein R, Dvoskin I, Halkin H, Vecsler M, Lubetsky A, Rechavi G, et al. A coding VKORC1 Asp36Tyr polymorphism predisposes to warfarin resistance. Blood. 2007;109:2477–80.

Borgiani P, Ciccacci C, Forte V, Sirianni E, Novelli L, Bramanti P, et al. CYP4F2 genetic variant (rs2108622) significantly contributes to warfarin dosing variability in the Italian population. Pharmacogenomics. 2009;10:261–6.

Daneshjou R, Gamazon ER, Burkley B, Cavallari LH, Johnson JA, Klein TE, et al. Genetic variant in folate homeostasis is associated with lower warfarin dose in African Americans. Blood 2014;124:2298–2305.

Klein TE, Altman RB, Eriksson N, Gage BF, Kimmel SE, Lee MT, et al. Estimation of the warfarin dose with clinical and pharmacogenetic data. N Engl J Med. 2009;360:753–64.

Johnson JA, Caudle KE, Gong L, Whirl-Carrillo M, Stein CM, Scott SA, et al. Clinical Pharmacogenetics Implementation Consortium (CPIC) Guideline for Pharmacogenetics-Guided Warfarin Dosing: 2017 update. Clin Pharm Ther. 2017;102:397–404.

Giugliano RP, Ruff CT, Braunwald E, Murphy SA, Wiviott SD, Halperin JL, et al. Edoxaban versus Warfarin in patients with atrial fibrillation. N Engl J Med. 2013;369:2093–104.

Mega JL, Walker JR, Ruff CT, Vandell AG, Nordio F, Deenadayalu N, et al. Genetics and the clinical response to warfarin and edoxaban: findings from the randomised, double-blind ENGAGE AF-TIMI 48 trial. Lancet. 2015;385:2280–7.

Vandell AG, Walker J, Brown KS, Zhang G, Lin M, Grosso MA, et al. Genetics and clinical response to warfarin and edoxaban in patients with venous thromboembolism. Heart. 2017;103:1800.

Pirmohamed M, Burnside G, Eriksson N, Jorgensen AL, Toh CH, Nicholson T, et al. A randomized trial of genotype-guided dosing of warfarin. N Engl J Med. 2013;369:2294–303.

Kimmel SE, French B, Kasner SE, Johnson JA, Anderson JL, Gage BF, et al. A pharmacogenetic versus a clinical algorithm for warfarin dosing. N Engl J Med. 2013;369:2283–93.

Jorgensen AL, Prince C, Fitzgerald G, Hanson A, Downing J, Reynolds J, et al. Implementation of genotype-guided dosing of warfarin with point-of-care genetic testing in three UK clinics: a matched cohort study. BMC Med. 2019;17:76.

Gage BF, Bass AR, Lin H, Woller SC, Stevens SM, Al-Hammadi N, et al. Effect of genotype-guided warfarin dosing on clinical events and anticoagulation control among patients undergoing hip or knee arthroplasty: the GIFT randomized clinical trial. JAMA. 2017;318:1115–24.

Vuorinen AL, Lehto M, Niemi M, Harno K, Pajula J, van Gils M, et al. Pharmacogenetics of anticoagulation and clinical events in warfarin-treated patients: a register-based cohort study with Biobank Data and National Health Registries in Finland. Clin Epidemiol. 2021;13:183–95.

Kim K, Duarte JD, Galanter WL, Han J, Lee JC, Cavallari LH, et al. Pharmacist-guided pharmacogenetic service lowered warfarin-related hospitalizations. Pharmacogenomics. 2023;24:303–14.

Asiimwe IG, Zhang EJ, Osanlou R, Jorgensen AL, Pirmohamed M. Warfarin dosing algorithms: a systematic review. Br J Clin Pharm. 2021;87:1717–29.

Asiimwe IG, Zhang EJ, Osanlou R, Krause A, Dillon C, Suarez-Kurtz G, et al. Genetic factors influencing Warfarin dose in Black-African Patients: a systematic review and meta-analysis. Clin Pharm Ther. 2020;107:1420–33.

Lindley KJ, Limdi NA, Cavallari LH, Perera MA, Lenzini P, Johnson JA, et al. Warfarin dosing in patients with CYP2C9*5 variant alleles. Clin Pharm Ther. 2022;111:950–5.

Ruff CT, Giugliano RP, Braunwald E, Hoffman EB, Deenadayalu N, Ezekowitz MD, et al. Comparison of the efficacy and safety of new oral anticoagulants with warfarin in patients with atrial fibrillation: a meta-analysis of randomised trials. Lancet. 2014;383:955–62.

Loffredo L, Perri L, Violi F. Impact of new oral anticoagulants on gastrointestinal bleeding in atrial fibrillation: a meta-analysis of interventional trials. Dig Liver Dis. 2015;47:429–31.

van der Hulle T, Kooiman J, den Exter PL, Dekkers OM, Klok FA, Huisman MV. Effectiveness and safety of novel oral anticoagulants as compared with vitamin K antagonists in the treatment of acute symptomatic venous thromboembolism: a systematic review and meta-analysis. J Thromb Haemost. 2014;12:320–8.

Adam SS, McDuffie JR, Lachiewicz PF, Ortel TL, Williams JW Jr. Comparative effectiveness of new oral anticoagulants and standard thromboprophylaxis in patients having total hip or knee replacement: a systematic review. Ann Intern Med. 2013;159:275–84.

Gómez-Outes A, Terleira-Fernández AI, Suárez-Gea ML, Vargas-Castrillón E. Dabigatran, rivaroxaban, or apixaban versus enoxaparin for thromboprophylaxis after total hip or knee replacement: systematic review, meta-analysis, and indirect treatment comparisons. BMJ (Clinical research ed) 2012;344:e3675.

Eikelboom JW, Connolly SJ, Brueckmann M, Granger CB, Kappetein AP, Mack MJ, et al. Dabigatran versus warfarin in patients with mechanical heart valves. N Engl J Med. 2013;369:1206–14.