Abstract

Signal processing has become central to many fields, from coherent optical telecommunications, where it is used to compensate signal impairments, to video image processing. Image processing is particularly important for observational astronomy, medical diagnosis, autonomous driving, big data and artificial intelligence. For these applications, signal processing has traditionally been performed electronically. However, these and newer applications, particularly those involving real-time video image processing, are creating unprecedented demand for ultrahigh performance, including high bandwidth and reduced energy consumption. Here, we demonstrate a photonic signal processor operating at 17 Terabits/s and use it to process video image signals in real time. The system processes 400,000 video signals concurrently, performing 34 functions simultaneously that are key to object edge detection, edge enhancement and motion blur. Compared with spatial-light devices used for image processing, our system is not only ultrahigh speed but also highly reconfigurable and programmable, able to perform many different functions without any change to the physical hardware. Our approach is based on an integrated Kerr soliton crystal microcomb, and opens up new avenues for ultrafast robotic vision and machine learning.


Introduction

Image processing, the application of signal processing techniques to photographs or videos, is a core part of emerging technologies such as machine learning and robotic vision1, with applications in LIDAR for self-driving cars2, remote drones3, automated in-vitro cell-growth tracking for virus and cancer analysis4, optical neural networks5, ultrahigh-speed imaging6,7, holographic three-dimensional (3D) displays8,9, and others. Many of these require real-time processing of massive amounts of real-world information, placing extremely high demands on the processing speed (bandwidth) and throughput of image processing systems. While electrical digital signal processing (DSP) technologies10 are well established, they face intrinsic limitations in energy consumption and processing speed, such as the well-known von Neumann bottleneck11.

To overcome these limitations, optical signal processing offers the potential for much higher speeds2, and this has been achieved using a variety of techniques including silicon photonic crystal metasurfaces12, surface plasmonic structures13, and topological interfaces14. These free-space, spatial-light devices offer many attractions such as compact footprint, low power consumption, and compatibility with commercial cameras and optical microscopes. However, they tend to be non-reconfigurable systems designed to perform a single fixed function. On a more advanced level, human action recognition through processing of video image data has been achieved using photonic computers15,16. However, these demonstrations used either comparatively low-speed systems15 or high-bandwidth (multi-TeraOP regime) systems based on bulk optics that are incompatible with integration16. To date, optical systems, especially those compatible with integration17, have not yet been shown to process large data sets of high-definition video images at ultrahigh speeds, fast enough for real-time video image processing.

Here, we demonstrate an optical real-time signal processor for video images that is reconfigurable and compatible with integration. It is based on components that are either already integrated or have been demonstrated in integrated form, and operates at an ultrahigh bandwidth of 17 Terabits/s. This is sufficient to process ~400,000 (399,061) video signals both concurrently and in real-time, performing up to 34 functions on each signal simultaneously.

Here, the term “functions” refers to signal processing operations, composed of fundamental mathematical operations performed by the system, which in our case relate to object image edge enhancement, edge detection and motion blur. These functions operate on the input signal to extract or enhance these key characteristics, and include integral and fractional order differentiation, integral and fractional order Hilbert transforms, and integration. For differentiation and Hilbert transforms we perform both integral order and a continuous range of fractional order transforms. Therefore, while we perform 3 basic types of functions, including a range of integral and fractional orders gives 34 functions in total. Importantly, all 34 functions are achieved without any change in hardware, simply by tuning the parameters of the system. Furthermore, the range of possible functions is in principle unlimited, since the system can implement a continuous range of arbitrary fractional and high-order differentiation and fractional Hilbert transforms.

Our system is comparable to electrical DSP systems, but with the important advantage that it operates at multi-terabit/s speeds, enabled by massively parallel processing. It is also very general, flexible, and highly reconfigurable, able to perform a wide range of functions without requiring any change in hardware. We perform multiple image processing functions in real time that are essential for machine vision and microscopy, for tasks such as object recognition or identification, feature capture, and data compression12,13. We use an integrated Kerr soliton crystal microcomb source that generates 95 discrete wavelengths, or taps, as the basis for massively parallel processing, with single-channel rates of 64 GigaBaud (pixels/s). Our experimental results agree well with theory, demonstrating that the processor is a powerful approach for ultrahigh-speed video image processing for robotic vision, machine learning, and many other emerging applications.

Results

Principle of operation

The operational principle of the video image processor is based on the RF photonic transversal filter approach18,19,20, as represented by Eq. (1) and illustrated in Fig. 1a–c(i–iii). We employ wavelength division multiplexed (WDM) signals to provide the different taps, or channels, with each wavelength representing a single tap/channel. WDM is also our central means of performing both single and multiple functions simultaneously. The wavelength (i.e., tap or channel) dependent delays required by Eq. (1) are produced by an optical delay line in the form of standard single-mode fiber (SMF). We use a WaveShaper to flatten the comb, to implement the channel weights of the transversal filter for each function, and to separate different groups of wavelengths so that multiple functions can be processed in parallel and simultaneously. We used a maximum of 95 wavelengths supplied by a soliton crystal Kerr microcomb with a comb spacing of ~50 GHz. The transfer function of the system is given by

\[H(\omega)=\sum_{n=0}^{M-1} h(n)\,e^{-j\omega nT} \tag{1}\]

where ω is the RF angular frequency, M is the number of taps, T is the time delay between adjacent taps (i.e., wavelength channels), and h(n) is the tap coefficient of the nth tap, or wavelength, which can be calculated by performing the inverse Fourier transform of H(ω)18,19,20. In Eq. (1), the tap coefficients can be tailored by shaping the power of the comb lines according to the desired computing function (e.g., differentiation, integration, or Hilbert transformation), thus enabling different video image processing functions. For microcombs with multiple equally spaced comb lines transmitted over the dispersive SMF, T in Eq. (1) is given by \(T=D\times L\times \Delta\lambda\), where D is the dispersion coefficient of the SMF, L is the length of the SMF, and Δλ is the spectral separation between adjacent comb lines (in our case 48.9 GHz, or about 0.39 nm at 1550 nm). The RF operation bandwidth of the system is then f = 1/T. The optical delay lines are crucial for simultaneous processing: by introducing controlled, wavelength-dependent time delays to the different channels, they allow the functions to be processed independently and in parallel. These delays match the requirements of the transversal filter transfer function (Eq. 1) and ensure that the input signals for each function are properly aligned and synchronized. Changing the system bandwidth requires changing the time delay between adjacent wavelengths. While this delay is generally fixed for a given system, it can be changed either by using a different length of SMF or by adding a length of dispersion compensating fiber (DCF), which reduces the net dispersion of the link and is equivalent to shortening the SMF. Dynamic tuning of the RF bandwidth would require a tunable delay line, which is beyond the scope of this work.
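As a rough numerical illustration of \(T=D\times L\times \Delta\lambda\) and f = 1/T, the Python sketch below converts a comb spacing in GHz to a wavelength spacing. It then evaluates the tap delay for the comb spacings (2 or 3 FSRs) and fiber lengths (1.838 km or 3.96 km) discussed later in this section. The dispersion D = 17 ps/(nm·km) is an assumed typical value for standard SMF and is not taken from this work, so the printed bandwidths are indicative only. The function names are illustrative.

```python
# Sketch: tap delay T = D * L * delta_lambda and RF operation bandwidth f = 1/T.
# Assumption: D = 17 ps/(nm*km), a typical value for standard SMF near 1550 nm;
# the dispersion of the fiber actually used is not given in this section.

C_M_PER_S = 299_792_458.0  # speed of light in vacuum


def spacing_nm(delta_f_hz: float, center_nm: float = 1550.0) -> float:
    """Convert a comb line spacing in Hz to a wavelength spacing in nm (small-spacing approximation)."""
    center_m = center_nm * 1e-9
    return center_m ** 2 * delta_f_hz / C_M_PER_S * 1e9


def tap_delay_ps(d_ps_per_nm_km: float, length_km: float, delta_lambda_nm: float) -> float:
    """T = D * L * delta_lambda, in picoseconds."""
    return d_ps_per_nm_km * length_km * delta_lambda_nm


def rf_bandwidth_ghz(t_ps: float) -> float:
    """f = 1/T; with T in ps, 1/T expressed in GHz is 1000 / T."""
    return 1e3 / t_ps


if __name__ == "__main__":
    FSR_HZ = 48.9e9
    D_ASSUMED = 17.0  # ps/(nm*km), assumed typical SMF value
    for n_fsr in (2, 3):
        for length_km in (1.838, 3.96):
            t = tap_delay_ps(D_ASSUMED, length_km, spacing_nm(n_fsr * FSR_HZ))
            print(f"{n_fsr} FSR, {length_km} km: T = {t:.1f} ps, f = {rf_bandwidth_ghz(t):.1f} GHz")
```

Because f scales as 1/(D·L·Δλ), doubling either the fiber length or the selected comb spacing halves the operation bandwidth.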

PD photodetector. a Illustration of the flattening method applied to the input video frames, both horizontally and vertically. b Schematic of the experimental setup for video image processing. c Processed video frames after (i) 0.5-order differentiation for edge detection, (ii) integration for motion blur, and (iii) Hilbert transformation for edge enhancement.

All 95 wavelengths from the microcomb were passed through a single-output-port WaveShaper, which flattened the comb and weighted the individual lines according to the tap weights required for the function being performed. The weighted wavelengths were then passed through an electro-optic modulator driven by the analog input video signal. After the SMF delay line, the output function was generated by summing all of the wavelengths, which was achieved by detecting them together on a photodetector.
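The weight, modulate, delay and sum chain described above can be modelled numerically as a weighted sum of delayed copies of the input. The sketch below makes two simplifying assumptions. First, adjacent taps are delayed by exactly one symbol period. Second, tap weights are signed real numbers; how negative weights are realized optically is not modelled. `transversal_filter` and `transfer_function` are hypothetical helper names, not part of any library. The second function evaluates Eq. (1) directly.

```python
import numpy as np


def transversal_filter(x, taps):
    """Weighted sum of delayed copies: y[k] = sum_n h[n] * x[k - n].

    Assumes adjacent wavelength channels are delayed by exactly one symbol period.
    """
    x = np.asarray(x, dtype=float)
    y = np.zeros(len(x) + len(taps) - 1)
    for n, h in enumerate(taps):
        # Channel n carries the same modulated signal, weighted by h and delayed by n symbols;
        # the photodetector output is the sum over all channels.
        y[n:n + len(x)] += h * x
    return y


def transfer_function(taps, f_hz, t_s):
    """Evaluate H(omega) = sum_n h(n) * exp(-j * omega * n * T) at the frequencies f_hz."""
    n = np.arange(len(taps))
    omega = 2 * np.pi * np.atleast_1d(np.asarray(f_hz, dtype=float))
    return np.exp(-1j * np.outer(omega, n) * t_s) @ np.asarray(taps, dtype=float)


if __name__ == "__main__":
    step = [0, 0, 1, 1, 1]
    # A first-order difference (taps [1, -1]) responds only where the input changes.
    print(transversal_filter(step, [1, -1]))
    print(abs(transfer_function([1, -1], [0.0, 25e9], 20e-12)))
```

Under the one-symbol-delay assumption, the time-domain sum is an ordinary discrete convolution, so it matches `np.convolve(x, taps)`.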

The setup for the massively parallel signal processing demonstration is shown in Fig. 2, which uses an approach similar to that of our ultrahigh-speed optical convolution accelerator5. Figure 2 shows the results for 34 different functions, which are listed in detail along with their individual parameters in Supp. Table S1. For this ultrahigh-speed demonstration we used fewer taps for each function, typically 5, to increase the number of functions that could be performed simultaneously (34 in total) while maximizing the overall speed, or bandwidth. We found that 5 taps was the minimum number that gave good performance for the desired functions, striking a balance between complexity and efficiency while minimizing the number of required elements. As in the initial tests, the microcomb lines were fed into a WaveShaper, weighted, and directed to an EO modulator followed by the SMF delay fiber. Finally, the generated RF signal for each function was resampled and converted back to digital video image frames, which formed the digital output signal of the system.

EDFA erbium doped fiber amplifier, MRR micro-ring resonator, EOM electro-optical Mach-Zehnder modulator, SMF single-mode fiber, WS WaveShaper, PD photodetector, OC optical coupler. Detailed parameters for each function are given in Supp. Table S1.

In our experiments, the analog input video image frames were first digitized, flattened into 1D vectors (X), and encoded as the intensities of temporal symbols in a serial electrical waveform by a high-speed digital-to-analog converter with a resolution of 8 bits per symbol at a symbol rate of 64 GBaud (see Methods). In principle, for analog video signals this A/D and D/A step can be avoided. We added it because it allowed us to dramatically increase the speed of the video signal beyond standard video rates, in order to fully exploit the ultrahigh speed of our processor.
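The flattening step can be sketched as a raster scan, either row by row (horizontal) or column by column (vertical), followed by 8-bit quantization. The sketch below is a minimal version under stated assumptions. It uses linear min/max scaling to 256 levels, which is an assumed mapping rather than the exact encoding used here. The helper names are hypothetical. A difference taken on a row-wise sequence responds to intensity changes along each row. It also produces an artifact at the first pixel of each new row, because the previous row's last pixel is treated as its neighbour.

```python
import numpy as np


def flatten_rows(frame):
    """Raster-scan a 2D frame row by row into a 1D symbol sequence (horizontal flattening)."""
    return np.asarray(frame).reshape(-1)


def flatten_cols(frame):
    """Scan column by column instead (vertical flattening)."""
    return np.asarray(frame).T.reshape(-1)


def unflatten_cols(vec, shape):
    """Invert flatten_cols for a frame of the given (rows, cols) shape."""
    return np.asarray(vec).reshape(shape[::-1]).T


def quantize_8bit(vec):
    """Map values linearly onto 256 levels (8 bits per symbol); assumed min/max scaling."""
    v = np.asarray(vec, dtype=float)
    lo, hi = v.min(), v.max()
    if hi == lo:
        return np.zeros(v.shape, dtype=np.uint8)
    return np.round((v - lo) / (hi - lo) * 255).astype(np.uint8)


if __name__ == "__main__":
    frame = np.array([[0, 0, 9, 9], [0, 0, 9, 9]])
    symbols = quantize_8bit(flatten_rows(frame))
    # First-order difference on the serial sequence, reshaped back into the frame layout;
    # note the row-wrap artifact at the start of the second row.
    diff = np.diff(symbols.astype(int), prepend=int(symbols[0]))
    print(diff.reshape(frame.shape))
```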

The use of WDM and WaveShapers allows for very flexible allocation of wavelengths and highly reconfigurable tuning. By employing these components in a carefully designed configuration where each function only requires a limited number of wavelengths, multiple functions can be simultaneously processed. By configuring the WaveShapers appropriately according to the transfer function, different functions can be applied to different groups of wavelength channels, facilitating simultaneous processing of multiple functions across the entire microcomb spectrum.

In the experiments presented here, we demonstrate real-time video image processing, simultaneously executing 34 functions encompassing edge enhancement, edge detection, and motion blur. Edge detection serves as the foundation for object detection, feature capture, and data compression12,13. We achieve this by temporal signal differentiation with either high integral or fractional order derivatives that extract information about object boundaries within images or videos. We also perform a motion blur function based on signal integration that represents the apparent streaking of moving objects in images or videos. It usually occurs when the image being recorded changes during the recording of a single exposure, and has wide applications in computer animation and graphics21. Edge enhancement or sharpening based on signal Hilbert transformation is also a fundamental processing function with wide applications22. It enhances the edge contrast of images or videos, thus improving their acutance. The standard Hilbert transform implements a 90 degree phase shift and is commonly used in signal processing to generate a complex analytic signal from a real-valued signal. We also employ arbitrary, or fractional order, Hilbert transforms which have been shown to be particularly useful for object image edge enhancement23. These processing functions not only underpin conventional image or video processing24,25 but also facilitate emerging technologies such as robotic vision and machine learning2,4.
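The standard and fractional Hilbert transforms described above can be expressed as tap sets. The sketch below assumes the textbook ideal discrete Hilbert kernel, 2/(πn) for odd n and zero for even n, truncated to 2K + 1 centred taps. It combines that kernel with a weighted centre tap to give H(ω) = exp(−jφ·sgn ω). This is not necessarily how the tap weights in this work were designed. A causal implementation would shift the indices by K, which only adds a pure delay (linear phase). The function names are hypothetical. Because the kernel is truncated, the realized phase for φ ≠ 90° is only approximate.

```python
import numpy as np


def fractional_hilbert_taps(phase_deg, half_len):
    """Centred taps n = -K..K approximating H(omega) = exp(-j * phi * sgn(omega)).

    h(n) = cos(phi) * delta(n) + sin(phi) * 2 / (pi * n) for odd n (zero for even n != 0).
    phi = 90 degrees gives the standard Hilbert transform (-90 degree phase shift).
    """
    phi = np.deg2rad(phase_deg)
    n = np.arange(-half_len, half_len + 1)
    h = np.zeros(n.shape, dtype=float)
    odd = n % 2 != 0
    h[odd] = np.sin(phi) * 2.0 / (np.pi * n[odd])
    h[n == 0] = np.cos(phi)
    return n, h


def response(n, h, omega_t):
    """H at normalized frequency omega*T (radians per tap delay)."""
    return np.sum(h * np.exp(-1j * omega_t * n))


if __name__ == "__main__":
    for phase in (45, 90):
        n, h = fractional_hilbert_taps(phase, half_len=7)
        H = response(n, h, np.pi / 2)  # middle of the operating band
        print(phase, "deg target ->", round(float(np.degrees(-np.angle(H))), 1), "deg realized")
```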

To achieve fractional order operations, the system utilizes the concept of optical fractional differentiation and Hilbert transforms26. This is accomplished by carefully designing the WaveShapers in the optical signal processing setup with the appropriate set of weights (phase and amplitude) for shaping the optical signals in the frequency domain, and their configurations can be adjusted to achieve different fractional order operations. While we use off-the-shelf commercial WaveShapers, in practice these can be realized using various techniques compatible with integration, such as cascaded Mach-Zehnder interferometers or programmable phase modulators. These components enable precise control over the spectral phase and amplitude profiles of the optical signals, allowing the realization of fractional order operations.
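Fractional-order differentiation can also be illustrated with a short FIR tap set. The text describes obtaining tap coefficients from the inverse Fourier transform of the target response. As a swapped-in alternative, the sketch below uses the standard Grünwald–Letnikov weights w_k = (−1)^k·C(α, k). With unit tap delay, their response Σ w_k e^{−jωk} = (1 − e^{−jω})^α approaches (jω)^α at low frequency only when enough taps are kept. With 5 taps the truncation is severe. These are therefore illustrative weights and not the ones used in this work. The function name is hypothetical.

```python
import numpy as np


def gl_fractional_diff_taps(alpha, num_taps):
    """Grünwald-Letnikov weights w_k = (-1)^k * binom(alpha, k) for a derivative of order alpha.

    Computed with the recursion w_k = w_{k-1} * (1 - (alpha + 1) / k).
    alpha = 1 gives the first-order difference [1, -1, 0, ...];
    alpha = 0 gives the identity [1, 0, 0, ...].
    """
    w = np.empty(num_taps, dtype=float)
    w[0] = 1.0
    for k in range(1, num_taps):
        w[k] = w[k - 1] * (1.0 - (alpha + 1.0) / k)
    return w


if __name__ == "__main__":
    for alpha in (0.5, 0.75, 1.0):
        print(alpha, np.round(gl_fractional_diff_taps(alpha, 5), 4))
```

Sweeping α continuously between 0 and 1 changes the weights smoothly. This mirrors how a continuous range of fractional orders can be reached by reprogramming the weights alone.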

A key feature of our system, critical to achieving high-fidelity signal processing, was the improved accuracy of the frequency comb spectral line shaping. To accomplish this we employed a two-stage shaping strategy (see Methods)27, in which a feedback control path was used to calibrate the system and further improve the comb shaping accuracy. The feedback loop monitors the system output and feeds it back to adjust the tap weights for the best performance (see Methods for more detail). The error signal was generated by directly measuring the impulse response of the system and comparing it with the ideal tap coefficients. Note that this type of feedback calibration is challenging and rarely used for analog optical signal computing, for example in systems based on spatial-light metasurfaces12,28 or waveguide resonators29,30.
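The feedback calibration can be illustrated with a simple iterative correction. The update rule programmed += step·(target − measured) is an assumption made for illustration; the actual two-stage procedure is described in Methods and is not reproduced here. The measurement function below is a hypothetical stand-in: it models each channel with a fixed, unknown gain error, standing in for effects such as amplifier gain ripple. In the real system the measured taps would come from the impulse response.

```python
import numpy as np


def calibrate(target, measure, iterations=20, step=0.5):
    """Iteratively correct programmed tap weights until the measured taps approach the target.

    measure(programmed) -> measured tap coefficients (hypothetical measurement callback).
    Each iteration applies programmed += step * (target - measured).
    """
    target = np.asarray(target, dtype=float)
    programmed = target.copy()
    for _ in range(iterations):
        error = target - measure(programmed)
        programmed = programmed + step * error
    return programmed


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    gain = rng.uniform(0.8, 1.2, size=5)  # hypothetical per-channel gain error

    def fake_measure(weights):
        # Stand-in for measuring the system's impulse response.
        return gain * weights

    target = np.array([1.0, -0.5, -0.125, -0.0625, -0.039])
    programmed = calibrate(target, fake_measure)
    print("max residual tap error:", np.max(np.abs(fake_measure(programmed) - target)))
```

For a pure per-channel gain g, each iteration multiplies the error by (1 − step·g). With the step and gain range above, the error shrinks geometrically.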

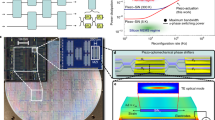

Our system is based on a soliton crystal (SC) microcomb source, generated in an integrated MRR18,19,20 (Fig. 3(a, b), Supplementary Note 1, Supplementary Fig. S1–7). Since their first demonstration in 200731, and subsequently in CMOS compatible integrated form32, optical frequency combs generated by compact micro-scale resonators, or micro-combs32,33,34,35, have led to significant breakthroughs in many fields such as metrology36, spectroscopy35,37, telecommunications33,38, quantum optics39,40, and radio-frequency (RF) photonics18,19,20,41,42,43,44. Microcombs offer new possibilities for major advances in emerging applications such as optical neural networks5, frequency synthesis29, and light detection & ranging (LIDAR)2,45,46. With a good balance between gain and cavity loss as well as dispersion and nonlinearity, soliton microcombs feature high coherence and low phase noise and have been highly successful for many RF photonic applications19,23,47,48,49,50,51.

The SC microcomb is generated in a 4-port integrated micro-ring resonator (MRR) with an FSR of 48.9 GHz. a Optical spectrum of the microcomb when sweeping the pump wavelength: (i) pump, (ii) primary comb with a spacing of 39 FSRs, (iii) primary comb with a spacing of 38 FSRs, (iv) SC microcomb. b Measured soliton crystal step of the intra-cavity power.

SC microcombs, which consist of multiple self-organized solitons52, have been highly successful, particularly for RF photonic signal processing18,19,20,26,53,54,55,56, ultra-dense optical data transmission33, and optical neuromorphic processing5,57. They feature very high coherence with low phase noise56, are intrinsically stable with only open-loop control (Supplementary Note 1, Supplementary Fig. S2 and Supplementary Movie S1), and can be simply and reliably initiated by manually sweeping the pump wavelength. Further, they have intrinsically high conversion efficiency since the intra-cavity energy is much higher than for single-soliton states5,33. Our microcomb has a low free spectral range (FSR) of ~48.9 GHz, closely matching the 50 GHz ITU frequency grid, and generates over 80 wavelengths in the telecom C-band, which serve as discrete taps for the video image processing system.

Experimental results—static images

Since each frame of a streaming video signal is essentially a static image, we first benchmarked the single-function performance of the system on static images with varying numbers of taps to understand the performance tradeoffs. Figure 4a–i (panels i–iii) shows the experimental results of static image processing using the RF photonic system described above. We investigated single-function transfer functions with 15, 45, and 75 taps, with the WaveShaper set to zero out any unneeded wavelengths. These experiments were performed on single static images, i.e., a single frame of the video signal. Their aim was to explore the influence of the tap number on signal processing performance, to assess the tradeoffs between complexity, accuracy, and efficiency, and to determine the optimal tap number for the subsequent massively parallel signal processing demonstration.

a–c Results for edge detection based on differentiation with orders of 0.5, 0.75, and 1, respectively. d–f Results for motion blur based on integration with 15, 45, and 75 taps, respectively. g–i Results for edge enhancement based on Hilbert transformation with operation bandwidths of 18 GHz, 12 GHz, and 38 GHz, respectively. In a–i, (i) shows the designed and measured optical spectra of the shaped microcomb, (ii) the measured and simulated spectral response of the video image processing system, and (iii) the measured and simulated high definition (HD) video images after processing.

The original (unprocessed) high definition (HD) digital images had a resolution of 1080 × 1620 pixels. The results for edge detection based on signal differentiation with orders of 0.5, 0.75, and 1 are shown in Fig. 4a–c, respectively. In each case, we show (i) the designed and measured spectra of the shaped comb, (ii) the measured and simulated spectral response, and (iii) the measured and simulated images after processing. The measured comb spectra and spectral responses were recorded by an optical spectrum analyser (OSA) and a vector network analyser (VNA), respectively. The experimental results agree well with theory, indicating successful edge detection for the original images.

In Fig. 4d–f, we show the results for motion blur based on signal integration with 15, 45, and 75 taps, respectively. Again, the experimental results agree well with theory. The degree of blur increases with the number of taps, reflecting the improved processing performance obtained with more taps. Discrete laser arrays are bulky, which limits the number of available taps. In contrast, a microcomb generated by a single MRR can serve as a multi-wavelength source providing a large number of wavelength channels, while greatly reducing size, power consumption, and complexity. This is very attractive for RF photonic transversal filters, which require a large number of taps for improved processing performance.

Figure 4g–i shows the results of edge enhancement based on signal Hilbert transformation (90° phase shift) with different operation bandwidths (12 GHz, 18 GHz, and 38 GHz). In our experiment, the operation bandwidth was adjusted by changing the comb spacing (2 FSRs vs 3 FSRs of the MRR) and the fiber length (1.838 km vs 3.96 km). Note that the need to change the fiber length can in principle be avoided by using tunable dispersion compensators58,59. The WaveShaper can accommodate any FSR (channel spacing) as long as it roughly fits within telecom-band channel spacings. The system is therefore very flexible. If a different microcomb device is used, its FSR can be accommodated by reprogramming the WaveShaper, and the required delay by changing the length of SMF. Alternatively, the WaveShaper can be used to filter out certain channels if a larger effective channel spacing than the source FSR is desired. The tradeoff in varying the FSR is that smaller FSRs yield lower bandwidths, whereas larger FSRs reduce the number of wavelengths within the telecom C-band. As can be seen, the edges in the images are enhanced, and the experimental results are consistent with the simulations.

We also demonstrate more specific image processing operations. These include edge detection based on fractional differentiation with orders of 0.1–0.9, edge enhancement based on fractional Hilbert transformation with phase shifts of 15°–75°, and edge detection with operation bandwidths of 4.6–36.6 GHz (Supplementary Note 2, Supplementary Figs. S3–S6). Changing the relevant parameters produced images with different degrees of edge detection, motion blur, and edge enhancement. Different image processing functions were realized without changing the physical hardware, simply by programming the WaveShaper to shape the comb lines according to the designed tap coefficients. This reflects the high reconfigurability of our video image processing system, which is difficult to achieve with spatial-light devices12,13,14. In practical image processing, no single processing function with one set of parameters can meet all requirements; rather, each processing function requires its own unique set of tap weights. Hence, the high degree of reconfigurability and versatility of our image processing system is critical for meeting diverse, practical processing requirements.

Experimental results—real-time video

In addition to static image processing, our microcomb-based RF photonic system can also process dynamic videos in real time. Our results for real-time video processing are provided in Supplementary Movie S2, while Supplementary Figs. S8–S10 show samples of these experimental results. Supplementary Movie S2 starts with the original source video frames, followed by the simulated and experimental results shown side by side for the differentiator, integrator, and Hilbert transformer. Next come the 34 functions (Supplementary Note 2, Supplementary Table S1) performed simultaneously by the massively parallel video processor. Finally, high-order derivatives are shown, and the video ends with results based on full two-dimensional derivatives (see below).

The first original video had a resolution of 568 × 320 pixels and a frame rate of 30 frames per second. Supplementary Fig. S8a shows 5 frames of the original video, together with the corresponding electrical waveform after digital-to-analog conversion. Supplementary Fig. S8b–d show the corresponding results for the processed video after edge detection (0.5 order fractional differentiation), motion blur (integration with 75 taps), and edge enhancement based on a Hilbert transformation with an operation bandwidth of 18 GHz, respectively. As for the static image processing, the real-time video processing results show good agreement with theoretical predictions.

To fully exploit the bandwidth advantage of optical processing, we further performed massively parallel real-time multi-functional video processing. The experimental setup and results are shown in Fig. 2 and Supplementary Note 2, Supplementary Figs. S8–S10. We used 95 comb lines around the C band in our demonstration. After flattening and splitting the comb lines via the first WaveShaper, we obtained 34 parallel processors, most of which consisted of five taps. We simultaneously performed 34 video image processing functions, including fractional differentiation with fractional order from 0.05 to 1.1, fractional Hilbert transformation with phase shift from 65° to 90°, an integrator, and bandpass Hilbert transformation with a 90° phase shift (see Supplementary Table S1 for detailed parameters for each function). The corresponding total processing bandwidth is 64 GBaud × 34 (functions) × 8 bits = 17.4 Terabits/s, well beyond the processing bandwidth of electrical video image processors10.

Discussion

To analyze the performance of our video image processor, we evaluated the processed images against ground truth for both quantitative and qualitative comparisons60. (In signal processing and data analysis, “ground truth” refers to the objectively correct values or information that serve as a reference for evaluating the performance or accuracy of a system or algorithm.) We used the respective ground truths of 3 BSD (Berkeley Segmentation Database) images to evaluate edge detection, and compared the relevant performance parameters with those of the same images processed using the widely used Sobel algorithm61,62. Fig. 5 shows the images processed using Sobel’s algorithm and our video image processor (differentiation with orders from 0.2 to 1.0). The performance parameters, including the performance ratio (PR) and F-measure, are compared in Table 1, where higher values indicate better edge detection performance. Our differentiation results yield higher PR and F-measure values than Sobel’s approach, reflecting the high performance of our video image processor.
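For readers who want to reproduce this kind of comparison, the sketch below computes a Sobel gradient-magnitude edge map and a pixel-wise F-measure, 2PR/(P + R), where P is precision and R is recall, against a binary ground-truth edge map. Several simplifications are assumptions. Exact pixel matching is used, whereas the BSD benchmark matches edges within a distance tolerance. The 50%-of-maximum threshold is an arbitrary choice for illustration. The performance ratio (PR) is not implemented, because its definition is in the cited references rather than in this section. The function names are hypothetical.

```python
import numpy as np


def sobel_magnitude(img):
    """Gradient magnitude with the 3x3 Sobel kernels (edge-replicated borders)."""
    img = np.asarray(img, dtype=float)
    kx = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
    ky = kx.T
    padded = np.pad(img, 1, mode="edge")
    gx = np.zeros_like(img)
    gy = np.zeros_like(img)
    rows, cols = img.shape
    for i in range(3):
        for j in range(3):
            window = padded[i:i + rows, j:j + cols]
            # Correlation rather than convolution: flipping the kernel only changes the sign.
            gx += kx[i, j] * window
            gy += ky[i, j] * window
    return np.hypot(gx, gy)


def f_measure(predicted, truth):
    """Pixel-wise F-measure 2PR/(P+R) between binary edge maps (no distance tolerance)."""
    predicted = np.asarray(predicted, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.count_nonzero(predicted & truth)
    fp = np.count_nonzero(predicted & ~truth)
    fn = np.count_nonzero(~predicted & truth)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    img = np.zeros((5, 5))
    img[:, 3:] = 1.0  # vertical step edge
    mag = sobel_magnitude(img)
    edges = mag > 0.5 * mag.max()  # arbitrary threshold for illustration
    truth = np.zeros((5, 5), dtype=bool)
    truth[:, 3] = True
    print(round(f_measure(edges, truth), 3))
```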

The maximum input rate we used was 64 GBaud, or 64 Gigapixels/s. We performed 34 functions in parallel on video with a resolution of 568 × 320 = 181,760 pixels at a frame rate of 30 Hz, i.e., 5,452,800 pixels/s per video signal. This yields simultaneous real-time processing of 64 × 10⁹ × 34/(181,760 × 30) = 399,061 video signals in parallel. For HD videos (720 × 1280 = 921,600 pixels) at a frame rate of 50 Hz, this equates to ~47,222 video signals in parallel. The processing throughput can be increased even further by using more comb lines in the L-band.
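The throughput figures above follow from simple arithmetic. The sketch below reproduces them under the assumption, implicit in the text, that one symbol carries one 8-bit pixel. The function names are illustrative.

```python
def concurrent_streams(symbol_rate_baud, n_functions, width, height, fps):
    """Number of video streams that fit in the aggregate pixel rate (one pixel per symbol)."""
    return symbol_rate_baud * n_functions / (width * height * fps)


def aggregate_bit_rate(symbol_rate_baud, n_functions, bits_per_symbol):
    """Total processing bandwidth in bit/s."""
    return symbol_rate_baud * n_functions * bits_per_symbol


if __name__ == "__main__":
    print(int(concurrent_streams(64e9, 34, 568, 320, 30)))   # 568 x 320 at 30 Hz
    print(int(concurrent_streams(64e9, 34, 1280, 720, 50)))  # HD at 50 Hz
    print(aggregate_bit_rate(64e9, 34, 8) / 1e12, "Tbit/s")
```

Truncating with `int` gives the whole number of streams that fit; the HD case rounds down to 47,222.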

We provide the root mean square errors (RMSEs) in Supplementary Fig. S7 and Table S2 to quantify the agreement between the measured waveforms and the theoretical results for different image processing functions. For example, for the Hilbert transformer with tunable phase shift, the RMSE ranged from 0.0586 to 0.1045, depending on the phase shift angle. Lower RMSE values indicate closer agreement, and our RMSE results show that the measured waveforms closely follow the expected behavior of the respective image processing functions.
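A minimal sketch of such an RMSE comparison is shown below. The normalization used for the reported values is not stated in this section. The sketch therefore assumes that each waveform is scaled to unit peak magnitude before comparison, so its numbers are not directly comparable with those in Table S2. The function name is hypothetical.

```python
import numpy as np


def normalized_rmse(measured, simulated):
    """RMSE after scaling each waveform to unit peak magnitude (the normalization is an assumption)."""
    m = np.asarray(measured, dtype=float)
    s = np.asarray(simulated, dtype=float)
    m = m / np.max(np.abs(m))
    s = s / np.max(np.abs(s))
    return float(np.sqrt(np.mean((m - s) ** 2)))


if __name__ == "__main__":
    t = np.linspace(0, 1, 200)
    simulated = np.sin(2 * np.pi * 5 * t)
    measured = 0.8 * simulated + 0.05 * np.random.default_rng(2).normal(size=t.size)
    print(round(normalized_rmse(measured, simulated), 4))
```

Normalizing first makes the metric insensitive to an overall gain difference between measurement and simulation, so it reflects waveform shape only.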

The processing accuracy of our system is lower than that of electrical DSP image processing but higher than that of analog image processing based on passive optical filters13,22,30 (see Supplementary Fig. S7 and Table S1). This is mainly a result of the hybrid nature of our system, which is equivalent to an electrical DSP system but implemented in photonic hardware. A number of factors can lead to tap errors during comb shaping, resulting in a non-ideal frequency response of the system and in deviations between experiment and theory. These mainly include:

- the limited number of available taps;
- the instability of the optical microcomb;
- the accuracy of the WaveShapers;
- the wavelength-dependent gain variation of the optical amplifiers;
- the chirp induced by the optical modulator;
- power fading induced by the second-order dispersion (SOD) of the dispersive fiber;
- the third-order dispersion (TOD) of the dispersive fiber.

Chirp-induced errors refer to distortions caused by chirp, i.e., a time-varying instantaneous frequency, in the optical signals; chirp can arise from various factors, such as dispersion or nonlinearity in the optical components. Note also that different lengths of fiber were used to match the different comb spacings (multiples of the FSR) selected by the WaveShaper and to achieve an optimum RF bandwidth.

We encode the image pixels directly onto the optical signal using the intensity modulator. We slice (flatten) the input image only because our AWG generates one-dimensional, serial waveforms; otherwise this would not be necessary. There are a variety of ways to slice the input image. The video signal is encoded onto the optical wavelengths without any time delay. Although SMF is used to provide the incremental delays required by the transversal filter transfer function, it does not slow down the system; it only adds latency. For the same image/video signal with the same delay line, only one modulator is needed to encode the input signal. We pre- and post-process the images digitally, using the arbitrary waveform generator to convert the digitized frames into a high-speed analog signal, so that the signal processor can operate at its full capability. In principle this pre- and post-processing is unnecessary, since the system can process and output analog signals directly; the AWG does not form a fundamental part of our processor.

To estimate the energy efficiency of the optical signal processor, we use the same approach as reported elsewhere5. The power consumption of the comb source is estimated to be 1500 mW, that of the EDFAs 2000 mW (100 mW for each EDFA), and that of each intensity modulator ~3.4 V × 0.01 A = 34 mW. The overall computing speed of the optical signal processor is 2 × 34 × 5 × 62.9 = 21.386 TeraOPs/s. The energy per operation is therefore roughly (1500 + 2000 + 34 × 19) mW / 21.386 TeraOPs/s = 0.194 pJ/operation.
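The energy estimate above can be checked with a few lines of arithmetic. The sketch reads the leading factor of 2 as one multiply plus one accumulate per tap per symbol. That is an interpretation consistent with the cited approach, not a statement taken from this section. The power terms are copied from the text, and the function names are illustrative.

```python
def ops_per_second(n_functions, taps_per_function, symbol_rate_gbaud):
    """Operations per second, counting 2 ops per tap per symbol (assumed: multiply + accumulate)."""
    return 2 * n_functions * taps_per_function * symbol_rate_gbaud * 1e9


def energy_per_op_pj(total_power_mw, ops_per_s):
    """Energy per operation in pJ: power (W) divided by rate (ops/s), converted to pJ."""
    return total_power_mw * 1e-3 / ops_per_s * 1e12


if __name__ == "__main__":
    ops = ops_per_second(34, 5, 62.9)
    power_mw = 1500 + 2000 + 34 * 19  # comb source + EDFAs + modulator term, as in the text
    print(round(ops / 1e12, 3), "TOPS;", round(energy_per_op_pj(power_mw, ops), 3), "pJ/op")
```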

The number of available taps can be increased by using MRRs with smaller FSRs or optical amplifiers with broader operation bandwidths. The accuracy realized by the WaveShapers and the optical amplifiers was significantly improved by using a two-stage comb shaping strategy as well as the feedback loop calibration mentioned previously27. By using low-chirp modulators, the chirp-induced tap errors can be suppressed. The discrepancies induced by the SOD and TOD of the dispersive fibers can also be reduced by using a second WaveShaper to compensate for the group delay ripple of the system (see Supplementary Note 3, Supplementary Fig. S11).

Our massively parallel photonic video image processor operates on the principle of time-wavelength-spatial multiplexing, similar to the optical vector convolutional accelerator in our previous work5, and is also capable of performing convolution operations for deep learning neural networks. This opens up new opportunities for image and video processing applications in robotic vision and machine learning. In particular, the parallel functions could be trained to act as up to 34 kernels, each of size 5 × 1, in a convolutional neural network, which could ultimately enable a complete neural network that avoids the bandwidth limitation imposed by analog-to-digital converters. Note that without an AWG or oscilloscope (OSC), our processor can directly process analog signals, while with an AWG and OSC it can also process digital signals. In this sense, it can serve the same role as electrical DSP.
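If each of the 34 parallel functions acts as one 5 × 1 kernel on the same input, the processor computes 34 × 5 multiply-accumulates per input sample. The sketch below shows the equivalent digital computation as a single matrix product, which is how such a kernel bank maps onto dot products; the random kernels are placeholders for trained weights and `kernel_bank` is a hypothetical helper, not the authors' code.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def kernel_bank(x, kernels):
    """Apply K causal 1D kernels of length L to signal x as one matrix product.

    Returns an array of shape (K, len(x)) whose row k is
    y_k[n] = sum_m kernels[k, m] * x[n - m], with zero history before n = 0.
    """
    x = np.asarray(x, dtype=float)
    kernels = np.asarray(kernels, dtype=float)
    taps = kernels.shape[1]
    padded = np.concatenate([np.zeros(taps - 1), x])   # zero history before the signal
    windows = sliding_window_view(padded, taps)        # row n holds x[n-taps+1 .. n]
    return (windows @ kernels[:, ::-1].T).T            # reversed taps give convolution

rng = np.random.default_rng(0)
kernels = rng.normal(size=(34, 5))   # 34 placeholder 5 x 1 kernels
x = rng.normal(size=1000)            # one flattened line of pixels
features = kernel_bank(x, kernels)
print(features.shape)                # (34, 1000)
```

Many CNN frameworks implement cross-correlation rather than convolution; for trained kernels the difference is only a reversal of the tap order.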

Although the system presented here operated at a speed of ~17 Terabits/s, it is highly scalable. Supplementary Fig. S12 shows a video image processor using the C + L + S bands (405 wavelengths distributed over 81 processors of 5 taps each) and 19 spatial paths, all exploiting both polarizations, yielding a total processing bandwidth of 1.575 Petabits/s (Supplementary Note 4).
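The scaled figure can be checked with simple bookkeeping. The sketch assumes 8 bits per sample at the 64 gigasamples/s rate given in the Methods; with that assumption, the same arithmetic also gives ~17.4 Tb/s for the present 34-function system, which supports the reading. `aggregate_tbps` is a hypothetical helper.

```python
SAMPLE_RATE_HZ = 64e9    # sampling rate from the Methods
BITS_PER_SAMPLE = 8      # assumption: 8-bit samples

def aggregate_tbps(processors, spatial_paths=1, polarizations=1):
    """Hypothetical helper: total bandwidth (Tb/s) of independent parallel processors."""
    return processors * spatial_paths * polarizations * SAMPLE_RATE_HZ * BITS_PER_SAMPLE / 1e12

current = aggregate_tbps(34)                                          # 34 parallel functions
scaled = aggregate_tbps(405 // 5, spatial_paths=19, polarizations=2)  # 81 five-tap processors
print(f"present: {current:.1f} Tb/s, scaled: {scaled / 1e3:.3f} Pb/s")
```

The scaled product comes to about 1.576 Pb/s, matching the quoted 1.575 Pb/s to within rounding.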

Our system is highly compatible with integrated technologies, so there is strong potential for much higher levels of integration, even reaching full monolithic integration. The core component of our system, the microcomb, is already fully integrated. Further, all of the other components have been demonstrated in integrated form, including integrated InP spectral shapers59, high-speed integrated lithium niobate modulators63,64, integrated dispersive elements59, and photodetectors65. In addition, low-power, highly efficient microcombs have been demonstrated with single-soliton states66 and laser cavity-soliton Kerr combs67,68, which would greatly reduce the energy requirements. A key advantage of monolithic integration would be the ability to integrate electronic elements on-chip, such as an FPGA module for feedback control. Finally, being much more compact, a monolithically integrated system should be much less susceptible to environmental perturbations, thus reducing the required level of feedback control.

Conclusions

In conclusion, we report the first demonstration of video image processing based on Kerr microcombs. Our RF photonic processing system, with an ultrahigh processing bandwidth of 17.4 Tb/s, can simultaneously process 399,061 video signals in real time. The system is highly reconfigurable via programmable control and can perform different processing functions without any change to the physical hardware. We experimentally demonstrate a range of video image processing functions, including edge detection, motion blur, and edge enhancement. The experimental results agree well with theory, verifying the effectiveness of Kerr microcombs for ultrahigh-speed video image processing. Our results represent a significant advance in fundamental photonic computing, paving the way for practical, ultrahigh-bandwidth, real-time photonic video image processing on a chip.
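The stream count follows from dividing the total bandwidth by the bit rate of one video. The check below assumes 8 bits per pixel and 30 frames per second for the 568 × 320 drone video listed in the Methods, and takes the total as 34 functions × 64 gigasamples/s × 8 bits; these assumptions are ours, but with them the arithmetic reproduces the quoted figures exactly.

```python
# Assumptions: 8-bit pixels, 30 frames/s, 568 x 320 frames; total bandwidth taken as
# 34 functions x 64 GSa/s x 8 bits. Integer arithmetic avoids rounding surprises.
throughput_bps = 34 * 64_000_000_000 * 8     # total processing bandwidth, b/s
stream_bps = 568 * 320 * 30 * 8              # bit rate of one video stream, b/s
n_streams = throughput_bps // stream_bps     # whole streams that fit concurrently

print(f"{throughput_bps / 1e12:.1f} Tb/s / {stream_bps / 1e6:.1f} Mb/s = {n_streams} streams")
```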

Methods

Microcomb generation

We use SC microcombs generated by an integrated MRR (Fig. 3 and Supplementary Fig. S1–6) for video image processing. The SC microcombs, which include multiple self-organized solitons confined within the MRR, were also used for our previous demonstrations of RF photonic signal processing26,53,54,55,56, ultra-dense optical data transmission33, and optical neuromorphic processing5,57.

The MRR used to generate SC microcombs (Fig. 3b) was fabricated on a complementary metal–oxide–semiconductor (CMOS) compatible doped silica glass platform32,33. It has a radius of ~592 μm, a high quality factor of ~1.5 million, and a free spectral range (FSR) of ~0.393 nm (i.e., ~48.9 GHz). The small FSR yields a large number of wavelength channels, which serve as discrete taps in our RF photonic transversal filter system for video image processing. The waveguide cross-section was 3 μm × 2 μm, giving anomalous dispersion in the C-band (Supplementary Fig. S1). The input and output ports of the MRR were coupled to a fiber array via specially designed mode converters, yielding a low fiber-chip coupling loss of 0.5 dB/facet.
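The quoted ring parameters are mutually consistent, as a quick conversion shows. The sketch assumes a centre wavelength of 1550 nm (the exact pump wavelength is not given here, which explains the small difference from 48.9 GHz) and a circular ring whose circumference is 2π times the quoted radius; `fsr_nm_to_ghz` and `ring_group_index` are hypothetical helpers.

```python
import math

C_M_PER_S = 299_792_458.0

def fsr_nm_to_ghz(fsr_nm, center_nm=1550.0):
    """Convert a wavelength spacing to a frequency spacing: df = c * dlambda / lambda**2."""
    return C_M_PER_S * (fsr_nm * 1e-9) / (center_nm * 1e-9) ** 2 / 1e9

def ring_group_index(fsr_hz, radius_m):
    """Group index implied by FSR = c / (n_g * L) for a circular ring of circumference L."""
    return C_M_PER_S / (fsr_hz * 2 * math.pi * radius_m)

fsr_ghz = fsr_nm_to_ghz(0.393)                      # spacing near the assumed 1550 nm
n_g = ring_group_index(48.9e9, 592e-6)              # implied group index of the ring
linewidth_mhz = C_M_PER_S / 1550e-9 / 1.5e6 / 1e6   # resonance width f / Q
print(f"FSR ~ {fsr_ghz:.1f} GHz, n_g ~ {n_g:.2f}, linewidth ~ {linewidth_mhz:.0f} MHz")
```

The implied group index of about 1.65 and a resonance linewidth of roughly 130 MHz follow directly from the quoted radius, FSR, and Q.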

In our experiment, a continuous-wave (CW) pump light was amplified to 30.5 dBm and its wavelength was swept from blue to red. When the detuning between the pump wavelength and the MRR’s cold resonance became small enough, the intra-cavity power reached a threshold and optical parametric oscillation driven by modulation instability (MI) was initiated. Primary combs (Fig. 3d(ii, iii)) were generated first, with the comb spacing determined by the MI gain peak33,34,69. As the detuning changed further, a second jump in the intra-cavity power was observed, where distinctive ‘fingerprint’ SC comb spectra (Fig. 3d-iv) appeared, with a comb spacing equal to the MRR’s FSR. The SC microcomb, which arises from spectral interference between the tightly packed solitons circulating along the ring cavity, exhibits high coherence and low RF intensity noise (Fig. 3c), consistent with our simulations (Supplementary Movie S1). The SC microcomb is also highly stable with only open-loop temperature control (Supplementary Fig. S2). In addition, it can be generated by manual adiabatic sweeping of the pump wavelength, a simple and reliable initiation process that also yields much higher energy conversion efficiency than single-soliton states5.

Microcomb shaping

To achieve the designed tap weights, the power of the generated SC microcomb was shaped using liquid crystal on silicon (LCOS) based spectral WaveShapers. We used two-stage comb shaping in the video image processing experiments. The generated SC microcomb was first pre-flattened and split by the first WaveShaper (Finisar 16000 S), which improves the optical signal-to-noise ratio (OSNR) and reduces the loss control range required for the second-stage comb shaping. The pre-flattened and split comb was then accurately shaped by the second WaveShaper (Finisar 4000 S) according to the designed tap coefficients for the different video image processing functions. Positive and negative tap coefficients were realized by routing the wavelength channels to two spatial outputs of the second WaveShaper, which were then detected by a balanced photodetector (Finisar BPDV2150R).
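The mapping from signed tap weights to the two WaveShaper outputs can be sketched as follows. This assumes that each tap's RF weight is proportional to the optical power in its comb line (intensity modulation with direct, balanced detection) and that attenuation is set relative to the strongest tap; `waveshaper_ports` is a hypothetical helper, and `np.inf` stands for a blocked channel where a real WaveShaper has a finite maximum attenuation.

```python
import numpy as np

def waveshaper_ports(taps):
    """Split signed tap weights into per-channel attenuation (dB) for two output ports.

    Positive taps go to the port feeding the balanced detector's '+' input,
    negative taps to the '-' input. np.inf marks a channel blocked on that port.
    """
    taps = np.asarray(taps, dtype=float)
    mag = np.abs(taps)
    atten_db = np.full(taps.shape, np.inf)
    on = mag > 0
    atten_db[on] = 10.0 * np.log10(mag.max() / mag[on])   # relative to the strongest tap
    pos_port = np.where(taps > 0, atten_db, np.inf)
    neg_port = np.where(taps < 0, atten_db, np.inf)
    return pos_port, neg_port

pos, neg = waveshaper_ports([1.0, -0.5, 0.25, 0.0, -1.0])
print(pos)
print(neg)
```

For these example weights, the '+' port passes channels 0 and 2 (0 dB and about 6 dB of attenuation), the '−' port passes channels 1 and 4 (about 3 dB and 0 dB), and the zero-weight channel 3 is blocked on both.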

To improve the comb shaping accuracy, a feedback control loop was employed for the second WaveShaper. First, we used RF Gaussian pulses as the system input and measured replicas of the input pulses in the different wavelength channels. Next, we extracted the peak intensities of the system impulse response to obtain accurate RF-to-RF tap coefficients. Finally, the extracted tap coefficients were subtracted from the ideal tap coefficients to obtain an error signal, which was used to calibrate the loss of the second WaveShaper. After several iterations of this comb shaping loop, we obtained an accurate impulse response that compensated for the non-ideal response of the system, significantly improving the accuracy of the RF photonic video image processing. Directly measuring the system impulse response is more accurate than measuring the optical power of the comb lines, given the slight difference between the two input ports of the balanced detector. The shaped impulse responses for the different image processing functions are shown in Supplementary Figs. S3–S6.
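The calibration loop can be illustrated with a toy model. The sketch assumes that each channel has an unknown, fixed gain error (a stand-in for amplifier ripple, detector-port mismatch and similar effects) and that the "measurement" returns the programmed setting multiplied by that gain; it works in linear units, whereas the real loop adjusts WaveShaper loss. `calibrate_taps` is a hypothetical helper, not the authors' control code.

```python
import numpy as np

def calibrate_taps(ideal, channel_gain, iterations=30):
    """Iteratively correct per-channel settings so the measured taps match the ideal ones.

    channel_gain models an unknown per-channel error; 'measured' stands in for the
    tap weights extracted from the system impulse response.
    """
    ideal = np.asarray(ideal, dtype=float)
    setting = ideal.copy()                     # first pass: program the ideal weights
    for _ in range(iterations):
        measured = channel_gain * setting      # what the impulse-response measurement returns
        error = ideal - measured               # error signal used for calibration
        setting = setting + error              # adjust the shaper setting
    return setting, channel_gain * setting

rng = np.random.default_rng(1)
ideal = np.array([1.0, -1.0, 0.5, -0.5, 0.25])
gain = rng.uniform(0.8, 1.2, size=ideal.size)  # hypothetical per-channel gain errors
setting, final = calibrate_taps(ideal, gain)
print(np.max(np.abs(final - ideal)))
```

In this model the error shrinks by a factor |1 − g| per iteration, so the unit-step update converges whenever each channel gain g lies between 0 and 2; a smaller step would be needed for larger errors.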

Derivative (from fractional to high order)

The transfer function of a differentiator is given by

\[ H(\omega) = (j\omega)^{N} \]

where j = \(\sqrt{-1}\), ω is the angular frequency, and N is the order of differentiation, which in our case can be fractional70 or integer18, and even complex. Experimental results for both fractional- and integer-order differentiation can be seen in Supplementary Movie S2. The fractional order is tunable from 0.05 to 1.1 in steps of 0.05. We achieved high-order differentiation with orders of 2, 2.5, and 3, which, to the best of our knowledge, are the highest derivative orders achieved to date for video image processing.
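The N-th order differentiator response H(ω) = (jω)^N can be illustrated digitally on a sampled, periodic signal. This is only a software illustration of the transfer function, not how the photonic processor realizes it (there the response is synthesized by the transversal-filter tap weights), and `fractional_derivative` is a hypothetical helper.

```python
import numpy as np

def fractional_derivative(x, order, dt=1.0):
    """Apply H(w) = (j*w)**order to a periodic, uniformly sampled signal via the FFT.

    (j*w)**order is written as |w|**order * exp(j*sign(w)*pi*order/2), the principal
    branch, which keeps H(-w) = conj(H(w)) so a real input gives a real output.
    """
    x = np.asarray(x, dtype=float)
    w = 2 * np.pi * np.fft.fftfreq(x.size, d=dt)
    h = np.abs(w) ** order * np.exp(1j * np.sign(w) * np.pi * order / 2)
    return np.fft.ifft(np.fft.fft(x) * h).real

n = 256
t = np.arange(n) / n                          # one period sampled on [0, 1)
x = np.sin(2 * np.pi * 3 * t)
d1 = fractional_derivative(x, 1.0, dt=1 / n)  # first-order derivative
d_half_twice = fractional_derivative(fractional_derivative(x, 0.5, dt=1 / n), 0.5, dt=1 / n)
```

Applying the half-order response twice reproduces the first-order derivative, which is the property that makes a continuously tunable fractional order meaningful.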

Two-dimensional video image processing

Normally, processing functions such as differentiation act on video signals in only one dimension, since they operate on individual lines of the video raster image. However, by appropriately pre-processing the video signal, it is possible to obtain a fully two-dimensional derivative71. Let \(f_z(x, y)\) represent the zth frame of a video signal with O × P pixels, where x = 0, 1, 2, …, O − 1 and y = 0, 1, 2, …, P − 1. The two-dimensional derivative is then given by:

where M and N are the numbers of taps, u = 0, 1, 2, …, M − 1, and v = 0, 1, 2, …, N − 1.

The electrical input data were temporally encoded by an arbitrary waveform generator (Keysight M8195A). The raw input matrices were first sliced horizontally and vertically into multiple rows and columns, respectively, which were flattened into vectors and connected head-to-tail. The resulting vectors were then multicast onto the different wavelength channels via a 40-GHz electro-optic intensity modulator (iXblue). For the video with a resolution of 303 × 262 pixels and a frame rate of 30 frames per second, we used a sampling rate of 64 gigasamples/s to form the input symbols. A dispersive fiber was employed to provide a progressive delay T between adjacent wavelength channels. Next, the electrical output waveform was resampled and digitized by a high-speed oscilloscope (Keysight DSOZ504A) to generate the final output. The magnitude and phase responses of the RF photonic video image processing system were characterized by a vector network analyser (Agilent MS4644B, 40 GHz bandwidth) operating in S21 mode. Finally, we restored the processed video to the original matrix size and averaged the horizontally and vertically processed videos to form a two-dimensional processed video (Supplementary Movie S2).
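The slicing-and-averaging procedure can be modeled in software to see what the processor computes. In this sketch an ideal causal FIR filter stands in for the photonic transversal filter, a two-tap difference [1, −1] stands in for a first-order differentiator, and the frame is treated as 262 rows by 303 columns (an assumed orientation); `filter_line` and `process_2d` are hypothetical helpers. Because rows (or columns) are joined head-to-tail, the filter memory in this model carries across line boundaries.

```python
import numpy as np

def filter_line(vec, taps):
    """Causal FIR (transversal-filter) output: y[k] = sum_n taps[n] * vec[k - n]."""
    return np.convolve(vec, taps)[: vec.size]

def process_2d(frame, taps):
    """Filter row-sliced and column-sliced copies of a frame, then average them.

    Rows (and, separately, columns) are flattened head-to-tail into one long
    vector, filtered, and reshaped back to the original frame size.
    """
    frame = np.asarray(frame, dtype=float)
    rows = filter_line(frame.ravel(), taps).reshape(frame.shape)
    cols = filter_line(frame.T.ravel(), taps).reshape(frame.T.shape).T
    return 0.5 * (rows + cols)

rng = np.random.default_rng(0)
frame = rng.integers(0, 256, size=(262, 303)).astype(float)  # one 303 x 262 frame
edges = process_2d(frame, [1.0, -1.0])                        # first-difference "derivative"
flat = process_2d(np.full((4, 5), 7.0), [1.0, -1.0])          # uniform frame: no edges
```

Away from the first row and column, each output pixel is the average of its horizontal and vertical first differences; a uniform frame produces zero everywhere except the very first sample, where the filter has no history.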

Details of the video image dataset

The high-definition (HD) image, with a resolution of 1080 × 1620 pixels, is a photograph taken with a Nikon D5600 in front of the Exhibition Building in the center of Melbourne, Australia, in 2020. The video of 568 × 320 pixels was captured by a quadcopter drone with an optical zoom camera (DJI Mavic Air2 Zoom) during a short trip over the Easter holiday in 2019, when the author and her friend travelled from Melbourne to Adelaide, passing the pink lake and playing guitar; this was a great memory from before the pandemic and the continuous lockdowns in Melbourne. The short skateboard video, with a resolution of 303 × 262 pixels, was taken by the author with an iPhone SE in front of Victoria Library, Melbourne, Australia, in 2020.

Data availability

All data is available upon reasonable request to the authors.

References

Petrou, M. & Bosdogianni, P. Image Processing: The Fundamentals (John Wiley, 1999).

Riemensberger, J. et al. Massively parallel coherent laser ranging using a soliton microcomb. Nature 581, 164–170 (2020).

Hodge, V. J., Hawkins, R. & Alexander, R. Deep reinforcement learning for drone navigation using sensor data. Neural Comput. Appl. 33, 2015–2033 (2021).

Fusciello, M. et al. Artificially cloaked viral nano-vaccine for cancer immunotherapy. Nat. Commun. 10, 5747 (2019).

Xu, X. et al. 11 TOPS photonic convolutional accelerator for optical neural networks. Nature 589, 44–51 (2021).

Wang, P., Liang, J. & Wang, L. V. Single-shot ultrafast imaging attaining 70 trillion frames per second. Nat. Commun. https://doi.org/10.1038/s41467-020-15745-4 (2020).

Gao, L., Liang, J., Li, C. & Wang, L. V. Single-shot compressed ultrafast photography at one hundred billion frames per second. Nature 516, 74–77 (2014).

Wakunami, K. et al. Projection-type see-through holographic three-dimensional display. Nat. Commun. 7, 12954 (2016).

Tay, S. et al. An updatable holographic three-dimensional display. Nature 451, 694–698 (2008).

Gonzalez, R. Digital Image Processing (Pearson, New York, NY, 2018). ISBN 978-0-13-335672-4, OCLC 966609831.

Backus, J. Can programming be liberated from the von Neumann style? A functional style and its algebra of programs. Commun. ACM 21, 613–641 (1978).

Zhou, Y., Zheng, H., Kravchenko, I. I. & Valentine, J. Flat optics for image differentiation. Nat. Photonics 14, 316–323 (2020).

Zhu, T. et al. Plasmonic computing of spatial differentiation. Nat. Commun. 8, 15391 (2017).

Zhu, T. et al. Topological optical differentiator. Nat. Commun. 12, 680 (2021).

Antonik, P., Marsal, N., Brunner, D. & Rontani, D. Human action recognition with a large-scale brain-inspired photonic computer. Nat. Mach. Intell. 1, 530–537 (2019).

Zhou, T. et al. Large-scale neuromorphic optoelectronic computing with a reconfigurable diffractive processing unit. Nat. Photonics 15, 367–373 (2021).

Ashtiani, F., Geers, A. J. & Aflatouni, F. An on-chip photonic deep neural network for image classification. Nature https://doi.org/10.1038/s41586-022-04714-0 (2022).

Xu, X. et al. Reconfigurable broadband microwave photonic intensity differentiator based on an integrated optical frequency comb source. APL Photonics 2, 096104 (2017).

Wu, J. et al. RF photonics: an optical micro-combs’ perspective. IEEE J. Select. Top. Quantum Electron. 24, 1–20 (2018). Article: 6101020.

Sun, Y. et al. Applications of optical micro-combs. Adv. Opt. Photonics 15, 86–175 (2023).

Ji, H. & Liu, C. Q. Motion blur identification from image gradients. In Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2008).

Davis, J. A., McNamara, D. E. & Cottrell, D. M. Analysis of the fractional Hilbert transform. Appl. Opt. 37, 6911–6913 (1998).

Tan, M. et al. Highly versatile broadband RF photonic fractional Hilbert transformer based on a Kerr soliton crystal microcomb. J. Light. Technol. 39, 7581–7587 (2021).

Capmany, J. et al. Microwave photonic signal processing. J. Light. Technol. 31, 571–586 (2013).

Yang, T. et al. Experimental observation of optical differentiation and optical Hilbert transformation using a single SOI microdisk chip. Sci. Rep. 4, 3960 (2014).

Tan, M. et al. Microwave and RF photonic fractional Hilbert transformer based on a 50 GHz Kerr micro-comb. J. Light. Technol. 37, 6097–6104 (2019).

Tan, M. et al. Integral order photonic RF signal processors based on a soliton crystal micro-comb source. J. Optics 23, 125701 (2021).

Zangeneh-Nejad, F., Sounas, D. L., Alù, A. & Fleury, R. Analogue computing with metamaterials. Nat. Rev. Mater. 6, 207–225 (2020).

Spencer, D. T. et al. An optical-frequency synthesizer using integrated photonics. Nature 557, 81–85 (2018).

Ferrera, M. et al. On-chip CMOS-compatible all-optical integrator. Nat. Commun. 1, 29 (2010).

Del’Haye, P. et al. Optical frequency comb generation from a monolithic microresonator. Nature 450, 1214–1217 (2007).

Moss, D. J., Morandotti, R., Gaeta, A. L. & Lipson, M. New CMOS-compatible platforms based on silicon nitride and Hydex for nonlinear optics. Nat. Photonics 7, 597 (2013).

Corcoran, B. et al. Ultra-dense optical data transmission over standard fiber with a single chip source. Nat. Commun. 11, 2568 (2020).

Pasquazi, A. et al. Micro-combs: a novel generation of optical sources. Phys. Rep. 729, 1–81 (2017).

Kippenberg, T. J., Holzwarth, R. & Diddams, S. A. Microresonator-based optical frequency combs. Science 332, 555–559 (2011).

Brasch, V. et al. Photonic chip–based optical frequency comb using soliton Cherenkov radiation. Science 351, 357–360 (2016).

Suh, M.-G., Yang, Q.-F., Yang, K. Y., Yi, X. & Vahala, K. J. Microresonator soliton dual-comb spectroscopy. Science 354, 600–603 (2016).

Marin-Palomo, P. et al. Microresonator-based solitons for massively parallel coherent optical communications. Nature 546, 274–279 (2017).

Kues, M. et al. On-chip generation of high-dimensional entangled quantum states and their coherent control. Nature 546, 622–626 (2017).

Reimer, C. et al. Generation of multiphoton entangled quantum states by means of integrated frequency combs. Science 351, 1176–1180 (2016).

Del’Haye, P. et al. Phase-coherent microwave-to-optical link with a self-referenced microcomb. Nat. Photonics 10, 516–520 (2016).

Liang, W. et al. High spectral purity Kerr frequency comb radio frequency photonic oscillator. Nat. Commun. 6, 7957 (2015).

Xu, X. et al. Advanced RF and microwave functions based on an integrated optical frequency comb source. Opt. Express 26, 2569–2583 (2018).

Xu, X. et al. Broadband RF channelizer based on an integrated optical frequency Kerr comb source. J. Light. Technol. 36, 4519–4526 (2018).

Trocha, P. et al. Ultrafast optical ranging using microresonator soliton frequency combs. Science 359, 887–891 (2018).

Suh, M.-G. & Vahala, K. J. Soliton microcomb range measurement. Science 359, 884–887 (2018).

Kippenberg, T. J., Gaeta, A. L., Lipson, M. & Gorodetsky, M. L. Dissipative Kerr solitons in optical microresonators. Science 361, 567 (2018).

Sun, Y. et al. Quantifying the accuracy of microcomb-based photonic RF transversal signal processors. IEEE J. Select. Top. Quantum Electron. 29, 1–17 (2023).

Xu, X. et al. Microcomb-based photonic RF signal processing. IEEE Photonics Technol. Lett. 31, 1854–1857 (2019).

Tan, M. et al. RF and microwave photonic temporal signal processing with Kerr micro-combs. Adv. Phys. X 6, 1838946 (2021).

Tan, M. et al. Photonic RF and microwave filters based on 49GHz and 200GHz Kerr microcombs. Opt. Commun. 465, 125563 (2020).

Lu, Z. et al. Synthesized soliton crystals. Nat. Commun. 12, 1–7 (2021).

Xu, X. et al. Advanced adaptive photonic RF filters with 80 taps based on an integrated optical micro-comb source. J. Light. Technol. 37, 1288–1295 (2019).

Tan, M. et al. Photonic RF arbitrary waveform generator based on a soliton crystal micro-comb source. J. Light. Technol. 38, 6221–6226 (2020).

Xu, X. et al. Photonic RF and microwave integrator based on a transversal filter with soliton crystal microcombs. IEEE Trans. Circuits Syst. II Express Briefs 67, 3582–3586 (2020).

Xu, X. et al. Broadband microwave frequency conversion based on an integrated optical micro-comb source. J. Light. Technol. 38, 332–338 (2020).

Xu, X. et al. Photonic perceptron based on a Kerr microcomb for high-speed, scalable, optical neural networks. Laser Photonics Rev. 14, 2000070 (2020).

Lunardi, L. M. et al. Tunable dispersion compensators based on multi-cavity all-pass etalons for 40Gb/s systems. J. Light. Technol. 20, 2136 (2002).

Metcalf, A. J. et al. Integrated line-by-line optical pulse shaper for high-fidelity and rapidly reconfigurable RF-filtering. Opt. Express 24, 23925–23940 (2016).

Khaire, P. A. & Thakur, N. V. A fuzzy set approach for edge detection. Int. J. Image Process. 6, 403–412 (2012).

Shi, T., Kong, J., Wang, X., Liu, Z. & Zheng, G. Improved Sobel algorithm for defect detection of rail surfaces with enhanced efficiency and accuracy. J. Cent. South Univ. 23, 2867–2875 (2016).

Liu, F. F. et al. Compact optical temporal differentiator based on silicon microring resonator. Opt. Express 16, 15880–15886 (2008).

Wang, C. et al. Integrated lithium niobate electro-optic modulators operating at CMOS-compatible voltages. Nature 562, 101 (2018).

Sahin, E., Ooi, K. J. A., Png, C. E. & Tan, D. T. H. Large, scalable dispersion engineering using cladding-modulated Bragg gratings on a silicon chip. Appl. Phys. Lett. 110, 161113 (2017).

Liang, D., Roelkens, G., Baets, R. & Bowers, J. E. Hybrid integrated platforms for silicon photonics. Materials 3, 1782–1802 (2010).

Stern, B., Ji, X., Okawachi, Y., Gaeta, A. L. & Lipson, M. Battery-operated integrated frequency comb generator. Nature 562, 401 (2018).

Bao, H. et al. Laser cavity-soliton micro-combs. Nat. Photonics 13, 384–389 (2019).

Rowley, M. et al. Self-emergence of robust solitons in a micro-cavity. Nature 608, 303–309 (2022).

Herr, T. et al. Temporal solitons in optical microresonators. Nat. Photonics 8, 145–152 (2013).

Tan, M. et al. RF and microwave fractional differentiator based on photonics. IEEE Trans. Circuits Syst. II Express Briefs 67, 2767–2771 (2020).

Gonzalez, R. C. & Woods, R. E. Digital Image Processing (Addison-Wesley, New York, 1993).

Acknowledgements

This work was supported by the Australian Research Council Discovery Projects Program (No. DP150104327, No. DP190101576). R. M. acknowledges support by the Natural Sciences and Engineering Research Council of Canada (NSERC) through the Strategic, Discovery and Acceleration Grants Schemes, by the MESI PSR-SIIRI Initiative in Quebec, and by the Canada Research Chair Program. Brent E. Little was supported by the Strategic Priority Research Program of the Chinese Academy of Sciences, Grant No. XDB24030000.

Author information

Contributions

M.T., X.X., and D.J.M. developed the original concept. B.E.L. and S.T.C. designed and fabricated the integrated devices. M.T. performed the experiments. D.J.M., M.T., J.W., A.B., B.C., T.G.N., R.M., A.M., and X.X. contributed to the development of the experiment, the data analysis, and the writing of the manuscript. D.J.M., X.X., J.W., and A.M. supervised the research.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Communications Engineering thanks Bert Jan Offrein and Bin Shi for their contribution to the peer review of this work. Primary Handling Editors: Chaoran Huang and Rosamund Daw.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tan, M., Xu, X., Boes, A. et al. Photonic signal processor based on a Kerr microcomb for real-time video image processing. Commun Eng 2, 94 (2023). https://doi.org/10.1038/s44172-023-00135-7

DOI: https://doi.org/10.1038/s44172-023-00135-7
