Abstract

Background

Aiming at objective early detection of neuromotor disorders such as cerebral palsy, we propose an innovative non-intrusive approach using a pressure sensing device to classify infant general movements. Here we differentiate typical general movement patterns of the “fidgety period” (fidgety movements) vs. the “pre-fidgety period” (writhing movements).

Methods

Participants (N = 45) were sampled from a typically-developing infant cohort. Multi-modal sensor data, including pressure data from a pressure sensing mat with 1024 sensors, were prospectively recorded for each infant in seven succeeding laboratory sessions in biweekly intervals from 4 to 16 weeks of post-term age. 1776 pressure data snippets, each 5 s long, from the two targeted age periods were taken for movement classification. Each snippet was pre-annotated based on corresponding synchronised video data by human assessors as either fidgety present or absent. Multiple neural network architectures were tested to distinguish the fidgety present vs. fidgety absent classes, including support vector machines, feed-forward networks, convolutional neural networks, and long short-term memory networks.

Results

Here we show that the convolution neural network achieved the highest average classification accuracy (81.4%). By comparing the pros and cons of other methods aiming at automated general movement assessment to the pressure sensing approach, we infer that the proposed approach has a high potential for clinical applications.

Conclusions

We conclude that the pressure sensing approach has great potential for efficient large-scale motion data acquisition and sharing. This will in return enable improvement of the approach that may prove scalable for daily clinical application for evaluating infant neuromotor functions.

Plain language summary

The movement of a baby is used by health care professionals to determine whether they are developing as expected. The aim of this study was to investigate whether a pad containing sensors that measured pressure occurring as the babies moved could enable identification of different movements of the babies. The results we obtained were similar to those obtained from use of a computer to process videos of the moving babies or other methods using movement sensors. This method could be more readily used to check the movement development of babies than other methods that are currently used.

Similar content being viewed by others

Introduction

Over the past decades, our knowledge on human spontaneous movements, their onset, developmental trajectory, and predictive value for clinical outcomes has become increasingly profound1,2. Among the rich presentations of infant movements, a specific spontaneous motor repertoire, termed general movements by Prechtl and colleagues2,3, has gained substantial attention. The Prechtl general movements assessment (GMA) has proven to be an efficient and reliable diagnostic tool for detecting cerebral palsy within the first few months of human life4. The significance of general movements as a biomarker for divergent early brain development, and their long-term relevance for cognitive, speech-language, and motor development have been widely acknowledged5,6,7,8.

GMA is a method based on visual gestalt perception. The expertise of the GMA assessors comes from intensive training and sustaining practice. The cumulative cost and effort required for human assessors to achieve and maintain adequate performance are considerable. In common with other man-powered assessments, human factors may affect GMA assessors’ performance, which may have impeded an even broader application of this efficient diagnostic tool. As a consequence, increasing efforts on automated solutions to classify infant motor functions have been made in the last years to supplement the classic GMA9,10,11,12, where most attempts have been, remaining true to the GMA methodology, devoted to developing vision-based solutions, for example see13,14,15,16,17,18. Several sensor-based methods directly capturing 3D motion data of the infants have also been developed19,20,21,22,23,24,25. Both vision-based methods using marker-based body tracking26 and methods applying non-vision sensors19,20,21,22,23, require attaching elements or devices to the infant’s body, which can become cumbersome and might alter infants’ behavioural status and their motor output27. To optimise tracking and evaluating developmental behaviours in early infancy, we developed in 2015 a comprehensive multimodal approach28. One of our aims was to systematically examine the potential of different techniques and their combinations to classify, among others, infant movement patterns. With a prospective longitudinal approach, we recruited a cohort of typically developing infants. For recording their movements, we utilised, in addition to a multi-camera video set-up (2D-RGB and 3D Kinects), inertial motion units (IMUs, accelerometer sensors), and a pressure sensing mat28.

Pressure sensitive mats have been broadly applied in infant monitoring, sport training, and patient care to evaluate dynamic force distribution and displacement in sitting, lying, or standing positions in individuals with different mobilities and at different ages29,30,31,32,33,34,35,36,37,38,39. Pressure sensing devices have also been used for assessing preterm- and term-infants’ sleeping behaviours, gross motor patterns, and postural control40,41,42,43,44,45,46,47,48,49,50. Reported in a recent abstract, Johnson and colleagues51 used a force plate to assess motor patterns in 12 typically developing infants aged 2 to 7 months. They clustered the infants into three groups according to their movement variabilities (i.e., moderate, mild, and little variability). Greater variability captured by the pressure sensing device seemed to be associated with an age-specific general movement pattern, the fidgety movements (FMs), i.e., a general movement pattern that presents during 9–20 weeks of post-term age in typically developing infants27. No further technical details were revealed in the abstract. Kniaziew-Gomoluch and colleagues examined the postures of infants who presented either normal or abnormal FMs. They found statistical differences between the two groups in their Centre of Pressure (CoP) parameters measured by a force plate45,46. The authors, however, did not apply machine learning to classify general movements.

As pressure sensing mats record force changes in motion across spatial and temporal dimensions, it has the potential to distinguish infant movements that are different in their timing, speed, amplitude, spatial distribution, connection, and organisation (reflecting the involvement and displacement of different body-parts), which are the characterising features discriminating general movements at different ages, and more importantly, of distinctive qualities27 (physiological vs. pathological). Compared to other motion tracking techniques, the application of pressure mats does not require complex and time-consuming setups and is fully non-intrusive to the infants. The pressure mat data can be readily deidentified thus circumvent potential data privacy issues when it comes to data transfer and sharing52. If a pressure sensitive mat can reliably detect different infant general movement patterns, it will have great potential for routine clinical applications which may substantially increase the accessibility of GMA.

With the current proof-of-concept study, we sought to test the viability of using a pressure sensitive mat to classify different general movement patterns. We intended to use the pressure sensing mat to first analyse typical development, which shall build the basis for future investigations targeting altered development (i.e., classification of typical vs. atypical patterns pinpointing neurological dysfunction). Utilising data obtained from the aforementioned prospective-longitudinal infant cohort28, we aimed to examine whether pressure data can be used to differentiate typical FMs from “pre-fidgety movements”, i.e., writhing movements27.

In this study, we demonstrate that pressure sensing methodology can generate adequate infant movement classification. With ongoing technological advances on infant-suitable devices, easy-to-apply, non-intrusive pressure sensing solutions have great potential to be applied in daily clinical practice and surveillance of infant neuromotor functions. Developing pressure sensing approaches will forcefully contribute to meeting the urgent need of acquiring and sharing large datasets across centres, and in return, accelerate further development and improvement of the technology. Considering pros and cons of different sensing techniques, we suggest that multimodal non-intrusive (i.e., pressure and video) data acquisition and analyses combining different venues of motion information may be a propitious direction for research and practice. This approach may optimise and streamline infant movement evaluations enabling efficient and broader clinical implementation of GMA and help to objectively identify infants at elevated likelihood for developing neuromotor disorders such as cerebral palsy.

Methods

For the current study, we used a validated expert-annotated dataset reported in a previous study of our research group16 (please find details below; see also Fig. 1). Data acquisition was done at iDN’s BRAINtegrity lab at the Medical University of Graz, Austria. Movement data were collected as a part of the umbrella project with a prospective longitudinal design aimed to profile typical cross-domain development during the first months of human life28. Data analyses for the current study were done at the Systemic Ethology and Developmental Science Unit—SEE, Department of Child and Adolescent Psychiatry and Psychotherapy at the University Medical Centre Göttingen, Germany. The study was approved by the Institutional Review Board of the Medical University of Graz, Austria (27-476ex14/15) and the University Medical Centre Göttingen, Germany (20/9/19). Parents were informed of all experimental procedures and study purpose, and provided their written informed consent for participation and publication of results.

Participants

Participants of the umbrella project28 included 51 infants born between 2015 and 2017 to monolingual German-speaking families in Graz (Austria) and its close surroundings. Inclusion criteria were: uneventful pregnancy, uneventful delivery at term age (>37 weeks of gestation), singleton birth, appropriate birth weight, uneventful neonatal period, inconspicuous hearing and visual development. All parents completed high-school or higher level of education. The parents had no record of alcohol or substance abuse. From the 51 infants, one was excluded due to a diagnosed medical condition at 3 years of age. Another five were excluded due to incompleteness of recordings within the required age intervals (see below). The final sample for the current study comprised 45 infants (23 females).

Data acquisition

From 4 to 16 weeks of post-term age, each infant was assessed at 7 succeeding sessions in a standard laboratory setting in biweekly intervals. Post-term ages at the seven sessions were: T1 28 ± 2 days, T2 42 ± 2 days, T3 56 ± 2 days, T4 70 ± 2 days, T5 84 ± 2 days, T6 98 ± 2 days, and T7 112 ± 2 days. According to the GMA manual27, 5 to 8 weeks of post-term period (T2 and T3 belong to this period) is a transitional- or “grey”-zone between the writhing and the fidgety periods and is not ideal for assessing infant general movements. FMs are most pronounced in typically developing infants from 12 weeks of post-term age onwards27 (corresponding to T5-7). Therefore, to analyse infant general movements, data from T1 as “pre-fidgety period” and T5-7 as “fidgety period” were taken for the current study.

Infant movement data were recorded in form of RGB and RGB-D video streams, accelerometer and gyroscope data, and pressure sensing mat data28. All sensors were synchronised. Data recording procedure followed the GMA guidelines27. The pressure data was acquired using a Conformat pressure sensing mat53 (Tekscan, Inc., South Boston, Massachusetts, USA). The mat was laid on the mattress, covered by a standard cotton sheet. During a laboratory assessment, the infant was placed in supine on the mat by the parent. The Conformat contains 1024 pressure sensors arranged in a 32 × 32 grid array on an area of 471.4 × 471.4 mm2, producing pressure image frames (8 Bit, 32 × 32 pixels, sampling rate 100 Hz).

Data annotation

For movement classification using machine learning methods with pressure mat data, human-annotation data was needed. These annotation data were available from a previous study16. Human annotation was based on RGB recordings which were synchronised with the pressure mat recordings. In that previous study16, we first cut suitable videos (i.e., infants were, overall, awake and active and not fussy) from T1 to T5-7 of the 45 infants each into brief chunks (i.e., snippets). Based on initial pilot trials, we determined the shortest length of each video snippet to be 5 s, a reasonable duration of unit for machine learning, as well as a minimum length of video for human assessors feeling confident to judge whether the FM is present (FM+) or absent (FM−) on each snippet16, providing the ground truth labels to train and test classification models as described below.

For the purpose of proof-of-concept, only a fraction of the total available snippets (N = 19451) was sampled and annotated by human assessors. Out of the entire pool, 2800 snippets were randomly chosen: 1400 from T1, the pre-fidgety period, and the other 1400 from T5-7, the fidgety period. Two experienced GMA assessors, blind of the ages of the infants, evaluated each of the randomly ordered 2800 5-s snippets independently, labelling each snippet as “FM + ”, “FM−”, or “not assessable” (i.e., the infant during the specific 5 s was: fussy/crying, drowsy, hiccupping, yawning, refluxing, over-excited, self-soothing, or distracted, all of which distort infants’ movement pattern and shall not be assessed for GMA27. The interrater agreement of the two assessors for classes FM+ and FM− was Cohen’s kappa κ = 0.97. The intra-assessor reliability by rerating 280 randomly-chosen snippets (i.e., 10% of the sample) was Cohen’s kappa κ = 0.85 for assessor 1, and κ = 0.95 for assessor 2 for the classes FM+ and FM−. Snippets with discrepant labelling for classes FM+ vs. FM− by the assessors (N = 24), and the ones labelled as “not assessable” by either assessor (N = 990) were excluded. A remaining total of 1784 video snippets were labelled identically by both assessors as either FM+ (N = 956), or FM− (N = 828). Of the 1784 snippets, 1776 had corresponding synchronised pressure mat data, which were used for the machine learning procedures described below. Among the 1776 pressure mat snippets, 948 adopted the corresponding label of “FM + ”, and 828 of “FM−”.

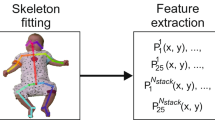

Feature extraction for motion encoding

A flow diagram of the feature extraction procedure is shown in Fig. 2. As input, we used the 1776 pressure mat recordings, each 5 s long, corresponding to the 5 s video snippets described above16, with a sampling rate of 100 Hz, which led to 500 frames per snippet. One frame consists of 1024 pressure sensor values arranged on a 32 × 32 grid (see frames on the left side in Fig. 2).

We first cropped the area [1:29, 4:29] of original grid size 32 × 32 (red rectangle), since in most cases the sensor values outside this area were 0. Thus, the size of the cropped area was 29 × 26 leading to 754 pressure sensor values. Generally, only two areas were strongly activated on the pressure mat, where activations on the top correspond to the infant shoulders and/or head, and activations at the bottom correspond to the infant hips. Therefore, we split the cropped grid of size 29 × 26 into two parts, 12 × 26 (top) and 17 × 26 (bottom), and tracked the CoP in these two areas.

Next, we computed position coordinates x and y of the CoP and the average pressure values p of the top and the bottom areas for each frame as the following:

Here, pt(i,j) and pb(i,j) correspond to the pressure sensor values at the position (i = 1..mt/b, j = 1..nt/b) of the top (t) and bottom (b) parts, respectively. To reduce signal noise, for each value x, y, and p, we applied moving average filter with a sliding window of size 5 frames (0.05 s).

To avoid biases that could be caused by infant size and weight, we normalised values x, y, and p between 0 and 1 as follows:

Thus, the original input of size 500 × 32 × 32 was reduced to 500 × 6, i.e., six signals of 500 time steps.

Classification models

For classifying infant movements (FM+ vs. FM−), we compared a support vector machine (SVM) and a feed-forward network (FFN), also known as multi-layer perceptron, with manually defined features against a convolutional neural network (CNN) and a long-short term memory (LSTM) network with learned features (for examples of network architectures see Fig. 3).

a feed-forward network F2, (b) convolutional neural network C3F2, and (c) long short-term memory network L1F1.2. For more details, please see Supplementary Table 1.

In case of the SVM and FFN, we used statistical features obtained from signals xt/b, yt/b, and pt/b and their derivatives x’t/b, y’t/b, and p’t/b by computing mean and standard deviation values for each signal. In one case, we only used statistical features from the original signals, which resulted in 12 input values in total. In another case, we used statistical features from both the original signals and their derivatives, which resulted in 24 input values in total. As shown in Supplementary Table 1, we investigated SVMs with different kernels (RBF and polynomial) and FFN architectures with one or two fully connected (FC) layers with 12 or 24 inputs.

In case of the CNN and the LSTM, we used the original signals xt/b, yt/b, and pt/b as input and allowed the networks to learn features from these signals by utilising one or multiple convolutional or LSTM layers (see Supplementary Table 1). For the CNN, behind the convolutional layer(s), we used an average pooling layer to reduce the input dimension as commonly used for the convolutional network architectures. This was then followed by one or two FC layers. All details of the network architectures and their parameters can be found in Supplementary Table 1. The schematic diagrams of an FFN architecture (F2), a CNN architecture (C3F2), and a LSTM architecture (L1F1.2) are presented in Fig. 3a–c, respectively.

The SVMs were implemented using Python scikit-learn library54. We used either the radial basis function (RBF) kernels or the polynomial kernels of degrees 1–3. Regularisation parameter C = [0.1, 1, 10, 100, 1000], and kernel coefficient gamma = [0.01, 0.1, 1, 10, 100] were tuned on the validation set (see section “Evaluation procedure and quantification measures” below).

All network architectures were implemented using TensorFlow55 and Keras API56. To train the network architectures we used the Adam optimiser with a binary cross-entropy as a loss function, batch size 4, and default training parameters, i.e., learning rate = 0.001, b1 = 0.9, b2 = 0.999, and e = 1e-07. To avoid overfitting, we used a validation stop with validation split 1/6 and patience 10.

Statistics and reproducibility

To evaluate and compare the performances of the above presented classification models, we used a 5-fold cross-validation procedure. We divided the dataset into five subsets (each subset contained snippets from nine different infants). One subset was used as the test set for each fold, and the remaining four subsets were used to train the network architecture. The number of snippets in the training and test sets for each fold is given in Table 1. In each fold in the training set, we had on average 662 (SD = 4) snippets for the absence of fidgety movements (FM−) and 758 (SD = 3) for the presence of fidgety movements (FM+) class. In the test sets, we had on average 166 (SD = 4) snippets for the FM− and 190 (SD = 3) for the FM+ class.

The training set was split into training (5/6 of the training data) and validation (1/6 of the training data) subsets. In case of the SVM, we trained 25 models with different parameter combinations C = [0.1, 1, 10, 100, 1000], and gamma = [0.01, 0.1, 1, 10, 100] on the training set. We then selected the model with the highest classification accuracy on the validation set, which was then evaluated on the test set. In case of the neural networks, for each fold we trained the network 20 times and then selected the model with the lowest loss score on the validation set, which was then evaluated on the test set.

For the evaluation of the classification performances, we used three common classification performance measures, i.e., sensitivity (true positive rate [TPR]), specificity (true negative rate [TNR]) and balanced accuracy (BA):

where TP is the number of true positives, TN the number of true negatives, FP the number of false positives, and FN the number of false negatives.

To compare classification accuracies of the network architectures, we calculated average classification performance measures across five test sets, confidence intervals of mean (CI 95%), and p values for comparison of means using two-sample t test. Statistical significance was set at p < 0.05.

Data and code are publicly available at Zenodo57.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Results

Signal examples

Examples of the pressure mat sensor values and the extracted feature signals x, y, and p are shown in Fig. 4, where the signals of an example of FM− and an example of FM+ are shown in the panels (a, b) and (c, d), respectively. In the panels (a, c), changes of pressure activity patterns caused by the infant’s movement were presented. Extracted signals are shown in the panels (b, d). In case of an absence of fidgety movements (FM−, b), local (short) signal patterns of lower frequency and larger amplitudes are observable. As a contrast, in case of a presence of fidgety movements (FM+, d), local (short) signal patterns of higher frequency and smaller amplitudes can be seen, which resembles the FM characteristics27.

a, c Pressure mat values (FM+ and FM− in a and c, respectively) obtained at 0.2, 0.4, …, 5 s. The blue and the red colours correspond to the low- and the high-pressure values, respectively. The red crosses correspond to the positions of the centre of mass for the top and the bottom areas of the pressure mat (see Fig. 2). b, d Signals for position x, y of the centre of mass, and the average pressure p (FM+ and FM− in b and d, respectively).

Classification results

Results of the classification performances for the best models are summarised and compared in Fig. 5. The performances of all models and all the performance measures are presented in Supplementary Table 2.

Statistics obtained from n = 5 test sets. For number of snippets in each test set please refer to Table 1. Coloured bars indicate for each model the average balanced accuracy (BA) obtained from the five test sets. Error bars denote the confidence intervals of the mean (CI 95%). The average BA between the CNN (C3F2) and the FFN (F1.3), and between the CNN (C3F2) and the LSTM (L1.F2) is significantly different (t test, p = 0.0343 and p = 0.0008). The average BA between the SVM (S2.P1) and the CNN (C3F2) is not statistically significant (t test, p = 0.0911).

SVMs. Applying SVMs with manually defined statistical features, the worst average classification performance was obtained when only the original signals x, y, and p (no derivatives, S1.RBF, S1.P1-3) were used, where the average balanced accuracy BA = 69.13–71.49%. Compared to the classification performance with additional features from the signal derivatives x’, y’, and p’ (S2.RBF, S2.P1-3), the average BA = 73.87–76.15%. However, the improvement from 71.49% (S1.RBF) to 76.15% (S2.P1) was not statistically significant (t test, p = 0.0776).

FFN architectures. Applying FFN architectures with manually defined statistical features, the worst average classification performance was obtained when only the original signals x, y, and p (no derivatives, network F1.1) were used, where the average balanced accuracy BA = 72.11%. Adding statistical features from the signal derivatives x’, y’, and p’ (network F1.3) and increasing the number of neurons of a FC layer from 100 to 200 improved the classification performance (average BA = 75.57%). However, this improvement was not statistically significant (t test, p = 0.2080). Adding a second FC layer (network F2) did not improve the classification performance (average BA = 73.58%).

CNN architectures. A CNN network architecture with learned features and only one convolutional layer (C1F1.1, four filters of size 7 × 1) led to a better average classification performance (BA = 77.46%), compared to the best average performance of the FFN architecture (BA = 75.57%). However, the difference was not statistically significant (t test, p = 0.4305).

Increasing the number of filters and the filter size (C1F1.2, 16 filters of size 13 × 1; C1F1.3, 64 filters of size 21 × 1) did not improve the classification performance, the average BA was 74.85% (C1F1.2) and 75.03% (C1F1.3), respectively. Increasing the number of neurons in a FC layer (C1F1.4) or adding a second FC layer (C1F2) also did not improve the classification performance, the average BA was 73.93% (C1F1.4) and 76.00% (C1F2), respectively.

Using architectures with two (C2F1) or three convolutional layers (C3F.1-2, C3F2) further improved the classification performance. The best classification performance was obtained by using a CNN architecture with three convolutional layers and two FC layers (C3F2), with an average BA = 81.43%.

LSTMs. The classification performance using LSTMs was inferior than using the other classification models. The average BA of LSTMs ranged from 66.93 to 69.04%. The highest average classification accuracy was obtained using one LSTM layer and two FC layers, L1F2 (average BA = 69.04%). Adding an additional LSTM layer and increasing the number of LSTM neurons did not improve the classification accuracy (average BA = 68.54%).

Model comparison. Comparison of the best classification models of the four network architectures are shown in Fig. 5. The CNN with learned features led to the highest average classification accuracy of 81.43% (CI = [78.00% 84.86%]). It outperformed the SVM (76.15%, CI = [72.00% 80.30%]) and the FFN (75.57%, CI = [72.65% 78.49%]) with manually defined features. The LSTM with learned features led to the worst classification performance (69.04%, CI = [65.94% 72.13%]).

Discussion

In the current study, we carried out a proof-of-concept evaluation and explored the feasibility of using a pressure sensing device to track and classify age-specific infant general movement patterns. We adopted an existing pre-annotated dataset from a typically developing infant cohort16 and examined whether a pressure data could be used to differentiate between typical movement patterns during the “fidgety age period” and the ones during the “pre-fidgety period”. With the current pressure mat approach, and the CNN architectures, the highest average classification accuracy achieved was 81%, with 86% sensitivity and 76% specificity for classifying presence vs. absence of the fidgety movements (FM+ vs. FM−).

We demonstrated that simple classification models such as SVMs or feed-forward network architectures (FFN) with manually defined statistical features can reach a moderate classification accuracy (up to 76%). With the CNN architectures that allow for learning relevant features instead of predefining them, a higher classification accuracy (up to 81%) was achieved. The lowest accuracy was obtained using the long short-term memory (LSTM) network (69%).

While pressure sensitive devices have been used to evaluate infant sleep-wake behaviours, gross motor patterns, and postural control40,41,42,43,44,45,46,47,48,49,50, they have rarely been used to classify infant general movements51. As such, at the moment, we can only compare the performance of the pressure mat to that of the vision-based sensors. We are aware that a direct comparison is not possible, since different data sets were used across different studies9,11. However, both the pressure mat technique and the vision-based methods are non-intrusive sensing approaches, which are conformable to the GMA guidelines27. In studies that attempted to distinguish FM+ versus FM−, the classification performance with the current pressure sensing mat seemed to be slightly lower than the performances based on RGB or RGB-D videos (see Table 2). The first possible reason for the lower performance might be that the mat used in this study was not specifically configurated to capture infant motion. As technology advances continuously, more sensitive and suitable pressure sensing devices for infant motion tracking may improve the performance of the pressure-based classification. Second, the lower performance may be partially explained by the fact that the pressure sensing mat only measures motion of some body parts as compared to motion data obtained from full-body tracking. Infants during “active-wakefulness”27 frequently lift their legs and arms above the lying surface2, here, the pressure mat. The motions of the lifted extremities can therefore not be captured directly but are indirectly translated through the changing force distribution patterns of the body parts (e.g., head, shoulder, back, and hips) that are in contact with the mat. This may partly explain the lower classification performance of the pressure mat in the current study compared to our previous study using the same dataset but analysing full-body skeleton data16. Third, for this proof-of-concept study, we only randomly sampled a fraction of the available data of the entire data pool. The performance of the algorithm may be improved if a greater amount of data would be included in future studies. Adopting an existing expert-annotated dataset, the performance of the pressure mat was currently based on rather short, 5 s long snippets. Using longer recordings for the machine learning procedures in the future might also improve the classification accuracy of the pressure sensing devices. Finally, one could also explore other CNN architectures such as temporal convolutional networks with residual connections which may improve the accuracy even further58.

Importantly, data used in the current study originated from a healthy, typically developing cohort. The absence of fidgety movements (FM−) in this dataset reflects normal, age-typical motor patterns, i.e., the writhing movements27, which are known to have significantly different quality and motion appearance from the pathological absence of FMs3. The pathological absence-of-fidgety patterns of infants with neurological deficits, e.g., with monotonous, jerky, or cramped-synchronised movement characters27, when compared to normal smooth and fluent FMs of the same aged infants, could be easier to detect by the pressure mat and result in higher classification performance. Our current dataset however does not allow for testing this hypothesis. Rather, following a classic physiology-prior-pathology paradigm59, with the data obtained from a healthy infant cohort, the present study was intended to examine the performance of the pressure sensing mat on classifying typical general movements, which are known to physiologically change their patterns during the first months of development, i.e., from the writhing to the fidgety pattern27. We would like to emphasise that the variabilities of the typical general movements are enormous, also within the same age period (e.g., the “fidgety age”), and shall not be underestimated60. Without a high-fidelity reflection and discernment of typical developmental patterns, attempts of classifying altered development may prove void. An AI-driven approach aiming at future clinical application therefore needs to investigate both typical and atypical patterns to warrant sensitivity as well as specificity.

As has been discussed in the recent reviews on AI-based GMA approaches, the objectives and the applied datasets of the existing studies are dissimilar from each other, which makes direct comparison of diverse approaches difficult9,10,11. Different labs each work on a separate set of data, often limited in sample size. It was often not reported in the published works, how the respective dataset was annotated and validated11, although valid annotations (e.g., FM+ vs. FM−) are the key for ML classification. Until now, no approved public-accessible large datasets are available in the field of researching general movements, although such dataset would be the basis for developing and comparing automated GMA solutions. This urgently calls for pooling and merging high-quality data across sites, which is challenging, partly due to the complex participants’ confidentiality and privacy concerns52,61. With the pressure sensing data, participants’ privacy protection can be easily achieved since no personal identifiable data is necessary for the analyses. As a comparison, with vision-based approaches, i.e., using cameras, which are predominantly used in the field9,10,11, facial images, being one of the most sensitive personal identifiers, are commonly present in the datasets. Data de-identification and privacy protection can only be done through additional laborious technical manipulations52.

Not to be forgotten, any clinical tool, if intended for broad application, has to be easy-to-use. Compared to other types of motion sensors (Table 3), data acquisition through pressure mats is non-intrusive, and requires minimum cost and setup efforts, which can be readily integrated into busy clinical routines almost anywhere. Importantly, the pressure sensing mat provides motion information in form of dynamic force changes in 2D-space. This is different from, and can provide a unique add-up to the information obtained through video data (usually body pose) or inertial motion sensors (acceleration and/or angular velocity)28. In case of cameras, additional algorithms need to be utilised to extract pose and/or motion information14,15,16,62, whereas accelerometers inertial motion units (IMUs), or a pressure sensing mat provide motion information directly20,23,24,46,51. Although single RGB cameras are easy to install and operate (no synchronisation nor calibration required), they only provide 2D pose/motion information as compared to RGB-D cameras or IMU sensors. Both single RGB and RGB-D cameras frequently suffer from occlusions (either caused by the setup, e.g., in an incubator, or by the infant’s own movements) and may lose track of some body parts from time to time. This limitation may be overcome by using multiple cameras26,63. However, such a setup becomes notably more complicated due to the necessity for synchronisation and calibration of the cameras, and also due to the amount of information generated, which needs to be processed to obtain 3D body pose and/or motion information64,65. Considering the strengths and limitations of different sensors9,10,11,12,66, pressure sensing mat data can augment other sensor modalities, especially single RGB or RGB-D camera setups to improve infant motion analysis.

Our results demonstrate that the currently applied pressure mat, although not specifically designed for tracking infant movements, delivered promising classification results in distinguishing typical fidgety from pre-fidgety movements. Although the classification performance of the pressure mat, in common with that of the most existent automated GMA approaches, is still inferior than the performance of human GMA experts10,11,12,66, the current study sets out an initial step for a line of non-intrusive AI-driven GMA research beyond using vision-based sensors (please also see below for comparison of different sensing modalities). It shall motivate further efforts to examine and improve the performance of the pressure sensors with extended datasets encompassing different patterns of general movements. Further studies shall evaluate if pressure sensors can reliably distinguish general movement patterns between: (a) normal pre-fidgety movements, i.e., age-typical writhing movement patterns before FMs emerge; (b) abnormal pre-fidgety movements, e.g., poor-repertoire or cramp-synchronised patterns27; (c) normal movement patterns during the fidgety age period; and (d) abnormal movement patterns during the fidgety age period, e.g., absent or abnormal FMs27. Our present study tested the classification between (a) and (c). This, from both the clinical and technological perspectives, is not comparable to the classification between (c) and (d), which was the focus of most studies listed in Table 2.

It has to be pointed out that the GMA is undoubtedly far beyond only identifying the presence or absence of the FMs3,11,67, although the FM pattern is of high diagnostic value60 and has hence gained extensive attention, including in the field of developing automated GMA10. Naturally, motion information captured by the pressure sensing devices, like by many other sensors, can tell us much more than whether a specific motility (e.g., the FMs) exists29,32,35,43,47. Future studies shall also investigate the potential of the pressure sensing mat for detecting other classes of motion features, within or beyond general movements, that are of clinical significance.

In short, pressure sensing solutions, by nature pseudonymised, provide a promising venue for realising easy and large-scale multi-centre data acquisition and sharing. This, if done, will enable synergy to develop, evaluate, and improve infant motion-tracking technologies that may ultimately scale up the implementation of AI-driven GMA.

Data availability

The data used for the classification experiments is publicly available at Zenodo: https://doi.org/10.5281/zenodo.810409757. The source data underlying results presented in Fig. 5 and Supplementary Table 2 is available in the Supplementary Data. All other data are available from the corresponding author on reasonable request.

Code availability

The code is publicly available at Zenodo: https://doi.org/10.5281/zenodo.810409757. Python scripts were tested on Linux OS with TensorFlow 2.10.1, Keras 2.10.0, Python 3.7.6, and Numpy 1.21.6. Matlab script was tested on Windows OS and MATLAB R2019b.

References

Einspieler, C., Prayer, D. & Marschik, P. B. Fetal movements: the origin of human behaviour. Dev. Med. Child Neurol. 63, 1142–1148 (2021).

Einspieler, C., Marschik, P. B. & Prechtl, H. F. R. Human motor behavior: prenatal origin and early postnatal development. Z. fur Psychol. / J. Psychol. 216, 147–153 (2008).

Prechtl, H. F. R. et al. An early marker for neurological deficits after perinatal brain lesions. Lancet 349, 1361–1363 (1997).

Novak, I. et al. Early, accurate diagnosis and early intervention in cerebral palsy: advances in diagnosis and treatment. JAMA Pediatr. 171, 897–907 (2017).

Kwong, A. K.-L. et al. Early motor repertoire of very preterm infants and relationships with 2-year neurodevelopment. J. Clin. Med. 11, 1833 (2022).

Einspieler, C., Bos, A. F., Libertus, M. E. & Marschik, P. B. The general movement assessment helps us to identify preterm infants at risk for cognitive dysfunction. Front. Psychol. 7, 406 (2016).

Peyton, C. et al. Correlates of normal and abnormal general movements in infancy and long-term neurodevelopment of preterm infants: insights from functional connectivity studies at term equivalence. J. Clin. Med. 9, 834 (2020).

Salavati, S. et al. The association between the early motor repertoire and language development in term children born after normal pregnancy. Early Hum. Dev. 111, 30–35 (2017).

Marcroft, C., Khan, A., Embleton, N. D., Trenell, M. L. & Plötz, T. Movement recognition technology as a method of assessing spontaneous general movements in high risk infants. Front. Neurol. 5, 284 (2015).

Irshad, M. T., Nisar, M. A., Gouverneur, P., Rapp, M. & Grzegorzek, M. AI approaches towards Prechtl’s assessment of general movements: a systematic literature review. Sensor 20, 5321 (2020).

Silva, N. et al. The future of general movement assessment: the role of computer vision and machine learning—A scoping review. Res. Dev. Disabil. 110, 103854 (2021).

Raghuram, K. et al. Automated movement recognition to predict motor impairment in high-risk infants: a systematic review of diagnostic test accuracy and meta-analysis. Dev. Med. Child Neurol. 63, 637–648 (2021).

Ihlen, E. A. F. et al. Machine learning of infant spontaneous movements for the early prediction of cerebral palsy: a multi-site cohort study. J. Clin. Med. 9, 5 (2020).

Nguyen-Thai, B. et al. A spatio-temporal attention-based model for infant movement assessment from videos. IEEE J. Biomed. Health Inform. 25, 3911–3920 (2021).

Groos, D. et al. Development and validation of a deep learning method to predict cerebral palsy from spontaneous movements in infants at high risk. JAMA Netw. Open 5, e2221325 (2022).

Reich, S. et al. Novel AI driven approach to classify infant motor functions. Sci. Rep. 11, 9888 (2021).

McCay K. D. et al. Towards explainable abnormal infant movements identification: a body-part based prediction and visualisation framework. In IEEE EMBS International Conference on Biomedical and Health Informatics (BHI) 1–4 (2021).

Tsuji, T. et al. Markerless measurement and evaluation of general movements in infants. Sci. Rep. 10, 1422 (2020).

Karch, D. et al. Quantification of the segmental kinematics of spontaneous infant movements. J. Biomech. 41, 2860–2867 (2008).

Abrishami, M. S. et al. Identification of developmental delay in infants using wearable sensors: full-day leg movement statistical feature analysis. IEEE J. Transl. Eng. Health Med. 7, 1–7 (2019).

Chung, H. U. et al. Skin-interfaced biosensors for advanced wireless physiological monitoring in neonatal and pediatric intensive-care units. Nat. Med. 26, 418–429 (2020).

Airaksinen, M. et al. Automatic posture and movement tracking of infants with wearable movement sensors. Sci. Rep. 10, 169 (2020).

Fontana, C. et al. An automated approach for general movement assessment: a pilot study. Front. Pediatr. 9, 720502 (2021).

Airaksinen, M. et al. Intelligent wearable allows out-of-the-lab tracking of developing motor abilities in infants. Commun. Med. 2, 69 (2022).

Hartog, D. et al. Home-based measurements of dystonia in cerebral palsy using smartphone-coupled inertial sensor technology and machine learning: a proof-of-concept study. Sensors 22, 4386 (2022).

Marchi, V. et al. Movement analysis in early infancy: towards a motion biomarker of age. Early Hum. Dev. 142, 104942 (2020).

Einspieler, C., Prechtl, H. F. R., Bos, A. F., Ferrari, F. & Cioni, G. Prechtl’s method on the qualitative assessment of general movements in preterm, term and young infants. Clin. Dev. Med. 167, 1–91 (2004).

Marschik, P. B. et al. A novel way to measure and predict development: a heuristic approach to facilitate the early detection of neurodevelopmental disorders. Curr. Neurol. Neurosci. Rep. 17, 1–15 (2017).

Nishizawa, Y., Fujita, T., Matsuoka, K. & Nakagawa, H. Contact pressure distribution features in down syndrome infants in supine and prone positions, analyzed by photoelastic methods. Pediatr. Int. 48, 484–488 (2006).

Bickley, C. et al. Comparison of simultaneous static standing balance data on a pressure mat and force plate in typical children and in children with cerebral palsy. Gait Posture 67, 91–98 (2019).

Saenz-Cogollo, J. F., Pau, M., Fraboni, B. & Bonfiglio, A. Pressure mapping mat for tele-home care applications. Sensors 16, 365 (2016).

Safi K., Mohammed S., Amirat Y. & Khalil M. Postural stability analysis—A review of techniques and methods for human stability assessment. In 4th International Conference on Advances in Biomedical Engineering (ICABME) 1–4 (2017).

Martinez-Cesteros, J., Medrano-Sanchez, C., Plaza-Garcia, I., Igual-Catalan, R. & Albiol-Pérez, S. A velostat-based pressure-sensitive mat for center-of-pressure measurements: a preliminary study. Int. J. Environ. Res. Public Health 8, 5958 (2021).

Low, D. C., Walsh, G. S. & Arkesteijn, M. Effectiveness of exercise interventions to improve postural control in older adults: a systematic review and meta-analyses of centre of pressure measurements. Sports Med. 47, 101–112 (2017).

Muehlbauer, T., Gollhofer, A. & Granacher, U. Associations between measures of balance and lower-extremity muscle strength/power in healthy individuals across the lifespan: a systematic review and meta-analysis. Sports Med. 45, 1671–1692 (2015).

Betker A. L., Szturm T. & Moussavi Z. Development of an interactive motivating tool for rehabilitation movements. In 27th IEEE International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) 2341-2344 (2006).

Dabrowski, S. & Branski, Z. Pressure sensitive mats as safety devices in danger zones. Int. J. Occup. Saf. Ergon. 4, 499–519 (1998).

Hubli, M. et al. Feedback improves compliance of pressure relief activities in wheelchair users with spinal cord injury. Spinal Cord 59, 175–184 (2021).

Garcá-Molina, P. et al. Evaluation of interface pressure and temperature management in five wheelchair seat cushions and their effects on user satisfaction. J. Tissue Viability. 30, 402–409 (2021).

Donati, M. et al. A modular sensorized mat for monitoring infant posture. Sensors 14, 510–531 (2013).

Dusing, S. C., Mercer, V., Yu, B., Reilly, M. & Thorpe, D. Trunk position in supine of infants born preterm and at term: an assessment using a computerized pressure mat. Pediatr. Phys. Ther. 17, 2–10 (2005).

Dusing, S. C., Kyvelidou, A., Mercer, V. S. & Stergiou, N. Infants born preterm exhibit different patterns of center-of-pressure movement than infants born at full term. Phys. Ther. 89, 1354–1362 (2009).

Dusing, S. C., Izzo, T. A., Thacker, L. R. & Galloway, J. C. Postural complexity influences development in infants born preterm with brain injury: relating perception-action theory to 3 cases. Phys. Ther. 94, 1508–1516 (2014).

Dusing, S. C., Thacker, L. R. & Galloway, J. C. Infant born preterm have delayed development of adaptive postural control in the first 5 months of life. Infant Behav. Dev. 44, 49–58 (2016).

Kniaziew-Gomoluch, K., Szopa, A., Kidon, Z., Siwiec, A. & Domagalska-Szopa, M. Design and construct validity of a postural control test for pre-term infants. Diagnostics 3, 96 (2023).

Kniaziew-Gomoluch, K. et al. Reliability and repeatability of a postural control test for preterm infants. Int. J. Environ. Res. Public Health 20, 1868 (2023).

Kobayashi, Y., Yozu, A., Watanabe, H. & Taga, G. Multiple patterns of infant rolling in limb coordination and ground contact pressure. Exp. Brain Res. 239, 2887–2904 (2021).

Rihar, A., Mihelj, M., Kolar, J., Pašic, J. & Munih, M. Sensory data fusion of pressure mattress and wireless inertial magnetic measurement units. Med. Biol. Eng. Comput. 53, 123–135 (2015).

Rihar, A. et al. Infant posture and movement analysis using a sensor-supported gym with toys. Med. Biol. Eng. Comput. 57, 427–439 (2019).

Whitney, M. P. & Thoman, E. B. Early sleep patterns of premature infants are differentially related to later developmental disabilities. J. Dev. Behav. Pediatr. 14, 71–80 (1993).

Johnson, T. L. et al. Artificial intelligence-based quantification of the general movement assessment using center of pressure patterns in healthy infants. J. Clin. Transl. Sci. 4, 105 (2020).

Marschik, P. B. et al. Open video data sharing in developmental science and clinical practice. iScience 26, 106348 (2023).

Tecscan. https://www.tekscan.com/pressure-mapping-sensors (2023).

Scikit-learn. https://scikit-learn.org (2023).

TensorFlow. https://www.tensorflow.org (2023).

Keras. https://keras.io (2023).

Kulvicius, T. & Marschik, P. B. Infant movement classification through pressure distribution analysis. Zenodo https://doi.org/10.5281/zenodo.8104097 (2023).

Bai, S., Kolter, J. Z. & Koltun, V. An empirical evaluation of generic convolutional and recurrent networks for sequence modeling. Comput. Res. Repos. (CoRR) https://doi.org/10.48550/arXiv.1803.01271 (2018).

D’Irsay, S. Pathological Physiology. Sci. Mon. 23, 403–406 (1926).

Einspieler, C., Peharz, R. & Marschik, P. B. Fidgety movements – tiny in appearance, but huge in impact. J. Pediatr. 92, 64–70 (2016).

Ursin, G. et al. Sharing data safely while preserving privacy. Lancet 394, 1902 (2019).

Cao Z., Simon T., Wei S.-E. & Sheikh Y. Realtime multi-person 2D pose estimation using part affinity fields. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 7291-7299 (2017).

Shivakumar S. S. et al. Stereo 3D tracking of infants in natural play conditions. In IEEE International Conference on Rehabilitation Robotics (ICORR) 841-846 (2017).

Kim J.-H., Choi J. S. & Koo B.-K. Calibration of multi-Kinect and multi-camera setup for full 3D reconstruction. In IEEE International Symposium on Robotics (ISR) 1-5 (2013).

Kim J.-S. Calibration of multi-camera setups 69–72. (Boston, MA: Springer US, 2014).

Redd, C. B., Karunanithi, M., Boyd, R. N. & Barber, L. A. Technology-assisted quantification of movement to predict infants at high risk of motor disability: a systematic review. Res. Dev. Disabil. 118, 104071 (2021).

Einspieler, C. et al. Cerebral palsy: early markers of clinical phenotype and functional outcome. J. Clin. Med. 8, 1616 (2019).

Machireddy A. et al. A video/IMU hybrid system for movement estimation in infants. In 39th IEEE International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) 730-733 (2017).

Adde, L., Helbostad, J., Jensenius, A. R., Langaas, M. & Støen, R. Identification of fidgety movements and prediction of CP by the use of computer-based video analysis is more accurate when based on two video recordings. Physiother. Theory Pract. 29, 469–475 (2013).

Adde, L., Helbostad, J. L., Jensenius, A. R., Taraldsen, G. & Støen, R. Using computer-based video analysis in the study of fidgety movements. Early Hum. Dev. 85, 541–547 (2009).

Hesse N. et al. Computer vision for medical infant motion analysis: state of the art and RGB-D data set in European Conference on Computer Vision (ECCV) Workshops 32-49 (2018).

Acknowledgements

The authors thank all families for their participation. Thanks to the team members of the Marschik Labs in Graz and Göttingen (iDN—interdisciplinary Developmental Neuroscience and SEE—Systemic Ethology and Developmental Science), who were involved in recruitment, data acquisition, curation, and pre-processing: Gunter Vogrinec, Magdalena Krieber-Tomantschger, Iris Tomantschger, Laura Langmann, Claudia Zitta, Dr. Robert Peharz, Dr. Florian Pokorny, Dr. Simon Reich. We are/were supported by BioTechMed Graz and the Deutsche Forschungsgemeinschaft (DFG—stand-alone grant 456967546, SFB1528—project C03), the Laerdal Foundation, the Bill and Melinda Gates Foundation through a Grand Challenges Explorations Award (OPP 1128871), the Volkswagenfoundation (project IDENTIFIED), the LeibnizScience Campus, the BMBF CP-Diadem (Germany), and the Austrian Science Fund (KLI811) for data acquisition, preparation, and analyses. Special thanks also to our interdisciplinary international network of collaborators for discussing this study with us and for refining our ideas, and to the reviewers for their extensive feedback and advice. We also acknowledge support by the Open Access Publication Funds/transformative agreements of the Göttingen University.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Contributions

Conceptualisation, T.K., D.Z., C.E. and P.B.M.; Methodology, T.K., D.Z., F.W. and P.B.M; Data Curation, T.K.; Software, T.K.; Formal Analysis, T.K. and D.Z.; Visualisation, T.K.; Writing—Original Draught, T.K., D.Z. and P.B.M; Writing—Review & Editing, T.K., D.Z., K.N., S.B., M.K., C.E., L.P., F.W. and P.B.M.; Supervision, F.W. and P.B.M.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Communications Medicine thanks Antoine Falisse and the other anonymous reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kulvicius, T., Zhang, D., Nielsen-Saines, K. et al. Infant movement classification through pressure distribution analysis. Commun Med 3, 112 (2023). https://doi.org/10.1038/s43856-023-00342-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s43856-023-00342-5

This article is cited by

-

Using the center of pressure movement analysis in evaluating spontaneous movements in infants: a comparative study with general movements assessment

Italian Journal of Pediatrics (2023)