Abstract

Fuzzy rough entropy, established on the notion of fuzzy rough set theory, has been effectively and efficiently applied to feature selection to handle the uncertainty in real-valued datasets. Further, fuzzy rough mutual information has been presented by integrating information entropy with fuzzy rough sets to measure the importance of features. However, none of the methods to date can simultaneously handle noise, vagueness, and the uncertainty arising from both judgement and identification, which degrades the overall performance of learning algorithms as the number of mixed-valued conditional features increases. In the current study, these issues are tackled by presenting a novel intuitionistic fuzzy (IF) assisted mutual information concept along with an IF granular structure. Initially, a hybrid IF similarity relation is introduced. Based on this relation, an IF granular structure is introduced. Then, IF rough conditional and joint entropies are established. Further, mutual information based on these concepts is discussed. Next, mathematical theorems are proved to demonstrate the validity of the given notions. Thereafter, the significance of a feature subset is computed by using this mutual information, and a corresponding feature selection method is suggested to delete irrelevant and redundant features. The current approach effectively handles noise and the subsequent uncertainty in both numerical and mixed data (containing both nominal and numerical variables). Moreover, comprehensive experiments are performed on real-valued benchmark datasets to demonstrate the practical validity and effectiveness of the addressed technique. Finally, an application of the proposed method is exhibited to improve the prediction of phospholipidosis positive molecules. Based on our proposed methodology, RF (h2o) produces the most effective results to date, with sensitivity, accuracy, specificity, MCC, and AUC of 86.7%, 90.1%, 93.0%, 0.808, and 0.922, respectively.


Introduction

The current trend of accumulating huge amounts of data in databases pertaining to different domains has given rise to a unique opportunity for knowledge discovery/extraction using a plethora of data mining techniques1. These techniques2 can be explored in three ways, namely knowledge types, architecture types, and analysis types, along with their powerful applications in distinct research and practical domains to solve interesting real-world problems. Data mining plays a vital role in establishing smart agriculture application tools that accomplish real-time analysis of large volumes of data. Data mining tasks3 extract essential hidden patterns, correlations, and knowledge from various applications, such as bioinformatics datasets, viscous dissipation, and activation energy4,5. Machine learning methods provide a set of techniques that can be used to create prediction/discriminatory models and subsequently extract knowledge, which may facilitate decision making or a better understanding of the concerned domain6,7. The “curse of dimensionality” plagues the effectiveness of various machine learning algorithms, but the development of dimensionality reduction methods8 has considerably reduced the effects of redundancy present in high-dimensional datasets. In the fields of data mining, signal processing, biomedical imaging, agriculture, industrial engineering, and bioinformatics, researchers frequently face obstacles due to the “curse of dimensionality”, as it increases the costs of data storage and computation9. Moreover, this issue directly affects both the efficiency and the accuracy with which different problems can be solved10. Dimensionality reduction can eliminate redundancy, irrelevancy, and noise, minimize the complexity of machine learning methods, and enhance the overall accuracy of classification; it can therefore be identified as an essential phase in a pattern recognition scheme11.

Redundant features negatively affect various machine learning algorithms, mostly resulting in high computation time and less accurate predictive models12. They also complicate model interpretation. Feature reduction methods can mitigate the negative effects of high-dimensional data by selecting a low-dimensional, non-redundant subset of features. Feature reduction methods have been found to be very effective in a wide variety of research areas, including the biological domain13,14.

Most popular feature reduction algorithms fall into two categories: filter and wrapper methods. Wrapper methods depend on a classifier to evaluate features15,16, whereas filter methods use a classifier-independent feature selection criterion and are generally less computationally intensive17.

Rough set theory18,19 has previously been applied very promisingly to feature selection20. However, classical rough set theory based feature selection methods21,22 can only be used on discrete features, which makes discretization of continuous features mandatory23,24. There is a fair chance of information loss during the process of discretization25.

The combination of fuzzy26 and rough sets27 effectively deals with uncertain, vague, and incomplete data. Rough set theory has been competently employed to produce the most informative features from a dataset consisting of discretized conditional attribute values. This informative feature subset, produced from the original feature set with minimum information loss, is termed a reduct. Rough sets deal with vagueness, whilst fuzzy sets handle uncertainty. Fuzzy set theory ensures that real-valued datasets can be handled without any further discretization. Because a fuzzy rough set (FRS) can handle a real-valued information system (dataset) directly, combining fuzzy sets with rough sets effectively avoids the information loss caused by discretization; FRS tackles continuous feature values through fuzzy similarity measures28. Broadly, FRS aided dimensionality reduction29 methods can be categorized into two types30,31, based on the discernibility matrix and on the dependency function32, respectively. Discernibility matrix assisted approaches provide numerous reduct sets33, whilst the dependency function leads to a single feature subset34.

In FRS aided dimensionality reduction, a similarity relation between data points is used to construct lower and upper approximations. Taking the union of the computed lower approximations yields the positive region of the decision. The larger the membership of an instance to the positive region, the greater the plausibility that it belongs to a single category35. The significance of a subset of features is then computed from the dependency function. Moreover, the conditional entropy measure has been employed to calculate reduct sets for both homogeneous and heterogeneous information systems36,37,38. However, these approaches may misclassify samples when there is a large degree of overlap between different categories of data. Also, they cope only with the membership of a data point to a set, so the uncertainty arising from both identification and judgement cannot be handled. Hence, there is an essential requirement for a different kind of mathematical model that can both fit the data and, at the same time, tackle the uncertainty emerging from identification39.
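The FRS pipeline just described (similarity relation, lower approximation, positive region, dependency) can be illustrated with a short Python sketch. It is a minimal example under stated assumptions, not the formulation of any cited work: the similarity R(x, y) = 1 - |a(x) - a(y)| / range(a), the Kleene-Dienes implicator max(1 - R, X) used for the lower approximation, and all function names are illustrative choices.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def similarity_matrix(values: Sequence[float]) -> Matrix:
    """Assumed fuzzy similarity R(x, y) = 1 - |a(x) - a(y)| / range(a) for one real-valued feature."""
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # a constant feature makes every pair fully similar
    return [[1.0 - abs(x - y) / span for y in values] for x in values]


def lower_approximation(R: Matrix, member: Sequence[float]) -> List[float]:
    """Fuzzy lower approximation with the Kleene-Dienes implicator:
    mu_low(x) = min over y of max(1 - R(x, y), member(y))."""
    n = len(R)
    return [min(max(1.0 - R[i][j], member[j]) for j in range(n)) for i in range(n)]


def dependency(R: Matrix, labels: Sequence[str]) -> float:
    """gamma = (sum over x of POS(x)) / |U|, where POS(x) is the largest lower-approximation
    membership of x over all decision classes (fuzzy union of the lower approximations)."""
    n = len(labels)
    pos = [0.0] * n
    for cls in set(labels):
        crisp = [1.0 if lab == cls else 0.0 for lab in labels]  # decision class as a crisp set
        low = lower_approximation(R, crisp)
        pos = [max(p, q) for p, q in zip(pos, low)]
    return sum(pos) / n


if __name__ == "__main__":
    R = similarity_matrix([0.0, 0.1, 0.9, 1.0])
    print(round(dependency(R, ["a", "a", "b", "b"]), 2))  # 0.85
```

With this toy feature the two classes are well separated but not perfectly, so the dependency lies strictly between 0 and 1; a constant feature gives a dependency of 0.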

Intuitionistic fuzzy (IF) sets40,41 are a step ahead: they offer two degrees of freedom by considering both membership and non-membership, and can therefore cope with the uncertainty that emerges in both judgement and identification42. IF sets have been successfully applied in decision making43, image segmentation, rule generation, and machine learning44,45. In the last few years, IF46 and rough sets47 have been combined to establish numerous IF rough set models48,49 that effectively handle such uncertainty and vagueness in data50,51. Huang et al.52 proposed a ranking based model for selecting the neighbourhood of objects53,54 and presented a Dominant IF Decision Table (DIFDT)55 by using a discernibility matrix and the associated discernibility function23. They developed an IFRS based reduction technique for knowledge extraction from a given information system. In 2014, Huang et al.56 presented the IF multigranulation rough set (IFMGRS) model and studied different reduction techniques that eliminate redundant granules by introducing reducts for three different types of IFMGRSs. Tan et al.57 used the concept of granular structure to introduce an IF rough set model58 and employed it for feature selection. Tiwari et al.59 discussed an IF tolerance relation, which was applied to establish IF rough set aided feature selection. Shreevastava et al.60 addressed different similarity relation assisted techniques to deal with both supervised and semi-supervised data. Tiwari et al.61,62,63 and Shreevastava et al.64,65 elaborated different issues related to feature selection and presented several lower and upper approximations by using various mathematical ideas. Li et al.66 presented a feature selection method for tracking multiple samples by using the IF clustering notion. Singh et al.67 introduced an IF quantifier to construct an IF rough set model and applied it to feature reduction. 
Jain et al.39 tried to minimize noise in the data by using the concept of IF granules and incorporated different types of IF relations to introduce both robust and non-robust feature selection. Recently published articles make it clear that the use of IF set theory for feature selection is still at an incipient stage. Uncertainty is commonly measured in terms of entropy, a notion that originated in the telecommunications domain68,69. Mutual information (MI)70 measures the relationship between a feature and the target. More generally, MI71 evaluates the dependence between features and has been repeatedly employed to solve a wide range of problems. Feature selection techniques can be made more effective by incorporating information entropy estimation for MI based attribute extraction72 alongside conventional feature selection approaches based on class separability. Broadly, MI measures the amount of information that can be deduced from one random variable/vector about another random variable/vector73,74.

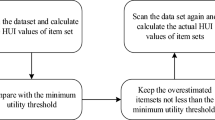

The max-relevance min-redundancy (mRMR) method75,76 is based on the concept of MI and has been used in a number of previous studies. It selects features that have maximal MI with the target while keeping the redundancy among the selected features minimal10,77. A number of MI based feature selection algorithms are in practice in various domains72,74. Fuzzy rough entropy was effectively used to overcome the limitation of rough entropy in handling real-valued feature data78,79, but fuzzy rough entropy decreases monotonically as the dimensionality of the data increases, which can promptly reflect the roughness of information systems. This issue was resolved to a certain extent by extending fuzzy rough information entropy with conditional entropy, joint entropy, and mutual information. However, none of these works has simultaneously handled noise, vagueness, and the uncertainty arising from both identification and judgement, which frequently appear in the current era of high-dimensional datasets owing to the advancement of internet based technologies. In the current study, a new IFRS based joint entropy, conditional entropy, and mutual information are proposed, built on a new IF hybrid relation and IF granular structure, to handle issues such as uncertainty, vagueness, and imprecision in large volumes of high-dimensional data that may degrade the performance of learning algorithms. Firstly, a novel hybrid IF similarity relation is presented. Secondly, joint and conditional entropies are established in the IF rough framework. Thirdly, IF rough mutual information is introduced. Then, lower and upper approximations are computed by using the presented hybrid IF similarity relation. Thereafter, the dependency function is computed by using the defined lower approximation. Next, the significance of a feature subset is computed by using IF rough mutual information. Further, a heuristic feature selection algorithm is discussed by using both the significance and the dependency function. 
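The mRMR idea mentioned above can be sketched as a greedy loop over discrete features. This is an assumed, simplified variant (the "difference" criterion: MI with the target minus the mean MI with the already selected features, estimated from value counts); the function names and toy data are hypothetical.

```python
from collections import Counter
from math import log2
from typing import Dict, Hashable, List, Sequence


def entropy(xs: Sequence[Hashable]) -> float:
    """Empirical Shannon entropy in bits."""
    n = len(xs)
    return -sum((c / n) * log2(c / n) for c in Counter(xs).values())


def mutual_info(xs: Sequence[Hashable], ys: Sequence[Hashable]) -> float:
    """I(X;Y) = H(X) + H(Y) - H(X, Y) for discrete sequences of equal length."""
    return entropy(xs) + entropy(ys) - entropy(list(zip(xs, ys)))


def mrmr_score(f: str, selected: List[str], features: Dict[str, Sequence], target: Sequence) -> float:
    """Relevance minus mean redundancy (difference form of mRMR)."""
    relevance = mutual_info(features[f], target)
    if not selected:
        return relevance
    redundancy = sum(mutual_info(features[f], features[s]) for s in selected) / len(selected)
    return relevance - redundancy


def mrmr(features: Dict[str, Sequence], target: Sequence, k: int) -> List[str]:
    """Greedy mRMR: repeatedly add the feature with the best relevance-minus-redundancy score."""
    selected: List[str] = []
    remaining = list(features)
    while remaining and len(selected) < k:
        best = max(remaining, key=lambda f: mrmr_score(f, selected, features, target))
        selected.append(best)
        remaining.remove(best)
    return selected


if __name__ == "__main__":
    t = [0, 0, 0, 0, 1, 1, 1, 1]
    f1 = [0, 0, 0, 1, 1, 1, 1, 1]
    feats = {"f1": f1, "f2": list(f1), "f3": [0, 1, 0, 1, 0, 1, 0, 1]}
    print(mrmr(feats, t, 2))  # ['f1', 'f3']: the duplicate f2 is skipped
```

The exact duplicate f2 is as relevant as f1 but fully redundant with it, so the redundancy penalty lets the weakly related f3 be chosen instead.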
IF rough mutual information is employed to measure this uncertainty and the correlation between features and class. Next, this algorithm is applied to benchmark datasets, and the reduct is computed. The effectiveness of the proposed algorithm is further explained by measuring the performance of seven widely used learning techniques on the reduced data produced by our method and by four existing approaches. Finally, the proposed method is applied to enhance the overall prediction that discriminates phospholipidosis80 positive (PL+) and phospholipidosis negative (PL-) molecules. Phospholipidosis is a condition in which there is an abnormal buildup of phospholipids in various tissues due to the usage of cationic amphiphilic pharmaceuticals. Phospholipidosis (PPL) is reversible, and phospholipid levels revert to normal once the cationic amphiphilic medications are stopped81. Computational prediction of possible inducing characteristics using structure-activity relationships (SAR) can enhance traditional high-throughput screening and drug development pipelines because of its speed and cost-effectiveness82. The main contributions of the study can be highlighted as follows:

Major contributions of the study

- This study establishes a new hybrid IF similarity relation that can deal with both nominal and numerical features.

- An IF granular structure is presented to handle the noise in mixed data.

- IF rough entropy, joint entropy, and conditional entropy are given to handle uncertainty through information entropy.

- Further, the idea of IF rough mutual information is discussed.

- Moreover, this IF rough mutual information is employed to evaluate both uncertainty and the correlation between conditional features and the decision class.

- Then, a feature selection approach is introduced by using this IF rough mutual information concept.

- Finally, a framework is designed based on our proposed methods to enhance the prediction of phospholipidosis positive molecules.

Theoretical background

In this section, a few essential notions about IF sets, IF relations, IF information systems, and mutual information are reviewed as follows:

Definition 2.1

IF set. An IF set X in \({\mathbb {U}}\) is a well-defined collection of objects of the form

$$\begin{aligned} X=\{\langle x,\mu _{X}(x),\nu _{X}(x)\rangle \mid x\in {\mathbb {U}}\} \end{aligned}$$

where \({\mathbb {U}}\) denotes the set of data points/samples/objects, \(\mu _{X}:{\mathbb {U}}\rightarrow [0,1]\), and \(\nu _{X}:{\mathbb {U}}\rightarrow [0,1]\), subject to the condition \( 0\le \mu _{X}(x) + \nu _{X}(x)\le 1, \forall x \in {\mathbb {U}}\). Here, \(\mu _{X}(x)\) and \(\nu _{X}(x)\) are the membership and non-membership grades of an element \( x \in {\mathbb {U}}\). Further, \(\pi _{X}(x)= 1 - \mu _{X}(x) -\nu _{X}(x) \) is the hesitancy grade of \( x \in {\mathbb {U}}\), and \(0\le \pi _{X}(x) \le 1\), \(\forall x \in {\mathbb {U}}\). The ordered pair \(\langle \mu _{X}(x),\nu _{X}(x)\rangle \) is called an IF value.

Definition 2.2

IF information system. An IF information system (IFIS) is a quadruple \(({\mathbb {U}}, C, V_{IF}, IF)\), where \(V_{IF}\) is the set of all IF values and \(IF:{\mathbb {U}}\times C\rightarrow V_{IF}\) is a mapping such that \(IF(x, a) = \langle \mu _{a}(x),\nu _{a}(x)\rangle \), \(\forall x \in {\mathbb {U}}\), \( \forall a\in C\).
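To make Definitions 2.1 and 2.2 concrete, the following sketch stores IF values together with their hesitancy degree and builds a small IFIS from real-valued columns. The fuzzification rule (min-max membership and a Sugeno-type non-membership ν = (1 - μ)/(1 + λμ) with λ ≥ 0) is a hypothetical choice that guarantees μ + ν ≤ 1; it is not the Tan et al. procedure used in the experiments, and all names are illustrative.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass(frozen=True)
class IFValue:
    """An IF value <mu, nu> with mu, nu >= 0 and mu + nu <= 1."""
    mu: float  # membership degree
    nu: float  # non-membership degree

    def __post_init__(self) -> None:
        if self.mu < 0 or self.nu < 0 or self.mu + self.nu > 1 + 1e-12:
            raise ValueError(f"not an IF value: mu={self.mu}, nu={self.nu}")

    @property
    def pi(self) -> float:
        """Hesitancy degree pi = 1 - mu - nu."""
        return 1.0 - self.mu - self.nu


def if_fuzzify(column: Sequence[float], lam: float = 1.0) -> List[IFValue]:
    """Hypothetical IF-fuzzification of one real-valued feature (not the Tan et al. method):
    mu = min-max normalised value, nu = (1 - mu) / (1 + lam * mu) with lam >= 0."""
    lo, hi = min(column), max(column)
    span = (hi - lo) or 1.0
    values = []
    for v in column:
        mu = (v - lo) / span
        values.append(IFValue(mu, (1.0 - mu) / (1.0 + lam * mu)))
    return values


def build_ifis(table: Dict[str, Sequence[float]], lam: float = 1.0) -> Dict[str, List[IFValue]]:
    """IFIS mapping IF(x, a): feature name a -> IF value of every object x."""
    return {a: if_fuzzify(col, lam) for a, col in table.items()}


if __name__ == "__main__":
    v = build_ifis({"a1": [0.0, 5.0, 10.0]})["a1"][1]
    print(v.mu, round(v.nu, 4), round(v.pi, 4))  # 0.5 0.3333 0.1667
```

Setting lam=0 gives ν = 1 - μ and π = 0, i.e. an ordinary fuzzy set; a larger lam leaves more hesitancy.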

Definition 2.3

IF relation. Let \(R(x_i,x_j)=(\mu _R(x_i,x_j),\nu _R(x_i,x_j))\) be an IF binary relation induced on the system. \(R\) is an IF similarity relation if it satisfies:

(1) Reflexivity: for every \(x_i \in {\mathbb {U}}\),

$$\begin{aligned} \mu _{R}(x_i,x_i)=1~~{\text {and}}~~\nu _{R}(x_i,x_i)=0 \end{aligned}\tag{2}$$

(2) Symmetry: for all \(x_i,x_j \in {\mathbb {U}}\),

$$\begin{aligned} \mu _{R}(x_i,x_j)=\mu _{R}(x_j,x_i)~~{\text {and}}~~\nu _{R}(x_i,x_j)=\nu _{R}(x_j,x_i) \end{aligned}\tag{3}$$
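The two conditions of Definition 2.3, together with the IF-value constraint μ + ν ≤ 1 on every entry, can be checked mechanically on the membership and non-membership matrices of a finite relation. The function name and the tolerance parameter below are illustrative assumptions.

```python
from typing import List

Matrix = List[List[float]]


def is_if_similarity(mu: Matrix, nu: Matrix, tol: float = 1e-12) -> bool:
    """Check Definition 2.3 on n x n membership (mu) and non-membership (nu) matrices:
    every entry is an IF value, the relation is reflexive, and it is symmetric."""
    n = len(mu)
    for i in range(n):
        if abs(mu[i][i] - 1.0) > tol or abs(nu[i][i]) > tol:
            return False  # reflexivity: mu(x_i, x_i) = 1 and nu(x_i, x_i) = 0
        for j in range(n):
            if mu[i][j] < -tol or nu[i][j] < -tol or mu[i][j] + nu[i][j] > 1.0 + tol:
                return False  # entry is not an IF value
            if abs(mu[i][j] - mu[j][i]) > tol or abs(nu[i][j] - nu[j][i]) > tol:
                return False  # symmetry fails
    return True


if __name__ == "__main__":
    mu = [[1.0, 0.6], [0.6, 1.0]]
    nu = [[0.0, 0.3], [0.3, 0.0]]
    print(is_if_similarity(mu, nu))  # True
```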

Definition 2.4

Mutual information. Mutual information (MI) can be expressed in terms of information entropy and conditional entropy as

$$\begin{aligned} I(P;D)=H(D)-H(D|P) \end{aligned}$$

where \(P \subseteq C\), and H(D) and H(D|P) denote the information entropy and the conditional entropy, respectively. MI evaluates the decrease in uncertainty about D produced by P and, symmetrically, the decrease in uncertainty about P produced by D. Hence, MI measures either the amount of information about D contained in P or the amount of information about P contained in D. In particular, H(P) is the amount of information contained in P about itself, which means I(P;P)=H(P).
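For crisp (discrete) data, Definition 2.4 can be computed from value frequencies. The sketch assumes Shannon entropy in bits with empirical probabilities and represents a feature subset P by one tuple of feature values per object; the function names are hypothetical.

```python
from collections import Counter, defaultdict
from math import log2
from typing import Hashable, Sequence


def entropy(d: Sequence[Hashable]) -> float:
    """Shannon entropy H(D) in bits, from empirical frequencies."""
    n = len(d)
    return -sum((c / n) * log2(c / n) for c in Counter(d).values())


def conditional_entropy(d: Sequence[Hashable], p: Sequence[Hashable]) -> float:
    """H(D|P) = sum over values v of P of Pr(P = v) * H(D | P = v)."""
    groups = defaultdict(list)
    for pv, dv in zip(p, d):
        groups[pv].append(dv)
    n = len(d)
    return sum(len(g) / n * entropy(g) for g in groups.values())


def mutual_information(p: Sequence[Hashable], d: Sequence[Hashable]) -> float:
    """I(P;D) = H(D) - H(D|P)."""
    return entropy(d) - conditional_entropy(d, p)


if __name__ == "__main__":
    # A feature subset P is represented by one tuple of feature values per object.
    p = [(0, "x"), (0, "y"), (1, "x"), (1, "y")]
    d = ["no", "no", "yes", "yes"]
    print(mutual_information(p, d), mutual_information(p, p) == entropy(p))  # 1.0 True
```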

Definition 2.5

Significance of a conditional feature. For a given IFIS and \(B \subseteq C \), the significance of an arbitrary conditional feature \(b\in (C-B)\) is given by the following equation:

If \(B=\phi \), then \( SGF(b,B,D) =H(D)-H(D|\{b\})=I(\{b\};D)\), which is the MI between the conditional feature b and the decision feature D. A larger value of SGF(b, B, D) indicates that, given the feature subset B, feature b is more important for the decision feature D.
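The equation of Definition 2.5 is assumed here to take the form commonly used in entropy based reduction, SGF(b, B, D) = H(D|B) - H(D|B ∪ {b}), which reduces to the B = ∅ case stated above because H(D|∅) = H(D). Under that assumption, a greedy forward selection on discrete data can be sketched as follows; the stopping tolerance eps and all names are illustrative.

```python
from collections import Counter, defaultdict
from math import log2
from typing import Dict, Hashable, List, Sequence, Tuple


def entropy(d: Sequence[Hashable]) -> float:
    n = len(d)
    return -sum((c / n) * log2(c / n) for c in Counter(d).values())


def cond_entropy(d: Sequence[Hashable], rows: Sequence[Tuple]) -> float:
    """H(D|B), where rows[i] is the tuple of values of the features in B for object i."""
    groups = defaultdict(list)
    for key, dv in zip(rows, d):
        groups[key].append(dv)
    return sum(len(g) / len(d) * entropy(g) for g in groups.values())


def project(table: Dict[str, Sequence[Hashable]], feats: List[str], n: int) -> List[Tuple]:
    """Row tuples restricted to feats; an empty feats gives one block (H(D|{}) = H(D))."""
    return [tuple(table[f][i] for f in feats) for i in range(n)]


def sgf(b: str, B: List[str], table: Dict[str, Sequence[Hashable]], d: Sequence[Hashable]) -> float:
    """Assumed form SGF(b, B, D) = H(D|B) - H(D|B + {b})."""
    n = len(d)
    return cond_entropy(d, project(table, B, n)) - cond_entropy(d, project(table, B + [b], n))


def forward_select(table: Dict[str, Sequence[Hashable]], d: Sequence[Hashable],
                   eps: float = 1e-12) -> List[str]:
    """Greedy forward selection: add the most significant feature until none adds information."""
    selected: List[str] = []
    remaining = list(table)
    while remaining:
        scores = {b: sgf(b, selected, table, d) for b in remaining}
        best = max(scores, key=scores.get)
        if scores[best] <= eps:
            break
        selected.append(best)
        remaining.remove(best)
    return selected


if __name__ == "__main__":
    table = {"a": [0, 0, 1, 1], "b": [0, 1, 0, 1], "c": [1, 1, 1, 1]}
    print(forward_select(table, [0, 0, 0, 1]))  # ['a', 'b']
```

For the AND-type decision d = [0, 0, 0, 1], neither a nor b alone determines d, so the loop adds a first and then b, while the constant feature c never adds information.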

Proposed work

In this section, we present a hybrid IF similarity relation, an IF granular structure, and IF rough MI. Based on these concepts, a feature selection procedure is introduced to discard irrelevant and redundant features in high-dimensional information systems.

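IF Relation: For all \( a \in C\) and \( x_i,x_j \in {\mathbb {U}}\), the hybrid similarity \(R_{a}^h\left( x_i,x_j\right) \) between \(x_i\) and \(x_j\) with respect to a is defined by:

$$\begin{aligned} R_{a}^h\left( x_i, x_j\right) ={\left\{ \begin{array}{ll} 1, & a(x_i) = a(x_j) \text { and } a \text { is nominal;} \\ 0, & a(x_i) \ne a(x_j) \text { and } a \text { is nominal;} \\ 1 -\frac{1}{n^2} \displaystyle \sum _{i=1}^{n} \sum _{j=1}^{n}|\mu _{a}(x_{i}) - \mu _{a}(x_{j})|\,|\nu _{a}(x_{i}) - \nu _{a}(x_{j})|, & a \text { is numerical, } |\mu _{a}(x_{i}) - \mu _{a}(x_{j})| \le \zeta _a \text { and } |\nu _{a}(x_{i}) - \nu _{a}(x_{j})|>\zeta _a;\\ 0, & a \text { is numerical, } |\mu _{a}(x_{i}) - \mu _{a}(x_{j})| > \zeta _a \text { and } |\nu _{a}(x_{i}) - \nu _{a}(x_{j})| \le \zeta _a; \end{array}\right. } \end{aligned}$$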

where \(\zeta _a=1-R_{a}^h(x_i, x_j)\) is an adaptive IF radius. The IF relation and the IF relation matrix induced by \(a \in C\) are \(R_{a}^h\) and \(M_{R_{a}^h} = \left( r_{ij}\right) _{n \times n}\), respectively, where \(r_{ij} = R_{a}^h\left( x_i, x_j\right) \).

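If \(C_1 = \{a_1,a_2,\dots ,a_{|C_1|} \}\subseteq C\), then

$$\begin{aligned} R_{C_1}^h\left( x_i,x_j\right) =\bigwedge \limits _{l=1}^{|C_1|}R_{a_l}^h\left( x_i,x_j\right) \end{aligned}$$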

Proof

(1) Reflexivity: if \(x_i=x_j\), only the first and third cases of the relation can apply; the other two cases are excluded by default.

Case 1. If a is nominal and \(a(x_i) = a(x_j)\), then \(R_{a}^h(x_i,x_j)=R_{a}^h(x_i,x_i)=1\).

Case 2. If a is numerical, \( |\mu _{a}(x_{i}) - \mu _{a}(x_{j})| \le \zeta _a \), and \( |\nu _{a}(x_{i}) - \nu _{a}(x_{j})| >\zeta _a \), then \(R^{h}_a\left( x_i,x_j\right) =1 -\frac{1}{n^2} \displaystyle \sum _{j=1}^{n} \sum _{i=1}^{n}|\mu _{a}(x_{j}) - \mu _{a}(x_{i})|\,|\nu _{a}(x_{j}) - \nu _{a}(x_{i})|\).

Now, if we put \(x_i=x_j\), we get

\(R^{h}_a(x_i,x_i)=1 -\frac{1}{n^2} \displaystyle \sum _{i=1}^{n}|\mu _{a}(x_{i}) - \mu _{a}(x_{i})|\,|\nu _{a}(x_{i}) - \nu _{a}(x_{i})|\)

Every term of the sum vanishes, so \(R^{h}_a(x_i,x_i)=1\); therefore, \(R^{h}_a\) is reflexive.

(2) Symmetry:

$$\begin{aligned} R_{a}^h\left( x_i, x_j\right) ={\left\{ \begin{array}{ll} 1, & a(x_i) = a(x_j) \text { and } a \text { is nominal;} \\ 0, & a(x_i) \ne a(x_j) \text { and } a \text { is nominal;} \\ 1 -\frac{1}{n^2} \displaystyle \sum _{i=1}^{n} \sum _{j=1}^{n}|\mu _{a}(x_{i}) - \mu _{a}(x_{j})|\,|\nu _{a}(x_{i}) - \nu _{a}(x_{j})|, & a \text { is numerical, } |\mu _{a}(x_{i}) - \mu _{a}(x_{j})| \le \zeta _a \text { and } |\nu _{a}(x_{i}) - \nu _{a}(x_{j})|>\zeta _a;\\ 0, & a \text { is numerical, } |\mu _{a}(x_{i}) - \mu _{a}(x_{j})| > \zeta _a \text { and } |\nu _{a}(x_{i}) - \nu _{a}(x_{j})| \le \zeta _a; \end{array}\right. } \end{aligned}\tag{8}$$

$$\begin{aligned} R_{a}^h\left( x_j, x_i\right) ={\left\{ \begin{array}{ll} 1, & a(x_j) = a(x_i) \text { and } a \text { is nominal;} \\ 0, & a(x_j) \ne a(x_i) \text { and } a \text { is nominal;} \\ 1 -\frac{1}{n^2} \displaystyle \sum _{j=1}^{n} \sum _{i=1}^{n}|\mu _{a}(x_{j}) - \mu _{a}(x_{i})|\,|\nu _{a}(x_{j}) - \nu _{a}(x_{i})|, & a \text { is numerical, } |\mu _{a}(x_{j}) - \mu _{a}(x_{i})| \le \zeta _a \text { and } |\nu _{a}(x_{j}) - \nu _{a}(x_{i})|>\zeta _a;\\ 0, & a \text { is numerical, } |\mu _{a}(x_{j}) - \mu _{a}(x_{i})| > \zeta _a \text { and } |\nu _{a}(x_{j}) - \nu _{a}(x_{i})| \le \zeta _a; \end{array}\right. } \end{aligned}\tag{9}$$

Since \(|u-v|=|v-u|\) for every term, the right-hand sides of (8) and (9) coincide, so

$$\begin{aligned} R_{a}^h\left( x_i, x_j\right) =R_{a}^h\left( x_j, x_i\right) \end{aligned}$$

Hence, \(R_{a}^h\) is symmetric.

Since \(R_{a}^h\) is both reflexive and symmetric, we conclude that \(R_{a}^h\) is an IF similarity relation. \(\square \)
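A simplified computational reading of the hybrid relation, together with the minimum-based aggregation over a feature subset used in the proof of Proposition 3.6, is sketched below. It deliberately departs from Eq. (8): the numerical case uses 1 - max(|Δμ|, |Δν|) inside a fixed radius ζ instead of the adaptive radius and the double sum, so it should be read only as an illustration of a reflexive, symmetric hybrid similarity on mixed data, not as the relation defined above. Nominal values are plain strings and numerical values are (μ, ν) pairs; all names and data are hypothetical.

```python
from typing import List, Sequence, Tuple, Union

IFPair = Tuple[float, float]  # (mu, nu) of a numerical attribute value
Value = Union[str, IFPair]    # nominal values are plain strings
Matrix = List[List[float]]


def pair_similarity(u: Value, v: Value, zeta: float) -> float:
    """Simplified hybrid similarity (an assumption, not Eq. (8)):
    nominal -> 1 if equal else 0; numerical -> 1 - max(|d_mu|, |d_nu|) within radius zeta, else 0."""
    if isinstance(u, str) or isinstance(v, str):
        return 1.0 if u == v else 0.0
    d = max(abs(u[0] - v[0]), abs(u[1] - v[1]))
    return 1.0 - d if d <= zeta else 0.0


def relation_matrix(column: Sequence[Value], zeta: float) -> Matrix:
    """M_{R_a} = (r_ij)_{n x n} with r_ij = R_a(x_i, x_j) for a single attribute a."""
    return [[pair_similarity(u, v, zeta) for v in column] for u in column]


def subset_relation(columns: Sequence[Sequence[Value]], zeta: float) -> Matrix:
    """R_{C1}(x_i, x_j) = min over attributes a in C1 of R_a(x_i, x_j)."""
    mats = [relation_matrix(col, zeta) for col in columns]
    n = len(columns[0])
    return [[min(m[i][j] for m in mats) for j in range(n)] for i in range(n)]


if __name__ == "__main__":
    colour = ["red", "red", "blue"]               # nominal attribute
    dose = [(0.1, 0.8), (0.2, 0.7), (0.9, 0.05)]  # numerical attribute as IF values
    R = subset_relation([colour, dose], zeta=0.3)
    print([[round(r, 2) for r in row] for row in R])
    # [[1.0, 0.9, 0.0], [0.9, 1.0, 0.0], [0.0, 0.0, 1.0]]
```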

Granular structure

The IF granule of each \(x_i\in {\mathbb {U}}\) induced by \(C_1\) is defined as follows, where \(a \in P \subseteq C\) and \(\epsilon \in [0,1]\):

Using the IF granular structure, rough entropy can be extended to the IF rough framework, and the IF rough entropy of a feature subset can be described as follows.

Definition 3.1

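The IF rough entropy of \(C_1\) is given as:

$$\begin{aligned} ET(C_1)=-\frac{1}{n}\sum _{i=1}^{n}\log _2\frac{1}{\left| [x_i]_{R_{C_1}^h}\right| } \end{aligned}$$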

Clearly, \(0 \le ET(C_1)\le \log _2n \). If \(R_{C_1}^h(x_i,x_j)=1\) for all \(x_i,x_j \in {\mathbb {U}}\), then \(\left| [x_i]_{R_{C_1}^h}\right| =n\), so \(ET(C_1)=\log _2 n\). In this case, all sample pairs are indistinguishable, and the granulation space is the largest. Conversely, if \(R_{C_1}^h(x_i,x_j)= 0\) for all \(x_i\ne x_j\), then \(\left| [x_i]_{R_{C_1}^h}\right| =1\), so \(ET(C_1)=\log _2 1 = 0\). In this case, the granulation space is the smallest.
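Definition 3.1 can be evaluated directly from an n × n relation matrix. The sketch assumes that the granule cardinality |[x_i]| is the sigma-count Σ_j R(x_i, x_j), which this section does not state explicitly, and that adding features corresponds to an element-wise minimum of relation matrices (as in the proof of Proposition 3.6). Function names are illustrative.

```python
from math import log2
from typing import List

Matrix = List[List[float]]


def granule_size(R: Matrix, i: int) -> float:
    """|[x_i]|, assumed to be the sigma-count sum_j R(x_i, x_j) of the fuzzy granule of x_i."""
    return sum(R[i])


def if_rough_entropy(R: Matrix) -> float:
    """ET = -(1/n) sum_i log2(1 / |[x_i]|), computed as (1/n) sum_i log2 |[x_i]|."""
    n = len(R)
    return sum(log2(granule_size(R, i)) for i in range(n)) / n


def meet(R1: Matrix, R2: Matrix) -> Matrix:
    """Relation of the union of two feature subsets: element-wise minimum."""
    return [[min(a, b) for a, b in zip(r1, r2)] for r1, r2 in zip(R1, R2)]


if __name__ == "__main__":
    n = 4
    ones = [[1.0] * n for _ in range(n)]
    identity = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    print(if_rough_entropy(ones), if_rough_entropy(identity))  # 2.0 0.0
```

For four objects, an all-ones relation gives ET = log2 4 = 2 and the identity relation gives ET = 0, matching the two extreme cases above; since meet never increases an entry, it also illustrates Proposition 3.8.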

Definition 3.2

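The IF joint rough entropy of \(C_1\) and \(C_2\) is expressed by:

$$\begin{aligned} ET(C_1,C_2)=-\frac{1}{n}\sum _{i=1}^{n}\log _2\frac{1}{\left| [x_i]_{R_{C_1}^h}\cap [x_i]_{R_{C_2}^h}\right| } \end{aligned}$$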

Definition 3.3

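The IF rough conditional entropy of \(C_2\) relative to \(C_1\) is given by the following equation:

$$\begin{aligned} ET(C_2|C_1)=-\frac{1}{n}\sum _{i=1}^{n}\log _2\frac{\left| [x_i]_{R_{C_1}^h}\right| }{\left| [x_i]_{R_{C_1}^h}\cap [x_i]_{R_{C_2}^h}\right| } \end{aligned}$$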

Definition 3.4

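The IF rough mutual information of \(C_2\) and \(C_1\) is computed as follows:

$$\begin{aligned} I(C_1;C_2)=-\frac{1}{n}\sum _{i=1}^{n}\log _2\frac{\left| [x_i]_{R_{C_1}^h}\cap [x_i]_{R_{C_2}^h}\right| }{\left| [x_i]_{R_{C_1}^h}\right| \left| [x_i]_{R_{C_2}^h}\right| } \end{aligned}$$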

Definition 3.5

The IF rough mutual information between D and \(C_1\) can be illustrated by the equation:

In this equation, the IF rough mutual information \(I(D;C_1)\) is regarded as the correlation between \(C_1\) and the decision feature D. The higher the IF rough mutual information between D and \(C_1\), the more strongly \(C_1\) and D are correlated.
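Definitions 3.2 to 3.4 can be computed in the same way. The sketch below assumes sigma-count cardinalities and an element-wise minimum for the intersection of granules (neither is stated explicitly in this section), so that Propositions 3.11 to 3.14 can be checked numerically on small relation matrices; the names are illustrative.

```python
from math import log2
from typing import List

Matrix = List[List[float]]


def sizes(R: Matrix) -> List[float]:
    """Sigma-count |[x_i]| = sum_j R(x_i, x_j) of every granule (an assumption)."""
    return [sum(row) for row in R]


def meet(R1: Matrix, R2: Matrix) -> Matrix:
    """Granule intersection [x_i]_1 cap [x_i]_2, taken element-wise with min (an assumption)."""
    return [[min(a, b) for a, b in zip(r1, r2)] for r1, r2 in zip(R1, R2)]


def et(R: Matrix) -> float:
    """Definition 3.1: ET = -(1/n) sum_i log2(1 / |[x_i]|) = (1/n) sum_i log2 |[x_i]|."""
    return sum(log2(s) for s in sizes(R)) / len(R)


def et_joint(R1: Matrix, R2: Matrix) -> float:
    """Definition 3.2: ET(C1, C2), using |[x_i]_1 cap [x_i]_2|."""
    return et(meet(R1, R2))


def et_cond(R2: Matrix, R1: Matrix) -> float:
    """Definition 3.3: ET(C2 | C1) = -(1/n) sum_i log2(|[x_i]_1| / |[x_i]_1 cap [x_i]_2|)."""
    s1, s12 = sizes(R1), sizes(meet(R1, R2))
    return -sum(log2(a / b) for a, b in zip(s1, s12)) / len(R1)


def mi(R1: Matrix, R2: Matrix) -> float:
    """Definition 3.4: I(C1; C2) = -(1/n) sum_i log2(|cap| / (|[x_i]_1| |[x_i]_2|))."""
    s1, s2, s12 = sizes(R1), sizes(R2), sizes(meet(R1, R2))
    return -sum(log2(c / (a * b)) for a, b, c in zip(s1, s2, s12)) / len(R1)


if __name__ == "__main__":
    R1 = [[1.0, 0.7, 0.2], [0.7, 1.0, 0.4], [0.2, 0.4, 1.0]]
    R2 = [[1.0, 0.3, 0.9], [0.3, 1.0, 0.2], [0.9, 0.2, 1.0]]
    print(et_cond(R2, R1), et_joint(R1, R2) - et(R1))  # equal up to rounding (Prop. 3.11)
```

For example, et_cond(R2, R1) agrees with et_joint(R1, R2) - et(R1) up to floating-point rounding, as Proposition 3.11 states.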

Proposition 3.6

If \(C_1\subseteq C_2 \subseteq C\), then \(R_{C_1}^h \supseteq R_{C_2}^h\)

Proof

By the definition of the relation induced by a feature subset, \(R_{C_1}^h(x_i,x_j)=\bigwedge _{a\in C_1}R_{a}^h (x_i,x_j)\) and \(R_{C_2}^h(x_i,x_j)=\bigwedge _{a\in C_2}R_{a}^h (x_i,x_j)\). Since \(C_1\subseteq C_2\), the minimum defining \(R_{C_2}^h\) is taken over a superset of the features used for \(R_{C_1}^h\), so \(R_{C_2}^h(x_i,x_j)\le R_{C_1}^h (x_i,x_j)\) for all \(x_i,x_j \in {\mathbb {U}}\).

Because \(R_{C_1}^h \supseteq R_{C_2}^h \Longleftrightarrow R_{C_1}^h (x_i,x_j)\ge R_{C_2}^h(x_i,x_j)\) for all \(x_i,x_j \in {\mathbb {U}}\), we obtain \(R_{C_1}^h \supseteq R_{C_2}^h\). \(\square \)

Proposition 3.7

If \(R_{C_1}^h \subseteq R_{C_2}^h \), then \(ET\left( R_{C_1}^h \right) \le ET\left( R_{C_2}^h\right) \).

Proof

If \(R_{C_1}^h \subseteq R_{C_2}^h \), then \(R_{C_1}^h(x_i,x _j) \le R_{C_2}^h(x_i,x_j)\) for all \(x_i,x_j\in {\mathbb {U}} \), which implies \(\left| [x_i]_{R_{C_1}^h} \right| \le \left| [x_i]_{R_{C_2}^h} \right| \).

Therefore, by Definition 3.1, \(ET\left( R_{C_1}^h \right) \le ET\left( R_{C_2}^h\right) \). \(\square \)

Proposition 3.8

If \(C_1\subseteq C_2 \subseteq C\), then \( ET(C_1) \ge ET(C_2)\).

Proof

For any \( C_1 \subseteq C_2 \), Proposition 3.6 gives \(R_{C_1}^h \supseteq R_{C_2}^h \). Hence, by Proposition 3.7,

\(ET(C_1) \ge ET(C_2)\)

Proposition 3.8 shows that the IF rough entropy decreases as the feature subset grows and increases as the feature subset shrinks. Hence, the IF rough entropy can evaluate the uncertainty of the IF approximation space. \(\square \)

Proposition 3.9

Suppose \(C_1,C_2\subseteq C \), then \(ET(C_1,C_2)\le \text {min} [ET(C_1),ET(C_2)]\)

Proof

Since, for every \(x_i \in {\mathbb {U}}\), \([x_i]_{R_{C_1}^h} \cap [x_i]_{R_{C_2}^h} \subseteq [x_i]_{R_{C_1}^h}\) and \( [x_i]_{R_{C_1}^h} \cap [x_i]_{R_{C_2}^h} \subseteq [x_i]_{R_{C_2}^h}\), we have \(\left| [x_i]_{R_{C_1}^h} \cap [x_i]_{R_{C_2}^h} \right| \le \left| [x_i]_{R_{C_1}^h} \right| \) and \(\left| [x_i]_{R_{C_1}^h} \cap [x_i]_{R_{C_2}^h}\right| \le \left| [x_i]_{R_{C_2}^h}\right| \). Since \(\log _2\) is increasing, Definitions 3.1 and 3.2 give \(ET(C_1,C_2) \le ET(C_1)\) and \(ET(C_1,C_2)\le ET(C_2)\), that is, \(ET(C_1,C_2) \le \text {min} [ET(C_1),ET(C_2)]\). \(\square \)

Proposition 3.10

If \(C_1\subseteq C_2 \subseteq C\), then \( ET(C_1,C_2) = ET(C_2)\)

Proof

Since \( C_1 \subseteq C_2 \), Proposition 3.6 gives

\( R_{C_1}^h\supseteq R_{C_2}^h \Rightarrow [x]_{R_{C_1}^h}\supseteq [x]_{R_{C_2}^h} \Rightarrow [x]_{R_{C_1}^h} \cap [x]_{R_{C_2}^h}=[x]_{R_{C_2}^h} \) So, \(ET(C_1,C_2)=ET(C_2) \) \(\square \)

According to Proposition 3.10, when one of two feature subsets contains the other, the IF joint rough entropy of the two subsets equals the IF rough entropy of the subset that induces the finer (smaller) IF granules.

Proposition 3.11

\( ET(C_2 |C_1)= ET(C_2,C_1)- ET(C_1)\).

Proof

Based on Definitions 3.1, 3.2, and 3.3, we have

\(ET(C_1)+ ET(C_2 |C_1)= -\frac{1}{n} \sum _{i=1}^n \log _2 \frac{1}{|[x_i]_{R_{C_1}^h}|}-\frac{1}{n} \sum _{i=1}^n \log _2 \frac{|[x_i]_{R_{C_1}^h}|}{|[x_i]_{R_{C_1}^h} \cap [x_i]_{R_{C_2}^h}|} \)

\(\Rightarrow ET(C_1)+ ET(C_2|C_1)=-\frac{1}{n} \sum _{i=1}^n\left[ \log _2 \frac{1}{|[x_i]_{R_{C_1}^h}|} + \log _2 \frac{|[x_i]_{R_{C_1}^h}|}{|[x_i]_{R_{C_1}^h} \cap [x_i]_{R_{C_2}^h}|}\right] \)

\(\Rightarrow ET(C_1)+ET(C_2 |C_1)=- \frac{1}{n} \sum _{i=1}^n \log _2\frac{|[x_i]_{R_{C_1}^h}|}{|[x_i]_{R_{C_1}^h}|\,|[x_i]_{R_{C_1}^h} \cap [x_i]_{R_{C_2}^h}|} \)

\(\Rightarrow ET(C_1)+ ET(C_2 |C_1)=- \frac{1}{n} \sum _{i=1}^n \log _2 \frac{1}{|[x_i]_{R_{C_1}^h} \cap [x_i]_{R_{C_2}^h}|} = ET(C_1,C_2) \)

\( \Rightarrow ET(C_2|C_1) = ET(C_1,C_2) - ET(C_1)\) \(\square \)

Proposition 3.12

If \(C_2\subseteq C_1 \subseteq C\), then \( ET(C_2|C_1)=0 \)

Proof

Since \( C_2 \subseteq C_1 \), Proposition 3.6 gives \( R_{C_1}^h \subseteq R_{C_2}^h \). Therefore, \( [x_i]_{R_{C_1}^h} \subseteq [x_i]_{R_{C_2}^h} \), and hence \( [x_i]_{R_{C_1}^h} \cap [x_i]_{R_{C_2}^h} = [x_i]_{R_{C_1}^h} \) for every \(x_i\). By Definition 3.3, \( ET(C_2|C_1) =-\frac{1}{n} \sum _{i=1}^n \log _2 \frac{\left| [x_i]_{R_{C_1}^h}\right| }{\left| [x_i]_{R_{C_1}^h} \cap [x_i]_{R_{C_2}^h}\right| } =-\frac{1}{n} \sum _{i=1}^n \log _2 \frac{\left| [x_i]_{R_{C_1}^h}\right| }{\left| [x_i]_{R_{C_1}^h}\right| } = -\frac{1}{n} \sum _{i=1}^n \log _2 1 =0 \) \(\square \)

IF rough mutual information can be used not only to measure the uncertainty of the IF approximation space but also to evaluate the correlation between conditional features and the decision class.

Proposition 3.13

\(I(C_1; C_2)= ET(C_2)-ET(C_2|C_1)\) \( = ET(C_1)-ET(C_1|C_2)\)

Proof

Based on Definitions 3.1, 3.3, and 3.4, we have

\( ET(C_2)-ET(C_2|C_1) =-\frac{1}{n} \sum _{i=1}^n \log _2 \frac{1}{\left| [x_i]_{R_{C_2}^h}\right| } + \frac{1}{n} \sum _{i=1}^n \log _2 \frac{\left| [x_i]_{R_{C_1}^h}\right| }{\left| [x_i]_{R_{C_1}^h} \cap [x_i]_{R_{C_2}^h} \right| } \)

\( \Rightarrow ET(C_2)-ET(C_2|C_1) =- \frac{1}{n} \sum _{i=1}^n \left[ \log _2 \frac{1}{\left| [x_i]_{R_{C_2}^h}\right| } - \log _2 \frac{\left| [x_i]_{R_{C_1}^h}\right| }{\left| [x_i]_{R_{C_1}^h} \cap [x_i]_{R_{C_2}^h} \right| } \right] \)

\( \Rightarrow ET(C_2)-ET(C_2|C_1) = - \frac{1}{n} \sum _{i=1}^n \log _2 \frac{\left| [x_i]_{R_{C_1}^h} \cap [x_i]_{R_{C_2}^h} \right| }{\left| [x_i]_{R_{C_1}^h} \right| \left| [x_i]_{R_{C_2}^h} \right| } = I(C_1; C_2)\)

Similarly, we get \(I(C_1; C_2)= ET(C_1)- ET(C_1 |C_2)\). \(\square \)

Proposition 3.14

\( I(C_1; C_2)= I(C_2;C_1)= ET(C_1) +ET( C_2)-ET(C_1,C_2)\)

Proof

The equality \(I(C_1; C_2) = I(C_2; C_1)\) follows directly from Definition 3.4, whose expression is symmetric in \(C_1\) and \(C_2\). Moreover, by Definitions 3.1 and 3.2,

\(ET(C_1) +ET( C_2)-ET(C_1,C_2)=- \frac{1}{n} \sum _{i=1}^n \log _2 \frac{1}{\left| [x_i]_{R_{C_1}^h}\right| } -\frac{1}{n} \sum _{i=1}^n \log _2 \frac{1}{\left| [x_i]_{R_{C_2}^h}\right| } + \frac{1}{n} \sum _{i=1}^n \log _2 \frac{1}{\left| [x_i]_{R_{C_1}^h} \cap [x_i]_{R_{C_2}^h} \right| } \)

\(\Rightarrow ET(C_1) +ET( C_2)-ET(C_1,C_2)= -\frac{1}{n} \sum _{i=1}^n \left[ \log _2 \frac{1}{\left| [x_i]_{R_{C_1}^h} \right| } + \log _2 \frac{1}{\left| [x_i]_{R_{C_2}^h} \right| } -\log _2 \frac{1}{\left| [x_i]_{R_{C_1}^h} \cap [x_i]_{R_{C_2}^h} \right| } \right] \)

\( \Rightarrow ET(C_1) +ET( C_2)-ET(C_1,C_2)=-\frac{1}{n} \sum _{i=1}^n \log _2 \frac{\left| [x_i]_{R_{C_1}^h} \cap [x_i]_{R_{C_2}^h} \right| }{\left| [x_i]_{R_{C_1}^h}\right| \left| [x_i]_{R_{C_2}^h} \right| } =I(C_1; C_2)\) \(\square \)

Definition 3.15

For a given IFIS, let P be a subset of the conditional features C. Then, the significance of any \(Y\in (C-P)\), denoted by \(\Omega (Y,P,D)\), is given by:

If \(P=\phi \), then \(\Omega (Y,P,D)\) reduces to \(\Omega (Y,D) =ET(D)- ET(D|\{Y\})=I(\{Y\};D)\), which is the MI between the IF conditional feature Y and the decision feature D. The larger the value of \(\Omega (Y,P,D)\), the more relevant the IF conditional feature Y is to the decision feature D.

Experimentation

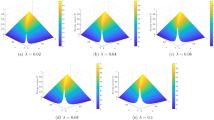

In this section, the performance of the proposed method is evaluated and compared with existing fuzzy and IF set assisted techniques. All pre-processing steps are implemented in Matlab 202383, and the learning algorithms are run in WEKA84. Firstly, fuzzification and intuitionistic fuzzification of the real-valued data are performed using the methods proposed by Jensen et al.6 and Tan et al.57, respectively. Secondly, reduced datasets are obtained with the previously presented approaches. Thirdly, different threshold parameter values are tuned for our method to produce the reduct, and the reduced datasets are generated by discarding as much noise as possible. The reduct is computed by varying \(\xi \) from 0.1 to 0.8 in small increments, and the value of \(\xi \) that gives the best performance in the experiment is selected as the final one. The following setup is used to conduct the comprehensive experiments:

Dataset

Ten benchmark datasets are taken from the widely used University of California, Irvine (UCI) Machine Learning Repository85 to conduct the experiments. The details of these datasets are outlined in Table 1. Their dimensions and sizes show that they range from small to large, as the number of data points ranges from 62 to 4521 and the number of features ranges from 9 to 10000.

Classifiers

Seven different learning methods86 are applied to evaluate performance on the reduced datasets obtained from the different feature selection techniques. RealAdaBoost with random forest as the base classifier (RARF) and IBK are used to evaluate overall classification accuracies, with standard deviations, under different validation techniques for the ten reduced benchmark datasets. Moreover, naive Bayes, SMO, IBK, RARF, PART, JRip, J48, and random forest (RF) are applied to the reduced Nath et al.87 dataset and assessed with various evaluation metrics, to determine how effectively the proposed technique discriminates PL+ and PL- molecules compared with the existing method.

Dataset split: The feature selection process is carried out on the complete information system. After the reduced datasets are produced, each learning algorithm is evaluated with a 66:34 percentage split and with kd-fold cross validation. In the percentage split, the dataset is randomly divided into two parts: 66% of the data are used for training and the remaining 34% for testing. In kd-fold cross validation, the whole dataset is randomly partitioned into kd subsets; in each round, kd-1 subsets form the training set and the remaining subset is used for testing. After kd such rounds, in which each subset serves once as the test set, the average value of each evaluation metric is taken as the final performance. In the current study, kd = 10.
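The two validation protocols can be sketched with simple index generators. This is a minimal, unstratified sketch with hypothetical function names; WEKA's own cross-validation stratifies folds by class by default, which this code does not do. `fit_and_score(train_idx, test_idx)` is a hypothetical callable that trains a learner on the training indices and returns one metric on the test indices.

```python
import random


def percentage_split(n, train_frac=0.66, seed=0):
    """Shuffle indices 0..n-1 and cut them into train/test parts (66:34 by default)."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    cut = int(round(train_frac * n))
    return idx[:cut], idx[cut:]


def kfold_indices(n, k=10, seed=0):
    """Yield (train, test) index lists; each of the k folds is the test set once."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f in folds[:i] + folds[i + 1:] for j in f]
        yield train, folds[i]


def cross_validate(n, fit_and_score, k=10, seed=0):
    """Average a metric over the k folds, as described in the text."""
    scores = [fit_and_score(tr, te) for tr, te in kfold_indices(n, k, seed)]
    return sum(scores) / len(scores)


# Example with the 185 molecules of the case study: a 66:34 split gives 122/63.
train, test = percentage_split(185)
print(len(train), len(test))
```

Using a seeded `random.Random` keeps the partition reproducible across runs without touching the global random state.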

Performance evaluation metrics

The prediction performance of the seven learning algorithms from different categories is evaluated using widely used threshold-dependent and threshold-independent assessment parameters. These parameters are computed from the numbers of true positives (TRP), true negatives (TRN), false positives (FLP), and false negatives (FLN). TRP is the number of correctly predicted positive data points, and TRN is the number of correctly predicted negative data points. FLN is the number of positive samples incorrectly predicted as negative, while FLP is the number of negative samples incorrectly predicted as positive. We use Sensitivity (Sn), Specificity (Sp), Accuracy (Ac), AUC, and MCC to measure the overall performance of the individual learning algorithms. These evaluation parameters are defined as follows:

Sn: the percentage of positive (PL+) samples that are correctly classified, given by:

\(Sn=\frac{TRP}{TRP+FLN}\times 100\)

Sp: the percentage of negative (PL-) samples that are correctly classified, given by:

\(Sp=\frac{TRN}{TRN+FLP}\times 100\)

Ac: the percentage of all PL+ and PL- samples that are correctly classified, given by:

\(Ac=\frac{TRP+TRN}{TRP+TRN+FLP+FLN}\times 100\)

AUC: the area under the receiver operating characteristic (ROC) curve; the closer its value is to 1, the better the predictor.

MCC: the Matthews correlation coefficient, a widely used and informative measure, computed as:

\(MCC=\frac{TRP\times TRN-FLP\times FLN}{\sqrt{(TRP+FLP)(TRP+FLN)(TRN+FLP)(TRN+FLN)}}\)

MCC summarizes the quality of a binary classification using all four confusion-matrix counts, which makes it informative even when the classes are imbalanced. It ranges from -1 to 1, and values closer to 1 indicate a better predictor.
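The metric definitions above translate directly into code. A minimal sketch, assuming labels are encoded as 1 for PL+ and 0 for PL-; the function names are hypothetical. The AUC function uses the rank-based (Mann-Whitney) formulation, which equals the area under the empirical ROC curve, with tied scores counted as one half. Degenerate cases (for example, no positive samples) are not guarded, except that an undefined MCC is returned as 0.

```python
from math import sqrt


def confusion_counts(y_true, y_pred):
    """Return (TRP, TRN, FLP, FLN) for 0/1 labels, 1 = PL+."""
    pairs = list(zip(y_true, y_pred))
    trp = sum(1 for t, p in pairs if t == 1 and p == 1)
    trn = sum(1 for t, p in pairs if t == 0 and p == 0)
    flp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fln = sum(1 for t, p in pairs if t == 1 and p == 0)
    return trp, trn, flp, fln


def threshold_metrics(trp, trn, flp, fln):
    """Sn, Sp, Ac as percentages and MCC in [-1, 1], per the formulas above."""
    sn = 100.0 * trp / (trp + fln)
    sp = 100.0 * trn / (trn + flp)
    ac = 100.0 * (trp + trn) / (trp + trn + flp + fln)
    denom = sqrt((trp + flp) * (trp + fln) * (trn + flp) * (trn + fln))
    mcc = (trp * trn - flp * fln) / denom if denom else 0.0
    return {"Sn": sn, "Sp": sp, "Ac": ac, "MCC": mcc}


def auc(y_true, scores):
    """Probability that a random positive is scored above a random negative."""
    pos = [s for s, t in zip(scores, y_true) if t == 1]
    neg = [s for s, t in zip(scores, y_true) if t == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


print(threshold_metrics(8, 9, 1, 2))
print(auc([1, 1, 0, 0], [0.9, 0.4, 0.5, 0.1]))  # 0.75
```

The pairwise AUC loop is quadratic in the number of samples, which is fine for a dataset of a few hundred molecules.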

Results and discussion

Table 1 gives the details of the ten benchmark datasets together with the reducts produced by the four existing methods and the proposed method. Real-valued datasets are converted into fuzzy and IF values by using the widely used approaches of Jensen et al.6 and Tan et al.57, respectively. The entire reduction process is carried out on the complete data by using both fuzzy and IF aided techniques. FSFrMI72, GIFRFS57, TIFRFS59, and FRFS6 are effective earlier techniques and are used for the comparative study (Table 2). The proposed method produced reducts ranging in size from 7 to 169 features, smaller than those of the earlier approaches except for the bank marketing and thyroid-hypothyroid datasets. For the bank marketing dataset, FSFrMI and GIFRFS produced smaller reducts, whilst for the thyroid-hypothyroid and fertility diagnosis datasets, FSFrMI and FRFS, respectively, produced smaller reducts than IFRFSMI. Moreover, for the breast cancer dataset, FSFrMI and FRFS produce reducts of the same size as the proposed method, and for the fertility diagnosis dataset FRFS produces a reduct of similar size. The reducts recorded in Table 1 show that, in most cases across the ten datasets, the proposed technique retains fewer features than recently established methods. Fig. 1 visualizes the reduction achieved by the different methods and indicates that the proposed method eliminates a high percentage of features as the total number of conditional features increases. Then, IBK and RARF are used to report overall accuracies with standard deviations on the reduced datasets generated by the four existing techniques and the proposed technique, where 10-fold cross validation is employed to guard against overly optimistic estimates caused by overfitting.
These results are reported in Table 2, where the rank of each result is shown as a superscript. Table 2 shows that the proposed method gives better results than the previous approaches, except on the breast cancer and heart disease datasets. For the breast cancer dataset, TIFRFS outperforms IFRFSMI with both IBK and RARF, while for the heart disease dataset TIFRFS gives the best result with RARF. For the colon and heart disease datasets, GIFRFS and TIFRFS lead to results identical to those of IFRFSMI with IBK. Likewise, with RARF, the reduced datasets produced by FSFrMI and GIFRFS give results identical to those of the proposed method for the fertility diagnosis and wdbc datasets, respectively. All results are visualized in Figs. 2 and 3. These figures indicate that the proposed method is effective for both low- and high-dimensional datasets, as its reduced datasets generally increase the overall accuracies of the learning algorithms regardless of dimensionality.

The hypotheses used to assess the significance of the proposed method are as follows:

Null Hypothesis: All the employed methods are equivalent.

Alternate Hypothesis: There is significant difference among the employed methods.

Two widely accepted tests, the Friedman test88 and the Bonferroni-Dunn test89, are applied to validate the significance of the presented method. The Friedman test is used to compare multiple models, and the Bonferroni-Dunn test is then used to identify which methods differ significantly from the proposed technique. Two methods are regarded as significantly different at the \(\alpha \%\) level of significance if the difference between their average ranks exceeds the critical distance (CD). In the current study, the proposed method obtains the minimum (best) average rank with both IBK and RARF (Table 2), which indicates the superiority of the established model. Moreover, the computed F-statistics are larger than the tabulated F value for both IBK and RARF: the computed values are 23.09 and 32.38, respectively (Table 2), whilst the tabulated value is F(4,36) = 2.634 at the 5% level of significance. Therefore, the null hypothesis is rejected, and, based on the Bonferroni-Dunn test, the proposed method is found to be significantly different from the compared methods.
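The statistical procedure above can be sketched as follows. The reported degrees of freedom, F(4,36), match the Iman-Davenport form of the Friedman statistic for k = 5 methods and N = 10 datasets, so this sketch assumes that form. The Bonferroni-Dunn critical distance is computed as \(CD=q_\alpha \sqrt{k(k+1)/(6N)}\), with \(q_\alpha \) taken as the standard normal quantile at \(1-\alpha /(2(k-1))\). Function names are hypothetical, and ranking assumes that higher accuracy is better.

```python
from math import sqrt
from statistics import NormalDist


def average_ranks(table):
    """table[i][j] = score of method j on dataset i (higher is better).
    Rank 1 is best; tied scores share the mean of their ranks."""
    n, k = len(table), len(table[0])
    totals = [0.0] * k
    for row in table:
        order = sorted(range(k), key=lambda j: -row[j])
        i = 0
        while i < k:
            j = i
            while j + 1 < k and row[order[j + 1]] == row[order[i]]:
                j += 1  # extend the run of tied scores
            for m in range(i, j + 1):
                totals[order[m]] += (i + j) / 2 + 1
            i = j + 1
    return [t / n for t in totals]


def friedman_iman_davenport(avg_ranks, n):
    """Friedman chi-square and Iman-Davenport F_F ~ F(k-1, (k-1)(n-1))."""
    k = len(avg_ranks)
    chi2 = 12 * n / (k * (k + 1)) * (sum(r * r for r in avg_ranks) - k * (k + 1) ** 2 / 4)
    denom = n * (k - 1) - chi2  # zero only when all datasets rank methods identically
    ff = float("inf") if denom == 0 else (n - 1) * chi2 / denom
    return chi2, ff


def bonferroni_dunn_cd(k, n, alpha=0.05):
    """Critical distance CD = q_alpha * sqrt(k(k+1) / (6n))."""
    q_alpha = NormalDist().inv_cdf(1 - alpha / (2 * (k - 1)))
    return q_alpha * sqrt(k * (k + 1) / (6 * n))


print(bonferroni_dunn_cd(5, 10))
```

For k = 5 and N = 10 at α = 0.05, `bonferroni_dunn_cd(5, 10)` gives a CD of about 1.77, so two methods whose average ranks differ by more than that are judged significantly different.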

Case study: an application to discriminate PL+ and PL- molecules

One of the prime applications of machine learning based methods in cheminformatics is the reduction of the enormous chemical space with respect to some property of interest. The reduced chemical space can then be validated by wet lab experiments, which makes the fidelity of machine learning methods of utmost importance.

A hallmark of phospholipidosis is the accumulation of phospholipids in various tissues (e.g., kidneys and eyes), mostly caused by cationic amphiphilic molecules. Highly accurate machine learning prediction models can facilitate the screening of phospholipidosis inducing compounds in the early stages of drug discovery workflows, thereby reducing the cost and time associated with wet lab experiments (Fig. 4).

The present methodology can open new possibilities for further research in early screening of phospholipidosis inducing molecules.

Next, the proposed approach is applied to the Nath et al.87 dataset to produce an effective reduced form by minimizing the noise, uncertainty, and imprecision in the data and removing redundant and irrelevant attributes. Thereafter, seven classifiers from different categories are evaluated on this reduced dataset in terms of sensitivity, specificity, accuracy, AUC, and MCC, as reported in Tables 3, 4, 5 and 6. Moreover, for the original and reduced data, the overall performance of all seven classifiers across decision thresholds can be conveniently represented by the receiver operating characteristic (ROC) curve, which provides a visual explanation of classifier performance. Figures 5 and 6 depict the ROC curves for the original and reduced datasets based on 10-fold cross validation. These figures indicate that the RARF algorithm achieved the best AUC (\(>0.89\)) among all the algorithms.

To compare the performance evaluation metrics on the phospholipidosis dataset, we used the same R package (h2o, https://cran.r-project.org/web/packages/h2o/index.html) as the original work (Nath et al.87). A grid search was used to obtain the best hyperparameters for the random forest algorithm, with ntrees = c(20,50,100,500), max_depth = c(20,40,60,80), and sample_rate = c(0.2,1,0.01). Further, we used the same set of features (JOELib + Structural alerts), calculated with the ChemMine tools webserver (https://chemminetools.ucr.edu/). The dataset consists of 102 phospholipidosis inducing compounds (positive samples) and 83 phospholipidosis non-inducing compounds (negative samples), a total of 185 molecules. A schematic representation of the entire process is given in Fig. 7. The process starts with a dataset of phospholipidosis positive and phospholipidosis negative molecules. Then, a descriptor generator converts the initial data into target data. Further, SMOTE is applied to obtain a balanced dataset. Next, this dataset is converted into an intuitionistic fuzzy information system by using the approach of Tan et al.57. Thereafter, the proposed feature subset selection method is applied to remove noise, vagueness, irrelevancy, redundancy, and uncertainty and obtain the reduced dataset. Several classifiers are then used to discriminate the positive and negative classes. Finally, RARF is identified as the best performer.
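The SMOTE balancing step in the pipeline above can be sketched as follows. This is the generic SMOTE interpolation, not necessarily the authors' exact implementation: it assumes continuous descriptor vectors, Euclidean distance, and k = 5 nearest minority neighbours, and the function name `smote` is hypothetical. Balancing the 102 positive and 83 negative molecules to equal class sizes, for example, would require 19 synthetic negatives.

```python
import random


def smote(minority, n_new, k=5, seed=0):
    """Create n_new synthetic minority samples (needs at least 2 samples).

    Each synthetic point lies on the segment between a randomly chosen
    minority sample and one of its k nearest minority neighbours.
    """
    rng = random.Random(seed)

    def dist2(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        neighbours = sorted(
            (m for m in minority if m is not base), key=lambda m: dist2(base, m)
        )[:k]
        nb = rng.choice(neighbours)
        gap = rng.random()  # interpolation factor in [0, 1)
        synthetic.append([x + gap * (y - x) for x, y in zip(base, nb)])
    return synthetic


# Toy minority class in two dimensions; generate 19 synthetic points.
pts = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
new_pts = smote(pts, 19, k=3)
print(len(new_pts))
```

Because every synthetic point is a convex combination of two real minority samples, the new points stay inside the region already occupied by that class.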

The performance evaluation metrics for the current method and the previous ensemble based method are presented in Table 7. The dataset preprocessing introduced in the current work enhanced the performance evaluation metrics of the RF algorithm in comparison with the previously published results. Notably, a 2 percent rise in overall accuracy is observed. As the dataset is slightly imbalanced, the rise in MCC for the current method supports the usefulness of the dataset preprocessing step. The ROC plot for the RF(h2o) model is presented in Fig. 4. An AUC value of 0.922 indicates an acceptable prediction model for phospholipidosis inducing molecules. Finally, the abbreviations, signs, and symbols used in this study are listed in Table 8.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Conclusion

Dimensionality reduction broadly aims to obtain a feature subset from the original feature set by using a suitable evaluation criterion. Because it can produce an efficient feature subset, feature selection has become a central data pre-processing technique in many data mining tasks. Conventional fuzzy rough set based methods frequently use the dependency function as the evaluation criterion for feature subset selection. However, this criterion retains only the maximum membership degree of a data point to a single decision class, so it cannot sufficiently discard the resulting uncertainty and noise, and it does not characterize the classification error. To avoid these issues, we presented a novel intuitionistic fuzzy aided technique, in which the feature selection method is established by integrating information entropy with the IF rough set concept.

-

Initially, we established a hybrid IF similarity relation, which was further employed to present novel IF rough joint and conditional entropies.

-

Then, an IF granular structure was introduced based on the proposed hybrid similarity relation.

-

Thereafter, an IF rough set model was described by using the aforesaid relation.

-

Based on these entropies and the granular structure, we proposed a mutual information measure to compute the significance of a feature subset with respect to the decision class.

-

Next, mathematical theorems were proved to establish the correctness of the proposed ideas.

-

Using the significance notion, a heuristic IF rough feature selection algorithm was presented. We then applied this heuristic algorithm to ten benchmark datasets in extensive experiments.

-

Finally, the proposed method was successfully employed to enhance the prediction performance for identifying PL+ and PL- molecules.

For the dbworld-bodies dataset, our method eliminated 99.83% of the features. Moreover, the performance of the learning algorithms was evaluated on the reduced data produced by the four existing methods and the proposed method, and the results indicate the superiority of the proposed technique. For the thyroid-hypothyroid dataset, RARF reported an accuracy of 99.11% with a standard deviation of 0.46% on the IFRFSMI based reduced dataset. For the discrimination of PL+ and PL- molecules, the best sensitivity, 91.9%, was achieved with the 66:34 percentage split. The best overall result was obtained by RF(h2o), with sensitivity, specificity, accuracy, AUC, and MCC of 86.7%, 93.0%, 90.1%, 0.922, and 0.808, respectively.

The advantages of the proposed methodology can be outlined as follows:

-

This study presents a new hybrid similarity relation that can handle mixed data in intuitionistic fuzzy framework.

-

An adaptive radius is computed recursively from the relation itself, which helps avoid information loss.

-

Because the IF granular structure is built on the proposed hybrid relation, it can deal with noise in mixed data.

-

IF rough mutual information, based on the proposed IF granular structure, is implemented to cope with noise and the subsequent uncertainty.

-

This study presents a new methodology to discriminate PL+ and PL- molecules in an efficient and efficacious way.

In future work, the proposed hybrid similarity relation can be improved by a more effective definition of the adaptive radius. Further, inner and outer significance can be computed by incorporating mutual information into a robust IF rough framework, to establish an efficient approach for calculating the correlation between a feature subset and the class.

Data availability

The data supporting this study’s findings are available from the corresponding author (Mohd Asif Shah) upon reasonable request.

References

Issad, H. A., Aoudjit, R. & Rodrigues, J. J. A comprehensive review of data mining techniques in smart agriculture. Eng. Agric. Environ. Food 12(4), 511–525 (2019).

Li, J. et al. Feature selection: A data perspective. ACM Comput. Surv. (CSUR) 50(6), 1–45 (2017).

Papakyriakou, D. & Barbounakis, I. S. Data mining methods: A review. Int. J. Comput. Appl. 183(48), 5–19 (2022).

Awais, M. & Salahuddin, T. Radiative magnetodydrodynamic cross fluid thermophysical model passing on parabola surface with activation energy. Ain Shams Eng. J. 15(1), 102282 (2024).

Awais, M. & Salahuddin, T. Variable thermophysical properties of magnetohydrodynamic cross fluid model with effect of energy dissipation and chemical reaction. Int. J. Mod. Phys. B, 2450197 (2023).

Jensen, R. & Shen, Q. Semantics-preserving dimensionality reduction: Rough and fuzzy-rough-based approaches. IEEE Trans. Knowl. Data Eng. 16(12), 1457–1471 (2004).

Awais, M., Salahuddin, T. & Muhammad, S. Effects of viscous dissipation and activation energy for the MHD Eyring-powell fluid flow with Darcy-Forchheimer and variable fluid properties. Ain Shams Eng. J. 15(2), 102422 (2024).

Chauhan, D. & Mathews, R. Review on dimensionality reduction techniques. In Proceeding of the International Conference on Computer Networks, Big Data and IoT (ICCBI-2019) 356–362 (Springer International Publishing, 2020).

Hu, J. et al. Orthogonal learning covariance matrix for defects of grey wolf optimizer: Insights, balance, diversity, and feature selection. Knowl.-Based Syst. 213, 106684 (2021).

Jia, W., Sun, M., Lian, J. & Hou, S. Feature dimensionality reduction: A review. Complex Intell. Syst. 8(3), 2663–2693 (2022).

Tubishat, M., Idris, N., Shuib, L., Abushariah, M. A. & Mirjalili, S. Improved Salp Swarm Algorithm based on opposition based learning and novel local search algorithm for feature selection. Expert Syst. Appl. 145, 113122 (2020).

Chandrashekar, G. & Sahin, F. A survey on feature selection methods. Comput. Electr. Eng. 40(1), 16–28 (2014).

Remeseiro, B. & Bolon-Canedo, V. A review of feature selection methods in medical applications. Comput. Biol. Med. 112, 103375 (2019).

Saeys, Y., Inza, I. & Larranaga, P. A review of feature selection techniques in bioinformatics. Bioinformatics 23(19), 2507–2517 (2007).

Bommert, A., Sun, X., Bischl, B., Rahnenführer, J. & Lang, M. Benchmark for filter methods for feature selection in high-dimensional classification data. Comput. Stat. Data Anal. 143, 106839 (2020).

Cai, J., Luo, J., Wang, S. & Yang, S. Feature selection in machine learning: A new perspective. Neurocomputing 300, 70–79 (2018).

Dash, M. & Liu, H. Feature selection for classification. Intell. Data Anal. 1(1–4), 131–156 (1997).

Pawlak, Z. Rough sets. Int. J. Comput. Inf. Sci. 11, 341–356 (1982).

Pawlak, Z., Grzymala-Busse, J., Slowinski, R. & Ziarko, W. Rough sets. Commun. ACM 38(11), 88–95 (1995).

Sivasankar, E., Selvi, C. & Mahalakshmi, S. Rough set-based feature selection for credit risk prediction using weight-adjusted boosting ensemble method. Soft. Comput. 24(6), 3975–3988 (2020).

Bania, R. K. & Halder, A. R-HEFS: Rough set based heterogeneous ensemble feature selection method for medical data classification. Artif. Intell. Med. 114, 102049 (2021).

Thangavel, K. & Pethalakshmi, A. Dimensionality reduction based on rough set theory: A review. Appl. Soft Comput. 9(1), 1–12 (2009).

Campagner, A., Ciucci, D. & Hüllermeier, E. Rough set-based feature selection for weakly labeled data. Int. J. Approx. Reason. 136, 150–167 (2021).

Jensen, R. Rough set-based feature selection: A review. In Rough Computing: Theories, Technologies and Applications 70–107 (2008).

Raza, M. S. & Qamar, U. Understanding and Using Rough Set Based Feature Selection: Concepts, Techniques and Applications (Springer, 2017).

Zadeh, L. A. Fuzzy sets. Inf. Control 8(3), 338–353 (1965).

Dubois, D. & Prade, H. Putting rough sets and fuzzy sets together. In Intelligent Decision Support: Handbook of Applications and Advances of the Rough Sets Theory (ed. Slowinski, R.) 203–232 (Springer, 1992).

Chen, J., Mi, J. & Lin, Y. A graph approach for fuzzy-rough feature selection. Fuzzy Sets Syst. 391, 96–116 (2020).

Qiu, Z. & Zhao, H. A fuzzy rough set approach to hierarchical feature selection based on Hausdorff distance. Appl. Intell. 52(10), 11089–11102 (2022).

Sang, B., Yang, L., Chen, H., Xu, W. & Zhang, X. Fuzzy rough feature selection using a robust non-linear vague quantifier for ordinal classification. Expert Syst. Appl. 230, 120480 (2023).

Yin, T., Chen, H., Li, T., Yuan, Z. & Luo, C. Robust feature selection using label enhancement and \(\beta \)-precision fuzzy rough sets for multilabel fuzzy decision system. Fuzzy Sets Syst. 461, 108462 (2023).

Wang, C., Huang, Y., Ding, W. & Cao, Z. Attribute reduction with fuzzy rough self-information measures. Inf. Sci. 549, 68–86 (2021).

Zhang, X., Mei, C., Chen, D. & Yang, Y. A fuzzy rough set-based feature selection method using representative instances. Knowl.-Based Syst. 151, 216–229 (2018).

Wang, C., Huang, Y., Shao, M. & Fan, X. Fuzzy rough set-based attribute reduction using distance measures. Knowl.-Based Syst. 164, 205–212 (2019).

Wang, C., Wang, Y., Shao, M., Qian, Y. & Chen, D. Fuzzy rough attribute reduction for categorical data. IEEE Trans. Fuzzy Syst. 28(5), 818–830 (2019).

Yang, X., Chen, H., Li, T. & Luo, C. A noise-aware fuzzy rough set approach for feature selection. Knowl.-Based Syst. 250, 109092 (2022).

Yang, X., Chen, H., Li, T., Zhang, P. & Luo, C. Student-t kernelized fuzzy rough set model with fuzzy divergence for feature selection. Inf. Sci. 610, 52–72 (2022).

Yuan, Z. et al. Attribute reduction methods in fuzzy rough set theory: An overview, comparative experiments, and new directions. Appl. Soft Comput. 107, 107353 (2021).

Jain, P., Tiwari, A. K. & Som, T. A fitting model based intuitionistic fuzzy rough feature selection. Eng. Appl. Artif. Intell. 89, 103421 (2020).

Annamalai, C. Intuitionistic fuzzy sets: New approach and applications (2022).

Dan, S. et al. Intuitionistic type-2 fuzzy set and its properties. Symmetry 11(6), 808 (2019).

Atanassov, K. T. & Stoeva, S. Intuitionistic fuzzy sets. Fuzzy Sets Syst. 20(1), 87–96 (1986).

Cornelis, C., De Cock, M. & Kerre, E. E. Intuitionistic fuzzy rough sets: At the crossroads of imperfect knowledge. Expert Syst. 20(5), 260–270 (2003).

Zhan, J., Masood Malik, H. & Akram, M. Novel decision-making algorithms based on intuitionistic fuzzy rough environment. Int. J. Mach. Learn. Cybern. 10, 1459–1485 (2019).

Zhang, Z. Attributes reduction based on intuitionistic fuzzy rough sets. J. Intell. Fuzzy Syst. 30(2), 1127–1137 (2016).

Atanassov, K. T. Intuitionistic Fuzzy Sets (Springer, 1999).

Tseng, T.-L.B. & Huang, C.-C. Rough set-based approach to feature selection in customer relationship management. Omega 35(4), 365–383 (2007).

Zhang, X., Zhou, B. & Li, P. A general frame for intuitionistic fuzzy rough sets. Inf. Sci. 216, 34–49 (2012).

Zhou, L. & Wu, W.-Z. On generalized intuitionistic fuzzy rough approximation operators. Inf. Sci. 178(11), 2448–2465 (2008).

Jain, P. & Som, T. Multigranular rough set model based on robust intuitionistic fuzzy covering with application to feature selection. Int. J. Approx. Reason. 156, 16–37 (2023).

Liu, Y. & Lin, Y. Intuitionistic fuzzy rough set model based on conflict distance and applications. Appl. Soft Comput. 31, 266–273 (2015).

Huang, B., Zhuang, Y.-L., Li, H.-X. & Wei, D.-K. A dominance intuitionistic fuzzy-rough set approach and its applications. Appl. Math. Model. 37(12–13), 7128–7141 (2013).

Wang, C., Huang, Y., Shao, M., Hu, Q. & Chen, D. Feature selection based on neighborhood self-information. IEEE Trans. Cybern. 50(9), 4031–4042 (2019).

Xu, J., Shen, K. & Sun, L. Multi-label feature selection based on fuzzy neighborhood rough sets. Complex Intell. Syst. 8(3), 2105–2129 (2022).

Huang, B., Li, H., Feng, G. & Zhou, X. Dominance-based rough sets in multi-scale intuitionistic fuzzy decision tables. Appl. Math. Comput. 348, 487–512 (2019).

Huang, B., Guo, C.-X., Zhuang, Y.-L., Li, H.-X. & Zhou, X.-Z. Intuitionistic fuzzy multigranulation rough sets. Inf. Sci. 277, 299–320 (2014).

Tan, A. et al. Intuitionistic fuzzy rough set-based granular structures and attribute subset selection. IEEE Trans. Fuzzy Syst. 27(3), 527–539 (2018).

Zhou, L., Wu, W.-Z. & Zhang, W.-X. On characterization of intuitionistic fuzzy rough sets based on intuitionistic fuzzy implicators. Inf. Sci. 179(7), 883–898 (2009).

Tiwari, A. K., Shreevastava, S., Som, T. & Shukla, K. K. Tolerance-based intuitionistic fuzzy-rough set approach for attribute reduction. Expert Syst. Appl. 101, 205–212 (2018).

Shreevastava, S., Tiwari, A. & Som, T. Feature subset selection of semi-supervised data: An intuitionistic fuzzy-rough set-based concept. In Proceedings of International Ethical Hacking Conference 2018: eHaCON 2018, Kolkata, India (2019).

Tiwari, A. K., Shreevastava, S., Subbiah, K. & Som, T. An intuitionistic fuzzy-rough set model and its application to feature selection. J. Intell. Fuzzy Syst. 36(5), 4969–4979 (2019).

Tiwari, A. K., Shreevastava, S., Shukla, K. K. & Subbiah, K. New approaches to intuitionistic fuzzy-rough attribute reduction. J. Intell. Fuzzy Syst. 34(5), 3385–3394 (2018).

Tiwari, A. K., Shreevastava, S., Subbiah, K. & Som, T. An intuitionistic fuzzy-rough set model and its application to feature selection. J. Intell. Fuzzy Syst. 36(5), 4969–4979 (2019).

Shreevastava, S., Singh, S., Tiwari, A. & Som, T. Different classes ratio and Laplace summation operator based intuitionistic fuzzy rough attribute selection. Iran. J. Fuzzy Syst. 18(6), 67–82 (2021).

Shreevastava, S., Tiwari, A. K. & Som, T. Intuitionistic fuzzy neighborhood rough set model for feature selection. Int. J. Fuzzy Syst. Appl. (IJFSA) 7(2), 75–84 (2018).

Li, L. Q., Wang, X. L., Liu, Z. X. & Xie, W. X. A novel intuitionistic fuzzy clustering algorithm based on feature selection for multiple object tracking. Int. J. Fuzzy Syst. 21, 1613–1628 (2019).

Singh, S., Shreevastava, S., Som, T. & Jain, P. Intuitionistic fuzzy quantifier and its application in feature selection. Int. J. Fuzzy Syst. 21, 441–453 (2019).

Sun, L., Wang, L., Ding, W., Qian, Y. & Xu, J. Feature selection using fuzzy neighborhood entropy-based uncertainty measures for fuzzy neighborhood multigranulation rough sets. IEEE Trans. Fuzzy Syst. 29(1), 19–33 (2020).

Sun, L., Zhang, X., Qian, Y., Xu, J. & Zhang, S. Feature selection using neighborhood entropy-based uncertainty measures for gene expression data classification. Inf. Sci. 502, 18–41 (2019).

Fang, L. et al. Feature selection method based on mutual information and class separability for dimension reduction in multidimensional time series for clinical data. Biomed. Signal Process. Control 21, 82–89 (2015).

Fernandes, A. D. & Gloor, G. B. Mutual information is critically dependent on prior assumptions: Would the correct estimate of mutual information please identify itself?. Bioinformatics 26(9), 1135–1139 (2010).

Wang, Z. et al. Exploiting fuzzy rough mutual information for feature selection. Appl. Soft Comput. 131, 109769 (2022).

Xie, L., Lin, G., Li, J. & Lin, Y. A novel fuzzy-rough attribute reduction approach via local information entropy. Fuzzy Sets Syst. 473, 108733 (2023).

Xu, F., Miao, D. & Wei, L. Fuzzy-rough attribute reduction via mutual information with an application to cancer classification. Comput. Math. Appl. 57(6), 1010–1017 (2009).

Fang, H., Tang, P. & Si, H. Feature selections using minimal redundancy maximal relevance algorithm for human activity recognition in smart home environments. J. Healthc. Eng. 2020, 1–13 (2020).

Xie, S. et al. A new improved maximal relevance and minimal redundancy method based on feature subset. J. Supercomput. 79(3), 3157–3180 (2023).

Maji, P. & Garai, P. On fuzzy-rough attribute selection: Criteria of max-dependency, max-relevance, min-redundancy, and max-significance. Appl. Soft Comput. 13(9), 3968–3980 (2013).

Zhang, X., Mei, C., Chen, D. & Li, J. Feature selection in mixed data: A method using a novel fuzzy rough set-based information entropy. Pattern Recogn. 56, 1–15 (2016).

Zhang, X., Mei, C., Chen, D., Yang, Y. & Li, J. Active incremental feature selection using a fuzzy-rough-set-based information entropy. IEEE Trans. Fuzzy Syst. 28(5), 901–915 (2019).

Anderson, N. & Borlak, J. Drug-induced phospholipidosis. FEBS Lett. 580(23), 5533–5540 (2006).

Breiden, B. & Sandhoff, K. Emerging mechanisms of drug-induced phospholipidosis. Biol. Chem. 401(1), 31–46 (2020).

Shayman, J. A. & Abe, A. Drug induced phospholipidosis: An acquired lysosomal storage disorder. Biochim. Biophys. Acta (BBA)-Mol. Cell Biol. Lipids 1831(3), 602–611 (2013).

Salahuddin, T. Numerical Techniques in MATLAB: Fundamental to Advanced Concepts (CRC Press, 2023).

Frank, E., Hall, M., Trigg, L., Holmes, G. & Witten, I. H. Data mining in bioinformatics using Weka. Bioinformatics 20(15), 2479–2481 (2004).

Asuncion, A. & Newman, D. UCI Machine Learning Repository (Irvine, CA, USA, 2007).

Hall, M. et al. The WEKA data mining software: An update. ACM SIGKDD Explor. Newsl. 11(1), 10–18 (2009).

Nath, A. & Sahu, G. K. Exploiting ensemble learning to improve prediction of phospholipidosis inducing potential. J. Theor. Biol. 479, 37–47 (2019).

Friedman, M. A comparison of alternative tests of significance for the problem of m rankings. Ann. Math. Stat. 11(1), 86–92 (1940).

Dunn, O. J. Multiple comparisons among means. J. Am. Stat. Assoc. 56(293), 52–64 (1961).

Funding

The authors did not receive support from any organization for the submitted work.

Author information

Contributions

A.K.T.: Conceptualization, Problem formulation, Methodology, Original draft preparation, Reviewing and Editing, and Final drafting. R.S.: Numerical analysis, Programming, Mathematical Modelling. A.N.: Data curation, Programming, Simulation, Validation, Numerical analysis, Visualization, and System set-up. P.S.: Mathematical Modelling, Visualization, and Investigation. M.A.S.: Supervision, Problem formulation, Programming, Validation, Writing, Reviewing, and Editing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tiwari, A.K., Saini, R., Nath, A. et al. Hybrid similarity relation based mutual information for feature selection in intuitionistic fuzzy rough framework and its applications. Sci Rep 14, 5958 (2024). https://doi.org/10.1038/s41598-024-55902-z

DOI: https://doi.org/10.1038/s41598-024-55902-z
