Abstract

Quantifying healthy and degraded inner tissues in plants is of great interest in agronomy, for example, to assess plant health and quality and to monitor physiological traits or diseases. However, detecting functional and degraded plant tissues in-vivo without harming the plant is extremely challenging. New solutions are needed for ligneous and perennial species, in which the sustainability of plantations is crucial. To tackle this challenge, we developed a novel approach based on multimodal 3D imaging and artificial intelligence-based image processing that allows a non-destructive diagnosis of inner tissues in living plants. The method was successfully applied to the grapevine (Vitis vinifera L.). Vineyard sustainability is threatened by trunk diseases, while the sanitary status of vines cannot be ascertained without injuring the plants. By combining MRI and X-ray CT 3D imaging with automatic voxel classification, we could discriminate intact, degraded, and white rot tissues with a mean global accuracy of over 91%. The contribution of each imaging modality to tissue detection was evaluated, and we identified quantitative structural and physiological markers characterizing the steps of wood degradation. The combined study of inner tissue distribution and external foliar symptom history demonstrated that white rot and intact tissue contents are key measurements for evaluating vines’ sanitary status. We finally proposed a model for accurate trunk disease diagnosis in grapevine. This work opens new routes for precision agriculture and in-situ monitoring of tissue quality and plant health across plant species.

Introduction

Wood is a complex biological structure providing physical support and serving the needs of a living plant. Its degradation by stresses or pathogens exposes the plant to huge physiological and structural changes, but the consequences might not be immediately detectable from the outside. Trunk diseases can spread internally and silently, degrading woody tissues, then erratically leading to external symptoms, production losses and, ultimately, the plant's death. While accurate detection and monitoring of wood degradation require structural and functional characterization of internal tissues, collecting such data on entire plants—nondestructively and in-vivo—is extremely challenging. As a result, the study and management of wood diseases are almost impossible in-situ. These diseases generate enormous losses on a global scale, including for sectors such as the wine industry.

Grapevine trunk diseases (GTDs) are a major cause of grapevine (Vitis vinifera L.) decline worldwide1. They are mostly undetectable until advanced stages are reached, and the European Union has banned the only effective treatment, an arsenic-based pesticide. Vineyard sustainability is therefore jeopardized, with yearly losses of up to several billion dollars2. Detecting and monitoring GTDs is extremely difficult: fungal pathogens insidiously colonize trunks, leaving different types of irreversibly decayed tissues1. The predominant GTD, Esca dieback, erratically induces tiger stripe-like foliar symptoms, but their origin remains poorly understood3, and observing them alone does not indicate the vines' sanitary status4,5,6,7. Quantifying degraded tissues within living vines could help determine the plant’s condition and predict disease evolution. However, classical techniques8 require sacrificing the plant and often yield limited information. Reaching a reliable diagnosis is thus impossible in living plants.

Monitoring wood degradation using non-destructive imaging techniques has primarily been tested on detached organs, blocks, or planks, and using a single technique: Magnetic Resonance Imaging (MRI)9,10 or X-ray computed tomography (CT)11,12,13,14. In grapevine, CT scanning allowed the visualization of the graft union15, xylem refilling16, and tyloses-occluded vessels17, as well as the quantification of starch in stems18. Synchrotron X-ray CT has been applied to leaves to investigate the origin of foliar symptoms related to trunk diseases, suggesting that symptoms might be elicited from the trunk3. X-ray CT and MRI were successfully combined to collect anatomical and functional information to investigate stem flow in-vivo19. These techniques were recently tested for trunk disease detection20, but they were applied separately and on different wood samples, which prevented combining modalities and limited their effectiveness; moreover, MRI failed to distinguish healthy from defective tissues in that study.

Imaging-based plant studies are usually performed at a microscopic scale and on a specific detached tissue or organ but rarely on a whole plant. The field of investigation is limited by difficulties in adapting imaging devices to plants and in coupling signals collected from different imaging modalities, preventing the development of ‘digital twin’ models21 for plants.

Moreover, monitoring wood degradation using multimodal imaging requires proper registration and the identification of specific signatures for the structural and physiological traits of interest. Such signatures are not well described in wood but are mandatory to deploy automatic quantitative approaches. Experts should perform a preliminary joint analysis of multimodal imaging signals, together with manual pixel-wise annotation of wood degradation, before deploying automatic morphological phenotyping methods based on tissue segmentation and machine learning.

To address these issues, we developed an end-to-end workflow for in-vivo phenotyping of the condition of internal woody tissues, based on multimodal, non-destructive 3D imaging and assisted by AI-based automatic segmentation. This approach was applied to vine imaging datasets acquired in a clinical imaging facility. 3D images were acquired in five different modalities (X-rays for structure, three MRI parameters for function, and serial sections for expertise) on entire plants, and combined by an automatic 3D registration pipeline22. Based on serial section annotations, structural and physiological signatures characterizing early and late stages of wood degradation were identified in each imaging modality. A machine-learning model was then trained to automatically classify tissue condition from the multimodal imaging data, achieving high performance in distinguishing healthy and sick tissues. We could therefore accurately quantify intact, degraded, and white rot compartments within entire vine trunks. We also evaluated the contribution and efficiency of each imaging technique for tissue detection. Finally, we studied the relationships between external foliar symptom expression and the distribution of internal healthy and sick tissues.

This study highlights the potential of our 3D image- and AI-based workflow for non-destructive, in-vivo diagnosis of complex plant diseases in grapevine and other plants. It gives access to key indicators for evaluating vines’ inner sanitary status and allows structural and functional modeling of the whole plant. Plant-specific ‘digital twins’21 could revolutionize agronomy by providing dedicated models for diseased plants and computerized assistance to diagnosis.

Results

Multimodal 3D imaging of healthy and sick tissues

Based on foliar symptom history, twelve symptomatic- and asymptomatic-looking vines were collected in 2019 from a Champagne vineyard (France) and imaged using four different modalities: X-ray CT and three MRI protocols, T1-weighted (T1-w), T2-w, and PD-w (Fig. 1). Following image acquisition, vines were molded and sliced, and both sides of each cross-section were photographed (approx. 120 pictures per plant). Experts manually annotated eighty-four random cross-sections and their corresponding images by visual inspection of tissue appearance. Six classes showing specific colorations were defined (Fig. 2a): (i) healthy-looking tissues showing no sign of degradation; and unhealthy-looking tissues such as (ii) black punctuations, (iii) reaction zones, (iv) dry tissues, (v) necrosis associated with GTD (incl. Esca and Eutypa dieback), and (vi) white rot (decay). The 3D data from each imaging modality (three MRIs, X-ray CT, and registered photographs) were aligned into 4D multimodal images22. This enabled voxel-wise joint exploration of the modalities' information in 3D and its comparison with empirical annotations by experts (Fig. 2b).
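To make the idea of a 4D multimodal image concrete, the sketch below flattens co-registered volumes into one feature vector per voxel, which is the input format a voxel classifier needs. It assumes registration has already placed every modality on the same voxel grid; the nested-list layout, the channel names in `MODALITIES`, and the function names are hypothetical choices for illustration, not part of the published pipeline.

```python
from typing import Dict, List

# A 3D volume stored as nested lists indexed [z][y][x].
Volume = List[List[List[float]]]

# Hypothetical channel names and order; the study does not prescribe one.
MODALITIES = ("T1w", "T2w", "PDw", "Xray")


def grid_shape(volume: Volume) -> tuple:
    """Return (nz, ny, nx) for a rectangular nested-list volume."""
    return (len(volume), len(volume[0]), len(volume[0][0]))


def stack_modalities(volumes: Dict[str, Volume]) -> List[List[float]]:
    """Flatten co-registered volumes into one feature vector per voxel.

    Assumes registration has already resampled every modality onto the
    same voxel grid; this function only checks that the shapes agree.
    """
    shape = grid_shape(volumes[MODALITIES[0]])
    for name in MODALITIES:
        if grid_shape(volumes[name]) != shape:
            raise ValueError(f"{name} is not on the reference grid")
    nz, ny, nx = shape
    features = []
    for z in range(nz):
        for y in range(ny):
            for x in range(nx):
                # One row per voxel, one column per modality.
                features.append([volumes[m][z][y][x] for m in MODALITIES])
    return features
```

Each row then plays the role of one voxel of the 4D image, with the fourth dimension holding the modalities.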

General Workflow: From multimodal vine imaging to data analysis. (1) and (2) Multimodal 3D imaging of a vine using MRI (T1-weighted, T2-w, and PD-w) and X-ray CT. (3) (Optional step) The vine is molded and then sliced every 6 mm. Pictures of both sides of each cross-section are registered into a 3D photographic volume, and experts manually annotate some cross-sections. (4) Multimodal registration of the MRI, X-ray CT, and photographic data into a coherent 4D image using Fijiyama22. (5) Machine-learning-based voxel classification: images are segmented into wood (intact, degraded, white rot), bark, and background based on expert manual tissue annotations. The classifier was trained and evaluated using manual annotations collected on different vines during Step 3. (6) Data analysis and visualization.

Multimodal imaging and signal analysis. (a) Correspondences between tissue categorization based either on visual observation of trunk cross-sections (6 classes), multimodal imaging data (7 classes), or AI-based segmentation (3 classes). (b) Multimodal imaging data collected on vines. XZ views of the photographic, X-ray CT, and MRI volumes, after registration using Fijiyama22. (c) Example of manual tissue annotation and corresponding multimodal signals. (d) Multimodal signal statistics estimated from random trunk cross-sections. (e) Multimodal signal values collected automatically on all 4D datasets (46.2 million voxels total) after AI-based voxel classification in three main tissue classes defined as intact, degraded, or white rot. (f) General trends for functional and structural properties during the wood degradation process, and proposed fields of application for MRI and X-ray CT imaging. Legend: letters on bar plots correspond to Tukey tests for comparing tissue classes in each modality.

A preliminary study of manually annotated random cross-sections led to the identification of general signal trends distinguishing healthy- and unhealthy-looking tissues (Fig. 2a,c,d). In healthy-looking wood, areas of functional tissues were associated with high X-ray absorbance and high MRI values, i.e., high NMR signals in T1-, T2-, and PD-weighted images, while nonfunctional wood showed slightly lower X-ray absorbance (approx. − 10%) and lower values in all three MRI modalities (− 30 to − 60%).

In unhealthy-looking tissues, signals were highly variable. Dry tissues, resulting from wounds inflicted during seasonal pruning, exhibited medium X-ray absorbance and very low MRI values in all three modalities. Necrotic tissues, corresponding to different types of GTD necrosis, showed medium X-ray absorbance (approx. − 30% compared to functional tissues) and medium to low values in T1-w images, while signals in T2-w and PD-w were close to zero (− 60 to − 85%). Black punctuations, which are clogged vessels generally colonized by the fungal pathogen Phaeomoniella chlamydospora, were characterized by high X-ray absorbance, medium values in T1-w, and variable values in T2-w and PD-w. Finally, white rot, the most advanced stage of degradation, exhibited significantly lower mean values in X-ray absorbance (− 70% compared to functional tissues; − 50% compared to necrotic ones) and in MRI modalities (− 70 to − 98%).

Interestingly, some regions of healthy-looking, uncolored tissues showed a particularly strong hypersignal in T2-w compared to the surrounding ones (Fig. 2d). Located in the vicinity of necrotic tissue boundaries and sometimes undetectable by visual inspection of the wood, these regions likely corresponded to the reaction zones described earlier, where host and pathogens strongly interact and show specific MRI signatures23.

These results highlighted the benefits of multimodal imaging for distinguishing tissues according to their degree of degradation and for characterizing signatures of the degradation process. Loss of function was clearly marked by a significant MRI hyposignal. The necrosis-to-decay transition was marked by a strong degradation of the tissue structure and a loss of density, revealed by a reduction in X-ray absorbance. While distinguishing different types of necrosis remained challenging because their signal distributions overlap, degraded tissues exhibited multimodal signatures permitting their detection. Interestingly, specific events such as reaction zones were detected by combining X-ray and T2-w modalities. Overall, MRI appeared better suited for assessing functionality and investigating physiological phenomena occurring at the onset of wood degradation, when the wood still appeared healthy (Fig. 2f). In contrast, X-ray CT seemed better suited for discriminating more advanced stages of degradation.

Automatic segmentation of intact, degraded, and white rot tissues using non-destructive imaging

To propose a proper in-vivo GTD diagnosis method, we aimed to assess vine condition by automatically and nondestructively quantifying the healthy and unhealthy inner compartments of trunks in 3D. To achieve this complex task, we trained a segmentation model to detect the level of degradation voxel-wise, using imaging data acquired with non-destructive devices. In light of our initial observations and the ambiguities identified at the pixel level in small areas, we established a three-class categorization of tissue degradation: (1) ‘intact’ for functional or nonfunctional but healthy tissues; (2) ‘degraded’ for necrotic and other altered tissues; and (3) ‘white rot’ for decayed wood (Fig. 2a). This streamlined categorization simplifies high-throughput pixel-wise expert labeling while providing sufficient detail to characterize different levels of tissue degradation.

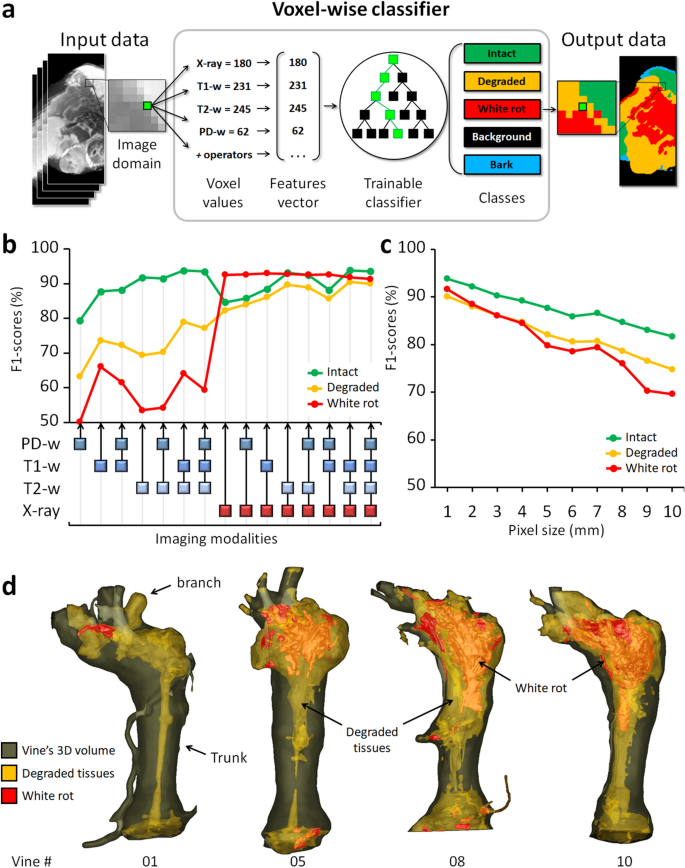

An algorithm was trained to automatically classify each voxel into one of the three classes based on its T1-w, T2-w, PD-w, and X-ray absorbance values (Fig. 3a). The classification was performed using the Fast Random Forest algorithm implemented in the Weka library24. The algorithm was first trained on a set of 81,454 manually annotated voxels (Table S1), then cross-validated, and finally applied to whole 4D images (46.2 million voxels total) (Fig. 1, step 5). Cross-validation was conducted across 66 folds: in each fold, we trained the model on ten specimens and validated it on the annotated voxels of the remaining two test specimens. The results from all folds were aggregated into a global confusion matrix, which was instrumental in identifying the primary sources of classification errors. The mean global accuracy of the classifier (91.6 ± 2.0%) indicated a high recognition rate, with minor variations among cross-validation folds (Table S2). The lowest performance (82.0%) occurred when testing specimens 3 and 4 after training on the others; this was the only fold with an accuracy below 89%. The performance of the other same-category folds closely matched that of different-category folds, showing robustness across the various validation conditions of the 66 folds. In our evaluation, F1 scores were 93.6 ± 3.7% for intact, 90.0 ± 3.8% for degraded, and 91.4 ± 6.8% for white rot tissue classes. Most incorrect classifications were due to confusion either between the intact and degraded classes (53.4%) or between degraded and white rot (20.8%) (Table S3). Intact and white rot classes were seldom confused (< 0.001% error).
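The cross-validation scheme above can be sketched as follows, assuming twelve specimens numbered 1 to 12: holding out every possible pair gives C(12, 2) = 66 folds, and predictions from all folds are accumulated into one global confusion matrix from which accuracy and per-class F1 scores follow. The classifier itself is omitted (the study used Weka's Fast Random Forest), only the three wood classes are modeled, and all function names are hypothetical.

```python
from itertools import combinations
from typing import Dict, List, Sequence, Tuple

CLASSES = ("intact", "degraded", "white_rot")
Confusion = Dict[str, Dict[str, int]]  # confusion[true][predicted] -> count


def leave_two_out_folds(specimens: Sequence[int]) -> List[Tuple[List[int], List[int]]]:
    """Every (train, test) split that holds out exactly two specimens."""
    folds = []
    for test in combinations(specimens, 2):
        train = [s for s in specimens if s not in test]
        folds.append((train, list(test)))
    return folds


def new_confusion() -> Confusion:
    return {t: {p: 0 for p in CLASSES} for t in CLASSES}


def add_to_confusion(conf: Confusion, y_true: Sequence[str], y_pred: Sequence[str]) -> None:
    """Accumulate one fold's voxel predictions into a confusion matrix."""
    for t, p in zip(y_true, y_pred):
        conf[t][p] += 1


def f1_per_class(conf: Confusion) -> Dict[str, float]:
    scores = {}
    for c in CLASSES:
        tp = conf[c][c]
        fp = sum(conf[t][c] for t in CLASSES if t != c)  # other classes predicted as c
        fn = sum(conf[c][p] for p in CLASSES if p != c)  # class c predicted as others
        denom = 2 * tp + fp + fn
        scores[c] = 2 * tp / denom if denom else 0.0
    return scores


def accuracy(conf: Confusion) -> float:
    total = sum(sum(row.values()) for row in conf.values())
    return sum(conf[c][c] for c in CLASSES) / total if total else 0.0
```

In a full run, each fold would train on the annotated voxels of its ten `train` specimens, predict the voxels of its two `test` specimens, and pass the results to `add_to_confusion`. Computing the same metrics on each fold's own matrix gives the fold-to-fold spread (mean ± SD), while the accumulated matrix shows which classes are confused.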

Automatic tissues segmentation. (a) AI-based image segmentation using multimodal signals. (b) Comparison of all possible imaging modality combinations for their effectiveness (F1-scores) in tissue detection. (c) Effectiveness of tissue detection at lower imaging resolutions (using four modalities). (d) 3D reconstructions highlighting the extent and localization of the degraded and white rot compartments in four vines.

The same validation protocol was used to compare the effectiveness of all possible combinations of imaging modalities for tissue detection (Fig. 3b): the most efficient combination was [T1-w, T2-w, X-ray] for detection of intact (F1 = 93.9 ± 3.4%) and degraded tissues (90.5 ± 3.2%), and [T1-w, X-ray] for white rot (93.0 ± 5.1%). Interestingly, the X-ray modality alone reached nearly the same scores for white rot detection. In general, slightly better results (approx. + 0.5%) were obtained without the PD-w modality, likely due to its lower initial resolution.
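Comparing every combination of the four modalities amounts to repeating that cross-validation once per non-empty subset of input channels, 2^4 − 1 = 15 subsets in total. A minimal sketch of the bookkeeping follows, assuming features are stored as rows ordered like the hypothetical `MODALITIES` tuple; the F1 values fed to `best_subset` would come from the 66-fold protocol, and all helper names are illustrative only.

```python
from itertools import combinations
from typing import Dict, List, Sequence, Tuple

MODALITIES = ("T1w", "T2w", "PDw", "Xray")  # hypothetical channel order


def all_subsets(modalities: Sequence[str] = MODALITIES) -> List[Tuple[str, ...]]:
    """All non-empty modality subsets: 2**4 - 1 = 15 for four modalities."""
    subsets: List[Tuple[str, ...]] = []
    for k in range(1, len(modalities) + 1):
        subsets.extend(combinations(modalities, k))
    return subsets


def select_channels(features: List[List[float]], subset: Tuple[str, ...],
                    modalities: Sequence[str] = MODALITIES) -> List[List[float]]:
    """Keep only the feature columns that belong to `subset`."""
    idx = [list(modalities).index(m) for m in subset]
    return [[row[i] for i in idx] for row in features]


def best_subset(f1_by_subset: Dict[Tuple[str, ...], Dict[str, float]],
                tissue: str) -> Tuple[str, ...]:
    """Subset with the highest cross-validated F1 for one tissue class."""
    return max(f1_by_subset, key=lambda s: f1_by_subset[s][tissue])
```

Selecting the best subset separately for each tissue class is what allows one combination to win for intact and degraded tissues and another for white rot.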

The classifier was finally applied to the whole dataset. Assuming that the model generalizes properly, we used all available data, including voxels without ground-truth annotations, to compute robust statistics and compare tissue contents in different vines. Considering the entire classified dataset (46.2 million voxels), mean signal values declined significantly between intact and degraded tissues (− 19.3% for X-ray absorbance; and − 57.3%, − 86.3% and − 71.3% for MRI T1-w, T2-w, and PD-w, respectively), and between degraded and white rot tissues (− 56.0% for X-ray absorbance; and − 36.8%, − 76.8% and − 64.2% for MRI T1-w, T2-w, and PD-w, respectively) (Fig. 2e and Table S4).
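Because the declines above are step-wise (intact to degraded, then degraded to white rot), the overall change from intact to white rot follows by multiplying the ratios of class means rather than by adding percentages. The worked example below uses only the figures quoted above; the resulting intact-to-white-rot totals are derived here and are not separately reported. They need not match the values given earlier for manually annotated cross-sections, which were computed on a different set of voxels.

```latex
\Delta_{a\to b} = \frac{\mu_b - \mu_a}{\mu_a}, \qquad
1 + \Delta_{\mathrm{int}\to\mathrm{wr}}
  = \frac{\mu_{\mathrm{wr}}}{\mu_{\mathrm{int}}}
  = \frac{\mu_{\mathrm{deg}}}{\mu_{\mathrm{int}}}\cdot\frac{\mu_{\mathrm{wr}}}{\mu_{\mathrm{deg}}}
  = (1 + \Delta_{\mathrm{int}\to\mathrm{deg}})\,(1 + \Delta_{\mathrm{deg}\to\mathrm{wr}})

\text{X-ray: } (1 - 0.193)(1 - 0.560) = 0.807 \times 0.440 \approx 0.355
  \;\Rightarrow\; \Delta_{\mathrm{int}\to\mathrm{wr}} \approx -64.5\%

\text{T2-w: } (1 - 0.863)(1 - 0.768) = 0.137 \times 0.232 \approx 0.0318
  \;\Rightarrow\; \Delta_{\mathrm{int}\to\mathrm{wr}} \approx -96.8\%
```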

With the increasing deployment of X-ray and NMR devices for phenotyping tasks, in-field imaging has become accessible, but at a heavy cost in image quality and resolution. We challenged our method by training and evaluating the classifier at coarser resolutions, ranging from 0.7 to 10 mm per voxel (Fig. 3c). The results showed that our approach maintained acceptable performance even at 10 mm (F1 ≥ 80% for intact; ≥ 70% for degraded and white rot), a resolution at which a human operator can no longer recognize any anatomical structure or tissue class.
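One plausible way to emulate a coarser scanner is to average non-overlapping blocks of voxels; this is an assumption, since the exact resampling procedure is not described here. Going from 0.7 mm to roughly 10 mm corresponds to a factor of about 14 per axis. The function name and the nested-list layout are hypothetical.

```python
from typing import List

# A 3D volume stored as nested lists indexed [z][y][x].
Volume = List[List[List[float]]]


def block_average(volume: Volume, factor: int) -> Volume:
    """Downsample by averaging non-overlapping factor x factor x factor blocks.

    Trailing voxels that do not fill a complete block are dropped.
    """
    if factor < 1:
        raise ValueError("factor must be >= 1")
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    out: Volume = []
    for z0 in range(0, nz - factor + 1, factor):
        plane = []
        for y0 in range(0, ny - factor + 1, factor):
            row = []
            for x0 in range(0, nx - factor + 1, factor):
                total = 0.0
                for z in range(z0, z0 + factor):
                    for y in range(y0, y0 + factor):
                        for x in range(x0, x0 + factor):
                            total += volume[z][y][x]
                # Mean intensity of the block becomes one coarse voxel.
                row.append(total / factor ** 3)
            plane.append(row)
        out.append(plane)
    return out
```

Label volumes cannot be averaged this way; a majority vote within each block would be the natural counterpart for ground-truth annotations.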

These results confirmed the wide range of potential applications and the complementarity of the four imaging modalities. By combining medical imaging techniques and an AI-based classifier, it was possible to segment intact, degraded, and white rot compartments automatically and nondestructively inside the wood. This represents an important breakthrough for visualizing these compartments and for quantifying and localizing them in the entire 3D volume of the vines (Fig. 3d).

Deciphering the relationship between inner tissue composition and external symptomatic histories: A step toward a reliable in-situ diagnosis

Non-destructive detection of GTDs in vineyards is currently only possible through observing foliar symptoms and vine mortality. Numerous studies rely on these proxies for phenotyping. Foliage is usually screened at specific periods of the year when Esca or Eutypa dieback symptoms occur, and this screening is usually repeated for one or several years. However, new leaves are produced each year, and symptoms may not recur in the following years, making any diagnosis unreliable at best and attempts to correlate the external and internal impacts of GTDs unsuccessful. Here, foliar symptoms were recorded yearly for twenty years, starting when the plot was planted in 1999 (Fig. 4a). Together with the accurate quantification and localization of degraded and non-degraded compartments in trunks, this long record allowed more advanced investigations.

Deciphering the relationship between inner tissue degradation and external foliar symptoms. (a) Left: Detailed history of external GTD symptom expression. Right: classification of vines based on their external sanitary status, considering either year 2019 only or the complete symptom history. (b) and (c) Internal tissue contents of the trunks. Vines are grouped per phenotypic categories, based either on the single 2019 observation or the complete 1999–2019 symptom history. Tissue percentages are calculated from the upper 25 cm of the trunk. (d) Comparison of phenotypic categories for the white rot and intact tissues distribution (mean and interval) along the trunk. Position 0 cm corresponds to the top of the trunk and initiation of branches (i.e., > 0 in branches; < 0 in trunk). (e) Comparison of vines for intact and white rot tissue contents in the region − 2 to + 2 cm (last 2 cm of the trunk and first 2 cm of the branches).

Half of the vines would have been misclassified as ‘healthy’ plants if the foliar symptoms observed in 2019 had been used as the sole markers, despite harboring significantly degraded internal tissues (Fig. 4b). Indeed, despite the absence of leaf symptoms, these vines contained large volumes of deteriorated wood (up to 623 cm³ of degraded tissues and 281 cm³ of white rot) (Table S5). Relying on the foliar symptom proxy would have led to different, and erroneous, diagnoses each year, confirming its unreliability (Fig. 4a). Correlations between foliar symptoms observed in 2019 and total internal contents were very weak (correlation coefficients of − 0.25, 0.27, and 0.18 for intact, degraded, and white rot, respectively). In contrast, internal tissue contents were better explained by categories considering the complete vine history (Fig. 4c). For example, the sum of foliar symptoms detected during the vine’s life was strongly correlated with the composition of internal tissues (correlation coefficients of − 0.87, 0.79, and 0.84 for intact, degraded, and white rot, respectively), and the correlation between inner contents and the date of the first foliar symptom expression was also high (− 0.87 for intact and 0.91 for white rot) (Table S6). The health status of plants should, therefore, be evaluated from leaf symptoms only over an extended monitoring period.
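The contrast between a single-year snapshot and the cumulative symptom history can be sketched with a Pearson correlation. Whether the study used Pearson or a rank-based coefficient is not stated here, so Pearson is an assumption, as are the data layout (one list of yearly booleans per vine) and the function names. Note that a signed value such as −0.87 is a correlation coefficient r; the coefficient of determination R² = r² is never negative.

```python
from math import sqrt
from typing import List, Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation undefined for a constant sequence")
    return sxy / sqrt(sxx * syy)


def cumulative_symptoms(history: Sequence[Sequence[bool]]) -> List[int]:
    """history[v][y] is True if vine v showed foliar symptoms in year y."""
    return [sum(years) for years in history]


def symptoms_in_year(history: Sequence[Sequence[bool]], year_index: int) -> List[int]:
    """Single-year snapshot (1 = symptomatic) for every vine."""
    return [int(years[year_index]) for years in history]
```

With one row per vine, `pearson_r(cumulative_symptoms(history), white_rot_fraction)` would correspond to the whole-history comparison, and `symptoms_in_year(history, -1)` to the 2019-only snapshot.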

As illustrated by 3D reconstructions (Fig. 3d), degraded and white rot compartments were mostly continuous and located at the top of the vine trunk. This result was consistent with previous reports and with the positioning of most pruning wounds, which are considered entry points for the fungal pathogens causing GTDs1. Beyond this general pattern, the distribution and volumes of the three tissue classes helped distinguish different degrees of disease severity (Fig. 4d). In particular, the tissue content located in the last upper centimeters of the trunk and at the insertion point of branches allowed efficient discrimination of the vine condition (Fig. 4e). On the one hand, the proportion of intact tissues detected in this region discriminated between vines with mild forms of the disease (intact > 30%) and vines at more advanced stages (< 30%). On the other hand, the proportion of white rot distinguished the healthiest vines (white rot < 8%), more affected ones (8–15%), and those facing critical stages (> 15%). The volume of degraded tissues and the positioning of intact and white rot tissues allowed the diagnosis to be fine-tuned (Fig. S1).
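The window-based reading of Fig. 4e can be sketched in two steps: pool the voxel counts of each tissue class between −2 and +2 cm around the top of the trunk, then compare the resulting fractions with the thresholds given above (intact > 30%; white rot < 8%, 8–15%, > 15%). The per-position profile format, the handling of values lying exactly on a threshold, and the function names are assumptions for illustration.

```python
from typing import Dict, Tuple

# Hypothetical per-slice profile: position in cm (0 = top of the trunk,
# negative = trunk, positive = branches) -> {tissue class: voxel count}.
Profile = Dict[float, Dict[str, int]]


def window_fractions(profile: Profile, lo: float = -2.0, hi: float = 2.0) -> Dict[str, float]:
    """Fraction of each wood class among voxels with lo <= position <= hi."""
    totals = {"intact": 0, "degraded": 0, "white_rot": 0}
    for pos, counts in profile.items():
        if lo <= pos <= hi:
            for tissue in totals:
                totals[tissue] += counts.get(tissue, 0)
    n = sum(totals.values())
    if n == 0:
        raise ValueError("no wood voxels in the window")
    return {t: c / n for t, c in totals.items()}


def grade(fractions: Dict[str, float]) -> Tuple[str, str]:
    """Apply the intact and white rot thresholds separately.

    Boundary handling (exactly 30% or 15%) is an assumption.
    """
    intact_grade = "mild" if fractions["intact"] > 0.30 else "advanced"
    wr = fractions["white_rot"]
    if wr < 0.08:
        wr_grade = "healthiest"
    elif wr <= 0.15:
        wr_grade = "more affected"
    else:
        wr_grade = "critical"
    return intact_grade, wr_grade
```

Reporting the two grades separately, rather than merging them, mirrors the text: intact content separates mild from advanced cases, while white rot content adds a finer three-level scale.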

Non-destructive imaging and 3D modeling offered the possibility to access both internal tissue contents and spatial information without harming the plant (Fig. 5). In vines suffering from advanced stages of trunk diseases, white rot tissues were surrounded by degraded tissues; thin areas of intact functional tissues were limited to the periphery of trunk tops. As illustrated in Fig. 5, abiotic stresses such as a fresh wound can also greatly impact the functionality of surrounding tissues and affect plant survival even more.

3D visualization of internal tissue contents: example of a specimen at the critical stage of vine decline. (a) Original external view of the vine. (b) Combining MRI data volume rendering and white rot model. (c) 3D representation of the intact (green), degraded (orange) and white rot (red) compartments inside the trunk.

Discussion

A new method for non-destructive detection of wood diseases

Certain plant diseases are mostly undetectable until advanced stages are reached; this is the case for GTDs, which can currently be detected only through destructive techniques or by observing erratically expressed foliar symptoms. A reliable, non-destructive detection method has frequently been called for1,25,26,27. To that end, we developed an innovative approach to nondestructively measure healthy and unhealthy tissues in living perennial vines. We combined (1) non-destructive 3D imaging techniques, (2) a registration pipeline for multimodal data, and (3) a machine-learning-based model for voxel classification. We were able to determine the level of tissue degradation voxel-wise, and to accurately segment, visualize, and quantify healthy and unhealthy compartments in the plants.

Among the imaging modalities tested, MRI has already proved relevant for assessing tissue functions in grapevine19 and in several applications in living plants28. In a recent study, MRI surprisingly failed to distinguish healthy and necrotic tissues in grapevine trunk samples20. Several differences could explain this discrepancy: we employed a larger number of plants, operated with a higher magnetic field strength and image resolution, and used a combination of three parameters (T1, T2, and PD) rather than only one (PD). Additionally, while their image analysis was limited to ten transversal slices, we conducted our analysis on the entire 3D volume of the trunk. Here, MRI was found particularly well suited for detecting early stages of wood degradation, characterized by a significant loss of signal (57–86%) in T1-w and T2-w protocols between intact and degraded tissue classes. Combining MRI modalities provided information on tissue functionality and water content. T1-w was efficient for anatomical discrimination, and T2-w highlighted phenomena associated with host-pathogen interactions such as reaction zones23. Interestingly, the T2-w signal dropped by approx. 60% between functional and nonfunctional tissues but increased by 110% between nonfunctional tissues and reaction zones. X-ray CT, on the other hand, was particularly efficient in detecting more advanced stages of wood deterioration characterized by a loss of structural integrity, highlighted by a 56% drop in X-ray absorbance between degraded and white rot tissues.
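Taking the approximate T2-w figures above at face value, chaining the two relative changes places reaction zones relative to functional tissue. This is simple arithmetic on the quoted percentages, with S denoting the mean T2-w signal (F: functional, NF: nonfunctional, RZ: reaction zone); these symbols are introduced here for convenience.

```latex
S_{\mathrm{NF}} \approx (1 - 0.60)\,S_{\mathrm{F}} = 0.40\,S_{\mathrm{F}}, \qquad
S_{\mathrm{RZ}} \approx (1 + 1.10)\,S_{\mathrm{NF}} = 2.10 \times 0.40\,S_{\mathrm{F}} \approx 0.84\,S_{\mathrm{F}}
```

On these numbers, reaction zones would show roughly twice the T2-w signal of nonfunctional wood while remaining about 16% below functional tissue.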

Multimodal imaging, together with the 4D registration step and machine learning to extract information, proved its efficacy: combining MRI and X-ray CT techniques significantly increased the quality of tissue segmentation. All possible imaging combinations were compared for their efficiency, making it possible to select the modality or modalities best suited to specific needs. For example, combining T1-w, T2-w, and X-ray was optimal for intact and degraded tissue detection, but T2-w alone also proved efficient should only one imaging modality be available. For white rot detection, combining T1-w and X-ray, or using X-ray alone, were the best options.

Given the small size of our training set, we used a strategy based on a Random Forest classifier trained on the outputs of predefined image processing filters. Although we reached high accuracy, collecting more data would permit the use of deep learning tools, which could help achieve higher predictive accuracy and robustness.
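As an illustration of a classifier fed with filter outputs rather than raw intensities alone, the sketch below builds, for each modality, the raw voxel value plus the mean and variance of its 3 × 3 × 3 neighborhood. The actual filter bank used in the study is not specified in this section; these two filters, the border clipping, and the function names are assumptions.

```python
from typing import List, Tuple

# A 3D volume stored as nested lists indexed [z][y][x].
Volume = List[List[List[float]]]


def local_mean_and_var(volume: Volume, z: int, y: int, x: int,
                       radius: int = 1) -> Tuple[float, float]:
    """Mean and (population) variance over a cubic neighborhood.

    The neighborhood is clipped at the volume borders.
    """
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    vals = [
        volume[k][j][i]
        for k in range(max(0, z - radius), min(nz, z + radius + 1))
        for j in range(max(0, y - radius), min(ny, y + radius + 1))
        for i in range(max(0, x - radius), min(nx, x + radius + 1))
    ]
    m = sum(vals) / len(vals)
    v = sum((a - m) ** 2 for a in vals) / len(vals)
    return m, v


def voxel_features(volumes: List[Volume], z: int, y: int, x: int,
                   radius: int = 1) -> List[float]:
    """Raw value, local mean, and local variance for each modality in turn."""
    feats: List[float] = []
    for vol in volumes:
        m, v = local_mean_and_var(vol, z, y, x, radius)
        feats.extend([vol[z][y][x], m, v])
    return feats
```

With four modalities this yields twelve features per voxel; adding further filters (for instance, larger radii) lengthens the vector without changing the Random Forest setup.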

In grapevine, MRI and X-ray CT were recently tested for GTDs detection but applied separately and on different wood samples20. Here we collected multimodal 3D data on whole trunks of aged plants and developed a pipeline for automatic analysis. Although vines were cut up to gather data to train and evaluate the classifier, this approach is now feasible without harming the plants (Fig. 1). It opens several exciting prospects for diagnosis and applications to other plants and complex diseases.

GTD indicators based on internal tissue degradation rather than external foliar symptoms

New light was shed on classical monitoring studies when we compared foliar symptom histories with internal degradation. Although previous reports showed that necrosis volumes could be linked to the probability of Esca leaf symptom occurrence, and white rot volumes to apoplectic forms5,6,7,25, only weak correlations between tainted or necrotic tissue contents and foliar symptoms were generally observed4,29,30. In most studies, plants are considered 'healthy' if they do not express any foliar symptoms during the experiment, which generally lasts just one or two years. Our results confirmed that the appearance of foliar symptoms in a given year is not linked to the volume of internal wood degradation and that a single foliar symptom expression is not a reliable marker of the plant’s actual health status in the GTD context. Where quantifying the extent of internal tissue degradation proves unfeasible, phenotyping of vine tolerance to GTDs must rely on consistent and prolonged monitoring of foliar symptom expression. Assessments based solely on a limited period of foliar symptom observation will result in misleading interpretations.

Here we considered that the internal tissue composition (intact, degraded, white rot) better reflects the severity of the disease affecting the vines and their actual condition. It seemed particularly relevant for asymptomatic vines: half of them, harboring large volumes of unhealthy tissues, would have been erroneously categorized as "healthy" plants using the foliar symptom proxy. Indeed, asymptomatic vines could have reached advanced stages of GTDs, while symptomatic ones could be relatively unharmed. In such cases, foliar symptoms-based diagnosis is unreliable; internal tissue content is the only reliable proxy of plant health. Necrotic and decay compartments are intuitively more stable indicators than foliage symptoms: once tissues have suffered irreversible damage, they will clearly remain nonfunctional.

An internal tissue-based model for accurate GTD diagnosis

A model based on the quality, quantity, and position of internal tissues could be proposed for an accurate diagnosis. Different stages of trunk damage could be distinguished: ‘low’ damage is characterized by low volumes of altered tissue; ‘moderate’ damage by significant degraded and decayed contents but still a fair amount of peripheral intact tissue; and ‘critical’ damage by very limited areas of intact tissue (Fig. 6). These stages could be evaluated directly in the field through non-destructive imaging, allowing a reliable diagnosis in living specimens.

In this study, we simulated performance at lower resolutions, with voxel sizes up to 10 mm, to reflect the capabilities of average portable imaging devices. Although we did not address all possible changes in image quality, this approach is a first step toward estimating performance in “real-world” applications where access to high-resolution imaging devices is limited. Lower resolutions do affect the performance of our pipeline, but our results suggest potential for wider application in field conditions, making this technique more accessible and practical. Future studies should explore methods to optimize accuracy at these reduced resolutions, thereby extending the utility of portable imaging devices in a variety of settings.

Based on trunk cross-sections, a threshold value of 10% white rot in branches has been proposed as a predictor for the chronic form of Esca7,25. This value could be a proper threshold between the low and moderate phases defined here, while 20% white rot would be the threshold into the critical stage. However, intact tissues should also be considered: a minimum of 30% intact tissues located in the last centimeters of the trunk could be proposed as a threshold for critical status.
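The staging rule proposed above can be written as a small decision function. It assumes the white rot fraction is measured in the branches (as for the cited 10% predictor) and the intact fraction in the last centimeters of the trunk; the cut-offs are exposed as parameters because thresholds may need adjusting per grapevine variety. Function and parameter names are hypothetical.

```python
def damage_stage(white_rot_frac: float, intact_frac: float,
                 low_max_wr: float = 0.10, critical_wr: float = 0.20,
                 min_intact: float = 0.30) -> str:
    """Map tissue fractions (0..1) to 'low', 'moderate' or 'critical'.

    white_rot_frac: white rot fraction (assumed measured in the branches).
    intact_frac: intact fraction in the last centimeters of the trunk.
    """
    # Either too much white rot or too little intact tissue is critical.
    if white_rot_frac >= critical_wr or intact_frac < min_intact:
        return "critical"
    if white_rot_frac >= low_max_wr:
        return "moderate"
    return "low"
```

Checking the critical conditions first means a vine with little white rot but almost no intact tissue is still flagged as critical, in line with the argument that intact tissue must also be considered.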

Here, all necrotic tissues were grouped in a single degraded class, but defining more tissue classes (e.g., different types of necrosis) could enable studying each wood disease separately.

Grapevine is a tortuous liana in which both the proportion and the configuration of tissues are highly irregular along the trunk and among plants. Thresholds for transitioning from one stage to the next would probably need to be established for each grapevine variety. Given their vigor and capacity to produce new functional tissue every year, some varieties might cope with large volumes of unhealthy tissue while maintaining sufficient physiological and hydraulic function. Other factors, such as the environment, fungal pathogens, and pruning mode, might also influence a plant’s capacity to survive with very limited intact tissue1, and their impact could be measured using this novel non-destructive approach.

Predicting the course of diseases and assisting management strategies

The quantity and position of healthy and unhealthy tissues could be useful for predicting how a plant's sanitary status will evolve. It would be tempting to predict that an asymptomatic vine might soon develop symptoms based on its intact tissue content and its proximity to neo-symptomatic vines (Fig. 4e). Considering white rot and degraded tissues, we could also estimate that, among the asymptomatic-resilient vines, one would likely survive several more years, while others would likely die within a few years. Additional, larger-scale data are required to confirm the effectiveness of these proxies for accurate predictive diagnosis of individual vines. However, multimodal imaging has already proved relevant for diagnosing the current status of the vines in this study.

White rot removal with a small chainsaw, a technique known as curettage, has been proposed to extend the life of seriously affected vines. Currently under evaluation, curettage is particularly aggressive: it is applied ‘blindly’ and causes great damage to the plant31. A non-destructive imaging-based approach could support precision surgery by revealing the exact location and volume of diseased tissue to be removed, allowing the inner compartments to be reached with minimal damage. It could also enable in vivo assessment of the long-term effectiveness of curettage and of new automated pruning techniques32.

Finally, non-destructive, in vivo monitoring of internal tissue contents could help identify plants that require urgent intervention (e.g., local treatment, curettage, or surveillance) and prioritize replacements within plots, facilitating vineyard management.

Conclusion

By providing direct access to internal tissue degradation in living plants, non-destructive imaging and AI-based image analysis offer new insights into complex diseases affecting woody plants. These results open the way to a wide range of new in vivo and time-lapse studies. For example, physiological responses to wounding and pathogen infection could be monitored at the tissue level to search for varietal tolerance. At the individual level, long-term surveillance of healthy, necrotic, and decayed tissues could refine prediction models, enable the evaluation of potential curative solutions, and facilitate the management of agricultural operations.

In grapevine, the enigmatic origin of Esca foliar symptoms and the influence of environmental factors on trunk disease development could probably be investigated more efficiently than with traditional destructive methods. Previous studies based on a limited number of foliar symptom observations may have led to misinterpretations and to the misclassification of varieties for their tolerance to GTDs, and could be revisited. Where no alternative is available, foliar symptoms should be interpreted with extreme caution, and only after several years of monitoring.

In medicine, imaging is often dedicated to individual patients, whereas plants are generally considered at the population level. In viticulture, however, plots are perennial, and each vine represents a long-term financial investment. Individual, non-destructive diagnosis is therefore of great interest for vineyard sustainability, aiding decisions such as targeting a local treatment or replacing specific individuals. Long-term, complex diseases are also generally more difficult to manage.

Conceiving virtual digital twins21 of living plants would allow complex diseases to be monitored, their evolution to be modeled, and the impact of novel solutions to be assessed at different scales. Placing imaging at the bedside of plants offers great promise and exciting prospects that could help define next-generation management practices.

Materials and methods

Plants

A vineyard was planted in 1999 in Champagne, France, with Vitis vinifera L. cv. Chardonnay grafted onto 41B rootstock, using the traditional Chablis pruning system. Each vine was monitored yearly by the Comité Champagne for foliar symptom (FS) expression of GTDs, including Esca, Black dead arm, Botryosphaeria, and Eutypa diebacks. Observations were performed at different periods during the growing season to optimize the detection of the different GTDs, if present.

Based on FS observed in 2019, vines were considered asymptomatic (healthy) or symptomatic (sick). Based on their whole FS history, vines were then sub-classified as follows:

(1) Asymptomatic-always: vines that never expressed any FS.
(2) Asymptomatic-resilient: vines that expressed FS in previous years but not in 2019.
(3) Symptomatic-neo: vines that expressed FS for the first time in 2019.
(4) Symptomatic-apoplectic: vines that died suddenly from typical apoplexy a few days before being collected.
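The four subclasses amount to a simple rule applied to each vine's yearly FS record. The sketch below is a hypothetical encoding, assuming the record is stored as a mapping from survey year to a boolean and that sudden death by apoplexy is recorded separately; vines symptomatic both in 2019 and in earlier years fall outside the four subclasses and are returned as `None`.

```python
from typing import Mapping, Optional


def fs_subclass(fs_by_year: Mapping[int, bool],
                died_of_apoplexy: bool = False,
                current_year: int = 2019) -> Optional[str]:
    """Assign a vine to one of the four history-based subclasses (hypothetical helper).

    fs_by_year maps each survey year to True if foliar symptoms (FS) were observed.
    Returns None for histories outside the four subclasses.
    """
    if died_of_apoplexy:
        return "symptomatic-apoplectic"
    fs_now = fs_by_year.get(current_year, False)
    # Any FS recorded in a year before the reference year
    fs_before = any(fs for year, fs in fs_by_year.items() if year < current_year)
    if not fs_now:
        return "asymptomatic-resilient" if fs_before else "asymptomatic-always"
    if not fs_before:
        return "symptomatic-neo"
    return None  # symptomatic now and before: not one of the four subclasses
```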

For our study, vines with different histories (three vines per subclass, 12 in total; Fig. 3a) were collected by hand from the vineyard on 19 August 2019. Plants were collected in an experimental plot managed by the Comité Champagne (Epernay, France) in accordance with French legislation, and the study did not involve any species at risk of extinction or any endangered species of wild fauna and flora. Branches and roots were cut approximately 15 cm from the trunk, and plants were individually packed in sealed plastic bags to prevent drying.

Multimodal imaging acquisitions

Multimodal imaging acquisitions, by Magnetic Resonance Imaging (MRI) and X-ray Computed Tomography (CT), were performed on each vine from the rootstock to the base of the branches. MRI acquisitions were performed with Tridilogy, Groupe CRP—Imaneo (http://www.tridilogy.com), with the help of radiologists from CRP/Groupe Vidi at the Clinique du Parc (Castelnau-le-Lez, France), using a 1.5 T Siemens Magnetom Aera scanner and a human head coil. Three acquisition sequences, T1-weighted (T1-w), T2-weighted (T2-w), and proton density-weighted (PD-w), were performed on each specimen:

(1) 3D T1 SPACE TSE, sagittal (thickness 0.6 mm, DFOV 56.5 × 35 cm, 320 images, NEX 1, EC 1, FA 120°, TR 500 ms, TE 4.1 ms, acquisition matrix 256 × 256).
(2) 3D T2 SPACE, sagittal (thickness 0.9 mm, DFOV 57.4 × 35.5 cm, 160 images, NEX 2, EC 1, FA 160°, TR 1100 ms, TE 129 ms, acquisition matrix 384 × 273).
(3) Axial proton density fat-sat TSE Dixon (thickness 5 mm, spacing 6.5 mm, DFOV 57.2 × 38 cm, 40 images, NEX 1, EC 1, FA 160°, TR 3370 ms, TE 21 ms, acquisition matrix 314 × 448).

X-ray CT acquisitions were performed at the Montpellier RIO Imaging platform (Montpellier, France, http://www.mri.cnrs.fr/en/) on an EasyTom 150 kV microtomograph (RX Solutions). 3D volumes were reconstructed using XAct software (RX Solutions), resulting in approximately 2500 images per specimen at a resolution of 177 µm/voxel. Geometry, spot, and ring artifacts were corrected using the default correction settings when necessary.

Plant slicing and photographic acquisition

After MRI and X-ray CT acquisitions, plants were individually placed in rigid PVC tubes, embedded in a fast-setting polyurethane foam filler, and cut into 6 mm-thick cross-sections using a bandsaw (Fig. 1, step 3). The saw kerf (the material lost at each cut) was approximately 1 mm. Marks were placed on the tubes to ensure regular slicing, and three rigid plastic sticks of different diameters were molded together with the vines to serve as landmarks for realignment. Both faces of each cross-section were then photographed in a photography studio under artificial light, using a tripod, a digital camera (Canon 500D), and a fixed focal length lens (EF 50 mm f/1.4 USM) to limit aberration and distortion. Approximately 120 pictures per plant were collected and registered into a coherent 3D photographic volume based on the landmarks.
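Because each cut consumes about 1 mm of material, consecutive 6 mm sections are spaced roughly 7 mm apart along the cutting axis. The sketch below (hypothetical function name) computes the nominal axial positions of the two faces of each section from these two values. In the study the photographs were aligned using the molded landmarks, so positions like these could at most serve as an initial guess; real slices also deviate from the nominal thickness.

```python
from typing import List, Tuple


def nominal_face_positions(n_sections: int,
                           section_thickness_mm: float = 6.0,
                           kerf_mm: float = 1.0) -> List[Tuple[float, float]]:
    """Nominal axial positions (mm) of the first and second face of each section.

    Section k begins after k sections and k saw cuts; its second face lies one
    section thickness further along the cutting axis.
    """
    pitch = section_thickness_mm + kerf_mm  # spacing between consecutive sections
    return [(k * pitch, k * pitch + section_thickness_mm) for k in range(n_sections)]


print(nominal_face_positions(3))  # [(0.0, 6.0), (7.0, 13.0), (14.0, 20.0)]
```

With these nominal values, about 120 photographs (two faces per section) would correspond to roughly 60 sections spanning about 42 cm; this is simple arithmetic on the stated values, not a reported measurement.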

Data preprocessing: 4D multimodal registration

For each vine, 3D data from all modalities (MRI T1-w, T2-w and PD-w, X-ray CT, and 3D photographic volumes) were registered using Fijiyama22 and combined into a single 4D multimodal image (voxel size = 0.68 mm × 0.68 mm × 0.60 mm) (Fig. 1, step 4). To compensate for possible magnetic field biases, which are generally present at the edges of the field of view during MRI acquisitions, control points were added manually to facilitate the estimation of non-linear compensations during 4D registration.

The registration accuracy was validated using manually placed landmarks (167 pairs) distributed across the different modalities. Compared with the MRI and X-ray CT modalities, the photographic volume contained fewer images and slight geometric distortions due to slicing irregularities. Nevertheless, the average registration mismatch between photographs and the other modalities was estimated at 1.42 ± 0.98 mm (mean ± standard deviation), which was accurate enough to allow experts to annotate tissues manually, directly on the 4D multimodal images (see below).
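The mismatch figure above summarizes distances between paired landmarks. A minimal sketch of that computation, assuming each landmark pair is stored as voxel coordinates in the 4D image and converted to millimetres with the reported voxel size; the function name is hypothetical, and whether the reported dispersion is a sample or population standard deviation is not stated, so the sample version used here is an assumption.

```python
import math
import statistics
from typing import Sequence, Tuple

Point = Tuple[float, float, float]

# Voxel size (x, y, z) in mm of the 4D multimodal images described above
VOXEL_SIZE_MM = (0.68, 0.68, 0.60)


def registration_mismatch(pairs: Sequence[Tuple[Point, Point]],
                          voxel_size: Tuple[float, float, float] = VOXEL_SIZE_MM
                          ) -> Tuple[float, float]:
    """Mean and sample standard deviation (mm) of distances between paired landmarks.

    Each pair holds the voxel coordinates of the same anatomical point as placed
    in two registered modalities (e.g. photograph vs. MRI). Needs at least two pairs.
    """
    distances = []
    for a, b in pairs:
        # Scale each voxel offset to millimetres, then take the Euclidean norm
        d = math.sqrt(sum(((ai - bi) * s) ** 2 for ai, bi, s in zip(a, b, voxel_size)))
        distances.append(d)
    return statistics.mean(distances), statistics.stdev(distances)
```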

Preliminary investigation of multimodal signals

An initial signal study was conducted on eighty-four cross-sections randomly sampled from three vines. Tissues were first classified into six classes based on their visual appearance (Fig. 2a): (i) healthy-looking tissues showing no sign of degradation; (ii) black punctuations corresponding to clogged vessels; (iii) reaction zones, as described earlier23; (iv) dry tissues resulting from pruning injuries; (v) degraded tissues, including several types of necrotic tissue; and (vi) white rot. When X-ray CT and MRI images were also considered, experts could distinguish intact functional from intact nonfunctional tissues within the healthy-looking class, resulting in seven tissue classes in total (Fig. 2a,c). Moreover, some healthy-looking tissues showed specific MRI hypo- or hyper-signals and were reclassified as reaction zones (Fig. 2c). For these particular classes, alteration of the wood's appearance was not always visible by direct observation of the cross-sections.

Finally, multiple regions of interest (ROIs) were delineated by hand on the multimodal images and assigned to one of the seven tissue classes. For each selected voxel, values were gathered simultaneously from the four modalities (X-ray CT, T1-w, T2-w, and PD-w; 77,488 values in total) using the registered multimodal images. The data were processed using R (v3.5.3) and the RStudio interface (v1.2.5001). Results are summarized in Fig. 2d. The significance of differences between the seven tissue classes was tested within each modality using Tukey tests at a 95% family-wise confidence level.
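The statistics in the study were computed in R; the Python sketch below only illustrates the data layout implied by the text, with one (class, four-modality signal) record per ROI voxel, and summarizes each modality per tissue class as mean and standard deviation. The function name and record format are assumptions. The per-modality Tukey comparisons themselves could be run in R with, for example, `TukeyHSD(aov(value ~ class, data = d))` on the values of one modality.

```python
import statistics
from collections import defaultdict
from typing import Dict, Iterable, Tuple

MODALITIES = ("X-ray CT", "T1-w", "T2-w", "PD-w")


def summarize_rois(samples: Iterable[Tuple[str, Tuple[float, float, float, float]]]
                   ) -> Dict[str, Dict[str, Tuple[float, float]]]:
    """Mean and sample SD of each modality's signal, per tissue class.

    `samples` yields (tissue_class, (ct, t1, t2, pd)) for every ROI voxel, read at
    the same position in the registered images. Each class needs at least 2 voxels.
    """
    values: Dict[str, Dict[str, list]] = defaultdict(lambda: defaultdict(list))
    for tissue, signals in samples:
        # Route each of the four co-registered values to its modality
        for modality, value in zip(MODALITIES, signals):
            values[tissue][modality].append(value)
    return {
        tissue: {m: (statistics.mean(v), statistics.stdev(v)) for m, v in per_mod.items()}
        for tissue, per_mod in values.items()
    }
```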

Automatic tissue segmentation of the whole 3D datasets

For each plant, thirteen cross-sections were sampled and manually annotated to label the corresponding voxels. Five classes were defined: background, bark, intact tissues, degraded tissues, and white rot (Fig. 2a). Annotation was performed using the Trainable Segmentation plugin for Fiji33, which was extended to process multi-channel 3D images (see Data availability). As a result, a set of 81,454 annotated voxels distributed among the twelve 3D volumes was produced (Table S1).

We trained an algorithm to classify each voxel P_i = (x, y, z) of the images (20 million voxels per specimen), assigning it a class C_i among the five described above (Fig. 3a). Classification was performed using the Fast Random Forest algorithm implemented in the Trainable Segmentation plugin, chosen for its performance with 'small' training datasets (< 100,000 samples).

For each voxel, a feature vector X_i was built and then used by the classifier to predict the class Ĉ_i of the voxel P_i. The feature vector was enriched by applying image processing operators to the initial images of each imaging modality: local mean, local variance, edge detector, difference of Gaussians with varying sigma, local minimum, local maximum, and local median. These operators were parameterized using a scale factor taking values from 1 to 64 voxels.
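The idea of enriching each voxel with multi-scale local statistics can be illustrated on a 1D signal. This is a simplified sketch, not the Trainable Segmentation implementation: it works on 1D profiles instead of 3D volumes, covers only the mean, variance, minimum, maximum and median operators (edge detection and difference of Gaussians are omitted), assumes power-of-two scales between 1 and 64, and uses hypothetical function names.

```python
import statistics
from typing import Dict, List, Sequence


def window(signal: Sequence[float], i: int, radius: int) -> List[float]:
    """Values within `radius` samples of position i, clipped at the borders."""
    lo, hi = max(0, i - radius), min(len(signal), i + radius + 1)
    return list(signal[lo:hi])


def feature_vector(channels: Dict[str, Sequence[float]], i: int,
                   scales: Sequence[int] = (1, 2, 4, 8, 16, 32, 64)) -> Dict[str, float]:
    """Multi-scale local statistics at position i for every imaging channel.

    1D simplification of the 3D operators: one entry per (channel, operator, scale),
    plus the raw value of each channel.
    """
    features: Dict[str, float] = {}
    for name, signal in channels.items():
        features[f"{name}:raw"] = float(signal[i])
        for s in scales:
            w = window(signal, i, s)
            features[f"{name}:mean:{s}"] = statistics.fmean(w)
            features[f"{name}:variance:{s}"] = statistics.pvariance(w)
            features[f"{name}:min:{s}"] = min(w)
            features[f"{name}:max:{s}"] = max(w)
            features[f"{name}:median:{s}"] = statistics.median(w)
    return features
```

Each imaging channel contributes its own block of features, so a classifier trained on these vectors can draw on all modalities at once.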

Evaluation of classifier performance

Classifier performance was evaluated using a cross-validation strategy. In each fold, the annotated voxels were split into a training set and a validation set. The training set, grouping annotations from 10 plants, was used to train the classifier; the validation set, containing annotations from the two remaining plants, was used to assess the performance of the trained classifier. A global confusion matrix was computed from all 66 folds (Table S3). This matrix was used to compute global and class-specific accuracies and F1-scores (the harmonic mean of precision p and recall r34) for each class and for all possible combinations of imaging modalities (Fig. 3b and Table S2). F1-scores are generally considered a better indicator of performance because they reveal under- and over-estimation of a specific class more precisely.
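Holding out two of the twelve plants in every possible way gives exactly C(12, 2) = 66 splits, which matches the 66 folds reported; we assume this is how the folds were formed. The sketch below enumerates such splits and computes global accuracy and per-class F1 = 2pr/(p + r) from a confusion matrix. Function names are hypothetical, and classes never predicted or never present are given an F1 of 0 by convention here.

```python
from itertools import combinations
from typing import List, Sequence, Tuple


def leave_two_plants_out(plants: Sequence[str]) -> List[Tuple[List[str], List[str]]]:
    """All (training plants, validation plants) splits with two plants held out."""
    folds = []
    for held_out in combinations(plants, 2):
        train = [p for p in plants if p not in held_out]
        folds.append((train, list(held_out)))
    return folds


def per_class_scores(confusion: Sequence[Sequence[int]]) -> Tuple[float, List[float]]:
    """Global accuracy and per-class F1 from a confusion matrix.

    confusion[t][p] counts voxels of true class t predicted as class p.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    accuracy = sum(confusion[k][k] for k in range(n)) / total
    f1 = []
    for k in range(n):
        tp = confusion[k][k]
        predicted = sum(confusion[t][k] for t in range(n))  # column sum
        actual = sum(confusion[k])                          # row sum
        p = tp / predicted if predicted else 0.0
        r = tp / actual if actual else 0.0
        f1.append(2 * p * r / (p + r) if p + r else 0.0)
    return accuracy, f1
```

Summing the per-fold confusion matrices element-wise before scoring would yield a single global matrix of the kind summarized in Table S3.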

Tissue quantification and 3D volumes reconstruction

For further analysis, we considered only voxels corresponding to areas of interest, i.e., the intact, degraded, and white rot tissue classes. The number and location of these voxels were collected for tissue quantification and visualization. 3D views presented in Figs. 3d and 5 were produced using isosurface extraction and volume rendering routines from the VTK library35.
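Counting classified voxels and multiplying by the voxel volume gives tissue volumes. This minimal sketch assumes the 0.68 × 0.68 × 0.60 mm voxel of the 4D images (about 0.277 mm³ per voxel) and expresses percentages relative to the three classes of interest only, excluding background and bark; that choice of denominator and the function name are assumptions.

```python
from collections import Counter
from typing import Dict, Iterable

VOXEL_VOLUME_MM3 = 0.68 * 0.68 * 0.60  # voxel size of the 4D multimodal images
CLASSES_OF_INTEREST = ("intact", "degraded", "white rot")


def tissue_contents(labels: Iterable[str]) -> Dict[str, Dict[str, float]]:
    """Volume (cm^3) and share (%) of each class of interest among classified voxels."""
    # Background and bark voxels are ignored entirely
    counts = Counter(label for label in labels if label in CLASSES_OF_INTEREST)
    total = sum(counts.values())
    return {
        c: {"volume_cm3": counts[c] * VOXEL_VOLUME_MM3 / 1000.0,  # 1 cm^3 = 1000 mm^3
            "percent": 100.0 * counts[c] / total if total else 0.0}
        for c in CLASSES_OF_INTEREST
    }
```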

Relative positioning of tissue classes along the vines

To compare the position of intact, degraded, and white rot tissues across vines, we estimated the geodesic distance separating each voxel from a common reference point located at the center of the trunk, twenty centimeters below the trunk head. Using geodesic distances, we considered a region ranging from the last 20 cm of the trunk (position ‘−20’), through the top of the trunk (‘0’), to the first 5 cm of the branches (‘+5’) (Fig. S2). This computation allowed voxel populations located within the same distance range to be identified while accounting for the tortuous shape of the trunks.
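A geodesic distance is the length of the shortest path that stays inside the plant, which is why it follows the tortuous trunk where a straight-line distance would not. The sketch below computes it with Dijkstra's algorithm over a binary plant mask, using 6-connected steps weighted by the voxel size. It is an illustrative assumption, not necessarily the method used in the study; the function name, the mask layout `mask[z][y][x]`, and the neighbourhood are hypothetical choices.

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple

Voxel = Tuple[int, int, int]  # (x, y, z) indices


def geodesic_distances(mask: Sequence[Sequence[Sequence[bool]]], source: Voxel,
                       voxel_size: Tuple[float, float, float] = (0.68, 0.68, 0.60)
                       ) -> Dict[Voxel, float]:
    """Shortest in-plant path length (mm) from `source` to every reachable plant voxel.

    mask[z][y][x] is True for plant voxels; paths move between 6-connected
    neighbours and never leave the mask. `source` must be a plant voxel.
    """
    sx, sy, sz = voxel_size
    steps: List[Tuple[Voxel, float]] = [
        ((1, 0, 0), sx), ((-1, 0, 0), sx), ((0, 1, 0), sy),
        ((0, -1, 0), sy), ((0, 0, 1), sz), ((0, 0, -1), sz)]
    depth, height, width = len(mask), len(mask[0]), len(mask[0][0])
    dist: Dict[Voxel, float] = {source: 0.0}
    heap = [(0.0, source)]
    while heap:
        d, (x, y, z) = heapq.heappop(heap)
        if d > dist[(x, y, z)]:
            continue  # stale entry: a shorter path was already found
        for (dx, dy, dz), cost in steps:
            n = (x + dx, y + dy, z + dz)
            if (0 <= n[0] < width and 0 <= n[1] < height and 0 <= n[2] < depth
                    and mask[n[2]][n[1]][n[0]]):
                nd = d + cost
                if nd < dist.get(n, math.inf):
                    dist[n] = nd
                    heapq.heappush(heap, (nd, n))
    return dist
```

With 6-connectivity, diagonal paths are measured as staircases and therefore overestimated; a 26-connected neighbourhood with diagonal step costs would approximate true path lengths more closely. Mapping these distances onto the −20 to +5 cm axis of Fig. S2 would additionally require knowing on which side of the reference point each voxel lies, which this sketch does not handle.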

Simulation of performance at lower resolutions

Test images were built by sub-sampling the images to simulate the resolution of a typical portable imaging device, resulting in voxel sizes ranging from 0.7 mm (original resolution) up to 10 mm. The corresponding annotated samples were converted accordingly, retaining the most represented label within each low-resolution voxel. The classifier was then trained and tested on these low-resolution sample sets.
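Retaining the most represented label within each coarse voxel is a block-wise majority vote. A minimal sketch on a 3D label grid, assuming an integer downsampling factor applied equally along all axes (the original voxels are slightly anisotropic, so reaching 10 mm exactly would need per-axis factors); the function name is hypothetical, and ties are broken by the first label encountered in the block, an arbitrary choice. How the image intensities themselves were sub-sampled is not specified and is not shown.

```python
from collections import Counter
from typing import List, Sequence


def downsample_labels(labels: Sequence[Sequence[Sequence[str]]], factor: int
                      ) -> List[List[List[str]]]:
    """Majority-label downsampling of a 3D label grid labels[z][y][x].

    Each output voxel covers a factor x factor x factor block (partial blocks at
    the borders are kept) and takes the most frequent label in that block.
    """
    nz, ny, nx = len(labels), len(labels[0]), len(labels[0][0])
    out = []
    for z0 in range(0, nz, factor):
        plane = []
        for y0 in range(0, ny, factor):
            row = []
            for x0 in range(0, nx, factor):
                block = Counter(labels[z][y][x]
                                for z in range(z0, min(z0 + factor, nz))
                                for y in range(y0, min(y0 + factor, ny))
                                for x in range(x0, min(x0 + factor, nx)))
                row.append(block.most_common(1)[0][0])  # majority label
            plane.append(row)
        out.append(plane)
    return out
```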

Data availability

The datasets generated and analyzed during the current study are available from the corresponding author upon reasonable request. The extension of the Trainable Segmentation plugin is open source and available as a fork of Trainable Segmentation on GitHub: https://github.com/Rocsg/Trainable_Segmentation/tree/Hyperweka.

References

Claverie, M., Notaro, M., Fontaine, F. & Wery, J. Current knowledge on Grapevine Trunk diseases with complex etiology: A systemic approach. Phytopathol. Mediterr. 59, 29–53. https://doi.org/10.14601/Phyto-11150 (2020).

Guerin-Dubrana, L., Fontaine, F. & Mugnai, L. Grapevine trunk disease in European and Mediterranean vineyards: Occurrence, distribution and associated disease-affecting cultural factors. Phytopathol. Mediterr. 58, 49–71. https://doi.org/10.14601/Phytopathol_Mediterr-25153 (2019).

Bortolami, G. et al. Exploring the hydraulic failure hypothesis of esca leaf symptom formation. Plant Physiol. 181, 1163–1174. https://doi.org/10.1104/pp.19.00591 (2019).

Mugnai, L., Graniti, A. & Surico, G. Esca (black measles) and brown wood-streaking: Two old and elusive diseases of grapevines. Plant Dis. 83, 404–418. https://doi.org/10.1094/PDIS.1999.83.5.404 (1999).

Lecomte, P. et al. New insights into esca of grapevine: The development of foliar symptoms and their association with xylem discoloration. Plant Dis. 96, 924–934. https://doi.org/10.1094/PDIS-09-11-0776-RE (2012).

Péros, J. P., Berger, G. & Jamaux-Despréaux, I. Symptoms, wood lesions and fungi associated with esca in organic vineyards in Languedoc–Roussillon (France). J. Phytopathol. 156, 297–303. https://doi.org/10.1111/j.1439-0434.2007.01362.x (2008).

Maher, N. et al. Wood necrosis in esca-affected vines: Types, relationships and possible links with foliar symptom expression. OENO One. https://doi.org/10.20870/oeno-one.2012.46.1.1507 (2012).

Reis, P. et al. Vitis methods to understand and develop strategies for diagnosis and sustainable control of grapevine trunk diseases. Phytopathology 109, 916–931. https://doi.org/10.1094/PHYTO-09-18-0349-RVW (2019).

Hiltunen, S. et al. Characterization of the decay process of Scots pine caused by Coniophora puteana using NMR and MRI. Holzforschung 74, 1021–1032. https://doi.org/10.1515/hf-2019-0246 (2020).

Pearce, R. B., Sümer, S., Doran, S. J., Carpenter, T. A. & Hall, L. D. Non-invasive imaging of fungal colonization and host response in the living sapwood of sycamore (Acer pseudoplatanus L.) using nuclear magnetic resonance. Physiol. Mol. Plant Pathol. 45, 359–384. https://doi.org/10.1016/s0885-5765(05)80065-7 (1994).

Van den Bulcke, J., Boone, M., Van Acker, J. & Van Hoorebeke, L. Three-dimensional X-ray imaging and analysis of fungi on and in wood. Microsc. Microanal. 15, 395–402. https://doi.org/10.1017/S1431927609990419 (2009).

Hervé, V., Mothe, F., Freyburger, C., Gelhaye, E. & Frey-Klett, P. Density mapping of decaying wood using X-ray computed tomography. Int. Biodeterior. Biodegrad. 86, 358–363. https://doi.org/10.1016/j.ibiod.2013.10.009 (2014).

Hamada, J. et al. Variations in the natural density of European oak wood affect thermal degradation during thermal modification. Ann. For. Sci. 73, 277–286. https://doi.org/10.1007/s13595-015-0499-0 (2016).

Li, W. et al. Relating MOE decrease and mass loss due to fungal decay in plywood and MDF using resonalyser and X-ray CT scanning. Int. Biodeterior. Biodegrad. 110, 113–120. https://doi.org/10.1016/j.ibiod.2016.03.012 (2016).

Milien, M., Renault-Spilmont, A.-S., Cookson, S. J., Sarrazin, A. & Verdeil, J.-L. Visualization of the 3D structure of the graft union of grapevine using X-ray tomography. Sci. Horticult. 144, 130–140. https://doi.org/10.1016/j.scienta.2012.06.045 (2012).

Brodersen, C. R., Knipfer, T. & McElrone, A. J. In vivo visualization of the final stages of xylem vessel refilling in grapevine (Vitis vinifera) stems. New Phytol. 217, 117–126. https://doi.org/10.1111/nph.14811 (2018).

Czemmel, S. et al. Genes expressed in grapevine leaves reveal latent wood infection by the fungal pathogen Neofusicoccum parvum. PLoS ONE 10, e0121828. https://doi.org/10.1371/journal.pone.0121828 (2015).

Earles, J. M. et al. In vivo quantification of plant starch reserves at micrometer resolution using X-ray microCT imaging and machine learning. New Phytol. https://doi.org/10.1111/nph.15068 (2018).

Bouda, M., Windt, C. W., McElrone, A. J. & Brodersen, C. R. In vivo pressure gradient heterogeneity increases flow contribution of small diameter vessels in grapevine. Nat. Commun. https://doi.org/10.1038/s41467-019-13673-6 (2019).

Vaz, A. T. et al. Precise nondestructive location of defective woody tissue in grapevines affected by wood diseases. Phytopathol. Mediterr. 59, 441–451. https://doi.org/10.14601/Phyto-11110 (2020).

Laubenbacher, R., Sluka, J. P. & Glazier, J. A. Using digital twins in viral infection. Science 371, 1105–1106. https://doi.org/10.1126/science.abf3370 (2021).

Fernandez, R. & Moisy, C. Fijiyama: A registration tool for 3D multimodal time-lapse imaging. Bioinformatics 37, 1482–1484. https://doi.org/10.1093/bioinformatics/btaa846 (2021).

Pearce, R. B. Decay development and its restriction in trees. Arboricult. Urban For. 26, 1–11. https://doi.org/10.48044/jauf.2000.001 (2000).

Witten, I. H., Frank, E., Hall, M. A. & Pal, C. J. Data mining, fourth edition: Practical machine learning tools and techniques (Morgan Kaufmann Publishers Inc., 2016).

Gramaje, D., Urbez-Torres, J. R. & Sosnowski, M. R. Managing grapevine trunk diseases with respect to etiology and epidemiology: Current strategies and future prospects. Plant Dis. 102, 12–39. https://doi.org/10.1094/PDIS-04-17-0512-FE (2018).

Ouadi, L. et al. Ecophysiological impacts of Esca, a devastating grapevine trunk disease, on Vitis vinifera L. PLoS ONE 14, e0222586. https://doi.org/10.1371/journal.pone.0222586 (2019).

Mondello, V. et al. Grapevine trunk diseases: A review of fifteen years of trials for their control with chemicals and biocontrol agents. Plant Dis. 102, 1189–1217. https://doi.org/10.1094/PDIS-08-17-1181-FE (2018).

Van As, H. & van Duynhoven, J. MRI of plants and foods. J. Magn. Reson. 229, 25–34. https://doi.org/10.1016/j.jmr.2012.12.019 (2013).

Calzarano, F. & Di Marco, S. Wood discoloration and decay in grapevines with esca proper and their relationship with foliar symptoms. Phytopathol. Mediterr. 46, 96–101. https://doi.org/10.14601/Phytopathol_Mediterr-1861 (2007).

Kassemeyer, H. H. et al. Trunk anatomy of asymptomatic and symptomatic grapevines provides insights into degradation patterns of wood tissues caused by Esca-associated pathogens. Phytopathol. Mediterr. 61, 451–471. https://doi.org/10.36253/phyto-13154 (2022).

Pacetti, A. et al. Trunk surgery as a tool to reduce foliar symptoms in diseases of the esca complex and its influence on vine wood microbiota. J. Fungi (Basel) 7, 521. https://doi.org/10.3390/jof7070521 (2021).

Gentilhomme, T., Villamizar, M., Corre, J. & Odobez, J. M. Towards smart pruning: ViNet, a deep-learning approach for grapevine structure estimation. Comput. Electron. Agricult. 207, 107736. https://doi.org/10.1016/j.compag.2023.107736 (2023).

Arganda-Carreras, I. et al. Trainable Weka segmentation: A machine learning tool for microscopy pixel classification. Bioinformatics 33, 2424–2426. https://doi.org/10.1093/bioinformatics/btx180 (2017).

Chinchor, N. MUC-4 evaluation metrics. In Proceedings of the 4th conference on Message understanding. McLean, Virginia, Association for Computational Linguistics: 22–29 (1992).

Schroeder, W. J. & Martin, K. M. The Visualization Toolkit. In Visualization Handbook (eds Hansen, C. D. & Johnson, C. R.) Ch. 30, 593–614 (Butterworth-Heinemann, 2005).

Acknowledgements

This work was supported by the French Ministry of Agriculture and Food, France AgriMer, the Comité National des Interprofessions des Vins à appellation d’origine et à indication géographique (CNIV), and the Institut Français de la Vigne et du Vin (IFV) within VITIMAGE and VITIMAGE-2024 projects (program Plan National Dépérissement du Vignoble); and by Agropolis fondation-APLIM Etendard project (contract 1504-005). Imaging acquisitions were performed at Tridilogy, Groupe CRP—Imaneo; and the MRI platform member of the national infrastructure France-BioImaging supported by the French National Research Agency «Investments for the Future» (ANR-10-INBS-04), and of the Labex CEMEB (ANR-10-LABX-0004) and NUMEV (ANR-10-LABX-0020). The authors warmly thank Stéphane Bottalico and Renaud Lebrun (CNRS) for technical assistance, and Lindsay Hartley-Backhouse and Courtney Jallo (Scriptoria Solutions) for copy editing.

Author information


Contributions

R.F.: Methodology, Software, Investigation, Formal analysis, Visualization, Writing. L.L.C.: Conceptualization, Methodology, Investigation. S.M.: Conceptualization, Methodology, Investigation, Data Curation. J.-L.V.: Conceptualization, Methodology, Investigation, Writing. J.P.: Resources, Investigation. P.L.: Investigation, Data Curation, Writing. A.-S.S.: Conceptualization, Writing. P.C.: Investigation, Writing. M.C.: Conceptualization, Writing. C.G.-B.: Conceptualization, Writing. C.M.: Conceptualization, Methodology, Investigation, Formal analysis, Visualization, Funding acquisition, Supervision, Writing.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Fernandez, R., Le Cunff, L., Mérigeaud, S. et al. End-to-end multimodal 3D imaging and machine learning workflow for non-destructive phenotyping of grapevine trunk internal structure. Sci Rep 14, 5033 (2024). https://doi.org/10.1038/s41598-024-55186-3


DOI: https://doi.org/10.1038/s41598-024-55186-3
