Abstract

Artificial intelligence (AI) has become an integral part of many contemporary technologies, such as social media platforms, smart devices, and global logistics systems. At the same time, research on the public acceptance of AI shows that many people feel quite apprehensive about the potential of such technologies—an observation that has been connected to both demographic and sociocultural user variables (e.g., age, previous media exposure). Yet, due to divergent and often ad-hoc measurements of AI-related attitudes, the current body of evidence remains inconclusive. Likewise, it is still unclear if attitudes towards AI are also affected by users’ personality traits. In response to these research gaps, we offer a two-fold contribution. First, we present a novel, psychologically informed questionnaire (ATTARI-12) that captures attitudes towards AI as a single construct, independent of specific contexts or applications. Having observed good reliability and validity for our new measure across two studies (N1 = 490; N2 = 150), we examine several personality traits—the Big Five, the Dark Triad, and conspiracy mentality—as potential predictors of AI-related attitudes in a third study (N3 = 298). We find that agreeableness and younger age predict a more positive view towards artificially intelligent technology, whereas the susceptibility to conspiracy beliefs connects to a more negative attitude. Our findings are discussed considering potential limitations and future directions for research and practice.

Similar content being viewed by others

Introduction

Artificial intelligence (AI) promises to make human life much more comfortable: It may foster intercultural collaboration via sophisticated translating tools, guide online customers towards the products they are most likely going to buy, or carry out jobs that feel tedious to human workers1,2. At the same time, the proliferation of AI-based technologies is met with serious reservations. It is argued, for instance, that AI could lead to the downsizing of human jobs3,4, the creation of new intelligent weaponry5,6, or a growing lack of control over the emerging technologies7. Similarly, the recent and much-publicized presentation of new text- and image-creating AI (such as ChatGPT and Midjourney) has raised concerns about artistic license, academic fraud, and the value of human creativity8.

Crucially, individuals may differ regarding their evaluation of such chances and risks, and in turn, hold different attitudes towards AI. However, we note that scholars interested in these differences often used a basic ad-hoc approach to assess participants’ AI attitudes6,9, or focused only on highly domain-specific sub-types of AI10,11. Against this background, the current paper offers a twofold contribution. First, we present a novel questionnaire on AI-related attitudes—the ATTARI-12, which incorporates the psychological trichotomy of cognition, emotion, and behavior to facilitate a comprehensive yet economic measurement—and scrutinize its validity across two studies. Subsequently, we connect participants’ attitudes (as measured by our new scale) to several fundamental personality traits, namely the Big Five, the Dark Triad, and conspiracy mentality.

Research objective 1: measuring attitudes towards AI

Broadly speaking, the term artificial intelligence (AI) is used to describe technologies that can execute tasks one may expect to require human intelligence—i.e., technologies that possess a certain degree of autonomy, the capacity for learning and adapting, and the ability to handle large amounts of data12,13. As AI becomes deeply embedded in many technical systems and, thus, people’s daily lives, it emerges as an important task for numerous scientific disciplines (e.g. psychology, communication science, computer science, philosophy) to better understand users’ response to—and acceptance of—artificially intelligent technology. Of course, in order to achieve this goal, it is essential to employ theoretically sound measures of high psychometric quality when assessing AI-related attitudes. Furthermore, researchers need to decide whether they want to examine only a specific type of application (such as self-driving cars or intelligent robots) or focus on AI as an abstract technological concept that can be applied to many different settings. While both approaches have undeniable merit, it may be argued that the comparability of different research efforts—especially those situated at the intersection of different disciplines—clearly benefits from the latter, i.e., an empirical focus on attitudes towards AI as a set of technological capabilities instead of concrete use cases.

In line with this argument, scientific efforts have produced several measures to assess attitudes towards AI as a more overarching concept. These include the General Attitudes Towards Artificial Intelligence Scale (GAAIS13), the Attitudes Towards Artificial Intelligence Scale (ATAI14), the AI Anxiety Scale (AIAS15), the Threats of Artificial Intelligence Scale (TAI16), and the Concerns About Autonomous Technology questionnaire17. Upon thorough examination, however, we believe that none of these measures offer an entirely satisfactory option for at least one of five reasons. First, many of the above-mentioned measures only inquire participants about negative impressions and concerns regarding AI, leaving aside the possibility of distinctly positive attitudes15,16,17. Second, the measured attitudes towards AI are often subdivided into several factors13,14,15, which complicates handling and interpreting these scales from both a theoretical and a practical perspective. For instance, the GAAIS13 presents two sub-scales called “acceptance” and “fear,” even though it can be argued that these merely cover different poles of the same spectrum. Relatedly, the AIAS scale15 offers a four-factor solution with the (statistically induced) dimensions “learning,” “job replacement,” “sociotechnical blindness,” and “AI configuration”—clusters whose practical merit may be related to more specific use cases. Third, while some scales have a relatively low number of items (e.g., five items14), other scales are comparably long (e.g., 21 items15), which may complicate their use in research settings that need to include attitudes towards AI as one concept among multiple others. Fourth, for one of the reviewed scales14, the low number of items resulted in a notable lack of reliability. Fifth, none of the available scales acknowledge the cognitive, affective, and behavioral facets of attitudes in their designs18,19.

Hence, based on the identified lack of a one-dimensional, psychometrically sound, yet economic measure that captures both positive and negative aspects of the attitude towards AI, our first aim was to develop a scale that overcomes these limitations. For the sake of broad interdisciplinary applicability, we explicitly focused the creation of our scale on the perception of AI as a general set of technological capabilities, independent of its physical embodiment or use context. This perspective was not least informed by recent research, which indicated that people’s evaluation of digital ‘minds’ is likely to change once visual factors come into play20—further highlighting the merit of addressing AI as a disembodied concept.

Research objective 2: understanding associations between AI-related attitudes and personality traits

Thus far, most studies on public AI acceptance have explored the role of demographic and sociocultural variables, revealing that concerns about AI seem to be more prevalent among women, the elderly, ethnic minorities, and individuals with lower levels of education6,21. Furthermore, it was demonstrated that egalitarian values and distrust in science9, attachment anxiety22, and the exposure to dystopian science fiction6,23 constitute meaningful predictors of negative attitudes towards AI. Indeed, many of these findings are echoed by a large body of human–robot interaction literature, which also emphasizes the crucial impact of demographic variables, cultural norms, and media use on the public acceptance of robotic machinery24,25,26. Yet, considering that studies from this discipline rarely elucidate whether the reported effects arise from the perception of robotic bodies or robotic minds (i.e., AI), we suggest that they should only be considered as secondary evidence for the current topic.

Apart from considerable insight into the sociocultural predictors of (dis-)liking AI technology, much less is known about the impact of users’ personality factors on their AI-related attitudes. To our knowledge, only a handful of scientific studies have actually addressed this issue, and most of them have yielded rather limited results—either by focusing on very specific types of AI10,11,27 or by measuring reactions to physically embodied AI (e.g., robots), which makes it much harder to pinpoint the cause of the effect28,29. While another recent study indeed explored attitudes towards AI as a technical concept regardless of its specific use or embodiment30, this effort focused exclusively on negative attitudes, thus leaving out a substantial part (i.e., the positive side) of how users think and feel about artificially intelligent systems.

Therefore, building upon the reviewed work, our second objective was to scrutinize several central personality dimensions as predictors for people’s AI-related attitudes, including the well-known Big Five31 and Dark Triad of personality32. In addition to that, we include the rather novel construct of conspiracy mentality in our work33, considering its high contemporary relevance in an increasingly digital world34.

Overview of studies and predictions

Addressing the outlined research propositions, we present three studies (see Table 1 for a brief overview of main study characteristics). All materials, obtained data, and analysis codes for these three studies can be found in our project’s Open Science Framework repository (https://osf.io/3j67a/). Informed consent was collected from all participants before they took part in our research efforts.

Studies 1 and 2 mainly served to investigate the reliability and validity of our new attitudes towards AI scale, the ATTARI-12. Hence, these studies were mainly guided by several analytical steps examining the statistical properties of our measure, including its convergent validity, re-test reliability, and susceptibility to social desirability bias. In Study 3, we then set out to connect participants’ attitude towards AI (as measured by the developed questionnaire) to fundamental personality traits and demographic factors. For this third and final study, we will detail all theoretical considerations and hypotheses in the following.

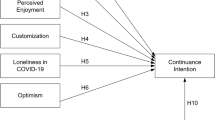

Faced with a lack of previous findings regarding personality predictors of AI-related attitudes, we deemed it important to anchor our investigation in a broader view at human personality. This perspective led us to first include the Big Five model31, which has remained the most widely used taxonomy of human personality for several decades. As the name suggests, the Big Five consist of five fundamental personality dimensions—openness to experience, conscientiousness, extraversion, agreeableness, and neuroticism—which are presumed to cover a substantial portion of the dispositional variance between individuals35,36. Adhering to this notion, we developed a first set of hypotheses about the individual contribution of each Big Five trait to people’s AI-related attitudes.

For openness to experience—which can be defined as the tendency to be adventurous, intellectually curious, and imaginative—we expected a clear positive relationship to AI-related attitudes. Since AI technologies promise many new possibilities for human society, people who are open to experience should feel more excited and curious about the respective innovations (this is mirrored by recent findings on self-driving cars11). We hypothesize:

H1

The higher a person’s openness for experience, the more positive attitudes they hold towards AI.

Conscientiousness can be described as the inclination to be diligent, efficient, and careful, and to act in a disciplined or even perfectionist manner. Considering that it may be difficult for humans to understand the inner workings of an AI system or to anticipate its behavior, it seems likely that conscientious people would express more negative views about AI, a technological concept that might make the world less comprehensible for them29.

H2

The higher a person’s conscientiousness, the more negative attitudes they hold towards AI.

The third of the Big Five traits, extraversion, encompasses the tendency to be out-going, talkative, and gregarious; in turn, it is negatively related to apprehensive and restrained behavior. Based on this definition, it comes as no surprise that extroverted individuals reported fewer concerns about autonomous technologies in previous studies29,30,37. Thus, we hypothesize:

H3

The higher people’s extraversion, the more positive attitudes they hold towards AI.

High agreeableness manifests itself in the proclivity to show warm, cooperative, and kind-hearted behavior. Regarding the topic at hand, previous research suggests that agreeableness might be (moderately) correlated with positive views about automation or specific types of AI11,27,30. In line with this, we assume:

H4

The higher a person’s agreeableness, the more positive attitudes they hold towards AI.

The last Big Five trait, neuroticism, is connected to self-conscious and shy behavior. People with high scores in this trait tend to be more vulnerable to external stressors and have trouble controlling spontaneous impulses. MacDorman and Entezari28 discovered that neurotic individuals reported significantly stronger eeriness after they had encountered an autonomous android than those with lower scores in the trait. Similar findings emerged with regards to people’s views on self-driving cars11 and narrow AI applications30. Based on this evidence as well as the generally anxious nature of neurotic individuals, our hypothesis is as follows:

H5

The higher a person’s neuroticism, the more negative attitudes they hold towards AI.

Although the Big Five are considered a relatively comprehensive set of human personality traits, research has yielded several other concepts that serve to make sense of interpersonal differences (and how they relate to attitudes and behaviors). Importantly, tapping into more negatively connotated aspects of human nature, Paulhus and Williams32 introduced the Dark Triad of personality, a taxonomy that gathers three malevolent character traits: Machiavellianism, psychopathy, and narcissism. Due to their antisocial qualities, the Dark Triad have been frequently connected to deviant behaviors, interpersonal problems, and increased difficulties in the workplace38,39. Moreover, they have become an important part of exploring interpersonal differences in attitude formation, not least including views on modern-day technology10. In consequence, our second set of hypotheses revolves around the potential influence of these rather vindictive personality traits.

Machiavellianism is a personality dimension that encompasses manipulative, callous, and amoral qualities. For our research topic, we contemplated two opposing notions as to how this trait could relate to AI attitudes. On the one hand, recent literature suggests that Machiavellian individuals might feel less concerned about amoral uses of AI and instead focus on its utilitarian value, which may reflect in more positive attitudes10. On the other hand, the prospect of AI-driven surveillance remains a prominent topic in the public discourse40—and we deemed it likely that people high in Machiavellianism would be wary of this, wanting for their more deviant actions to remain undetected. Weighing both arguments, we ultimately settled for the latter and hypothesized:

H6

The higher a person’s Machiavellianism, the more negative attitudes they hold towards AI.

A person scoring high on psychopathy is likely prone to thrill-seeking behavior and may experience only little anxiety. Unlike the deliberate manipulations typical for Machiavellian people, the psychopathic personality trait involves much more impulsive antisocial tendencies. Based on this, we came to assume a positive relationship between psychopathy and attitudes towards AI—not least considering that our dependent variable would also involve people’s emotional reactions towards AI, which should turn out less fearful among those high in psychopathy. We assumed:

H7

The higher a person’s psychopathy, the more positive attitudes they hold towards AI.

Broadly speaking, narcissists tend to show egocentric, proud, and unempathetic behavior, which is often accompanied by feelings of grandeur and entitlement. Whereas some qualitative research suggests that intelligent computers might deal a severe “blow to our narcissism” (41, p. 145), certain possibilities offered by AI could also appear quite attractive to people who score high in this trait—such as the futuristic notion of inserting aspects of oneself into a computer algorithm, to be preserved for all eternity. Surprisingly enough, inquiring participants about this very idea did not reveal a significant relation to narcissism in a recent study10. Then again, it should be noted that highly narcissistic individuals also tend to show open and extroverted behavior32, which would suggest a more positive relationship with AI-related attitudes depending on our other hypotheses. In summary, we settled on the following, cautiously positive assumption:

H8

The higher a person’s narcissism, the more positive attitudes they hold towards AI.

Lastly, we proceeded to the exploration of a dispositional variable on a notably smaller scale of abstraction, which appeared promising to us from a contemporary perspective: people’s conspiracy mentality. Strongly related to general distrust in people, institutions, and whole political systems, this personality trait focuses on the susceptibility to conspiracy theories, i.e., explanations about famous events that defy common sense or publicly presented facts33. Psychological research has shown that people who believe in one conspiracy theory are typically more likely to also believe in another, indicating a stable trait-like tendency to subscribe to an overly skeptical and distrusting way of thinking42. Further emphasizing this angle, recent research has suggested that the tendency to accept epistemically suspect information might constitute a single dimension that further connects to difficulties in analytic thinking, an overestimation of one’s own knowledge, as well as receptivity for ‘pseudo-profound bullshit’43.

Although they might not be quite as well-known as other prominent conspiracy theories (e.g., concerning the 9/11 attacks), there are in fact several conspiracist beliefs about the use of sophisticated technology and AI. The respective theories range from the idea that those in power want to replace certain individuals with intelligent machines44 to the belief that COVID-19 vaccinations are actually injections of sophisticated nano-chips to establish a global surveillance system45. While these notions might seem somewhat obscure to many, the triumph of social media as a news source has presented proponents of conspiracy theories with a powerful platform to disseminate their ideas46. In addition to that, the well-documented (mis-)uses of big data—e.g., in the Cambridge Analytica scandal—has led many people to at least think about how computer algorithms might be used for sinister purposes47. Taking into account all of these observations, we anticipated that conspiracy mentality relates to more negative attitudes towards AI:

H9

The higher a person’s level of conspiracy mentality, the more negative attitudes they hold towards AI.

Concluding the theoretical preparation of our study, we devised hypotheses on two basic demographic variables that were previously connected to AI-related attitudes in scientific publications. Specifically, it has been found that women typically hold more negative attitudes towards AI than men, which may, among other causes, be explained by societal barriers that limit women’s access to (and interest in) technical domains6,21. Likewise, studies have hinted at the higher apprehensiveness towards AI-powered technology among the elderly, potentially due to a lack of understanding and accessibility6. In the expectation to replicate these prior findings, we proposed:

H10

Women hold more negative attitudes towards AI than men.

H11

Older individuals hold more negative attitudes towards AI than younger individuals.

Study 1

The goal underlying Study 1 was to develop a psychometrically sound scale that was (a) one-dimensional, (b) incorporated items reflecting the three bases or facets of attitudes (cognitive, affective, behavioral) that have guided attitude research in psychology for the last decades18,19, and (c) consisted of positively and negatively worded items to ensure that attitudes were measured on a full spectrum between aversion and enthusiasm (and agreement bias did not confound the results systematically). Based on theory and existing attitude scales in applied fields13,48, 24 original items were generated. Item generation was guided by the goals of developing an equal number of negatively and positively worded items and an equal number of items representing the three attitude facets (cognitive, affective, behavioral). Adhering to prior AI theory49 and existing measurement approaches13, the items were preceded by an instruction that introduced the phenomenon of artificial intelligence to participants, in order to reduce semantic ambiguities about the attitude target. This instruction is an integral part of the measurement.

In a second step, the authors discussed the items, and excluded items that were ambiguous in content, too specific in terms of attitude target, or involved too much linguistic overlap with other items. The remaining scale consisted of twelve items, with each of the three psychological facets of human attitudes—cognitive, affective, and behavioral—being represented by two positively and two negatively worded items. Nevertheless, all twelve items were expected to represent one general factor: People’s attitude towards AI. The full scale (ATTARI-12) can be found in the Appendix.

To gain insight into the validity of our new scale, Study 1 further included items on more specific AI applications. We expected that general attitudes towards AI as measured by the ATTARI-12 would be positively associated with specific attitudes towards electronic personal assistants (e.g., Alexa) and attitudes towards robots. Moreover, we assessed participants’ tendency to give socially desirable answers to establish whether our novel instrument would be affected by social desirability bias. Based on our careful wording during the construction of the scale, we expected that people’s attitude towards AI (per the ATTARI-12) would not be substantially related to social desirability. The hypotheses and planned data analysis steps for Study 1 were preregistered at https://aspredicted.org/8B5_GHZ (see also Supplement S1).

Method

Ethics statement

In Germany, institutional ethics approval is not required for psychological research as long as it does not involve issues regulated by law50. All reported studies (Study 1, Study 2, and Study 3) were conducted in full accordance with the Declaration of Helsinki, as well as the ethical guidelines provided by the German Psychological Society (DGPs51). Of course, this also included obtaining informed consent from all participants before they were able to take part in this study.

Participants and procedure

Power analyses with semPower (Version 2.0.152) suggested a necessary sample size of at least 500 respondents for the planned study. Therefore, at least 600 participants were aspired in order to have a proper data basis for our analyses—while still retaining a buffer for potential exclusions. As such, a total of 601 participants were recruited from the US-American MTurk participant pool. To ensure satisfactory data quality, we set our MTurk request to at least 500 previously completed tasks (also known as HITs), as well as > 98% HIT approval rate. Each participant was rewarded $1.00 for their participation, which lasted around three to five minutes.

Following preregistered exclusion criteria (i.e., completion time, failing at least one of two attention checks), 111 participants were excluded from our analyses. More specifically, 31 participants completed the questionnaire in less than 120 s, 79 participants did not describe the study as instructed, and one participant reported a year of birth that differed from the reported age by more than three years. Thus, the final sample consisted of 490 participants (212 female, 273 male, 5 other or no answer). The participants were between 19 and 72 years old (M = 39.78 years, SD = 11.06). For additional demographic information, please consult Supplement S2. After participants had given their informed consent, they completed a first attention check before proceeding to the ATTARI-12 questionnaire. Following this, we assessed their attitudes towards electronic personal assistants and robots, before presenting a measure of socially desirable answering. Lastly, participants had to summarize the study’s topic as an additional attention check and respond to several sociodemographic questions.

Measures

Attitudes towards artificial intelligence

We applied the newly created ATTARI-12, using a five-point answer format to capture participants’ responses (1 = strongly disagree, 5 = strongly agree). Descriptive measures and the reliability of the scale are reported in the Results section.

Attitudes towards personal voice assistants (PVA)

Participants indicated their attitudes towards PVAs on three semantic differential items (with five gradation points) that were created for the purpose of this study (e.g., “hate it—love it”; Cronbach’s α = 0.94, M = 3.72, SD = 1.09; see Supplement S3).

Attitudes towards robots

People’s general assessment of robots was measured with three items that were previously used in robot acceptance research24. The items were answered on a four-point scale (Cronbach’s α = 0.77, M = 3.24, SD = 0.57; e.g., “Robots are a good thing for societies because they help people”, with 0 = totally disagree to 3 = totally agree, see Supplement S3).

Social desirability

Participants’ tendency to give socially desirable answers was measured with the Social Desirability Scale53. In this measure, participants have to answer whether 16 socially desirable or undesirable actions match their own behavior on a dichotomous scale (“true” or “false”). The number of socially desirable responses is added up for each participant, resulting in a range between 0 and 16 (Cronbach’s α = 0.85, M = 8.74, SD = 0.83).

Results

Our analysis of factorial validity was guided by the assumption that the items represent a single construct: People’s attitude towards AI. To this end, we compared different, increasingly less restrictive, models in a confirmatory factor analysis: The first model (a) specified a single factor and, thus, assumed that individual differences in item responses could be explained by a single, general attitude construct. However, this assumption is often too strong in practice because specific content facets or item wording might lead to minor multidimensionality. Therefore, Model (b) estimated a bifactor S-1 structure that, in addition to the general factor, included two orthogonal specific factors for the cognitive or affective items. These specific factors captured the unique variance resulting from the two content domains that were not accounted by the general attitude factor. Following Eid and colleagues54, no specific factor was specified for the behavioral items which, thus, acted as reference domain. Because the ATTARI-12 included positively and negatively worded items, Model (c) explored potential wording effects by evaluating a bifactor S-1 model that included an orthogonal specific factor for the negatively worded items. Finally, we combined Models (b) and (c) to study the joint effects of content and method effects.

The goodness of fit of these models are summarized in Table 2. Using established recommendations for the interpretation of these fit indices55, the single factor model exhibited an inferior fit to the data. Also, acknowledging the different content facets (cognitive, affective, behavioral) did not improve model fit. However, the ATTARI-12 exhibited non-negligible wording effects as demonstrated by Model (c). This model showed a good fit to the data with a comparative fit index (CFI) of 0.98, a root mean squared error (RMSEA) of 0.03, and a standardized root mean residual (SRMR) of 0.03. Despite the observed multidimensionality, all items showed good to excellent associations with the general factor as evidenced by rather high factor loadings falling between 0.48 and 0.88 (see Table 3). To examine the consequence of the multidimensional measurement structure in more detail, we examined how much of the systematic variance in the ATTARI-12 was explained by the general factor or the specific wording factor (cf.56). While the wording factor explained about 21% of the variance, most of the common variance (79%) was reflected by the general factor. As a result, the hierarchical omega reliability for the general factor was ωH = 0.83 but only ωH = 0.38 for the specific factor. These results emphasize that all twelve items comprised an essentially unidimensional scale operationalizing a strong general factor reflecting the overarching attitude towards AI. Based on the analyses, no items needed to be excluded from the scale due to subpar psychometric properties.

Descriptive and correlational results on the composite measure of the ATTARI-12 are shown in Table 4. The reliability in terms of internal consistency was excellent, Cronbach’s α = 0.93. The distribution approximated a normal distribution, skewness (= − 0.63) and kurtosis (= 0.22) were within the boundaries expected for psychometrically tested scales (also see Fig. 1). We acknowledge, however, that the distribution was somewhat left-skewed. As expected, the measure was positively correlated with attitudes towards electronic personal assistants (r = 0.60, p < 0.001) and with attitudes towards robots (r = 0.68, p < 0.001). ATTARI-12 scores were not substantially related to social desirability bias (r = 0.04, p = 0.411).

In sum, the one-dimensional ATTARI-12 measured attitudes towards AI in a reliable way, and the indicators of convergent (attitudes towards specific AI applications) and divergent (social desirability) construct validity corroborate the validity of the scale.

Study 2

The aim of the second study was to develop a German-language version of the scale and to gain further insight into the scale’s reliability and validity. In particular, we examined the re-test reliability of the scale, by administering the items at two points in time. We further assessed the extent to which the participants (undergraduates) wished to work with (or without) AI in their future careers. We expected a positive association between the latter variable and attitudes towards AI.

Method

This study consisted of two parts, administered online with an average delay of 31.40 days (SD = 2.31; range 26–36 days). Students of a social science major at the University of Würzburg, Germany participated for course credit. According to calculations with G*Power software—based on an expected small to moderate correlation of r = 0.25, 80% power, and α = 0.05—a minimum sample size of 123 participants was required. Yet, as we expected dropouts from Time 1 to Time 2, a starting sample of 180 participants was targeted. Eventually, 166 participants completed the survey at Time 1, and a total of 150 students provided data at both points in time. This final sample of 150 participants with complete data had an average age of 21.21 years (SD = 2.60; range: 18 to 41 years) and consisted predominantly of women (113 female, 36 male, 1 other). Nearly all of the participants were German native speakers (see Supplement S4 for detailed descriptive data and Supplement S8 for the German-language version of the scale). Importantly, since participants for Study 2 were recruited among German students (in contrast to the use of English-language survey panel members in Study 1), we are confident that our two validation studies were based on entirely different samples.

At time 1, participants answered the ATTARI-12, as well as four items that measured their interest in a career involving AI technology (two items were reverse-coded, e.g., “I would prefer a position in which AI plays no role”). The items went with a 5-point scale (1 = strongly disagree, 5 = strongly agree), Cronbach’s α = 0.85 (see Supplement S5). In the resulting index, higher scores indicated higher aspirations for AI-related careers. At the study’s second measurement time, the ATTARI-12 had to be answered once again. Furthermore, the study was initially designed to also include a measure of social desirability at this point (53, in its German translation); however, the respective assessment did not achieve satisfactory reliability (Cronbach’s α < 0.40) and was, therefore, excluded from our analysis.

Results

The main results are shown in Table 5. The reliability in terms of internal consistency was very good for the German-language version applied in this study, T1 Cronbach’s α = 0.91; T2 Cronbach’s α = 0.89. The distribution approximated a normal distribution, skewness (skewness T1 = − 0.58; skewness T2 = − 0.48) and kurtosis (kurtosis T1 = 0.07; kurtosis T2 = − 0.24) were within the boundaries expected for psychometrically sound scales (Fig. 1). Importantly, the test-re-test association was large, r(148) = 0.804, p < 0.001, supporting the reliability of the scale. Associations with the AI-career measure were significant and ranged around r = 0.60. In sum, the one-dimensional ATTARI-12 (German version) showed good psychometric properties.

Study 3

Having found empirical support for the validity of our newly created measure, we proceeded with our second main research goal: Exploring potential associations between personality traits and attitudes towards AI. To ensure transparency, we decided to preregister Study 3 before data collection, including all hypotheses and planned analyses (https://aspredicted.org/VRU_BJI).

Method

Participants

An a priori calculation of minimum sample size by means of G*Power software (assuming a small to moderate effect of f2 = 0.08, 80% power, α = 0.05, and eleven predictors in a hierarchical linear regression) resulted in a lower threshold of 221 participants. Since we intended to screen our sample for several data quality indicators and, thus, desired some leeway for potential exclusions, we recruited 353 US-American participants via the Amazon MTurk participant pool (age: M = 38.34 years, SD = 10.93; 112 female, 239 male, 2 other; payment $1.50). Specifically, we used the following MTurk criteria to ensure high data quality57,58: (a) at least 100 approved HITs; (b) HIT approval rate > 97%. Moreover, the web service “IPhub.info” was used to check whether users’ individual IP address correctly indicated the United States as a current location.

Examining the collected data, several measures were taken to exclude MTurk workers whose answers indicated careless and inattentive responding. First, we asked all participants to indicate their age and year of birth on two separate pages of the survey; if these answers deviated more than two years from each other, the participant was excluded (n = 10). Second, as a specific check against bot workers, participants were asked to name a type of vegetable (“eggplant”) that was depicted on a large-scale photograph. Apart from minor typos, all answers that did not resemble the correct answer in English language led to the removal of the data (n = 6). Third, participants were asked to choose the correct study topic (“artificial intelligence”) in a multiple-choice item, a task that was not fulfilled correctly by another n = 9 participants.

In addition, we initially considered removing all participants who had filled in the survey in less than four minutes, a threshold we had measured as the minimum time for attentive responding. Looking at our obtained data, this would have led to the additional exclusion of 81 participants. However, as we noticed that a lot of MTurk workers had finished the questionnaire in slightly less than four minutes, we decided to ease our initial exclusion criterion to a minimum duration of three minutes—leading to the exclusion of n = 30 participants. To make sure that this deviation from our preregistered analysis plan would not substantially change our results, we repeated all planned analyses with the initial four-minute criterion. Doing so, we found no noteworthy statistical differences (the results of this additional analysis are presented in Supplement S6).

Thus, in summary, our final sample consisted of 298 participants with a mean age of 39.29 years (SD = 11.08; range from 22 to 73 years). Gender balance was slightly skewed towards men (102 female, 195 male, 1 other). Regarding ethnicity, most participants identified themselves as White (77.9%), followed by Asian (8.1%), Black (7.7%), and Hispanic (3.7%). Level of education was relatively high, with most participants having obtained either a bachelor’s degree (49.7%), a master’s degree (16.1%) or a Ph.D. (2.7%). Lastly, we note that our sample was quite balanced in terms of political orientation. For a complete overview of the obtained descriptive data, please consult Supplement S7.

Measures

All items of the following measures were presented on 5-point scales (1 = strongly disagree; 5 = strongly agree).

Attitudes towards AI

To measure the main outcome variable, we administered the ATTARI-12 scale in its English version. Our data analysis showed that the scale again yielded excellent internal consistency this time around (Cronbach’s α = 0.92).

Big Five

The Big Five were assessed with the Big Five Inventory59, which consists of 44 items (openness: ten items, e.g., “I am a someone who is curious about many different things”; conscientiousness: nine items, e.g., “…does a thorough job”; extraversion: 8 items, e.g., “…is talkative”; agreeableness: nine items, e.g., “…is considerate and kind to almost everyone”; neuroticism: 8 items, e.g., “…can be tense”). We observed very good to excellent internal consistency for all five measured dimensions (openness: Cronbach’s α = 0.85; conscientiousness: Cronbach’s α = 0.85; extraversion: Cronbach’s α = 0.90; agreeableness: Cronbach’s α = 0.81; neuroticism: Cronbach’s α = 0.90).

Dark Triad

We assessed participants’ Dark Triad personality traits with the Short Dark Triad scale (SD360). This instrument includes nine items on Machiavellianism (e.g., “It’s not wise to tell your secrets”), six items on psychopathy (e.g., “People often say I’m out of control.”), and nine items on narcissism (e.g., “Many group activities tend to be dull without me.”). Reliability analyses suggested good to very good internal consistencies for all three scales (Machiavellianism: Cronbach’s α = 0.84; psychopathy: Cronbach’s α = 0.83; narcissism: Cronbach’s α = 0.78).

Conspiracy mentality

The Conspiracy Mentality Questionnaire (CMQ33) offers a measure of people’s general susceptibility to conspiracy theories and beliefs. It consists of five items that encapsulate an inherent skepticism about the workings of society, governments, and secret organizations (e.g., “I think that many important things happen in the world, which the public is never informed about.”; “I think that events which superficially seem to lack a connection are often the result of secret activities.”). With our data, we observed a very good Cronbach’s α of 0.86 for the CMQ.

Results

Table 6 summarizes descriptive information for and zero-order correlations between all study variables. On average, participants’ AI-related attitudes were slightly positive, M = 3.60, SD = 0.81—considering that a value of 3 indicates the neutral midpoint of our 5-point scale, which was assembled from equal numbers of negative (inversed) and positive items.

With our main data analysis slated to involve multiple regression, we first made sure that all necessary assumptions were met61: Residuals were independent and normally distributed, and neither multicollinearity nor heteroskedascity issues could be found. Moreover, satisfying Cook’s distance values revealed that no influential cases were biasing our model. As such, we proceeded with hierarchical linear regression as the core procedure of our data analysis. Using participants’ ATTARI score as the criterion, we first entered their age and gender as predictors (Step 1), before adding the Big Five (Step 2), the Dark Triad (Step 3), and conspiracy mentality (Step 4) to an extended regression model. Table 7 presents the main calculations and coefficients of this hierarchical regression analysis. As can be seen here, the first step of the regression resulted in an insignificant equation, F(2, 294) = 2.18, p = 0.115, with an R2 of 0.02. In contrast to this, the second step of the procedure (including the Big Five) yielded a significant result, F(7, 289) = 4.10, p < 0.001, ΔR2 = 0.08. Proceeding with Step 3, we found that adding the Dark Triad traits did not lead to a significant increase of R2 (ΔR2 < 0.01, p = 0.52). Entering participants’ conspiracy mentality as a final predictor, however, Step 4 resulted in a solution with significantly higher explained variance, F(11,285) = 4.17, p < 0.001, R2 = 0.14 (ΔR2 = 0.04). In the following, we will take a closer look at the predictive value of each entered predictor.

Predictive value of the Big Five

In response to hypotheses H1 through H5, we first directed our attention to the Big Five personality dimensions. Based on the second regression step, which was designed to include these variables, it was found that only agreeableness significantly predicted participants’ attitudes towards AI (p < 0.001), with a positive beta coefficient of 0.29. This positive association also persisted after entering other personality traits in Steps 3 and 4 of the regression. Hence, we conclude that higher agreeableness was related to more positive views about AI technology in our sample—providing empirical support for Hypothesis 4. Out of the other Big Five dimensions, openness to experience slightly missed the threshold of statistical significance in the final regression step, β = 0.11, p = 0.065, so that further exploration of the respective hypothesis may be warranted before rejecting it entirely. The remaining Big Five traits conscientiousness (H2), extraversion (H3), and neuroticism (H5), however, remained clearly insignificant as predictors.

Predictive value of the Dark Triad

Proceeding to the dark personality traits Machiavellianism, psychopathy, and narcissism (as entered during Step 3), we note that none of the three predictors approached the conventional threshold of statistical significance. As such, we cannot accept hypotheses H6 through H8; according to our data, more malevolent character traits were not predictive of people’s general attitudes about AI.

Predictive value of conspiracy mentality

Concluding our investigation of dispositional influences, we focused on participants’ conspiracy mentality as it was added during the regression’s final step. We found that this variable emerged as another meaningful predictor, β = − 0.22, p < 0.001. In support of Hypothesis 9, we therefore report that a stronger conspiracy mentality was related to more negative views towards artificially intelligent technology.

Predictive value of age and gender

Lastly, the impact of participants’ age and gender on their AI attitudes was explored. To this end, we focused on our final regression model with all predictors entered into the equation. While the influence of gender remained insignificant, it was examined that higher age was significantly related to lower scores in the ATTARI-12 scale, i.e., to more aversive cognitions, feelings, and behavioral intentions towards AI (β = − 0.17, p = 0.005). In light of this, our data successfully replicated previous findings regarding this sociodemographic variable, resulting in a positive answer to H11. Meanwhile, we reject H10 on the influence of gender.

General discussion

In a world shaped by autonomous technologies that are supposed to make human life safer, healthier, and more convenient, it is important to understand how people evaluate the very notion of artificially intelligent technology—and to identify factors that account for notable interindividual variance in this regard. Thus, the current project set out to explore the role of fundamental personality traits as predictors for people’s AI-related attitudes. In order to build our research upon a valid and reliable measurement of the outcome in question, we first developed a novel questionnaire—the ATTARI-12—and confirmed its psychometric quality across two studies. Distinguishing our instrument from extant alternatives, we note that our one-dimensional scale assesses attitudes towards AI on a full spectrum between aversion and enthusiasm. Furthermore, the ATTARI-12 items incorporate the classic trichotomy of human attitude (cognition, emotion, behavior), thus emerging as a conceptually sound way to measure people’s evaluation of AI. Lastly, since the instruction of the ATTARI-12 does not focus on specific use cases but rather on a broader understanding of AI as a set of technological abilities, we believe it may be suitable to be used across many different disciplines and research settings.

Utilizing our newly developed measure, we proceeded to our second research aim: Investigating potential connections between individuals’ AI-related attitudes and their personality traits. Specifically, we focused on two central taxonomies from the field of personality psychology (the Big Five, the Dark Triad), as well as conspiracy mentality as a trait of high contemporary relevance. By these means, we found significant effects for two of the explored dispositional predictors: Agreeableness (one of the Big Five traits) was significantly related to more positive attitudes about AI, whereas stronger conspiracy mentality predicted the opposite. We believe that both of these findings may offer intriguing implications for researchers, developers, and users of autonomous technology.

A personality dimension that typically goes along with an optimistic outlook at life62,63, agreeableness has been found to predict people’s acceptance of innovations in many different domains64. Psychologically speaking, this makes perfect sense: In line with their own benevolent nature, agreeable individuals tend to perceive others more positively as well—and may therefore come to think more about the opportunities than the risks of a new invention when forming their opinion. Since our specific measurement of participants’ attitudes requested them to consider both positive and negative aspects of AI, this tendency to focus more on the upside might have played an important role for the observed effect. Furthermore, we suppose that the trusting nature that often characterizes agreeable individuals65 offers another reasonable explanation for our result. Faced with a complex concept such as AI, which may appear obscure or downright incomprehensible to the layperson user, it arguably becomes all the more important for people to understand the intentions of the decision makers and stakeholders behind it. While the technological companies that develop AI systems might not always inspire the necessary confidence with their actions (e.g., excessive data collection, convoluted company policies), having a stronger inclination to trust others will likely compensate for this—presenting another reason as to why agreeableness might have emerged as a significant predictor in the current study.

A surprisingly similar argument may be put forward when addressing the second dispositional factor that achieved notable significance in our analyses, i.e., people’s conspiracy mentality. By definition, this personality trait is also anchored in perceptions of trust (or rather, a lack thereof), a notion that is echoed by our findings: The stronger participants expressed their skepticism about governments, organizations, and related news coverage (as indicated by items such as “I think many very important things happen in the world, which the public is never informed about”), the more negatively did their attitudes about AI turn out. In our reading, this further illustrates how transparency and perceived trustworthiness crucially affect the public acceptance of AI; just as having an agreeable, credulous character predicted more favorable attitudes, the disposition to suspect sinister forces on the global stage related to more negative views about autonomous technology.

On a societal level, we believe that our identification of conspiracy beliefs as a pitfall for AI-related attitudes is a particularly timely result, as it connects to the on-going debate about fake news in modern society. In the past few years, many people have started turning to social media as a source for news and education, which has paved the way for an unusual proliferation of misinformation66. Virtual spaces, in which like-minded individuals mutually confirm their attitudes and suspicious about different issues (so-called echo chambers; e.g., discussion forums or text messaging groups) have become highly prevalent, and, by these means, turned into a cause for concern among media scholars. Indeed, research suggests that using platforms such as Facebook, Twitter, or YouTube not only provides an immensely effective way to disseminate postfactual beliefs but may even increase people’s susceptibility to conspiracy theories in the first place67. Taken together with the fact that it is mostly complex and ambiguous topics for which people seek out postfactual information68, negative views about intelligent technology may proliferate most easily in the online realm. Arguably, the much-observed social media claim that COVID-19 vaccines secretly inject AI nanotechnology into unwilling patients45 illustrates this perfectly.

Based on the presented arguments, it stands to reason that the successful mass-adoption of intelligent technologies may also depend on whether postfactual theories about AI can be publicly refuted. In order to do so, it might be key to increase the public’s trust, not only in the concept of AI itself, but also in the organizations and systems employing it. For instance, developers may set out to educate users about the capabilities and limits of autonomous technologies in a comprehensible way, whereas companies might strive for more accessible policies and disclosures. At the same time, we note that overcoming deep-rooted fears and suspicions about AI will likely remain a great challenge in the future; in our expectation, the sheer sophistication and complexity of intelligent computers will continue to offer fertile ground for conspiracy theorists. Also, it cannot be ruled out that attempts to make AI more transparent in the future could also backfire, as conspiracy theorists might interpret these attempts as actual proof for a conspiracy in the first place. More so, the fact that popular movies and TV shows frequently present AI-based technology in a dangerous or creepy manner (e.g., in dystopian science fiction media23) may make it even more difficult to establish sufficient levels of trust—especially among those who tend to have a more skeptical mentality to begin with.

Apart from the interesting implications offered by the two significant personality predictors, several other examined traits showed no significant association with participants’ AI-related attitudes. Specifically, we found that three of the Big Five (conscientiousness, extraversion, and neuroticism) and all Dark Triad variables fell short in explaining notable variance in the obtained ATTARI-12 scores. Taken together with the abovementioned results, this indicates that user personality may have valuable yet limited potential to explain public acceptance of AI technology. In their nature as overarching meta-level traits, certain Big Five dimensions might simply be too abstract to address the nuances that characterize people’s experiences with—and attitudes towards—AI technology. For example, being a more extraverted person might involve aspects that both increase acceptance of AI (e.g., by being less apprehensive and restrained in general) and decrease it (e.g., by valuing genuine social connection to other humans, which may be challenged by new AI technology). Similarly, the more deviant personality traits included in the Dark Triad might be connected to both positive and negative views towards AI, thus preventing a significant prediction in a specific direction. Just as the many possibilities brought by new intelligent technology could be seen as useful tools to act manipulatively or enhance the self—suggesting a positive link to Machiavellianism and narcissism—they may also raise concerns among people scoring high in these traits, as AI might make it harder to act out devious impulses in an undetected or well-accepted manner.

Limitations and future directions

We would like to point out important limitations that need to be considered when interpreting the presented results of our project, especially the third and final study that served to address our main hypotheses. Although we recruited a relatively diverse sample in terms of age, gender, and socioeconomic background—and put several measures into place to ensure high data quality—our data on the association between AI attitudes and user personality are based on only one group of participants. Moreover, given that the sample of our third study was recruited via the same method as used in Study 1 (MTurk), we cannot rule out that certain participants might have taken part in both research efforts (even though, based on the very large participant pool of the chosen panel, we deem it highly unlikely that duplicate responses occurred). As such, replication efforts with different samples are encouraged in order to consolidate the yielded evidence. Not least, this concerns the recruitment of samples with a more balanced gender distribution, as our own recruitment yielded a notable majority of male participants. Also, considering that socio-economic and educational factors exert notable impact on people’s opinions towards technology (not least regarding AI; see6), shifting focus to different backgrounds should be most enlightening regarding the generalizability of our work. We believe that this might be especially important when focusing further on the dimension of conspiracy mentality—keeping in mind that a lack of education, media literacy, and analytic thinking ability have all been shown to predict the susceptibility to post-factual information43,69,70.

Along these lines, we suggest that studies with samples from different cultures should be carried out in order to establish whether our findings are consistent across national borders. Most recently, a study focusing on a sample of South Korean participants also reported positive associations between attitudes towards AI and the Big Five dimension agreeableness, especially regarding the sociality and functionality of AI-powered systems71. In contrast to our research, however, the authors did not pursue a one-dimensional measurement, thus yielding ambivalent findings (e.g., conscientiousness related to negative emotions towards AI but also to expectations of high functionality). Hence, we consider it worthwhile to apply the one-dimensional ATTARI-12 in other cultures in order to better understand people’s stance towards AI—and how this attitude is shaped by certain personality traits. Keeping in mind that our newly developed scale facilitated a reliable, one-dimensional measurement with samples in two countries, it appears as a promising tool for such examinations; even though, of course, further validation of our instrument is clearly welcome, especially for its non-English versions. Moreover, intercultural comparisons will have to ensure a consistent measurement of the personality traits in question. While both the Big Five and the Dark Triad have been highlighted for their cultural invariance72,73, some studies have also raised doubts about this claim74—so that the applicability of the used personality taxonomies might pose an additional challenge in this regard. A potential solution here would be to use the HEXACO inventory of personality75, which combines both the Big Five and the Dark Triad’s deviant traits into a novel taxonomy of human personality. Not only has the HEXACO model received strong support in terms of cultural invariance76, using it would also enable scholars to cross-validate the findings of the current study with another well-established instrument. Of course, completely different personality traits than the ones included in our endeavor—e.g., impulsivity, sensation seeking, or fear of missing out—could also be helpful to gain a thorough understanding of interindividual differences regarding people’s attitudes towards AI.

Additionally, it should be noted that future research into this topic can clearly benefit from assessing situational and motivational aspects that affect participants’ views on AI beyond their personality characteristics. For instance, recent research suggests that views on sophisticated technology are strongly modulated by prior exposure to science fiction media23,77, as well as philosophical views and moral norms78. Furthermore, people have been found to change their attitudes after several encounters with AI technology 79, adding another factor to the equation. Taken together, this suggests that future studies might tap into several profound covariates to disentangle the states and traits affecting attitudes towards AI. In any case, keeping in mind that our newly developed ATTARI-12 scale facilitated a reliable, one-dimensional measurement with samples of different age ranges and cultural backgrounds, we suggest that it constitutes a promising methodological cornerstone for future examinations of AI attitudes.

Conclusion

In our project, we investigated attitudes towards AI as a composite measure of people’s thoughts, feelings, and behavioral intentions. Striving to complement previous research that focused more on the acceptance of specialized AI applications, we created and utilized a new unidimensional measure: the ATTARI-12. We are confident that the developed measure may now serve as a useful tool to practically address AI as a macro-level phenomenon, which—despite encompassing a host of different programs and applications—is united by several shared fundamentals. In our expectation, the created scale can still be administered even when new and unforeseen AI technologies emerge, as it focuses more on underlying principles than specific capabilities. For practitioners and developers, this perspective may be particularly insightful, suggesting that individuals’ responses to new AI technology (e.g., as customers or employees) will not only be driven by the specific features of a certain technology, but also by a general attitude towards AI. Similarly, we hope that large-scale sociodemographic efforts (e.g., national opinion compasses) might be able to benefit from the provided measure.

Apart from that, we established significant connections between general AI attitudes and two selected personality traits. In our opinion, it is especially the observed association with participants’ conspiracy mentality that holds notable relevance in our increasingly complex world. If AI developers cannot find suitable ways to make their technology appear innocuous to observers (in particular to those who tend to seek out post-factual explanations), it might become increasingly challenging to establish innovations on a larger scale. However, we emphasize that negative attitudes and objections against AI technology are not necessarily unjustified; hence, when educating the public on AI technologies, people should be encouraged to reflect upon both potential risks and benefits. To reduce the risk for new conspiracy theories, we further encourage industry professionals to stay as transparent as possible when introducing their innovations to the public.

Lastly, we suggest that follow-up work is all but needed to elaborate upon our current contribution. While we remain convinced that measuring attitudes towards AI as a general set of technological abilities is meritorious, more tangible results could stem from including a theoretical dichotomy that has recently emerged in the field of human–machine interaction80,81,82. More specifically, it might be worthwhile to distinguish between AI abilities that relate to agency (i.e., planning, thinking, and acting) and those that relate to experience (i.e., sensing and feeling). In turn, scholars may be able to find out whether different user traits also relate to different attitudes towards “acting” and “feeling” AI—a nuanced perspective that would still allow for broader implications across many different technological contexts.

Data availability

All materials, obtained data, and analysis codes for the three reported studies can be found in the project’s Open Science Framework repository (https://osf.io/3j67a/).

References

Grewal, D., Roggeveen, A. L. & Nordfält, J. The future of retailing. J. Retail. 93(1), 1–6. https://doi.org/10.1016/j.jretai.2016.12.008 (2017).

Vanian, J. Artificial Intelligence will Obliterate These Jobs by 2030 (Fortune, 2019). https://fortune.com/2019/11/19/artificial-intelligence-will-obliterate-these-jobs-by-2030/.

Ivanov, S., Kuyumdzhiev, M. & Webster, C. Automation fears: Drivers and solutions. Technol. Soc. 63, 101431. https://doi.org/10.1016/j.techsoc.2020.101431 (2020).

Waytz, A. & Norton, M. I. Botsourcing and outsourcing: Robot, British, Chinese, and German workers are for thinking—not feeling—jobs. Emotion 14, 434–444. https://doi.org/10.1037/a0036054 (2014).

Gherheş, V. Why are we afraid of artificial intelligence (AI)?. Eur. Rev. Appl. Sociol. 11(17), 6–15. https://doi.org/10.1515/eras-2018-0006 (2018).

Liang, Y. & Lee, S. A. Fear of autonomous robots and artificial intelligence: Evidence from national representative data with probability sampling. Int. J. Soc. Robot. 9, 379–384. https://doi.org/10.1007/s12369-017-0401-3 (2017).

Fast, E., & Horvitz, E. Long-term trends in the public perception of artificial intelligence. In Proceedings of the 31st AAAI Conference on Artificial Intelligence pp. 963–969 (AAAI Press, 2016).

Thorp, H. H. ChatGPT is fun, but not an author. Science 379(6630), 313. https://doi.org/10.1126/science.adg7879 (2023).

Lobera, J., Fernández Rodríguez, C. J. & Torres-Albero, C. Privacy, values and machines: Predicting opposition to artificial intelligence. Commun. Stud. 71(3), 448–465. https://doi.org/10.1080/10510974.2020.1736114 (2020).

Laakasuo, M. et al. The dark path to eternal life: Machiavellianism predicts approval of mind upload technology. Pers. Individ. Differ. 177, 110731. https://doi.org/10.1016/j.paid.2021.110731 (2021).

Qu, W., Sun, H. & Ge, Y. The effects of trait anxiety and the big five personality traits on self-driving car acceptance. Transportation. https://doi.org/10.1007/s11116-020-10143-7 (2020).

Kaplan, J. Artificial Intelligence: What Everyone Needs to Know. (Oxford University Press, 2016).

Schepman, A. & Rodway, P. Initial validation of the general attitudes towards Artificial Intelligence Scale. Comput. Hum. Behav. Rep. 1, 100014. https://doi.org/10.1016/j.chbr.2020.100014 (2020).

Sindermann, C. et al. Assessing the attitude towards artificial intelligence: Introduction of a short measure in German, Chinese, and English language. Künstliche Intelligenz 35(1), 109–118. https://doi.org/10.1007/s13218-020-00689-0 (2020).

Wang, Y. Y., & Wang, Y. S. Development and validation of an artificial intelligence anxiety scale: An initial application in predicting motivated learning behavior. Interact. Learn. Environ. https://doi.org/10.1080/10494820.2019.1674887 (2019).

Kieslich, K., Lünich, M. & Marcinkowski, F. The Threats of Artificial Intelligence Scale (TAI): Development, measurement and test over three application domains. Int. J. Soc. Robot. https://doi.org/10.1007/s12369-020-00734-w (2021).

Stein, J.-P., Liebold, B. & Ohler, P. Stay back, clever thing! Linking situational control and human uniqueness concerns to the aversion against autonomous technology. Comput. Hum. Behav. 95, 73–82. https://doi.org/10.1016/j.chb.2019.01.021 (2019).

Rosenberg, M. J., & Hovland, C. I. Attitude Organization and Change: An Analysis of Consistency Among Attitude Components (Yale University Press, 1960).

Zanna, M. P. & Rempel, J. K. Attitudes: A new look at an old concept. In Attitudes: Their Structure, Function, and Consequences (eds Fazio, R. H. & Petty, R. E.) 7–15 (Psychology Press, 2008).

Stein, J.-P., Appel, M., Jost, A. & Ohler, P. Matter over mind? How the acceptance of digital entities depends on their appearance, mental prowess, and the interaction between both. Int. J. Hum.-Comput. Stud. 142, 102463. https://doi.org/10.1016/j.ijhcs.2020.102463 (2020).

McClure, P. K. “You’re fired”, says the robot. Soc. Sci. Comput. Rev. 36(2), 139–156. https://doi.org/10.1177/0894439317698637 (2017).

Gillath, O. et al. Attachment and trust in artificial intelligence. Comput. Hum. Behav. 115, 106607. https://doi.org/10.1016/j.chb.2020.106607 (2021).

Young, K. L. & Carpenter, C. Does science fiction affect political fact? Yes and no: A survey experiment on “Killer Robots”. Int. Stud. Q. 62(3), 562–576. https://doi.org/10.1093/isq/sqy028 (2018).

Gnambs, T. & Appel, M. Are robots becoming unpopular? Changes in attitudes towards autonomous robotic systems in Europe. Comput. Hum. Behav. 93, 53–61. https://doi.org/10.1016/j.chb.2018.11.045 (2019).

Horstmann, A. C. & Krämer, N. C. Great expectations? Relation of previous experiences with social robots in real life or in the media and expectancies based on qualitative and quantitative assessment. Front. Psychol. 10, 939. https://doi.org/10.3389/fpsyg.2019.00939 (2019).

Sundar, S. S., Waddell, T. F. & Jung, E. H. The Hollywood robot syndrome: Media effects on older adults’ attitudes toward robots and adoption intentions. Paper presented at the 11th Annual ACM/IEEE International Conference on Human–Robot Interaction, Christchurch, New Zealand (2016).

Chien, S.-Y., Sycara, K., Liu, J.-S. & Kumru, A. Relation between trust attitudes toward automation, Hofstede’s cultural dimensions, and Big Five personality traits. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 60(1), 841–845. https://doi.org/10.1177/1541931213601192 (2016).

MacDorman, K. F. & Entezari, S. Individual differences predict sensitivity to the uncanny valley. Interact. Stud. 16(2), 141–172. https://doi.org/10.1075/is.16.2.01mac (2015).

Oksanen, A., Savela, N., Latikka, R. & Koivula, A. Trust toward robots and artificial intelligence: An experimental approach to Human-Technology interactions online. Front. Psychol. 11, 3336. https://doi.org/10.3389/fpsyg.2020.568256 (2020).

Wissing, B. G. & Reinhard, M.-A. Individual differences in risk perception of artificial intelligence. Swiss J. Psychol. 77(4), 149–157. https://doi.org/10.1024/1421-0185/a000214 (2018).

Costa, P. T. & McCrae, R. R. Four ways five factors are basic. Pers. Individ. Differ. 13, 653–665. https://doi.org/10.1016/0191-8869(92)90236-I (1992).

Paulhus, D. L. & Williams, K. M. The Dark Triad of personality: Narcissism, Machiavellianism, and psychopathy. J. Res. Pers. 36, 556–563. https://doi.org/10.1016/S0092-6566(02)00505-6 (2002).

Bruder, M., Haffke, P., Neave, N., Nouripanah, N. & Imhoff, R. Measuring individual differences in generic beliefs in conspiracy theories across cultures: Conspiracy mentality questionnaire. Front. Psychol. 4, 225. https://doi.org/10.3389/fpsyg.2013.00225 (2013).

Imhoff, R. et al. Conspiracy mentality and political orientation across 26 countries. Nat. Hum. Behav. 6(3), 392–403. https://doi.org/10.1038/s41562-021-01258-7 (2022).

Nettle, D. & Penke, L. Personality: Bridging the literatures from human psychology and behavioural ecology. Philos. Trans. R. Soc. B 365(1560), 4043–4050. https://doi.org/10.1098/rstb.2010.0061 (2010).

Poropat, A. E. A meta-analysis of the five-factor model of personality and academic performance. Psychol. Bull. 135(2), 322–338. https://doi.org/10.1037/a0014996 (2009).

Kaplan, A. D., Sanders, T. & Hancock, P. A. The relationship between extroversion and the tendency to anthropomorphize robots: A bayesian analysis. Front. Robot. AI 5, 135. https://doi.org/10.3389/frobt.2018.00135 (2019).

Kaufman, S. B., Yaden, D. B., Hyde, E. & Tsukayama, E. The light vs. Dark triad of personality: Contrasting two very different profiles of human nature. Front. Psychol. 10, 467. https://doi.org/10.3389/fpsyg.2019.00467 (2019).

LeBreton, J. M., Shiverdecker, L. K. & Grimaldi, E. M. The Dark Triad and workplace behavior. Annu. Rev. Organ. Psychol. Organ. Behav. 5(1), 387–414. https://doi.org/10.1146/annurev-orgpsych-032117-104451 (2018).

Feldstein, S. The Global Expansion of AI Surveillance. Carnegie Endowment for International Piece. https://carnegieendowment.org/files/WP-Feldstein-AISurveillance_final1.pdf (2019).

Malabou, C. Morphing Intelligence: From IQ Measurement to Artificial Brains (Columbia University Press, 2019).

Swami, V., Chamorro-Premuzic, T. & Furnham, A. Unanswered questions: A preliminary investigation of personality and individual difference predictors of 9/11 conspiracist beliefs. Appl. Cognit. Psychol. 24, 749–761. https://doi.org/10.1002/acp.1583 (2010).

Pennycook, G. & Rand, D. G. Who falls for fake news? The roles of bullshit receptivity, overclaiming, familiarity, and analytic thinking. J. Pers. 88(2), 185–200. https://doi.org/10.1111/jopy.12476 (2019).

Shakarian, A. The Artificial Intelligence Conspiracy (2016).

McEvoy, J. Microchips, Magnets and Shedding: Here are 5 (Debunked) Covid Vaccine Conspiracy Theories Spreading Online (Forbes, 2021). https://www.forbes.com/sites/jemimamcevoy/2021/06/03/microchips-and-shedding-here-are-5-debunked-covid-vaccine-conspiracy-theories-spreading-online/

De Vynck, G., & Lerman, R. Facebook and YouTube Spent a Year Fighting Covid Misinformation. It’s Still Spreading (The Washington Post, 2021). https://www.washingtonpost.com/technology/2021/07/22/facebook-youtube-vaccine-misinformation/.

González, F., Yu, Y., Figueroa, A., López, C., & Aragon, C. Global reactions to the Cambridge Analytica scandal: A cross-language social media study. In Proceedings of the 2019 World Wide Web Conference 799–806. ACM Press. https://doi.org/10.1145/3308560.3316456 (2019).

Joyce, M. & Kirakowski, J. Measuring attitudes towards the internet: The general internet attitude scale. Int. J. Hum.-Comput. Interact. 31, 506–517. https://doi.org/10.1080/10447318.2015.1064657 (2015).

Bartneck, C., Lütge, C., Wagner, A., & Welsh, S. An Introduction to Ethics in Robotics and AI (Springer, 2021).

German Research Foundation. Statement by an Ethics Committee. https://www.dfg.de/en/research_funding/faq/faq_humanities_social_science/index.html (2023).

German Psychological Society. Berufsethische Richtlinien [Work Ethical Guidelines]. https://www.dgps.de/fileadmin/user_upload/PDF/Berufsetische_Richtlinien/BER-Foederation-20230426-Web-1.pdf (2022).

Jobst, L. J., Bader, M. & Moshagen, M. A tutorial on assessing statistical power and determining sample size for structural equation models. Psychol. Methods 28(1), 207–221. https://doi.org/10.1037/met0000423 (2023).

Stöber, J. The Social Desirability Scale-17 (SDS-17): Convergent validity, discriminant validity, and relationship with age. Eur. J. Psychol. Assess. 17(3), 222–232. https://doi.org/10.1027/1015-5759.17.3.222 (2001).

Eid, M., Geiser, C., Koch, T. & Heene, M. Anomalous results in G-factor models: Explanations and alternatives. Psychol. Methods 22, 541–562. https://doi.org/10.1037/met0000083 (2017).

Schermelleh-Engel, K., Moosbrugger, H. & Müller, H. Evaluating the fit of structural equation models: Tests of significance and descriptive goodness-of-fit measures. Methods Psychol. Res. 8(2), 23–74 (2003).

Rodriguez, A., Reise, S. P. & Haviland, M. G. Evaluating bifactor models: Calculating and interpreting statistical indices. Psychol. Methods 21(2), 137–150. https://doi.org/10.1037/met0000045 (2016).

Kennedy, R. et al. The shape of and solutions to the MTurk quality crisis. Polit. Sci. Res. Methods 8(4), 614–629. https://doi.org/10.1017/psrm.2020.6 (2020).

Peer, E., Vosgerau, J. & Acquisti, A. Reputation as a sufficient condition for data quality on Amazon Mechanical Turk. Behav. Res. Methods 46(4), 1023–1031. https://doi.org/10.3758/s13428-013-0434-y (2013).

John, O. P., Naumann, L. P. & Soto, C. J. Paradigm shift to the integrative Big-Five trait taxonomy: History, measurement, and conceptual issues. In Handbook of Personality: Theory and Research (eds John, O. P. et al.) 114–158 (Guilford Press, 2008).

Jones, D. N. & Paulhus, D. L. Introducing the Short Dark Triad (SD3): A brief measure of dark personality traits. Assessment 21(1), 28–41. https://doi.org/10.1177/1073191113514105 (2014).

Field, A. Discovering Statistics Using IBM SPSS Statistics (SAGE, 2013).

Baranski, E., Sweeny, K., Gardiner, G. & Funder, D. C. International optimism: Correlates and consequences of dispositional optimism across 61 countries. J. Pers. 89(2), 288–304. https://doi.org/10.1111/jopy.12582 (2020).

Sharpe, J. P., Martin, N. R. & Roth, K. A. Optimism and the Big Five factors of personality: Beyond neuroticism and extraversion. Pers. Individ. Differ. 51(8), 946–951. https://doi.org/10.1016/j.paid.2011.07.033 (2011).

Steel, G. D., Rinne, T. & Fairweather, J. Personality, nations, and innovation. Cross-Cult. Res. 46(1), 3–30. https://doi.org/10.1177/1069397111409124 (2011).

McCarthy, M. H., Wood, J. V. & Holmes, J. G. Dispositional pathways to trust: Self-esteem and agreeableness interact to predict trust and negative emotional disclosure. J. Pers. Soc. Psychol. 113(1), 95–116. https://doi.org/10.1037/pspi0000093 (2017).

Lazer, D. M. J. et al. The science of fake news. Science 359(6380), 1094–1096. https://doi.org/10.1126/science.aao2998 (2018).

Stecula, D. A. & Pickup, M. Social media, cognitive reflection, and conspiracy beliefs. Front. Polit. Sci. 3, 62. https://doi.org/10.3389/fpos.2021.647957 (2021).

van Prooijen, J. W. Why education predicts decreased belief in conspiracy theories. Appl. Cognit. Psychol. 31(1), 50–58. https://doi.org/10.1002/acp.3301 (2016).

Scheibenzuber, C., Hofer, S. & Nistor, N. Designing for fake news literacy training: A problem-based undergraduate online-course. Comput. Hum. Behav. 121, 106796. https://doi.org/10.1016/j.chb.2021.106796 (2021).

Sindermann, C., Schmitt, H. S., Rozgonjuk, D., Elhai, J. D. & Montag, C. The evaluation of fake and true news: On the role of intelligence, personality, interpersonal trust, ideological attitudes, and news consumption. Heliyon 7(3), e06503. https://doi.org/10.1016/j.heliyon.2021.e06503 (2021).

Park, J. & Woo, S. E. Who likes artificial intelligence? Personality predictors of attitudes toward artificial intelligence. J. Psychol. 156(1), 68–94. https://doi.org/10.1080/00223980.2021.2012109 (2022).

McCrae, R. R. & Costa, P. T. Jr. Personality trait structure as a human universal. Am. Psychol. 52(5), 509–516. https://doi.org/10.1037/0003-066X.52.5.509 (1997).

Rogoza, R. et al. Structure of Dark Triad Dirty Dozen across eight world regions. Assessment 28(4), 1125–1135. https://doi.org/10.1177/1073191120922611 (2020).

Gurven, M., von Rueden, C., Massenkoff, M., Kaplan, H. & Lero Vie, M. How universal is the Big Five? Testing the five-factor model of personality variation among forager–farmers in the Bolivian Amazon. J. Pers. Soc. Psychol. 104(2), 354–370. https://doi.org/10.1037/a0030841 (2013).

Lee, K. & Ashton, M. C. Psychometric properties of the HEXACO-100. Assessment 25, 543–556. https://doi.org/10.1177/1073191116659134 (2018).

Thielmann, I. et al. The HEXACO–100 across 16 languages: A Large-Scale test of measurement invariance. J. Pers. Assess. 102(5), 714–726. https://doi.org/10.1080/00223891.2019.1614011 (2019).

Mara, M. & Appel, M. Science fiction reduces the eeriness of android robots: A field experiment. Comput. Hum. Behav. 48, 156–162. https://doi.org/10.1016/j.chb.2015.01.007 (2015).

Laakasuo, M. et al. What makes people approve or condemn mind upload technology? Untangling the effects of sexual disgust, purity and science fiction familiarity. Palgrave Commun. https://doi.org/10.1057/s41599-018-0124-6 (2018).

Latikka, R., Savela, N., Koivula, A. & Oksanen, A. Attitudes toward robots as equipment and coworkers and the impact of robot autonomy level. Int. J. Soc. Robot. 13(7), 1747–1759. https://doi.org/10.1007/s12369-020-00743-9 (2021).

Appel, M., Izydorczyk, D., Weber, S., Mara, M. & Lischetzke, T. The uncanny of mind in a machine: Humanoid robots as tools, agents, and experiencers. Comput. Hum. Behav. 102, 274–286. https://doi.org/10.1016/j.chb.2019.07.031 (2020).

Gray, K. & Wegner, D. M. Feeling robots and human zombies: Mind perception and the uncanny valley. Cognition 125, 125–130. https://doi.org/10.1016/j.cognition.2012.06.007 (2012).

Grundke, A., Stein, J.-P. & Appel, M. Mind-reading machines: Distinct user responses to thought-detecting and emotion-detecting robots. Technol. Mind Behav. https://doi.org/10.17605/OSF.IO/U52KM (2021).

Yuan, K. H. & Bentler, P. M. Three likelihood-based methods for mean and covariance structure analysis with non-normal missing data. Sociol. Methodol. 30(1), 165–200. https://doi.org/10.1111/0081-1750.00078 (2000).

Satorra, A. & Bentler, P. M. Ensuring positiveness of the scaled difference chi-square test statistic. Psychometrika 75, 243–248. https://doi.org/10.1007/s11336-009-9135-y (2010).

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Contributions