Abstract

Many experiments suggest that long-term information associated with neuronal memory resides collectively in dendritic spines. However, spine size is limited by metabolic and neuroanatomical constraints, which should effectively limit the amount of information encoded in excitatory synapses. This study investigates how much information can be stored in the population of dendritic spine sizes, and whether this storage is optimal in any sense. Using empirical data for several mammalian brains across different regions and physiological conditions, we show that in cortical and hippocampal regions dendritic spines nearly maximize the entropy contained in their volumes and surface areas for a given mean size. Although both short- and heavy-tailed fitting distributions reach \(90-100\%\) of maximal entropy in the majority of cases, the closest approach to the maximum is obtained primarily for the short-tailed gamma distribution. Most empirical ratios of standard deviation to mean for spine volumes and areas lie in the range \(1.0\pm 0.3\), which is close to the theoretically optimal ratios obtained from entropy maximization for the gamma and lognormal distributions. On average, the highest entropy is contained in spine length (\(4-5\) bits per spine) and the lowest in spine volume and area (\(2-3\) bits), although the latter two are closer to optimality. In contrast, entropy density (entropy per unit spine size) is always suboptimal. Our results suggest that spine sizes are almost as random as possible given the constraint on their size. Moreover, the general principle of entropy maximization is applicable, and potentially useful, for understanding information and memory storage in the population of cortical and hippocampal excitatory synapses and for predicting their morphological properties.


Introduction

A large body of experimental data suggests that dendritic spines (the postsynaptic parts of excitatory synapses) are the storage sites of long-term memory (or long-term information), mainly in their molecular components, i.e. receptors and proteins1,2,3,4,5,6,7,8,9,10,11. Spines are dynamic objects10,12,13,14 that vary greatly in size and shape15,16,17. Small spines can disappear within a few days, while large spines can persist for months or even years2,4,18. Despite this variability, spine size correlates strongly with the magnitude of synaptic current (synaptic weight or strength), which suggests a close relationship between spine structure and physiological function2. Moreover, variability in spine size is closely associated with variability in the number of AMPA receptors on the spine membrane19, as well as with changes in the size of the postsynaptic density (PSD)20, which is composed of thousands of proteins implicated in molecular learning and memory storage8,21.

Given the high turnover of individual spines, it is unclear how exactly long-term information is stored in the brain4,11,22. Some theoretical models suggest that functional memory is stored at the population level, in the distribution of a large number of synaptic contacts7,23,24,25,26 or in the distribution of molecular switches contained in those synapses27,28,29, rather than locally in single synapses. In this picture, the long-term information of a given neuronal circuit is associated with its pattern of synaptic connections (i.e. with a pattern of spine sizes and their internal molecular characteristics), and the appearance or disappearance of a single connection does not affect the stability and persistence of the global information. In this study, we adopt the viewpoint that the spine population is functionally more important for memory storage than individual spines. In support of this view, we show below that the distribution of spine sizes in the human hippocampus is essentially invariant throughout development, despite obvious temporal variability at the level of single spines (see also Ref.30).

The tight correlations between spine geometry and spine molecular composition, which is responsible for encoding and maintaining memory, suggest that spine size can be used as a measure of its information content31. On the other hand, despite the large variability in spine size, the population mean spine diameter is relatively stable, within a narrow range of a fraction of a micrometer30,32,33,34. This indicates that the mean spine size may be restricted by the limited cortical space associated with the dense packing of different neuronal and non-neuronal components35,36. More importantly, the mean spine size should also be constrained by metabolic considerations, since synapses use a large fraction of brain energy29,37,38. This is because postsynaptic current is proportional to average spine size2, which means that bigger spines with stronger synaptic strength generally consume more energy than smaller spines with weaker strength37,38,39,40. These arguments suggest that molecular information encoded in the geometry of dendritic spines should be limited by neuroanatomical and metabolic constraints, and both constraints can be captured by a single parameter: the population mean of spine size.

The main goal of this study is to investigate the long-term information capacity of dendritic spines related to memory, which we quantify by the entropy of the distribution of their sizes. Consequently, the term information is used below in this particular sense, and we often use entropy and “information capacity” (or information content) interchangeably. The specific questions we ask are the following: Is this information optimized in some way, given the constraints on mean spine size? If so, how far do the parameters characterizing spine size distributions deviate from optimality? To answer these questions, we collected data from the published literature on spine (or PSD) sizes (volumes, areas, length, and head width) for different mammals and different cortical and subcortical regions (see the "Methods"). These data allowed us to compute the empirical Shannon entropy (related to information content) associated with spine sizes for each species, brain region, and condition, and to compare it with a theoretical upper bound on the entropy for a given mean spine size. Within this theory we can also compute the optimal ratios of spine size variability (standard deviation to mean) and compare them with the data.

Results

Fitting of dendritic spine sizes to lognormal, loglogistic, and gamma distributions, and empirical Shannon entropy

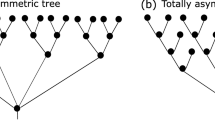

Previous empirical studies on dendritic spines have shown that their sizes (or synaptic weights) can be fitted well by either lognormal or gamma distributions13,33,34,41,42,43,44,45,46,47,48,49. However, these two types of probability distributions differ significantly in their asymptotic behavior: for very large spine sizes the former displays a heavy tail, while the latter decays exponentially, with a short tail. Our first goal is to determine which distribution, with a heavy or a short tail, better describes the experimental data. In our analysis, we also included a third probability density not tried by previous studies, the loglogistic distribution, which has a heavy tail (but see also Ref.17 for a special case of the loglogistic distribution, which unfortunately has infinite mean and variance). This distribution has an interesting property: for small sizes it behaves similarly to the gamma distribution, whereas for large sizes it resembles the lognormal distribution, although with a longer tail. For this reason, the loglogistic function is an alternative to the two extreme choices used in the past13,33,34,42,45,46,47,48,49.
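To make the difference in tail behavior concrete, the following minimal Python sketch (our illustration, not the authors' code) evaluates the log-densities of the three families in their textbook parameterizations. The parameter values are arbitrary and were chosen only so that all three distributions have mean 1; they are not fits to spine data.

```python
import math

# Log-densities of the three candidate families, in textbook parameterizations.

def log_pdf_gamma(x, alpha, theta):
    """Gamma(shape=alpha, scale=theta); tail ~ exp(-x/theta) (short tail)."""
    return ((alpha - 1) * math.log(x) - x / theta
            - math.lgamma(alpha) - alpha * math.log(theta))

def log_pdf_lognormal(x, mu, sigma):
    """Lognormal(mu, sigma); tail ~ exp(-(ln x)^2 / (2 sigma^2)) (heavy tail)."""
    z = (math.log(x) - mu) / sigma
    return -0.5 * z * z - math.log(x * sigma * math.sqrt(2 * math.pi))

def log_pdf_loglogistic(x, a, beta):
    """Log-logistic(scale=a, shape=beta); power-law tail ~ x**-(beta + 1)."""
    u = x / a
    return math.log(beta / a) + (beta - 1) * math.log(u) - 2 * math.log1p(u ** beta)

# Illustrative parameters (hypothetical), each giving mean 1:
#   gamma: alpha*theta = 1; lognormal: exp(mu + sigma^2/2) = 1;
#   log-logistic: a*(pi/beta)/sin(pi/beta) = 1.
GAMMA = (2.0, 0.5)
LOGNORMAL = (-0.18, 0.6)
BETA_LL = 4.0
LOGLOGISTIC = (math.sin(math.pi / BETA_LL) / (math.pi / BETA_LL), BETA_LL)

if __name__ == "__main__":
    for x in (0.5, 1.0, 5.0, 20.0):
        print(f"x={x:5.1f}  gamma={log_pdf_gamma(x, *GAMMA):8.2f}  "
              f"lognormal={log_pdf_lognormal(x, *LOGNORMAL):8.2f}  "
              f"loglogistic={log_pdf_loglogistic(x, *LOGLOGISTIC):8.2f}")
```

Far out in the tail (for example at twenty times the mean) the ordering of the densities is log-logistic > lognormal > gamma, which is the heavy- versus short-tail distinction drawn in the text.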

In Figs. 1 and 2 we present histograms of the empirical spine volume and length from the human cingulate cortex of two individuals (40 and 85 years old), taken from Ref.34. These histograms can be fitted well by all three theoretical distributions mentioned above. The goodness of these fits was assessed with the Kolmogorov-Smirnov test applied to the cumulative distribution function (CDF), which confirms that all three distributions can be used (Supplementary Figs. S1 and S2). The quantitative agreement between the theoretical and empirical CDFs depends on the number of bins \(N_{b}\) used for sampling, but in all studied cases none of the three theoretical distributions is rejected at the 95\(\%\) confidence level (Supplementary Table T1). For example, for spine volume the best fits, as measured by the Kolmogorov-Smirnov distance \(D_{KS}\), are the lognormal and gamma distributions, with gamma being the better choice for larger \(N_{b}\) (Supplementary Table T1). Similarly, for spine length the best fits are mostly gamma distributions, except in one case where the lognormal is slightly better (for the maximal possible \(N_{b}= 412\)). Nevertheless, the differences in \(D_{KS}\) between these two dominant distributions are rather small, which suggests that it may be difficult to pinpoint precisely which distribution, with a short or a heavy tail, fits better.
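A minimal sketch of the Kolmogorov-Smirnov distance \(D_{KS}\) for a fully specified theoretical CDF, assuming raw (unbinned) samples. The fits in the Supplementary material work on binned data with \(N_b\) bins, so this only illustrates the statistic; it does not reproduce the authors' procedure. The gamma CDF uses a plain power series for the regularized incomplete gamma function, adequate for moderate arguments only, and the \(1.36/\sqrt{n}\) threshold is the standard large-sample 95\(\%\) critical value (conservative when the parameters are estimated from the same data).

```python
import math

def gamma_cdf(x, alpha, theta, tol=1e-15, max_terms=10_000):
    """Gamma(shape=alpha, scale=theta) CDF via the series for the regularized
    lower incomplete gamma function P(alpha, x/theta). Fine for moderate x/theta."""
    if x <= 0:
        return 0.0
    z = x / theta
    term = 1.0 / alpha
    total = term
    for n in range(1, max_terms):
        term *= z / (alpha + n)
        total += term
        if term < tol * total:
            break
    return min(1.0, total * math.exp(alpha * math.log(z) - z - math.lgamma(alpha)))

def ks_distance(sample, cdf):
    """One-sample Kolmogorov-Smirnov distance D_KS = sup_x |F_emp(x) - F(x)|."""
    xs = sorted(sample)
    n = len(xs)
    d = 0.0
    for i, x in enumerate(xs, start=1):
        f = cdf(x)
        # The empirical CDF jumps from (i-1)/n to i/n at x, so check both sides.
        d = max(d, i / n - f, f - (i - 1) / n)
    return d

def ks_critical_95(n):
    """Approximate large-sample 95% critical value for D_KS."""
    return 1.36 / math.sqrt(n)
```

A fit is then retained when, for example, `ks_distance(volumes, lambda x: gamma_cdf(x, alpha, theta)) < ks_critical_95(len(volumes))`, where `volumes`, `alpha`, and `theta` are placeholders for a data set and its fitted parameters.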

Next, we examine how stable the distributions of spine sizes are across the whole developmental period (Fig. 3 and Supplementary Fig. S3). To address this, we use another data set on spine length and spine head diameter from the human hippocampus (Ref.30 and private communication). Importantly, the histograms for these two parameters appear visually invariant from the early age of 2 years to 71 years, with a possible exception for spine length at 5 months, for which the histogram is broader but has essentially the same mean (Fig. 3 and Supplementary Fig. S3). All empirical distributions of spine length and spine head diameter are consistent (at the 95\(\%\) confidence level) with all three theoretical distributions; however, the best fits (with the smallest \(D_{KS}\)) are provided predominantly by the gamma distribution (Supplementary Figs. S4 and S5; and Table T2). This result agrees with the fits of spine length from the cingulate cortex (Supplementary Figs. S1 and S2), for which the gamma distribution is also slightly superior.

Our next goal is to compute the empirical Shannon entropies of the discrete histograms of spine sizes in Figs. 1, 2 and 3 (and Supplementary Fig. S3), and to compare them with the continuous entropies of the three fitting probability distributions. We use Eq. (1) in the Methods for the continuous fitting distributions and a discrete version of this equation for the histograms. Both entropies depend on the spine size resolution or, equivalently, on the intrinsic noise amplitude \(\Delta x\) related to subspine molecular fluctuations associated with elementary changes in spine size (Eq. 2). The calculations reveal that the continuous distributions provide theoretical entropies (\(H_{th}\)) that approximate very well the empirical entropies (\(H_{em}\)) from the histograms for all fits in Figs. 1, 2, 3 and S3, with differences between the two mostly no greater than 5\(\%\) (Table 1). Overall, the gamma distribution provides the best approximations, which in many cases deviate by less than 1\(\%\) from the empirical entropies. The heavy-tailed lognormal distribution also gives very good approximations (mostly \(1-3\%\)), while the loglogistic distribution yields slightly less accurate but still sufficiently close values (mostly \(4-5\%\) deviation).
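Eq. (1) is given in the Methods and not reproduced here. The sketch below assumes it has the standard resolution-limited form \(H = -\int_0^\infty \rho(x)\log_2[\rho(x)\Delta x]\,dx\), whose histogram counterpart, with bin probabilities \(p_i\) and bin width \(b\), is \(H \approx -\sum_i p_i \log_2(p_i\Delta x/b)\). Both helper functions are hypothetical illustrations of this comparison between \(H_{em}\) and \(H_{th}\), not the authors' code.

```python
import math

def histogram_entropy(counts, bin_width, dx):
    """Discrete entropy (bits) of a histogram at resolution dx.
    Each bin's density is approximated by p_i / bin_width, giving
    H = -sum_i p_i * log2(p_i * dx / bin_width)."""
    n = sum(counts)
    h = 0.0
    for c in counts:
        if c > 0:  # empty bins contribute nothing
            p = c / n
            h -= p * math.log2(p * dx / bin_width)
    return h

def continuous_entropy(pdf, dx, upper, steps=100_000):
    """Midpoint-rule estimate (bits) of -integral_0^upper rho(x) log2(rho(x) dx) dx."""
    width = upper / steps
    h = 0.0
    for k in range(steps):
        rho = pdf((k + 0.5) * width)
        if rho > 0:
            h -= rho * math.log2(rho * dx) * width
    return h
```

When the bin width equals \(\Delta x\), the histogram formula reduces to the ordinary Shannon entropy \(-\sum_i p_i\log_2 p_i\). For an exponential density with mean S, the continuous integral evaluates to \(\log_2(eS/\Delta x)\), which is a convenient numerical check.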

To summarize, our fitting analysis reveals that the empirical data on spine sizes can be described well by three different continuous distributions with either short or heavy tails (gamma, lognormal, and loglogistic). However, slightly better fits are provided mostly by the gamma and occasionally by the lognormal distribution, which suggests that spine size distributions do not have very heavy tails. Furthermore, the entropies associated with these distributions give reasonably good approximations to the empirical entropies, again best for gamma and second-best for lognormal.

Entropy associated with spine sizes is nearly maximal for spine volume and area

We collected a large data set of dendritic spine sizes from different sources (Tables 2, 3, 4 and 5; and Methods). These data contain the means and standard deviations of spine (or PSD) volume, surface area, length, and spine head diameter in different brain regions of several mammalian species (mostly cerebral cortex and hippocampus). Based on the results in Figs. 1, 2, 3 and S1–S5, and Tables 1, T1 and T2, we assume that all the collected data on spine sizes can be described well by the three distributions discussed above, and that the entropies of these continuous distributions are good approximations of the empirical entropies of the (mostly unknown) empirical distributions underlying the data in Tables 2, 3, 4 and 5. With this assumption, we can estimate the entropy associated with each spine size parameter for the three distributions (Eq. (1) in the Methods) using only the means and standard deviations of the data. This is possible because the Shannon entropy of each of these two-parameter distributions is uniquely determined by its first two moments (without knowledge of higher moments), i.e. by its mean and standard deviation (see the "Methods").
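The moment matching described above can be sketched as follows. The sketch uses standard textbook results (the differential entropies of the gamma, lognormal, and log-logistic families and their mean and variance formulas) and assumes a resolution-limited entropy \(H = h/\ln 2 - \log_2\Delta x\), with \(h\) the differential entropy in nats; the paper's Eqs. 10, 17 and 27 may be written differently. Mean, SD, and \(\Delta x\) must be in the same units, and the digamma function is implemented by hand because the Python standard library lacks it.

```python
import math

LN2 = math.log(2.0)

def digamma(x):
    """psi(x) for x > 0: upward recurrence, then the asymptotic series."""
    result = 0.0
    while x < 6.0:
        result -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    return result + math.log(x) - 0.5 / x - inv2 * (1 / 12 - inv2 * (1 / 120 - inv2 / 252))

def _bits(h_nats, dx):
    """Resolution-limited entropy in bits, assuming H = h/ln2 - log2(dx)."""
    return h_nats / LN2 - math.log2(dx)

def entropy_gamma(mean, sd, dx):
    alpha = (mean / sd) ** 2   # shape: SD/mean = 1/sqrt(alpha)
    theta = sd ** 2 / mean     # scale: mean = alpha * theta
    h = alpha + math.log(theta) + math.lgamma(alpha) + (1 - alpha) * digamma(alpha)
    return _bits(h, dx)

def entropy_lognormal(mean, sd, dx):
    s2 = math.log(1 + (sd / mean) ** 2)   # variance of ln x
    mu = math.log(mean) - s2 / 2          # mean of ln x
    h = mu + 0.5 * math.log(2 * math.pi * math.e * s2)
    return _bits(h, dx)

def entropy_loglogistic(mean, sd, dx):
    # With b = pi/beta in (0, pi/2): mean = a*b/sin(b) and (SD/mean)^2 = tan(b)/b - 1.
    # tan(b)/b increases monotonically from 1 to infinity, so bisection finds b.
    target = 1 + (sd / mean) ** 2
    lo, hi = 1e-9, math.pi / 2 - 1e-12
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if math.tan(mid) / mid < target:
            lo = mid
        else:
            hi = mid
    b = 0.5 * (lo + hi)
    beta = math.pi / b
    a = mean * math.sin(b) / b   # scale matching the mean
    h = math.log(a / beta) + 2   # differential entropy of the log-logistic law
    return _bits(h, dx)

def entropy_max(mean, dx):
    """Upper bound for any positive distribution with this mean (exponential law)."""
    return math.log2(math.e * mean / dx)
```

For one row of a table, `entropy_gamma(mean, sd, dx) / entropy_max(mean, dx)` would then give the efficiency \(\eta\), once a value of \(\Delta x\) from Eq. (2) is supplied.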

We denote the values of the continuous entropies as \(H_{g}, H_{ln}, H_{ll}\) (Eqs. 10, 17, 27); they provide a good measure of the empirical information content in dendritic spines. Additionally, for each distribution we compute a theoretical upper bound on the entropy for a given mean spine size (\(H_{g,m}, H_{ln,m}, H_{ll,m}\); Eqs. 12, 19, 29). The bound \(H_{g,m}\) for the gamma distribution is the highest possible bound \(H_{max}\) for any distribution with the same mean, not only for the three considered here (see the Methods; Eqs. (4) and (12)). Since mean spine sizes generally differ between brain regions (and even between studies of the same region), the theoretical upper bounds on entropy also generally differ, as they depend on the mean size S (Eqs. 12, 19, 29). Moreover, the upper bounds for the lognormal and loglogistic distributions are slightly smaller than the bound \(H_{max}\) for the gamma distribution. The latter bound is reached for the special case of the gamma distribution with \(\alpha =1\), i.e. the exponential distribution, for which the optimal ratio of standard deviation to mean spine size equals 1 (see the "Methods"). However, it should be stressed that none of our spine data can be fitted by an exponential distribution, since \(\alpha > 1\) in all fitting figures (Figs. 1, 2 and 3). This in turn suggests that real spine distributions deviate to some extent from the theoretically optimal exponential distribution. By comparing the data-driven continuous entropies H (\(H_{g}\), \(H_{ln}\), \(H_{ll}\)) for all three distributions with the maximal entropy \(H_{max}\), we can assess how close the entropy contained in spine sizes is to the theoretical optimum for each distribution. This closeness is quantified by the entropy efficiency \(\eta = H/H_{max}\) (Eq. 30 in the Methods).
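Assuming Eq. (1) has the standard resolution-limited form \(H = -\int_0^\infty \rho\log_2(\rho\,\Delta x)\,dx\), the statement that the exponential distribution (gamma with \(\alpha=1\)) attains the highest entropy for a given mean S follows from a textbook Lagrange-multiplier argument, sketched below; the Methods' Eqs. (4) and (12) may present it differently.

```latex
\begin{aligned}
\mathcal{L}[\rho] &= -\int_0^\infty \rho\,\ln(\rho\,\Delta x)\,dx
  + \lambda_0\Big(\int_0^\infty \rho\,dx - 1\Big)
  + \lambda_1\Big(\int_0^\infty x\,\rho\,dx - S\Big),\\
0 = \frac{\delta\mathcal{L}}{\delta\rho}
  &= -\ln(\rho\,\Delta x) - 1 + \lambda_0 + \lambda_1 x
  \;\;\Longrightarrow\;\; \rho(x) = \frac{e^{\lambda_0 - 1}}{\Delta x}\,e^{\lambda_1 x},\\
\int_0^\infty \rho\,dx = 1,\;\; \int_0^\infty x\,\rho\,dx = S
  &\;\;\Longrightarrow\;\; \lambda_1 = -\frac{1}{S},\qquad \rho(x) = \frac{1}{S}\,e^{-x/S},\\
H_{max} &= -\int_0^\infty \rho\,\log_2(\rho\,\Delta x)\,dx
  = \frac{1}{\ln 2}\Big[\ln\frac{S}{\Delta x} + \frac{1}{S}\int_0^\infty x\,\rho\,dx\Big]
  = \log_2\!\Big(\frac{e\,S}{\Delta x}\Big),\\
\eta &= \frac{H}{H_{max}} .
\end{aligned}
```

Because the entropy functional is concave and the constraints are linear, this stationary point is the global maximum, so every distribution with mean S, including the lognormal and loglogistic fits, satisfies \(H \le H_{max}\), with equality only for the exponential case, whose SD/mean ratio is 1.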

Another relevant quantity that we compute is the deviation from optimality D (\(D_{g}, D_{ln}, D_{ll}\)), which measures how far the two parameters characterizing a given distribution are from the optimal values that define the corresponding upper-bound entropy (Eqs. 38, 39 and 40 in the Methods). This quantity is analogous to a standard error: a small value indicates that the empirical parameters are close to their optimal values.

The results in Figs. 4 and 5, and Tables 2 and 3, for spine volume and area (including PSD volumes and areas) indicate that the corresponding data-driven continuous entropies essentially reach their theoretical upper bounds in the vast majority of cases (the maximal possible bounds, given by \(H_{g,m}\), are shown in boldface). This is also evident from entropy efficiencies \(\eta\) of \(90-100\%\), especially for the gamma distribution, and from the relatively small values of the deviation from optimality D (Tables 2 and 3). Moreover, the empirical ratios of standard deviation to mean (SD/mean) for spine volumes and areas are in many cases in the range \(0.7-1.3\), i.e. at most \(30 \%\) away from the optimal ratio (1.0) for the gamma distribution (Figs. 4B and 5B). There are, however, a few exceptions with suboptimal entropies, most notably the cerebellum, for which SD/mean is much smaller than 1. There are also some negative values of entropy for gamma distributions (macaque monkey), but these cases are only mathematical artifacts (see the Discussion).
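Because the mean S only shifts the entropy by the constant \(\log_2 S\), and \(\Delta x\) by \(-\log_2\Delta x\), the optimal SD/mean ratio can be located at \(S=1\) with \(\Delta x\) dropped. The sketch below scans SD/mean for the gamma and lognormal families using their textbook differential entropies (an assumption about how the Methods' Eqs. 12 and 19 were obtained). For the lognormal, the fixed-mean entropy is \(-s^2/2 + \ln s\) plus a constant, with \(s^2=\ln(1+(\mathrm{SD/mean})^2)\), so the optimum is \(s=1\), i.e. SD/mean \(=\sqrt{e-1}\approx 1.31\). The loglogistic is omitted because its fixed-mean entropy keeps increasing with SD/mean, so it has no finite optimum.

```python
import math

def digamma(x):
    """psi(x) for x > 0: upward recurrence, then the asymptotic series."""
    result = 0.0
    while x < 6.0:
        result -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    return result + math.log(x) - 0.5 / x - inv2 * (1 / 12 - inv2 * (1 / 120 - inv2 / 252))

def gamma_entropy_unit_mean(cv):
    """Differential entropy (nats) of a gamma law with mean 1 and SD/mean = cv."""
    alpha = 1.0 / cv ** 2
    theta = 1.0 / alpha
    return alpha + math.log(theta) + math.lgamma(alpha) + (1 - alpha) * digamma(alpha)

def lognormal_entropy_unit_mean(cv):
    """Differential entropy (nats) of a lognormal law with mean 1 and SD/mean = cv."""
    s2 = math.log(1 + cv ** 2)
    mu = -s2 / 2  # ensures exp(mu + s2/2) = 1
    return mu + 0.5 * math.log(2 * math.pi * math.e * s2)

def best_cv(entropy_fn, lo=0.05, hi=3.0, step=1e-4):
    """Grid search for the SD/mean ratio that maximizes entropy at fixed mean."""
    n = int(round((hi - lo) / step))
    grid = [lo + k * step for k in range(n + 1)]
    return max(grid, key=entropy_fn)

if __name__ == "__main__":
    print("gamma optimum SD/mean:", round(best_cv(gamma_entropy_unit_mean), 3))
    print("lognormal optimum SD/mean:", round(best_cv(lognormal_entropy_unit_mean), 3))
```

The two optima, 1.0 for gamma and about 1.3 for lognormal, bracket most of the empirical SD/mean values for spine volume and area reported above.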

The near optimality of entropy for spine volume and area across different species and many cortical and hippocampal regions is a remarkable result. It suggests that, in most cases and regardless of brain size, brain region, age, or neurophysiological condition, the distributions of spine volume and area adjust themselves so as to almost maximize their information content subject to the size constraint. The maximal values of the entropy depend logarithmically on the mean spine volume or area and lie in the range \(2.3-3.5\) bits per spine, depending on the average spine size. This means that spines on average have between 5 and 11 (\(\approx 2^{2.3}-2^{3.5}\)) distinguishable structural states.

The results for spine length and spine head (or neck) diameter show that the corresponding entropies are further from their theoretical optima than those for spine volume or area, especially for head diameter (lower efficiency \(\eta\) and higher deviations D in Tables 4 and 5; Figs. 6 and 7). This is a consequence of larger deviations of their SD/mean ratios from optimality, which are mostly below 0.7 (Figs. 6B and 7B). Nevertheless, the spine length entropies, although not in the vicinity of the upper bound, are significantly closer to it than the spine head diameter entropies, since the latter generally correspond to lower SD/mean ratios (Figs. 6 and 7; Tables 4 and 5; the maximal upper bounds \(H_{g,m}\) are shown in boldface). Interestingly, the information contained in spine length and head diameter (\(\sim 3-5\) bits) is greater than in spine volume and area, yielding between 8 and 30 structural spine states.

It is important to emphasize that the above general trends for entropy maximization across various mammals are also consistent with the results for the human brain, for which we know the detailed probability distributions of spine sizes (Figs. 1, 2, 3 and S1–S5). Specifically, for the human cingulate cortex, the best fits to spine volumes are (mostly) the gamma and lognormal distributions (Supplementary Table T1), which yield entropy efficiencies \(\eta\) of \(97-99\%\) and \(92-93\%\), respectively, with relatively low deviations from optimality D of \(37-61\%\) and \(20-24\%\), and with SD/mean \(\approx 0.8\) for spine volumes (Table 2). In contrast, for spine length in the same cortical region, with similar best-fitting distributions, the entropy efficiency is noticeably lower (about \(86-90\%\)), the deviations D are much higher (\(184-478\%\)), and the SD/mean ratios are generally lower (Table 4). For the human hippocampus, spine length is best described by the gamma distribution (Supplementary Table T2), which yields comparable results: an entropy efficiency of \(88-90\%\) with deviations of \(273-348\%\) (Table 4). On the other hand, for human hippocampal spine head diameter, which is best fitted by both the gamma and loglogistic distributions, we obtain an even lower entropy efficiency of \(82-86\%\), and slightly higher deviations for the gamma distribution (\(344-410\%\); Table 5).

Taken together, the results shown in Figs. 4, 5, 6 and 7 indicate that although less information is encoded in spine volume and area than in spine length or diameter, the former encoding is much more efficient. That is, the information capacity associated with volume and surface area is nearly the maximum possible for the given mean values of spine volume and area, across different species and conditions.

Density of entropy in spines is not optimal

As an alternative to information maximization in dendritic spines, we also consider the possibility that the entropy density, rather than the entropy, is maximized. In particular, we study the ratio F of the continuous data-driven entropy to the mean spine size (volume, area, length, or diameter) as the quantity to be maximized (Eq. 41 in the Methods). Theoretically, for each of the three considered distributions the entropy density F has a single maximum as a function of spine size. The height of that maximum is the upper bound on the entropy density, and our goal is to determine how much the data-driven F differs from this theoretical upper bound.

In Fig. 8 we present the data-driven values of the entropy density and compare them with the maximal values of F. The entropy densities F are generally far below their upper bounds. Specifically, F is \(\sim 1000\) and \(\sim 100\) times smaller than its maximal values for spine volume and area, respectively (Fig. 8A,B). For spine length and diameter, F is closer to its upper bound, but still reaches only \(\sim 10\%\) of it (Fig. 8C,D). The primary reason for the strong suboptimality of entropy density is that the optimal spine size that maximizes F lies below the size resolution \(\Delta x\) (see the "Methods"). This yields a very high maximal entropy density, which is unattainable for empirical spine sizes.

We conclude that entropy density is not optimized in dendritic spines, which suggests that this quantity is less relevant than entropy itself for information encoding in synapses.

Sensitivity of entropy maximization to uncertainty in the model and in the data

We consider two types of uncertainty that might affect our general result of entropy maximization in spines. The first type is associated with uncertainty in estimating the theoretical resolution \(\Delta x\) in Eq. (2). The second type is associated with experimental uncertainty in estimating the standard deviations of spine sizes, which arises because spine sizes were measured using different techniques, each possibly with a different systematic error.

In Fig. 9A we show the dependence of entropy and entropy efficiency on the uncertainty in the value of \(\Delta x\), which is characterized by the parameter r (see “Uncertainty parameter for intrinsic noise amplitude” in the Methods). The value \(r=1\) is the nominal value used for the estimates in Tables 2, 3, 4 and 5. Values of r different from unity quantify deviations from the assumption of a Poisson distribution for the number of actin molecules spanning a linear dimension of a typical spine. As can be seen, a \(50\%\) deviation from \(r=1\) (either up or down) can change the value of entropy by as much as 40 \(\%\), equally for all three distributions, but the efficiency \(\eta\) changes only slightly, by at most \(1.5-2 \%\) (Fig. 9A). A much greater decrease in efficiency \(\eta\) is observed only for much larger deviations, when r is significantly greater than 1, which corresponds to very high variability in the number of actin molecules. The rate of decrease of \(\eta\) depends on both the distribution and the spine size parameter.
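The weak sensitivity of \(\eta\) to r has a simple source if r rescales the resolution multiplicatively, \(\Delta x \to r\,\Delta x\) (an assumption; the Methods define r through the Poisson actin-count argument, which may enter \(\Delta x\) differently). Every resolution-limited entropy then shifts by the same amount, \(-\log_2 r\) bits, in both the numerator and the denominator of \(\eta = H/H_{max}\), so the ratio moves far less than either entropy. The values H = 2.8 bits and \(H_{max}\) = 3.0 bits below are illustrative, not taken from Tables 2-5.

```python
import math

def rescaled(h_bits, r):
    """Entropy after dx -> r*dx, assuming H = h/ln2 - log2(dx)."""
    return h_bits - math.log2(r)

def efficiency(h_bits, h_max_bits, r=1.0):
    """eta = H/H_max evaluated at the rescaled resolution r*dx."""
    return rescaled(h_bits, r) / rescaled(h_max_bits, r)

if __name__ == "__main__":
    H, H_MAX = 2.8, 3.0  # illustrative values only
    for r in (0.5, 1.0, 1.5, 4.0):
        print(f"r={r:3.1f}  H={rescaled(H, r):5.2f} bits  eta={efficiency(H, H_MAX, r):.3f}")
```

With these numbers, a \(\pm 50\%\) change in r moves H by up to one bit (roughly a third of its value), while \(\eta\) changes by less than two percentage points; \(\eta\) drops markedly only when r becomes large enough that \(\log_2 r\) approaches \(H_{max}\).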

The dependence of entropy H and its efficiency \(\eta\) on the uncertainty q in the standard deviations of spine sizes (\(SD(q)= q\cdot SD\)) is qualitatively different (Fig. 9B). The value \(q=1\) corresponds to the empirical SD taken from Table 2, and \(q \ll 1\) or \(q \gg 1\) indicate a much smaller or much larger SD than the empirical value. All three distributions yield significant drops in entropy and efficiency as q decreases from unity to zero. However, both H and \(\eta\) decrease only moderately, by \(25-30 \%\), for a \(50 \%\) reduction in q (from 1 to 1/2). The situation is different when q increases (Fig. 9B). In this case, H and \(\eta\) decrease quickly only for the gamma distribution. For the remaining heavy-tailed distributions, entropy and efficiency vary very weakly; in particular, \(\eta\) for the loglogistic distribution approaches \(100 \%\) asymptotically. The latter follows from the fact that the optimal SD/mean ratio for the loglogistic distribution is infinite.

Discussion

Summary of the main results: optimization of information encoded in spine volume and area, but not in spine length or head diameter

Our results suggest that the sizes of dendritic spines can be described equally well by three different skewed distributions (gamma, lognormal, and loglogistic, though gamma yields slightly better fits), since none of them is rejected by the goodness-of-fit test at the 95\(\%\) confidence level (Supplementary Tables T1 and T2). This also supports, more quantitatively, previous studies that fitted skewed distributions to spine sizes13,33,34,41,42,43,44,45,46,47,48,49. For the human data we used, this conclusion is independent of the number of bins, as verified by a goodness-of-fit procedure. Consequently, we calculated and compared spine entropies (i.e. information content in spines) for all three probability densities, and confirmed that these continuous entropies are close, especially for gamma and lognormal, to the empirical entropies calculated from the histograms of the size data (Table 1). This positive result allowed us to extend the entropy calculations, with some confidence, to other brain regions and species for which we do not know the empirical distributions of spine sizes, but only their means and standard deviations. These two empirical parameters are sufficient to determine the entropy for all three distributions above.

The main result of this study is that the entropy (encoded information) of the volumes and areas of cortical and hippocampal dendritic spines is almost maximized, reaching in many cases \(90-100 \%\) of its maximal value for the given mean spine volume or surface area (Tables 2 and 3; Figs. 4 and 5). From a different perspective, this means that the volumes and areas of dendritic spines are almost as random as possible given the constraint on their size. In other words, our calculations and data suggest that spines simultaneously adjust the means and standard deviations of their sizes to maximize the information content in their structure. The important point is that spines achieve this maximization (for any given spine size) by adjusting only one parameter: the SD/mean ratio of their volumes or areas (i.e. the absolute values of SD and mean do not matter for such entropy maximization). Indeed, most of the empirical SD/mean ratios for spine volume and area are close to the optimal ratios for the gamma (1.0) and lognormal (1.3) distributions. This general conclusion is also supported by relatively small deviations of the distribution parameters from optimality (D values mostly below \(20-30\%\) for heavy-tailed distributions). Furthermore, the optimality of the entropy of spine volume or area is mostly independent of species (i.e. brain size), brain region (except the cerebellum), age, and condition (but see below), which suggests that it may be universal, at least in the cerebral cortex and hippocampus (Tables 2 and 3). The exception of the cerebellum, with an information efficiency of only \(35-45\%\), is intriguing; from a theoretical point of view, it is a consequence of the uniformity of spine sizes (though not of PSD sizes) in that part of the brain50. This uniformity might serve a functional role other than information storage.

In most cases, the near optimality of information content in spine volumes and areas is also independent of the chosen spine size distribution. Several exceptions for the gamma distribution in Tables 4 and 5 reflect the fact that this probability density poorly handles cases in which the standard deviation exceeds the mean. This is also the reason for several negative entropy values associated with the gamma distribution in Tables 2 and 3, which are only a mathematical artifact (see the Methods after Eq. 9) related to the fact that continuous distributions, unlike discrete ones, can yield negative entropies. The negative entropy cases appear almost exclusively for the macaque monkey, which leads us to hypothesize that spine volumes and areas in this species are better described by heavy-tailed distributions, which give entropy efficiencies closer to optimality (Tables 2 and 3). However, one must be careful with the loglogistic distribution, as its optimal SD/mean ratio is infinite, implying in practice that there is no single optimum for this distribution. This fact, together with slightly worse fits to the volume and entropy data (Table 1 and Supplementary Table T1), suggests that the loglogistic density is probably not the best choice for approximating spine size distributions, which likely do not have very heavy tails (i.e. do not decay as a power law).

The near maximization of entropy in spine volumes and areas is not very sensitive to uncertainties in the precise value of the noise amplitude \(\Delta x\) or in the empirical variances of spine sizes (Fig. 9). The former indicates that small deviations from the assumption of a Poisson distribution for the number of actin molecules underlying spine structure have little effect on the result. The latter suggests that, despite the different experimental techniques, with various accuracies, used to measure spine sizes in different studies, the precise values of the standard deviations are not critical for the main result. Again, what matters most is the ratio of the standard deviation to the mean spine volume or area.

The efficiency of the information encoded in spine length and spine head (or PSD) diameter is significantly lower than that of the information encoded in spine volume and area (compare Tables 4 and 5 with Tables 2 and 3; Figs. 6 and 7), suggesting that these variables do not store synaptic information optimally. Similarly, the entropy density is not optimized either, as its empirical values are far below their upper bounds (Fig. 8). This indicates that information is not densely packed in the available space of dendritic spines; furthermore, entropy density does not seem to be the relevant quantity for the neuroanatomical and functional organization of dendritic spines, if we take an evolutionary point of view into account51,52.

Finally, a few studies are similar in spirit to our general approach but differ in context and details. Notably, Refs.53,54 assumed information maximization under constraints to derive the optimal distribution of synaptic weights and to make some general qualitative observations. In Ref.53, the optimal distribution of synaptic weights derived in the context of the perceptron was a mixture of a Gaussian and a Dirac delta, which the authors fitted to data from Purkinje cells in the rat cerebellum. In Ref.54, the authors mainly used discrete exponential or stretched exponential distributions of synaptic weights as optimal solutions of entropy maximization, but without a quantitative comparison between theory and experiment. In contrast, we took a different approach, with different distributions, and showed quantitatively the near maximization of information content in synaptic volumes and areas in different parts of the brain across several mammals. In the experimental work of Ref.31, the authors also estimate the information content of dendritic spines, but with a different, more engineering-oriented method, and only for one species and brain region: the rat hippocampus.

Stability of synaptic information, memory, and physiological conditions

We found that different populations of dendritic spines can store between \(1.9\) and \(3.4\) bits of information per spine in their volumes and surface areas (Tables 2 and 3). This means that, on average, a typical spine can have between \(2^{1.9}\) and \(2^{3.4}\), i.e. roughly \(4-10\), distinguishable geometric internal states. Because of the link between spine structure and function (e.g. mean spine volume is proportional to postsynaptic current;2), these geometric states can be translated into \(4-10\) possible physiological states. For example, for spine volume in rat hippocampus we obtain \(2.7-2.8\) bits per spine. This is similar to or slightly smaller than the values calculated in55 and31 (\(\sim 2.7-4.7\) bits), and the difference can be attributed to the different methods of estimating information. Our results indicate that spines in the mammalian cerebral cortex can hold about \((1-1.5)\cdot 10^{12}\) bits/cm\(^{3}\) of memory in their volumes and areas. This assumes, in agreement with the data, that cortical synaptic density is roughly independent of brain size and on average \(5\cdot 10^{11}\) cm\(^{-3}\)56,57.
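The arithmetic behind these numbers is easy to reproduce. The short Python sketch below does two things:

- it converts an entropy of H bits into the number of distinguishable states, \(2^{H}\);
- it multiplies bits per spine by the cortical synaptic density of \(5\cdot 10^{11}\) cm\(^{-3}\) quoted above.

Using 2 to 3 bits per spine as representative of the volume and area entropies is our assumption for illustration.

```python
def distinguishable_states(bits_per_spine: float) -> float:
    """Number of distinguishable states implied by an entropy of H bits, i.e. 2**H."""
    return 2.0 ** bits_per_spine


def memory_density(bits_per_spine: float, synapses_per_cm3: float = 5e11) -> float:
    """Bits per cm^3 if every synapse stores `bits_per_spine` bits (density from the text)."""
    return bits_per_spine * synapses_per_cm3


for h in (1.9, 3.4):
    print(f"{h} bits -> {distinguishable_states(h):.1f} states")
for h in (2.0, 3.0):
    print(f"{h} bits/spine -> {memory_density(h):.1e} bits/cm^3")
```

The first loop gives about 3.7 and 10.6 states. The second gives \(1.0\cdot 10^{12}\) and \(1.5\cdot 10^{12}\) bits/cm\(^{3}\).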

At the level of a single dendritic spine, neural memory is presumably stored in the PSD structure, i.e. in the number and activities of its various proteins, which are coupled with AMPA and NMDA receptors on the spine membrane1,3,4,5,8,58,59,60. Moreover, the data show that mean spine volume is proportional to mean PSD diameter20. This implies that spine volume is positively correlated with the “memory” variable associated with PSD size, and consequently that the entropies of these two variables should be proportional61. It suggests that the entropy of spine volume (and/or area) could serve as a proxy for “memory capacity”, and that its near maximization should reflect near optimality of synaptic memory (given a size constraint).

It is also notable that the entropy efficiency \(\eta\) remains relatively stable in many cases even when conditions change. For example, under stress vs. non-stress, mutation vs. control, or LTP vs. non-LTP conditions, \(\eta\) stays approximately constant despite changes in the means and standard deviations of spine sizes (Tables 2, 3, 4 and 5). This again suggests that dendritic spines can simultaneously adjust their mean sizes and variances to maintain nearly maximal information content (e.g. data from62 for spine/PSD volumes during LTP induction in rat hippocampus; Table 2). However, there are some exceptions to this stability:

- LTP induction generally enlarges spines but does not necessarily increase their entropy and efficiency, which can change dynamically during LTP (data from63; Table 3).
- Stress can decrease the amount of information stored in the volumes and areas of spines in rat prefrontal cortex (data of64; Tables 2 and 3). Paradoxically, the same stress can increase the information encoded in spine length (Table 4).
- In human prefrontal cortex, Alzheimer’s disease can decrease the information stored in spine length and head diameter (data of65; Tables 4 and 5).

Overall, the widespread stability of information efficiency might suggest that some compensatory mechanisms operate in synapses to counteract local memory degradation. Interestingly, the same conclusion can be reached for the developmental data on human hippocampus30, where the entropy of spine length and head diameter is remarkably invariant across the human lifespan (Tables 4 and 5). In contrast, the entropy efficiency of PSD area in rat forebrain during development is nonmonotonic, with a visible maximum at postnatal day 21, although the differences are not large (data of66; Table 3).

Given the above, one can speculate that a serious alteration of a neural circuit, e.g. by Alzheimer’s disease, can significantly modify the sizes of dendritic spines. That effect can be quantified by a single number, namely the entropy of the size distribution. Based on the data of65 in Tables 4 and 5, we predict that progression of Alzheimer’s disease should gradually reduce the entropy of spine volumes and areas away from their maximal values. That would correspond to a decrease in long-term synaptic information capacity, which should correlate with a decline in general “cognitive capabilities”. It would be interesting to perform experiments linking spine structure, quantified by entropy, with behavioral performance in Alzheimer’s patients in different parts of the cerebral cortex and hippocampus.

Implications for neuroanatomical and metabolic organization of the cerebral cortex in the context of synaptic information capacity

Information is always associated with energy67. It has been suggested that information processing in neurons is energy efficient, with neurons preferring low firing rates37,40,68,69,70,71, and that neural metabolism scales sublinearly with brain size72. We have an analogous situation in this study for dendritic spines. From Eqs. (12), (19), and (29) it follows that the maximal entropy of spine sizes depends only logarithmically (weakly) on mean spine size, while spine energy consumption is proportional to it. The latter follows from two facts: mean spine size scales linearly with the number of AMPA receptors on the spine membrane19, and that number in turn sets spine energy consumption related to synaptic transmission37,38,40 and plasticity39. Consequently, spines should favor small sizes to be energy efficient for information storage. This qualitatively agrees with the skewed empirical distributions of spine sizes, which show substantial SD/mean ratios. We show here that these SD/mean ratios for spine volume and area are close to optimal for maximizing information content. In this light, our result is essentially an additional example of the energetic efficiency of information73,74, this time at the synaptic level and on a long time scale. It might suggest the universality of optimal encoding in synapses via constrained entropy maximization. Furthermore, because entropy maximization allows us to compute optimal SD/mean ratios for spine sizes, it could serve as a useful tool to derive or predict neuroanatomical properties of synapses along dendrites, e.g. their sizes and densities75,76.

Methods

Data collection and analysis for the sizes of dendritic spines

All the experimental data on the sizes of dendritic spines used in this study were collected from different published sources and concern several mammals. Data for mouse brain come from33,45,63,77,78,79,80,81,82,83,84,85,86, for rat brain from32,50,62,64,66,87, for rabbit brain from88, for echidna brain from89, for cat brain from90,91, for macaque monkey brain from92,93,94,95,96, for dolphin brain from97, and for human brain from30,34,65,78,98,99. The data come from both cortical and subcortical regions, at different animal ages and under different physiological conditions. The cortical areas include piriform, somatosensory, visual, prefrontal, cingulate, parietal, temporal, and auditory cortex. The subcortical regions include hippocampus, striatum, cerebellum, and forebrain.

The data for fitting distributions of dendritic spine sizes were taken from34 (human cingulate cortex) and from30 (human hippocampus). For cingulate cortex we had either 5334 or 3355 data points for spine volume, and either 5494 or 3469 data points for spine length (for 40- and 85-year-old individuals, respectively). For hippocampus we had the same number of data points for spine length and spine head diameter, but the numbers are age-specific: 819 data points for a 5-month-old, 1108 for a 2-year-old, 915 for a 23-year-old, 657 for a 27-year-old, 1024 for a 38-year-old, 1052 for a 45-year-old, 662 for a 57-year-old, 805 for a 58-year-old, 1022 for a 68-year-old, 1026 for a 70-year-old, and 1032 for a 71-year-old individual. Histograms of these data were fitted to the three distributions (gamma, lognormal, and loglogistic) using standard tools in Matlab and Python.

As a measure of the goodness of fit we used the Kolmogorov-Smirnov test100,101. For this, we constructed a cumulative distribution function (CDF) for each data set (empirical CDF). We compared it with three theoretical CDFs corresponding to lognormal, loglogistic, and gamma probability densities with the same mean and variance as the original raw data. The test first determines the Kolmogorov-Smirnov distance, i.e. the maximum absolute deviation \(D_{KS}\) of the empirical CDF from the theoretical CDF. It then compares \(D_{KS}\) with a critical value \(D_{N_{b}}(P)\), which depends on the number of sampling bins \(N_{b}\) and the significance level P. If \(D_{KS} < D_{N_{b}}(P)\) for a given \(N_{b}\) and P, the hypothesis that the data follow the theoretical distribution cannot be rejected, i.e. the fit is statistically acceptable100. We used the significance level \(P=0.05\) (corresponding to a confidence level of \(95\%\)). The number of bins \(N_{b}\) was variable: 20, 30, or the maximal possible number for a given sample (412 for cingulate cortex, and 256 or 100 for hippocampus).
Among the three theoretical distributions to which the data were compared, we chose that with the smallest Kolmogorov-Smirnov distance \(D_{KS}\) as the best fit. That best fit often depends on \(N_{b}\). The code for calculations is provided in the Supplementary Information.
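To make the goodness-of-fit procedure concrete, here is a minimal standard-library Python sketch of one possible implementation. It is not the code from the Supplementary Information. The sketch:

- builds the three moment-matched theoretical CDFs;
- evaluates the empirical CDF at \(N_{b}\) bin edges;
- returns \(D_{KS}\) for each distribution and picks the smallest.

Several choices are our assumptions:

- the bin placement (equal-width bins between the smallest and largest observation);
- the helper names;
- the synthetic gamma-distributed sample, which is not data from the study.

The loglogistic shape b is obtained by bisection from \(\tan (\pi /b)/(\pi /b) = 1 + (\sigma /\langle x\rangle )^{2}\), our rearrangement of the standard loglogistic moments. Critical values \(D_{N_{b}}(P)\) are not computed here and would still have to be looked up for the chosen \(N_{b}\) and P.

```python
import math
import random
from bisect import bisect_right


def gamma_cdf(x, alpha, beta):
    """Regularized lower incomplete gamma P(alpha, beta*x), evaluated by its power series."""
    if x <= 0:
        return 0.0
    z = beta * x
    term, total, n = 1.0, 1.0, 0
    while term > 1e-15 * total and n < 100000:
        n += 1
        term *= z / (alpha + n)
        total += term
    return min(1.0, math.exp(alpha * math.log(z) - z - math.lgamma(alpha + 1.0)) * total)


def lognormal_cdf(x, mu, sigma):
    if x <= 0:
        return 0.0
    return 0.5 * (1.0 + math.erf((math.log(x) - mu) / (sigma * math.sqrt(2.0))))


def loglogistic_cdf(x, a, b):
    if x <= 0:
        return 0.0
    return 1.0 / (1.0 + (x / a) ** (-b))


def loglogistic_shape(ratio):
    """Bisection for b > 2 solving tan(pi/b)/(pi/b) = 1 + ratio**2, with ratio = sd/mean."""
    target = 1.0 + ratio ** 2
    lo, hi = 2.0 + 1e-12, 1e4
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        theta = math.pi / mid
        if math.tan(theta) / theta > target:
            lo = mid  # left side too large: b must increase
        else:
            hi = mid
    return 0.5 * (lo + hi)


def moment_matched_cdfs(mean, sd):
    """CDFs of gamma, lognormal and loglogistic densities sharing the given mean and SD."""
    alpha, beta = (mean / sd) ** 2, mean / sd ** 2
    s2 = math.log(1.0 + (sd / mean) ** 2)
    mu, sigma = math.log(mean) - s2 / 2.0, math.sqrt(s2)
    b = loglogistic_shape(sd / mean)
    a = mean * b * math.sin(math.pi / b) / math.pi
    return {
        "gamma": lambda x: gamma_cdf(x, alpha, beta),
        "lognormal": lambda x: lognormal_cdf(x, mu, sigma),
        "loglogistic": lambda x: loglogistic_cdf(x, a, b),
    }


def ks_distances(data, n_bins=30):
    """D_KS = max |empirical CDF - model CDF| over n_bins equal-width bin edges."""
    xs = sorted(data)
    n = len(xs)
    mean = sum(xs) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in xs) / (n - 1))
    edges = [xs[0] + (xs[-1] - xs[0]) * k / n_bins for k in range(1, n_bins + 1)]
    result = {}
    for name, cdf in moment_matched_cdfs(mean, sd).items():
        result[name] = max(abs(bisect_right(xs, e) / n - cdf(e)) for e in edges)
    return result


rng = random.Random(0)
sample = [rng.gammavariate(2.0, 0.5) for _ in range(2000)]  # synthetic data only
dists = ks_distances(sample, n_bins=30)
best_fit = min(dists, key=dists.get)  # smallest D_KS is taken as the best fit
```

We evaluate the CDFs in closed form (or by a short series for the gamma case) so the sketch has no dependencies. With SciPy available, the corresponding `scipy.stats` distributions would be the more usual choice.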

Theoretical modeling

Maximization of entropy

We express the information content in a population of dendritic spine sizes as Shannon entropy, which is a standard measure of information67. Shannon entropy H is defined for discrete stochastic variables. Its continuous counterpart, the so-called differential entropy h, is defined only for continuous variables61, but the two can be related. The basic idea is that any continuous stochastic variable x can be decomposed into small bins of length \(\Delta x\), with the probability of each bin given by the probability density \(\rho (x)\) in that bin. This decomposition allows us to approximate the Shannon entropy H(x) of a continuous variable discretized with accuracy \(\Delta x\) by the differential entropy \(h(x)= -\int dx\; \rho (x)\log _{2}(\rho (x))\), via the relation \(H(x)\approx h(x) - \log _{2}\Delta x\) (see Theorem 9.3.1 in61). A direct consequence of this relation is that the amount of information contained in the probability distribution \(\rho (x)\) of a continuous random variable x (\(0 \le x < \infty\)) can be quantified as (102; see Eqs. 4.16, 4.26, 4.27 and the discussion in this book)

\[ H = -\int _{0}^{\infty } dx\; \rho (x)\log _{2}\left( \rho (x)\Delta x\right) , \tag{1} \]

where \(\Delta x\) can be viewed as the limit on measurement accuracy that sets the scale for the resolution of x (see also Eq. (3.11) and the related discussion in71 for a Gaussian case). The appearance of \(\Delta x\) in Eq. (1) is also a practical necessity: it keeps the entropy H dimensionless by making the argument of the logarithm unitless. (Note that the normalization condition \(\int _{0}^{\infty } \rho (x) \; dx = 1\) must be satisfied, which implies that \(\rho (x)\) has units of the inverse of x, and hence also of the inverse of \(\Delta x\).)
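The relation \(H(x)\approx h(x) - \log _{2}\Delta x\) behind Eq. (1) can be checked numerically. As an illustration of our choosing, not part of the original analysis, the Python sketch below takes an exponential density with mean S and does the following:

- it bins the density into intervals of width \(\Delta x\);
- it computes the Shannon entropy of the bin probabilities directly;
- it compares the result with \(\log _{2}(eS) - \log _{2}\Delta x\), where \(\log _{2}(eS)\) is the differential entropy of the exponential density.

```python
import math


def binned_entropy_exponential(S, dx):
    """Shannon entropy (bits) of an exponential density with mean S, binned with width dx.

    Bin k covers [k*dx, (k+1)*dx) and has probability (1 - q) * q**k, with q = exp(-dx/S).
    """
    q = math.exp(-dx / S)
    p0 = 1.0 - q
    H, k = 0.0, 0
    while True:
        p = p0 * q ** k
        if p < 1e-300:  # remaining bins contribute negligibly
            break
        H -= p * math.log2(p)
        k += 1
    return H


def continuum_estimate(S, dx):
    """h(x) - log2(dx), with h(x) = log2(e*S) for the exponential density."""
    return math.log2(math.e * S) - math.log2(dx)


for dx in (0.5, 0.1, 0.01):
    print(dx, binned_entropy_exponential(1.0, dx), continuum_estimate(1.0, dx))
```

The discrepancy between the two columns shrinks rapidly as \(\Delta x/S\) decreases. That regime, a resolution much finer than the mean size, is the one relevant for spine sizes.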

We consider x to be either spine volume, spine surface area (or PSD area), spine head diameter, or spine length. The parameter \(\Delta x\) can be also interpreted as a fundamental intrinsic noise amplitude characterizing microscopic fluctuations of actin dynamics underlying spine structure, and \(\Delta x\) depends on average spine size (av. length \(\langle L\rangle\), av. area \(\langle A\rangle\), and av. volume \(\langle V\rangle\)) in the following way (the estimate is given below):

\[ \Delta x \approx \begin{cases} 0.084\,\langle L\rangle ^{1/2} & \text{(length or diameter)}\\ 0.22\,\langle A\rangle ^{3/4} & \text{(area)}\\ 0.23\,\langle V\rangle ^{5/6} & \text{(volume)} \end{cases} \tag{2} \]

Equivalently, Eq. (2) can be written in the compact form \(\Delta x= c\langle x\rangle ^{\kappa }\). Here \(\langle x\rangle\) denotes the average spine size, \(\kappa = 1/2\) (length), 3/4 (area), or 5/6 (volume), and c is the appropriate constant (with units). This average individual spine size represents a typical spine, and it is therefore assumed to equal the population mean spine size (see below). Because the noise amplitude \(\Delta x\) depends on \(\langle x\rangle\), it generally differs between brain regions. Conceptually, \(\Delta x\) plays a role similar to the EPSP uncertainty in studies of synaptic information transfer31. It is also analogous to the temporal width of action potentials (temporal resolution) in studies of the information content of neural spike distributions71.

The formulas in Eq. (2) were derived under the assumption that the length of the underlying actin filaments follows a Poisson distribution103,104,105. We also examined the effect of relaxing this assumption. To do so, we made the substitution \(\Delta x \mapsto \Delta x_{r}= r\Delta x\), where r (\(r > 0\)) is a parameter characterizing the deviation from the Poisson distribution (see the Sect. “Uncertainty parameter for intrinsic noise amplitude” below).
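Because \(\Delta x\) enters Eq. (1) only through the logarithm, replacing \(\Delta x\) by \(r\Delta x\) shifts every entropy, including \(H_{max}\), by the same amount, \(-\log _{2} r\). The Python sketch below shows how this common shift propagates to the ratio of an empirical entropy to its maximum. The numbers are purely illustrative (not taken from the Tables), and the function names are hypothetical.

```python
import math


def shifted_entropy(H_bits, r):
    """Entropy after the substitution dx -> r*dx in Eq. (1): H - log2(r), for r > 0."""
    if r <= 0:
        raise ValueError("r must be positive")
    return H_bits - math.log2(r)


def shifted_ratio(H_bits, H_max_bits, r):
    """Ratio of an entropy to its maximum when both are computed with r*dx."""
    return shifted_entropy(H_bits, r) / shifted_entropy(H_max_bits, r)


# Illustrative values: an entropy of 2.5 bits against a maximum of 2.8 bits.
for r in (0.5, 1.0, 2.0):
    print(r, round(shifted_ratio(2.5, 2.8, r), 3))
```

Since both entropies move together, the ratio approaches 1 for finer resolution (\(r<1\)) and drops for coarser resolution (\(r>1\)).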

In what follows, we solve the following optimization problem: maximize the entropy for a given population mean of x. The constraint on the mean value of x reflects neuroanatomical and/or metabolic restrictions on spine size. Importantly, the population mean differs between brain regions, so this constraint is not fixed but depends on brain location. Mathematically, this procedure is equivalent to the standard Lagrange optimization problem with the Lagrangian \({\mathcal {L}}\) defined as

where \(\langle x\rangle\) is the population mean (average) of x over the distribution \(\rho (x)\), S is the given mean spine size, and \(\lambda\) is the Lagrange multiplier. The resulting maximal entropy will be a function of the given mean spine size S.

A standard approach to entropy maximization is to use the Lagrangian given by Eq. (3) (supplemented by an additional constraint for probability normalization) and to find the best or optimal probability density that maximizes \({\mathcal {L}}\)61. Using that approach, it is straightforward to show that the optimal distribution is exponential, i.e. \(\rho _{op}(x)= e^{-x/S}/S\)61,71. For this optimal distribution we have that mean \(\langle x\rangle\) and standard deviation \(SD= \sqrt{\langle x^{2}\rangle - \langle x\rangle ^{2}}\) are both equal to S, such that their ratio \(SD/\langle x\rangle = 1\). Moreover, the maximal entropy \(H_{max}\) associated with this distribution is

\[ H_{max} = \log _{2}\left( \frac{eS}{\Delta x}\right) , \tag{4} \]

where e is Euler’s number (\(e\approx 2.718\)). It is important to stress that \(H_{max}\) is the largest possible entropy among all distributions subject to the constraint on the mean value of x.

In this study, however, we take a different approach. Instead of finding the optimal probability density, we assume three plausible distributions that are motivated by experimental data. All three chosen distributions, i.e. gamma, lognormal, and loglogistic, are two-parameter distributions, meaning that the shape of each depends on two parameters (for instance, \(\mu\) and \(\sigma\) for the lognormal density). As a result, the entropy and the Lagrangian in Eq. (3) can be computed directly for each of these distributions, and in each case they depend on the two shape parameters. Importantly, the shape parameters are uniquely determined by the first two moments of x (see below). The entropy in each case can therefore be found exactly from just two quantities: the population mean and the standard deviation of spine sizes (even though, in general, a distribution is not fully characterized by its first two moments). Our approach to entropy maximization for each distribution consists of maximizing the Lagrangian in Eq. (3) with respect to the respective shape parameters. This enables us to determine how far a given experimental distribution is from the optimal distribution within a given class of probability densities. Interestingly, we find that the maximal entropy for the gamma distribution (Eq. 12 below) is exactly the same as that for the “optimal” exponential distribution (Eq. 4). This is because the exponential distribution is a special case of the more general gamma distribution (with \(\alpha =1\) in Eq. 5). Hence the gamma distribution considered here can in principle attain the maximal possible entropy among all distributions subject to the constraint on the mean.

Entropy of dendritic spines with gamma distribution

The gamma distribution of a random variable x (spine size) is skewed but it decays fast for large values of x. It is defined as

\[ \rho _{g}(x) = \frac{\beta ^{\alpha }}{\Gamma (\alpha )}\, x^{\alpha -1}e^{-\beta x}, \tag{5} \]

where \(\rho _{g}(x)\) is the probability density of x, the parameters \(\alpha\) and \(\beta\) are some positive numbers, and \(\Gamma (\alpha )\) is the Gamma function (\(\Gamma (1)= 1\)).

The entropy of the gamma distribution \(H_{g}\) is found from Eqs. (1) and (5), which give

The integral on the right hand side can be evaluated explicitly with the help of the formula106

\[ \int _{0}^{\infty } x^{\alpha -1}e^{-\beta x}\ln x\; dx = \frac{\Gamma (\alpha )}{\beta ^{\alpha }}\left[ \psi (\alpha ) - \ln \beta \right] , \]

where \(\psi (\alpha )\) is the digamma function, defined as \(\psi (\alpha )= d\ln \Gamma (\alpha )/d\alpha\)106. Additionally, the average of x for this distribution is \(\langle x\rangle _{g}= \alpha /\beta\). Combining these results we obtain the entropy \(H_{g}\) as

\[ H_{g} = \frac{1}{\ln 2}\left[ \alpha - \ln (\beta \Delta x) + \ln \Gamma (\alpha ) + (1-\alpha )\psi (\alpha )\right] . \tag{7} \]

The standard deviation of the gamma distribution (defined as \(\sigma _{g}=\sqrt{\langle x^{2}\rangle _{g} - \langle x\rangle _{g}^{2}}\)) is \(\sigma _{g}= \sqrt{\alpha }/\beta\). We can invert the relations for the mean and standard deviation to find the parameters \(\alpha\) and \(\beta\) for given experimental values of \(\langle x\rangle _{g}\) and \(\sigma _{g}\). The result is:

\[ \alpha = \left( \frac{\langle x\rangle _{g}}{\sigma _{g}}\right) ^{2}, \tag{8} \]

\[ \beta = \frac{\langle x\rangle _{g}}{\sigma _{g}^{2}}. \tag{9} \]

Note that the entropy \(H_{g}(\alpha ,\beta )\) can become negative for \(\alpha \ll 1\), since in this limit the digamma function behaves as \(\psi (\alpha ) \approx - 1/\alpha\). This situation corresponds to the cases in which \(\sigma _{g} \gg \langle x\rangle _{g}\), i.e. to the data points for which standard deviation is much greater than the mean.

With Eqs. (8) and (9), the entropy \(H_{g}\) can alternatively be expressed as a function of the population mean \(\langle x\rangle _{g}\) and standard deviation \(\sigma _{g}\). We exploit this fact to determine the entropy for the experimental data in Tables 2, 3, 4 and 5, where only the means and standard deviations of empirical spine sizes are available. More precisely, with a slight rearrangement we can rewrite Eq. (7) as

\[ H_{g} = \log _{2}\left( \frac{e\langle x\rangle _{g}}{\Delta x}\right) + \frac{G(\alpha )}{\ln 2}, \tag{10} \]

where we used Eqs. (8) and (9) such that \(\langle x\rangle _{g}= \alpha /\beta\), and we introduced the function \(G(\alpha )\) defined as \(G(\alpha )= (1-\alpha )[\psi (\alpha )-1] + \ln \left( \Gamma (\alpha )/\alpha \right)\). The important point is that \(G(\alpha ) \le 0\) for all \(\alpha > 0\), which implies that the entropy is bounded from above by the following inequality

\[ H_{g} \le \log _{2}\left( \frac{e\langle x\rangle _{g}}{\Delta x}\right) . \tag{11} \]

The right hand side of the above equation is the maximal value of entropy for a given mean \(\langle x\rangle _{g}\). The nonpositivity of the function \(G(\alpha )\) follows from the fact that \(G(\alpha )\) achieves a single maximum for \(\alpha = 1\), and \(G(1)= 0\) (derivative \(G'(\alpha )= (\alpha -1)[1/\alpha - \psi '(\alpha )]\) is positive for \(\alpha < 1\), and negative for \(\alpha > 1\), because \(\psi '(\alpha ) > 1/\alpha\);106). Note that entropy reaches its maximal value for the parameter \(\alpha = 1\), which corresponds to the optimal ratio of standard deviation to mean of spine sizes given by \(\sigma _{g}/\langle x\rangle _{g} = 1\) (see Eq. 8).
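The properties of \(G(\alpha )\) and the entropy formula can be checked numerically. The Python sketch below has three parts:

- a digamma function built from the recurrence \(\psi (x)=\psi (x+1)-1/x\) plus the standard asymptotic series, since the Python standard library has none;
- \(G(\alpha )\) and \(H_{g}\) computed from an empirical mean and standard deviation, as in the rearranged form above;
- a cross-check against the standard closed form of the gamma differential entropy, \(\alpha - \ln \beta + \ln \Gamma (\alpha ) + (1-\alpha )\psi (\alpha )\) nats, shifted by \(-\ln \Delta x\).

The noise amplitude `dx` is passed in as a plain number for illustration. In the text it would come from Eq. (2).

```python
import math

LN2 = math.log(2.0)


def digamma(x):
    """psi(x) for x > 0: shift x upward via psi(x) = psi(x+1) - 1/x, then use the asymptotic series."""
    result = 0.0
    while x < 6.0:
        result -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    # ln x - 1/(2x) - 1/(12x^2) + 1/(120x^4) - 1/(252x^6)
    result += math.log(x) - 0.5 / x - inv2 * (1.0 / 12 - inv2 * (1.0 / 120 - inv2 / 252))
    return result


def G(alpha):
    """G(alpha) = (1 - alpha)[psi(alpha) - 1] + ln(Gamma(alpha)/alpha); G <= 0, G(1) = 0."""
    return (1.0 - alpha) * (digamma(alpha) - 1.0) + math.lgamma(alpha) - math.log(alpha)


def gamma_entropy(mean, sd, dx):
    """H_g in bits: log2(e*mean/dx) + G(alpha)/ln 2, with alpha = (mean/sd)**2."""
    return math.log2(math.e * mean / dx) + G((mean / sd) ** 2) / LN2


def gamma_entropy_direct(mean, sd, dx):
    """Same quantity from the closed-form differential entropy of the gamma density."""
    alpha, beta = (mean / sd) ** 2, mean / sd ** 2
    h_nats = alpha - math.log(beta) + math.lgamma(alpha) + (1.0 - alpha) * digamma(alpha)
    return (h_nats - math.log(dx)) / LN2


for a in (0.25, 0.5, 1.0, 2.0, 8.0):
    print(f"alpha={a:<5} G={G(a):+.4f}")
```

Every printed value of \(G\) is negative except at \(\alpha = 1\) (i.e. SD/mean \(=1\)), where it vanishes and \(H_{g}\) reaches \(\log _{2}(e\langle x\rangle _{g}/\Delta x)\).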

Alternatively, we can find the maximal entropy of the gamma distribution for a given mean size \(\langle x\rangle _{g}\) by solving the Lagrange optimization problem defined in Eq. (3) with entropy \(H_{g}(\alpha ,\beta )\) as in Eq. (7). The optimal parameters \(\alpha\) and \(\beta\) are found by setting \(\partial {\mathcal {L}}/\partial \alpha = 0\), \(\partial {\mathcal {L}}/\partial \beta = 0\), and \(\partial {\mathcal {L}}/\partial \lambda = 0\). Their optimal values are \(\alpha _{0}=1\), \(\beta _{0}= 1/S\), and \(\lambda _{0}= -(1-\kappa )/S\). For these values, the maximal entropy of the gamma distribution is:

\[ H_{g,m} = \log _{2}\left( \frac{eS}{\Delta x}\right) , \tag{12} \]

and we see that \(H_{g,m}\) is exactly the same as the upper bound of entropy in Eq. (11), if we set \(\langle x\rangle _{g} = S\). Moreover, \(H_{g,m}\) is also exactly equal to the maximal entropy \(H_{max}\) for all possible distributions of x, which is given by Eq. (4). Interestingly, the upper bound of entropy \(H_{g,m}\) depends logarithmically on mean spine size S.

Entropy of dendritic spines with lognormal distribution

The lognormal distribution of a random variable x is both skewed and has a heavy tail, and is defined as

\[ \rho _{ln}(x) = \frac{1}{x\sigma \sqrt{2\pi }}\exp \left( -\frac{(\ln x - \mu )^{2}}{2\sigma ^{2}}\right) , \tag{13} \]

where \(\rho _{ln}(x)\) is the probability density of x, and \(\mu\), \(\sigma\) are some parameters (\(\sigma > 0\)).

The entropy of the lognormal distribution \(H_{ln}\) can be determined by combining Eqs. (1) and (13). Using the substitution \(y= \ln (x)\) the corresponding integrals can be evaluated similarly as in the case of Gaussian distribution, which is a standard procedure. As a result, the entropy of lognormal distribution takes the form

\[ H_{ln} = \frac{1}{\ln 2}\left[ \mu + \frac{1}{2} + \ln \left( \frac{\sqrt{2\pi }\,\sigma }{\Delta x}\right) \right] . \tag{14} \]

The average of x for this distribution is \(\langle x\rangle _{ln}= \exp (\mu + \sigma ^{2}/2)\), and the standard deviation (\(\sigma _{ln}= \sqrt{\langle x^{2}\rangle _{ln} -\langle x\rangle _{ln}^{2}}\)) is \(\sigma _{ln}= \langle x\rangle _{ln}\sqrt{e^{\sigma ^{2}}-1}\). By inverting these relations, we find

\[ \mu = \ln \langle x\rangle _{ln} - \frac{\sigma ^{2}}{2}, \tag{15} \]

\[ \sigma ^{2} = \ln \left( 1 + \frac{\sigma _{ln}^{2}}{\langle x\rangle _{ln}^{2}}\right) . \tag{16} \]

Equations (15, 16) allow us to find the characteristic parameters \(\mu\) and \(\sigma\) defining the lognormal distribution from the experimental values of mean and standard deviation for the variable x, i.e. \(\langle x\rangle _{ln}\) and \(\sigma _{ln}\). Consequently, we can also express the entropy for lognormal distribution in Eq. (14) in terms of these empirical means and standard deviations, which is relevant for empirical data in Tables 2, 3, 4 and 5. The explicit dependence of entropy \(H_{ln}\) on empirical average spine size \(\langle x\rangle _{ln}\) is

\[ H_{ln} = \log _{2}\left( \frac{\sqrt{2\pi }\,\langle x\rangle _{ln}}{\Delta x}\right) + \frac{1-\sigma ^{2}+\ln \sigma ^{2}}{2\ln 2}. \tag{17} \]

This expression allows us to find immediately the maximal value of entropy, i.e. to determine its upper bound for a given mean \(\langle x\rangle _{ln}\), which is

\[ H_{ln} \le \log _{2}\left( \frac{\sqrt{2\pi }\,\langle x\rangle _{ln}}{\Delta x}\right) . \tag{18} \]

The above inequality follows from the fact that \((1-\sigma ^{2} + \ln \sigma ^{2}) \le 0\) for all \(\sigma ^{2} > 0\). This is a direct consequence of the well-known inequality \(\ln (1+z) \le z\), valid for \(z > -1\) (with the substitution \(z=\sigma ^{2} -1\)). Equality in Eq. (18) is reached for \(\sigma = 1\). Hence the entropy of spine sizes is maximal for the optimal ratio of their standard deviation to mean \(\sigma _{ln}/\langle x\rangle _{ln} = \sqrt{e-1}\approx 1.31\) (see Eq. 16).
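The lognormal results can be verified in the same way. The Python sketch below does three things:

- it maps an empirical mean and SD to \((\mu ,\sigma )\) via Eqs. (15) and (16);
- it evaluates the entropy in the form of Eq. (17) and cross-checks it against the standard closed form \(\mu + 1/2 + \ln (\sigma \sqrt{2\pi })\) nats;
- it confirms that the maximum over SD/mean sits at \(\sqrt{e-1}\).

The value `dx = 0.1` is an arbitrary illustrative choice.

```python
import math

LN2 = math.log(2.0)


def lognormal_params(mean, sd):
    """mu and sigma of a lognormal density with the given mean and SD (Eqs. 15-16)."""
    s2 = math.log(1.0 + (sd / mean) ** 2)
    return math.log(mean) - s2 / 2.0, math.sqrt(s2)


def lognormal_entropy(mean, sd, dx):
    """Entropy in bits: log2(sqrt(2*pi)*mean/dx) + (1 - s^2 + ln s^2)/(2 ln 2)."""
    _, sigma = lognormal_params(mean, sd)
    s2 = sigma ** 2
    return math.log2(math.sqrt(2.0 * math.pi) * mean / dx) + (1.0 - s2 + math.log(s2)) / (2.0 * LN2)


def lognormal_entropy_direct(mean, sd, dx):
    """Cross-check via the closed form mu + 1/2 + ln(sigma*sqrt(2*pi)) nats, minus ln(dx)."""
    mu, sigma = lognormal_params(mean, sd)
    return (mu + 0.5 + math.log(sigma * math.sqrt(2.0 * math.pi)) - math.log(dx)) / LN2


OPTIMAL_RATIO = math.sqrt(math.e - 1.0)  # SD/mean at sigma = 1, about 1.31

for ratio in (0.5, 1.0, OPTIMAL_RATIO, 2.0, 4.0):
    print(f"sd/mean={ratio:.2f}  H={lognormal_entropy(1.0, ratio, 0.1):.4f} bits")
```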

Alternatively, we can find the maximal entropy of the lognormal distribution for a given population mean \(\langle x\rangle _{ln}\) as before, i.e. by solving the Lagrange optimization problem defined in Eq. (3) with \(H_{ln}(\mu ,\sigma )\) as in Eq. (14). The optimal parameters \(\mu\) and \(\sigma\) are found by setting \(\partial {\mathcal {L}}/\partial \mu = 0\), \(\partial {\mathcal {L}}/\partial \sigma = 0\), and \(\partial {\mathcal {L}}/\partial \lambda = 0\). Their optimal values are \(\mu _{0}=-0.5 + \ln (S)\), \(\sigma _{0}= 1\), and \(\lambda _{0}= -(1-\kappa )/S\). For these values, the maximal entropy of the lognormal distribution is:

\[ H_{ln,m} = \log _{2}\left( \frac{\sqrt{2\pi }\,S}{\Delta x}\right) , \tag{19} \]

and it is clear that \(H_{ln,m}\) is the same as the upper bound of entropy in Eq. (18) if we set \(\langle x\rangle _{ln}= S\). Note that \(H_{ln,m}\) depends logarithmically on the mean spine size S, as does \(H_{g,m}\). However, \(H_{ln,m} < H_{g,m}= H_{max}\). This is a consequence of the general result expressed by Eq. (4): no distribution with a given mean can have a larger entropy than \(H_{max}\), and the gamma distribution attains this bound for \(\alpha = 1\).

Entropy of dendritic spines with loglogistic distribution

The loglogistic distribution of a random variable x is visually similar to the lognormal distribution with heavy tail, except that it decays as a power law for very large x and hence has a longer tail. The loglogistic probability density \(\rho _{ll}\) is defined as

\[ \rho _{ll}(x) = \frac{(b/a)(x/a)^{b-1}}{\left[ 1+(x/a)^{b}\right] ^{2}}, \tag{20} \]

where a and b are positive parameters. Note that for \(x \gg a\) the probability density behaves asymptotically as \(\rho _{ll}(x) \sim 1/x^{b+1}\), which is a much slower decay than for the gamma distribution.

The entropy of the loglogistic distribution \(H_{ll}\) can be determined by combining Eqs. (1) and (20). Consequently, we have

The first integral on the right hand side (without the prefactor \((b-1)\)) is performed by the substitution \(y=(x/a)^{b}\). This transforms that integral into

which is equal to zero106. The second integral on the right hand side (without the prefactor 2) can be transformed, using the same substitution, into

which has a value equal to one106. As a result, the entropy of the loglogistic distribution takes the form

\[ H_{ll} = \log _{2}\left( \frac{e^{2}a}{b\,\Delta x}\right) . \tag{22} \]

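The mean \(\langle x\rangle _{ll}\) and standard deviation \(\sigma _{ll}\) of this distribution are both finite provided \(b > 2\) (the mean alone requires only \(b > 1\)). In this case, we have

\[ \langle x\rangle _{ll} = \frac{a\pi /b}{\sin (\pi /b)}, \tag{23} \]

\[ \sigma _{ll} = a\sqrt{\frac{2\pi /b}{\sin (2\pi /b)} - \frac{(\pi /b)^{2}}{\sin ^{2}(\pi /b)}}. \tag{24} \]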

The inverse relations between the parameters a, b and \(\langle x\rangle _{ll}, \sigma _{ll}\) are given by

\[ \frac{\tan (\pi /b)}{\pi /b} = 1 + \frac{\sigma _{ll}^{2}}{\langle x\rangle _{ll}^{2}}, \tag{25} \]

\[ a = \frac{b\langle x\rangle _{ll}}{\pi }\sin \left( \frac{\pi }{b}\right) . \tag{26} \]

The first equation has to be solved numerically, for given empirical values of \(\langle x\rangle _{ll}, \sigma _{ll}\). The second equation determines a once the parameter b is known.

Equations (23, 24) render the entropy to depend alternatively on \(\langle x\rangle _{ll}\) and \(\sigma _{ll}\), which allows us to determine entropy for the empirical values of means and standard deviations in Tables 2, 3, 4 and 5. In particular, since \(a/b = (\langle x\rangle _{ll}/\pi )\sin (\pi /b)\), we can express the entropy explicitly as a function of mean \(\langle x\rangle _{ll}\) in the form

\[ H_{ll} = \log _{2}\left( \frac{e^{2}\langle x\rangle _{ll}}{\pi \Delta x}\sin \frac{\pi }{b}\right) . \tag{27} \]

This implies that the maximal value of entropy is represented by the following inequality

\[ H_{ll} \le \log _{2}\left( \frac{e^{2}\langle x\rangle _{ll}}{\pi \Delta x}\right) , \tag{28} \]

which follows from the fact that \(\ln (\sin \frac{\pi }{b}) \le 0\), since \(0 < \sin (\frac{\pi }{b}) \le 1\) for \(b \ge 2\). The maximal entropy is approached asymptotically as \(b\to 2\), or equivalently as the ratio \(\sigma _{ll}/\langle x\rangle _{ll} \to \infty\) (see Eq. 25). In practice, this means that the empirical entropy never reaches its maximal value exactly. It gets closer to that maximum the larger the ratio of the standard deviation to the mean of spine sizes.
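The loglogistic case needs one numerical step. We write the moment relation as \(\tan (\pi /b)/(\pi /b) = 1 + (\sigma _{ll}/\langle x\rangle _{ll})^{2}\), our own rearrangement of the standard loglogistic moments. Its left side falls monotonically from \(+\infty\) to 1 as b increases from 2, so bisection suffices. The Python sketch below works in four steps:

1. solve for b by bisection;
2. recover a;
3. verify that the recovered parameters reproduce the input SD;
4. evaluate the entropy in the form \(\log _{2}\left( e^{2}\langle x\rangle _{ll}\sin (\pi /b)/(\pi \Delta x)\right)\).

The bracket \([2, 10^{4}]\) for b is an assumption. It fails only for extremely narrow distributions.

```python
import math


def loglogistic_shape(ratio, rel_tol=1e-13):
    """Solve tan(pi/b)/(pi/b) = 1 + ratio**2 for b > 2 by bisection (ratio = sd/mean)."""
    target = 1.0 + ratio ** 2
    lo, hi = 2.0 + 1e-12, 1e4  # assumes sd/mean is not tiny, so that b < 1e4
    while hi - lo > rel_tol * hi:
        mid = 0.5 * (lo + hi)
        theta = math.pi / mid
        if math.tan(theta) / theta > target:
            lo = mid  # left side too large: b must increase
        else:
            hi = mid
    return 0.5 * (lo + hi)


def loglogistic_params(mean, sd):
    """Scale a and shape b of a loglogistic density with the given mean and SD."""
    b = loglogistic_shape(sd / mean)
    a = mean * b * math.sin(math.pi / b) / math.pi
    return a, b


def loglogistic_entropy(mean, sd, dx):
    """Entropy in bits: log2(e^2 * mean * sin(pi/b) / (pi * dx))."""
    _, b = loglogistic_params(mean, sd)
    return math.log2(math.e ** 2 * mean * math.sin(math.pi / b) / (math.pi * dx))


def loglogistic_sd(a, b):
    """SD from the loglogistic moments (b > 2), used here only as a consistency check."""
    t = math.pi / b
    return a * math.sqrt(2.0 * t / math.sin(2.0 * t) - (t / math.sin(t)) ** 2)


a, b = loglogistic_params(1.0, 1.0)
print(b, loglogistic_sd(a, b))  # the recovered SD should be close to 1.0
print(loglogistic_entropy(1.0, 1.0, 0.1), math.log2(math.e ** 2 * 10.0 / math.pi))
```

The second line prints the entropy next to its upper bound from Eq. (28). The gap closes only as the SD/mean ratio grows without limit.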

Alternatively, the maximal value of the entropy of the loglogistic distribution for a given mean \(\langle x\rangle _{ll}\) is found by solving the Lagrange optimization problem defined in Eq. (3) with \(H_{ll}\) as in Eq. (22). The optimal parameters a and b are found by setting \(\partial {\mathcal {L}}/\partial a = 0\), \(\partial {\mathcal {L}}/\partial b = 0\), and \(\partial {\mathcal {L}}/\partial \lambda = 0\). Their optimal values are \(a_{0}=2S/\pi\), \(b_{0}= 2\), and \(\lambda _{0}= -(1-\kappa )/S\). For these values, the maximal entropy of the loglogistic distribution is:

\[ H_{ll,m} = \log _{2}\left( \frac{e^{2}S}{\pi \Delta x}\right) , \tag{29} \]

and it is apparent that \(H_{ll,m}\) is the same as the upper bound of entropy in Eq. (28), if we set \(\langle x\rangle _{ll}= S\). Note that \(H_{ll,m} < H_{g,m}= H_{max}\), as expected, and additionally \(H_{ll,m} < H_{ln,m}\). Moreover, the upper bound entropy \(H_{ll,m}\) depends logarithmically on S, similar to the cases for gamma and lognormal distributions.
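All three maximal entropies have the form \(\log _{2}(cS/\Delta x)\), as follows from the optimal parameters given above (our evaluation). The prefactors are \(c=e\) (gamma), \(\sqrt{2\pi }\) (lognormal), and \(e^{2}/\pi\) (loglogistic). The gaps between the maxima therefore do not depend on S or \(\Delta x\). The short Python sketch below evaluates these constant gaps.

```python
import math

# Prefactor c in H_max = log2(c * S / dx) for each distribution at its optimum.
PREFACTORS = {
    "gamma": math.e,                       # alpha = 1 (exponential)
    "lognormal": math.sqrt(2 * math.pi),   # sigma = 1
    "loglogistic": math.e ** 2 / math.pi,  # limit b -> 2
}


def max_entropy(dist, S, dx):
    """Maximal entropy (bits) of the given family for mean S and noise amplitude dx."""
    return math.log2(PREFACTORS[dist] * S / dx)


# Deficit relative to the gamma (= global) maximum, in bits; independent of S and dx.
DEFICIT = {name: math.log2(math.e / c) for name, c in PREFACTORS.items()}

for name, gap in DEFICIT.items():
    print(f"{name:12s} {gap:.3f} bits below H_max")
```

The lognormal and loglogistic optima lie about 0.117 and 0.209 bits below \(H_{max}\), respectively, for any mean spine size.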

Definition of entropy efficiency

Entropy efficiency \(\eta\) is defined as the ratio of the continuous entropy (\(H_{ln}, H_{ll}, H_{g})\) to the theoretical maximal entropy (\(H_{max})\). Thus, for a specific probability distribution we have

\[ \eta _{i} = \frac{H_{i}}{H_{max}}, \tag{30} \]

where \(H_{max}\) is given by Eq. (4), and the index i denotes one of the distributions (either ln, ll, or g).
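A minimal Python sketch of the efficiency computation is given below, combining Eqs. (4) and (30) with the lognormal entropy expressed through the mean and SD. The numbers \(S=1\), \(\Delta x = 0.1\) are illustrative assumptions, not values from the Tables. With them, a lognormal at its optimal SD/mean ratio reaches \(\eta \approx 0.975\). The guard reflects that \(\eta\) is only meaningful when \(H_{max} > 0\).

```python
import math


def max_entropy(S, dx):
    """H_max = log2(e*S/dx), Eq. (4), with dx evaluated at the population mean S."""
    return math.log2(math.e * S / dx)


def efficiency(H, S, dx):
    """eta = H / H_max (Eq. 30); requires H_max > 0, i.e. S/dx > 1/e."""
    H_max = max_entropy(S, dx)
    if H_max <= 0:
        raise ValueError("S/dx too small for a positive maximal entropy")
    return H / H_max


def lognormal_entropy(mean, sd, dx):
    """Lognormal entropy in bits expressed through the mean and SD."""
    s2 = math.log(1.0 + (sd / mean) ** 2)
    return math.log2(math.sqrt(2 * math.pi) * mean / dx) + (1 - s2 + math.log(s2)) / (2 * math.log(2))


# Illustrative numbers (not from the Tables): mean/dx = 10, lognormal at its optimal SD/mean.
S, dx = 1.0, 0.1
eta = efficiency(lognormal_entropy(S, math.sqrt(math.e - 1), dx), S, dx)
print(round(eta, 4))
```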

Estimation of the intrinsic noise amplitude \(\Delta x\)

Here we provide a justification for Eq. (2). Empirical data indicate that the size and shape of a dendritic spine are directly controlled by the cytoskeleton, which consists mainly of polymer filaments called F-actin107. F-actin is composed of many small monomers called G-actin, each of characteristic size 7 nm108. This polymer has two characteristic turnover rates, a fast one (\(\sim 1.2\) min\(^{-1}\))109 and a slow one (\(\sim 0.06\) min\(^{-1}\))110. This suggests that the changing length of F-actin (addition or removal of monomers) can cause either rapid or slow fluctuations in the size and shape of a dendritic spine110,111. Therefore, we assume that the amplitude of F-actin length fluctuations sets the scale for the intrinsic noise amplitude in an individual spine size, which we denote \(\Delta x\). We consider three separate cases for \(\Delta x\), related to spine length/diameter (\(\Delta x_{1D}\)), spine area (\(\Delta x_{2D}\)), and spine volume (\(\Delta x_{3D}\)).

1D case

Let L be the length of a spine (either spine head diameter or spine neck length). F-actin underlies the spine structure and sets the scale for length L. We can therefore approximate L as a sum of several F-actin polymers in a row spanning the spine linear dimension112, i.e. \(L= \sum _{i=1}^{K} n_{i}\xi\). Here K is the number of polymers and \(n_{i}\) is the number of monomers (G-actin) in the i-th polymer, each of length \(\xi = 0.007\) \(\mu\)m108. This implies \(L= \xi \sum _{i=1}^{K} n_{i}= \xi N_{K}\), where \(N_{K}\) is the total number of monomers in all K polymers setting the spine linear dimension. The spine length L fluctuates due to variability in \(N_{K}\), which is caused by fluctuations in the number of monomers \(n_{i}\) in each polymer.

We make the simplest assumption that the fluctuations in \(n_{i}\) are governed by a Poisson stochastic process. This is consistent with empirical distributions of F-actin length in dendritic spines103, as well as with basic models of polymer growth104,105. In fact, the addition and removal of monomers in a polymer chain can be described by a simple birth-death model, which naturally generates a Poisson distribution of polymer length113. Since \(N_{K}\) is the sum of the individual \(n_{i}\), each Poisson distributed (and assumed independent), it also has a Poisson distribution61. Consequently, we assume that \(N_{K}\) has a stationary probability distribution of the form \(P(N_{K})= e^{-\nu }\nu ^{N_{K}}/N_{K}!\), where \(\nu\) is the intensity parameter. (\(\nu\) can differ between dendritic spines, but this does not matter for the analysis, since we consider here an individual “typical” spine.) This implies that the steady-state mean and standard deviation of \(N_{K}\) are \(\langle N_{K}\rangle = \nu\) and \(\sqrt{\langle N_{K}^{2}\rangle - \langle N_{K}\rangle ^{2}}= \sqrt{\nu }\), which leads to fluctuations in length L characterized by

\[ \langle L\rangle = \xi \nu , \tag{31} \]

and

\[ \sqrt{\langle L^{2}\rangle - \langle L\rangle ^{2}} = \xi \sqrt{\nu }. \tag{32} \]

We identify the intrinsic noise amplitude \(\Delta x_{1D}\) in this 1D case with the standard deviation of L, i.e. \(\Delta x_{1D}= \sqrt{\langle L^{2}\rangle - \langle L\rangle ^{2}}\). The last step is to combine Eqs. (31) and (32) so as to eliminate the unknown parameter \(\nu\), after which we obtain

\[ \Delta x_{1D} = \sqrt{\xi \langle L\rangle } \approx 0.084\sqrt{\langle L\rangle }, \tag{33} \]

where \(\langle L\rangle\) and \(\Delta x_{1D}\) are in \(\mu\)m. Note that the noise amplitude \(\Delta x_{1D}\) in 1D case is proportional to the square root of mean spine length \(\langle L\rangle\).
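The 1D estimate can be checked by direct simulation. The Python sketch below works as follows:

- it draws L as \(\xi\) times a sum of independent Poisson monomer counts;
- it measures the standard deviation of L;
- it compares that standard deviation with \(\sqrt{\xi \langle L\rangle }\).

Several choices are hypothetical and for illustration only:

- splitting the monomers into K = 5 polymers;
- the mean length of 1 \(\mu\)m;
- the sample size.

By the additivity of independent Poisson variables, K should not affect the result. Poisson sampling uses Knuth's multiplication method because the standard library has no Poisson generator.

```python
import math
import random

XI = 0.007  # G-actin monomer size in micrometres, as given in the text


def noise_amplitude_1d(mean_length):
    """Delta x_1D = sqrt(xi * <L>), from <L> = xi*nu and SD(L) = xi*sqrt(nu)."""
    return math.sqrt(XI * mean_length)


def poisson_sample(rng, lam):
    """Knuth's multiplication method; adequate for the moderate lam used here."""
    limit, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1


def simulated_sd(mean_length, n_polymers=5, samples=10000, seed=1):
    """Monte Carlo SD of L = xi * (n_1 + ... + n_K) with independent Poisson n_i."""
    rng = random.Random(seed)
    lam = mean_length / XI / n_polymers  # mean monomers per polymer (hypothetical split)
    lengths = [XI * sum(poisson_sample(rng, lam) for _ in range(n_polymers))
               for _ in range(samples)]
    m = sum(lengths) / samples
    return math.sqrt(sum((v - m) ** 2 for v in lengths) / (samples - 1))


print(noise_amplitude_1d(1.0), simulated_sd(1.0))
```

Both printed numbers should be close to 0.084 \(\mu\)m, in line with Eq. (33).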

2D case

We assume, in agreement with the data, that the spine surface area is dominated by the surface area of the spine head, which is approximately a sphere. Thus the spine area A is approximately \(A= \pi D^{2}\), where D is both the spine head diameter and (in analogy to 1D case) the sum of the lengths of \(N_{K}\) F-actin polymers spanning the spine head, i.e. we have \(D= N_{K}\xi\). Furthermore, we have for the mean area \(\langle A\rangle = \pi \xi ^{2} \langle N_{K}^{2}\rangle\), and for the standard deviation of area \(\sqrt{\langle A^{2}\rangle - \langle A\rangle ^{2}}= \pi \xi ^{2} \sqrt{\langle N_{K}^{4}\rangle - \langle N_{K}^{2}\rangle ^{2}}\).

The moments of \(N_{K}\) for the Poisson distribution with the intensity parameter \(\nu\) are: \(\langle N_{K}^{2}\rangle = \nu ^{2} + \nu\), and \(\langle N_{K}^{4}\rangle = \nu ^{4} + 6\nu ^{3} + 7\nu ^{2} + \nu\), and thus we have an equation for the unknown parameter \(\nu\):

\[ \nu ^{2} + \nu = \frac{\langle A\rangle }{\pi \xi ^{2}}. \tag{34} \]

Since \(\pi \xi ^{2} \approx 1.5\cdot 10^{-4}\) \(\mu\)m\(^{2}\) is much smaller than any recorded value of spine (or PSD) area \(\langle A\rangle\) in Table 3 (by a factor of at least 200), we can safely assume that \(\nu \gg 1\). In other words, the mean total number of monomers spanning the spine head diameter is much larger than 1. This allows us to neglect the linear term on the left-hand side of Eq. (34), and obtain
\[\nu \approx \sqrt{\frac{\langle A\rangle }{\pi \xi ^{2}}}.\]
Similarly, for the standard deviation of A we have \(\sqrt{\langle A^{2}\rangle - \langle A\rangle ^{2}}= \pi \xi ^{2} \sqrt{4\nu ^{3} + 6\nu ^{2} + \nu } \approx 2\pi \xi ^{2}\nu ^{3/2}\). Identifying the intrinsic noise amplitude \(\Delta x_{2D}\) in this 2D case with the standard deviation of A, we obtain
\[\Delta x_{2D} = 2\pi ^{1/4}\sqrt{\xi }\,\langle A\rangle ^{3/4} \approx 0.22\,\langle A\rangle ^{3/4},\]
where the average spine area \(\langle A\rangle\) and \(\Delta x_{2D}\) are in \(\mu\)m\(^{2}\). Note that in the 2D case the noise amplitude \(\Delta x_{2D}\) is proportional to \(\langle A\rangle ^{3/4}\).
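The approximation \(\nu \gg 1\) can be checked numerically. The sketch below, which assumes the same \(\xi = 0.007\) \(\mu\)m, solves the quadratic \(\nu ^{2}+\nu = \langle A\rangle /(\pi \xi ^{2})\) exactly and evaluates the exact standard deviation \(\pi \xi ^{2}\sqrt{4\nu ^{3}+6\nu ^{2}+\nu }\). It compares this with the large-\(\nu\) expression \(2\pi ^{1/4}\sqrt{\xi }\langle A\rangle ^{3/4}\). The coefficient \(\approx 0.223\) is our own evaluation of that closed form. The mean areas in the loop are hypothetical values, starting near the smallest area implied by the factor-of-200 bound.

```python
import math

XI = 0.007  # G-actin monomer length in micrometres (from the text)


def nu_from_area(mean_area: float, xi: float = XI) -> float:
    """Exact positive root of nu^2 + nu = <A> / (pi xi^2)."""
    rhs = mean_area / (math.pi * xi**2)
    return (-1.0 + math.sqrt(1.0 + 4.0 * rhs)) / 2.0


def sd_area_exact(mean_area: float, xi: float = XI) -> float:
    """SD of A = pi xi^2 N^2 for Poisson N, using <N^4> - <N^2>^2 = 4nu^3 + 6nu^2 + nu."""
    nu = nu_from_area(mean_area, xi)
    return math.pi * xi**2 * math.sqrt(4 * nu**3 + 6 * nu**2 + nu)


def delta_x_2d(mean_area: float, xi: float = XI) -> float:
    """Large-nu approximation 2 pi^(1/4) sqrt(xi) <A>^(3/4), areas in um^2."""
    return 2.0 * math.pi**0.25 * math.sqrt(xi) * mean_area**0.75


for area in (0.03, 0.1, 0.5, 1.0):  # illustrative mean areas in um^2
    exact, approx = sd_area_exact(area), delta_x_2d(area)
    print(f"<A>={area:4.2f}  exact={exact:.5f}  approx={approx:.5f}  rel.err={abs(exact - approx) / exact:.2e}")
```

Even at \(\langle A\rangle = 0.03\) \(\mu\)m\(^{2}\), where \(\nu \approx 13\), the relative error is far below 1%. This is because the neglected terms partly cancel between the estimate of \(\nu\) and the standard deviation.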

3D case

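We make an assumption similar to the 2D case: the volume V of a spine is dominated by the volume of the spine head, which is approximately a sphere, so that \(V\approx \pi D^{3}/6\) with \(D= N_{K}\xi\). Repeating the steps of the 2D case, we have for the mean volume
\[\langle V\rangle = \frac{\pi \xi ^{3}}{6}\langle N_{K}^{3}\rangle = \frac{\pi \xi ^{3}}{6}\left( \nu ^{3} + 3\nu ^{2} + \nu \right) \approx \frac{\pi \xi ^{3}}{6}\nu ^{3},\]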
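and for the standard deviation of volume
\[\sqrt{\langle V^{2}\rangle - \langle V\rangle ^{2}} = \frac{\pi \xi ^{3}}{6}\sqrt{\langle N_{K}^{6}\rangle - \langle N_{K}^{3}\rangle ^{2}} = \frac{\pi \xi ^{3}}{6}\sqrt{9\nu ^{5} + 54\nu ^{4} + 84\nu ^{3} + 30\nu ^{2} + \nu } \approx \frac{\pi \xi ^{3}}{2}\nu ^{5/2},\]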
where we used the fact that \(\nu \gg 1\), and the following moments of the Poisson distribution: \(\langle N_{K}^{3}\rangle = \nu ^{3} + 3\nu ^{2} + \nu\), and \(\langle N_{K}^{6}\rangle = \nu ^{6} + 15\nu ^{5} + 65\nu ^{4} + 90\nu ^{3} + 31\nu ^{2} + \nu\).
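The Poisson moments quoted above are Touchard polynomials, whose coefficients are Stirling numbers of the second kind. The short sketch below, with hypothetical helper names, checks them in two ways. First, it compares them against direct summation over the Poisson probability mass function. Second, with integer \(\nu\) so that the arithmetic is exact, it evaluates the combinations \(\langle N_{K}^{4}\rangle - \langle N_{K}^{2}\rangle ^{2}\) and \(\langle N_{K}^{6}\rangle - \langle N_{K}^{3}\rangle ^{2}\) that set the area and volume standard deviations.

```python
import math


def stirling2(k: int, j: int) -> int:
    """Stirling number of the second kind via S(k, j) = j S(k-1, j) + S(k-1, j-1)."""
    if k == j:
        return 1
    if j == 0 or j > k:
        return 0
    return j * stirling2(k - 1, j) + stirling2(k - 1, j - 1)


def touchard(k: int, nu):
    """Closed-form raw moment E[N^k] = sum_j S(k, j) nu^j of a Poisson(nu) variable."""
    return sum(stirling2(k, j) * nu**j for j in range(k + 1))


def poisson_raw_moment(k: int, nu: float) -> float:
    """E[N^k] by direct summation of n^k P(N = n), truncated far into the upper tail."""
    n_max = int(nu + 40.0 * math.sqrt(nu) + 100)
    total = 0.0
    for n in range(n_max):
        log_p = -nu + n * math.log(nu) - math.lgamma(n + 1)
        total += n**k * math.exp(log_p)
    return total


nu = 25.0  # illustrative intensity
for k in (2, 3, 4, 6):
    print(k, poisson_raw_moment(k, nu), touchard(k, nu))

# Exact integer check of the variance polynomials used for area and volume (nu = 9 and 7).
print(touchard(4, 9) - touchard(2, 9) ** 2, touchard(6, 7) - touchard(3, 7) ** 2)
```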

We combine the equations for the mean and standard deviation by substituting \(\nu \approx (6\langle V\rangle /(\pi \xi ^{3}))^{1/3}\). Identifying the intrinsic noise amplitude \(\Delta x_{3D}\) in this 3D case with the volume standard deviation, we obtain
\[\Delta x_{3D} = \frac{\pi }{2}\left( \frac{6}{\pi }\right) ^{5/6}\sqrt{\xi }\,\langle V\rangle ^{5/6} \approx 0.23\,\langle V\rangle ^{5/6},\]
where the average spine volume \(\langle V\rangle\) and \(\Delta x_{3D}\) are in \(\mu\)m\(^{3}\). Note that in the 3D case the noise amplitude \(\Delta x_{3D}\) is a nearly linear function of the mean spine volume. This closely resembles the empirical finding in17 that the standard deviation of intrinsic spine fluctuations is well fitted by a linear function of spine volume.
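The same kind of check applies in 3D. The sketch below assumes the spherical-head relation \(V = \pi D^{3}/6\) with \(D = \xi N_{K}\). It solves \(\nu ^{3}+3\nu ^{2}+\nu = 6\langle V\rangle /(\pi \xi ^{3})\) by bisection and computes the exact volume standard deviation from the Poisson moments. It then compares this with the large-\(\nu\) form \((\pi /2)(6/\pi )^{5/6}\sqrt{\xi }\langle V\rangle ^{5/6}\approx 0.225\langle V\rangle ^{5/6}\). The closed form and its coefficient are our own evaluation of the \(\nu \gg 1\) limit, and the volumes in the loop are illustrative.

```python
import math

XI = 0.007  # G-actin monomer length in micrometres (from the text)


def nu_from_volume(mean_volume: float, xi: float = XI) -> float:
    """Solve nu^3 + 3 nu^2 + nu = 6 <V> / (pi xi^3) for nu > 0 by bisection (LHS increases)."""
    target = 6.0 * mean_volume / (math.pi * xi**3)
    lo, hi = 0.0, max(1.0, target ** (1.0 / 3.0))  # LHS(hi) exceeds the target
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if mid**3 + 3.0 * mid**2 + mid < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def sd_volume_exact(mean_volume: float, xi: float = XI) -> float:
    """SD of V = (pi/6) xi^3 N^3 for Poisson N, using <N^6> - <N^3>^2."""
    nu = nu_from_volume(mean_volume, xi)
    return math.pi * xi**3 / 6.0 * math.sqrt(9 * nu**5 + 54 * nu**4 + 84 * nu**3 + 30 * nu**2 + nu)


def delta_x_3d(mean_volume: float, xi: float = XI) -> float:
    """Large-nu approximation (pi/2) (6/pi)^(5/6) sqrt(xi) <V>^(5/6), volumes in um^3."""
    return (math.pi / 2.0) * (6.0 / math.pi) ** (5.0 / 6.0) * math.sqrt(xi) * mean_volume ** (5.0 / 6.0)


for volume in (0.01, 0.1, 1.0):  # illustrative mean volumes in um^3
    exact, approx = sd_volume_exact(volume), delta_x_3d(volume)
    print(f"<V>={volume:4.2f}  exact={exact:.5f}  approx={approx:.5f}  rel.err={abs(exact - approx) / exact:.3f}")
```

Here the approximation slightly underestimates the exact value, by roughly \(1/(2\nu )\) in relative terms (about 1% at \(\langle V\rangle = 0.01\) \(\mu\)m\(^{3}\)). The error shrinks as spines get larger.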

Uncertainty parameter for intrinsic noise amplitude

The above relations for the intrinsic noise amplitude \(\Delta x\), summarized in Eq. (2), were derived under the assumption of a Poisson distribution for the number of actin monomers spanning the linear dimension of the dendritic spine. Here, we introduce an uncertainty parameter r that measures the deviation of the intrinsic noise amplitude from this Poisson estimate. We define the renormalized noise amplitude \(\Delta x_{r}= r\Delta x\), i.e. we rescale the original noise amplitude (resolution) by r, and use it to quantify how noise uncertainty affects the results. For \(r \ll 1\) the effective noise in spine size is very small, while for \(r \gg 1\) it is very large.
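A compact way to apply the renormalized amplitude is a single helper that stores c and \(\kappa\) of \(\Delta x = c\langle S\rangle ^{\kappa }\) for each spine dimension and multiplies by r. The coefficients below are our own evaluation of the large-\(\nu\) Poisson limits (\(\kappa = 1/2, 3/4, 5/6\) for length, area and volume). They are assumptions for illustration and may be rounded differently in Eq. (2). The names NOISE_LAW and renormalized_noise are hypothetical.

```python
import math

XI = 0.007  # G-actin monomer length in micrometres, as in the text

# (c, kappa) in Delta_x = c * <S>**kappa, evaluated from the large-nu Poisson limits.
# Assumption: these are our own evaluations and may be rounded differently in Eq. (2).
NOISE_LAW = {
    "length": (math.sqrt(XI), 1.0 / 2.0),
    "area": (2.0 * math.pi**0.25 * math.sqrt(XI), 3.0 / 4.0),
    "volume": ((math.pi / 2.0) * (6.0 / math.pi) ** (5.0 / 6.0) * math.sqrt(XI), 5.0 / 6.0),
}


def renormalized_noise(mean_size: float, kind: str, r: float = 1.0) -> float:
    """Delta_x_r = r * Delta_x; r = 1 recovers the Poisson-based noise amplitude."""
    if r <= 0.0:
        raise ValueError("the uncertainty parameter r must be positive")
    c, kappa = NOISE_LAW[kind]
    return r * c * mean_size**kappa


# Hypothetical example: a mean spine volume of 0.3 um^3 under three noise assumptions.
for r in (0.1, 1.0, 10.0):
    print(f"r = {r:5.1f}: Delta_x_r = {renormalized_noise(0.3, 'volume', r):.4f} um^3")
```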

Deviation from optimality of the empirical parameters characterizing spine size distributions

We introduce a measure of how far the parameters of a given fitted distribution deviate from their optimal theoretical values, defined as a relative combined error from optimality.

For the gamma distribution the deviation \(D_{g}\) is:

For the log-normal distribution the deviation \(D_{ln}\) is:

and for the log-logistic distribution the deviation \(D_{ll}\) is:

In Eqs. (38), (39) and (40), the parameters \(\alpha _{0}, \beta _{0}, \mu _{0}, \sigma _{0}\), and \(a_{0}, b_{0}\) are the optimal parameters of the respective distribution. The smaller the value of D, the closer the empirical distribution is to its maximal entropy. For example, if both \(\alpha\) and \(\beta\) deviate from their respective optimal values by 50\(\%\), then \(D_{g}= 1/2\), which is reported as a deviation of 50\(\%\) in Tables 2, 3, 4 and 5. The same applies to the other parameters.

Alternative measure of optimality: maximization of entropy density

As an alternative to maximizing the entropy for a given average spine size, we consider below the maximization of the entropy density. The former measures the average number of bits per spine of typical size, while the latter gives the average number of bits per unit of spine volume (or surface area, or length). More precisely, we analyze the optimization problem in which the entropy of spine sizes divided by the average spine size is maximized.

The fitness function F in this case takes the form
\[F(S,\sigma _{s}) = \frac{H(S,\sigma _{s})}{S},\]
where S is the mean and \(\sigma _{s}\) is the standard deviation of spine size. Interestingly, F has a maximum for each of the three probability densities. We look for the optimal S and \(\sigma _{s}\) that maximize F, i.e. that satisfy \({\partial F}/{\partial S}= (1/S)\left[ {\partial H}/{\partial S} - H/S\right] = 0\) and \({\partial F}/{\partial \sigma _{s}}= (1/S){\partial H}/{\partial \sigma _{s}} = 0\).
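The condition \(\partial F/\partial \sigma _{s} = 0\) involves only the shape of the distribution, because \(\Delta x\) depends on S alone. The sketch below illustrates this by maximizing, on a grid and at a fixed mean S, the standard closed-form differential entropies of the gamma, lognormal and log-logistic distributions. We assume that Eqs. (10), (17) and (27) differ from these only by the shape-independent term \(-\log _{2}\Delta x(S)\) and a conversion to bits, neither of which moves the maximum. The digamma function is approximated by a finite difference of math.lgamma, since the Python standard library has no digamma; all function names are hypothetical.

```python
import math


def digamma(x: float, h: float = 1e-5) -> float:
    """Digamma via a central difference of math.lgamma (adequate for this illustration)."""
    return (math.lgamma(x + h) - math.lgamma(x - h)) / (2.0 * h)


def h_gamma(S: float, alpha: float) -> float:
    """Differential entropy (nats) of a gamma law with shape alpha and mean S (rate alpha/S)."""
    rate = alpha / S
    return alpha - math.log(rate) + math.lgamma(alpha) + (1.0 - alpha) * digamma(alpha)


def h_lognormal(S: float, sigma: float) -> float:
    """Differential entropy (nats) of a lognormal with log-scale SD sigma and mean S."""
    mu = math.log(S) - sigma**2 / 2.0
    return mu + 0.5 + math.log(sigma * math.sqrt(2.0 * math.pi))


def h_loglogistic(S: float, b: float) -> float:
    """Differential entropy (nats) of a log-logistic with shape b > 1 and mean S."""
    a = S * b * math.sin(math.pi / b) / math.pi  # scale that fixes the mean at S
    return math.log(a / b) + 2.0


S = 0.3  # illustrative mean size; the maximizing shape does not depend on it
GAMMA_GRID = [0.5 + 0.001 * i for i in range(3001)]        # alpha in [0.5, 3.5]
LOGNORMAL_GRID = [0.2 + 0.001 * i for i in range(2801)]    # sigma in [0.2, 3.0]
LOGLOGISTIC_GRID = [1.1 + 0.001 * i for i in range(2901)]  # b in [1.1, 4.0]

best_alpha = max(GAMMA_GRID, key=lambda a: h_gamma(S, a))
best_sigma = max(LOGNORMAL_GRID, key=lambda s: h_lognormal(S, s))
best_b = max(LOGLOGISTIC_GRID, key=lambda b: h_loglogistic(S, b))
print(best_alpha, best_sigma, best_b)  # close to 1, 1 and 2
print("SD/mean at optimum:", 1 / math.sqrt(best_alpha), math.sqrt(math.expm1(best_sigma**2)))
```

The maximizers correspond to an SD/mean ratio of 1 for the gamma distribution (shape 1, i.e. exponential) and \(\sqrt{e-1}\approx 1.31\) for the lognormal. For the log-logistic they correspond to an infinite variance, since its variance is finite only for \(b > 2\). This agrees with the optimal \(\sigma _{s,0}\) values given in the text for the three distributions.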

For the gamma distribution, using Eq. (10) for H with \(\langle x\rangle _{g}= S\), we obtain the optimal mean \(S_{0}\) and standard deviation \(\sigma _{s,0}\) as \(S_{0}= \sigma _{s,0}= (c e^{-\kappa })^{1/(1-\kappa )}\). Here c is the numerical parameter relating \(\Delta x\) and S in Eq. (2), i.e. \(\Delta x= c S^{\kappa }\). Equal mean and standard deviation correspond to a gamma shape parameter of 1, i.e. an exponential distribution. Since \(S_{0}^{1-\kappa }= c e^{-\kappa } < c\), we have \(S_{0} < c S_{0}^{\kappa }= \Delta x(S_{0})\), so the optimal spine size is smaller than its intrinsic noise. The maximal value of the fitness function for the gamma distribution, i.e. the upper bound on entropy density, \(F_{g,m}= H(S_{0},\sigma _{s,0})/S_{0}\), is
\[F_{g,m} = \frac{1-\kappa }{S_{0}\ln 2} = \frac{1-\kappa }{\ln 2}\left( c e^{-\kappa }\right) ^{-1/(1-\kappa )}.\]
For the lognormal distribution, using Eq. (17), we obtain the optimal mean \(S_{0}= e(c/\sqrt{2\pi })^{1/(1-\kappa )}\) and standard deviation \(\sigma _{s,0}= \sqrt{e-1}S_{0}\). For this distribution \(S_{0}\) is also smaller than \(\Delta x(S_{0})\). The corresponding maximal entropy density is
\[F_{ln,m} = \frac{1-\kappa }{S_{0}\ln 2} = \frac{1-\kappa }{e\ln 2}\left( \frac{c}{\sqrt{2\pi }}\right) ^{-1/(1-\kappa )}.\]
For the loglogistic distribution, using Eq. (27) with \(\langle x\rangle _{ll}= S\), we have the optimal \(S_{0}= (\pi c/e^{(1+\kappa )})^{1/(1-\kappa )}\) and \(\sigma _{s,0}= \infty\). Again, \(S_{0}\) is smaller than \(\Delta x(S_{0})\). The maximal entropy density for the loglogistic distribution is
\[F_{ll,m} = \frac{1-\kappa }{S_{0}\ln 2} = \frac{1-\kappa }{\ln 2}\left( \frac{\pi c}{e^{(1+\kappa )}}\right) ^{-1/(1-\kappa )}.\]
Note that in general \(F_{g,m}> F_{ln,m} > F_{ll,m}\), which means that the gamma distribution provides the highest maximal information density. Moreover, for all three distributions the optimal spine size is smaller than the corresponding intrinsic noise \(\Delta x\), which indicates that maximal entropy density is not attainable for real spines. In other words, real spines are too large to be optimized for entropy density.
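The optimal sizes and the ordering \(F_{g,m}> F_{ln,m} > F_{ll,m}\) can be reproduced numerically. The sketch below assumes that, at the optimal shape, the entropy in bits takes the form \(H(S) = \log _{2}\left( K S/\Delta x(S)\right)\) with \(\Delta x = c S^{\kappa }\). Here \(K = e\) for the gamma with shape 1, \(K=\sqrt{2\pi }\) for the lognormal with \(\sigma = 1\), and \(K = e^{2}/\pi\) for the log-logistic with \(b = 2\). This form is our reconstruction, chosen because it reproduces the closed-form \(S_{0}\) quoted above; it is not copied from Eqs. (10), (17) and (27). The sketch maximizes \(F = H/S\) by golden-section search over \(\ln S\), compares the result with the closed forms, and reports F at the optimum. The values of c and \(\kappa\) are hypothetical inputs.

```python
import math

# Assumed entropy at the optimal shape: H(S) = log2(K * S / (c * S**kappa)), in bits.
K_CONST = {"gamma": math.e, "lognormal": math.sqrt(2.0 * math.pi), "loglogistic": math.e**2 / math.pi}


def entropy_density(S: float, c: float, kappa: float, k_const: float) -> float:
    """Fitness function F = H / S, with H in bits."""
    return math.log2(k_const * S / (c * S**kappa)) / S


def golden_max_log(f, lo: float, hi: float, iters: int = 100) -> float:
    """Golden-section search for the maximizer of f(exp(u)) over u in [lo, hi]; returns exp(u*)."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    x1, x2 = b - inv_phi * (b - a), a + inv_phi * (b - a)
    f1, f2 = f(math.exp(x1)), f(math.exp(x2))
    for _ in range(iters):
        if f1 < f2:  # the maximum lies in [x1, b]
            a, x1, f1 = x1, x2, f2
            x2 = a + inv_phi * (b - a)
            f2 = f(math.exp(x2))
        else:  # the maximum lies in [a, x2]
            b, x2, f2 = x2, x1, f1
            x1 = b - inv_phi * (b - a)
            f1 = f(math.exp(x1))
    return math.exp(0.5 * (a + b))


def closed_form_s0(c: float, kappa: float) -> dict[str, float]:
    """Optimal mean sizes S0 as quoted in the text."""
    p = 1.0 / (1.0 - kappa)
    return {
        "gamma": (c * math.exp(-kappa)) ** p,
        "lognormal": math.e * (c / math.sqrt(2.0 * math.pi)) ** p,
        "loglogistic": (math.pi * c / math.exp(1.0 + kappa)) ** p,
    }


def optimise(c: float, kappa: float) -> dict[str, tuple[float, float]]:
    """Numerical S0 and the corresponding maximal entropy density F_m for each distribution."""
    result = {}
    for name, k_const in K_CONST.items():
        s0 = golden_max_log(lambda S: entropy_density(S, c, kappa, k_const), -60.0, 10.0)
        result[name] = (s0, entropy_density(s0, c, kappa, k_const))
    return result


c, kappa = 0.0837, 0.5  # hypothetical 1D coefficients (spine length in micrometres)
numeric, exact = optimise(c, kappa), closed_form_s0(c, kappa)
for name, (s0, f_max) in numeric.items():
    print(f"{name:12s} S0 numeric={s0:.6g}  closed form={exact[name]:.6g}  F_m={f_max:.4g} bits/um")
```

Under this assumption \(H(S_{0}) = (1-\kappa )/\ln 2\) at every optimum, so \(F_{m}\) is inversely proportional to \(S_{0}\). The ordering of the entropy densities therefore simply mirrors \(S_{0,g} < S_{0,ln} < S_{0,ll}\). For the exponents used here (1/2, 3/4, 5/6), each closed-form \(S_{0}\) also lies below \(cS_{0}^{\kappa }\), consistent with the statement that the optimal size falls below the intrinsic noise.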

Fitting of spine volume to lognormal, loglogistic, and gamma distributions. Empirical histograms of spine volumes from human cingulate cortex (rectangles; combined spines from apical and basal dendrites taken from34) were fitted to three different distributions (solid lines). Number of bins \(N_{b}=20\) for all plots. Below we provide mean values of the fitted parameters with the corresponding 95\(\%\) confidence intervals in brackets. (A) Fits at 40 years of age yield: \(\mu = -1.56\) CI = [-1.58, -1.53], \(\sigma =0.87\) CI = [0.85, 0.88] (lognormal); \(a=0.22\) CI = [0.21, 0.23], \(b=2.03\) CI = [1.94, 2.12] (loglogistic); and \(\alpha =1.67\) CI = [1.61, 1.73], \(\beta =5.72\) CI = [5.51, 5.93] (gamma). (B) Fits at 85 years of age yield: \(\mu = -1.50\) CI = [-1.53, -1.46], \(\sigma =0.91\) CI = [0.88, 0.93] (lognormal); \(a=0.24\) CI = [0.23, 0.25], \(b=1.93\) CI = [1.83, 2.03] (loglogistic); and \(\alpha =1.56\) CI = [1.49, 1.63], \(\beta =4.91\) CI = [4.66, 5.18] (gamma).
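As a worked example connecting these fits to the SD/mean ratios discussed in the main text, the sketch below converts the fitted shape parameters of panels (A) and (B) into coefficients of variation using standard closed forms. These are \(1/\sqrt{\alpha }\) for the gamma distribution (valid whether \(\beta\) is a rate or a scale) and \(\sqrt{e^{\sigma ^{2}}-1}\) for the lognormal. For the log-logistic they are the moments \(E[X^{m}] = a^{m}(m\pi /b)/\sin (m\pi /b)\), which exist only for \(b > m\). We assume that a is the log-logistic scale and b its shape; the function names are hypothetical.

```python
import math


def cv_gamma(alpha: float) -> float:
    """SD/mean of a gamma distribution depends only on the shape: 1/sqrt(alpha)."""
    return 1.0 / math.sqrt(alpha)


def cv_lognormal(sigma: float) -> float:
    """SD/mean of a lognormal with log-scale SD sigma: sqrt(exp(sigma^2) - 1)."""
    return math.sqrt(math.expm1(sigma**2))


def cv_loglogistic(b: float) -> float:
    """SD/mean of a log-logistic with shape b; infinite unless b > 2 (independent of scale a)."""
    if b <= 2.0:
        return math.inf
    t = math.pi / b
    m1 = t / math.sin(t)              # E[X] / a
    m2 = 2.0 * t / math.sin(2.0 * t)  # E[X^2] / a^2
    return math.sqrt(m2 - m1**2) / m1


# Point estimates from the caption (human cingulate cortex, spine volume): (alpha, sigma, b).
fits = {"40 years": (1.67, 0.87, 2.03), "85 years": (1.56, 0.91, 1.93)}
for age, (alpha, sigma, b) in fits.items():
    print(age, round(cv_gamma(alpha), 2), round(cv_lognormal(sigma), 2), cv_loglogistic(b))
```

At 40 years the gamma and lognormal fits give SD/mean of about 0.77 and 1.06, inside the 0.7–1.3 band. The log-logistic fit with b close to 2 instead implies a much larger ratio, and an infinite one for \(b = 1.93\), reflecting its heavy tail.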

Fitting of spine length to lognormal, loglogistic, and gamma distributions. Empirical histograms of spine length from human cingulate cortex (rectangles; combined spines from apical and basal dendrites taken from34) were fitted to three different distributions (solid lines). Number of bins \(N_{b}=20\) for all plots. Below we provide mean values of the fitted parameters with the corresponding 95\(\%\) confidence intervals in brackets. (A) Fits at 40 years of age yield: \(\mu = 0.34\) CI = [0.33, 0.35], \(\sigma =0.52\) CI = [0.51, 0.53] (lognormal); \(a=1.46\) CI = [1.23, 1.69], \(b=3.44\) CI = [2.85, 4.03] (loglogistic); and \(\alpha =4.31\) CI = [3.61, 5.01], \(\beta =2.71\) CI = [2.48, 2.96] (gamma). (B) Fits at 85 years of age yield: \(\mu = 0.33\) CI = [0.32, 0.34], \(\sigma =0.52\) CI = [0.51, 0.53] (lognormal); \(a=1.44\) CI = [1.20, 1.68], \(b=3.42\) CI = [2.80, 4.04] (loglogistic); and \(\alpha =4.26\) CI = [3.49, 5.03], \(\beta =2.70\) CI = [2.42, 3.02] (gamma).

Similarity of spine head diameter distributions across the human lifespan. Empirical data for human hippocampal spine head diameter (rectangles; taken from30), ranging from infancy through maturity to old age, look very similar. These data were fitted to three different distributions (solid lines). (A) Fitting parameters for lognormal: \(\mu = -1.08\) CI = [-1.11, -1.05], \(\sigma =0.47\) CI = [0.44, 0.50], (5 months); \(\mu = -1.07\) CI = [-1.09, -1.05], \(\sigma =0.48\) CI = [0.46, 0.50], (2 years); \(\mu = -1.05\) CI = [-1.07, -1.03], \(\sigma =0.47\) CI = [0.45, 0.48], (23 years); \(\mu = -1.05\) CI = [-1.06, -1.03], \(\sigma =0.49\) CI = [0.47, 0.50], (27 years); \(\mu = -1.06\) CI = [-1.07, -1.04], \(\sigma =0.49\) CI = [0.48, 0.50], (38 years); \(\mu = -1.05\) CI = [-1.06, -1.03], \(\sigma =0.48\) CI = [0.47, 0.49], (45 years); \(\mu = -1.05\) CI = [-1.06, -1.03], \(\sigma =0.48\) CI = [0.46, 0.49], (57 years); \(\mu = -1.06\) CI = [-1.07, -1.04], \(\sigma =0.48\) CI = [0.46, 0.49], (58 years); \(\mu = -1.06\) CI = [-1.07, -1.04], \(\sigma =0.48\) CI = [0.47, 0.49], (68 years); \(\mu = -1.06\) CI = [-1.07, -1.05], \(\sigma =0.49\) CI = [0.48, 0.50], (70 years); \(\mu = -1.06\) CI = [-1.07, -1.04], \(\sigma =0.49\) CI = [0.48, 0.50], (71 years).
(B) Fitting parameters for loglogistic: \(a=0.35\) CI = [0.24, 0.46], \(b=3.85\) CI = [2.55, 5.14], (5 months); \(a=0.35\) CI = [0.30, 0.40], \(b=3.70\) CI = [3.11, 4.28], (2 years); \(a=0.35\) CI = [0.31, 0.38], \(b=3.85\) CI = [3.40, 4.30], (23 years); \(a=0.36\) CI = [0.32, 0.39], \(b=3.70\) CI = [3.29, 4.10], (27 years); \(a=0.35\) CI = [0.32, 0.37], \(b=3.70\) CI = [3.37, 4.02], (38 years); \(a=0.36\) CI = [0.33, 0.38], \(b=3.70\) CI = [3.40, 3.99], (45 years); \(a=0.36\) CI = [0.33, 0.38], \(b=3.85\) CI = [3.56, 4.13], (57 years); \(a=0.35\) CI = [0.32, 0.37], \(b=3.85\) CI = [3.58, 4.11], (58 years); \(a=0.35\) CI = [0.33, 0.37], \(b=3.70\) CI = [3.45, 3.94], (68 years); \(a=0.35\) CI = [0.33, 0.37], \(b=3.70\) CI = [3.47, 3.92], (70 years); \(a=0.35\) CI = [0.33, 0.36], \(b=3.70\) CI = [3.48, 3.91], (71 years). (C) Fitting parameters for gamma: \(\alpha =5.03\) CI = [3.14, 6.90], \(\beta =13.44\) CI = [10.90, 16.80], (5 months); \(\alpha =4.82\) CI = [3.90, 5.74], \(\beta =12.68\) CI = [11.40, 14.30], (2 years); \(\alpha =5.01\) CI = [4.28, 5.73], \(\beta =12.98\) CI = [11.96, 14.30], (23 years); \(\alpha =4.77\) CI = [4.12, 5.41], \(\beta =12.19\) CI = [11.30, 13.38], (27 years); \(\alpha =4.59\) CI = [4.11, 5.06], \(\beta =11.80\) CI = [11.03, 12.70], (38 years); \(\alpha =4.80\) CI = [4.34, 5.25], \(\beta =12.27\) CI = [11.54, 13.01], (45 years); \(\alpha =4.90\) CI = [4.45, 5.34], \(\beta =12.55\) CI = [11.84, 13.38], (57 years); \(\alpha =4.88\) CI = [4.47, 5.28], \(\beta =12.68\) CI = [12.01, 13.40], (58 years); \(\alpha =4.73\) CI = [4.36, 5.09], \(\beta =12.21\) CI = [11.56, 12.85], (68 years); \(\alpha =4.63\) CI = [4.30, 4.96], \(\beta =11.96\) CI = [11.47, 12.55], (70 years); \(\alpha =4.59\) CI = [4.28, 4.90], \(\beta =11.79\) CI = [11.25, 12.28], (71 years).

Information encoded in spine volume is nearly optimal across brains of different sizes, regions, and physiological conditions. (A) The entropy data points align closely with the maximal entropy curve (solid line, given by Eq. 4) for all three distributions, although the gamma distribution has several outliers. The point corresponding to rat cerebellum is a visible outlier for all three distributions. (B) The ratio of empirical standard deviation to mean spine volume as a function of spine volume. Note that the majority of SD/mean data points fall within an “optimality zone” (dotted horizontal lines), a band whose boundaries lie \(30 \%\) above and below the optimal ratio 1.0 for the gamma distribution (solid line). Data points with SD/mean \(\gg 1\) are closer to the maximal entropy of the loglogistic distribution. Legend for data points in panels (A) and (B): diamonds for mouse, circles for rat, squares for macaque monkey, triangles for human.

Information encoded in spine surface area is nearly optimal across mammalian brains of different regions and conditions. (A) As in Fig. 4, the entropy data points align nearly optimally with the maximal entropy curve (solid line, given by Eq. 4), with cerebellum as an outlier. (B) The empirical SD/mean ratios for spine and PSD areas lie mostly within the range 0.7–1.3 (dotted horizontal lines), which is close to the optimal ratio 1.0 for the gamma distribution (solid line). Legend for data points in (A) and (B): diamonds for mouse, circles for rat, pentagram for cat, squares for macaque monkey, triangles for human.

Information encoded in spine length is suboptimal. (A) Data-driven continuous entropies are generally suboptimal (especially for the gamma distribution), but they are nevertheless relatively close to the maximal entropy curve (solid line). (B) The suboptimality is manifested by the majority of empirical SD/mean ratios for spine length lying below 0.7, i.e., significantly below the optimal ratio for the gamma distribution (1.0; solid line). Legend for data points in (A) and (B): diamonds for mouse, circles for rat, pentagram for cat, squares for macaque monkey, triangles for human.

Information encoded in spine head diameter is suboptimal. (A) Data points are scattered far away from the maximal entropy curve (solid line). (B) The majority of empirical SD/mean ratios are below 1/3, i.e., far from the optimality zone (dotted lines) around the optimal ratio for the gamma distribution (1.0; solid line). Legend for data points in (A) and (B): diamonds for mouse, circles for rat, stars for rabbit, plus for echidna, pentagram for cat, squares for macaque monkey, hexagon for dolphin, triangles for human.

Entropy density in spine sizes is far from optimal. Density of entropy F as a function of (A) spine volume, (B) spine surface area, (C) spine length, and (D) spine head diameter. Blue diamonds correspond to data points described by the lognormal distribution, black squares to the loglogistic, and red triangles to the gamma distribution. Note that all these data points lie far below the theoretical upper bounds for the entropy density, represented by three lines (blue solid line for lognormal, black dashed line for loglogistic, and green dotted line for gamma distribution).