Abstract

Bivariate data are frequently encountered in many applied fields, including econometrics, engineering, physiology, biology, and medicine. For bivariate analysis, a wide range of non-parametric and parametric techniques can be applied. There are fewer requirements needed for non-parametric procedures than for parametric ones. In this paper, the saddlepoint approximation method is used to approximate the exact p-values of some non-parametric bivariate tests. The saddlepoint approximation is an approximation method used to approximate the mass or density function and the cumulative distribution function of a random variable based on its moment generating function. The saddlepoint approximation method is proposed in this article as an alternative to the asymptotic normal approximation. A comparison between the proposed method and the normal asymptotic approximation method is performed by conducting Monte Carlo simulation study and analyzing three numerical examples representing bivariate real data sets. In general, the results of the simulation study show the superiority of the proposed method over the asymptotic normal approximation method.

Similar content being viewed by others

Introduction

Recently, several experiments have been performed based on bivariate data. As a result, bivariate data analysis is critical in statistical research and is typical of many studies. One of the important problems associated with bivariate data is the problem of testing the symmetry of the two bivariate distributions. Suppose the observed bivariate data takes the form \(Z=\left\{\left({x}_{1},{y}_{1}\right), \dots ,\left({x}_{n},{y}_{n}\right)\right\}\) where \(\left({x}_{1},{y}_{1}\right), \dots ,\left({x}_{n},{y}_{n}\right)\) are assumed to be mutually independent from a bivariate population follow the F distribution. The problem is to test the symmetrical hypothesis.

Sign testing is a common method of testing symmetry. Many statisticians and those interested in statistical inference have made many generalizations to the univariate sign test in order to obtain the corresponding test in the bivariate case. Work began on this point by both Hodges1 and Blumen2. After that, many studies appeared with the aim of providing and developing the sign tests for the bivariate case. In this regard, we can point out the contributions of Chatterjee3, Kohnen4, Dietz5, Brown and Hettmansperger6, Oja and Nyblom7, and Brown and Hettmansperger8. Brown et al.9 discussed the concepts of bivariate sign test and bivariate medians. Larocque et al.10 introduced an affine-invariant modification of the Wilcoxon signed-rank test for bivariate location problems. The advantage of this test over Jan and Randles11 test is that its asymptotic null distribution holds without assuming elliptical symmetry. Samawi12 introduced a bivariate sign test for the one-sample bivariate location problem using a bivariate ranked set sample. Ghute and Shirke13 developed a nonparametric control chart for monitoring the changes in the location of a bivariate process, the proposed chart is based on Bennett’s14 bivariate sign test.

The p-value plays an important role in hypothesis tests because of its important role in determining the acceptance or rejection of the null hypothesis. Therefore, approximating the exact p-value with high accuracy is a challenge in many statistical tests. In this context, the saddlepoint approximation method is suggested to approximate the exact p-value of a class of bivariate tests which takes the general linear form

where \({{\varvec{U}}}_{i}\) is the vector of score function based on observation of the sample, \({\beta }_{i}\) is the vector of indicators which has a sequence of ones and zeroes, and \({\varvec{C}}\) is a constant vector possibly depending on observation of the sample.

The saddlepoint approximation method is basically just a method for approximating the density function. Daniels15 was the first one who initially proposed the general application of the saddlepoint approximation for density function. The cumulative distribution function in the univariate case was approximated by Lugannani and Rice16 depending on the proposal of Daniels. Skovgaard17 provided a double saddlepoint approximation for the conditional distributions. A saddlepoint approximation for a bivariate distribution function was introduced by Wang18. Abd-Elfattah19 introduced an accurate and easy approximation for the distribution function of bivariate class of random sum distributions using saddlepoint approximation technique. Abd-Elfattah20 approximated the exact permutation distribution of a class of two-sample bivariate tests using saddlepoint approximation technique. Abd-Elfattah21 used the saddlepoint approximation to approximate the distribution function of the bivariate symmetry test statistic under competing risk data. Abd El-raheem and Abd-Elfattah22,23 approximated the exact permutation distribution of a class of two-sample tests for cluster data under two different randomization designs. For more recent articles in the saddlepoint approximation method; see Kamal et al.24,25. In the end, we can mention a number of important and basic references on the subject of saddlepoint approximations, which highlight the importance and applications of saddlepoint approximations in many branches and fields of statistics, namely: Booth and Butler26, Strawderman27, Butler28, Abd-Elfattah and Butler29, Kwok and Zheng30.

As mentioned earlier, our goal is to approximate the mid-p value of a class of bivariate sign tests. The focus here is on the mid p-value rather than ordinary p-value since the ordinary p-value is too conservative in comparison to the mid p-value, see Abd-Elfattah20. Such a class of bivariate sign tests is presented in detail in “Bivariate sign tests” section. The bivariate saddlepoint approximation is applied to approximate the mid-p value of a class of bivariate sign tests in “Bivariate saddlepoint approximations” section. “Illustrative examples and simulation studies” section compares the performance of the saddlepoint approximation and the asymptotic normal method using numerical examples and simulation studies.

Bivariate sign tests

This section presents two of the most frequently used bivariate sign tests in one sample problem. After that, we formulate the two statistics of such two tests in a general linear form to facilitate obtaining highly accurate approximation of exact p-value of such bivariate sign tests using bivariate saddlepint approximation method.

Bivariate sign test of Blumen2

The bivariate sign test was provided by Blumen2 to test the hypothesis that the medians of two variables have a specific value. This test was created to be independent of correlation between the two variables. Let \(\left({x}_{i},{y}_{i}\right)\) represent the bivariate sample points. In order to perform Blumen’s bivariate sign test, consider the \(n\) axes created by drawing a line across each \(\left({x}_{i},{y}_{i}\right)\) and the origin, and number the axes corresponding to the angle counterclockwise from the positive end of the horizontal axis. Let \({\gamma }_{i}=+ 1\, or\ - 1\) if the data point associated with the ith axis is higher (lower) than the horizontal axis. The center of gravity is calculated by computing the values at the intersection of the standardized vectors and the unit circle. Blumen’s test statistic is given by

If the null hypothesis is true, then \({l}_{1}\) and \({l}_{2}\) are approximately independent normal variables with mean zero and variance \(n/2.\)

Let \({\alpha }_{i}=\frac{{\gamma }_{i}+1}{2}\), then \({l}_{1}\) and \({l}_{2}\) in Eq. (2) become

and

where \({\alpha }_{i}=\left\{\mathrm{0,1}\right\}\). Now, the statistics \({l}_{1}\) and \({l}_{2}\) can be rewritten in the bivariate sign statistic form as

or in the form (1) with \({\beta }_{i}={\alpha }_{i}\) , \({{\varvec{U}}}_{i}= {2\left(\mathrm{cos}\left(\frac{\pi (i-1)}{n}\right),\mathrm{sin}\left(\frac{\pi (i-1)}{n}\right) \right)}^{T}\) and \({\varvec{C}}=-{\sum }_{i=1}^{n}{\left(\mathrm{cos}\left(\frac{\pi (i-1)}{n}\right),\mathrm{sin}\left(\frac{\pi (i-1)}{n}\right) \right)}^{T}.\)

Bivariate sign test of Brown et al.9

Brown et al.9 introduced another idea for bivariate symmetry test. Let \({{\varvec{z}}}_{1},\dots ,{{\varvec{z}}}_{n}\) be a sample drawn at random from a bivariate distribution. Brown et al.9 meant by symmetry here that \({{\varvec{z}}}_{{\varvec{i}}}-{\varvec{\mu}}\) and \({\varvec{\mu}}-{{\varvec{z}}}_{{\varvec{i}}}\) are identically distributed, where \({\varvec{\mu}}\) is the symmetry center. Thus, the null hypothesis of bivariate symmetry is defined by

The observed data can be represented in the following form

where \({\gamma }_{i}=1\) or \(-1\) if \({{\varvec{z}}}_{i}\) is above or below the horizontal axis, respectively, \({r}_{i}\) is the ith radius, and \(0\le {\varphi }_{1}\le {\varphi }_{2}\le \dots \le {\varphi }_{n}\le \pi\) are the ordered angles.

Under the null hypothesis, \(P\left({\gamma }_{i}=1\right)=P\left({\gamma }_{i}=-1\right)=\frac{1}{2}\).

Let \({{\varvec{z}}}^{T}=\left({z}_{1},{z}_{2}\right)\), and \({\dot{{\varvec{z}}}}^{T}=\left(-{z}_{2},{z}_{1}\right)\), then the gradient vector at the origin (divided by n) is given by

The statistic \({\varvec{q}}\) becomes simpler after some simplification as following:

where \({{\varvec{w}}}^{T}=\left({w}_{1},{w}_{2}\right)\) and using \({{\varvec{x}}}_{n+i}=-{{\varvec{x}}}_{i}\) such that:

The statistic \({\varvec{q}}\) is asymptotic normal with mean \({\varvec{\mu}}=E\left({\varvec{q}}|{H}_{0}\right)=0\) and covariance matrix \({\varvec{\sigma}}=\frac{1}{4}\sum_{i=1}^{n}{{\varvec{w}}}_{i}{{\varvec{w}}}_{i}^{T}\).

Let \({\alpha }_{i}=\frac{{\gamma }_{i}+1}{2}\) then \({\alpha }_{i}=\left\{\mathrm{0,1}\right\}\) and the statistic \({\varvec{q}}\) becomes

It is clear that the statistic \({\varvec{q}}\) takes the same form of the linear statistic in Eq. (1) with \({\beta }_{i}={\alpha }_{i}\), \({{\varvec{U}}}_{i}={{\varvec{w}}}_{i}\) and \({\varvec{C}}=-\frac{1}{2}{\sum }_{i=1}^{n}{{\varvec{w}}}_{i}\).

Bivariate saddlepoint approximations

The permutation distribution of the general from of the bivariate sign statistic in Eq. (1) is \({2}^{n}\). This distribution can be derived from the set \(\{{\beta }_{1}, . . .,{\beta }_{n}\}\) of independent and identically Bernoulli (1/2) random variables. The bivariate sign statistic in (1) can be written as two sign statistics as

with \({{\varvec{U}}}_{i}={\left({U}_{1i},{U}_{2i}\right)}^{T}\, \mathrm{and}\, {\varvec{C}}={({c}_{1},{c}_{2})}^{T}.\)

Let \({{\varvec{B}}}_{0}=(\tau ,\upsilon )\) be observed value of \({\varvec{B}}\), it is possible to calculate the mid-p value of the statistic \({\varvec{B}}\) at \({{\varvec{B}}}_{0}\) as

The \(\mathrm{mid}-\mathrm{p}\left({{\varvec{B}}}_{0}\right)\) can be approximated using saddlepoint approximation of the bivariate CDF which was developed by Wang18. The approximate formula presented by Wang18 is an approximation of the bivariate cumulative distribution function as a generalization of the approximation presented by Lugannani and Rice16 which is the approximation of the univariate cumulative distribution function. Both approximations are an approximation of the intractable integrals resulting from calculating different forms of probabilities. These approximations totally depend on the cumulant generating function (CGF).

The joint CGF of \({b}_{1}\) and \({b}_{2}\) is given by

Since \({{\varvec{B}}}_{0}=(\tau ,\upsilon )\) is the observed value of the statistic \({\varvec{B}}\), assume for fixed \((\tau ,\upsilon )\) that there exists a unique solution \(\left({t}_{0},{u}_{0}\right)\) of the following equation

and \(t={\widehat{t}}_{0}\) solves the equation

where \({K}_{1}(t)\) is the CGF of \({b}_{1}\), and similarly it can be assumed that \({K}_{2}(u)\) is the CGF of \({b}_{2}\).

According to the saddlepoint approximation of the bivariate CDF, the \(\mathrm{mid}-\mathrm{p}\left({{\varvec{B}}}_{0}\right)\) in (6) can be approximated as

where \({I}_{11}\sim\Phi ({\tau }_{1},{\upsilon }_{1},{\rho }_{1})\), \({I}_{12}\sim\Phi ({w}_{{u}_{0}})\phi ({\upsilon }_{0})\left\{{\upsilon }_{0}^{-1}-{({u}_{0}G)}^{-1}\right\}\), \({I}_{21}=\Phi \left({\upsilon }_{0}\right)\phi \left({\tau }_{1}\right)\left\{{w}_{{u}_{0}}^{-1}-{t}_{0}^{-1}{[{K}_{tt}\left({t}_{0},{u}_{0}\right)]}^{-1/2}\right\},\)

where

-

(1)

\({\tau }_{1}=sgn({\widehat{t}}_{0}){\left\{-2({K}_{1}\left({\widehat{t}}_{0}\right)-{\widehat{t}}_{0}\tau ) \right\}}^{1/2};\)

-

(2)

\({w}_{{u}_{0}}=sgn({t}_{0}){\left[-2\left\{K\left({t}_{0},{u}_{0}\right)-{K}_{2}\left({u}_{0}\right)-{t}_{0}\tau \right\}\right]}^{1/2};\)

-

(3)

\({\upsilon }_{0}=sgn({u}_{0}){\left[-2\left\{K\left({t}_{0},{u}_{0}\right)-{K}_{1}\left({\widehat{t}}_{0}\right)-\left({t}_{0}-{\widehat{t}}_{0}\right)\tau -{u}_{0}\upsilon \right\}\right]}^{1/2};\)

-

(4)

\(b=({w}_{{u}_{0}}-{\tau }_{1})/{\upsilon }_{0};\)

-

(5)

\(G={\left.{\left[{K}_{uu}\left(t,u\right)-{\left({K}_{tu}\left(t,u\right)\right)}^{2}/{K}_{tt}\left(t,u\right)\right]}^{1/2}\right|}_{\left({t}_{0},{u}_{0}\right)};\)

-

(6)

\({\rho }_{1}=-b/{(1+{b}^{2})}^{1/2};\)

-

(7)

\({\upsilon }_{1}={(\upsilon }_{0}-b{\tau }_{1})/{(1+{b}^{2})}^{1/2}.\)

Here \(\Phi (.,.,\rho )\) is the standard bivariate normal distribution, \(\rho\) is the correlation between the two components, and \(\Phi (.)\) and \(\phi \left(.\right)\) are the standard normal distribution and density functions.

To get, the value of the approximation in (9), some functions are required which are as follows

and

Illustrative examples and simulation studies

Three published real data sets are considered in this part to demonstrate the efficiency of the saddlepoint and normal approximations. Inclusive Monte Carlo simulation studies are also carried out to evaluate the accuracy of the saddlepoint approach compared to that of the traditional asymptotic method.

Examples

The precision of different approaches to approximate the exact p-value of bivariate sign tests may be illustrated using some numerical examples. As a result, three published real data sets are provided in order to compare the saddlepoint approximation and normal approximation methods. For Data Set 1, ten adult sons and their fathers participated in a study to assess eye refractions. Positive refractions indicated long-sightedness, while negative refractions showed near-sightedness. The sons were part of a large group collected in northern Finland for infants born in 1966. Data set 1 is presented in Table 1. More details can be found about this data set in Rantakallio31. We can indicate that several authors used the data presented in Table 1 to clarify some procedures for bivariate sign tests, for example, see Brown et al.9. Data set 2 is a simple study of twelve cotton textile workers who were researched by Merchant et al.32 to determine the effects of cotton dust exposure. Before and after each participant’s 6-h exposure to cotton dust, several factors were measured for each worker, including the change in closing volume and white blood cell count. Dietz5 used data set 2 to clarify the procedures of his bivariate sign test. This data set was included in Table 1 of Dietz5. Data set 3 is from Samawi et al.33. These data represent the bilirubin levels in jaundiced infants staying in the neonatal intensive care unit. Physicians are interested in jaundice because it may have a significant influence on hearing and neurological development and is a risk factor for death. It would be extremely beneficial to physicians if they could test the hypothesis that boys and females have the same median bilirubin level when weight groups are matched. The data was collected from five hospitals in Jordan and was limited to births in the first six months of 1997. Samawi et al.33 took fifteen pairs of male and female patients from the hospital records.

Table 2 shows the mid p-values for the three data sets for the Blumen2 and Brown et al.9 bivariate sign tests. Furthermore, the asymptotic normal p-values and saddlepoint p-values are also displayed in Table 2. In the remainder of this article, we refer to the Blumen2 and Brown et al.9 tests by test 1 and test 2, respectively. The simulated mid p-value (Sim) is derived based on \({10}^{6}\) permutations of the indicators {\({\beta }_{i}\)} by computing the ratio of cases in which B exceeds \({{\varvec{B}}}_{0}\) plus half the ratio of cases in which B equals to \({{\varvec{B}}}_{0}\).

In all three data sets, the saddle point approximation outperformed the normal approximation in terms of the simulated mid p-value precision.

Monte Carlo simulation study

Monte Carlo simulation studies are used to show the accuracy of the saddlepoint approximation over a wide range of simulated data from different bivariate distributions and different sample sizes. 1000 bivariate data sets of sizes \(n= 20,\) 30, 40, and 60 are generated from the bivariate exponential distribution, bivariate logistic distribution, bivariate normal distribution and bivariate Poisson distribution. For generating bivariate data from normal and Poisson distributions, three cases are taken into account for the correlation coefficient between the two variables: weak, moderate, and strong. While the data are generated from the bivariate exponential and logistic distributions assuming independence between the two variables. For the four distributions the following results “Sad.P.”, “E.Sad.”, and “E.Nor.” are presented in Tables 3, 4, 5 and 6, where “Sad.P.” is the proportion of the 1000 data sets for which the saddlepoint p-value is closer to the simulated exact mid p-value than the normal, “E.Sad.” is the average relative absolute error of the saddlepoint approximation, and “E.Nor.” is the average relative absolute error of the normal approximation. The estimated type I error and power of the considered tests at the 0.05 significance level are displayed in Tables 7 and 8, respectively.

We notice from Tables 3, 4, 5 and 6 that the mean absolute error of the proposed approximation method is less than that of the normal approximation method in all the assumed cases. Moreover, we can note that the convergence percentage of suggested approximation to the simulated exact p-values was not in any case less than 77.6%, but in some cases, it reached approximately 99.2%. It is observed that with increasing sample sizes there is an improvement in the normal approximation, but the saddlepoint approximation is more accurate and is closer to the simulated exact p-value, especially when the sample sizes are small.

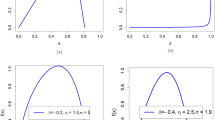

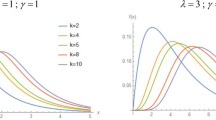

To facilitate a better understanding of the simulation results, the relative absolute errors of both the saddlepoint approximation and normal approximation for two cases of the simulation study are displayed in Figs. 1 and 2.

Concluding

Bivariate data analysis is becoming more and more important in many areas. Especially in the medical field, more than one variable such as tumor incidence and tumor size, blood pressure and pulse, weight and fat level, weight change and depression level are often studied. Leveraging Wang’s bivariate saddlepoint approximation technique, the exact p-values of a class of bivariate sign tests are approximated with high precision compared to normal approximation method. This high accuracy has been verified by analyzing three examples of real data and performing simulation study.

Data availability

The datasets used and/or analysed during the current study available from the corresponding author on reasonable request.

Change history

19 December 2023

A Correction to this paper has been published: https://doi.org/10.1038/s41598-023-49383-9

References

Hodges, J. L. A bivariate sign test. Ann. Math. Stat. 26(3), 523–527 (1955).

Blumen, I. A new bivariate sign test. J. Am. Stat. Assoc. 53(282), 448–456 (1958).

Chatterjee, S. K. A bivariate sign test for location. Ann. Math. Stat. 37, 1771–1782 (1966).

Kohnen, R. & Lienet, G. A. Bivariate sign tests sensitive to homeo- and heteropoetic treatment effects. Biom. J. 21, 755–761 (1979).

Dietz, E. J. Bivariate nonparametric tests for the one-sample location problem. J. Am. Stat. Assoc. 77(377), 163–169 (1982).

Brown, B. M. & Hettmansperger, T. P. Affine invariant rank methods in the bivariate location model. J. R. Stat. Soc. Ser. B 49, 301–310 (1987).

Oja, H. & Nyblom, J. Bivariate sign tests. J. Am. Stat. Assoc. 84(405), 249–259 (1989).

Brown, B. M. & Hettmansperger, T. P. An affine invariant bivariate version of the sign test. J. R. Stat. Soc. Ser. B 51, 117–125 (1989).

Brown, B. M., Hettmansperger, T. P., Nyblom, J. & Oja, H. On certain bivariate sign tests and medians. J. Am. Stat. Assoc. 87(417), 127–135 (1992).

Larocque, D., Tardif, S. & Eeden, C. An affine-invariant generalization of the Wilcoxon signed-rank test for the bivariate location problem. Aust. N. Z. J. Stat. 45(2), 153–165 (2003).

Jan, S. L. & Ronald, R. H. A multivariate signed sum test for the one-sample location problem. J. Nonparametr. Stat. 4(1), 49–63 (1994).

Samawi, H., Al-Saleh, M. F. & AlSaidy, O. Bivariate sign test for one-sample bivariate location model using ranked set sample. Commun. Stat. Theory Methods 35(6), 1071–1083 (2006).

Ghute, V. & Shirke, D. Bivariate nonparametric synthetic control chart based on sign test. J. Ind. Syst. Eng. 6, 108–121 (2012).

Bennett, B. M. On multivariate sign tests. J. R. Stat. Soc. 24, 159–161 (1962).

Daniels, H. E. Saddlepoint approximation in statistics. Ann. Math. Stat. 25, 631–650 (1954).

Lugannani, R. & Rice, S. O. Saddlepoint approximations for the distribution of the sum of independent random variables. Adv. Appl. Probab. 12, 475–490 (1980).

Skovgaard, I. M. Saddlepoint expansions for conditional distributions. J. Appl. Probab. 24, 875–887 (1987).

Wang, S. Saddlepoint approximations for bivariate distributions. J. Appl. Probab. 27, 586–597 (1990).

Abd-Elfattah, E. F. Bivariate stopped-sum distributions using saddlepoint methods. Stat. Probab. Lett. 78, 1857–1862 (2008).

Abd-Elfattah, E. F. Saddlepoint p-values for two-sample bivariate tests. J. Stat. Plann. Inference 171, 92–98 (2016).

Abd-Elfattah, E. F. Bivariate symmetry tests for complete and competing risks data: A saddlepoint approach. J. Stat. Comput. Simul. 87(6), 1269–1275 (2017).

Abd El-Raheem, A. M. & Abd-Elfattah, E. F. Weighted log-rank tests for clustered censored data: Saddlepoint p-values and confidence intervals. Stat. Methods Med. Res. 29(9), 2629–2636 (2020).

Abd El-Raheem, A. M. & Abd-Elfattah, E. F. Log-rank tests for censored clustered data under generalized randomized block design: Saddlepoint approximation. J. Biopharm. Stat. 31(3), 352–361 (2021).

Kamal, K. S., Abd El-Raheem, A. M. & Abd-Elfattah, E. F. Weighted log-rank tests for left-truncated data: Saddlepoint p-values and confidence intervals. Commun. Stat. Theory Methods 52, 4103 (2021).

Kamal, K. S., Abd El-Raheem, A. M. & Abd-Elfattah, E. F. Weighted log-rank tests for left-truncated data under Wei’s urn design: Saddlepoint p-values and confidence intervals. J. Biopharm. Stat. 32(5), 641–651 (2022).

Booth, J. G. & Butler, R. W. Randomization distributions and saddlepoint approximations in generalized linear models. Biometrika 77, 787–796 (1990).

Strawderman, R. Higher-order asymptotic approximation: Laplace, saddlepoint, and related methods. J. Am. Stat. 95(452), 1358–1364 (2000).

Butler, R. W. Saddlepoint Approximations with Applications (Cambridge University Press, 2007).

Abd-Elfattah, E. F. & Butler, R. W. The weighted log-rank class of permutation tests: P-values and confidence intervals using saddlepoint methods. Biometrika 94, 543–551 (2007).

Kwok, Y. K. & Zheng, W. Saddlepoint Approximation Methods in Financial Engineering (Springer, 2018).

Rantakallio, P. The longitudinal study of the northern Finland birth cohort of 1966. Paediatr. Perinat. Epidemiol. 2, 59–88 (1988).

Merchant, J. A. et al. Responses to cotton dust. Arch. Environ. Health 30, 222–229 (1975).

Samawi, H., Al-Saleh, M. F. & Al Saidy, O. The matched pair sign test using bivariate ranked set sampling for different ranking-based schemes. Stat. Methodol. 6(4), 397–407 (2009).

Funding

Authors state no funding involved.

Author information

Authors and Affiliations

Contributions

All authors participated in the methodology, writing and reviewing of the article as well as the programming of the theoretical results and the analysis and discussion of the results.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this Article was revised: The original version of this Article contained an error in the order of the author names, which was incorrectly given as Ibrahim A. A. Shanan, Abd El-Raheem M. Abd El-Raheem, Ehab F. Abd-Elfattah. In addition, The original version of this Article contained an error in the Funding section. Full information regarding the corrections made can be found in the correction notice for this Article.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Shanan, I.A.A., Abd-Elfattah, E.F. & Abd El-Raheem, AR.M. A new approach for approximating the p-value of a class of bivariate sign tests. Sci Rep 13, 19133 (2023). https://doi.org/10.1038/s41598-023-45975-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-45975-7

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.