Abstract

The Artificial Electric Field Algorithm (AEFA) has drawn much interest because of its low dependency on control parameters and its straightforward operations; yet it still suffers from slow convergence and low solution precision. In this research, a hybrid Artificial Electric Field Employing Cuckoo Search Algorithm with Refraction Learning (AEFA-CSR) is proposed as an improved version of AEFA to address these issues. The Cuckoo Search (CS) method is added to the algorithm to boost convergence and diversity, which may improve global exploration. Refraction learning (RL) is used to enhance the lead agent, helping it to advance toward the global optimum and improving local exploitation with each iteration. Tests are run on 20 benchmark functions to gauge the proposed algorithm's efficiency. To compare it with other well-studied metaheuristic algorithms, Wilcoxon rank-sum tests and Friedman tests at a 5% significance level are used. Additional tests are carried out to evaluate the algorithm's efficiency and usability. The overall effectiveness of the algorithm across different dimensions and population sizes varied between 61.53 and 90.0%, exceeding that of all the compared algorithms. Given these promising results, a set of engineering problems is investigated to further validate the methodology. The results indicate that AEFA-CSR is a solid optimizer with satisfactory performance.

Introduction

In real-world applications, optimization problems are frequently non-differentiable, non-convex and discontinuous. Before metaheuristic optimization techniques became widely used, gradient-based approaches such as gradient descent and the Gauss–Newton technique were commonly employed1,2. Gradient-based optimization methods are prone to becoming trapped in local optima, which reduces the precision of the optimization. On the other hand, metaheuristic optimization algorithms are able to find ideal or nearly ideal solutions in a manageable time. Many researchers have studied these algorithms in order to deal with difficult optimization problems; examples include Genetic Algorithm (GA)3, Particle Swarm Optimization (PSO)4, Hybrid Artificial Humming Bird-Simulated Annealing (HAHA-SA)5, Differential Evolution (DE)6, Hybrid Flow Direction Optimizer-dynamic oppositional based algorithm (HFDO-DOBL)7, Firefly Algorithm (FA)8, Artificial Electric Field Algorithm (AEFA)9,10, Artificial Bee Colony Optimization (ABC)11, Hybrid Heat Transfer Search and Passing Vehicle Search Optimizer (MOHHTS–PVS)12, Cuckoo Search (CS)13, Chaotic Marine Predators Algorithm (CMPA)14 and Nelder Mead-infused INFO algorithm (HINFO-NM)15. Among these algorithms, AEFA has become a focus of research in recent years because of its few parameters and simple principle.

AEFA is a stochastic optimization algorithm based on swarm intelligence. The charged particles interact through the electrostatic force, either attraction or repulsion, and travel along the electrostatic field with the most charged particle leading. When it was first introduced, academics were intrigued by the efficiency of AEFA. It has been used extensively in numerous fields, such as machine learning16,17, assembly lines18, engineering problems19,20, feature selection21 and the economic load dispatch problem22. The leading charged particle controls each iteration of the search process of AEFA.

In multimodal problems, the leading charged particle may occasionally enter a sub-optimal location23. When the leading charged particle becomes stranded in such a location, the whole population is exposed to that local optimum. Population diversity inevitably decreases because the other particles converge strongly toward the leading charged particle. Consequently, the standard AEFA shares the problems of the majority of metaheuristic algorithms, namely a lack of population variety and a tendency to be trapped in local optima.

The aforementioned problems are the main motivation of this study. Therefore, a hybridized version of the Artificial Electric Field Algorithm (AEFA) with Cuckoo Search (CS) using Refraction Learning (RL) is proposed and called AEFA-CSR. In this hybrid version, two search methods with different properties are introduced to provide new solutions in the population. One of them uses the idea of light refraction to learn an opposite solution. This method is proposed in order to enhance the search functionality of the lead particle and broaden its range so that it avoids sub-optimality. Additionally, the CS method is used to increase population variety. Weakening the leadership of the leading particle and allowing particles to identify viable solutions enables particles to move away from other sub-optimal solutions. Used in combination, these strategies noticeably improve the performance of AEFA-CSR.

There are many AEFA variations in the literature. However, to the best of our knowledge, AEFA-CSR is the first variant of AEFA that employs CS and RL. To overcome the limitations of AEFA, CS is chosen specifically to enhance population diversity and RL is applied specifically to increase the ability to escape from multiple local optima. These features allow AEFA-CSR to contribute to the field with enhanced solution quality, including on real-world engineering problems. Each step is investigated individually to obtain enhanced and strengthened features that overcome the limitations of AEFA.

The rest of the paper is structured as follows. In Section “Related work”, related metaheuristic optimization techniques, in particular AEFA variations and their contributions, are given. In Section “Methodology”, the methodology used to form AEFA-CSR is discussed. The algorithms AEFA, CS and RL are elaborated individually, together with the main motivation of this study. In Section “Experimental results”, the results obtained on the benchmark functions by the proposed AEFA-CSR and by its counterparts (well-studied and commonly used algorithms, recently developed ones and hybrid ones) are presented. Additionally, several tests are employed to observe the efficiency of AEFA-CSR and the results are discussed. Moreover, to measure the suitability of the algorithm for real-world engineering design, several design problems are studied and analyzed. Finally, in Section “Conclusion and future work”, the concluding statements and the future work are given.

Related work

Metaheuristic optimization techniques are widely used. These techniques address optimization challenges and can be categorized into three groups: physics-inspired algorithms, evolution-inspired algorithms and swarm-inspired algorithms. Physics-inspired algorithms imitate the physical laws that govern how individuals engage with each other and their search space. These laws include the laws of inertia, light refraction, gravitation and many others24. A few of the popular algorithms in this category are Gravitational Search Algorithm (GSA)25, Colliding Bodies Optimization (CBO)26 and Henry Gas Solubility Optimization (HGSO)27. Evolution-inspired algorithms simulate the natural process of evolution by trying different combinations of individuals to find the best solution. The best individuals are combined to form a new generation, which is the key advantage of this technique. A few of these algorithms are Genetic Algorithm (GA)3, Differential Evolution (DE)6 and Evolutionary Programming (EP)28. Swarm-inspired algorithms aim to reproduce intelligent swarm behaviors like animal grazing and bird flocking. Promising areas of the search space are discovered by a population whose members collaborate and interact. A few of the recent swarm-inspired algorithms are Whale Optimization Algorithm (WOA)29, Seagull Optimization Algorithm (SOA)30, Particle Swarm Optimization (PSO)4, and Harris Hawks Optimization (HHO)31.

Each of the numerous metaheuristic algorithms has its own drawbacks. The balance between local and global search in Grey Wolf Optimizer (GWO) is weak24. Rodríguez et al. investigated the possibility of altering the initial control parameters to improve the exploration process in GWO32. GWO also lacks effective population diversity, so Lou et al. switched from the typical real-valued encoding method to a complex-valued one, which makes the population more diverse33. WOA easily enters local optima and suffers from premature convergence. Using chaotic maps, Oliva et al. modified WOA to prevent the population from entering local optima34. Shi et al. proposed a new chaos-based operator and a new neighbor selection strategy to speed up the convergence of Artificial Bee Colony (ABC), both of which improved the standard ABC35.

AEFA is a physics-inspired algorithm that mimics how a group of charged particles interact and move through the search space. It is simple to implement and has few parameters. As a result, a variety of optimization problems have been successfully solved using this algorithm. Controller design36, multi-objective optimization problems37, soil shear strength prediction38, pattern search39, vehicle routing40 and tumor detection17 are some examples of the research problems solved by AEFA. To improve performance and address the shortcomings of AEFA, numerous academics have developed variants of the original algorithm in recent years. Malisetti and Pamula used the Moth Levy methodology to create the Moth Levy Artificial Electrical Field Algorithm (ML-AEFA) to solve the problem of entering sub-optimality41. An algorithm with a new strategy for velocity update and population initialization, known as the improved Artificial Electrical Field Algorithm (IAEFA), has been introduced in order to enhance the robustness of AEFA in handling complex problems42. Furthermore, because the algorithm focuses primarily on local search, it is unable to perform efficient global exploration across the entire solution space. Cheng et al. used a log-sigmoid function in order to strike a balance between exploration and exploitation16. AEFA with inertia and repulsion, also named improved Artificial Electrical Field Algorithm (IAEFA), was introduced by Bi et al. to avoid premature convergence and improve population diversity43. The Modified Artificial Electrical Field Algorithm (mAEFA) was proposed by Houssein et al.23. In it, Levy flight, a local escaping operator and opposition learning are introduced to avert stagnation in regions of local optima23. Extensive experiments show improvement in the convergence rate and search ability of AEFA.
To attain a better balance between exploitation and exploration, Anita, Yadav and Kumar introduced AEFA for solving constrained optimization problems (AEFA-C) by constraining particle interaction to the border of the search space using new velocity and location updates19. The improved version of AEFA proposed by Demirören et al., the Opposition based Artificial Electrical Field Algorithm (ObAEFA), makes use of the opposition-based learning strategy to improve the exploration capabilities of AEFA36. The improved performance of ObAEFA was vetted through several experiments. Petwal and Rani’s experimental findings show that the proposed algorithm is highly competitive and achieves the desired level of population diversity37. AEFA based on opposition learning is proposed to enhance its global exploration and local development capabilities. The opposition learning strategy is used to increase population diversity and exploitation, while the chaos strategy is used to improve the quality of the initial population; experiments demonstrate the algorithm's superior performance44. Furthermore, a Levy flight mechanism that provides multiple distinct evolutionary strategies and enhances the local search capability was introduced to AEFA by Sinthia and Malathi17. The elitism selection theory ensures that the fittest survive and mutation operators increase population diversity. The performance of the multi strategy Artificial Electrical Field Algorithm (M-AEFA) is enhanced by the dynamic combination of these adaptive strategies.

Hybridizing AEFA with other types of algorithms, such as swarm-inspired or physics-inspired algorithms, to enhance its performance is another area of research interest. The poor exploitation that results from the stochastic nature of AEFA is improved by hybridizing AEFA with the Nelder-Mead (NM) simplex. AEFA performs the global search while NM performs the local search. Tests on popular functions show improved performance20. One of the well-known optimization algorithms, DE, is applied to create an effective hybrid by combining the capabilities of AEFA and DE (AEFA-DE). The performance of the suggested hybrid method is validated on the IEEE Congress on Evolutionary Computation-2019 (CEC-2019) test functions. The experimental findings imply that AEFA-DE performs better than the compared algorithms45.

AEFA is able to conduct a more in-depth search across the solution space using the local search mechanism18. It was discovered that the location of the charged particle and the mutual attraction of the nearby particles influence how the artificial electric field algorithm updates its position. Despite its strong local search ability, AEFA has limited global search capacity. This is because the charged particles have a strong ability to exchange information with one another. The Sine–Cosine Algorithm (SCA) can balance local and global search better than AEFA. As a result, the update mechanism of SCA is incorporated into AEFA (SC-AEFA) by changing the iterative process of the algorithm40.

The major goal of the derivatives of AEFA is to increase search accuracy and convergence speed, in accordance with the numerous improvement methodologies indicated above. As a result, this study presents the AEFA-CSR, an improved Artificial Electric Field Algorithm based on Cuckoo Search (CS) with Refraction Learning (RL).

Methodology

Artificial electrical field algorithm

Coulomb's law states that "electrostatic forces of repulsion or attraction among two different charge particles are in direct proportion with the product of charges and in inverse proportion with the square of the distances between their positions". This idea serves as the basis of AEFA9. In this case, the charged particles are referred to as agents and the charges of the particles are used to evaluate the agents' potentials. All charged particles may experience an electrostatic force, either repelling or attracting, which causes objects to move in the search space. The charges use electrostatic forces to communicate directly and their positions represent candidate solutions. As a result, charges are defined as a function of the population's fitness and the potential solution. Under the electrostatic force of attraction, the charged particles with the least charge are drawn to the charged particles with the most charge. In addition, the solution with the highest charge is considered to be the best46. The pseudocode of AEFA can be seen in Algorithm 1.

Assume \({Y}_{j}=\left({Y}_{j}^{1},{Y}_{j}^{2},\dots ,{{Y}_{j}^{{ D}_{N}}}\right)\forall j=1,2 ,\dots ,N\), where the jth particle has dimension \({D}_{N}\). By employing the location and personal best fitness value of a particular particle, that particle is able to offer the best global fitness value in AEFA. To acquire the optimum position fitness value of any particle \(j\) at interval \(t\), the formulas are expressed below43,

where a particle’s personal best fitness and current position are represented as \({B}_{j}\) and \({Y}_{j}\) respectively. Furthermore, the force exerted on the charge \(l\) by \(j\) at interval \(t\) is given in the following Eq. (2)9,

where \({q}_{l}\) and \({q}_{j}\) are the charges of particles \(l\) and \(j\), expressed as,

\(\mathrm{Worst} \left(t\right)\) and \(\mathrm{Best}(t)\) represent the worst and best fitness among all charges. \(K(t)\) and \(\varepsilon \) denote Coulomb’s constant and a small positive constant, respectively. The Euclidean distance between two independent particles at interval \(t\) is represented as \({DIST}_{jl}(t)\) and is calculated as follows.

In addition, Eq. (6) expresses Coulomb’s constant in terms of the current \({\text{iteration}}\) and \({\text{max }}_{\text{iter}}\). The parameters of Coulomb’s constant are \({K}_{0}\) and \(\gamma \).

where \({\text{max }}_{\text{iter}}\) refers to the total number of iterations preset at the beginning and iter is the current iteration number when computing Coulomb’s constant.
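The quantities introduced so far (particle charge, inter-particle distance and the decaying Coulomb's constant) can be sketched in a few lines. This is a minimal illustration for a minimization problem, assuming the exponential charge mapping and exponential decay commonly used in the AEFA literature; the function names, the equal-fitness guard and the default values `k0=500.0` and `gamma=30.0` are assumptions made for this sketch and are not taken from the paper's parameter table.

```python
import math

def raw_charges(fitness):
    """Map fitness values (lower is better) to charges in [1, e].

    Assumed form: q = exp((fit - worst) / (best - worst)).
    """
    best, worst = min(fitness), max(fitness)
    if best == worst:
        # Assumption: identical fitness everywhere gives identical charge.
        return [1.0] * len(fitness)
    return [math.exp((f - worst) / (best - worst)) for f in fitness]

def distance(y_j, y_l):
    """Euclidean distance DIST_jl between two particle positions."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y_j, y_l)))

def coulomb_constant(iteration, max_iter, k0=500.0, gamma=30.0):
    """Assumed decay: K(t) = K0 * exp(-gamma * iter / max_iter)."""
    return k0 * math.exp(-gamma * iteration / max_iter)
```

Under these assumptions the best particle always receives the largest charge, and K(t) shrinks as the run progresses, so the attraction between particles weakens in later iterations.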

The total electric force on the \(j\)th particle with dimension \({D}_{N}\) is thus stated as follows,

where \(R\) denotes a random number in the range [0, 1]. The charge of an individual particle divided by the total charge of all particles is expressed as \({Q}_{l}\left(t\right)\) as follows.

Equations (9) and (10) give the electric field \(E{F}_{j}^{{D}_{N}}(t)\) and acceleration \({\mathrm{Acc}}_{j}^{{D}_{N}}(t)\) of the jth particle with dimension \({D}_{N}\) at interval \(t\),

where \(M{a}_{j}\left(t\right)\) represents the mass of particle \(j.\) Equations (11) and (12) provide the update equations for the velocity and location of the jth particle as follows,
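The chain described in this subsection (total force, electric field, acceleration, velocity, position) can be illustrated with a single update step. The sketch below is a simplified reading under stated assumptions: each particle is pulled toward the personal-best positions of the others, charges are normalised by their sum, and every particle has unit mass unless a mass list is supplied. The function name `aefa_step` and its argument layout are hypothetical.

```python
import math
import random

def aefa_step(Y, V, B, q, K, eps=1e-12, mass=None, rng=random):
    """One AEFA-style update of positions Y and velocities V.

    Y, V, B: lists of per-particle lists (position, velocity, personal best).
    q: raw charges; K: Coulomb's constant for the current iteration.
    """
    n, d = len(Y), len(Y[0])
    total = sum(q)
    Q = [c / total for c in q]            # normalised charges Q_l(t)
    mass = mass or [1.0] * n              # assumption: unit mass by default
    new_Y, new_V = [], []
    for j in range(n):
        force = [0.0] * d
        for l in range(n):
            if l == j:
                continue
            dist = math.sqrt(sum((Y[j][k] - Y[l][k]) ** 2 for k in range(d)))
            for k in range(d):
                # Coulomb-like pull of particle j toward l's personal best,
                # weighted by a fresh random number in [0, 1).
                f = K * Q[j] * Q[l] * (B[l][k] - Y[j][k]) / (dist + eps)
                force[k] += rng.random() * f
        v_j, y_j = [], []
        for k in range(d):
            field = force[k] / Q[j]           # electric field E = F / Q
            acc = Q[j] * field / mass[j]      # acceleration a = Q * E / M
            v = rng.random() * V[j][k] + acc  # velocity update
            v_j.append(v)
            y_j.append(Y[j][k] + v)           # position update
        new_V.append(v_j)
        new_Y.append(y_j)
    return new_Y, new_V
```

Because every particle is attracted toward the others' best positions with strength proportional to their charge, the highest-charge (best) particle dominates the motion, which is the behaviour the later sections set out to temper.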

Cuckoo search

The aggressive reproductive strategy of some cuckoo species inspired Cuckoo Search (CS)13,47, a metaheuristic algorithm based on swarm intelligence. Three rules regulate CS operations, and the last rule entails adding some fresh random solutions to the process48. This rule is approximated by replacing a fraction \(Pa\) of the \(n\) host nests with new nests. The basic steps of CS follow the cuckoo breeding behavior, which is described in49 and summarized in50. The optimization problem to be tackled is expressed as \(f(Y)\), where nests are represented as \(Y=\) \(\left\{{Y}_{1},{Y}_{2}, {Y}_{3},{\dots Y}_{D}\right\}\) with \(D\) dimensions. Within the designated search space there are \(N\) host nests \(\left\{{Y}_{i},i=1,\dots ,N\right\}\). Each nest \({Y}_{i}=\left\{{Y}_{i1},\dots ,{Y}_{iD}\right\}\) indicates a potential solution to the optimization task at hand. Finding the new population of nests \({Y}_{i}(t+1)\) is one of the algorithm’s crucial phases. The following equation shows the use of the Levy flight to obtain the new nests at time \(t\),

where \(\lambda \) is a Lévy flight parameter, \(\oplus \) is an entry-wise multiplication operation, and \(\alpha \) is the step size. The third rule mentioned above imitates the notion that host birds will abandon a nest if alien eggs are found. In this case, the following method can be used to generate a new nest with probability \(Pa\),

where \({Y}_{y}\left(t\right)\) and \({Y}_{j}\left(t\right)\) are two nests chosen at random from the host nests and \(R\) is a random value in [0,1]. The step-by-step execution of CS can be seen in Algorithm 2.
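A Lévy-flight move of the kind used to generate new nests can be sketched as follows. The paper does not state how the Lévy step is sampled, so this example assumes Mantegna's algorithm, a common choice in Cuckoo Search implementations; the index name `beta`, the default step size `alpha=0.01` and the function names are assumptions for illustration only.

```python
import math
import random

def mantegna_sigma(beta=1.5):
    """Scale of the numerator Gaussian in Mantegna's algorithm."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (num / den) ** (1 / beta)

def levy_step(beta=1.5, rng=random):
    """Draw one heavy-tailed step: u / |v|^(1/beta)."""
    u = rng.gauss(0.0, mantegna_sigma(beta))
    v = rng.gauss(0.0, 1.0)
    while v == 0.0:                 # avoid division by zero
        v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1 / beta)

def levy_move(nest, alpha=0.01, beta=1.5, rng=random):
    """New nest: each coordinate shifted by alpha times a Levy step."""
    return [y + alpha * levy_step(beta, rng) for y in nest]
```

Most draws are small, but occasional very large steps occur, which is what lets Cuckoo Search jump out of a crowded region instead of only refining locally.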

Artificial electric field employing Cuckoo search algorithm with Refraction learning (AEFA-CSR)

The AEFA-CSR algorithm is built on the techniques described above. It combines the benefits of the Artificial Electric Field Algorithm (AEFA) with those of two other methods, Cuckoo Search (CS) and Refraction Learning (RL), and incorporates a sub-optimal avoidance technique. This offers strong global search capabilities as well as the ability to avoid being trapped in local optima. The detailed steps of AEFA-CSR are given in Algorithm 3.

Motivation

An algorithm may become inefficient if it explores excessively. In the same way, excessive exploitation may trap the algorithm in a sub-optimal region prematurely and produce unacceptable results. Consequently, the balance between exploration and exploitation is crucial for an algorithm’s efficiency51. It was discovered that the location of the charged particle and the mutual attraction of the nearby particles drive the position update of the candidate solutions. AEFA has good exploitation ability but limited global search capacity because of the strong interaction among the particles40. AEFA relies solely on the electrostatic force. This way of updating the population quickly draws the other agents to the lead agent, which limits population variety. As a result, AEFA is susceptible to becoming temporarily trapped.

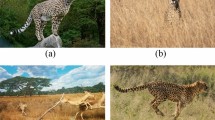

Figure 1 displays the migration of 20 agents on the Sphere function, with the dimension set to 2 for visualization. The upper and lower bounds are set to 10 and −10, and agents are shown at various iterations. The lead agent leads the migration as the other agents begin to traverse the solution space at iteration one. At iteration 10, AEFA starts to collect agents around the ideal area in the problem space as indicated by the lead agent. The strong attraction force discourages exploration by the other particles. This leads to a reduction in population diversity, as seen in Fig. 1. If the lead agent becomes trapped in a local optimum, the other agents will also become trapped. In other words, the lead agent dominates the exploring capabilities of AEFA. The other particles need to become more capable of exploration and exploitation, which requires weakening the leadership of the lead agent. In addition, the lead agent requires a local optimum avoidance approach. This drawback is the motivation for this work.

Cuckoo search nest replacement strategy

The Cuckoo Search (CS) nest replacement operator replaces some nests at random with newly produced solutions to enhance the algorithm's exploration capability. With this replacement operator, the exploration capability of CS is quite strong52,53,54. Because of this strength, the operator is applied to AEFA to compensate for its poor exploration ability.

This process replaces a set of nests with new values based on a probabilistic selection. Any nest \({Y}_{i} \left(i\in \left[1,\dots ,N\right]\right)\) can be chosen with a probability of \({p}_{a}\in [\mathrm{0,1}]\). A uniform random number \(\mathrm{R}\) within [0, 1] is drawn in order to carry out this procedure. When \(\mathrm{R}\) falls below \({p}_{a}\), the nest \({Y}_{i}\) is chosen and adjusted as shown in Eq. (15). Otherwise, \({Y}_{i}\) is unchanged. Eq. (15) is shown as follows,

where \(y\) and \(j\) are random integers from 1 to \(N\) and rand is a random number drawn from a normal distribution.
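The selection rule just described can be illustrated with a short sketch. It assumes the usual Cuckoo Search form of the replacement, in which a selected nest is shifted by a normally distributed factor times the difference of two randomly chosen nests; the function name `replace_nests` and the default `pa=0.25` are illustrative assumptions.

```python
import random

def replace_nests(nests, pa=0.25, rng=random):
    """Return a new population in which each nest is perturbed with
    probability pa and copied unchanged otherwise."""
    n = len(nests)
    out = []
    for nest in nests:
        if rng.random() < pa:              # R falls below pa: nest is chosen
            a = nests[rng.randrange(n)]    # randomly chosen nest Y_y
            b = nests[rng.randrange(n)]    # randomly chosen nest Y_j
            step = rng.gauss(0.0, 1.0)     # normally distributed factor
            out.append([x + step * (p - q) for x, p, q in zip(nest, a, b)])
        else:
            out.append(list(nest))
    return out
```

Because the perturbation is built from differences between existing nests, its magnitude shrinks automatically as the population converges and vanishes entirely when all nests coincide.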

Refraction learning

Refraction Learning (RL) is based on the idea that light rays bend when they pass through an air-to-water transition. As the medium changes, the velocity of the ray changes as well, and the ray bends toward the normal of the boundary. This principle is used to help candidate solutions leave a sub-optimal region while retaining variety55. RL can be considered a more advanced form of opposition-based learning for avoiding sub-optimality. Refraction learning is used in Whale Optimization Algorithm (WOA) and Equalized Grey Wolf Optimizer (EGWO)24,56. In both applications, the statistical results show that local optima are avoided via the RL method. The RL equations are stated as follows,

where \({x}^{*}\) represents a variable in the potential solution and \(\eta \) is the specified refraction index which is expressed as follows,

The refraction absorption index \(k\) is expressed as,

where Fig. 2 depicts the light's refraction with all variables, and \(x\) and \({x}^{^{\prime}}\) denote the incidence point and the refraction point, respectively. The symbols \(\mathrm{LB}\), \(\mathrm{UB}\) and \(O\) denote the lower limit, the upper limit and the center. The parameters \(h\) and \({h}^{{^{\prime}}}\) define the distances from \(x\) to \(O\) and from \({x}^{{^{\prime}}}\) to \(O\). The refracted solution of \({x}^{*}\) is \({x}^{{^{\prime}}*}\).
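As an illustration of how a refracted solution can be computed, the sketch below assumes the form commonly used in the refraction-learning literature, x'* = (LB + UB)/2 + (LB + UB)/(2kη) − x*/(kη). This form is an assumption and may differ in detail from the paper's own equations; the function names are hypothetical.

```python
def refract(x, lb, ub, k=1.0, eta=1.0):
    """Refracted counterpart of a single variable x within [lb, ub].

    Assumed form: (lb + ub)/2 + (lb + ub)/(2*k*eta) - x/(k*eta).
    """
    mid = (lb + ub) / 2.0
    return mid + mid / (k * eta) - x / (k * eta)

def refract_solution(solution, lb, ub, k=1.0, eta=1.0):
    """Apply refraction learning to every variable of a candidate."""
    return [refract(x, lb, ub, k, eta) for x in solution]
```

With k = η = 1 the expression reduces to LB + UB − x, which is ordinary opposition-based learning; larger values of kη pull the refracted point toward the center of the interval.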

Experimental results

Experiments are carried out on 20 standard benchmark functions to confirm the efficiency of AEFA-CSR in solving global optimization functions. The algorithms Artificial Electric Field Algorithm (AEFA)9, Cuckoo Search (CS)47, Differential Evolution (DE)6, Firefly Algorithm (FA)8, Particle Swarm Optimization (PSO)4, Jaya Algorithm (JAYA)57, Hybrid-Flash Butterfly Optimization Algorithm (HFBOA)58, Sand Cat Swarm Optimization (SCSO)59, Salp Swarm Algorithm with Local Escaping Operator (SSALEO)60, Transient Search Optimization (TSO)61 and Chaotic Hybrid Butterfly Optimization Algorithm with Particle Swarm (HPSOBOA)62 were chosen for detailed comparison. They were selected to cover three groups: well-studied and commonly used algorithms, recently developed algorithms that quickly gained attention from researchers, and hybrid algorithms built from powerful optimizers. Each algorithm is tested on the functions in 30 independent trials to assess its problem-solving capability. The Wilcoxon Rank Sum test and the nonparametric Friedman test are used for statistical testing to characterize the differences in the algorithms’ performances63,64. Several parameter combinations are set up to examine the influence of each control parameter on each of the algorithms. Additionally, convergence analysis, overall effectiveness with changing populations and dimensions, exploration and exploitation analyses, and computational complexity tests are conducted. Afterwards, the efficiency of AEFA-CSR is validated on real-world engineering problems: optimization of antenna S-parameters, welded-beam design and compression design.

Benchmark functions

Table 1 displays the fundamental properties of the 20 functions that were chosen for testing. F1 through F7 are unimodal functions that have just one global optimum within the specified boundary and are typically used to gauge an algorithm's ability to exploit promising regions. F8–F20 are multimodal functions: F8–F13 are high-dimensional multimodal functions and F14–F20 are fixed-dimensional multimodal functions. These functions have multiple local extremes within their domains, which makes them suitable for testing global exploration and can cause an algorithm to converge prematurely.

Parameters

The parameter values for each algorithm, provided in Table 2, are those published in the original publications or commonly used in the literature.

Overall effectiveness

In this study, the results from Tables 3, 4, 5, 6 and 7 were used to evaluate the Overall Effectiveness (OE) of AEFA-CSR against that of its counterparts. Equation (21) shows that the OE of each algorithm is determined from the number of test functions and the number of losses60.

where N is the total number of functions and L is the number of losses incurred by an algorithm. In the tables, W and T indicate the number of wins and the number of ties respectively.
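The OE measure can be computed directly from N and L. The sketch below assumes the usual form OE = (N − L) / N × 100%, which is consistent with the OE percentages reported in this study; the function name and the example counts are hypothetical.

```python
def overall_effectiveness(n_functions, losses):
    """Overall Effectiveness in percent: share of functions not lost."""
    if n_functions <= 0:
        raise ValueError("n_functions must be positive")
    return (n_functions - losses) / n_functions * 100.0

# Hypothetical counts: 2 losses on 20 functions.
print(overall_effectiveness(20, 2))
```

Note that ties count in an algorithm's favor under this definition, since only losses reduce the score.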

Dimension analysis

Given that dimensionality has a substantial impact on optimization accuracy, F1 through F13 are expanded from 30 to 100 dimensions to test the algorithms' abilities in solving the problems. The outputs of each algorithm are then assessed. The mean value (Avg) and standard deviation (Std) are used as the assessment indices to give the experiments greater credibility. Avg indicates the quality and accuracy of an algorithm's solutions, while Std indicates its stability. The population size is 30 and the maximum number of iterations for all algorithms is set to 1000.

The experimental findings with a dimension of 30 are displayed in Table 3. The analysis suggests that on the unimodal functions (F1–F7), AEFA-CSR finds the best, near-ideal solutions and outperforms the other algorithms by a large margin. This is attributed to the included RL mechanism, which improves the algorithm's local search and exploitation ability. On the multimodal functions F8–F20, AEFA-CSR performs the best on F8–F12 and F14–F20 and obtains the global optimum for F9 and F11. All of the outcomes produced by AEFA-CSR are superior to those produced by AEFA. This suggests that, with the population variety improved by the CS approach, the algorithm's exploration ability has increased in comparison to AEFA. The results on the fixed-dimension functions also indicate that the algorithm achieves a solid balance between exploitation and exploration.

Tables 3, 4 and 7 show the experimental study for varying dimensions with the population size kept constant at 30. Tables 4, 5 and 6 show the experimental study for varying population sizes with the dimension kept constant at 50. For a fixed population size, as the problem size grows, AEFA-CSR is superior to the other compared algorithms in all cases in terms of Overall Effectiveness (OE), which ranges from 76.93 to 90.0%. Similarly, for a fixed dimension, as the population size grows, AEFA-CSR produces the highest OE values, ranging from 61.53 to 76.93%.

Convergence analysis

The convergence trajectories of 12 algorithms are presented in Fig. 3 to further analyze how well the different algorithms converge while addressing the optimization functions. For functions F1–F13 the dimension is set to 30, while F14–F20 are fixed-dimension functions. The convergence precision of AEFA-CSR is clearly better on the unimodal functions F1–F6. On the multimodal functions F8–F20, AEFA-CSR maintains a high convergence rate and converges to the global optimum on F9, F11, F14, F16 and F20.

The convergence efficiency of AEFA-CSR is noticeably better than that of AEFA. This demonstrates that the population diversification adjustments and the introduction of the RL technique are successful. The experimental findings show that AEFA-CSR has improved optimization capability and convergence performance. Notably, the algorithm’s rapid convergence makes it applicable to optimization problems in which convergence speed is the essential requirement.

Statistical test

Garcia et al. made the point that it is insufficient to compare metaheuristic algorithm performance using only the mean and standard deviation65. Unavoidable chance factors arising during the iterative process affect the experimental outcomes, so statistical tests are needed to account for them66,67. The Wilcoxon Rank Sum test and the Friedman test are used in this study to examine the effectiveness of the algorithms.

The Friedman test is used to evaluate the experiment's validity by comparing the proposed AEFA-CSR to other algorithms. It is one of the most popular and commonly applied statistical tests and is used to find significant differences between the outputs of two or more algorithms68. Table 8 displays the results of the Friedman tests. According to the Friedman test, the algorithm with the lowest rank is considered the most effective. According to the results in Table 8, the suggested AEFA-CSR is always ranked first in the various scenarios (30, 50 and 100 dimensions with the population set to 30). AEFA-CSR therefore has a stronger competitive edge than the other algorithms.
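To make the ranking idea concrete, the following sketch computes the mean Friedman rank of each algorithm and the standard Friedman chi-square statistic (without tie correction) from a table of results. The data layout `scores[f][a]` and the function names are assumptions for this illustration; it is not the procedure or software used to produce Table 8.

```python
def mean_ranks(scores):
    """scores[f][a]: result of algorithm a on function f (lower is better).

    Returns the average rank of each algorithm; tied results share
    the average of the ranks they occupy.
    """
    n_alg = len(scores[0])
    totals = [0.0] * n_alg
    for row in scores:
        order = sorted(range(n_alg), key=lambda a: row[a])
        i = 0
        while i < n_alg:
            j = i
            while j + 1 < n_alg and row[order[j + 1]] == row[order[i]]:
                j += 1
            avg = (i + j) / 2.0 + 1.0      # average 1-based rank of the tie block
            for idx in order[i:j + 1]:
                totals[idx] += avg
            i = j + 1
    return [t / len(scores) for t in totals]

def friedman_statistic(scores):
    """Chi-square statistic: 12N/(k(k+1)) * (sum(Rbar^2) - k(k+1)^2/4)."""
    n, k = len(scores), len(scores[0])
    ranks = mean_ranks(scores)
    return 12.0 * n / (k * (k + 1)) * (sum(r * r for r in ranks) - k * (k + 1) ** 2 / 4.0)
```

The algorithm with the smallest mean rank is the one that most consistently produces the best result across the test functions.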

The significance threshold p for the Wilcoxon Rank Sum test is set at 0.05. The technique is considered statistically better when p < 0.05. Table 9 displays the results of the Wilcoxon Rank Sum test. The symbols +/−/= denote that the proposed approach is better than, worse than or equal to the existing approach67. Table 9 demonstrates that AEFA-CSR consistently yields R+ values that are greater than the R− values. Additionally, Table 9 shows that the p values for six algorithms are less than 0.05, which implies that they are substantially different from AEFA-CSR and that AEFA-CSR is superior to them. Table 8 further reveals that when the dimension is expanded from 30 to 100 with the population set to 30, the + value of AEFA-CSR increases. This indicates that the performance of AEFA-CSR does not decline as that of the other algorithms does. It is able to produce a substantial improvement over the other algorithms as the dimension increases. The findings demonstrate that the suggested AEFA-CSR has a higher level of solution accuracy.
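A pairwise comparison of two algorithms over repeated runs can be sketched with a rank-sum test. The example below uses the large-sample normal approximation without tie correction, which is a simplifying assumption; the function name and the sample data in the usage line are hypothetical and are not results from this study.

```python
import math

def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test (normal approximation).

    Returns (z, p). Tied values receive the average of their ranks.
    """
    pooled = sorted((v, g) for g, sample in enumerate((x, y)) for v in sample)
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        for t in range(i, j + 1):
            ranks[t] = (i + j) / 2.0 + 1.0   # average 1-based rank
        i = j + 1
    n1, n2 = len(x), len(y)
    w = sum(r for r, (_, g) in zip(ranks, pooled) if g == 0)  # rank sum of x
    mean = n1 * (n1 + n2 + 1) / 2.0
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2.0))   # two-sided p value
    return z, p

# Hypothetical best-fitness values from repeated runs of two algorithms.
z, p = rank_sum_test([0.1, 0.2, 0.15, 0.12], [0.5, 0.6, 0.55, 0.7])
print(p < 0.05)
```

A negative z means the first sample tends to hold the smaller values, which for minimization corresponds to the first algorithm performing better.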

In conclusion, AEFA-CSR is more competitive than traditional algorithms such as DE and PSO, innovative algorithms such as FA, JAYA, CS and SCSO, and hybrid algorithms such as HPSOBOA. The newly introduced strategies are to be credited for the proposed algorithm’s greater achievements: the RL strategy improves the mechanism for escaping local optima, and CS lessens the dominance of the lead agent. Therefore, combining the two approaches significantly enhances the ability of AEFA to solve multimodal and unimodal functions.

Sensitivity analysis to parameters

The sensitivity of AEFA-CSR to its parameters is also assessed in this part to investigate the impact of the various parameters. Population size, iteration number and dimension are maintained at 30, 1000 and 30 throughout the experiment. The starting values of \(k\) and \(\eta \) in Eqs. (15) and (16) are set to 1 and that of \(Pa\) to 0.25, and they are then altered throughout the test. The parameter \(Pa\) is varied within the range [0, 1], for which the author of CS suggests a value of 0.25, while the parameters \(k\) and \(\eta \) are varied within [1, 1000]47. As indicated in Table 10, eight variations of AEFA-CSR are developed, each of which represents a combination of parameter values. Note that these settings can be changed to fit the particular problem. It can also be noted that the values of 1000, 1000 and 0.1 for \(k\), \(\eta \) and \(Pa\) are used in the earlier experiments. The variation of AEFA-CSR with these parameters performed best based on the Friedman rank.

As seen in Table 10, AEFA-CSR with the parameters set as \(k\) = 1, \(\eta \) = 1 and \(Pa\) = 0.25 finds poorer results on all of the test functions than with \(k\) = 1000, \(\eta \) = 1000 and \(Pa\) = 0.1, with the exception of F1, F13 and F14-F20, where the results are comparable. This implies that for the high-dimensional functions (F1-F13), the setting \(k\) = 1000, \(\eta \) = 1000 and \(Pa\) = 0.1 is more robust and handles them with more accuracy. Table 10 also shows that with \(k\) = 1000, \(\eta \) = 1000 and \(Pa\) = 0.25, the performance of AEFA-CSR is comparable to that with \(k\) = 10, \(\eta \) = 10 and \(Pa\) = 0.25 and with \(k\) = 100, \(\eta \) = 100 and \(Pa\) = 0.25, with the exception of F8, F9 and F12, where the performance of \(k\) = 10, \(\eta \) = 10 and \(Pa\) = 0.25 differs. Additionally, varying \(Pa\) from 0.1 to 0.3 while keeping \(k\) and \(\eta \) at 1000 makes no significant difference to the strong performance of AEFA-CSR. From Table 11, it can be seen from the p values that there is no significant difference between the best set of parameters, \(k\) = \(\eta \) = 1000 and \(Pa\) = 0.1, and the settings \(k\) = \(\eta \) = 100 and \(Pa\) = 0.25, \(k\) = \(\eta \) = 1000 and \(Pa\) = 0.25, \(k\) = \(\eta \) = 1000 and \(Pa\) = 0.2, and \(k\) = \(\eta \) = 1000 and \(Pa\) = 0.3.

The average objective function values over 30 independent runs are illustrated for all test functions in Fig. 4 for various combinations of \(k\), \(\eta \) and \(Pa\). As shown in Fig. 4, the parameter combinations with \(k\) and \(\eta \) = 1000 show comparably good outcomes for the majority of test functions. In cases where \(k\) and \(\eta \) are set to 1 or 10, AEFA-CSR tends to perform poorly compared to when \(k\) and \(\eta \) = 1000. The illustrated convergence trajectories indicate that, to attain the best performance for AEFA-CSR, \(k\) and \(\eta \) should be tuned to a relatively large value, ideally 1000.

Exploration and exploitation analysis

Exploration is the ability of an optimization algorithm to pursue diverse solutions in unexplored areas, while exploitation is its ability to pursue solutions around the optimum solution of a problem. Since F1 and F2 are unimodal functions, they are well suited to observing the algorithm’s exploitation ability. Similarly, F10 and F12 are multimodal functions with multiple local optima, so they are suitable for measuring the exploration ability of the algorithm.

When the dimension increases, the number of local optima in the multimodal functions increases drastically. As indicated in Tables 3, 4 and 7, the proposed AEFA-CSR produced better results for the dimensions 30, 50 and 100. This is a good indication that the algorithm overcomes the multiple local optima and reaches the global optimum, which is achieved by a balanced exploration and exploitation.

Additionally, Fig. 5 shows the exploration and exploitation stages of AEFA-CSR graphically. For the functions analyzed in this figure, the algorithm starts with broad exploration and narrow exploitation. As the optimization process goes on, a balance between the two is established.
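One common way to quantify exploration and exploitation over a run is a dimension-wise diversity measure, in which the diversity of each iteration is compared with the maximum diversity seen during the run. The sketch below assumes that measure; the source does not state how Fig. 5 was produced, and the function names and the small `history` example are hypothetical.

```python
from statistics import median


def diversity(population):
    """Mean absolute distance of the agents from the per-dimension median."""
    n = len(population)
    d = len(population[0])
    total = 0.0
    for j in range(d):
        column = [agent[j] for agent in population]
        med = median(column)
        total += sum(abs(med - v) for v in column) / n
    return total / d


def exploration_exploitation(history):
    """history is a list of populations, one per iteration.

    Returns a list of (exploration %, exploitation %) pairs, one per iteration.
    """
    divs = [diversity(pop) for pop in history]
    div_max = max(divs)
    if div_max == 0:
        # All agents coincide in every iteration: treat as pure exploitation.
        return [(0.0, 100.0) for _ in divs]
    return [(100 * dv / div_max, 100 * abs(dv - div_max) / div_max) for dv in divs]


# Hypothetical 2-agent, 2-dimensional population that contracts over 3 iterations.
history = [
    [[0, 0], [4, 4]],
    [[1, 1], [3, 3]],
    [[2, 2], [2, 2]],
]
print(exploration_exploitation(history))
```

In this toy run the exploration share falls as the agents converge, which mirrors the qualitative behaviour described for Fig. 5.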

Computational complexity

Computational complexity, expressed in Big O notation, is one of the metrics used to evaluate the performance of metaheuristics. As shown in Algorithm 3, there is only one loop, and it is considered as \(O(N)\), where \(N\) is the number of agents in the population. The entire complexity, which includes moving the agents towards the optimum solution by calculating the fitness values, is considered as \(O({\text{max}}_{\text{iteration}} \times N \times D)\), where \({\text{max}}_{\text{iteration}}\) is the number of iterations and \(D\) is the dimension.
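The product form of this bound can be illustrated by counting coordinate updates in a generic population loop. The sketch below is only a skeleton: the random perturbation is a placeholder and is not the AEFA-CSR update rule, and the function name and arguments are hypothetical.

```python
import random


def count_coordinate_updates(max_iteration, n_agents, dim, seed=0):
    """Count how many coordinate updates a generic population-based loop performs."""
    rng = random.Random(seed)
    population = [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(n_agents)]
    updates = 0
    for _ in range(max_iteration):      # outer loop: iterations
        for agent in population:        # middle loop: N agents
            for j in range(dim):        # inner loop: D coordinates
                agent[j] += rng.uniform(-0.1, 0.1)  # placeholder move only
                updates += 1
    return updates


print(count_coordinate_updates(10, 5, 3))
```

Doubling any one of the three arguments doubles the count, which is what the \(O({\text{max}}_{\text{iteration}} \times N \times D)\) expression states.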

AEFA-CSR is compared with its competitors with respect to computational time in Table 12. Looking at AEFA and CS separately, it is clear that these algorithms require higher CPU time than the others. Since the proposed AEFA-CSR is a combination of AEFA, CS and RL, each of these methods needs to be carried out individually during the optimization process. Therefore, because of its complex nature, the CPU time of AEFA-CSR is not always better than that of the compared methods. Overall, AEFA-CSR requires more computational time, but its efficiency is well ahead of these algorithms. Considering the significant contributions of AEFA-CSR, even in real engineering problems, a trade-off can be struck between the high accuracy and the amount of time required to solve the problems.

Engineering problems application

Optimization of antenna S-parameters

The suitability and efficiency of the algorithm in solving engineering problems are shown in this section. To demonstrate its suitability, a test suite made up of eight test problems for antenna design is chosen, and the results are compared to those of other algorithms. The test suite consists of several formulas, known as test functions, aimed at analyzing different antenna design problems69,70. These enable effective assessment of an algorithm's performance.

It is not practical to evaluate optimization algorithms using electromagnetic simulation, since simulating an antenna often takes a lot of time. Therefore, it is desirable to have an effective test suite with analytical test functions for pre-testing antenna optimization techniques. Several authors have proposed test suites69,70,71. However, test suites intended for antenna parameter optimization are rarely researched. A suitable test suite for antenna design can represent the features of many antenna types using various types of formulas. Zhang et al.72 proposed a test suite whose objective functions cover diverse characteristics of various types of antenna problems72,73, as displayed in Table 13.

The dimensions of the test functions F21–F24 and F26–F28 are set to 8 as suggested by Zhang et al.72; F25 is the exception, being a non-scalable test function. The parameters of each algorithm are maintained as in Table 2, with a population size of 20 and 500 iterations.

The average (Avg) and standard deviation (Std) for each test function across 30 runs are shown in Table 14, and Fig. 6 displays the averaged convergence curves. In comparison to CS, PSO, DE and JAYA, AEFA-CSR converges faster for F21 and F23, which represent the single-antenna design problem. Obtaining the global optimal value for these functions also suggests that AEFA-CSR is effective in addressing the single-antenna design problem. When compared to the other algorithms, AEFA-CSR has high efficiency for F22, F23 and F24, which depict multi-antenna properties, and it is also able to escape the sub-optimality in F25. Because a multi-antenna problem typically exhibits the features of F22, F23, F24 and F25 concurrently, we may assume that AEFA-CSR is well equipped to solve such problems. The performance of AEFA-CSR on F26, F27 and F28, which have the isolation characteristic of multi-antenna systems, is comparable to that on F25. The primary factors in AEFA-CSR's success are the ability of refraction learning to cope with complicated landscapes with several local extrema and steep, long, narrow valleys, and the improved population diversity provided by CS.

Welded beam design problem

As a further validation of the performance of AEFA-CSR on real-world optimization problems, the well-known welded beam design (WBD) problem is chosen, which was formulated by Rao74 and is used in the CEC 2020 test function suite75. The welded beam design problem has several design parameters, as outlined in55. There are four design variables that need to be determined: \({x}_{1},{x}_{2},{x}_{3}\) and \({x}_{4}\). The objective of WBD optimization is to reduce the overall cost under specific restrictions. The restrictions concern the buckling critical load \(PC\), the bending stress \(\sigma \), the shear stress \(\tau \), the beam deflection \(\delta \) and the tail of the beam. The objective function, which needs to be minimized, is given in Eq. (22).

The objective function is subject to the constraints given in Eqs. (23) to (29).

where

with the constants \(P=6000\ \mathrm{lb}\), \(L=14\ \mathrm{in}\), \(E=30\times {10}^{6}\ \mathrm{psi}\), \(G=12\times {10}^{6}\ \mathrm{psi}\), \({\tau }_{\mathrm{max}}=13{,}600\ \mathrm{psi}\), \({\sigma }_{\mathrm{max}}=30{,}000\ \mathrm{psi}\) and \({\delta }_{\mathrm{max}}=0.25\ \mathrm{in}\).

The boundaries of the variables are given as;

The results are compared across all the algorithms used in the experiments: AEFA, CS, DE, FA, PSO, JAYA, HFBOA, SSALEO, TSO, HPSOBOA and SCSO. The population size is 30, and each algorithm is run independently 20 times with a maximum of 500 iterations.

Table 15 shows the best value obtained by each of the twelve methods on the welded beam design problem. It indicates that AEFA-CSR performs better than the other methods on this problem, obtaining the lowest cost of 1.695258.
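For orientation, the cost and bending-stress terms of the welded beam problem can be evaluated as in the sketch below. It assumes the commonly cited form of the problem and may not match the authors' Eqs. (22) to (29) term by term; only the constants \(P\), \(L\) and \({\sigma }_{\mathrm{max}}\) come from the text above. The shear, deflection and buckling terms are left out because they differ slightly between published variants. The design point `x_example` is an illustrative assumption and is not taken from Table 15.

```python
# Constants stated in the text.
P = 6000.0           # load, lb
L = 14.0             # beam length, in
SIGMA_MAX = 30000.0  # allowable bending stress, psi


def welded_beam_cost(x):
    """Fabrication cost in the commonly cited welded beam formulation (assumed)."""
    x1, x2, x3, x4 = x
    return 1.10471 * x1 ** 2 * x2 + 0.04811 * x3 * x4 * (14.0 + x2)


def bending_stress(x):
    """Bending stress in the beam, sigma = 6PL / (x4 * x3^2) (assumed form)."""
    _, _, x3, x4 = x
    return 6.0 * P * L / (x4 * x3 ** 2)


# Illustrative design point (hypothetical, for demonstration only).
x_example = (0.205730, 3.253120, 9.036624, 0.205730)
print(welded_beam_cost(x_example), bending_stress(x_example))
```

At this point the cost evaluates to roughly 1.695 and the bending stress lies very close to its limit, which suggests that the bending constraint is active near low-cost designs.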

Tension/compression spring optimization design problem

The optimization objective of the tension/compression spring design problem is to minimize the spring weight60. It is a continuous constrained problem whose variables are the wire diameter d, the average coil diameter D and the effective coil number P. The constraints are minimal deviation (g1), shear stress (g2), shock frequency (g3) and outside diameter limit (g4). The objective function and constraint equations are given below.

For decision variables, boundaries are given as,

The results are compared across all the algorithms used in the experiments: AEFA, CS, DE, FA, PSO, JAYA, HFBOA, SSALEO, TSO, HPSOBOA and SCSO. The population size is 30, and each algorithm is run independently 20 times with a maximum of 500 iterations.

The findings in Table 16 show that the weights obtained by all the algorithms are relatively low, which puts the algorithms' precision in solving engineering problems to the test. Among the algorithms considered, AEFA-CSR produced the lowest weight, 0.012663.
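The spring problem can be sketched in code as follows, assuming the commonly cited formulation of the objective and of g1 to g4; this may differ from the authors' equations. The variable order (d, D, P) follows the text, while the static penalty wrapper and the design point `x_example` are illustrative assumptions, since the source does not describe how constraints are handled.

```python
def spring_weight(x):
    """Spring weight (P + 2) * D * d^2 in the commonly cited formulation (assumed)."""
    d, D, P = x
    return (P + 2.0) * D * d ** 2


def spring_constraints(x):
    """Constraint values g1..g4; a design is feasible when every value is <= 0."""
    d, D, P = x
    g1 = 1.0 - (D ** 3 * P) / (71785.0 * d ** 4)                     # minimal deviation
    g2 = ((4.0 * D ** 2 - d * D) / (12566.0 * (D * d ** 3 - d ** 4))
          + 1.0 / (5108.0 * d ** 2) - 1.0)                           # shear stress
    g3 = 1.0 - 140.45 * d / (D ** 2 * P)                             # shock frequency
    g4 = (d + D) / 1.5 - 1.0                                         # outside diameter
    return [g1, g2, g3, g4]


def penalized_weight(x, penalty=1e6):
    """Static penalty: add penalty * (violation)^2 for each violated constraint."""
    violation = sum(max(0.0, g) ** 2 for g in spring_constraints(x))
    return spring_weight(x) + penalty * violation


# Illustrative design point (hypothetical, for demonstration only).
x_example = (0.051689, 0.356718, 11.288966)
print(spring_weight(x_example), spring_constraints(x_example))
```

At this point the weight is about 0.01267, and g1 and g2 are close to zero, so a metaheuristic minimizing `penalized_weight` would be searching along the boundary of the feasible region.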

To measure the effect of the hybridization applied to AEFA, the following tests are carried out: overall effectiveness under changing dimension and population, convergence analysis, Wilcoxon rank-sum and Friedman statistical tests, sensitivity analysis, exploration and exploitation analysis and computational complexity. Additionally, its performance is validated on a set of real engineering design problems. All analyses show that CS significantly increases the population diversity while RL updates the lead agent. Therefore, the algorithm gets closer to the global optimum at each iteration, which is a result of a successfully built balance between exploration and exploitation.

Conclusion and future work

This article proposes AEFA-CSR, a solid optimizer that solves engineering optimization problems with satisfactory performance. Comprehensive experimental analyses are conducted against commonly used, recently developed and hybrid algorithms on a benchmark test suite of 20 problems and three engineering design problems. The proposed AEFA-CSR is observed to be superior to the compared algorithms in terms of overall effectiveness. For increasing population size, the overall effectiveness of the algorithm is between 61.53 and 76.93%. As the dimension grows, the overall effectiveness is between 76.93 and 90.0%. In the Wilcoxon Rank Sum statistical test for different combinations of control parameters, AEFA-CSR attained better performance than the other algorithms. However, in terms of computational time, the results are not as good as desired, since the algorithm is a combination of three separate methods. Although the running time of AEFA-CSR is slightly more than that of the others, it is still acceptable, and the algorithm produces more accurate and efficient results than the others for all the functions analyzed. As future work, the computational time results can be analyzed for further improvements. Considering the important contributions of AEFA-CSR, a balance might be struck between high accuracy and computational time. Apart from this, it can be said with confidence that AEFA-CSR is a promising optimization algorithm that is applicable to real-world engineering problems.

Data availability

The data obtained through the experiments are available upon a reasonable request from the first author O.R.A.

References

Hjalmarsson, H. Iterative feedback tuning: An overview. Int. J. Adapt. Control Signal Process. 16(5), 373–395. https://doi.org/10.1002/acs.714 (2002).

Del Ser, J. et al. Bio-inspired computation: Where we stand and what’s next. Swarm Evol. Comput. 48, 220–250. https://doi.org/10.1016/j.swevo.2019.04.008 (2019).

Holland, J. H. Genetic algorithms. Sci. Am. 267(1), 66–72. https://doi.org/10.1038/scientificamerican0792-66 (1992).

Kennedy, J. & Eberhart, R. Particle swarm optimization. In Proceedings of ICNN’95: International Conference on Neural Networks, vol. 4, 1942–1948 (1995). https://doi.org/10.1109/ICNN.1995.488968.

Yildiz, B. S. et al. A new hybrid artificial hummingbird-simulated annealing algorithm to solve constrained mechanical engineering problems. Mater. Test. 64(7), 1043–1050. https://doi.org/10.1515/mt-2022-0123 (2022).

Storn, R. & Price, K. Differential evolution: A simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 11(4), 341–359. https://doi.org/10.1023/A:1008202821328 (1997).

Yildiz, B. S. et al. A novel hybrid flow direction optimizer-dynamic oppositional based learning algorithm for solving complex constrained mechanical design problems. Mater. Test. 65(1), 134–143. https://doi.org/10.1515/mt-2022-0183 (2023).

Yang, X.-S. Firefly algorithm, Lévy flights and global optimization. In Research and Development in Intelligent Systems XXVI, London, 209–218 (2010). https://doi.org/10.1007/978-1-84882-983-1_15.

Anita, A. & Yadav, A. AEFA: Artificial electric field algorithm for global optimization. Swarm Evol. Comput. 48, 93–108. https://doi.org/10.1016/j.swevo.2019.03.013 (2019).

Adegboye, O. R. & Ülker, E. D. A quick performance assessment for artificial electric field algorithm. In 2022 International Congress on Human-Computer Interaction, Optimization and Robotic Applications (HORA), 1–5 (2022). https://doi.org/10.1109/HORA55278.2022.9799867.

Xiao, S. et al. Artificial bee colony algorithm based on adaptive neighborhood search and Gaussian perturbation. Appl. Soft Comput. 100, 106955. https://doi.org/10.1016/j.asoc.2020.106955 (2021).

Kumar, S., Tejani, G. G., Pholdee, N., Bureerat, S. & Mehta, P. Hybrid heat transfer search and passing vehicle search optimizer for multi-objective structural optimization. Knowl. Based Syst. 212, 106556. https://doi.org/10.1016/j.knosys.2020.106556 (2021).

Yang, X.-S. & Deb, S. Cuckoo search via Lévy flights. World Congress Nat. Biol. Inspired Comput. (NaBIC) 2009, 210–214. https://doi.org/10.1109/NABIC.2009.5393690 (2009).

Kumar, S. et al. Chaotic marine predators algorithm for global optimization of real-world engineering problems. Knowl. Based Syst. 261, 110192. https://doi.org/10.1016/j.knosys.2022.110192 (2023).

Mehta, P. et al. A Nelder Mead-infused INFO algorithm for optimization of mechanical design problems. Mater. Test. 64(8), 1172–1182. https://doi.org/10.1515/mt-2022-0119 (2022).

Cheng, J., Xu, P. & Xiong, Y. An improved artificial electric field algorithm and its application in neural network optimization. Comput. Electr. Eng. 101, 108111. https://doi.org/10.1016/j.compeleceng.2022.108111 (2022).

Sinthia, P. & Malathi, M. Cancer detection using convolutional neural network optimized by multistrategy artificial electric field algorithm. Int. J. Imaging Syst. Technol. 31(3), 1386–1403. https://doi.org/10.1002/ima.22530 (2021).

Niroomand, S. Hybrid artificial electric field algorithm for assembly line balancing problem with equipment model selection possibility. Knowl. Based Syst. 219, 106905. https://doi.org/10.1016/j.knosys.2021.106905 (2021).

Anita, A., Yadav, A. & Kumar, N. Artificial electric field algorithm for engineering optimization problems. Expert Syst. Appl. 149, 113308. https://doi.org/10.1016/j.eswa.2020.113308 (2020).

Izci, D., Ekinci, S., Orenc, S. & Demirören, A. Improved artificial electric field algorithm using Nelder-Mead simplex method for optimization problems. In 2020 4th International Symposium on Multidisciplinary Studies and Innovative Technologies (ISMSIT), 1–5 (2020). https://doi.org/10.1109/ISMSIT50672.2020.9255255.

Das, H., Naik, B. & Behera, H. S. Optimal selection of features using artificial electric field algorithm for classification. Arab. J. Sci. Eng. 46(9), 8355–8369. https://doi.org/10.1007/s13369-021-05486-x (2021).

Anita, A., Yadav, A. & Kumar, N. Application of artificial electric field algorithm for economic load dispatch problem. In Proceedings of the 11th International Conference on Soft Computing and Pattern Recognition (SoCPaR 2019), 71–79 (2021). https://doi.org/10.1007/978-3-030-49345-5_8.

Houssein, E. H., Hashim, F. A., Ferahtia, S. & Rezk, H. An efficient modified artificial electric field algorithm for solving optimization problems and parameter estimation of fuel cell. Int. J. Energy Res. 45(14), 20199–20218. https://doi.org/10.1002/er.7103 (2021).

Sun, L., Feng, B., Chen, T., Zhao, D. & Xin, Y. Equalized grey wolf optimizer with refraction opposite learning. Comput. Intell. Neurosci. 2022, e2721490. https://doi.org/10.1155/2022/2721490 (2022).

Rashedi, E., Nezamabadi-pour, H. & Saryazdi, S. GSA: A gravitational search algorithm. Inf. Sci. 179(13), 2232–2248. https://doi.org/10.1016/j.ins.2009.03.004 (2009).

Kaveh, A. & Mahdavi, V. R. Colliding bodies optimization method for optimum discrete design of truss structures. Comput. Struct. 139, 43–53. https://doi.org/10.1016/j.compstruc.2014.04.006 (2014).

Hashim, F. A., Houssein, E. H., Mabrouk, M. S., Al-Atabany, W. & Mirjalili, S. Henry gas solubility optimization: A novel physics-based algorithm. Future Gener. Comput. Syst. 101, 646–667. https://doi.org/10.1016/j.future.2019.07.015 (2019).

Fogel, G. B. Evolutionary programming. In Handbook of Natural Computing (eds Rozenberg, G. et al.) 699–708 (Springer, 2012). https://doi.org/10.1007/978-3-540-92910-9_23.

Mirjalili, S. & Lewis, A. The Whale optimization algorithm. Adv. Eng. Softw. 95, 51–67. https://doi.org/10.1016/j.advengsoft.2016.01.008 (2016).

Dhiman, G. & Kumar, V. Seagull optimization algorithm: Theory and its applications for large-scale industrial engineering problems. Knowl. Based Syst. 165, 169–196. https://doi.org/10.1016/j.knosys.2018.11.024 (2019).

Heidari, A. A. et al. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 97, 849–872. https://doi.org/10.1016/j.future.2019.02.028 (2019).

Rodríguez, L., Castillo, O. & Soria, J. Grey wolf optimizer with dynamic adaptation of parameters using fuzzy logic. IEEE Congress Evol. Comput. (CEC) 2016, 3116–3123. https://doi.org/10.1109/CEC.2016.7744183 (2016).

Luo, Q., Zhang, S., Li, Z. & Zhou, Y. A novel complex-valued encoding grey wolf optimization algorithm. Algorithms 9, 1. https://doi.org/10.3390/a9010004 (2016).

Oliva, D., Abd El Aziz, M. & Ella Hassanien, A. Parameter estimation of photovoltaic cells using an improved chaotic whale optimization algorithm. Appl. Energy 200, 141–154. https://doi.org/10.1016/j.apenergy.2017.05.029 (2017).

Shi, Y., Pun, C.-M., Hu, H. & Gao, H. An improved artificial bee colony and its application. Knowl. Based Syst. 107, 14–31. https://doi.org/10.1016/j.knosys.2016.05.052 (2016).

Demirören, A., Ekinci, S., Hekimoğlu, B. & Izci, D. Opposition-based artificial electric field algorithm and its application to FOPID controller design for unstable magnetic ball suspension system. Eng. Sci. Technol. Int. J. 24(2), 469–479. https://doi.org/10.1016/j.jestch.2020.08.001 (2021).

Petwal, H. & Rani, R. An improved artificial electric field algorithm for multi-objective optimization. Processes 8, 5. https://doi.org/10.3390/pr8050584 (2020).

Cao, M.-T., Hoang, N.-D., Nhu, V. H. & Bui, D. T. An advanced meta-learner based on artificial electric field algorithm optimized stacking ensemble techniques for enhancing prediction accuracy of soil shear strength. Eng. Comput. 38(3), 2185–2207. https://doi.org/10.1007/s00366-020-01116-6 (2022).

Alanazi, A. & Alanazi, M. Artificial electric field algorithm-pattern search for many-criteria networks reconfiguration considering power quality and energy not supplied. Energies 15(14), 14. https://doi.org/10.3390/en15145269 (2022).

Zheng, H. et al. An enhanced artificial electric field algorithm with sine cosine mechanism for logistics distribution vehicle routing. Appl. Sci. 12(12), 12. https://doi.org/10.3390/app12126240 (2022).

Malisetti, N. & Pamula, V. K. Energy efficient cluster based routing for wireless sensor networks using moth levy adopted artificial electric field algorithm and customized grey wolf optimization algorithm. Microprocess. Microsyst. 93, 104593. https://doi.org/10.1016/j.micpro.2022.104593 (2022).

Sobhanam, A. P., Mary, P. M., Mariasiluvairaj, W. I. & Wilson, R. D. Automatic generation control using an improved artificial electric field in multi-area power system. IETE J. Res. https://doi.org/10.1080/03772063.2021.1958076 (2021).

Bi, J., Zhou, Y., Tang, Z. & Luo, Q. Artificial electric field algorithm with inertia and repulsion for spherical minimum spanning tree. Appl. Intell. 52(1), 195–214. https://doi.org/10.1007/s10489-021-02415-1 (2022).

Tian, Y. et al. Improved artificial electric field algorithm based on multi-strategy and its application. Informatica 46(3), 3 (2022).

Chauhan, D. & Yadav, A. A hybrid of artificial electric field algorithm and differential evolution for continuous optimization problems. In Proceedings of 7th International Conference on Harmony Search, Soft Computing and Applications, 507–520 (2022). https://doi.org/10.1007/978-981-19-2948-9_49.

Anita, A. & Yadav, A. Discrete artificial electric field algorithm for high-order graph matching. Appl. Soft Comput. 92, 106260. https://doi.org/10.1016/j.asoc.2020.106260 (2020).

Yang, X.-S. & Deb, S. Engineering Optimisation by Cuckoo Search. (2010). https://doi.org/10.48550/arXiv.1005.2908.

Yang, X.-S. & Deb, S. Multiobjective cuckoo search for design optimization. Comput. Oper. Res. 40(6), 1616–1624. https://doi.org/10.1016/j.cor.2011.09.026 (2013).

Mareli, M. & Twala, B. An adaptive cuckoo search algorithm for optimisation. Appl. Comput. Inform. 14(2), 107–115. https://doi.org/10.1016/j.aci.2017.09.001 (2018).

Abed-alguni, B. H. & Paul, D. J. Hybridizing the cuckoo search algorithm with different mutation operators for numerical optimization problems. J. Intell. Syst. 29(1), 1043–1062. https://doi.org/10.1515/jisys-2018-0331 (2020).

Chou, Y.-H., Kuo, S.-Y., Yang, L.-S. & Yang, C.-Y. Next generation metaheuristic: Jaguar algorithm. IEEE Access 6, 9975–9990. https://doi.org/10.1109/ACCESS.2018.2797059 (2018).

Wang, G.-G., Gandomi, A. H., Yang, X.-S. & Alavi, A. H. A new hybrid method based on krill herd and cuckoo search for global optimisation tasks. Int. J. Bio-Inspired Comput. 8(5), 286–299. https://doi.org/10.1504/IJBIC.2016.079569 (2016).

Alkhateeb, F. & Abed-alguni, B. H. A hybrid cuckoo search and simulated annealing algorithm. J. Intell. Syst. 28(4), 683–698. https://doi.org/10.1515/jisys-2017-0268 (2019).

Cuevas, E. & Reyna-Orta, A. A cuckoo search algorithm for multimodal optimization. Sci. World J. 2014, 1–20. https://doi.org/10.1155/2014/497514 (2014).

Zhang, Q. et al. A novel chimp optimization algorithm with refraction learning and its engineering applications. Algorithms 15, 6. https://doi.org/10.3390/a15060189 (2022).

Long, W., Wu, T., Jiao, J., Tang, M. & Xu, M. Refraction-learning-based whale optimization algorithm for high-dimensional problems and parameter estimation of PV model. Eng. Appl. Artif. Intell. 89, 103457. https://doi.org/10.1016/j.engappai.2019.103457 (2020).

Venkata Rao, R. Jaya: A simple and new optimization algorithm for solving constrained and unconstrained optimization problems. Int. J. Ind. Eng. Comput. 7, 19–34. https://doi.org/10.5267/j.ijiec.2015.8.004 (2016).

Zhang, M., Wang, D. & Yang, J. Hybrid-flash butterfly optimization algorithm with logistic mapping for solving the engineering constrained optimization problems. Entropy 24, 4. https://doi.org/10.3390/e24040525 (2022).

Seyyedabbasi, A. & Kiani, F. Sand cat swarm optimization: A nature-inspired algorithm to solve global optimization problems. Eng. Comput. https://doi.org/10.1007/s00366-022-01604-x (2022).

Qaraad, M. et al. Comparing SSALEO as a scalable large scale global optimization algorithm to high-performance algorithms for real-world constrained optimization benchmark. IEEE Access 10, 95658–95700. https://doi.org/10.1109/ACCESS.2022.3202894 (2022).

Qais, M. H., Hasanien, H. M. & Alghuwainem, S. Transient search optimization: A new meta-heuristic optimization algorithm. Appl. Intell. 50(11), 3926–3941. https://doi.org/10.1007/s10489-020-01727-y (2020).

Zhang, M., Long, D., Qin, T. & Yang, J. A chaotic hybrid butterfly optimization algorithm with particle swarm optimization for high-dimensional optimization problems. Symmetry 12(11), 11. https://doi.org/10.3390/sym12111800 (2020).

Meddis, R. Unified analysis of variance by ranks. Br. J. Math. Stat. Psychol. 33(1), 84–98. https://doi.org/10.1111/j.2044-8317.1980.tb00779.x (1980).

Wilcoxon, F. Individual comparisons by ranking methods. In Breakthroughs in Statistics (eds Kotz, S. & Johnson, N. L.) 196–202 (Springer, 1992). https://doi.org/10.1007/978-1-4612-4380-9_16.

García, S., Molina, D., Lozano, M. & Herrera, F. A study on the use of non-parametric tests for analyzing the evolutionary algorithms’ behaviour: A case study on the CEC’2005 special session on real parameter optimization. J. Heuristics 15(6), 617. https://doi.org/10.1007/s10732-008-9080-4 (2008).

Fan, Q. et al. Beetle antenna strategy based grey wolf optimization. Expert Syst. Appl. 165, 113882. https://doi.org/10.1016/j.eswa.2020.113882 (2021).

Derrac, J., García, S., Molina, D. & Herrera, F. A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol. Comput. 1(1), 3–18. https://doi.org/10.1016/j.swevo.2011.02.002 (2011).

Zamani, H., Nadimi-Shahraki, M. H. & Gandomi, A. H. CCSA: Conscious neighborhood-based crow search algorithm for solving global optimization problems. Appl. Soft Comput. 85, 105583. https://doi.org/10.1016/j.asoc.2019.105583 (2019).

Gee, S. B., Tan, K. C. & Abbass, H. A. A benchmark test suite for dynamic evolutionary multiobjective optimization. IEEE Trans. Cybern. 47(2), 461–472. https://doi.org/10.1109/TCYB.2016.2519450 (2017).

Liu, B., Yang, H. & Lancaster, M. J. Synthesis of coupling matrix for diplexers based on a self-adaptive differential evolution algorithm. IEEE Trans. Microw. Theory Tech. 66(2), 813–821. https://doi.org/10.1109/TMTT.2017.2772855 (2018).

Wang, H., Jin, Y. & Doherty, J. A generic test suite for evolutionary multifidelity optimization. IEEE Trans. Evol. Comput. 22(6), 836–850. https://doi.org/10.1109/TEVC.2017.2758360 (2018).

Zhang, Z., Chen, H., Jiang, F., Yu, Y. & Cheng, Q. S. A benchmark test suite for antenna S-parameter optimization. IEEE Trans. Antennas Propag. 69(10), 6635–6650. https://doi.org/10.1109/TAP.2021.3069524 (2021).

Koziel, S. & Pietrenko-Dabrowska, A. Rapid variable-resolution parameter tuning of antenna structures using frequency-based regularization and sparse sensitivity updates. IEEE Trans. Antennas Propag. 1, 1–1. https://doi.org/10.1109/TAP.2022.3209281 (2022).

Ramakrishnan, B. & Rao, S. S. A general loss function based optimization procedure for robust design. Eng. Optim. 25(4), 255–276. https://doi.org/10.1080/03052159608941266 (1996).

Kumar, A. et al. A test-suite of non-convex constrained optimization problems from the real-world and some baseline results. Swarm Evol. Comput. 56, 100693. https://doi.org/10.1016/j.swevo.2020.100693 (2020).

Author information

Contributions

O.R.A.: designing experiment, doing tests, data analysis and writing. E.D.Ü.: project administration, data analysis, revising and editing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Adegboye, O.R., Deniz Ülker, E. Hybrid artificial electric field employing cuckoo search algorithm with refraction learning for engineering optimization problems. Sci Rep 13, 4098 (2023). https://doi.org/10.1038/s41598-023-31081-1
