Abstract

In this study, point and interval estimates for the parameters of the power Rayleigh distribution are derived under the joint progressive type-II censoring scheme. The maximum likelihood and Bayes methods are used to estimate the distributional parameters of the two populations. Approximate confidence intervals and credible intervals for the parameters are also obtained. The Markov chain Monte Carlo (MCMC) method is used to compute the Bayes estimates under the squared error and linear exponential loss functions. MCMC samples from the posterior density functions are generated with the Metropolis–Hastings algorithm within Gibbs sampling. A real data set is used to illustrate the suggested approaches. Finally, a simulation study is performed to compare the results of the various approaches.

Introduction

The joint censoring method is advantageous and practical when conducting comparative life tests of products from different units inside the same facility. Assume that two different lines within the same facility are producing products, and that two independent samples of sizes m and n are chosen at random from these two production lines and placed on a life-testing experiment at the same time. To save time and money, the experimenter uses a joint progressive type-II censoring strategy, and the life test is terminated when a prespecified number of failures has occurred; see refs. 1, 2, 3, 4 and 5. Many authors have studied the joint progressive type-II censoring scheme (JP-II-CS) and the associated inference methods. For example, ref. 6 used the JP-II-CS to develop likelihood inference for two exponential distributions, ref. 7 investigated Bayes estimation with JP-II-CS under the LINEX loss function, ref. 8 provided likelihood inference for k exponential distributions under the JP-II-CS, and ref. 9 introduced point and interval estimates of Weibull parameters based on JP-II-CS. Ref. 10 considered the JP-II-CS for two populations in which the lifetimes of the experimental units follow two-parameter generalised exponential distributions, and ref. 11 introduced statistical inference for the inverted exponentiated Rayleigh distribution under joint progressive type-II censoring.
Ref. 12 proposed the power Rayleigh distribution, which has been utilised for lifetime modelling in reliability analysis; ref. 13 estimated the lifetime performance index of the power Rayleigh distribution under progressive first-failure censoring; ref. 14 presented Bayesian and non-Bayesian methods for estimating the parameter of the Akshaya distribution; ref. 15 introduced the generalized power Akshaya distribution and its applications; ref. 16 discussed characteristics and applications of the extended cosine generalized family of distributions for reliability modelling; and ref. 17 developed a flexible modification of the log-logistic distribution to model the COVID-19 mortality rate. It has also been fitted to a wide range of observational data from a variety of fields, including meteorology, finance and hydrology (see ref. 18). Moreover, ref. 19 discussed inference for the type II half logistic Weibull distribution for reliability analysis, with an application to bladder cancer data. The joint progressive censoring (JPC) scheme, which is useful for comparing the lifetime distributions of products manufactured by two different lines in the same facility, was introduced by Rasouli and Balakrishnan6 and can be briefly stated as follows. Two samples of products of sizes m and n are selected from the two lines of operation (say Line 1 and Line 2), representing two populations Pop-1 and Pop-2 as shown in Figs. 1 and 2, and they are placed on a life-testing experiment simultaneously.

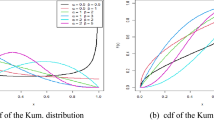

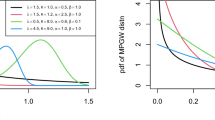

The power Rayleigh distribution has applications to extremes, for example in flood frequency analysis. The JP-II-CS is described following ref. 20. The Rayleigh family of distributions includes the generalized Rayleigh distribution introduced by refs. 12 and 21, the log-Rayleigh distribution discussed by ref. 22, the beta generalized Rayleigh distribution derived by ref. 23, the Weibull Rayleigh distribution introduced by ref. 24 and the exponentiated Rayleigh distribution introduced by ref. 25. Several authors have considered further extensions of the Rayleigh distribution, such as the inverse Rayleigh distribution (ref. 26), the weighted inverse Rayleigh distribution (ref. 27) and the transmuted Rayleigh distribution (ref. 28). The quality of the procedures used in statistical analysis depends heavily on the assumed probability model or distribution. Let \(X_{1},X_{2},\ldots , X_{m}\) represent the lifetimes of m units of product A, assumed to be independent and identically distributed (iid) random variables from the power Rayleigh distribution with cumulative distribution function (cdf)

and probability density function (pdf) is

Similarly, let \(Y_{1},Y_{2},\ldots , Y_{n}\) be the lifetimes of n units of product B, assumed to be iid random variables from the power Rayleigh distribution with cdf

and probability density function (pdf) is

where \(\beta _{1}\) and \(\beta _{2}\) are the shape parameters and \(\alpha _{1}\) and \(\alpha _{2}\) are the scale parameters. Let \(K = m+n\) denote the total sample size and let \(\lambda _{1}\le \lambda _{2} \le \cdots \le \lambda _{K}\) denote the order statistics of the K random variables \(\{X_{1},X_{2},\ldots , X_{m},Y_{1},Y_{2},\ldots , Y_{n}\}\). The JP-II-CS is applied as follows. At the time of the first failure, \(R_{1}\) units are randomly removed from the remaining \(K - 1\) surviving units. Similarly, at the time of the second failure, \(R_{2}\) units are randomly withdrawn from the remaining \(K - R_{1} - 2\) surviving units, and so on. Finally, at the time of the rth failure, all remaining \(R_{r} = K- r -\sum _{i=1}^{r-1} R_{i}\) surviving units are withdrawn from the life-testing experiment. The censoring scheme \(R = (R_1, R_2,\ldots ,R_r)\) and the total number of failures r are fixed before the experiment. Suppose that \(R_i = S_i + T_i\), \(i = 1, \ldots , r\), where \(S_i\) and \(T_i\) denote the numbers of units withdrawn at the time of the ith failure from the X and Y samples, respectively; these are unknown random variables. The observed data consist of \((H, \lambda , S)\), where \(H = (H_1, H_2,\ldots ,H_r)\) with \(H_i = 1\) or \(0\) according to whether \(\lambda _i\) is an X or a Y failure, \(\lambda = (\lambda _1, \lambda _2,\ldots ,\lambda _r)\) with \(r < K\), and \(S = (S_1, S_2,\ldots ,S_r)\).
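The censoring mechanism described above can be illustrated with a short simulation. The following Python sketch is not the authors' program: the function names are hypothetical, and it assumes the power Rayleigh cdf has the form \(F(x)=1-\exp \{-x^{2\beta }/(2\alpha ^{2})\}\), which should be checked against the cdf used in the paper.

```python
import math
import random

def prd_quantile(u, alpha, beta):
    """Inverse cdf, ASSUMING F(x) = 1 - exp(-x**(2*beta) / (2*alpha**2))."""
    return (-2.0 * alpha ** 2 * math.log(1.0 - u)) ** (1.0 / (2.0 * beta))

def simulate_jp2cs(m, n, R, theta_x, theta_y, rng):
    """Return (H, lam, S) for one joint progressive type-II censored sample.

    R is the removal scheme (R_1, ..., R_r); its entries must sum to m + n - r.
    theta_x and theta_y are (alpha, beta) pairs for the two populations.
    """
    r = len(R)
    if sum(R) != m + n - r:
        raise ValueError("scheme must satisfy sum(R) = m + n - r")
    # Each unit on test is a (lifetime, label) pair; label 1 = X line, 0 = Y line.
    units = [(prd_quantile(rng.random(), *theta_x), 1) for _ in range(m)]
    units += [(prd_quantile(rng.random(), *theta_y), 0) for _ in range(n)]
    H, lam, S = [], [], []
    for R_i in R:
        failed = min(units)               # next failure among the survivors
        units.remove(failed)
        lam.append(failed[0])
        H.append(failed[1])
        removed = rng.sample(units, R_i)  # random withdrawal of R_i survivors
        S.append(sum(label for _, label in removed))
        for unit in removed:
            units.remove(unit)
    return H, lam, S

# Example: m = n = 10, r = 5 failures, three units withdrawn at each failure.
H, lam, S = simulate_jp2cs(10, 10, [3, 3, 3, 3, 3], (0.5, 2.5), (0.6, 2.69),
                           random.Random(0))
```

Because the withdrawn units are chosen at random from all survivors, the split \(S_i\) between the two lines is itself random, which is why S is part of the observed data.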

In this research, the lifetimes of the experimental units in the two populations follow power Rayleigh distributions with different scale and shape parameters. We investigate both likelihood-based and Bayesian inference for the unknown model parameters. The maximum likelihood estimators (MLEs) of the unknown parameters are obtained by solving a four-dimensional optimization problem, which can be done with the Newton–Raphson approach. This requires computing the Hessian matrix, which may not have a convenient form. Furthermore, the traditional Newton–Raphson approach may not be suitable for small effective sample sizes.

The rest of the paper is organised as follows. The maximum likelihood estimators (MLEs) of the unknown parameters of the power Rayleigh distribution are derived in the “Maximum likelihood estimation” section. Approximate confidence intervals (ACIs) based on the MLEs are presented in the “Asymptotic confidence intervals” section. The Bayesian analysis is carried out in the “Bayesian method” section. In the “Application of real data” section, we examine real data sets to demonstrate the estimation methods presented in this paper. The simulation results are shown in the “Simulation” section. The “Conclusion” section closes the paper with brief conclusions.

Maximum likelihood estimation

Assume that \(X_1, X_2,\ldots , X_m\) are independent and identically distributed (i.i.d.) power Rayleigh random variables representing the lifetimes of m units of product A. Similarly, \(Y_1, Y_2,\ldots , Y_n\) denote the lifetimes of n units of product B and are also assumed to be i.i.d. power Rayleigh random variables. According to Rasouli and Balakrishnan6, the likelihood function of \((S,H,\lambda )\) can be written as follows

where \(\lambda _{1}\le \lambda _{2}\le ...\le \lambda _{r},\) \(\bar{F}=1-F,\) \(\bar{G}=1-G,\) \(\sum _{i=1}^{r} s_{i} = m-m_{r},\) \(\sum _{i=1}^{r} t_{i} = n-n_{r},\) \(\sum _{i=1}^{r} R_{i} = \sum _{i=1}^{r} s_{i} + \sum _{i=1}^{r} t_{i}\), and \(C=D_{1} D_{2}\) with

As a result, the log-likelihood function can be written as:

To estimate the unknown parameters, take the first derivative of Eq. (7) with respect to \(\alpha _{1},\alpha _{2}, \beta _{1}\), and \(\beta _{2}\), which are given by:

and

The system of normal equations \(\frac{\partial \ell }{\partial \alpha _{1} }=0\), \(\frac{\partial \ell }{\partial \alpha _{2} }=0\), \(\frac{\partial \ell }{\partial \beta _{1} }=0\), and \(\frac{\partial \ell }{\partial \beta _{2} }=0\) has no closed-form solution, so numerical techniques are used to estimate the unknown parameters \(\alpha _{1},\alpha _{2}, \beta _{1}\), and \(\beta _{2}\).
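As an illustration of the numerical step, the log-likelihood can be coded directly and passed to an optimiser. The sketch below is an assumption-laden example rather than the authors' implementation: it assumes the power Rayleigh pdf \(f(x)=(\beta /\alpha ^{2})x^{2\beta -1}\exp \{-x^{2\beta }/(2\alpha ^{2})\}\) and a likelihood kernel of the form \(\prod _{i} f(\lambda _i)^{h_i} g(\lambda _i)^{1-h_i} \bar{F}(\lambda _i)^{s_i} \bar{G}(\lambda _i)^{t_i}\), dropping the constant C; all function names are hypothetical.

```python
import math

def prd_logpdf(x, alpha, beta):
    # log f(x) for the ASSUMED pdf (beta/alpha^2) x^(2 beta - 1) exp(-x^(2 beta)/(2 alpha^2))
    return (math.log(beta) - 2.0 * math.log(alpha)
            + (2.0 * beta - 1.0) * math.log(x)
            - x ** (2.0 * beta) / (2.0 * alpha ** 2))

def prd_logsf(x, alpha, beta):
    # log of the survival function 1 - F(x) under the same assumption
    return -x ** (2.0 * beta) / (2.0 * alpha ** 2)

def log_likelihood(params, H, lam, S, R):
    """Log-likelihood (up to the constant log C) of (alpha1, alpha2, beta1, beta2)."""
    alpha1, alpha2, beta1, beta2 = params
    if min(params) <= 0:
        return -math.inf                   # outside the parameter space
    total = 0.0
    for h, x, s, r_i in zip(H, lam, S, R):
        t = r_i - s                        # units withdrawn from the Y sample
        if h == 1:
            total += prd_logpdf(x, alpha1, beta1)   # failure from the X sample
        else:
            total += prd_logpdf(x, alpha2, beta2)   # failure from the Y sample
        total += s * prd_logsf(x, alpha1, beta1)
        total += t * prd_logsf(x, alpha2, beta2)
    return total
```

The negative of `log_likelihood` can then be minimised over the four parameters with any general-purpose routine, for example Newton–Raphson or a derivative-free search.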

Asymptotic confidence intervals

The maximum likelihood estimators for the parameters cannot be obtained in analytic form. Therefore, their actual distributions cannot be derived. However, we can use the asymptotic distribution of the maximum likelihood estimator to derive confidence intervals for the unknown parameters \(\alpha _{1},\alpha _{2}, \beta _{1}\), and \(\beta _{2}\).

The \(100(1-\gamma )\%\) CIs for \(\alpha _{1},\alpha _{2}, \beta _{1}\), and \(\beta _{2}\) can be calculated using the asymptotic normality of the maximum likelihood estimators, with variances Var(\(\hat{\alpha _{1}}_{ML}\)), Var(\(\hat{\alpha _{2}}_{ML}\)), Var(\(\hat{\beta _{1}}_{ML}\)), and Var(\(\hat{\beta _{2}}_{ML}\)). The second derivatives of the log-likelihood function in Eq. (7) with respect to \(\alpha _{1},\alpha _{2}, \beta _{1}\), and \(\beta _{2}\) are given by

Therefore, the elements of the observed Fisher information matrix are \(\hat{I}_{ij}=\) \(E\left[ -\partial ^{2}\ell /\partial \phi _{i}~\partial \phi _{j}\right]\), where \(i,j=1,2,3,4\) and \(\phi =\left( \phi _{1},\phi _{2},\phi _{3}, \phi _{4}\right) =\left( \alpha _1,\alpha _2,\beta _{1}, \beta _{2}\right) .\)

Hence, the observed information matrix is given by

Inverting the observed information matrix \(\hat{I}\left( \alpha _1,\alpha _2,\beta _{1}, \beta _{2}\right)\) gives the asymptotic variance-covariance matrix of the MLEs, where \(\hat{I}^{-1}\left( \alpha _1,\alpha _2,\beta _{1}, \beta _{2}\right)\) is obtained by

Thus, the \(100(1-\gamma )\%\) normal approximate CIs for \(\left( \alpha _1,\alpha _2,\beta _{1}, \beta _{2}\right)\) are

where \(Z_{\frac{\gamma }{2}}\) is the percentile of the standard normal distribution with right-tail probability \(\frac{\gamma }{2}\).
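For a single parameter, the normal-approximation interval can be computed as in the following Python sketch. It assumes the usual form \(\hat{\phi }\pm Z_{\gamma /2}\sqrt{\widehat{Var}(\hat{\phi })}\); the function name `approx_ci` and the optional truncation of the lower limit at zero (for parameters that cannot be negative) are illustrative choices.

```python
from statistics import NormalDist

def approx_ci(estimate, variance, gamma=0.05, lower_bound=0.0):
    """100(1 - gamma)% normal-approximation CI, truncated below at lower_bound."""
    z = NormalDist().inv_cdf(1.0 - gamma / 2.0)  # right-tail probability gamma/2
    half_width = z * variance ** 0.5
    return max(lower_bound, estimate - half_width), estimate + half_width

# Example with hypothetical values: estimate 1.0 and estimated variance 0.04.
lower, upper = approx_ci(1.0, 0.04)
```

The variance passed in would be the corresponding diagonal element of the inverted observed information matrix.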

We now introduce another method for estimating the unknown parameters, namely the Bayesian technique. Bayesian analysis is an effective tool for estimating unknown parameters, and Bayesian inference has various benefits compared with other methods of reasoning.

Bayesian method

This section contains the Bayesian estimates of the unknown parameters \(\alpha _{1},\ \alpha _{2}, \beta _{1}\), and \(\beta _{2}\) of the power Rayleigh distribution based on JP-II-CS. Prior knowledge is incorporated through prior distributions; here we assume that the four parameters \(\alpha _{1},\ \alpha _{2}\), \(\ \beta _{1}\), and \(\beta _{2}\) are random variables with independent gamma priors.

where \(a_i, b_i\), \(i=1,2,3,4\), are assumed to be known and are chosen to reflect prior beliefs about the unknown parameters. As a result, the joint prior density is given as

The posterior distribution of the parameters \(\alpha _{1},\ \alpha _{2}, \beta _{1}\), and \(\beta _{2}\), denoted by \(\pi ^{*}\left( \alpha _{1},\ \alpha _{2},\ \beta _{1}, \ \beta _{2} \mid H, \lambda , S\right)\), is obtained by combining the likelihood function in Eq. (6) with the prior via Bayes’ theorem; up to proportionality, it can be written as

Eq. (14) can be used to evaluate the joint posterior up to proportionality.

Eq. (15) cannot be handled analytically because closed forms for the marginal posterior distributions of the individual parameters are difficult to obtain. We therefore propose using the Markov chain Monte Carlo (MCMC) technique (ref. 29) to generate samples from the posterior distributions, to evaluate the Bayes estimators of the unknown parameters under the squared error (SE) and linear exponential (LINEX) loss functions, and to construct the corresponding credible intervals (CRIs). Abushal et al.30, EL-Sagheer and Hasaballah31, Parsi and Bairamov32 and Metropolis et al.33 are just a few of the studies that have worked with the MCMC technique. From Eq. (15), the conditional posterior density functions of \(\alpha _{1},\ \alpha _{2}, \beta _{1}\) and \(\beta _{2}\) can be obtained up to proportionality. To simplify the notation, we write \(\pi ^{*}_{1}\left( \alpha _{1}\right) , ~\pi ^{*}_{2}\left( \alpha _{2}\right) , ~\pi ^{*}_{3}\left( \beta _{1} \right)\) and \(\pi ^{*}_{4}\left( \beta _{2} \right)\) instead of \(\pi ^{*}_{1}\left( \alpha _{1}\mid \alpha _{2},\beta _{1}, \beta _{2}, H, \lambda , S\right) , ~\pi ^{*}_{2}\left( \alpha _{2}\mid \alpha _{1},\beta _{1}, \beta _{2}, H, \lambda , S\right) , ~\pi ^{*}_{3}\left( \beta _{1}\mid \alpha _{1},\alpha _{2}, \beta _{2}, H, \lambda , S \right)\) and \(\pi ^{*}_{4}\left( \beta _{2}\mid \alpha _{1},\alpha _{2},\beta _{1}, H, \lambda , S \right)\), respectively:

and

The conditional posterior density functions of \(\alpha _{1},\ \alpha _{2}, \beta _{1}\) and \(\beta _{2}\) in Eqs. (16)–(19) cannot be reduced analytically to well-known distributions. Consequently, it is difficult to sample from them directly by standard methods, but their plots in Figs. 3, 4, 5 and 6 show that they are similar to the normal distribution.

Gibbs sampling

To produce the Bayesian estimates of the unknown parameters and the related credible intervals, we now employ the Gibbs sampling method, which is a subclass of Markov chain Monte Carlo (MCMC) methods. This approach produces posterior samples using the posterior conditional density functions of the parameters \(\alpha _{1},\ \alpha _{2}\), \(\beta _{1}\) and \(\beta _{2}\). Eq. (15) gives the joint posterior density function of the parameters. As indicated by Eqs. (16)–(19), the conditional density functions of \(\alpha _{1},\ \alpha _{2}\), \(\beta _{1}\) and \(\beta _{2}\) do not have the form of well-known density functions. As a result, we use the Metropolis–Hastings (MH) algorithm33 with a normal proposal distribution to generate random samples from the posterior densities of \(\alpha _{1},\ \alpha _{2}\), \(\beta _{1}\) and \(\beta _{2}\).

The steps of Gibbs sampling are described as follows:

- Step 1. Start with an initial guess \((\alpha _{1}^{(0)},\alpha _{2}^{(0)},\beta _{1}^{(0)},\beta _{2}^{(0)})\) =\((\hat{\alpha _{1}},\hat{\alpha _{2}},\hat{\beta _{1}},\hat{\beta _{2}})\).

- Step 2. Set \(t = 1\).

- Step 3. Generate \((\alpha _{1}^{(t)},\alpha _{2}^{(t)},\beta _{1}^{(t)}, \beta _{2}^{(t)})\) from \(\pi _{1}^{*}(\alpha _{1}\mid H, \lambda , S)\), \(\pi _{2}^{*}(\alpha _{2}\mid H, \lambda , S)\), \(\pi _{3}^{*}(\beta _{1}\mid H, \lambda , S)\), and \(\pi _{4}^{*}(\beta _{2}\mid H, \lambda , S)\) using the MH algorithm with the proposal distributions \(N(\alpha _{1}^{(t-1)}, \sqrt{\widehat{var(\alpha _{1})}})\), \(N(\alpha _{2}^{(t-1)}, \sqrt{\widehat{var(\alpha _{2})}})\), \(N(\beta _{1}^{(t-1)}, \sqrt{\widehat{var(\beta _{1})}})\), and \(N(\beta _{2}^{(t-1)}, \sqrt{\widehat{var(\beta _{2})}})\), respectively:

  - (i) Generate proposals \(\alpha _{1}^{*}\) from \(N(\alpha _{1}^{(t-1)}, \sqrt{\widehat{var(\alpha _{1})}})\), \(\alpha _{2}^{*}\) from \(N(\alpha _{2}^{(t-1)}, \sqrt{\widehat{var(\alpha _{2})}})\), \(\beta _{1}^{*}\) from \(N(\beta _{1}^{(t-1)}, \sqrt{\widehat{var(\beta _{1})}})\), and \(\beta _{2}^{*}\) from \(N(\beta _{2}^{(t-1)}, \sqrt{\widehat{var(\beta _{2})}})\).

  - (ii) Compute the acceptance probabilities \(\eta _{\alpha _{1}}=\min \left( 1, \frac{\pi _{1}^{*}(\alpha _{1}^{*}\mid H, \lambda , S)}{\pi _{1}^{*}(\alpha _{1}^{(t-1)}\mid H, \lambda , S)}\right)\), \(\eta _{\alpha _{2}}=\min \left( 1, \frac{\pi _{2}^{*}(\alpha _{2}^{*}\mid H, \lambda , S)}{\pi _{2}^{*}(\alpha _{2}^{(t-1)}\mid H, \lambda , S)}\right)\), \(\eta _{\beta _{1}}=\min \left( 1, \frac{\pi _{3}^{*}(\beta _{1}^{*}\mid H, \lambda , S)}{\pi _{3}^{*}(\beta _{1}^{(t-1)}\mid H, \lambda , S)}\right)\), and \(\eta _{\beta _{2}}=\min \left( 1, \frac{\pi _{4}^{*}(\beta _{2}^{*}\mid H, \lambda , S)}{\pi _{4}^{*}(\beta _{2}^{(t-1)}\mid H, \lambda , S)}\right)\).

  - (iii) Generate \(u_{1}\), \(u_{2}\), \(u_{3}\) and \(u_{4}\) from Uniform (0, 1).

  - (iv) If \(u_{1} < \eta _{\alpha _{1}}\), accept the proposal and set \(\alpha _{1}^{(t)}=\alpha _{1}^{*}\); otherwise, set \(\alpha _{1}^{(t)}=\alpha _{1}^{(t-1)}\).

  - (v) If \(u_{2} < \eta _{\alpha _{2}}\), accept the proposal and set \(\alpha _{2}^{(t)}=\alpha _{2}^{*}\); otherwise, set \(\alpha _{2}^{(t)}=\alpha _{2}^{(t-1)}\).

  - (vi) If \(u_{3} < \eta _{\beta _{1}}\), accept the proposal and set \(\beta _{1}^{(t)}=\beta _{1}^{*}\); otherwise, set \(\beta _{1}^{(t)}=\beta _{1}^{(t-1)}\).

  - (vii) If \(u_{4} < \eta _{\beta _{2}}\), accept the proposal and set \(\beta _{2}^{(t)}=\beta _{2}^{*}\); otherwise, set \(\beta _{2}^{(t)}=\beta _{2}^{(t-1)}\).

- Step 4. Set \(t = t + 1\).

- Step 5. Repeat Steps 3 and 4 N times to obtain the posterior sample used to estimate the unknown parameters \(\alpha _{1},\ \alpha _{2}, \beta _{1}\) and \(\beta _{2}\).
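The steps above can be sketched in Python as a componentwise random-walk MH sampler. This is a minimal illustration, not the authors' program: the function names are hypothetical, each coordinate is updated in turn with the others held fixed, and the target used in the example is a toy product of gamma densities standing in for the posterior of Eq. (15), which is not reproduced here.

```python
import math
import random

def mh_within_gibbs(log_post, init, proposal_sd, n_iter, rng):
    """Componentwise random-walk Metropolis-Hastings.

    log_post(theta) returns the log joint posterior up to a constant; updating
    one coordinate with the others fixed targets its full conditional.
    """
    theta = list(init)
    current = log_post(theta)
    chain = []
    for _ in range(n_iter):
        for j in range(len(theta)):
            proposal = list(theta)
            proposal[j] = rng.gauss(theta[j], proposal_sd[j])  # normal proposal
            candidate = log_post(proposal)
            # Symmetric proposal: accept with probability min(1, posterior ratio).
            log_ratio = candidate - current
            if log_ratio >= 0 or rng.random() < math.exp(log_ratio):
                theta, current = proposal, candidate
        chain.append(tuple(theta))
    return chain

def toy_log_post(theta, a=3.0, b=2.0):
    # TOY target for illustration only: independent Gamma(shape a, rate b) densities.
    if min(theta) <= 0:
        return -math.inf          # proposals outside the support are rejected
    return sum((a - 1.0) * math.log(x) - b * x for x in theta)

chain = mh_within_gibbs(toy_log_post, [1.0] * 4, [0.8] * 4, 6000, random.Random(0))
```

In an actual analysis, `toy_log_post` would be replaced by the log-likelihood plus the log of the gamma priors, the chain would be started at the MLEs, and the proposal standard deviations would be taken from the estimated variances of the MLEs.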

Application of real data

In this section, we analyse a data set for illustration purposes. Rasouli and Balakrishnan6 also used these data, which were originally reported in ref. 34. The data are the time intervals (in hours) between air conditioning system failures for a fleet of 13 Boeing 720 jet planes. For illustration, we use the planes “7913” and “7914”. The data are as follows:

- PLANE 7914: 3, 5, 5, 13, 14, 15, 22, 22, 23, 30, 36, 39, 44, 46, 50, 72, 79, 88, 97, 102, 139, 188, 197, 210.

- PLANE 7913: 1, 4, 11, 16, 18, 18, 18, 24, 31, 39, 46, 51, 54, 63, 68, 77, 80, 82, 97, 106, 111, 141, 142, 163, 191, 206, 216.

For each sample, we fit the power Rayleigh distribution and report the results in Table 1. The Kolmogorov–Smirnov (K–S) test statistics and the corresponding p values indicate that the data fit the power Rayleigh distribution with the parameters presented in Table 1.

The power Rayleigh distribution therefore fits the data very well in both samples; the empirical and fitted distributions are plotted in Fig. 7 for the first sample and Fig. 8 for the second sample. It is evident that the power Rayleigh distribution can be a good model for these data. From the above data sets, we generated a JP-II-CS sample as follows. Let \(m = 24\) for the first sample and \(n = 27\) for the second sample, so that \(K = m + n\) is the total sample size, and take \(r = 10\), \(S = ( 5, 0, 0, 0, 5, 0, 0, 0, 0, 8)\), \(T = (5, 0, 0, 0, 5, 0, 0, 0, 0, 10)\) and \(R = (10, 0, 0, 0, 10, 0, 0, 0, 0, 18)\). The generated data sets are provided below

and

Based on the above JP-II-CS sample, we compute the MLEs and the \(95\%\) ACIs for \(\alpha _{1},\alpha _{2},\beta _{1}\), and \(\beta _{2}\); the results are shown in Tables 2 and 3. For Bayesian estimation, we used the MCMC method with 10,000 MCMC samples, discarding the first 1000 values as ‘burn-in’. We used informative gamma priors with hyperparameters \(a_{i}=0.02\) and \(b_{i}=2\). Table 2 shows the Bayesian estimates for \(\alpha _1, \alpha _2, \beta _1\), and \(\beta _2\) under the SE and LINEX loss functions. The power Rayleigh distribution fits both samples very well; the empirical and fitted survival functions S(t) are plotted in Fig. 9 for the first sample and in Fig. 10 for the second sample, which again indicates that the power Rayleigh distribution can be a good model for these data. Moreover, the \(95\%\) CRIs for \(\alpha _{1},\alpha _{2},\beta _{1}\) and \(\beta _{2}\) are given in Table 3. The variances of \(\alpha _{1},\alpha _{2},\) and \(\beta _{1}\) are very large compared with the estimates themselves, which leads to negative lower bounds for the asymptotic confidence intervals of \(\alpha _{1},\alpha _{2},\) and \(\beta _{1}\). Since \(\alpha _{1},\alpha _{2},\) and \(\beta _{1}\) cannot be negative, we truncate the lower limits at zero. This is one of the disadvantages of the maximum likelihood method.
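Given the MCMC draws for one parameter, the Bayes estimates and a credible interval can be computed as in the following Python sketch. It relies on two standard results: the Bayes estimate under SE loss is the posterior mean, and under LINEX loss with parameter c it is \(-\frac{1}{c}\log E\left[ e^{-c\theta }\right]\). The function name `bayes_summaries` and the use of an equal-tailed percentile interval are assumptions made for illustration, since the construction of the CRIs is not spelled out here.

```python
import math

def bayes_summaries(draws, c=2.0, burn_in=1000, level=0.95):
    """Return (SE estimate, LINEX estimate, equal-tailed credible interval)."""
    kept = sorted(draws[burn_in:])          # discard the burn-in values
    n = len(kept)
    se_estimate = sum(kept) / n             # posterior mean
    # LINEX estimate: -(1/c) * log of the posterior mean of exp(-c * theta)
    linex_estimate = -math.log(sum(math.exp(-c * x) for x in kept) / n) / c
    lower = kept[int(n * (1.0 - level) / 2.0)]
    upper = kept[min(n - 1, int(n * (1.0 + level) / 2.0))]
    return se_estimate, linex_estimate, (lower, upper)

# Example with artificial draws; real draws would come from the MCMC chain.
draws = [i / 100 for i in range(1, 2001)]
se_est, linex_est, cri = bayes_summaries(draws, c=2.0, burn_in=0)
```

For \(c>0\) the LINEX estimate never exceeds the SE estimate, and the two are close when the posterior is concentrated.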

Table 4 shows the comparison between the approximation of the expected values of the number of failures from the first production line before the test performance (A.E.B) and the mean of the exact number of failures after the test performance (M.E.A) when \(r=15,20,25,30\) and 35. The plots of the posterior density functions and the trace plots of the unknown parameters \(\alpha _{1}\), \(\alpha _{2}\), \(\beta _{1}\), and \(\beta _{2}\) using MCMC method have been shown in Figs. 11, 12, 13 and 14.

Simulation

A simulation study was performed to compare the performance of the different methods discussed in this paper. We consider sample sizes \(m, n = 10,20,30\) for the two populations and numbers of failures \(r = 5,10,15,20,30,40\). The true parameter values \((\alpha _{1}^{(t)},\alpha _{2}^{(t)},\beta _{1}^{(t)}, \beta _{2}^{(t)})\) are set to \((0.5,0.6,2.5,2.69)\) and \((0.69,0.8,1.57,1.8)\). The MSEs, the lengths of the 95% intervals and the coverage probabilities (CP) for the parameters \((\alpha _{1}^{(t)},\alpha _{2}^{(t)},\beta _{1}^{(t)}\), and \(\beta _{2}^{(t)})\) are evaluated for the MLEs and for the MCMC estimates, based on 10,000 MCMC observations, under the SE and LINEX loss functions. This process is repeated 1000 times, and the mean values of the MSE, lengths and CP are displayed in Tables 5, 6, 7 and 8. Moreover, a simulation study was conducted to compute the expected number of failures from the first production line (S.E.Mr) and the approximated expected number of failures (A.E.Mr). Samples were generated from the two power Rayleigh distribution (PRD) populations for sample sizes \(m, n = 5,10,15,20,25,30,40,50\) and JP-II-CS with \(r = 5,10,20,30,40\), under the same true parameter values; the results are presented in Table 9. The calculations in Table 9 use \(p = P(X_1 < X_2)\), where \(X_1\) and \(X_2\) are the lifetimes of units from the first and second production lines, respectively; in the first setting \(X_1\) follows PRD(0.5, 2.5) and \(X_2\) follows PRD(0.6, 2.69), and in the second setting \(X_1\) follows PRD(0.8, 1.8) and \(X_2\) follows PRD(0.69, 1.57). We calculate the A.E.Mr according to Parsi and Bairamov32 as follows:

Conclusion

In this study, the joint progressive type-II censoring scheme was used to investigate two samples from power Rayleigh distributions. The scale and shape parameters of the two populations were assumed to be different. The MLEs were obtained using the maximum likelihood method, and the performance of the MLEs and the Bayesian estimators was compared for informative and non-informative priors. The Bayesian estimates were computed by MCMC, using the Metropolis–Hastings algorithm within Gibbs sampling, and the estimates under the squared error and LINEX loss functions were compared. Bayesian inference under informative priors was found to be the best method for point estimation for a number of censoring schemes. The developed techniques were applied to a real data set, and a simulation study was used to compare the performance of the proposed methods for different sample sizes (m, n). From the results, we observe the following:

1. From Table 2, it can be seen that when \(c=2\), the Bayes estimates under the SE loss function are similar to those under the LINEX loss function.

2. From Table 3, the MCMC method is better than the MLE in the sense of having the smallest interval lengths.

3. It is clear from Table 4 that the values of A.E.B. are smaller than the values of M.E.A. in all schemes.

4. It can be seen from Table 9 that the values of A.E.B. are relatively close to the values of M.E.A. in all schemes.

5. It is evident from Tables 5, 6, 7 and 8 that the MSEs of the MCMC estimates are smaller than those of the MLEs; the Bayes estimates of the parameters \(\alpha _{1},\ \alpha _{2}, \beta _{1}\) and \(\beta _{2}\) therefore perform better than the MLEs.

6. It is observed from Tables 5, 6, 7 and 8 that the Bayes estimates under LINEX with \(c=2\) provide better estimates in the sense of having smaller MSEs.

7. It is clear from Tables 5, 6, 7 and 8 that when m, n and r increase, the MSEs and the lengths decrease.

8. It is evident from Tables 5, 6, 7 and 8 that the MCMC CRIs give more accurate results than the ACIs, since the lengths of the MCMC CRIs are less than the lengths of the ACIs for various sample sizes.

Data availability

The data are available in the paper.

References

1. Tolba, A. H., Almetwally, E. M. & Ramadan, D. A. Bayesian estimation of a one parameter Akshaya distribution with progressively type II censord data. J. Stat. Appl. Prob. Int. J. 11, 565–579 (2022).

2. Ramadan, D. A., Almetwally, E. M., & Tolba, A. H. Statistical inference to the parameter of the Akshaya distribution under competing risks data with application HIV infection to aids. Ann. Data Sci. 1–27 (2022).

3. Sarhan, A. M. et al. Statistical analysis of a competing risks model with Weibull sub-distributions. Appl. Math. 8(11), 1671 (2017).

4. Sarhan, A. M., El-Gohary, A. I., Mustafa, A. & Tolba, A. H. Statistical analysis of regression competing risks model with covariates using Weibull sub-distributions. Int. J. Reliabi. Appl. 20(2), 73–88 (2019).

5. El-Sagheer, R. M., Tolba, A. H., Jawa, T. M., & Sayed-Ahmed, N. Inferences for stress-strength reliability model in the presence of partially accelerated life test to its strength variable. Comput. Intell. Neurosci. 2022, 1–13 (2022).

6. Rasouli, A. & Balakrishnan, N. Exact likelihood inference for two exponential populations under joint progressive type-II censoring. Commun. Stat. Theory Methods 39(12), 2172–2191 (2010).

7. Doostparast, M., Ahmadi, M. V. & Ahmadi, J. Bayes estimation based on joint progressive type II censored data under linex loss function. Commun. Stat. Simul. Comput. 42(8), 1865–1886 (2013).

8. Balakrishnan, N., Su, F. & Liu, K.-Y. Exact likelihood inference for k exponential populations under joint progressive type-ii censoring. Commun. Stat. Simul. Comput. 44(4), 902–923 (2015).

9. Mondal, S. & Kundu, D. Point and interval estimation of Weibull parameters based on joint progressively censored data. Sankhya b 81(1), 1–25 (2019).

10. Mondal, S. & Kundu, D. On the joint type-II progressive censoring scheme. Commun. Stat. Theo. Meth. 49(4), 958–976 (2020).

11. Fan, J. & Gui, W. Statistical inference of inverted exponentiated Rayleigh distribution under joint progressively type-II censoring. Entropy. 24(2), 171 (2022).

12. Bhat, A. & Ahmad, S. A new generalization of Rayleigh distribution: Properties and applications. Pak. J. Stat. 36(3), 225–250 (2020).

13. Mahmoud, M. A. W., Kilany, N. M. & El-Refai, L. H. Inference of the lifetime performance index with power Rayleigh distribution based on progressive first-failure-censored data. Qual. Reliab. Eng. Int. 36(5), 1528–1536 (2020).

14. Tolba, A. H. Bayesian and non-Bayesian estimation methods for simulating the parameter of the Akshaya distribution. Comput. J. Math. Stat. Sci. 1(1), 13–25 (2022).

15. Ramadan, A. T., Tolba, A. H. & El-Desouky, B. S. Generalized power Akshaya distribution and its applications. Open J. Model. Simul. 9(4), 323–338 (2021).

16. Mahmood, Z., Jawa, T. M, Sayed-Ahmed, N., Khalil, E., Muse, A. H., & Tolba, A. H. An extended cosine generalized family of distributions for reliability modeling: Characteristics and applications with simulation study. Math. Probl. Eng. 2022, 1–20 (2022).

17. Muse, A. H., Tolba, A. H., Fayad, E., Abu Ali, O. A., Nagy, M., & Yusuf, M. Modelling the covid-19 mortality rate with a new versatile modification of the log-logistic distribution. Comput. Intell. Neurosci. 2021, 1–14 (2021).

18. Chen, Y., Li, Y., & Zhao, T. Cause analysis on eastward movement of southwest china vortex and its induced heavy rainfall in South China. Adv. Meteorol. 2, 1–22 (2015).

19. Mohamed, R. A. H., Tolba, A. H., Almetwally, E. M., & Ramadan, D. A. Inference of reliability analysis for type ii half logistic Weibull distribution with application of bladder cancer. Axioms. 11(8), 1–19 (2022).

20. Zhang, Z. & Gui, W. Statistical inference of reliability of generalized Rayleigh distribution under progressively type-II censoring. J. Comput. Appl. Math. 361, 295–312 (2019).

21. Almongy, H. M., Almetwally, E. M., Aljohani, H. M., Alghamdi, A. S. & Hafez, E. A new extended Rayleigh distribution with applications of covid-19 data. Res. Phys. 23, 104012 (2021).

22. Rivet, B., Girin, L. & Jutten, C. Log-Rayleigh distribution: A simple and efficient statistical representation of log-spectral coefficients. IEEE Trans. Audio Speech Lang. Process. 15(3), 796–802 (2007).

23. Cordeiro, G. M., Cristino, C. T., Hashimoto, E. M. & Ortega, E. M. The beta generalized Rayleigh distribution with applications to lifetime data. Stat. Pap. 54(1), 133–161 (2013).

24. Merovci, F. & Elbatal, I. Weibull Rayleigh distribution: Theory and applications. Appl. Math. Inf. Sci. 9(5), 1–11 (2015).

25. Madi, M. & Raqab, M. Bayesian analysis for the exponentiated Rayleigh distribution. Metron Int. J. Stat. 67, 269–288 (2009).

26. Rosaiah, K., & Kantam, R. Acceptance sampling based on the inverse Rayleigh distribution. Econ. Qual. Cont. 2, 277–286 (2005).

27. Fatima, K. & Ahmad, S. Weighted inverse Rayleigh distribution. Int. J. Stat. Syst. 12(1), 119–137 (2017).

28. Merovci, F. Transmuted Rayleigh distribution. Aust. J. Stat. 42(1), 21–31 (2013).

29. Shokr, E. M., El-Sagheer, R. M., Khder, M. & El-Desouky, B. S. Inferences for two Weibull Frechet populations under joint progressive type-II censoring with applications in engineering chemistry. Appl. Math. 16(1), 73–92 (2022).

30. Abushal, T. A., Kumar, J., Muse, A. H., & Tolba, A. H. Estimation for Akshaya failure model with competing risks under progressive censoring scheme with analyzing of thymic lymphoma of mice application. Complexity 2022, 1–27 (2022).

31. EL-Sagheer, R. M., & Hasaballah, M. M. Inference of process capability index for 3-burr-XII distribution based on progressive type-II censoring. Int. J. Math. Sci. 2020, 1–13 (2020).

32. Parsi, S. & Bairamov, I. Expected values of the number of failures for two populations under joint type-II progressive censoring. Comput. Stat. Data Anal. 53(10), 3560–3570 (2009).

33. Metropolis, N., Rosenbluth, A. W., Rosenbluth, M. N., Teller, A. H. & Teller, E. Equation of state calculations by fast computing machines. J. Chem. Phys. 21(6), 1087–1092 (1953).

34. Proschan, F. Theoretical explanation of observed decreasing failure rate. Technometrics 5(3), 375–383 (1963).

Contributions

All authors wrote the manuscript. Dr. Ahlam and Dr. Dina wrote the MCMC program. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tolba, A.H., Abushal, T.A. & Ramadan, D.A. Statistical inference with joint progressive censoring for two populations using power Rayleigh lifetime distribution. Sci Rep 13, 3832 (2023). https://doi.org/10.1038/s41598-023-30392-7
