Abstract

The analysis of the relationship between time and cost is a crucial aspect of construction project management. Various optimization techniques have been developed to solve time–cost trade-off problems. A hybrid multi-verse optimizer model (hDMVO) is introduced in this study, which combines the multi-verse optimizer (MVO) and the sine cosine algorithm (SCA) to address the discrete time–cost trade-off problem (DTCTP). The algorithm's optimality is evaluated by using 23 well-known benchmark test functions. The results demonstrate that hDMVO is competitive with MVO, SCA, the dragonfly algorithm and ant lion optimization. The performance of hDMVO is evaluated using four benchmark test problems of DTCTP, including two medium-scale instances (63 activities) and two large-scale instances (630 activities). The results indicate that hDMVO can provide superior solutions in the time–cost optimization of large-scale and complex projects compared to previous algorithms.

Similar content being viewed by others

Introduction

In project management, optimization is a highly useful tool to satisfy desired objectives under specific constraints. The productivity of different components of a project can be increased by optimization. The importance of optimization in a construction project has been emphasized for decades as it is used to find the ideal plan and schedule for completing a project. Cost optimization, time optimization, and Pareto front are three common forms of time–cost trade-off problems. The objective of the cost optimization problem is to minimize the total cost under specific conditions, including project implementation time and penalty costs for delays. Meanwhile, the time optimization problem is aimed at choosing alternative solutions to shorten the project implementation time while ensuring that the project cost does not exceed the revenue on the early operation of the project. The Pareto front is a multi-objective optimization problem to simultaneously optimize both project cost and time1.

Mirjalili, Mirjalili2 proposed a multi-verse optimizer (MVO) algorithm inspired by the Big Bang theory to satisfy the need for solving single-and multi-objective optimization problems. For result assessment, MVO is compared with other metaheuristic algorithms, such as particle swarm optimization (PSO), Genetic Algorithm (GA), Ant colony optimization (ACO), etc. The results show that the MVO algorithm can provide competitive, even superior results than those of other algorithms in most tested optimization problems. However, MVO has issues in balancing the exploration and exploitation mechanism of the search area and limitations in the search area exploitation during fast convergence, thus resulting in local optimization3.

The Sine Cosine Algorithm (SCA)4 was developed for focusing on the exploration and exploitation of the search space during optimization. The results of the test problems show that SCA can explore different regions of the search space, avoid local optimization, converge towards global optimization, and effectively exploit the promising region of the search space during optimization. In addition, the study shows that SCA converges significantly faster than PSO, GA, ACO, etc. SCA has been utilized to address optimization challenges in diverse domains since 20165. Like MVO, SCA has limitations. Specifically, its search area exploitation mechanism is not clearly expressed; therefore, it easily encounters fast convergence6, which results in local optimization.

Two algorithms with opposite advantages and disadvantages motivated us to develop a hybrid algorithm between MVO and SCA for optimal exploration and exploitation of the search area based on the strengths of each algorithm to achieve a balance between the two mechanisms. The hDMVO algorithm was developed by preserving MVO's mechanisms of white and black holes to ensure good exploration of the search area by MVO. Concurrently, good search area exploitation by the algorithm is guaranteed by SCA through the fact that the value closest to the global optimum is stored in a variable as the target and is never lost during the optimization. Therefore, hDMVO will achieve a reasonable balance between the exploration and the exploitation phases, which ensures that the algorithm can achieve global optimization and become an appropriate metaheuristic method for solving the DTCTP.

The resolution of large-scale DTCTPs is a crucial aspect in the management of any construction project. Despite the availability of several existing methods, they are not fully equipped to solve large-scale DTCTPs. Therefore, a hybrid multi-verse optimizer model (hDMVO) was developed by combining the MVO and the SCA to provide efficient solutions for medium- and large-scale DTCTPs and other optimization problems that can be applied in actual construction projects. This model also significantly enhances the decision-making ability of decision-makers.

The rest of this paper is organized as follows. Section "Literature review" summarizes the literature on the time–cost trade-off problems. Section "Model development" outlines the development of our hybrid multi-verse optimizer model. Section "Computational experiments" presents the results from the validation and application of our model. Finally, Sections "Conclusion" and "Recommendations for future work" conclude the study and outline future research directions.

Literature review

The stochastic optimization method is widely used in many fields of study7,8, which develops meta-heuristic techniques. Some popular meta-heuristic methods are inspired by animals in nature. For example, ant lion optimization (ALO) algorithm is modeled after the hunting behavior of antlions in nature. The Dragonfly algorithm (DA) is based on the static and dynamic swarming behaviors observed in dragonflies9. Africa Wild Dog Optimization Algorithm (AWDO) originates from the hunting mechanism of Africa wild dogs in nature10. Meanwhile, Genetic Algorithm (GA) is inspired by evolutionary principles, such as heredity, mutation, natural selection, and crossover11.

The development of new algorithms or improvement of current algorithms has recently attracted immense interest from researchers, which is related to the No Free Lunch (NFL) theorem12. Evidently, the NFL has enabled researchers to improve and adapt current algorithms for solving different problems or propose new algorithms to provide competitive results against current algorithms. There are a significant number of developed hybrid metaheuristic algorithms, including the ant colony system-based decision support system (ACS-SGPU)13, dragonfly algorithm–particle swarm optimization model14, quantum-based sine cosine algorithm15, the improved sine–cosine algorithm based on orthogonal parallel information16, the hybrid sine cosine algorithm with multi-orthogonal search strategy17.

The time–cost trade-off is extended to the discrete version, including various realistic assumptions and solved by the exact, heuristic, and metaheuristic methods. PSO and GA are metaheuristic methods commonly used in the DTCTP. Bettemir18 found that among eight metaheuristic methods, including a sole genetic algorithm, four hybrid genetic algorithms, PSO, ant colony optimization, and electromagnetic scatter search, PSO was one of the leading algorithms together with the hybrid genetic algorithm with quantum annealing for the large-scale cost optimization. Zhang and Xing19 proposed an algorithm combining PSO and fuzzy sets theory to solve the fuzzy time–cost–quality trade-off problem. Aminbakhsh and Sonmez20 developed the discrete particle swarm optimization method to solve the large-size time–cost trade-off problem. Aminbakhsh and Sonmez21 used Pareto front particle swarm optimizer (PFPSO) to simultaneously optimize the time and cost of large-scale projects. Sonmez and Bettemir22 presented a hybrid strategy based on GAs, simulated annealing, and quantum simulated annealing techniques for the cost optimization problem. Zhang et al.23 proposed a GA for the DTCTP in repetitive projects. Naseri and Ghasbeh24 used GA for the time–cost trade off analysis to compensate for project delays. Network analysis algorithm is also metaheuristic techniques used to solve the DTCTP25. Son and Khoi26 presented a slime mold algorithm model to solving time–cost–quality trade-off problem.

Despite their wide applications in solving the DTCTP, the metaheuristic techniques have several limitations. Therefore, hybrid metaheuristic methods are being developed and widely used in the DTCTP. An adaptive-hybrid genetic algorithm was proposed by Zheng27 for time–cost–quality trade-off problems. Said and Haouari28 developed a model wherein the simulation–optimization strategy and the mixed-integer programming formulation were used to solve the DTCTP. Tran, Luong-Duc29 presented an opposition multiple objective symbiotic organisms search (OMOSOS) model for time, cost, quality, and work continuity trade-off in repetitive projects. Eirgash et al.30 proposed a multi-objective teaching–learning-based optimization algorithm integrated with a nondominated sorting concept (NDS–TLBO), which is successfully applied to optimize the medium- to large-scale projects. A hybrid GALP algorithm combined with GA and linear programming (LP) was proposed by Alavipour and Arditi31 for time–cost tradeoff analysis. Albayrak32 developed an algorithm combining PSO and GA to solve the time–cost trade-off problem for resource-constrained construction projects. A population-based metaheuristics approach, nondominated sorting genetic algorithm III (NSGA III) was developed by Sharma and Trivedi33 to ensure the quality and safety in time–cost trade-off optimization. Li et al.34 presented an epsilon-constraint method-based genetic algorithm for uncertainty multimode time–cost–robustness trade-off problem.

This paper presents a hybrid multi-verse optimizer (hDMVO) model based on MVO and SCA, which can provide high-quality solutions for large-scale discrete time–cost trade-off optimization problems.

Model development

Discrete time–cost trade-off problem

The common objective of discrete time–cost tradeoff problem (DTCTP) is to minimize the total direct and indirect costs and such costs can be formulated as follows35:

subject to:

where C is the project cost; dcjk is the direct cost of mode k for activity j; xjk is a 0–1 variable which is 1 if mode k is selected for executing activity j, and 0 otherwise; ic is the daily indirect cost; D is the project duration; djk is the duration of mode k for activity j; Stj is the start time for activity j; and Scj is the set of immediate successors for j.

Hybrid multi-verse optimizer model for DTCTP

Multi-verse optimizer—MVO

The MVO algorithm is inspired by concepts which theoretically exist in astronomy, including white holes, black holes, and worm holes. White holes are the elements which form the universes and have been unobservable until now. Meanwhile, black holes are observable and characterized by a giant gravitational force which attracts all surrounding objects. The last element can exchange objects between different universes or different parts of a universe. In the MVO, the above three elements are mathematically modeled to develop an optimal method, simulate the teleportation and exchange of objects between universes through white/black and worm hole tunnels. In addition, the idea of the inflation of the universe is also applied to the MVO based on the inflation rate.

The model of the MVO algorithm is shown in Fig. 1. In this figure, the universe with a higher inflation rate will have a white hole, while a universe with a lower inflation rate will have a black hole. The objects will then be transferred from the white holes of the source universe to the black holes of the target universe. In order to improve the overall inflation rate of single universes, an assumption was made that the universes with high inflation rate would be more likely to have white holes. In contrast, the universes with low inflation rate are more likely to have black holes. In Fig. 1, the white points represent celestial bodies travelling through the worm holes. It can be observed that worm holes stochastically change celestial bodies regardless of their inflation rates.

The roulette wheel mechanism will be used. (Eq. 6) to mathematically model white or black hole tunnels and exchange celestial objects between universes. When optimization problems are solved with the maximized objective function, –NI will be changed into NI. In each iteration, universes will be rearranged based on their inflation rate (fitness value), and by the roulette wheel mechanism, one universe will be selected in the occurrence of white hole, assume that:

where d is the number of parameters (variables) and n is the number of universes (candidate solutions):

where \(x_{i}^{j}\) indicates the jth parameter of ith universe, Ui shows the ith universe, NI(Ui) is normalized inflation rate of the ith universe, r1 is a random number in [0, 1], and \({x}_{k}^{j}\) indicates the jth parameter of kth universe selected by a roulette wheel selection mechanism.

With the above-mentioned mechanism, universes keep the objects exchanged without interference. For accurate determination of the diversity of universes and exploitation, each universe has a wormhole to stochastically transport its objects through space. In order to provide local changes for each universe and improve inflation rate by using wormholes, worm hole tunes are assumed to always be established between a universe and a best universe formed so far. This mechanism is presented as follows:

where Xj indicates the jth parameter of best universe formed so far, TDR is a coefficient, WEP is another coefficient, lbj shows the lower bound of jth variable, ubj is the upper bound of jth variable, \({x}_{i}^{j}\) indicates the jth parameter of ith universe, and r2, r3, r4 are random numbers in [0, 1].

Two main coefficients, namely the wormhole existence probability (WEP) and travelling distance rate (TDR) can be seen in Eq. (7). The coefficient WEP was used to determine the wormhole existence probability in the universe. Such coefficient will linearly increase over the iterations (Eq. 8).

where the min variable is the minimum value, the max variable is the maximum value, l presents the number of the current iteration, and L presents the termination criteria (the maximum number of iterations).

TDR is a factor to determine the distance rate (variation) by which an object can be displaced by a wormhole around the best universe formed so far. (Eq. 9). In contrast to WEP, TDR decreases over iterations for more precise exploitation or local search around the best universe formed so far (Fig. 2).

where p presents the exploitation rate through the iterations. The larger p, the earlier and more precise exploitation/local search.

In the MVO algorithm, the optimization process starts with generating a set of random universes. At each iteration, objects in universes with higher inflation rates tend to travel to universes with lower inflation rates through white or black holes. Meanwhile, every universe has to face random processes of celestial bodies through wormholes to reach the best universe. This process is repeated until the termination criteria are satisfied (such as a predetermined maximum number of iterations).

Sine cosine algorithm-SCA

Stochastic population-based techniques have in common is to divide the optimization process into two phases: exploration and exploitation36. In the exploration phase, the optimization algorithm will abruptly combine solutions with a high random rate to find the promising region of the search space. However, in the exploitation phase, there will be gradual changes in the stochastic solutions, and the stochastic variations will be significantly less than those in the exploration phase.

In SCA, the mathematical equations for updating positions are given for both phases, see Eqs. (10) and (11):

where \(X_{j}^{t}\) is the position of the current solution in ith dimension at tth iteration, α1, α2 and α3 are random numbers, \(D_{j}^{t}\) is position of the destination point in ith dimension, and || indicates the absolute value.

The above two formulas are combined into a general formula as follows:

where α4 is a random number in [0,1].

In Eq. (12), it can be seen that SCA has 4 main parameters: α1, α2, α3, and α4. α1 defines the movement direction, α2 determines how far the movement should be towards or outwards the destination, α3 denotes random weights for destination. Finally, the parameter α4 switches between the sine and cosine components in Eq. (12).

A general model in Fig. 3 shows the effectiveness of the sine and cosine functions in the range [− 2, 2]. This figure shows how the range of sine and cosine changes in order to update the location of a response. Randomness is also achieved by determining a random number for α2 in [0, 2π] (Eq. (12)). Therefore, this mechanism ensures the exploration of the search space.

In each iteration, the range of Sine and Cosine functions in Eqs. (10)–(12) will be changed to balance the exploitation and exploration phases in order to find the promising regions of the search space and finally achieve the global optimization by Eq. (13):

where v is a constant, t is the current iteration and T is the maximum number of iterations. Figure 4 shows the reduction in the range of the sine and cosine functions over the course of iterations.

Hybrid multi-verse optimizer model for DTCTP

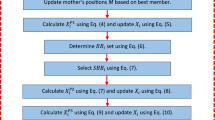

By taking advantage of SCA and MVO, the hDMVO is built to change the MVO's exploitation mechanism by the SCA's exploitation mechanism while preserving the MVO’s mechanisms of roulette wheel selection Eq. (14), thereby improving the hDMVO's search area exploration and exploitation.

where \({x}_{i}^{j}\) indicates the jth parameter of ith universe, Ui shows the ith universe, NI(Ui) is normalized inflation rate of the ith universe, δ1 is a random number in [0, 1], and \({x}_{k}^{j}\) indicates the jth parameter of kth universe selected by a roulette wheel selection mechanism.

A new formula which combines two algorithms MVO and SCA will be developed from Eq. (9) and Eq. (12) as follows:

where Xj indicates the jth parameter of best universe formed so far. TDR is wormhole existence probability was calculated by Eq. (8) with min = 0.2 and max = 3. WEP is travelling distance rate was calculated by Eq. (9) with p = 10. lbj shows the lower bound of jth variable, ubj is the upper bound of jth variable, \({x}_{i}^{j}\) indicates the jth parameter of ith universe, and δ2, δ3, δ4 are random numbers in [0, 1]. δ5 are also random numbers in [0, 2π]. The parameter δ5 defines how far the movement should be towards or outwards the destination. The pseudo-code and flowchart of our hDMVO method is given in Figs. 5 and 6. The set of parameters that are summarized in Table 1 provided an adequate combination for the hDMVO, MVO and SCA.

The complexity of the hDMVO algorithm is influenced by various factors such as the number of activities, schedules, iterations, roulette wheel selection mechanism, and sorting mechanism. The roulette wheel selection method, which is applied for every activity in each solution over the course of iterations, has a complexity of O(log N). The sorting of solutions is carried out at each iteration by utilizing the Quicksort algorithm, which has a complexity of O(N^2) in the worst-case scenario. As a result, the overall computational complexity is:

where n is the number of activities, N is the number of schedules, and T is the maximum iterations.

In the optimization process, the solution a is evaluated to be better than solution b if:

In case the project cost is equal (Ca = Cb), The option with the shortest completion time is considered the best one:

In case both options a and b have the same project cost and project duration, the optimal solution will be stochastically selected.

The exploration and exploitation of the search space will be significantly improved thanks to the hDMVO algorithm. Such hybrid algorithm not only searches for the optimal solution from the sets of solutions stochastically generated at the initial phase, but can also exploit the space between solutions through each iteration to find new promising regions of the search space.

Computational experiments

Convergence behaviours

To assess the optimization capabilities of hDMVO, a comprehensive analysis was conducted using twenty-three well-known benchmark test functions. Comparisons were made to the results obtained from four other optimization algorithms, including MVO, SCA, DA, and ALO. These benchmark functions are grouped into three categories: unimodal, multimodal, and fixed-dimension multi-modal functions, as detailed in Tables 2, 3 and 4.

In order to ensure a fair comparison, all of the algorithms were executed 30 times for each benchmark function. Statistical results, including the mean value (ave) and standard deviation (std), were collected from 30 runs of the algorithm. It is important to note that 30 search agents and a maximum of 500 iterations were utilized in the analysis. The statistical results of the hDMVO algorithm, as well as those of other comparative algorithms (DA, ALO, SCA, and MVO), can be found in Tables 5, 6 and 7.

It should be noted that unimodal functions possess a single global optimum, making them an appropriate choice for evaluating the exploitation mechanism. An examination of the results presented in Table 5 reveals that hDMVO exhibited superior exploitability when compared to other swarm-based optimization algorithms (DA, ALO, SCA, and MVO) in the unimodal test functions, as demonstrated by its performance in 7 out of 7 for MVO and DA, 5 out of 7 for ALO and 4 out of 7 for SCA.

Unlike unimodal functions, multimodal benchmark functions possess a global optimization point in addition to many local optima. Therefore, multimodal test functions are well-suited for evaluating the exploration capabilities of hDMVO. The results for multimodal test functions (Table 6) show that hDMVO performs better than MVO, DA and ALO, and is comparable to SCA (6 out of 6 for MVO, ALO and DA, 5 out of 6 for SCA). Therefore, the ability of hDMVO to effectively avoid local optima and explore the search space has been demonstrated through its performance.

The composite test functions, as the name implies, are a combination of various unimodal and multimodal test functions, which include variations such as rotation, shifting, and bias. These composite test functions have a similar real search space with multiple local optima, which is beneficial for testing the balance between exploration and exploitation of the search space. The results of the hDMVO algorithm's performance with composite test functions (F14–F23) are presented in Table 7. The results indicate that the hDMVO outperforms other population-based optimization algorithms in terms of average values, thus demonstrating its ability to effectively balance search space exploration and exploitation.

The performance of the hDMVO algorithm in terms of convergence, in comparison to other state-of-the-art algorithms (DA, ALO, SCA, and MVO), is depicted in Figs. 7, 8 and 9. Through the use of 10 agents and 150 iterations, the study generated convergence curves which demonstrate that hDMVO has a higher likelihood of reaching optimal convergence on a majority of the benchmark test functions.

Medium-scale instances of DTCTP

The medium-scale instances include two 63-activity problems wherein each activity has maximum five modes22. The network diagram of this problem is shown in Fig. 10. The time–cost alternatives for these instances are listed in Table 8. The medium-scale instances, including 1.37 × 1042 possible solutions will be tested at two different levels of indirect costs. The indirect cost in the first problem (63a) is 2300USD/day, while that in the second problem (63b) is 3500USD/day. The optimal solutions for these two problems are 5,421,120USD and 6,176,170USD, respectively. The hDMVO algorithm has been implemented in Python and is compatible with Visual Studio Code. The testing of all instances of DTCTP were performed on a personal computer featuring an Intel Core i7-8750H 2.20 GHz CPU and 8.0 GB of RAM.

Activity on the node (AoN) representation of the 63-activity network (Aminbakhsh and Sonmez20).

hDMVO obtains exceptional results in medium-scale experimental problems, wherein the best values among the ten runs are 5,444,670USD for problems 63a (Table 9) and 6,211,720USD for problems 63b (Table 10). The distribution of percentage deviations for hDMVO, MVO, and SCA are illustrated in Figs. 11 and 12, using ten trials of the 63a and 63b DTCTP problems. The figures demonstrate that hDMVO has a smaller deviation percentage compared to MVO and SCA, indicating its ability to effectively balance exploration and exploitation when solving medium-scale DTCTP problems.

The average percent deviation (APD) of hDMVO from the global optimal for problems 63a and 63b is summarized in Table 11. The results show that hDMVO outperforms ACO, GA, and electromagnetism mechanism (EMS)18, sole genetic algorithm (GA), hybrid genetic algorithm (HA)22, modified adaptive weight approach with genetic algorithms (MAWA-GA), modified adaptive weight approach with particle swarm optimization (MAWA-PSO) and modified adaptive weight approach with teaching learning based optimization (MAWA-TLBO)37. hDMVO also outperforms the two original algorithms named MVO and SCA when its ADPs are 0.61% and 0.71% for problems 63a and 63b, respectively, within 50,000 schedules. As evident from Table 11, hDMVO outperforms both native algorithms (MVO and SCA) in medium-scale instances. By searching only 50,000 solutions out of 1.37 × 1042 potential solutions, hDMVO can identify high-quality solutions that were highly close to the optimal value.

Large-scale instances of DTCTP

The large-scale instances include two 630-activity problems, wherein each activity has a maximum of five modes, including 2.38 × 10421 possible solutions22. These cases represent the size of an actual construction project. The indirect costs for large-scale instances (630a and 630b) are similar to those of medium-scale instances (2300USD/day and 3500USD/day for 63a and 63b, respectively).

The hDMVO algorithm also shows its effectiveness in large-scale experimental problems; the best values for ten runs in problems 630a and 630b are 54,816,950USD (Table 12) and 62,505,580USD (Table 13), respectively. Figures 13 and 14 show the boxplots of ten percentage deviations of hDMVO, MVO and SCA by testing problems 630a and 630b. From Figs. 13 and 14, the percentage deviations of hDMVO are much smaller than those of MVO and SCA. The results thus demonstrated the stability of hDMVO when solving the large-scale DTCTP problem.

For large-scale instances, hDMVO provides superior results (Table 14) against GA, genetic algorithm with simulated annealing (GASA), hybrid genetic algorithm with quantum simulated annealing (HGAQSA), genetic memetic algorithm with simulated annealing (GMASA), genetic algorithm with simulated annealing and variable neighborhood search (GASAVNS), PSO, electromagnetic scatter search (ESS)18, and GA and HA22. hDMVO completely outperforms SCA and performs slightly better than MVO. hDMVO also yields better results than NDS–TLBO30 in problem 630b; for problem 630a, hDMVO achieves APD of 1.27% when searching for 50,000 solutions, while NDS–TLBO achieves APD of 1.1% when searching for 250,000 solutions. hDMVO's ADP values for problems 630a and 630b are 1.27% and 1.28%, respectively (Table 8), which were significantly superior to those of the two original algorithms, MVO and SCA. By just searching for 50,000 solutions out of 2.38 × 10421 potential solutions, hDMVO can achieve high-quality solutions for large-scale instances. These results indicate that hDMVO has overcome the disadvantages of MVO and SCA in search space exploration and exploitation to achieve the optimal value.

Conclusion

This study presents a combined model of MVO and SCA for global optimization. The combination's objective is to make use of the exploration of MVO and the search space exploitation of SCA to achieve an effective balance between the two phases during optimization. hDMVO is developed to combine the search space exploitation mechanism of MVO and SCA while preserving MVO's mechanisms of roulette wheel selection, thereby improving hDMVO's search exploration and exploitation. hDMVO was comprehensively evaluated by twenty-three benchmark optimization problems. The results indicate that hDMVO is more likely to achieve global optimization compared with SCA and MVO. In this study, hDMVO is proposed to solve the discrete time–cost trade-off problem in construction projects. The results of the computational experiments reveal that hDMVO can achieve high-quality solutions for medium- and large-scale DTCTP and can be used to optimize the cost–time problems for actual projects. With the obtained results, hDMVO is seen as an appropriate metaheuristic method for solving the DTCTP problem as well as other optimization problems.

Recommendations for future work

In this study, the application of hDMVO is limited to solving DTCTP problems with the finish-to-start relationship. However, in actual construction projects, DTCTP problems in construction projects often include the start-to-start, finish-to-finish, and start-to-finish relationships. Therefore, in future studies, hDMVO will be used to solve DTCTP problems with complicated relationships simultaneously and on a large scale to obtain more comprehensive solutions for project management. The hDMVO model has been shown to effectively balance exploration and exploitation when compared to other state-of-the-art swarm-based optimization algorithms (DA, ALO, SCA, and MVO). Additionally, the hDMVO model also demonstrates competitive performance in medium- and large-scale DTCTPs. However, limitations in local optima avoidance are also clearly demonstrated by hDMVO when applied to large-scale problems. To overcome these limitations, future research will involve the development of a combination model that incorporates hDMVO with other techniques such as modified adaptive weight approach and opposition-based learning, to enhance its performance in solving optimization problems in the construction industry and other technical fields.

Data availability

Some or all data, models, or code that support the findings of this study are available from the corresponding author upon reasonable request.

References

Vanhoucke, M. & Debels, D. The discrete time/cost trade-off problem: Extensions and heuristic procedures. J. Sched. 10(4), 311–326 (2007).

Mirjalili, S., Mirjalili, S. M. & Hatamlou, A. Multi-verse optimizer: A nature-inspired algorithm for global optimization. Neural Comput. Appl. 27(2), 495–513 (2016).

Laith, A. Multi-verse optimizer algorithm: A comprehensive survey of its results, variants, and applications. Neural Comput. Appl. 32(16), 12381–12401 (2020).

Mirjalili, S. SCA: a sine cosine algorithm for solving optimization problems. Knowl. Based Syst. 96, 120–133 (2016).

Rizk-Allah, R. M. & Hassanien, A. E. A comprehensive survey on the sine–cosine optimization algorithm. Artif. Intell. Rev. https://doi.org/10.1007/s10462-022-10277-3 (2022).

Abualigah, L. & Diabat, A. Advances in sine cosine algorithm: A comprehensive survey. Artif. Intell. Rev. 54(4), 2567–2608 (2021).

Parejo, J. A. et al. Metaheuristic optimization frameworks: A survey and benchmarking. Soft. Comput. 16(3), 527–561 (2012).

Zhou, A. et al. Multiobjective evolutionary algorithms: A survey of the state of the art. Swarm Evol. Comput. 1(1), 32–49 (2011).

Mirjalili, S. Dragonfly algorithm: A new meta-heuristic optimization technique for solving single-objective, discrete, and multi-objective problems. Neural Comput. Appl. 27(4), 1053–1073 (2016).

Son, P. V. H. & Khoi, T. T. Development of Africa Wild Dog optimization algorithm for optimize freight coordination for decreasing greenhouse gases. In ICSCEA 2019 (eds Reddy, J. N. et al.) 881–889 (Springer, 2020).

Holland, J. H. Adaptation in Natural and Artificial Systems: An Introductory Analysis with Applications to Biology, Control, and Artificial Intelligence (MIT Press, 1992).

Wolpert, D. H. & Macready, W. G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1(1), 67–82 (1997).

Zhang, Y. & Thomas Ng, S. An ant colony system based decision support system for construction time-cost optimization. J. Civ. Eng. Manag. 18(4), 580–589 (2012).

Son, P. V. H., Duy, N. H. C. & Dat, P. T. Optimization of construction material cost through logistics planning model of dragonfly algorithm—Particle swarm optimization. KSCE J. Civ. Eng. 25(7), 2350–2359 (2021).

Rizk-Allah, R. M. A quantum-based sine cosine algorithm for solving general systems of nonlinear equations. Artif. Intell. Rev. 54(5), 3939–3990 (2021).

Rizk-Allah, R. M. An improved sine–cosine algorithm based on orthogonal parallel information for global optimization. Soft. Comput. 23(16), 7135–7161 (2019).

Rizk-Allah, R. M. Hybridizing sine cosine algorithm with multi-orthogonal search strategy for engineering design problems. J. Comput. Des. Eng. 5(2), 249–273 (2018).

Bettemir, Ö. H. Optimization of Time-Cost-Resource Trade-Off Problems in Project Scheduling Using Meta-Heuristic Algorithms (2009).

Zhang, H. & Xing, F. Fuzzy-multi-objective particle swarm optimization for time–cost–quality tradeoff in construction. Autom. Constr. 19(8), 1067–1075 (2010).

Aminbakhsh, S. & Sonmez, R. Discrete particle swarm optimization method for the large-scale discrete time–cost trade-off problem. Expert Syst. Appl. 51, 177–185 (2016).

Aminbakhsh, S. & Sonmez, R. Pareto front particle swarm optimizer for discrete time-cost trade-off problem. J. Comput. Civ. Eng. 31(1), 04016040 (2017).

Sonmez, R. & Bettemir, Ö. H. A hybrid genetic algorithm for the discrete time–cost trade-off problem. Expert Syst. Appl. 39(13), 11428–11434 (2012).

Zhang, L., Zou, X. & Qi, J. A trade-off between time and cost in scheduling repetitive construction projects. J. Ind. Manag. Optim. 11(4), 1423 (2015).

Naseri, H. & Ghasbeh, M. A. E. Time-cost trade off to compensate delay of project using genetic algorithm and linear programming. Int. J. Innov. Manag. Technol. 9(6), 285–290 (2018).

Bettemir, Ö. H. & Talat Birgönül, M. Network analysis algorithm for the solution of discrete time-cost trade-off problem. KSCE J. Civ. Eng. 21(4), 1047–1058 (2017).

Son, P. V. H. & Khoi, L. N. Q. Utilizing artificial intelligence to solving time–cost–quality trade-off problem. Sci. Rep. 12(1), 20112 (2022).

Zheng, H. Multi-mode discrete time-cost-environment trade-off problem of construction systems for large-scale hydroelectric projects. in Proceedings of the Ninth International Conference on Management Science and Engineering Management (Springer, 2015).

Said, S. S. & Haouari, M. A hybrid simulation-optimization approach for the robust discrete time/cost trade-off Problem. Appl. Math. Comput. 259, 628–636 (2015).

Tran, D.-H. et al. Opposition multiple objective symbiotic organisms search (OMOSOS) for time, cost, quality and work continuity tradeoff in repetitive projects. J. Comput. Des. Eng. 5(2), 160–172 (2018).

Eirgash, M. A., Toğan, V. & Dede, T. A multi-objective decision making model based on TLBO for the time-cost trade-off problems. Struct. Eng. Mech. 71(2), 139–151 (2019).

Alavipour, S. R. & Arditi, D. Time-cost tradeoff analysis with minimized project financing cost. Autom. Constr. 98, 110–121 (2019).

Albayrak, G. Novel hybrid method in time–cost trade-off for resource-constrained construction projects. Iran. J. Sci. Technol. Trans. Civ. Eng. 44(4), 1295–1307 (2020).

Sharma, K. & Trivedi, M. K. Latin hypercube sampling-based NSGA-III optimization model for multimode resource constrained time–cost–quality–safety trade-off in construction projects. Int. J. Constr. Manag. 22(16), 3158–3168 (2020).

Li, X. et al. Multimode time-cost-robustness trade-off project scheduling problem under uncertainty. J. Comb. Optim. 43(5), 1173–1202 (2020).

De, P. et al. The discrete time-cost tradeoff problem revisited. Eur. J. Oper. Res. 81(2), 225–238 (1995).

Črepinšek, M., Liu, S.-H. & Mernik, M. Exploration and exploitation in evolutionary algorithms: A survey. ACM Comput. Surv. (CSUR) 45(3), 1–33 (2013).

Toğan, V. & Eirgash, M. A. Time-cost trade-off optimization of construction projects using teaching learning based optimization. KSCE J. Civ. Eng. 23(1), 10–20 (2019).

Acknowledgements

We would like to thank Ho Chi Minh City University of Technology (HCMUT), VNU-HCM for the support of time and facilities for this study.

Funding

The authors whose names are listed immediately below certify that they have NO affiliations with or involvement in any organization or entity with any financial interest (such as honoraria; educational grants; participation in speakers’ bureaus; membership, employment, consultancies, stock ownership, or other equity interest; and expert testimony or patent-licensing arrangements), or non-financial interest (such as personal or professional relationships, affiliations, knowledge or beliefs) in the subject matter or materials discussed in this manuscript.

Author information

Authors and Affiliations

Contributions

Both P.V.H.S. and N.D.N.T. wrote all the main manuscript, prepared all the figures, tables and checked revision before submission.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Son, P.V.H., Nguyen Dang, N.T. Solving large-scale discrete time–cost trade-off problem using hybrid multi-verse optimizer model. Sci Rep 13, 1987 (2023). https://doi.org/10.1038/s41598-023-29050-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-29050-9

This article is cited by

-

Hybrid whale optimization algorithm for enhanced routing of limited capacity vehicles in supply chain management

Scientific Reports (2024)

-

Apply EZStrobe to simulate the finishing work for reducing construction process waste

Scientific Reports (2024)

-

Enhancing engineering optimization using hybrid sine cosine algorithm with Roulette wheel selection and opposition-based learning

Scientific Reports (2024)

-

Solving time cost optimization problem with adaptive multi-verse optimizer

OPSEARCH (2024)

-

Achieving improved performance in construction projects: advanced time and cost optimization framework

Evolutionary Intelligence (2024)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.