Abstract

The power spectrum of brain activity is composed of peaks at characteristic frequencies superimposed on a background that decays as a power law of the frequency, \(f^{-\beta }\), with an exponent \(\beta \) close to 1 (pink noise). This exponent is predicted to be connected with the exponent \(\gamma \) that describes the scaling of the average avalanche size with the avalanche duration. “Mean field” models of neural dynamics, including the simple branching model and the fully-connected stochastic Wilson–Cowan model, predict exponents \(\beta \) and \(\gamma \) equal or close to 2 at criticality (brown noise). Here we show that a 2D version of the stochastic Wilson–Cowan model, where neuron connections decay exponentially with the distance, is characterized by exponents \(\beta \) and \(\gamma \) markedly different from those of mean field, respectively around 1 and 1.3. The exponents \(\alpha \) and \(\tau \) of the avalanche size and duration distributions, equal to 1.5 and 2 in mean field, decrease respectively to \(1.29\pm 0.01\) and \(1.37\pm 0.01\). This suggests that the model in finite dimension may belong to a different universality class.

Introduction

In the last two decades, the hypothesis that the brain operates near a critical point has gained considerable experimental support. The first experiments pointing in this direction were done on organotypic cultures and acute slices of rat cortex1, where scale-free distributions of activity avalanches were found. Since then, the hypothesis has been confirmed in many systems in vitro and in vivo, from cortical activity of awake monkeys2 to the resting MEG of the human brain3. The distribution of avalanche sizes is found to scale as \(P(S)\sim S^{-\alpha }\), that of avalanche durations as \(P(T)\sim T^{-\tau }\), while the mean size of avalanches scales as \(\langle S\rangle \sim T^\gamma \) as a function of the duration4,5,6,7,8. A good indicator of criticality is believed to be the scaling relation \(\gamma =\frac{\tau -1}{\alpha -1}\), as originally predicted in the theory of crackling noise9,10, as well as the collapse of rescaled shapes of avalanches of different durations4,7,8. The simple branching model of avalanche propagation predicts exponents \(\alpha =3/2\), \(\tau =2\) and \(\gamma =2\), observed in some experimental realizations1,11 and in models of neural dynamics, including the fully-connected stochastic Wilson–Cowan model7. However, some experiments have found an exponent \(\gamma \) around 1.3, not compatible with the value 2 predicted by the branching process universality class, even when the scaling relation is experimentally satisfied. For instance, results on spike avalanches measured in the urethane-anesthetized rat cortex12, cultured cortical networks4, ex-vivo recordings of the turtle visual cortex5, and the somatosensory barrel cortex of the anesthetized rat13 have found \(\gamma \) around 1.3 with the scaling relation \(\gamma =\frac{\tau -1}{\alpha -1}\) satisfied.

Another important feature of neuronal dynamics is the power-law decay \(P(f)\sim f^{-\beta }\) of the power spectrum of EEG, MEG, resting state fMRI, and local field potential as a function of frequency14,15,16,17,18, once the peaks corresponding to characteristic frequencies of oscillations have been subtracted. The values of \(\beta \) are found to be between 1 and 1.3 in the EEG and MEG of healthy subjects16,17, while they are around 2 for epileptic patients19. On quite general grounds, the exponent \(\beta \) is predicted to be equal to the exponent \(\gamma \) of the relation \(\langle S\rangle \sim T^\gamma \)9. It seems therefore that there are at least two universality classes in brain dynamics: a “mean-field” class, represented by the branching model and the fully-connected Wilson–Cowan model and characterized by \(\alpha \simeq 3/2\) and \(\beta \simeq \gamma \simeq \tau \simeq 2\) (the equality is exact for the branching model), and another class characterized by lower values of the exponents, \(\beta \simeq \gamma \lesssim 1.3\), \(\alpha \simeq 1.3\), \(\tau \simeq 1.4\). The experimental measurement of \(\gamma \) around 1.3, not compatible with the value of the branching process, opened a debate on whether a different model, belonging to a different universality class12,20, is required to explain the critical brain data4,5,12,13, or whether different mechanisms, such as subsampling21, should be invoked to reconcile the data with the branching model.

In the present paper we study the 2D version of the stochastic Wilson–Cowan model7,22, where neurons are distributed uniformly on a 2D lattice and connections between them decay exponentially with the distance. We can tune the network topology from 2D to fully-connected by changing the ratio between the range \(\lambda \) of the exponential decay of the connections and the side L of the lattice. While in the fully-connected case the dynamics of the model is completely described by two variables, the fractions of active excitatory and inhibitory neurons, in the 2D case these fractions must be defined at each site of the lattice. As a consequence, while the fully-connected model is characterized by just two relaxation times, the 2D model has a whole spectrum of times, related to the different Fourier modes of the neural activity. A system size expansion shows that, if the number of neurons on each site of the lattice is large, the dynamical equations governing the evolution of the Fourier modes decouple, and each mode obeys the same equations as the fully-connected model, but with a different relaxation time.

Independently of the network topology, the model shows a critical point at a characteristic value of a parameter measuring the difference between the strength of excitatory and inhibitory connections. For values above the critical point, the model displays a self-sustained dynamics even in the absence of external input. At the critical point, one of the characteristic times diverges, related to the mode \({\varvec{k}}=0\) in spatially extended topologies, while the times of the other modes scale as \(|{\varvec{k}}|^{-2}\). This feature, together with the density of the wave numbers which scales as \(|{\varvec{k}}|\) in two dimensions, gives rise to a power spectrum that is proportional to \(f^{-1}\) (pink noise).

In the next section we introduce the model, then we study the power spectrum and relaxation functions of the firing rate in the linear case (large neuron density). We then look at the avalanche size and duration distributions, and show that at the critical point the distributions are scale free, with exponents depending on the topology of the system. Finally, we show that the exponents \(\beta \) and \(\gamma \) have approximately the same dependence on the inverse frequency and duration of avalanches, respectively, and in the 2D model tend respectively to 1 and 1.3 at low frequency and large durations.

Schematic representation of the 2D model. On each site of the lattice there are \(n_E\) excitatory and \(n_I\) inhibitory neurons. Connections between neurons depend on the type of neuron (excitatory or inhibitory) and on the distance measured in lattice spacings. Neurons on the same site have \(r_{ij}=0\).

The model

Let us consider a two-dimensional \(L\times L\) lattice, where on each site there are \(n_E=N_E/L^2\) excitatory and \(n_I=N_I/L^2\) inhibitory neurons, with connections depending on the distance \(r_{ij}\) measured in lattice spacings, as shown in the scheme in Fig. 1. Note that all pairs i and j of neurons belonging to the same site have distance \(r_{ij}=0\), so that inside one lattice site the network is fully-connected. Neurons are modeled as in22, namely they can be in two states, active and quiescent. The rate of transition from active to quiescent state is \(\alpha \) for all the neurons, while the rate from quiescent to active state is given by an activation function \(f(s_i)\) of the input \(s_i\) of the i-th neuron, given by

\[ s_i=\sum _{j}w_{ij}\,a_j+h_i, \tag{1} \]

where \(a_j=0,1\) if the j-th neuron is quiescent or active respectively, \(w_{ij}\) are the connections between neurons, and \(h_i\) is an external input. We consider the activation function

\[ f(s)=\begin{cases} \beta \tanh (s) & \text{if } s>0,\\ 0 & \text{if } s\le 0. \end{cases} \tag{2} \]

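In the following we set \(\alpha =0.1\) \(\hbox {ms}^{-1}\) and \(\beta =1\) \(\hbox {ms}^{-1}\). We study here a version of the model where the connections between neurons do not depend only on the type of presynaptic neuron, as in the fully-connected case, but depend also on the distance between neurons. Namely, the connection between neurons j (pre-synaptic) and i (post-synaptic) is given by

\[ w_{ij}=\begin{cases} \dfrac{{\tilde{w}}_{0,0}+{\tilde{w}}_{s,0}}{2{{\mathcal {N}}}_E}\,e^{-r_{ij}/\lambda } & \text{if } j\in E,\\ \dfrac{{\tilde{w}}_{0,0}-{\tilde{w}}_{s,0}}{2{{\mathcal {N}}}_I}\,e^{-r_{ij}/\lambda } & \text{if } j\in I, \end{cases} \tag{3} \]
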
where we have defined \({\tilde{w}}_{0,0}=\sum _{j}w_{ij}\) as the sum of all the connections incoming to one neuron, which we take to be the same for all neurons, \({\tilde{w}}_{s,0}=\sum _{j}|w_{ij}|\), and \(r_{ij}\) is the distance between neurons. The second subscript in \({\tilde{w}}_{0,0}\) and \({\tilde{w}}_{s,0}\) refers to the wave-number \({\varvec{k}}=0\), see Eq. (13). The normalization factors \({{\mathcal {N}}}_E\) and \({{\mathcal {N}}}_I\) are defined as \({{\mathcal {N}}}_E=\sum _{j\in E}e^{-r_{ij}/\lambda }\), \({{\mathcal {N}}}_I=\sum _{j\in I}e^{-r_{ij}/\lambda }\), where E and I are respectively the sets of all excitatory and inhibitory neurons. Note that, when \(\lambda \gg L\), we recover the fully-connected model, well studied in the past7,22,23. On the other hand, when \(L\gg \lambda \), the topology of the connections becomes effectively two-dimensional.
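A minimal sketch of the distance-dependent connection rule, in Python with NumPy. It takes the excitatory weight as \(({\tilde{w}}_{0,0}+{\tilde{w}}_{s,0})e^{-r/\lambda }/(2{{\mathcal {N}}}_E)\) and the inhibitory one as \(({\tilde{w}}_{0,0}-{\tilde{w}}_{s,0})e^{-r/\lambda }/(2{{\mathcal {N}}}_I)\), i.e. the fully-connected weights quoted below multiplied by the exponential decay. It further assumes (not fixed by the text) that \(r_{ij}\) is the Euclidean distance with the minimum-image convention on a periodic lattice, and it keeps the negligible \(r=0\) self-term. The function name `connection_kernels` is hypothetical. For a neuron at site (0, 0) it returns the weight of one presynaptic neuron of each type at every site, so that the incoming weights can be checked to sum to \({\tilde{w}}_{0,0}\) and their absolute values to \({\tilde{w}}_{s,0}\).

```python
import numpy as np


def connection_kernels(L, lam, w00, ws0, n_E, n_I):
    """Weights onto a neuron at site (0, 0) from ONE excitatory (w_E) and ONE
    inhibitory (w_I) presynaptic neuron located at each site of an L x L lattice.

    Assumptions of this sketch: periodic boundaries, Euclidean minimum-image
    distance, and the r = 0 self-term kept (negligible for large n_E, n_I).
    """
    d = np.arange(L)
    d = np.minimum(d, L - d)                 # minimum-image offset along one axis
    dx, dy = np.meshgrid(d, d, indexing="ij")
    r = np.hypot(dx, dy)                     # distance in lattice spacings
    decay = np.exp(-r / lam)                 # lam = np.inf gives the fully-connected limit
    # Normalisation N_E (N_I): sum of exp(-r/lam) over all E (I) neurons,
    # with n_E (n_I) neurons at the same distance on every site.
    norm_E = n_E * decay.sum()
    norm_I = n_I * decay.sum()
    w_E = (w00 + ws0) / (2.0 * norm_E) * decay
    w_I = (w00 - ws0) / (2.0 * norm_I) * decay
    return w_E, w_I


if __name__ == "__main__":
    n_E = n_I = 1e8
    w_E, w_I = connection_kernels(L=40, lam=1.0, w00=0.1, ws0=13.8, n_E=n_E, n_I=n_I)
    total = n_E * w_E.sum() + n_I * w_I.sum()                       # equals w00
    total_abs = n_E * np.abs(w_E).sum() + n_I * np.abs(w_I).sum()  # equals ws0
    print(total, total_abs)
```

Because both normalization factors contain the same decay sum, the balance between excitation and inhibition, and hence \({\tilde{w}}_{0,0}\), does not depend on \(\lambda \).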

We consider the same input \(h_i\equiv h\) for all neurons. As the connections \(w_{ij}\) depend only on the distance between neurons, and the input h is the same for different neurons, the system is translationally invariant. The fraction \(\Sigma \) of active neurons is a stochastic variable that at stationarity fluctuates around the fixed point value, given by the equation (see "Methods" section)

\[ \alpha \,\Sigma _0=(1-\Sigma _0)\,f(s_0), \tag{4} \]

with \(s_0={\tilde{w}}_{0,0}\Sigma _0+h\). Note that Eq. (4) does not depend on the chosen topology of the connections, fully-connected or sparse or depending on the distance, but only on the condition that the sum of incoming connections \({\tilde{w}}_{0,0}\) is the same for all neurons, because this makes the system translationally invariant and the fixed point activity \(\Sigma _0\) equal for all the lattice sites. In Ref.7 it was shown that there is a critical point at \(h=0\) and \({\tilde{w}}_{0,0}=\beta ^{-1}\alpha \). For \({\tilde{w}}_{0,0}\) larger than the critical value, an attractive fixed point with \(\Sigma _0>0\) exists even when the external input \(h\rightarrow 0\). In the case of the fully-connected model, the connections \(w_{ij}\) do not depend on the spatial position of neurons, but only on the functional type (excitatory or inhibitory) of the pre-synaptic and post-synaptic neuron. In Refs.7,22,23 a further simplification was made, namely that \(w_{ij}\) depends only on the type of the pre-synaptic neuron, and is given by \(w_{ij}=\frac{1}{2N_E}({\tilde{w}}_{0,0}+{\tilde{w}}_{s,0})\) if j is excitatory and \(w_{ij}=\frac{1}{2N_I}({\tilde{w}}_{0,0}-{\tilde{w}}_{s,0})\) if j is inhibitory. In this case, the temporal autocorrelation function of time-dependent variables, such as the fraction of active neurons or the firing rate, can be written in the limit of a large number of neurons as23

\[ \langle X(t)X(0)\rangle -X_0^2=A_{1}\,e^{-|t|/\tau _1}+A_{2}\,e^{-|t|/\tau _2}, \]

where X(t) is the variable considered, \(X_0\) is its average value in time, \(A_{1}\) and \(A_{2}\) are constant coefficients, and \(\tau _1\), \(\tau _2\) are the characteristic relaxation times. While \(\tau _2\) is always small, and lower than \(\alpha ^{-1}\), the time \(\tau _1\) diverges at the critical point7.

In the fully-connected case, the state of the system depends only on two variables \(\Sigma \) and \(\Delta \), that correspond to the sum and difference between the fractions of activated excitatory and inhibitory neurons; conversely for connections depending on the distance, the values \(\Sigma _{\varvec{r}}\) and \(\Delta _{\varvec{r}}\) on each site of the lattice are necessary to characterize the activity. They are defined as

where \(m_{\varvec{r}}\) and \(l_{\varvec{r}}\) are respectively the number of active excitatory and inhibitory neurons on the lattice site \({\varvec{r}}\). Equivalently, we can use the Fourier transforms \(\Sigma _{\varvec{k}}\) and \(\Delta _{\varvec{k}}\), where \({\varvec{k}}\)’s are \(L^2\) different wave vectors. As the system is translationally invariant, the fixed point is characterized by \(\Sigma _{\varvec{k}}=\Delta _{\varvec{k}}=0\) for \({\varvec{k}}\ne 0\). Moreover, for the wave vector \({\varvec{k}}=0\), at the fixed point \(\Delta _0=0\) (this is a consequence of the fact that connections do not depend on the post-synaptic neuron), while \(\Sigma _0\) obeys the same Eq. (4) of the fully-connected case. Therefore, for \({\tilde{w}}_{0,0}\le \beta ^{-1}\alpha \), the fixed point value \(\Sigma _0\) goes to zero when the external input \(h\rightarrow 0\), while for \({\tilde{w}}_{0,0}>\beta ^{-1}\alpha \) it remains finite even for \(h\rightarrow 0\).
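The fixed point can be found numerically to see the three regimes. This sketch assumes the stationarity condition \(\alpha \Sigma _0=(1-\Sigma _0)f(s_0)\) (deactivation balancing activation) with the activation function \(f(s)=\beta \tanh (s)\) for \(s>0\) and zero otherwise, the standard stochastic Wilson–Cowan choices; treat both forms as assumptions of the sketch. With \(\alpha =0.1\) and \(\beta =1\) \(\hbox {ms}^{-1}\) the critical coupling is \({\tilde{w}}_{0,0}=\alpha /\beta =0.1\). Bisection is used because, for \(h>0\), the residual is positive at \(\Sigma =0\) and equal to \(-\alpha \) at \(\Sigma =1\), so a root is bracketed; the function names are hypothetical.

```python
import math

ALPHA = 0.1  # deactivation rate alpha (1/ms), value used in the text
BETA = 1.0   # activation scale beta (1/ms), value used in the text


def activation(s, beta=BETA):
    """Assumed activation function: beta * tanh(s) for s > 0, zero otherwise."""
    return beta * math.tanh(s) if s > 0 else 0.0


def fixed_point(w00, h, alpha=ALPHA, beta=BETA, iters=200):
    """Solve alpha * S = (1 - S) * f(w00 * S + h) for S in (0, 1] by bisection.

    For h > 0 the residual is positive at S = 0 and equals -alpha at S = 1.
    """
    def residual(s):
        return (1.0 - s) * activation(w00 * s + h, beta) - alpha * s

    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if residual(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    for w00 in (0.09, 0.10, 0.12):           # below, at and above alpha / beta
        values = [fixed_point(w00, h) for h in (1e-4, 1e-8, 1e-12)]
        print(w00, ["%.3g" % v for v in values])
```

Under these assumptions, below the critical coupling \(\Sigma _0\) shrinks proportionally to h, at the critical coupling it shrinks as \(\sqrt{h/{\tilde{w}}_{0,0}}\), and above it (e.g. \({\tilde{w}}_{0,0}=0.12\)) it stays finite, close to \(1-\alpha /(\beta {\tilde{w}}_{0,0})\simeq 0.17\).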

A quantity that will be considered in the following is the local firing rate, defined as

where \(s_{\varvec{r}}\) is the input, defined by Eq. (1), received by the neurons on site \({\varvec{r}}\). Note that all the neurons on site \({\varvec{r}}\) receive the same input. The probability that a neuron on site \({\varvec{r}}\) fires (makes a transition from the inactive to the active state) in the interval of time \(\Delta t\) is \(R_{\varvec{r}}(t)\Delta t\).

Temporal correlations and power spectrum

We now consider a variable \(X_{\varvec{r}}(t)\) defined on a site \({\varvec{r}}\), that can be for instance the fraction of active neurons \(\Sigma _{\varvec{r}}(t)\), or the firing rate \(R_{\varvec{r}}(t)\), given by a superposition of all its Fourier components,

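As shown in "Methods", for a large number of neurons the different Fourier components of the activity decouple and obey the same evolution equation as the total activity in the fully-connected system. Therefore the autocorrelation function of \(X_{\varvec{r}}(t)\) is given by the sum of the autocorrelation functions of its Fourier components,

\[ \langle X_{\varvec{r}}(t)X_{\varvec{r}}(0)\rangle -X_0^2=\sum _{\varvec{k}}\left[ A_{1,{\varvec{k}}}\,e^{-|t|/\tau _{1,{\varvec{k}}}}+A_{2,{\varvec{k}}}\,e^{-|t|/\tau _{2,{\varvec{k}}}}\right] . \]
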
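While \(A_{2,{\varvec{k}}}\) and \(\tau _{2,{\varvec{k}}}\) remain always finite and small, \(A_{1,{\varvec{k}}}\) and \(\tau _{1,{\varvec{k}}}\) diverge proportionally to \(|{\varvec{k}}|^{-2}\) at the critical point. In particular \(\tau _{1,{\varvec{k}}}^{-1}\simeq D_0|{\varvec{k}}|^2\), where \(D_0\) is a constant defined in "Methods", see Eq. (17). The power spectrum of the activity on a site of the lattice is given by the Wiener-Khinchin theorem as the temporal Fourier transform of the autocorrelation function,

\[ P(f)=\int _{-\infty }^{\infty }dt\,e^{-2\pi i f t}\left[ \langle X_{\varvec{r}}(t)X_{\varvec{r}}(0)\rangle -X_0^2\right] =\sum _{\varvec{k}}\left[ \frac{2A_{1,{\varvec{k}}}\tau _{1,{\varvec{k}}}}{1+(2\pi f\tau _{1,{\varvec{k}}})^2}+\frac{2A_{2,{\varvec{k}}}\tau _{2,{\varvec{k}}}}{1+(2\pi f\tau _{2,{\varvec{k}}})^2}\right] , \]
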
and given the dependence of \(A_{1,{\varvec{k}}}\) and \(\tau _{1,{\varvec{k}}}\) on the wave number at the critical point, in a system with a two-dimensional structure one finds a dependence \(P(f)\propto 1/f\) for frequencies between \(f_{\text {min}}=L^{-2}D_0\) and \(f_{\text {max}}=\lambda ^{-2}D_0\), where L is the linear size of the lattice and \(\lambda \) the range of the connections (see "Methods"). Note that the exponent of the power spectrum depends on the dimension of the space, in particular \(P(f)\propto f^{-1/2}\) in \(d=3\), \(P(f)\propto \log (1/f)\) in \(d=4\), and \(P(f)=\text {const.}\) (white noise) in dimension \(d>4\). At frequencies \(f\gg f_{\text {max}}\) only the Fourier components with the fastest relaxation time \(f_{\text {max}}^{-1}\) survive in the spectrum, so that \(P(f)\propto f^{-2}\) in any spatial dimension.
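The dimension dependence stated above follows from a short continuum estimate. It assumes, as stated, \(A_{1,{\varvec{k}}}\propto |{\varvec{k}}|^{-2}\) and \(\tau _{1,{\varvec{k}}}^{-1}=D_0|{\varvec{k}}|^2\), neglects the fast \(\tau _{2,{\varvec{k}}}\) terms, and replaces the sum over wave vectors in d dimensions by an integral over \(k=|{\varvec{k}}|\) between \(k_{\text {min}}\sim L^{-1}\) and \(k_{\text {max}}\sim \lambda ^{-1}\), with \(\omega =2\pi f\) and \(u=D_0k^2\):

\[ P(f)\;\propto \;\int _{k_{\text {min}}}^{k_{\text {max}}}dk\,k^{d-1}\,\frac{1}{k^{2}}\,\frac{2\tau _{1,k}}{1+\omega ^{2}\tau _{1,k}^{2}}\;\propto \;\int _{u_{\text {min}}}^{u_{\text {max}}}du\,\frac{u^{(d-2)/2}}{u^{2}+\omega ^{2}}\;=\;\omega ^{(d-4)/2}\int _{u_{\text {min}}/\omega }^{u_{\text {max}}/\omega }dx\,\frac{x^{(d-2)/2}}{1+x^{2}}, \]

where \(u_{\text {min}}=D_0k_{\text {min}}^2\) and \(u_{\text {max}}=D_0k_{\text {max}}^2\). For \(u_{\text {min}}\ll \omega \ll u_{\text {max}}\) and \(d<4\) the last integral converges at both ends, so \(P(f)\propto f^{(d-4)/2}\): \(f^{-1}\) for \(d=2\) (the integral tends to \(\pi /2\)) and \(f^{-1/2}\) for \(d=3\). For \(d=4\) the integrand decays as \(1/x\) and the integral grows as \(\ln (u_{\text {max}}/\omega )\), giving \(P\propto \log (1/f)\); for \(d>4\) it is dominated by its upper limit, \(\propto (u_{\text {max}}/\omega )^{(d-4)/2}\), which cancels the prefactor and leaves white noise. For \(\omega \gg u_{\text {max}}\) the denominator is \(\simeq \omega ^2\) for every mode and \(P\propto f^{-2}\).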

(A) Autocorrelation and (B) power spectrum of the single site firing rate in a two-dimensional \(L\times L\) model, in the linear approximation (number of neurons \(N\rightarrow \infty \)), as a function of the distance from the critical point. We set \(L=100\), \({\tilde{w}}_{s,0}=13.8\), \({\tilde{w}}_{0,0}=0.1\), and external input h between \(10^{-4}\) and \(10^{-8}\). The connections between neurons depend on the distance as in Eq. (3), with \(\lambda =1\).

In Fig. 2A,B we show how the autocorrelation function and the power spectrum depend on the distance from the critical point, which is located at \({\tilde{w}}_{0,0}=\beta ^{-1}\alpha =0.1\) and \(h=0\). Far from the critical point, the distribution of relaxation times for different wave vectors is narrow: the autocorrelation decays approximately exponentially and the power spectrum is well described by a Lorentzian. Near the critical point the relaxation times are broadly distributed, which gives rise to a 1/f decay of the spectrum. As shown in Fig. 2A, the corresponding relaxation function exhibits a slow decay \(a-b\ln t\) over a wide interval of times24.
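The mechanism can also be checked numerically by summing one Lorentzian per nonzero wave vector of an \(L\times L\) lattice. This is an illustration rather than the model's actual spectrum: it keeps only the slow branch and assumes the small-\(|{\varvec{k}}|\) forms \(\tau _{1,{\varvec{k}}}^{-1}=D_0|{\varvec{k}}|^2\) and \(A_{1,{\varvec{k}}}=|{\varvec{k}}|^{-2}\) for every mode, in arbitrary units with \(D_0=1\). The function name `lattice_spectrum` and the fitting range are hypothetical choices.

```python
import numpy as np


def lattice_spectrum(freqs, L=256, D0=1.0):
    """Sum of Lorentzians over the nonzero wave vectors of an L x L lattice.

    Illustrative assumptions: only the slow branch is kept, with
    tau_k = 1 / (D0 |k|^2) and amplitude A_k = 1 / |k|^2 for every mode.
    """
    n = np.fft.fftfreq(L, d=1.0 / L)              # integer mode indices -L/2 .. L/2-1
    k = 2.0 * np.pi * n / L
    kx, ky = np.meshgrid(k, k, indexing="ij")
    k2 = (kx**2 + ky**2).ravel()
    k2 = k2[k2 > 0]                                # drop the k = 0 mode
    tau = 1.0 / (D0 * k2)
    amp = 1.0 / k2
    omega = 2.0 * np.pi * np.asarray(freqs, dtype=float)[:, None]
    # Fourier transform of A exp(-|t|/tau) is 2 A tau / (1 + (omega tau)^2).
    return np.sum(2.0 * amp * tau / (1.0 + (omega * tau) ** 2), axis=1)


if __name__ == "__main__":
    freqs = np.logspace(np.log10(2e-3), np.log10(5e-2), 25)
    P = lattice_spectrum(freqs)
    slope = np.polyfit(np.log(freqs), np.log(P), 1)[0]
    print(f"log-log slope: {slope:.3f}")           # expected to be close to -1
```

At frequencies well above the fastest mode the same sum falls off as \(f^{-2}\), matching the high-frequency behaviour described above.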

(A) Autocorrelation and (B) power spectrum of the single site firing rate in a two-dimensional \(L\times L\) model, in the linear approximation (number of neurons \(N\rightarrow \infty \)), as a function of the range \(\lambda \) of the interactions. We set \(L=100\), \({\tilde{w}}_{s,0}=13.8\), \({\tilde{w}}_{0,0}=0.1\), \(h=10^{-8}\), and \(\lambda \) between 1 and \(\infty \). Note that in the limit \(\lambda =\infty \) the system is no longer 2D but fully-connected. The exponent \(\beta \) of the power-law decay of the power spectrum \(P(f)\sim f^{-\beta }\) is \(\beta = 1\) for the 2D model (\(\lambda \ll L\)) in agreement with the analytical predictions in section "Methods", while \(\beta =2\) in the fully-connected case (\(\lambda =\infty \)). (C) Power spectrum for connections decaying as a power law \(r^{-\omega }\), with the same L, \({\tilde{w}}_{s,0}\), \({\tilde{w}}_{0,0}\) and h of panels A and B. In this case \(\beta =2\) for \(\omega =0\), while \(\beta =1\) for \(\omega \ge 4\).

As shown in Fig. 3A,B, whether the spectrum decays as 1/f depends not only on the distance from the critical point but also on the range of the connections. As the range \(\lambda \) grows, the autocorrelation functions and power spectrum tend to those of the fully-connected system, which is characterized by just two correlation times \(\tau _1\) and \(\tau _2\), where \(\tau _2\) is a short time of the order of \(\alpha ^{-1}\) while \(\tau _1\) is large near the critical point, so that the autocorrelation is well described at long times by a single exponential. In Fig. 3C, we show the power spectrum evaluated in systems where connections decay as a power law. Namely, in Eq. (3), instead of the factor \(e^{-r/\lambda }\) we put a factor \(\min (1,r^{-\omega })\). The case \(\omega =0\) coincides with the case \(\lambda =\infty \), and gives again the \(1/f^2\) decay of the spectrum. Larger values of \(\omega \) correspond to connections decaying more quickly, and for \(\omega \ge 4\) one finds the 1/f decay characteristic of short range connections.

Relation between avalanche and power spectrum exponents

In this section we compare the size and duration distributions of avalanches of activity of a site of the lattice in the 2D case (with short range connections) and in the fully-connected model. In the following we set \(n_E=n_I=10^8\) neurons for each site, and compare the behaviour of the network with \(L=40\) and \(\lambda =1\) (2D), with that of a network with \(L=1\) (fully-connected). We remark here that in a network with \(L=1\) the distance between all neurons is zero, so that in Eq. (3) the connections \(w_{ij}\) depend only on the type of neuron, and the model coincides with the model studied in7,22,23, with full connectivity.

We simulated the system with Langevin dynamics, see Eq. (7). To speed up the simulations, we set the connections \(w_{ij}\) in Eq. (3) to zero when \(r_{ij}>3\lambda \). We made 60 different runs both for \(L=1\) and \(L=40\), lasting respectively \(3.5\times 10^7\) ms and \(2.5\times 10^5\) ms, discarding the first \(10^7\) and \(4\times 10^4\) ms, and collecting the avalanches on all sites in the 2D system. We define an avalanche as follows: we divide time into discrete bins of width \(\delta =1\) ms and consider the time evolution of the activity on a single site. An avalanche is a continuous series of time bins in which there is at least one spike (i.e., a transition of one neuron from the quiescent to the active state on the site being considered). Note that, when simulating the system with the Langevin dynamics of Eq. (7), we draw the number of spikes in each interval \(\delta \) from a Poisson distribution whose mean equals the temporal integral over \(\delta \) of the firing rate defined in Eq. (5), multiplied by the number of neurons on the site.

We remark here that avalanches are relative to the activity on a single site, so an avalanche begins and ends when the activity on the considered lattice site is zero, regardless of the activity on the other sites of the lattice. The size of the avalanche is defined as the total number of spikes of neurons belonging to the site considered, while the duration is the number of time bins of the avalanche multiplied by the width \(\delta \) of the bins. Note that all the lattice sites are equivalent, because the connections are translationally invariant and boundary conditions are periodic, so we expect the same distribution of sizes and durations on all the sites of the network. Therefore, to improve statistics, we compute the average of the distributions over all the sites of the lattice.
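The avalanche definition above translates into a few lines of NumPy. This sketch (function name hypothetical) takes the spike counts of one site in consecutive bins of width \(\delta \), finds maximal runs of non-empty bins, and returns their sizes and durations. It additionally assumes that runs touching the first or last bin of the record are discarded, since their true start or end is not observed; the text does not say how such boundary avalanches were handled.

```python
import numpy as np


def find_avalanches(counts, delta=1.0):
    """Sizes and durations of avalanches in a binned spike-count series.

    counts : spike counts of one lattice site in consecutive bins of width delta.
    An avalanche is a maximal run of bins with at least one spike; runs that
    touch either end of the record are dropped (assumption of this sketch).
    """
    counts = np.asarray(counts)
    active = counts > 0
    # Pad with inactive bins so every run has a rising and a falling edge.
    edges = np.diff(np.concatenate(([0], active.astype(int), [0])))
    starts = np.flatnonzero(edges == 1)       # first bin of each run
    ends = np.flatnonzero(edges == -1)        # one past the last bin of each run
    keep = (starts > 0) & (ends < counts.size)
    starts, ends = starts[keep], ends[keep]
    sizes = np.array([counts[s:e].sum() for s, e in zip(starts, ends)], dtype=counts.dtype)
    durations = (ends - starts) * delta
    return sizes, durations


if __name__ == "__main__":
    # Hypothetical usage: Poisson spike counts with a constant mean per bin,
    # standing in for (firing rate) x (neurons per site) x delta.
    rng = np.random.default_rng(0)
    counts = rng.poisson(0.5, size=10_000)
    sizes, durations = find_avalanches(counts, delta=1.0)
    print(len(sizes), sizes[:5], durations[:5])
```

Averaging over sites then amounts to concatenating the sizes and durations returned for each site before building the histograms.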

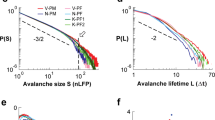

The distributions of avalanche size and duration are expected to follow power-law scalings, \(P(S) \sim S^{-\alpha }\) and \(P(T)\sim T^{-\tau }\). In Fig. 4 we report the results for the 2D system (\(L=40\), blue dots) and the fully-connected system (\(L=1\), red dots), for the size distribution (A) and duration distribution (B). The distributions show a clear dependence on the topology of the network. In the fully-connected model (red dots), exponents are \(\alpha =1.48\pm 0.01\) and \(\tau =2.05\pm 0.01\), very close to the values characteristic of the branching model of neural dynamics, as already observed in7. In the 2D system (blue dots), after a small size and duration regime characterized by exponents close to one, one finds, up to the cut-off of the distributions, a scaling regime with exponents \(\alpha =1.29\pm 0.01\) and \(\tau =1.37\pm 0.01\), markedly smaller than the mean-field values.
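The exponents above were obtained with the Python package "powerlaw"25. As a self-contained alternative, and explicitly not that package's algorithm, the sketch below computes the maximum-likelihood exponent of a continuous power law truncated to a fitting window \([x_{\text {min}},x_{\text {max}}]\), by maximizing the log-likelihood on a grid of exponents. Treating sizes and durations as continuous variables is an approximation that is reasonable only well above the smallest values; the function name and grid are assumptions.

```python
import numpy as np


def fit_truncated_power_law(data, xmin, xmax, alphas=None):
    """Maximum-likelihood exponent of p(x) ~ x**(-alpha) restricted to [xmin, xmax].

    Continuous approximation; the exponent is found by a grid search over
    alphas, which avoids any dependence on an optimiser library.
    """
    if alphas is None:
        alphas = np.linspace(1.001, 4.0, 30_000)
    x = np.asarray(data, dtype=float)
    x = x[(x >= xmin) & (x <= xmax)]
    n, sum_log = x.size, np.log(x).sum()
    # Log of the truncated normalisation (alpha - 1) / (xmin^(1-alpha) - xmax^(1-alpha))
    log_norm = np.log(alphas - 1.0) - np.log(xmin ** (1.0 - alphas) - xmax ** (1.0 - alphas))
    log_like = n * log_norm - alphas * sum_log
    return alphas[np.argmax(log_like)]


if __name__ == "__main__":
    # Synthetic check: inverse-CDF samples of a truncated power law with alpha = 1.5.
    rng = np.random.default_rng(1)
    a, b, true_alpha = 1.0, 1e4, 1.5
    c = a ** (1 - true_alpha) - b ** (1 - true_alpha)
    x = (a ** (1 - true_alpha) - rng.random(20_000) * c) ** (1 / (1 - true_alpha))
    print(fit_truncated_power_law(x, a, b))
```

Including \(x_{\text {max}}\) in the normalization matters here because the fitting windows end before the cut-off; an untruncated estimator would be biased by the missing tail.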

(A) Avalanche size distribution P(S) for a 2D system with \(L=40\), with a range of connections \(\lambda =1\) (blue dots) and for a fully-connected system with \(L=1\) (red dots). Other parameters are \({\tilde{w}}_{s,0}=13.8\), \({\tilde{w}}_{0,0}=0.1\), \(h=10^{-8}\), \(\delta =1\) ms. The number of neurons is \(10^8\) per site. (B) Avalanche duration distribution P(T) for the same system and parameters. The fits of the power-law exponents are done with the Python program “powerlaw”25 in the ranges indicated by the black lines.

We have then considered the relation \(\langle S\rangle (T)\) between the duration of an avalanche and its mean size (Fig. 5A), for the same system and parameters as in Fig. 4. We fit the measured data, in the same ranges as in Fig. 4B, with a power law \(T^{\gamma }\). Also in this case, we observe a marked difference between the fully-connected model (red dots), where the exponent is \(\gamma =2.04\pm 0.01\), and the 2D system, where \(\gamma =1.35\pm 0.01\). Note that in both cases the expected relation9,10

\[ \gamma =\frac{\tau -1}{\alpha -1} \tag{6} \]

is approximately satisfied. As for the size and duration distributions, in the 2D system the scaling regime for durations \(10^3<T<5\times 10^4\) ms is preceded by a different scaling behaviour with an exponent close to 2.
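For reference, inserting the fitted exponents of Fig. 4 into the scaling relation gives the following predicted values of \(\gamma \), to be compared with the directly fitted ones:

\[ \text{2D:}\quad \frac{\tau -1}{\alpha -1}=\frac{1.37-1}{1.29-1}=\frac{0.37}{0.29}\simeq 1.28\quad (\text{fit: }\gamma =1.35\pm 0.01), \]
\[ \text{fully connected:}\quad \frac{\tau -1}{\alpha -1}=\frac{2.05-1}{1.48-1}=\frac{1.05}{0.48}\simeq 2.19\quad (\text{fit: }\gamma =2.04\pm 0.01). \]

Because \(\alpha -1\) is small, the ratio is sensitive to the fitted exponents: propagating the quoted \(\pm 0.01\) uncertainties, treated as independent, gives about \(\pm 0.06\) in 2D and \(\pm 0.05\) in the fully-connected case, and the predicted and fitted values differ by roughly 6% and 7% respectively.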

To highlight the dependence of the exponent \(\gamma \) on the range of durations chosen for the fit, in Fig. 5B we show the exponent \(\gamma \) of a fit of \(\langle S\rangle (T)\) with a power law \(T^\gamma \) in a sliding window \([T,10\,T]\). Intervals of durations where the exponent is nearly constant represent ranges where \(\langle S\rangle (T)\) is well fitted by a power law. In the fully-connected case (red dots) there is a range of durations, between \(10^2\) and \(10^3\) ms, where \(\langle S\rangle (T)\sim T^\gamma \) with \(\gamma \simeq 2\), as already observed in7, while for longer durations the exponent drops to lower values due to the cut-off of the distribution. In the 2D case (blue dots), on the other hand, we observe a range between \(10^3\) and \(10^4\) ms where \(\langle S\rangle (T)\sim T^\gamma \) with \(\gamma \simeq 1.3\).
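A sliding-window estimate of this kind can be sketched as an ordinary least-squares fit of \(\log \langle S\rangle \) against \(\log T\) restricted to \([T,10\,T]\), repeated for every T. The least-squares choice and the minimum number of points per window are assumptions, since the text does not state how each window was fitted; the function name is hypothetical.

```python
import numpy as np


def sliding_exponent(x, y, factor=10.0, min_points=3):
    """Local log-log slope of y(x) fitted in windows [x0, factor * x0].

    Returns the window starts x0 and the fitted slopes; windows with fewer
    than min_points samples are skipped.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    log_x, log_y = np.log(x), np.log(y)
    starts, slopes = [], []
    for x0 in np.sort(x):
        in_window = (x >= x0) & (x <= factor * x0)
        if np.count_nonzero(in_window) < min_points:
            continue
        slope, _intercept = np.polyfit(log_x[in_window], log_y[in_window], 1)
        starts.append(x0)
        slopes.append(slope)
    return np.array(starts), np.array(slopes)
```

The same function can be applied to the power spectrum with \(x=1/f\): a window \([x,10x]\) in \(1/f\) is a window \([0.1\,f,f]\) in frequency, and the slope of \(\log P\) against \(\log (1/f)\) is \(+\beta \), which also reproduces the inverted frequency axis used for the comparison between \(\gamma \) and \(\beta \).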

To investigate the relation between the exponent \(\gamma \) of \(\langle S\rangle (T)\) and the exponent \(\beta \) of the power spectrum, in Fig. 5D we plot the power spectrum P(f) of the single site firing rate defined in Eq. (5), for the same system size and parameters as in Figs. 4 and 5A,B. The power spectrum of the fully-connected system is well fitted by a Lorentzian, with a white noise behaviour for frequencies lower than 1 Hz and a decay with an exponent \(\beta =1.98\pm 0.02\) for frequencies larger than 1 Hz. Conversely, the spectrum of the 2D system, as anticipated analytically for the \(N\rightarrow \infty \) case, shows a decay with an exponent \(\beta =1.02\pm 0.02\) for frequencies between 0.05 and 1 Hz, intermediate between white noise at lower frequencies (not shown) and brown noise at higher ones. As done for the exponent \(\gamma \) in Fig. 5B, in Fig. 5E we plot the exponent \(\beta \) of a fit \(P(f)\sim f^{-\beta }\) in a sliding window \([0.1\,f,f]\). Comparing Fig. 5E with Fig. 5B, the exponents \(\gamma \) and \(\beta \) show a similar dependence on the avalanche duration and on the inverse frequency, respectively. For the fully-connected system (red dots) both exponents are around 2 in the ranges \([10^2,10^3]\) ms and [1, 10] Hz, and decay to lower values for longer avalanches or lower frequencies. For the 2D system the exponents are nearly constant in the ranges \([10^3,10^4]\) ms and [0.1, 1] Hz, although they tend to quite different values, namely \(\beta \simeq 1\) and \(\gamma \simeq 1.3\). The reason for this discrepancy remains to be investigated.

In Fig. 5C we show \(\langle S\rangle (T)\) for different sizes of the lattice, from \(L=1\) (fully-connected case) to \(L=40\), while in Fig. 5F we plot the exponent \(\gamma \) measured by fits of the data in panel C in a range of mean sizes between \(5\times 10^6\) and \(10^8\). The exponent remains close to \(\gamma =2\) for small lattices and drops to a value near \(\gamma =1.3\) for large lattices.

(A) Mean size of an avalanche \(\langle S\rangle (T)\) as a function of its duration, for the same system and parameters of Fig. 4; (B) Exponent \(\gamma \) of a fit of \(\langle S\rangle (T)\) with a function \(T^\gamma \), in a sliding window \([T,10\,T]\). The dashed lines show the exponents given by the fits of \(\langle S\rangle (T)\) in panel (A), and used in Fig. 6 for the collapse of the avalanche shapes. (C) Same as in (A), but showing also \(\langle S\rangle (T)\) for many sizes between \(L=1\) and \(L=100\). (D) Power spectrum P(f) of the single site firing rate; (E) Exponent \(\beta \) of a fit of the power spectrum P(f) with a function \(f^{-\beta }\), in a sliding window \([0.1\,f,f]\). To make the comparison with panel B easier, the scale on the x-axis is inverted: high frequencies (small times) are on the left, low frequencies (large times) on the right, (F) Exponent \(\gamma \) of the fit of \(\langle S\rangle (T)\) for different sizes of the lattice. The fit is restricted to mean sizes in the range \([5\times 10^6,10^8]\).

(A) Average shape of the avalanches having duration in an interval \([T/1.09,1.09\,T]\) centered on the duration T listed in the legend, multiplied by \(T^{1-\gamma }\), for the same parameters as in Fig. 4 and for the fully-connected system (\(L=1\)); (B) The same for the 2D system (\(L=40\)).

Scaling of the shape of avalanches

Together with the relation Eq. (6), another test of the “criticality” hypothesis for avalanche activity is the scaling of the shape of the avalanches. Denoting by V(t) the mean number of spikes observed at time t during an avalanche of duration T, the total size of the avalanche is \(S=\int _0^T dt\, V(t)\sim T^\gamma \), so the normalized shape \(V(t)T^{1-\gamma }\) is expected to depend only on the rescaled time t/T (if \(V(t)=T^{\gamma -1}{{\mathcal {F}}}(t/T)\), then \(S=T^\gamma \int _0^1 dx\,{{\mathcal {F}}}(x)\)). This relation should hold as long as the mean size \(\langle S\rangle (T)\) is well fitted by a power law \(T^\gamma \), that is, for durations where the exponent of the sliding window fits in Fig. 5B is nearly constant. From Fig. 5B, we expect a collapse of the shapes in the interval \(10^2<T<10^3\) ms for the fully-connected system, with \(\gamma \sim 2.04\), and in the interval \(10^3<T<10^4\) ms for the 2D system, with \(\gamma \sim 1.35\). These values of \(\gamma \) are marked by dashed lines in the figure. The collapse of the shapes is indeed quite well verified for these values and duration ranges, as shown in Fig. 6A,B. Note that, as expected, an exponent \(\gamma \simeq 2\) corresponds to a nearly parabolic shape (fully-connected system), while for the 2D system the exponent \(\gamma \simeq 1.3\) corresponds to a flatter shape26.
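The collapse test can be sketched as follows. Each avalanche is represented by its spike counts per bin, so that with \(\delta =1\) ms its length equals its duration in ms; avalanches with duration in \([T/1.09,1.09\,T]\) are mapped onto a common grid of rescaled times t/T by linear interpolation, averaged, and multiplied by \(T^{1-\gamma }\). The interpolation onto a common grid is our assumption for averaging avalanches of slightly different durations, and the function name is hypothetical.

```python
import numpy as np


def collapsed_shape(profiles, T, gamma, width=1.09, grid=None):
    """Average avalanche shape for durations in [T/width, width*T], rescaled
    as V(t) * T**(1 - gamma) and returned on a grid of rescaled times t/T.

    profiles : iterable of 1D arrays of spike counts per bin, one per avalanche;
               the number of bins is taken as the duration.
    """
    if grid is None:
        grid = np.linspace(0.05, 0.95, 19)
    selected = [np.asarray(p, dtype=float) for p in profiles
                if T / width <= len(p) <= width * T]
    if not selected:
        raise ValueError("no avalanche with duration in the requested window")
    # Bin i of an avalanche with n bins is placed at rescaled time (i + 0.5) / n.
    resampled = [np.interp(grid, (np.arange(p.size) + 0.5) / p.size, p) for p in selected]
    return grid, np.mean(resampled, axis=0) * T ** (1.0 - gamma)
```

Plotting the returned curves for several T on the same axes gives the collapse; a wrong \(\gamma \) shows up as a systematic vertical ordering of the curves with T.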

Dependence on the fraction of inhibitory neurons

In this section we investigate the role of the ratio between excitatory and inhibitory neurons, by simulating the system also for a fraction 80/20 of excitatory and inhibitory neurons, more similar to cortical networks. The total number of neurons on each site of the lattice is \(2\times 10^8\) as in the previously studied (50/50) case, so that we have \(n_E=1.6\times 10^8\) and \(n_I=4\times 10^7\). Note that, as it is apparent from Eq. (3), the strength of the excitatory/inhibitory synapses is inversely proportional to the number of excitatory/inhibitory neurons, so that if the number of inhibitory neurons is decreased, the strength of inhibitory connections is correspondingly increased. In this way we ensure that the critical point corresponds to the same value of \({\tilde{w}}_{0,0}=\beta ^{-1}\alpha \) and \(h=0\).
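As a worked example for the fully-connected network (\(L=1\), where the normalization factors reduce to \({{\mathcal {N}}}_E=n_E\) and \({{\mathcal {N}}}_I=n_I\)), with \({\tilde{w}}_{0,0}=0.1\) and \({\tilde{w}}_{s,0}=13.8\) as in Figs. 4 and 5, the single-synapse weights \(w_E=({\tilde{w}}_{0,0}+{\tilde{w}}_{s,0})/(2n_E)\) and \(w_I=({\tilde{w}}_{0,0}-{\tilde{w}}_{s,0})/(2n_I)\) are:

\[ \begin{aligned} \text{50/50:}\quad & w_E=\frac{0.1+13.8}{2\times 10^{8}}=6.95\times 10^{-8}, && w_I=\frac{0.1-13.8}{2\times 10^{8}}=-6.85\times 10^{-8},\\ \text{80/20:}\quad & w_E=\frac{0.1+13.8}{2\times 1.6\times 10^{8}}\simeq 4.34\times 10^{-8}, && w_I=\frac{0.1-13.8}{2\times 4\times 10^{7}}\simeq -1.71\times 10^{-7}, \end{aligned} \]

and in both cases \(n_Ew_E+n_Iw_I=6.95-6.85=0.1=\beta ^{-1}\alpha \). Each inhibitory synapse is 2.5 times stronger in the 80/20 network, while the summed input weight, and hence the critical point, is unchanged.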

In Fig. 7 we compare the four cases considered: fully-connected 50/50, fully-connected 80/20, 2D 50/50 and 2D 80/20. The fraction of inhibitory neurons affects only the cut-off in the distributions P(S) and P(T), while the mean avalanche size \(\langle S\rangle (T)\) and the power spectrum P(f) are not affected. Moreover, the cut-off changes significantly only in the 2D system, and is much less affected in the fully-connected case. In the insets of Fig. 7A,B, we report a collapse of the 50/50 and 80/20 distributions in the 2D case, showing that the cut-off is approximately four (three) times smaller in the 80/20 case for the size (duration) distributions.

In conclusion, the observed dependence of the critical exponents on the spatial dimensionality is preserved also for different fractions of inhibitory neurons.

(A) Avalanche size distribution P(S) for the fully-connected and 2D models, and two different fractions of excitatory and inhibitory neurons, 50/50 and 80/20. The total number of neurons on each site is \(2\times 10^8\) in all cases. Other parameters as in Figs. 4 and 5 . Inset: collapse of the distributions in the 2D case. The cut-off \(S_c\) is \(4\times 10^8\) in the 50/50 case and \(10^8\) in the 80/20 case. (B) Avalanche duration distribution. Inset: collapse of the distributions in the 2D case. The cut-off \(T_c\) is \(3\times 10^4\) in the 50/50 case and \(10^4\) in the 80/20 case. (C) Mean size of the avalanche as a function of the duration. (D) Power spectrum of the firing rate.

Conclusions

We have studied the stochastic Wilson–Cowan model on a 2D lattice, with connections decaying exponentially with the distance. We use a connection weight that decays exponentially with distance to model the structural anatomical connectivity that exhibits exponential decay with distance. Recent research indeed, using data from retrograde tracer injections, shows that influence of interareal distance on connectivity patterns is conform to an exponential distance rule (EDR), according to which the projection lengths decay exponentially with inter-areal separation27,28,29,30,31. Spatial decay constant is of order of few mm for white matter, and few tenths of mm for gray matter, in mouse and macaque,28,29. Notably, in the 2D model, as in the fully-connected case, varying the difference between the strength of excitatory and inhibitory connections, the model undergoes a second order transition from a phase where the activity tends to zero in absence of external input, to a phase where the activity is self-sustained even in absence of external inputs. At the critical point, one of the relaxation times diverge. While the fully-connected model is characterized by just two relaxation times, one of which is always lower than \(\alpha ^{-1}\), where \(\alpha \) is the deactivation rate of neurons, the 2D system has a spectrum of relaxation times, related to the different Fourier modes that can be defined on the lattice. Such relaxation times become proportional to \(D_0^{-1}|{\varvec{k}}|^{-2}\) at the critical point, where \(D_0\) is a constant and, as a consequence, the model in 2D shows a logarithmic decay of the relaxation functions and a \(f^{-1}\) behaviour of the power spectrum, in an interval of frequencies between \(f_{\text {min}}=L^{-2}D_0\) and \(f_{\text {max}}=a^{-2}D_0\), where L is the lattice size, \(\lambda \) is the range of connections, and \(D_0\) is defined in Eq. (17). 
We emphasize that the 1/f behaviour is observed for the activity of a single site (or of a localized group of sites), since this activity is a superposition of all the Fourier components, each with its own relaxation time. Conversely, the activity of the entire system corresponds to the single Fourier component \({\varvec{k}}=0\) and therefore exhibits a \(1/f^2\) behaviour. Moreover, we have shown that the change in the behaviour of the power spectrum is accompanied by a marked change in the exponents of the avalanche distributions. Note that, in the 2D system, we have defined avalanches from the activity of a single site rather than of the whole system, so as to use the same signal from which the power spectrum is computed. This choice was also motivated by the observation that, in a large 2D system, the activity of the entire system never drops to zero, which would make a thresholding procedure necessary to define avalanches. Clearly, although avalanches are measured locally, their distribution reflects the activity of all the sites of the system. In the 2D case, the exponents \(\alpha \) and \(\tau \) of the size and duration distributions become smaller than the mean-field values, namely \(\alpha \simeq 1.3\) and \(\tau \simeq 1.4\), while the exponent \(\gamma \) that relates the mean size to the avalanche duration decreases from 2 to 1.3. The discrepancy between \(\gamma \) and the exponent \(\beta \) of the power spectrum remains to be understood. Notably, in the 2D model the collapse of avalanche shapes is well verified with \(\gamma \simeq 1.35\), yielding a flatter shape, whereas \(\gamma \simeq 2\) in the fully-connected case.
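The local avalanche definition can be made concrete with a short Python sketch. It assumes that the single-site activity has already been discretized into non-negative values per time bin (the bin width used in the paper is not given in this section); an avalanche is then taken to be a maximal run of non-empty bins, its duration T the number of bins and its size S the summed activity. The helper names `extract_avalanches` and `gamma_from_scaling` are hypothetical. The second helper evaluates the crackling-noise relation \(\gamma =\frac{\tau -1}{\alpha -1}\). The mean-field exponents give exactly 2. The 2D values quoted in the Abstract, \(\alpha =1.29\) and \(\tau =1.37\), give about 1.28, consistent with the \(\gamma \simeq 1.3\) found for the mean size.

```python
import numpy as np

def extract_avalanches(activity):
    """Return (sizes, durations) of the avalanches in a binned activity series.

    An avalanche is a maximal run of consecutive bins with non-zero activity:
    its duration T is the number of bins and its size S the summed activity.
    """
    a = np.asarray(activity)
    active = (a > 0).astype(np.int8)
    # Zero-pad so that every run has a rising (+1) and a falling (-1) edge.
    edges = np.diff(np.concatenate(([0], active, [0])))
    starts = np.flatnonzero(edges == 1)   # first active bin of each run
    ends = np.flatnonzero(edges == -1)    # one past the last active bin
    cumulative = np.concatenate(([0], np.cumsum(a)))
    sizes = cumulative[ends] - cumulative[starts]
    durations = ends - starts
    return sizes, durations

def gamma_from_scaling(alpha, tau):
    """Crackling-noise relation gamma = (tau - 1) / (alpha - 1)."""
    return (tau - 1.0) / (alpha - 1.0)

# Toy series with three avalanches separated by silent bins.
sizes, durations = extract_avalanches([0, 2, 1, 0, 0, 5, 0, 1, 1, 1, 0])
print(sizes.tolist(), durations.tolist())         # [3, 5, 3] [2, 1, 3]

print(gamma_from_scaling(1.5, 2.0))               # mean field: 2.0
print(round(gamma_from_scaling(1.29, 1.37), 2))   # 2D exponents: 1.28
```

Working with cumulative sums keeps the extraction linear in the length of the series, which matters for the long single-site recordings needed to sample the tails of P(S) and P(T).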

The discrepancy between the fully-connected prediction \(\gamma \simeq 2\) and the exponent \(\gamma \simeq 1.3\) observed in some experiments12, where a wide range of exponents \(\alpha \) and \(\tau \) of the size and duration distributions all fall on the \(\gamma \simeq 1.3\) line, may be attributed to the effective topology of the measured activity. For example, measurements that record spiking activity over large areas of neuronal populations may reflect the structured topology of the network, while more localized measurements (in highly connected areas) may be better approximated by a mean-field, i.e. fully-connected, topology. It would be interesting to compare the scaling behaviour of the power spectra of experimental activity recorded under different topological conditions, to confirm the existence of different universality classes.

Methods

We consider a two-dimensional lattice of \(L\times L\) sites. On each site there are \(n_E=N_E/L^2\) excitatory neurons and \(n_I=N_I/L^2\) inhibitory ones. Let \(m_{\varvec{r}}\) and \(l_{\varvec{r}}\) denote, respectively, the numbers of active excitatory and inhibitory neurons on site \({\varvec{r}}=(x,y)\), with \(x,y=0,\ldots ,L-1\).

In the Gaussian noise approximation, the temporal evolution of the system can be effectively described in terms of the coupled non-linear Langevin equations32

where \(\alpha \) is the deactivation rate of the neurons, f(s) is the input-dependent activation rate, and \(s^{(e)}_{\varvec{r}}\) and \(s^{(i)}_{\varvec{r}}\) are, respectively, the inputs to the excitatory and inhibitory neurons on site \({\varvec{r}}\). They are given by

where \(n_E^{-1}w^{(ee)}_{{\varvec{r}}{{\varvec{r}}^\prime }}\) is the strength of the connection from the excitatory neurons on site \({{\varvec{r}}^\prime }\) to those on site \({\varvec{r}}\), and analogously for the other pairs of populations; \(h_{\varvec{r}}^{(e)}\) and \(h_{\varvec{r}}^{(i)}\) are the external inputs, and \(\eta _{E,{\varvec{r}}}(t)\) and \(\eta _{I,{\varvec{r}}}(t)\) are Gaussian white noises.
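The Langevin equations themselves are not reproduced in this section. As a rough illustration of the dynamics just defined, the Python sketch below integrates them with an Euler–Maruyama scheme, written directly for the fractions \(x_{\varvec{r}}\), \(y_{\varvec{r}}\). It assumes the standard form of the stochastic Wilson–Cowan model (as in refs. 7 and 22), \(\dot{x}_{\varvec{r}}=-\alpha x_{\varvec{r}}+(1-x_{\varvec{r}})f(s^{(e)}_{\varvec{r}})+\sqrt{[\alpha x_{\varvec{r}}+(1-x_{\varvec{r}})f(s^{(e)}_{\varvec{r}})]/n_E}\,\eta _{E,{\varvec{r}}}(t)\), and analogously for \(y_{\varvec{r}}\). The input \(s_{\varvec{r}}=\sum _{{\varvec{r}}^\prime }[w^{(e)}_{{\varvec{r}}{{\varvec{r}}^\prime }}x_{{\varvec{r}}^\prime }-w^{(i)}_{{\varvec{r}}{{\varvec{r}}^\prime }}y_{{\varvec{r}}^\prime }]+h\) is taken to be shared by both populations, as in the simplified case treated later in this section. Several choices here are hypothetical: the activation rate \(f(s)=\tanh (s)\) for \(s>0\) (zero otherwise), the normalized periodic kernel \(e^{-|{\varvec{r}}|/\lambda }\), all parameter values, and the helper names `exp_kernel_fft`, `activation` and `simulate`. The difference \(w_E-w_I\) is set to \(\alpha \), which for this f is the mean-field critical value as \(h\rightarrow 0\).

```python
import numpy as np

def exp_kernel_fft(L, lam):
    """rfft2 of a periodic kernel exp(-|r|/lam) on an L x L lattice, normalized to sum 1."""
    d = np.minimum(np.arange(L), L - np.arange(L))   # periodic distance along one axis
    kernel = np.exp(-np.hypot(d[:, None], d[None, :]) / lam)
    return np.fft.rfft2(kernel / kernel.sum())

def activation(s):
    """Assumed activation rate f(s) = tanh(s) for s > 0, zero otherwise."""
    return np.tanh(np.maximum(s, 0.0))

def simulate(L=32, lam=2.0, n_E=1000, n_I=1000, alpha=0.1, w_E=7.0, w_I=6.9,
             h=1e-3, dt=0.1, steps=2000, seed=0):
    """Euler-Maruyama integration of the assumed Langevin equations for x_r, y_r."""
    rng = np.random.default_rng(seed)
    K = exp_kernel_fft(L, lam)

    def spread(z):
        # Circular convolution sum_r' w(r - r') z(r') computed with FFTs.
        return np.fft.irfft2(K * np.fft.rfft2(z), s=(L, L))

    x = np.full((L, L), 0.01)   # fraction of active excitatory neurons per site
    y = np.full((L, L), 0.01)   # fraction of active inhibitory neurons per site
    trace = np.empty(steps)     # activity of a single site over time
    for t in range(steps):
        # Same input for both populations: w^(ee) = w^(ie), w^(ei) = w^(ii), equal h.
        fs = activation(w_E * spread(x) - w_I * spread(y) + h)
        up_x, down_x = (1 - x) * fs, alpha * x
        up_y, down_y = (1 - y) * fs, alpha * y
        # Drift = activation - deactivation; noise variance = their sum / n per unit time.
        x = x + dt * (up_x - down_x) + np.sqrt(dt * (up_x + down_x) / n_E) * rng.standard_normal((L, L))
        y = y + dt * (up_y - down_y) + np.sqrt(dt * (up_y + down_y) / n_I) * rng.standard_normal((L, L))
        # Clip to [0, 1]: a numerical safeguard for the Gaussian approximation.
        x, y = np.clip(x, 0.0, 1.0), np.clip(y, 0.0, 1.0)
        trace[t] = x[0, 0]
    return x, y, trace

x, y, trace = simulate()
print(trace[-5:])
```

The array `trace` is a single-site signal of the kind used for the spectra and avalanches discussed above. Because of the clipping and the Gaussian approximation, the sketch illustrates the structure of the equations rather than reproducing the simulations behind the figures.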

The fractions of active neurons \(x_{\varvec{r}}=m_{\varvec{r}}/n_E\), \(y_{\varvec{r}}=l_{\varvec{r}}/n_I\) therefore obey the equations

Let us now suppose that the connections depend only on the distance \(|{\varvec{r}}-{{\varvec{r}}^\prime }|\) and that the external input is independent of the site, \(h_{\varvec{r}}^{(e)}=h_0^{(e)}\), \(h_{\varvec{r}}^{(i)}=h_0^{(i)}\). Under these hypotheses the fixed point of Eq. (8) is the same for all sites and corresponds to \(x_{\varvec{r}}=x_0\), \(y_{\varvec{r}}=y_0\), with

and

We can perform a system size expansion, changing variables from the fractions of active neurons \(x_{\varvec{r}}\) and \(y_{\varvec{r}}\) to the deviations of the fractions with respect to the fixed point. Defining \(x_{\varvec{r}}=x_0+n_E^{-1/2}\xi _{E,{\varvec{r}}}\), \(y_{\varvec{r}}=y_0+n_I^{-1/2}\xi _{I,{\varvec{r}}}\), substituting in Eq. (8), and neglecting terms that are small when the number of neurons is large, we obtain the linear Langevin equations

Equations (9) are linear and translationally invariant, so it is convenient to perform a Fourier transform and define

where \({\varvec{k}}=\frac{2\pi }{L}(k_x,k_y)\), with \(k_x,k_y=0,\ldots ,L-1\).

Substituting in (9), we obtain the decoupled equations for each of the \(L\times L\) pairs of Fourier modes \({\tilde{\xi }}_{E,{\varvec{k}}}\) and \({\tilde{\xi }}_{I,{\varvec{k}}}\),

where \({\tilde{w}}^{(ee)}_{\varvec{k}}=\sum \limits _{\varvec{r}}e^{i{\varvec{k}}\cdot ({\varvec{r}}-{{\varvec{r}}^\prime })}w^{(ee)}_{{\varvec{r}}{{\varvec{r}}^\prime }}\), which by translational invariance does not depend on \({{\varvec{r}}^\prime }\), and similarly for the other connections, and \(\langle \eta _{E,{\varvec{k}}}(t)\eta _{E,{\varvec{k}}^\prime }(t^\prime )\rangle =\delta _{{\varvec{k}}^\prime ,-{\varvec{k}}}\delta (t-t^\prime )\), and similarly for the other noise terms.
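The transform \({\tilde{w}}_{\varvec{k}}\) is straightforward to evaluate numerically. The Python sketch below assumes, for illustration only, the kernel \(w(r)=e^{-|{\varvec{r}}|/\lambda }\) on a periodic lattice. The normalization and the value of \(\lambda \) used in the paper are not stated in this section, and the helpers `exponential_kernel` and `wavevectors_squared` are hypothetical. The sketch also checks the quadratic small-\(|{\varvec{k}}|\) behaviour on which the later expansion for \(|{\varvec{k}}|<\lambda ^{-1}\) relies. For this it uses the identity \(\sum _{\varvec{r}}x^2w(r)=\frac{1}{2}\sum _{\varvec{r}}|{\varvec{r}}|^2w(r)\), which holds exactly on a square lattice with a kernel that depends only on \(|{\varvec{r}}|\).

```python
import numpy as np

def exponential_kernel(L, lam):
    """Hypothetical connection profile w(r) = exp(-|r|/lam) on a periodic L x L lattice."""
    d = np.minimum(np.arange(L), L - np.arange(L))   # periodic distance along one axis
    r2 = d[:, None]**2 + d[None, :]**2               # squared distance from the origin
    return r2, np.exp(-np.sqrt(r2) / lam)

def wavevectors_squared(L):
    """|k|^2 for k = (2*pi/L)(k_x, k_y), with the modes folded into [-pi, pi)."""
    k_axis = 2 * np.pi * np.fft.fftfreq(L)
    return k_axis[:, None]**2 + k_axis[None, :]**2

L, lam = 256, 3.0
r2, w = exponential_kernel(L, lam)
# numpy's fft2 uses exp(-i k.r); since w(r) = w(-r) this coincides with the
# exp(+i k.r) convention of the text, and the transform is real.
w_k = np.fft.fft2(w)
k2 = wavevectors_squared(L)

# Small-k expansion of the cosine, with the angular average <cos^2> = 1/d, d = 2:
#   w_0 - w_k  ~  |k|^2 / (2 d) * sum_r |r|^2 w(r)
second_moment = np.sum(r2 * w)
ratios = [(w_k[0, 0] - w_k[n, 0]).real / (k2[n, 0] / 4 * second_moment) for n in (1, 2, 4, 8)]
print(np.round(ratios, 3))  # close to 1 for |k| << 1/lam, decreasing as |k| grows
```

Because \(1-\cos u\le u^2/2\), every ratio is at most 1, and the deviation grows roughly as \((|{\varvec{k}}|\lambda )^2\). This is why the quadratic form is only used for wave numbers below \(\lambda ^{-1}\).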

If the connections do not depend on the post-synaptic neuron but only on the pre-synaptic one, that is \({\tilde{w}}^{(ee)}_{{\varvec{k}}}={\tilde{w}}^{(ie)}_{{\varvec{k}}}={\tilde{w}}^{(e)}_{\varvec{k}}\), \({\tilde{w}}^{(ei)}_{{\varvec{k}}}={\tilde{w}}^{(ii)}_{\varvec{k}}={\tilde{w}}^{(i)}_{\varvec{k}}\), and the external input is \(h_0^{(e)}=h_0^{(i)}=h\), we can further simplify the equations with the change of variables \({\tilde{\xi }}_{\Sigma ,{\varvec{k}}}=\frac{{\tilde{\xi }}_{E,{\varvec{k}}}+{\tilde{\xi }}_{I,{\varvec{k}}}}{2}\), \({\tilde{\xi }}_{\Delta ,{\varvec{k}}}=\frac{{\tilde{\xi }}_{E,{\varvec{k}}}-{\tilde{\xi }}_{I,{\varvec{k}}}}{2}\). In this case the fixed-point values \(x_0\) and \(y_0\) of the excitatory and inhibitory fractions of active neurons coincide, so that we can define \(x_0=y_0=\Sigma _0\) and \(s_0^{(e)}=s_0^{(i)}=s_0\), and the matrix in Eq. (10) becomes upper triangular

where

and

where we have used the symmetry of the connections, \(w^{(e)}_{{\varvec{r}}{{\varvec{r}}^\prime }}=w^{(e)}_{{{\varvec{r}}^\prime }{\varvec{r}}}\), \(w^{(i)}_{{\varvec{r}}{{\varvec{r}}^\prime }}=w^{(i)}_{{{\varvec{r}}^\prime }{\varvec{r}}}\). Note that \(\tau _{2,{\varvec{k}}}\) is independent of \({\varvec{k}}\). The fixed-point input \(s_0\) can be written as \(s_0={\tilde{w}}_{0,0}\Sigma _0+h\), and we report here for convenience the form of the fixed-point equation,

As we have seen, each of the Fourier modes behaves exactly as the total fraction of neurons in the model with all-to-all connections, but with parameters \(\tau _{1,{\varvec{k}}}\), \({\tilde{w}}_{\text {ff},{\varvec{k}}}\) that depend on the Fourier mode \({\varvec{k}}\). The autocorrelation function of the fluctuations \({\tilde{\xi }}_{\Sigma ,{\varvec{k}}}\) is therefore given by23

where

By performing an inverse Fourier transform, we can find the autocorrelation function of \(\xi _{\Sigma ,{\varvec{r}}}=\frac{\xi _{E,{\varvec{r}}}+\xi _{I,{\varvec{r}}}}{2}\), which is given by

By the Wiener–Khinchin theorem, the power spectrum of the variable \(\xi _{\Sigma ,{\varvec{r}}}(t)\) is therefore given by

The coefficients of the linear Eqs. (10) depend only on quantities evaluated at the fixed point, which can undergo different kinds of transitions (bifurcations). Here we consider the transcritical bifurcation, in which one of the eigenvalues of the matrix in Eq. (11) vanishes; in particular, we consider the case in which the eigenvalue \(\tau _{1,0}^{-1}\) corresponding to the mode \({\varvec{k}}=0\) vanishes, so that

For values of \(|{\varvec{k}}|\) smaller than \(\lambda ^{-1}\), where \(\lambda \) is the range of the connections \(w^{(e)}_{{\varvec{r}}{{\varvec{r}}^\prime }}\) and \(w^{(i)}_{{\varvec{r}}{{\varvec{r}}^\prime }}\), taking the first two terms of the Taylor expansion of the cosine in Eq. (13a), we have

where \(\theta \) is the angle between \({\varvec{k}}\) and \({\varvec{r}}-{\varvec{r}}^\prime \), d is the dimension of the space, and \(\Gamma (x)\) is the Gamma function. Putting this expression in Eq. (12a), and using Eq. (15), we obtain that \(\tau _{1,{\varvec{k}}}^{-1}\simeq D_0|{\varvec{k}}|^2\), where

The values of \(\tau _{1,{\varvec{k}}}\) therefore diverge for \(|{\varvec{k}}|\rightarrow 0\), so that we can consider \(\tau _{1,{\varvec{k}}}\gg \tau _{2,{\varvec{k}}}\) for low values of the wave number. In this case, we can approximate

Therefore both \(\tau _{1,{\varvec{k}}}\) and \(A_{1,{\varvec{k}}}\) diverge for \(|{\varvec{k}}|\rightarrow 0\), while \(\tau _{2,{\varvec{k}}}\), \(A_{2,{\varvec{k}}}\) and \({\tilde{w}}_{\text {ff},{\varvec{k}}}\) remain finite. Neglecting the terms involving \(\tau _{2,{\varvec{k}}}\) in the power spectrum, we obtain

In d spatial dimensions, the number of wave vectors with modulus between k and \(k+dk\) is proportional to \(\left( \frac{L}{2\pi }\right) ^d\,k^{d-1}dk\), and, replacing the sum with an integral, we obtain

where \(f_{\text {max}}=\lambda ^{-2}D_0\), with \(\lambda \) the range of the connections, and \(f_{\text {min}}=L^{-2}D_0\). For \(f_{\text {min}}\ll f\ll f_{\text {max}}\) and \(d<4\), the integral is approximately independent of f, so that \(P(f)\sim f^{(d-4)/2}\). In particular, \(P(f)\sim 1/f\) in \(d=2\) and \(P(f)\sim 1/f^{1/2}\) in \(d=3\). In \(d=4\) the integrand decays as \(x^{-1}\) at large x, so the integral grows logarithmically and \(P(f)\sim -\log f\), while for \(d>4\) the integral is dominated by its upper limit and grows as \(f^{(4-d)/2}\), which exactly cancels the factor \(f^{(d-4)/2}\), so that the spectrum becomes constant (white noise). Note that this derivation holds only for \(\lambda \ll L\); otherwise the condition \(f_{\text {min}}\ll f\ll f_{\text {max}}\) cannot be met.
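The scaling \(P(f)\sim f^{(d-4)/2}\) can be checked numerically. The Python sketch below keeps only the slow modes, with \(\tau _{1,{\varvec{k}}}=1/(D_0|{\varvec{k}}|^2)\) for \(0<|{\varvec{k}}|<\lambda ^{-1}\). It assumes that each mode behaves as an Ornstein–Uhlenbeck process whose variance \(A_{1,{\varvec{k}}}\) grows in proportion to \(\tau _{1,{\varvec{k}}}\), so that, up to a constant, it contributes a Lorentzian \(\tau _{1,{\varvec{k}}}^2/[1+(2\pi f\tau _{1,{\varvec{k}}})^2]\). This is our reading of the divergence of \(A_{1,{\varvec{k}}}\) stated above, not the exact prefactor of the omitted equations. `lattice_spectrum` sums the modes of a 2D lattice. `continuum_spectrum` evaluates the integral in d dimensions after the substitution \(u=D_0k^2/(2\pi f)\), which is our own variable and plays the role of x above. All function names and parameter values are hypothetical.

```python
import numpy as np

def lattice_spectrum(freqs, L=1024, lam=4.0, D0=1.0):
    """Single-site spectrum (up to a constant) as a sum over the slow modes of an L x L lattice."""
    k_axis = 2 * np.pi * np.fft.fftfreq(L)             # k = (2 pi / L) n, folded into [-pi, pi)
    k2 = (k_axis[:, None]**2 + k_axis[None, :]**2).ravel()
    k2 = k2[(k2 > 0) & (k2 < lam**-2)]                  # drop k = 0 and |k| >= 1/lam
    omega = 2 * np.pi * np.asarray(freqs, dtype=float)[:, None]
    # tau^2 / (1 + (omega tau)^2) with tau = 1 / (D0 k^2), summed over modes.
    return np.sum(1.0 / ((D0 * k2)**2 + omega**2), axis=1)

def continuum_spectrum(freqs, d, L=1e5, lam=1.0, D0=1.0, n=4000):
    """Same quantity in d dimensions, with the sum replaced by an integral over u = D0 k^2 / omega."""
    out = []
    for f in np.atleast_1d(freqs):
        omega = 2 * np.pi * f
        u = np.geomspace(D0 * (2 * np.pi / L)**2 / omega, D0 * lam**-2 / omega, n)
        # Integrate u^(d/2 - 1) / (1 + u^2) du as a trapezoid rule in log(u).
        g = u**(d / 2) / (1 + u**2)
        integral = np.sum(0.5 * (g[1:] + g[:-1]) * np.diff(np.log(u)))
        out.append(omega**(d / 2 - 2) * integral)
    return np.array(out)

def loglog_slope(freqs, spectrum):
    """Least-squares slope of log P against log f."""
    return np.polyfit(np.log(freqs), np.log(spectrum), 1)[0]

lattice_f = np.geomspace(1e-4, 1e-3, 20)     # between the two crossovers for L=1024, lam=4
print("lattice, d=2:", round(loglog_slope(lattice_f, lattice_spectrum(lattice_f)), 2))

continuum_f = np.geomspace(1e-6, 1e-5, 20)   # a much wider window for L=1e5, lam=1
for d in (2, 3, 5):
    print(f"continuum, d={d}:", round(loglog_slope(continuum_f, continuum_spectrum(continuum_f, d)), 2))
```

A single Lorentzian, as for the global \({\varvec{k}}=0\) mode, would instead decay as \(f^{-2}\) above its corner frequency. The continuum check uses a wider window than the lattice one because, for d = 3 and d = 5, the corrections from the finite cut-offs decay only as powers of \(f/f_{\text {max}}\).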

Data availability

The datasets analysed during the current study are available from the corresponding author on reasonable request.

References

Beggs, J. M. & Plenz, D. Neuronal avalanches in neocortical circuits. J. Neurosci. 23, 11167 (2003).

Petermann, T. et al. Spontaneous cortical activity in awake monkeys composed of neuronal avalanches. Proc. Natl. Acad. Sci. 106, 15921 (2009).

Shriki, O. et al. Neuronal avalanches in the resting MEG of the human brain. J. Neurosci. 33, 7079 (2013).

Friedman, N. et al. Universal critical dynamics in high resolution neuronal avalanche data. Phys. Rev. Lett. 108, 208102 (2012).

Shew, W., Clawson, W. W. & Pobst, J. Adaptation to sensory input tunes visual cortex to criticality. Nat. Phys. 11, 659 (2015).

Scarpetta, S., Apicella, I., Minati, L. & de Candia, A. Hysteresis, neural avalanches, and critical behavior near a first-order transition of a spiking neural network. Phys. Rev. E 97, 062305 (2018).

de Candia, A., Sarracino, A., Apicella, I. & de Arcangelis, L. Critical behaviour of the stochastic Wilson–Cowan model. PLoS Comput. Biol. 17, e1008884 (2021).

Nandi, M. K., Sarracino, A., Herrmann, H. J. & de Arcangelis, L. On the scaling of avalanche shape and activity spectrum in neuronal networks. Phys. Rev. E 106, 024304 (2022).

Kuntz, M. C. & Sethna, J. P. Noise in disordered systems: The power spectrum and dynamic exponents in avalanche models. Phys. Rev. B 62, 11699 (2000).

Sethna, J. P., Dahmen, K. A. & Myers, C. R. Crackling noise. Nature 410, 242 (2001).

Miller, S. R., Yu, S. & Plenz, D. The scale-invariant, temporal profile of neuronal avalanches in relation to cortical \(\gamma \)–oscillations. Sci. Rep. 9, 1–14 (2019).

Fontenele, A. J. et al. Criticality between cortical states. Phys. Rev. Lett. 122, 208101 (2019).

Mariani, B. et al. Neuronal avalanches across the rat somatosensory barrel cortex and the effect of single whisker stimulation. Front. Syst. Neurosci. 15, 709677 (2021).

Novikov, E., Novikov, A., Shannahoff-Khalsa, D., Schwartz, B. & Wright, J. Scale-similar activity in the brain. Phys. Rev. E 56, R2387 (1997).

Bedard, C., Kroeger, H. & Destexhe, A. Does the 1/f frequency scaling of brain signals reflect self-organized critical states?. Phys. Rev. Lett. 97, 118102 (2006).

Dehghani, N., Bedard, C., Cash, S., Halgren, E. & Destexhe, A. Comparative power spectral analysis of simultaneous elecroencephalographic and magnetoencephalographic recordings in humans suggests non-resistive extracellular media. J. Comput. Neurosci. 29, 405 (2010).

Pritchard, W. The brain in fractal time: 1/f-like power spectrum scaling of the human electroencephalogram. Int. J. Neurosci. 66, 119 (1992).

Zarahn, E., Aguirre, G. & D'Esposito, M. Empirical analyses of BOLD fMRI statistics. Neuroimage 5, 179 (1997).

He, B. J., Zempel, J. M., Snyder, A. Z. & Raichle, M. E. The temporal structures and functional significance of scale-free brain activity. Neuron 66, 353 (2010).

Dalla Porta, L. & Copelli, M. Modeling neuronal avalanches and long-range temporal correlations at the emergence of collective oscillations: Continuously varying exponents mimic M/EEG results. PLoS Comput. Biol. 15, e1006924 (2019).

Carvalho, T. T. A. et al. Subsampled directed-percolation models explain scaling relations experimentally observed in the brain. Front. Neural Circuits 14, 576727 (2021).

Benayoun, M., Cowan, J. D., van Drongelen, W. & Wallace, E. Avalanches in a stochastic model of spiking neurons. PLoS Comput. Biol. 6, e1000846 (2010).

Sarracino, A., Arviv, O., Shriki, O. & de Arcangelis, L. Predicting brain evoked response to external stimuli from temporal correlations of spontaneous activity. Phys. Rev. Res. 2, 033355 (2020).

Hooge, F. N. & Bobbert, P. A. On the correlation function of \(1/f\) noise. Phys. B 239, 223 (1997).

Alstott, J., Bullmore, E. & Plenz, D. powerlaw: A Python package for analysis of heavy-tailed distributions. PLoS One 9, e95816 (2014).

Baldassarri, A. Universal excursion and bridge shapes in ABBM/CIR/Bessel processes. J. Stat. Mech. Theory Exp. 8, 083211 (2021).

Ercsey-Ravasz, M. et al. A predictive network model of cerebral cortical connectivity based on a distance rule. Neuron 80, 184–197 (2013).

Gămănuţ, R. et al. The mouse cortical connectome characterized by an ultra dense cortical graph maintains specificity by distinct connectivity profiles. Neuron 97(3), 698 (2018).

Horvát, S. et al. Spatial embedding and wiring cost constrain the functional layout of the cortical network of rodents and primates. PLoS Biol. 14, e1002512 (2016).

Markov, N. T. et al. Cortical high-density counterstream architectures. Science 342, 1238406 (2013).

Markov, N. T. et al. The role of long-range connections on the specificity of the macaque interareal cortical network. Proc. Natl. Acad. Sci. 110, 5187–5192 (2013).

van Kampen, N. G. Stochastic Processes in Physics and Chemistry (North Holland, 2007).

Acknowledgements

AdC, IA and LdA acknowledge financial support from the MIUR PRIN 2017WZFTZP “Stochastic forecasting in complex systems”. AS acknowledges financial support from MIUR PRIN 201798CZLJ. LdA and AS acknowledge support from the Program (VAnviteLli pEr la RicErca: VALERE) 2019 financed by the University of Campania “L. Vanvitelli”.

Author information


Contributions

All authors designed the project, conceived the model and planned the simulations. I.A. performed the simulations and analyzed data. All authors wrote and reviewed the manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Apicella, I., Scarpetta, S., de Arcangelis, L. et al. Power spectrum and critical exponents in the 2D stochastic Wilson–Cowan model. Sci Rep 12, 21870 (2022). https://doi.org/10.1038/s41598-022-26392-8


DOI: https://doi.org/10.1038/s41598-022-26392-8
