Abstract

The critical brain hypothesis has emerged as an attractive framework to understand neuronal activity, but it is still widely debated. In this work, we analyze data from a multi-electrode array in the rat’s cortex and find that power-law neuronal avalanches satisfying the crackling-noise relation coexist with spatial correlations that display typical features of critical systems. To shed light on the mechanisms underlying these signatures of criticality, we introduce a paradigmatic framework with a common stochastic modulation and pairwise linear interactions inferred from our data. We show that in such models power-law avalanches that satisfy the crackling-noise relation emerge as a consequence of the extrinsic modulation, whereas scale-free correlations are solely determined by internal interactions. Moreover, this disentangling is fully captured by the mutual information in the system. Finally, we show that analogous power-law avalanches are also found in more realistic models of neural activity, suggesting that extrinsic modulation might be a broad mechanism for their generation.


Introduction

The critical brain hypothesis has been much investigated since scale-free neuronal avalanches were found in 2003 by Beggs and Plenz1. By analyzing Local Field Potentials (LFPs) from cortical slices and cultures on chips, their seminal work showed that neural activity occurred in cascades, named neuronal avalanches. Remarkably, they found that both the sizes and the lifetimes of these avalanches were power-law distributed, with exponents surprisingly close to the ones of a critical mean-field branching process. Since then, such power-laws have been repeatedly observed in experiments2,3,4,5,6,7, giving rise to the idea that the brain might be poised near the critical point of a phase transition8,9,10,11,12,13,14,15. Yet, this hypothesis is still widely debated, and reconciling the different views and experimental results remains pressing.

On the one hand, many subsequent works showed that the presence of power-law avalanches is not a sufficient condition for criticality, as they might emerge from different mechanisms16,17,18,19,20. A perhaps stronger test for criticality is whether the avalanche exponents satisfy the crackling-noise relation21,22,23, which connects them to the exponent \(\delta\) governing how the average avalanche size \(\langle {s}\rangle\) scales with the avalanche duration T, \({\langle s(T)\rangle } \sim T^\delta\). In particular, recent findings24,25,26 suggest that, while the avalanche exponents found in different experimental settings do vary, they all lie along the scaling line defined by the crackling-noise relation, with a seemingly universal exponent \(\delta \approx 1.28\). Nevertheless, it was recently suggested that this relation can be fulfilled in different settings27, and even in models of independent spiking units for a range of choices of the power-law fitting method28.

On the other hand, the debate about the “nature” of the transition is very much open, and its hypothetical universality class is thus poorly understood. In particular, recent works have proposed that the observed transition might be related to a synchronous-asynchronous transition25,29,30,31 or to a disorder-induced transition32, in contrast to the original hypothesis of a simpler critical branching process.

Arguably, a more fundamental signature of criticality is the presence of power-law correlations in space33,34. In particular, a key feature of both equilibrium and non-equilibrium critical systems is a correlation length that diverges at criticality in the thermodynamic limit. In finite systems, such scale-free correlations manifest themselves as a correlation length that scales with the system size. Yet, these correlations have usually been studied at coarser scales, such as in whole-brain data32,35, and only recently in specific cortical areas36. When such spatial information is not available, phenomenological renormalization group procedures based on the correlation structure between the underlying degrees of freedom have recently been proposed, although with some limitations in their interpretability37,38.

All in all, these results still lack a unifying framework. In this work, we outline a possible path to reconcile these different signatures of criticality. First, we analyze spatially extended LFPs from the rat’s somatosensory barrel cortex. The multi-electrode array records LFPs from a whisker-related barrel column that vertically spans all six layers of the barrel cortex. We find both scale-free avalanches that satisfy the crackling-noise relation and signatures of criticality beyond avalanches, in the form of spatial correlations with a characteristic length that grows linearly with the system size. Then, we introduce an archetypal framework in which the origins of these different signatures can be disentangled, considering both a paradigmatic model that can be tackled analytically and a more biophysically sound model. In doing so, we shed light on the underlying mechanisms from which such properties of neural activity emerge. In particular, our work suggests that, whereas avalanches may emerge from an external stochastic modulation that affects all degrees of freedom in the same way, interactions between neural populations are the fundamental biological mechanism that gives rise to seemingly scale-free correlations.

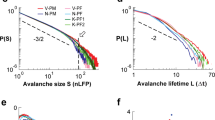

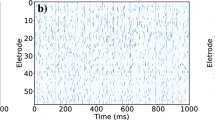

(a) Left: scheme of the array used to obtain the LFP data from all the cortical layers of the barrel cortex (adapted from39); right: an example of the LFP signals for different layers and the corresponding discretization. An array of 256 channels organized in a \(64 \times 4\) matrix is inserted in a barrel column, and the signals from the cortical layers are collected by \(55 \times 4\) electrodes. (b–d) Avalanche statistics obtained from the analysis of LFP data in a rat. The distributions of avalanche (b) sizes and (c) durations are both power laws, and (d) the crackling-noise relation is satisfied. (e) Scaling of the correlation length with the system size in LFP data, averaged over four different rats. For visual ease, the error bars are drawn at 5 standard deviations from the mean. The correlation length scales linearly with the system size with no plateau in sight, a hallmark of criticality.

Results

Scale-free avalanches and correlations in LFPs

In this work, we study LFP activity in the primary somatosensory cortex of four rats using state-of-the-art spatially extended multi-electrode arrays. The cortical activity is recorded through a 256-channel array organized in a 64 rows \(\times\) 4 columns matrix with an inter-electrode distance of 32 \(\mu m\) (see “Methods” and Fig. 1a). The avalanche statistics are analyzed in LFP data following standardized detection pipelines (see “Methods”)2,5,40, and we report a full statistical analysis of our dataset in41. The distributions of avalanche sizes, p(s), and durations, p(T), are computed and fitted using a corrected maximum likelihood method41,42. We find that both are statistically compatible with the expected power laws \(P(s)\sim s^{-\tau }\) and \(P(T)\sim T^{-\tau _t}\), as shown in Fig. 1b–c. Across the four rats we find some inter-rat variability, with average exponents \(\langle {\tau }\rangle =1.75 \pm 0.1\) and \(\langle {\tau _t}\rangle =2.1 \pm 0.3\) (see Supplementary Information). In Fig. 1d we show that the crackling-noise relation21 holds, by comparing \(\delta _\mathrm {pred} = \frac{\tau _t-1}{\tau -1}\) with the exponent obtained by fitting the average avalanche size as a function of duration, i.e., \(\langle {s(T)}\rangle \sim T^{\delta _\mathrm {fit}}\). Averaging over all rats, we find \(\langle {\delta _{\mathrm {pred}}}\rangle =1.47\pm 0.18\) and \(\langle {\delta _\mathrm {fit}}\rangle = 1.46 \pm 0.14\). Further details on the fitting procedure can be found in the Methods and in41, where we also use higher-frequency data (MUAs) from the same experimental condition, recorded with a much less dense array. Although MUAs are not suitable for the study of the correlation length, they reproduce the avalanche exponents more closely, and in particular the scaling exponent \(\delta \approx 1.28\)24,25,26.
On the other hand, LFPs are known to be strongly affected by finite-size effects1, as we show in41 by performing finite-size scaling on LFPs from the same set of experiments.
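The crackling-noise check described above can be sketched as follows, assuming avalanche sizes and durations have already been extracted. The exponent estimator here is the textbook continuous maximum-likelihood estimator above a fixed cutoff \(x_\mathrm{min}\), swapped in for illustration; it is not the corrected method used in this work. \(\delta_\mathrm{pred}\) comes from the exponents, and \(\delta_\mathrm{fit}\) is a least-squares slope of \(\log\langle s(T)\rangle\) against \(\log T\). Function names and the synthetic data are hypothetical.

```python
import numpy as np


def mle_powerlaw_exponent(x, x_min):
    """Continuous MLE of tau for P(x) ~ x**(-tau), using only samples x >= x_min."""
    x = np.asarray(x, dtype=float)
    x = x[x >= x_min]
    return 1.0 + x.size / np.sum(np.log(x / x_min))


def delta_pred(tau, tau_t):
    """Crackling-noise prediction delta = (tau_t - 1) / (tau - 1)."""
    return (tau_t - 1.0) / (tau - 1.0)


def delta_fit(sizes, durations):
    """Slope of log <s>(T) versus log T, averaging sizes over avalanches of equal duration."""
    sizes = np.asarray(sizes, dtype=float)
    durations = np.asarray(durations)
    T_vals = np.unique(durations)
    mean_s = np.array([sizes[durations == T].mean() for T in T_vals])
    slope, _intercept = np.polyfit(np.log(T_vals), np.log(mean_s), 1)
    return slope


# Hypothetical synthetic check: power-law samples with tau = 1.6 and x_min = 1
rng = np.random.default_rng(0)
tau_true = 1.6
u = rng.random(200_000)
samples = (1.0 - u) ** (-1.0 / (tau_true - 1.0))  # inverse-CDF sampling
tau_hat = mle_powerlaw_exponent(samples, x_min=1.0)

# Hypothetical avalanches obeying <s>(T) = 2 T^1.3 exactly
T = np.arange(1, 50)
s = 2.0 * T**1.3

print(f"tau_hat = {tau_hat:.3f}")
print(f"delta_pred(1.60, 1.77) = {delta_pred(1.60, 1.77):.3f}")
print(f"delta_fit = {delta_fit(s, T):.3f}")
```

With the rat-averaged exponents, `delta_pred(1.75, 2.1)` gives about 1.47; the text, however, averages \(\delta_\mathrm{pred}\) over rats rather than computing it from averaged exponents.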

Then, thanks to the extended spatial resolution of the multi-electrode array, we focus on the spatial correlations of the fluctuations of the measured LFP activity and on whether they display any signature of criticality35,43. We study the scaling of the correlation length \(\xi\) as a function of the system size L by selecting different portions of the array44,45 (see “Methods”), and we find that \(\xi\) scales linearly with L. This result can be interpreted as a signature of underlying long-range correlations that scale with the system size. In fact, simulations of control models displaying a critical point have shown that subsampling, as we do here, is practically equivalent to considering systems of different sizes45. This behavior is exactly what is expected at a critical point, where the correlation length diverges in the thermodynamic limit and thus grows with the size of a finite system. Hence, we find that the measured neural activity in the barrel cortex at rest displays two different signatures of criticality: power-law avalanches and scale-free spatial correlations.
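The Methods are not reproduced here, so the following is only a sketch of one common definition from the analysis of collective behavior, assumed rather than taken from this paper. Fluctuations are measured around the instantaneous spatial mean of the selected portion of the array, the connected correlation function C(r) is averaged over channel pairs at each distance r, and \(\xi\) is the first zero crossing of C(r), located by linear interpolation. Because the fluctuations sum to zero across channels, C(r) must turn negative somewhere, so \(\xi\) is always bounded by the subsystem size. The test field, a random-amplitude linear gradient on a chain, is hypothetical and has \(\xi\) proportional to the chain length.

```python
import numpy as np


def correlation_length(signals, positions):
    """First zero crossing of the connected spatial correlation function.

    signals:   array (n_channels, n_times)
    positions: array (n_channels, n_dims), e.g. electrode coordinates in micrometres
    """
    x = signals - signals.mean(axis=1, keepdims=True)  # remove each channel's temporal mean
    x = x - x.mean(axis=0, keepdims=True)               # remove the instantaneous spatial mean
    C = x @ x.T / x.shape[1]                            # time-averaged products of fluctuations

    d = np.linalg.norm(positions[:, None, :] - positions[None, :, :], axis=-1)
    iu = np.triu_indices(len(positions), k=1)
    d_pairs = np.round(d[iu], 9)
    c_pairs = C[iu]
    r_vals = np.unique(d_pairs)
    c_r = np.array([c_pairs[d_pairs == r].mean() for r in r_vals])

    # Prepend r = 0 (mean channel variance) so the crossing can always be interpolated
    r_all = np.concatenate(([0.0], r_vals))
    c_all = np.concatenate(([np.mean(np.diag(C))], c_r))
    k = np.nonzero(c_all < 0)[0][0]                     # first distance with negative correlation
    r0, r1, c0, c1 = r_all[k - 1], r_all[k], c_all[k - 1], c_all[k]
    return r0 + c0 * (r1 - r0) / (c0 - c1)              # linear interpolation of the zero


rng = np.random.default_rng(0)


def chain_xi(n, spacing=32.0, n_times=1000):
    """xi for a hypothetical field x_i(t) = a(t) * position_i on an n-channel chain."""
    pos = np.column_stack([np.arange(n) * spacing, np.zeros(n)])
    a = rng.standard_normal(n_times)
    return correlation_length(pos[:, :1] * a[None, :], pos)


xi_32, xi_64 = chain_xi(32), chain_xi(64)
print(f"xi(32) = {xi_32:.1f} um, xi(64) = {xi_64:.1f} um, ratio = {xi_64 / xi_32:.2f}")
```

Applied to successively larger blocks of rows of the \(64 \times 4\) grid, a linear growth of \(\xi\) with block size is the signature discussed in the text; with this definition, a field with only short-range correlations is expected to give a \(\xi\) that saturates instead.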

A disentangled model for extrinsic activity and internal couplings

To unravel the underlying processes from which these collective properties emerge, we assume that neural activity may be decomposed into two parts19,46: (i) the intrinsic activity, driven by interactions between neurons or populations of neurons (in our case, the propagation dynamics across the multi-layer network of interconnected neurons along the barrel); (ii) the extrinsic activity, which instead corresponds to the activity modulated by an external or global unit (in our case, the external inputs triggering or modulating the propagation, e.g., synaptic current injection from the thalamic inputs). Taking extrinsic activity into account becomes particularly important when neural activity is not analyzed in an isolated context, e.g., in neural slices, but rather directly in a portion of the animal brain, as we do here.

To this end, we introduce a paradigmatic model of N continuous real variables \((v_1, \dots , v_N)\), denoting the activity of N units (e.g., neurons or, as in our case, distinct populations of neurons as measured by our LFPs). Intrinsic activity corresponds to pairwise interactions among these units, whereas extrinsic activity is modeled through a common external input that affects all units in the same way. To fix ideas, we first consider the simple case of a multivariate Ornstein-Uhlenbeck (mOU) process, in which the external input corresponds to a common modulation of the noise strength. Although not realistic from a biophysical point of view, this model is simple enough to be treated analytically and to provide a clear physical interpretation, while being complex enough to display non-trivial behaviors47. Moreover, mOU processes have already been considered in the literature in the context of fMRI signals48,49,50,51. As we will see, the results we obtain for extrinsic activity are qualitatively unchanged in more biophysically sound models. Therefore, we first consider

where \(\eta _i(t)\) are standard white noises, A is an \(N\times N\) symmetric matrix and \({\mathscr{D}}(t)>0\) corresponds to a noise strength modulation from an external input shared among all the units. We also write \(A_{ij} = \frac{\delta _{ij}}{\gamma _i} - {\mathscr{W}}_{ij}\), where \(\delta _{ij}\) is the Kronecker delta, \(\gamma _i\) is the characteristic time of the i-th unit, and \({\mathscr{W}}\) is the matrix of the effective synaptic strengths, whose diagonal entries are set to zero. In order to derive analytical results, following17, we define the noise modulation \({\mathscr{D}(t)}\) as

\[{\mathscr{D}}(t) = \max \left\{ D(t),\, {\mathscr{D}^{*}}\right\} , \qquad (2)\]

where D(t) is itself an OU process \({\dot{D}}(t) = -D(t)/\gamma _D + \sqrt{\theta } \eta _D(t)\) and \({\mathscr{D}^{*}} >0\) is a properly chosen threshold. Therefore, the noise modulation \({\mathscr{D}(t)}\) alternates between periods in which it is constant in time and equal to \({\mathscr{D}^{*}}\) and periods in which it follows the OU process, with values \({\mathscr{D}}(t)>{\mathscr{D}^{*}}\).
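To make the extrinsic model concrete, here is a minimal Euler–Maruyama sketch of Eq. (1) driven by the thresholded modulation. It assumes that the modulation enters Eq. (1) as a noise term \(\sqrt{2{\mathscr{D}}(t)}\,\eta_i(t)\) (the prefactor is our assumption) and that \({\mathscr{D}}(t) = \max\{D(t), {\mathscr{D}^{*}}\}\). The function name, time step and run length are illustrative choices, while \(\gamma_D = 15\), \(\theta = 1\), \(\gamma_i = 0.05\) and \({\mathscr{D}^{*}} = 0.3\) follow the Fig. 2 caption.

```python
import numpy as np


def simulate_extrinsic_ou(A, gamma_D=15.0, theta=1.0, D_star=0.3,
                          dt=1e-3, n_steps=20_000, seed=0):
    """Euler-Maruyama sketch of dv = -A v dt + sqrt(2 D_mod) dW, with a common
    thresholded modulation D_mod = max(D, D_star), dD = -D/gamma_D dt + sqrt(theta) dW_D.
    """
    rng = np.random.default_rng(seed)
    N = A.shape[0]
    xi_D = rng.standard_normal(n_steps)       # noise driving the shared modulation
    xi_v = rng.standard_normal((n_steps, N))  # independent noises of the units
    v = np.zeros(N)
    D = 0.0
    vs = np.empty((n_steps, N))
    D_mods = np.empty(n_steps)
    for t in range(n_steps):
        D += -D / gamma_D * dt + np.sqrt(theta * dt) * xi_D[t]
        D_mod = max(D, D_star)                # constant at D_star unless D exceeds it
        v = v - (A @ v) * dt + np.sqrt(2.0 * D_mod * dt) * xi_v[t]
        vs[t] = v
        D_mods[t] = D_mod
    return vs, D_mods


# Extrinsic model: no couplings, A = diag(1 / gamma_i) with gamma_i = 0.05
N, gamma = 8, 0.05
A_ext = np.eye(N) / gamma
vs, D_mods = simulate_extrinsic_ou(A_ext)
```

Passing \(A = \mathrm{diag}(1/\gamma_i) - {\mathscr{W}}\) with a non-zero coupling matrix gives the interacting model in the same way; the time step must stay well below the smallest \(\gamma_i\).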

We first consider the case in which the units are driven only by this extrinsic activity and not by the intrinsic one, i.e., we set the internal interactions to \({\mathscr{W}}_{ij} = 0\), \(\forall i, j\). We refer to this case as the “extrinsic model”. Then, we add back interactions by reconstructing an effective connectivity49 in order to match the correlation matrix of our data, hence considering the “interacting model”.

Scale-free avalanches from extrinsic activity

In the absence of internal interactions, at each time step the units are conditionally independent given the common external modulation \({\mathscr{D}}\). However, we typically do not have experimental access to such external modulation. In general, we can only describe the joint stationary probability distribution \(p(v_1, \dots , v_N)\) of the units alone. Let us now assume that \(\gamma _{D} \gg \gamma _i\), i.e., that the modulation evolves much more slowly than the units19. In this limit, the process of \(v_i\) reaches stationarity much faster than the process of \({\mathscr{D}}\), and the joint stationary distribution is given by52

\[p(v_1, \dots , v_N) = \int d{\mathscr{D}}\, p({\mathscr{D}}) \prod _{i=1}^N p(v_i|{\mathscr{D}}),\]

where \(p(v_i|{\mathscr{D}})\) is the stationary solution to the Fokker-Planck equation53 associated to Eq. (1) at a fixed \({\mathscr{D}}\), and \(p({\mathscr{D}})\) is the stationary solution associated to Eq. (2). Notice that, although the conditional probability distribution is factorizable, in general \(p(v_1, \dots , v_N) \ne \prod _{i=1}^Np(v_i)\), i.e., the presence of the unobserved modulation results in an effective dependence between the units.

With the choice of an Ornstein-Uhlenbeck process for \(v_i\) described in the previous section, \(p(v_i | {\mathscr{D}})\) is Gaussian and we are able to compute these distributions analytically. We find

where H is the Heaviside step function, and

As anticipated, \(p(v_i, v_j) \ne p(v_i)p(v_j)\). In principle, the joint probability distribution of any number of variables can be computed in the same way. Crucially, notice that

\[\langle v_i v_j\rangle = \langle v_i\rangle \langle v_j\rangle = 0 \quad \forall \, i \ne j, \qquad (6)\]

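which implies that the units, although not independent, are always uncorrelated. This follows immediately from the fact that all expectation values in which a variable \(v_i\) appears an odd number of times vanish, e.g.,

\[\langle v_i v_j\rangle = \int d{\mathscr{D}}\, p({\mathscr{D}}) \int dv_i\, v_i\, p(v_i|{\mathscr{D}}) \int dv_j\, v_j\, p(v_j|{\mathscr{D}}) = 0,\]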

since \(\int dv_i \,v_i \,p(v_i | {\mathscr{D}}) = 0\). Therefore, in the extrinsic Ornstein-Uhlenbeck model, the variables are always uncorrelated. This property will be useful when we consider the case of a non-zero \({\mathscr{W}}_{ij}\), which will then be the sole source of correlations in the model.
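The statement that the units are uncorrelated but not independent can be checked by sampling directly from the slow-modulation mixture. This sketch assumes that \(p(v_i|{\mathscr{D}})\) is a zero-mean Gaussian with variance \(\gamma_i{\mathscr{D}}\) (which follows from a noise prefactor \(\sqrt{2{\mathscr{D}}}\), itself an assumption) and samples \({\mathscr{D}} = \max\{D, {\mathscr{D}^{*}}\}\) with \(D \sim \mathcal{N}(0, \theta\gamma_D/2)\), the stationary law of the OU modulation. Under these assumptions the linear correlation vanishes, while the squares are positively correlated, with \(\mathrm{corr}(v_i^2, v_j^2) = \mathrm{Var}({\mathscr{D}})/(3\langle{\mathscr{D}}^2\rangle - \langle{\mathscr{D}}\rangle^2)\).

```python
import numpy as np
from scipy.stats import norm

# Parameters of the Fig. 2 caption for the extrinsic model
gamma_D, theta, D_star = 15.0, 1.0, 0.3
gamma = np.array([0.05, 0.05])
n = 1_000_000

rng = np.random.default_rng(1)
# Stationary OU modulation D ~ N(0, theta * gamma_D / 2), thresholded at D_star
s = np.sqrt(theta * gamma_D / 2.0)
D_mod = np.maximum(rng.normal(0.0, s, size=n), D_star)
# Conditionally independent Gaussian units, assuming Var(v_i | D) = gamma_i * D
v = np.sqrt(gamma[None, :] * D_mod[:, None]) * rng.standard_normal((n, 2))

corr_v = np.corrcoef(v[:, 0], v[:, 1])[0, 1]              # ~ 0: uncorrelated
corr_v2 = np.corrcoef(v[:, 0] ** 2, v[:, 1] ** 2)[0, 1]   # > 0: not independent

# Closed-form moments of max(D, D_star) for Gaussian D
a = D_star / s
m1 = D_star * norm.cdf(a) + s * norm.pdf(a)                           # E[D_mod]
m2 = D_star**2 * norm.cdf(a) + s**2 * (norm.sf(a) + a * norm.pdf(a))  # E[D_mod^2]
corr_v2_theory = (m2 - m1**2) / (3.0 * m2 - m1**2)

print(f"corr(v1, v2)     = {corr_v:+.4f}")
print(f"corr(v1^2, v2^2) = {corr_v2:+.4f} (theory {corr_v2_theory:.4f})")
```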

The dependence between the units induced by the modulation is shaped by the parameter \({\mathscr{D}^{*}}\). If \({\mathscr{D}^{*}}\) is high enough, excursions of D(t) above the threshold are rare and the units are always dominated by noise, as shown in Fig. 2c. On the other hand, if \({\mathscr{D}^{*}}\) is small, whenever \({\mathscr{D}}(t) = {\mathscr{D}^{*}}\) the noise contribution to the units is negligible and the activity follows an exponential decay. Therefore, in this regime, each \(v_i\) typically alternates periods of quasi-silence with periods of activity. In other words, depending on the value of \({\mathscr{D}^{*}}\), this model can reproduce either a noise-driven behavior or a bursty, coordinated one, as shown in Fig. 2a.

Most importantly, the low-\({\mathscr{D}^{*}}\) regime also marks the onset of power-law distributed avalanches of neural activity, as we see by simulating the model at different \({\mathscr{D}^{*}}\) and performing the same analysis as for the LFPs. We find that, as \({\mathscr{D}^{*}}\) decreases, a transition from exponentially decaying avalanches to power-law distributed ones appears. Figures 2e–g show that for small enough \({\mathscr{D}^{*}}\), the stochastic modulation produces scale-free avalanches in both size and duration, with exponents \(\tau ^\mathrm {ext} = 1.60 \pm 0.01\) and \(\tau ^\mathrm {ext}_t = 1.77 \pm 0.01\). Crucially, these avalanches satisfy the crackling-noise relation: we find \(\delta _\mathrm {fit}^\mathrm {ext} = 1.21 \pm 0.01\) from the fit, while we expect \(\delta _\mathrm {pred}^\mathrm {ext} = 1.28 \pm 0.02\) from the avalanche exponents. Moreover, the rescaled temporal profiles of these avalanches collapse onto a single curve, and the avalanche exponents are unaffected by the size of the system (see Supplementary Information). These results stem from the low value of \({\mathscr{D}^{*}}\), that is, from the alternation of periods of low and high noise strength. In fact, for higher \({\mathscr{D}^{*}}\), the noise strength is always high and only exponentially decaying avalanches are present, as we see in Fig. 2h–j. Notably, in this high-\({\mathscr{D}^{*}}\) regime, rare but large events corresponding to periods in which \({\mathscr{D}}>{\mathscr{D}^{*}}\) result in non-exponential tails of the distribution. This suggests that the shift between exponential and power-law avalanches is smooth, and indeed the avalanche exponents change gradually as \({\mathscr{D}^{*}}\) becomes smaller (see Supplementary Information).

Avalanche statistics generated by the model at \({\mathscr{D}^{*}} = 0.3\) (a–b, e–g) and at \({\mathscr{D}^{*}} = 5\) (c–d, h–j), with \(\gamma _D = 15\), \(\theta = 1\), and \(\gamma _i = \gamma = 0.05\) for the extrinsic model. (a–b) Comparison between the trajectories of \({\mathscr{D}}(t)\), \(v_i\) and the corresponding discretization in the low-\({\mathscr{D}^{*}}\) regime for (a) the extrinsic model and (b) the interacting one. (c–d) Same, but in the high-\({\mathscr{D}^{*}}\) regime. (e–g) If \({\mathscr{D}^{*}}\) is low, avalanches are power-law distributed with almost identical exponents in the extrinsic and interacting models, \(\tau ^\mathrm {ext} = 1.60 \pm 0.01\), \(\tau ^\mathrm {int} = 1.55 \pm 0.01\) and \(\tau ^\mathrm {ext}_t = 1.77 \pm 0.01\), \(\tau ^\mathrm {int}_t = 1.74 \pm 0.01\). The crackling-noise relation is verified in both cases. (h–j) Same plots, now in the high-\({\mathscr{D}^{*}}\) regime. Avalanches are now fitted with an exponential distribution. Notice that larger events, corresponding to periods in which \({\mathscr{D}}(t)>{\mathscr{D}^{*}}\), show up in the distributions’ tails, suggesting that the shift between exponentials and power laws is smooth. (j) The average avalanche size as a function of the duration scales with an exponent that, as \({\mathscr{D}^{*}}\) increases, approaches the trivial value \(\delta _\mathrm {fit}^\mathrm {ext} \approx \delta _\mathrm {fit}^\mathrm {int} \approx 1\).

Let us note that these exponents differ from the ones obtained in LFP data, but this is perhaps not surprising. In fact, besides the simplicity of this paradigmatic model, such exponents have been found to depend on the experimental settings54 and on individual variability24. Nevertheless, our framework reproduces the scaling exponent \(\delta \approx 1.28\)24,25, which we also find in our experimental settings in MUA data41. Notably, in critical systems \(\delta\) is expected to obey the crackling-noise relation \(\delta = (\tau _t -1)/(\tau -1)\)21,24. However, following arguments similar to the ones proposed in27, this relation can be derived from the sole assumptions that avalanches are power-law distributed and that they satisfy \(s \sim T^\delta\), i.e., that fluctuations in the size of an avalanche given its duration are negligible. Then, since \(P(T)\,dT = P(s)\,ds\),

\[P(T) = P(s)\frac{ds}{dT} \sim \left( T^{\delta }\right) ^{-\tau } T^{\delta -1} = T^{-[\delta (\tau -1)+1]} \equiv T^{-\tau _t},\]

from which it follows immediately that \(\delta = (\tau _t -1)/(\tau -1)\). These assumptions are certainly satisfied at critical points, where the exponent \(\delta\) is related to other critical exponents by a number of scaling relations21. Yet, the crackling-noise relation may also hold in other settings, as we find in our modeling framework.

Scale-free correlations from internal couplings

We have shown that in the paradigmatic model described by Eq. (1) with \({\mathscr{W}}_{ij} = 0\) the extrinsic modulation alone generates power-law avalanches in the low-\({\mathscr{D}^{*}}\) limit. Yet, this extrinsic activity cannot explain correlations such as the ones observed in Fig. 1e, as shown by Eq. (6). Hence, we now consider the interacting model, i.e., we consider the case in which both the extrinsic and the intrinsic components of activity are present.

Since the units of the extrinsic model described in the previous section are uncorrelated, all measured correlations can be attributed to the interactions, and we can infer the values of \(A_{ij}\) directly from the data49. In particular, we solve the inverse problem so that the correlations of Eq. (1) match the experimentally measured correlations \(\sigma _{ij}\) of our LFPs (see “Methods”). The effective connectivity A obeys the Lyapunov equation

The different regimes for the interacting model with such interaction matrix are plotted in Fig. 2b and d.
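A minimal sketch of this inverse problem, under two assumptions that are ours rather than the stated Methods: the noise enters Eq. (1) as \(\sqrt{2{\mathscr{D}}(t)}\,\eta_i(t)\), and in the slow-modulation limit the stationary covariance is \(\sigma = \langle{\mathscr{D}}\rangle A^{-1}\), so that a symmetric A solves \(A\sigma + \sigma A^{T} = 2\langle{\mathscr{D}}\rangle \mathbb{1}\). SciPy's `solve_continuous_lyapunov(a, q)` solves \(aX + Xa^{H} = q\), so passing \(a = \sigma\) returns A directly. The “measured” covariance below is hypothetical.

```python
import numpy as np
from scipy.linalg import solve_continuous_lyapunov
from scipy.stats import norm


def mean_modulation(D_star, gamma_D, theta):
    """E[max(D, D_star)] for the stationary OU modulation D ~ N(0, theta * gamma_D / 2)."""
    s = np.sqrt(theta * gamma_D / 2.0)
    a = D_star / s
    return D_star * norm.cdf(a) + s * norm.pdf(a)


def infer_connectivity(sigma, D_eff):
    """Symmetric A solving A sigma + sigma A = 2 D_eff I (assumed Lyapunov form)."""
    n = sigma.shape[0]
    # solve_continuous_lyapunov(a, q) solves a X + X a^H = q; here a = sigma, X = A
    return solve_continuous_lyapunov(sigma, 2.0 * D_eff * np.eye(n))


# Hypothetical "measured" covariance: exponentially decaying correlations on a chain
n = 10
idx = np.arange(n)
sigma = np.exp(-np.abs(idx[:, None] - idx[None, :]) / 3.0)

D_eff = mean_modulation(D_star=0.3, gamma_D=15.0, theta=1.0)
A = infer_connectivity(sigma, D_eff)

gamma_inferred = 1.0 / np.diag(A)        # characteristic times, from A_ii = 1 / gamma_i
W_inferred = np.diag(np.diag(A)) - A     # W_ij = -A_ij off the diagonal, zero on it
```

In this sketch the modulation parameters enter only through the scalar \(\langle{\mathscr{D}}\rangle\), which rescales A but leaves the implied correlation matrix, and hence any correlation length computed from it, unchanged. This is in line with the statement that the scaling of \(\xi\) does not depend on \(({\mathscr{D}^{*}}, \gamma_D, \theta)\).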

As shown in Fig. 2e–g, the avalanche exponents \(\tau \approx 1.6\) and \(\tau _t \approx 1.75\) and the crackling-noise exponent \(\delta \approx 1.28\) do not change significantly when direct interactions among the units are included, nor does the high-\({\mathscr{D}^{*}}\) regime, as shown in Fig. 2h–j. The fact that the exponents do not change when we add interactions suggests that the avalanches are not affected by the interactions themselves; that is, in our model, they are determined by the extrinsic modulation. On the other hand, with these effective interactions we can now study the scaling of the correlation length \(\xi\) as a function of the system size L. We plot the results in Fig. 3a. As in our data, \(\xi\) scales linearly with L in the interacting model. In fact, we can show that, even though \(A_{ij}\) depends on the modulation parameters \(({\mathscr{D}^{*}}, \gamma _D, \theta )\), the scaling of the correlation length does not (see “Methods”). Hence, in our model, scale-free correlations not only depend strictly on the interaction network, but are also completely unaffected by avalanches.

These results have a profound implication: in our model, extrinsic and intrinsic activity are disentangled. If we measure neural avalanches alone, we may not be able to infer anything about the intrinsic neural dynamics of our model. However, we may gain access to such dynamics by investigating the correlations of the fluctuations. This disentangling is deeply related to the dependency structure of our model, which we can probe by computing the mutual information

which captures pairwise dependencies in the system that go well beyond linear correlations. In Fig. 3b we show that a non-zero mutual information emerges in the extrinsic model in the low-\({\mathscr{D}^{*}}\) limit. Remarkably, the onset of this dependency is also the onset of coordinated behavior between the units, i.e., of power-law distributed avalanches. On the other hand, the mutual information vanishes only in the trivial limit \({\mathscr{D}^{*}} \rightarrow \infty\), since at finite \({\mathscr{D}^{*}}\) Eq. (5) is never exactly factorizable. When interactions are added back, the mutual information is simply shifted by an amount independent of \({\mathscr{D}^{*}}\) (see “Methods”), as we see in Fig. 3b. Crucially, this interaction-dependent constant shift of the mutual information signals that the effects of the external modulation and of the effective interactions are completely disentangled, as recently shown for similar models52.
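The mutual information of two uncoupled units can be estimated numerically from the mixture representation of their joint distribution. This sketch assumes Gaussian conditionals with variance \(\gamma_i{\mathscr{D}}\) and a stationary modulation \(\max\{D, {\mathscr{D}^{*}}\}\) with \(D \sim \mathcal{N}(0, \theta\gamma_D/2)\). It discretizes that law into an atom at \({\mathscr{D}^{*}}\) plus bins above it and evaluates \(I(v_1;v_2) = \sum p \log[p/(p_1 p_2)]\) on a grid. Parameters follow the Fig. 3b caption (\(\theta = 1\), \(\gamma_D = 10\), \(\gamma_1 = 0.1\), \(\gamma_2 = 0.5\)); grid sizes and function names are arbitrary, hypothetical choices.

```python
import numpy as np
from scipy.stats import norm


def modulation_law(D_star, gamma_D=10.0, theta=1.0, n_bins=400, n_sd=8.0):
    """Discretized stationary law of max(D, D_star), with D ~ N(0, theta * gamma_D / 2)."""
    s = np.sqrt(theta * gamma_D / 2.0)
    edges = np.linspace(D_star, D_star + n_sd * s, n_bins + 1)
    values = np.concatenate(([D_star], 0.5 * (edges[:-1] + edges[1:])))
    weights = np.concatenate(([norm.cdf(D_star, scale=s)],      # atom at the threshold
                              np.diff(norm.cdf(edges, scale=s))))  # exact bin masses
    return values, weights / weights.sum()


def mutual_information(D_star, gamma1=0.1, gamma2=0.5, n_v=801, **kwargs):
    """Grid estimate of I(v1; v2) in nats for two uncoupled units sharing the modulation."""
    values, weights = modulation_law(D_star, **kwargs)
    sd1 = np.sqrt(gamma1 * values)  # assumed conditional std: Var(v_i | D) = gamma_i * D
    sd2 = np.sqrt(gamma2 * values)
    v1 = np.linspace(-6 * sd1.max(), 6 * sd1.max(), n_v)
    v2 = np.linspace(-6 * sd2.max(), 6 * sd2.max(), n_v)
    G1 = norm.pdf(v1[None, :], scale=sd1[:, None])  # shape (n_components, n_v)
    G2 = norm.pdf(v2[None, :], scale=sd2[:, None])
    P = (G1 * weights[:, None]).T @ G2              # mixture of product Gaussians
    P /= P.sum()                                    # discrete joint probabilities
    p1 = P.sum(axis=1, keepdims=True)
    p2 = P.sum(axis=0, keepdims=True)
    prod = p1 * p2
    mask = (P > 0) & (prod > 0)
    return float(np.sum(P[mask] * np.log(P[mask] / prod[mask])))


for D_star in (0.3, 1.0, 5.0):
    print(f"D* = {D_star}: I(v1; v2) = {mutual_information(D_star):.4f} nats")
```

Because the marginals are obtained by summing the discretized joint distribution, the estimate is a Kullback–Leibler divergence and is non-negative by construction; it should decrease towards zero as \({\mathscr{D}^{*}}\) grows.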

(a) The correlation length of the interacting model scales linearly with the system size, as in the data. In the extrinsic model, as expected, the correlation length of the fluctuations is constant and equal to 1, i.e., the correlation function drops to zero for adjacent electrodes. (b) Comparison between the mutual information in the extrinsic model (\(\theta = 1\), \(\gamma _D = 10\), \(\gamma _1 = 0.1\), \(\gamma _2 = 0.5\)) and, as an example, in the interacting model with two units. Notice that the onset of a non-vanishing mutual information induced by \({\mathscr{D}}(t)\) is also the onset of power-law distributed avalanches, whereas the mutual information arising from interactions is independent of \({\mathscr{D}^{*}}\).

Scale-free avalanches in more biologically relevant extrinsic models

The multivariate Ornstein-Uhlenbeck (mOU) process is a paradigmatic model that, albeit simple enough to allow for an analytical treatment, does not account for many biological aspects. To show that our results generalize to more biologically sound models, we now consider neural activity described by a Wilson–Cowan model55,56,57,58,59 as a variant of the extrinsic model. It includes both excitatory and inhibitory synapses and non-linearities in the transfer function, and its derivation is based on arguments about the dynamics of neurons and action potentials55, which makes it a general tool to model mesoscopic neural regions.

We consider a stochastic version of the Wilson–Cowan model56,57,58,59, which includes a stochastic term that accounts for the finite size of the populations. We consider N non-interacting neural populations, and each one is modeled through the activity of two sub-populations, one of excitatory neurons \(E_i\) and one of inhibitory neurons \(I_i\). \(E_i\) and \(I_i\) are defined as the densities of active excitatory or inhibitory neurons, and can be interpreted as firing rates. They evolve according to

where \(\alpha\) is the rate of spontaneous activity decay, \(\omega _{E, I}\) are the synaptic efficacies, and \(\eta _{E,I}\) are uncorrelated Gaussian white noises with population-size dependent strength \(\sigma \propto \frac{1}{\sqrt{K}} = \frac{1}{\sqrt{L}}\), where K and L are the numbers of excitatory and inhibitory neurons in each neural population56,58. The response function f(s) is given by

where \(s = \omega _{E} E_i - \omega _{I} I_i + h\) is the average incoming current from the other synaptic inputs and an external input h. For each unit, we are interested in the firing rate of the overall population, \(\Sigma _i = (E_i + I_i)/2\). From now on, we set \(\beta = 1\). Importantly, we consider the case in which the units are inhibition dominated, i.e., \(\omega _{I} > \omega _{E}\), have a small noise amplitude \(\sigma\), and do not interact with each other.
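Since the explicit Langevin equations are not reproduced here, the following sketch assumes a commonly used form of the stochastic Wilson–Cowan dynamics: \(\dot E_i = -\alpha E_i + (1-E_i) f(s_i) + \sigma\eta_{E,i}\) and \(\dot I_i = -\alpha I_i + (1-I_i) f(s_i) + \sigma\eta_{I,i}\), with \(f(s) = \beta\tanh(s)\) for \(s>0\) and \(f(s)=0\) otherwise. With this choice, linearizing around the quiescent state gives \(\dot\Sigma \approx (\beta\omega_0 - \alpha)\Sigma\), plus terms in the decaying E–I difference and in h, which is consistent with the critical point \(\omega_{0_C} = \alpha/\beta\) quoted in the text. Integration is Euler–Maruyama with clipping to \([0,1]\); function names, time step and clipping are illustrative choices.

```python
import numpy as np


def response(s, beta=1.0):
    """Assumed response function: beta * tanh(s) for s > 0 and zero otherwise."""
    return np.where(s > 0.0, beta * np.tanh(s), 0.0)


def simulate_wc_units(h, n_units, w_E=6.8, w_I=7.0, alpha=1.0, sigma=1e-3,
                      dt=1e-2, E0=0.0, I0=0.0, seed=0):
    """Euler-Maruyama sketch of n_units uncoupled stochastic Wilson-Cowan units
    driven by the same input time series h (one value per time step)."""
    rng = np.random.default_rng(seed)
    E = np.full(n_units, E0, dtype=float)
    I = np.full(n_units, I0, dtype=float)
    Sigma = np.empty((len(h), n_units))
    for t, h_t in enumerate(h):
        f = response(w_E * E - w_I * I + h_t)      # same current s enters E and I
        dE = (-alpha * E + (1.0 - E) * f) * dt
        dI = (-alpha * I + (1.0 - I) * f) * dt
        noise = sigma * np.sqrt(dt) * rng.standard_normal((2, n_units))
        E = np.clip(E + dE + noise[0], 0.0, 1.0)    # keep densities in [0, 1]
        I = np.clip(I + dI + noise[1], 0.0, 1.0)
        Sigma[t] = 0.5 * (E + I)                    # firing rate of the whole population
    return Sigma


# Deterministic check (sigma = 0): an inhibition-dominated unit relaxes to low activity
h_const = np.full(3000, 1e-3)
Sigma = simulate_wc_units(h_const, n_units=3, sigma=0.0, E0=0.5, I0=0.5)
print(Sigma[-1])
```

A driver population can be simulated with the same function and its own parameters (for instance `w_E=50.5, w_I=49.5, alpha=0.1` with a constant input of `1e-3`, as in the Fig. 4 caption), and its firing rate then passed as `h` to the inhibition-dominated units.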

The external modulation comes into this model through the external current h. As a potential candidate for a biological realization of this external, stochastic driving, we consider the effective input that comes from other, yet unobserved, neural populations. Hence, we model h as the firing rate \(h = \left( E^{(h)} + I^{(h)}\right) /2\) of another Wilson–Cowan model, namely

To reproduce avalanches, the external input h should be a stochastic modulation that displays bursts of activity separated by periods of silence. Importantly, it was recently shown in59 that this model admits a critical point at \(\omega _0 \equiv \omega _E - \omega _I = \omega _{0_C} = \frac{\alpha }{\beta }\), where power-law distributed avalanches emerge independently of the size of the system. Thus, a natural choice for the external stochastic modulation would be a Wilson–Cowan unit in the critical state.

Another potential candidate is still a Wilson–Cowan model, but in a balanced state56,57, defined by \(\omega ^{(h)}_0 = \omega ^{(h)}_E - \omega ^{(h)}_I\ll \omega ^{(h)}_{\mathrm{S}} = \omega ^{(h)}_E + \omega ^{(h)}_I\), with parameters set so that \(\omega ^{(h)}_0 > \omega ^{(h)}_{0_C}\). Crucially, in this scenario, the mechanism giving rise to avalanches is fundamentally different. With these parameters, and in the absence of noise, the dynamics has a stable up state. Yet, by increasing the noise amplitude, this up state can be destabilized, leading to large excursions to the down state and thus to avalanches. This phenomenon is a consequence of the non-normality of the matrix describing the linearized dynamics, which arises under the condition \(\omega _0 \ll \omega _S\)57 and can make a system reactive, i.e., its dynamics can exhibit unusually long-lasting transient behaviors even if it asymptotically converges to a stable fixed point.
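Under an assumed form of the Wilson–Cowan equations (\(\dot E = -\alpha E + (1-E)f(s)\), \(\dot I = -\alpha I + (1-I)f(s)\), with \(f(s) = \beta\tanh(s)\) for \(s>0\)), the reactivity of the balanced driver can be checked numerically. The sketch finds the symmetric up state \(E = I = x^*\) of the noiseless dynamics, linearizes around it, and compares the eigenvalues of the Jacobian J (linear stability) with the largest eigenvalue of its symmetric part \((J + J^T)/2\); a positive value means that some perturbations grow transiently, i.e., the fixed point is reactive. Parameters follow the Fig. 4 caption for the input population; function names are hypothetical.

```python
import numpy as np
from scipy.optimize import brentq


def up_state(w_E, w_I, alpha, h, beta=1.0):
    """Symmetric fixed point E = I = x > 0 of the noiseless dynamics (assumed tanh response)."""
    w0 = w_E - w_I

    def g(x):
        return -alpha * x + (1.0 - x) * beta * np.tanh(w0 * x + h)

    return brentq(g, 1e-9, 1.0)


def jacobian(x, w_E, w_I, alpha, h, beta=1.0):
    """Linearization of the (E, I) dynamics at E = I = x, valid where s = w0 x + h > 0."""
    s = (w_E - w_I) * x + h
    f = beta * np.tanh(s)
    a = (1.0 - x) * beta * (1.0 - np.tanh(s) ** 2)  # (1 - x) f'(s)
    return np.array([[-alpha - f + a * w_E, -a * w_I],
                     [a * w_E, -alpha - f - a * w_I]])


# Balanced input population, parameters from the Fig. 4 caption
w_E, w_I, alpha, h = 50.5, 49.5, 0.1, 1e-3
x_up = up_state(w_E, w_I, alpha, h)
J = jacobian(x_up, w_E, w_I, alpha, h)

max_re_eig = np.max(np.linalg.eigvals(J).real)            # < 0: asymptotically stable
reactivity = np.max(np.linalg.eigvalsh(0.5 * (J + J.T)))  # > 0: transient amplification
non_normality = np.linalg.norm(J @ J.T - J.T @ J)          # > 0: J is non-normal
print(f"x_up = {x_up:.4f}, max Re(eig) = {max_re_eig:.3f}, "
      f"reactivity = {reactivity:.3f}, ||[J, J^T]|| = {non_normality:.3f}")
```

For these values the sketch yields an up state that is linearly stable but strongly non-normal and reactive, which is the ingredient invoked in the text for noise-induced excursions away from the up state.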

For these reasons, here we choose as an effective input h the firing rate coming from a neural population in a balanced state. In the Supplementary Information, we study the case in which such input comes instead from a population in the critical state \(\omega _{0_C} = \frac{\alpha }{\beta }\), and we show that the results are qualitatively similar to the ones reported here.

Intuitively, we are considering a case in which N populations of neurons evolve according to a WC model in the inhibition-dominated phase, and all receive the same input from another, yet unobserved, population of neurons.

Although we cannot tackle this model analytically, we simulate the Langevin equations, Eqs. (10) and (12), and from each firing rate \(\Sigma _i\) we generate trains of events and analyze avalanches by temporal binning based on the average inter-event interval (see “Methods”). If the noise strength \(\sigma ^{(h)}\) is high enough, h spends most of the time close to the down state while showing frequent bursts of activity; this behavior is qualitatively equivalent to the low-\({\mathscr{D}^{*}}\) limit previously considered, and is depicted in Fig. 4a. Again, in this regime we find that avalanche sizes and durations are power-law distributed and satisfy the crackling-noise relation, as shown in Fig. 4b–d. On the other hand, if \(\sigma ^{(h)}\) is lower, the external input h is not modulated, as in the previous high-\({\mathscr{D}^{*}}\) limit. Once more, in this regime avalanches obtained through temporal binning are exponentially suppressed in both size and duration, as shown in Fig. 4e–h.
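A minimal sketch of the temporal-binning step, assuming that event times have already been extracted from each \(\Sigma_i\) and pooled across units (how events are generated is left to the Methods and not reproduced here). Following the usual convention, the bin width is set to the average inter-event interval of the pooled train, and an avalanche is a maximal run of non-empty bins bounded by empty bins, with size equal to the number of events and duration equal to the number of bins. All function names are hypothetical.

```python
import numpy as np


def mean_inter_event_interval(event_times):
    """Average interval between consecutive events pooled over all channels."""
    t = np.sort(np.asarray(event_times, dtype=float))
    return float(np.mean(np.diff(t)))


def bin_events(event_times, bin_width, t_max):
    """Number of pooled events in consecutive bins of width bin_width covering [0, t_max]."""
    n_bins = int(np.ceil(t_max / bin_width))
    edges = np.arange(n_bins + 1) * bin_width
    counts, _ = np.histogram(event_times, bins=edges)
    return counts


def detect_avalanches(counts):
    """Avalanches as maximal runs of non-empty bins bounded by empty ones.

    Returns sizes (total events per run) and durations (number of bins per run).
    """
    counts = np.asarray(counts)
    active = np.concatenate(([0], (counts > 0).astype(int), [0]))
    jumps = np.diff(active)
    starts = np.nonzero(jumps == 1)[0]   # first bin of each run
    ends = np.nonzero(jumps == -1)[0]    # one past the last bin of each run
    sizes = np.array([counts[a:b].sum() for a, b in zip(starts, ends)])
    return sizes, ends - starts


counts = np.array([0, 2, 1, 0, 0, 3, 0, 1, 1, 1])
sizes, durations = detect_avalanches(counts)
print(sizes, durations)  # [3 3 3] [2 1 3]
```

In practice one would call `bin_events(events, mean_inter_event_interval(events), t_max)` and pass the counts to `detect_avalanches`; the resulting sizes and durations are the quantities whose distributions are fitted in the avalanche analysis.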

Hence, the proposed extrinsic mechanism is valid beyond the paradigmatic case of the Ornstein-Uhlenbeck process considered previously. Finally, we note that a balanced \(h = \left( E^{(h)} + I^{(h)}\right) /2\) is only one of the possible choices for the external modulation. We believe it is a choice with considerable biological realism, being linked to E-I balance31. Moreover, a wide range of parameters satisfies this condition, so extreme fine-tuning is not required, as long as the size of the system remains finite (e.g., for our choice of parameters, at a noise level \(\sigma ^{(h)} = 5 \times 10^{-3}\), corresponding to \(K = L = 40000\) neurons, avalanches are not present56). Other choices of external modulation are possible, provided that they generate bursts of activity separated by periods of silence. For instance, another relevant choice would be Brunel’s model in the synchronous irregular phase60.

Avalanche statistics generated by the Wilson–Cowan units. The Wilson–Cowan units are always in an inhibition-dominated phase, i.e., \(\omega _{I} = 7\) and \(\omega _{E} = 6.8\), with \(\alpha = 1\). Their external input h is instead always in a balanced state, with \(\omega ^{(h)}_{E} = 50.5\) and \(\omega ^{(h)}_{I} = 49.5\). Its other parameters are \(h^{(h)} = 10^{-3}\) and \(\alpha ^{(h)} = 0.1\). In panels (a–d), the noise amplitude \(\sigma ^{(h)}\) is increased to \(2.5 \times 10^{-2}\), so that the up state can be destabilized by the noise. In panels (e–h), instead, the noise is reduced to \(5 \times 10^{-3}\), so that the up state is stable. (a, e) Comparison between the trajectories of h, \(\frac{E_i + I_i}{2}\) and the corresponding trains of events in the high (a) and low (e) \(\sigma ^{(h)}\) regime. (b–d) If \(\sigma ^{(h)}\) is high, avalanches are power-law distributed and the crackling-noise relation is verified. (f–g) Same plots, now in the low-\(\sigma ^{(h)}\) regime. Avalanches are now fitted with an exponential distribution. (h) The average avalanche size as a function of the duration scales with an exponent that, as \(\sigma ^{(h)}\) decreases, approaches the trivial value \(\delta _\mathrm {fit} \approx 1\).

Discussion

We have analyzed spatially extended LFP data from the rat’s barrel cortex and found neuronal avalanches that satisfy the crackling-noise relation. However, a variety of mechanisms can generate these properties. Nonetheless, our data also display a correlation length that scales linearly with the size of the system, a key feature of critical systems. To understand the possible origin of these signatures of criticality, we have developed an archetypal, analytically tractable framework in which the intrinsic contributions to the neuronal activity, due to the direct interactions between the units themselves, and the extrinsic ones, arising from externally driven modulated activity, are exactly disentangled. Our work fits into a well-established and fruitful line of research that studies null mechanisms for the emergence of neuronal avalanches17,19. We believe that disentangling such null mechanisms from more biologically insightful properties (e.g., neural correlations) is instrumental to understanding what avalanches can teach us about neuronal and brain dynamics. Thus, building on recent works that have stressed the importance of considering external inputs when studying brain criticality54, our work highlights the importance of disentangling the extrinsic and intrinsic factors that both inherently contribute to neural activity.

With a properly chosen external modulation, our model displays a regime in which power-law avalanches satisfying the crackling-noise relation emerge, with an exponent compatible with the value \(\delta \approx 1.28\) found in24,25. Crucially, this result also holds in other extrinsic models, such as Wilson–Cowan units modulated by an effective input coming from unobserved neural populations. The same value of the \(\delta\) exponent has been found in a variety of neural systems, and our results suggest that it could be explained by slowly varying extrinsic dynamics19 that affect all the neural units in the same way. On the other hand, it was recently shown26 that this exponent may arise as a consequence of measuring only a fraction of the total neural activity, i.e., of subsampling. Let us also note that avalanches with all exponents compatible with a critical branching process (i.e., with \(\delta = 2\)) were found experimentally in61, once the role of gamma oscillations was properly taken into account. Further work is still needed to understand the emergence of such exponents and the conditions under which they are robustly reproduced in experiments.
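The crackling-noise relation can be made concrete with a short numerical sketch. In our own notation (an assumption, since the exponent symbols are not defined in this section), sizes and durations are distributed as \(p(S) \sim S^{-\tau}\) and \(p(T) \sim T^{-\tau_t}\), and the relation predicts \(\langle S \rangle(T) \sim T^{\delta}\) with \(\delta = (\tau_t - 1)/(\tau - 1)\). For the mean-field branching-process exponents \(\tau = 3/2\) and \(\tau_t = 2\) this gives \(\delta = 2\), the value quoted above. The hypothetical helper `fitted_delta` estimates \(\delta\) directly from avalanche data by a least-squares fit of \(\log \langle S \rangle\) against \(\log T\), which is only one simple choice among several.

```python
import numpy as np

def crackling_delta(tau: float, tau_t: float) -> float:
    """Exponent predicted by the crackling-noise relation <S>(T) ~ T^delta."""
    return (tau_t - 1.0) / (tau - 1.0)

def fitted_delta(sizes, durations) -> float:
    """Slope of log <S>(T) vs log T, averaging sizes at each observed duration."""
    sizes = np.asarray(sizes, dtype=float)
    durations = np.asarray(durations)
    unique_T = np.unique(durations)
    mean_S = np.array([sizes[durations == T].mean() for T in unique_T])
    slope, _intercept = np.polyfit(np.log(unique_T), np.log(mean_S), 1)
    return slope

# Mean-field branching process: tau = 3/2, tau_t = 2.
print(crackling_delta(1.5, 2.0))

# Synthetic (hypothetical) avalanches whose mean size scales as T^1.28.
T = np.arange(1, 50)
print(round(fitted_delta(T ** 1.28, T), 3))
```

Comparing `crackling_delta` evaluated on the fitted size and duration exponents with `fitted_delta` is what "verifying the crackling-noise relation" amounts to in practice.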

At the same time, while scale-free spatial correlations can and do coexist with power-law avalanches, they cannot be explained by the extrinsic activity alone. Crucially, our archetypal model allows us to combine this extrinsic dynamics with an intrinsic interaction matrix, inferred directly from the experimental data to match the spatial correlations we find in our experiments. When we do so, we show that these two signatures of criticality can be disentangled: avalanches appear as a consequence of the external modulation and are only slightly affected by the interactions, while, vice versa, the interactions determine the spatial correlations independently of the external modulation. Hence, we believe that scale-free correlations may be deeply related to the origin of criticality in the brain, and may play a fundamental role in the advantages it might gain by being critical9,15,62.

Remarkably, it was recently shown that in models similar to the one considered here the mutual information always receives distinct and disentangled contributions from the internal interactions and from an environmental, external dynamics52. Although, clearly, the presence of a non-zero mutual information cannot be a sufficient condition for power-law avalanches to appear, in our extrinsic model their emergence does correspond to the onset of a non-vanishing mutual information. This fact suggests a promising future perspective. By explicitly considering both the intrinsic activity and the extrinsic contributions, one might be able to combine all these considerations into a unified information-theoretic view—perhaps helping to unfold the underlying biological mechanisms at the origin of the observed signatures of criticality in neural activity.

Methods

The study is reported in accordance with the ARRIVE guidelines.

Experimental setting

Surgical procedures

LFP recordings are performed on Wistar rats, which are maintained under standard environmental conditions in the animal research facility of the Department of Biomedical Sciences of the University of Padova. All procedures are approved by the local Animal Care Committee (O.P.B.A.) and the Italian Ministry of Health (authorization number 522/2018-PR), and all methods are performed in accordance with relevant guidelines and regulations. Young adult rats aged 36 to 43 days and weighing between 150 and 200 g are anesthetized with an intraperitoneal induction mixture of tiletamine-xylazine (2 mg and 1.4 \(\upmu\)g/100 g body weight, respectively), followed by additional doses (0.5 mg and 0.5 \(\upmu\)g/100 g body weight) every hour. The anesthesia level is constantly monitored by testing the absence of eye and hind-limb reflexes and of spontaneous whisker movements. Each animal is positioned in a stereotaxic apparatus, where the head is fixed by teeth and ear bars. To expose the cortical area of interest, an anterior-posterior incision of the skin is made in the center of the head, and a window in the skull is drilled over the somatosensory barrel cortex at stereotaxic coordinates \(-1\) to \(-4\) AP and \(+4\) to \(+8\) ML, referred to bregma63. A slit in the meninges is then carefully made with fine forceps at coordinates \(-2.5\) AP, \(+6\) ML for the subsequent insertion of the recording probe. As a reference, the depth is set at 0 \(\upmu\)m when the electrode proximal to the chip tip touches the cortical surface. The neuronal activity is recorded from the entire barrel cortex (from 0 to \(- 1750 \,\upmu\text{m}\)), which is constantly bathed in Krebs’ solution (in mM: NaCl 120, KCl 1.99, NaHCO3 25.56, KH2PO4 136.09, CaCl2 2, MgSO4 1.2, glucose 11). An Ag/AgCl electrode bathed in the extracellular solution in proximity of the probe is used as reference.

Recordings

LFPs are recorded through a custom-made needle that integrates a high-density array whose electrodes are organized in a \(64\times 4\) matrix. The multi-electrode arrays used to record LFPs operate on the principle of an extended CMOS-based EOSFET (Electrolyte Oxide Semiconductor Field Effect Transistor). The recording electrodes are 7.4 \(\upmu\)m in diameter, and the needle is 300 \(\upmu\)m wide and 10 mm long. The x- and y-pitch (i.e., the distance between adjacent recording sites) are 32 \(\upmu\)m. The multiplexed signals are digitized by a NI PXIe-6358 (National Instruments) at up to 1.25 MS/s with 16-bit resolution and saved to disk by custom LabVIEW acquisition software. The LFP signal is sampled at 976.56 Hz and band-pass filtered (2–300 Hz). The dataset analyzed in this work consists of 20 trials of basal activity, each lasting 7.22 s, recorded from 4 rats.
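An offline equivalent of the 2–300 Hz band-pass can be sketched with SciPy. This is a minimal illustration and not the acquisition pipeline: the zero-phase fourth-order Butterworth design and the function name `bandpass_lfp` are assumptions, since the text only specifies the pass band and the 976.56 Hz sampling rate.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 976.56            # LFP sampling rate stated in the text (Hz)
BAND = (2.0, 300.0)    # pass band stated in the text (Hz)

def bandpass_lfp(x, fs=FS, band=BAND, order=4):
    """Zero-phase Butterworth band-pass along the last axis (channels x samples).

    The Butterworth design and order=4 are illustrative assumptions.
    """
    sos = butter(order, band, btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, x, axis=-1)

# One synthetic 7.22 s trial: a 50 Hz component plus a slow 0.2 Hz drift.
t = np.arange(int(7.22 * FS)) / FS
raw = np.sin(2 * np.pi * 50 * t) + 5 * np.sin(2 * np.pi * 0.2 * t)
filtered = bandpass_lfp(raw)
```

Second-order sections (`output="sos"`) are used because a 2 Hz cutoff is very low relative to the Nyquist frequency, where the transfer-function form can be numerically fragile.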

LFPs peaks detection

For the detection of LFP events, the mean and the standard deviation (SD) of the signal were computed for each channel. To distinguish real events from noise, a threshold of three SDs was chosen, since above this threshold the distribution of the signal amplitudes deviated significantly from its best Gaussian fit. Both negative and positive LFPs (nLFPs and pLFPs, respectively) were considered as events, in accordance with previous works64. One reason is that the LFP signal changes polarity across the depth of the cortex because of compensatory capacitive ionic currents, particularly along the dendrites of pyramidal cells65. Since in our experiments the electrodes span multiple cortical layers, both nLFPs and pLFPs were found and detected. Alternatively, pLFPs can be related to the activation of populations of inhibitory neurons. During detection, each deflection was considered terminated only after it crossed back over the mean of the signal.
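The detection rule above can be sketched for a single channel as follows. Mean and SD are computed over the whole trace, both polarities are detected, and each deflection ends when the signal crosses back over its mean; reporting the time of maximal deviation within each deflection as the event time is our assumption, as is the hypothetical function name `detect_lfp_peaks`.

```python
import numpy as np

def detect_lfp_peaks(x, n_sd=3.0):
    """Peak indices and polarities (-1: nLFP, +1: pLFP) of events in one channel.

    An event starts when |x - mean| exceeds n_sd * SD and is considered
    terminated only once the signal crosses back over its mean.
    """
    x = np.asarray(x, dtype=float)
    dev = x - x.mean()
    thr = n_sd * x.std()
    peaks, polarities = [], []
    i, n = 0, len(dev)
    while i < n:
        if abs(dev[i]) > thr:
            sign = np.sign(dev[i])
            j = i
            # extend the event until the deviation changes sign (mean crossing)
            while j < n and np.sign(dev[j]) == sign:
                j += 1
            peaks.append(i + int(np.argmax(np.abs(dev[i:j]))))
            polarities.append(int(sign))
            i = j
        else:
            i += 1
    return np.array(peaks, dtype=int), np.array(polarities, dtype=int)

# Applied channel by channel to a hypothetical array lfp of shape (channels, samples):
# events = [detect_lfp_peaks(ch) for ch in lfp]
```

The explicit loop keeps the termination rule readable; a vectorized version would be preferable for long recordings.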

Avalanches analysis

To study avalanche statistics, the data are temporally binned, with the bin width set to the average inter-event interval1. Avalanches are defined as sequences of consecutive bins containing activity, and an avalanche ends once an empty bin is found. Then, the distributions of avalanche sizes p(s), i.e., of the number of events in each avalanche, and of avalanche durations p(T) are computed and fitted using a corrected maximum likelihood method41,42. In particular, following the methods proposed in42,66, avalanche sizes and durations are fitted with discrete power laws \(p(y; \alpha ) = \frac{y^{-\alpha }}{\sum _{x = x_{min}}^{x_{max}}x^{-\alpha }}\). The parameter \(x_{max}\) is set to the maximum observed size or duration, while \(x_{min}\) is selected as the value that minimizes the Kolmogorov–Smirnov (KS) distance between the cumulative distribution function (CDF) of the data and the CDF of the power law fitted to the data with \(y \ge x_{min}\)66. To assess goodness of fit, we compared the experimental data against 1000 surrogate datasets drawn from the best-fit power-law distribution, each with the same number of samples as the experimental dataset. The data were considered power-law distributed if the fraction of surrogates whose KS statistic was greater than that of the experimental data exceeded 0.1. We also take into account that, while maximum likelihood methods rely on the assumption of independent observations, actual data often display correlations, which may lead to a false rejection of statistical laws42. As the authors of42 suggest, before performing the fit and assessing p-values, we undersample the data to decorrelate them, estimating the time \(\tau ^{*}\) after which two observations (e.g., avalanche sizes) are independent of each other, as done in41,42.
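A minimal sketch of the avalanche definition and of the truncated discrete power-law fit follows. It assumes event times pooled across channels, uses a bounded one-dimensional search for \(\alpha\) (the interval (0.5, 5) is our choice), and selects \(x_{min}\) by minimizing the KS distance; the 1000-surrogate goodness-of-fit test and the decorrelating undersampling of42 are omitted for brevity. All function names are hypothetical.

```python
import numpy as np
from scipy.optimize import minimize_scalar
from scipy.special import logsumexp

def find_avalanches(event_times, bin_width=None):
    """Avalanche sizes and durations (in bins) from event times pooled over channels.

    bin_width defaults to the average inter-event interval, as in the text.
    """
    t = np.sort(np.asarray(event_times))
    if bin_width is None:
        bin_width = np.mean(np.diff(t))
    counts = np.bincount(((t - t[0]) // bin_width).astype(int))
    sizes, durations = [], []
    size = dur = 0
    for c in counts:
        if c > 0:                    # active bin: grow the current avalanche
            size += c
            dur += 1
        elif dur > 0:                # first empty bin closes the avalanche
            sizes.append(size)
            durations.append(dur)
            size = dur = 0
    if dur > 0:                      # the last bin is always active
        sizes.append(size)
        durations.append(dur)
    return np.array(sizes), np.array(durations)

def fit_discrete_power_law(y, x_min, x_max=None):
    """MLE of alpha for p(y) = y^-alpha / sum_{x=x_min}^{x_max} x^-alpha.

    Returns (alpha, KS distance between empirical and fitted CDFs).
    """
    y = np.asarray(y)
    x_max = y.max() if x_max is None else x_max
    y = y[(y >= x_min) & (y <= x_max)]
    support = np.arange(x_min, x_max + 1)
    log_support = np.log(support)
    sum_log_y = np.log(y).sum()

    def neg_loglik(alpha):
        # -log L = alpha * sum(log y) + n * log Z(alpha)
        return alpha * sum_log_y + len(y) * logsumexp(-alpha * log_support)

    alpha = minimize_scalar(neg_loglik, bounds=(0.5, 5.0), method="bounded").x
    pmf = np.exp(-alpha * log_support - logsumexp(-alpha * log_support))
    emp_cdf = np.searchsorted(np.sort(y), support, side="right") / len(y)
    return alpha, np.max(np.abs(emp_cdf - np.cumsum(pmf)))

def select_x_min(y, min_samples=50):
    """Pick the x_min whose fit minimizes the KS distance (x_max = max(y))."""
    y = np.asarray(y)
    best = None
    for x_min in np.unique(y):
        if np.sum(y >= x_min) < min_samples:   # too few points left to fit
            break
        alpha, ks = fit_discrete_power_law(y, x_min)
        if best is None or ks < best[2]:
            best = (int(x_min), alpha, ks)
    return best  # (x_min, alpha, KS), or None if no candidate qualifies
```

Working with `logsumexp` keeps the normalization \(\sum_x x^{-\alpha}\) numerically stable, and the log-likelihood is concave in \(\alpha\), so a bounded scalar search finds its unique maximum.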

Scaling of the correlation length

The correlation length \(\xi\) can be defined as the average distance at which the correlation of the fluctuations around the mean crosses zero44, and it is known to diverge at criticality in the thermodynamic limit33. For finite systems, this behavior can be probed by computing the correlation length at different system sizes. For each time series, we compute its fluctuations around the mean activity,

Different sizes of the system, i.e., different portions of the array, are selected and, importantly, the mean activity is computed separately for each system size, considering only the channels inside the selected portion of the array45. Hence, we study the behavior of the correlation length for system sizes corresponding to different subsamples of the multi-electrode array probe36. We assume that the units of our model have the same topology as our data, i.e., that they are placed as the channels in the \(55 \times 4\) array of our experimental setup. Since the array is rectangular, we take the number of rows L as the relevant dimension and build subsampled systems of size \(L \times 4\), with L decreasing from the maximum of 55 rows down to 5 rows.

For each subset of the system, we compute the average correlation function of the fluctuations between all pairs of channels separated by a distance r,

where \(\langle {\cdot }\rangle _t\) stands for the average over time, \(\langle {\cdot }\rangle _{i,j}\) is the average over all pairs of channels separated by a distance r and

where T is the length of the time series. Then \(\xi\) is computed as the zero of the correlation function, \(C(r = \xi ) = 0\). To reduce noise, results are averaged across all possible sub-regions of a given size. Finally, the values of \(\xi\) are plotted against the system size L, and the slope is obtained through linear regression.
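Since the defining equations are not reproduced in this text, the sketch below makes its assumptions explicit: fluctuations are taken around the instantaneous mean over the channels of each subsystem and normalized to unit variance, \(C(r)\) averages the time-averaged products over pairs at the same Euclidean distance, and \(\xi\) is the first zero crossing, located by linear interpolation with \(C(0) = 1\) prepended. Windows of \(L \times 4\) channels are slid over the array and \(\xi\) is averaged over them; all function names are hypothetical.

```python
import numpy as np

def zero_crossing(r, C):
    """First distance at which C(r) crosses zero, by linear interpolation."""
    neg = np.nonzero(C < 0)[0]
    if len(neg) == 0 or neg[0] == 0:
        return np.nan                          # no crossing inside the sampled range
    k = neg[0]
    return r[k - 1] + C[k - 1] * (r[k] - r[k - 1]) / (C[k - 1] - C[k])

def correlation_length(v, positions):
    """xi for one (sub)system. v: (channels, time); positions: (channels, 2)."""
    dv = v - v.mean(axis=0, keepdims=True)     # fluctuations around the mean activity
    dv = dv - dv.mean(axis=1, keepdims=True)   # zero time average per channel
    dv = dv / dv.std(axis=1, keepdims=True)    # unit variance per channel (assumption)
    corr = dv @ dv.T / v.shape[1]              # time-averaged pairwise products
    dist = np.linalg.norm(positions[:, None, :] - positions[None, :, :], axis=-1)
    iu = np.triu_indices(len(v), k=1)          # each pair of distinct channels once
    d, c = np.round(dist[iu], 9), corr[iu]
    r = np.unique(d)
    C = np.array([c[d == ri].mean() for ri in r])
    # C(0) = 1 by construction; prepending it allows a crossing before r[0]
    return zero_crossing(np.r_[0.0, r], np.r_[1.0, C])

def xi_vs_size(v_grid, pitch, sizes):
    """v_grid: (rows, cols, time). Mean xi over all L x cols windows, per L."""
    rows, cols, _ = v_grid.shape
    xis = []
    for L in sizes:
        yy, xx = np.meshgrid(np.arange(L), np.arange(cols), indexing="ij")
        pos = pitch * np.column_stack([yy.ravel(), xx.ravel()])
        vals = [correlation_length(v_grid[s:s + L].reshape(L * cols, -1), pos)
                for s in range(rows - L + 1)]  # all contiguous windows of L rows
        xis.append(np.nanmean(vals))
    return np.array(xis)
```

For a hypothetical recording `v_grid` of shape (55, 4, T) with the 32 \(\upmu\)m pitch, the slope would then follow from `sizes = np.arange(5, 56)` and `np.polyfit(sizes, xi_vs_size(v_grid, 32.0, sizes), 1)[0]`. Because fluctuations are measured around the subsystem mean, they sum to zero across channels, which is what guarantees a zero crossing in finite systems.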

Correlations in the interacting model

The process studied in the main text is a multivariate Ornstein–Uhlenbeck (mOU) process53 of the form

where B(t) is a diagonal matrix whose diagonal elements are given by \(\sqrt{{\mathscr{D}}(t)}\) and \({\varvec{Z}}(t)\) denotes a Wiener process. In the case of non-interacting units, which we use to model the extrinsic activity, the matrix A is again diagonal with entries \(A_{ij} = \delta _{ij}/\gamma _i\). If the matrix B were constant in time, the covariance matrix \(\sigma\) of the mOU would be determined by the continuous Lyapunov equation49,53
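For constant B, the Lyapunov relation can be checked numerically. The sketch below assumes the convention \(d{\varvec{v}} = -A{\varvec{v}}\,dt + B\,d{\varvec{Z}}\) (the process equation itself is not reproduced in this text), integrates an ensemble of independent trajectories with the Euler–Maruyama scheme, and compares the empirical stationary covariance with `scipy.linalg.solve_continuous_lyapunov`, which solves \(AX + XA^{H} = Q\); here \(Q = BB^{T}\). The matrices A and B are arbitrary illustrative values, not parameters inferred from our data.

```python
import numpy as np
from scipy.linalg import solve_continuous_lyapunov

rng = np.random.default_rng(42)

# Illustrative (hypothetical) parameters: symmetric, positive-definite A
# and a constant diagonal B with entries sqrt(D_i).
A = np.array([[1.0, 0.4],
              [0.4, 1.5]])
B = np.diag(np.sqrt([0.8, 1.2]))

# Analytical stationary covariance: A sigma + sigma A^T = B B^T.
sigma_theory = solve_continuous_lyapunov(A, B @ B.T)

# Euler-Maruyama for dv = -A v dt + B dZ, many independent trajectories (rows).
dt, n_steps, n_traj = 1e-2, 2000, 20000
v = np.zeros((n_traj, 2))
for _ in range(n_steps):
    dZ = rng.standard_normal((n_traj, 2)) * np.sqrt(dt)
    v += -v @ A.T * dt + dZ @ B.T
sigma_sim = np.cov(v, rowvar=False)

print(np.round(sigma_theory, 3))
print(np.round(sigma_sim, 3))
```

The integration time (20 time units) is long compared with the slowest relaxation time of A, so the ensemble has reached stationarity; the residual mismatch comes from sampling noise and the O(dt) bias of the Euler scheme.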

Since in our case the matrix B is a stochastic variable, we need to marginalize over its stationary distribution p(B). Then, we immediately get

where Q is a diagonal matrix whose elements are given by

Then, taking the transpose of Eq. (17) and assuming that A is symmetric, we end up with a Lyapunov equation for the matrix A, \(\sigma A + A \sigma = Q\). In principle, we could relax the assumption of symmetry of A by considering both the covariance matrix and the time-shifted covariances49. However, this introduces further approximations, and in the present work we are only interested in the covariance matrix. Hence, we end up with a model

where \(A_{ij}\) depends on the parameters of the stochastic modulation \(({\mathscr{D}^{*}}, \gamma _D, \theta )\). Notice that if we write \({\tilde{A}}_{ij} = A_{ij}/f({\mathscr{D}^{*}}, \gamma _D, \theta )\), we need to solve the Lyapunov equation \(\sigma {{\tilde{A}}} + \tilde{A}\sigma = \mathbbm{1}\), which only depends on \(\sigma\), the covariance matrix of the data. If we rescale the experimental time series by their standard deviations, \(\sigma\) coincides with the correlation matrix of our data, which is the matrix referred to in the main text. If we introduce \(\tilde{{\mathscr{D}}} = {\mathscr{D}}/f\) and \({\tilde{v}}_i = v_i/\sqrt{f}\), we end up with

and clearly

Therefore, the covariance between \({{\tilde{v}}}_i\) and \({{\tilde{v}}}_j\) is proportional to the covariance between \(v_i\) and \(v_j\). This implies that different values of \(({\mathscr{D}^{*}}, \theta , \gamma _D)\) yield a rescaled interaction matrix \(A_{ij}\), but the scaling of the correlation length does not change.
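When \(\sigma\) is symmetric and positive definite, as a correlation matrix estimated from data is, the equation \(\sigma \tilde{A} + \tilde{A}\sigma = \mathbbm{1}\) has a unique solution with a closed form, \(\tilde{A} = \sigma^{-1}/2\), since \(\sigma(\sigma^{-1}/2) + (\sigma^{-1}/2)\sigma = \mathbbm{1}\). The short sketch below checks this against `scipy.linalg.solve_continuous_lyapunov` on a hypothetical exponentially decaying correlation matrix (not the experimental one), and verifies that \(A = f\tilde{A}\) solves \(\sigma A + A\sigma = f\,\mathbbm{1}\), so that changing the modulation parameters only rescales the inferred interactions; the value of `f` is an arbitrary placeholder.

```python
import numpy as np
from scipy.linalg import solve_continuous_lyapunov

# Hypothetical correlation matrix: 6 channels on a line with unit spacing,
# correlations decaying as exp(-|i - j| / 2).
idx = np.arange(6)
sigma = np.exp(-np.abs(idx[:, None] - idx[None, :]) / 2.0)

# Solve sigma @ A_tilde + A_tilde @ sigma = identity.
A_tilde = solve_continuous_lyapunov(sigma, np.eye(6))
print(np.allclose(A_tilde, 0.5 * np.linalg.inv(sigma)))

# A modulation-dependent factor f only rescales the interactions.
f = 3.7  # placeholder for f(D*, gamma_D, theta)
A = f * A_tilde
print(np.allclose(sigma @ A + A @ sigma, f * np.eye(6)))
```

Uniqueness follows because the eigenvalues of \(\sigma\) are all positive, so \(\sigma\) and \(-\sigma\) share no eigenvalue.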

Mutual information in the interacting model

The stationary probability distribution of the interacting model with a generic interaction matrix A is

where the matrix \(\Sigma\) is determined by \(\left( {A}{\Sigma } + {\Sigma }{A}^T \right) /2 = \mathbbm {1}\) and

In general, we can define a multivariate information among these N variables, and the results of the main text would not change. In practice, however, the related numerical integration is very hard to perform if N is large. Therefore, in the main text we consider the exemplary case of the mutual information, i.e., the case of two variables that interact through the matrix element \(A_{12} = {\tilde{A}}_{12} f({\mathscr{D}^{*}}, \gamma _D, \theta )\) with \({\tilde{A}}_{12} = 2\). As recently shown for simpler models52, the mutual information of this model receives distinct contributions from the interaction matrix and from the modulation induced by \({\mathscr{D}^{*}}\), the former being constant. In fact, if we consider \(A = \mathrm {diag}(\gamma _1, \dots , \gamma _N)\), i.e., the extrinsic model, the only contribution to the mutual information comes from the external modulation, whereas adding interactions simply shifts the mutual information by a constant value at all \({\mathscr{D}^{*}}\).
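To illustrate why a shared modulation alone yields a non-zero mutual information even when A is diagonal, consider a deliberately simplified, hypothetical stand-in for the stationary distribution: two non-interacting variables whose common variance scale \(\mathscr{D}\) takes one of two values with equal probability (the actual modulation in the model is continuous and is not reproduced here). Conditioned on \(\mathscr{D}\) the variables are independent Gaussians, but marginally they form a Gaussian scale mixture. The sketch estimates \(I(v_1; v_2)\) in nats by Monte Carlo, using the exact mixture densities; the function name is hypothetical.

```python
import numpy as np
from scipy.special import logsumexp
from scipy.stats import norm

def mi_shared_modulation(d_values, weights, n_samples=200_000, seed=0):
    """Monte Carlo estimate (nats) of I(v1; v2) for v_i = sqrt(D) * z_i.

    z1, z2 are independent standard normals; D is drawn from a discrete
    distribution shared by both variables (hypothetical stand-in modulation).
    """
    rng = np.random.default_rng(seed)
    d = np.asarray(d_values, dtype=float)
    log_w = np.log(np.asarray(weights, dtype=float))
    k = rng.choice(len(d), size=n_samples, p=weights)
    v = np.sqrt(d[k])[:, None] * rng.standard_normal((n_samples, 2))
    sd = np.sqrt(d)[None, :]                  # broadcast over modulation states
    # log-density of each variable under each state: shape (n_samples, states)
    l1 = norm.logpdf(v[:, :1], scale=sd)
    l2 = norm.logpdf(v[:, 1:], scale=sd)
    log_joint = logsumexp(log_w + l1 + l2, axis=1)
    log_m1 = logsumexp(log_w + l1, axis=1)
    log_m2 = logsumexp(log_w + l2, axis=1)
    return np.mean(log_joint - log_m1 - log_m2)

print(mi_shared_modulation([1.0, 1.0], [0.5, 0.5]))   # no modulation
print(mi_shared_modulation([0.2, 5.0], [0.5, 0.5]))   # modulated variance
```

With identical scales the joint density factorizes exactly and the estimate vanishes; a varying shared scale makes \(|v_1|\) informative about \(|v_2|\) without any direct interaction.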

Data availability

The dataset generated for this study is available from the corresponding author upon request.

References

Beggs, J. M. & Plenz, D. Neuronal avalanches in neocortical circuits. J. Neurosci. 23(35), 11167–11177 (2003).

Petermann, T. et al. Spontaneous cortical activity in awake monkeys composed of neuronal avalanches. Proc. Natl. Acad. Sci. 106, 15921–15926. https://doi.org/10.1073/pnas.0904089106 (2009).

Yu, S. et al. Higher-order interactions characterized in cortical activity. J. Neurosci. 31, 17514–17526. https://doi.org/10.1523/JNEUROSCI.3127-11.2011 (2011).

Hahn, G. et al. Neuronal avalanches in spontaneous activity in vivo. J. Neurophysiol. 104(6), 3312–3322. https://doi.org/10.1152/jn.00953.2009 (2010).

Gireesh, E. D. & Plenz, D. Neuronal avalanches organize as nested theta- and beta/gamma-oscillations during development of cortical layer 2/3. Proc. Natl. Acad. Sci. https://doi.org/10.1073/pnas.0800537105 (2008).

Mazzoni, A. et al. On the dynamics of the spontaneous activity in neuronal networks. PLoS ONE 2(5), e439 (2007).

Pasquale, V., Massobrio, P., Bologna, L., Chiappalone, M. & Martinoia, S. Self-organization and neuronal avalanches in networks of dissociated cortical neurons. Neuroscience 153(4), 1354–1369 (2008).

de Arcangelis, L., Perrone-Capano, C. & Herrmann, H. J. Self-organized criticality model for brain plasticity. Phys. Rev. Lett. 96, 028107 (2006).

Kinouchi, O. & Copelli, M. Optimal dynamical range of excitable networks at criticality. Nat. Phys. 2, 348–351 (2006).

Shew, W. L. & Plenz, D. The functional benefits of criticality in the cortex. Neuroscientist 19(1), 88–100. https://doi.org/10.1177/1073858412445487 (2013).

Hesse, J. & Gross, T. Self-organized criticality as a fundamental property of neural systems. Front. Syst. Neurosci. 8, 166 (2014).

Hidalgo, J. et al. Information-based fitness and the emergence of criticality in living systems. Proc. Natl. Acad. Sci. 111, 10095–10100 (2014).

Tkačik, G. et al. Thermodynamics and signatures of criticality in a network of neurons. Proc. Natl. Acad. Sci. 112, 11508–11513 (2015).

Rocha, R. P., Koçillari, L., Suweis, S., Corbetta, M. & Maritan, A. Homeostatic plasticity and emergence of functional networks in a whole-brain model at criticality. Sci. Rep. 8, 1–15 (2018).

Muñoz, M. A. Colloquium: criticality and dynamical scaling in living systems. Rev. Mod. Phys. 90, 031001. https://doi.org/10.1103/RevModPhys.90.031001 (2018).

Touboul, J. & Destexhe, A. Can power-law scaling and neuronal avalanches arise from stochastic dynamics?. PLoS ONE 5, 1–14. https://doi.org/10.1371/journal.pone.0008982 (2010).

Touboul, J. & Destexhe, A. Power-law statistics and universal scaling in the absence of criticality. Phys. Rev. E 95, 012413. https://doi.org/10.1103/PhysRevE.95.012413 (2017).

Martinello, M. et al. Neutral theory and scale-free neural dynamics. Phys. Rev. X 7, 041071. https://doi.org/10.1103/PhysRevX.7.041071 (2017).

Priesemann, V. & Shriki, O. Can a time varying external drive give rise to apparent criticality in neural systems?. PLoS Comput. Biol. 14, e1006081 (2018).

Faqeeh, A., Osat, S., Radicchi, F. & Gleeson, J. P. Emergence of power laws in noncritical neuronal systems. Phys. Rev. E 100, 010401 (2019).

Sethna, J., Dahmen, K. & Myers, C. Crackling noise. Nature 410, 242–250. https://doi.org/10.1038/35065675 (2001).

Friedman, N. et al. Universal critical dynamics in high resolution neuronal avalanche data. Phys. Rev. Lett. 108, 208102. https://doi.org/10.1103/PhysRevLett.108.208102 (2012).

di Santo, S., Villegas, P., Burioni, R. & Muñoz, M. A. Simple unified view of branching process statistics: Random walks in balanced logarithmic potentials. Phys. Rev. E 95, 032115. https://doi.org/10.1103/PhysRevE.95.032115 (2017).

Fontenele, A. J. et al. Criticality between cortical states. Phys. Rev. Lett. 122, 208101. https://doi.org/10.1103/PhysRevLett.122.208101 (2019).

Buendia, V., Villegas, P., Burioni, R. & Muñoz, M. A. Hybrid-type synchronization transitions: where incipient oscillations, scale-free avalanches, and bistability live together. Phys. Rev. Res. 3, 023224 (2021).

Carvalho, T. T. A. et al. Subsampled directed-percolation models explain scaling relations experimentally observed in the brain. Front. Neural Circ. 14, 83. https://doi.org/10.3389/fncir.2020.576727 (2021).

Scarpetta, S., Apicella, I., Minati, L. & de Candia, A. Hysteresis, neural avalanches, and critical behavior near a first-order transition of a spiking neural network. Phys. Rev. E 97, 062305 (2018).

Destexhe, A. & Touboul, J. D. Is there sufficient evidence for criticality in cortical systems?. eNeuro https://doi.org/10.1523/ENEURO.0551-20.2021 (2021).

di Santo, S., Villegas, P., Burioni, R. & Muñoz, M. A. Landau-Ginzburg theory of cortex dynamics: scale-free avalanches emerge at the edge of synchronization. Proc. Natl. Acad. Sci. 115, E1356–E1365. https://doi.org/10.1073/pnas.1712989115 (2018).

Dalla Porta, L. & Copelli, M. Modeling neuronal avalanches and long-range temporal correlations at the emergence of collective oscillations: Continuously varying exponents mimic M/EEG results. PLoS Comput. Biol. 15, 1–26. https://doi.org/10.1371/journal.pcbi.1006924 (2019).

Poil, S. S., Hardstone, R., Mansvelder, H. D. & Linkenkaer-Hansen, K. Critical-state dynamics of avalanches and oscillations jointly emerge from balanced excitation/inhibition in neuronal networks. J. Neurosci. 32, 9817–9823. https://doi.org/10.1523/JNEUROSCI.5990-11.2012 (2012).

Ponce-Alvarez, A., Jouary, A., Privat, M., Deco, G. & Sumbre, G. Whole-brain neuronal activity displays crackling noise dynamics. Neuron 100, 1446-1459.e6. https://doi.org/10.1016/j.neuron.2018.10.045 (2018).

Binney, J. J., Dowrick, N. J., Fisher, A. J. & Newman, M. The theory of critical phenomena: an introduction to the renormalization group (Oxford University Press Inc, USA, 1992).

Henkel, M., Hinrichsen, H. & Lübeck, S. Non-equilibrium phase transitions. Volume 1: absorbing phase transitions (Springer, 2009).

Haimovici, A., Tagliazucchi, E., Balenzuela, P. & Chialvo, D. R. Brain organization into resting state networks emerges at criticality on a model of the human connectome. Phys. Rev. Lett. 110, 178101. https://doi.org/10.1103/PhysRevLett.110.178101 (2013).

Ribeiro, T. L. et al. Trial-by-trial variability in cortical responses exhibits scaling in spatial correlations predicted from critical dynamics. bioRxiv https://doi.org/10.1101/2020.07.01.182014 (2020).

Meshulam, L., Gauthier, J. L., Brody, C. D., Tank, D. W. & Bialek, W. Coarse graining, fixed points, and scaling in a large population of neurons. Phys. Rev. Lett. 123, 178103 (2019).

Nicoletti, G., Suweis, S. & Maritan, A. Scaling and criticality in a phenomenological renormalization group. Phys. Rev. Res. 2, 023144. https://doi.org/10.1103/PhysRevResearch.2.023144 (2020).

Zhang, Z.-W. & Deschênes, M. Intracortical axonal projections of lamina VI cells of the primary somatosensory cortex in the rat: a single-cell labeling study. J. Neurosci. 17, 6365–6379. https://doi.org/10.1523/JNEUROSCI.17-16-06365.1997 (1997).

Shriki, O. et al. Neuronal avalanches in the resting MEG of the human brain. J. Neurosci. 33, 7079–7090. https://doi.org/10.1523/JNEUROSCI.4286-12.2013 (2013).

Mariani, B. et al. Neuronal avalanches across the rat somatosensory barrel cortex and the effect of single whisker stimulation. Front. Syst. Neurosci. 15, 89 (2021).

Gerlach, M. & Altmann, E. G. Testing statistical laws in complex systems. Phys. Rev. Lett. 122, 168301. https://doi.org/10.1103/PhysRevLett.122.168301 (2019).

Fraiman, D. & Chialvo, D. R. What kind of noise is brain noise: anomalous scaling behavior of the resting brain activity fluctuations. Front. Physiol. 3, 307. https://doi.org/10.3389/fphys.2012.00307 (2012).

Cavagna, A. et al. Scale-free correlations in starling flocks. Proc. Natl. Acad. Sci. 107, 11865–11870. https://doi.org/10.1073/pnas.1005766107 (2010).

Martin, D. A. et al. Box scaling as a proxy of finite size correlations. Sci. Rep. 11, 1–9 (2021).

Ferrari, U. et al. Separating intrinsic interactions from extrinsic correlations in a network of sensory neurons. Phys. Rev. E 98, 042410 (2018).

Nozari, E. et al. Is the brain macroscopically linear? A system identification of resting state dynamics. arXiv:2012.12351 (2020).

Saggio, M. L., Ritter, P. & Jirsa, V. K. Analytical operations relate structural and functional connectivity in the brain. PLoS ONE 11, 1–25. https://doi.org/10.1371/journal.pone.0157292 (2016).

Gilson, M., Moreno-Bote, R., Ponce-Alvarez, A., Ritter, P. & Deco, G. Estimation of directed effective connectivity from fMRI functional connectivity hints at asymmetries of cortical connectome. PLoS Comput. Biol. 12(3), e1004762 (2016).

Gilson, M. et al. Network analysis of whole-brain fMRI dynamics: a new framework based on dynamic communicability. Neuroimage 201, 116007. https://doi.org/10.1016/j.neuroimage.2019.116007 (2019).

Arbabyazd, L. et al. Virtual connectomic datasets in Alzheimer’s disease and aging using whole-brain network dynamics modelling. eNeuro https://doi.org/10.1523/ENEURO.0475-20.2021 (2021).

Nicoletti, G. & Busiello, D. M. Mutual information disentangles interactions from changing environments. Phys. Rev. Lett. 127, 228301. https://doi.org/10.1103/PhysRevLett.127.228301 (2021).

Gardiner, C. W. Handbook of stochastic methods for physics, chemistry and the natural sciences, vol. 13 of Springer Series in Synergetics 3rd edn. (Springer-Verlag, 2004).

Fosque, L. J., Williams-García, R. V., Beggs, J. M. & Ortiz, G. Evidence for quasicritical brain dynamics. Phys. Rev. Lett. 126, 098101. https://doi.org/10.1103/physrevlett.126.098101 (2021).

Wilson, H. R. & Cowan, J. D. Excitatory and inhibitory interactions in localized populations of model neurons. Biophys. J. 12, 1–24. https://doi.org/10.1016/S0006-3495(72)86068-5 (1972).

Benayoun, M., Cowan, J. D., van Drongelen, W. & Wallace, E. Avalanches in a stochastic model of spiking neurons. PLoS Comput. Biol. 6, 1–13 (2010).

di Santo, S., Villegas, P., Burioni, R. & Muñoz, M. A. Non-normality, reactivity, and intrinsic stochasticity in neural dynamics: a non-equilibrium potential approach. J. Stat. Mech: Theory Exp. 2018, 073402. https://doi.org/10.1088/1742-5468/aacda3 (2018).

Wallace, E., Benayoun, M., van Drongelen, W. & Cowan, J. D. Emergent oscillations in networks of stochastic spiking neurons. PLoS ONE 6, 1–16. https://doi.org/10.1371/journal.pone.0014804 (2011).

de Candia, A., Sarracino, A., Apicella, I. & de Arcangelis, L. Critical behaviour of the stochastic Wilson–Cowan model. PLoS Comput. Biol. 17, 1–23. https://doi.org/10.1371/journal.pcbi.1008884 (2021).

Brunel, N. Dynamics of sparsely connected networks of excitatory and inhibitory spiking neurons. J. Comput. Neurosci. 8, 183–208. https://doi.org/10.1023/A:1008925309027 (2000).

Miller, S. R., Yu, S. & Plenz, D. The scale-invariant, temporal profile of neuronal avalanches in relation to cortical gamma oscillations. Sci. Rep. 9, 1–14 (2019).

Bialek, W. & Mora, T. Are biological systems poised at criticality?. J. Stat. Phys. 144, 268–302 (2011).

Swanson, L. Brain maps: structure of the rat brain 3rd edn. (Academic Press, London, 2003).

Shew, W. et al. Adaptation to sensory input tunes visual cortex to criticality. Nat. Phys. 11, 659–663 (2015).

Buzsáki, G., Anastassiou, C. & Koch, C. The origin of extracellular fields and currents - EEG, ECoG, LFP and spikes. Nat. Rev. Neurosci. 13, 407–420. https://doi.org/10.1038/nrn3241 (2012).

Clauset, A., Shalizi, C. R. & Newman, M. E. J. Power-law distributions in empirical data. SIAM Rev. 51, 661–703 (2009).

Acknowledgements

S.S. acknowledges DFA and UNIPD for SUWE_BIRD2020_01 grant, and INFN for LINCOLN grant. S.V. acknowledges support from Horizon 2020 (European Commission), FET Proactive, SYNCH project (GA number 824162). We thank Claudia Cecchetto for the initial analyses on the electrophysiological data and suggestions on relevant literature and Victor Buendia for insightful discussions.

Author information

Contributions

S.S. designed the theoretical study and supervised the research with S.V., while M.B. and S.V. designed the experiments. M.M. conducted the experiments. B.M. analyzed the data. B.M., G.N. and S.S. formulated the model. B.M. and G.N. performed the analytical calculations, implemented the simulations and analyzed the results. B.M., G.N., S.V. and S.S. interpreted the results. G.N. prepared the figures. All authors contributed to the article and approved the submitted version.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mariani, B., Nicoletti, G., Bisio, M. et al. Disentangling the critical signatures of neural activity. Sci Rep 12, 10770 (2022). https://doi.org/10.1038/s41598-022-13686-0

DOI: https://doi.org/10.1038/s41598-022-13686-0
