Abstract

Increasing the penetration of renewable energy sources is a core component of the Korean green-island microgrid projects. This approach calls for a robust energy management system that can cope with the stochastic behavior of renewable energy sources. In this paper, we therefore put forward a novel reinforcement learning-driven optimization solution for a convex formulation of energy management in the Gasa island microgrid, one of the prominent pilots of the Korean green islands project. We speed up the convergence of the alternating direction method of multipliers solution for this convex problem by estimating its penalty parameter with the soft actor-critic technique. In this arrangement, however, the soft actor-critic agent faces a sparse reward, which we address with a normalizing flow policy. Furthermore, we study the effect of implementing demand response in the Gasa island microgrid to reduce its dependency on diesel generators and to provide benefits such as peak shaving and gas emission reduction.

Introduction

Substituting renewable energy sources (RESs) for fossil fuels to combat global warming is especially beneficial to island regions: as global warming continues, oceans rise and islands disappear1. Moreover, energy storage systems (ESSs) mitigate the random nature of RESs, allowing microgrid power networks to take over an island’s power supply without relying on central power plants2,3.

In Korea, which has 471 residential islands, microgrids are the most cost-effective way to electrify the islands. Of these, 171 residential islands are electrified with stand-alone microgrids, whereas diesel generators (DGs) previously supplied their power4. According to the Korean government plan, 63.8 GW of RESs will be installed by 2030. This amount is 20% of national electricity generation and would result in a 37% reduction in \(CO_{2}\) emissions5. Implementing the Green Islands project is a significant step in this direction. One of the seven pilot green islands is Gasa Island, which initially operated as a stand-alone microgrid powered by three DGs6.

In line with the objective of the Korean green island project, Gasa Island has moved towards relying entirely on RESs with assistance from ESSs. In this self-sufficient microgrid, DGs are used only as backup power in case of contingency. However, this goal cannot be achieved without an intelligent, robust energy management system (EMS) that coordinates the energy supply resources to reduce greenhouse gases. Since the ESSs on this island provide voltage and frequency regulation in addition to compensating for the absence of RES power, large-scale ESSs are needed. The primary contribution of RESs on this island is to reduce greenhouse gas emissions, not to reduce residents’ electricity bills4. Meanwhile, introducing demand response (DR) to lower the peak-average ratio reduces the need for further investment in ESSs and RESs, besides minimizing DG utilization in emergencies. In this paper, we therefore examine the energy management of Gasa Island as part of the Green Island project: we implement economic dispatch as a baseline and then develop an EMS that uses DR programs to reduce DG usage and, ultimately, greenhouse gas emissions. Given these objectives and the high penetration of RESs in green islands, in addition to the uncertainty in loads, the Gasa island EMS problem is stochastic, high-dimensional, and sequential. A microgrid (MG) EMS arrangement should consider several factors depending on the type of MG, including centralized or decentralized decision making, the optimization method, and the management of uncertainty in RES output and load. So far, MG EMS optimization has been carried out using mathematical approaches, meta-heuristics, and artificial intelligence (AI)7. EMS scheduling may be centralized or decentralized. A decentralized real-time EMS for microgrids is presented in8; that paper examined MG voltage and frequency stability from a power electronics perspective.
Economic model predictive control (EMPC) offered economic dispatch for residential microgrids in9. The authors of that paper proposed, as future work, accounting for prediction errors in RES output with stochastic MPC. However, MPC is costly and complex to implement. Nevertheless, uncertainty in RES power production must be considered to provide an accurate EMS, and the literature largely handles it with probability distributions. Raghav et al.10 used a sparrow search algorithm to arrange the EMS, considering forecasting errors in RES output and load prediction, and compared their method with a wide range of other metaheuristic-based optimization methods. Uncertainty in photovoltaic (PV) and wind turbine (WT) power prediction is considered in the optimization of the microgrid EMS in11 by deploying different probability density functions. The authors of that paper used the quantum teaching learning-based optimization (QTLBO) method and compared the results with a real-coded genetic algorithm, differential evolution, and TLBO. The present paper focuses on a robust arrangement of a fast-converging optimization method to support online EMS. Another goal is to reduce the role of DGs through DR to strengthen the \(\hbox {CO}_{2}\)-free island target. Additionally, this paper deploys historical data of RES output power and load in the modeling environment to account for their random behavior.

The alternating direction method of multipliers (ADMM) is widely used for EMS arrangement because its distributed computational approach reduces the severity of the high-dimensional characteristics of the microgrid EMS problem12. ADMM solved quadratic and non-quadratic formulations of the economic dispatch problem of an islanded microgrid in13, where the proposed algorithm showed high performance in balancing power consumption and generation. Lyu et al.14 proposed a dual-consensus ADMM to provide less communication-dependent microgrid economic dispatch scheduling. ADMM was also used to solve microgrid economic dispatch considering ESS costs in15 and16. However, a primary concern with ADMM is that its convergence to the optimum depends on its hyper-parameters. Designing a dynamic step size for updating dual variables and converting ADMM to a proximal gradient method are remedies that have been offered to overcome this convergence hindrance17,18,19,20.

Recently, a considerable body of literature has grown up around sequential prediction with deep learning21 and, in an extended horizon, deep reinforcement learning (DRL) to estimate the penalty parameter of ADMM22,23. In22, DRL was shown to speed up the convergence of ADMM by adjusting its penalty parameters. However, the DRL method used was TD3, which suffers from hyper-parameter dependency and worsens the large-scale vulnerability of solving convex problems such as microgrid EMS. Zeng et al.23 solved a quadratic formulation of distributed optimal power flow with the help of Q-learning to estimate the penalty parameter of ADMM. By transferring the variation of the residual value to the reward function in each iteration, the authors solved the sparse reward problem of their reinforcement learning arrangement. However, the discrete action space of Q-learning limited the search for better actions. Therefore, in this paper, we employ the state-of-the-art soft actor-critic (SAC) method to offer a fast and accurately converging ADMM. In contrast to other methods that fall into local optima after a certain number of iterations due to ineffective exploration, SAC supports a continuous action space and adds stochasticity to the policy, providing effective action exploration that persists until the last training iterations24. We deploy the normalizing flow policy (NFP) technique to increase the density of the policy probability distribution and thereby overcome the sparse reward issue. The contributions of this paper are fourfold:

- Providing a convex problem arrangement of Gasa island EMS considering DR and load flow constraints to make profits for consumers and the utility grid.

- Arrangement of a SAC-based solution to estimate the penalty parameter of ADMM to support the high-dimension and complex problem of Gasa island EMS.

- Solving sparse reward hindrance with a less computational burden on the learning process of SAC algorithm by arranging high-density action space with the NFP approach.

- Exploration of less dependency on conventional generators and acquisition costs with DR implementation.

The remainder of this paper is organized as follows. The “Problem formulation” section formulates the problem by specifying the objective function and the constraints of the microgrid elements. The “Proposed method” section presents the solution method, and the “Results and discussion” section examines the proposed solution by analyzing the results and comparing them with benchmark methods. Finally, the “Conclusion” section summarizes the most relevant conclusions of our work.

Problem formulation

Microgrid objective function

In this paper, we consider two scenarios for the EMS arrangement of the Gasa island microgrid. The first scenario includes photovoltaic cells (PV), wind turbines (WT), DGs, ESSs, and loads, as shown in Fig. 1. In the second scenario, we schedule DR for the residential load to decrease the peak-average ratio. This approach reduces DG usage and carbon emissions, making the green island a more practical objective. Consequently, we define the objective function of the Gasa island microgrid as minimizing power generation costs for the first scenario, and we extend it for the second scenario by adding the minimization of consumers’ power consumption cost through DR implementation. We formulate the objective function of the Gasa island microgrid, considering the two scenarios, as follows.

where i: The number of generation units; j: The number of loads; T: The time period of optimization; \(c^g\): The cost of power generation (KRW); \(P^g\): The amount of power generation (kW); \(c^s\): The cost of start-up and shut-down of conventional power generation units (KRW); \(u^s\): Conventional power generation units on/off status; \(P^L\): The amount of power consumption (kW); price: The price of load power consumption (KRW).

The first term of (1) calculates the cost of power generation, and the second term is the power consumption expense. According to Fig. 1, \(c^g\) applies to the power generation units, which include PV, WT, DG, and ESS in discharging mode. The cost of start-up and shut-down applies to conventional units, which in this study means the DG. Since we plan to reduce the peak load by implementing DR, consumption cost minimization is meaningful for the loads participating in this program.
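The structure of the two cost terms can be illustrated with a minimal sketch. Since Eq. (1) is not reproduced here, the sketch assumes a linear generation cost per unit, a switching cost charged whenever a unit changes its on/off status, and units that are initially off; the function name `operating_cost` and the array layout are hypothetical.

```python
def operating_cost(c_g, p_g, c_s, u_s, p_l, price):
    """Assumed form of the objective: generation + switching + consumption cost.

    c_g[i]    : generation cost coefficient of unit i (KRW per kW, assumed linear)
    p_g[i][t] : power generated by unit i at time t (kW)
    c_s[i]    : start-up/shut-down cost of unit i (KRW)
    u_s[i][t] : on/off status of unit i at time t (0 or 1)
    p_l[j][t] : power consumed by load j at time t (kW)
    price[t]  : price of load power consumption at time t (KRW per kW)
    """
    horizon = len(price)
    generation = sum(
        c_g[i] * p_g[i][t] for i in range(len(c_g)) for t in range(horizon)
    )
    switching = 0.0
    for i in range(len(c_s)):
        previous = 0  # assumption: every unit starts in the off state
        for t in range(horizon):
            if u_s[i][t] != previous:  # a start-up or a shut-down occurred
                switching += c_s[i]
            previous = u_s[i][t]
    consumption = sum(
        price[t] * p_l[j][t] for j in range(len(p_l)) for t in range(horizon)
    )
    return generation + switching + consumption
```

In the first scenario only the generation and switching terms are active; passing an empty list for `p_l` reproduces that case under the same assumptions.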

Microgrid elements modeling and constraints

The main objective of \(\mathrm{CO}_2\) reduction in the green island project gives RESs priority in supplying loads. Therefore, we neglect the cost of RES power generation. The only constraint on the RESs is the maximum amount of power that they can produce.

where \(P^{PV}_{max}\) and \(Q^{PV}_{max}\) are the maximum active/reactive power generation of PVs. \(P^{WT}_{max}\) and \(Q^{WT}_{max}\) are WTs’ highest amount of active/reactive power production.

DG is the only conventional generator in the Gasa island microgrid. In addition to its limitations concerning the amount of energy generated, the DG faces constraints regarding its working duration and variation in power output as follows.

where \(T^{DG}_{up}\) and \(T^{DG}_{down}\) determine the minimum up and down times, respectively. \(u^{DG}\) is a binary value that indicates whether the DG is on or off. The DG’s power generation cost is calculated according to (10).

where \(a_1\), \(a_2\), and \(a_3\) are the fuel cost coefficients of the DG. We also consider the start-up cost of the DG (\(c^{s,DG}\)) as a fixed value of 10 KRW.
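As an illustration of how three fuel cost coefficients and a fixed start-up cost combine, the sketch below assumes the common quadratic fuel cost \(a_1 P^2 + a_2 P + a_3\) while the DG is on; the exact form of (10) is not reproduced here, so this shape and the function names are assumptions. The 10 KRW start-up cost is the value stated in the text.

```python
def dg_fuel_cost(power_kw, a1, a2, a3, is_on=True):
    """Assumed quadratic fuel cost of the DG; zero while the unit is off."""
    if not is_on:
        return 0.0
    return a1 * power_kw ** 2 + a2 * power_kw + a3


def dg_cost_over_horizon(powers_kw, statuses, a1, a2, a3, startup_cost=10.0):
    """Sum fuel cost over the horizon and add the start-up cost on each 0 -> 1 switch."""
    total = 0.0
    previous = 0  # assumption: the DG is off before the first time slot
    for power, status in zip(powers_kw, statuses):
        total += dg_fuel_cost(power, a1, a2, a3, bool(status))
        if status == 1 and previous == 0:
            total += startup_cost
        previous = status
    return total
```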

The following are the main constraints on ESS performance.

where \({\eta }^{ch}\) and \({\eta }^{dch}\) are the ESS charging and discharging efficiencies. \(SoC_{min}\) and \(SoC_{max}\) determine the lower and upper limits of the state of charge (SoC), and \(\tau \) is the time slot. This study estimates the battery degradation cost based on (14).

where \(\hbox {E}^{\mathrm{b,rated}}\) and \(\hbox {E}^{\mathrm{b(t)}}\) are rated stored energy and available energy of battery at time t, respectively. \(\eta ^{\mathrm{leakage}}\) determines leakage loss, \(\sigma \) is a coefficient to calculate battery degradation during its lifespan, \(\hbox {c}^{\mathrm{b,inv}}\) is the initial investment to provide battery, and \(\hbox {n}^{\mathrm{ch,dch}}\) is the number of full charge and discharge cycles.
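The role of the charging and discharging efficiencies in the SoC constraints can be sketched as follows. This is a minimal example assuming the widely used SoC update in which charged energy is scaled by \({\eta }^{ch}\) and discharged energy is divided by \({\eta }^{dch}\); the ESS equations of the paper are not reproduced here, and the function names are hypothetical.

```python
def next_soc(soc, p_ch_kw, p_dch_kw, eta_ch, eta_dch, tau_h, e_rated_kwh):
    """Assumed SoC update over one time slot of length tau_h (hours).

    Charging stores eta_ch * P_ch, while discharging drains P_dch / eta_dch,
    both expressed as a fraction of the rated energy.
    """
    stored_kwh = (eta_ch * p_ch_kw - p_dch_kw / eta_dch) * tau_h
    return soc + stored_kwh / e_rated_kwh


def soc_within_limits(soc, soc_min, soc_max):
    """Check the SoC bound constraint."""
    return soc_min <= soc <= soc_max
```

For instance, charging a 1000 kWh battery at 100 kW for one hour with 90% efficiency raises the SoC by 0.09 under these assumptions.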

Gasa island includes 164 households25. To model the residential load, we use the Enertalk open dataset, which consists of the per-appliance load consumption of 22 houses in Korea26. This dataset provides power consumption data for appliances commonly found in a Korean household, including the refrigerator, Kimchi refrigerator, water purifier, rice cooker, washing machine, and TV. To estimate the heating and cooling system load, we increased the aggregated appliance power consumption by around 13%, based on27. We implemented DR on residential consumers to decrease the peak-average ratio. Therefore, we categorize the Enertalk appliance records into non-controllable, shiftable, and reducible loads. The TV, Kimchi refrigerator, refrigerator, and rice cooker are non-controllable loads. The washing machine is a shiftable load, and the heating and cooling system is a reducible load. The electricity consumption of the heating and cooling system during the DR program, based on the inside building temperature (\(Temp^{in}\)), is therefore subject to the following restrictions28,29.

where \(\Delta \) \(Temp^{in}\)(\(\tau \)) is the inside building temperature deviation during time step \(\tau \). \(Temp^{in}_{min}\) and \(Temp^{in}_{max}\) are the minimum and maximum desirable inside building temperatures. \(\beta \) and \(\gamma \) are the building thermal capacitance (kWh/ \(^{\circ }\)C) and thermal resistance ( \(^{\circ }\)C/kW), respectively. \( P^{H \& C}\)(t) is the power usage of the heating or cooling system at time t.
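To show how \(\beta \) and \(\gamma \) link cooling power to the indoor temperature, the following sketch uses a first-order thermal model. It is an assumption rather than the paper's exact restriction: heat leaks in through the envelope at a rate of (outdoor minus indoor temperature) divided by \(\gamma \), the cooling system removes `p_cool_kw`, and the net heat is divided by \(\beta \). The outdoor temperature argument and the function names are hypothetical.

```python
def indoor_temp_step(temp_in, temp_out, p_cool_kw, beta, gamma, tau_h=1.0):
    """Assumed first-order update of the indoor temperature in cooling mode.

    beta  : thermal capacitance (kWh per degree C)
    gamma : thermal resistance (degree C per kW)
    """
    heat_gain_kw = (temp_out - temp_in) / gamma  # leakage through the envelope
    delta_temp = tau_h * (heat_gain_kw - p_cool_kw) / beta
    return temp_in + delta_temp


def temp_is_comfortable(temp_in, temp_min, temp_max):
    """Check the desirable indoor temperature band."""
    return temp_min <= temp_in <= temp_max
```

Under this model, reducing the cooling power during a DR event lets the indoor temperature drift upward, which is why the temperature band acts as the binding restriction.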

To calculate the cost of load, we consider the time of use (TOU) price (\(p_{t}^{TOU}\)) according to Table 130.

To provide a trade-off between utility profit in decreasing peak-average ratio with higher TOU in peak hours and consumer comfort, we add the anxiety rate term (\({\mathcal {A}}_{rate}\)) to the cost function to respect consumers’ preferred power consumption rate.

In the case of the heating and cooling system, DR affects customer comfort when the inside temperature (\(Temp^{in}\)(t)) exceeds the limits defined in (17)31.

where K is the anxiety rate coefficient.

Additionally, according to the customers’ preferred time of using the washing machine, the anxiety rate of the washing machine (\({\mathcal {A}}_{rate}^{ WM}\)) participating in DR is determined as follows.

where \(h_{s}\) and \(h_{f}\) are the lower and upper preferred times of using the washing machine, respectively. \(\sigma \) and \(\zeta \) determine penalty coefficients of shifting washing machines out of consumers’ preferred time.
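A minimal sketch of such a time-preference penalty is given below. It assumes a piecewise-linear shape: zero inside the preferred window \([h_{s}, h_{f}]\), growing with slope \(\sigma \) before the window and with slope \(\zeta \) after it. The paper's exact expression is not reproduced here, so this shape and the function name are assumptions.

```python
def washing_machine_anxiety(hour, h_s, h_f, sigma, zeta):
    """Assumed piecewise-linear anxiety rate for running the washing machine at `hour`."""
    if hour < h_s:
        return sigma * (h_s - hour)  # scheduled earlier than the preferred window
    if hour > h_f:
        return zeta * (hour - h_f)   # scheduled later than the preferred window
    return 0.0                       # inside the preferred window: no discomfort
```

Adding this term to the cost function makes shifts far outside the preferred window expensive, while shifts within the window remain free.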

We deploy the water station load profile from32 and simulate school daily power consumption from33. The amount of lighthouse and radar base power consumption of Gasa island in the average daily load profile decline in this paper. During power dispatch scheduling, we consider load flow constraints for each branch of the Gasa island microgrid as follows.

where,

where i and j are bus indexes. \(P^{i,j}(t)\) and \(Q^{i,j}(t)\) are active and reactive power flow of lines between i and j buses at time t. \(V^{i}_{min}\) and \(V^{i}_{max}\) are lower and upper voltage limitations of each bus. \(P^{SCh}\), \(P^{WP}\), and \(P^{Lh}\) are the school, water pump station, and lighthouse active power consumption, respectively. \(P^{l,b}\) denotes non-DR participants’ residential load, and \(P^{l,dr}\) is DR participants’ residential load. \( \Delta P^{H \& C,dr}\), and \(\Delta P^{WM,dr}\) are the amount of heating and cooling systems and washing machines’ active power consumption that contributes to DR. We modify the objective function in (1) based on Gasa island microgrid constraints as follows.

subject to (2)–(9), (12), (13), (16)–(29). This formulation yields a convex quadratic problem, which is solved in the following section with the ADMM technique.

Proposed method

ADMM

ADMM is a popular and reliable approach for convex quadratic programming. The conventional ADMM method attempts to solve the following problem34:

subject to

where x and \(x^{\prime }\) are the optimization variables, f and g are coefficient matrices, d is the sequence, and f(x) and g(\(x^{\prime }\)) are convex functions of optimization variables.

We arrange the Lagrangian function for the convex problem (31) as follows.

where \(\lambda \) is the vector of Lagrange multipliers associated with constraints (32), and \(\rho \) is the penalty factor.

ADMM iteratively updates optimization variables according to the following.

This iterative procedure will converge when the primal and dual residuals of ADMM techniques meet their thresholds \(\epsilon _p\) and \(\epsilon _d\), respectively, as follows.

where \(\parallel r_k^p\parallel \) and \(\parallel r_k^d\parallel \) represent the primal and dual residuals of the ADMM technique, calculated according to (39) and (40), respectively.
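The iterative updates and the residual-based stopping rule can be illustrated on a toy problem. The sketch below applies scaled-form ADMM to a scalar consensus problem, minimizing \((x-a)^2/2 + (z-b)^2/2\) subject to \(x = z\); this toy problem, the function name, and the tolerances are illustrative assumptions and not the Gasa island formulation.

```python
def admm_consensus(a, b, rho, eps_p=1e-6, eps_d=1e-6, max_iter=1000):
    """Scaled-form ADMM for: min 0.5*(x-a)^2 + 0.5*(z-b)^2  s.t.  x - z = 0.

    Returns (x, z, iterations). u is the scaled dual variable (lambda / rho).
    """
    x = z = u = 0.0
    for k in range(1, max_iter + 1):
        # x-update: minimize 0.5*(x-a)^2 + (rho/2)*(x - z + u)^2
        x = (a + rho * (z - u)) / (1.0 + rho)
        # z-update: minimize 0.5*(z-b)^2 + (rho/2)*(x - z + u)^2
        z_old = z
        z = (b + rho * (x + u)) / (1.0 + rho)
        # dual update
        u = u + x - z
        primal_residual = abs(x - z)
        dual_residual = abs(rho * (z - z_old))
        if primal_residual <= eps_p and dual_residual <= eps_d:
            return x, z, k
    return x, z, max_iter
```

Both variables converge to \((a+b)/2\) for any positive penalty, but the number of iterations depends strongly on `rho`, which is the sensitivity that motivates estimating the penalty parameter instead of fixing it by hand.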

We use the decomposition technique introduced in23 to solve our ADMM problem. To this end, we consider two penalty parameter vectors as follows.

where \(\rho _{PQ}\) is the penalty parameter for active and reactive power and \(\rho _{V \theta }\) denotes voltage and phase angle penalty parameter. \( n_{PQ} \) and \(n_{V\theta } \) are the number of constraints for each pair of active and reactive power and voltage and phase angle, respectively.

Since the penalty parameters determine how the ADMM primal and dual residuals evolve in each iteration toward the convergence conditions (37) and (38), and since these parameters are calculated sequentially, a DRL method is well suited to estimating them.

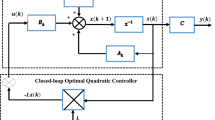

SAC algorithm with enhanced exploration

SAC is an actor-critic DRL method whose off-policy approach makes it sample efficient24. SAC works in both discrete and continuous environments. Its main characteristic is stochastic policy optimization, achieved by adding an entropy term to the policy objective. This characteristic yields productive exploration and, consequently, a higher convergence rate than other off-policy methods such as deep deterministic policy gradient (DDPG) and twin delayed DDPG (TD3). Because it learns from experience saved in the replay buffer, its sample efficiency is superior to that of on-policy techniques such as proximal policy optimization (PPO) and trust region policy optimization (TRPO). The entropy term (\({\mathcal {H}}(.)\)) is defined according to (29). The weight of entropy in the learning policy is determined by the temperature parameter \(\alpha \), which is decreased during the learning iterations as follows.

The entropy term modifies the Bellman equation used to train the value network, according to (45).

The critic in SAC includes the value function \(V_\psi \) and the soft Q-function \(Q_\theta \). The actor contains the policy network \(\pi _\phi \). The policy network in SAC samples the action from a Gaussian probability distribution passed through the squashing function \(f_\phi \)(\(\epsilon \);\(s_{t}\)) as follows.
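The reparameterized, squashed sampling step can be sketched in plain Python. The sketch assumes the tanh squashing commonly used with SAC, a scalar action, and a linear map from the squashed action to a penalty range; the range bounds and the function names are hypothetical, and in practice the mean and standard deviation would come from the policy network.

```python
import math
import random


def sample_squashed_action(mean, std, rng):
    """Draw a = tanh(mean + std * eps), eps ~ N(0, 1), and return (a, log pi(a|s))."""
    eps = rng.gauss(0.0, 1.0)
    pre_activation = mean + std * eps
    action = math.tanh(pre_activation)
    # Log density of the Gaussian sample before squashing
    log_gauss = -0.5 * eps ** 2 - math.log(std) - 0.5 * math.log(2.0 * math.pi)
    # Change-of-variables correction for tanh; 1e-6 guards against log(0)
    log_prob = log_gauss - math.log(1.0 - action ** 2 + 1e-6)
    return action, log_prob


def to_penalty(action, rho_min, rho_max):
    """Map a squashed action in [-1, 1] to a penalty value in [rho_min, rho_max]."""
    return rho_min + 0.5 * (action + 1.0) * (rho_max - rho_min)
```

Because the noise `eps` is drawn independently of the policy parameters, gradients can flow through `mean` and `std`, which is the purpose of the reparameterization.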

To use SAC for parameter estimation of the ADMM, we need to arrange the Markov decision process (MDP), including state, action, transition function, and reward. The state will be the decision variables of the ADMM problem, which are the Gasa island microgrid elements’ active and reactive power as follows.

SAC will predict the suitable penalty parameter from the continuous action space according to (48), (49).

The reward function is defined as follows.
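As a hedged illustration of a reward of this kind, the sketch below returns a constant bonus only when both ADMM residuals meet their thresholds and zero otherwise. The exact reward expression of the paper is not reproduced here; the bonus value and the function name are assumptions.

```python
def convergence_reward(primal_res, dual_res, eps_p, eps_d, bonus=1.0):
    """Assumed reward: a constant bonus on convergence, nothing otherwise."""
    converged = primal_res <= eps_p and dual_res <= eps_d
    return bonus if converged else 0.0
```

Most transitions therefore carry no learning signal, because the agent is rewarded only on the rare converged iterations.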

However, this reward function is sparse. In this study, we use the NFP to stabilize training and to provide efficient exploration of the action space, which counters the sparse reward issue35. Normalizing flows are built from a set of invertible functions. Through the change-of-variables rule for probability distributions, normalizing flows sequentially transform a distribution into a denser distribution as follows36.

where \(z_{0}\) is the base distribution and \(z_N\) is the final flow. The density of the continuous variable \(z_N\), parametrized by \(\phi \), is as follows.

One of the simplest ways to construct the invertible function f is RealNVP. We use the method introduced in37 to combine SAC and normalizing flows, reparametrizing the Gaussian distribution of action selection with the RealNVP invertible transformation as follows.

where \(\pi (a|s)\)= \(z_{0}\) and the log density of action is as follows.
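The change of density under a RealNVP-style transformation can be sketched with a single affine coupling layer on a two-dimensional variable. The scale and shift functions below are fixed, hypothetical stand-ins for the small networks that would be trained in practice, and the base distribution is assumed to be a standard normal.

```python
import math


def scale(x1):
    """Hypothetical log-scale function s(x1); a trained network in practice."""
    return math.tanh(0.5 * x1)


def shift(x1):
    """Hypothetical shift function t(x1); a trained network in practice."""
    return 0.3 * x1


def coupling_forward(x1, x2):
    """Affine coupling: y1 = x1, y2 = x2 * exp(s(x1)) + t(x1). Returns (y1, y2, log|det J|)."""
    s = scale(x1)
    return x1, x2 * math.exp(s) + shift(x1), s


def coupling_inverse(y1, y2):
    """Exact inverse of the coupling layer; y1 passes through unchanged."""
    s = scale(y1)
    return y1, (y2 - shift(y1)) * math.exp(-s)


def log_density_after_flow(x1, x2):
    """log p(y) = log p_base(x) - log|det J| for a standard normal base in 2-D."""
    log_base = -0.5 * (x1 * x1 + x2 * x2) - math.log(2.0 * math.pi)
    _, _, log_det = coupling_forward(x1, x2)
    return log_base - log_det
```

The layer is invertible by construction and its Jacobian is triangular, so the log-determinant reduces to the scale output; stacking several such layers gives the sequential transformation described above.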

Therefore, standard SAC is modified by adding a gradient step on the normalizing flow layers when updating \(\phi \).

The proposed technique for determining the penalty parameter of ADMM with the contribution of SAC and NFP is shown in Fig. 2.

Results and discussion

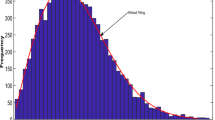

In this section, we apply our proposed algorithm to optimize the Gasa island microgrid EMS. The optimization takes place hourly and can be extended to shorter intervals depending on the available data. Figure 3 depicts the load profile of the island power consumers based on the “Microgrid elements modeling and constraints” section. Since the residential load profile is for August, we choose the WT and PV output power taken from38 and the outdoor temperature39 for the same period, as shown in Fig. 4. We simulate the microgrid and implement a centralized ADMM solution as a baseline, as well as our optimization solution, with the CVXPY package40 and the OSQP solver41 in the Python environment. The simulations are run on a PC with an Intel(R) Core (TM) i5-10400F CPU @ 2.90GHz.

We compared the SAC algorithm’s performance for different numbers of hidden layers and batch sizes to select the DNN parameters. Figure 5 shows how the learning process of the DNN varies depending on the hyperparameters. Based on the results, two layers with a batch size of 128 offer a good trade-off among learning convergence, stability, and complexity. Extending the network to three layers and the batch size to 256 is not advantageous, according to Fig. 5b. Table 2 lists the microgrid element specifications and solution algorithm parameters.

Figure 6 compares the convergence speed of the proposed algorithm and conventional ADMM. The effectiveness of our technique is apparent in this figure, where vanilla ADMM needs considerably more iterations to converge. By learning the best policy, the RL agent brings the dual and primal residuals to convergence within 300 and 500 iterations, respectively, while normal ADMM takes twice as long.

Figure 7 shows the economic power dispatch of the Gasa island microgrid along with the SoC level of the BESS, with and without DR. This figure illustrates the effectiveness of DR planning in reducing DG utilization during the day under study. Without DR, the DG starts to work at 1 a.m. with a higher power output of 315 kW to compensate for the shortage of RES power, as shown in Fig. 7a. With DR, this amount is reduced to 210 kW, as can be seen in Fig. 7b. Additionally, without DR, the DG has to keep generating 315 kW because of its ramp-down time limitations, even though the power shortage is lower than this amount. Moreover, we also solved the QP arrangement of Gasa island with the CPLEX solver as an analytical method. Table 3 shows how the proposed SAC-NF-ADMM improves on ADMM in terms of operational cost for the day under study.

Figure 8 shows the voltage magnitude at all nodes of the Gasa island power network. This figure shows that the voltage magnitudes of the island power network stay within the allowed range of 0.99 to 1.01 p.u.

The effects of DR scheduling on the cooling system and washing machine are shown in Figs. 9 and 10. The washing machine takes part in the DR program by shifting its operating hours to off-peak and mid-peak periods with lower TOU pricing. The results of DR implementation show that the anxiety rates steer the optimization problem to keep the washing machines’ working hours within the desired time. As discussed before, the washing machine’s preferred working time is between 4 and 12 a.m. Therefore, as can be seen in Fig. 9, the washing machine power usage between 9 and 5 p.m., when TOU is higher, is transferred to other times of day in the desired range.

The optimization algorithm keeps the indoor temperature within the desired range of 23 to 25 \(^{\circ }\)C. The inside temperature tends to be higher between 10 a.m. and 5 p.m., when TOU is highest, resulting in less power consumption for the cooling system compared to the situation without DR schedules.

Figure 11 reveals that DR scheduling resulted in peak shaving of around 20% for the residential load during peak hours. The green shaded area in Fig. 11 shows peak shaving, while the red shaded area marks the transferred portion of the peak load related to washing machine usage. The most significant benefit of DR scheduling for consumers is a 21% drop in total consumer electricity bills, as illustrated in Fig. 12. After DR implementation, the DG cost dropped by around 42%, which is further evidence of reduced DG usage and fewer gas emissions.
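The peak-average ratio and the peak shaving percentage discussed above follow from simple arithmetic on a load profile. The sketch below shows the calculation; the function names are hypothetical, and any profile passed to them in an example is illustrative rather than measured Gasa island data.

```python
def peak_to_average_ratio(load_kw):
    """Peak load divided by the mean load over the horizon."""
    return max(load_kw) / (sum(load_kw) / len(load_kw))


def peak_shaving_percent(before_kw, after_kw):
    """Relative reduction of the peak load, in percent."""
    peak_before = max(before_kw)
    return 100.0 * (peak_before - max(after_kw)) / peak_before
```

For a hypothetical three-slot profile of 50, 100, and 50 kW rescheduled to 60, 80, and 60 kW, the total energy is unchanged while the peak falls from 100 to 80 kW, which corresponds to 20% peak shaving.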

Here, we used the TOU policy, which is currently utilized in the Korean power system, to schedule DR. TOU is one of the price-based DR policies. It is also possible to implement DR on Gasa Island using other price-based DR models, such as real-time pricing (RTP) and critical peak pricing (CPP). Furthermore, incentive-based DR methods, including direct load control and emergency demand response programs (EDRP), can be used jointly with price-based DR, with incentive payments from utilities increasing customer profits and encouraging participation in DR. Therefore, in our future investigation, we will use a combination of price-based and incentive-based DR techniques to study their effect on \(\hbox {CO}_{2}\) emission reduction. On the other hand, to fully reflect the current situation of Gasa island, we used historical WT and PV output power data. In future work, to consider uncertainties in WT and PV power generation, we will deploy a long short-term memory (LSTM)-based RES predictor.

Conclusion

In this paper, we arranged the EMS unit for the Gasa island microgrid, one of the prominent Korean Green island project pilots. The proposed approach extends the main objective of this green island microgrid from a feasible framework for RES utilization to a microgrid that is also profitable for consumers through DR deployment. Additionally, our method resulted in less dependency on DGs with DR schedules. The ADMM-based solution for the EMS converged quickly thanks to penalty parameter prediction with the state-of-the-art DRL method SAC. We freed each iteration from the computational cost of transferring the variation of the dual variables by defining an independent, constant reward for each converged iteration. However, this approach resulted in a sparse reward that hindered the training of the agent. To overcome this problem, we used NFP to increase the density of the policy probability distribution. The results showed that the proposed ADMM converged 50% faster than vanilla ADMM. Additionally, the implemented DR scheduling on reducible and shiftable residential loads decreased the peak load by 20%. Since we considered the current situation of the Gasa island microgrid network in this paper, we implemented DR with TOU, the DR policy currently utilized in the Korean power system. In future work, we will extend our study with CPP and EDRP. Furthermore, we will use an LSTM-based predictor to estimate RES output.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Huh, T. Analyzing the configuration of knowledge transfer of the green island projects in S. Korea. Korean J. Policy Stud. 32(1), 1–26 (2017).

Tightiz, L., Yang, H. & Piran, M. A survey on enhanced smart micro-grid management system with modern wireless technology contribution. Energies 13(9), 2258 (2020).

Ganjei, N., Zishan, F., Alayi, R., Samadi, H., Jahangiri, M., Kumar, R. & Mohammadian, A. Designing and sensitivity analysis of an off-grid hybrid wind-solar power plant with diesel generator and battery backup for the rural area in Iran. J. Eng. 2022 (2022).

Kang, T. South Korea’s experience with smart infrastructure services: Smart grids. Inter-American Development Bank 1–36 (2020).

Zhang, X., Wei, Q. S. & Oh, B. S. Cost analysis of off-grid renewable hybrid power generation system on Ui Island, South Korea. Int. J. Hydrog. Energy 47(27), 13199–13212 (2022).

Hwang, W. Microgrids for electricity generation in the Republic of Korea. NAPSNet Special Reports (2020).

Rangu, S. K., Lolla, P. R., Dhenuvakonda, K. R. & Singh, A. R. Recent trends in power management strategies for optimal operation of distributed energy resources in microgrids: A comprehensive review. Int. J. Energy Res. 44(13), 9889–9911 (2020).

Ullah, Z. et al. Advanced energy management strategy for microgrid using real-time monitoring interface. J. Energy Storage 52, 104814 (2022).

Alarcón, M. A., Alarcón, R. G., González, A. H. & Ferramosca, A. Economic model predictive control for energy management of a microgrid connected to the main electrical grid. J. Process Control 117, 40–51 (2022).

Raghav, L. P., Kumar, R. S., Raju, D. K. & Singh, A. R. Analytic hierarchy process (AHP)-swarm intelligence based flexible demand response management of grid-connected microgrid. Appl. Energy 306, 118058 (2022).

Raghav, L. P., Kumar, R. S., Raju, D. K. & Singh, A. R. Optimal energy management of microgrids using quantum teaching learning based algorithm. IEEE Trans. Smart Grid 12(6), 4834–4842 (2021).

Hu, M., Xiao, F. & Wang, S. Neighborhood-level coordination and negotiation techniques for managing demand-side flexibility in residential microgrids. Renew. Sustain. Energy Rev. 135, 110248 (2021).

Chen, G. & Yang, Q. An ADMM-based distributed algorithm for economic dispatch in islanded microgrids. IEEE Trans. Ind. Inform. 14(9), 3892–3903 (2018).

Lyu, C., Jia, Y. & Xu, Z. A novel communication-less approach to economic dispatch for microgrids. IEEE Trans. Smart Grid 12(1), 901–904 (2021).

Bhattacharjee, V. & Khan, I. A non-linear convex cost model for economic dispatch in microgrids. Appl. Energy 222, 637–648 (2018).

Zhou, C. & Zheng, C. 2020 15th IEEE Conference on Industrial Electronics and Applications (ICIEA) 839–844 (2020).

Wu, K. et al. Fast distributed Lagrange dual method based on accelerated gradients for economic dispatch of microgrids. Energy Rep. 6, 640–648 (2020).

Chang, X. et al. Accelerated distributed hybrid stochastic/robust energy management of smart grids. IEEE Trans. Ind. Inform. 17(8), 5335–5347 (2021).

Ullah, M. H. & Park, J. Peer-to-peer energy trading in transactive markets considering physical network constraints. IEEE Trans. Smart Grid 12(4), 3390–3403 (2021).

Li, Z., Li, P., Yuan, Z., Xia, J. & Tian, D. Optimized utilization of distributed renewable energies for island microgrid clusters considering solar-wind correlation. Electric Power Syst. Res. 206, 107822 (2022).

Biagioni, D. et al. Learning-accelerated ADMM for distributed dc optimal power flow. IEEE Control Syst. Lett. 6, 1–6 (2020).

Ichnowski, J. et al. Accelerating quadratic optimization with reinforcement learning. Adv. Neural Inf. Process. Syst. 34, 21043–21055 (2021).

Zeng, S., Kody, A., Kim, Y., Kim, K. & Molzahn, D. K. A reinforcement learning approach to parameter selection for distributed optimization in power systems. arXiv preprint arXiv:2110.11991 (2021).

Haarnoja, T., Zhou, A., Abbeel, P. & Levine, S. Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. In Paper Presented at the 35th International Conference on Machine Learning, Stockholm, Sweden (2018).

Tightiz, L. & Yang, H. Resilience microgrid as power system integrity protection scheme element with reinforcement learning based management. IEEE Access 9, 83963–83975 (2021).

Shin, C. et al. The ENERTALK dataset, 15 Hz electricity consumption data from 22 houses in Korea. Sci. Data 6(1), 1–13 (2019).

Seo, Y. & Hong, W. Constructing electricity load profile and formulating load pattern for urban apartment in Korea. Energy Build. 78, 222–230 (2014).

Lee, D. & Choi, S. Reinforcement learning-based energy management of smart home with rooftop solar photovoltaic system, energy storage system, and home appliances. Sensors 19(18), 3937 (2019).

Shi, Y. et al. Distributed model predictive control for joint coordination of demand response and optimal power flow with renewables in smart grid. Appl. Energy 290, 116701 (2021).

Korea Electric Power Corporation. Electric rates table in South Korea. http://cyber.kepco.co.kr/ckepco/front/jsp/CY/E/E/CYEEHP00201.jsp. Accessed 30 May 2022.

Luo, F., Kong, W., Ranzi, G. & Dong, Z. Y. Optimal home energy management system with demand charge tariff and appliance operational dependencies. IEEE Trans. Smart Grid 11(1), 4–14 (2019).

Ghasemi, S., Mohammadi, M. & Moshtagh, J. A new look-ahead restoration of critical loads in the distribution networks during blackout with considering load curve of critical loads. Electric Power Syst. Res. 191, 106873 (2021).

Mincu, P. R. & Boboc, T. A. 2017 International Conference on Energy and Environment (CIEM) 480–484 (IEEE, 2017).

Mhanna, S., Verbič, G. & Chapman, A. C. Adaptive ADMM for distributed AC optimal power flow. IEEE Trans. Power Syst. 34(3), 2025–2035 (2018).

Ward, P. N., Smofsky, A. & Bose, A. J. Improving exploration in soft-actor-critic with normalizing flows policies. arXiv preprint arXiv:1906.02771 (2019).

Kobyzev, I., Prince, S. J. & Brubaker, M. A. Normalizing flows: An introduction and review of current methods. IEEE Trans. Pattern Anal. Mach. Intell. 43(11), 3964–3979 (2020).

Mazoure, B., Doan, T., Durand, A., Pineau, J. & Hjelm, R. D. Leveraging exploration in off-policy algorithms via normalizing flows. In Paper Presented at the 3rd Conference on Robot Learning, Osaka, Japan (2020).

Open energy system databases renewables.ninja. http://www.renewables.ninja. Accessed 1 June 2022.

The weather year round anywhere. https://weatherspark.com/h/d/142033/2017/8/15/. Accessed 1 June 2022.

Diamond, S. & Boyd, S. CVXPY: A python-embedded modeling language for convex optimization. J. Mach. Learn. Res. 17(1), 1–5 (2016).

Stellato, B., Banjac, G., Goulart, P., Bemporad, A. & Boyd, S. OSQP: An operator splitting solver for quadratic programs. Math. Program. Comput. 12(4), 637–672 (2020).

Acknowledgements

This work was supported by the National Research Foundation of Korea (NRF) Grant funded by the Korea government (MSIT) (NRF-2021R1F1A1063640).

Author information

Contributions

Conception: L.T. Acquisition of data: L.T. Analysis of data: all authors. Writing: L.T. Critical revision: J.Y. Final approval: all authors.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tightiz, L., Yoo, J. A robust energy management system for Korean green islands project. Sci Rep 12, 22005 (2022). https://doi.org/10.1038/s41598-022-25096-3

DOI: https://doi.org/10.1038/s41598-022-25096-3
