Abstract

Protein language models have emerged as an alternative to multiple sequence alignment for enriching sequence information and improving downstream prediction tasks such as biophysical, structural, and functional properties. Here we show that a method called SPOT-1D-LM combines traditional one-hot encoding with the embeddings from two different language models (ProtTrans and ESM-1b) for the input and yields a leap in accuracy over single-sequence-based techniques in predicting protein 1D secondary and tertiary structural properties, including backbone torsion angles, solvent accessibility and contact numbers for all six test sets (TEST2018, TEST2020, Neff1-2020, CASP12-FM, CASP13-FM and CASP14-FM). More significantly, it has a performance comparable to profile-based methods for those proteins with homologous sequences. For example, the accuracy for three-state secondary structure (SS3) prediction for TEST2018 and TEST2020 proteins are 86.7% and 79.8% by SPOT-1D-LM, compared to 74.3% and 73.4% by the single-sequence-based method SPOT-1D-Single and 86.2% and 80.5% by the profile-based method SPOT-1D, respectively. For proteins without homologous sequences (Neff1-2020) SS3 is 80.41% by SPOT-1D-LM which is 3.8% and 8.3% higher than SPOT-1D-Single and SPOT-1D, respectively. SPOT-1D-LM is expected to be useful for genome-wide analysis given its fast performance. Moreover, high-accuracy prediction of both secondary and tertiary structural properties such as backbone angles and solvent accessibility without sequence alignment suggests that highly accurate prediction of protein structures may be made without homologous sequences, the remaining obstacle in the post AlphaFold2 era.

Similar content being viewed by others

Introduction

Recently, Alphafold2 has achieved what was thought impossible: predicted protein structures at experimental accuracy for the majority of target proteins in critical assessment of structure prediction techniques (CASP14)1. This revolution was built on accumulating improvement in predicting backbone secondary structure2,3,4,5,6 and residue-residue contact maps7,8,9 along with advances in deep learning techniques. This success, however, does not mean the protein structure prediction problem is solved, as AlphaFold2 requires a minimum of 30 effective homologous sequences to achieve an accurate structure prediction1 and a large portion of proteins lacks homologous sequences10. Moreover, sequence-homology search requires increasingly intensive computing time. Thus, it is essential to develop accurate structure prediction methods without relying on homologous sequences. To do this, the first step is to develop accurate prediction of protein backbone and other 1D-structural properties for proteins with few homologous sequences.

To date, only a few single-sequence-based methods have been developed for protein secondary structure prediction. Examples are PSIPRED-Single11, SPIDER3-Single12, ProteinUnet13, and SPOT-1D-Single14. PSIPRED-Single predicts the secondary structure only while SPIDER3-Single, ProteinUnet, and SPOT-1D-Single predicts secondary structure, Accessible Surface Area (ASA)15, Half-Sphere Exposure (HSE)16 and Backbone torsion angles (\(\psi \), \(\phi \), \(\theta \), and \(\tau \)). SPIDER3-Single employed iterative learning on a two-layer Bidirectional Long-Short-Term-Memory(LSTM) cells17 on a training set of approximately 10000 proteins. ProteinUnet followed the same strategy except replacing the Bi-LSTM model with a convolution-based Unet architecture18, which achieved a similar performance but with a smaller computational requirement. More recently, SPOT-1D-Single improved over all previous predictors by taking advantage of a high sequence identity training set and an ensemble of Convolution and LSTM based hybrid to improve the performance on completely independent test sets. Although these single-sequence models do improve over profile-based methods for proteins with a low number of effective homologous sequences (Neff), there is above 10% gap for those sequences with higher Neff values: 74% for three-state secondary structure prediction, compared to 86% by profile-based techniques14.

Recently, unsupervised deep learning methods inspired by Natural Language Processing were introduced to extract features from protein sequences19,20,21,22. These methods were trained on extensive protein databases such as Uniref23, Uniclust24, Pfam25, and BFD26,27. One state-of-the-art protein language model (LM) is ProtTrans22 trained on the Uniref50 dataset. It employs a transformer-based auto-encoder model T5 to generate the embedding. Another protein language model also trained on Uniref50 dataset is ESM-1b, which uses a 34 Transformer model21. Both ProtTrans and ESM-1b trained downstream models for predicting secondary structure with significant improved performance. However, they did not provide comprehensive benchmark testing. Moreover, they did not examine other one-dimensional properties such as ASA, Half-Sphere Exposure and Backbone torsion angles.

In this work, we explored the combined use of ProtTrans and ESM-1b generated embedding to train a downstream predictor of secondary structure and other 1D tertiary structural properties. We demonstrated that the new alignment-free model can achieve a performance comparable to or better than sequence-profile-based prediction of 1D structural properties for both high and low Neff proteins without searching for homologous sequences.

Results

Feature analysis

Our model was built on a combination of three main input features: single-sequence one-hot, ESM-1b, and ProtTrans encodings. We trained three individual neural network models (Two-Layer LSTM, MS-ResNet, and MS-Res-LSTM) with different combinations of these three input features. Results are shown in Fig. 1 for three-state (SS3) secondary structure prediction on independent test sets of TEST2018, TEST2020, and Neff1-2020 datasets. The accuracy on the easy TEST2018 (86.5% by three features) is significantly higher than on the hard set TEST2020 (80%) as expected. At the single feature level, both ESM-1b and ProtTrans encodings are significantly better than one-hot encoding in predicting secondary structure. ProtTrans has comparable performance to ESM-1b in TEST2018 but a better performance in the more difficult TEST2020 and Neff1-2020 sets. Adding one-hot encoding to ProtTrans makes marginal improvement over ProtTrans alone on TEST2018 but a larger improvement in TEST2020 and Neff1-2020. On the other hand, adding one-hot encoding to ESM-1b makes a comparable performance on TEST2018 but worse performance on TEST2020. Similar trends are also visible for eight-state (SS8) prediction (Supplementary Fig. S1). What is the most important is that combining three features makes a consistent improvement in all three datasets and three networks although adding one-hot-encoding only leads to minor improvement over ProtTrans+ESM-1b embedding but is statistically significant (P-value of \({1.81\times 10^{-2}}\) on all test sets combined). The improvement is the largest for the difficult case (TEST2020 and Neff1-2020). Overall, the three-state (SS3) secondary structure accuracy for all test sets improves 7-10% from 73-76% for a single-sequence-based method (one-hot-encoding) to 80-86% after combining three features. Moreover, the performance on TEST2020 and Neff1-2020 is nearly identical, indicating that, unlike profile-based models, the performance of the current alignment-free method is independent of how many homologous sequences a protein has. Similar trends were observed for eight-state (SS8) structure prediction (Supplementary Fig. S1), ASA, and HSE (Supplementary Table S1), and backbone torsion angle prediction (Supplementary Table S2).

Ensemble learning

The individual models were combined into an ensemble to further improve model performance. To demonstrate the advantage of ensemble learning over individual models, Table 1 presents the results of the selected three models and the results of the ensemble on TEST2018 and TEST2020. As we can see, for all properties tested, the trends we observed in this table is similar to what we observed in our previous work SPOT-1D-Single. The ensemble performance drops the error for \(\psi \), \(\phi \), \(\theta \), and \(\tau \) over the best individual model by 0.90%, 0.89%, 1.20%, and 0.87% on TEST2018, respectively. For secondary structure prediction three-state (SS3) and eight-state (SS8) the ensemble accuracy is 86.74% and 76.47%, which is 0.27% and 0.52% better than the best individual model. Similar improvement is also visible in Pearson’s Correlation Coefficient (PCC) for ASA, HSE-U, and CN predictions. The same trend is observed for TEST2020.

Methods comparison

The performance for three-state (SS3) secondary structure prediction given by our ensemble method named SPOT-1D-LM is compared with four single-sequence-based methods PSIPRED-Single, SPIDER3-Single, ProteinUnet and SPOT-1D-Single along with two profile-based methods SPOT-1D and NetSurfP-2.0 on six different test sets (TEST2018, TEST2020, Neff1-2020, CASP12-FM, CASP13-FM, and CASP14-FM) in Fig. 2. The result confirms a large leap from 72-74% by single-sequence-based methods to 80-86% by alignment-profile-based methods for the prediction accuracy for TEST2018, TEST2020, CASP12-FM, CASP13-FM, and CASP14-FM. The performance of profile-based methods is worse than the performance of single-sequence-based methods only for Neff1-2020, confirming the previous finding that profile-based methods lose their accuracy when lacking homologous sequences. Importantly, our language-model-based method achieves a comparable performance to the profile-based methods for all test sets. Furthermore, it improves over single-sequence-based methods even for Neff1-2020. For example, SPOT-1D-LM performs 0.66%, 1.6%, and 17% better than SPOT-1D, NetSurfP-2.0, and SPOT-1D-Single, respectively, for SS3 prediction for TEST2018. Its performance on TEST2020, CASP12-FM and CASP13-FM is comparable to that of the profile-based SPOT-1D and better than that of the profile-based NetSurfP-2.0. Similar trends are also observed for SS8 prediction, as shown in Supplementary Fig. S2. The improvement over profile-based methods on Neff1-2020 and single-sequence-based methods on all test sets is further confirmed by the statistical significance analysis in Supplementary Table S3. The matching performance of SPOT-1D-LM with profile-based models on backbone torsion angles are also illustrated in Tables 2, 3 for TEST2020, Supplementary Table S4 for CASP12-FM and Supplementary Table S5 for CASP13-FM. In order to compare to the downstream secondary structure prediction models from ProtTrans and ESM-1b, we compared our model SPOT-1D-LM on CASP12 and NEW364 test set used in ProtTrans, and SPOT-1D-LM outperforms both as shown in Supplementary Table S6.

Comparing the accuracy of secondary structure prediction of SPOT-1D-LM (this work) with single sequence methods (SPIDER3-Single, ProteinUnet, and SPOT-1D-Single) and sequence-profile-based methods (SPOT-1D and NetSurfP-2.0) on six test sets (TEST2018, TEST2020, Neff1-2020, CASP12-FM, CASP13-FM, and CASP14-FM) for three-state (SS3) secondary structure prediction.

Secondary structure is dominated by local interactions. How about structural properties that are based on tertiary structures? Fig. 3 examines the performance of different predictors for ASA prediction on six different test sets (TEST2018, TEST2020, Neff1-2020, CASP12-FM, CASP13-FM, and CASP14-FM). Again, we observe that profile-based methods perform far better than single-sequence-based methods in ASA prediction except when Neff=1 (Neff1-2020). More importantly, SPOT-1D-LM performs the best for all test sets. It outperforms the profile-based method NetSurfP-2.0 by 4%, 4%, 10%, 0.9% and 9% on TEST2018, TEST2020, Neff1-2020, CASP12-FM, and CASP13-FM, respectively. Comparing to SPOT-1D, its improvement is 3%, 6%, 19%, 1%, and 9%, respectively. Better or comparable performance is also observed for other tertiary structural properties such as contact number (CN) and half sphere exposures (HSE-u and HSE-d) as shown in Table 2 for TEST2018, Table 3 for TEST2020, Supplementary Table S4 for CASP12-FM and Supplementary Table S5 for CASP13-FM.

As in Fig. 2 but for prediction of tertiary structure proteins (solvent accessibility).

To enlarge our test sets, TEST2020 contains low-resolution structures. To examine if these low-quality proteins affect our conclusion above, we also obtained TEST2020-HQ. As shown in Supplementary Table S7, we found that the performance on TEST2020 is essentially the same as the performance on TEST2020-HQ for all structural properties predicted. We also compared SPOT-1D-LM to other predictors on CASP14-FM proteins and find that SPOT-1D-LM outperforms other predictors, including profile-based methods for all properties as shown in Supplementary Table S8.

Discussions

In this paper, we have developed a new Language-model-based method for predicting one-dimensional structural properties of proteins, including secondary structure, solvent accessible surface area, and backbone torsion angles. We employed an ensemble of three network variants of ResNet and LSTM models, trained on approximately 40000 proteins with embedding generated from ESM-1b and ProtTrans. The model is then compared to other predictors on independent and non-redundant test sets created by removing any remote homologs (TEST2020, CASP12-FM, and CASP13-FM) or by 25% sequence identity cutoff (TEST2018). The large improvement of our method over any single-sequence-based methods for all structural properties is observed for all six test sets (TEST2018, TEST2020, Neff1-2020, CASP12-FM, CASP13-FM, and CASP14-FM). More importantly, we demonstrated that an alignment-free method can match or improve over an alignment-based method in predicting 1D structural properties, regardless if it is secondary-structure or tertiary-structure-based 1D properties.

The alignment-free method proposed here can skip the intensive computing time required to search for homologous sequences from an exponentially expanding sequence database. As shown in Supplementary Table S9, generating PsiBlast sequence profiles and HMM models will require 9.3 hours and 6.9 hours, respectively, for 250 proteins in TEST2018 by utilizing 16 cores of Intel(R) Xeon(R) CPU E5-2620 v4 @ 2.10GHz machine. After that, it takes additional 0.23 hours by NetSurfP-2.0 or 1.1 hours by SPOT-1D to complete the prediction. SPOT-1D, an ensemble of six different models, uses SPOT-Contact, SPIDER3, CCMpred and DCA as input. This makes the pipeline for SPOT-1D extensively time-consuming. By comparison, SPOT-1D-LM takes a total 0.29 hours on the same 16-core CPU for complete prediction with the same or better accuracy. The single-sequence method SPOT-1D-Single is quicker than SPOT-1D-LM (0.04 hours) but with poorer performance. Moreover, SPOT-1D-LM can complete the whole prediction on a Titan X GPU for 0.04 hours only. Thus, it is now feasible for making highly accurate genome-scale prediction on protein secondary and tertiary structural features.

This method is limited to a protein of \(\le \)1024 amino-acid residues. This should not hamper the analysis of protein sequence features because the largest structural domain found so far contains 692 amino acid residues28,29 with the majority \(<200\) residues. Large (long) proteins usually are made of multiple, mostly independent structural domains connected by intrinsically disorder regions. Thus, it is possible to divide a protein into shorter domains prior to make secondary structure or other structural property prediction by using protein domain prediction tools30.

The successful matching performance between alignment-free and alignment-based methods highlights the potential of using a similar combination of language models for other structural properties such as proteins intrinsic disorder31 and distance-based contact maps8,32 as well as for end-to-end tertiary structure prediction1,33,34. In particular, AlphaFold2 has successfully predicted protein structures at an experimental accuracy in CASP14 for those proteins with a minimal of 30 homologous sequences1. Our results indicated the possibility that the success of AlphaFold2 can expand to the proteins without homologous sequences by using a combination of language models as input, rather than the multiple aligned homologous sequences as an input35,36.

Methods

Datasets

The training and test datasets employed in this work are from our previous work for developing SPOT-1D-Single. Briefly, we started with the dataset prepared by ProteinNet at the highest sequence identity cutoff of 95% according to mmseqs2 tool37 to maximize the training data. This leads to 50914 proteins submitted to PDB before the year 2016 with resolution \(<2.5\)Å.

To avoid overfitting and achieve an effective validation, we randomly selected 100 proteins one by one from the training set and compared their Hidden Markov Model (HMM) against the HMM of all other proteins in the training set at an e-value cutoff of less than 0.1. Any proteins that were remotely similar to the 100 validation proteins were removed from the training set. In addition, we removed any proteins with length more than 1024. This led to 38913 proteins for training and 99 proteins for validation.

The first test set employed is TEST20185 with 250 proteins released between January 01, 2018 and June 17, 2018 with resolution \(< 2.5\)Å and R-free \(< 0.25\), and have sequence similarity less than 25% to all pre-2018 proteins. We further obtained a hard test set TEST2020 that includes all proteins released between May 2018 and April 2020 with removal of close and remote homologs using HMM models to all proteins released before 2018 on PDB. Due to the limitation of the language model used, we further removed the proteins with lengths greater than 1024. The final TEST2020 contains 671 proteins. A further resolution constraint of \(<2.5\)Å and R-free\(<0.25\) led to 124 proteins forming TEST2020-HQ. Separating the proteins without homologs (Neff=1) from TEST2020, Neff1-2020 dataset was curated with 46 proteins.

Apart from the above-mentioned test sets, we also employed independent test sets CASP12-FM (released in year 2016) CASP13-FM (released in year 2018) and CASP14-FM (released in year 2020). These test sets include 22, 17, and 15 free-modelling proteins released during CASP12, CASP13, and CASP14, respectively. Free modeling targets are those proteins without known structural templates in the protein databank at the time of releases, which are after all proteins in the training and validation sets.

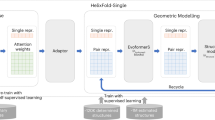

Input features

As shown in Fig. 4, we employed the one-hot encoding from the protein sequence concatenated to the language model embeddings generated using ESM-1b and ProtTrans models. The one-hot encoding has a dimension of L \(\times \) 20, where L is the length of the protein. The embedding from ESM-1b is generated from a model trained on the Uniref50 dataset and has a dimension of L \(\times \) 1280. The ProtTrans model was also trained on the Uniref50 and employed the T5-XL model to generate an embedding of dimension of L \(\times \) 1024. Concatenating all these features yielded the final input features of dimension L \(\times \) 2324. This input was utilized for both classification and regression models.

Outputs

The outputs of this method have been divided into two main categories: classification and regression. The classification output is extracted from the classification models with 11 output nodes dedicated for protein secondary structure. We use the Dictionary of Secondary Structure of Proteins (DSSP) for assigning three-state (SS3) and eight-state (SS8) secondary structures38. We also predicted 1D structural properties which fall under the regression category. They include the solvent accessible surface area (ASA), protein backbone angles (\(\psi \), \(\phi \), \(\theta \), and \(\tau \)), half-sphere exposures (HSE), and contact number (CN). These outputs are identical to the predictions in our previous methods SPOT-1D5 and SPOT-1D-Single14.

Neural network architecture

The model utilized in SPOT-1D-LM follows the neural networks utilized in SPOT-1D-Single14. In brief, we employed an ensemble of three neural network architectures: 2-layer BiLSTM, multi-scale-ResNet (MS-ResNet), and multi-scale-Res-LSTM (MS-Res-LSTM). The ensemble of LSTM-BRNN and ResNet-based models help the models to identify short- and long-range context throughout the sequence5. In total, we trained three models to form an ensemble of three for the classification tasks and regression tasks, respectively. Similar to SPOT-1D-Single, both classification and regression models were trained on a batch size of ten with cross-entropy loss and L1-loss, respectively. The ensemble of classification models employed the mean of the classification probabilities from each model. The mean was also employed for the ensemble of the ASA, HSE-u, HSE-d and CN regression models. For the angle prediction, we utilized the median as in SPOT-1D5 to avoid forbidden angle regions.

The first model we trained is a two-layered bidirectional-LSTM with hidden dimension of 1024 followed by two fully connected layers of size 100017. A dropout rate of 0.5 after each LSTM layer was used to avoid overfitting. The second model we trained is a MS-Resnet, which is made of three parallel stacks of ResNet architectures with a great performance for similar tasks5,8. The three stacks differ from each other in terms of the kernel size. The first, second, and third stacks of the ResNets have the kernel sizes of three, five, and seven, respectively. Each parallel stack has 15 blocks of ResNet for which the sizes of convolutional layers vary after every five blocks from 64-256. At the end, the output from all three stacks is then concatenated and passed through the output layer. In every ResNet block, we normalized and activated the output of each convolutional layer by applying batch normalization and ReLU activation function39,40. We also applied a dropout rate of 0.5 in each block. The third model we trained is MS-Res-LSTM. This model is a hybrid of the first two models. It includes the MS-ResNet in which one parallel stack of three is replaced by four bidirectional-LSTM layers of a hidden size of 128. The ResNet block stacks have the same configuration as the MS-ResNet stacks with kernel sizes of 5 and 7, respectively. A dropout rate of 0.5 was employed in the bidirectional-LSTM layer.

Performance evaluation

The three-state (SS3) and eight-state (SS8) secondary-structure predictions were evaluated based on the percentage accuracy by concatenating all the proteins together and making an overall assessment. Prediction of ASA, HSE-u, HSE-d, and CN was evaluated by calculating the Pearson’s Correlation Coefficient (PCC) between true and predicted values for each protein and then averaged over the whole dataset41. To evaluate the model performance for the backbone angles (\(\psi \), \(\phi \), \(\theta \), and \(\tau \) ), we calculate the Mean Absolute Error (MAE) between true angles and predicted angles for the whole dataset concatenated together.

Methods comparison

SPOT-1D-LM developed here was compared with single-sequence-based predictors SPOT-1D-Single, ProteinUnet, SPIDER-Single3, and PSIPRED-Single. We also compared our method against profile-based methods SPOT-1D, and NetSurfP-2.0. All above-stated methods have stand-alone programs available online at the following links respectively:

-

SPOT-1D-Single: https://github.com/jas-preet/SPOT-1D-Single.

-

ProteinUnet: https://codeocean.com/capsule/2521196/tree/v1.

-

SPIDER3-Single: https://servers.sparks-lab.org/downloads/SPIDER3-Single_np.tgz.

-

PSIPRED-Single: http://bioinfadmin.cs.ucl.ac.uk/downloads/psipred/.

-

SPOT-1D: https://servers.sparks-lab.org/downloads/SPOT-1D-local.tar.gz.

-

NetSurfP-2.0: https://services.healthtech.dtu.dk/service.php?NetSurfP-2.0.

Data availability

The data used by SPOT-1D-LM is publicly available at https://github.com/jas-preet/SPOT-1D-LM.

Code availability

SPOT-1D-LM predictor is available as a server at https://sparks-lab.org/server/SPOT-1D-LM/ and standalone software at https://github.com/jas-preet/SPOT-1D-LM to run on a local computer.

References

Jumper, J. et al. Highly accurate protein structure prediction with AlphaFold. Nature 1–11 (2021).

Wang, S., Peng, J., Ma, J. & Xu, J. Protein secondary structure prediction using deep convolutional neural fields. Sci. Rep. 6, 1–11 (2016).

Fang, C., Shang, Y. & Xu, D. MUFOLD-SS: New deep inception-inside-inception networks for protein secondary structure prediction. Proteins Struct. Funct. Bioinform. 86, 592–598 (2018).

Klausen, M. S. et al. NetSurfP-2.0: Improved prediction of protein structural features by integrated deep learning. Proteins Struct. Funct. Bioinform. 87, 520–527 (2019).

Hanson, J., Paliwal, K., Litfin, T., Yang, Y. & Zhou, Y. Improving prediction of protein secondary structure, backbone angles, solvent accessibility and contact numbers by using predicted contact maps and an ensemble of recurrent and residual convolutional neural networks. Bioinformatics 35, 2403–2410 (2019).

Xu, G., Wang, Q. & Ma, J. OPUS-TASS: A protein backbone torsion angles and secondary structure predictor based on ensemble neural networks. Bioinformatics 36, 5021 (2020).

Wang, S., Sun, S., Li, Z., Zhang, R. & Xu, J. Accurate de novo prediction of protein contact map by ultra-deep learning model. PLoS Comput. Biol. 13, e1005324 (2017).

Hanson, J., Paliwal, K., Litfin, T., Yang, Y. & Zhou, Y. Accurate prediction of protein contact maps by coupling residual two-dimensional bidirectional long short-term memory with convolutional neural networks. Bioinformatics 34, 4039–4045 (2018).

Li, Y., Zhang, C., Bell, E. W., Yu, D.-J. & Zhang, Y. Ensembling multiple raw coevolutionary features with deep residual neural networks for contact-map prediction in CASP13. Proteins Struct. Funct. Bioinform. 87, 1082–1091 (2019).

Pearson, W. R. An introduction to sequence similarity (“homology’’) searching. Curr. Protoc. Bioinform. 42, 3 (2013).

McGuffin, L. J., Bryson, K. & Jones, D. T. The PSIPRED protein structure prediction server. Bioinformatics 16, 404–405 (2000).

Heffernan, R. et al. Single-sequence-based prediction of protein secondary structures and solvent accessibility by deep whole-sequence learning. J. Comput. Chem. 39, 2210–2216 (2018).

Kotowski, K., Smolarczyk, T., Roterman-Konieczna, I. & Stapor, K. ProteinUnet: An efficient alternative to SPIDER3-single for sequence-based prediction of protein secondary structures. J. Comput. Chem. 42, 50 (2020).

Singh, J. et al. SPOT-1D-Single: Improving the single-sequence-based prediction of protein secondary structure, backbone angles, solvent accessibility and half-sphere exposures using a large training set and ensembled deep learning. Bioinformatics 1, 9 (2021).

Chothia, C. Hydrophobic bonding and accessible surface area in proteins. Nature 248, 338–339 (1974).

Heffernan, R. et al. Highly accurate sequence-based prediction of half-sphere exposures of amino acid residues in proteins. Bioinformatics 32, 843–849 (2016).

Schuster, M. & Paliwal, K. K. Bidirectional recurrent neural networks. IEEE Trans. Signal Process. 45, 2673–2681 (1997).

Ronneberger, O., Fischer, P. & Brox, T. U-net: Convolutional networks for biomedical image segmentation. in International Conference on Medical image computing and computer-assisted intervention, 234–241 (Springer, 2015).

Rao, R. et al. Evaluating protein transfer learning with TAPE. Adv. Neural Inf. Process. Syst. 32, 9689–9701 (2019).

Heinzinger, M. et al. Modeling aspects of the language of life through transfer-learning protein sequences. BMC Bioinform. 20, 723 (2019).

Rao, R., Meier, J., Sercu, T., Ovchinnikov, S. & Rives, A. Transformer protein language models are unsupervised structure learners. bioRxivhttps://doi.org/10.1101/2020.12.15.422761 (2020).

Elnaggar, A. et al. ProtTrans: Towards cracking the language of life’s code through self-supervised deep learning and high performance computing. arXiv preprintarXiv:2007.06225 (2020).

Suzek, B. E., Huang, H., McGarvey, P., Mazumder, R. & Wu, C. H. UniRef: Comprehensive and non-redundant UniProt reference clusters. Bioinformatics 23, 1282–1288 (2007).

Mirdita, M. et al. Uniclust databases of clustered and deeply annotated protein sequences and alignments. Nucleic Acids Res. 45, D170–D176 (2017).

Bateman, A. et al. The pfam protein families database. Nucleic Acids Res. 32, D138–D141 (2004).

Steinegger, M., Mirdita, M. & Söding, J. Protein-level assembly increases protein sequence recovery from metagenomic samples manyfold. Nat. Methods 16, 603–606 (2019).

Steinegger, M. & Söding, J. Clustering huge protein sequence sets in linear time. Nat. Commun. 9, 1–8 (2018).

Jones, S. et al. Domain assignment for protein structures using a consensus approach: Characterization and analysis. Protein Sci. 7, 233–242 (1998).

Wheelan, S. J., Marchler-Bauer, A. & Bryant, S. H. Domain size distributions can predict domain boundaries. Bioinformatics 16, 613–618 (2000).

Wang, Y., Zhang, H., Zhong, H. & Xue, Z. Protein domain identification methods and online resources. Comput. Struct. Biotechnol. J. 19, 1145 (2021).

Hanson, J., Paliwal, K. K., Litfin, T. & Zhou, Y. SPOT-Disorder2: Improved protein intrinsic disorder prediction by ensembled deep learning. J. Genom. Proteom. Bioinform. 17(6), 645–656 (2019).

Wu, Q. et al. Protein contact prediction using metagenome sequence data and residual neural networks. Bioinformatics 36, 41–48 (2020).

AlQuraishi, M. End-to-end differentiable learning of protein structure. Cell Syst. 8, 292–301 (2019).

Baek, M. et al. Accurate prediction of protein structures and interactions using a three-track neural network. Science 373, 871–876 (2021).

Chowdhury, R. et al. Single-sequence protein structure prediction using language models from deep learning. bioRxiv (2021).

Wang, W., Peng, Z. & Yang, J. Single-sequence protein structure prediction using supervised transformer protein language models. bioRxiv (2022).

Steinegger, M. & Söding, J. MMseqs2 enables sensitive protein sequence searching for the analysis of massive data sets. Nat. Biotechnol. 35, 1026–1028 (2017).

Kabsch, W. & Sander, C. Dictionary of protein secondary structure: Pattern recognition of hydrogen-bonded and geometrical features. Biopolym. Orig. Res. Biomol. 22, 2577–2637 (1983).

Ioffe, S. & Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv preprintarXiv:1502.03167 (2015).

Agarap, A. F. Deep learning using rectified linear units (relu). arXiv preprintarXiv:1803.08375 (2018).

Benesty, J., Chen, J., Huang, Y. & Cohen, I. Pearson correlation coefficient. In Noise reduction in speech processing, 1–4 (Springer, 2009).

Acknowledgements

This work was supported by the Australian Research Council DP210101875 to K.P. We gratefully acknowledge the use of the High-Performance Computing Cluster Gowonda to complete this research and the aid of the research cloud resources provided by the Queensland Cyber Infrastructure Foundation (QCIF). We also gratefully acknowledge the support of NVIDIA Corporation with the donation of the Titan V GPU used for this research. The support of Shenzhen Science and Technology Program (Grant No. KQTD20170330155106581) and the Major Program of Shenzhen Bay Laboratory S201101001 are also acknowledged.

Author information

Authors and Affiliations

Contributions

JS\(^{*}\), KP, and JS designed network architectures, JS\(^{*}\) and TL prepared the data sets. JS\(^{*}\) generated input features and performed deep learning models training, the results analysis, wrote the manuscript, and build a standalone tool and web API. TL and JS\(^{*}\) participated in the initial design. YZ assisted in result analysis, and improved the whole manuscript. All authors read, contributed to the discussion, and approved the final manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Singh, J., Paliwal, K., Litfin, T. et al. Reaching alignment-profile-based accuracy in predicting protein secondary and tertiary structural properties without alignment. Sci Rep 12, 7607 (2022). https://doi.org/10.1038/s41598-022-11684-w

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-022-11684-w

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.