Abstract

Expert weight determination is a critical issue in the design concept evaluation process, especially for complex products. However, this phase is often ignored by decision makers. For the evaluation of complex product design concepts, experts are drawn from clusters with different backgrounds. This work proposes a novel integrated two-layer method to determine expert weights under these circumstances. In the first layer, a hybrid model that integrates the entropy weight model and the Multiplicative analytical hierarchy process (AHP) method is presented. In the second layer, a minimized variance model is applied to reach a consensus. The final expert weight is then determined from the results of both layers. A real-life example of cruise ship cabin design evaluation is used to demonstrate the proposed expert weight determination method. To analyze the feasibility of the proposed method, evaluation results with and without expert weight determination are compared. The results show that the expert weight determination method is an effective approach to improving the accuracy of design concept evaluation.

Introduction

Design concept evaluation is a critical phase in new product development (NPD). An ideal initial design concept can match customers’ requirements, and save time and cost for companies in the competitive global market1. The preliminary design concept often shows the novelty, feasibility and quality of the product. Design concept evaluation in the early stage is a phase to choose a suitable plan from the initial design concepts. It is essential in the early stage of NPD, especially for complex products2, because once the design concept is fixed, it is not easy to modify it in later stages. The requirements and preferences of customers and the structure and material of the product are determined in this phase3. The cost and sustainability of the product are also estimated.

As design concept evaluation is based on a variety of factors, it can be seen as a multiple attribute decision making (MADM) problem. In MADM problems, due to the uncertain environment, individual judgement may be imprecise and subjective, and thus group decision making (GDM) is an effective solution in design concept evaluation4. In GDM, the ranking of the alternatives is obtained by integrating the experts’ judgements. Kabak et al.5 reviewed related literature and proposed a generic conceptual MADM framework with three stages, as shown in Fig. 1. The first stage is the structuring stage. Alternatives, attributes and experts are defined in this stage, and the weights of experts are also determined here. After that, in the assessment stage, the weights of attributes are obtained. In the final selection stage, the alternatives are ranked based on an appropriate mathematical model.

In the past, the alternative ranking approach attracted the most attention. By 2010, over 70 selection methods had been proposed for MADM problems. According to King6, the analytical hierarchy process (AHP), utility theory, graphical tools, quality function deployment (QFD) and fuzzy logic7,8 approaches are the most popular methods in design concept evaluation. In particular, the Technique for Order Preference by Similarity to Ideal Solution (TOPSIS), the VlseKriterijumska Optimizacija I KOmpromisno Resenje (VIKOR), the ELimination Et Choice Translating REality (ELECTRE) methods and their extended approaches have been developed to address MADM problems9,10,11,12. Scholars have focused on establishing evaluation models suited to specific real-life projects. However, studies on expert weight determination are very limited13,14. Expert weight determination is mentioned in only 41% of the top-cited papers on GDM. As a result, experts are often treated as a homogeneous group by default, and the individual weights are set equal. Design concept evaluation is a specific application area of MADM methods1, and the most frequently cited studies in this area are listed in Table 1. Although researchers have made efforts to optimize the methods for obtaining criteria weights, expert weight is rarely mentioned.

In Fig. 2, from the cognitive system of design concept evaluations, we can infer that an expert needs to make an individual judgement based on their cognition and preference. Thus, the judgement is subjective, depending on the expert’s knowledge, culture, experience and aesthetic. However, it is practically impossible to form a group of experts with a similar background. The drawback of an individual in the expert group may influence the accuracy of experts’ judgement.

Fig. 2. Cognitive system of design concept evaluation20.

If the weights of the experts are distributed equally or ignored, the final decision may be incorrect. Thus, some researchers have started to develop appropriate methods to determine the expert weight. Their investigations note that not every selected expert is familiar with all the criteria requiring judgement, and some specialized criteria may be beyond the knowledge of some experts. Thus, the contribution of experts may not be equal in the decision process. It is essential to determine the weight of experts in the decision process to eliminate any deviation caused by the experts’ imprecise cognition.

Generally, there are three ways to solve weight determination problems: the subjective approach, the objective approach and the integrated approach, as shown in Fig. 3. In the subjective methods, the weight of each expert is calculated by integrating the evaluations given by the other experts, which depend on factors such as age, attitude and experience21. The objective methods are based on the available evaluation data, and no extra information is required. Hence, the expert weight is computed with an appropriate mathematical model22. Compared to the subjective methods, they depend less on personal opinion, but they ignore the expert’s personal preference. In this part, the expert weight determination methods are reviewed.

In the early stage, subjective methods dominated in studies on expert weight determination. Expert weights were assigned by the supervisors or by comparison between individual expert groups. In the subjective expert weight determination, pairwise comparison matrices were established, then methods with a geometric mean were proposed to determine the expert weight. Multiplicative AHP23 and Simple Multi-Attribute Rating Technique (SMART) are two key approaches in subjective expert weight determination24.

Ramanathan and Ganesh23 were the first scholars to develop the Multiplicative AHP framework in the expert weight determination area. They proposed an eigenvector based method by comparing the experts’ influence in pairs, as interpersonal comparison can help experts achieve a consensus without any interaction23. Barzilai et al.24 improved the Multiplicative AHP method and explained the relationship between the Multiplicative AHP method and the SMART method. The geometric mean of the scaled gradation indices or values is applied in both methods21. As well as the methods mentioned above, Delphi is another competent subjective approach in expert weight determination. Azadfallah25 applied the Delphi technique by comparing the attributes by experts in pairs, then computed the weights with the eigenvector method.

Subjective expert weight determination methods are based on relative judgements. To make precise judgements, the experts are expected to be familiar with every attribute. However, in real-life projects, it is not practical for every expert to meet this requirement. Moreover, as the number of attributes, alternatives, or experts increases, the pairwise comparison workload grows rapidly, so pairwise comparative judgements are not easy to implement in complex MADM problems. Objective weight determination methods have developed rapidly in recent years. There are three main families of objective expert weight determination methods (similarity-based, index-based and clustering-based), as well as some special methods such as those based on Markov chain theory26.

In similarity-based methods, expert weights are determined by distinguishing the expert’s evaluation or measuring the expert’s distance to the aggregated decision27,28. The similarity-based study is very close to the TOPSIS method, in which the expert whose decision has minimum distance to the ideal solution has the highest weight. Yue28,29 proposed a modified TOPSIS method, to determine the expert weights by measuring the distance between the expert judgement and the ideal decision. Yang proposed a rough group decision matrix to determine the expert weights30. Wan31 constructed a bi-objective program which could maximize the minimum degree of acceptance and minimize the maximal degree of rejection, and also introduced three approaches to measure the distance between the individual preference and the group. Jiang32 introduced a novel method to measure the distance between the expert preference and the ideal solution.

Index-based methods are divided into two groups: consensus-based methods and consistency-based methods. In consensus-based methods, experts are asked to adjust their preferences, or their weights are varied, to make their judgements more similar33. Pang34 developed a non-linear programming model and determined the expert weight by maximizing the group consensus, where the expert weight adjusts adaptively with the experts’ decisions. Xu35 also used a non-linear model, and proposed a genetic algorithm for expert weight determination. Dong36 introduced a consensus reaching process in group decision making. The method is proposed for non-cooperative expert groups, and the expert weights are determined dynamically by a self-management mechanism. Consistency-based methods are better suited to inaccurate judgements, using consistency indexes against the group decision to determine the weights of experts37. When some experts have higher reliabilities compared to others, the decision makers need to reach a consistent view through negotiations. Liu38 introduced an expert weight adjusting method based on the consistency-based method. The expert weights are first computed by the AHP method and the entropy method, and then optimized by the consistency level of a black-start result. Chen39 established a collective consistency matrix of all experts and determined the weight of experts by the consistency degree of each expert.

When the group is large, a clustering-based method may be appropriate in real-life problems. A multi-level weight determination method may optimize weights via different models according to the features of each layer40. Sometimes experts are assembled in several specific groups; the experts in the same group have a similar background, but the gaps between groups are large. As shown in Fig. 4, Liu40 proposed a two-layer model based on the 2-tuple linguistic (2TL) model. In the first layer, decision makers are separated into clusters by certain criteria, and the weight of each cluster depends on its importance. The clusters are regarded as small, well-organized systems with similar individual information, and Liu utilized an Entropy Weight Model to reach consistency. In the second layer, the experts within each cluster have similar status, occupations and experience. Therefore, their judgements should be similar but not identical41. To reach a consensus, a Minimized Variance Model is implemented in this layer to seek a minimized deviation among all variables.

Fig. 4. Two-layer expert weight determination method by Liu40.

The literature proposed problem-solving methods through a specific mathematical model or operator to determine expert weights, through methods such as comparing the deviation between the expert preference and the ideal solution, or simply adjusting the expert weight to make the experts achieve consensus. However, there are still some drawbacks. First, as some of the mathematical models or operators may focus on the overall average, some information that deviates from the average level may be ignored. Second, existing studies usually obtain the expert weight by a specific method. Once the problem is complex, one single method cannot reflect a real-life problem accurately.

The integrated method relies on an integration of two or more methods to eliminate the drawbacks of a single approach or simplify a complex method. As the advantage of integrated methods in solving complex GDM problems has become more pronounced in recent years, an increasing number of integrated expert weight determination methods have been presented in decision making22. Qi42 proposed models based on various conditions under interval-valued intuitionistic fuzzy decision environments and determined both criteria weight and expert weight. First, they introduced a method to measure the gap between decision matrices and the ideal decision matrix, and then they developed an approach to evaluate the similarity degree between individual decision matrices. Liu43 proposed another expert weight determination method, which integrated both subjective and objective expert weight determination in decision making. First, a plant growth simulation algorithm is applied to get the generalized Fermat–Torricelli point of individual preferences with interval number decision matrices. Then a similarity-based expert weight determination method is used. Finally, the expert weights obtained from both methods are aggregated. Jabeur44 also determined the expert weight based on subjective and objective components.

In product design concept evaluation, a group of experts must be selected in a proper way to ensure the correctness of the assessment. In complex product design concept evaluation projects, as shown in Fig. 5, experts are normally selected from experienced consumers and expert producers45.

Normally, most expert producers are designers and manufacturers. Hence, we can categorize the decision makers into the designer cluster (DC), the manufacturer cluster (MC), and the consumer cluster (CC). The cluster category can perfectly reflect the clustering information of experts. In addition, the integration of subjective and objective weights can improve the accuracy of the design concept evaluation46. However, recent studies seldom consider the subjective expert weight due to the workload when the expert group is large.

In our work, we integrated the subjective and objective expert weights with a two-layer cluster weight determination. The distribution of expert preferences in the clustering-based method is illustrated in Fig. 6. In the cluster layer (Layer 1), the Shannon entropy model can describe how well organized the clusters are, but cannot adequately reflect the individual expert preferences. Hence, a method that aggregates AHP and the entropy weight model is proposed for this layer. In the subsection layer (Layer 2), the decision makers in the same cluster are experts with similar knowledge and background, so their preferences should be highly consistent40. Under these circumstances, subjective pairwise comparison is omitted, because it would greatly increase the workload while its influence on the result may not be obvious. Thus, an objective minimized variance model is used here.

The rest of this paper is organized as follows. In “Preliminaries”, relevant expert weight determination methods are reviewed. In “Methodology”, the conceptual framework of expert weight determination is presented. In “Case study”, a real-life example is implemented and related analysis is presented. In “Comparison of methods with and without using weight determination”, a comparison with and without using expert weight determination is discussed. In “Conclusions”, the conclusion is provided.

Preliminaries

This study proposes a novel integrated expert weight determination method. Before presenting our method, some related expert weight determination methods are reviewed.

The multiplicative AHP method

Multiplicative AHP is a significant subjective expert weight determination method. The method is easy to implement in real-life cases23. Similar to other subjective methods, the Multiplicative AHP determines the expert weight through pairwise comparisons. Originally, each expert in the decision maker group was asked to assess every group member, including him/herself, which may lead to a personal upward bias21,26. To eliminate this bias, in Honert’s study, each expert’s comparisons involving him/herself are no longer counted. Thus, each expert in a decision maker group with \(G\) experts only needs to compare the other \((G-1)\) experts.

The expert weight determination method of Multiplicative AHP in Honert’s approach can be summarized in the following steps21.

Step 1: Assume the expert group has \(G\) members and DM \(y\) is a member of the expert group. As shown in Table 2, every expert is assigned to make a linguistic comparison between attributes by individual judgement using pre-provided terms (Very strong preference / Strong preference / Definite preference / Weak preference / Indifferent). \({S}_{k}\) and \({S}_{j}\) represent the expert’s preference for the alternatives \({A}_{k}\) and \({A}_{j}\), respectively. Hence, the comparison between \({S}_{k}\) and \({S}_{j}\) can be converted into a numerical value \({\delta }_{jky}\) on a geometric scale based on the content of Table 2. Then the matrix \(\{{r}_{jky}\}\) can be obtained by the equation below:

\({r}_{jky}=\mathrm{exp}(\upgamma {\delta }_{jky})\)

where \(\upgamma \) represents a scale parameter, commonly set to \(\mathrm{ln}2\). Next, the approximate vector \(p\) of stimulus values can be determined by the logarithmic least-squares method of Lootsma47. The vector \(p\) minimizes

Step 2: Substituting \({w}_{j}=\mathrm{ln}{p}_{j}\),\({w}_{k}=\mathrm{ln}{p}_{k}\) and \({q}_{jky}=\mathrm{ln}{r}_{jky}=\upgamma {\delta }_{jky}\), the function transforms to

The associated set of normal equations can be transferred by \({\mathrm{w}}_{j}\) to

where \(j=1,2,\dots ,G\). The variable \(j\) has the same range in the following equations in this part. \({N}_{jk}\) denotes the cardinality of \({S}_{jk}\). According to Lootsma, \({N}_{jk}=G-2\). As \((G-1)\) comparisons are made for each expert, the maximum number of pairwise comparisons is \((G-1)(G-2)/2\), and Eq. (4) can be rewritten as:

The equation can be simplified as:

Step 3: For any alternative \({A}_{k}\) and \({A}_{j}\), from Table 2, we have \({q}_{jky}=-{q}_{kjy}\), \({S}_{jj}\) is empty and \({q}_{jjy}=0\).

Assume each expert made all the comparisons, then \(\sum\limits_{k=1,k\ne j}^{G}{\mathrm{w}}_{k}=0\), the equation can be written in the following form:

Thus the expert weight of decision makers \({p}_{j}\) can be computed by Eq. (8).
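
For orientation, the least-squares chain behind Steps 1 to 3 can be written out as follows. This is a reconstruction from the definitions in the text and the standard logarithmic least-squares formulation, not a verbatim copy of the numbered equations; \({S}_{jk}\) is taken here to be the set of experts who judge the pair \((j,k)\), which is an assumption of this sketch.

\[
\min_{p}\ \sum_{j<k}\ \sum_{y\in S_{jk}} \left(\ln r_{jky} - \ln p_{j} + \ln p_{k}\right)^{2}
\]

\[
\min_{w}\ \sum_{j<k}\ \sum_{y\in S_{jk}} \left(q_{jky} - w_{j} + w_{k}\right)^{2},
\qquad w_{j}=\ln p_{j},\quad q_{jky}=\ln r_{jky}=\gamma\,\delta_{jky}
\]

\[
w_{j}\sum_{k\ne j} N_{jk} \;-\; \sum_{k\ne j} N_{jk}\,w_{k} \;=\; \sum_{k\ne j}\ \sum_{y\in S_{jk}} q_{jky}
\]

\[
(G-1)(G-2)\,w_{j} \;-\; (G-2)\sum_{k\ne j} w_{k} \;=\; \sum_{k\ne j}\ \sum_{y\in S_{jk}} q_{jky}
\qquad (N_{jk}=G-2)
\]

Because only the differences \(w_{j}-w_{k}\) enter the objective, \(w\) is determined only up to an additive constant, so a normalization condition is needed to single out one solution.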

After normalization with the equation below, the subjective expert weight \({w}_{i}^{s}\) can be determined.
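
The steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `multiplicative_ahp_weights` and the data layout `delta[y][j][k]` are hypothetical, every pair \((j,k)\) is assumed to be judged by all \(G-2\) other experts, and the free additive constant in \(w\) is fixed by requiring the \(w_j\) to sum to zero over all experts. The normalized weights do not depend on that last choice as long as \(w\) solves the least-squares problem.

```python
import math


def multiplicative_ahp_weights(delta, gamma=math.log(2)):
    """Normalised expert weights from pairwise gradation indices.

    delta[y][j][k] is expert y's gradation index for expert j over expert k
    (positive when j is preferred, and delta[y][k][j] == -delta[y][j][k]).
    Entries where y equals j or k are never read (hypothetical layout).
    """
    G = len(delta)
    if G < 3:
        raise ValueError("at least three experts are needed")
    judges_per_pair = G - 2  # everyone except the two experts being compared
    w = []
    for j in range(G):
        total = 0.0
        for k in range(G):
            if k == j:
                continue
            for y in range(G):
                if y in (j, k):
                    continue  # self-involving comparisons are not counted
                total += gamma * delta[y][j][k]
        # Solution of the normal equations under the assumption sum(w) == 0
        w.append(total / (G * judges_per_pair))
    p = [math.exp(value) for value in w]
    p_sum = sum(p)
    return [value / p_sum for value in p]


# Toy data for three experts: each pair is judged by the one remaining expert.
G = 3
delta = [[[None] * G for _ in range(G)] for _ in range(G)]


def judge(y, j, k, value):
    delta[y][j][k] = value
    delta[y][k][j] = -value


judge(2, 0, 1, 2)  # expert 2: expert 0 is preferred over expert 1 by two grades
judge(1, 0, 2, 2)  # expert 1: expert 0 is preferred over expert 2 by two grades
judge(0, 1, 2, 0)  # expert 0: indifferent between experts 1 and 2

weights = multiplicative_ahp_weights(delta)
```

In this toy case the judgements are perfectly consistent, so the fitted ratio between expert 0 and each of the others equals \(\exp(2\ln 2)=4\), and the normalized weights come out as 2/3, 1/6 and 1/6.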

2-tuple linguistic and the model of the two-layer weight determination method

Definitions

As described in “Introduction”, the two-layer weight determination method is a critical cluster-based method. The 2-tuple linguistic (2TL) model provides the environment of the two-layer method, and this part introduces the rationale for selecting 2TL. Due to the uncertain environment, fuzzy set48, rough set49, grey decision50, and some other extended methods are applied in MADM problems51. 2TL is a model based on the linguistic fuzzy set proposed by Herrera and Martínez52. A symbolic translation value \(\alpha \in [-0.5,0.5)\) is introduced to the linguistic fuzzy set to describe the flexibility of words. Reviews and extended models of 2TL can be found in Martínez53,54 and Malhotra55. Xu proposed non-linear aggregation operators in decision making with uncertain linguistic information56,57. Wang and Hao58 introduced a new 2TL model based on ‘symbolic proportion’ to preserve the integrity of information, as the proportional 2-tuples can well illustrate the uncertainty of the linguistic judgement. As linguistic term sets are not always uniformly and symmetrically distributed, Herrera59 defined such term sets as unbalanced linguistic term sets to reflect the non-linear thinking of human beings, and proposed a method that integrates a representative algorithm and a computational approach. As an effective method in MADM, the 2TL model has produced crucial results in various areas such as quality assessment60, web system design61 and group decision making62. The 2TL model is well suited to most subjective problems, as linguistic variables have an advantage in expressing ‘approximate information’. For example, we can use the 2TL model to describe ‘how young the person is’, using the linguistic words ‘very young, young or not young’.

In design concept evaluation, experts are assigned to evaluate plans on a large number of different attributes. Some of the attributes do not have an exact value, and thus the evaluation of the experts may be subjective and imprecise. Approaches such as the House of Quality method with multi-point scale measurement and the intuitionistic fuzzy set have been proposed to tackle this challenge. However, the 2TL is suitable for design concept evaluation problems for two reasons. First, design concept evaluation is a complex multi-attribute decision based on the aggregation of the decision makers’ judgements, which makes it an MADM problem. Second, experts prefer to make judgements in natural language with adverbs of degree such as ‘very’ and ‘extremely’55. In design concept evaluation, raw data and information may be uncertain, imprecise and vague, which matches the kind of problem the 2TL model is designed for, and the evaluation data can be used as raw data in MADM methods such as TOPSIS and VIKOR.

The notions, terminology definitions, and related equations of 2TL are presented below.

Definition 1

Let \(S=\{{s}_{i}|i=0,1,2,\dots ,t\}\) be a linguistic term set (LTS) with cardinality \(t+1\), and let \(\beta \in \left[0,t\right]\) be a numeric result of an aggregation over the LTS. We have two values \(i=\mathrm{round}(\beta )\) and \(\alpha =\beta -i\), where \(\alpha \) is called a symbolic translation, \(i\in \{0,1,\dots ,t\}\) and \(\alpha \in [-0.5,0.5)\). \(\mathrm{round}(\cdot )\) is the usual rounding operation.

Definition 2

Following Herrera and Martínez52, let \(\beta \in \left[0,t\right]\) be the result of an aggregation of linguistic terms from \(S=\{{s}_{i}|i=0,1,2,\dots ,t\}\). The 2-tuple carries the same information as \(\beta \) and can be described as:

\(\Delta :\left[0,t\right]\to \overline{S},\quad \Delta (\beta )=({s}_{i},\alpha ),\quad i=\mathrm{round}(\beta ),\quad \alpha =\beta -i\)

where \(\overline{S}=S\times [-0.5,0.5)\) in expression (10), and \(\Delta \) is the function that obtains the 2-tuple linguistic information. Using the value 0 as the “symbolic translation”, a linguistic term \({s}_{i}\) converts to the 2-tuple \(({s}_{i}, 0)\). Herrera52 also gives a comparison rule for 2-tuples. Assume \(({s}_{i}, {\alpha }_{1})\) and \(({s}_{j}, {\alpha }_{2})\) are both 2-tuples, then

- If \(i<j\Rightarrow ({s}_{i}, {\alpha }_{1})< ({s}_{j}, {\alpha }_{2})\);
- If \(i=j\), then
  - if \({\alpha }_{1}={\alpha }_{2}\Rightarrow ({s}_{i}, {\alpha }_{1})=({s}_{j}, {\alpha }_{2})\);
  - if \({\alpha }_{1}<{\alpha }_{2}\Rightarrow ({s}_{i}, {\alpha }_{1})< ({s}_{j}, {\alpha }_{2})\);
  - if \({\alpha }_{1}>{\alpha }_{2}\Rightarrow ({s}_{i}, {\alpha }_{1})> ({s}_{j}, {\alpha }_{2})\).

Definition 3

Following Herrera and Martínez52, let \(S=\{{s}_{i}|i=0,1,2,\dots ,t\}\). \({\Delta }^{-1}\) is the function restoring the 2-tuple \(({s}_{i}, {\alpha }_{i})\) to its numerical value \(\beta \in \left[0,t\right]\subset R\), where

\({\Delta }^{-1}({s}_{i},{\alpha }_{i})=i+{\alpha }_{i}=\beta \)

Definition 4

Following Herrera and Martínez52, let \(S=\{{s}_{i}|i=0,1,2,\dots ,t\}\). The \(t\) 2-tuples are denoted by \(({s}_{1}, {\alpha }_{1})\) to \(({s}_{t}, {\alpha }_{t})\). The 2-tuple arithmetic mean (TAM) is given as:

where \(\alpha \in [-0.5,0.5)\) in Eq. (14).
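
Definitions 1 to 4 can be sketched in Python as follows. The function names are hypothetical, a 2-tuple \(({s}_{i},\alpha )\) is represented as the pair `(i, alpha)`, and the TAM is taken in its usual form (average the \(\beta \) values, then apply \(\Delta \)), which is an assumption of this sketch rather than a quotation of Eq. (14). Rounding is done with `floor(beta + 0.5)` because Python's built-in `round` rounds halves to the nearest even integer, which would violate \(\alpha \in [-0.5,0.5)\).

```python
import math


def to_two_tuple(beta, t):
    """Delta: map beta in [0, t] to (i, alpha) with alpha in [-0.5, 0.5)."""
    if not 0 <= beta <= t:
        raise ValueError("beta must lie in [0, t]")
    i = math.floor(beta + 0.5)  # round half up, so 2.5 -> 3 and alpha = -0.5
    return i, beta - i


def from_two_tuple(two_tuple):
    """Delta^{-1}: recover beta = i + alpha."""
    i, alpha = two_tuple
    return i + alpha


def two_tuple_mean(two_tuples, t):
    """2-tuple arithmetic mean: average the beta values, then apply Delta."""
    betas = [from_two_tuple(x) for x in two_tuples]
    return to_two_tuple(sum(betas) / len(betas), t)


a = to_two_tuple(2.5, t=8)   # (3, -0.5)
b = to_two_tuple(2.6, t=8)   # index 3, alpha close to -0.4
mean = two_tuple_mean([(2, 0.0), (3, 0.25), (4, -0.25)], t=8)
```

With this representation, the comparison rule given under Definition 2 coincides with Python's built-in lexicographic comparison of pairs, so `a < b` evaluates directly.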

Definition 5

Following ref. 63, the deviation between 2-tuples \(\left({s}_{i}, {\alpha }_{i}\right)\) and \(\left({s}_{j}, {\alpha }_{j}\right)\) can be described as:

Moreover, we can easily get the following results from Eq. (15):

- \(d\left(\left({s}_{i}, {\alpha }_{i}\right),\left({s}_{j}, {\alpha }_{j}\right)\right)=-d\left(\left({s}_{j}, {\alpha }_{j}\right),\left({s}_{i}, {\alpha }_{i}\right)\right)\);
- \(d\left(\left({s}_{i}, {\alpha }_{i}\right),\left({s}_{j}, {\alpha }_{j}\right)\right)=d\left(\left({s}_{i}, {\alpha }_{i}\right),\left({s}_{x}, {\alpha }_{x}\right)\right)+d\left(\left({s}_{x}, {\alpha }_{x}\right),\left({s}_{j}, {\alpha }_{j}\right)\right)\).

Definition 6

Following Herrera and Martínez52, let \(S=\{{s}_{i}|i=0,1,2,\dots ,t\}\), let the \(t\) 2-tuples be denoted by \(({s}_{1}, {\alpha }_{1})\) to \(({s}_{t}, {\alpha }_{t})\), and let \(\omega ={({\omega }_{1},\dots ,{\omega }_{t})}^{T}\) be the associated weight vector. The 2-tuple weighted average (TWA) operator is given as:

where \(\alpha \in [-0.5,0.5)\) in Eq. (16).
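
A weighted counterpart of the mean can be sketched in the same way. The function name is hypothetical, and the sketch assumes the common form of the operator in which the weighted sum of the \(\beta \) values is divided by the sum of the weights before \(\Delta \) is applied; if the weights already sum to 1, the division has no effect.

```python
import math


def two_tuple_weighted_average(two_tuples, weights, t):
    """Weighted average of 2-tuples given as (index, alpha) pairs."""
    if len(two_tuples) != len(weights):
        raise ValueError("one weight per 2-tuple is required")
    weight_sum = sum(weights)
    if weight_sum <= 0:
        raise ValueError("weights must have a positive sum")
    # Convert each 2-tuple to beta = index + alpha, then average with weights.
    beta = sum(w * (i + alpha) for (i, alpha), w in zip(two_tuples, weights))
    beta /= weight_sum
    if not 0 <= beta <= t:
        raise ValueError("aggregated value falls outside [0, t]")
    index = math.floor(beta + 0.5)  # round half up keeps alpha in [-0.5, 0.5)
    return index, beta - index


result = two_tuple_weighted_average([(2, 0.0), (4, 0.0)], [0.25, 0.75], t=8)
```

Here the aggregated value is \(0.25\cdot 2+0.75\cdot 4=3.5\), which maps to the 2-tuple \(({s}_{4},-0.5)\).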

Definition 7

Following Liu40, let \(S=\{{s}_{i}|i=0,1,2,\dots ,t\}\). A 2-tuple matrix is expressed as \(B={({b}_{ij})}_{m\times n}\), where \({b}_{ij}=\left({s}_{ij},{\alpha }_{ij}\right)\), \({s}_{ij}\in S\) and \({\alpha }_{ij}\in [-0.5,0.5)\). Let \(\mathrm{Cov}(a,b)\) be the covariance between \(a\) and \(b\), let the 2-tuple \(\overline{{b }_{j}}=\left(\overline{{s }_{j}},\overline{{\alpha }_{j}}\right)\), and let \(d[\left({s}_{i}, {\alpha }_{1}\right),\left({s}_{j}, {\alpha }_{2}\right)]\) be the deviation between \(\left({s}_{i}, {\alpha }_{1}\right)\) and \(\left({s}_{j}, {\alpha }_{2}\right)\). Then

When \(j=k\), let \({\sigma }_{j}^{2}\) be the variance of \({b}_{j}\); then we have \({\sigma }_{j}^{2}=\mathrm{Cov}\left({b}_{j},{b}_{j}\right)\). The equation above can be converted to:

where \(j=1,2,\dots ,n\).
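
As a rough illustration of the column variance in Definition 7, the following Python sketch computes \({\sigma }_{j}^{2}\) for each column of a small 2-tuple matrix. Two points are assumptions of the sketch and are not confirmed by the text: the deviation between two 2-tuples is taken to be the difference of their \({\Delta }^{-1}\) values, and the variance is the population form (divided by \(m\)). The function name is hypothetical.

```python
def column_variances(matrix):
    """Per-column variance of an m x n matrix of 2-tuples (index, alpha).

    Assumptions: deviation = difference of beta values (beta = index + alpha),
    and the population variance (divisor m) is used.
    """
    m = len(matrix)
    n = len(matrix[0])
    betas = [[index + alpha for (index, alpha) in row] for row in matrix]
    variances = []
    for j in range(n):
        column = [betas[i][j] for i in range(m)]
        mean = sum(column) / m
        variances.append(sum((value - mean) ** 2 for value in column) / m)
    return variances


# Three experts (rows) rating two attributes (columns).
B = [
    [(2, 0.0), (5, 0.0)],
    [(4, 0.0), (5, 0.0)],
    [(3, 0.0), (5, 0.0)],
]
variances = column_variances(B)
```

The first column has values 2, 4 and 3 around a mean of 3, giving a variance of 2/3; the experts agree completely on the second column, so its variance is 0.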

Computing process

The computing process of the two-layer determination method is described as:

Assume \(S=\{{s}_{i}|i=0,1,2,\dots ,u\}\) is an LTS. The criteria (attribute) group and the expert group are expressed as \(C=\{{c}_{1},{c}_{2},\dots ,{c}_{n}\}\) and \(E=\{{e}_{1},{e}_{2},\dots ,{e}_{m}\}\) respectively. \(A=\{{A}_{1},\dots ,{A}_{l}\}\) represents \(l\) alternatives. The experts (E) are assigned to evaluate the alternatives (A) according to the criteria (C), giving their evaluations as linguistic terms from the LTS (S). For expert \({e}_{k}\), the decision matrix \({X}_{k}\) is described as:

where \(i,j\) and \(k\) represent the index of the alternative (\({A}_{i}\)), criterion (\({c}_{j}\)) and expert (\({e}_{k}\)) respectively. In the two-layer method, \(m\) experts can be divided into \(f\) clusters, expressed as \(G=\{{G}_{1},{G}_{2},\dots ,{G}_{f}\}\). There are \({m}_{y}\) experts in the cluster \({G}_{y}\), where \(\sum\limits_{y=1}^{f}{m}_{y}=m\) and \(y\le f\).

Step 1: Minimized variance model in layer 2.

The minimized variance model relies on minimizing the total variance of the preferences in a cluster. The matrix \({X}_{k}\) can be converted into a 2-tuple matrix \({B}_{k}\) as follows:

where \({b}_{ij}^{k}\) is a 2-tuple.

In the second layer, assume expert \({e}_{k}\) is the \({p}^{th}\) expert in the cluster \({G}_{y}\), we denote by \({B}_{yp}\) the \({p}^{th}\) decision matrix in \({G}_{y}\). Hence, we can rewrite Eq. (20) as:

where \(p\le {m}_{y}\). To make the computing process simple, the matrix of an expert is converted to a vector:

where \(t=l\times n\). The decision matrix of cluster \({G}_{y}\) is

To get the optimized solution, the sum of the variances over the attributes must be minimized.

According to Definition 7, we can get the weight evaluation of cluster \({G}_{y}\) using Eqs. (24) to (27):

where \({\overline{F} }_{j}^{y}\) is the arithmetic mean of \({F}_{ij}^{y}\), \({\lambda }_{y}={({\lambda }_{1},{\lambda }_{2},\dots ,{\lambda }_{{m}_{y}})}^{T}\) is the weight vector of the experts in cluster \({G}_{y}\), and \(\sum\limits_{i=1}^{{m}_{y}}{\lambda }_{yi}=1\). The symbol * in this equation is defined as:

The sum of the variances is:

The optimization model is shown below:

Then, the optimal value of the weight variable \({\lambda }_{y}\) can be determined as \({\lambda }_{y}^{*}=({\lambda }_{1},{\lambda }_{2},\dots ,{\lambda }_{{m}_{y}})\).

Step 2: Entropy weight model in Layer 1.

In the first layer, the TAM operator is applied in the entropy weight model. As the basic equation of entropy, we have:

where the constant \(k\) is calculated as \(k=1/\mathrm{ln}n\). Let \(D={({d}_{yj})}_{f\times n}\) be the decision matrix of the clusters. The equation above can be converted to:

Let \(w={({w}_{1},{w}_{2},\dots ,{w}_{f})}^{T}\) be the weight vector of the clusters. We can compute the cluster weight by the equation below:

Step 3: Expert weight calculation.

With the within-cluster expert weight \({\lambda }_{yi}^{*}\) and the cluster weight \({w}_{y}\) determined, the overall weight \({w}_{e}\) of the \(i\)th expert in cluster \({G}_{y}\) can be obtained by multiplying the two weights.

Thus, the expert weight can be determined by the two-layer weight determination method.
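
Steps 2 and 3 can be sketched in Python as follows. The text fixes only the constant \(k=1/\mathrm{ln}n\) and the product rule of Step 3; the row normalization \({p}_{yj}={d}_{yj}/\sum_{j}{d}_{yj}\) and the rule \({w}_{y}\propto 1-{E}_{y}\) are taken from the common entropy weight method and are assumptions here, as are the function names and the numeric entries of `D`, which stand in for the \({\Delta }^{-1}\) values of the cluster decision matrix.

```python
import math


def entropy_cluster_weights(D):
    """Cluster weights from an f x n matrix of non-negative numeric scores.

    Assumed form: p = d / row_sum, E = -k * sum(p * ln p) with k = 1 / ln(n),
    and weight proportional to (1 - E). Fails if every row is uniform.
    """
    n = len(D[0])
    k = 1.0 / math.log(n)
    diversities = []
    for row in D:
        row_sum = sum(row)
        entropy = 0.0
        for value in row:
            p = value / row_sum
            if p > 0:  # the term p * ln(p) is taken as 0 when p == 0
                entropy -= k * p * math.log(p)
        diversities.append(1.0 - entropy)
    total = sum(diversities)
    return [d / total for d in diversities]


def overall_expert_weights(cluster_weights, within_cluster_weights):
    """Step 3: multiply each within-cluster weight by its cluster weight."""
    return [
        [w_y * lam for lam in lams]
        for w_y, lams in zip(cluster_weights, within_cluster_weights)
    ]


D = [[4.0, 5.0, 6.0], [1.0, 5.0, 9.0]]   # two clusters, three attributes
cluster_w = entropy_cluster_weights(D)
expert_w = overall_expert_weights(cluster_w, [[0.5, 0.5], [0.2, 0.3, 0.5]])
```

Because the within-cluster weights of each cluster sum to 1 and the cluster weights sum to 1, the overall expert weights also sum to 1 across all experts.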

Methodology

In design concept evaluation, attributes are selected from multiple dimensions, such as shape, color, ergonomics, material and manufacturing technology. The experts are categorized into clusters depending on their different backgrounds. Therefore, a two-layer expert weight determination is a good solution for design concept evaluation in NPD. However, there are still some drawbacks to this method. The complexity of different products varies, so experts in the consumer cluster may not be familiar with how the product works, and they may make judgements relying only on their experience. Moreover, if the experts’ weights depend solely on the objective method, the subjective preferences of the experts are ignored. Thus, an integrated two-layer expert weight determination approach is proposed, as shown in Fig. 7. In the first layer, we use a mixed weight determination method based on the AHP and the entropy weight model. In the second layer, the minimized variance model described above is applied. After that, the final expert weight is determined by combining the two layers’ results. The proposed method is described below.

Step 1: In this step, a nine-term LTS \(S\) is constructed according to the 2TL environment.

Then, the experts are asked to give their preferences using the linguistic terms in the LTS. The criteria (attribute) group and the expert group are expressed as \(C=\{{c}_{1},{c}_{2},\dots ,{c}_{n}\}\) and \(E=\{{e}_{1},{e}_{2},\dots ,{e}_{m}\}\) respectively. \(A=\{{A}_{1},\dots ,{A}_{l}\}\) represents \(l\) alternatives. Then, \(m\) experts can be divided into \(f\) clusters, expressed as \(G=\{{G}_{1},{G}_{2},\dots ,{G}_{f}\}\). There are \({m}_{y}\) experts in the cluster \({G}_{y}\), where \(\sum\limits_{y=1}^{f}{m}_{y}=m\) and \(y\le f\).

The experts are assigned to give their evaluation using linguistic terms. As for expert \({e}_{k}\), the decision matrix \({A}_{k}\) is described as:

where \(i,j\) and \(k\) represent the index of alternative (\({A}_{i}\)), criteria (\({\mathrm{c}}_{j}\)) and expert (\({\mathrm{e}}_{k}\)), respectively. The matrix \({A}_{k}\) can also be shown with 2-tuple:

where \({b}_{ij}^{k}\) is a 2-tuple. In the second layer, assume expert \({e}_{k}\) is the \({p}^{th}\) expert in the cluster \({G}_{y}\), we denote by \({B}_{yp}\) the \({p}^{th}\) decision matrix in \({G}_{y}\). Hence, we can rewrite Eq. (33) as:

where \(p\le {m}_{y}\). To make the computing process simple, the matrix of an expert is converted to a vector:

where \(t=l\times n\). The decision matrix of the designer cluster is:

Thus, the decision matrix of layer 2 can be represented as \(F={({F}^{1}, {F}^{2},\dots , {F}^{f})}^{T}\).
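
The flattening described in Step 1 can be sketched as follows. This is a minimal illustration, assuming the 2-tuples have already been converted to numeric values and that the matrix is flattened row by row; the function names and the sample scores are hypothetical and not taken from the paper.

```python
from typing import List

Matrix = List[List[float]]


def flatten(matrix: Matrix) -> List[float]:
    """Row-major flattening of an l x n decision matrix into a vector of length t = l * n."""
    return [value for row in matrix for value in row]


def cluster_matrix(expert_matrices: List[Matrix]) -> Matrix:
    """Stack the flattened vectors of all experts in one cluster, one row per expert."""
    return [flatten(m) for m in expert_matrices]


# Hypothetical cluster with two experts, l = 2 alternatives and n = 3 criteria.
designers = [
    [[6.0, 5.0, 7.0], [4.0, 6.0, 5.0]],
    [[5.0, 5.0, 6.0], [4.0, 7.0, 5.0]],
]
F_designers = cluster_matrix(designers)  # 2 rows (experts) x 6 columns (t = 2 * 3)
```

Each row of `F_designers` then plays the role of one expert's vector in the cluster matrix used by the second layer.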

Step 2: The weights of the experts within each cluster are calculated by the minimized variance model in the second layer.

Generally, experts in the same cluster have similar backgrounds, so they are likely to have similar preferences for the alternatives. The variance of the attribute evaluations within each cluster should therefore be minimized, which means making the sum of the variances over the attributes as small as possible. Using Eqs. (37) to (40), we can get the weight evaluation of the designer cluster:

where \({\overline{F} }_{j}^{y}\) is the arithmetic mean of \({F}_{ij}^{y}\), and \({\lambda }_{yi}={({\lambda }_{1},{\lambda }_{2},\dots ,{\lambda }_{x})}^{T}\) is the weight vector of the experts in cluster \({G}_{y}\), whose components sum to 1. The symbol * in this equation is defined as:

The sum of the variances is:

The optimization model is shown below:

Then, the optimal value of the variable \({\lambda }_{y}\) can be determined as \({\lambda }_{y}^{*}=({\lambda }_{1},{\lambda }_{2},\dots ,{\lambda }_{x})\). The optimal weights of the remaining clusters in the second layer are determined in the same way.
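
To illustrate the idea of Step 2, the sketch below minimizes a variance-type objective over the expert weights of one cluster. It rests on several assumptions that are not confirmed by the text: the objective is taken to be the sum over attributes of the squared deviations of the weighted scores \({\lambda }_{i}{F}_{ij}\) from their mean, the weights are assumed non-negative, and a simple projected gradient descent stands in for a dedicated solver. All function names are hypothetical.

```python
from typing import List


def project_to_simplex(v: List[float]) -> List[float]:
    """Euclidean projection onto {x : x_i >= 0, sum(x) = 1}."""
    u = sorted(v, reverse=True)
    cumulative, theta = 0.0, 0.0
    for i, u_i in enumerate(u, start=1):
        cumulative += u_i
        t = (cumulative - 1.0) / i
        if u_i - t > 0:
            theta = t
    return [max(x - theta, 0.0) for x in v]


def min_variance_weights(F: List[List[float]], iterations: int = 5000) -> List[float]:
    """Projected gradient descent on the ASSUMED objective
    sum_j sum_i (lam_i * F[i][j] - mean_j)^2,
    where mean_j = (1/x) * sum_i lam_i * F[i][j]."""
    x, t = len(F), len(F[0])
    # Step size from an upper bound on the curvature of this quadratic objective.
    bound = 2.0 * sum(max(F[i][j] ** 2 for i in range(x)) for j in range(t))
    step = 1.0 / bound if bound > 0 else 1.0
    lam = [1.0 / x] * x  # start from equal weights
    for _ in range(iterations):
        grad = [0.0] * x
        for j in range(t):
            scaled = [lam[i] * F[i][j] for i in range(x)]
            mean_j = sum(scaled) / x
            for i in range(x):
                grad[i] += 2.0 * (scaled[i] - mean_j) * F[i][j]
        lam = project_to_simplex([lam[i] - step * grad[i] for i in range(x)])
    return lam


# Hypothetical cluster: the second expert scores everything twice as high as the first.
weights = min_variance_weights([[2.0, 2.0], [4.0, 4.0]])
```

In this toy case the weighted scores coincide when the first expert receives twice the weight of the second, so the iteration approaches the weights (2/3, 1/3). For real data, a quadratic programming solver would be the more usual choice.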

Step 3: Next, the cluster weight in the first layer is determined by a hybrid method. In this step, the entropy weight model is used to determine the objective weight of the clusters.

In the first layer, the TAM operator is applied in the entropy weight model. For the cluster \({G}_{y}\), the basic entropy equation gives:

where the constant k is calculated as \(k=1/\mathrm{ln}n\). Let \(D={({d}_{Dj})}_{f\times n}\) be the decision matrix of the clusters. The equation above can then be converted to:

Let \({\mathrm{w}}^{o}={({w}_{1}^{o},{w}_{2}^{o},\dots ,{w}_{f}^{o})}^{T}\) be the weight vector of clusters. Then

The resulting \({\mathrm{w}}^{o}\) is the weight vector of the clusters obtained by the objective method.
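
The entropy weighting in Step 3 can be sketched as follows. This is a minimal illustration of the standard entropy weight method with \(k=1/\mathrm{ln}n\), not a transcription of the paper's equations: normalizing each row of D to shares and weighting clusters by the divergence 1 − E are assumptions, and the sample matrix is hypothetical.

```python
import math
from typing import List


def entropy_weights(D: List[List[float]]) -> List[float]:
    """Objective weights for the rows (clusters) of an f x n decision matrix."""
    n = len(D[0])
    k = 1.0 / math.log(n)  # constant k = 1 / ln(n); requires n > 1
    divergences = []
    for row in D:
        total = sum(row)  # assumed positive
        shares = [d / total for d in row]
        # Terms with p = 0 contribute nothing (limit of p * ln p as p -> 0).
        entropy = -k * sum(p * math.log(p) for p in shares if p > 0)
        divergences.append(1.0 - entropy)
    norm = sum(divergences)  # assumed non-zero, i.e. not every row is uniform
    return [d / norm for d in divergences]


# Hypothetical aggregated scores of f = 3 clusters on n = 4 criteria.
D = [
    [6.0, 5.0, 7.0, 6.0],
    [5.0, 2.0, 8.0, 3.0],
    [4.0, 4.0, 4.0, 5.0],
]
w_objective = entropy_weights(D)
```

A cluster whose scores are spread almost evenly over the criteria has entropy close to 1 and therefore receives a small weight under this scheme.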

Step 4: A subjective method is also used in cluster weight determination. The experts assess the relative importance of the clusters by the AHP method, giving pairwise comparisons of their preferences among the clusters.

First, the experts compare the clusters \({G}_{1},{G}_{2},\dots ,{G}_{f}\) pairwise. Every expert makes a linguistic comparison between clusters by individual judgement using specified terms (Very strong importance / Strong importance / Definite importance / Weak importance / Indifferent). The comparisons made by experts in \({G}_{y}\) between clusters \({G}_{\alpha }\) and \({G}_{\beta }\) are converted into a gradation index by the geometric scale shown in Table 3. The comparisons can be recorded in a comparative table in the form of Table 3, where \({G}_{y}^{x}({G}_{\alpha }/{G}_{\beta })\) represents the comparison of \({G}_{\alpha }/{G}_{\beta }\) made by the \({x}^{th}\) expert in cluster \({G}_{y}\). After that, the arithmetic mean of the comparisons in each cluster is computed, as shown in Table 4. Let \({\delta }_{\alpha \beta y}\) denote the arithmetic mean of the comparisons \({G}_{\alpha }/{G}_{\beta }\) made by the experts in cluster \({G}_{y}\); then \({\delta }_{\alpha \beta y}={G}_{y}({G}_{\alpha }/{G}_{\beta })\).

The comparison matrix of clusters \(\{{r}_{\alpha \beta y}\}\) can be determined by the equation below:

where \(\upgamma \) represents a scale parameter, commonly set to \(\mathrm{ln}2\). Next, the approximate vector \(p\) of stimulus values is determined by the logarithmic least-squares method of Lootsma47. The vector \(p\) minimizes

where \({p}_{\alpha }\) and \({p}_{\beta }\) represent the relative power of \({G}_{\alpha }\) and \({G}_{\beta }\) as judged by \({G}_{y}\), respectively. Letting \({q}_{\alpha \beta y}=\mathrm{ln}{r}_{\alpha \beta y}\) and \({w}_{\alpha }=\mathrm{ln}{p}_{\alpha }\), expression (45) can be written as:

The associated set of normal equations in \({w}_{\alpha }\) is:

where \(\alpha =1,2,\dots ,f\), and \(\alpha \) has the same range in the following equations in this part. \({N}_{\alpha \beta }\) denotes the cardinality of \({G}_{\alpha \beta }\). According to Lootsma, we have \({N}_{\alpha \beta }=f-2\). With \((f-1)\) clusters compared by each expert, the maximum number of pairwise comparisons is \((f-1)(f-2)/2\), and Eq. (47) can be rewritten as:

The equation can be simplified as:

For any clusters \({G}_{\alpha }\) and \({G}_{\beta }\), from Table 2, we have \({q}_{\alpha \beta y}=-{q}_{\beta \alpha y}\); moreover, \({G}_{\alpha \alpha }\) is empty and \({q}_{\alpha \alpha y}=0\).

Assuming each expert made all the comparisons and \(\sum\limits_{\beta =1,\beta \ne \alpha }^{f}{\mathrm{w}}_{k}=0\), the equation can be written in the following form:

Thus, the weight \({p}_{\alpha }\) of the decision makers can be computed by Eq. (48).

After normalization with the equation below, the subjective expert weight \({w}_{i}^{s}\) can be determined.
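
The chain from averaged comparisons to subjective cluster weights in Step 4 can be sketched as follows. This is a hedged illustration rather than the paper's exact equations: it uses \({q}_{\alpha \beta y}=\upgamma {\delta }_{\alpha \beta y}\) and \({w}_{\alpha }=\mathrm{ln}{p}_{\alpha }\) from the text, assumes every pair is judged by the same number of clusters, assumes the \({w}_{\alpha }\) sum to zero, and assumes the final normalization divides each \({p}_{\alpha }\) by their sum. The data layout `delta[y][a][b]` and the function name are hypothetical.

```python
import math
from typing import List, Optional

# delta[y][a][b]: mean gradation index of G_a versus G_b as judged by cluster G_y,
# or None where that comparison is not permitted.
Judgements = List[List[List[Optional[float]]]]


def subjective_cluster_weights(delta: Judgements, gamma: float = math.log(2)) -> List[float]:
    f = len(delta)
    w = []
    for a in range(f):
        total, judges_per_pair = 0.0, 0
        for b in range(f):
            if b == a:
                continue
            values = [delta[y][a][b] for y in range(f) if delta[y][a][b] is not None]
            judges_per_pair = len(values)  # assumed identical for every pair
            total += gamma * sum(values)   # q = gamma * delta
        # Solution of the normal equations under the assumption sum(w) = 0.
        w.append(total / (f * judges_per_pair))
    p = [math.exp(w_a) for w_a in w]       # p_a = exp(w_a)
    return [p_a / sum(p) for p_a in p]     # assumed normalization to sum 1


NA = None
# Hypothetical, mutually consistent judgements for f = 3 clusters;
# each pair is judged only by the cluster not involved in it.
delta = [
    [[NA, NA, NA], [NA, NA, 2.0], [NA, -2.0, NA]],   # judged by G1
    [[NA, NA, 4.0], [NA, NA, NA], [-4.0, NA, NA]],   # judged by G2
    [[NA, 2.0, NA], [-2.0, NA, NA], [NA, NA, NA]],   # judged by G3
]
w_subjective = subjective_cluster_weights(delta)
```

With \(\upgamma =\mathrm{ln}2\), a gradation index of 2 corresponds to a power ratio of 4, so the consistent toy data above yield powers in the ratio 4 : 1 : 0.25 before normalization.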

Step 5: Steps 3 and 4 determined the objective and subjective cluster weights. In this step, they are merged into the combined weight of the clusters using the equation below.

where \(\mu \in [0,1]\) is the adjusting coefficient, which represents the superiority of the subjective method over the objective method in the combination. When \(\mu >0.5\), the subjective determination of the DM group dominates; when \(\mu <0.5\), the objective method dominates; and when \(\mu =0.5\), both methods contribute equally to the cluster weight determination.
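
A small sketch of the combination in Step 5 is given here, assuming the combined weight is the convex combination \(\mu {w}^{s}+(1-\mu ){w}^{o}\), which matches the described role of \(\mu \) but is not confirmed by a surviving equation. The objective weights are those reported in the case study; the subjective weights and the function name are hypothetical.

```python
from typing import List


def combine_cluster_weights(w_subjective: List[float],
                            w_objective: List[float],
                            mu: float = 0.5) -> List[float]:
    """Assumed form: w = mu * w_subjective + (1 - mu) * w_objective."""
    if not 0.0 <= mu <= 1.0:
        raise ValueError("mu must lie in [0, 1]")
    return [mu * s + (1.0 - mu) * o for s, o in zip(w_subjective, w_objective)]


w_o = [0.424, 0.373, 0.203]  # objective cluster weights reported in the case study
w_s = [0.5, 0.3, 0.2]        # hypothetical subjective cluster weights
combined = combine_cluster_weights(w_s, w_o, mu=0.5)
```

Because both inputs sum to 1, the combined weights also sum to 1 for any \(\mu \) in the allowed range.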

Step 6: Thus, the final weight of each expert \({\upomega }_{ui}\) can be computed by:
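
As a rough illustration of Step 6, the sketch below assumes that the final weight of an expert is the product of the combined weight of the expert's cluster and the expert's weight within that cluster. This product form is an assumption based on the description of combining the two layers; the function name and the numbers are hypothetical.

```python
from typing import List


def final_expert_weights(cluster_weights: List[float],
                         within_cluster_weights: List[List[float]]) -> List[List[float]]:
    """Assumed form: omega[y][i] = w[y] * lambda[y][i]."""
    return [[w_y * lam for lam in lams]
            for w_y, lams in zip(cluster_weights, within_cluster_weights)]


# Hypothetical example: three clusters with 2, 2 and 1 experts.
omega = final_expert_weights(
    [0.5, 0.3, 0.2],
    [[0.6, 0.4], [0.5, 0.5], [1.0]],
)
```

If the cluster weights and each cluster's internal weights sum to 1, the final weights over all experts sum to 1 as well.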

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Informed consent

No informed consent was required, because the data are anonymized.

Case study

In our study, the new two-layer expert weight determination method is applied to the optimization of the cabin design plan for a mid-sized cruise ship. Three design schemes are proposed before the evaluation, and one of the three alternatives must be selected. To make the decision, a ten-attribute criteria index, shown in Table 5, is used to assess the alternatives.

A 30-expert group is formed with 10 cruise ship interior designers, 10 cruise ship manufacturing specialists and 10 customers who have taken more than two cruise trips. The raw data are shown in Appendix 1.

First, according to Eqs. (32) to (40), a minimized variance model is established. The optimized weights λ are then determined with the software Lingo. The corresponding weights of the experts in each group are shown in Table 6.

After that, according to the TAM operator from Definition 3, the decision matrix D is shown in Table 7.

We can calculate the objective weight of the clusters \({\mathrm{w}}^{o}=(0.424, 0.373, 0.203)\).

The pairwise judgements about the importance of the clusters are taken from Appendix 2. The data in Table 8 are the arithmetic means calculated from the subjective pairwise judgements using the weighting method in each cluster. In the table, a cell is left blank when a cluster is compared against itself. In other cells, the symbol “-” means that the corresponding row or column is not permitted to be compared with others. The normal equation can be expressed as:

Since \({\mathrm{q}}_{\alpha \beta y}=\upgamma {\updelta }_{\alpha \beta y}\), Eq. (55) can be simplified as:

For DC, \(\alpha =1\), we have

For MC, \(\alpha =2\), we have

For CC, \(\alpha =3\), we have

Thus, we can compute \({\mathrm{w}}_{\alpha }\) as

The weight of the clusters can be calculated by Eqs. (50) to (52). In this case, the subjective weights of clusters are:

The integrated weight of the clusters can be computed by Eq. (53), and when \(\mu =0.5\), the weights of clusters are:

The final weights of each expert w are shown in Table 9.

Comparison of methods with and without using weight determination

Among MADM methods, TOPSIS is widely used in supply chain management, design concept evaluation, business and marketing management, and some other fields. To illustrate the influence of the expert weight, a comparative analysis is made using the TOPSIS method with and without expert weight determination.

The steps of the TOPSIS method are shown here.

Step 1: Normalize the decision matrix.

Let \(\mathrm{X}=\left\{{x}_{ij}\right\}\) be a decision matrix with \(m\) alternatives and \(n\) criteria, where \(1\le i\le m\) and \(1\le j\le n\). \({r}_{ij}\) represents an element of the normalized matrix.

Step 2: Compute the matrix with criteria weight.

where \({w}_{j}\) represents the weight of criterion \(j\).

Step 3: Calculate the positive ideal solutions (PIS)/negative ideal solutions (NIS).

where \({v}_{j}^{+}=\max_{i}{v}_{ij}\) for a benefit-type criterion and \({v}_{j}^{+}=\min_{i}{v}_{ij}\) for a cost-type criterion, while \({v}_{j}^{-}=\min_{i}{v}_{ij}\) for a benefit-type criterion and \({v}_{j}^{-}=\max_{i}{v}_{ij}\) for a cost-type criterion.

Step 4: Compute the separation measures of alternatives.

Let \({S}_{i}^{+}\) / \({S}_{i}^{-}\) be the distance between alternative \(i\) and the PIS/NIS.

Step 5: Calculate the closeness index (CI) value.

The rank of the alternatives can be determined by comparing \(CIs\). The alternative with the highest \(CI\) is the best solution.
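
The five TOPSIS steps listed above can be sketched as follows. This is the common textbook form of TOPSIS, assuming vector normalization in Step 1 and Euclidean distances in Step 4; the function name and the sample data are hypothetical.

```python
import math
from typing import List


def topsis(X: List[List[float]], weights: List[float], benefit: List[bool]) -> List[float]:
    """Return the closeness index CI of each alternative (row of X)."""
    m, n = len(X), len(X[0])
    # Step 1: vector normalization (assumed), r_ij = x_ij / sqrt(sum_i x_ij^2).
    norms = [math.sqrt(sum(X[i][j] ** 2 for i in range(m))) for j in range(n)]
    # Step 2: weighted normalized matrix, v_ij = w_j * r_ij.
    V = [[weights[j] * X[i][j] / norms[j] for j in range(n)] for i in range(m)]
    # Step 3: positive and negative ideal solutions per criterion.
    columns = list(zip(*V))
    pis = [max(c) if benefit[j] else min(c) for j, c in enumerate(columns)]
    nis = [min(c) if benefit[j] else max(c) for j, c in enumerate(columns)]
    closeness = []
    for row in V:
        # Step 4: Euclidean separation from the PIS and the NIS (assumed metric).
        s_plus = math.sqrt(sum((v - p) ** 2 for v, p in zip(row, pis)))
        s_minus = math.sqrt(sum((v - q) ** 2 for v, q in zip(row, nis)))
        # Step 5: closeness index; undefined if all alternatives are identical.
        closeness.append(s_minus / (s_plus + s_minus))
    return closeness


# Hypothetical data: 3 alternatives, a benefit-type and a cost-type criterion.
scores = [[7.0, 300.0], [8.0, 450.0], [6.0, 250.0]]
ci = topsis(scores, weights=[0.6, 0.4], benefit=[True, False])
best = max(range(len(ci)), key=lambda i: ci[i])  # index of the top-ranked alternative
```

To compare rankings with and without expert weights, the same function would be applied to two decision matrices: one aggregated with the determined expert weights and one aggregated with equal weights.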

The criteria weights are determined by the subjective method, shown in Table 10.

According to the TOPSIS method, the relevant quantities are calculated. The values of \({S}_{i}^{+}\), \({S}_{i}^{-}\) and \(CI\) of the alternatives with and without expert weights are shown in Table 11.

From Table 11 and Fig. 8, it is clear that the ranking of the alternatives differs with and without expert weights. In Fig. 8, both methods show that alternative \({A}_{1}\) is the least ideal option, far inferior to the other two. However, when the expert weight is considered, the most feasible alternative changes from \({A}_{3}\) to \({A}_{2}\). The variation of \({S}_{i}^{+}\), \({S}_{i}^{-}\) and \(CI\) with and without expert weight is also evident from Table 11.

The difference is caused by the weight of experts. We can infer from the comparative analysis that if the gap between alternatives is large, the expert weight determination may not influence the final decision. If the gap exists but is not particularly large, the expert weights can change the ranking and should be given more consideration in decision making. As this section shows, ignoring the expert weight may cause the best alternative to be missed in real-life projects.

Conclusions

Expert weight determination is a critical part of design concept evaluation that is usually ignored by decision makers. Proper weight determination can make the decision-making process more accurate. This paper presented an integrated two-layer expert weight determination method for complex design concept evaluation. In some complex problems, experts are divided into clusters by certain characteristics, and the weight of experts can be calculated at the cluster level (layer 1) and the individual level (layer 2). In the first layer, a hybrid weight determination method combining the entropy weight method and the AHP method is proposed to weight the clusters. In the second layer, the minimized variance model is used to determine the individual weights in each cluster. A case study on cruise ship cabin design was carried out using the proposed method. The comparison of results showed that expert weight determination is essential in a complex product design process and can change the outcome of the evaluation.

References

Song, W., Ming, X. & Wu, Z. An integrated rough number-based approach to design concept evaluation under subjective environments. J. Eng. Des. 24, 320–341 (2013).

Herbeth, N. & Dessalles, S. Brand and design effects on new product evaluation at the concept stage. Int. J. Trends Market. Manag. 2, 2 (2017).

Zheng, X., Ritter, S. & Miller, S. How concept selection tools impact the development of creative ideas in engineering design education. J. Mech. Des. 2, 2 (2018).

Geng, X., Chu, X. & Zhang, Z. A new integrated design concept evaluation approach based on vague sets. Expert Syst. Appl. 37, 6629–6638 (2010).

Kabak, Ö. & Ervural, B. Multiple attribute group decision making: A generic conceptual framework and a classification scheme. Knowl.-Based Syst. 123, 13–30 (2017).

King, A. M. & Sivaloganathan, S. Development of a methodology for concept selection in flexible design strategies. J. Eng. Des. 10, 329–349 (1999).

Si, G., Cai, W., Wang, S. & Li, X. Prediction of relatively high-energy seismic events using spatial–temporal parametrisation of mining-induced seismicity. Rock Mech. Rock Eng. 53, 5111–5132 (2020).

Cai, W. et al. A principal component analysis/fuzzy comprehensive evaluation model for coal burst liability assessment. Int. J. Rock Mech. Min. Sci. 100, 62–69 (2016).

Zhe, C., Kai, S., Qing, Z. & Neng, C. Evaluation of office chair design using TOPSIS-PSI method (in Chinese). J. For. Eng. 5(30), 179–184. https://doi.org/10.13360/j.issn.2096-1359.201912003 (2020).

Gul, M., Celik, E., Aydin, N., Gumus, A. T. & Guneri, A. F. A state of the art literature review of VIKOR and its fuzzy extensions on applications. Appl. Soft Comput. 46, 60–89 (2016).

Govindan, K. & Jepsen, M. B. ELECTRE: A comprehensive literature review on methodologies and applications. Eur. J. Oper. Res. 2, 2 (2016).

Behzadian, M., Otaghsara, S. K., Yazdani, M. & Ignatius, J. A state-of-the-art survey of TOPSIS applications. Expert Syst. Appl. 39, 13051–13069 (2012).

Choo, E. U., Schoner, B. & Wedley, W. C. Interpretation of criteria weights in multicriteria decision making. Comput. Ind. Eng. 37, 527–541 (1999).

Carlsson, C. & Fullér, R. Fuzzy multiple criteria decision making: Recent developments. Fuzzy Sets Syst. 78, 139–153 (1996).

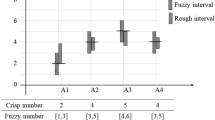

Zhai, L.-Y., Khoo, L.-P. & Zhong, Z.-W. Design concept evaluation in product development using rough sets and grey relation analysis. Expert Syst. Appl. 36, 7072–7079. https://doi.org/10.1016/j.eswa.2008.08.068 (2009).

Zhu, G.-N., Hu, J., Qi, J., Gu, C.-C. & Peng, Y.-H. An integrated AHP and VIKOR for design concept evaluation based on rough number. Adv. Eng. Inform. 29, 408–418. https://doi.org/10.1016/j.aei.2015.01.010 (2015).

Tiwari, V., Jain, P. K. & Tandon, P. Product design concept evaluation using rough sets and VIKOR method. Adv. Eng. Inform. 30, 16–25. https://doi.org/10.1016/j.aei.2015.11.005 (2016).

Shidpour, H., Da Cunha, C. & Bernard, A. Group multi-criteria design concept evaluation using combined rough set theory and fuzzy set theory. Expert Syst. Appl. 64, 633–644. https://doi.org/10.1016/j.eswa.2016.08.022 (2016).

Zhu, G., Hu, J. & Ren, H. A fuzzy rough number-based AHP-TOPSIS for design concept evaluation under uncertain environments. Appl. Soft Comput. 91, 106228 (2020).

Yang Mei, X. M. Furniture image evaluation method based on user’s multi-dimensional sensory needs (in Chinese). Pack. Eng. 8, 111–117 (2020).

Van den Honert, R. Decisional power in group decision making: a note on the allocation of group members’ weights in the multiplicative AHP and SMART. Group Decis. Negot. 10, 275–286 (2001).

Koksalmis, E. & Kabak, Ö. Deriving decision makers’ weights in group decision making: An overview of objective methods. Inf. Fusion 49, 146–160 (2019).

Ramanathan, R. & Ganesh, L. Group preference aggregation methods employed in AHP: An evaluation and an intrinsic process for deriving members’ weightages. Eur. J. Oper. Res. 79, 249–265 (1994).

Barzilai, J. & Lootsma, F. Power relations and group aggregation in the multiplicative AHP and SMART. J. Multi-Criter. Decis. Anal. 6, 155–165 (1997).

Azadfallah, M. The extraction of expert weights from pair wise comparisons in Delphi method. J. Appl. Inf. Sci. 3, 2 (2015).

Bodily, S. E. Note—A delegation process for combining individual utility functions. Manag. Sci. 25, 1035–1041 (1979).

Yue, Z. Deriving decision maker’s weights based on distance measure for interval-valued intuitionistic fuzzy group decision making. Expert Syst. Appl. 38, 11665–11670 (2011).

Yue, Z. Approach to group decision making based on determining the weights of experts by using projection method. Appl. Math. Model. 36, 2900–2910 (2012).

Yue, Z. An extended TOPSIS for determining weights of decision makers with interval numbers. Knowl.-Based Syst. 24, 146–153 (2011).

Yang, Q., Du, P.-A., Wang, Y. & Liang, B. A rough set approach for determining weights of decision makers in group decision making. PLoS ONE 12, e0172679 (2017).

Wang, F. & Wan, S. Possibility degree and divergence degree based method for interval-valued intuitionistic fuzzy multi-attribute group decision making. Expert Syst. Appl. 141, 112929 (2020).

Jiang, Z. & Wang, Y. Multiattribute group decision making with unknown decision expert weights information in the framework of interval intuitionistic trapezoidal fuzzy numbers. Math. Probl. Eng. 2014, 2 (2014).

Xu, X., Zhang, Q. & Chen, X. Consensus-based non-cooperative behaviors management in large-group emergency decision-making considering experts’ trust relations and preference risks. Knowl.-Based Syst. 190, 105108 (2020).

Pang, J., Liang, J. & Song, P. An adaptive consensus method for multi-attribute group decision making under uncertain linguistic environment. Appl. Soft Comput. 58, 339–353. https://doi.org/10.1016/j.asoc.2017.04.039 (2017).

Xu, Z. & Cai, X. Minimizing group discordance optimization model for deriving expert weights. Group Decis. Negot. 21, 863–875 (2012).

Dong, Y., Zhang, H. & Herrera-Viedma, E. Integrating experts’ weights generated dynamically into the consensus reaching process and its applications in managing non-cooperative behaviors. Decis. Support Syst. 84, 1–15 (2016).

Ilieva, G. Fuzzy group full consistency method for weight determination. Cybern. Inf. Technol. 20, 50–58 (2020).

Liu, W., Lin, Z., Wen, F. & Ledwich, G. Analysis and optimisation of the preferences of decision-makers in black-start group decision-making. IET Gen. Trans. Distrib. 7, 14–23 (2013).

Chen, S.-M., Cheng, S.-H. & Lin, T.-E. Group decision making systems using group recommendations based on interval fuzzy preference relations and consistency matrices. Inf. Sci. 298, 555–567 (2015).

Liu, B., Shen, Y., Chen, Y., Chen, X. & Wang, Y. A two-layer weight determination method for complex multi-attribute large-group decision-making experts in a linguistic environment. Inf. Fusion 23, 156–165 (2015).

Xu, X. H., Sun, Q., Pan, B. & Liu, B. Two-layer weight large group decision-making method based on multi-granularity attributes. J. Intell. Fuzzy Syst. 33, 1797–1807 (2017).

Qi, X., Liang, C. & Zhang, J. Generalized cross-entropy based group decision making with unknown expert and attribute weights under interval-valued intuitionistic fuzzy environment. Comput. Ind. Eng. 79, 52–64 (2015).

Liu, W. & Li, L. An approach to determining the integrated weights of decision makers based on interval number group decision matrices. Knowl.-Based Syst. 90, 92–98 (2015).

Jabeur, K., Martel, J.-M. & Khélifa, S. B. A distance-based collective preorder integrating the relative importance of the group’s members. Group Decis. Negot. 13, 327–349 (2004).

Crilly, N., Moultrie, J. & Clarkson, P. J. Seeing things: Consumer response to the visual domain in product design. Des. Stud. 25, 547–577 (2004).

Song, W., Niu, Z. & Zheng, P. Design concept evaluation of smart product-service systems considering sustainability: An integrated method. Comput. Ind. Eng. https://doi.org/10.1016/j.cie.2021.107485 (2021).

Lootsma, F. A. Scale sensitivity in the multiplicative AHP and SMART. J. Multi-Criter. Decis. Anal. 2, 87–110 (1993).

Zadeh, L. A. Fuzzy sets. Inf. Control 8, 338–353 (1965).

Pawlak, Z. Rough set theory and its applications to data analysis. Cybern. Syst. 29, 661–688 (1998).

Golinska, P., Kosacka, M., Mierzwiak, R. & Werner-Lewandowska, K. Grey decision making as a tool for the classification of the sustainability level of remanufacturing companies. J. Clean. Prod. 105, 28–40 (2015).

Chen, Z., Zhong, P., Liu, M., Sun, H. & Shang, K. A novel hybrid approach for product concept evaluation based on rough numbers, Shannon entropy and TOPSIS-PSI. J. Intell. Fuzzy Syst. 2, 12087–12099 (2022).

Herrera, F. & Martínez, L. A 2-tuple fuzzy linguistic representation model for computing with words. IEEE Trans. Fuzzy Syst. 8, 746–752 (2000).

Martínez, L., Rodriguez, R. M. & Herrera, F. The 2-tuple Linguistic Model 23–42 (Springer, 2015).

Martínez, L. & Herrera, F. An overview on the 2-tuple linguistic model for computing with words in decision making: Extensions, applications and challenges. Inf. Sci. 207, 1–18 (2012).

Malhotra, T. & Gupta, A. A systematic review of developments in the 2-tuple linguistic model and its applications in decision analysis. Soft Comput. 2, 1–35 (2020).

Xu, Z. Uncertain linguistic aggregation operators based approach to multiple attribute group decision making under uncertain linguistic environment. Inf. Sci. 168, 171–184 (2004).

Xu, Z. A note on linguistic hybrid arithmetic averaging operator in multiple attribute group decision making with linguistic information. Group Decis. Negot. 15, 593–604 (2006).

Wang, J.-H. & Hao, J. A new version of 2-tuple fuzzy linguistic representation model for computing with words. IEEE Trans. Fuzzy Syst. 14, 435–445 (2006).

Herrera, F., Herrera-Viedma, E. & Martínez, L. A fuzzy linguistic methodology to deal with unbalanced linguistic term sets. IEEE Trans. Fuzzy Syst. 16, 354–370 (2008).

Celotto, A., Loia, V. & Senatore, S. Fuzzy linguistic approach to quality assessment model for electricity network infrastructure. Inf. Sci. 304, 1–15 (2015).

Esteban, B., Tejeda-Lorente, Á., Porcel, C., Arroyo, M. & Herrera-Viedma, E. TPLUFIB-WEB: A fuzzy linguistic Web system to help in the treatment of low back pain problems. Knowl.-Based Syst. 67, 429–438 (2014).

Zhang, H. Some interval-valued 2-tuple linguistic aggregation operators and application in multiattribute group decision making. Appl. Math. Model. 37, 4269–4282 (2013).

Dong, Y., Hong, W.-C., Xu, Y. & Yu, S. Selecting the individual numerical scale and prioritization method in the analytic hierarchy process: A 2-tuple fuzzy linguistic approach. IEEE Trans. Fuzzy Syst. 19, 13–25 (2010).

Acknowledgements

This work was supported by the Key Research and Development Plan of Shandong Province (2019GGX104102) awarded to Peisi Zhong, Natural Science Foundation of Shandong Province (ZR2017MEE066) awarded to Peisi Zhong, High-tech Ship project of the Ministry of Industry and Information Technology awarded to Qing Ma (MC-201917-C09), and Culture and Tourism Research Project of Shandong Province (21WL(H)64) awarded to Zhe Chen.

Author information

Contributions

Conceptualization: All authors; Methodology: P.Z., Z.C. and M.L.; Data collection: Q.M.; Data Analysis: Z.C., Q.M. and G.S.; Writing—original draft preparation: Z.C.; Writing—review and editing: Z.C., P.Z. and G.S.; Funding acquisition: P.Z., Q.M. and Z.C.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chen, Z., Zhong, P., Liu, M. et al. An integrated expert weight determination method for design concept evaluation. Sci Rep 12, 6358 (2022). https://doi.org/10.1038/s41598-022-10333-6

DOI: https://doi.org/10.1038/s41598-022-10333-6
