Abstract

In this work, we present a Quantum Hopfield Associative Memory (QHAM) and demonstrate its capabilities in simulation and hardware using IBM Quantum Experience. The QHAM is based on a quantum neuron design which can be utilized for many different machine learning applications and can be implemented on real quantum hardware without requiring mid-circuit measurement or reset operations. We analyze the accuracy of the neuron and the full QHAM considering hardware errors via simulation with hardware noise models as well as with implementation on the 15-qubit ibmq_16_melbourne device. The quantum neuron and the QHAM are shown to be resilient to noise and require low qubit overhead and gate complexity. We benchmark the QHAM by testing its effective memory capacity and demonstrate its capabilities in the NISQ era of quantum hardware. This demonstration of the first functional QHAM to be implemented in NISQ-era quantum hardware is a significant step in machine learning at the leading edge of quantum computing.

Introduction

Since the advent of quantum computing, one of the primary applications which has piqued the interest of both academia and industry is quantum machine learning1,2,3,4. With the characteristics of quantum computing driven by superposition and entanglement of quantum states, quantum computing can create and model patterns in data which cannot be easily created in classical computing. Additionally, quantum parallelism allows for quantum gates to evaluate outputs of functions with multiple inputs simultaneously5. Quantum machine learning is therefore expected to produce significant speedup in machine learning algorithms3. Already, quantum machine learning models have been developed for generic quantum neural networks6,7,8, quantum dynamic neural networks9, continuous-variable quantum neural networks10, quantum convolutional neural networks11,12, and quantum Hopfield neural networks13. In large part, quantum machine learning research has recently been centered around the use of variational quantum algorithms as machine learning models14,15,16,17 due to their high expressibility and rapid trainability compared to classical networks17. Extensive research has also been done at the intersection of classical and quantum machine learning to create hybrid machine learning techniques18,19,20, as well as in techniques for training quantum neural networks21 and in machine learning using quantum-enhanced feature spaces22.

The characteristics of quantum computing are of particular interest for Hopfield network23 applications6,13,24,25. Hopfield networks become intractable to compute classically due to their fully connected nature, though a recent paper claims “Hopfield networks is all you need”26 for many complex machine learning tasks. With quantum computing, significant improvements in time and memory overhead for Hopfield networks have been claimed through algorithmic advantages, training from a superposition of input states and parallel computation6,13, resulting in theorized polynomial or exponential capacity improvements for some quantum associative memory designs24,25,27.

Two studies theorize quantum associative memories with improved capacity24,27, though neither has been implemented in hardware. The original design in 199827 utilizes both spin-\(\frac{1}{2}\) and spin-1 qubits to demonstrate a theoretical associative memory, and a more recent design24 involves long-range interactions of fully-connected qubits to generate a unitary evolution over time. Another approach implements a quantum neuron to perform pattern recognition28, but this design does not follow Hopfield dynamics and has not been extended to an associative memory system.

In the NISQ era of quantum computing29, quantum computers are limited by their low number of qubits and their high vulnerability to noise and quantum errors. Therefore, implementation of quantum machine learning architectures in quantum hardware is very difficult. Of the above designs, only one has been implemented in hardware28.

In this work, we demonstrate a Quantum Hopfield Associative Memory (QHAM) implementable in IBMQ30, IBM’s open source development platform allowing for the implementation of quantum algorithms in quantum hardware based on superconducting qubits. We consider the effects of quantum errors in simulation via the IBMQ QASM simulator31, as well as in IBMQ hardware devices of up to 15 qubits. We differentiate from prior works by following Hopfield dynamics with the use of a quantum neuron and by implementing the QHAM in a format compatible with quantum gate-based circuits utilized in IBMQ. Our design can be tested on an actual quantum processor in IBMQ considering noise sources such as readout noise32, quantum gate errors, and qubit decoherence33. Both our quantum neuron and our QHAM show resilience to noise and do not require mid-circuit measurement or reset operations. Thus, our QHAM implementation is the first QHAM to be successfully demonstrated in quantum hardware.

Results

Background on Hopfield associative memories

The Hopfield network, first developed by J. J. Hopfield in 198223, is a type of classical neural network which has demonstrated widespread capabilities in machine learning, most notably in creating associative (content-addressable) memories. In an associative memory, one or more memory states are learned by the network and stored in the network’s weight matrix w (also called the neural interaction matrix). These states can be recovered by introducing an input state to the network which is close in Hamming distance to one of the stored states, then evolving the state of the network by updating individual neurons until convergence is reached.

Consider a Hopfield network of n fully connected neurons. Let each memory state stored in the network be represented by a vector \(\varepsilon\) with elements \(\varepsilon _i \in \{-1,1\}\), such that \(\varepsilon\) is of length n and each \(\varepsilon _i\) represents the binary state of neuron i. For m stored memories \(\varepsilon ^{(1:m)}\), the elements \(w_{ij}\) of the network’s weight matrix w can be trained using Hebbian rules such that:

where \(\varepsilon _{i}^{\mu }\) represents bit i from pattern \(\mu\) and \(\varepsilon _{j}^{\mu }\) represents bit j from the same pattern. Each element \(w_{ij}\) represents the interaction weight between neuron i and neuron j. Note that \(w_{ij}\) has the restriction \(w_{ii}=0, \forall i\) such that no neuron has a connection with itself.
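
The Hebbian construction described here can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' code: the function name `hebbian_weights` is hypothetical, and the \(1/m\) scaling is an assumption, since the normalization of Eq. (1) is not recoverable from this text (any positive scaling leaves the sign of the input signal unchanged).

```python
import numpy as np

def hebbian_weights(patterns):
    """Build a Hebbian weight matrix from m stored patterns with entries +/-1."""
    eps = np.asarray(patterns, dtype=float)   # shape (m, n): one row per pattern
    m, n = eps.shape
    # Sum over patterns of eps_i^mu * eps_j^mu; the 1/m factor is an assumed normalization.
    w = eps.T @ eps / m
    np.fill_diagonal(w, 0.0)                  # enforce w_ii = 0 (no self-connection)
    return w

patterns = [[1, 1, -1, -1], [1, -1, 1, -1]]
w = hebbian_weights(patterns)
```

The resulting matrix is symmetric with a zero diagonal, matching the restriction \(w_{ii}=0\) stated above.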

Let the vector x describe the initial state of the Hopfield network with n neurons where each neuron state is represented by \(x_i \in \{-1,1\}\). The network undergoes a series of update steps where individual neurons have their states overwritten according to the interaction of the network state x with the weight matrix \(w_{ij}\). Neurons are updated using the rule:

\[ x_i \leftarrow \begin{cases} +1 & \text{if } \sum _{j=1}^{n} w_{ij}x_j > h_i \\ -1 & \text{otherwise} \end{cases} \tag{2} \]

where \(h_i\) is the threshold of neuron i and where each i to be updated can be chosen randomly or in a predefined order. One can also define \(\theta _i = \sum _{j=1}^{n}w_{ij}x_j\) in order to simplify the notation, where \(\theta _i\) is known as the input signal to the neuron i. As successive updates occur, the state of the network will converge to a state which is a local minimum of the energy function \(E=-\frac{1}{2}\sum _{i,j}w_{ij}x_ix_j + \sum _ih_ix_i\). Each of the stored memories is a local minimum of this energy function, and thus the stored memories are intuitively known as “attractors” since the network state will converge to the attractor which is nearest in Hamming distance. The initial state of the network is also known as a “probe,” as it is used to search the associative memory and return the nearest attractor. In this work, we mimic these attractor dynamics in the quantum regime to create a Quantum Hopfield Associative Memory.
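
The attractor dynamics can be illustrated with a small classical sketch. The function names `energy` and `recall`, the sequential update order, and the tie convention (an input signal equal to the threshold maps to \(-1\)) are assumptions made for this example only.

```python
import numpy as np

def energy(w, x, h=None):
    """Hopfield energy E = -1/2 * sum_ij w_ij x_i x_j + sum_i h_i x_i."""
    h = np.zeros(len(x)) if h is None else np.asarray(h, dtype=float)
    return -0.5 * x @ w @ x + h @ x

def recall(w, probe, h=None, sweeps=5):
    """Update neurons one at a time, in index order, for a fixed number of sweeps."""
    x = np.array(probe, dtype=float)
    h = np.zeros(len(x)) if h is None else np.asarray(h, dtype=float)
    for _ in range(sweeps):
        for i in range(len(x)):
            theta_i = w[i] @ x                      # input signal to neuron i
            x[i] = 1.0 if theta_i > h[i] else -1.0  # assumed tie convention: -1
    return x

# One stored memory and a probe with a single flipped bit.
eps = np.array([1.0, 1.0, 1.0, -1.0, -1.0])
w = np.outer(eps, eps)
np.fill_diagonal(w, 0.0)
probe = np.array([-1.0, 1.0, 1.0, -1.0, -1.0])
result = recall(w, probe)
```

In this example the probe is restored to the stored memory, and the energy of the final state is lower than that of the probe.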

Background on quantum neuron design

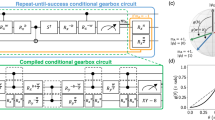

Several artificial neuron designs have been developed to apply this classical machine learning paradigm to quantum machine learning applications6,28,34,35. We base our system on the quantum neuron developed by Cao et al.6 and modify their structure for implementation in IBMQ in a single, uninterrupted quantum circuit. The basic structure of the neuron developed by Cao et al. is shown in Fig. 1.

Quantum neuron circuit architecture based on Cao et al.6. The basic quantum neuron architecture designed by Cao et al.6 utilizes controlled rotations dependent on a superposition of input states to create a controlled rotation \(CR_y(2\phi )\). A measurement of \(|0\rangle\) on the input qubit indicates that the output qubit has been rotated \(R_y(2q(\phi ))\) with \(q(\phi )=arctan(tan^2\phi )\). If \(|1\rangle\) is measured, the circuit has applied \(R_y(\frac{\pi }{2})\) to the output qubit, in which case this rotation is reversed and the circuit is repeated until success is achieved.

In a quantum system, each classical neuron state \(x_i\) can be mapped to a qubit in state \(|0\rangle\) or \(|1\rangle\). Mapping each neuron state to the probability of measuring \(|1\rangle\) in the qubit, \(x_i = -1\) corresponds to a pure \(|0\rangle\) state (\(P(|1\rangle )=0\)) and \(x_i = 1\) corresponds to a pure \(|1\rangle\) state (\(P(|1\rangle )=1\)). Any non-classical value of \(x_i\in (-1,1)\) can be represented as a superposition state, such as \(x_i=0\) being represented by \(|s_i\rangle = \frac{|0\rangle +|1\rangle }{\sqrt{2}}\) where \(P(|1\rangle )=0.5\)6. One possible mapping of classical states to quantum states in this manner is described by:

The full quantum system is thus represented by \(|s\rangle =|s_1s_2 \ldots s_n\rangle\). In order to mimic the attractor dynamics of a classical Hopfield network, we desire an update rule similar to Eq. (2) which can update the state of a single quantum neuron \(|s_i\rangle\) based on the interaction of \(w_{ij}\) and \(|s\rangle\) compared to a given threshold. In order to accomplish this, Cao et al.6 consider the quantum circuit shown in Fig. 1 which produces a rotation on a target qubit of \(R_y(2arctan(tan^2\phi ))\) if the input qubit measures \(|0\rangle\), where \(R_y\) represents a rotation generated by the Pauli Y operator around the Y-axis of the Bloch sphere. This rotation, when applied to an initial \(|0\rangle\) state, produces a \(|1\rangle\) measurement probability of \(P(|1\rangle )=\frac{sin^4(\phi )}{sin^4(\phi )+cos^4(\phi )}\) on the output qubit. This output probability is used as an analog to the classical neuron, in which \(\phi > \frac{\pi }{4}\) produces \(P(|1\rangle )\) close to 1 and \(\phi < \frac{\pi }{4}\) produces a \(P(|1\rangle )\) close to 0, corresponding to the classical \(x_i =\pm 1\) states. The parameter \(\phi\) relates to the classical \(\theta\) parameter by:

\[ \phi = \gamma \theta + \frac{\pi }{4} \tag{4} \]

where \(\gamma\) is a normalization factor such that \(0<\phi <\frac{\pi }{2}\). Using this construction, the threshold behavior is achieved following Eq. (2) where all \(h_i=0\). The function chosen for \(\gamma\) is:

We have slightly modified \(\gamma\) from the Cao et al. design6 by removing the threshold term since all \(h_i=0\) in the Hopfield associative memory application, and by using \(\frac{\pi }{4}\) in the numerator instead of the approximate value of 0.7. This helps to ensure that quantum states corresponding to the classical \(x_i = 1\) are as close as possible to \(|s_i\rangle = |1\rangle\).

The \(CR_y(2\phi _i)\) rotation gate in the circuit in Fig. 1 is composed of rotations of the neuron state \(|s_i\rangle\) controlled by each \(|s_j\rangle\) which depend on the neural interconnection matrix elements \(w_{ij}\), followed by a rotation dependent on the bias term \(\beta\). The bias term \(\beta\) is set by:

which we simplify from the original design6 using the knowledge that all \(h_i=0\). When these rotations are combined, the resulting operation is a rotation \(R_y(2\phi _i)\) on the target qubit.
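
The way the per-control rotations and the bias combine into a single angle can be checked numerically. The forms used below, \(\gamma = \frac{\pi }{4 w_{max} n}\) and \(\beta = \frac{\pi }{4}-\gamma \sum _j w_{ij}\), are assumptions chosen to be consistent with the description in this section (all \(h_i=0\), \(\frac{\pi }{4}\) in the numerator, \(0<\phi <\frac{\pi }{2}\)); they are not confirmed by the text, and the function names are hypothetical.

```python
import itertools
import numpy as np

def gamma_factor(w):
    """Assumed normalization gamma = pi / (4 * w_max * n)."""
    n = w.shape[0]
    return np.pi / (4 * np.max(np.abs(w)) * n)

def phi_from_theta(w, x, i):
    """phi_i = gamma * theta_i + pi/4, with x_j in {-1, +1}."""
    return gamma_factor(w) * (w[i] @ x) + np.pi / 4

def phi_from_controls(w, z, i):
    """Same angle assembled from control bits z_j in {0, 1} plus a bias term.

    In a circuit, each control would contribute R_y(4*gamma*w_ij) and the bias
    R_y(2*beta), so that the total rotation is R_y(2*phi_i).
    """
    g = gamma_factor(w)
    beta = np.pi / 4 - g * np.sum(w[i])   # assumed bias with all h_i = 0
    return np.sum(2 * g * w[i] * z) + beta

eps = np.array([1.0, -1.0, 1.0, -1.0])
w = np.outer(eps, eps)
np.fill_diagonal(w, 0.0)

checks = []
for bits in itertools.product([0, 1], repeat=4):
    z = np.array(bits, dtype=float)
    x = 2 * z - 1                          # map {0, 1} to {-1, +1}
    checks.append((phi_from_theta(w, x, 2), phi_from_controls(w, z, 2)))
```

Under these assumptions the two routes agree for every classical control configuration, and the angle stays strictly between 0 and \(\frac{\pi }{2}\).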

This circuit operates in a Repeat-Until-Success (RUS)36 manner wherein a measurement of \(|0\rangle\) on the input qubit indicates that the rotation of \(R_y(2arctan(tan^2\phi ))\) has been successfully applied to the output qubit. If the input qubit measures \(|1\rangle\), this indicates that a rotation of \(R_y(\frac{\pi }{2})\) has been applied instead, in which case \(R_y(-\frac{\pi }{2})\) is applied to reverse the initial rotation and the circuit is repeated. This process continues until \(|0\rangle\) is measured on the input qubit to indicate a successful rotation of the output qubit by \(R_y(2arctan(tan^2\phi ))\). Additionally, Cao et al. introduce a recursion method for this circuit which sharpens the rotation function to \(R_y(2(arctan(tan^2\phi ))^{\circ k})\), where k is the number of recursions. This function performs more closely to a binary activation function as in Eq. (2) and thereby reduces the error rate of the system. Throughout this work, we compare our system to the \(k=1\) case of this design which does not include recursion.

This quantum neuron design is used to model Hopfield attractor dynamics wherein \(\phi >\frac{\pi }{4}\) (equivalently, \(\theta >h=0\)) results in \(P(|1\rangle )\) close to 1 and \(\phi <\frac{\pi }{4}\) results in \(P(|1\rangle )\) close to 0. The derivation and characterization of this quantum neuron model is shown and described in more detail by Cao et al.6.

Challenges in hardware implementation

The RUS circuit design presents difficulty for implementation in quantum hardware due to its requirement of mid-circuit measurement and reset operations. The mid-circuit measurements are used to perform the check for success and the reset operations are used to recycle the output qubit for successive updates. These operations are a limiting factor for the hardware implementation of many quantum algorithms, as measuring or resetting the state of a qubit can result in collapsing the wavefunction of other qubits in the system. IBMQ is one quantum development platform which has recently enabled reset operations in its newest hardware for dynamic circuit execution37, but avoiding qubit reset in favor of sequential circuit execution remains desirable for many quantum hardware platforms and applications.

The qubit overhead of the Cao et al. design is dependent on the use of reset operations in order to reset the ancilla qubits (the input and output qubits in Fig. 1) to \(|0\rangle\) to recycle them for many qubit updates6. Should the system be implemented in hardware where reset is not available, this overhead increases from \(n+2\) qubits (where n is the size of the associative memory) to \(n+2u\) qubits, where u represents the number of updates to be performed on the system. This qubit overhead is included in Table 1.

In its basic form, the Cao et al. quantum neuron design6 also has very high gate complexity, thereby incurring extensive time overhead upon execution. The gate complexity of this design is shown in Table 1 and derived in more detail in the Supplementary Information. The high gate complexity of the quantum neuron becomes a significant problem for the overall error rate of the system. As errors occur in each gate, the large number of gates leads to significant error in the overall output of the system as errors cascade from one gate operation to the next. A metric called quantum volume38 describes the largest circuit of equal width and depth that a quantum computer can successfully implement. Considering the small quantum volume of modern quantum systems in the NISQ era (shown for IBMQ systems in Supplementary Information Table S1), hardware execution of a circuit as complex as an associative memory requires low circuit complexity to be successfully implementable in hardware. Reducing gate complexity thus becomes a very important factor to the design of a QHAM.

Proposed quantum neuron

To address the challenges in the original quantum neuron design6, we propose a quantum neuron design which avoids mid-circuit measurement and reset operations and which improves resilience to errors in quantum hardware. In order to avoid these operations, the measurement operation on the input qubit in the neuron described in Fig. 1 is removed. In the design by Cao et al.6, this measurement was used for classical conditioning to determine whether or not the circuit construction must be repeated in the Repeat-Until-Success construction. By removing this conditioning step, the probability of measuring \(|1\rangle\) at the output qubit is changed from \(\frac{sin^4(\phi )}{sin^4(\phi )+cos^4(\phi )}\) to simply \(sin^2(\phi )\), which is the same output probability as that which is obtained by applying a rotation of \(CR_y(2\phi )\). Therefore, we modify our quantum neuron from the original structure in Fig. 1 to simply be a \(CR_y(2\phi )\) rotation controlled by all other qubits in the system, as shown in Fig. 2 and following the same construction as the boxed \(CR_y(2\phi _i)\) rotation in Fig. 1.

Proposed quantum neuron circuit structure. (a) Our proposed quantum neuron for quantum hardware implementation. The full controlled rotation \(CR_y(2\phi _i)\) breaks down into sub-rotations dependent on each control qubit as shown in Fig. 1. (b) Quantum circuit representing two updates of selected qubits, \(|s_3\rangle\) then \(|s_2\rangle\). In this case, \(|s_3\rangle\) is updated using the state described by \(|s_1 s_2 s_4\rangle\), then \(|s_2\rangle\) is updated using the state described by \(|s_1 s_3^{\prime } s_4\rangle\).

This reduction removes mid-circuit measurement operations and significantly lowers the circuit complexity of the neuron design, as only a single multi-controlled rotation is performed rather than the full, repeated construction shown in Fig. 1. A summary of the differences between our neuron and the neuron by Cao et al.6 is shown in Table 1. As derived in the Supplementary Information, the gate complexity of our design is only \((10n-3)u\), where n is the size of the associative memory and u is the number of updates performed. By comparison, the gate complexity of the RUS neuron is \([20n(f+1)-4f-5]u\), where f is the average number of failures in the RUS process. This significant reduction in gate complexity reduces the time overhead of the circuit and reduces the effect of cascading errors in each qubit operation. Additionally, our design can dispense with reset operations by not recycling the ancilla (target) qubit for successive updates, at the cost of qubit overhead.
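
The two gate-complexity expressions quoted above can be compared directly. The function names below are hypothetical; the formulas are the ones stated in the text.

```python
def proposed_gate_count(n, u):
    """Gate complexity of the proposed neuron: (10n - 3) * u."""
    return (10 * n - 3) * u

def rus_gate_count(n, u, f):
    """Gate complexity of the RUS neuron: [20n(f + 1) - 4f - 5] * u,
    where f is the average number of failures in the RUS process."""
    return (20 * n * (f + 1) - 4 * f - 5) * u

n, u = 4, 1
ours = proposed_gate_count(n, u)
rus_no_failures = rus_gate_count(n, u, f=0)
rus_one_failure = rus_gate_count(n, u, f=1)
```

For \(n=4\) and a single update, this gives 37 gates for the proposed neuron against 75 for the RUS neuron with no failures and 151 with one failure on average.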

To update an initial qubit state \(|s_i\rangle\) to a new state \(|s_i\rangle '\), the rotation by \(2\phi\) is performed on an ancilla (target) qubit initialized to state \(|0\rangle\), then the output of the neuron on the ancilla qubit is swapped to the qubit which held the original state using a quantum SWAP gate. An example of this circuit using an initial state of length \(n=4\) and performing an update of the third qubit \(|s_3\rangle\) followed by an update of the second qubit \(|s_2\rangle\) is shown in Fig. 2b. In this example, the qubit used to perform the update is not recycled in order to avoid reset operations. Avoiding reset operations improves the accuracy of the neuron and allows for implementation in hardware which does not support reset operations. In order to save on qubit overhead in systems where reset is possible, one can recycle the ancilla for multiple updates by performing the \(CR_y\) rotation, swapping the updated state to the desired qubit, resetting the ancilla to \(|0\rangle\), and performing the next update. Since the quantum neuron design requires the target qubit to be in state \(|0\rangle\) before performing the rotations, one cannot simply perform the rotation on the desired qubit directly. However, the SWAP operation can also be avoided by reassigning the target qubit to hold \(|s_i\rangle '\) and discarding the qubit which originally held \(|s_i\rangle\).

In the original neuron6, a majority vote of many measurements of the updated qubit is used to reassign the state to pure \(|0\rangle\) or \(|1\rangle\) immediately after being updated in order to achieve a binary activation function. As this requires mid-circuit measurement and repeated execution of the circuit, we leave the updated qubits in the state produced by the update between evaluation steps, as shown in Fig. 2b. Since this means that the update step does not produce a true binary result reassigning qubit states to true \(|0\rangle\) and \(|1\rangle\), there will be an inherent error rate as a result of the update step with respect to the true classical Hopfield update. Multiple updates are performed in succession to reach a desired endpoint of the circuit, after which the majority vote method can be used to extract the result to a classical output, or further evaluation can be continued in the quantum domain.

The output of the RUS neuron is shown in Fig. 3a. Using a noise model defined by IBMQ which models ibmq_quito hardware noise, we observe a significant error rate, particularly in the region \(\phi <\frac{\pi }{4}\). This neuron cannot be tested in the ibmq_quito hardware because of the required mid-circuit measurement and reset operations and because of the limited size of the ibmq_quito architecture. We expect that this quantum neuron would perform significantly worse in true hardware than in noise model simulation, since the connectivity constraints of the hardware further increase gate complexity.

The simulation and hardware accuracy of our simplified quantum neuron are shown in Fig. 3b. We observe that our updated design is more resilient to noise and hardware errors than the RUS design because it requires fewer gates and fewer qubits. The simulation results of our neuron design incorporating the ibmq_quito noise model shown in Fig. 3b almost exactly overlap with the desired output function. The hardware measurements show degradation particularly in the \(\phi >\frac{\pi }{4}\) regime, but this hardware error is much lower than the simulated error in the RUS design.

In the original design, an activation function was applied to the rotation angle of the output state, namely \(arctan(tan^2(\phi ))\), whereas in our design, we allow for the rotation around the Bloch sphere itself to act as an activation function, such that the probability of measuring \(|1\rangle\) follows the non-linear function \(P(|1\rangle )=sin^2(\phi )\) dependent on \(\phi\). This reduction results in a more gradual activation function between measuring \(|0\rangle\) and \(|1\rangle\) (as shown in Fig. 3) but allows for uninterrupted execution in a single quantum circuit and reduces the qubit overhead and gate complexity of the circuit. While the slope of the activation function in our neuron is more gradual, it also shows much better resilience to noise and demonstrates performance in hardware which closely models its expected output, as shown in Fig. 3. However, this more gradual slope of the activation function can reduce the accuracy of update steps where \(\phi\) is close to its midpoint of \(\frac{\pi }{4}\).
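
The difference between the two activation functions can be made concrete with a short noiseless calculation. The function names are hypothetical; the formulas are the output probabilities quoted in the text, and the third function checks that rotating \(|0\rangle\) by \(R_y(2\arctan (\tan ^2\phi ))\) reproduces the RUS probability.

```python
import math

def rus_activation(phi):
    """P(|1>) for the RUS neuron: sin^4 / (sin^4 + cos^4)."""
    s4, c4 = math.sin(phi) ** 4, math.cos(phi) ** 4
    return s4 / (s4 + c4)

def proposed_activation(phi):
    """P(|1>) for the proposed neuron: sin^2(phi)."""
    return math.sin(phi) ** 2

def rus_from_rotation(phi):
    """P(|1>) after applying R_y(2q) to |0>, with q = arctan(tan^2(phi))."""
    q = math.atan(math.tan(phi) ** 2)
    return math.sin(q) ** 2

midpoint = math.pi / 4
above = 3 * math.pi / 8
below = math.pi / 8
```

Both functions pass through 0.5 at \(\phi =\frac{\pi }{4}\); away from the midpoint the RUS function is closer to 0 or 1 than \(sin^2(\phi )\), which is the sharper slope discussed above.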

The quantum Hopfield associative memory

To model an associative memory using our quantum neuron, an initial state vector \(\bar{x}\) is given and converted to its quantum representation \(|s\rangle =|s_1 s_2 \ldots s_n\rangle\). Given this initial state and a set of attractor states trained into the weight matrix w as described by Eq. (1), updates are performed on individually selected qubits to move the network state closer to the nearest attractor. The qubit overhead of the system with attractors and initial state of length n, which we define as q, is determined by:

\[ q = n + 1 \tag{7} \]

given that the test is performed in an environment where the qubit used for the updates can be recycled using a reset operation. However, when qubits cannot be recycled in hardware, an additional qubit is required per update (as in Fig. 2). When performing u updates, Eq. (7) becomes:

\[ q = n + u \tag{8} \]

This qubit overhead is included in Table 1.

Note that there are fundamental differences in the way updates are performed in our design and in the classical Hopfield associative memory. In the classical case, each update results in the updated \(x_i\) being rewritten to \(-1\) or \(+1\) depending on the value of the update rule with respect to the desired threshold, typically described by Eq. (2). However, in our quantum case, we utilize a rotation around the Bloch sphere where \(P(|1\rangle )=sin^2(\phi _i)\) and therefore the updated qubit states are rarely a true \(|0\rangle\) or \(|1\rangle\). Cascading many updates on all qubits in the system in this fashion without stopping execution of the quantum circuit intermediately to rewrite the qubit states to pure \(|0\rangle\) or \(|1\rangle\) based on a majority vote measurement value (as in Cao et al.6) will eventually result in the state of each qubit converging to a superposition state depending on the average value of the probe elements rather than converging to a memory state as desired. Therefore, in order to maintain the quantum nature of the system within the capabilities of quantum hardware, we perform either individually selected updates on specific qubits or random updates up to a set hyperparameter of u total updates which can be tuned for maximum accuracy. After all of the updates, the system state can be measured with a desired number of “shots” to determine the probability distribution for each state. To determine the classical output of the system, we take a majority vote of many measurements of each of the individual qubits in the system to compose the final memory recall result.
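
A noiseless sketch of a single update shows why updated qubits are rarely a true \(|0\rangle\) or \(|1\rangle\). It assumes the control qubits are in an unentangled product state, uses the assumed normalization \(\gamma = \frac{\pi }{4 w_{max} n}\) (not confirmed by this text), and averages \(sin^2(\phi )\) over the control basis states weighted by their probabilities. The function name `update_probability` is hypothetical.

```python
import itertools
import numpy as np

def update_probability(w, p_one, i):
    """P(|1>) on a fresh ancilla after the controlled rotation for neuron i.

    p_one[j] is P(|1>) of qubit j; the controls are assumed unentangled.
    """
    n = len(p_one)
    gamma = np.pi / (4 * np.max(np.abs(w)) * n)    # assumed normalization
    controls = [j for j in range(n) if j != i]
    total = 0.0
    for bits in itertools.product([0, 1], repeat=len(controls)):
        prob, theta = 1.0, 0.0
        for j, z in zip(controls, bits):
            prob *= p_one[j] if z else 1.0 - p_one[j]
            theta += w[i, j] * (2 * z - 1)         # x_j = 2 z_j - 1
        phi = gamma * theta + np.pi / 4
        total += prob * np.sin(phi) ** 2           # ancilla ends in R_y(2 phi)|0>
    return total

eps = np.array([1.0, -1.0, 1.0, -1.0])             # single stored memory
w = np.outer(eps, eps)
np.fill_diagonal(w, 0.0)

# Qubit 2 is corrupted to an equal superposition; the others match the memory.
p_one = [1.0, 0.0, 0.5, 0.0]
p_updated = update_probability(w, p_one, i=2)
```

Here the updated qubit moves toward the stored value \(|1\rangle\) with \(P(|1\rangle )\approx 0.96\), which is correct under a majority vote but is not a pure state.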

Simulating the QHAM

Simulation results for two-attractor systems of sizes 4, 9 and 16 are shown in Fig. 4. The vectors representing the system states are reshaped to squares where the indices of the memory elements are read left to right, top to bottom. In the size 9 and 16 cases, we update multiple selected qubits which are in “corrupted” superposition states of \(\frac{|0\rangle +|1\rangle }{\sqrt{2}}\) (equivalent to \(x_i=0\) described previously), starting from the lowest-index corrupted qubit and following in order of index to demonstrate the performance of individual updates with our quantum neuron. Qubits which are updated first in this order tend to have a lower accuracy than qubits which are updated later in the update chain, since in the early updates there are more corrupted states used as controls for the update. This can be alleviated by updating the qubits multiple times, as in Fig. 5. Note that Figs. 4 and 5 show the \(P(|1\rangle )\) of each qubit. From this, a majority vote of each of the qubit measurements can be taken to compose a classical binary result. One potential application of this execution method is in restoring images with corrupted pixels.

QASM simulation of QHAM of size 4, 9, and 16. Simulation results of the quantum Hopfield associative memory system, obtained with the QASM simulator31, for sizes (a) 4, (b) 9 and (c) 16 with two given attractor states. Each color graded box represents a qubit in each system state, and the number inside the box is the exact probability of measuring \(|1\rangle\) for that individual state.

QHAM multiple update demonstration. Updating each corrupted qubit from the Fig. 4c memory twice improves the accuracy of several of the updated qubits, particularly those which are towards the beginning of the update order.

Testing the QHAM in IBMQ hardware

We also demonstrate the \(n=4\) system in the 15-qubit ibmq_16_melbourne hardware40 shown in further detail in Fig. S1 of the Supplementary Information. The hardware results for the \(n=4\) system execution are shown in Fig. 6. The ideal \(n=4\) system with a single update should require 5 qubits for its execution according to Eq. (7). However, since each qubit in the device is not directly physically connected to all other qubits, intermediate qubits must be used in order to transfer information between control and target qubits. As a Hopfield network requires high connectivity, the overall qubit connectivity of the system is crucial for performance in order to avoid these intermediate connections and additional qubits which contribute to increased noise in the system. Further analysis of hardware limitations and noise can be found in the Supplementary Information, including Table S1 and Table S2.

QHAM implemented in quantum hardware. Hardware results for the \(n=4\), single update system obtained from the ibmq_16_melbourne device40. The numbers in each box (representing each qubit) represent the probability of measuring \(|1\rangle\) for each qubit. Two attractors are given and an initial state with \(|x_3\rangle\) corrupted to a superposition state is updated to the final state.

Determining effective memory capacity

To benchmark the QHAM we study its effective memory capacity41,42 which quantifies the number of memories m which can be successfully stored and recalled in an associative memory of size n. We test the effective memory capacity using a similar method to the classical Hopfield associative memory capacity calculations developed by McEliece et al.41 and Newman42.

First, we introduce m patterns of length n which are composed of random, independent values of \(\pm 1\). These patterns are used as attractors with the set of all attractors described by \(\varepsilon ^{(1:m)}\) and are used to develop the weight matrix according to Eq. (1). We then introduce a random probe (input state) of length n which is less than \(\rho n\) in Hamming distance from one of the stored memory patterns. Each memory or probe can be described by \(x=[x_1,x_2,\ldots ,x_n]\) with \(x_j\in \{-1,1\}\). For example, with \(m=2\), \(n=5\) and \(\rho =0.25\), we could consider \(\varepsilon _1 = [1,1,1,1,1]\) and \(\varepsilon _2 = [-1,-1,-1,-1,-1]\). An acceptable probe within \(\rho n = 0.25n\) from one of these attractors could have at most one flipped bit from its closest attractor, i.e. the probe could be \(x = [-1,1,1,1,1]\) which is within \(\rho n\) of \(\varepsilon _1\), or \(x = [-1,-1,1,-1,-1]\) which is within \(\rho n\) of \(\varepsilon _2\). As the Hopfield network performs updates on random elements of the probe, the state of the probe converges to the stored memory which is the closest in Hamming distance to the original probe. In a rigorous analysis by McEliece et al.41, it is derived that with \(0\le \rho <\frac{1}{2}\), the maximum number of patterns m which can be successfully recalled in a classical associative memory grows as:

\[ m = \frac{(1-2\rho )^2\, n}{2\ln n} \tag{9} \]

We map these m memories and the probe to the quantum domain following Eq. (3), prepare the input state described by the equivalent quantum representation of the probe \(|s\rangle =|s_1s_2\ldots s_n\rangle\) and create the Hebbian weight matrix using the attractors following Eq. (1). Random updates are performed up to the hyperparameter u (representing the number of updates to perform) and the outputs of all n qubits are measured. As in the QHAM procedure described above, the system state is measured with a desired number of “shots” to determine the probability distribution for each state, and a majority vote of many measurements of each individual qubit composes the final classical memory recall result.

Effective QHAM Capacity. (a) Majority vote accuracy measurements for the QHAM for memories of length \(n\in \{4,5,\ldots ,10\}\) and \(m\in \{1,2,\ldots ,10\}\) versus \(\alpha =m/n\). (b) Density accuracy measurements from the same test conditions as (a) versus m/n. (c) Demonstration of hyperparameter tuning for u. In this case, the optimal u based on majority vote accuracy is 16 for \(m=2\) and 12 for \(m=4\).

Simulation of QHAM effective memory capacity

To analyze effective memory capacity, we perform Monte Carlo simulation with many random memories and probes to determine the accuracy of the system in reproducing the desired memory state. The capacity results for \(n=\{4,5,\ldots ,10\}\), \(m=\{1,2,\ldots ,10\}\) and \(\rho =0.2\) are shown in Fig. 7. Figure 7a shows these results as a function of \(\alpha =\frac{m}{n}\) using a term we call “majority vote accuracy,” which is measured as the percentage of accurate memory strings obtained after taking a majority vote of many measurements of each of the individual qubits in the system. For example, if an experiment is run with 8192 “shots” as we use for our simulations, we take a majority vote for each qubit such that if a majority of the 8192 shots return \(|s_0\rangle =|0\rangle\), then the result of qubit \(|s_0\rangle\) is assigned to \(|0\rangle\). For a target memory of \(|s\rangle =|0110\rangle\), the majority vote accuracy is measured as the percentage of tests in which \(|0110\rangle\) is returned after the majority vote result of each individual qubit is taken.

In Fig. 7b, we analyze an accuracy metric which we call “density accuracy,” which describes the average rate at which each of the individual qubits is measured in the correct state. This measurement quantifies the average accuracy of each individual qubit in the system and is used to model how the results propagate in the quantum domain, as it is a close reflection of the superposition states of each of the individual qubits. For example, if \(|s_0\rangle\) returns the desired value \(|0\rangle\) in 90% of shots, and \(|s_1\rangle\) returns the desired value \(|1\rangle\) in 100% of shots, then the density accuracy of the two-qubit system is 95%. The results in Fig. 7a,b have been tuned to the value of the hyperparameter u which yields the maximum majority vote accuracy. An example of this hyperparameter tuning is shown in Fig. 7c. Since we cannot check for convergence in the quantum domain after each individual update because this would require mid-circuit measurement, we tune u with Monte Carlo simulation.
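A minimal sketch of the density accuracy calculation follows, reproducing the two-qubit example from the text. It assumes the same input format as a shot-count dictionary keyed by bitstring, with character i of each string corresponding to qubit i; the function name and the counts are illustrative.

```python
def density_accuracy(counts, target):
    """Mean over qubits of the fraction of shots measured in the target state."""
    total = sum(counts.values())
    n = len(target)
    correct = [0] * n
    for bits, c in counts.items():
        for i, b in enumerate(bits):
            if b == target[i]:
                correct[i] += c
    return sum(k / total for k in correct) / n

# Qubit 0 is correct in 90% of shots, qubit 1 in 100% of shots.
counts = {"01": 900, "11": 100}
print(round(density_accuracy(counts, "01"), 4))  # 0.95
```

Unlike the majority vote, this metric keeps the per-qubit probabilities, so a qubit that is correct in 51% of shots and one that is correct in 99% of shots are distinguished.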

In these QHAM implementations, we notice trends mirroring the classical memory capacity calculations, even with the neuron activation function not being perfectly binary (as shown in Fig. 3). With \(\rho =0.2\) and \(n\in \{4,5,\ldots ,10\}\), the probes which are allowed to be introduced contain no flipped bits when \(n=4\) or \(n=5\), and a single flipped bit for \(6\le n \le 10\). Based on these results, we clearly see two separate trends in Fig. 7 wherein a greater number of flipped bits in the probe leads to lower classification accuracy. Since the \(\rho\) we define is an upper bound, we can also calculate an effective \(\rho\), \(\rho _{eff}\), which corresponds to the true fraction of bits in the probe which are flipped. For the \(n=4\) and \(n=5\) cases, \(\rho _{eff}=0\), and for the case of \(n=10\), for example, \(\rho _{eff}=0.1\). Following Eq. (9) using \(\rho _{eff}\), we would then expect m to be 1.44, 1.55, and 1.39 for \(n=4\), 5 and 10 respectively, which can be rounded down to the largest number of memories which can be stored for successful recall, specifically \(m=1\) in these cases. Under the same settings, we see the \(m=1\) case for \(n=4\) and \(n=5\) demonstrate perfect recall in the majority vote accuracy test (which extracts the associative memory to the classical domain), and the \(m=1\) cases for \(n>5\) show very high recall percentage which increases with n. In the density accuracy measurements in Fig. 7b, which reflect the accuracy of the system in the quantum domain, we observe well over 90% accuracy for all n when \(m=1\). We also demonstrate tuning of the u parameter in Fig. 7c based on the majority vote accuracy measurements. Figure 7c shows the results of tuning the QHAM of length \(n=10\) when storing \(m=2\) and \(m=4\) memories. In these examples, the majority vote accuracy increases with each update as incorrect bits are corrected up to an optimal number of updates u. 
After this optimal u, the accuracy tends to decrease as the inaccuracy inherent in the neuron activation function (shown in Fig. 3) propagates through the circuit and causes correct bits in the memory string to be flipped incorrectly. With a larger number m of stored memories, spurious minima are more likely to exist in the optimization landscape of the associative memory system, lowering the optimal u and the overall accuracy of the system. Overall, the accuracy improves with increasing updates up until the optimal u, beyond which the probability of incorrectly flipping a correct bit outweighs the probability of correctly flipping an incorrect bit. In this example, the optimal u is shown to be 16 for \(m=2\) and 12 for \(m=4\). All of the data shown in Figs. 7a and 7b are tuned using this metric.
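The flip counts and \(\rho _{eff}\) values quoted in the paragraph above can be reproduced with the short sketch below. It assumes that \(\rho\) acts as a strict upper bound on the fraction of flipped bits, so that the number of flips is the largest integer strictly below \(\rho n\). That reading is an inference from the stated counts, not a rule given in this section, and the function names are illustrative. Exact fractions are used to avoid floating-point rounding at \(\rho n = 1\) and \(\rho n = 2\).

```python
from fractions import Fraction
import math

RHO = Fraction(1, 5)  # rho = 0.2, kept exact

def flipped_bits(n, rho=RHO):
    """Largest number of flipped bits strictly below rho * n (assumed rule)."""
    return max(math.ceil(rho * n) - 1, 0)

def rho_eff(n, rho=RHO):
    """Effective fraction of flipped bits in a probe of length n."""
    return Fraction(flipped_bits(n, rho), n)

for n in range(4, 11):
    print(n, flipped_bits(n), float(rho_eff(n)))
```

Under this assumption, \(n=4\) and \(n=5\) give zero flips and \(6\le n\le 10\) give one flip, with \(\rho _{eff}=0.1\) at \(n=10\), matching the values stated in the text.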

Simulation of QHAM effective memory capacity considering noise

In addition to simulating the effective memory capacity of the QHAM system in the noiseless scenario, we perform measurements of the QHAM capacity under the effects of simulated hardware noise from the ibmq_16_melbourne noise model as shown in Fig. 8. The sources of this noise due to quantum errors are further described in the Supplementary Information. With \(n\in \{4,5,\ldots ,10\}\), \(m\in \{1,2,\ldots ,10\}\) and \(\rho =0.2\) as in the noiseless simulations, we see the \(m=1\) case for \(n=4\) and \(n=5\) demonstrate perfect recall when the output is extracted to the classical domain in the majority vote accuracy test in Fig. 8a, and degradation of the recall for larger n. In these measurements, we observe that memories with larger size n show lower effective capacity for the same \(\rho _{eff}\). This is explained by the larger number of updates performed in these cases and the need to take majority votes from n probabilistic qubit outputs wherein inaccuracies caused by noise can compound for large n. Without this inaccuracy from the majority vote extraction to the classical domain, the density accuracy metric shown in Fig. 8b tends to show a more condensed trend regardless of n. However, compared to the same tests without any noise modeling as shown in Fig. 7, the noise model results show significant degradation in their density accuracy measurements. For example, the \(m=1\), \(n=4\) case shows approximately 95% accuracy as opposed to perfect accuracy in the noiseless test. We also demonstrate tuning of the u parameter in Fig. 8c based on the majority vote measurements. Tuning u shows less of a defined optimization point when noise effects are included compared to the noiseless case, as increasing the number of updates leads to more compounding error due to noise. 
Increasing the number of updates increases the gate complexity of the quantum circuit, thereby increasing the total noise injected by gate operations and thus the error inherent in the neuron (see Fig. 3). Therefore, the optimal u tends to be lower in the noisy associative memory tests than in the equivalent noiseless associative memory, as the noise introduced by further gate complexity eventually outweighs the benefit of performing an update.
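The tuning of u described above amounts to sweeping candidate update counts and keeping the one with the highest majority vote accuracy. A minimal sketch follows, assuming a caller-supplied function that estimates that accuracy for a given u (for instance from a Monte Carlo run). The names `tune_u` and `toy_accuracy` are hypothetical, and the toy curve is a placeholder that rises and then falls; it is not data from this study. Breaking ties toward the smaller u is a design choice made here because fewer updates mean fewer gates.

```python
def tune_u(estimate_accuracy, candidates):
    """Return the best update count and the score for every candidate u."""
    scores = {u: estimate_accuracy(u) for u in candidates}
    # Highest accuracy wins; ties go to the smaller u (fewer gates).
    best = min(scores, key=lambda u: (-scores[u], u))
    return best, scores

def toy_accuracy(u):
    """Placeholder accuracy curve peaking at u = 7 (not measured data)."""
    return 0.9 - 0.01 * (u - 7) ** 2

best, scores = tune_u(toy_accuracy, range(1, 21))
print(best)  # 7
```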

Figure 8. Effective QHAM Capacity using ibmq_16_melbourne noise model. (a) Majority vote accuracy for the QHAM for memories of length \(n\in \{4,5,\ldots ,10\}\) and \(m\in \{1,2,\ldots ,10\}\) versus \(\alpha =\frac{m}{n}\). (b) Density accuracy from the same test conditions as (a) versus \(\frac{m}{n}\). (c) Demonstration of tuning for u. In this case, the optimal u based on majority vote accuracy is 11 for \(m=2\) and 10 for \(m=4\).

QHAM effective memory capacity in hardware

For limited cases, we are able to implement the QHAM in the ibmq_16_melbourne hardware. The same test structure as used in simulation is performed for \(n=\{3,4,5\}\), \(m=\{2,3,4\}\), \(\rho =0.2\) and 1024 shots, as shown in Fig. 9. We note that the number of trials performed for these measurements is much lower than the number of trials performed in simulation due to hardware availability.

Figure 9. Effective QHAM capacity using ibmq_16_melbourne hardware (ref. 40). (a) Majority vote accuracy for the QHAM in hardware for \(n=\{3,4,5\}\), \(m=\{2,3,4\}\), \(\rho =0.2\) versus \(\alpha = m/n\). (b) Density accuracy using the same test conditions as (a) versus m/n.

Clearly, the hardware implementation of the associative memory degrades the ability of the system to store memories, even more than the test with simulated noise from the noise model of the same hardware device. This is due to the significantly increased noise from lack of hardware connectivity and the increased number of qubits required for hardware execution of the QHAM circuit. While the noise model takes quantum error effects into account, it does not take into account these limitations. The noise model construction is explained in further depth in the Supplementary Information. Even for small memory cases such as the \(n=\{3,4,5\}\) tests, the errors caused by the lack of full connectivity in the physical qubits become significant in the full system implementation. There is some recall in the single pattern case for \(n=3\), but a majority vote accuracy of around 60% is quite low for the production of meaningful results. In this case, quantum error correcting codes would become highly useful.

Discussion

We have demonstrated a Quantum Hopfield Associative Memory system which can be executed in real quantum hardware via IBMQ. The number of qubits scales linearly with the length of the memory in simulation and in hardware where reset operations are available. If reset operations are not possible, the system also scales linearly in the number of updates. The accuracy of this system depends strongly on quantum errors and noise, but we demonstrate high resilience to these errors when extracting the quantum superposition states to the classical domain via a majority vote of many circuit shots. Noise modeling in simulation via IBMQ aids in understanding the effects of different types of noise on quantum circuit performance, while testing in hardware shows limitations due to a low number of qubits and limited connectivity. We benchmark the QHAM by measuring its effective memory capacity which is shown to mimic the effective memory capacity of classical Hopfield associative memory systems. The output of the QHAM can also remain in the quantum domain via n entangled superposition states with high accuracy, which could then be used for further quantum computation.

The implementation of quantum associative memory models such as ours is significant for the advancement of machine learning in the domain of quantum computing. In the NISQ era of quantum computing, this successful QHAM implementation in hardware is a significant demonstration of what can be done in quantum hardware even in devices with a limited number of qubits and high noise. With applications in image recognition, optimization, data restoration from corrupt storage and more, our QHAM has high potential for further development in various application spaces. Further study pathways include noise-aware training, quantum algorithm developments for producing true Hopfield threshold dynamics in the quantum domain, and quantum hardware developments for increased connectivity. There have been promising developments in quantum associative memory models which theorize polynomial or exponential improvements in memory capacity24,25,27, which is a major motivation to continue this work to determine a route for true quantum advantage in this area. Our study demonstrates a step in this direction by being the first of its kind in which hardware implementation is explored.

Methods

All QHAM testing was performed using IBMQ. Most data was collected via the QASM Simulator using noise models produced by the hardware systems, and some hardware tests were performed for cases which were small enough to fit on the quantum hardware, specifically on ibmq_16_melbourne, the largest publicly available IBMQ hardware device at the time of this work with 15 qubits. The noise models are frequently updated and calibrated by IBM to maintain accuracy to the true systems, and new versions of hardware are frequently being released. In this study, the specific IBMQ hardware versions utilized were: ibmq_5_yorktown (ibmqx2) v2.2.6, ibmq_16_melbourne v2.3.8, ibmq_athens v1.3.10, ibmq_santiago v1.3.10, ibmq_lima v1.0.2, ibmq_quito v1.0.4, ibmq_belem v1.0.0, and ibmq_armonk v2.4.0.

All QASM Simulator and hardware tests run with IBMQ use a variable called “shots” which represents the number of repeated tests which will be performed in order to obtain an output probability distribution which is close to describing the true superposition state of the qubits. For the tests shown in Figs. 4, 5 and 6 and Table S2, we use \(shots=8192\), the maximum allowed by IBMQ at the time of these experiments. For the Monte Carlo tests shown in Figs. 7, 8 and S2, we use \(shots=1024\) to save on run time, then perform many of these same tests and take a separate majority vote of the results to produce the effective memory capacity measurements. For all n in simulation we perform 1000 tests for the Monte Carlo simulation (for \(1024\times 1000=1{,}024{,}000\) total “shots”), and in hardware we perform only 30 tests due to long hardware queue times.

Data availability

The code used for IBMQ simulation, testing and data analysis and the data produced and analyzed in this study are available from the authors upon reasonable request.

References

Schuld, M., Sinayskiy, I. & Petruccione, F. An introduction to quantum machine learning. Contemp. Phys. 56, 172–185. https://doi.org/10.1080/00107514.2014.964942 (2014).

Perdomo-Ortiz, A., Benedetti, M., Realpe-Gómez, J. & Biswas, R. Opportunities and challenges for quantum-assisted machine learning in near-term quantum computers. Quantum Sci. Technol. 3, 030502. https://doi.org/10.1088/2058-9565/aab859 (2018).

Biamonte, J. et al. Quantum machine learning. Nature 549, 195–202. https://doi.org/10.1038/nature23474 (2017).

Farhi, E. & Neven, H. Classification with quantum neural networks on near term processors. arXiv:1802.06002 (2018).

Chuang, I. L. & Yamamoto, Y. Simple quantum computer. Phys. Rev. A 52, 3489–3496. https://doi.org/10.1103/physreva.52.3489 (1995).

Cao, Y., Guerreschi, G. G. & Aspuru-Guzik, A. Quantum neuron: an elementary building block for machine learning on quantum computers. arXiv:1711.11240 (2017).

Diep, D. N. Some quantum neural networks. Int. J. Theor. Phys. 59, 1179–1187. https://doi.org/10.1007/s10773-020-04397-1 (2020).

Daskin, A. A simple quantum neural net with a periodic activation function. In 2018 IEEE International Conference on Systems, Man, and Cybernetics (SMC). https://doi.org/10.1109/smc.2018.00491 (2018).

Cui, Y., Shi, J. & Wang, Z. Complex rotation quantum dynamic neural networks (CRQDNN) using complex quantum neuron (CQN): Applications to time series prediction. Neural Netw. 71, 11–26. https://doi.org/10.1016/j.neunet.2015.07.013 (2015).

Killoran, N. et al. Continuous-variable quantum neural networks. Phys. Rev. Res. https://doi.org/10.1103/physrevresearch.1.033063 (2019).

Cong, I., Choi, S. & Lukin, M. D. Quantum convolutional neural networks. Nat. Phys. 15, 1273–1278. https://doi.org/10.1038/s41567-019-0648-8 (2019).

Henderson, M., Shakya, S., Pradhan, S. & Cook, T. Quanvolutional neural networks: Powering image recognition with quantum circuits. arXiv:1904.04767 (2019).

Rebentrost, P., Bromley, T. R., Weedbrook, C. & Lloyd, S. Quantum Hopfield neural network. Phys. Rev. A. https://doi.org/10.1103/physreva.98.042308 (2018).

Cerezo, M. et al. Variational quantum algorithms. Nat. Rev. Phys. 3, 625–644. https://doi.org/10.1038/s42254-021-00348-9 (2021).

Mangini, S., Tacchino, F., Gerace, D., Bajoni, D. & Macchiavello, C. Quantum computing models for artificial neural networks. EPL (Europhys. Lett.) 134, 10002. https://doi.org/10.1209/0295-5075/134/10002 (2021).

Bharti, K. et al. Noisy intermediate-scale quantum (NISQ) algorithms. arXiv:2101.08448 (2021).

Abbas, A. et al. The power of quantum neural networks. Nat. Comput. Sci. 1, 403–409. https://doi.org/10.1038/s43588-021-00084-1 (2021).

Xia, R. & Kais, S. Hybrid quantum-classical neural network for calculating ground state energies of molecules. Entropy 22, 828. https://doi.org/10.3390/e22080828 (2020).

Liu, J. et al. Hybrid quantum-classical convolutional neural networks. arXiv:1911.02998 (2019).

Sleeman, J., Dorband, J. & Halem, M. A hybrid quantum enabled RBM advantage: Convolutional autoencoders for quantum image compression and generative learning. arXiv:2001.11946 (2020).

Beer, K. et al. Training deep quantum neural networks. Nat. Commun. 11, 808. https://doi.org/10.1038/s41467-020-14454-2 (2020).

Havlíček, V. et al. Supervised learning with quantum-enhanced feature spaces. Nature 567, 209–212. https://doi.org/10.1038/s41586-019-0980-2 (2019).

Hopfield, J. J. Neural networks and physical systems with emergent collective computational abilities. Proc. Natl. Acad. Sci. 79, 2554–2558. https://doi.org/10.1073/pnas.79.8.2554 (1982).

Diamantini, M. C. & Trugenberger, C. A. High-capacity quantum associative memories. J. Appl. Math. Phys. 04, 2079–2112. https://doi.org/10.4236/jamp.2016.411207 (2016).

Schuld, M., Sinayskiy, I. & Petruccione, F. The quest for a quantum neural network. Quantum Inf. Process. 13, 2567–2586. https://doi.org/10.1007/s11128-014-0809-8 (2014).

Ramsauer, H. et al. Hopfield networks is all you need. arXiv:2008.02217 (2020).

Ventura, D. & Martinez, T. Quantum associative memory with exponential capacity. In 1998 IEEE International Joint Conference on Neural Networks Proceedings. IEEE World Congress on Computational Intelligence (Cat. No.98CH36227), Vol. 1, 509–513. https://doi.org/10.1109/IJCNN.1998.682319 (1998).

Tacchino, F., Macchiavello, C., Gerace, D. & Bajoni, D. An artificial neuron implemented on an actual quantum processor. npj Quantum Inf. 5, 26. https://doi.org/10.1038/s41534-019-0140-4 (2019).

Preskill, J. Quantum computing in the NISQ era and beyond. Quantum 2, 79. https://doi.org/10.22331/q-2018-08-06-79 (2018).

IBM Quantum. https://quantum-computing.ibm.com/ (2021).

ibmq_qasm_simulator v0.1.547, IBM Quantum team. Retrieved from: https://quantum-computing.ibm.com/ (2021).

Nachman, B., Urbanek, M., de Jong, W. A. & Bauer, C. W. Unfolding quantum computer readout noise. npj Quantum Inf. 6, 84. https://doi.org/10.1038/s41534-020-00309-7 (2020).

Kofman, A. G. & Kurizki, G. Unified theory of dynamically suppressed qubit decoherence in thermal baths. Phys. Rev. Lett. 93, 130406. https://doi.org/10.1103/PhysRevLett.93.130406 (2004).

Torrontegui, E. & García-Ripoll, J. J. Unitary quantum perceptron as efficient universal approximator. EPL (Europhys. Lett.) 125, 30004. https://doi.org/10.1209/0295-5075/125/30004 (2019).

Hu, W. Towards a real quantum neuron. Nat. Sci. 10, 99–109. https://doi.org/10.4236/ns.2018.103011 (2018).

Wiebe, N. & Kliuchnikov, V. Floating point representations in quantum circuit synthesis. New J. Phys. 15, 093041. https://doi.org/10.1088/1367-2630/15/9/093041 (2013).

Córcoles, A. et al. Exploiting dynamic quantum circuits in a quantum algorithm with superconducting qubits. Phys. Rev. Lett. https://doi.org/10.1103/physrevlett.127.100501 (2021).

Cross, A. W., Bishop, L. S., Sheldon, S., Nation, P. D. & Gambetta, J. M. Validating quantum computers using randomized model circuits. Phys. Rev. A 100, 032328. https://doi.org/10.1103/PhysRevA.100.032328 (2019).

ibmq_quito v1.0.4, IBM Quantum team. Retrieved from: https://quantum-computing.ibm.com/ (2021).

ibmq_16_melbourne v2.3.8, IBM Quantum team. Retrieved from: https://quantum-computing.ibm.com/ (2021).

McEliece, R., Posner, E., Rodemich, E. & Venkatesh, S. The capacity of the Hopfield associative memory. IEEE Trans. Inf. Theory 33, 461–482. https://doi.org/10.1109/TIT.1987.1057328 (1987).

Newman, C. M. Memory capacity in neural network models: Rigorous lower bounds. Neural Netw. 1, 223–238. https://doi.org/10.1016/0893-6080(88)90028-7 (1988).

Acknowledgements

We acknowledge the use of IBM Quantum Services for this work. The views expressed are those of the authors, and do not reflect the official policy or position of IBM or the IBM Quantum team.

Author information

Contributions

N.E.M. designed the quantum neuron and QHAM system, performed IBMQ simulations and hardware experiments, and analyzed the data presented in this work. S.M. advised the study and provided valuable insight into the machine learning concepts analyzed in this work. N.E.M. wrote the manuscript and supplemental information text and prepared all figures. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Miller, N.E., Mukhopadhyay, S. A quantum Hopfield associative memory implemented on an actual quantum processor. Sci Rep 11, 23391 (2021). https://doi.org/10.1038/s41598-021-02866-z

DOI: https://doi.org/10.1038/s41598-021-02866-z
