Abstract

SARS-CoV-2 (Severe acute respiratory syndrome coronavirus 2), a virus causing severe acute respiratory disease in humans, emerged in late 2019. This respiratory virus can spread via aerosols, fomites, contaminated hands or surfaces as for other coronaviruses. Studying their persistence under different environmental conditions represents a key step for better understanding the virus transmission. This work aimed to present a reproducible procedure for collecting data of stability and inactivation kinetics from the scientific literature. The aim was to identify data useful for characterizing the persistence of viruses in the food production plants. As a result, a large dataset related to persistence on matrices or in liquid media under different environmental conditions is presented. This procedure, combining bibliographic survey, data digitalization techniques and predictive microbiological modelling, identified 65 research articles providing 455 coronaviruses kinetics. A ranking step as well as a technical validation with a Gage Repeatability & Reproducibility process were performed to check the quality of the kinetics. All data were deposited in public repositories for future uses by other researchers.

Measurement(s) | Decimal reduction time |

Technology Type(s) | Modelling of kinetics |

Factor Type(s) | Temperature • Relative humidity • Genus • Strain |

Sample Characteristic - Organism | Coronaviridae |

Similar content being viewed by others

Background & Summary

The first cases of coronaviruses disease 2019 (COVID-19) due to SARS-CoV-2 were detected in China in December 2019 and spread afterwards quickly around different countries from around January-February 20201. From the first months of viral dissemination, SARS-CoV-2 clusters were observed, in particular in occupational environments for several essential sectors such as health care centres2,3 or food processing plants4,5,6,7. The transmission of coronaviruses among humans was reported as possibly through aerosol (inhalation of aerosolized or falling contaminated droplets) or through contact (hand, objects or surfaces)8,9. Infected persons (symptomatic or asymptomatic) can send out several contaminated droplets that could stay in aerosol, be directly inhaled or fall on surfaces and potentially infect afterwards other persons. However, the changes of the infectious viral load in the contaminated droplets susceptible to be inhaled and to induce disease are not well known in either aerosol or surfaces. Furthermore, the persistence of SARS-CoV-2 depends on environmental conditions that are very different from one occupational location to another10,11. Some factors such as airflow, ventilation, temperature and relative humidity modify the probability of SARS-CoV-2 transmission through respiratory pathways since it can affect droplet movements and virus survival, notably through droplet desiccation12. Some studies reported the influence of few temperature and/or relative humidity conditions. For example, the virus remains infectious for longer periods at lower temperatures and very high relative humidity10, metal surfaces such as stainless steel could allow the virus to remain infectious longer than on others under some specific temperature-humidity conditions13,14. Studies on coronaviruses persistence were generally conducted by experiments in laboratory consisting in monitoring the virus kinetics under controlled conditions. The reduction of virus infectivity over time could be evaluated by fitting inactivation mathematical models on experimental data. Studying the effects of environmental conditions encountered in food premises requires collecting kinetics data as exhaustively as possible to cover large ranges of values for each condition: inert or food surfaces, temperature, relative humidity, experimental quantification method, virus strain etc. To our knowledge, such exhaustive kinetics dataset are not available yet in the literature. It should also be noted that the gathering of data between different studies is not easy. For example, kinetics are frequently plotted in research articles but raw data are not often provided in all of them as quantitative (numerical) values that can be used for further studies of theirs.

Thus, the main goal of this paper was to collect and make available the compilation of a large dataset of quantitative kinetics related to SARS-CoV-2 and other coronaviruses under different conditions useful for assessing and modelling their persistence in food processing environments.

Methods

The overall procedure for collecting and pre-treating literature data is briefly illustrated in Fig. 1. Firstly, a literature review to identify relevant publications presenting kinetics data was carried out. This research was based on a query of scientific bibliographical databases in accordance with the PRISMA guidelines15 (Step 1). The second step consisted in converting raw data from scientific publications (texts, tables or figures) into a ready-to-use numerical dataset with a manual collection for tables or a semi-automated collection after digitalization of figures (Step 2). Afterwards, an inactivation primary model was fitted on each kinetics to estimate the viral infectivity reduction parameter and its uncertainty (Step 3). Finally, a quality-ranking step (Step 4) was performed to evaluate the quality of the data collected from kinetics. The quantitative dataset and the different tools used in this procedure are freely available and detailed in the following sub-sections.

Scoping review

The scoping review is part of a scientific project to describe the persistence of coronaviruses in food production environments. Firstly, a query process was carried out to identify relevant records, using weekly advanced searches on several topics associated with SARS-CoV-2. This weekly literature search was conducted between March 2020 and 25th August 2021 using a combination of keywords related to the main thematic (1) “SARS-CoV-2 and coronavirus” joined by the logical connector AND with one of the following (2a) “Human and food”, (2b) “Water” or (2c) “Environmental persistence”. The keywords used for each theme are specified in Table 1. Studies were collected weekly from two bibliographic search engines PubMed and Scopus (from March 2020 to August 2021) and by query on Frontiers (from November 2020 to August 2021). A date restriction was defined: only publications from the 1st of January 2020 were collected. The search was limited to publications with abstracts written in English. The last query process using those above-mentioned criteria performed on the 25th of August 2021 identified overall 14,267 references exported to EndNote software, after duplicate removal. From this corpus, a thematic filter about “Persistence” was built with a “from group” tools in EndNote software. This filter consisted in selecting articles with “persisten*” “survival” or “stability” in the field “Title–Abstract–Keywords”, joined by the logical connector AND with “environment*”. This search resulted in 418 references. These records were afterwards filtered in accordance with the PRISMA Statement guidelines15 (Fig. 2), using different inclusion/exclusion criteria based on title, abstract and sometimes full-text when needed. The inclusion criteria were (1) studies on persistence on materials, surfaces or aerosols or (2) studies on working environment. The exclusion criteria were (1) studies on therapeutic or vaccine development, (2) studies on untreated wastewater, (3) studies on diet or nutrition (4) language other than English and French, and (5) full text not available. A first screening step identified 82 references for which full papers were read to determine if they were included as fully documented kinetics of persistence data (either in tables or in figures) or used to complete the identification of relevant publications (e.g. in the case of reviews). All the above screening and completion stages identified a final total number of 65 studies in which available raw kinetics data could be extracted from tables and/or figures.

Information associated with kinetics data

The second step consisted in converting raw data from scientific publications into ready-to-use numerical dataset by extracting data from texts, tables and/or using figure digitalization techniques. This step provided several “kinetics”, meaning the tracking of viral titer at different time points under different conditions. Kinetics corresponding to viral genome quantification (e.g. by RT-qPCR techniques) were excluded since such quantification did not represent the viral infectivity. In total, 464 kinetics were available from the 65 identified studies11,13,14,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77 (Tables 2 and 3). It is worth noting that kinetics for coronaviruses other than SARS-CoV-2 collected in these studies were also retained for analyses.

Several information associated with the above kinetics were gathered in an Excel spreadsheet (more details in the Data records section). For each identified kinetics, we assigned a unique kinetics key. The virus Strains, Species, Subgenus and Genus were indicated for each kinetics, as well as other conditions such as the nature of the materials (stainless steel, plastic, paper,…), the medium (liquid media, aerosol, porous and non-porous surfaces), temperature and relative humidity (expressed in range and or median value), pH (if available) etc. Other information was also indicated for each kinetics such as the initial virus load, the type of cell used for infectious viral titration (Vero, etc.) or the number of experimental replicates. Table 2 provides an overview of the extracted kinetics from SARS-CoV-2 studies, the Table 3 from studies for other coronaviruses.

Data extraction

The persistence kinetics data were taken from texts, tables or figures in the research papers identified above. Data from tables or texts were manually filled into the database. Raw data from figures were extracted using the R package metadigitize78. This tool provided the possibility to process simultaneously many figures, as well as reproducibility (e.g., correcting the digitalized data, sharing digitalization). For reproducibility purposes, all files associated with raw digitalizations of our study are provided and detailed in the Data records section. The monitored time points extracted from all studies (extractions from tables or figures digitalization) were converted and expressed in hours in order to homogenize for comparative purposes between studies.

Viral infectivity reduction parameter

The third step aimed to estimate a parameter to characterize the viral infectivity reduction for each kinetics (condition). This parameter, denoted D and expressed in hour, characterized the decimal log reduction time and was estimated by fitting a primary inactivation model on the extracted data79. The value of D corresponding to the inverse of the slope from a linear model10,71 was written as follows:

with N0 and N corresponding to the number of infectious viruses at the initial time point and the time point t (expressed in hour), respectively. The model was fitted independently on each kinetics using the function nls() from the R package nlstools80 running with the ‘nl2sol’ algorithm from the Port library81. The starting parameter values necessary for the fitting algorithm was set depending on the kinetics curves or optimized using the R package nls282 in some rare cases of non-convergence. For each kinetics, a value of D was estimated as well as its uncertainty expressed by standard error value SE. The value of log10 D was computed accordingly for each kinetics. Finally, the coefficient of variation was calculated as the proportion: CV=SE/D.

Evaluation of kinetics quality

In this work, kinetics data were collected from different publications in which the laboratory experiments were not conducted with the same design. These data represented then important variabilities, e.g. in terms of number of time points, replicates, etc. Therefore, criteria can be useful to evaluate and classify the quality of the collected kinetics. Indeed, the quality of raw data was susceptible to influence the statistical estimation of D. Criteria-based ranking approaches have been proposed in some predictive microbiology studies aiming to deal with difficulties in terms of data selection (inclusion or exclusion for modelling)83,84. Herein, for the establishment of a quality score by kinetics, we considered three criteria: (i) the number of the time points of the kinetics; (ii) the importance of the extracted point considering if it represented a single value or multiple measure (i.e. at least two technical replicates); and (iii) the value of the coefficient of variation (CV) of the estimated value of D, characterizing the fit quality of the inactivation model. For each kinetics, we attributed three scores corresponding to these three criteria and classified them into different categories. It is worth noting that ones can arbitrarily define the threshold values separating these categories as well as the given corresponding score values depending on the studies and the extracted dataset. In our work, for each kinetics, the score associated with the number of time points, denoted s1, was firstly defined as follows:

where nt corresponds to the number of time points collected from the kinetics.

The score s2, based on the importance of points (‘unique’ or ‘multiple’), was defined as follows:

The score s3, based on the coefficient of variation CVi of the kinetics i, was given as follows:

or s3 = 1 for some kinetics for which standard errors and coefficients of variation could not be computed (kinetics with only two points).

Finally, a global score S taking into account all criteria was calculated for each kinetics:

The calculated scores for all kinetics are gathered in Spreadsheets and R Data objects provided in the Data Records section.

Data Records

Intermediate data: Figure digitalization raw files

The digitalized source figures (jpg or png image files) were used in the second step of the collection procedure (see Fig. 1). The digitalization were carried using the R package metaDigitise78 that automatically created the directory denoted ‘caldat’ containing raw digitalization files to assure traceability and also to avoid re-doing manual digitalization at every run of the procedure. Raw digitalization files generated by metaDigitise were automatically renamed like their corresponding image files. All sources figures and digitalization raw files used herein are provided in a data repository85.

Input and output quantitative data spreadsheet

Output spreadsheets were obtained at the end of the overall collection procedure under the Excel and CSV file formats (see Fig. 1) (“DataRecord_OutputData.xlsx” and “DataRecord_OutputData.csv”).

All information reported from publications (experimental conditions, figure sources, publication references, DOI, etc.) related to each kinetics used as input, as well as the corresponding estimated values as described in the Method section (D, coefficient de variation, scores, etc.) are present85. Each row represents a kinetics, each column is completed, when available, by qualitative and quantitative variables, as follows:

-

ID of each kinetics (Kinetics key), denoted for example: ‘K001’, ‘K002’, etc.;

-

ID of each study (Study key);

-

Studied viruses and their classification: Genus, Sub-genus, Virus, Strain;

-

Temperature considered in the experimental design: temperatures were gathered by precise values reported from the publication if available (column Temperature) or by ranges (column Temperature range) in which case its median values were considered (e.g. 23.5 °C reported in Temperature for a range of 22–25 °C given in the publications);

-

Relative humidity (columns Relative humidity and Relative humidity range) considered in the experimental design: as for temperatures, RH were reported as precise values and/or ranges;

-

pH values (column pH) if available;

-

Information related to the matrices sorted in three columns:

-

(i) the studied matrices (Studied matrices – fully named) in as described in publications, with some details;

-

(ii) the standardized matrices (column Standardized matrices), is a practical annotation to class similar matrices, such as liquid medium, stainless steel, etc.; and

-

(iii) the medium grouping the above matrices as four classes, which are “liquid media”, “porous surface”, “non-porous surface” or “aerosol” (column Medium);

-

-

Information related to the kinetics monitoring methods including:

-

(i) the quantification method (column Quantification method) indicating the experimental techniques such as viral infectivity assays by different cell types;

-

(ii) the used inoculum (column Inoculum) and

-

(iii) the replicate (column Replicate) indicating if the monitored kinetics were extracted as unique time points or multiple ones;

-

-

Sources of kinetics including

-

the bibliographic references (column References);

-

the name of tables or figures (column Table or Figure of the study) in the original publication where the kinetics raw data were transcribed or digitalized and

-

the corresponding file names of these tables and figures (column Re-transcribed tables or digitalized figures) provided in Data records allowing their re-use by other researchers;

-

-

Total number of points (column nb_points) extracted from each kinetics;

-

Different estimated values for all kinetics collected in the present study as described in the Method section:

-

(i) values of D (column Dvalues)

-

(ii) its standard error (column Dvalues_stderr) and

-

(iii) the coefficient of variation (column Dvalues_CV);

-

(iv) the decimal log of D (column log10D)

-

-

For some kinetics and for comparison purposes, the estimated values of log10D previously estimated using another modelling approach10 (column log10D_AEM);

-

The scores given to each kinetics, including s1, s2 and s3 as well as the global score S (columns s1, s2, s3 and S, respectively).

Input and output as RData object

All input used and output obtained at the end of the collection workflow is also provided as a ready-to-use RData object (DATASET.RData)85. From this RData object, when opened in R/RStudio softwares, one can extract:

-

the input and output data spreadsheet described above (object DATASET);

-

raw data (from tables or figures) associated with the monitoring of each kinetics (measured values at each sampling time points), only the data above LOQ are recorded (object kinetics_rawdata);

-

regression plots (inactivation linear model, see Method section) generated for each kinetics (object regplot). These regression plots were also exported as PDF files provided (output_adjusted_kinetics). The pattern of inactivation kinetics (increasing or decreasing) may be different depending on the unit used by the authors (e.g. logTCID50/ml, \({\rm{\log }}(\frac{{N}_{t}}{{N}_{0}})\), viral titer reduction in percentage, etc).

Technical Validation

The technical validation focused on the figures’ digitalization step, since the latter remained a manual work that could probably vary from one user to another. In order to check the quality of the data collected by digitalization, this step was re-conducted repeatedly by three independent users for evaluating its repeatability and its reproducibility. This checking procedure was performed on a random sample of eleven kinetics among those collected, and each kinetics was digitalized three times per user. The values of the parameter D were afterwards estimated as described in previous sections. The comparison between the values of D estimated by different users was firstly done by fitting the major axis regression model on bootstrap data generated for each pair of users86,87. Afterwards, the Gage R&R tool from the R package SixSigma88,89 was used in order to identify and quantify the error parts in the estimated values of log_10 D due to the user repeatability as well as the between user reproducibility, respectively. The R scripts and data associated with the technical validation procedure are provided (see the ‘Code Availability’ section below).

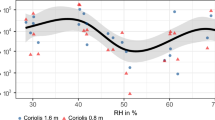

As illustration, the comparison between users (denoted user 1, 2 and 3, respectively) by major axis regression is plotted in Fig. 3 (user 1, plotted in the X-axis, was arbitrarily chosen herein as the reference one for comparison). The results of the Gage R&R analysis showed a good repeatability: the latter estimated a very low error part due to intra-user variation, estimated at only 0.01% of the overall variation. The error part due to the between-users variation was estimated at 1.5%. After confrontation between experimenters, this part of inter-users error can be explained by the difficulties for choosing the points to be digitalized. Indeed points below the limit of quantification (LOQ) should not be included for avoiding bias. This choice could then strongly influence the estimation of log10 D as illustrated in Fig. 3, since this parameter conditioned the slope of the linear model fitted to the chosen data points. Yet, in many articles, the information LOQ is not provided. In such conditions, it is up to scientist in charge of digitalization to include/exclude points to be included. This is prone to introduce uncertainty especially for points corresponding to the end of the experiment. In view of this user-dependent choice, in the present study, we provided then all raw digitalization files that be imported, re-used or modified by other users if needed according to their expertise.

Usage Notes

Re-use of figure digitalization files

The digitalization step were done using the R package metaDigitise78 providing reproducible and flexible tools for tracing every digitalization. In practice, the digitalizations were done using R commands (check the R scripts provided in the Code Availability section) allowing users to process the different image files ready-to-digitalize. This process consisted, for each image (plot), to click manually on the different chosen points of the image to calibrate the plotted axes and convert afterwards the different clicked points (from curves, barplots, etc.) to numerical values saved in R object. The different groups of points can be assigned with user-defined group names in order to separate different kinetics from the same image if necessary. For each digitalized image, a digitalization file is automatically generated in a specific directory, denoted ‘caldat’ to ensure the traceability of this manual step. Indeed, such a file can give the possibility, using R commands, to import the numerical values already digitalized and/or edit/recalibrate some values/points by other users if needed without having to re-process the whole image .

Code availability

All data records files and R scripts used for the data collection procedure are schematized in Fig. 4 and available at online repositories85: https://github.com/lguillier/SACADA_Database and https://zenodo.org/record/6572948#.YouM3ajP3tR.

The database (bibliographic references) was also extracted as RIS and BIB files for open-source software.

References

Spiteri, G. et al. First cases of coronavirus disease 2019 (COVID-19) in the WHO European Region, 24 January to 21 February 2020. Euro Surveill 25, https://doi.org/10.2807/1560-7917.ES.2020.25.9.2000178 (2020).

Magnusson, K., Nygård, K., Methi, F., Vold, L. & Telle, K. Occupational risk of COVID-19 in the first versus second epidemic wave in Norway, 2020. Euro Surveill 26, https://doi.org/10.2807/1560-7917.es.2021.26.40.2001875 (2021).

Airoldi, C. et al. Seroprevalence of SARS-CoV-2 Among Workers in Northern Italy. Annals of work exposures and health, https://doi.org/10.1093/annweh/wxab062 (2021).

Mallet, Y. et al. Identification of Workers at Increased Risk of Infection During a COVID-19 Outbreak in a Meat Processing Plant, France, May 2020. Food and environmental virology, 1–9, https://doi.org/10.1007/s12560-021-09500-1 (2021).

Dyal, J. W. et al. COVID-19 Among Workers in Meat and Poultry Processing Facilities - 19 States, April 2020. MMWR. Morbidity and mortality weekly report 69, https://doi.org/10.15585/mmwr.mm6918e3 (2020).

Middleton, J., Reintjes, R. & Lopes, H. Meat plants-a new front line in the covid-19 pandemic. BMJ (Clinical research ed.) 370, m2716, https://doi.org/10.1136/bmj.m2716 (2020).

Steinberg, J. et al. COVID-19 Outbreak Among Employees at a Meat Processing Facility - South Dakota, March-April 2020. MMWR. Morbidity and mortality weekly report 69, 1015–1019, https://doi.org/10.15585/mmwr.mm6931a2 (2020).

Kutter, J. S., Spronken, M. I., Fraaij, P. L., Fouchier, R. A. & Herfst, S. Transmission routes of respiratory viruses among humans. Curr Opin Virol 28, 142–151, https://doi.org/10.1016/j.coviro.2018.01.001 (2018).

Chu, D. K. et al. Physical distancing, face masks, and eye protection to prevent person-to-person transmission of SARS-CoV-2 and COVID-19: a systematic review and meta-analysis. The Lancet 395, 1973–1987, https://doi.org/10.1016/s0140-6736(20)31142-9 (2020).

Guillier, L. et al. Modeling the inactivation of viruses from the Coronaviridae family in response to temperature and relative humidity in suspensions or on surfaces. Applied and Environmental Microbiology 86, https://doi.org/10.1128/AEM.01244-20 (2020).

Morris, D. H. et al. Mechanistic theory predicts the effects of temperature and humidity on inactivation of SARS-CoV-2 and other enveloped viruses. Elife 10, https://doi.org/10.7554/eLife.65902 (2021).

Nicas, M., Nazaroff, W. W. & Hubbard, A. Toward understanding the risk of secondary airborne infection: emission of respirable pathogens. J Occup Environ Hyg 2, 143–154, https://doi.org/10.1080/15459620590918466 (2005).

van Doremalen, N. Aerosol and Surface Stability of SARS-CoV-2 as Compared with SARS-CoV-1. The New England journal of medicine, https://doi.org/10.1056/NEJMc2004973 (2020).

Chin, A. W. H. et al. Stability of SARS-CoV-2 in different environmental conditions. The Lancet Microbe 1, https://doi.org/10.1016/s2666-5247(20)30003-3 (2020).

Moher, D., Liberati, A., Tetxlaff, J., Altman, D. G. & Group, P. Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. Annals of Internal Medicine 151, 264–270 (2009).

Leclercq, I., Batejat, C., Burguiere, A. M. & Manuguerra, J. C. Heat inactivation of the Middle East respiratory syndrome coronavirus. Influenza Other Respir Viruses 8, 585–586, https://doi.org/10.1111/irv.12261 (2014).

Darnell, M. E., Subbarao, K., Feinstone, S. M. & Taylor, D. R. Inactivation of the coronavirus that induces severe acute respiratory syndrome, SARS-CoV. J Virol Methods 121, 85–91, https://doi.org/10.1016/j.jviromet.2004.06.006 (2004).

Rabenau, H. F. et al. Stability and inactivation of SARS coronavirus. Med Microbiol Immunol 194, 1–6, https://doi.org/10.1007/s00430-004-0219-0 (2005).

Laude, H. Thermal inactivation studies of a Coronavirus, transmissible gastroenteritis virus. J. gen. Virol. 56, 235–240, https://doi.org/10.1099/0022-1317-56-2-235 (1981).

Casanova, L. M., Jeon, S., Rutala, W. A., Weber, D. J. & Sobsey, M. D. Effects of air temperature and relative humidity on coronavirus survival on surfaces. Appl Environ Microbiol 76, 2712–2717, https://doi.org/10.1128/AEM.02291-09 (2010).

Casanova, L., Rutala, W. A., Weber, D. J. & Sobsey, M. D. Survival of surrogate coronaviruses in water. Water Res 43, 1893–1898, https://doi.org/10.1016/j.watres.2009.02.002 (2009).

Bucknall, R., King, L., Kapikian, A. & Chanock, R. Studies With Human Coronaviruses 11. Some Properties of Strains 22YE and OC43 (36224). P.S.E.B.M 139, 10.3181%2F00379727-139-36224 (1972).

Van Doremalen, N., Bushmaker, T. & Munster, V. J. Stability of Middle East respiratory syndrome coronavirus (MERS-CoV) under different environmental conditions. Euro Surveill 18, 10.2807/1560-7917.es2013.18.38.20590 (2013).

Chan, K. H. et al. The Effects of Temperature and Relative Humidity on the Viability of the SARS Coronavirus. Adv Virol 2011, 734690, https://doi.org/10.1155/2011/734690 (2011).

Lai, M. Y. Y., Cheng, P. K. C. & Lim, W. W. L. Survival of Severe Acute Respiratory Syndrome Coronavirus. Infectious Diseases Society of America 41, 67–71, https://doi.org/10.1086/433186 (2005).

Warnes, S. L., Little, Z. R. & Keevil, C. W. Human Coronavirus 229E Remains Infectious on Common Touch Surface Materials. mBio 6, e01697–01615, https://doi.org/10.1128/mBio.01697-15 (2015).

Batéjat, C., Grassin, Q., Manuguerra, J.-C. & Leclercq, I. Heat inactivation of the Severe Acute Respiratory Syndrome Coronavirus 2. Journal of biosafety and biosecurity 3, 1–3, https://doi.org/10.1016/j.jobb.2020.12.001 (2021).

Christianson, K. K., Ingersoll, J. D., Landon, R. M., Pfeiffer, N. E. & Gerber, J. D. Characterization of a temperature sensitive feline infectious peritonitis coronavirus. Archives of Virology 109, 185–196, https://doi.org/10.1007/BF01311080 (1989).

Pagat, A.-M. et al. Evaluation of SARS-Coronavirus Decontamination Procedures. Applied Biosafety 12, 100–108, https://doi.org/10.1177/153567600701200206 (2007).

Gundy, P. M., Gerba, C. P. & Pepper, I. L. Survival of Coronaviruses in Water and Wastewater. Food and environmental virology 1, https://doi.org/10.1007/s12560-008-9001-6 (2008).

Ye, Y., Ellenberg, R. M., Graham, K. E. & Wigginton, K. R. Survivability, Partitioning, and Recovery of Enveloped Viruses in Untreated Municipal Wastewater. Environ Sci Technol 50, 5077–5085, https://doi.org/10.1021/acs.est.6b00876 (2016).

Lamarre, A. & Talbot, P. J. Effect of pH and temperature on the infectivity of human coronavirus 229E. Canadian Journal of Microbiology 35, 972–974, https://doi.org/10.1139/m89-160 (1989).

Hofmann, M. & Wyler, R. Quantitation, Biological and Physicochemical Properties of Cell Culture-adapted Porcine Epidemic Diarrhea Coronavirus (PEDV). Veterinary Microbiology 20, 131–142, https://doi.org/10.1016/0378-1135(89)90036-9 (1989).

Quist-Rybachuk, G. V., Nauwynck, H. J. & Kalmar, I. D. Sensitivity of porcine epidemic diarrhea virus (PEDV) to pH and heat treatment in the presence or absence of porcine plasma. Vet Microbiol 181, 283–288, https://doi.org/10.1016/j.vetmic.2015.10.010 (2015).

Hulst, M. M. et al. Study on inactivation of porcine epidemic diarrhoea virus, porcine sapelovirus 1 and adenovirus in the production and storage of laboratory spray-dried porcine plasma. J Appl Microbiol 126, 1931–1943, https://doi.org/10.1111/jam.14235 (2019).

Kariwa, H., Fujii, N. & Takashima, I. Inactivation of SARS coronavirus by means of povidone-iodine, physical conditions and chemical reagents. Dermatology 212(Suppl 1), 119–123, https://doi.org/10.1159/000089211 (2006).

Mullis, L., Saif, L. J., Zhang, Y., Zhang, X. & Azevedo, M. S. Stability of bovine coronavirus on lettuce surfaces under household refrigeration conditions. Food Microbiol 30, 180–186, https://doi.org/10.1016/j.fm.2011.12.009 (2012).

Ahmed, W. et al. Decay of SARS-CoV-2 and surrogate murine hepatitis virus RNA in untreated wastewater to inform application in wastewater-based epidemiology. Environmental research 191, 110092, https://doi.org/10.1016/j.envres.2020.110092 (2020).

Behzadinasab, S., Chin, A., Hosseini, M., Poon, L. & Ducker, W. A. A Surface Coating that Rapidly Inactivates SARS-CoV-2. ACS applied materials & interfaces 12, 34723–34727, https://doi.org/10.1021/acsami.0c11425 (2020).

Biryukov, J. et al. Increasing Temperature and Relative Humidity Accelerates Inactivation of SARS-CoV-2 on Surfaces. mSphere 5, https://doi.org/10.1128/mSphere.00441-20 (2020).

Bivins, A. et al. Persistence of SARS-CoV-2 in Water and Wastewater. Environmental Science and Technology Letters 7, 937–942, https://doi.org/10.1021/acs.estlett.0c00730 (2020).

Blondin-Brosseau, M., Harlow, J., Doctor, T. & Nasheri, N. Examining the persistence of human Coronavirus 229E on fresh produce. Food Microbiol 98, 103780, https://doi.org/10.1016/j.fm.2021.103780 (2021).

Bonny, T. S., SYezli, S. & Lednicky, J. A. Isolation and identification of human coronavirus 229E fromfrequently touched environmental surfaces of a university classroomthat is cleaned daily. American Journal of Infection Control 46, 105–107, https://doi.org/10.1016/j.ajic.2017.07.014 (2018).

Casanova, L., Rutala, W. A., Weber, D. J. & Sobsey, M. D. Coronavirus survival on healthcare personal protective equipment. Infection Control and Hospital Epidemiology 31, 560–561, https://doi.org/10.1086/652452 (2010).

Chan, K. H. et al. Factors affecting stability and infectivity of SARS-CoV-2. J. Hosp. Infect. 106, 226–231, https://doi.org/10.1016/j.jhin.2020.07.009 (2020).

Dai, M. et al. Long-term survival of salmon-attached SARS-CoV-2 at 4 °C as a potential source of transmission in seafood markets. The Journal of infectious diseases 223, 537–539, https://doi.org/10.1093/infdis/jiaa712 (2021).

De Rijcke, M., Shaikh, H. M., Mees, J., Nauwynck, H. & Vandegehuchte, M. B. Environmental stability of porcine respiratory coronavirus in aquatic environments. PloS one 16, e0254540, https://doi.org/10.1371/journal.pone.0254540 (2021).

Fukuta, M., Mao, Z. Q., Morita, K. & Moi, M. L. Stability and Infectivity of SARS-CoV-2 and Viral RNA in Water, Commercial Beverages, and Bodily Fluids. Front. Microbiol. 12, https://doi.org/10.3389/fmicb.2021.667956 (2021).

Grinchuk, P. S., Fisenko, K. I., Fisenko, S. P. & Danilova-Tretiak, S. M. Isothermal evaporation rate of deposited liquid aerosols and the sars-cov-2 coronavirus survival. Aerosol and Air Quality Research 21, 1–9, https://doi.org/10.4209/aaqr.2020.07.0428 (2021).

Harbourt, D. E. et al. Modeling the stability of severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) on skin, currency, and clothing. PLoS Negl Trop Dis 14, e0008831, https://doi.org/10.1371/journal.pntd.0008831 (2020).

Huang, S. Y. et al. Stability of SARS-CoV-2 Spike G614 Variant Surpasses That of the D614 Variant after Cold Storage. mSphere 6, https://doi.org/10.1128/mSphere.00104-21 (2021).

Kasloff, S. B., Strong, J. E., Funk, D. & Cutts, T. Stability of SARS-CoV-2 on Critical Personal Protective Equipment, https://doi.org/10.1101/2020.06.11.20128884 (2020).

Kratzel, A. et al. Temperature-dependent surface stability of SARS-CoV-2. Journal of Infection 81, 452–482, https://doi.org/10.1016/j.jinf.2020.05.066 (2020).

Kwon, T., Gaudreault, N. N. & Richt, J. A. Environmental stability of sars‐cov‐2 on different types of surfaces under indoor and seasonal climate conditions. Pathogens 10, 1–8, https://doi.org/10.3390/pathogens10020227 (2021).

Lee, Y. J., Kim, J. H., Choi, B. S., Choi, J. H. & Jeong, Y. I. Characterization of Severe Acute Respiratory Syndrome Coronavirus 2 Stability in Multiple Water Matrices. Journal of Korean medical science 35, e330, https://doi.org/10.3346/jkms.2020.35.e330 (2020).

Liu, Y. et al. Stability of SARS-CoV-2 on environmental surfaces and in human excreta. J Hosp Infect 107, 105–107, https://doi.org/10.1016/j.jhin.2020.10.021 (2021).

Malenovská, H. Coronavirus Persistence on a Plastic Carrier Under Refrigeration Conditions and Its Reduction Using Wet Wiping Technique, with Respect to Food Safety. Food and environmental virology, 1–6, https://doi.org/10.1007/s12560-020-09447-9 (2020).

Magurano, F. et al. SARS-CoV-2 infection: the environmental endurance of the virus can be influenced by the increase of temperature. Clinical Microbiology and Infection 27, 289.e285–289.e287, https://doi.org/10.1016/j.cmi.2020.10.034 (2021).

Matson, J. M. et al. Effect of Environmental Conditions on SARS-CoV-2 Stability in Human Nasal Mucus and Sputum. Emerging Infectious Diseases 26, 2276–2278, https://doi.org/10.1007/s13312-020-1851-5 (2020).

Norouzbeigi, S. et al. Stability of severe acute respiratory syndrome coronavirus 2 in dairy products. Journal of Food Safety, https://doi.org/10.1111/jfs.12917 (2021).

OCLC. REALM Project - Test 2: Natural attenuation as a decontamination approach for SARS-CoV-2 on five paper-based library and archives materials. (2020).

OCLC. REALM Project - Test 3: Natural attenuation as a decontamination approach for SARS-CoV-2 on five plastic-based materials. (2020).

OCLC. REALM Project - Test 4: Natural attenuation as a decontamination approach for SARSCoV-2 on stacked library materials and expanded polyethylene foam. (2020).

OCLC. REALM Project - Test 5: Natural attenuation as a decontamination approach for SARS-CoV-2 on textile materials. (2020).

OCLC. REALM Project - Test 6: Natural attenuation as a decontamination approach for SARS-CoV-2 on building materials. (2020).

OCLC. REALM Project - Test 7 and 8: Natural attenuation as a decontamination approach for SARS-CoV-2 on materials at various temperatures. (2020).

Owen, L., Shivkumar, M. & Laird, K. The Stability of Model Human Coronaviruses on Textiles in the Environment and during Health Care Laundering. mSphere 6, https://doi.org/10.1128/mSphere.00316-21 (2021).

Pastorino, B., Touret, F., Gilles, M., de Lamballerie, X. & Charrel, R. N. Prolonged Infectivity of SARS-CoV-2 in Fomites. Emerging Infectious Diseases, 2256–2257, https://doi.org/10.5217/ir.2020.00084 (2020).

Paton, S. et al. Persistence of Severe Acute Respiratory Syndrome Coronavirus 2 (SARS-CoV-2) Virus and Viral RNA in Relation to Surface Type and Contamination Concentration. Appl Environ Microbiol 87, e0052621, https://doi.org/10.1128/aem.00526-21 (2021).

Pottage, T. et al. A Comparison of Persistence of SARS-CoV-2 Variants on Stainless Steel. J Hosp Infect https://doi.org/10.1016/j.jhin.2021.05.015 (2021).

Riddell, S., Goldie, S., Hill, A., Eagles, D. & Drew, T. W. The effect of temperature on persistence of SARS-CoV-2 on common surfaces. Virology Journal 17, https://doi.org/10.1186/s12985-020-01418-7 (2020).

Ronca, S. E., Sturdivant, R. X., Barr, K. L. & Harris, D. SARS-CoV-2 Viability on 16 Common Indoor Surface Finish Materials. Health Environments Research and Design Journal https://doi.org/10.1177/1937586721991535 (2021).

Saknimit, M., Inatsuki, I., Sugiyama, Y. & Yagami, K.-I. Autopsies and Asymptomatic Patients During the COVID-19 Pandemic: Balancing Risk and Reward. Exp. Anim. 37, 341–345, https://doi.org/10.3389/fpubh.2020.595405 (1988).

Sizun, J., Yu, M. W. N. & Talbot, P. J. Survival of human coronaviruses 229E and OC43 in suspension and after drying on surfaces: a possible source of hospital-acquired infections. J. Hosp. Infect. 46, 55–60 (2020). 10.1053.jhin.2000.0795.

Smither, S. J., Eastaugh, L. S., Findlay, J. S. & Lever, M. S. Experimental aerosol survival of SARS-CoV-2 in artificial saliva and tissue culturemedia at medium and high humidity. Emerging Microbes & Infections 9, 1415–1417, https://doi.org/10.1080/22221751.2020.1777906 (2020).

Szpiro, L. et al. Role of interfering substances in the survival of coronaviruses on surfaces and their impact on the efficiency of hand and surface disinfection. https://doi.org/10.1101/2020.08.22.20180042 (2020).

Sala-Comorera, L. et al. Decay of infectious SARS-CoV-2 and surrogates in aquatic environments. Water Res 201, 117090, https://doi.org/10.1016/j.watres.2021.117090 (2021).

Reproducible, flexible and high-throughput data extraction from primary literature: The metaDigitise R package. (Biorxiv, 2018).

Katzin, L. I., Sandholzer, L. A. & Strong, M. E. Application of the Decimal Reduction Time Principle to a Study of the Resistance of Coliform Bacteria to Pasteurization. Journal of Bacteriology 45, 265–272 (1943).

Baty, F. et al. A Toolbox for Nonlinear Regression in R: The Package nlstools. Journal of Statistical Software 66 (2015).

NL2SOL - An Adaptive Nonlinear Least-Squares Algorithm (ACM Transactions on Mathematical Software, 1981).

nls2: Non-linear regression with brute force (2015).

Pujol, L. et al. Establishing equivalence for microbial-growth-inhibitory effects (“iso-hurdle rules”) by analyzing disparate listeria monocytogenes data with a gamma-type predictive model. Appl Environ Microbiol 78, 1069–1080, https://doi.org/10.1128/AEM.06691-11 (2012).

Guillier, L. Predictive Microbiology Models and Operational Readiness. Procedia Food Science 7, 133–136, https://doi.org/10.1016/j.profoo.2016.05.003 (2016).

Luong, N-D., Chaix, E. & Guillier, L. lguillier/SACADA_Database: v1.3_Dataset_Accepted, Zenodo, https://doi.org/10.5281/zenodo.7158240 (2022).

Warton, D. I., Duursma, R. A., Falster, D. S. & Taskinen, S. smatr 3 - an R package for estimation and inference about allometric lines. Methods in Ecology and Evolution 3, 257–259 (2012).

Modelling Functions that Work with the Pipe v. 0.1.8 (2020).

Cano, E., Moguerza, J. & Redchuk, A. Six Sigma with R. (Springer, 2012).

Cano, E., Moguerza, J. & Corcoba, M. Quality Control with R. (Springer, 2015).

Grinchuk, P. S., Fisenko, K. I., Fisenko, S. P. & Danilova-Tretiak, S. M. Isothermal Evaporation Rate of Deposited Liquid Aerosols and the SARS-CoV-2 Coronavirus Survival. Aerosol and Air Quality Research 21, https://doi.org/10.4209/aaqr.2020.07.0428 (2021).

Norouzbeigi, S. et al. Stability of SARS-CoV-2 as consequence of heating and microwave processing in meat products and bread. Food Science and Nutrition https://doi.org/10.1002/fsn3.2481 (2021).

Acknowledgements

This research was funded by the French Research National Agency (ANR) in the framework of the SACADA Project (ANR-21-CO13-0001). The authors are grateful to collaborators of the SACADA consortium: Géraldine Boué, Steven Duret, Michel Federighi, Maxence Feher, Lisa Fourniol, Yvonnick Guillois, Pauline Kooh, Sophie Le Poder, Anne-Laure Maillard, Jean-Claude Manuguerra, Mathilde Pivette, Jessica Vanhomwegen, Prunelle Waldman.

Author information

Authors and Affiliations

Contributions

Conceptualization: E. Chaix, L. Guillier, N.-D. M. Luong; Methodology: E. Chaix, L. Guillier, N.-D. M. Luong, M. Sanaa; Software: L. Guillier, N.-D. M. Luong; Formal analysis: E. Chaix, L. Guillier, N.-D. M. Luong; Investigation: C. Batéjat, E. Chaix, L. Guillier, I. Leclercq, N.-D. M. Luong, S. Martin-Latil, M. Sanaa; Data curation: E. Chaix, C. Druesne, L. Guillier, N.-D. M. Luong; Write – original draft preparation: E. Chaix, L. Guillier, N.-D. M. Luong; Writing – review and editing: C. Batéjat, E. Chaix, C. Druesne, L. Guillier, I. Leclercq, N.-D. M. Luong, S. Martin-Latil, M. Sanaa; Supervision: E. Chaix, L. Guillier, M. Sanaa; Project administration: E. Chaix, S. Martin-Latil, M. Sanaa; Funding acquisition: E. Chaix, M. Sanaa.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Luong, ND.M., Guillier, L., Martin-Latil, S. et al. Database of SARS-CoV-2 and coronaviruses kinetics relevant for assessing persistence in food processing plants. Sci Data 9, 654 (2022). https://doi.org/10.1038/s41597-022-01763-y

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41597-022-01763-y