Abstract

Minimizing and understanding errors is critical for quantum science, both in noisy intermediate scale quantum (NISQ) devices1 and for the quest towards fault-tolerant quantum computation2,3. Rydberg arrays have emerged as a prominent platform in this context4 with impressive system sizes5,6 and proposals suggesting how error-correction thresholds could be significantly improved by detecting leakage errors with single-atom resolution7,8, a form of erasure error conversion9,10,11,12. However, two-qubit entanglement fidelities in Rydberg atom arrays13,14 have lagged behind competitors15,16 and this type of erasure conversion is yet to be realized for matter-based qubits in general. Here we demonstrate both erasure conversion and high-fidelity Bell state generation using a Rydberg quantum simulator5,6,17,18. When excising data with erasure errors observed via fast imaging of alkaline-earth atoms19,20,21,22, we achieve a Bell state fidelity of \(\ge 0.997{1}_{-13}^{+10}\), which improves to \(\ge 0.998{5}_{-12}^{+7}\) when correcting for remaining state-preparation errors. We further apply erasure conversion in a quantum simulation experiment for quasi-adiabatic preparation of long-range order across a quantum phase transition, and reveal the otherwise hidden impact of these errors on the simulation outcome. Our work demonstrates the capability for Rydberg-based entanglement to reach fidelities in the 0.999 regime, with higher fidelities a question of technical improvements, and shows how erasure conversion can be utilized in NISQ devices. These techniques could be translated directly to quantum-error-correction codes with the addition of long-lived qubits7,22,23,24.

Main

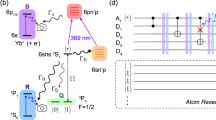

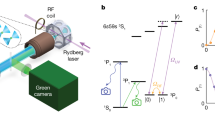

We begin by detailing our erasure-conversion scheme and how it is used in conjunction with Bell state generation, resulting in fidelities that are competitive with other state-of-the-art platforms15,16,25,26. Our experimental apparatus has been described in detail before13 and is based on trapping individual strontium atoms in arrays of optical tweezers19,20 (Methods). Strontium has a rich level structure, which allows us to use certain energy levels as a qubit subspace for entangling operations and separate levels for detecting leakage errors (Fig. 1a).

a, Level structure used in this work. We distinguish two subspaces: a qubit subspace in which the atoms interact via their Rydberg states and a measurement subspace used to detect leakage errors from the qubit subspace with single-site resolution, realizing erasure conversion. b, Sketch of the erasure-conversion scheme, as applied to Bell pair generation. After arranging atoms into pairs (top), we prepare them in \(\left|g\right\rangle \) and entangle them via the Rydberg blockade mechanism (right), denoted by a unitary operation \(\widehat{U}(t)\). Immediately afterwards, we auto-ionize atoms in \(\left|r\right\rangle \), effectively projecting the populations of the Bell states, and follow with a fast erasure-conversion image to detect leakage out of the qubit subspace during the preparation or evolution periods. This is followed by the final detection of atoms in \(\left|g\right\rangle \), yielding two separate, independent images. We can discard data from pairs where atoms are detected in the erasure-error image, termed erasure excision in the following. Atom fluorescence images are single shot, with post-processing applied to improve detection fidelity30 (Methods). c, Lower bounds for Bell state fidelities with (blue) and without (pink) erasure excision, and using incoherent repumping to reduce preparation errors instead of erasure excision (green; Methods). We present the results for the raw data, corrected for measurement errors and corrected for SPAM errors. All data are averaged over eight pairs of atoms that are excited in parallel. Error bars represent a 68% confidence interval (Extended Data Fig. 5 and Methods).

To controllably generate entanglement between atoms, we use Rydberg interactions27,28,29. When two atoms in close proximity are simultaneously excited to high-lying electronic energy levels, called Rydberg states, they experience a distance-dependent van der Waals interaction \(V={C}_{6}/{r}^{6}\), where r is the interatomic spacing and \({C}_{6}\) is an interaction coefficient. If the Rabi frequency, Ω, which couples the ground, \(\left|g\right\rangle \), and Rydberg, \(\left|r\right\rangle \), states, is much smaller than the interaction shift, Ω/V ≪ 1, the two atoms cannot be simultaneously excited to the Rydberg state (Fig. 1b, inset), a phenomenon known as Rydberg blockade. In this regime, the laser drives a unitary operation, \(\widehat{U}(t)\), that naturally results in the two atoms forming a Bell state, \(\left|{\varPsi }^{+}\right\rangle =\frac{1}{\sqrt{2}}(\left|gr\right\rangle +\left|rg\right\rangle )\), between the ground and Rydberg states (Fig. 1b).
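As an illustration of the blockade mechanism, the following minimal sketch (a noise-free toy model, not the error model used in this work) evolves two atoms under the idealized Hamiltonian \(\widehat{H}=\frac{\varOmega }{2}\sum_{i=1,2}\left(\left|r\right\rangle \left\langle g\right|_{i}+{\rm{h.c.}}\right)+V\left|rr\right\rangle \left\langle rr\right|\) (with ħ = 1), restricted to the symmetric states \(\left|gg\right\rangle \), \(\left|{\varPsi }^{+}\right\rangle \) and \(\left|rr\right\rangle \). It applies a pulse of duration π/(√2Ω), the π time at the blockade-enhanced Rabi frequency √2Ω, and returns the population in \(\left|{\varPsi }^{+}\right\rangle \). The function name `bell_population` and the choice of pulse time are illustrative assumptions; decay, laser noise and the lower-bounding procedure are all ignored.

```python
import numpy as np

def bell_population(omega, v):
    """Population in |Psi+> after a blockade-enhanced pi pulse (hbar = 1, noise-free toy model).

    Basis (symmetric subspace): |gg>, |Psi+> = (|gr> + |rg>)/sqrt(2), |rr>.
    A global drive (omega/2) * sum_i sigma_x^(i) couples neighbouring basis states
    with matrix element omega/sqrt(2); the doubly excited state |rr> is shifted by v.
    """
    c = omega / np.sqrt(2)
    h = np.array([[0.0, c, 0.0],
                  [c, 0.0, c],
                  [0.0, c, v]])
    t_pi = np.pi / (np.sqrt(2) * omega)          # pi pulse at the enhanced Rabi frequency sqrt(2)*omega
    evals, evecs = np.linalg.eigh(h)              # h is real symmetric
    u = evecs @ np.diag(np.exp(-1j * evals * t_pi)) @ evecs.conj().T
    psi = u @ np.array([1.0, 0.0, 0.0], dtype=complex)  # start in |gg>
    return abs(psi[1]) ** 2

for ratio in (0.0, 10.0, 140.0):
    print(f"V/Omega = {ratio:6.1f}: P(Psi+) = {bell_population(1.0, ratio):.6f}")
```

Without interaction (V = 0) the two atoms rotate independently and the pulse leaves well under half of the population in \(\left|{\varPsi }^{+}\right\rangle \). As V/Ω grows, the residual error from off-resonant admixture of \(\left|rr\right\rangle \) shrinks roughly as (Ω/V)², the same scaling as the perturbative limit discussed in the error-modelling section.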

This Bell state generation has several major practical limitations. Of particular interest here are leakage errors to the absolute ground state, \({}^{1}{S}_{0}\), which are converted to erasure errors in our work as described below (and in Extended Data Fig. 1). The first error of this type is imperfect preparation of atoms in \(\left|g\right\rangle \) before applying \(\widehat{U}(t)\). The second arises from decay out of the Rydberg state along multiple channels. We distinguish decay into ‘bright’ states, which we can image, and ‘dark’ states, which are undetected (Extended Data Fig. 2). The former primarily refers to low-lying energy states that are repumped to \({}^{1}{S}_{0}\) as part of the imaging process or decay to \({}^{1}{S}_{0}\) via intermediate states, while the latter mainly consists of nearby Rydberg states accessed via blackbody radiation.

Here we use a scheme, theoretically proposed7 but not yet demonstrated, that allows us to detect the location of such leakage errors (Fig. 1b), converting them into so-called erasure errors, that is, errors with a known location9. To this end, we demonstrate fast, 24-μs imaging of atoms in \({}^{1}{S}_{0}\) (Extended Data Fig. 1) with single-site resolution and \(0.98{0}_{-1}^{+1}\) fidelity. Such fast imaging has previously been performed for a few freely propagating alkali atoms30, but not for many trapped atoms in tweezer arrays or for alkaline-earth atoms (Methods).

Our general procedure is shown in Fig. 1b (further details in Extended Data Fig. 3). We first rearrange31,32 atoms into pairs, coherently transfer them to \(\left|g\right\rangle \) and then perform the entangling \(\widehat{U}\) operation. Immediately afterwards, we auto-ionize atoms in \(\left|r\right\rangle \) to project the populations of the resultant state.

We then perform the fast erasure image; any atoms detected in it are attributed to some leakage error process. Importantly, the erasure image does not affect atoms remaining in \(\left|g\right\rangle \) and is extremely short compared with the lifetime of \(\left|g\right\rangle \), resulting in a survival probability in \(\left|g\right\rangle \) of \(0.999995{4}_{-12}^{+12}\) (Extended Data Fig. 1 and Methods). Hence, the erasure image does not perturb the subsequent final readout. We thus obtain two separate images characterizing a single experimental repetition, with the final image showing the ostensible result of \(\widehat{U}\) and the erasure image revealing leakage errors with single-site resolution.

We note that this work is not a form of mid-circuit detection as no superposition states of \(\left|g\right\rangle \) and \(\left|r\right\rangle \) exist at the time of the erasure image. Instead, our approach is a noise mitigation strategy via erasure excision, where experimental realizations are discarded if erasures are detected. In contrast to other leakage mitigation schemes previously demonstrated in matter-based qubit platforms33,34,35, we directly spatially resolve leakage errors in a way that is decoupled from the performed experiment, is not post-selected on the final qubit readout and does not require any extra qubits to execute.
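The excision step itself is simple bookkeeping. The sketch below assumes a hypothetical data layout in which each image is stored as a boolean array of shape (shots, pairs, 2), with True meaning an atom was detected; in the final image a detected atom corresponds to \(\left|g\right\rangle \), because atoms in \(\left|r\right\rangle \) were auto-ionized. It keeps only pairs with no atom in the erasure image and reports the fraction of kept pairs with exactly one atom remaining, that is, the \(\left|gr\right\rangle \) plus \(\left|rg\right\rangle \) population. This covers only the population part of a Bell-state analysis; the fidelity lower bound used in the experiment also requires a coherence measurement (Methods), which is not reproduced here.

```python
import numpy as np

def excise_pairs(erasure_img, final_img):
    """Return final-image outcomes of pairs with no detected erasure.

    erasure_img, final_img: bool arrays of shape (shots, pairs, 2); True = atom detected.
    Output has shape (kept_pairs, 2).
    """
    erasure_img = np.asarray(erasure_img, dtype=bool)
    final_img = np.asarray(final_img, dtype=bool)
    keep = ~erasure_img.any(axis=-1)    # (shots, pairs): neither atom of the pair leaked
    return final_img[keep]

def single_excitation_fraction(outcomes):
    """Fraction of pairs with exactly one atom left in |g>, i.e. |gr> or |rg>."""
    outcomes = np.asarray(outcomes, dtype=bool)
    return float(np.mean(outcomes.sum(axis=-1) == 1))

# Two shots of two pairs each (toy data): pair 1 of shot 0 shows an erasure.
erasure = np.array([[[0, 0], [1, 0]],
                    [[0, 0], [0, 0]]], dtype=bool)
final = np.array([[[1, 0], [0, 0]],
                  [[0, 1], [1, 1]]], dtype=bool)

print(single_excitation_fraction(final.reshape(-1, 2)))          # no excision
print(single_excitation_fraction(excise_pairs(erasure, final)))  # with excision
```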

However, the coherence between \(\left|g\right\rangle \) and \(\left|r\right\rangle \) can, in principle, be preserved during erasure detection for future applications; in particular, we see no significant difference in Bell state lifetime with the erasure-detection imaging light on or off (Extended Data Fig. 4 and Methods). We also expect long-lived nuclear qubits encoded in \(\left|g\right\rangle \) to be unperturbed by our implementation of erasure conversion7,22,23,24.

Bell state generation results

With a procedure for performing erasure conversion in hand, we now describe its impact on Bell state generation. Experimentally, we only obtain a lower bound for the Bell state generation fidelity13 (Methods and Extended Data Fig. 5); the difference between this lower bound and the true fidelity is discussed further below.

We first coherently transfer atoms to \(\left|g\right\rangle \) as described before, and then consider three scenarios (Fig. 1c and Extended Data Table 1). In the first, as a baseline, we perform the entangling unitary \(\widehat{U}\) without considering any erasure detection results (pink bars). In the second, we excise data from any pairs of atoms with an observed erasure error (blue bars). Finally, we compare against another strategy for mitigating preparation errors through incoherent repumping13, but without erasure detection (green bars). Notably, the raw value for the Bell state lower bound with erasure excision is \(\ge 0.996{2}_{-13}^{+10}\), which is significantly higher than with the other methods. This difference mainly comes from erasure excision of preparation errors and, to a much lesser degree, of Rydberg decay. These contribute at the level of about \(5\times 1{0}^{-2}\) and \({1.2}_{-3}^{+3}\times 1{0}^{-4}\), respectively (Methods).

Correcting for final measurement errors, we find a lower bound of \(\ge 0.997{1}_{-13}^{+10}\), which quantifies our ability to generate Bell pairs conditioned on finding no erasure events. To quantify the quality of the Rydberg entangling operation \(\widehat{U}(t)\) itself, we further correct for remaining preparation errors that are not detected in the erasure image (Methods), and find a state preparation and measurement (SPAM) corrected lower bound of \(\ge 0.998{5}_{-12}^{+7}\).

To our knowledge, these bare, measurement-corrected and SPAM-corrected values are, respectively, the highest two-qubit entanglement fidelities measured for neutral atoms so far, independent of the means of entanglement generation. While Bell state generation as demonstrated here is not a computational two-qubit quantum gate—which requires additional operations—our results are indicative of the fidelities achievable in Rydberg-based gate operations.

Error modelling

Importantly, we understand the remaining errors in the entangling operation as well as the nature of detected erasure errors from a detailed ab initio error model simulation for SPAM-corrected fidelities (Methods and Fig. 2). We identify limited interaction strength as a dominant effect that restricted SPAM-corrected entanglement fidelities in our previous work13 (Fig. 2a); in particular, one major difference here is that we operate at smaller interatomic distance and hence larger V/Ω. In line with experimental data (red markers), fidelities at large distances are limited to \({F}_{{\rm{Bell}}}\,\le 1-\frac{5}{8}{(\varOmega /V)}^{2}\) obtained from perturbation theory (black dashed line; Methods).
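To put the perturbative limit in numbers, evaluating it at the interaction ratios mentioned in this section gives the following (simple arithmetic on the formula above, not part of the original analysis):

```latex
\begin{aligned}
\frac{V}{\varOmega}=50:&\quad \frac{5}{8}\left(\frac{1}{50}\right)^{2}=\frac{5}{20\,000}=2.5\times 10^{-4}
  \;\Rightarrow\; F_{\rm{Bell}}\le 0.99975,\\
\frac{V}{\varOmega}=140:&\quad \frac{5}{8}\left(\frac{1}{140}\right)^{2}=\frac{5}{156\,800}\approx 3.2\times 10^{-5}
  \;\Rightarrow\; F_{\rm{Bell}}\le 0.99997.
\end{aligned}
```

At the experimentally chosen V/Ω = 140, this interaction-limited infidelity lies well below the measured SPAM-corrected infidelity of about 1.5 × 10⁻³, consistent with other error sources dominating in this regime.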

a, SPAM-corrected Bell state fidelity as a function of the ratio of interaction energy and Rabi frequency, V/Ω. Error-free simulations (blue line) show that fidelities continually increase with increasing V/Ω, in agreement with results from perturbation theory (dashed line). For large enough interaction strength (V/Ω > 50), other error sources become dominant, and we use a noisy open-system dynamics simulation from which we obtain an estimate of the true fidelity (light grey fill) and of the lower bound given by the procedure used in the experiment (dark grey fill). We find good agreement between simulation and experimental results with erasure excision (pink markers). b, Predicted Bell state fidelity for V/Ω = 140 from simulations turning on a single noise term at a time. Dominant limitations come from laser frequency and intensity noise, as well as decay of the Rydberg state into dark states. We also show the results when taking into account all errors (Methods), for both the true fidelity and the lower-bound estimation (right). The lower bound significantly underestimates the true fidelity. The shaded areas in a and the error bars in b represent the standard deviation of the mean over 5,000 trajectories. Error bars on the experimental results represent the standard error of the mean.

For strong enough interaction, V/Ω > 50, corresponding to distances of r < 3 μm, other error sources become limiting. In this short-distance regime, the experimental SPAM-corrected fidelity lower bound is in good agreement with the error model prediction of \(\ge 0.9988{1}_{-3}^{+3}\) (dark grey fill).

Our error model results show that the lower-bound procedure significantly underestimates the true fidelity (light grey fill), which is found to be \(0.9993{1}_{-6}^{+6}\). This effect arises because the lower bound essentially evaluates the fidelity of \(\widehat{U}\) by a measurement after performing \(\widehat{U}\) twice (Methods), meaning particular errors can be exaggerated. Given the good match of the error model and experimental fidelity lower bounds, we expect this effect to be present in the experiment as well, and to underestimate the true SPAM-corrected fidelity by about \(5\times 1{0}^{-4}\).

The remaining infidelity is a combination of multiple errors. In Fig. 2b, we report an error budget for the most relevant noise source contributions to the Bell state infidelity (Methods) at the experimentally chosen V/Ω = 140. Frequency and intensity laser noise are the dominant limitations, but could be alleviated by improving the laser power stability and reducing the laser linewidth, for instance via cavity filtering36. Eliminating laser noise completely would lead to fidelities of about 0.9997 in our model. The other major limit is Rydberg state decay into dark states, which cannot be converted into erasures with our scheme. This decay is mostly blackbody-induced7,37 and could thus be greatly reduced by working in a cryogenic environment38, leaving mostly spontaneous decay that is bright to our erasure detection. Accounting for these improvements, it is realistic that Rydberg-based Bell state generation in optical tweezer arrays could reach more than 0.9999 fidelity in the coming years.

Quantum simulation with erasure conversion

Having demonstrated the benefits of erasure excision for improving two-qubit entanglement fidelities, we now show that it can be similarly applied to many-body quantum simulation, demonstrating the utility of erasure detection for NISQ device applications. As part of this investigation, we also distinguish erasure errors arising from preparation from those arising from Rydberg spontaneous decay, the latter of which becomes more visible in a many-body setting and for longer evolution times.

As a prototypical example, we explore a quasi-adiabatic sweep into a \({{\mathbb{Z}}}_{2}\)-ordered phase (Fig. 3a) through the use of a varying global detuning39 (Fig. 3b). In this ordered phase, ground and Rydberg states form an antiferromagnetic (AFM) pattern, with long-range order appearing at a quantum phase transition. Unlike previous examples17,40, we operate in the effectively attractive interacting regime of the Rydberg-blockaded space39, which features a true two-fold-degenerate ground state for systems with an even number of atoms, even for open boundary conditions (Methods), and without explicitly modifying the boundary40. The ground state in the deeply ordered limit consists of two oppositely ordered AFM states, \(\left|grgr...gr\right\rangle \) and \(\left|rgrg...rg\right\rangle \).

a,b, We perform (b) quasi-adiabatic sweeps with N = 26 spins in the effective ground-state manifold of (a) an attractive Rydberg Hamiltonian (Methods), starting from the initially disordered phase and ending in the \({{\mathbb{Z}}}_{2}\) ordered phase. c, We perform two erasure images, one preceding the quantum simulation (to detect preparation errors) and one following (to partially detect Rydberg decay errors). d, The probability for detecting a preparation error (orange markers) remains constant while the probability for detecting a decay error (green markers) grows in agreement with the Rydberg lifetime and detection infidelities (solid lines; Methods). e, The total probability, \({P}_{{\rm{AFM}}}\), for forming either of the AFM \({{\mathbb{Z}}}_{2}\) states is improved by performing erasure excision on all errors (blue markers), compared with only on preparation errors (orange markers) or performing no excision (pink markers). The sensitivity of \({P}_{{\rm{AFM}}}\) with respect to a change in Δ also increases with erasure excision (inset). f, The probability distribution for measuring a given AFM magnetization is initially peaked at 0 in the disordered phase, before bifurcating when entering the \({{\mathbb{Z}}}_{2}\) phase, consistent with spontaneous symmetry breaking. g, Deep in either phase, erasure excision leads to a sharpening of the probability distribution (left and right). Around the phase transition, we observe a close-to-flat distribution (middle). Error bars represent the standard error of the mean.

Staying adiabatic during ground-state preparation requires evolution over microseconds, orders of magnitude longer than the two-qubit entanglement operation shown before, which magnifies the effect of Rydberg decay. To differentiate between leakage out of the qubit manifold due to either preparation errors or Rydberg decay, we perform two erasure images, one before the adiabatic sweep, which captures preparation errors, and one after (Fig. 3c). The second image allows us to measure Rydberg decay into the detection subspace throughout the sweep. For a system size of N = 26 atoms (Fig. 3d), we see the number of detected preparation erasures (orange markers) stays constant over the course of a 3-μs sweep; conversely, the number of detected decay erasures (green markers) grows over time, in good agreement with the measured Rydberg lifetime and erasure image infidelities (green solid line; Methods).
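A back-of-the-envelope model helps explain why the two erasure signals behave so differently. The sketch below is a deliberately crude assumption, not the model behind the solid lines in Fig. 3d: it treats the mean Rydberg fraction during the sweep as a constant (the value 0.25 is hypothetical), assumes every decay lands in the bright subspace with the bright-state lifetime of about 168 μs quoted in the Methods, and uses the many-body binarization fidelities quoted there (about 0.6 probability of detecting a present atom and a 0.0025 false-positive probability). Preparation erasures, by contrast, are fixed before the sweep starts and so do not depend on its duration.

```python
import numpy as np

def expected_decay_erasures(t_us, n_atoms=26, rydberg_fraction=0.25,
                            tau_bright_us=168.0, p_detect=0.6, p_false_pos=0.0025):
    """Mean number of atoms flagged in the post-sweep erasure image (toy model).

    Assumes each atom spends a constant, hypothetical fraction `rydberg_fraction` of the
    sweep in |r> and decays into the bright subspace with lifetime tau_bright_us.
    """
    t_us = np.asarray(t_us, dtype=float)
    p_decay = 1.0 - np.exp(-rydberg_fraction * t_us / tau_bright_us)
    # An atom is flagged if it decayed and was detected, or did not decay but gave a false positive.
    p_flag = p_detect * p_decay + p_false_pos * (1.0 - p_decay)
    return n_atoms * p_flag

for t in (0.0, 1.0, 2.0, 3.0):
    print(f"t = {t:.1f} us: {expected_decay_erasures(t):.4f} flagged atoms per shot")
```

The offset at t = 0 comes purely from false positives, and the growth with sweep time comes from decay, which is the qualitative behaviour of the green markers in Fig. 3d.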

With the ability to distinguish these effects, we plot the total probability to form either of the AFM states, \({P}_{{\rm{AFM}}}=P(|grgr...gr\rangle )+P(|rgrg...rg\rangle )\) (Fig. 3e). At the conclusion of the sweep, we find \({P}_{{\rm{AFM}}}=0.3{3}_{-2}^{+2}\) without any erasure excision (pink markers). By excising instances with preparation erasures, this probability is improved to \(0.4{4}_{-2}^{+2}\) (orange markers), and it is further improved to \(0.4{9}_{-2}^{+2}\) by additionally excising Rydberg decay erasures (blue markers). The sharpness of the signal, exemplified by the derivative of \({P}_{{\rm{AFM}}}\) with respect to the detuning, is similarly improved near the phase boundary (Fig. 3e, inset). We also observe that the gain in \({P}_{{\rm{AFM}}}\) from erasure excision increases with system size (Extended Data Fig. 6).
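Computing \({P}_{{\rm{AFM}}}\) from single-shot data amounts to counting perfect AFM bitstrings among the kept shots. In the sketch below, the array layout and the function name `p_afm` are hypothetical: each row is one shot, with 1 marking a Rydberg excitation (an atom missing from the final image after auto-ionization) and 0 an atom found in \(\left|g\right\rangle \), and site 1 of the chain is column 0. Excision is applied by passing a boolean mask of shots to keep, for example shots with no atom in either erasure image.

```python
import numpy as np

def p_afm(bits, keep=None):
    """Probability of |grgr...gr> or |rgrg...rg> among the (optionally excised) shots.

    bits: int array (shots, N); 1 = |r>, 0 = |g>. keep: optional bool mask over shots.
    """
    bits = np.asarray(bits)
    if keep is not None:
        bits = bits[np.asarray(keep, dtype=bool)]
    n = bits.shape[1]
    afm_gr = np.arange(n) % 2           # |grgr...gr>: site 1 in |g>, site 2 in |r>, ...
    afm_rg = 1 - afm_gr                 # |rgrg...rg>
    hit = np.all(bits == afm_gr, axis=1) | np.all(bits == afm_rg, axis=1)
    return float(hit.mean())

bits = np.array([[0, 1, 0, 1],
                 [1, 0, 1, 0],
                 [0, 0, 0, 1],    # not an AFM configuration
                 [1, 1, 0, 1]])   # not an AFM configuration
erasure = np.array([[0, 0, 0, 0],
                    [0, 0, 0, 0],
                    [0, 0, 1, 0],
                    [0, 0, 0, 0]], dtype=bool)
keep = ~erasure.any(axis=1)        # discard shots with any detected erasure
print(p_afm(bits), p_afm(bits, keep))
```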

We further explore how errors affect quantities reflecting higher-order statistics. To this end, we study the probability distribution of magnetic order of different magnitudes using the AFM magnetization operator, defined as
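\[\widehat{M}=\frac{1}{2}\left(\frac{{\widehat{Z}}_{B}}{{N}_{B}}-\frac{{\widehat{Z}}_{A}}{{N}_{A}}\right)\tag{1}\]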
where \({\widehat{Z}}_{S}={\sum }_{j\in S}{\widehat{Z}}_{j}\) is the total magnetization operator in sublattice S = A (odd sites) or S = B (even sites), \({N}_{S}\) is the number of atoms in sublattice S, and \({\widehat{Z}}_{j}=\left|r\right\rangle \left\langle r\right|-\left|g\right\rangle \left\langle \,g\right|\) is the local magnetization at site j. We plot the probability to find a specific eigenvalue, M, of \(\widehat{M}\) as a function of detuning (Fig. 3f). While the values of M are initially tightly grouped around M = 0 in the disordered phase, as the sweep progresses the probability distribution bifurcates, forming two separate dominant peaks in the \({{\mathbb{Z}}}_{2}\) phase, consistent with the aforementioned two-fold spontaneous symmetry breaking across the quantum phase transition. We find that erasure excision improves the sharpness of the distribution in both the disordered and \({{\mathbb{Z}}}_{2}\) phases (Fig. 3g). Near the phase transition, the distribution is close to flat, consistent with order appearing at all length scales.
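The distribution of M can be estimated shot by shot. The sketch below assumes the normalization \(\widehat{M}=\frac{1}{2}({\widehat{Z}}_{B}/{N}_{B}-{\widehat{Z}}_{A}/{N}_{A})\), which maps the two AFM states to ±1 and gives negative values when Rydberg excitations sit on sublattice A, matching the sign convention described for Fig. 4c; the prefactor is an assumption. Bitstrings use 1 for \(\left|r\right\rangle \) and 0 for \(\left|g\right\rangle \), and site 1 (sublattice A) is column 0. The function names are hypothetical.

```python
import numpy as np

def afm_magnetization(bits):
    """Per-shot eigenvalue M = (Z_B/N_B - Z_A/N_A)/2 (assumed normalization).

    bits: int array (shots, N); 1 = |r>, 0 = |g>. Sites are numbered from 1, so
    sublattice A (odd sites) is columns 0, 2, 4, ... and B (even sites) is 1, 3, 5, ...
    """
    z = 2 * np.asarray(bits) - 1              # Z_j = +1 for |r>, -1 for |g>
    z_a = z[:, 0::2].mean(axis=1)             # Z_A / N_A
    z_b = z[:, 1::2].mean(axis=1)             # Z_B / N_B
    return 0.5 * (z_b - z_a)

def magnetization_distribution(bits):
    """Distinct values of M and their empirical probabilities."""
    m = np.round(afm_magnetization(bits), 12)     # merge floating-point duplicates
    values, counts = np.unique(m, return_counts=True)
    return values, counts / counts.sum()

bits = np.array([[0, 1, 0, 1],     # |grgr>  -> M = +1
                 [1, 0, 1, 0],     # |rgrg>  -> M = -1
                 [0, 0, 0, 0]])    # all |g> -> M = 0
print(afm_magnetization(bits))
print(magnetization_distribution(bits))
```

Passing only the shots kept after erasure excision to `magnetization_distribution` gives the corresponding excised distribution.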

These results demonstrate improvements in fidelity for preparation of long-range-ordered ground states with erasure excision in quantum simulation experiments, a proof of principle for utilizing erasure conversion in NISQ-type applications.

Learning from erasure errors

Finally, we turn to a tool enabled by our implementation of erasure conversion: exploring the effect of errors on experimental outcomes at a microscopic level and studying correlations between different error sources, which is possible because each experimental run yields three separate images (Fig. 4a). In particular, we consider the joint probability distribution, \({\mathcal{P}}({e}_{1}^{(i)},{e}_{2}^{(\,j)},{e}_{3}^{(k)})\), that atoms at sites i, j and k are detected in the preparation erasure image (\({e}_{1}\)), the decay erasure image (\({e}_{2}\)) and the final state detection image (\({e}_{3}\)), respectively.

a, Post-processed30 single-shot atom fluorescence images (Methods). After arranging the array (top panel), we use the fast erasure images to learn how detected errors (middle panels) affect detection outcomes (bottom panel). b, Conditional probability to detect no atom in the final image as a function of sweep progress and distance from a hypothetical erasure event. In the case of no erasures (left), the resulting profile is uniform. However, when conditioning on detecting a preparation erasure (middle), the error breaks the \({{\mathbb{Z}}}_{2}\) symmetry by establishing a single AFM order. In the case of conditioning on decay errors (right), the situation is more complex. c, AFM magnetization (equation (1)) as a function of time. Preparation erasures (orange markers) lead to a growth of a single AFM order with Rydberg excitations predominantly on sublattice A (defined as sites an odd distance from the erasure position). Decay erasures (green markers) follow a similar trend at early times by acting as effective preparation errors, but past the critical point (vertical dashed line), their behaviour reverses: a decay erasure spontaneously breaks the two-fold symmetry, where the neighbouring sites must have been in the ground state due to the \({{\mathbb{Z}}}_{2}\) structure, yielding Rydberg excitations on sublattice B. d, For the maximum sweep duration, the probability of detecting a Rydberg decay erasure (bars) is significantly increased (decreased) from the baseline level (pink fill) at a distance of one (two) away from the site of a detected preparation erasure, induced by the altered Rydberg population on these sites due to the crystal formation in b, indicating direct detection of correlations between errors through erasure imaging. Error bars represent the standard error of the mean.

We again consider adiabatic sweeps into the \({{\mathbb{Z}}}_{2}\) phase as in Fig. 3, but now with a total duration of 8 μs. We first study \({\mathcal{P}}({e}_{3}^{(\,j+d)}=0| {e}_{1}^{(\,j)}=0)\) where d is the distance from site j, equivalent to finding a Rydberg excitation on site j + d, conditioned on finding no preparation erasure on site j. We plot this quantity (Fig. 4b, left) as a function of both d and the sweep duration. We explicitly average over choices of j and find a signal essentially uniform in d.

However, if we instead consider \({\mathcal{P}}({e}_{3}^{(\,j+d)}=0| {e}_{1}^{(\,j)}=1)\), the probability to find a Rydberg excitation on site j + d conditioned on detecting a preparation erasure on site j, markedly different behaviour emerges (Fig. 4b, middle). For simplicity, we further post-select on instances where only a single erasure is detected across the entire array. At intermediate sweep times, we observe that an AFM order forms around the preparation erasure error position. We interpret the error as breaking the atom chain into two shorter chains; excitations will naturally form at the system edges of these shorter chains to maximize the Rydberg density in the attractive regime in which we operate (Methods). This effectively pins the Rydberg density around the error, which then establishes a preferred AFM order further out into the array. Interestingly, the equivalent quantity for decay erasures, \({\mathcal{P}}({e}_{3}^{(\,j+d)}=0| {e}_{2}^{(\,j)}=1)\), shows a more complex behaviour.
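The conditional profiles of Fig. 4b can be estimated with a short loop. The sketch below assumes a hypothetical layout of boolean arrays of shape (shots, N), with True meaning an atom was detected. It post-selects on shots with exactly one erasure, as described above, averages over the erasure position j, and returns \({\mathcal{P}}({e}_{3}^{(\,j+d)}=0|{e}_{1}^{(\,j)}=1)\) for each distance d, with NaN where no site j + d exists in the chain.

```python
import numpy as np

def conditional_absence_profile(erasure_img, final_img, max_d):
    """Estimate P(e_final^(j+d) = 0 | e_erasure^(j) = 1) for d = -max_d..max_d.

    Uses only shots with exactly one detected erasure and averages over its site j.
    erasure_img, final_img: bool arrays (shots, N); True = atom detected.
    """
    erasure_img = np.asarray(erasure_img, dtype=bool)
    final_img = np.asarray(final_img, dtype=bool)
    n_sites = erasure_img.shape[1]
    ds = np.arange(-max_d, max_d + 1)
    hits = np.zeros(ds.size)
    counts = np.zeros(ds.size)
    for shot in np.flatnonzero(erasure_img.sum(axis=1) == 1):
        j = np.flatnonzero(erasure_img[shot])[0]
        for k, d in enumerate(ds):
            if 0 <= j + d < n_sites:
                counts[k] += 1
                hits[k] += not final_img[shot, j + d]   # no atom = Rydberg excitation
    with np.errstate(invalid="ignore", divide="ignore"):
        return ds, hits / counts                          # NaN where counts == 0

erasure = np.array([[0, 0, 1, 0, 0],
                    [0, 1, 1, 0, 0],    # two erasures: rejected by post-selection
                    [1, 0, 0, 0, 0]], dtype=bool)
final = np.array([[1, 0, 0, 0, 1],
                  [1, 1, 1, 1, 1],
                  [0, 1, 1, 1, 1]], dtype=bool)
print(conditional_absence_profile(erasure, final, max_d=1))
```

An analogous estimate, with the final image replaced by the decay erasure image and detections counted instead of absences, gives the error correlation \({\mathcal{P}}({e}_{2}^{(\,j+d)}=1|{e}_{1}^{(\,j)}=1)\) discussed for Fig. 4d.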

To quantify this behaviour more explicitly, we consider a variant of the AFM magnetization (equation (1)) conditioned on the erasure location, where sublattice A (B) is now defined as being sites an odd (even) distance away from an erasure. In Fig. 4c, we plot the mean AFM magnetization for both the preparation (orange circles) and decay erasure (green circles) cases. Preparation erasures develop a negative, single AFM order as they pin Rydberg excitations at odd distances away from the erasure.

Decay erasures behave similarly before the critical point, as Rydberg decay acts effectively as a preparation error. However, past the critical point, this behaviour changes: decay now acts as a measurement on the AFM superposition ground state, selecting one of these orders. In this case, assuming perfect \({{\mathbb{Z}}}_{2}\) states, the neighbouring sites must have been in the ground state to detect a decay, meaning the AFM order is reversed from the preparation case. This leads to data that first dip to negative values and then grow to positive values past the phase transition (green markers in Fig. 4c).

We also study correlations between preparation errors and Rydberg decay. In particular, a preparation error forces atoms at odd intervals from the preparation erasure to have a higher probability to be in Rydberg states, meaning they should also be more likely to decay. As shown in Fig. 4d, we directly observe this effect at the end of the sweep by considering \({\mathcal{P}}({e}_{2}^{(\,j+d)}=1| {e}_{1}^{(\,j)}=1)\), the probability to detect a decay erasure at a distance d away from a preparation erasure. For d = 1 (d = 2), this probability is significantly increased (decreased) from the unconditional decay erasure probability, in line with the increased (decreased) Rydberg population on these sites, which shows that errors are correlated.

Before concluding, we note that erasure excision of preparation errors using the first erasure image can be regarded as heralding the subsequent quantum simulation on the presence of atoms in the correct initial state in the tweezers. For erasure excision of Rydberg decay using the second erasure image, we interpret the post-selected results as coming from a no-jump trajectory in a Monte Carlo wavefunction approach41.
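In the Monte Carlo wavefunction picture, post-selecting on the absence of a detected decay corresponds to evolving with a non-Hermitian effective Hamiltonian and renormalizing. The single-atom sketch below illustrates this for a two-level system driven at Rabi frequency Ω with an assumed decay rate γ out of \(\left|r\right\rangle \), treating every decay as detected; it is a textbook illustration, not the many-body simulation of this work. The squared norm of the unnormalized state is the probability that no jump occurred. The eigendecomposition assumes γ ≠ 2Ω, where the effective Hamiltonian is not diagonalizable.

```python
import numpy as np

def no_jump_trajectory(omega, gamma, times):
    """No-jump evolution of a driven two-level atom (|g>, |r>) with decay rate gamma from |r>.

    H_eff = (omega/2) sigma_x - (i gamma/2) |r><r|  (hbar = 1). Requires gamma != 2*omega.
    Returns the no-jump probability and the renormalized conditional state at each time.
    """
    h_eff = np.array([[0.0, omega / 2], [omega / 2, -0.5j * gamma]], dtype=complex)
    evals, evecs = np.linalg.eig(h_eff)
    coeffs = np.linalg.solve(evecs, np.array([1.0, 0.0], dtype=complex))  # start in |g>
    p_no_jump, states = [], []
    for t in times:
        psi = evecs @ (np.exp(-1j * evals * t) * coeffs)   # unnormalized no-jump state
        norm2 = float(np.vdot(psi, psi).real)
        p_no_jump.append(norm2)
        states.append(psi / np.sqrt(norm2))
    return np.array(p_no_jump), np.array(states)

times = np.linspace(0.0, 10.0, 6)
p, _ = no_jump_trajectory(omega=1.0, gamma=0.1, times=times)
print(np.round(p, 4))
```

Even without a jump, the conditional state differs from lossless Rabi oscillation, because the absence of a detected decay is itself information; this is the sense in which post-selected data correspond to a no-jump trajectory.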

Discussion and outlook

Our results could have broad implications for quantum science and technology. First, our two-qubit entanglement fidelity values and associated error modelling imply that Rydberg arrays, which have already demonstrated scalability to hundreds of atoms5,6, can be simultaneously equipped with high-fidelity two-qubit operations, a unique combination across all platforms. Besides our current demonstration of about 0.999 SPAM-corrected two-qubit fidelity, modelling implies that values of about 0.9997 could be possible with laser noise improvements alone. Furthermore, utilizing a cryogenic environment could freeze out blackbody decay to a large degree38, with remaining decay detected as an erasure, leaving almost no intrinsic decoherence. In this context, we note very recent results for improved computational gate fidelities42.

Second, the demonstrated erasure conversion techniques could find widespread applications for both classical and quantum error correction. For classical correction, our techniques could be modified to correct for state-preparation errors via subsequent atom rearrangement31,32, instead of just excising such events. Furthermore, thermal excitations could be converted to erasures and subsequently removed by driving a blue sideband transition between \({}^{1}{S}_{0}\) and \({}^{3}{P}_{0}\) (Fig. 1a) before the fast image and subsequent atom rearrangement31,32, effectively realizing erasure-based atomic cooling.

For quantum error correction, our techniques could be combined with a long-lived qubit that is dark to the fast image, for example, realized with the \({}^{3}{P}_{0}\) nuclear qubit in neutral Sr (ref. 43) and Yb (refs. 22,24), or \({S}_{1/2}\) in \({{\rm{Ca}}}^{+}\) and \({{\rm{Ba}}}^{+}\) ions10. Similarly, schemes for implementing erasure conversion in superconducting circuits have been put forward11,12. Such techniques could lead to markedly reduced quantum-error-correction thresholds7,8 for fault-tolerant quantum computing.

Third, our results also show clearly how NISQ applications1 can benefit from erasure conversion. Our demonstrated improvements for analogue quantum simulation of ground-state physics could be extended to non-equilibrium dynamics, for example, targeting regimes generating large entanglement entropies18, with the potential to reach a quantum advantage over classical simulations44. We note that while our implementation of erasure excision slows down the effective sampling rate of the quantum device (Extended Data Fig. 7), the classical cost can increase highly nonlinearly with the resulting fidelity increase, and we hence expect a gain for such tasks. Furthermore, we envision that erasure excision will improve other tasks such as quantum optimization45 and potentially quantum metrology46.

Finally, insights into erasure–error correlations, as in Fig. 4, could be used to understand error processes in NISQ devices in unprecedented detail, in particular if erasure detection could be made time-resolved with respect to the many-body dynamics. This could also be used to realize post-measurement physics with erasure detection, such as measurement-induced phase transitions47,48 and measurement-altered quantum criticality49.

Note added in proof: During completion of this work, we became aware of work performing erasure detection with ytterbium atoms50.

Methods

Fast imaging on the erasure detection subspace

Here we describe how we perform the erasure imaging that allows us to detect site-resolved leakage errors30. To both avoid any extra heating coming from the imaging beams and optimize the imaging fidelity, we shine two identical counter-propagating beams with crossed π-polarization and Rabi frequencies of Ω/2π ≈ 40 MHz on the \({}^{1}{S}_{0}\to {}^{1}{P}_{1}\) transition (Extended Data Fig. 1a). The counter-propagating geometry minimizes the net force on an atom, and the crossed polarization avoids intensity interference patterns.

We highlight the characteristic features of this imaging scheme experimentally. Extended Data Fig. 1b shows the survival probability of atoms in \({}^{1}{S}_{0}\) as a function of imaging time. After 4 μs, more than 80% of the atoms are lost. However, the number of detected photons continues to increase: even though the kinetic energy of the atoms is too large to keep them trapped, their mean position remains centred on the tweezers. Importantly, for our implementation of erasure excision, atom loss during the erasure image is inconsequential as long as the initial presence of the atom is correctly identified; in any case, other fast imaging schemes may alleviate this effect51. After about 24 μs, the atomic spread becomes too large and the number of detected photons plateaus. The resulting detection histogram is shown in Extended Data Fig. 1c. We present the results for both empty (blue) and filled (red) tweezers, which we obtain by first imaging the atoms with the usual high-survival imaging for initial detection in a 50%-loaded array and then performing the fast image. We obtain a typical detection fidelity of \(0.98{0}_{-1}^{+1}\) for true positives and true negatives, limited by the finite probability for atoms in \({}^{1}{P}_{1}\) to decay into \({}^{1}{D}_{2}\) (Extended Data Fig. 1a).
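Detection fidelities such as the value above depend on the photon-count threshold used to binarize each fast image. The sketch below, with hypothetical function names and toy counts, scores each candidate threshold by the mean of the true-negative rate (empty tweezers) and the true-positive rate (filled tweezers); the criterion actually used in the experiment is not specified here, so this choice is an assumption.

```python
import numpy as np

def detection_rates(counts_empty, counts_filled, threshold):
    """True-negative and true-positive rates when 'atom present' means counts > threshold."""
    true_neg = float(np.mean(np.asarray(counts_empty) <= threshold))
    true_pos = float(np.mean(np.asarray(counts_filled) > threshold))
    return true_neg, true_pos

def best_threshold(counts_empty, counts_filled):
    """Candidate threshold maximizing the mean of the two rates (ties: lowest threshold)."""
    candidates = np.unique(np.concatenate([counts_empty, counts_filled]))
    scores = [np.mean(detection_rates(counts_empty, counts_filled, c)) for c in candidates]
    return candidates[int(np.argmax(scores))]

empty = np.array([0, 0, 1, 1, 2])     # toy photon counts, empty tweezers
filled = np.array([3, 4, 5, 5, 6])    # toy photon counts, filled tweezers
thr = best_threshold(empty, filled)
print(thr, detection_rates(empty, filled, thr))
```

Raising the threshold above the balanced point trades true positives for fewer false positives, which is the kind of adjustment described for the many-body erasure images below.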

This imaging scheme is sufficiently fast to avoid perturbing atoms in 3P0, as measured by losses from 3P0 as a function of imaging time (Extended Data Fig. 1d). We fit the data (circles) using a linear function (solid line), and obtain a loss of \(0.000004{6}_{-12}^{+12}\) per image, consistent with the lifetime of the 3P0 state52 of about 5 s for the trap depth of 45 μK used during fast imaging.
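The consistency check against the 3P0 lifetime amounts to a one-line estimate. Assuming a single-exponential lifetime τ and attributing all loss to spontaneous decay (both simplifications), an atom survives an image of duration t with probability exp(−t/τ). A minimal sketch, taking the roughly 24 μs fast-image duration and τ ≈ 5 s from the text; the function name is hypothetical:

```python
import math


def lifetime_limited_loss(t_image_s: float, lifetime_s: float) -> float:
    """Probability of decaying out of 3P0 during one image of length t_image_s,
    assuming a single-exponential lifetime lifetime_s (an idealization)."""
    return 1.0 - math.exp(-t_image_s / lifetime_s)


# Values taken from the text: ~24 us fast image, ~5 s 3P0 lifetime at 45 uK.
loss_per_image = lifetime_limited_loss(24e-6, 5.0)
print(f"expected loss per image: {loss_per_image:.2e}")
```

This gives about 4.8 × 10⁻⁶ per image, inside the measured \(0.000004{6}_{-12}^{+12}\).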

As to the nature of the detected erasure errors for the Bell state generation, we find that preparation errors contribute the vast majority of erasure events compared with bright Rydberg decay, and excising them has a more significant impact on reducing infidelities. In particular, application of \(\widehat{U}\) lasts for only about 59 ns, which is significantly shorter than the independently measured bright state decay lifetime of \(16{8}_{-14}^{+14}\,{\rm{\mu }}{\rm{s}}\) (Extended Data Fig. 2). The error model described in Fig. 2 suggests that excising such errors results in an infidelity reduction of only \(1.{2}_{-3}^{+3}\times 1{0}^{-4}\) (Methods). Conversely, preparation errors account for about 5 × 10−2 infidelity per pair due to the long time between preparation in \(\left|g\right\rangle \) and Rydberg excitation (Extended Data Fig. 3). Hence, the gains in fidelity from erasure conversion mainly come from eliminating nearly all the preparation errors, which has the added benefit of significantly reducing error bars on the SPAM-corrected values. Still, SPAM-corrected values might also benefit from the small gain in eliminating the effect of bright state decay, and from avoiding potential deleterious effects arising from higher atomic temperature in the repumper case.

For erasure detection used in the context of many-body quantum simulation, we adjust the binarization threshold for atom detection to raise the false-positive imaging fidelity to 0.9975, while the false-negative imaging fidelity is lowered to about 0.6 (Fig. 3d); this is done as a conservative measure to prioritize maximizing the number of usable shots while potentially forgoing some fidelity gains (Extended Data Fig. 7).
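To make the threshold trade-off concrete, here is a sketch that assumes Poisson-distributed photon counts for empty and filled tweezers, with a site declared "atom present" (an erasure, in the erasure image) when its count reaches the threshold. The mean counts are hypothetical placeholders, and the measured histograms (Extended Data Fig. 1c) are not purely Poissonian, for instance because of the 1D2 decay channel, so this only illustrates the direction of the trade-off. Function names are hypothetical.

```python
import math


def poisson_cdf(k: int, mean: float) -> float:
    """P(N <= k) for a Poisson-distributed photon count N."""
    if k < 0:
        return 0.0
    term = math.exp(-mean)
    total = term
    for n in range(1, k + 1):
        term *= mean / n
        total += term
    return total


def threshold_rates(threshold: int, mean_empty: float, mean_filled: float):
    """Declare 'atom present' when the count is >= threshold.

    Returns (false_positive_rate, false_negative_rate):
    false positive = empty site read as an atom (spurious erasure, shot discarded),
    false negative = filled site read as empty (true erasure missed).
    """
    false_pos = 1.0 - poisson_cdf(threshold - 1, mean_empty)
    false_neg = poisson_cdf(threshold - 1, mean_filled)
    return false_pos, false_neg


# Hypothetical mean photon counts, for illustration only.
MEAN_EMPTY, MEAN_FILLED = 0.5, 10.0
for threshold in range(2, 9):
    fp, fn = threshold_rates(threshold, MEAN_EMPTY, MEAN_FILLED)
    print(f"threshold {threshold}: false positive {fp:.4f}, false negative {fn:.4f}")
```

Raising the threshold lowers the rate at which empty sites are mistaken for erasures, at the cost of missing more true erasures, which is the direction of the adjustment described above.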

We note that the scheme we show here is not yet fundamentally limited, and a number of technical improvements could be made. First, the camera we use (Andor iXon Ultra 888) has a quantum efficiency of about 80%, which some recent models, such as quantitative complementary metal oxide semiconductor (qCMOS) devices, improve upon. Further, we currently image atoms from only one direction, whereas in principle photons could be collected through both objectives53. This would improve our estimated total collection efficiency of about 4% by a factor of 2, leading to faster imaging with higher fidelity (as more photons could be collected before the atoms are ejected from the trap). Finally, the fidelity may be substantially improved by actively repumping atoms from 1D2 back into the imaging manifold, so that atoms are not effectively lost via this pathway.

Details of Rydberg excitation

Our Rydberg excitation scheme has been described in depth previously13. Before the Rydberg excitation, atoms are initialized from the absolute ground state 5s2 1S0 to the metastable state 5s5p 3P0 (698.4 nm) through coherent drive. Subsequently, tweezer trap depths are reduced by a factor of ten to extend the metastable state lifetime.

For Rydberg excitation and detection, we extinguish the traps, drive to the Rydberg state (5s61s 3S1, mJ = 0, 317 nm), where mJ is the magnetic quantum number of the total angular momentum, and finally auto-ionize the Rydberg atoms13. Auto-ionization has a characteristic timescale of about 5 ns, but we apply the auto-ionization pulse for 500 ns to ensure complete ionization. We also report a more accurate measurement of the auto-ionization wavelength, about 407.89 nm. In the final detection step, atoms in 3P0 are read out via our normal imaging scheme13,54.

Atoms can decay from 3P0 during the 60 ms between state preparation and Rydberg excitation, a wait that allows the magnetic fields to settle. In previous work13, we supplemented coherent preparation with incoherent pumping to 3P0 immediately before Rydberg operations. However, during the repumping process, atoms can be lost owing to repeated recoil events at low trap depth; such losses are not detected by the erasure image and thus can lower the bare fidelity. Even with SPAM correction of this effect, we expect the fidelity with repumping to be slightly lower owing to the increased temperature of pumped atoms.

Rydberg Hamiltonian

The Hamiltonian describing an array of Rydberg atoms is well approximated by

\(\frac{\widehat{H}}{\hbar }=\frac{\varOmega }{2}\sum _{i}{\widehat{X}}_{i}-\varDelta \sum _{i}{\widehat{n}}_{i}+\sum _{i < j}\frac{{C}_{6}}{{a}^{6}{|i-j|}^{6}}{\widehat{n}}_{i}{\widehat{n}}_{j}\)  (2)

which describes a set of interacting two-level systems, labelled by site indices i and j, driven by a laser with Rabi frequency Ω and detuning Δ. The interaction strength is determined by the C6 coefficient and the lattice spacing a. Operators are \({\widehat{X}}_{i}={\left|r\right\rangle }_{i}{\left\langle g\right|}_{i}+{\left|g\right\rangle }_{i}{\left\langle r\right|}_{i}\) and \({\widehat{n}}_{i}={\left|r\right\rangle }_{i}{\left\langle r\right|}_{i}\), where \({\left|g\right\rangle }_{i}\) and \({\left|r\right\rangle }_{i}\) denote the metastable ground and Rydberg states at site i, respectively, and ℏ is the reduced Planck constant.
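For small systems, the Hamiltonian can be built as a dense matrix and diagonalized directly, the starting point for exact-diagonalization numerics of the kind mentioned in this section. A minimal NumPy sketch, assuming an open 1D chain, the form Ĥ/ℏ = (Ω/2)Σᵢ X̂ᵢ − Δ Σᵢ n̂ᵢ + Σ_{i<j} C6/(a⁶|i − j|⁶) n̂ᵢ n̂ⱼ, and all frequencies in one consistent angular unit; the function name and the example parameters are hypothetical.

```python
import numpy as np


def rydberg_chain_hamiltonian(n_sites, omega, delta, c6, a):
    """Dense H/hbar for an open 1D Rydberg chain (hypothetical helper).

    Basis index b: bit i of b is 1 if atom i is in |r>, 0 if in |g>.
    """
    dim = 2 ** n_sites
    h = np.zeros((dim, dim))
    for b in range(dim):
        occ = [(b >> i) & 1 for i in range(n_sites)]
        # Diagonal part: -Delta * n_i plus van der Waals interactions C6/r^6.
        diag = -delta * sum(occ)
        for i in range(n_sites):
            for j in range(i + 1, n_sites):
                if occ[i] and occ[j]:
                    diag += c6 / (a * (j - i)) ** 6
        h[b, b] = diag
        # Off-diagonal part: X_i flips atom i between |g> and |r>.
        for i in range(n_sites):
            h[b ^ (1 << i), b] += omega / 2.0
    return h


# Dimensionless example; the highest eigenstate is the one followed in the sweeps.
h = rydberg_chain_hamiltonian(4, omega=1.0, delta=0.5, c6=10.0, a=1.0)
energies = np.linalg.eigvalsh(h)
print("highest energy:", energies[-1])
```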

For the case of measuring two-qubit Bell state fidelities, we set Ω/2π = 6.2 MHz. Interaction strengths in Fig. 2a are directly measured at interatomic separations of 4 μm and 5 μm, and extrapolated via the predicted 1/r6 scaling to the level at 2.5 μm. Mean atomic distances are calibrated via a laser-derived ruler based on shifting atoms in coherent superposition states55. We calibrate C6/2π = 230(25) GHz μm6 using maximum likelihood estimation (and associated uncertainty) from resonant quench dynamics18, which additionally calibrates a systematic offset in our global detuning.
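The 1/r⁶ extrapolation and the resulting blockade parameter are simple arithmetic. The sketch below evaluates V/2π = (C6/2π)/r⁶ with the calibrated C6/2π = 230 GHz μm⁶ at the separations quoted, and the perturbation parameter η = Ω/√2V used in the interaction-limited Bell fidelity analysis, for Ω/2π = 6.2 MHz. It ignores the 25 GHz μm⁶ calibration uncertainty (about 11%); function names are hypothetical.

```python
import math

C6_OVER_2PI_MHZ_UM6 = 230e3  # C6/2pi = 230 GHz um^6, expressed in MHz um^6


def interaction_mhz(r_um, c6=C6_OVER_2PI_MHZ_UM6):
    """V/2pi in MHz for two atoms separated by r_um micrometres, V = C6/r^6."""
    return c6 / r_um ** 6


def blockade_eta(omega_mhz, r_um, c6=C6_OVER_2PI_MHZ_UM6):
    """Perturbation parameter eta = Omega / (sqrt(2) V); both in MHz (over 2pi)."""
    return omega_mhz / (math.sqrt(2.0) * interaction_mhz(r_um, c6))


for r in (2.5, 4.0, 5.0):
    print(f"r = {r} um: V/2pi = {interaction_mhz(r):.1f} MHz")
print(f"eta at 2.5 um with Omega/2pi = 6.2 MHz: {blockade_eta(6.2, 2.5):.4f}")
```

With these numbers V/2π ≈ 942 MHz at 2.5 μm (about 56 MHz at 4 μm and 15 MHz at 5 μm), so η ≈ 0.005 and the pair is deep in the blockade regime.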

For performing many-body quasi-adiabatic sweeps, the detuning is swept symmetrically in a tangent profile from +30 MHz to −30 MHz, while the Rabi frequency is smoothly turned on and off with a maximum value of Ω/2π = 5.6 MHz. For an initially positive detuning, the \(\left|r\right\rangle \) state is energetically favourable, making the all-ground initial state, \(\left|gg...gg\right\rangle \), the highest energy eigenstate of the blockaded energy sector, where no neighbouring Rydberg excitations are allowed. For negative detunings, where \(\left|g\right\rangle \) is energetically favourable, the highest energy state uniquely becomes the symmetric AFM state \((\left|grgr...gr\right\rangle +\left|rgrg...rg\right\rangle )/\sqrt{2}\) in the deeply ordered limit. Thus, considering only the blockaded energy sector, sweeping the detuning from positive to negative detuning (thus remaining in the highest energy eigenstate) is equivalent to the ground-state physics of an effective Hamiltonian with attractive Rydberg interaction and inverted sign of the detuning. This equivalence allows us to operate in the effectively attractive regime of the blockaded phase diagram of ref. 39. For our Hamiltonian parameters, we use exact diagonalization numerics to identify the infinite-size critical detuning using a scaling collapse near the finite-system size minimum energy gap56.
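The exact sweep shape is not specified beyond its endpoints and symmetry, so the following sketch assumes a hypothetical tangent form Δ(t) = Δmax tan[β(1 − 2t/T)]/tan β, which runs symmetrically from +Δmax to −Δmax and changes most slowly around Δ = 0. The steepness β, the sweep time T, the function name and the use of Δ/2π in MHz are assumptions, and the smooth Rabi-frequency ramp is omitted.

```python
import math


def tangent_detuning(t, total_time, delta_max, steepness):
    """Hypothetical symmetric tangent sweep of the detuning.

    Runs from +delta_max at t = 0 to -delta_max at t = total_time and passes
    through zero at total_time / 2, where it changes most slowly.
    steepness must lie in (0, pi/2); larger values flatten the centre more.
    """
    x = 1.0 - 2.0 * t / total_time  # goes from +1 to -1 during the sweep
    return delta_max * math.tan(steepness * x) / math.tan(steepness)


# Delta/2pi in MHz, sweep time in us; T_SWEEP and BETA are assumptions.
T_SWEEP, DELTA_MAX, BETA = 2.0, 30.0, 1.2
profile = [tangent_detuning(k * T_SWEEP / 10, T_SWEEP, DELTA_MAX, BETA) for k in range(11)]
print([round(d, 2) for d in profile])
```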

Error modelling

Our error model has been described previously13,18. We perform Monte Carlo wavefunction-based simulations57, accounting for a variety of noise sources including time-dependent laser intensity noise, time-dependent laser frequency noise, sampling of the beam intensity from the atomic thermal spread, Doppler noise, variations of the interaction strength from thermal spread, beam pointing stability and others. All of the parameters that enter the error model are independently calibrated via selective measurements directly on an atomic signal if possible, as shown in Extended Data Table 2. Parameters are not fine-tuned to match the measured Bell state fidelity, and the model equally well describes results from many-body quench experiments18.
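The idea of sampling calibrated noise parameters shot by shot can be illustrated in a reduced setting. The sketch below is neither a wavefunction Monte Carlo (it samples no quantum jumps) nor the authors' error model: it treats the blockaded pair as a two-level system |gg⟩ ↔ |Ψ+⟩ with coupling √2Ω, draws a Gaussian relative intensity error and a Gaussian common detuning (a crude stand-in for Doppler shifts) for each shot, and averages the π-pulse infidelity. The noise amplitudes are hypothetical placeholders, not the calibrated values of Extended Data Table 2, and the function names are hypothetical.

```python
import math
import random


def transfer_probability(omega_eff, detuning, t):
    """Rabi transfer probability |gg> -> |Psi+> for a two-level system with
    coupling omega_eff and detuning (angular units), after time t."""
    w = math.hypot(omega_eff, detuning)
    if w == 0.0:
        return 0.0
    return (omega_eff / w) ** 2 * math.sin(w * t / 2.0) ** 2


def mean_infidelity(omega, sigma_intensity, sigma_detuning, n_samples, seed=0):
    """Average 1 - P over sampled shots at the nominal blockaded pi time."""
    rng = random.Random(seed)
    t_pi = math.pi / (math.sqrt(2.0) * omega)
    total = 0.0
    for _ in range(n_samples):
        intensity = max(0.0, 1.0 + rng.gauss(0.0, sigma_intensity))
        omega_eff = math.sqrt(2.0) * omega * math.sqrt(intensity)  # Omega ~ sqrt(I)
        detuning = rng.gauss(0.0, sigma_detuning)
        total += 1.0 - transfer_probability(omega_eff, detuning, t_pi)
    return total / n_samples


omega = 2 * math.pi * 6.2  # rad/us, i.e. Omega/2pi = 6.2 MHz
noiseless = mean_infidelity(omega, 0.0, 0.0, n_samples=10)
noisy = mean_infidelity(omega, sigma_intensity=0.01,
                        sigma_detuning=2 * math.pi * 0.05, n_samples=20_000)
print(noiseless, noisy)
```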

Extraction of the Bell state fidelity

To extract the Bell state fidelities quoted in the main text, we use a lower-bound method13, which relies on measuring the populations in the four possible states Pgr, Prg, Pgg and Prr during a Rabi oscillation between \(\left|gg\right\rangle \) and \(\left|{\varPsi }^{+}\right\rangle \). The lower bound on Bell state fidelity is given by:

where \({P}_{i}^{2{\rm{\pi }}}\) are the measured probabilities for the four states at 2π, and \({P}_{gr+rg}^{{\rm{\pi }}}\) is the probability Pgr + Prg measured at π. To measure these probabilities with high accuracy, we concentrate our data-taking around the π and 2π times (Extended Data Fig. 5a), and fit the obtained values using quadratic functions \(f(t)={p}_{0}+{p}_{1}{(t-{p}_{2})}^{2}\), where t is time, and (p0, p1, p2) are free parameters. We first detail the fitting method, then how we obtain the four probabilities, and finally the extraction of the Bell state fidelity from these.

Fitting method

We perform a fit that takes into account the underlying beta distribution of the data and prevents systematic errors arising from assuming a Gaussian distribution of the data. The aim of the fit is to obtain the three-dimensional probability density function Q(p0, p1, p2) of f, using each experimental data point i defined by its probability density function \({{\mathcal{P}}}_{i}(x)\), where x is a probability. To obtain a particular value of \(Q({\widetilde{p}}_{0},{\widetilde{p}}_{1},{\widetilde{p}}_{2})\), we look at the corresponding probability density function value \({{\mathcal{P}}}_{i}(\,f({t}_{i}))\) for each data point i, where \(f({t}_{i})={\widetilde{p}}_{0}+{\widetilde{p}}_{1}{({t}_{i}-{\widetilde{p}}_{2})}^{2}\), and assign the product of each \({{\mathcal{P}}}_{i}(\,f({t}_{i}))\) to the fit likelihood function:

\(Q({\widetilde{p}}_{0},{\widetilde{p}}_{1},{\widetilde{p}}_{2})=\prod _{i}{{\mathcal{P}}}_{i}(\,f({t}_{i}))\)

We repeat this for various \([{\widetilde{p}}_{0},{\widetilde{p}}_{1},{\widetilde{p}}_{2}]\).

The result of this fitting method is shown in Extended Data Fig. 5b (black line), where we present \(f(t)={p}_{0}+{p}_{1}{(t-{p}_{2})}^{2}\) for [p0, p1, p2] corresponding to the maximum value of Q(p0, p1, p2). We emphasize that this yields a lower peak value than a standard fitting procedure that assumes Gaussian-distributed experimental probabilities (red line). This lower peak value ultimately provides a more conservative, but more accurate, value for the Bell state fidelity lower bound than the naive Gaussian approach.
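The likelihood construction can be sketched with a brute-force grid search. This is a minimal sketch, assuming each data point is summarized by k successes in n shots with a uniform prior, so that its density is Beta(k + 1, n − k + 1); the grid, the helper names and the synthetic data are hypothetical, and a real analysis would scan a much finer grid around the π and 2π times.

```python
import itertools
import math


def beta_logpdf(x, a, b):
    """Log density of Beta(a, b) at x; -inf outside the open interval (0, 1)."""
    if not 0.0 < x < 1.0:
        return -math.inf
    return ((a - 1.0) * math.log(x) + (b - 1.0) * math.log1p(-x)
            + math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b))


def log_likelihood(params, times, successes, shots):
    """log Q(p0, p1, p2) = sum_i log P_i(f(t_i)), with f(t) = p0 + p1 (t - p2)^2
    and P_i = Beta(k_i + 1, n_i - k_i + 1)."""
    p0, p1, p2 = params
    total = 0.0
    for t, k, n in zip(times, successes, shots):
        total += beta_logpdf(p0 + p1 * (t - p2) ** 2, k + 1, n - k + 1)
    return total


def grid_fit(times, successes, shots, p0_grid, p1_grid, p2_grid):
    """Return the grid point (p0, p1, p2) that maximizes Q."""
    return max(itertools.product(p0_grid, p1_grid, p2_grid),
               key=lambda p: log_likelihood(p, times, successes, shots))


# Synthetic data around a maximum (time in arbitrary units), hypothetical.
times = [-2.0, -1.0, 0.0, 1.0, 2.0]
shots = [1000] * 5
successes = [round(1000 * (0.99 - 0.01 * t ** 2)) for t in times]
best = grid_fit(times, successes, shots,
                p0_grid=[0.97, 0.98, 0.99, 0.995],
                p1_grid=[-0.02, -0.01, -0.005],
                p2_grid=[-0.5, 0.0, 0.5])
print(best)
```

Because the Beta density is evaluated exactly rather than approximated by a Gaussian, points near 0 or 1 are weighted asymmetrically, which is what pulls the fitted peak below the Gaussian estimate.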

Obtaining the four probability distributions

Our method to obtain the probability density functions of the four probabilities at π and 2π times ensures both that the sum of the four probabilities always equals one and that their mutual correlations are preserved. We first extract the beta distribution of Prr by gathering all the data around the π and 2π times (Extended Data Fig. 5c). In particular, the mode of the obtained beta distribution at π is Prr ≈ 0.0005. The distributions of Pgr+rg and Pgg are obtained by fitting the data in the following way. We perform a joint fit on Pgr+rg using a fit function f1(t), and on Pgg using a fit function f2(t). The fit functions are expressed as:

\({f}_{1}(t)={p}_{0}+{p}_{1}{(t-{p}_{2})}^{2},\qquad {f}_{2}(t)=1-{P}_{rr}-{f}_{1}(t),\)

which ensures that the sum of the four probabilities is always equal to 1. We then calculate the joint probability density function Q1,2(p0, p1, p2) of both f1 and f2 using the method described above. Explicitly:

\({Q}_{1,2}({p}_{0},{p}_{1},{p}_{2})=\prod _{i}{{\mathcal{P}}}_{i}^{gr+rg}(\,{f}_{1}({t}_{i}))\,{{\mathcal{P}}}_{i}^{gg}(\,{f}_{2}({t}_{i})),\)

where \({{\mathcal{P}}}_{i}^{gr+rg}\) (\({{\mathcal{P}}}_{i}^{gg}\)) is the probability density function associated with Pgr+rg (Pgg) for the ith experimental data point. In particular, we impose that p0 ≤ 1 − Prr to avoid negative probabilities. We show the resulting Q1,2(p0, p1, p2) in Extended Data Fig. 5d as two-dimensional maps along (p0, p1) and (p0, p2).

We then obtain the one-dimensional probability density function for p0 by integrating over p1 and p2 (Extended Data Fig. 5d). This provides the fitted probability density function of Pgr+rg, and hence Pgg = 1 − Prr − Pgr − Prg at π time. We repeat this process for various values of Prr, for both π and 2π times.
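Integrating the joint likelihood over p1 and p2 can be sketched on a grid. This is a minimal sketch, assuming flat priors, a uniform grid and a user-supplied log-likelihood that returns −inf where the constraint p0 ≤ 1 − Prr is violated; log-sum-exp keeps the sums numerically stable. The function name and the toy likelihood are hypothetical.

```python
import math


def marginal_p0(log_q_fn, p0_grid, p1_grid, p2_grid):
    """Marginalize a gridded log-likelihood over p1 and p2.

    Returns normalized probabilities, one per p0 grid value
    (flat priors and uniform grid spacing assumed).
    """
    log_marg = []
    for p0 in p0_grid:
        terms = [log_q_fn(p0, p1, p2) for p1 in p1_grid for p2 in p2_grid]
        top = max(terms)
        if top == -math.inf:
            log_marg.append(-math.inf)  # every (p1, p2) excluded for this p0
            continue
        log_marg.append(top + math.log(sum(math.exp(x - top) for x in terms)))
    peak = max(log_marg)
    weights = [math.exp(x - peak) if x != -math.inf else 0.0 for x in log_marg]
    norm = sum(weights)
    return [w / norm for w in weights]


# Toy separable log-likelihood with the constraint p0 <= 1 - Prr (hypothetical).
PRR = 0.0005


def toy_log_q(p0, p1, p2):
    if p0 > 1.0 - PRR:
        return -math.inf
    return -((p0 - 0.95) ** 2) / 1e-3 - p1 ** 2


probs = marginal_p0(toy_log_q, [0.90, 0.95, 0.99, 1.00], [-0.02, -0.01], [0.0, 0.5])
print(probs)
```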

At the end of this process, we obtain different probability density functions for each Prr value. The asymmetry between Pgr and Prg is obtained by taking the mean of Pgr − Prg at π and 2π times. We assume the underlying distribution to be Gaussian, as Pgr − Prg is centred on 0, and can be positive or negative with equal probability.

Bell state fidelity

Now that we have the probability density function for all four probabilities at π and 2π times, we move on to the Bell state fidelity extraction. For both π and 2π, we perform a Monte Carlo sampling of the beta distribution of Prr, which then leads to a joint probability density function for Pgr+rg and Pgg. We then sample from this, and use equation (3) to obtain a value for the Bell state fidelity lower bound. We repeat this process 1 million times, and fit the obtained results using a beta distribution (Extended Data Fig. 5e). We observe an excellent agreement between the fit and the data, from which we obtain \({F}_{{\rm{Bell}}}\ge 0.996{2}_{-13}^{+10}\), where the quoted value is the mode of the distribution and the error bars represent the 68% confidence interval.
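The sampling-and-summary step can be sketched as follows. For illustration, the actual fidelity samples are replaced with draws from a known Beta distribution; we fit a Beta distribution by the method of moments (a simple choice, not necessarily the fitting procedure used here) and report its mode together with an equal-tailed 68% interval taken from the empirical sample quantiles. Function names and the stand-in distribution are hypothetical.

```python
import random


def fit_beta_moments(samples):
    """Method-of-moments Beta(a, b) fit to samples lying in (0, 1)."""
    n = len(samples)
    mean = sum(samples) / n
    var = sum((x - mean) ** 2 for x in samples) / (n - 1)
    common = mean * (1.0 - mean) / var - 1.0
    return mean * common, (1.0 - mean) * common


def summarize(samples, coverage=0.68):
    """Mode of the fitted Beta plus an equal-tailed empirical interval."""
    a, b = fit_beta_moments(samples)
    mode = (a - 1.0) / (a + b - 2.0)  # valid for a, b > 1
    ordered = sorted(samples)
    n = len(ordered)
    tail = (1.0 - coverage) / 2.0
    lo = ordered[int(tail * n)]
    hi = ordered[min(n - 1, int((1.0 - tail) * n))]
    return mode, lo, hi


# Stand-in for the Monte Carlo fidelity samples (hypothetical distribution).
rng = random.Random(1)
samples = [rng.betavariate(900, 3) for _ in range(100_000)]
mode, lo, hi = summarize(samples)
print(f"F >= {mode:.4f} (+{hi - mode:.4f} / -{mode - lo:.4f})")
```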

We use the same method to obtain the measurement-corrected and SPAM-corrected Bell fidelities. After drawing the probabilities from the probability density functions, we infer the SPAM-corrected probabilities from our known errors, described in detail previously13, using the values reported in Extended Data Table 2. During this process, there is a finite chance that the probabilities do not sum to one. This arises because the probability density functions and the SPAM correction are uncorrelated, an issue that is avoided for raw Bell fidelity extraction owing to the correlated fit procedure described above. We alleviate this issue with a form of rejection sampling, restarting the whole process whenever such an event occurs. We perform this 1 million times, and fit the obtained results using a beta distribution (Extended Data Fig. 5f). We observe an excellent agreement between the fit and the data, from which we obtain a SPAM-corrected fidelity \({F}_{{\rm{Bell}}}\ge 0.998{5}_{-12}^{+7}\), where the quoted value is the mode of the distribution and the error bars represent the 68% confidence interval.

Interaction limitation for Bell fidelity

We estimate the theoretically expected Bell state fidelity using perturbation analysis. Specifically, the resonant blockaded Rabi oscillation for an interacting atom pair is described by the Hamiltonian

\(\frac{\widehat{H}}{\hbar }=\frac{\varOmega }{2}({\widehat{X}}_{1}+{\widehat{X}}_{2})+V{\widehat{n}}_{1}{\widehat{n}}_{2},\)

where V = C6/r6 is the distance-dependent interaction strength between two atoms separated by a distance r (equation (2)). As the two-atom initial ground state, \(\left|\psi (0)\right\rangle =\left|gg\right\rangle \), has even parity under the left–right reflection symmetry, the Rabi oscillation dynamics can be solved in the even-parity subspace spanned by the three basis states \(\left|gg\right\rangle \), \(\left|rr\right\rangle \) and \(\left|{\varPsi }^{+}\right\rangle =\frac{1}{\sqrt{2}}(\left|gr\right\rangle +\left|rg\right\rangle )\). In the Rydberg-blockaded regime where V ≫ Ω, we can perform perturbation analysis with the perturbation parameter \(\eta =\varOmega /\sqrt{2}V\) and find that the energy eigenvectors of the subspace are approximated as

with corresponding energy eigenvalues E1 ≈ V(−η − η²/2), E2 ≈ V(η − η²/2) and E3 ≈ V(1 + η²), respectively (the three eigenvalues sum to V, the trace of the Hamiltonian in this subspace). Rewriting the initial state in the perturbed eigenbasis, we solve

to obtain an analytical expression for the maximum achievable Bell state fidelity, FBell, at a given perturbation strength η. Keeping terms up to second order in η, we find

obtained at \(t={\rm{\pi }}/\sqrt{2}\varOmega \).
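The perturbative result can be cross-checked numerically. Carrying the expansion of ⟨Ψ+|ψ(t)⟩ to second order, as an independent estimate rather than a reproduction of the original expression, gives a maximum fidelity F_Bell ≈ 1 − (5/4)η², consistent with E1 and E2 as quoted above. The sketch diagonalizes the 3 × 3 even-parity Hamiltonian with NumPy (energies in units of V, couplings ⟨Ψ+|H|gg⟩ = ⟨rr|H|Ψ+⟩ = Ω/√2 = ηV), scans times around t = π/√2Ω and compares the two; function names are hypothetical.

```python
import numpy as np


def max_bell_fidelity(eta, n_times=4001):
    """Max over t of |<Psi+|psi(t)>|^2, starting from |gg>, in the
    basis (|gg>, |Psi+>, |rr>) with energies in units of V."""
    g = eta  # coupling Omega/sqrt(2) = eta * V, with V = 1
    h = np.array([[0.0, g, 0.0],
                  [g, 0.0, g],
                  [0.0, g, 1.0]])
    energies, vecs = np.linalg.eigh(h)  # columns of vecs are eigenvectors
    coeffs = vecs[0, :]                 # overlaps <E_k|gg> (real here)
    t_pi = np.pi / (2.0 * g)            # = pi / (sqrt(2) Omega)
    times = np.linspace(0.9 * t_pi, 1.1 * t_pi, n_times)
    phases = np.exp(-1j * np.outer(times, energies))  # shape (n_times, 3)
    states = (phases * coeffs) @ vecs.T  # row m: psi(times[m]) in bare basis
    return float(np.max(np.abs(states[:, 1]) ** 2))


def second_order_estimate(eta):
    """Perturbative estimate F ~ 1 - 5 eta^2 / 4."""
    return 1.0 - 1.25 * eta ** 2


for eta in (0.005, 0.01, 0.02):
    print(eta, 1 - max_bell_fidelity(eta), 1 - second_order_estimate(eta))
```

For η ≈ 0.0047 (Ω/2π = 6.2 MHz at 2.5 μm with the calibrated C6), this estimate corresponds to an interaction-limited infidelity of roughly 3 × 10⁻⁵.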

Statistics reduction due to erasure excision

Our demonstration of erasure excision explicitly discards some experimental realizations (Extended Data Fig. 6), which can be seen as a downside of the method. However, this is a controllable trade-off: by adjusting the threshold for detecting an erasure error, we can balance gains in fidelity against losses in experimental statistics (Extended Data Fig. 7) for whatever task is of interest. In general, the optimum is likely to include some amount of erasure excision, as it is usually better to remove erroneous data than to keep them.
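A back-of-the-envelope view of the statistics cost: if every atom independently carries a probability p of a flagged erasure, a shot of N atoms survives excision with probability (1 − p)^N, so the discarded fraction grows with system size, in line with the trend of Extended Data Fig. 6c. Independence between sites is an assumption, and the per-atom probability below is a hypothetical placeholder, as are the function names.

```python
def kept_fraction(p_erasure, n_atoms):
    """Fraction of shots with no flagged erasure, assuming independent sites."""
    return (1.0 - p_erasure) ** n_atoms


def expected_erasures(p_erasure, n_atoms):
    """Mean number of flagged erasures per shot."""
    return p_erasure * n_atoms


P_ERASURE = 0.01  # hypothetical per-atom probability of a flagged erasure
for n in (2, 10, 20, 40):
    print(n, round(kept_fraction(P_ERASURE, n), 3), expected_erasures(P_ERASURE, n))
```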

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Code availability

The code that supports the findings of this study is available from the corresponding author upon reasonable request.

References

Preskill, J. Quantum computing in the NISQ era and beyond. Quantum 2, 79 (2018).

Shor, P. W. Scheme for reducing decoherence in quantum computer memory. Phys. Rev. A 52, R2496 (1995).

Knill, E., Laflamme, R. & Zurek, W. Threshold accuracy for quantum computation. Preprint at https://arxiv.org/abs/quant-ph/9610011 (1996).

Saffman, M. Quantum computing with atomic qubits and Rydberg interactions: progress and challenges. J. Phys. B 49, 202001 (2016).

Scholl, P. et al. Quantum simulation of 2D antiferromagnets with hundreds of Rydberg atoms. Nature 595, 233–238 (2021).

Ebadi, S. et al. Quantum phases of matter on a 256-atom programmable quantum simulator. Nature 595, 227–232 (2021).

Wu, Y., Kolkowitz, S., Puri, S. & Thompson, J. D. Erasure conversion for fault-tolerant quantum computing in alkaline earth Rydberg atom arrays. Nat. Commun. 13, 4657 (2022).

Sahay, K., Jin, J., Claes, J., Thompson, J. D. & Puri, S. High threshold codes for neutral atom qubits with biased erasure errors. Preprint at https://arxiv.org/abs/2302.03063 (2023).

Grassl, M., Beth, T. & Pellizzari, T. Codes for the quantum erasure channel. Phys. Rev. A 56, 33–38 (1997).

Kang, M., Campbell, W. C. & Brown, K. R. Quantum error correction with metastable states of trapped ions using erasure conversion. PRX Quantum 4, 020358 (2023).

Teoh, J. D. et al. Dual-rail encoding with superconducting cavities. Preprint at https://arxiv.org/abs/2212.12077 (2022).

Kubica, A., Haim, A., Vaknin, Y., Brandão, F. & Retzker, A. Erasure qubits: overcoming the T1 limit in superconducting circuits. Preprint at https://arxiv.org/abs/2208.05461 (2022).

Madjarov, I. S. et al. High-fidelity entanglement and detection of alkaline-earth Rydberg atoms. Nat. Phys. 16, 857–861 (2020).

Levine, H. et al. Parallel implementation of high-fidelity multiqubit gates with neutral atoms. Phys. Rev. Lett. 123, 170503 (2019).

Clark, C. R. et al. High-fidelity Bell-state preparation with 40Ca+ optical qubits. Phys. Rev. Lett. 127, 130505 (2021).

Negîrneac, V. et al. High-fidelity controlled-z gate with maximal intermediate leakage operating at the speed limit in a superconducting quantum processor. Phys. Rev. Lett. 126, 220502 (2021).

Bernien, H. et al. Probing many-body dynamics on a 51-atom quantum simulator. Nature 551, 579–584 (2017).

Choi, J. et al. Preparing random states and benchmarking with many-body quantum chaos. Nature 613, 468–473 (2023).

Cooper, A. et al. Alkaline-earth atoms in optical tweezers. Phys. Rev. X 8, 041055 (2018).

Norcia, M. A., Young, A. W. & Kaufman, A. M. Microscopic control and detection of ultracold strontium in optical-tweezer arrays. Phys. Rev. X 8, 041054 (2018).

Saskin, S., Wilson, J. T., Grinkemeyer, B. & Thompson, J. D. Narrow-line cooling and imaging of ytterbium atoms in an optical tweezer array. Phys. Rev. Lett. 122, 143002 (2019).

Jenkins, A., Lis, J. W., Senoo, A., McGrew, W. F. & Kaufman, A. M. Ytterbium nuclear-spin qubits in an optical tweezer array. Phys. Rev. X 12, 021027 (2022).

Burgers, A. P. et al. Controlling Rydberg excitations using ion-core transitions in alkaline-earth atom-tweezer arrays. PRX Quantum 3, 020326 (2022).

Ma, S. et al. Universal gate operations on nuclear spin qubits in an optical tweezer array of 171Yb atoms. Phys. Rev. X 12, 021028 (2022).

Bruzewicz, C. D., Chiaverini, J., McConnell, R. & Sage, J. M. Trapped-ion quantum computing: progress and challenges. Appl. Phys. Rev. 6, 021314 (2019).

Kjaergaard, M. et al. Superconducting qubits: current state of play. Annu. Rev. Condens. Matter Phys. 11, 369–395 (2020).

Lukin, M. D. et al. Dipole blockade and quantum information processing in mesoscopic atomic ensembles. Phys. Rev. Lett. 87, 037901 (2001).

Gaëtan, A. et al. Observation of collective excitation of two individual atoms in the Rydberg blockade regime. Nat. Phys. 5, 115–118 (2009).

Isenhower, L. et al. Demonstration of a neutral atom controlled-not quantum gate. Phys. Rev. Lett. 104, 010503 (2010).

Bergschneider, A. et al. Spin-resolved single-atom imaging of 6Li in free space. Phys. Rev. A 97, 063613 (2018).

Endres, M. et al. Atom-by-atom assembly of defect-free one-dimensional cold atom arrays. Science 354, 1024–1027 (2016).

Barredo, D., de Léséleuc, S., Lienhard, V., Lahaye, T. & Browaeys, A. An atom-by-atom assembler of defect-free arbitrary two-dimensional atomic arrays. Science 354, 1021–1023 (2016).

Hayes, D. et al. Eliminating leakage errors in hyperfine qubits. Phys. Rev. Lett. 124, 170501 (2020).

Stricker, R. et al. Experimental deterministic correction of qubit loss. Nature 585, 207–210 (2020).

McEwen, M. et al. Removing leakage-induced correlated errors in superconducting quantum error correction. Nat. Commun. 12, 1761 (2021).

Levine, H. et al. High-fidelity control and entanglement of Rydberg-atom qubits. Phys. Rev. Lett. 121, 123603 (2018).

Löw, R. et al. An experimental and theoretical guide to strongly interacting Rydberg gases. J. Phys. B 45, 113001 (2012).

Schymik, K.-N. et al. Single atoms with 6000-second trapping lifetimes in optical-tweezer arrays at cryogenic temperatures. Phys. Rev. Appl. 16, 034013 (2021).

Fendley, P., Sengupta, K. & Sachdev, S. Competing density-wave orders in a one-dimensional hard-Boson model. Phys. Rev. B 69, 075106 (2004).

Omran, A. et al. Generation and manipulation of Schrödinger cat states in Rydberg atom arrays. Science 365, 570–574 (2019).

Carmichael, H. J. Quantum trajectory theory for cascaded open systems. Phys. Rev. Lett. 70, 2273–2276 (1993).

Evered, S. J. et al. High-fidelity parallel entangling gates on a neutral-atom quantum computer. Nature https://doi.org/10.1038/s41586-023-06481-y (2023).

Barnes, K. et al. Assembly and coherent control of a register of nuclear spin qubits. Nat. Commun. 13, 2779 (2022).

Arute, F. et al. Quantum supremacy using a programmable superconducting processor. Nature 574, 505–510 (2019).

Ebadi, S. et al. Quantum optimization of maximum independent set using Rydberg atom arrays. Science 376, 1209–1215 (2022).

Pezzè, L., Smerzi, A., Oberthaler, M. K., Schmied, R. & Treutlein, P. Quantum metrology with nonclassical states of atomic ensembles. Rev. Mod. Phys. 90, 035005 (2018).

Skinner, B., Ruhman, J. & Nahum, A. Measurement-induced phase transitions in the dynamics of entanglement. Phys. Rev. X 9, 031009 (2019).

Li, Y., Chen, X. & Fisher, M. P. A. Quantum Zeno effect and the many-body entanglement transition. Phys. Rev. B 98, 205136 (2018).

Garratt, S. J., Weinstein, Z. & Altman, E. Measurements conspire nonlocally to restructure critical quantum states. Phys. Rev. X 13, 021026 (2023).

Ma, S. et al. High-fidelity gates and mid-circuit erasure conversion in an atomic qubit. Nature https://doi.org/10.1038/s41586-023-06438-1 (2023).

Deist, E., Gerber, J. A., Lu, Y.-H., Zeiher, J. & Stamper-Kurn, D. M. Superresolution microscopy of optical fields using tweezer-trapped single atoms. Phys. Rev. Lett. 128, 083201 (2022).

Shaw, A. L. et al. Dark-state enhanced loading of an optical tweezer array. Phys. Rev. Lett. 130, 193402 (2023).

Graham, T. M. et al. Multi-qubit entanglement and algorithms on a neutral-atom quantum computer. Nature 604, 457–462 (2022).

Covey, J. P., Madjarov, I. S., Cooper, A. & Endres, M. 2000-times repeated imaging of strontium atoms in clock-magic tweezer arrays. Phys. Rev. Lett. 122, 173201 (2019).

Shaw, A. L. et al. Multi-ensemble metrology by programming local rotations with atom movements. Preprint at https://arxiv.org/abs/2303.16885 (2023).

Samajdar, R., Choi, S., Pichler, H., Lukin, M. D. & Sachdev, S. Numerical study of the chiral ℤ3 quantum phase transition in one spatial dimension. Phys. Rev. A 98, 023614 (2018).

de Léséleuc, S., Barredo, D., Lienhard, V., Browaeys, A. & Lahaye, T. Analysis of imperfections in the coherent optical excitation of single atoms to Rydberg states. Phys. Rev. A 97, 053803 (2018).

Acknowledgements

We acknowledge discussions with, and feedback from, H. Pichler, H. Bernien, J. Preskill, J. Covey, C. Pattinson, K. Slagle, H. Manetsch, J. Thompson, K. Leung, E. Bataille and I. Madjarov. We acknowledge support from the Institute for Quantum Information and Matter, an NSF Physics Frontiers Center (NSF Grant PHY-1733907), the DARPA ONISQ programme (W911NF2010021), the NSF CAREER award (1753386), the AFOSR YIP (FA9550-19-1-0044), the NSF QLCI programme (2016245) and the US Department of Energy, Office of Science, National Quantum Information Science Research Centers, Quantum Systems Accelerator. P.S. acknowledges support from the IQIM postdoctoral fellowship. R.B.-S.T. acknowledges support from the Taiwan-Caltech Fellowship. R.F. acknowledges support from the Troesh postdoctoral fellowship.

Author information


Contributions

P.S., A.L.S. and M.E. conceived the idea and experiment. P.S., A.L.S., R.B.-S.T., R.F. and J.C. performed the experiments, data analysis and numerical simulations. P.S., A.L.S., R.B.-S.T., R.F. and J.C. contributed to the experimental set-up. P.S., A.L.S. and M.E. wrote the paper with input from all authors. M.E. supervised this project.


Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature thanks Wolfgang Lechner and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data figures and tables

Extended Data Fig. 1 Fast imaging on the erasure detection subspace.

a, Sketch of the involved energy levels. We detect atoms in 1S0 by strongly driving the 1S0 ↔ 1P1 transition. b, Survival of atoms in 1S0 (green) and number of detected photons (red) as a function of the imaging time. The number of detected photons keeps increasing even after the atoms are lost: although the kinetic energy of the atoms is too large to keep them trapped, their mean position remains centred on the tweezers thanks to the use of two counter-propagating beams with equal power. After approximately 24 μs, the atomic spread becomes too large to measure a significant increase in detected photons. c, Typical histograms of the number of detected photons for 24 μs imaging. Using a slow, high-fidelity image before the fast image, we can detect whether a tweezer is empty (blue) or filled (red). The typical detection fidelity, which corresponds to equal error probabilities in detecting the absence or presence of an atom, is 98.0(1)%. d, Losses from 3P0 as a function of time, expressed in number of fast images. The survival probability of an atom in 3P0 is 99.99954(12)% for one image, consistent with its 5 s lifetime.

Extended Data Fig. 2 Rydberg lifetime.

a, Pseudo-level diagram of the Rydberg dynamics and associated decay channels. During Rydberg evolution with Rabi frequency Ω, the Rydberg atom can decay either into a set of states that are ‘bright’ to the imaging process (including both the erasure images and the final detection images), e.g. states such as 5s5p 3P2, or into states that are ‘dark’ to the imaging process, e.g. nearby Rydberg states. A small percentage of decays into ‘bright’ states can go directly into 3P0, where they can be re-excited by the Rydberg driving; note that such decays are dark in the erasure image but bright in the final detection image. b, Measurement of the dark state decay lifetime, performed by applying a π-pulse on the Rydberg transition, waiting a variable amount of time, and then returning atoms to the ground state (inset). c, Measurement of the bright state decay lifetime, performed by applying a Rydberg π-pulse, waiting, and then applying an auto-ionization pulse to destroy any remaining Rydberg or dark state excitations. d, We prepare atoms in 3P2 (a bright state) and then perform continuous Rydberg driving. Atoms are lost from the trap at a rate that increases with increasing Rabi frequency. e, The lifetime of atoms in 3P2 scales inversely with the square of the Rabi frequency (that is, inversely with the intensity of the Rydberg beam). We attribute this to a photo-ionization process that can convert bright state decay into dark state decay through prolonged Rydberg excitation, as shown in a. Markers in b–d are experimental data, where error bars are often smaller than the marker size, and solid lines represent exponential fits.

Extended Data Fig. 3 Experimental sequence.

Sketch of the experimental sequence, including the erasure detection, for a, the Bell state generation experiment and b, the many-body experiment. Both experiments have the same global architecture: we start by loading the atoms into the desired geometry, then initialize the atoms in \(\left|g\right\rangle \), perform the Bell state generation or quantum simulation, and finally read out by auto-ionizing atoms in \(\left|r\right\rangle \) and imaging atoms in \(\left|g\right\rangle \). The main difference between the two experiments concerns the erasure detection. In a, we use a single erasure image, placed after auto-ionizing atoms in \(\left|r\right\rangle \). In b, we perform two erasure images: one before applying \(\widehat{U}(t)\), and one after auto-ionization.

Extended Data Fig. 4 Coherence preservation during erasure detection.

a,b, We prepare the Bell state \(\left|{\Psi }^{+}\right\rangle \) and measure the populations relevant for Bell fidelity extraction, Pgr + Prg and Pgg (after an extra π pulse), as a function of holding time. We apply a 2π pulse in the middle of the holding time to echo out dephasing due to, for example, the Doppler effect. We present the results with (blue) and without (red) erasure imaging during the holding time. We observe no significant difference between the two conditions, which suggests that the erasure imaging light does not measurably degrade the coherence of the Bell state.

Extended Data Fig. 5 Bell state fidelity measurement from blockaded Rabi oscillations.

a, Probabilities of measuring both atoms in the ground state, Pgg (blue markers), and a single atom in the ground state, Pgr + Prg (red markers), in the blockaded regime as a function of excitation time. Solid lines are guides to the eye. b, Zoom around the π time, where we prepare the Bell state \(\left|{\Psi }^{+}\right\rangle \) (see main text). We use a quadratic fit function of the form \(f(x)={p}_{0}+{p}_{1}{(x-{p}_{2})}^{2}\) to extract the population values at the π and 2π times. We show the fit results (i) assuming the experimental data have a Gaussian uncertainty (red line), and (ii) using their true Beta distribution (black line). c,d, Fitting method used to obtain the probability distributions of Prr, Pgg and Pgr + Prg at the π and 2π times. We first obtain the experimental Beta distribution of the probability Prr to observe both atoms in the Rydberg state. We then perform a joint fit on Pgr + Prg and Pgg with the same p1 and p2 fit coefficients for both. We fix the value of Prr and constrain the joint fit such that the sum of all probabilities always equals one. We repeat this process for various values of Prr. The results shown here are for Prr = 0.0005, the mode of the obtained Beta distribution for Prr. The fitting method uses the true, experimentally measured Beta distribution of each data point, and yields a probability density function of Pgr + Prg for each value of Prr. We perform this procedure independently for the π and 2π times. e, Resulting Bell state fidelity lower bound using the probability density functions of Prr and Pgr + Prg. We first draw a value of Prr at random from its distribution, then take the corresponding probability density function of Pgr + Prg and draw a value from it. The asymmetry between Pgr and Prg is obtained by averaging over the experimental data points and is assumed to be Gaussian. We repeat this process 1 million times for both the π and 2π times. We obtain the corresponding probability density function (blue line), which we fit using a Beta distribution (orange dashed line). f, SPAM-corrected Bell state fidelity lower bound distribution, obtained by correcting the probabilities after randomly drawing them from their respective probability density functions.
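The Monte Carlo propagation in panel e can be sketched in Python as below. This is a minimal sketch under stated assumptions, not the authors' analysis code: it treats Prr and Pgr + Prg as independent Beta posteriors built from hypothetical shot counts under a uniform prior (the caption instead conditions the Pgr + Prg distribution on each drawn Prr through the joint fit), it omits the Gaussian Pgr/Prg asymmetry term, and `placeholder_bound` is a hypothetical stand-in for the fidelity lower-bound formula, which this caption does not give. The propagated samples are fit to a Beta distribution by moment matching, which may differ from the fit used for the orange dashed line.

```python
import numpy as np


def beta_params_from_counts(successes, trials):
    """Beta posterior parameters for a binomial probability.

    Assumes a uniform Beta(1, 1) prior (an illustrative choice).
    """
    return successes + 1, trials - successes + 1


def fit_beta_moments(samples):
    """Fit a Beta distribution by matching the sample mean and variance."""
    mean = samples.mean()
    var = samples.var()
    common = mean * (1.0 - mean) / var - 1.0
    return mean * common, (1.0 - mean) * common


def placeholder_bound(p_single, p_rr):
    """HYPOTHETICAL stand-in for the fidelity lower bound.

    Replace with the actual bound; this simply penalizes double-Rydberg
    population and clips the result to the physical range [0, 1].
    """
    return np.clip(p_single - p_rr, 0.0, 1.0)


def propagate(counts_rr, counts_single, bound_fn=placeholder_bound,
              n_draws=1_000_000, seed=0):
    """Draw probabilities from their Beta posteriors and propagate them.

    counts_rr, counts_single: (successes, trials) tuples (hypothetical inputs).
    Returns the propagated samples and the moment-matched Beta parameters.
    """
    rng = np.random.default_rng(seed)
    p_rr = rng.beta(*beta_params_from_counts(*counts_rr), size=n_draws)
    p_single = rng.beta(*beta_params_from_counts(*counts_single), size=n_draws)
    samples = bound_fn(p_single, p_rr)
    return samples, fit_beta_moments(samples)
```

Calling `propagate(counts_rr, counts_single)` with measured (successes, trials) tuples returns the propagated samples and the fitted Beta parameters. Moment matching is used here for brevity; a maximum-likelihood fit such as `scipy.stats.beta.fit` is an alternative.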

Extended Data Fig. 6 System size scaling of sweep fidelity.

a, Total probability of forming either of the \({{\mathbb{Z}}}_{2}\) states after the quasi-adiabatic sweeps presented in Fig. 3, shown for the final sweep time as a function of system size for the full erasure-excision (blue markers), preparation erasure-excision (orange markers) and baseline (pink markers) data. b, The gain ratio from using full erasure-excision grows with system size, both relative to the baseline values (pink markers) and relative to excising only preparation erasures (orange markers). Solid lines are linear guides to the eye. c, For a fixed detection threshold of 5 photons, the number of erasure errors also increases with system size.
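The quantities in panels a and b can be sketched in Python as below. This is a minimal illustration under stated assumptions, not the authors' analysis code: it assumes a one-dimensional chain whose final readout is stored as 0/1 arrays (1 = Rydberg, an assumed convention), and the per-shot erasure flags `prep_erasure` and `any_erasure` are hypothetical inputs standing in for the preparation and full erasure images. All helper names are likewise hypothetical.

```python
import numpy as np


def z2_patterns(n_atoms):
    """Return the two Z2 (antiferromagnetic) patterns for a 1D chain.

    Assumed convention: 1 = Rydberg (r), 0 = ground (g), so the two
    patterns are r g r g ... and g r g r ...
    """
    pattern = np.arange(n_atoms) % 2 == 0
    return pattern.astype(int), (~pattern).astype(int)


def z2_probability(shots, keep=None):
    """Fraction of retained shots that end in either Z2 state.

    shots: (n_shots, n_atoms) array of 0/1 readouts.
    keep: optional boolean mask of shots to retain (e.g. erasure-free shots).
    """
    shots = np.asarray(shots)
    if keep is not None:
        shots = shots[np.asarray(keep, dtype=bool)]
    a, b = z2_patterns(shots.shape[1])
    is_z2 = np.all(shots == a, axis=1) | np.all(shots == b, axis=1)
    return is_z2.mean()


def gain_ratios(shots, prep_erasure, any_erasure):
    """Gain of full excision over the baseline and over prep-only excision.

    prep_erasure, any_erasure: per-shot booleans flagging an erasure detected
    during preparation / in any erasure image (hypothetical inputs).
    """
    baseline = z2_probability(shots)
    prep = z2_probability(shots, keep=~np.asarray(prep_erasure, dtype=bool))
    full = z2_probability(shots, keep=~np.asarray(any_erasure, dtype=bool))
    return full / baseline, full / prep
```

The two returned values correspond to the ratios plotted in panel b: full excision over the baseline, and full excision over preparation-only excision. If every shot is excised, the mean of the empty selection is undefined and NumPy returns `nan` with a warning.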

Extended Data Fig. 7 Controlling fidelity gains from erasure-excision.

Erasure-excision explicitly trades improved experimental fidelity for a reduced number of experimental repetitions. However, this trade-off is controllable through the threshold used to detect atoms in the erasure images. Changing the threshold changes the false-positive and false-negative rates for detecting erasures (inset). We plot the total AFM probability (green markers) after a sweep as in Fig. 3 of the main text while varying the detection threshold used to identify erasures. If the threshold is too high, many erasure events go unnoticed, so erroneous outcomes become relatively more prevalent, reducing the overall fidelity. As the threshold is lowered, more true erasures, in which an atom is actually present in the erasure image, are correctly detected, improving the fidelity. However, lowering the threshold too far (in our case, below roughly 5 photons) increases the likelihood of false-positive erasures; excising data based on these events discards experimental statistics with little gain in fidelity. In the main text, we use a detection threshold of 5 photons.
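A threshold scan like the one in this figure can be sketched as follows. This is a hypothetical illustration, not the authors' code: it assumes per-site photon counts from a single erasure image, flags an erasure when a site collects at least `threshold` photons (the ≥ convention is an assumption), and takes a per-shot AFM outcome `is_afm` as a given input. The function names are hypothetical.

```python
import numpy as np


def erasure_flags(photon_counts, threshold):
    """Flag shots with at least one detected erasure.

    photon_counts: (n_shots, n_sites) photons collected per site in the
    erasure image. A site counts as an erasure if it collects >= threshold
    photons (assumed convention).
    """
    return np.any(np.asarray(photon_counts) >= threshold, axis=1)


def threshold_scan(photon_counts, is_afm, thresholds):
    """AFM probability and kept-shot fraction after excision, per threshold.

    is_afm: per-shot booleans, True if the final readout is AFM-ordered.
    Returns a list of (threshold, afm_probability, kept_fraction) tuples.
    """
    is_afm = np.asarray(is_afm, dtype=bool)
    results = []
    for t in thresholds:
        keep = ~erasure_flags(photon_counts, t)  # discard flagged shots
        kept_fraction = keep.mean()
        afm_probability = is_afm[keep].mean() if keep.any() else float("nan")
        results.append((t, afm_probability, kept_fraction))
    return results
```

Lowering the threshold excises more shots, so `kept_fraction` falls monotonically; the figure's point is that below roughly 5 photons the additional excised shots are mostly false positives, so `afm_probability` stops improving while statistics keep being discarded.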

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Scholl, P., Shaw, A.L., Tsai, R.BS. et al. Erasure conversion in a high-fidelity Rydberg quantum simulator. Nature 622, 273–278 (2023). https://doi.org/10.1038/s41586-023-06516-4

DOI: https://doi.org/10.1038/s41586-023-06516-4
