Abstract

Reservoir computing is a neuromorphic architecture that may offer viable solutions to the growing energy costs of machine learning. In software-based machine learning, computing performance can be readily reconfigured to suit different computational tasks by tuning hyperparameters. This critical functionality is missing in ‘physical’ reservoir computing schemes that exploit nonlinear and history-dependent responses of physical systems for data processing. Here we overcome this issue with a ‘task-adaptive’ approach to physical reservoir computing. By leveraging a thermodynamical phase space to reconfigure key reservoir properties, we optimize computational performance across a diverse task set. We use the spin-wave spectra of the chiral magnet Cu2OSeO3 that hosts skyrmion, conical and helical magnetic phases, providing on-demand access to different computational reservoir responses. The task-adaptive approach is applicable to a wide variety of physical systems, which we show in other chiral magnets via above (and near) room-temperature demonstrations in Co8.5Zn8.5Mn3 (and FeGe).


Main

Physical separation between processing and memory units in conventional computer architectures causes substantial energy waste due to the repeated shuttling of data, known as the von Neumann bottleneck. To circumvent this, neuromorphic computing1,2, which draws inspiration from the brain to provide integrated memory and processing, has attracted a great deal of attention as a promising future technology. Reservoir computing3,4,5 is a type of neuromorphic architecture with complex recurrent pathways (the ‘reservoir’) that maps input data to a high-dimensional space. Weights within the reservoir are randomly initialized and fixed, and only the small one-dimensional weight vector that connects the reservoir to the output requires optimization using computationally cheap linear regression. As such, reservoir computing can achieve powerful neuromorphic computation at a fraction of the processing cost of other schemes, for example, deep neural networks, where the whole weight network (typically involving millions of nodes or more) must be trained6.

Although reservoir computing was originally conceived in software3, nonlinear and history-dependent responses of physical systems have also been exploited as reservoirs7,8. The field of physical reservoir computing has been rapidly expanding with several promising demonstrations using optical systems9, analogue electronic circuits10, memristors11,12, ferroelectrics13, magnetic systems14,15,16,17,18,19 and even a bucket of water20. Skyrmions, topologically non-trivial magnetic whirls, have also been proposed as hosts for reservoir computing21,22,23,24 and experimentally demonstrated25,26,27 as part of rapidly growing research efforts towards neuromorphic computing using magnetic systems28,29,30,31,32.

Despite such rapid development, one of the outstanding challenges for creating powerful physical reservoirs is establishing a methodology for task-adaptive control of reservoir properties8, which are often characterized by the nonlinearity, memory capacity and complexity metrics of the reservoir33,34,35,36. The difficulty is that physical systems typically have a narrow, fixed set of reservoir properties with little room for adjustment, since these metrics tend to be tied to a particular response of the physical system. As a result, a physical reservoir tends to perform well on some specific tasks but poorly on others that require different reservoir properties. This is a severe drawback relative to software reservoirs, where such properties can be tuned by changing a few lines of code.

Here, we demonstrate task-adaptive reservoir computing using the spectral space of a physical system that has rich, phase-tunable dynamical modes. As a model system for this approach, we use spin resonances of the chiral magnet Cu2OSeO3 (refs. 37,38,39). Since different magnetic phases (skyrmion, helical and conical phases) exhibit distinct resonances, they offer broadly varying reservoir properties and computing performance, which can be reconfigurably tuned via the magnetic field and temperature. We use magnetic field cycling40,41 to input data and measure the spin-wave spectra at each input step to efficiently achieve high-dimensional mapping. By quantitatively assessing each phase as a reservoir, we find that the thermodynamically metastable skyrmion phase has a strong memory capacity, due to the magnetic field-driven gradual nucleation of skyrmions, and performs excellently in future prediction tasks. By contrast, the conical phase has modes with high reservoir nonlinearity and complexity, which are ideal for transformation tasks. By making full use of this phase-tunable nature, we achieve strong performance across a broad range of tasks in a single physical system. High-temperature demonstrations of the task-adaptive physical reservoir concept using other chiral magnets, namely Co8.5Zn8.5Mn3 and FeGe, indicate that the concept is broadly applicable.

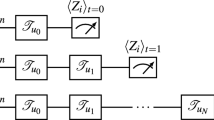

Reservoir computing scheme

Our physical reservoir (Fig. 1a) is constructed using the field- and temperature-dependent gigahertz spin dynamics of Cu2OSeO3 (ref. 38). We apply a specific sequence of magnetic field inputs and map out the spin-wave spectra of Cu2OSeO3 to form a two-dimensional matrix18. Subsequently, the reservoir matrix is multiplied by a weight vector Wout to produce the individual output value for each input. We use standard ridge regression to optimize Wout for each task with training data. The trained reservoir is then run on unseen test datasets to assess the reservoir computing performance via the mean squared error (MSE; see Methods). The rich phase diagram of Cu2OSeO3 offers multiple magnetic textural phases, including the thermodynamically metastable skyrmion phase40,41,42,43, each of which exhibits distinct spin dynamics. The task-adaptive nature of our physical reservoir comes from on-demand, reconfigurable switching between these magnetic phases via both temperature and magnetic field.

a, Illustration of a task-adaptive reservoir computing framework. Different magnetic phases are accessed by controlling the external magnetic field (H) and temperature (T). The rightmost panel shows the experimental schematic of the VNA-assisted spin-wave spectroscopy setup. VNA, vector network analyser. b, Typical input scheme for forecasting (left: Mackey–Glass signal) and transformation (right: sine wave) tasks. The original input signal, u(t), is mapped to u′(N), which is defined by the mapped field-cycling protocol (see main text and Methods for details). Note that Hrange defines the full range of applied fields, and the distance between Hlow and Hhigh at any given N is the cycling width, Hrange/2. A single field cycle is highlighted by the orange box in the transformation panel. c, S11 (denoted ΔS11 after pre-processing; see Methods) as a function of frequency f after accumulating N field cycles, and visualization of R(N, M), the collective spectral evolution over N field cycles for the skyrmion and conical phases, separated into ‘training’ and ‘test’ datasets. d, Results after applying Wout to the unseen ‘test’ dataset. Left: forecasting the chaotic Mackey–Glass time series 10 steps ahead. Right: transformation of a sine wave into a square-wave signal. In both cases, reservoir prediction (transformation) results are plotted in blue (purple), the red dotted line depicts the target signal and the grey line represents the control prediction, in which ridge regression is performed on the raw input data without the physical reservoir. MSEFC and MSETR quantify the computational performance for forecasting and transformation, respectively.

As shown in Fig. 1b, the input layer consists of sequential magnetic field values, u′ = (H1, H2, H3, …, Hn), produced by projecting a given input function of each task onto the field. Taking the transformation task as an example, each field cycle N starts with a low magnetic field Hlow, increasing to a high magnetic field Hhigh and comes back to a new Hlow, where their separation is defined by Hrange/2 with a centre field Hc. The individual field points (Hlow, Hmid and Hhigh) are modulated by the input functions tailored for specific tasks. For the transformation tasks, the input function is a sine curve encoded over 100 field cycles; our forecasting tasks use a chaotic oscillatory Mackey–Glass time series44 to modulate the field-cycling base with N, as shown in the left panel of Fig. 1b (Methods). This scheme can be applied to input any time-series dataset into the physical reservoir.

To create a two-dimensional reservoir matrix, R(N, M), we use the experimental setup illustrated in the rightmost panel of Fig. 1a to measure the microwave reflection spectra S11 of Cu2OSeO3 crystals, recording M frequency channels between 1 and 6 GHz at Hlow of each field cycle N (Methods). In this scheme, the physical reservoir effectively broadcasts a single field input value to M output values, a form of frequency multiplexing. Figure 1c shows the spectral output of our reservoir in response to input time-series datasets (left: Mackey–Glass; right: sine wave). The spectral states of each phase (left: skyrmion; right: conical) change as we perform field cycling, as seen in the individual spectra sampled at different N values in Fig. 1c. From S11(N, f) in the colour heatmap plots, we form R(N, M) as shown in the middle panel of Fig. 1a, where χij represents the magnetic susceptibility for each input field and frequency.

Using 70% of the reservoir response as the training dataset Rtrain shown in Fig. 1c, we perform ridge regression to calculate the weights Wout for a target function Y, where Y = Rtrain Wout. The calculated Wout and the remaining 30% of the reservoir response, Rtest, are then used to evaluate the reservoir performance via the MSE. Figure 1d exemplifies this final step of our reservoir computing protocol by showing the physical reservoir’s attempt (blue line) at reproducing the target signal (red dotted line) for two tasks: the left panel shows a forecast of the chaotic Mackey–Glass signal ten steps ahead (MG(N + 10)); the right panel shows the nonlinear transformation of a sine-wave input into a square-wave target. For both tasks, the low MSE values confirm the excellent performance of reservoir computing: 3.7 × 10−3 for the forecasting task with the skyrmion reservoir and 7.3 × 10−7 for the transformation task with the conical reservoir. The benefit of the physical reservoir is evident when these values are compared with those obtained by computing the same tasks without the reservoir (grey curves): 6.2 × 102 and 5.4 × 102 for the forecasting and transformation tasks, respectively.
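The readout step can be sketched in Python with NumPy. This is a minimal illustration, not the authors' code: it assumes a chronological 70/30 split, a closed-form ridge solution with a hypothetical regularization strength `alpha`, and an appended bias column; the function name `ridge_readout` is illustrative.

```python
import numpy as np


def ridge_readout(R, y, train_frac=0.7, alpha=1e-3):
    """Train a linear readout W_out on the first `train_frac` of the rows of R
    and return (W_out, test prediction, test MSE).

    R: reservoir matrix of shape (N, M), one row per field cycle.
    y: target signal of length N.
    alpha: ridge penalty (hypothetical value; would be tuned per task).
    """
    R = np.asarray(R, dtype=float)
    y = np.asarray(y, dtype=float)
    # Append a constant column so the readout can learn an offset.
    X = np.hstack([R, np.ones((R.shape[0], 1))])
    n_train = int(round(train_frac * X.shape[0]))
    X_train, X_test = X[:n_train], X[n_train:]
    y_train, y_test = y[:n_train], y[n_train:]
    # Closed-form ridge solution: W_out = (X^T X + alpha I)^(-1) X^T y
    A = X_train.T @ X_train + alpha * np.eye(X.shape[1])
    W_out = np.linalg.solve(A, X_train.T @ y_train)
    y_pred = X_test @ W_out
    mse = float(np.mean((y_pred - y_test) ** 2))
    return W_out, y_pred, mse


# Toy check with synthetic data (not measured spectra): a target that is an
# exact linear combination of the reservoir columns is recovered almost perfectly.
rng = np.random.default_rng(0)
R_demo = rng.normal(size=(200, 20))
y_demo = R_demo @ rng.normal(size=20)
W_demo, y_pred_demo, mse_demo = ridge_readout(R_demo, y_demo, alpha=1e-8)
```

The grey control curves correspond to the same regression applied to the raw input alone; in this sketch that would be `ridge_readout(u[:, None], y)` for a one-dimensional input array `u`.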

Phase-tunable physical reservoir computing

The phase-tunable nature of our physical reservoir computing stems from the rich magnetic phase diagram of Cu2OSeO3 (ref. 37) shown in Fig. 2a. Added to this diagram is the metastable skyrmion phase, which can be generated at low temperatures below ~35 K using quenching techniques or field-cycling protocols40,41,42. We leverage this phase tunability to create the task-adaptive nature of our physical reservoir, as detailed below.

a, Schematic of the temperature phase diagram for the bulk crystal Cu2OSeO3. The yellow dashed vertical (horizontal) line indicates the experimental conditions for our cycling experiments shown in c (d). b, The cycling number dependence of the spin-wave spectra in Cu2OSeO3 for Hc = 60 mT and 4 K. The evolution of the skyrmion-phase spectrum is shown for increasing values of N. Grey lines are added as a guide to the eye to keep track of the skyrmion modes. c, Hc dependence of the spin-wave spectra in Cu2OSeO3 for 4 K after 920 field cycles. d, Temperature dependence of the spin-wave spectra for Hc = 60 mT after 920 field cycles. e, Microwave absorption spectra as a function of f and N for different values of Hc at T = 4 K (upper row) and 35 K (lower row). The input signal in all plots is a sine wave with Hrange = 90 mT.

Figure 2b displays the N dependence of the spectra for Hc and temperature inside the skyrmion phase. For N = 100, a sharp peak around 4 GHz is clearly observed, corresponding to low-energy spin-wave modes of the thermodynamically stable conical phase39,40,41. As we continue cycling, the conical mode amplitude shrinks and the skyrmion modes appear around 2–3 GHz, as highlighted by the grey curves for N = 130–170. These are the counterclockwise and breathing modes of the metastable low-temperature skyrmion phase generated by field cycling40,41. The mode frequencies shift with the input magnetic field, and as the cycling proceeds the skyrmions are continuously destroyed and renucleated, as evident from the changing peak amplitudes. Experiments at different Hc values clearly demonstrate the tunability of the magnetic phases for our reservoir computing, as shown in Fig. 2c, where the spectra are obtained after 920 field cycles with Hrange = 90 mT at 4 K. A similar tunability can be achieved by changing the temperature at a fixed Hc of 60 mT, as shown in Fig. 2d. The skyrmion modes are clearly identified at 4 K and 15 K and disappear at higher temperatures (25 K and 35 K), where the spectra are dominated by the conical phase. Finally, Fig. 2e presents the field-cycle evolution of the spectra for various Hc and temperatures to demonstrate the range of phase/spectral tunability. Individual spectral scans showing the further evolution with N for different Hc can be found in Supplementary Fig. 3 (Supplementary Note 1).

Reservoir performance

Figure 3a–c compares the reservoir’s performance on different tasks using magnetic phases of skyrmion (Hc = 60 mT), skyrmion–conical hybrid (Hc = 98 mT) and conical modes (Hc = 185 mT) at 4 K with Hrange = 90 mT and N = 1,000. For forecasting, the system is trained to predict the future behaviour of a Mackey–Glass signal of ten steps ahead. The reservoir performance is evaluated quantitatively by calculating the MSE between the reservoir prediction and the target signal.

MSE performance comparison of different computation tasks across three distinct physical phases (skyrmion, skyrmion–conical hybrid and conical) at T = 4 K. In a–c, the red dotted and grey curves represent the target functions and the computation results without the physical reservoirs, respectively. Blue, orange and purple curves display calculations with the physical reservoirs of the skyrmion, skyrmion–conical hybrid and conical phases, respectively. a, Forecasting a Mackey–Glass chaotic time series ten steps ahead (MG(N + 10)). b, Nonlinear transformation of a sine-wave input into a saw waveform. c, Combined transformation/forecasting of ten future steps of a cubed Mackey–Glass signal. d, Illustration of the mapped field-cycling protocol visualized as a modified boxplot (details in the main text). e,g, MSE values at a constant Hrange as a function of Hc and T for forecasting (MG(N + 10)) (e) and transformation (square wave) (g) target applications. f, MSE values at a constant Hc as a function of Hrange and T for a transformation (square wave) target application. The colour scale in g also applies to f.

As shown in Fig. 3a, when Hc is increased and the reservoir is reconfigured from the skyrmion to the conical phase, the prediction performance deteriorates and the MSE increases by a factor of approximately 18. In the conical phase, the reservoir prediction is no better than the prediction without the reservoir. The opposite trend is observed for transformation tasks, where the MSE improves notably when switching from the skyrmion reservoir to the conical reservoir, as shown in Fig. 3b. Although the skyrmion reservoir still performs well, with an MSE of the order of 10−4, the conical reservoir excels with an MSE of 3.7 × 10−7 for the sine-to-saw transformation task. By setting Hc to 98 mT, we create a hybrid reservoir phase in which skyrmion and conical modes coexist. This reservoir configuration outperforms both the individual skyrmion and conical reservoirs on the combined forecasting–transformation task shown in Fig. 3c: predicting a cubed Mackey–Glass signal ten steps ahead from a normal Mackey–Glass input. See Methods and Supplementary Note 3 for details of target generation and a broader selection of forecasting and transformation tasks, with tunable reservoir performance demonstrated throughout in Supplementary Fig. 6.

Figure 3e–g maps the observed reservoir performance on the phase diagram, with Fig. 3d as an aid to reading these plots. The upper and lower whiskers represent the maximum and minimum magnetic field values in the cycling scheme, respectively. The height of the box represents Hrange, and the central line defines Hc. The MSE values are encoded as the box colour. The initial cycle begins at the bottom of the lower whisker and gradually moves up and down as a function of N. Figure 3e shows the reservoir performance for forecasting MG(N + 10) at Hrange = 90 mT. The strongest performance is found when the field cycling lies entirely inside the skyrmion phase at lower temperatures. The performance gradually worsens as the field cycling moves beyond the skyrmion phase and is reduced dramatically when leaving the skyrmion phase at high temperatures. This excellent forecasting performance of the skyrmion reservoir is highly correlated with its memory capacity, as we discuss below.

For the transformation tasks, we show the reservoir performance for two parameter dependencies, Hrange and Hc. Figure 3f, which shows the variation with Hrange for Hc = 73 mT, makes clear that at all measured temperatures larger Hrange values give the best reservoir performance, striking the best balance between the key reservoir properties required by the task. In Fig. 3g, reservoirs whose input mappings extend deeper into the helical phase (Hc = 35 mT) perform far more poorly, whereas the optimal transformation performance is obtained when the reservoir substantially includes the conical phase, which has strong nonlinearity and complexity. The MSE values displayed in Fig. 3e,g highlight that the performance of identical reservoirs differs starkly between the two types of computational task.

The computational performance of our magnetic reservoirs can be related to their physical properties. Figure 4a–c displays the spectral evolution of different magnetic phases with field cycling. The high (low) transformation performance of the conical (helical) phase can be associated with the size of the field-induced frequency shift. The dispersion curve of the helical phase is notably flat compared with those of the other magnetic phases in chiral magnets45, so its peak position shifts only very weakly in response to the field input, resulting in poor computational performance. Much larger frequency shifts are found in the high-performing conical and skyrmion phases, producing strong nonlinearity and complexity in their reservoirs and hence low MSE values in the transformation tasks (see further detailed analysis in Supplementary Note 2 and Supplementary Fig. 4). The origin of the excellent forecasting performance of the skyrmion reservoirs can be explained by comparing the spectra of the three phases at the same field values but at different points in the input field cycle, labelled A–D in Fig. 4d. The spectra of both the helical (Fig. 4e) and conical (Fig. 4g) phases are identical across points A–D, showing that these phases respond only to the field input currently applied and lack any memory of past field inputs. By contrast, the skyrmion spectra in Fig. 4f differ across points A–D, meaning that the spectral response depends not only on the current field value but also on past field inputs. This is the source of the physical memory response that is crucial for forecasting tasks, arising from the magnetic field-driven nucleation of metastable skyrmions and the annihilation of other magnetic phases40,41,42,43. More quantitative and detailed discussions are given in the next section and Supplementary Note 2.

a–c, Spin-wave spectra of the helical (a), skyrmion (b) and conical (c) magnetic phases. d, Sine-wave input sequence defining the applied field amplitudes. e–g, Spin-wave spectra of the helical (e), skyrmion (f) and conical (g) phases at nodes of the sine-wave input fields from d. h, Hc evolution of the MSE values at T = 4 K and Hrange = 90 mT, for forecasting (MG(N + 10)) and transformation (square wave) target applications. Note that MSE' denotes the normalized scale of MSE for [0, 1], where 0 (1) represents the best (worst) MSE. A (meta)stable magnetic field range for each phase is colour-coded. i, MSE' and task-agnostic metric results as a function of Hc at T = 4 K. j, Correlation matrix of Spearman’s rank correlation coefficient. k, Performance of forecasting as an evolution of the MC metric. l, Performance of transformation as an evolution of the CP metric.

Reservoir metrics

To gain further insight into our reservoir properties, we use task-agnostic reservoir metrics, namely nonlinearity (NL), memory capacity (MC) and complexity (CP)34,35, to characterize the reservoirs (see Supplementary Note 4 for details), and we examine the correlation between these metrics and the normalized MSE for the forecasting (transformation) task, \(\mathrm{MSE}'_{\mathrm{FC(TR)}}\). We performed both forecasting and transformation tasks across a wide range of Hc values at 4 K, as shown in Fig. 4h. In parallel, the metric scores were evaluated for each Hc, as plotted in Fig. 4i. The MC metric clearly mirrors the forecasting performance measured by \(\mathrm{MSE}'_{\mathrm{FC}}\): both forecasting performance and MC first increase and then decrease with Hc, suggesting that MC is a key property for better performance in forecasting tasks. As discussed earlier, MC in the skyrmion phase stems from the history-dependent fading memory generated by gradual skyrmion nucleation under repeated field cycles40,41. Rich and complex spin-wave mode dispersion in the conical/ferrimagnetic phases provides the physical basis for the high NL and CP scores, offering strong transformation task performance (see more detailed discussions in Supplementary Note 2).
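The metrics used here are defined in Supplementary Note 4, which is not reproduced in this section. Purely as an illustration, the sketch below computes one widely used definition of memory capacity (Jaeger's linear short-term memory capacity): the sum over delays k of the squared correlation between u(N − k) and its best linear reconstruction from the current reservoir row R(N). The delay range, ridge penalty and train/test split are assumptions, and the MC reported in the text may be normalized or computed differently.

```python
import numpy as np


def memory_capacity(R, u, max_delay=20, alpha=1e-6, train_frac=0.7):
    """Linear memory capacity: sum_k r^2(u(N - k), y_k(N)) for k = 1..max_delay,
    where y_k is a ridge readout of the reservoir trained to recall u(N - k)."""
    R = np.asarray(R, dtype=float)
    u = np.asarray(u, dtype=float)
    n_rows = R.shape[0]
    mc = 0.0
    for k in range(1, max_delay + 1):
        # Align reservoir row N with the input applied k cycles earlier.
        X = np.hstack([R[k:], np.ones((n_rows - k, 1))])
        target = u[:-k]
        n_train = int(train_frac * len(target))
        A = X[:n_train]
        W = np.linalg.solve(A.T @ A + alpha * np.eye(A.shape[1]),
                            A.T @ target[:n_train])
        recall = X[n_train:] @ W
        r = np.corrcoef(recall, target[n_train:])[0, 1]
        mc += r ** 2
    return mc


# Toy check: a "reservoir" that is an exact 5-tap delay line of a random input
# recalls delays 1-4 perfectly and nothing beyond, so MC should be close to 4.
rng = np.random.default_rng(1)
u_demo = rng.uniform(-1, 1, size=1000)
R_demo = np.column_stack(
    [np.concatenate([np.zeros(j), u_demo[:len(u_demo) - j]]) for j in range(5)]
)
mc_demo = memory_capacity(R_demo, u_demo)
```

A reservoir with no dependence on past inputs (like the helical and conical spectra at points A–D) would score near zero on this measure, whereas history-dependent skyrmion nucleation is the kind of behaviour that raises it.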

The correlation between different parameters can be quantified using the standard Spearman’s rank correlation coefficient46, as shown in Fig. 4j (Methods). The coefficient takes values in [−1, 1], where 1 (−1) corresponds to a perfectly monotonic increasing (decreasing) relationship and 0 denotes no correlation. Since better performance in each task corresponds to a lower MSE′, a negative correlation between MSE′ and a metric indicates that the metric is favourable for that task. The performance of time-series forecasting strongly correlates with MC (−0.89) and CP (0.57), revealing that MC (CP) is favoured (disfavoured) for this type of task, whereas the opposite is true for transformation tasks. It is also important to note that MC and CP have a clear negative correlation (−0.68), indicating a trade-off between these two reservoir properties. In addition, the high correlation between NL and CP (0.67) suggests that a more nonlinear system increases the amount of meaningful input data encoded in the reservoir, resulting in high complexity.
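The normalization and correlation analysis can be sketched with `scipy.stats.spearmanr`. Two points are assumptions: MSE′ is taken to be a min–max rescaling of the raw MSE onto [0, 1] (the text states only that 0 (1) is the best (worst) MSE), and the arrays below are synthetic placeholders, not the measured metrics.

```python
import numpy as np
from scipy.stats import spearmanr


def normalize_mse(mse):
    """Min-max rescale MSE values onto [0, 1]; 0 = best (lowest), 1 = worst."""
    mse = np.asarray(mse, dtype=float)
    return (mse - mse.min()) / (mse.max() - mse.min())


# Synthetic placeholders (one entry per centre field H_c), not measured data:
# the MSE falls monotonically as MC rises, and CP rises with MC.
mc = np.linspace(1.0, 7.0, 12)
mse_fc = 1.0 / (1.0 + mc)
cp = mc ** 2

mse_fc_norm = normalize_mse(mse_fc)
# With a 2-D input whose columns are variables, spearmanr returns the full
# rank-correlation matrix (here 3 x 3, ordered MSE', MC, CP).
rho, _ = spearmanr(np.column_stack([mse_fc_norm, mc, cp]))
```

Here ρ(MSE′, MC) = −1: the error is lowest wherever the metric is highest, which is the sense in which a negative value marks a favourable metric. Because Spearman's coefficient uses only ranks, any monotonic rescaling of MSE (min–max, logarithmic) leaves these values unchanged.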

Figure 4k (4l) shows the specific relationship between the reservoir performance evaluated by MSE′ and MC (CP), where the colour of the dots indicates the magnetic phase for which the metrics were evaluated. Consistent with the Spearman’s rank correlation values for each pair, both plots show a negative trend for each reservoir characteristic. Unlike those of the conical phase, the metrics of the skyrmion phase are clustered at high MC values between 4 and 7, further confirming that the skyrmion reservoir’s memory is responsible for its excellent forecasting performance. By contrast, Fig. 4l shows that the system’s ability to perform transformation tasks reaches its full potential when complexity is maximized, which occurs when the conical phase dominates the magnet (see further discussions of the reservoir metrics and other correlations in Supplementary Note 5 and Supplementary Fig. 7).

Above-room-temperature demonstration

Finally, we show that the task-adaptive reservoir concept is transferable to different material systems, here using other chiral magnets: Co8.5Zn8.5Mn3 (Fig. 5) and FeGe (see Supplementary Note 7 and Supplementary Fig. 9). Consistent with earlier work on the same class of Co-Zn-Mn alloys (for example, refs. 47,48), multiple magnetic phases in Co8.5Zn8.5Mn3 can be clearly recognized in the alternating-current (a.c.) susceptibility measurements shown in Fig. 5a. In particular, in the vicinity of its Curie temperature, we can recognize the signature of a thermodynamically stable skyrmion phase (see also Supplementary Fig. 8 in Supplementary Note 6 for the imaginary part of the a.c. susceptibility, which highlights this phase). We therefore performed reservoir computing at 333 K with centre fields Hc = 15 and 60 mT and a 10 mT cycling width. Figure 5b,c shows the magnetic resonance spectra during field cycling with the nonlinear Mackey–Glass and sine input functions, used for the future prediction and transformation tasks, respectively. For both tasks, the spectra depend strongly on the centre field, demonstrating the phase tunability of physical reservoirs in this material. Using these physical reservoirs with different magnetic phases, we carried out both tasks; the results are displayed in Fig. 5d–g. For the forecasting task (Fig. 5d,f), the skyrmion-dominated reservoir (Hc = 15 mT) outperforms the ferromagnetic reservoir (Hc = 60 mT) in terms of the MSE. By contrast, the ferromagnetic reservoir yields a better MSE than the skyrmion-dominated reservoir for the transformation task (Fig. 5e,g). See Supplementary Fig. 10 (Supplementary Note 8) for the full phase tunability of Co8.5Zn8.5Mn3 and FeGe.
Although there is clear room to improve the MSE and to make fuller use of the task-adaptive nature of this material system, this above-room-temperature demonstration indicates that there is no fundamental obstacle to applying the task-adaptive concept to a wide variety of materials.

a, Two-dimensional plot of the real part of the a.c. susceptibility (χ′) identifying the helical, skyrmion, conical and ferromagnetic phases in a Co8.5Zn8.5Mn3 crystal. The vertical dotted line marks the temperature at which we performed the reservoir computing experiments. b,c, Spin dynamics spectra measured during field cycling (N) with the forecasting (b) and transformation (c) inputs at 333 K, at centre fields of 15 mT (left spectra) and 60 mT (right spectra). d–g, Reservoir computing performance for predicting the nonlinear Mackey–Glass function five steps ahead (d,f) and for transforming a sine input signal into a triangle output function (e,g). The red dotted curves denote the target function, and the blue and purple solid curves are the outputs generated by our reservoir computing.

Outlook

We have demonstrated the substantial benefits of introducing a phase-tunable approach, and hence task adaptability, to physical reservoir systems. A single physical reservoir can now be reconfigured on demand for strong performance across a broad range of tasks without fabricating additional samples or using entirely different physical systems. This approach invites further development, such as online training and dynamic on-the-fly reservoir reconfiguration for incoming real-time datasets. Moreover, the phase-tunable approach demonstrated in our study may be transferable to a broad range of physical reservoirs, not only to magnetic materials that host chiral spin textures47,49,50 but also potentially to non-magnetic systems with rich thermodynamic phase diagrams. This approach may also offer additional functionality for wave-based physical recurrent neural networks32. Experimental demonstration of on-demand reservoir reconfigurability brings physical reservoir computing closer to fully realizing its promise and helps to develop an alternative to software-based neural-network approaches running on complementary metal–oxide–semiconductor (CMOS) hardware.

Methods

Details of experimental setup

Ferromagnetic resonance

For our experiments, a polished plate-shaped bulk Cu2OSeO3 crystal with dimensions of 1.9, 1.4 and 0.3 mm (x, y and z, respectively) was placed with its (100) surface facing down on a coplanar waveguide board, which sits on a copper cold finger of a closed-cycle helium cryostat. We apply an external magnetic field H along the 〈100〉 crystallographic direction for the efficient generation of low-temperature skyrmions40,41. A VNA (ZNB40, Rohde & Schwarz) is employed to measure the spectral response of the chiral magnetic crystals using ferromagnetic resonance (FMR) techniques. The VNA records the microwave reflection coefficient S11 as a function of microwave frequency to characterize the spectral response at given magnetic fields and temperatures. Each frequency sweep comprises 1,601 frequency points (M) at an applied microwave power of 0 dBm, so a single raw S11 recording consists of 1,601 points reflecting the frequency dependence of the dynamic magnetic susceptibility χm.

Field-cycling scheme

In standard field-cycling schemes without envelope modulation, a single field loop N is completed when H is increased and decreased between fixed field points, for example, defined by Hlow (yellow), Hmid (red) and Hhigh (green) in Supplementary Fig. 1a, with different time steps of t as labelled. During the cycling process, the VNA records the corresponding spectrum for each magnetic field value to study the nucleation of metastable lattices such as low-temperature skyrmions40,41. This cycling scheme, however, lacks the ability to construct a time-series input function for reservoir computing.

We therefore established the mapped field-cycling scheme to use field cycling as a data-input protocol for physical reservoir computing. This technique, shown in Supplementary Fig. 1b, modulates each of Hlow, Hmid and Hhigh for different t to generate a field-cycling-dependent input function u′(N), making it possible to incorporate arbitrary time-series signals u(t) into our scheme. For the mapping procedure, u(t) is normalized between −1 and 1 and offset by a central cycling field value Hc to give Hmid, and two additional copies (Hhigh and Hlow) are generated above and below Hmid using the cycling width Hrange. In this work, we used two specific input sequences to suit different target applications: a chaotic oscillatory Mackey–Glass time-series signal44 (for forecasting) and a sine wave (for transformations). We construct the reservoir outputs using the FMR spectra measured at the lowest field point (yellow dots) within each cycle.
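The mapping can be sketched as follows. The scaling between the normalized input and the field is not stated here, so this sketch assumes the input shifts Hmid by up to ±Hrange/4 and places Hlow and Hhigh Hrange/4 below and above it; with this choice each cycle spans Hrange/2 and the whole protocol spans Hrange, consistent with the description of Fig. 1b. The function name and the example parameters (Hc = 60 mT, Hrange = 90 mT, as in Fig. 2b; a sine period of 100 cycles) are illustrative.

```python
import numpy as np


def map_field_cycles(u, H_c, H_range):
    """Return (H_low, H_mid, H_high) in mT for each field cycle N.

    Assumption: the input, rescaled to [-1, 1], shifts H_mid by up to
    +/- H_range/4, and H_low/H_high sit H_range/4 below/above H_mid.
    Each cycle then spans H_range/2 and the whole protocol spans H_range.
    """
    u = np.asarray(u, dtype=float)
    u_norm = 2.0 * (u - u.min()) / (u.max() - u.min()) - 1.0  # rescale to [-1, 1]
    H_mid = H_c + 0.25 * H_range * u_norm
    H_low = H_mid - 0.25 * H_range
    H_high = H_mid + 0.25 * H_range
    return H_low, H_mid, H_high


# Example: a sine input over 1,000 field cycles (period of 100 cycles, chosen
# for illustration), centred at H_c = 60 mT with H_range = 90 mT.
N = np.arange(1000)
H_low, H_mid, H_high = map_field_cycles(np.sin(2 * np.pi * N / 100), 60.0, 90.0)
# The reservoir rows would be read out at H_low[N] (the lowest field of each cycle).
```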

The mapped field-cycling scheme thus enables FMR frequency multiplexing, a technique commonly used to broadcast a one-dimensional input signal to multiple outputs. In our experiments, the response to each field input is probed at a series of microwave frequencies applied to the magnetic system. By measuring the FMR response, multiple output signals at different frequencies can be separated and analysed in the spectral space for use in computation.

A typical task takes around two hours in total. The process breaks down as follows:

(1) Mapping the input onto field values as pre-processing takes less than one minute.
(2) Applying the data as a magnetic field and recording the physical reservoir output with the VNA takes two hours.
(3) Training and testing the reservoirs takes less than one minute.

During reservoir construction, the VNA acquires each frequency spectrum in approximately one second. Changing the magnetic field dominates the measurement time, so the overall timescale is limited by the field-sweep speed. All processing in this work, including reservoir computing, is conducted on a CPU (Ryzen 9 5900X, AMD).

Details of reservoir computing protocols

Data processing

After completing a set of mapped field-cycling measurements, we pre-process the spectral data before adding them to the reservoir matrix R, as shown by the example in Supplementary Fig. 2a. Each spectrum undergoes the same three processing steps to obtain ΔS11:

(1) subtraction of a high-field (300 mT) background;
(2) numerical smoothing with a Savitzky–Golay filter51;
(3) sampling at fixed frequency intervals.

Sampling is necessary to avoid overfitting caused by too many data points during training (see Supplementary Note 2 and Supplementary Fig. 5 for more details). The sampling interval is chosen by an automated search that minimizes the MSE of the test data.
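
A minimal sketch of this pre-processing chain uses `scipy.signal.savgol_filter`. The window length and polynomial order are hypothetical, as the text does not specify them. Spectra are assumed to share one frequency grid, and sampling is taken to mean keeping every `step`-th frequency point. The synthetic spectra below only demonstrate shapes.

```python
import numpy as np
from scipy.signal import savgol_filter


def preprocess_spectrum(s11, s11_background, step, window=11, polyorder=3):
    """Turn one raw spectrum into a row of the reservoir matrix R.

    s11            : spectrum measured at one field point of a cycle
    s11_background : high-field (300 mT) reference spectrum on the same frequency grid
    step           : sampling interval in frequency points
    window, polyorder : hypothetical Savitzky-Golay settings
    """
    delta = np.asarray(s11, dtype=float) - np.asarray(s11_background, dtype=float)
    smoothed = savgol_filter(delta, window_length=window, polyorder=polyorder)
    return smoothed[::step]  # keep every `step`-th frequency point


def build_reservoir_matrix(spectra, s11_background, step):
    """Stack processed spectra: one row per cycle N, one column per sampled frequency."""
    return np.vstack([preprocess_spectrum(s, s11_background, step) for s in spectra])


rng = np.random.default_rng(0)
spectra = rng.normal(size=(5, 201))  # 5 cycles x 201 frequency points (synthetic)
background = np.zeros(201)
R = build_reservoir_matrix(spectra, background, step=10)
```

The automated interval search described above would wrap `build_reservoir_matrix` in a loop over candidate `step` values and keep the one with the lowest test MSE.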

Target generation

The transformation targets shown in the main text were generated using the scipy.signal package52 with the input array defined as 0.2π{1…N}. The 'square' waveform uses a duty cycle of 0.5, and the 'saw' waveform uses a rising-ramp width of 1. Note that the square target waveform has a very slight slope between its high and low values owing to the finite sample rate.
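
The target construction can be sketched with the real `scipy.signal.square` and `scipy.signal.sawtooth` functions. The sine input is assumed to use the same phase array, which the text does not state explicitly. With this array, one period spans 10 samples.

```python
import numpy as np
from scipy import signal

N = 1000                                   # number of field cycles
t = 0.2 * np.pi * np.arange(1, N + 1)      # input array 0.2π{1…N}
sine_input = np.sin(t)                     # assumed sine input on the same array
square_target = signal.square(t, duty=0.5) # +1 for the first half of each period, -1 otherwise
saw_target = signal.sawtooth(t, width=1)   # width=1: ramp rises over the full period
```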

For the forecasting tasks, we employed the Mackey–Glass series, a chaotic time series derived from a nonlinear time-delayed differential equation. Its complex behaviour is commonly used as a benchmark for prediction algorithms. The signal is defined by \(\frac{\mathrm{d}x}{\mathrm{d}t}=\beta\frac{x_{d}}{1+x_{d}^{\,n}}-\gamma x\), where x and x_d represent the value of the signal at times t and t − d, respectively, and γ is a linear decay rate. We numerically generated the signal to exhibit chaotic oscillatory behaviour with the parameters β = 0.2, n = 10 and d = 17.
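
A Mackey–Glass series can be generated with a fixed-step Euler scheme. This sketch is not the authors' generator. It writes the decay term as γx with the conventional benchmark value γ = 0.1, which the text does not state. The constant initial history, step size and output spacing are also assumptions. β = 0.2, n = 10 and a delay of 17 follow the parameters above.

```python
import numpy as np


def mackey_glass(n_samples, beta=0.2, gamma=0.1, n=10, delay=17.0,
                 dt=0.1, x0=1.2, sample_every=10):
    """Euler integration of dx/dt = beta * x_d / (1 + x_d**n) - gamma * x.

    x_d is x(t - delay). gamma = 0.1, the constant history x0, the step dt and
    the output spacing (sample_every * dt = 1 time unit) are assumptions.
    """
    delay_steps = int(round(delay / dt))
    total_steps = n_samples * sample_every
    x = np.empty(delay_steps + total_steps + 1)
    x[: delay_steps + 1] = x0  # constant history for t <= 0
    for i in range(delay_steps, delay_steps + total_steps):
        x_d = x[i - delay_steps]  # delayed value x(t - delay)
        x[i + 1] = x[i] + dt * (beta * x_d / (1.0 + x_d ** n) - gamma * x[i])
    # Skip the history segment and keep one value every sample_every steps.
    return x[delay_steps + 1 :: sample_every][:n_samples]


series = mackey_glass(1500)
series = series[500:]  # discard the initial transient before use
```

A higher-order integrator such as Runge–Kutta would reduce discretization error. Euler is used here only to keep the delay bookkeeping transparent.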

Training and testing

For training and testing, R is split into training and test datasets according to a test-length factor k in the range [0, 1], as illustrated in Supplementary Fig. 2b. The training dataset is passed to ridge regression53, a variant of linear regression, to calculate the optimal weights that reproduce the target dataset. Ridge regression is a common technique for analysing multicollinear data that adds a regularization term α. The weights are determined via \(\min_{w}\left(\Vert \chi w-y\Vert_{2}^{2}+\alpha\Vert w\Vert_{2}^{2}\right)\), where w, χ and y denote the ridge coefficients (weights), the reservoir elements and the target values, respectively. Here α penalizes large w values during fitting, which stabilizes the model and prevents overfitting. We used the scikit-learn package for this calculation54. The obtained weights are then applied to the unseen test dataset, and the resulting output is compared with the test target data to evaluate performance.
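
The training step can be sketched with a closed-form NumPy solution of the ridge objective above, without an intercept term. The authors used scikit-learn, where `Ridge(alpha=alpha, fit_intercept=False).fit(X, y).coef_` minimizes the same objective. This sketch assumes a chronological split, with the first (1 − k) fraction of cycles used for training and the last k for testing. The reservoir matrix and target below are synthetic.

```python
import numpy as np


def split_train_test(R, y, k=0.3):
    """Assumed chronological split: first (1 - k) fraction trains, last k fraction tests."""
    n_train = int(round((1.0 - k) * len(y)))
    return R[:n_train], R[n_train:], y[:n_train], y[n_train:]


def ridge_fit(X, y, alpha):
    """Closed-form minimizer of ||X w - y||_2^2 + alpha * ||w||_2^2 (no intercept)."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)


# Synthetic demo: 1,000 cycles x 20 reservoir outputs with a known linear target.
rng = np.random.default_rng(1)
R = rng.normal(size=(1000, 20))
w_true = rng.normal(size=20)
y = R @ w_true
R_train, R_test, y_train, y_test = split_train_test(R, y, k=0.3)
w = ridge_fit(R_train, y_train, alpha=1e-6)
y_pred = R_test @ w  # readout applied to the unseen test data
```

Solving the normal equations with `np.linalg.solve` avoids forming an explicit matrix inverse. Larger `alpha` values shrink `w` toward zero at the cost of a poorer fit to the training data.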

In this study, k was fixed at 0.3, that is, 70% of the data were used for training and 30% for testing, with N = 1,000 cycles. The dependence of the MSE on k for the forecasting (transformation) task is shown in Supplementary Fig. 2c (2d). As these plots show, sufficient training data are necessary to improve the MSE in each case; in other words, k should be well below unity. We confirm that the choice of k does not substantially alter the analysis and conclusions of this study.

Performance evaluation of reservoir computing

The MSE is a statistical measure that quantifies the difference between predicted and true values by averaging the squared differences across data points. A lower MSE indicates better predictive performance for a given task. We calculate MSE values using the mean_squared_error function of the sklearn.metrics package54, which evaluates \(\mathrm{MSE}(y,\hat{y})=\frac{1}{n_{\mathrm{samples}}}\sum_{i=0}^{n_{\mathrm{samples}}-1}\left(y_{i}-\hat{y}_{i}\right)^{2}\). Here \(y_{i}\) and \(\hat{y}_{i}\) are the i-th samples of the target (true signal) y and the transformed/predicted output ŷ, respectively, each consisting of n_samples data points.
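
As a worked check of the formula, the short function below computes the same quantity as `sklearn.metrics.mean_squared_error` for one-dimensional inputs. It is a sketch for illustration only.

```python
import numpy as np


def mse(y, y_hat):
    """Mean squared error: average of (y_i - y_hat_i)^2 over all n_samples points."""
    y = np.asarray(y, dtype=float)
    y_hat = np.asarray(y_hat, dtype=float)
    return float(np.mean((y - y_hat) ** 2))


# Worked example: the errors are (0, 0, 2), so MSE = (0 + 0 + 4) / 3.
print(mse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]))  # prints 1.3333333333333333
```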

Correlation analysis

We determine the correlations between the MSE metric and the task-agnostic metrics using Spearman's rank correlation coefficient46. This is a non-parametric measure of the strength and direction of the monotonic association between two variables; it does not assume that the data follow a specific statistical distribution such as the normal distribution. It reflects the degree to which the rankings of the two variables agree, and it ranges from −1 to 1. A value of −1 indicates a perfect negative monotonic association (as one variable increases, the other decreases). Conversely, a value of 1 indicates a perfect positive monotonic association (as one variable increases, so does the other).
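
Spearman's coefficient is the Pearson correlation computed on ranks. The sketch below shows this for data without ties. In practice, `scipy.stats.spearmanr` handles ties by assigning average ranks, so it is preferable for real data.

```python
import numpy as np


def spearman_rho(a, b):
    """Spearman's rank correlation for tie-free data: Pearson correlation of the ranks."""
    rank_a = np.argsort(np.argsort(a))  # rank of each element (0 = smallest)
    rank_b = np.argsort(np.argsort(b))
    return float(np.corrcoef(rank_a, rank_b)[0, 1])


x = [1.0, 2.0, 3.0, 4.0, 5.0]
print(spearman_rho(x, [v ** 3 for v in x]))  # monotonic increasing pair
print(spearman_rho(x, [-v for v in x]))      # monotonic decreasing pair
```

Because only ranks enter, any strictly monotonic nonlinear relationship, such as the cubic above, still gives a coefficient of 1.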

Here we present correlation plots which we do not show in the main text. For this analysis, we normalize the MSE values as follows:

Note that we take the logarithm of the MSE to minimize correlation anomalies that would otherwise arise from the large range of MSE values and misrepresent the dataset. This is equivalent to plotting the MSE values on a logarithmic scale.

Data availability

The data presented in the main text and the Supplementary Information are available from the corresponding authors upon reasonable request.

Code availability

The code used in this study is available from the corresponding author upon reasonable request.

References

Marković, D., Mizrahi, A., Querlioz, D. & Grollier, J. Physics for neuromorphic computing. Nat. Rev. Phys. 2, 499–510 (2020).

Schuman, C. D. et al. Opportunities for neuromorphic computing algorithms and applications. Nat. Comput. Sci. 2, 10–19 (2022).

Jaeger, H. The “Echo State” Approach to Analysing and Training Recurrent Neural Networks – With an Erratum Note (GMD-Forschungszentrum Informationstechnik, 2010).

Maass, W., Natschläger, T. & Markram, H. Real-time computing without stable states: a new framework for neural computation based on perturbations. Neural Comput. 14, 2531–2560 (2002).

Nakajima, K. & Fischer, I. (eds) Reservoir Computing: Theory, Physical Implementations, and Applications (Springer, 2021).

Jaeger, H. Tutorial on Training Recurrent Neural Networks, Covering BPPT, RTRL, EKF and the “Echo State Network” Approach GMD Report 159 (GMD-Forschungszentrum Informationstechnik, 2002); https://books.google.co.jp/books?id=F7qEGwAACAAJ

Obst, O. et al. Nano-scale reservoir computing. Nano Commun. Netw. 4, 189–196 (2013).

Tanaka, G. et al. Recent advances in physical reservoir computing: a review. Neural Netw. 115, 100–123 (2019).

Duport, F., Schneider, B., Smerieri, A., Haelterman, M. & Massar, S. All-optical reservoir computing. Opt. Express 20, 22783–22795 (2012).

Soriano, M. C. et al. Delay-based reservoir computing: noise effects in a combined analog and digital implementation. IEEE Trans. Neural Netw. Learn. Syst. 26, 388–393 (2015).

Du, C. et al. Reservoir computing using dynamic memristors for temporal information processing. Nat. Commun. 8, 2204 (2017).

Moon, J. et al. Temporal data classification and forecasting using a memristor-based reservoir computing system. Nat. Electron. 2, 480–487 (2019).

Liu, K. et al. Multilayer reservoir computing based on ferroelectric α-In2Se3 for hierarchical information processing. Adv. Mater. 34, 2108826 (2022).

Grollier, J. et al. Neuromorphic spintronics. Nat. Electron. 3, 360–370 (2020).

Nakane, R., Tanaka, G. & Hirose, A. Reservoir computing with spin waves excited in a garnet film. IEEE Access 6, 4462–4469 (2018).

Nomura, H. et al. Reservoir computing with dipole-coupled nanomagnets. Jpn J. Appl. Phys. 58, 070901 (2019).

Tsunegi, S. et al. Physical reservoir computing based on spin torque oscillator with forced synchronization. Appl. Phys. Lett. 114, 164101 (2019).

Gartside, J. C. et al. Reconfigurable training and reservoir computing in an artificial spin-vortex ice via spin-wave fingerprinting. Nat. Nanotechnol. 17, 460–469 (2022).

Allwood, D. A. et al. A perspective on physical reservoir computing with nanomagnetic devices. Appl. Phys. Lett. 122, 040501 (2023).

Fernando, C. & Sojakka, S. Pattern recognition in a bucket. In Proc. ECAL 2003: Advances in Artificial Life (eds Banzhaf, W. et al.) 588–597 (Springer, 2003).

Prychynenko, D. et al. Magnetic skyrmion as a nonlinear resistive element: a potential building block for reservoir computing. Phys. Rev. Appl. 9, 014034 (2018).

Pinna, D., Bourianoff, G. & Everschor-Sitte, K. Reservoir computing with random skyrmion textures. Phys. Rev. Appl. 14, 054020 (2020).

Msiska, R., Love, J., Mulkers, J., Leliaert, J. & Everschor-Sitte, K. Audio classification with skyrmion reservoirs. Adv. Intell. Syst. 5, 2200388 (2023).

Lee, O. et al. Perspective on unconventional computing using magnetic skyrmions. Appl. Phys. Lett. 122, 260501 (2023).

Yokouchi, T. et al. Pattern recognition with neuromorphic computing using magnetic field-induced dynamics of skyrmions. Sci. Adv. 8, eabq5652 (2022).

Raab, K. et al. Brownian reservoir computing realized using geometrically confined skyrmion dynamics. Nat. Commun. 13, 6982 (2022).

Sun, Y. et al. Experimental demonstration of a skyrmion-enhanced strain-mediated physical reservoir computing system. Nat. Commun. 14, 3434 (2023).

Torrejon, J. et al. Neuromorphic computing with nanoscale spintronic oscillators. Nature 547, 428–431 (2017).

Zázvorka, J. et al. Thermal skyrmion diffusion used in a reshuffler device. Nat. Nanotechnol. 14, 658–661 (2019).

Song, K. M. et al. Skyrmion-based artificial synapses for neuromorphic computing. Nat. Electron. 3, 148–155 (2020).

Zahedinejad, M. et al. Memristive control of mutual spin Hall nano-oscillator synchronization for neuromorphic computing. Nat. Mater. 21, 81–87 (2022).

Papp, Á., Porod, W. & Csaba, G. Nanoscale neural network using non-linear spin-wave interference. Nat. Commun. 12, 6422 (2021).

Lukoševičius, M. & Jaeger, H. Reservoir computing approaches to recurrent neural network training. Comput. Sci. Rev. 3, 127–149 (2009).

Dambre, J., Verstraeten, D., Schrauwen, B. & Massar, S. Information processing capacity of dynamical systems. Sci. Rep. 2, 514 (2012).

Love, J. et al. Spatial analysis of physical reservoir computers. Preprint at https://doi.org/10.48550/arXiv.2108.01512 (2021).

Stenning, K. D. et al. Neuromorphic few-shot learning: generalization in multilayer physical neural networks. Preprint at https://doi.org/10.48550/arXiv.2211.06373 (2023).

Seki, S., Yu, X. Z., Ishiwata, S. & Tokura, Y. Observation of skyrmions in a multiferroic material. Science 336, 198–201 (2012).

Onose, Y., Okamura, Y., Seki, S., Ishiwata, S. & Tokura, Y. Observation of magnetic excitations of skyrmion crystal in a helimagnetic insulator Cu2OSeO3. Phys. Rev. Lett. 109, 037603 (2012).

Garst, M., Waizner, J. & Grundler, D. Collective spin excitations of helices and magnetic skyrmions: review and perspectives of magnonics in non-centrosymmetric magnets. J. Phys. D Appl. Phys. 50, 293002 (2017).

Aqeel, A. et al. Microwave spectroscopy of the low-temperature skyrmion state in Cu2OSeO3. Phys. Rev. Lett. 126, 017202 (2021).

Lee, O. et al. Tunable gigahertz dynamics of low-temperature skyrmion lattice in a chiral magnet. J. Phys. Condens. Matter 34, 095801 (2021).

Oike, H. et al. Interplay between topological and thermodynamic stability in a metastable magnetic skyrmion lattice. Nat. Phys. 12, 62–66 (2015).

Chacon, A. et al. Observation of two independent skyrmion phases in a chiral magnetic material. Nat. Phys. 14, 936–941 (2018).

Mackey, M. C. & Glass, L. Oscillation and chaos in physiological control systems. Science 197, 287–289 (1977).

Schwarze, T. et al. Phase diagram and magnetic relaxation phenomena in Cu2OSeO3. Nat. Mater. 14, 478–483 (2015).

Dodge, Y. in The Concise Encyclopedia of Statistics 502–505 (Springer, 2008); https://doi.org/10.1007/978-0-387-32833-1_379

Karube, K. et al. Skyrmion formation in a bulk chiral magnet at zero magnetic field and above room temperature. Phys. Rev. Mater. 1, 074405 (2017).

Takagi, R. et al. Spin-wave spectroscopy of the Dzyaloshinskii–Moriya interaction in room-temperature chiral magnets hosting skyrmions. Phys. Rev. B 95, 220406 (2017).

Back, C. et al. The 2020 skyrmionics roadmap. J. Phys. D Appl. Phys. 53, 363001 (2020).

Yu, H., Xiao, J. & Schultheiss, H. Magnetic texture based magnonics. Phys. Rep. https://doi.org/10.1016/j.physrep.2020.12.004 (2021).

Savitzky, A. & Golay, M. J. Smoothing and differentiation of data by simplified least squares procedures. Anal. Chem. 36, 1627–1639 (1964).

Virtanen, P. et al. SciPy 1.0: fundamental algorithms for scientific computing in Python. Nat. Methods 17, 261–272 (2020).

Hilt, D. E. & Seegrist, D. W. Ridge: A Computer Program for Calculating Ridge Regression Estimates Research Note NE-236 (US Department of Agriculture, 1977); https://www.biodiversitylibrary.org/item/137258

Pedregosa, F. et al. Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

Acknowledgements

O.L. and H.K. thank the Leverhulme Trust for financial support via RPG-2016-391. K.S. was supported by The Eric and Wendy Schmidt Fellowship Program and the Engineering and Physical Sciences Research Council (grant no. EP/W524335/1). W.R.B. and J.C.G. were supported by the Leverhulme Trust (RPG-2017-257) and the Imperial College London President’s Excellence Fund for Frontier Research. J.C.G., W.R.B. and H.K. were supported by EPSRC grant EP/X015661/1. J.C.G. was supported by the Royal Academy of Engineering under the Research Fellowship programme. This work was partly supported by Grants-In-Aid for Scientific Research (18H03685, 20H00349, 21K18595, 21H04990, 21H04440 and 22H04965) from JSPS, PRESTO (JPMJPR18L5) and CREST (JPMJCR1874 and JPMJCR20T1) from JST, the Katsu Research Encouragement Award and UTEC-UTokyo FSI Research Grant Program of the University of Tokyo, and the Asahi Glass Foundation. This work has also been funded by the Deutsche Forschungsgemeinschaft (DFG; German Research Foundation) under SPP2137 Skyrmionics, TRR80 (From Electronic Correlations to Functionality, Project No. 107745057, Project G9) and the excellence cluster MCQST under Germany’s Excellence Strategy EXC-2111 (Project No. 390814868). We thank A. Mehonic, P. Zubko and A. Lombardo for reading an earlier version of the manuscript and for their comments.

Author information

Contributions

O.L., K.D.S., J.C.G. and H.K. designed the experiments. O.L., T.W. and D.P. performed the measurements. O.L., T.W., K.D.S., J.C.G., W.R.B. and H.K. analysed the results with help from the rest of the co-authors. S.S., A.A., K.K., N.K., Y. Taguchi, C.B. and Y. Tokura grew the chiral magnetic crystals and characterized their magnetic properties. H.K. proposed and supervised the studies. O.L., J.C.G. and H.K. wrote the paper with input from the rest of the co-authors.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Materials thanks Johan Åkerman and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Figs. 1–10 and Notes 1–8.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lee, O., Wei, T., Stenning, K.D. et al. Task-adaptive physical reservoir computing. Nat. Mater. 23, 79–87 (2024). https://doi.org/10.1038/s41563-023-01698-8

DOI: https://doi.org/10.1038/s41563-023-01698-8
