Abstract

Artificial intelligence (AI) is shifting the paradigm of two-phase heat transfer research. Recent innovations in AI and machine learning uniquely offer the potential for collecting new types of physically meaningful features that have not been addressed in the past, for making their insights available to other domains, and for solving for physical quantities based on first principles for phase-change thermofluidic systems. This review outlines core ideas of current AI technologies connected to thermal energy science to illustrate how they can be used to push the limit of our knowledge boundaries about boiling and condensation phenomena. AI technologies for meta-analysis, data extraction, and data stream analysis are described with their potential challenges, opportunities, and alternative approaches. Finally, we offer outlooks and perspectives regarding physics-centered machine learning, sustainable cyberinfrastructures, and multidisciplinary efforts that will help foster the growing trend of AI for phase-change heat and mass transfer.

Similar content being viewed by others

Introduction

Understanding phase-change heat transfer is important to numerous applications related to the advancement of energy conversion and thermal management systems. In particular, liquid-vapor phase-change processes continuously garner the interest of thermal scientists due to their ability to transfer large amounts of energy effectively1. Central to these processes is the nucleation of the dispersed phase (i.e., bubbles and droplets for boiling or condensation processes), where the continuous phases are liquid and vapor, respectively. The bubble or droplet dynamics can be manipulated by modulating experimental designs, including surface properties, structures, and materials, to push the limit of heat transfer performances2. Historically, bubble and droplet dynamics have been studied through the combination of nucleation theory3,4,5, thermodynamics6,7,8,9, and phenomenological correlations10. Yet despite phase-change heat transfer’s century-long history, fully understanding its mechanistic relationships by linking experimental factors, complex nucleation statistics, and thermal performance remains an elusive challenge. A primary reason for this obscurity can be attributed to data inconsistency caused by a wide variety of operating conditions and experimental protocols along with measurement uncertainties11. Another reason is the difficulty in quantifying boiling and condensation behaviors because of their highly dynamic, complex, and high-dimensional nature12,13. Further difficulties arise in the management and curation of data streams, which have high implications for predicting and forecasting multi-phase flow patterns. The key concepts in phase-change heat transfer are listed in Box 1.

In the era of big data, transforming large quantities of data into useful knowledge plays an increasingly important role across various engineering disciplines. Artificial intelligence (AI), a term coined by John McCarthy in 1955, was defined by him as “the science and engineering of making intelligent machines.” It has emerged as a dominant force for performing data-to-knowledge conversion and presents an attractive complement to traditional computational science. AI technologies simulate human intelligence processes through machines, particularly computer systems. Current AI technologies primarily fall in the category of “narrow” AI, which refers to an AI that can outperform humans on a particular task14. Machine learning (ML) is a subfield of AI where machines can learn without explicitly being programmed15. Neural networks trained using statistical methods make inferences from data that can lead to decision-making. Deep learning (DL), a class of ML inspired by our brain’s neural network, can learn multi-level representations of data hierarchically16. While most existing AI solutions are still considered black boxes, the scope of ML and DL transcends mere nonlinear regression. Notably, artificial neural networks (ANNs) are now able to discover new material17,18, help model path- and history-dependent problems19, inversely design material structures20, and discover hidden physics from data21, as Box 2 describes the key concepts in AI and ML. Box 3 describes key concepts of digital inference that have seen great progress with advancements in AI and ML.

Overview

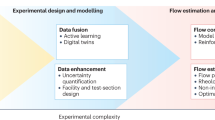

Within the phase-change heat transfer community, the plethora of recent AI-based approaches has motivated heat transfer researchers to explore their capabilities to advance fundamental nucleation sciences under a new paradigm (Fig. 1). Despite its potential, adaptations or discussions of AI technique have traditionally been slow in this field, which makes this untapped disruptive technology even more attractive. Figure 2 summarizes the number of publications on AI and two-phase heat transfer research areas. According to the results, the publications for pure AI or two-phase heat transfer (approximately 100 K) outweigh those from AI-integrated two-phase heat transfer research (i.e., AI + phase-change) categories by several orders of magnitude (approximately 100). While this ratio suggests that AI-integrated two-phase heat transfer research is relatively new, it also implies that this subfield is growing at a rate that exceeds that of both individual fields. Indeed, with the ongoing AI “gold rush” taking place in this field, the time is ripe to carefully review recent publications. To this end, the current article addresses the pressing need for a thorough review, explaining the core ideas of AI technologies, the innate challenges involved with phase-change processes and limited use of AI in the past (Fig. 1a), current implementation status and challenges (Fig. 1b), and future outlooks for two-phase heat transfer research (Fig. 1c). The article builds on prior reviews that introduced the intersection of AI and heat transfer in either a broad sense22, or for different applications facing disparate challenges than those of boiling and condensation heat transfer23,24,25,26,27. The critical review and summary will provide valuable insights into the implementation of AI for liquid-vapor phase-change heat transfer studies, with the goal to provide the thermal science community with a roadmap for future research.

AI technologies offer diverse opportunities for scientific advances in phase change heat transfer. In this review, (a) the current challenges of phase-change phenomena are discussed along with (b) AI technologies categorized from an objective-based studies, followed by (c) their outlooks and future perspectives. The tiers are chronologically ordered to reflect the past, present, and future implementation progress of AI within this field.

Graphs in (a and b) show the number of publications on AI and boiling/condensation research acquired from topic searches in Scopus on April 12, 2022, from years 2010 to 2021. The data under AI categories are obtained from keywords such as artificial intelligence, machine learning, or deep learning. The data for phase-change categories are found using keywords such as boiling or condensation. The data for phase-change studies involving AI (i.e., AI + phase-change) are obtained from the combination of the above two searches. In 2021, the number of publications on AI-based two-phase research increases by 365% compared to 2018 but still only occupies 0.8% of boiling and condensation research. The survey underlines the need for further studies that integrate AI into two-phase heat transfer research.

State-of-the-art AI technologies for phase-change heat transfer

The article first discusses current AI technologies available in the heat transfer community (Fig. 1b) categorized based on how they target major and relevant two-phase heat transfer problems existing today. AI technologies are broadly divided into three categories, namely meta-analysis, physical feature extraction, and data stream analysis.

Meta-analysis

Meta-analysis on multiple datasets is generally conducted to provide a holistic description of phase-change heat transfer by either corroborating consistent datasets or revisiting conflicting datasets, usually in the form of tabular data28. This is important because both experimental and computational data collection in this field is expensive and requires significant upfront investment. Additionally, various combinations of experimental factors—including the material, thickness, surface roughness or structures, and surface/working fluid wettability—exert significant influence on the datasets29. Moreover, the cumulative data available today have high variance and is hypersensitive towards various operating conditions30, mounting protocols31, characterization procedures32, and the personnel’s experience level. Once meta-datasets are collected, data dimensionality is orders of magnitude larger, making it challenging to understand. To address these challenges, a recent movement in the thermal science field seeks to exploit available scientific advances through machine learning-assisted meta-analysis (Fig. 3).

Data-driven hypothesis approach

ML models can be efficient by assisting professionals to make holistic data-driven hypothesis development by learning big data. ML-assisted meta-analysis can play a role in learning knowledge from data and extracting data from knowledge, as described in Fig. 3. The first step introduces building hypotheses based on accumulated datasets to answer scientific questions in phase-change heat transfer (Fig. 3a). After two-phase experiments are designed and performed (Fig. 3b), the experimental factors (tabular data) (Fig. 3c) are then used to train neural networks to find the relations between the experimental factors and their output (heat transfer performance) (Fig. 3d), thus helping researchers inform their understanding and decisions with data.

Unlike traditional theory-driven approaches, which rely on the knowledge of the underlying mechanisms of the observed phenomena, data-driven approaches can uncover statistical relationships and patterns solely from data even without explicit knowledge of the underlying physics or mathematical functions. This characteristic enables ML models achieving high-accuracy predictions to be trained in a matter of hours compared to the extensive period and resources required to develop new heat transfer theories. However, data-driven approaches typically require larger diverse datasets for generalization, the size of which depends on the specific problem, and lack the interpretability offered by theory-driven approaches.

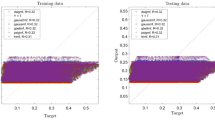

Regression models

Most ML-based meta-analysis models utilize regression analysis to link experimental factors with non-dimensional numbers or global heat transfer quantities such as CHF, HTC, or pressure drop over the entire system (Supplementary Table 1). The generic feed-forward ANN architecture, also known as a multi-layer perceptron (MLP)33, and random forest (RF) methods have been the most popular33, probably because they are some of the most well-understood techniques in literature today. Both ANNs (or MLPs) and RF are exceptional at modeling complex nonlinear relationships, with the general idea of approaching problems by deconstructing them into smaller simpler units. ANNs are organized into layers of interconnected nodes, in which “weights” are assigned to represent the value of information assigned to an individual node34. Similarly, RFs statistically identify important features, create multiple randomly chosen weak decision trees, and collect their votes to make the final electoral decision. To handle complexity and overfitting issues, ANNs can adjust hyperparameters such as the number of hidden layers and units, regularization techniques, and learning rates, whereas RFs can modulate the number of trees, the maximal size or depth of the single tree, and sampling rate35. ANNs typically have more flexibility in their architecture designs, while RFs, although less explainable than conventional decision trees36, are reported to possess potentially better interpretability than the so-called black-box predictions of typical ANNs35. In general, no single ML model works the best across all problems, and therefore it is good practice to compare the performance of different techniques or create a hybrid model that combines multiple feature types37. Other ML algorithms that have previously been investigated include the support vector machines (SVMs)33, boosting algorithms33,38, cascade feedforward (CF) networks38, radial basis function (RDF)38, adaptive neuro-fuzzy inference system (ANFIS)39, deep belief networks (DBNs)40, convolutional neural networks (CNNs)41, and physics-informed neural networks (PINNs)42,43.

Challenges and opportunities

The training data for meta-analysis models are often inadequate or sporadic, which can pose a great challenge for developing robust ML models. The training data from experiments that exist today are sparse due to the prohibitive cost of acquiring more experimental data points, especially considering the difficulties associated with replicating most nm – μm scale bottom-up fabricated surfaces2. On the other hand, training data from simulation models are relatively denser, but are limited to extremely simple cases. Recent studies have suggested that adding physics into the learning process and developing surrogate ML models can address these issues by efficiently training ML models with limited training resources44,45.

First, breakthroughs in physics-informed machine learning (e.g., PINNs) have demonstrated that data from both measurements and simulations, and the underlying laws of physics can be integrated into the loss function of the neural network, thereby providing strong theoretical constraints to prevent models from generating unreasonable predictions. This not only allows models to train with less data than is typical for over-parameterized DL models by narrowing the search space, but also enables them to estimate values that extend beyond the trained data scope. Although a major challenge remains in the search and development of physical models that best represent the increased volume, velocity, and variety of available data, other communities suggest that PINNs can succeed21,46, even for such ill-conditioned problems, in cases where other models cannot. This suggests new research opportunities for meta-analysis implementations in the phase-change heat transfer community.

Second, developing surrogate ML models that sensibly augment the training data to generate diverse problem instances can improve the model’s generalizability even with minimal training data. A surrogate model is a trained emulator that learns to approximate solutions through known input-output behaviors when an outcome of interest cannot be easily measured or computed. Therefore, filling in the gaps of these scattered data points will allow the models to learn higher-dimensional insights that can be extended to unexplored study regimes.

Physical feature extraction

Beyond the meta-data analysis, it is imperative to extract physically meaningful features from visual data. Digital inference and extraction of datasets have the potential to enhance our understanding of new physics within large datasets by enabling a full description about two-phase physics. One area of particular interest is the quantification of nucleation behaviors during two-phase processes, which has been a focus since the pioneering work of Nukiyama and Schmidt47,48. While visualizing nucleation behaviors has naturally become an inseparable part of phase-change studies49,50,51,52,53,54, the sheer complexity and volume of bubble and droplet activities (Fig. 4a and c) make quantifying these activities a daunting challenge. In this regard, AI-assisted CV can be effective at performing image analysis tasks with high-level accuracy but with far greater bandwidth12,13,55. Most noticeable advances come from characterizing two-phase nucleation features with the basis of modern CV tasks from CNN models built for digital image inference tasks (Box 3).

Quantifying the nucleation dynamics is crucial to better understand two-phase phenomena because they can explain the heat transfer performance of a and b | boiling and c and d | condensation. Literature review of b | pool boiling reveals that a significant portion of the heat transfer curve is understudied in terms of bubble dynamics. The only studies that have been able to investigate nucleation dynamics across a relatively wide heat flux range are ones that utilize bottom-to-top infrared (IR) imaging techniques, in which the translation from bottom IR bubble statistics to actual bubble morphologies are not well understood. Literature review of d | condensation shows that 86% studies either excluded heat transfer characterizations (50%) or measured heat and mass transfer in a decoupled manner, independent of droplet statistics. Only 11% of the reviewed studies demonstrated fully coupled heat and mass transfer analysis based on droplet statistics, where 4 of the 6 coupled analysis studies were done manually. The references that have helped form the conclusions in this figure for pool boiling and condensation are provided in Supplementary Table 4 and Supplementary Table 5, respectively.

Overview of nucleation statistics and heat and mass transfer

Concurrent with the advances in AI and CV has been an increasing demand to support a stronger connection between bubble and droplet statistics and thermal performances. For example, a significant portion of extant bubble dynamics studies only reports low- to moderate-heat flux regimes, leaving an extensive range of high-heat flux regimes unexplored during pool boiling (Fig. 4b; Supplementary Table 4). Similarly, droplet nucleation statistics are also highly indicative of hidden condensation heat and mass transport mechanics. However, only a handful of studies have attempted to extract spatio-temporal instances (STI) that are essential for decrypting heat transfer analysis. According to a brief literature review of 56 recent studies reporting droplet dynamics from 2015 (Fig. 4d; Supplementary Table 5), the vast majority report only spatial features or spatio-temporal group-based (STG) analysis, where relatively simple traditional CV algorithms or manual labor are still favored (Fig. 4d). In addition, only 11% of the reviewed studies demonstrate heat and mass transfer analysis coupled with droplet statistics, further underlining the need for a stronger connection between the two approaches (Fig. 4d inset).

Traditional computer vision

For a very long time, rudimentary image processing algorithms have extracted basic nucleation features to describe phase-change phenomena56,57,58,59. These traditional CV approaches use relatively simple processing algorithms such as binarization, denoising, flooding, and interactive separation of connected objects to capture the most basic physical descriptors such as bubble or droplet size and distribution57,60. While these approaches are quite useful and swift, they still ultimately rely on handcrafted features that inevitably require significant setting optimizations often under-reported in the literature61,62. To address this issue, there have been efforts to develop shape filters or reconstruction algorithms such as built-in circle finder scripts or multi-step shape reconstruction procedures that specifically exploit the circular shape of bubbles and droplets60,63,64,65,66,67. Yet, due to these methods’ inherent imposition of rules, it has been difficult for traditional CV models to adapt to image inventories from the broader research community, with numerous studies reporting unstable predictions, even with a slight change of lighting66,67.

Machine learning-assisted computer vision

Recent integrations of DL models into CV have brought a paradigm shift in how researchers perceive and utilize visual data to tackle difficult problems. DL models “learn” salient features of the target object, thereby requiring less expert analysis and parametric fine-turning, and possess superior flexibility in adapting to custom datasets compared to traditional CV approaches68. For the time being, studies implementing models with CNN backbones have seen the most success due to their proficiency at handling image data12,13,55,69.

For boiling, the ability to extract spatio-temporal bubble statistics has proven direct implications for heat flux partitioning analysis (See Supplementary Note 1), the process of understanding what heat transfer mechanisms constitute the boiling heat flux at any given stage70,71,72, as well as flow instability analysis73. However, extracting a sufficient amount of classical boiling features (i.e., departure diameter, nucleation site density, departure frequency) from imaging data has been an inherently burdensome and evasive task. The autonomous curation of high-quality bubble statistics at the large scale has been shown to be achievable through DL-assisted segmentation and tracking73,74, enabling the quantitative mapping of bubble dynamics between different boiling surfaces at high resolutions12.

Condensation studies have shown that heat transfer can be effectively quantified by collecting statistics of group of droplets or individual droplets (See Supplementary Note 2) and integrating them over the entire surface13,69. A study on group-based droplet statistics has shown that the overall time-averaged droplet shedding frequency can be used to calculate the heat transfer rate by using Supplementary Equation 5 and 6, which ultimately reduced the high (20 – 100%) measurement uncertainty of conventional temperature and flow sensors less than 10%69. In another study, collecting single-droplet-level statistics imposed with energy balance equation has allowed for the mapping of the evolving heat transfer of condensing surfaces with extreme spatio- and temporal- resolutions of 300 nm and 200 ms, respectively13.

The recent demonstrations of statistics-driven heat transfer quantifications are particularly exciting because they enable the robust comparison and de-coupling of the multi-dimensional relationship among nucleation parameters, heat transfer performances, and structural design. In addition, these techniques can be used to simultaneously compare the heat transfer performances on a single surface with different structures or wettabilities69, which not only minimizes time and labor resources but also essentially eliminates uncertainties caused during separate experiment trials.

A summary of studies that quantify boiling and condensation nucleation behaviors from 2015–2022 are provided in Fig. 5 and Supplementary Table 4–6. We define real-time prediction for models that have the capability of making predictions in live streams, quasi-real-time prediction for models that require an interventional processing step, and extensive prediction for models that report an undefined amount of time. The extracted physics are categorized as spatial, STG, STI, and hidden, which refers to the non-intuitive features of the hidden layers in ANNs.

The regime map reveals a tradeoff between the processing time and the extent of physically meaningful features extracted. A summary of 94 studies that reported bubble or droplet dynamics is shown in red tones, where the color indicates the methods utilized to extract nucleation features, and the circle size represents the number of studies normalized by the total number of studies. Individual data stream analysis studies are listed in Table Supplementary 6.

Challenges and opportunities

DL-based nucleation feature extraction models are still far from being widely adopted in the heat transfer community, as evident in Fig. 5. The fact that despite modern advances in CV, manual analysis is still a highly favored method for quantifying nucleation dynamics suggests that there exists a gap between thermal and computer scientists. Therefore, further efforts should be considered to make these ML-based quantification methods more efficient and user-friendly by fine-tuning the models to be suitable for specific two-phase conditions and improving training methodologies.

One challenge is about how two-dimensional image sequences can accurately represent three-dimensional phenomena. This representation often results in different subproblems specific to two-phase phenomena such as occlusion (situations when an object fully or partially hides another object of the same class from the optical viewpoint), cluster, and low resolution. One method that researchers have investigated involves the refining of initially predicted results through multi-step shape reconstruction processes75,76,77,78. While initial object detection methods may vary (i.e., bounding box, regional proposal, image segmentation), the concept of reconstructing the digital inference from initially rough object outlines to its true shape remains the same75,76,77,78. However, these models are not programmed or trained to inherently recognize the occlusion phenomena themselves and are therefore limited to weakly occluded instances. Researchers soon realized that the model’s predictive capability can be finetuned to specific problems by modulating the training dataset. By customarily labeling droplets that were blurred by motion, researchers successfully trained the model to predict images captured at low framerates69. In another study, a model was trained to accurately predict occluded droplets by custom-labeling droplets that formed underneath larger droplets79. Other efforts include the use of synthetic datasets created from generative adversarial networks (GAN)75, or simulations80. The rationale of using such synthetic datasets to train is to carefully design a dataset with a controlled amount of object occlusion, nucleation sites, object density, and size to emphasize model learning for difficult scenarios. Hence, there must be a continuous effort to explore various training sets to investigate what and how the model learns, depending on the research objective.

Another challenge is centered upon data preparation. Due to the intrinsically complex phenomena being studied, data labeling requires experts. Most upcoming models use supervised learning, which requires training datasets in the form of labeled images. The image labeling process, called image annotation, can take up to several months to manually prepare a complete dataset for training and validation13. More often, the ground truth for the labeling is subjective and involves human intervention, making unanimous agreement among annotators impossible81. To combat such discrepancies, researchers have proposed using cost-effective, human-in-the-loop curations or semi-supervised learning that can alleviate annotation labor and increase labeling consistency82,83. For example, a recent study proposed a multi-step cycle of initial pretrained model predictions, human-aided error identification and correction, and retraining on an updated dataset83. While training an initial model to start the loop remains an ongoing challenge, abundant research opportunities exist developing in fully autonomous labeling pipelines using deep generative models to conditionally generate sequential visual scenes that mimic real states84,85,86, or even label-free unsupervised learning-based nucleation feature extractors to reduce time and labor involved in training and validating models.

Above all, the effort-to-reward ratio for exploring key features that describe underlying boiling and condensation mechanisms significantly favors researchers today. For example, features that connect nuclei interactions and heat transfer have been rarely discussed in the literature but can now be studied in depth with light algorithmic adjustments. Furthermore, big data trends of long-term nuclei statistics can provide rational mechanistic insights but are yet to be reported. By adding a tracking module to an object detection framework, researchers have proved that features with substantially higher-order insights could be extracted from videos with minimum modifications.

Data stream analysis

Another theme in AI research related to phase-change is to comprehend the transient nature of meta-data or visual data during phase-change processes. Given the strong yet complex correlation between “in-motion” data and heat transfer performance, researchers have classified the multi-phase flow patterns into various categories2,29. For example, the cyclic nucleation behaviors that constitute the heat transfer curves (Box 1) have traditionally been categorized into natural convection, nucleation-dominated, transition, and film-dominated regimes29. Evolving flow patterns in flow boiling systems have been classified into liquid, bubbly, slug, annular, mist, and vapor regimes87. Real-time data stream analysis using AI has the potential to identify or predict nucleation phases and patterns, which can be applied in smart boiling and condensation systems (Fig. 6a) for adaptive or predictive decision-making.

Understanding streaming data can be utilized to build a smart two-phase systems that make real-time adaptations by receiving feedback from systems using either discrete or continuous models. b On the one hand, unstable and transient multi-phase flow patterns are classified into discrete outputs when using discrete models. These classifications can offer instant feedback to change device settings when differences in flow patterns are detected. c Continuous models have implications for real-time predictions as well as time-series forecasting. The models can use time-series texts or visual images to forecast a CHF event and stops the system in real-time before the event occurs.

Discrete models

It is important to identify transient and unstable two-phase patterns into discrete class outputs, which is equivalent to the traditional ML classification problem88. The successful identification of patterns will help devices or systems operate under optimal conditions depending on their specific requirements, as illustrated in Fig. 6. For example, nucleate boiling in passive two-phase heat transfer devices, such as heat pipes, is typically detrimental to the device’s performance due to significant heat and mass transfer reduction caused by entrapped vapor bubbles inside the wick89. By contrast, the creation of small and replenishable droplets and bubbles is favorable to facilitating heat transfer in high-power heat exchangers1. In these nucleation-favoring applications, identifying the transition from the nucleation-dominated regime to the film-dominated regime is crucial to maintain high heat transfer performances as well as safe operating conditions1,2

Despite its significance, there have been only a few ML studies that demonstrate these classification tasks (Fig. 6b). The classification can be executed by using different types of data, ranging from signal patterns collected from sensors to qualitative visual descriptions of gas and liquid phase morphologies90. Since visual descriptors are outwardly more intuitive, the majority of studies have utilized visualization-based ML classification models (Supplementary Table 6). Naturally, CNN models have been heavily adopted to stream classification problems, with many models exceeding 98% prediction accuracy55,91. One study showed that CNN models could even deal with difficult visual cases, such as the transition from nucleate to film boiling, which trained experts cannot distinguish with any reasonable certainty55. It is worth noting that approaches that use structured data (i.e., non-imaging data) are also important, for they allow data stream classification where visualization might be limited or even inaccessible (e.g., in-tube flows) but is rarely addressed92,93.

Continuous models

In addition to discrete outputs, ML can also be employed to predict and forecast continuous outputs (Fig. 6c) using regression analysis. Regression methods are proficient at determining casual relations between independent and dependent variables and therefore have major applications for numerical and visual time-series forecasting (Fig. 6c; Supplementary Note 3)94. Both time-series forecasting methods utilize transient data streams to predict future outcome trends (Fig. 6c), but visual forecasting approaches reconstruct the data into visual scenes and are viewed to be more computationally expensive.

Continuous models are especially appealing for prediction and forecasting tasks because they can potentially be trained to encode the context of the two-phase phenomena, offering a great advantage over traditional sensors and probes. The models often hypothesize that a DL model can be trained to predict heat and mass transfer using secondary, higher-resolution characterization methods, such as visualization as an input. This would effectively allow the model to make more “physically-intuitive” or “scene-incorporating” predictions. Recent studies have confirmed this hypothesis, showing that vision-based models are highly sensitive to physically interpretable features such as the number and size of bubbles91, and there are strong correlations between collected bubble parameters and boiling heat flux intensity55.

Challenges and opportunities

Although the main keywords of data stream analysis are “real-time” and “forecasting,” neither one of these goals has been adequately met in the existing literature. A noticeable flaw is the use of previously collected data streams instead of live-streaming data. Therefore, future goals must shift the current paradigm of data stream analysis to streaming data analysis, where models train on more realistic data. The main keys to achieving this goal are to improve model transferability, explore indicative features, and expedite prediction time.

The first key is to improve model transferability factoring in the diverse boundary conditions that contribute to the complex and heterogeneous two-phase behaviors. While data supports that models can predict accurately settings familiar to the environments in which they were trained, studies customarily mention the need for additional training datasets for new boundary conditions55,91. Data augmentation or transfer learning techniques can be leveraged to remediate this issue, but only to some extent because they instigate the constant involvement of computer engineers to update the model55,91. Considering the inevitability and necessity of building foundational models, it remains an open question as to whether sufficient data can be collected and used to train models that incorporate the full complexity of the heterogeneous boiling and condensation phenomenon in varying conditions.

Another method to improve ML models is to identify indicative features that best represent the two-phase time-series problems. The features can be identified by engineering new features or selecting a new set of features95. As such, this process requires significant domain expertise in two-phase heat transfer to understand new features that can capture complex and nonlinear heat transfer performances during phase-change. Due to these aspects, unraveling the enigmatic features that can realize real-time prediction and forecasting is an ongoing challenge. For instance, a recent study utilized dimensionally-reduced image data to forecast future bubble morphologies based on a bidirectional LSTM network96. Images provide a visual representation of nucleation dynamics, which ultimately reflect the influence of experimental factors on the system. Consequently, investigating generalizable features that establish connections between nucleation patterns and desired outputs, researchers can potentially achieve a deeper understanding of nucleation phenomena and develop models that can be applied across various experimental conditions, leading to broader and more robust scientific insights. Future work should improve forecasting ML models by exploring a wide range of data types, including structured data (i.e., numerical data, or categorical data; low-dimensional), unstructured data (text, images, audio, video, or multi-modal; high-dimensional), and their hybrids (i.e., multimodal deep learning).

Additionally, it is crucial to evaluate the features based on their processing time, ensuring that they enable real-time prediction and forecasting. Models that utilize structured data inputs have quicker prediction speeds but at the expense of lower levels of physics. In contrast, models using unstructured data can extract physically meaningful features but are time-consuming, as showcased in Fig. 6. Typically, graphic datasets are highly information-dense and therefore can easily be inundated with redundant or irrelevant pixels (i.e., background data or noise), which requires additional data processing steps to reduce the data dimensionality or filtering important information only97. While dimensionality reduction methods (e.g., principal component analysis, resolution scaling) partially address the latency issues of vision-based models, they do so by sacrificing high-frequency image features and by introducing additional preprocessing steps, which have been underreported in many cases. An alternative solution is to selectively exploit only the important information. One recent study showed that this could be done by using neuromorphic event cameras that emulate the human retina, thereby only outputting “meaningful events” (i.e., pixels that display changes in brightness)97,98. The fundamentally different way that event cameras encode visual scenes allows them to be proficient for applications with strong constraints on latency, power consumption, or bandwidth99. In addition, the ability to remove unwanted, stationary background noise potentially allows neuromorphic cameras to resist from generalizability issues stemming from measurements across multiple labs and institutes which may use different experimental setups. While the practical employment of event cameras is vastly underexplored, models that classified boiling regimes by using simulated event streams demonstrated a 300% increase in prediction speed compared to ML models reported elsewhere. Along the same lines, other potential state-of-the-art DL models, such as vision transformers and spiking neural networks, may find applications in this domain for real-time predictions and forecasting.

Outlook

The rapid growth of AI-based solutions has highlighted the potential benefits for the heat transfer community. The impact of these technologies will depend on the degree of active exploration by researchers into these exciting advancements in computer science. Moreover, it should be emphasized that the AI technologies discussed in this review are not independent attempts, but rather, share a symbiotic relationship (Fig. 1c). Meta-regression analysis allows for holistic decision-making to find optimal two-phase heat and mass transfer performances. Physical feature extraction generates big data that has not been available in the past, which can advance our understanding and serve as the basis for new physical models. The knowledge gained from these attempts is instilled into data stream analysis models to demonstrate futuristic smart phase-change systems.

Future perspectives

Physics-centered machine learning

Quantifying the turbulent and spontaneous characteristics of the multi-phase physics involved with phase-change heat transfer processes with adequate quality that meets the demands of modern research has always posed a great challenge to researchers. Physical sensors or probes, which have been the gold standard for quantitative heat transfer characterizations so far, have limited spatial resolutions due to their genetic size. Besides that, optical measurement techniques such as infrared (IR) thermometry, fluorescence thermometry, and particle image velocimetry are limited to small domains and laboratory settings. On top of all this, there is no existing tool that can measure fluid and vapor properties simultaneously, which is crucial to understanding the underlying transport physics between the two phases. Although high-fidelity two-phase simulations have evolved over the past decades as an alternative solution to this problem, they are computationally expensive and often intractable without large-scale clusters or supercomputers.

The challenges associated with acquiring high-quality data might be addressed by leveraging AI or ML models that combine data with domain knowledge. Advances in ML models that incorporate known physics have demonstrated great potential to achieve accurate and interpretable results by satisfying both the observed data as well as the underlying physical laws100,101,102,103,104 .In the context of phase-change heat transfer, the physics that domain experts can incorporate into ML models lies on such a wide spectrum, ranging from basic continuity equations to instability laws, that there exists abundant opportunities for researchers to explore integrating physics into their ML frameworks. An example of such implementations could be developing ML models that can use experimentally measured properties of one phase (e.g., vapor or liquid) and predict the velocity, pressure, and temperature fields of the other, which is the crux of many two-phase heat transfer problems. In such examples, the physics-respecting properties of ML models will allow domain experts to assess the model’s validity and reliability in a more interpretable manner when it extrapolates beyond the range of observed data. Therefore, we envision that the fusion of data-driven models and domain expertise presents a promising avenue for accelerating scientific discoveries by combining the advantages of both approaches.

Building cyberinfrastructures for two-phase heat transfer

Beyond answering core science questions, it is imperative to develop an array of cyberinfrastructure (CI) technologies (Fig. 1c), including open-source and reusable data and scalable algorithms and software for long-term sustainability. The envisioned CI ecosystem should be agile and integrated to catalyze new transformative discoveries in heat transfer. This necessitates sustained community collaboration and continuous improvement at every stage.

Due to the nature of nonlinear, multi-dimensional, and multi-modal features during phase-change processes, it is critical to collect various datasets including meta-data, visual data, and transient data that cover different boundary and operating conditions. Unfortunately, collecting all dimensions of the data from computational and experimental efforts has not been addressed in this area. Therefore, it is critical to initiate data clusters following standard procedures discussed and defined by the community. This will enable researchers to access major assets (data and developed ML tools) that are stored in cloud environments and add their own to continue the phase-change cluster development.

In addition, technologies are needed to ensure the safe sharing of data since data owners take on risks when sharing their data with the research community. For example, CryptoNets88 allows neural networks to operate over encrypted data, ensuring that data remain confidential because decryption keys are not needed in neural networks105. At the same time, privacy methods must remain sufficiently explainable and transparent to help researchers correct them and make them safe, efficient, and accurate.

With the growth of training data and the complexity of deep learning models, scalable algorithms and software become necessities for solving large-scale problems and resolving fine-scale physics. Training a neural network is generally a time-consuming and challenging process, where the difficulty of the training process scales as the network becomes larger and deeper. Moreover, approaches that incorporate PDE-based soft regularization only add to this difficulty by making the loss landscape even harder to optimize. Accordingly, the benefits of the developed DL tools outweigh the high costs and challenges associated with their training only if the resulting models are generalizable and scalable. A promising approach to improving the scalability of trained models inspired by curriculum learning is to first train with simpler constraints and then couple pre-trained models using domain decomposition strategies to solve the target problem45. Another complementary approach is to build foundational models for ML models that embrace the “pre-train and fine-tune” paradigm for science problems. This paradigm has been highly successful in other domains and holds great promise in accelerating scientific advancements, enhancing model performance, and facilitating the efficient transfer of knowledge across related scientific domains. Building on this progress, creating reusable foundational models to learn unsteady single-phase physics in two-phase processes and coupling these models to make predictions for multi-physics phenomena presents one promising future direction for building a generalizable and sustainable CI.

Communicating across multiple disciplines

This review also elucidates the important role that AI can play in transferring knowledge between multiple disciplines, perhaps with emphasis on material and thermofluidic sciences. The nucleation phenomena described in this paper are highly dependent on surface properties (based on their structures and chemistry). Heat transfer performance can be enhanced using nano- or micro-materials that have desired heat and mass transfer properties. The comprehensive understanding about phase-change physics opens new opportunities for materials scientists in the context of materials design. In earlier studies, these processes were not efficient as scientists needed to search through a large range of materials and architectures, but now they can leverage AI-based design tools. For example, AI-assisted data mining toolkits like matminer have seen success in the material sciences by aiding the development and testing of new materials106. With access to new datasets, including metadata, visual data, and transient data, a novel collaboration between the heat transfer and materials science communities becomes feasible. We can formulate topology optimization models, explore inverse design techniques, and delve into the realm of multi-objective design as new dimensions in this research. During this process, building an additional layer of communications with computer and data scientists becomes essential. Many of the AI technologies discussed in this review are prototypes derived from successful models developed by computer scientists. These models can aid in the creation of task-specific new models for two-phase tasks. Data scientists can help develop and optimize data architectures that efficiently curate large data streams into meaningful features and identify important data features to address phase-change heat transfer. Successful studies through this convergence will provide a holistic description of phase-change heat transfer dynamics, enabling blueprints for next-generation phase-change thermal management designs. These new designs will, in turn, be translated to many applications, including energy conversion devices, two-phase electronics cooling devices, and water-energy surfaces.

References

Mousa, M. H., Yang, C.-M., Nawaz, K. & Miljkovic, N. Review of heat transfer enhancement techniques in two-phase flows for highly efficient and sustainable cooling. Renew. Sust. Energ. Rev. 155, 111896 (2021).

Attinger, D. et al. Surface engineering for phase change heat transfer: A review. MRS Energ. Sustain. 1, E4 (2014).

Griffith, P. & Wallis, J. D. The role of surface conditions in nucleate boiling, no. 14 (Massechusetts Institute of Technology, Division of Industrial Cooperation, 1958).

Bankoff, S. Entrapment of gas in the spreading of a liquid over a rough surface. AIChE J. 4, 24–26 (1958).

Mccormick, J. L. & Westwater, J. W. Nucleation Sites for Dropwise Condensation. Chem. Eng. Sci. 20, 1021–1036 (1965).

Zuber, N. Hydrodynamic aspects of boiling heat transfer. Doctoral Dissertation of University of California, 4175511 (1959).

Plesset, M. S. & Zwick, S. A. The Growth of Vapor Bubbles in Superheated Liquids. J. Appl. Phys. 25, 493–500 (1954).

Tanaka, H. A Theoretical Study of Dropwise Condensation. J. Heat. Transf. 97, 72–78 (1975).

Miljkovic, N., Enright, R. & Wang, E. N. Modeling and Optimization of Superhydrophobic Condensation. J. Heat. Trans.-T Asme 135, 111004 (2013).

Ivey, H. Relationships between bubble frequency, departure diameter and rise velocity in nucleate boiling. Int. J. Heat. Mass Trans. 10, 1023–1040 (1967).

Dadhich, M. & Prajapati, O. S. A brief review on factors affecting flow and pool boiling. Renew. Sust. Energ. Rev. 112, 607–625 (2019).

Lee, J. and Suh,Y. et al. Computer vision-assisted investigation of boiling heat transfer on segmented nanowires with vertical wettability. Nanoscale 14, 13078–13089 (2022).

Suh, Y. et al. A Deep Learning Perspective on Dropwise Condensation. Adv. Sci. 8, 2101794 (2021).

Goertzel, B. & Pennachin, C. Artificial general intelligence. Vol. 2 (Springer, 2007).

Saha, S. et al. Hierarchical Deep Learning Neural Network (HiDeNN): An artificial intelligence (AI) framework for computational science and engineering. Comput. Method. Appl. M. 373, 113452 (2021).

Goodfellow, I., Bengio, Y. & Courville, A. Deep learning. Vol. 1 (MIT Press, 2016).

Tshitoyan, V. et al. Unsupervised word embeddings capture latent knowledge from materials science literature. Nature 571, 95–98 (2019).

Jain, A. et al. Commentary: The Materials Project: A materials genome approach to accelerating materials innovation. Apl. Mater. 1, 011002 (2013).

Mozaffar, M. et al. Deep learning predicts path-dependent plasticity. P. Natl Acad. Sci. USA 116, 26414–26420 (2019).

Bostanabad, R. et al. Computational microstructure characterization and reconstruction: Review of the state-of-the-art techniques. Prog. Mater. Sci. 95, 1–41 (2018).

Raissi, M., Yazdani, A. & Karniadakis, G. E. Hidden fluid mechanics: Learning velocity and pressure fields from flow visualizations. Science 367, 1026–1030 (2020).

Hughes, M. T., Kini, G. & Garimella, S. Status, Challenges, and Potential for Machine Learning in Understanding and Applying Heat Transfer Phenomena. J. Heat. Trans. Asme 143, 120802 (2021).

Wang, X. L. et al. A comprehensive review on the application of nanofluid in heat pipe based on the machine learning: Theory, application and prediction. Renew. Sust. Energ. Rev. 150, 111434 (2021).

Wang, Z. Y. et al. Advanced big-data/machine-learning techniques for optimization and performance enhancement of the heat pipe technology-A review and prospective study. Appl. Energ. 294, 116969 (2021).

Ahmadi, M. H., Kumar, R., Assad, M. E. & Ngo, P. T. T. Applications of machine learning methods in modeling various types of heat pipes: a review. J. Therm. Anal. Calorim. 146, 2333–2341 (2021).

Maleki, A., Haghighi, A. & Mahariq, I. Machine learning-based approaches for modeling thermophysical properties of hybrid nanofluids: A comprehensive review. J. Mol. Liq. 322, 114843 (2021).

Ma, T., Guo, Z. X., Lin, M. & Wang, Q. W. Recent trends on nanofluid heat transfer machine learning research applied to renewable energy. Renew. Sust. Energ. Rev. 138, 110494 (2021).

Sun, Y. L., Tang, Y., Zhang, S. W., Yuan, W. & Tang, H. A review on fabrication and pool boiling enhancement of three-dimensional complex structures. Renew. Sust. Energ. Rev. 162, 112437 (2022).

Cho, H. J., Preston, D. J., Zhu, Y. Y. & Wang, E. N. Nanoengineered materials for liquid-vapour phase-change heat transfer. Nat. Rev. Mater. 2, 1–17 (2017).

Hou, Y. M., Yu, M., Chen, X. M., Wang, Z. K. & Yao, S. H. Recurrent Filmwise and Dropwise Condensation on a Beetle Mimetic Surface. Acs Nano 9, 71–81 (2015).

Pham, Q. N. et al. Boiling Heat Transfer with a Well-Ordered Microporous Architecture. Acs. Appl. Mater. Inter. 12, 19174–19183 (2020).

Kim, S. H., Chu, I. C., Choi, M. H. & Euh, D. J. Mechanism study of departure of nucleate boiling on forced convective channel flow boiling. Int. J. Heat. Mass Trans. 126, 1049–1058 (2018).

Bard, A., Qiu, Y., Kharangate, C. R. & French, R. Consolidated modeling and prediction of heat transfer coefficients for saturated flow boiling in mini/micro-channels using machine learning methods. Appl. Therm. Eng. 210, 118305 (2022).

Choi, R. Y., Coyner, A. S., Kalpathy-Cramer, J., Chiang, M. F. & Campbell, J. P. Introduction to Machine Learning, Neural Networks, and Deep Learning. Transl. Vis. Sci. Techn. 9, 14–14 (2020).

Neto, M. P. & Paulovich, F. V. Explainable matrix-visualization for global and local interpretability of random forest classification ensembles. IEEE Trans. Vis. Computer Graph. 27, 1427–1437 (2020).

Yang, Y., Morillo, I. G. & Hospedales, T. M. Deep neural decision trees. Preprint at https://arxiv.org/abs/1806.06988 (2018).

Roßbach, P. Neural networks vs. random forests–does it always have to be deep learning. Germany: Frankfurt School of Finance and Management (2018).

Hassanpour, M., Vaferi, B. & Masoumi, M. E. Estimation of pool boiling heat transfer coefficient of alumina water-based nanofluids by various artificial intelligence (AI) approaches. Appl. Therm. Eng. 128, 1208–1222 (2018).

Mehrabi, M. & Abadi, S. M. A. N. R. Modeling of condensation heat transfer coefficients and flow regimes in flattened channels. Int. Commun. Heat. Mass. 126, 105391 (2021).

Kim, H., Moon, J., Hong, D., Cha, E. & Yun, B. Prediction of critical heat flux for narrow rectangular channels in a steady state condition using machine learning. Nucl. Eng. Technol. 53, 1796–1809 (2021).

Lee, D. H. et al. Application of the machine learning technique for the development of a condensation heat transfer model for a passive containment cooling system. Nucl. Eng. Technol. 54, 2297–2310 (2021).

Kim, K. M., Hurley, P. & Duarte, J. P. Physics-informed machine learning-aided framework for prediction of minimum film boiling temperature. Int. J. Heat. Mass Trans. 191, 122839 (2022).

Zhao, X. G., Shirvan, K., Salko, R. K. & Guo, F. D. On the prediction of critical heat flux using a physics-informed machine learning-aided framework. Appl. Therm. Eng. 164, 114540 (2020).

Karniadakis, G. E. et al. Physics-informed machine learning. Nat. Rev. Phys. 3, 422–440 (2021).

Wang, H. J., Planas, R., Chandramowlishwaran, A. & Bostanabad, R. Mosaic flows: A transferable deep learning framework for solving PDEs on unseen domains. Comput. Method. Appl. M. 389, 114424 (2022).

Cai, S. et al. Flow over an espresso cup: inferring 3-D velocity and pressure fields from tomographic background oriented Schlieren via physics-informed neural networks. J. Fluid Mech. 915, A102 (2021).

Schmidt, E., Schurig, W. & Sellschopp, W. Versuche über die Kondensation von Wasserdampf in Film-und Tropfenform. Tech. Mech. Therm. 1, 53–63 (1930).

Nukiyama, S. The maximum and minimum values of the heat Q transmitted from metal to boiling water under atmospheric pressure. Int. J. Heat. Mass Trans. 9, 1419–1433 (1966).

Benjamin, R. & Balakrishnan, A. Nucleate pool boiling heat transfer of pure liquids at low to moderate heat fluxes. Int. J. Heat. Mass Trans. 39, 2495–2504 (1996).

Graham, R. W. & Hendricks, R. C. Assessment of convection, conduction, and evaporation in nucleate boiling. (National Aeronautics and Space Administration, 1967).

Han, C.-Y. The mechanism of heat transfer in nucleate pool boiling, (Massachusetts Institute of Technology, 1962).

Judd, R. & Hwang, K. A comprehensive model for nucleate pool boiling heat transfer including microlayer evaporation. J. Heat. Mass Trans. 98, 623–629 (1976).

Mikic, B. & Rohsenow, W. A new correlation of pool-boiling data including the effect of heating surface characteristics. J. Heat. Mass Trans. 91, 245–250 (1969).

Podowski, M. Z., Alajbegovic, A., Kurul, N., Drew, D. & Lahey, R. Jr Mechanistic modeling of CHF in forced-convection subcooled boiling. (Knolls Atomic Power Lab., Schenectady, NY (United States), 1997).

Suh, Y., Bostanabad, R. & Won, Y. Deep learning predicts boiling heat transfer. Sci. Rep.-Uk 11, 5622 (2021).

Jin, Y. & Shirvan, K. Study of the film boiling heat transfer and two-phase flow interface behavior using image processing. Int. J. Heat. Mass Trans. 177, 121517 (2021).

Kulenovic, R., Mertz, R. & Groll, M. High speed flow visualization of pool boiling from structured tubular heat transfer surfaces. Proceedings of the International Thermal Science Seminar 1, 409–414 (2001).

Maurus, R., Ilchenko, V. & Sattelmayer, T. Study of the bubble characteristics and the local void fraction in subcooled flow boiling using digital imaging and analysing techniques. Exp. Therm. Fluid Sci. 26, 147–155 (2002).

Surtaev, A., Serdyukov, V., Zhou, J. J., Pavlenko, A. & Tumanov, V. An experimental study of vapor bubbles dynamics at water and ethanol pool boiling at low and high heat fluxes. Int. J. Heat. Mass Trans. 126, 297–311 (2018).

Watanabe, N. & Aritomi, M. Correlative relationship between geometric arrangement of drops in dropwise condensation and heat transfer coefficient. Int. J. Heat. Mass Trans. 105, 597–609 (2017).

Maiti, N., Desai, U. B. & Ray, A. K. Application of mathematical morphology in measurement of droplet size distribution in dropwise condensation. Thin Solid Films 376, 16–25 (2000).

Damoulakis, G., Gukeh, M. J., Moitra, S. & Megaridis, C. M. Quantifying steam dropwise condensation heat transfer via experiment, computer vision and machine learning algorithms. 2021 20th IEEE Intersociety Conference on Thermal and Thermomechanical Phenomena in Electronic Systems (iTherm) (2021).

Wikramanayake, E. & Bahadur, V. Distinct condensation droplet distribution patterns under low- and high-frequency electrowetting-on-dielectric (EWOD) effect. Heat Transfer Summer Conference. V001T013A001 (American Society of Mechanical Engineers, 2022).

Castillo, J. E., Weibel, J. A. & Garimella, S. V. The effect of relative humidity on dropwise condensation dynamics. Int. J. Heat. Mass Trans. 80, 759–766 (2015).

Castillo, J. E. & Weibel, J. A. Predicting the growth of many droplets during vapor-diffusion-driven dropwise condensation experiments using the point sink superposition method. Int. J. Heat. Mass Trans. 133, 641–651 (2019).

Watanabe, N., Aritomi, M. & Machida, A. Time-series characteristics and geometric structures of drop-size distribution density in dropwise condensation. Int. J. Heat. Mass Trans. 76, 467–483 (2014).

Parin, R. et al. Heat transfer and droplet population during dropwise condensation on durable coatings. Appl. Therm. Eng. 179, 115718 (2020).

O’Mahony, N. et al. Deep learning vs. traditional computer vision. Science and information conference. 128–144 (Springer, 2020).

Khodakarami, S., Rabbi, K. F., Suh, Y., Won, Y. & Miljkovic, N. Machine learning enabled condensation heat transfer measurement. Int. J. Heat. Mass Trans. 194, 123016 (2022).

Hoang, N. H., Song, C. H., Chu, I. C. & Euh, D. J. A bubble dynamics-based model for wall heat flux partitioning during nucleate flow boiling. Int. J. Heat. Mass Trans. 112, 454–464 (2017).

Gerardi, C., Buongiorno, J., Hu, L. W. & McKrell, T. Study of bubble growth in water pool boiling through synchronized, infrared thermometry and high-speed video. Int. J. Heat. Mass Trans. 53, 4185–4192 (2010).

Kim, S. H. et al. Heat flux partitioning analysis of pool boiling on micro structured surface using infrared visualization. Int. J. Heat. Mass Trans. 102, 756–765 (2016).

Chang, S. et al. BubbleMask: Autonomous visualization of digital flow bubbles for predicting critical heat flux. Int. J. Heat. Mass Trans. 217, 124656 (2023).

Seong, J. H., Ravichandran, M., Su, G., Phillips, B. & Bucci, M. Automated bubble analysis of high-speed subcooled flow boiling images using U-net transfer learning and global optical flow. Int. J. Multiph. Flow. 159, 104336 (2023).

Hessenkemper, H., Starke, S., Atassi, Y., Ziegenhein, T. & Lucas, D. Bubble identification from images with machine learning methods. Int. J. Heat. Mass Trans. 155, 104167 (2022).

Li, J. Q., Shao, S. Y. & Hong, J. R. Machine learning shadowgraph for particle size and shape characterization. Meas. Sci. Technol. 32, 015406 (2021).

Cerqueira, R. F. L. & Sinmec, E. E. P. Development of a deep learning-based image processing technique for bubble pattern recognition and shape reconstruction in dense bubbly flows. Chem. Eng. Sci. 230, 116163 (2021).

Torisaki, S. & Miwa, S. Robust bubble feature extraction in gas-liquid two-phase flow using object detection technique. J. Nucl. Sci. Technol. 57, 1231–1244 (2020).

Ma, C. et al. Condensation Droplet Sieve. Nat. Commun. 13, 5381 (2022).

Yan, J. Y., Ma, R. & Du, X. Consistent optical surface inspection based on open environment droplet size-controlled condensation figures. Meas. Sci. Technol. 32, 105405 (2021).

Milan, A., Leal-Taixé, L., Reid, I., Roth, S. & Schindler, K. MOT16: A benchmark for multi-object tracking. Preprint at https://arxiv.org/abs/1603.00831 (2016).

Moen, E. et al. Deep learning for cellular image analysis. Nat. Methods 16, 1233–1246 (2019).

Suh, Y. et al. VISION-iT: A Framework for Digitizing Bubbles and Droplets. Energy AI 15, 100309 (2023).

Newby, J. M., Schaefer, A. M., Lee, P. T., Forest, M. G. & Lai, S. K. Convolutional neural networks automate detection for tracking of submicron-scale particles in 2D and 3D. P. Natl Acad. Sci. USA 115, 9026–9031 (2018).

Kang, S. & Cho, K. Conditional Molecular Design with Deep Generative Models. J. Chem. Inf. Model 59, 43–52 (2019).

Denton, E. & Fergus, R. Stochastic video generation with a learned prior. International conference on machine learning. 1174–1183 (PMLR, 2018).

Cheng, L. Flow boiling heat transfer with models in microchannels. Microchannel Phase Change Transport Phenomena 141–191 (Elsevier, 2016).

Hobold, G. M. & da Silva, A. K. Machine learning classification of boiling regimes with low speed, direct and indirect visualization. Int. J. Heat. Mass Trans. 125, 1296–1309 (2018).

Mishkinis, D. & Ochterbeck, J. Homogeneous Nucleation and the Heat‐Pipe Boiling. Limit. J. Eng. Phys. Thermophys. 76, 813–818 (2003).

Nie, F. et al. Image identification for two-phase flow patterns based on CNN algorithms. Int. J. Multiphas. Flow. 152, 104067 (2022).

Hobold, G. M. & da Silva, A. K. Visualization-based nucleate boiling heat flux quantification using machine learning. Int. J. Heat. Mass Trans. 134, 511–520 (2019).

Xu, H., Tang, T., Zhang, B. R. & Liu, Y. C. Identification of two-phase flow regime in the energy industry based on modified convolutional neural network. Prog. Nucl. Energ. 147, 104191 (2022).

Ambrosio, J. D., Lazzaretti, A. E., Pipa, D. R. & da Silva, M. J. Two-phase flow pattern classification based on void fraction time series and machine learning. Flow. Meas. Instrum. 83, 102084 (2022).

Maulud, D. & Abdulazeez, A. M. A review on linear regression comprehensive in machine learning. J. Appl. Sci. Tech. Trends 1, 140–147 (2020).

Lotfian, M., Ingensand, J. & Brovelli, M. A. The Partnership of Citizen Science and Machine Learning: Benefits, Risks, and Future Challenges for Engagement, Data Collection, and Data Quality. Sustain.-Basel 13, 8087 (2021).

Rokoni, A. et al. Learning new physical descriptors from reduced-order analysis of bubble dynamics in boiling heat transfer. Int. J. Heat. Mass Trans. 186, 122501 (2022).

Gallego, G. et al. Event-based vision: A survey. IEEE Trans. pattern Anal. Mach. Intell. 44, 154–180 (2020).

Lu, D. Y., Suh, Y. & Won, Y. Rapid identification of boiling crisis with event-based visual streaming analysis. Appl. Therm. Eng. 239, 122004 (2024).

Sironi, A., Brambilla, M., Bourdis, N., Lagorce, X. & Benosman, R. HATS: Histograms of averaged time surfaces for robust event-basted object classification. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 1731–1740 (2018).

Baker, N. et al. Workshop report on basic research needs for scientific machine learning: Core technologies for artificial intelligence. USDOE Office of Science (SC), Washington, DC (United States) (2019).

Lu, L., Jin, P., Pang, G., Zhang, Z. & Karniadakis, G. E. Learning nonlinear operators via DeepONet based on the universal approximation theorem of operators. Nat. Mach. Int. 3, 218–229 (2021).

Li, Z. et al. Fourier neural operator for parametric partial differential equations. Preprint at https://arxiv.org/abs/2010.08895 (2020).

Hassan, S. M. S. et al. BubbleML: A Multi-Physics Dataset and Benchmarks for Machine Learning. Adv. Neural Inf. Process. Syst. 36 (2023)

Li, Z. et al. Physics-informed neural operator for learning partial differential equations. Preprint at https://arxiv.org/abs/2111.03794 (2021)

Gilad-Bachrach, R. et al. Cryptonets: Applying neural networks to encrypted data with high throughput and accuracy. Int. Confer. Mach. Learn. 48, 201–210 (2016).

Ward, L. et al. Matminer: An open source toolkit for materials data mining. Comput. Mater. Sci. 152, 60–69 (2018).

Upot, N. V. et al. Advances in micro and nanoengineered surfaces for enhanced boiling and condensation heat transfer: a review. Nanoscale Adv. 5, 1232–1270 (2023).

Samek, W., Montavon, G., Lapuschkin, S., Anders, C. J. & Muller, K. R. Explaining Deep Neural Networks and Beyond: A Review of Methods and Applications. P. IEEE 109, 247–278 (2021).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015).

Rosenblatt, F. The Perceptron - a Probabilistic Model for Information-Storage and Organization in the Brain. Psychol. Rev. 65, 386–408 (1958).

Hosny, A., Parmar, C., Quackenbush, J., Schwartz, L. H. & Aerts, H. J. W. L. Artificial intelligence in radiology. Nat. Rev. Cancer 18, 500–510 (2018).

Mater, A. C. & Coote, M. L. Deep Learning in Chemistry. J. Chem. Inf. Model. 59, 2545–2559 (2019).

Li, X. H. et al. Transfer learning in computer vision tasks: Remember where you come from. Image Vision. Comput. 93, 103853 (2020).

Deng, J. et al. Imagenet: A large-scale hierarchical image database. 2009 IEEE conference on computer vision and pattern recognitionp. 248–255 (IEEE).

Lin, T.-Y. et al. Microsoft coco: Common objects in context. European conference on computer vision. 740–755 (Springer).

Pan, S. J. & Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 22, 1345–1359 (2009).

Hafiz, A. M. & Bhat, G. M. A survey on instance segmentation: state of the art. Int. J. Multimed. Inf. R. 9, 171–189 (2020).

Zhao, Z. Q., Zheng, P., Xu, S. T. & Wu, X. D. Object Detection With Deep Learning: A Review. IEEE. T. Neur. Net. Lear. 30, 3212–3232 (2019).

Long, J., Shelhamer, E. & Darrell, T. Fully convolutional networks for semantic segmantation. Proceedings of the IEEE conference on computer vision and pattern recognition. 3431–3440 (2015).

Acknowledgements

The authors give thanks to Tiffany B. Inouye for assistance in the preparation of this manuscript. The authors gratefully acknowledge the funding support from the Office of Naval Research (ONR) with Dr. Mark Spector as the program manager (Grant No. N00014-22-1-2063) and the National Science Foundation (NSF) under Award No. TTP 2045322.

Author information

Authors and Affiliations

Contributions

Y.S., A.C., and Y.W. conceptualized the article structure, content, and figures. Y.S., A.C., and Y.W. wrote and edited the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Suh, Y., Chandramowlishwaran, A. & Won, Y. Recent progress of artificial intelligence for liquid-vapor phase change heat transfer. npj Comput Mater 10, 65 (2024). https://doi.org/10.1038/s41524-024-01223-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41524-024-01223-8